Abstract

A novel AGV (Automated Guided Vehicle) control architecture has recently been proposed where the AGV is controlled remotely by a virtual Programmable Logic Controller (PLC), which is deployed on a Multi-access Edge Computing (MEC) platform and connected to the AGV via a radio link in a 5G network. In this scenario, we leverage advanced deep learning techniques based on ensembles of N-BEATS (state-of-the-art in time-series forecasting) to build predictive models that can anticipate the deviation of the AGV’s trajectory even when network perturbations appear. Therefore, corrective maneuvers, such as stopping the AGV, can be performed in advance to avoid potentially harmful situations. The main contribution of this work is an innovative application of the N-BEATS architecture for AGV deviation prediction using sequence-to-sequence modeling. This novel approach allows for a flexible adaptation of the forecast horizon to the AGV operator’s current needs, without the need for model retraining or sacrificing performance. As a second contribution, we extend the N-BEATS architecture to incorporate relevant information from exogenous variables alongside endogenous variables. This joint consideration enables more accurate predictions and enhances the model’s overall performance. The proposed solution was thoroughly evaluated through realistic scenarios in a real factory environment with 5G connectivity and compared against main representatives of deep learning architectures (LSTM), machine learning techniques (Random Forest), and statistical methods (ARIMA) for time-series forecasting. We demonstrate that the deviation of AGVs can be effectively detected by using ensembles of our extended N-BEATS architecture that clearly outperform the other methods. Finally, a careful analysis of a real-time deployment of our solution was conducted, including retraining scenarios that could be triggered by the appearance of data drift problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

An Automated Guided Vehicle (AGVs) is a transport vehicle that operates under the direction of a computer system. AGVs are widely used in material transport applications, such as industrial manufacturing and automotive assembly plants, as well as commercial settings such as warehouses and hospitals [1, 2]. A key factor of AGVs is that they can be remotely controlled, allowing greater flexibility and efficiency on the job site [3]. 5G is the key enabler for remote control of AGVs because it provides the high-speed, low-latency and reliable connectivity that these vehicles need to operate safely and efficiently in real-time.

Taking advantage of 5G capabilities, in [4] we proposed a novel control scheme for 5G-enabled AGVs that involves deploying their remote control as a virtualized Programmable Logic Controller (PLC) running in a 5G MEC (Mobile Edge Computing) infrastructure. By migrating the controller to the MEC, the AGV can reduce its hardware requirements, as the controller can share the resources of the MEC, which can result in significant cost savings for the AGV manufacturer. Furthermore, using this scheme, the AGV can take advantage of the flexibility, scalability, and fault tolerance of the virtualized infrastructure, allowing the AGV to adapt more quickly to changing needs and requirements and ensuring that it remains available to perform its tasks. Furthermore, since the controller is no longer inside the AGV, this scheme also results in a reduction in the weight of the AGV, which also allows for reduced power consumption.

In the scheme described above, the controller uses the information from the sensors placed on the AGV to make decisions about its trajectory. In particular, the controller can use information from the guiding sensors to measure the current deviation from the trajectory. This information is stored in AGV variables that are periodically transmitted from the AGV to the PLC. The controller processes this information to generate a corrective action that is sent back to the AGV to bring it back to the desired trajectory or to raise an alarm if the deviation is too large. For the logic implemented in the PLC to guide the AGV it is important to consider that the AGV, due to the inertia of its mass, may not be able to respond immediately to the controller’s commands. To solve this problem, predictive models can be used to compensate for AGV response time. Based on the current deviation from the track path, AGV malfunction can be detected ahead of time, allowing appropriate corrective actions to be applied to ensure that the AGV remains on the track or to bring it to a complete stop in order to prevent an accident from occurring, thus ensuring work safety and reducing operational downtime. This problem can be modeled as a time series forecasting problem, where the objective is to predict the future state of the AGV location based on its past state and the current deviation from the desired trajectory. In AGV applications, trajectory deviation is often referred to as "Guide Error" or "Guiding Error", so we will use the term "Guide Error" to refer to trajectory deviation in the remainder of this paper. Note that this term should not be confused with a malfunction of the AGV, as the Guide Error is the natural deviation from the trajectory that appears when the AGV is in motion.

In this work, we propose innovative sequence-to-sequence approaches based on an enhanced N-BEATS architecture to improve the accuracy of predicting ahead of time the deviation of an AGV from the desired trajectory. The research questions this work addresses are:

-

RQ-1: Is it worth deploying a state-of-the-art neural network architecture (N-BEATS) that consumes more resources than traditional machine learning (Random Forest), statistical (ARIMA) and deep learning (LSTM) models for this AGV scenario? (In this context, our aim is to maximize the forecasting performance/accuracy when limited CPU/GPU resources are available).

-

RQ-2: What are the most appropriate forecasting variables to predict AGV malfunction?

-

RQ-3: Is there an optimal combination of DL/ML models, time windows, and input features that maximizes the forecast performance for this AGV scenario?

-

RQ-4: How does each of these factors affect the forecast accuracy?

-

RQ-5: How can the occurrence of data drift problems in real-time deployment scenarios be effectively resolved?

To address the research question RQ-1, in sharp contrast to a previous work [4], this research aims to adapt modern sequence-to-sequence time-series forecasting models, including recent state-of-the-art techniques such as N-BEATS and other advanced methods such as ensemble learning, to assess whether it is possible (i) to further improve the accuracy of AGV deviation prediction generating a sequence of future predictions instead of a single point in the future, and (ii) to achieve better forecast stability with a longer forecast horizon to more reliably anticipate AGV malfunction.

In this context, we propose a novel application of sequence-to-sequence prediction in the AGV scenario that differs fundamentally from the classical approach of predicting a single value representing the expected AGV deviation over a specific time horizon, as it offers the advantage of allowing greater flexibility in the selection of the maximum prediction horizon. This improvement provides the operator with the ability to evaluate a range of prediction horizons and dynamically choose the optimal one that maximizes accuracy for the specific operational context in which the AGV is deployed.

Furthermore, N-BEATS was selected in this work because it is considered the state-of-the-art method for univariate time-series forecasting. As a novelty, we modified the original N-BEATS architecture to add exogenous variables in its input. We compare the forecasting performance of the modified N-BEATS architecture to a variety of representative DL, ML and statistical methods for time-series forecasting. In addition, we apply the model ensemble technique to further increase the final prediction accuracy of individual models. Although N-BEATS as the state-of-the-art model in time-series forecasting is expected to outperform the rest of the models in the proposed AGV scenario, it should be noted that there is a trade-off between the increase in performance and the extra resources that are consumed by this model.

To tackle the second research question (RQ-2), several forecasting variables, were proposed. Regarding the fact that due to the inertia of a moving AGV, it takes approximately 10 s in the worst case to stop it, we establish the period from 10 to 20 s as the useful range to predict in advance the AGV deviation. To infer trajectory problems in an AGV, two variables, the Guide Error and Guide Oscillations, were considered to be predicted in advance. The former stores the deviation of the AGV’s guiding sensor from the tape on the floor, and the latter stores the number of times that the guiding sensor has crossed the tape from left to right or vice versa. Intuitively, high values for these two variables would suggest that the AGV is having difficulties maintaining its trajectory.

To address research question RQ-3, several experiments were conducted to evaluate the optimal combinations of models, time windows and input feature set to achieve the best forecasting performance. To train the models, we used real data collected by conducting extensive experiments with an industrial grade AGV provided by ASTI Mobile Robotics and a virtualized PLC that were connected in a 5G network deployed at 5TONIC, an open laboratory for 5G experimentation. Realistic network errors (e.g. delay and jitter) were reproduced in the experiments with different degrees of intensity. Therefore, the proposed forecast models were trained to predict the AGV malfunction even when different degrees of network disturbances appeared.

Once trained, we compared the performance of the different models in order to understand the importance of each of the selected feature sets, the importance of time window segmentation, and ultimately the capabilities of each of the proposed architectures. Our experimental results showed that the proposed ensembles of N-BEATS provide consistently robust predictions throughout the forecast horizon, producing highly accurate long-term predictions even in the presence of significant degradation of network conditions.

Finally, to address the research question RQ-4, a careful analysis of a real-time deployment of our solution was conducted, including retraining scenarios that might be triggered by the appearance of data drift problems. We apply the Transfer Learning technique to perform a realistic experimental analysis of the retraining of the proposed models in an online fashion using data previously collected in the 5TONIC lab. The results show a significant decrease in the time required to retrain the model with respect to training the models from scratch.

1.1 Contributions

The main contributions of this work can be highlighted as follows.

-

An innovative approach to predict AGV deviations in a sequence-to-sequence fashion is presented for the first time in the AGV literature. Unlike existing approaches, which rely on predicting a single time step at a time and applying a rolling mechanism to obtain a sequence of future values, the proposed approach relies on advanced sequence-to-sequence models applied to N-BEATS and LSTM architectures to learn to forecast a sequence of future AGV deviations based on the temporal correlation between future time steps and a window of past deviations. Importantly, our approach does not incur the accumulation of errors that rolling strategies generate, as it does not rely on an iteration-based algorithm to generate the desired sequence of predictions, which can lead to a dramatic increase in prediction error. Instead, using a single-learned model and a window of historical data, our approach is able to provide the complete time series of future predictions in which all time steps to be predicted are contained in the same output vector. In this way, multiple horizons are constrained in the same model structure, providing the AGV operator with a flexible way to select the most suitable horizon for predicting the future deviation sequence based on the current needs of the application without requiring model retraining and without losing performance.

-

As a novelty, we extend the architecture of N-BEATS to consider exogenous variables as input. The inclusion of exogenous features was motivated by the need to provide the model with additional context that could explain, at least partially, the outcome of the predictions. We focus specifically on the AGV-PLC connection statistics, as they are directly affected by degradation of network conditions. A degradation in the quality of the AGV-PLC connection will result in poor control of the AGV trajectory, which will eventually generate difficulties for the AGV that will be reflected in an increase in the deviation of the AGV from its trajectory. To the best of our knowledge, this is the first time that exogenous features that are not directly related to the domain of the target series under study are considered as input to the multivariate time-series prediction task in N-BEATS. By incorporating the AGV-PLC connection parameters as exogenous variables into an N-BEATS model, we achieve the best overall results in forecasting the deviation of the AGV trajectory, demonstrating that feeding salient features into an N-BEATS model can significantly improve the overall predictive performance achievable with this architecture.

-

We propose a new approach to AGV malfunction prediction based on the analysis of AGV’s Guide Oscillations, a derived variable we calculate using some of the AGV variables present in the packets of the PLC-AGV connection. This derived variable presents great potential to improve prediction performance and opens a new line of research in the AGV motion modeling literature. To our knowledge, the proposed approach is the first in the literature to perform a multivariate analysis of the measured AGV’s Guide Oscillations, allowing for the combination of this new variable with other conventional measures, such as the Guide Error and network-related statistics. Our empirical results confirm that the use of AGV oscillations as an additional exogenous input can be exploited as a useful indicator of AGV malfunction, as confirmed by the significantly better performance achieved by the LSTM and Random Forest models when trained with this variable. This result allows us to highlight the potential of using the Guide Oscillations variable in AGV control systems.

-

We observed that on some occasions the training of N-BEATS and Random Forest can generate models that obtain a good score in the forecasting metric (e.g., MAE or MSE) but tend to predict values close to the mean of the target variable. In other words, the distribution of the predictions is highly centered around the mean of the Guide Error variable, which causes highly inaccurate predictions in extreme values and regions of high fluctuations of the target variable. We refer to this specific phenomenon as "lazy behavior", as the models attempt to be on the safe side in almost every prediction, avoiding extreme values to minimize the likelihood of making highly erroneous predictions. To the best of our knowledge, this is the first time this bad effect has been reported in the literature. This anomalous behavior prevents the deployment of such lazy models in realistic scenarios, as these models will not be able to predict in advance that an AGV is having difficulties because these difficulties are directly correlated with the sudden appearance of large Guide Error values that tend not to be predicted by the lazy models. It is worth noting that we found that this problem was not present in the LSTM, indicating that this architecture seems to be robust against this anomaly. In addition, we suggest a manual heuristic to detect and discard lazy models, but specific research should be conducted as future work to avoid or mitigate this harmful behavior. Furthermore, another important task is to explore which ML/DL algorithms are shown to be vulnerable and which appear to be robust against this type of phenomenon.

-

We performed a simulation of a real-time deployment of the models, conducting extensive analyses of (a) the deployment feasibility of N-BEATS and LSTM models in a production environment for real-time control of a fleet of AGVs; and (b) the model retraining times in a data drift scenario when Transfer Learning techniques are applied.

1.2 Paper structure

The rest of the manuscript is organized as follows: Sect. 2 discusses related work. In Sect. 3 we describe the use case architecture and the setup procedure that we use to simulate different network conditions and explain the data collection and processing steps. Section 4.1 identifies the ML and DL models we selected to carry out the experiments and provides a justification for why they were selected among the rest of similar techniques. In Sect. 4 we define the experimental framework used for data processing, model training, and performance evaluation. Section 5 presents the results obtained in the experiments. This section details the training and testing of a variety of deep learning models (N-BEATS and LSTM), machine learning algorithms (Random Forest) and statistical methods (ARIMA) using different combinations of endogenous and exogenous features and ensembles. Furthermore, a realistic deployment and the re-training issues that can appear when data drift occurs are detailed in this section. In Sect. 6 we conclude by summarizing the main findings derived from the results obtained and present interesting future work to explore. Finally, Appendix A contains the preliminary analysis of the Guide Oscillations variable Sect. 6, details of the experimental results Sect. 1, additional plots reflecting the lazy behaviour we observed in some models Sect. 3, and details of the ensemble experiments Sect. 4.

2 Related work

Modern AGVs can operate to follow a dynamic trajectory, allowing greater flexibility and efficiency in many applications [1, 5]. In real smart factories, AGVs must coexist and interact with other automated systems and human [2]. These interactions must be properly managed to avoid disruptions, maintain efficiency, and ensure safe operation [6]. In real situations, AGVs cannot move freely in the environment because otherwise the factory floor must be mapped in advance, which is impractical and expensive. Instead, many studies have proposed the use of guide lines to establish a predefined circuit to address this issue. This guide line can be physical (e.g., a tape physically embedded on the floor [7]) or virtual (e.g., a memorized path [8]). The guide line restricts the movement of the AGV and provides a mean for the AGV to locate itself on the factory floor. There is a lack of published research that takes advantage of advanced DL techniques for time-series forecasting to predict ahead of time the AGV trajectory deviation from the guideline in order to avoid unexpected vehicle collisions and to identify system malfunctioning in advance.

Industrial sectors are benefiting from the adoption of time-series analysis to improve the efficiency of their operations [9,10,11]. Although several statistical and Machine Learning (ML) techniques have been applied to time-series forecasting, such as Autoregressive Integrated Moving Average (ARIMA) models or linear regression, in recent years there has been a growing interest in the application of Deep Learning (DL) models to perform this task because of their ability to automatically learn complex patterns in data [12, 13]. In particular, DL architectures have shown to be successful in forecasting time-series data with long-term dependencies [14, 15], which is highly relevant for many Industry 4.0 applications such as predictive maintenance and fault detection. Recently, Oreshkin et al. introduced "Neural Basis Expansion Analysis for Interpretable Time Series Forecasting" (N-BEATS) [16], a DL-based architecture that uses a sequence of deeply stacked blocks consisting of several fully connected layers connected through residual links. The proposed architecture exhibits several desirable properties: It is applicable without modification to a wide range of target domains, is fast to train, and can produce interpretable results. Furthermore, this architecture has been widely applied to prediction problems, and among the application fields are energy [17], healthcare [18, 19], and telecommunications [20].

Several studies have investigated path tracking control algorithms for remotely controlled AGVs. One such study [21] presents a wireless AGV path tracking control algorithm that accounts for varying network delays caused by the wireless network. The proposed method includes an optimal delay estimator that adjusts the received AGV position to account for the wireless network delay. This delay estimator utilizes a Kalman filter and a simple stochastic model of wireless delay dynamics to produce an optimal delay estimate. The estimated delay is then used to infer the actual AGV position, which is utilized to compute the appropriate control commands. The efficacy of the proposed approach is evaluated through simulation by measuring the vehicle’s path deviation and total travel time for different paths and network traffic conditions.

Another study [22] proposes a goal-oriented wireless communication solution for remotely controlled AGVs in time-varying wireless channel dynamic factory environments. The authors highlight the inherent dependence between data rate and control accuracy for such a system. To address this issue, they propose a model that can dynamically adapt the transmission data rate to optimize the AGV trajectory. The problem is formulated as a semi-Markov Decision Process, where the channel correlation is evaluated over time to address the fading issue. The Cross Track Error (XTE) is utilized as a metric to measure the distance deviation from the planned path. The proposed approach outperforms fixed-data rate policies as well as state-of-the-art solutions that are solely based on Age-of-Information (AoI), achieving the objective of higher system trajectory accuracy.

However, few articles consider a 5G network for AGV control. The 5G network offers improved data rates, low latency and reliability, which are crucial for the reliable and deterministic operation of an AGV in an industrial environment. To our knowledge, only two studies in the literature address the scenario where an AGV is remotely controlled using a 5G network.

In [23], the authors present an AGV that is remotely operated using 5G equipment deployed on customer premises equipment. In this scenario, the authors proposed an AGV control scheme based on an MEC platform to provide an end-to-end solution for predicting the movement of AGVs. In this case, the AGV is automatically controlled from the 5G base station based on visual information collected by a camera attached to the AGV and transmitted to the remote MEC platform via the 5G RAN link. However, as the authors argue, the control algorithms are based on a simplistic kinematic approach without using any ML or DL technique for predicting ahead of time the AGV trajectory. In sharp contrast, we propose a use case in which predicting AGV trajectory deviations ahead of time is crucial to avoid harmful situations that could arise when the AGV deviates from the trajectory due to errors in the guidance control. Another limitation of the work presented in [23] compared to ours is that its approach was not tested under a variety of realistic conditions in a real factory environment (e.g., no evaluation was performed under network disturbance effects, different traffic loads, etc.) that can significantly affect the performance and effectiveness of the proposed solution in a real environment. In contrast, our work explores the performance of deep learning models in a realistic setup where a wide range of network disturbances (e.g. delay, jitter) were introduced programatically during the AGV operation. Furthermore, their work does not propose a realistic Industry 4.0 setup as ours in which industrial grade components (PLC and AGV) are used in the experiments. Finally, their work does not present any analysis of the response time of the control algorithm under different traffic loads and network conditions as we do in our work.

Previous research has focused primarily on improving the navigation of remotely controlled AGVs using wireless networks. However, anticipating and planning corrective maneuvers in response to deviations caused by network disturbances is an under-explored area of research. To fill this gap and ensure the safe operation of AGVs, prevent collisions, and minimize disruptions in the factory workflow, our study builds on the initial work presented by [4]. In that preliminary work, the proposed solution was mainly focused on demonstrating in an industrial-grade environment that the AGV malfunctioning can be forecast with anticipation by exclusively analysing the AGV-PLC connection and without needing to deploy any meter in the end-user equipment (AGV and PLC). However, several important differences appear when comparing our work with [4]:

-

(i)

First, the approach of [4] relied on the prediction of a single instantaneous value (mean value between 10 and 15 s ahead of time) using a typical regression strategy. In sharp contrast, our proposed solution addresses this problem using an approach based on predicting a sequence of 200 future values (from 1 to 20 s ahead of the instant time with 100 milliseconds steps) using powerful sequence-to-sequence DL models. With this new approach, multiple horizons are contained in the same model structure, providing the AGV operator with a flexible way to select the most appropriate horizon to predict the future deviation sequence based on current application needs without requiring model retraining and without losing performance.

-

(ii)

Furthermore, the forecast horizon of the previous work (15 s) was considerably extended to 20 s in our work, allowing a greater safety margin to apply appropriate maneuvers to prevent the AGV from colliding with surrounding obstacles and thus improving the safety of the work area.

-

(iii)

An important limitation of [4] is that only traditional DL algorithms (LSTM and 1D-CNN) were used and a very modest number of model combinations were trained and tested. In sharp contrast, our work provides an in-depth comparison of an extended version of N-BEATS, the state-of-the-art architecture for time-series forecasting, with traditional ML/DL and statistical models. In this comparison a rich set of hyperparameters was selected and a significant number of model combinations were evaluated. Indeed, our results demonstrate that N-BEATS models allow achieving significantly better forecasting performance than traditional approaches while being able to meet the stringent demands of real-time operation. In addition, we analyze the resultant performance when ensembles of models are used, demonstrating that ensembles of N-BEATS outperform individual models.

-

(iv)

Another significant limitation of the previous work is the use of a fixed temporal window size (60 s). On the contrary, our study explores a broader range of temporal windows, specifically 4, 7.5, 15, and 30 s, and demonstrates that the optimal window size is a hyperparameter that must be tuned individually for each model. Our findings indicate that increasing the time window mostly lead to improved performance.

-

(v)

A very simple study of real-time deployment was conducted in [4]. In contrast, our work thoroughly analyzes the feasibility of a real-time deployment considering CPU or GPU availability, the management a single AGV or groups of them by a single model, and the retraining times by applying or not applying Transfer Learning when models become obsolete.

-

(vi)

Finally, the previous work only used the Guide Error variable as the representative of the AGV status. In contrast, our work also considers a new variable based on AGV guide oscillations. Our results show that the use of AGV oscillations as an additional exogenous input can be successfully exploited as a useful indicator of AGV malfunction, as confirmed by the significantly better performance achieved by some models.

Table 1 provides a comprehensive comparative analysis of the main findings and contributions of our study and other articles that are closely related. This comparison aims to offer a comprehensive overview of the research conducted on the topic addressed in this article, highlighting the distinctive contributions of our study to the existing literature.

3 Use case

In this section, we describe the use case that we intend to solve in this work. Our intention in this study is to exploit the capabilities of sophisticated DL techniques to build predictive models that forecast the deviations of an AGV controlled through a remote PLC.

All AGVs are equipped with a sensor that measures the distance between a point of the AGV and the trajectory to be followed. To this aim, different sensors can be used depending on the nature of the physical reference: magnetic (a magnetic tape on the floor and a magnetic antenna in the AGV), electromagnetic (a wired buried in the ground and an antenna in the AGV), optical (a line painted on the floor and a camera in the AGV). Later AGVs are equipped with SLAM navigation systems that use natural landmarks to create a map and locate the robot in the map. Normally these devices store a virtual trajectory to be followed, and provide the distance to this virtual line, but in essence it is the same concept.

In our case the AGV is equipped with a magnetic antenna in the traction unit which provides the distance between the center of the unit and the circuit described by a magnetic tape. In the AGV field it is common to call this distance "Guide Error", thus hereafter we have followed this convention. The Guide Error is not a strict euclidean distance but it has a sign to indicate if the AGV is located at the left or the right of the circuit. This way, the AGV adjusts the angular speed considering the sign of the Guide Error to move closer to the desired trajectory.

Figure 1 shows a schematic representation of the AGV and the Guide Error. As is possible to observe, this AGV is similar to a tricycle robot, but the front-direction wheel is replaced by a differential traction unit. This way the behavior of the traction unit is similar to a differential robot, but the whole AGV movement is limited by the kinematic constraints of a tricycle. The magnetic sensor is mounted on the traction unit and provides the Guide Error information to correctly follow the path drawn on the floor by the magnetic tape. This AGV configuration is very common in the automotive industry.

Assuming that there is no slippage on the wheels, the movement of this AGV can be described by (1), (2), (3) and (4).

Where the position and orientation of the coordinate system located in the center of the rear axle is denoted by \((x_b, y_b,\theta _b)\) [m, m, rad]; the position and orientation of the coordinate system located on the center of the traction unit is denoted by \((x_h, y_h, \theta _h)\) [m, m, rad]; \(L_b\) is the distance between the rear axle and the centre of the traction unit [m]; \(L_h\) is the distance between the wheels of the traction unit [m]; and \((v_l, v_r)\) [m/s, m/s] are the longitudinal speed of the left wheel and right traction wheels, respectively.

Our main objective is to exploit this information to anticipate the AGV’s movements, allowing it to be stopped before potentially dangerous situations occur, such as a collision with an imminent obstacle or a sudden departure from the circuit, even in situations of degraded network performance between the AGV and the PLC connection.

3.1 Use case architecture

As mentioned previously, AGVs are controlled by a Programmable Logic Controller (PLC). This PLC is a device specifically designed and programmed to control the sequence of operations that the AGV will perform. The PLC is connected to the AGV through a communication network that can be either wired or wireless. The AGV has several sensors that allow it to detect its environment and its location at the factory. The PLC uses this information to control the movement of the AGV. To do this, the AGV is also equipped with actuators that allow it to move in the desired direction. In our case, the PLC is virtualized and deployed at the edge of the 5G network in a MEC infrastructure. Virtualization of the PLC allows a large number of AGVs to be controlled concurrently from a reduced number of PLCs, thus allowing for cost savings while providing greater scalability and flexibility to the system. In addition, virtualization of the PLC in a remote location allows one to save space and reduce the weight and power consumption of the AGVs. Furthermore, placing the PLC close to the AGV greatly reduces communication latency, which is a crucial factor in meeting the stringent real-time requirements of the AGV operation. In this context, URLLC (ultra-reliable low-latency communication) is a key enabler for remote AGV operation. URLLC is a feature of 5G that is designed to provide low-latency and reliable communications that are required in the industrial setting. In the above scenario, the communication between the AGV and the PLC occurs as follows:

-

1.

The AGV sends the location data to the PLC (i.e., the deviation from the current path).

-

2.

The PLC uses the location data to correct the AGV’s trajectory and sends updated commands to the AGV.

-

3.

The AGV executes the commands and sends status updates to the PLC.

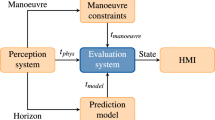

The 5G network architecture of the proposed use case is depicted in Fig. 2. As can be seen, the architecture includes a 5G Radio Access Network (5G RAN) to provide wireless communication capabilities to an AGV. The signaling to authenticate and deliver IP connectivity is managed by the signaling traffic within the 5G Core. The introduction of a 5G link that connects the AGV with the PLC allows replacing the internal PLC module that is traditionally mounted over an AGV with a more lightweight and energy efficient 5G modem chipset. In order to meet the low-latency requirements that enable the effective operation of the AGV through a remote PLC, the use of a MEC platform that hosts a Virtual Machine (VM), on which the remote PLC is deployed, is required. The MEC platform also contains several computing resources, including a component that provides access to the user data plane for different demands of services. These computing resources are deployed using virtualization technology with a hypervisor and several VMs. One of these VMs is a Master PLC that is responsible for controlling multiple AGVs. The ML engine represents the DL-based predictive models running on the MEC platform. The ML engine uses the information captured in real-time from the connection between the AGV and the PLC to predict the occurrence of AGV malfunction in advance.

The input variables to be fed to the ML Engine are obtained from the network packets sent from the AGV to the PLC through the network connection. From these network packets, two different sets of variables are extracted and processed to be used as input to the predictive models: (i) NET variables: connection statistics that can be extracted and aggregated from the packet headers that allow determining and quantifying network degradation problems. Connection statistics can be used as input to ML/DL models to predict the target variable accordingly to the network degradation problems identified by the model. In addition, the use of these features allows us to train more reliable models that remain robust against the occurrence of these situations in the real world scenario. (ii) AGV variables: the current values of the Guide Error that can be further processed to extract the variable Guide Oscillations that measures the number of times the AGV crossed the magnetic tape that traces the circuit on the factory’s floor.

In the experiments conducted, these two sets of variables (AGV and NET) were first tested separately and then combined to determine which combination worked best for predicting AGV malfunction. Demonstrating that using only connection statistics (NET variables) the AGV malfunctioning can be predicted would be extremely beneficial as it would allow operating the AGV without the need for the network operator to intervene in the factory’s non-public network (NPN) and thus enabling the AGV to be controlled from a public network where the transmitted payloads can be encrypted and only network statistics are available. On the contrary, using only the set of AGV variables to predict the AGV malfunctioning will demonstrate that the PLC-AGV operator could eliminate the need to measure and collect the network parameters, which can require the installation of special equipment in the factory to access to the private network to collect the network packets of the PLC-AGV connection. However, this installation may be infeasible in some contexts.

Furthermore, as also shown in Fig. 2, our architecture includes a Network Degradation Emulator (NDE), which is a component that is placed as a man in the middle in the connection between the radio access network and the MEC platform. The NDE is designed to simulate various effects of network degradation on communication between these two entities, such as packet loss, delay, and jitter. By introducing these network degradation effects, the NDE allows evaluating the impact of different network conditions on the performance of the MEC application, such as weak signal, presence of noise, network congestion, and high latency. These conditions are often absent or intermittent in the real environment, making it difficult to capture a sufficient amount of data to adequately train ML/DL models. Simulation of these anomalous network conditions is essential to generate enough data to develop robust models that can accurately predict the behavior of AGVs even under difficult and unpredictable network conditions, which is a critical requirement in the industrial setting.

This use case proposed to use two software connectors deployed on the MEC platform and based on the European Industrial Data Space (IDS) Trusted Connector (IDSTC) technology. These connectors were added to the MEC application with the ultimate goal of consolidating the MEC infrastructure as a valid resource for Industry 4.0 verticals in 5G networks. The IDSTC is an open IoT edge gateway platform that provides a standardized way to communicate with external components. The IDSTC is an implementation of the Trusted Connector in the Industrial Data Space reference architecture, following the open standards DIN Spec 27070 and ISO 62443–3. IDSTC can be used to connect sensors, cloud services and other connectors using a wide range of protocol adapters. In our use case, the left IDS connector allows exporting ML predictions of the Guide Error variable to an external Operation Support System where either human operators or a fully automated Logistic Process Control will process the predictions to apply some corrective manoeuvre to the AGV through the right IDS connector whenever required.

The predictive model proposed in this architecture tries to detect in advance AGV’s trajectory deviations to compensate for AGV response time as due to the inertia of its mass, it may not be able to respond immediately to the PLC’s commands. By forecasting significant deviations from the track path, it is possible to anticipate AGV malfunctions and take appropriate corrective actions to keep the AGV on track or bring it to a complete stop.

In normal situations, AGV deviations can be corrected by the PLC using the instantaneous guide error that is detected by the magnetic antenna and informs the AGV if it is situated at the right or at the left of the trajectory. If the error is positive then the AGV is instructed by the PLC to increase the steering angle to approximate the path. Conversely, if it is negative the PLC indicates the AGV to decrease its steering angle. Finally, if the error is zero means that the AGV is correctly situated in the middle of the path and the direction is maintained. This control strategy allows the AGV to follow the desired path in real time.

Complementarily, forecasting these errors can provide valuable information about the quality of the navigation in the future. If this quality is bad the AGV will have problems following the trajectory, as it will oscillate too much or even it may leave the path. To avoid these situations in advance, the Logistic Process Control can enforce specific actions such as adjusting the longitudinal speed reference using the predicted error. In this way, when the predicted error grows, the longitudinal speed reference is reduced to maintain the navigation level. This severe action may momentarily reduce productivity but avoids the production line shutdown. In an extreme case, the Logistic Process Control can decide to completely stop the AGV to prevent causing damage to material objects or people.

3.2 Use case setup

The use case described in Subsection 3.1 was set up and performed at 5TONIC, an open laboratory founded by Telefónica and IMDEA Networks that provides a controlled but realistic environment to deploy experiments that make use of 5G network capabilities as a core technology. At 5TONIC, we prepared different AGV experimentation scenarios that involve simulation of various network degradation effects to recreate realistic scenarios of network impairments. First, we established a reference circuit indicated by a magnetic tape on the floor in a \(300\,m^{2}\) room that included a battery recharging point for the AGV. Next, a MEC platform was created to host the virtualized PLC and the rest of the services that are required to provide the intended functionality (data collector, packet aggregator, ML engine, and IDS connectors). The virtualized PLC, the Machine Learning engine and the rest of services were deployed in several virtual machines. To acquire data for training and testing the ML/DL models, network packets transmitted from the AGV to the virtual PLC were captured in the Machine Learning virtual machine using the Unix command line tool "tcpdump" and stored in standard "pcap" file format. During this experimentation procedure, several degradation effects on the connection between the AGV and the virtual PLC were applied to ensure robust behavior of the predictive models during operation in the real environment. To generate these network perturbations (delay, jitter, packet drop, and corruption) in the link between the AGV and the PLC, the Unix “Traffic Control” command line tool was run on the MEC platform. The following subsections detail the process that was followed to generate the data, which was later pre-processed, in order to convert them to a suitable format for the training and testing of the ML/DL models. A summary of the workflow of this process can be appreciated in Fig. 3.

3.3 Network data generation scenarios

As we plan to train our ML/DL predictive models in a supervised manner, we need to collect labeled data that is representative of real AGV operating scenarios. To this end, we have also performed multiple network data captures in which we emulate network degradation effects on the communication between the AGV and the PLC. Network data captures contain the packets transmitted in the AGV-PLC connection. We refer one data capture to one run of the AGV with the same network configuration. In each data capture, the AGV is initially placed at a fixed position in a figure-eight circuit and then the PLC is commanded to move the AGV across the circuit at least five times. We introduce delay and jitter as network degradation effects. Delay refers to the addition of a fixed delay to the network packets. Jitter refers to the addition of a random delay to network packets. The delay values were randomly sampled from a normal Pareto distribution with a mean of between 50 and 300 microseconds and a standard deviation of between 10 and 50 microseconds. These values were chosen by carefully analyzing the network traffic of a real AGV production line for a considerable time. We should note that for the same experiment, delay and jitter were not introduced simultaneously. The reason is that we wanted to evaluate the effects of each network degradation effect independently. After a thorough inspection of the network traffic, we concluded that a scenario in which both effects are present at the same time is not representative of the real AGV operation scenario and would have made ML/DL training more difficult. Three different types of data capture were differentiated:

-

1.

Clean: No delay or jitter has been introduced in this type of data capture. These data captures are used as a formal verification of the performance of the AGV control system in the absence of network degradation effects (i.e., normal situation).

-

2.

Static: In this type of data capture, delay and jitter are introduced as a constant network degradation effect and the AGV is made to operate under these conditions. The objective of this data capture is to evaluate the effects of network degradation on AGV performance. We should note that during the first and last 30 s, as well as during the 30 s of the middle part of the data capture, no degradation effects were introduced. In this way, the transition from a clean network to a degraded network, and vice versa, is also captured. This is to evaluate the effects of network degradation on AGV performance when these effects are only temporarily introduced. This can help train models that behave as expected, even in situations where network degradation effects appear and disappear abruptly during AGV operation.

-

3.

Ramp: In this type of data capture, delay and jitter are introduced as a network degradation effect that increase gradually over time. This type of data capture allows the effects of network degradation on AGV performance to be evaluated while the AGV is initially under control, but the effects of network degradation become more severe over time, leading to a departure of the AGV from the circuit.

All data captures were collected at least three times to ensure data reliability for each scenario. During each capture, we collected all packets transmitted via the UDP protocol between the AGV and the PLC. Any other packets on the 5G network that were not related to the communication between the AGV and PLC were discarded. Once the data was captured, it was stored as raw PCAP files, totaling almost 100GB. The data captures contained around 434,000 snapshots of the AGV-PLC connection that generated roughly the same number of examples in the dataset used for training and testing.

3.4 Data feature extraction

The network data captured between the connection of the AGV and its virtual PLC underwent a feature extraction process before been input to the ML components. Two types of features were selected: (i) AGV variables that were contained within the payload of the AGV-PLC connection packets, and (ii) statistics of the AGV-PLC connection. An AGV’s proprietary tool was used to decode the control payload of the AGV-PLC connection, and the Tstat tool, a widely-used network analysis tool (http://tstat.polito.it/), was used to extract network statistics from the connection. It is worth noting that because the ML models presented in this work try to predict the future deviation of an AGV controlled by a virtual PLC under a degraded network connection, it was considered important to add network features as exogenous variables to the models in order to detect network degradation and complement the AGV variable that is being forecast.

From over one hundred variables extracted from the AGV-PLC connection payload, only two of them were selected for this study: the instantaneous Guide Error and the Stop Flag. The other variables containing AGV status data such as wheel speed and battery status, were discarded. A limited set of AGV features was used to minimize the dependence on the AGV system provider and to enable easy adaptation of the trained model to other AGVs of different providers in the future. The Stop Flag variable was used only during the preprocessing phase to exclude instances when the AGV was stopped (e.g., when recharging the batteries), as predictions were not relevant during these periods. The Guide Error variable was detected by a magnetic antenna that informed the AGV about its location to the right (with a positive value) or left (with a negative value) of the magnetic strip in centimeters.

Guide Oscillation, another AGV feature that was considered in this study, was derived from the Guide Error variable during the preprocessing phase as it was not present in the connection payload. The Guide Oscillation feature represents the number of times the value of the Guide Error variable changed sign in a predefined interval. We conjecture that an increment of AGV oscillation values with respect to the trajectory could alert us of an AGV malfunctioning. Both AGV variables were considered endogenous when they were the object of the prediction, or exogenous when they helped in the prediction of the other.

Three AGV-PLC connection statistics (packet timestamp, number of packets sent, and number of packets received since the start of the connection) were chosen from a set of eight variables generated by the Tstat tool. The other variables provided by Tstat were discarded as they were constant throughout the connection, which does not provide information to the ML models. Among these discarded variables was the packet size, which, due to the characteristics of the communication protocol between the AGV and the PLC, had a constant length of 80 bytes. It should be noted that Tstat can calculate these statistics from network connections without the need to use payload information, which means that it can do so even if the connection is encrypted.

The three selected features were processed to generate a set of seven exogenous variables, as presented in Table 2 (NET feature set). The reason for this expansion was because these new variables had already been used successfully in previous research works such as [24] and [25]. Specifically, the two features Inter-arrival Time of Client and Inter-arrival Time of Server were proposed in [24], and the five features Total Packets of Client, Total Packets of Server, Total Packets per Second of Client, Total Packets per Second of Server, and the ratio between Total Client Packets and Total Server Packets were presented in [25]. In a preliminary phase, the set of connection statistics used in [4] was also considered, but after observing that the suggested variables, apart from the seven previously mentioned, did not add any significant information to the models, it was decided to exclude them and use only the previous seven.

Finally, all processed data was compiled into a single dataset file that included the first nine columns representing the features in Table 2, along with two additional columns for the timestamp and experiment identifier. The dataset has as many rows as the number of times Tstat calculates statistics for the AGV-PLC connection during its lifetime. Generally, and unless there is a burst of packets, Tstat recalculates the statistics every time a packet arrives. When a burst arrives, the statistics are recalculated only once considering the entire burst of packets. The timestamp was necessary to maintain the packet sequence in the file, as the models proposed in this paper solve the forecasting problem with time series. Similarly, the experiment identifier ensured that data from different experiments were not mixed during the construction of time windows for time-series analysis. The resultant file was saved in Apache Parquet format, which compressed the information and reduced its size to approximately 20 MB. It is worth noting that the initial captures in PCAP format occupied approximately 100 GB of storage space.

3.5 Advantages and disadvantages of the proposed solution

The solution proposed in this section offers a range of benefits and limitations that must be carefully considered. The primary advantage of this solution is that it enables real-time scenarios with a realistic deployment of 5G MEC and industrial-grade AGV. This facilitates the evaluation of the proposed system in a more practical industry setting, enabling accurate assessment of its performance. Related to the above, the proposed system’s ability to realistically simulate and collect data of a wide range of disturbance patterns that may naturally arise in the 5G network is a significant advantage. This allows for the system to be accurately and reliably tested and evaluated under various realistic conditions, making it more robust and reliable when deployed in the real-world scenario. Furthermore, the use of a virtualized PLC system offers cost savings, redundancy, scalability benefits, and easier upgradeability. These features make the system more flexible and adaptable to varying requirements, increasing its overall efficiency.

In addition, as will be discussed in Sect. 5.4, the use of robust and powerful deep learning techniques, capable of running on commonly available hardware, has facilitated the execution of up to 64 AGVs in parallel in real time. This approach drastically reduces the total execution time compared to conventional forecasting methods, such as ARIMA. In addition, the use of deep learning algorithms allows the system to scale effortlessly to accommodate a larger number of AGVs, making it an ideal solution for companies with growing demands. Furthermore, our solution is based on multi-horizon forecasting models that provide the system operator or technician with the ability to dynamically select the most appropriate forecasting step based on factors such as AGV workload, network stability, and desired accuracy. Unlike conventional approaches that necessitate the training and validation of numerous models for different forecasting horizons, this method eliminates the need for such tedious procedures. Moreover, this approach represents a robust solution in the face of unexpected disruptions in the AGV network, which may cause the AGV and PLC to lose connectivity. This characteristic is particularly significant in industrial settings where operational reliability is critical, as it ensures that the system continues to operate even in the presence of disruptions or failures, thereby enhancing its overall reliability.

On the downside, the system reliance on powerful DL algorithms is a potential drawback that must be considered Although we have shown that DL models can be successfully used in real time to control a fleet of up to 64 AGVs working in parallel, the system can become computationally intensive if the number of AGVs increases significantly. Another limitation is that the disturbances used in the system are generated synthetically, and, although great efforts have been made to simulate a realistic environment, it is not possible to completely replicate the real-world conditions. Therefore, it is possible that the system’s performance may differ slightly in real-world situations. Additionally, the circuit chosen in a factory may be different, and the model would have to be retrained for different factories. Finally, the models used in the system are trained for a specific type of AGV, which means that if different AGVs are used, with different guidance mechanisms, it is necessary to train new models.

One additional limitation of the solution to consider is that the proposed forecasting models are trained, validated and evaluated on specific conditions and may not possess the capacity to generalize to different scenarios. In particular, the models are designed to be optimized for a particular circuit layout and AGV type. Consequently, if varying AGV types are utilized, or if there are significant alterations to the circuit layout or factory environment, then the models would require a fine-tuning process with data collected in this new environment to ensure optimal performance.

4 Experiments

The research described in this work has been conducted in the form of a series of experiments. Two AGV variables, Guide Error and Guide Oscillations, were initially considered interesting variables to be predicted in advance, as large values of them suggested a direct correlation with AGV difficulties (Subsection 4.2). Finally, the Guide Oscillations variable was discarded as no direct correlation was found with AGV difficulties (Appendix Subsection A.1). Therefore, only the Guide Error variable was used in our experiments to predict it in advance.

The experiments consist in turn of three different variations:

-

First, we trained two different DL models (N-BEATS and LSTM), Random Forest and ARIMA using only the guide error as input feature to the models.

-

Second, we added a fixed set of network variables consisting of seven different statistics of the UDP connection established between the AGV and the PLC as they can provide timely information of network degradation situations.

-

Third, we tried combining all the previous features with the Guide Oscillations variable that represents the oscillations made by the AGV along the line on the floor that marks the path the AGV must follow. These oscillations are not directly generated by an AGV sensor, but are a handcrafted feature that we computed from the Guide Error measurement. More precisely, we calculated the oscillations as changes in the Guide Error sign, which can be interpreted as the AGV crossing the line. Note that the occurrence of large values for this feature might indicate that the AGV is struggling to maintain its trajectory, and therefore it could be particularly useful for detecting when the AGV is about to leave the track.

Using these three sets of input features, the objective of the experiments is to predict in advance the Guide Error of the AGV. We aim to predict 200 time steps (20 s) in the future for all the time series that we use to feed the models. Table 3 shows the three variations of experiments that we have proposed and their associated coding, which we will use to refer to them throughout the article.

Note that ARIMA was used only in the first variation, as only univariate ARIMA has been reported in the literature to achieve decent performance when compared to more complex models such as DL.

4.1 Time-series forecasting techniques selection

In this work, our aim is to evaluate whether N-BEATS models, as representatives of state-of-the-art sequence to sequence models for time-series forecasting, are able to outperform traditional DL architectures, ML models and statistical methods in the proposed problem.

Our choice of N-BEATS as the DL architecture was motivated by its state-of-the-art performance in several well-known forecasting competitions [16]. N-BEATS is a DL model that was specifically designed for time-series forecasting and has shown excellent results with univariate time-series data, while providing model interpretability capabilities that are absent in other DL architectures [16].

The N-BEATS architecture exhibits a number of highly desirable properties, such as being directly applicable to a wide range of problem types without the need for extensive feature engineering, being faster to train and more scalable than other DL architectures, such as LSTM, with the added benefit of being interpretable, which is extremely valuable in some practical scenarios. In addition, the N-BEATS architecture has shown better generalization capabilities than other DL models when trained on a specific source time-series dataset and applied to a different target dataset [16, 18,19,20, 26].

To carry out our study, we propose a modification of the original N-BEATS architecture [16] to enhance the architecture’s ability to model our multivariate series forecasting problem. In Sect. 4.1.1 a more detailed explanation of this novel architecture is provided highlighting its key components and mechanisms.

Furthermore, Sect. 4.1.2 presents the rationale behind the selection of other techniques for time-series forecasting that will be used as benchmarks to compare with the N-BEATS model. We outline the criteria used to choose these alternative approaches, which encompass a range of traditional deep learning architectures, machine learning models, and statistical methods. By including these diverse techniques, we can perform a comprehensive comparative analysis, examining the strengths and weaknesses of each approach in relation to the proposed problem.

4.1.1 N-BEATS architecture and proposed modification

The development of N-BEATS is based on the motivation to create a method for the prediction of univariate time series using only pure DL architectures, while maintaining the ability of statistical models to interpret the predictions made [16].

N-BEATS is an ensemble of deeply stacked feed-forward networks organized in blocks and interconnected via residual connections. N-BEATS takes advantage of a novel residual network topology that facilitates model interpretability and enables smoother gradient flow. The residual connections of the N-BEATS architecture allow each subsequent block to directly learn the residuals of the previous block, which alleviates the training difficulty and speeds up convergence. The target prediction is then obtained by linearly combining the predictions of all blocks in the network, allowing for better interpretability. Furthermore, in the N-BEATS architecture, specific constraints can be imposed to force the model to decompose the predicted time series into its seasonal and trend components, providing additional information about the data.

The architecture of N-BEATS is constructed using a basic building block, which is depicted on the left side of Fig. 4. Each of these blocks has four fully connected layers with 512 neurons and the ReLU activation function. The output of this last layer is divided into two branches, one used for future prediction, named forecast, and one for prediction of past data, named backast. The forecast is the block’s contribution to predict the sequence of future values following the window of past values it has received as input, while the backast is the result of partially approximating the input it has received. Both predictions are carried out by obtaining an expansion coefficient, which is a numerical vector that allows reconstructing a sequence of the time series from a linear transformation using a basis vector, this basis vector is defined by the set of weights of the next layer to which each branch of the block is connected. More precisely, this other layer, which also has no activation function, will calculate the pointwise product between the expansion coefficient of the branch and the base vector, obtaining as output the forecast or the backast, depending on the branch. As for the weights of this layer, these can be learned together with the rest of the weights of the network or, on the contrary, they can be manually specified to consider certain aspects of the problem to be solved, shaping the structure of the outputs to enable later analysis of the predictions based on its decomposition in trend and seasonality components.

A fixed number of the blocks described above is inserted into an arbitrary number of stacks, as illustrated in Fig. 4. Each of these stacks is connected using a novel topology based on the residual blocks of other well-known architectures such as DenseNet [27]. This topology is based on the introduction of connections that allow each stack to receive as input the output of the previous stack, which corresponds to the backast branch of its internal blocks, subtracted from the input of the previous stack. In this way, the input of the next stack will not contain the part of the input of the model that has already been predicted by the previous stack, allowing it to focus on what it has not yet learned to predict. The only exception to the above rule is the first stack, which only receives as input the data that are used to feed the model (i.e. the sequence of values observed in the past). On the other hand, the output of each stack will be the one corresponding to the forecasts of its internal blocks. These outputs are added together to obtain the final future prediction of the model, as can also be seen in Fig. 4. Another clever detail of the proposed architecture is that it allows one to observe the values of the partial forecasts and backcasts, allowing one to clearly identify the contribution of each stack, a fundamental need to achieve a model that produces interpretable results.

Although N-BEATS was originally conceived as a univariate model, we have proposed as a novelty a redesign to provide the architecture with the ability to model multivariate series as well, even allowing to consider variables according to the nature of their relationship with the independent variables (endogenous or exogenous). To achieve this purpose, we have included a concatenation layer that combines multiple inputs into a one-dimensional input layer. With this approach, exogenous features can be incorporated into model learning without interfering with model optimization. That is, the model is optimized only to model the dynamics of the endogenous variables, since the exogenous variables are only used as support for model learning and are not the target of forecasting. Our approach allows the model capability to be fully dedicated to modeling the endogenous dynamics and not the entire multivariate context.

4.1.2 Selected forecasting techniques for comparison

To compare N-BEATS performance with other well-established machine and deep learning models, we reviewed recent literature on time-series forecasting to identify the most relevant models for comparison. Based on this review, we selected the LSTM neural network architecture as the main representative of DL architectures for time-series forecasting, as it has shown superior performance in learning temporal dependencies in multivariate time-series forecasting [28,29,30] and has been used extensively in the literature. As representative of traditional ML regressors, we selected Random Forest, which has demonstrated high robustness and performance in several time-series forecasting applications [31], thus providing a fair comparison with DL models. For statistical methods, we chose ARIMA, which is a widely used and well-established method for general-purpose time-series forecasting and because it is considered a standard benchmark for evaluating forecasting models performance [32]. Additionally, to establish a baseline for comparison, we used a naive baseline based on the prediction of the last known value of each time series, which is a common method in time-series forecasting [32]. Finally, we also used the model ensembling technique to boost the overall prediction performance of individual models using them as the base regressors. Further details of the advantages of the selected models compared to other well-known techniques is given in Subsection 6. In addition, a detailed overview of the selected techniques is presented later in Subsections 1 and 2.

4.2 Forecast target variables

To solve the proposed use case one of our objectives is to predict the future deviations of an AGV with respect to the magnetic tape running along the circuit established on the floor in order to safely plan for corrective manoeuvres. We refer to the variable that quantifies the amount of deviation of this magnetic tape detected by the AGV sensors in both directions as Guide Error. In addition, since the main objective of this work is to detect when the AGV is likely to deviate from the circuit, we have also proposed a second predictor variable called "Guide Oscillations". This variable is quantified as the number of times the AGV has crossed the centre line of the magnetic tape. We have determined that this variable is closely related to the AGV deflection. Specifically, a high number of oscillations is a clear indicator that the AGV is operating erratically and therefore it is highly likely to start deviating from the circuit.

Due to the inertia presented by an AGV moving at high speed, correcting its trajectory once it starts to deviate from the magnetic tape requires a considerable amount of time. In real deployments, it has been observed that it takes 10 s to stop a fully loaded AGV in motion. By conducting several experiments, we have concluded that dedicating a margin of 10 to 15 s has proven to be sufficient in order to provide the AGV operator with the time needed to make the necessary corrections to keep the AGV on track. In our case, we have placed the forecast horizon even further in time (20 s) in order to provide a greater margin of safety for the AGV operator.

Furthermore, when communication between the AGV and the PLC starts to degrade, it causes high fluctuations of the Guide Error variable from positive to negative values. In fact, in preliminary experiments we observed that the models tended to predict the mean value (zero). To solve this problem, we decided to ignore the sign of the values and predict instead the absolute value of the Guide Oscillations variable. We found no evidence that the sign provides relevant information about AGV deviation, i.e., there does not appear to be any correlation between AGV malfunction and a deviation toward one of the two particular sides. Therefore, by omitting this information, we avoided this problem, resulting in more accurate predictions.

Following the same reasoning for the Guide Error variable, by predicting the absolute values of the variable, we put greater emphasis on the intensity of deviations occurring on short periods of time on both sides of the magnetic tape (positive and negative), which is clearly a key indicator of anomalous behaviour of the AGV.

We also decided to predict the mean of the absolute values of the Guide Error and Oscillations variables for the next 20 s instead of predicting the actual values. The reason for this decision is that small deviations of the AGV may occur naturally when it travels through certain curves of the circuit. Fluctuations in the mean value regarding specific locations in the circuit are a strong indicator that the AGV is not performing as expected and therefore corrective action should be taken.

In a preliminary analysis of the Guide Oscillation variable before training any model, we did not observe any significant correlation between this variable and the AGV deviation of the circuit, which led us to discard the Guide Oscillations variable as a predictor of AGV malfunction. Details of this analysis can be found in Appendix Subsection A.1. However, it is worth noting that although we discarded the Guide Oscillations variable as the predicted variable in our experiments, this variable was used as input variable to give the models an opportunity to extract some useful information from it.

4.3 Data processing

To learn the temporal relationship of the Guide Error and Guide Oscillations variables in a supervised learning task, the data is first converted into a set of lagged observations. In this way, the models can be trained to predict the 200 subsequent time steps of the Guide Error and Guide Oscillations from the window of past values of both variables. In the following subsections, we detail the process we followed for the processing of the collected data, including the splitting of the dataset in train and test set, the granularization, aggregation and standardization of the forecast variables and finally, the time-series windowing procedure.

4.3.1 Data granularization, aggregation, and standardization

All the collected data have been processed to obtain granularized time series at a fixed time interval. We decided to forecast time-aggregated values instead of instantaneous values because we observed that this variable presents too much noise for the forecast to be feasible with this approach. In fact, we have been confronted by a considerable difficulty to obtain better results than a model based on random guess. The aggregation of these values can be interpreted as an additional feature engineering step that greatly facilitates the identification of statistical patterns for the DL models, greatly speeding up training times and smoothing the convergence process.

Based on a trial-and-error procedure and evaluating the quality of the predictions using various quantitative metrics (MAE and MSE) over the validation data, we concluded that 100 ms is the best and most logical trade-off that reduces the presence of noise in the measurements while minimizing data loss. For this purpose, we combined the values in 100 ms intervals using the mean absolute value as the aggregation method for the Guide Error variable. We used the absolute value in this aggregation to prevent the model from overfitting to zero.

To accomplish this aggregation process, we decided to perform a moving average using a sliding window of size 100 ms. We have chosen the moving average instead of other aggregation methods to obtain a linear aggregation of the data that does not affect its distribution and only allows a reduction of the noise without introducing bias on the temporal correlations of the measured values. A moving average consists of dividing the time series into contiguous windows of a given size and calculating the mean of the values in each window. The result is a new time series in which each value is the average of the values of the original time series over a given time period. For example, suppose that we have a time series with 10 values and we want to calculate the mean over a sliding window of size 5 with an interval of 1. This would result in a new time series with 10 values, where the values are the result of calculating the mean over 5 contiguous values in the original time series, stepping one value at a time. When there are not enough values available to fill a window, the mean is calculated over the available values. This aggregation process was not performed for the AGV flags (obstacle detection, battery warning, etc.) because these values do not present any kind of temporal behavior related to the target variable forecasting and, therefore, aggregation is not necessary as they are not used as input for the ML/DL models. For this reason, only the last value of every 100 ms interval of the AGV flags was kept. In addition, missing values were filled with the last observed (i.e., non-missing value) of the same variable.

Finally, we applied standardization to the processed data. Standardization is a common pre-processing step in DL used to transform in order to have zero mean and unit variance. This normalization process is crucial to ensure that all the input features are represented on the same scale, which results in easier model trainings. There are several ways to standardize data, but the most simple and effective method is the Z-score method, which transforms each characteristic by individually subtracting the mean of each feature and dividing by its standard deviation.

4.3.2 Time-series windowing

To construct the samples, we used to train our models, we applied a sliding window with a unit window size to each experiment to create a time-series data set of lagged observations of window size K (\(t_{-1},\ t_{-2},...,t_{-K}\)) of each value. It should be noted that this moving window was applied separately to each experiment to ensure that the inter-dependencies captured by the lagged values in the window are between values from a single experiment and are not diluted by the inclusion of values from other experiments that are associated with a different network scenario and thus with dissimilar initial conditions. As the optimal choice of the window size K is very much problem dependant, we defined four different temporal window sizes: 40 time steps (4 s), 75 time steps (7.5 s), 150 time steps (15 s) and 300 time steps (30 s). For this procedure, we decided not to apply padding because we decided not to include any information about future values regarding different experimental conditions. Similarly, the use of a null value to serve as padding was also discarded, as this would have introduced artificial correlations in the data that could potentially bias the neural network learning process.

Finally, samples in which the AGV was completely stationary were removed. As this information was not explicitly collected during the data collection process, we used the AGV flags to identify those samples. In particular, we found that the activation of at least one AGV flag was strongly correlated with an AGV stall. In addition, the first and last 10 s of each data capture were also removed, as they exhibited large fluctuations caused by the transition from a fully stopped state to an operational mode or vice versa.

4.3.3 Dataset creation

In ML and DL, it is common to divide the data into a training dataset and a test dataset. The training dataset is used to train the model, while the test set is used to evaluate the performance of the model. Based on our experience in previous works [33], instead of using the common method of randomly spliting all available data in training and testing datasets, we applied a different separation method to obtain more conservative and robust results after testing: in our data recollection process, the first experiment was used for building the test dataset while the other two experiments were reserved as training data.

Once data separation in training and testing was completed, the training dataset was randomly divided into two separated sets: a training set (\(80\%\)) and a validation set (\(20\%\)). Subsequently, the validation set was also randomly divided into two sets, one for hyperparameter validation (\(10\%\)) and the other to monitor the training procedure in order to prevent overfitting (\(10\%\)).

4.4 Model training procedure