Abstract

The School-Work Alternation (SWA) programme was developed (under a European Commission call) to bridge the gap and establish a well-tuned partnership between education and the job market. This work details the development of the AI4Citizen pilot, an AI software suite designed to support the SWA programme. The AI4Citizen pilot, developed within the H2020 AI4EU project, offers AI tools to automate and enhance the current SWA process. It also offers novel tools to support the complex problem of allocating student teams to internship programs, promoting collaborative learning and the acquisition of teamwork skills. Notably, the AI4Citizen pilot is a pipeline of AI tools that integrates existing and novel technologies. Our exhaustive empirical analysis confirms that the AI4Citizen pilot can alleviate the difficulties of current processes in the SWA and is therefore ready for real-world deployment.

1 Introduction

Citizens engage daily with the public sector in a variety of domains and with different needs. Finding the correct contact and the associated procedure, understanding and correctly following the instructions, and making the right decision when several alternatives exist takes considerable effort. Furthermore, guiding citizens effectively is challenging for public servants, who typically lack decision-support tools to assist them. As a result, the time public servants devote to these services makes them costly. Even so, the limited availability of public servants to guide citizens still hinders the quality of service.

A similar situation holds for public services in education, the target domain of this paper. In particular, we focus on the Alternanza Scuola Lavoro, or School-Work Alternation (SWA), programme, leveraging the know-how of Fondazione Bruno Kessler (FBK), a partner in the organisation of SWA in Trentino. The European Commission calls for reinforcing the partnership between educational institutions and the job market [1]. The aim is to support the development of skills for the employability of new generations, which is regarded as a collective responsibility of educational and training contexts. Following European recommendations, Italy started a training programme for students of every high school, who are required to spend a significant number of hours in workplaces during the last three years of their high school course (Footnote 1). This scheme, called the School-Work Alternation programme, is described by Italian Law 107/2015 and involves circa 1.5 million pupils aged 15 to 19. As argued in [2], the SWA programme creates transformative learning spaces by spurring teachers to investigate how to bring formal learning closer to real-world needs and by providing students with experiences that help them develop both their technical and soft skills (Fig. 1).

Nowadays, implementing the SWA programme requires much manual intervention from public servants in schools. On the one hand, students are typically interviewed to guide them in choosing among the internships on offer; because resources are limited, this personalised guidance is very time-consuming and often suboptimal. On the other hand, schools face the intricate task of matching internship offers with students' competencies and skills so that they can ultimately assign students to internships while considering students' preferences. Therefore, decision-support tools are needed to help school personnel guide students and produce allocations that satisfy both students and the companies offering internships.

Besides that, current SWA practice allocates each student individually to an internship. This practice hinders the full potential of the programme for two reasons. First, team-based, cooperative learning has proven highly successful as a learning method in education (as evidenced by, e.g., [3,4,5]). This finding has recently spurred research on Artificial Intelligence (AI) algorithms [5,6,7] and AI-based systems for composing teams in educational scenarios (e.g., Team-maker within CATME [8], or Eduteams [9]), as well as empirical studies (e.g., [7, 10]). Second, since one of the aims of the SWA programme is to promote the development of students' soft skills [2], working in teams would foster the learning of the soft skills considered crucial for collaboration and teamwork [11].

In this context, this paper illustrates the development of the AI4Citizen pilot, a software prototype designed to address the shortcomings of the SWA programme. The pilot, developed in the context of the H2020 AI4EU project [12], provides AI tools that automate the SWA programme's current process while introducing new tools that facilitate team-based learning and the acquisition of teamwork skills. Notably, the AI4Citizen pilot pipelines a range of AI technologies, including (1) NLP algorithms to extract competencies and skills from students' curricula and companies' internship offers and to match them; (2) a chatbot to assist students in selecting internships; and (3) a novel algorithm to group students into teams and allocate them to internships. Specifically, the AI4Citizen pilot aims to make headway in supporting the SWA program with the following contributions:

- An NLP-based tool to match students with internships. The main challenge is ensuring that employers and applicants speak the same language when describing the required skills and competencies. These descriptions are often free text, which makes it difficult to automatically match job requirements with an applicant's qualifications and experiences. To tackle this issue, we developed a tool that bridges this gap by relying on ESCO [13], the multilingual classification of European Skills, Competences, Qualifications and Occupations. ESCO encompasses a taxonomy of 13,485 competencies and 2,942 occupations linked by relationships, and our tool uses it to map free-text descriptions of job requirements and candidate experiences to a standardised language.

- A chatbot to assist students. To develop the AI4Citizen chatbot, we used SAP Conversational AI, an end-to-end collaborative platform for creating chatbots. Our chatbot understands 28 different types of student intentions related to gathering information about the process and to expressing and reviewing preferences. The NLP engine recognises the intents and triggers suitable skills to interact with the end users. These skills rely on a dedicated component that accesses the database, minimising the need to exchange personal information outside the FBK perimeter.

- An empirical evaluation of the chatbot's usability. We conducted a user study to evaluate the chatbot, in which 55 students from three different classes participated. During the evaluation, each student had to single out three internships (wishes) and understand the internship context. In the first class, \(10\%\) of the students could not complete the evaluation due to a defect that was subsequently fixed. The students who completed the evaluation (90%) needed less than 30 minutes to do so, and they reported being reasonably satisfied with the experience. Satisfaction improved thanks to the fixes and to the enrichment of the vocabulary based on earlier interactions. The main criticism concerned the over-guidance offered by the chatbot: users would have preferred a freer interaction, a finding to take into account in future work. Further experiments are also needed to understand the impact on task completion and on the time required to complete the overall mission.

- An empirical evaluation of the team allocation algorithm. We conducted a twofold evaluation to confirm the usability of our proposed algorithm, Edu2Com. First, we pitted Edu2Com against CPLEX [14], a state-of-the-art linear programming solver. On synthetically generated instances of the matching problem, Edu2Com outperformed CPLEX in solving time. Second, we tasked Edu2Com with solving large, real-world instances of the problem (involving real students' profiles and internship descriptions). Our algorithm handles these instances and finds a solution, whereas an optimal solver such as CPLEX cannot even generate the necessary encoding within a reasonable time. Thus, Edu2Com solves large problem instances that CPLEX cannot handle.

- An expert-driven validation of the team allocation algorithm. Finally, we asked educational experts with experience in allocating students to internships to assess allocations produced by Edu2Com against allocations manually produced by experienced teachers. The results indicate that the experts prefer the allocations produced by our algorithm.

The rest of the paper is organised as follows. Section 2 presents and analyses our case study, Section 3 outlines the overall architecture of the AI4Citizen pilot, Sections 4, 5 and 6 describe the core AI components of AI4Citizen, Section 7 empirically evaluates the core components of our system, and Section 9 studies the potential business value of AI4Citizen. Finally, Section 10 draws conclusions and sets out paths for future research.

2 The Alternanza Scuola Lavoro case study

An essential aspect of innovation is exploiting information technology to ease people’s everyday life. In this work, we followed SAP’s best practices and adopted a Design Thinking-led development process (see Fig. 2) to discern users’ needs and collect users’ feedback.

Fig. 2: Design-led approach (from https://saptraining.build.me/)

Scope. As described in the introduction, the AI4Citizen pilot focuses on the SWA scheme, which involves all students of high schools and technical institutes during the last years of their curriculum. The goal of the scheme is to provide students with practical experience: to help them consolidate the knowledge acquired at school, test their aptitudes in the field, enrich their training, guide their study path and, ultimately, choose their future career path.

Research. In the research phase, we focused on understanding how our users interact with the system and what their individual needs are. To better understand the specifics of this case study, we ran a series of interviews with school and company stakeholders, leveraging the central role of FBK in the organisation of SWA in Trentino. We eventually derived the following four personae [15]:

- Ludovica: she is an 18-year-old student in the fourth year of a classical high school and is looking for an internship where she can experience teamwork. She has found an internship offer that seems suitable; however, she does not fully understand which skills the company requires. Fig. 3 shows a detailed view of Ludovica's persona.

- Arnoldo: he is a 61-year-old teacher who manages the SWA office at his institute, sharing this duty with a colleague. Every year, they help more than 300 students, first to find a suitable internship; then they handle the initial administrative work, resolve problems during the internship and, at the end, collect the students' final reports and the tutors' evaluation forms.

- Carolina: she is a 48-year-old assistant in the internships office of a large research centre. She takes care of the relationships with local high schools, interacts with tutors like Arnoldo when they have to select a team of interns, and chases researchers to encourage them to prepare new internship offers. Carolina also supports researchers in preparing the final evaluation reports.

- Rosanna: she is a busy 37-year-old researcher. Every summer, she looks for a team of two to four students able to help her with pet projects, also aiming to pass on her passion for scientific research.

Synthesize. Following the interviews with teachers, researchers, and parents, we focused on Ludovica’s and Arnoldo’s experiences to synthesize the main scenario identifying their main pain points. The following paragraph, the scenario as-is, describes the typical interaction between our student Ludovica and the reference teacher Arnoldo nowadays.

Arnoldo is the reference teacher for the SWA for a set of classes. During the past weeks, he has compiled and classified all the internship offers received and published the list on the school's website. During the previous month, he has also sent several emails about new internship proposals to all the students and their parents. First, Ludovica checks her mailbox to collect all these emails and then scrolls through the internship list on the school website. She also asks a friend of her family, who owns a high-tech company, whether he can host her for an internship, and he agrees. She then prepares a list of the internship proposals that seem interesting to her. The list contains two internships: one taken from an email sent by the reference teacher and one at the company of her family's friend. At this point, Ludovica would like to discuss the two options with Arnoldo, the teacher who manages SWA at her school, so she makes an appointment with him. During the meeting, Ludovica would like to better understand the goals and objectives of the internships and determine whether the internship at the family friend's company is feasible. The discussion should focus on how well each internship matches Ludovica's competencies, objectives, weaknesses, and strengths. Unfortunately, Arnoldo has to handle thousands of questions from students and can dedicate only a fraction of the time they require, so the question about the family friend's internship goes unanswered. Ludovica feels totally lost in the process and fills out a form with three preferences. A few weeks later, Arnoldo tries to make an educated choice after receiving the preferences of all students, and composing the teams for the different internships looks to him like solving a complex jigsaw puzzle without knowing the image to compose!
Still, Arnoldo remembers the discussion with Ludovica, and he finds that an apparently good global solution assigns her to her second choice. Unsurprisingly, young Ludovica is frustrated, especially when Arnoldo, fighting with his spreadsheet, cannot precisely explain how he arrived at these internship matchings.

Following this feedback on the current process, and to enhance the experience of students and reference teachers, we decided to focus on two specific problems that are particularly time-consuming and not supported by the current version of the Vivoscuola portal (Footnote 2) operated by the Autonomous Province of Trento:

1. How can we best inform and guide Ludovica in her internship quest with a teenager-ready conversational interface?

2. How can we support Arnoldo in building teams of complementary interns while optimizing both the acquisition of new skills and the satisfaction of the students' personal wishes?

Ideate. The following description, the scenario to-be, explains how AI will change Ludovica's quest for the perfect internship and help Arnoldo devote more quality time to students.

An announcement was made on the school billboard, informing students that it was time to start thinking about their SWA options. Students were informed that they could use social media channels like Facebook and Twitter to search for and enrol in internships. These channels were securely connected to the SWA-FBK system, allowing it to access information about each student, including their competencies, previous internship experiences, and previous years' statistics and testimonials. Ludovica decided to connect to the chatbot using her SWA-FBK credentials. The general chatbot asked her questions to determine her interests and preferences, using both the information stored in the SWA-FBK system and additional information provided by Ludovica. The chatbot answered Ludovica's common questions, while redirecting some questions about a specific internship offer to Arnoldo, who now receives only a small share of the questions and can therefore answer them in due time. The chatbot proposed several internship programs aligned with Ludovica's interests and preferences and passed this information on to the team formation algorithm. After a couple of hours, Arnoldo received the team assignment provided by the algorithm. Although Ludovica had expressed a strong desire to be assigned to her first choice, Arnoldo realised that this constraint decreased the overall assignment score and the fit of five teams, including Ludovica's. As a result, he followed the algorithm's recommendation, assigned Ludovica to her second choice, and explained the rationale behind the decision to her.

3 A blueprint of the AI4Citizen architecture

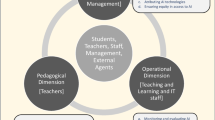

Before proceeding with the implementation details of each component, this section describes the overall architecture of the AI4Citizen pilot. The architecture is based on pipelining the different AI technologies: the competence and skill extraction tool, the chatbot assistant and the team formation algorithm. At the same time, these technologies must be integrated with FBK's existing IT system, which manages students' profiles (information regarding their studies and past activities) along with internship offers. As a result, we deliver a prototype implementation of our end-to-end AI4Citizen pilot. In a nutshell, Fig. 4 illustrates the pilot's architecture, which consists of the entities below:

- A module for interfacing with, and importing data from, the external pre-existing IT system that contains and manages information on students' profiles, activities, experiences, etc.

- A module for interfacing with, and importing data from, the external IT system that contains and manages job/internship offer information.

- Data management services that facilitate the “normalization” of the data in terms of vocabularies and taxonomies for offer/candidate matching. More specifically, the two key services here are (i) skill matching, which associates an entity with the skills and competences in the ESCO ontology [13]; and (ii) a multi-dimensional classification of internships to guide the selection process. The latter service characterizes offers along different practical dimensions, e.g., activity domain, geographical distribution, and context (such as private vs public hosting entities).

- A team formation service to match teams of students to internships, taking into account competencies, preferences, and availability in a holistic, cross-organizational manner.

- An internship browser that brings this information together, exposing different APIs for searching, matching, and selecting offers, as well as for storing preferences and matching teams to available offers.

- A chatbot-based Natural Language Processing (NLP) and User Interaction (UI) service and its associated chatbot logic component, which make the AI4Citizen pilot available to the main stakeholders, in particular students and teachers. While the role of the UI is to make AI4Citizen directly available on the web or on a mobile phone, the chatbot logic drives the selection process, answers frequently asked questions and helps collect students' preferences.

The architecture of the AI4Citizen pilot considers two execution environments. On the one hand, the SWA FBK environment runs the SWA system that predates AI4Citizen; it is in charge of storing and handling the schools' and companies' data. On the other hand, the AI4EU environment hosts the AI4EU services required to enable an AI-based SWA system, namely competence and skill extraction, team formation, the chatbot, and the internship browser. The two environments provide strong data encapsulation: interactions with the new components are limited to a set of restrictive APIs, so as to avoid any use of Personally Identifiable Information and to respect the terms of service between FBK and the Trento province.
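The restrictive, PII-avoiding API boundary between the two environments can be illustrated with a small sketch. It assumes, hypothetically, that records leaving the SWA FBK environment are projected onto a whitelist of non-identifying fields and that the real student ID is replaced by a keyed pseudonym (HMAC-SHA256). The field names, `ALLOWED_FIELDS`, `pseudonymise` and `export_profile` are illustrative only and are not taken from the pilot's code.

```python
import hashlib
import hmac

# Hypothetical whitelist: the only (non-identifying) fields allowed to cross
# from the SWA FBK environment to the AI4EU services.
ALLOWED_FIELDS = {"school", "grade", "curriculum", "competences", "wishes"}


def pseudonymise(student_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256) of the student ID. Only the SWA FBK side holds
    the key, so only it can link results back to a real student."""
    return hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def export_profile(record: dict, secret_key: bytes) -> dict:
    """Project a student record onto the whitelisted fields and replace the
    real identifier with a pseudonym before it leaves the FBK perimeter."""
    exported = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    exported["pid"] = pseudonymise(record["student_id"], secret_key)
    return exported


if __name__ == "__main__":
    key = b"kept-inside-the-SWA-FBK-environment"  # hypothetical secret
    record = {"student_id": "S-0042", "name": "Ludovica", "email": "ludovica@example.org",
              "curriculum": "classical", "grade": 4, "wishes": ["lab-1", "museum-2"]}
    print(export_profile(record, key))  # no name, email or real ID in the output
```

Because the key never leaves the FBK side, the AI4EU services can still tell students apart (the pseudonym is stable) without learning who they are; re-linking results such as a team allocation to real students would happen only inside the FBK environment.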

Our implementation follows the principles of Cloud-Native Applications. It uses a container-based component model in which each micro-service is deployed in a separate Docker container on top of the cloud infrastructure. The chatbot NLP and UI components run on the SAP cloud and interact with the chatbot logic through a REST API over a secure channel.

The following sections describe the main components in detail (Table 1).

4 Competence and skill extraction

Every day, thousands of people look for a job and share their curriculum vitae through different channels, such as social networks and employment agencies. Meanwhile, a similar number of companies look for workers and use the same channels to share the requirements of their open positions. In the scenario presented in this paper, a similar situation arises between students looking for internships and companies searching for candidates with appropriate skills and competencies.

The fundamental problem here is making the two parties speak the same language when characterising the skills and competencies required by companies and those that candidates have acquired through the activities and experiences of their careers and/or studies. Indeed, descriptions of experiences and school curricula are frequently expressed in natural language, which makes it challenging to match requests and offers automatically.

To address this issue, we have defined and developed a tool that bridges the gap between the required and provided competencies when expressed as a free text description.

To reach this goal, we use two existing resources: (i) the ESCO ontology [13] and (ii) the FastText software [16]. ESCO is the multilingual classification of European Skills, Competences, Qualifications and Occupations. It contains a taxonomy of 13,485 competencies and 2,942 occupations connected by relations, and it was developed as part of the Europe 2020 strategy. It identifies and categorises skills, competencies, qualifications and occupations relevant to the EU labour market and to education and training, and it systematically shows the relationships between the different concepts, described in 27 European languages. It has recently been adopted in various countries and regions to describe the professional context of the corresponding territories unambiguously. We use the ESCO ontology as the basis for describing job and internship offers as well as for characterising candidates' skills and experiences.

The second resource, FastText, is a state-of-the-art library developed by Facebook that learns word representations, which we use to compute the semantic similarity between two text spans. It is released under an open-source license and is available in over 100 languages.

FastText is an evolution of word2vec [17], a word representation model where each word is an object in a vector space, and its position is optimised for the task of predicting the surrounding context.

The main difference between the two models is that the representation \(v_w\) of a word w is not only the representation of its symbol, but is augmented with the sum of the representations of its subword units:

\[ v_w = u_w + \sum_{s \in \mathcal{S}} u_s, \]

where \(\mathcal {S}\) is the set of character n-grams (of selected lengths) contained in w, \(u_w\) is the vector representation of the whole word w, and \(u_s\) are the representations of the subwords.
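The subword composition \(v_w = u_w + \sum_{s \in \mathcal{S}} u_s\) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `lookup` is a hypothetical embedding table that returns deterministic pseudo-random vectors instead of trained ones, the n-gram lengths 3 to 6 and the `<`/`>` word-boundary symbols follow the usual FastText convention, and a library implementation may additionally normalise the sum.

```python
import hashlib
import random

DIM = 16  # toy dimensionality; published pre-trained FastText vectors are 300-dimensional


def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> set:
    """Character n-grams of `word`, padded with the boundary symbols '<' and '>';
    the padded whole word is excluded because it is represented by u_w."""
    padded = f"<{word}>"
    grams = set()
    for n in range(n_min, n_max + 1):
        for i in range(len(padded) - n + 1):
            gram = padded[i:i + n]
            if gram != padded:
                grams.add(gram)
    return grams


def lookup(key: str) -> list:
    """Hypothetical embedding table: a deterministic pseudo-random vector per key
    (a trained model would return learned vectors instead)."""
    seed = int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def word_vector(word: str) -> list:
    """v_w = u_w + sum of u_s over the subword set S of w."""
    v = lookup("word:" + word)  # u_w, kept in a separate key space from n-grams
    for s in char_ngrams(word):
        u_s = lookup("ngram:" + s)
        v = [a + b for a, b in zip(v, u_s)]
    return v


if __name__ == "__main__":
    print(sorted(char_ngrams("where", 3, 3)))  # trigrams of "<where>"
    print(len(word_vector("problem")))
```

Since related word forms such as "problem" and "problems" share most of their n-grams, their vectors share most of their summands, which is what makes subword models robust to inflection and unseen words.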

Combining the two resources, we have developed the so-called Competence and Skill Extraction (CSE) tool, an AI component that provides the following functionalities:

- using FastText, we compute the semantic similarity between plain text (from a job description or a CV experience) and the competencies appearing in the ESCO ontology;

- we then rank the identified competencies by this similarity value; and

- according to the resulting ranking, we suggest the most appropriate candidate competencies for the description.
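The three CSE steps (similarity, ranking, suggestion) can be illustrated with a minimal sketch that embeds a text as the average of its word vectors and ranks competency labels by cosine similarity. The tiny 2-D `TOY_VECTORS` table, the averaging strategy and the function names are assumptions made for illustration; the actual tool relies on FastText vectors and ESCO competency labels (see [18] for its implementation).

```python
import math

# Hypothetical 2-D "embeddings" standing in for FastText vectors.
TOY_VECTORS = {
    "solve": [1.0, 0.1], "problems": [0.9, 0.2], "problem": [0.9, 0.2],
    "solving": [1.0, 0.1], "scientific": [0.7, 0.5], "methods": [0.6, 0.4],
    "plan": [0.5, 0.8], "activities": [0.3, 0.9],
    "customer": [0.0, 1.0], "service": [0.1, 0.9],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def embed(text, word_vectors):
    """Average the vectors of the known words in `text` (unknown words are skipped)."""
    vecs = [word_vectors[w] for w in text.lower().split() if w in word_vectors]
    if not vecs:
        return None
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def rank_competencies(description, competencies, word_vectors, top_k=3):
    """Score every competency label against the description and return the
    top_k (label, similarity) pairs, best first."""
    query = embed(description, word_vectors)
    if query is None:
        return []
    scored = []
    for label in competencies:
        vec = embed(label, word_vectors)
        if vec is not None:
            scored.append((label, cosine(query, vec)))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    labels = ["problem solving", "plan activities", "customer service"]
    for label, score in rank_competencies("apply scientific methods to solve problems",
                                          labels, TOY_VECTORS, top_k=2):
        print(f"{label}: {score:.3f}")
```

Averaging word vectors is only one simple way to embed a multi-word span; it keeps the sketch short, and swapping in another sentence-embedding strategy would leave the ranking step unchanged.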

As a positive side effect of using native multi-language resources, the tool is automatically able to compare texts written in different languages. For example, the text “application of rules and scientific methods to solve problems” can be semantically connected to the competencies “develop strategy to solve problems”, “plan activities to accomplish specific goals”, and “problem solving”. A similar result can be obtained by replacing the English text with the Italian one, “applicare regole e metodi scientifici per risolvere problemi”. Consequently, the tool may be used in international markets, making it possible to relate different IT systems and their data across EU boundaries and facilitate internships abroad in our context.

It is important to remark that the tool supports a wide range of use cases, both in the proposed scenario and in broader settings. In particular, the tool makes it possible to:

- annotate existing job descriptions with skill/competence information for further processing;

- guide the description and annotation of information within the IT systems managing the corresponding data (such a tool may assist operators in appropriate and concise data entry);

- support data analysis tools when reasoning across all the data, also considering historical perspectives, legacy sources and data, etc.;

- bridge the gap between systems that use different skill/competence taxonomies in different contexts, such as different territories and organisations.

The implementation of the skill matching tool is done in Python and is available on GitHub under the Apache 2.0 license [18].

5 Chatbot

To enable a smooth interaction between students and the system, so that students can learn about the SWA program, get to know the offered internship programs, and ultimately express their preferences, we decided early on to adopt a conversational user interface. Conversational UX is becoming a de facto standard for processes of medium complexity that can be customised to a specific user by gathering contextual information (e.g., student profile, topics, grade, past internships, past conversations). Leveraging this information, we enable students to ask questions in natural language about the process itself in a generic (e.g., What is an SWA program?), contextual (e.g., Who is my referent professor?) or individualised (e.g., What are my recommended internships?) way; such questions may in turn trigger follow-up questions so that the chatbot can propose a pertinent answer. A conversational UX also better leverages Generation Z's inclination to use instant messaging platforms.

Our choice to use SAP Conversational AI [19] to implement the AI4Citizen chatbot was due to different factors:

1. It does not provide self-learning capabilities, since we wanted to avoid the danger of bot manipulation (e.g., the Tay experiment [20]);

2. Its NLP engine provides multi-language support, including Italian, Spanish and Catalan on top of English;

3. It mixes no-code and scripting capabilities with an API, enabling bidirectional interaction with other software components;

4. Its platform provides enterprise-grade features, including GDPR support and monitoring and debugging capabilities.

To better understand a chatbot's development process, we need to introduce some of the terminology used in the domain. The main aim of a bot is to understand the expressions a user might say. For this purpose, the bot's NLP engine analyses each expression and extracts features such as dates, cities or person names (the entities), enriching them with information suitable for programming (e.g., the GPS location associated with a city) and possibly with a sentiment analysis of the expression. Twenty-eight types of entities, the gold entities, are predefined, and the programmer can add custom entities to adapt the chatbot's capabilities to a given domain. Once this first stage is performed, the conversational engine attempts to match the expression, using a notion of expression distance, with one of the expressions captured at design time to express an intent. Intents are the core of the chatbot and are responsible for understanding user requests; intent recognition leverages the platform's machine-learning capabilities, based on the corpus of expressions defined for each intent. To answer an intent, a skill is triggered, which may ask the user to enrich the request with details represented by entities (e.g., the departure time when booking a train to a city) so that it can propose a pertinent answer and continue the conversation. Actions enable a skill to build the answer directly on the platform with messages, to interact with external components through webhooks, or to trigger complementary skills.
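The intent/entity/skill terminology can be illustrated with a toy sketch. It replaces the platform's machine-learning intent classifier with a simple token-overlap (Jaccard) distance to the design-time expressions, uses a dictionary lookup for a custom city entity, and makes the skill ask for a missing entity before answering. All intents, cities, thresholds and function names are hypothetical, and none of this is the SAP Conversational AI API.

```python
import re

# Hypothetical intents, each defined at design time by a few example expressions.
INTENTS = {
    "faq_what_is_swa": ["what is the swa programme", "what is school work alternation"],
    "recommend_internships": ["what are my recommended internships", "suggest internships for me"],
    "find_internship_in_city": ["find internships in a city", "internships near a city"],
}

# Hypothetical custom entity: city names loaded from the internship database.
CITIES = {"trento", "rovereto", "pergine"}

# Entities each skill must collect before it can answer (hypothetical).
REQUIRED_ENTITIES = {"find_internship_in_city": ["city"]}


def tokens(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recognise_intent(utterance, threshold=0.3):
    """Toy stand-in for intent classification: pick the intent whose closest
    example expression has the highest token overlap with the utterance."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENTS.items():
        score = max(jaccard(words, tokens(example)) for example in examples)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


def extract_entities(utterance):
    """Dictionary-based extraction of the custom 'city' entity."""
    found = sorted(tokens(utterance) & CITIES)
    return {"city": found[0]} if found else {}


def run_skill(utterance):
    """Trigger the skill for the recognised intent, asking for missing entities first."""
    intent = recognise_intent(utterance)
    if intent is None:
        return "Sorry, I did not understand. Could you rephrase?"
    entities = extract_entities(utterance)
    missing = [name for name in REQUIRED_ENTITIES.get(intent, []) if name not in entities]
    if missing:
        return f"Which {missing[0]} are you interested in?"
    return f"{intent} -> {entities}"


if __name__ == "__main__":
    for text in ["What is the SWA programme?", "find internships", "find internships in Trento"]:
        print(text, "=>", run_skill(text))
```

The design point the sketch tries to convey is the separation of concerns: intent recognition decides *what* the user wants, entities carry the *details*, and the skill decides whether it has enough details to answer or must ask a follow-up question.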

The SWA chatbot leverages all these capabilities to interact with the student on the one hand and, on the other, with the stored information about the student's curriculum or previous sessions. The skills developed fall into four groups:

- 14 skills related to answering frequently asked questions;

- 10 customised skills that capture preferences and provide individual advice to the student;

- 2 skills that implement an authentication pattern to interact with the customised skills;

- 2 skills developed for facilitating the testing sessions.

These skills are associated with circa 30 intents capturing more than 200 expressions. We also enriched the gold entities with eight custom entities serving three different purposes:

- to enable domain-specific interactions: these encompass competencies (e.g., related to the ESCO ontology), company names and cities contained in the internship database. While we initially did not foresee adding cities as entities, we realised that the corresponding gold entities lack precision for small cities. Fortunately, these entities can be loaded automatically through the chatbot API whenever the database is modified;

- to capture the format of specific identifiers;

- to enable students to refer to chatbot artefacts (e.g., to ask questions about the chatbot itself).

In order to propose individual advice to students, the system should access or store information related to each student.

This access must be secure, and due to privacy concerns we had to minimise the information stored at the chatbot level. For that purpose, our chatbot relies on a set of webhooks that call a stateless proxy, called chatbot logic, residing in the FBK realm. Communication takes place over a secure channel that carries the information captured during the conversation with the student. The chatbot logic interacts with the internship browser component to store or retrieve information in order to build the answer based on (i) the student's academic profile (e.g., school, grade, curriculum), (ii) the preferences already captured in the current or previous sessions, and (iii) the information captured by the current skill. Aside from its privacy advantages, this approach also lets us use a full-fledged programming language to elaborate the answers, relying on the provided message library (e.g., text, card, button). Figure 5 shows an example of a simple interaction during the early stage of the conversation.
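A stateless chatbot-logic proxy of the kind described above might look like the following sketch: every call receives the conversation data from the webhook, reads or writes through the internship browser, and keeps nothing in memory between calls. The payload keys, the skill names (`add_wish`, `list_wishes`, `recommend`), the in-memory `InternshipBrowser` stand-in and the response dictionaries are hypothetical; they only loosely mirror the text and button message types mentioned above and are not the SAP Conversational AI webhook format.

```python
from dataclasses import dataclass, field


@dataclass
class InternshipBrowser:
    """Hypothetical in-memory stand-in for the internship browser API, which
    is where all persistent state (profiles, offers, wishes) lives."""
    profiles: dict
    offers: list
    preferences: dict = field(default_factory=dict)

    def get_profile(self, student_id):
        return self.profiles[student_id]

    def get_preferences(self, student_id):
        return list(self.preferences.get(student_id, []))

    def add_preference(self, student_id, offer_id):
        self.preferences.setdefault(student_id, []).append(offer_id)


def handle_webhook(payload, browser):
    """Stateless handler: everything it needs arrives in `payload` or is
    fetched from the browser; nothing is kept between calls."""
    student_id = payload["student_id"]
    skill = payload["skill"]
    if skill == "add_wish":
        browser.add_preference(student_id, payload["offer_id"])
    wishes = browser.get_preferences(student_id)
    if skill in ("add_wish", "list_wishes"):
        return {"type": "text", "content": f"Your wishes so far: {', '.join(wishes) or 'none'}"}
    if skill == "recommend":
        profile = browser.get_profile(student_id)
        # Suggest offers matching the student's curriculum that are not already wished for.
        matches = [o["id"] for o in browser.offers
                   if o["curriculum"] == profile["curriculum"] and o["id"] not in wishes]
        buttons = [{"title": m, "value": m} for m in matches[:3]]
        return {"type": "buttons", "content": {"title": "Suggested internships", "buttons": buttons}}
    return {"type": "text", "content": "Sorry, I cannot help with that yet."}


if __name__ == "__main__":
    browser = InternshipBrowser(
        profiles={"s1": {"curriculum": "science"}},
        offers=[{"id": "lab-1", "curriculum": "science"},
                {"id": "museum-2", "curriculum": "humanities"},
                {"id": "lab-3", "curriculum": "science"}])
    print(handle_webhook({"student_id": "s1", "skill": "add_wish", "offer_id": "lab-1"}, browser))
    print(handle_webhook({"student_id": "s1", "skill": "recommend"}, browser))
```

Keeping the handler stateless means the chatbot platform only ever sees the rendered messages, while profiles and wishes stay behind the browser API; it also makes the handler easy to scale or restart, since any instance can serve any request.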

To sum up, Fig. 6 shows the different types of skills and their related actions that enable Ludovica to engage with the chatbot in order to better understand the rationale behind the SWA activity, ask questions about the process and administrative details, express preferences, and browse internship offers.

6 Allocating teams of students to internships

This section focuses on the problem of forming teams of students and matching them with internships (i.e., allocating student teams to internships), which corresponds to the team formation service shown in Fig. 4. Due to the complexity of this problem, it is difficult and time-consuming for an expert to make optimal allocations. Whereas we relied on existing technologies to develop the competence and skill extraction service and the chatbot (Sections 4 and 5), creating our team formation service requires new research contributions. Section 6.1 explains why, and Section 6.2 formally defines the allocation problem. Then, in Section 6.3, we encode the allocation problem as a linear program (LP) that can be solved optimally with an off-the-shelf state-of-the-art solver. Lastly, in Section 6.4, we introduce a novel heuristic algorithm for computing team allocations. As demonstrated in Section 7.3, this algorithm allows us to tackle real-world problems of significant size that cannot be solved with sophisticated optimisation libraries such as CPLEX.

6.1 Motivation

Many real-world problems require teams of people or machines to work together on tasks, such as robot teams in search and rescue missions [21] or teams of drones for surveillance [22]. As a result, there has been much research on various methods for assigning teams to tasks in the field of artificial intelligence.

Existing literature has explored how to form one team to work on one task [23,24,25], form one team to work on many tasks [26], and form multiple teams to solve a single task [5, 10, 27, 28]. Some research has also addressed forming multiple teams to match multiple tasks, allowing overlaps either on agents participating in many teams [21] or on many teams performing the same task [29]. However, little attention has been paid to allocating teams to tasks with no overlaps, i.e., teams that share no common members and tasks that are each tackled by exactly one team. This is precisely the problem our team formation service addresses when allocating teams of students to internships. Although some research on this topic exists [30, 31], we cannot rely on these approaches to solve our problem due to several shortcomings: one of them uses brute-force and branch-and-bound techniques that limit the number of agents and tasks that can be analysed [31], while the other handles only a small number of tasks [30].

Additionally, existing models assume that a team must possess exactly the competencies a task requires. Such an assumption is not always practical in real-world scenarios. As [28] points out, the existing literature adopts this assumption and distinguishes between two competence models: Boolean models, where an agent either possesses or lacks a competence [23,24,25, 30], and graded models, where an agent possesses a competence to some degree [5, 27, 28, 32]. However, in the real world, possessing a similar competence may suffice for handling a specific required competence. Consider, for example, the educational world, where a student can be adequate for an internship program without possessing all the required competencies exactly as requested, as long as the student's competencies are similar enough.

Therefore, this work aims to develop a new formalisation and algorithm that can practically address the many teams to many tasks allocation problem. In what follows, we use the terms agents and tasks instead of students and internship programs to present the problem more generally. Later (in Section 7.3), we apply our general formulation to our case study of matching teams of students with internship programs. This approach allows us to consider the competencies possessed by team members, including similar competencies that may still qualify a student for an internship even if the student does not possess the exact competence required by the task. Ultimately, our goal is to develop a novel algorithm that can effectively allocate teams of agents to tasks in real-world scenarios.

6.2 Defining the team allocation problem

This section formally casts the problem as an optimisation problem. First, we introduce the basic concepts of the team allocation problem; then we turn to competence models and put forward the model used in this work. Finally, we formally define the optimisation problem.

6.2.1 Basic Concepts

According to the Oxford Learner's DictionaryFootnote 3, competence refers to the ability to perform well, the authority or power to handle a particular situation, or a skill needed to perform a particular job or task. Let us denote with \(\mathcal {C}\) a fixed set of competencies. A task is described through its requirements on (i) competencies and (ii) team size. Firstly, for some agent(s) to successfully carry out a task, they must adequately deal with the required competencies. Secondly, the required team size signifies the necessary and sufficient number of agents working together to complete the task successfully, i.e., a team with fewer members than the required team size will not be able to complete the task, while a larger team over-consumes (agent) resources. For example, an internship program in a computer tech company might require four competencies (machine learning principles, coding in Python, web development, and fluency in English) and a team of size three; that is, the company needs three students who jointly possess the four required competencies. Moreover, even though every required competence is necessary for successfully completing a task, not all competencies are equally important; as such, we consider the competencies' relative importance as part of the task description. For example, for an internship in a computer tech company, coding in Python might be more critical than fluency in English. Formally, a task \(\tau \) is given by \(\langle C_\tau , w_\tau ,\textrm{s}_\tau \rangle \), where \(C_\tau \subseteq \mathcal {C}\) denotes the required competencies, \(w_\tau :C_\tau \rightarrow (0,1]\) is a relative importance weight function, and \(\textrm{s}_\tau \in \{1,2,\ldots \}\) denotes the required team size.Footnote 4 We denote with \(T=\{\tau _1,\cdots ,\tau _m\}\) a set of m tasks (with \(|T| = m\)). An agent is described through the competencies they possess: each agent \(a\) has acquired competencies \(C_a \subseteq \mathcal {C}\), and we denote with \(A=\{a_1,\cdots ,a_n\}\) a set of n agents (with \(|A| = n\)).
A team K corresponds to a subset of agents, \(K \subseteq A\), who jointly work on some task(s). A size-compliant team K for some task \(\tau \in T\) is a team such that \(|K| = \textrm{s}_\tau \), i.e., a team with as many members as required by \(\tau \). We denote with \(\mathcal {K}_\tau = \{K \subseteq A : |K| = \textrm{s}_\tau \}\) the set of the size-compliant teams for \(\tau \) given a set of agents A.
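The definitions above map naturally onto small data types. The sketch below is illustrative only: the class names `Task` and `Agent` and the helper `size_compliant_teams` are hypothetical, not part of the pilot's code. A task stores its weight function \(w_\tau\) (whose keys are \(C_\tau\)) and its size \(\textrm{s}_\tau\), and \(\mathcal {K}_\tau\) is enumerated with `itertools.combinations`.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Task:
    name: str
    weights: dict   # w_tau: required competence -> relative importance in (0, 1]
    size: int       # s_tau: required team size

    def __post_init__(self):
        if not all(0 < w <= 1 for w in self.weights.values()):
            raise ValueError("importance weights must lie in (0, 1]")
        if self.size < 1:
            raise ValueError("team size must be a positive integer")

    @property
    def competencies(self):
        return set(self.weights)    # C_tau is the domain of w_tau


@dataclass(frozen=True)
class Agent:
    name: str
    competencies: frozenset         # C_a


def size_compliant_teams(task, agents):
    """K_tau: every subset of `agents` with exactly s_tau members."""
    return [frozenset(team) for team in combinations(agents, task.size)]
```

With four agents and a task of size three, `size_compliant_teams` returns the four possible three-member teams.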

6.2.2 Competence coverage and affinity

Before assigning a team of agents to a task, we must ensure that the team possesses the competencies the task requires, as given by \(C_\tau \). For a team of agents K to be considered suitable for a task \(\tau \), there must be, for each competence required by \(\tau \), at least one agent in K who possesses that competence.

The current literature on team-task allocation considers competencies as either Boolean or graded features, with a team's matching quality typically determined by a utility function. However, these models are rather restrictive. In this study, we propose a method for assessing the matching quality of a team with a task based on the semantic similarity between the competencies required by the task and those possessed by the team. More precisely, we introduce the concepts of competence coverage and competence affinity, which provide an intuitive way to determine the matching quality of a team for a task. Competence coverage is determined by the degree of similarity between the task's required competencies and the team's collective competencies. Taking the semantic similarity of competencies into account allows us to overcome the limitations of current competence models.

Within the scope of a specific domain (e.g., the educational domain), we can structure the domain's competencies to capture the semantic relations among them. More and more entities (countries, organisations, institutes, etc.) work towards registering and structuring competencies in ontologies, e.g., ESCO [13], O*Net [33], SFIA [34], ISFOL [35]. For example, two distinct competencies, such as coding in C++ (competence c) and coding in Java (competence \(c'\)), share core principles: both concern compiled, object-oriented languages.

Therefore, we assume that the competencies in \(\mathcal {C}\) are structured in a competence ontology based on the semantic relations among them. Then, for any two competencies \(c, c' \in \mathcal {C}\), there is a degree of similarity, denoted as \(\textrm{sim}({c,c'}) \in [0,1]\), reflected in the competence ontology. Moreover, we assume that the competencies can be represented in a (tree-like) graph structure, where nodes denote competencies and directed edges indicate close semantic relations between nodes (parent nodes denote broader concepts than their child nodes). In Section 7.3, we use the ESCO ontology, a well-established ontology with these properties. Given a competence ontology of \(\mathcal {C}\) and two competencies \(c,c' \in \mathcal {C}\), we compute the semantic similarity between c and \(c'\) with a variant of the similarity metric presented in [36]. Unlike [36], the variant we propose guarantees that any competence node is maximally similar to itself (i.e., \(\textrm{sim}({c,c})=1\)). Thus, the similarity is given by

where l stands for the shortest path connecting competencies c and \(c'\) and h stands for the depth of the deepest competence subsuming c and \(c'\). Parameters \(\kappa ,\lambda \) control the impact of the distance l and depth of common ancestor h on the similarity metric, respectively. Similarly to [37], for any two \(c,c'\in \mathcal {C}\) it holds that \(\textrm{sim}({c,c'}) \in [0,1]\).
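A sketch of how l and h can be read off a tree-shaped ontology follows. The paper's exact formula is not reproduced in this text, so the functional form is an assumption: it uses the exponential-in-distance, hyperbolic-tangent-in-depth shape widely used for ontology-based similarity, with \(\textrm{sim}(c,c)=1\) handled as a special case. The parameter values \(\kappa = 0.2\), \(\lambda = 0.6\) and the toy ontology are illustrative only.

```python
import math


def path_to_root(c, parent):
    """[c, parent(c), ..., root] in a tree-shaped competence ontology."""
    path = [c]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path


def similarity(c1, c2, parent, kappa=0.2, lam=0.6):
    """ASSUMED form: 1 if c1 == c2, else exp(-kappa * l) * tanh(lam * h).

    l: length of the shortest path between c1 and c2 (through their deepest
       common ancestor); h: depth of that ancestor (the root has depth 0).
    """
    if c1 == c2:
        return 1.0
    up1, up2 = path_to_root(c1, parent), path_to_root(c2, parent)
    common = next((a for a in up1 if a in up2), None)  # deepest common subsumer
    if common is None:
        return 0.0                                     # no shared ancestor
    l = up1.index(common) + up2.index(common)
    h = len(path_to_root(common, parent)) - 1
    return math.exp(-kappa * l) * math.tanh(lam * h)
```

Both factors lie in [0, 1], so the result stays in [0, 1]; a longer path lowers similarity, and a deeper (more specific) common ancestor raises it.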

We say that an agent \(a\in A\) with acquired competencies \(C_a\subseteq \mathcal {C}\) covers a competence \(c \in \mathcal {C}\) through its most similar competence \(c'\), with a degree equal to the semantic similarity between c and \(c'\), i.e., \(\textrm{cvg}(c, a) = \max _{c' \in C_a} \textrm{sim}({c,c'})\). Moreover, we say that an agent \(a\in A\) covers a task \(\tau \in T\) with required competencies \(C_\tau \subseteq \mathcal {C}\) to a degree that depends on how well a covers each of the required competencies. Specifically, we use the product of a's competence coverage across all required competencies:

\[\textrm{cvg}(\tau , a) = \prod _{c \in C_\tau } \textrm{cvg}(c, a)\]
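Both coverage notions are short to express in code. In this sketch the similarity function is passed in as a parameter and a hypothetical lookup table stands in for the ontology-based metric. Returning 0 for an agent with no competencies is an added convention, not something the text specifies.

```python
from math import prod


def cvg_competence(c, agent_competencies, sim):
    """cvg(c, a) = max over c' in C_a of sim(c, c'); 0 if a has no competencies."""
    return max((sim(c, c2) for c2 in agent_competencies), default=0.0)


def cvg_task(required, agent_competencies, sim):
    """Product of the agent's coverage over every competence required by the task."""
    return prod(cvg_competence(c, agent_competencies, sim) for c in required)


# Toy similarity table standing in for the ontology-based metric (hypothetical values).
SIM = {frozenset({"cpp", "java"}): 0.36, frozenset({"python", "java"}): 0.30}


def toy_sim(x, y):
    return 1.0 if x == y else SIM.get(frozenset({x, y}), 0.0)
```

An agent knowing C++ and Python covers a "Java, Python" task with degree 0.36 × 1.0 = 0.36: Java is covered through C++, its most similar competence.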

In a team context, it is unlikely that each agent will need to cover all the required competencies. Instead, team members collaborate and complement each other by sharing responsibilities [38]; notably, [39] highlights that recent studies define teamwork through the assignment of responsibilities to agents. Thus, we need a competence assignment function. Let \(\tau \in T\) be a task and \(K\subseteq A\) a team; each agent \(a \in K\) is assigned some of the required competencies in \(C_\tau \). That is, each agent in the team is responsible for covering only some of the competencies required by the task. Formally, a competence assignment function (CAF), denoted as \(\eta _{\tau \rightarrow K} : K \rightarrow 2^{C_\tau }\), assigns a subset of required competencies to each team member \(a\in K\). As noted in [27, 28], a CAF should satisfy the following property: every competence \(c \in C_\tau \) must be covered by at least one agent \(a \in K\), i.e., \(\bigcup _{a \in K} \eta _{\tau \rightarrow K}(a) = C_\tau \). Additionally, the reversed function (r-CAF), denoted as \(\theta _{\tau \rightarrow K}: C_\tau \rightarrow 2^{K}\), indicates which agents are responsible for each required competence. Let \(\Theta _{\tau \rightarrow K}\) be the set of all CAFs of team K for task \(\tau \). Notably, there are many different CAFs, and not all of them are equally good. Building on the inclusive competence assignments discussed in [27], we propose fair competence assignment functions (FCAFs). An FCAF satisfies two properties: each agent is responsible for (i) at least one competence (as in inclusive competence assignments), and (ii) at most \(\left\lceil \frac{|C_\tau |}{|K|}\right\rceil \) competencies.
These properties ensure that all agents actively participate in the teamwork and that no agent is assigned excessively many responsibilities while others are assigned just the minimum.
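For small teams, the fair assignments can simply be enumerated. The sketch below (function name hypothetical) gives every agent a non-empty subset of at most \(\lceil |C_\tau|/|K| \rceil\) required competencies and keeps only the combinations whose union is \(C_\tau\). Overlapping assignments are allowed, since the definition does not require them to be disjoint.

```python
from itertools import combinations, product
from math import ceil


def fair_cafs(required, team):
    """Yield every FCAF of `team` for the competencies in `required`.

    Each agent receives between 1 and ceil(|C_tau| / |K|) competencies, and the
    union of all assignments must equal C_tau (assignments may overlap).
    """
    required, team = sorted(required), sorted(team)
    cap = ceil(len(required) / len(team))
    options = [frozenset(s) for k in range(1, cap + 1)
               for s in combinations(required, k)]
    for choice in product(options, repeat=len(team)):
        if set().union(*choice) == set(required):
            yield dict(zip(team, choice))
```

Two agents and three required competencies already yield 12 FCAFs. The count grows quickly with team size and the number of requirements, so brute force is only viable for small teams and short requirement lists.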

Given a task \(\tau \), a team K and an FCAF \(\eta _{\tau \rightarrow K}\), we assess the matching quality of K working on \(\tau \) by how well each team member covers their responsibilities according to the FCAF at hand. To this end, we use the competence affinity metric, which (i) rewards high coverage of competencies and (ii) does not penalise low coverage of competencies with low relative importance. First, we define the competence affinity from a single agent's perspective:

Definition 6.1

(Agents’ Competence Affinity). Given an agent \(a \in A\), a task \(\tau \in T\), and a competence assignment function \(\eta _{\tau \rightarrow K}\), the competence affinity of a to \(\tau \) is:

The team's competence affinity then depends on the competence affinity of each team member. Specifically, it is defined as the à la Nash product of the individual competence affinities of the agents in K. The product favours teams in which all agents contribute equally to the task, i.e., teams with balanced contributions. Formally, the competence affinity from a team's perspective is defined as:

Definition 6.2

(Team’s Competence Affinity). Given a team of agents \(K\subseteq A\), a task \(\tau \in T\), and a fair competence assignment \(\eta _{\tau \rightarrow K}\), the competence affinity of K to \(\tau \) is:

Notice that a team's competence affinity changes as responsibilities are assigned differently, i.e., the very same team exhibits different matching qualities when different FCAFs are in place. Finding the FCAF that yields the best competence affinity of a team for a task amounts to solving the optimisation problem:

\[\eta ^*_{\tau \rightarrow K} = \underset{\eta _{\tau \rightarrow K} \in \Theta _{\tau \rightarrow K}}{\mathrm {arg\;max}}\; \textrm{aff}(K,\tau ,\eta _{\tau \rightarrow K})\]

such that \(\bigcup _{a\in K} \eta _{\tau \rightarrow K}^* (a) = C_\tau \) and \(1\le | \eta _{\tau \rightarrow K}^*(a)|\le \left\lceil \frac{|C_\tau |}{|K|}\right\rceil \) for every \(a \in K\). In practice, we can solve this optimisation problem optimally and efficiently, since team sizes and the number of competencies required by a task (and therefore the search space \(\Theta _{\tau \rightarrow K}\)) are relatively small: team size typically ranges in [2, 5], and a task usually requires \(\le 10\) competencies.
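Since the search space is small, \(\eta^*\) can be found by brute force over candidate FCAFs. The agent-level formula of Definition 6.1 is not reproduced in this text, so `agent_affinity` below is an assumed form, chosen only because it has the two stated properties. It multiplies \(\textrm{cvg}(c,a)^{w_\tau(c)}\) over the competencies assigned to the agent; a small weight pushes a factor towards 1, so poor coverage of an unimportant competence barely hurts. The team affinity follows the stated Nash-style product. `cov[a][c]` holds precomputed \(\textrm{cvg}(c, a)\) values.

```python
from math import prod


def agent_affinity(assigned, coverage, weights):
    """ASSUMED form: product of cvg(c, a) ** w_tau(c) over the agent's competencies."""
    return prod(coverage[c] ** weights[c] for c in assigned)


def team_affinity(caf, cov, weights):
    """Nash-style product of the members' affinities under a given FCAF."""
    return prod(agent_affinity(caf[a], cov[a], weights) for a in caf)


def best_fcaf(candidates, cov, weights):
    """Brute-force eta*: the candidate FCAF that maximises the team affinity."""
    return max(candidates, key=lambda caf: team_affinity(caf, cov, weights))
```

In practice, `candidates` would be every FCAF of the team for the task. With the toy values in the test, assigning each agent the competence it covers best wins, as expected.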

Then, for task \(\tau \), considering all the size-compliant teams \(\mathcal {K}_\tau \), the best team is the one that maximises the competence affinity, i.e., \(K^* = \underset{K \in \mathcal {K}_\tau }{\mathrm {arg\;max}} \; \textrm{aff}(K,\tau ,\eta _{\tau \rightarrow K}^*)\). Note that the search space of this optimisation problem has size \(\binom{n}{\textrm{s}_\tau }\), which grows with both n and \(\textrm{s}_\tau \), where n is the number of agents in A and \(\textrm{s}_\tau \) is the team size of task \(\tau \).
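A quick calculation shows how fast \(\binom{n}{\textrm{s}_\tau}\) grows. The agent count of 200 is purely illustrative and not taken from the paper's data.

```python
from math import comb

# Number of size-compliant teams |K_tau| = C(n, s_tau) for a single task.
for n_agents, team_size in [(200, 3), (200, 4)]:
    print(f"n={n_agents}, s={team_size}: {comb(n_agents, team_size):,} candidate teams")
# n=200, s=3: 1,313,400 candidate teams
# n=200, s=4: 64,684,950 candidate teams
```

Each of these candidate teams also needs its own optimal-FCAF search before its affinity is known.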

6.2.3 The optimisation problem

Now, given a set of tasks T (with \(|T|>1\)), finding a team for each task, i.e., computing a many teams to many tasks allocation, is another optimisation problem. In more detail, the optimal allocation of agent teams to tasks is the one that maximises the overall competence affinity of the teams, subject to the following: (i) each agent can be part of at most one team; (ii) each team can work on at most one task; and (iii) at most one team can work on each task. These three properties describe a Feasible Team Allocation Function, which we formally define as:

Definition 6.3

(Feasible Team Allocation Function (FTAF)). Given a set of tasks T, and a set of agents A, a feasible team allocation function g is a function \(g:T\rightarrow 2^A\) such that: (1) every task \(\tau \in T\) is allocated its requested number of agents so that \(|g(\tau )| = \textrm{s}_\tau \); and (2) an agent can only be assigned to one team: for every pair of tasks \(\tau ,\tau ' \in T\), such that \(\tau \ne \tau '\), it holds that \(g(\tau )\cap g(\tau ') = \emptyset \).

Given tasks T and agents A, G denotes the family of all feasible team allocation functions. Then, the overall affinity of an FTAF g equals the product over the competence affinity of every team for their assigned task according to g. As we mentioned earlier, the product favours balance, i.e., allocations where every team formed is more or less equally good for their task. The optimum FTAF \(g^*\) is the one that maximises the overall team affinity:

Definition 6.4

(Non-Overlapping Many Teams to Many Tasks (NOMTMT) Allocation Problem). Given a set of tasks T and a set of agents A, the Non-Overlapping Many Teams to Many Tasks Allocation Problem is to find the team allocation function \(g^* \in G\) that maximises the overall team affinity of the team allocation:

\[g^* = \underset{g \in G}{\mathrm {arg\;max}} \prod _{\tau \in T} \textrm{aff}\big (g(\tau ),\tau ,\eta ^*_{\tau \rightarrow g(\tau )}\big )\]

Here we highlight that computing the overall team affinity of some FTAF \(g \in G\) requires computing the optimal FCAF of each team for its assigned task according to g. Thus, solving the NOMTMT allocation problem requires solving |T| optimisation problems for each \(g \in G\).Footnote 5 Next, we show that the NOMTMT allocation problem is \(\mathcal{N}\mathcal{P}\)-hard.

Theorem 6.5

The Non-Overlapping Many Teams to Many Tasks (NOMTMT) allocation problem is \(\mathcal{N}\mathcal{P}\)-hard.

We omit the proof from the main text due to space limitations; Appendix B shows that the problem is \(\mathcal{N}\mathcal{P}\)-hard by a reduction from the winner determination problem for binary single-unit combinatorial auctions, which is known to be \(\mathcal{N}\mathcal{P}\)-hard [40].
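To see concretely why exact search is costly, the sketch below enumerates every feasible team allocation function (Definition 6.3) for a toy instance and keeps the one with the highest product of team affinities. It assumes the affinity of each ⟨team, task⟩ pair is supplied by a callable that already applies the optimal FCAF. Its running time is exponential, so it is only a correctness reference for tiny inputs.

```python
from itertools import combinations
from math import prod


def brute_force_nomtmt(tasks, agents, aff):
    """Exhaustive search over all feasible team allocation functions.

    tasks: dict task -> required team size s_tau
    aff:   callable (team: frozenset, task) -> competence affinity
    Returns (best allocation, its overall affinity), or (None, 0.0) if none exists.
    """
    names = list(tasks)
    best, best_val = None, 0.0

    def extend(i, free, alloc):
        nonlocal best, best_val
        if i == len(names):                     # every task has a team
            val = prod(aff(team, t) for t, team in alloc.items())
            if best is None or val > best_val:
                best, best_val = dict(alloc), val
            return
        t = names[i]
        for team in combinations(sorted(free), tasks[t]):
            alloc[t] = frozenset(team)
            extend(i + 1, free - alloc[t], alloc)  # agents cannot be reused
            del alloc[t]

    extend(0, frozenset(agents), {})
    return best, best_val
```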

6.3 Solving the team allocation problem: a linear programming approach

We can solve the NOMTMT allocation problem optimally by means of a linear program (LP); here, we provide the corresponding LP encoding, starting from a non-linear encoding. We use a decision variable \(x_\tau ^K\) for each task \(\tau \in T\) and each size-compliant team \(K \in \mathcal {K}_\tau \); \(x_\tau ^K\) equals 1 when team K is allocated to task \(\tau \) in the optimal solution, and 0 otherwise. The non-linear program is then:

subject to:

Then, for the optimal allocation function \(g^*\), it holds that \(g^*(\tau ) = K\) if and only if \(x_\tau ^K = 1\). Constraints (6a) and (6b) ensure that the resulting \(g^*\) is an FTAF (Def. 6.3). As mentioned, the above is not a linear program because its objective function (Eq. (6)) is non-linear. However, we can linearise it by taking the logarithm. Thus, we can obtain the optimal allocation function by solving the equivalent linear program below:

subject to: Eqs. (6a), (6b), and (6c). To obtain the equivalent LP, we (i) shift the function's domain to avoid computing \(\log (0)\), and (ii) take the logarithm to convert the double product into a double sum and the powered factors into products. We can solve this LP with an off-the-shelf solver (e.g., CPLEX [14], Gurobi [41], GLPK [42], or SCIP [43]). Given sufficient time, an LP solver will return an optimal solution to the NOMTMT allocation problem.
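For concreteness, one way to write the linearised program is shown below. Two assumptions here are not fixed by the text above. First, each task receives exactly one size-compliant team, which is what Definition 6.3 requires. Second, the domain shift is a small constant \(\epsilon > 0\) added to every affinity. Because the variables are binary, this is strictly a 0-1 integer linear program.

```latex
\max_{x} \; \sum_{\tau \in T} \sum_{K \in \mathcal{K}_\tau} x_\tau^K \,
  \log\!\big(\textrm{aff}(K,\tau,\eta^*_{\tau\rightarrow K}) + \epsilon\big)
\quad \text{s.t.} \quad
\sum_{K \in \mathcal{K}_\tau} x_\tau^K = 1 \;\; \forall \tau \in T, \qquad
\sum_{\tau \in T} \; \sum_{K \in \mathcal{K}_\tau,\, a \in K} x_\tau^K \le 1 \;\; \forall a \in A, \qquad
x_\tau^K \in \{0,1\}.
```

Under this shift, the objective maximises the product of the shifted affinities. For small \(\epsilon\), this approximates the product of the original affinities.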

Notice that to solve the LP above, we need to precompute the competence affinity between each task and every possible size-compliant team, meaning that we need to precompute the optimal CAF (via solving an optimisation problem) for each such \(\langle \)team,task\(\rangle \) pair. This is bound to lead to large linear programs as the number of agents and tasks grows.

6.4 Solving the team allocation problem: a heuristic approach

In this section, we put forward Edu2Com, a novel two-stage heuristic algorithm that avoids solving large LPs. In the first stage, it computes an initial feasible solution for the allocation problem, i.e., an initial FTAF (Definition 6.3); in the second stage, it iteratively improves that solution via different swapping strategies.

6.4.1 Building an initial team allocation

First, the algorithm builds an initial FTAF. For each task, the algorithm forms a promising team, i.e., a team that seems capable of tackling the task based on the task’s required competencies and the competencies offered by each team member. The teams are formed sequentially, starting with the ‘hardest’ tasks. We characterise a task as hard when only a few agents can cover its required competencies. With this heuristic, we prioritise the tasks so that ‘lighter’ tasks (i.e., tasks whose required competencies can be easily covered by many agents) do not bind agents whose acquired competencies can be utilised in hard tasks.

Computing tasks' allocation hardness. The allocation hardness (or simply 'hardness') of a task assesses the difficulty of finding agents who can adequately cover the task's required competencies. Intuitively, the more agents adequately cover a competence c (i.e., with high coverage on c), the easier it is to find an agent for a task requiring c, and therefore the less hard the task is. Inspired by the notion of moment of inertia [44], we measure the difficulty of covering a competence (and hence the hardness of any task requiring it) as the effort (distance from (1,0)) that agents should make to reach the ideal competence coverage of c, which is 1. Recall that the coverage of a competence ranges from 0 to 1; thus, the ideal coverage of a competence is reached when every agent can fully cover it (i.e., competence coverage equals 1 for all agents). Let \(\mathcal {I}\) be a partition of the competence coverage domain [0, 1] into ten distinct intervals, and mid(J) be the midpoint of the interval \(J \in \mathcal {I}\). Now, given a competence \(c\in \mathcal {C}\) and a set of agents A, we compute the moment of inertia of c as \(I(c) = \sum _{J \in \mathcal {I}} n_J^c \cdot \big (1 - mid(J)\big )^2\), where \(n_J^c\) is the number of agents in A whose coverage of c lies within interval J, and hence represents the mass of c in that interval.
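The inertia formula can be computed in a few lines. This sketch assumes two details the text does not fix: the ten intervals have equal width ([0, 0.1), ..., [0.9, 1.0]), and a coverage of exactly 1 falls in the last interval.

```python
def moment_of_inertia(coverages, n_bins=10):
    """I(c) = sum over intervals J of n_J^c * (1 - mid(J))**2.

    coverages: the values cvg(c, a) for every agent a in A.
    Assumes n_bins equal-width intervals of [0, 1]; coverage 1.0 goes to the last one.
    """
    counts = [0] * n_bins                               # n_J^c, the "mass" per interval
    for x in coverages:
        counts[min(int(x * n_bins), n_bins - 1)] += 1
    mids = [(j + 0.5) / n_bins for j in range(n_bins)]  # mid(J)
    return sum(n * (1 - m) ** 2 for n, m in zip(counts, mids))
```

When most agents cover c almost fully, the mass sits near 1 and the inertia is small, so the competence is easy. Mass concentrated near 0 yields a large inertia, marking a hard competence.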

Then the hardness of a task \(\tau \in T\) depends on (i) the moment of inertia of each required competence \(c \in C_\tau \), and (ii) each competence’s relative importance \(w_\tau (c)\). As such, for some task \(\tau \) we compute \(\tau \)’s hardness as:

where \(\omega \) is a normalising factor on the importance weights of all competencies in \(C_\tau \).
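The hardness formula itself is not reproduced in this text. The sketch below therefore assumes the simplest form consistent with the description: an importance-weighted average of the inertia of the required competencies, with \(\omega\) taken as the sum of the weights. It then orders tasks from hardest to easiest, which is the order the first stage of Edu2Com needs. The inertia values are hypothetical.

```python
def task_hardness(weights, inertia):
    """ASSUMED form: importance-weighted average of I(c) over the required competencies.

    weights: w_tau(c) for each c in C_tau
    inertia: I(c) for each c in C_tau
    The normalising factor omega is taken to be the sum of the weights.
    """
    omega = sum(weights.values())
    return sum(w * inertia[c] for c, w in weights.items()) / omega


# Hypothetical inertia values and tasks; the hardest task is listed first.
inertia = {"python": 2.0, "english": 0.5, "sql": 1.0}
tasks = {"t1": {"python": 1.0, "english": 0.5}, "t2": {"english": 1.0, "sql": 0.2}}
order = sorted(tasks, key=lambda t: task_hardness(tasks[t], inertia), reverse=True)
```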

Building an initial team allocation. Once each task's hardness has been computed, we build the initial FTAF. To do so, Edu2Com picks a team out of the available agents for each task in turn, starting from the hardest task. In more detail, let us denote with \(A_\tau \subseteq A\) the available agents (i.e., the agents who have not yet been allocated to a team) when picking a team for task \(\tau \), and with \(\bar{C}_\tau \) task \(\tau \)'s required competencies sorted by decreasing relative importance. The i-th most important competence is denoted as \(\bar{C}_\tau ^i\). The first agent allocated to \(\tau \)'s team is the agent in \(A_\tau \) that best covers competence \(\bar{C}_\tau ^1\); formally, \(\sigma _1 = \underset{a \in A_\tau }{\mathrm {arg\;max}} \{\textrm{cvg}(\bar{C}_\tau ^1,a)\}\). After picking \(\sigma _1\), the remaining available agents are \(A_\tau - \{\sigma _1\}\). Then, the i-th agent picked for the team of task \(\tau \) is \(\sigma _i = \underset{a \in A_\tau - \Sigma _{i-1}}{\mathrm {arg\;max}} \ \{\textrm{cvg}(\bar{C}_\tau ^j,a)\}\), where \(\Sigma _{i-1} =\bigcup _{k=1}^{i-1}\{\sigma _k\}\) denotes the agents already picked for the team, and \(j = \big ((i-1) \bmod |C_\tau |\big )+ 1\) indicates which competence agent \(\sigma _i\) shall cover best. Thus, the team formed to work on \(\tau \) is \(K = \bigcup _{i=1}^{\textrm{s}_\tau } \{\sigma _i\}\), and the agents in K are no longer considered available, i.e., \(A_{\tau '} = A_\tau -K\), where \(\tau '\) is the hardest task after \(\tau \).
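The greedy first stage translates almost line by line into code. In this sketch, the tasks arrive already sorted from hardest to easiest, each with its competencies ranked by decreasing importance. Coverage values come from a supplied callable. Raising an error when too few agents remain, and breaking ties by agent id, are added choices the text does not specify.

```python
def initial_allocation(tasks, agents, cvg):
    """Greedy first stage of Edu2Com, following the description above.

    tasks:  list of (name, ranked, size), sorted from hardest to easiest, where
            `ranked` lists the task's competencies by decreasing importance
    agents: iterable of agent ids
    cvg:    callable (competence, agent) -> coverage in [0, 1]
    """
    available = set(agents)
    allocation = {}
    for name, ranked, size in tasks:
        if len(available) < size:
            raise ValueError(f"not enough available agents for task {name!r}")
        team = []
        for i in range(size):
            # 0-based i; the 1-based j = ((i-1) mod |C_tau|) + 1 becomes i mod |C_tau|
            target = ranked[i % len(ranked)]
            # sorted() makes ties deterministic: max keeps the first maximal agent
            best = max(sorted(available), key=lambda a: cvg(target, a))
            team.append(best)
            available.remove(best)
        allocation[name] = team
    return allocation
```

Cycling through the ranked competencies means each team member is recruited for a different competence until all have been used, after which the most important one is targeted again.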

6.4.2 Improving team allocation

After finding the initial promising FTAF, in the second stage, Edu2Com attempts to improve it iteratively. Specifically, we introduce several heuristics to be applied in each iteration, exploiting swaps of agents among teams. We distinguish two kinds of iterations based on the heuristics applied in each kind of iteration:

1. Single pairing. For two randomly selected tasks, Edu2Com applies the following two swapping-based heuristics:

(a) Exploiting swap. Considering solely the agents currently allocated to the selected tasks, find the optimal team allocation.

(b) Exploring swap. If there are available agents (i.e., agents assigned to no team), try to swap a randomly selected agent assigned to either of the two tasks with a randomly selected unassigned agent. Try at most k times, and keep only the swaps that improve the competence affinity.

Each heuristic is applied (if possible) once within a single pairing iteration.

2. Exhaustive pairing. For every pair of tasks, swap every possible pair of agents within them. If competence affinity improves, keep the change and stop the exhaustive pairing iteration.

The iterative process lasts until (i) the global maximum competence affinity is reached, (ii) no solution improvement occurs for a number of iterations, or (iii) the algorithm is stopped by the user. In all cases, the most recently found solution is returned.
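A sketch of one exhaustive-pairing iteration, as described in item 2 of the list above, follows. It assumes a callable that returns a team's competence affinity for a task, already optimised over FCAFs, and it scores an allocation with the product used in the NOMTMT objective. Recomputing the whole product after every swap keeps the sketch short. A real implementation would only rescore the two affected teams.

```python
from itertools import combinations
from math import prod


def exhaustive_pairing_step(allocation, team_aff):
    """Try every agent swap between every pair of teams; keep the first improving one.

    allocation: dict task -> list of agents (modified in place on success)
    team_aff:   callable (team: tuple of agents, task) -> competence affinity
    Returns True if an improving swap was applied, False otherwise.
    """
    def overall(alloc):
        return prod(team_aff(tuple(team), t) for t, team in alloc.items())

    current = overall(allocation)
    for t1, t2 in combinations(list(allocation), 2):
        for i, a1 in enumerate(allocation[t1]):
            for j, a2 in enumerate(allocation[t2]):
                allocation[t1][i], allocation[t2][j] = a2, a1   # tentative swap
                if overall(allocation) > current:
                    return True
                allocation[t1][i], allocation[t2][j] = a1, a2   # undo the swap
    return False
```

Calling `exhaustive_pairing_step` in a loop until it returns False reaches a local optimum with respect to pairwise swaps. Swaps with unassigned agents (the exploring swap) are not covered by this sketch.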

6.5 Handling students’ preferences

Although the description of our case study in Section 2 considers students' preferences, the definition of our task allocation problem in Section 6.2 focuses on competence affinity. Nevertheless, our problem definition can be readily extended to handle preferences. Next, we show how to do so by turning the single-objective optimisation problem in Equation 5 into a multi-objective optimisation problem that accommodates both competencies and students' satisfaction. We follow an approach similar to our earlier work in [28], where we composed teams considering both competencies and personalities.

Formally, we consider that the preferences of each student \(a \in A\) can be represented through a linear order (or ranking) \(\succeq _a\) over the tasks in T. We refer to this order as the preference profile of a, and denote by \(\succeq _A\) the set of preference profiles of the agents in A. Then, given a task \(\tau \in T\), we can compute the satisfaction degree of student a as:

where \(pos(\succeq _a,\tau )\) stands for the position of \(\tau \) in the preference profile \(\succeq _a\), with 1 being the position of the most preferred task and |T| that of the least preferred one. Again, since we want to guarantee balanced preference satisfaction within a team, we compute the satisfaction degree of a team as follows:

Intuitively, within a team K, we reach maximum satisfaction when the assigned task \(\tau \) is the first choice of all members of K, i.e., \(\tau \) is the most highly ranked task for every \(a \in K\):

On the other hand, if \(\tau \) is the least preferred task of even a single student, the team's satisfaction drops, and the minimum satisfaction is reached when the assigned task \(\tau \) is the lowest-ranked task for every \(a \in K\):

Now we are ready to embed the preference satisfaction of a team in our problem by adding a further objective in the objective function of Equation 6, which then becomes:

We use weighting parameters \(w_a\) and \(w_s\) to regulate the influence of the team's competence affinity and satisfaction, respectively, in the multi-objective optimisation function. Hence, we obtain a family of objective functions that includes two extreme cases, (i) considering solely the competence affinity (\(w_a = 1\) and \(w_s = 0\)) and (ii) considering solely the team's satisfaction (\(w_a = 0\) and \(w_s = 1\)), as well as any function that (iii) considers both proportionally (\(w_a+w_s=1\) with \(w_a>0\) and \(w_s>0\)).
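The satisfaction equations are not reproduced in this text, so the sketch below uses assumed forms that match the behaviour described. An individual's satisfaction falls linearly from 1 (first choice) to 1/|T| (last choice). A team's satisfaction is the product over its members, so it equals 1 only when the task is everyone's first choice. `combined_score` assumes a weighted sum of affinity and satisfaction. All three function names are hypothetical.

```python
from math import prod


def satisfaction(ranking, task):
    """ASSUMED linear form: 1 for the first choice, 1/|T| for the last one.

    ranking: the student's preference profile, most preferred task first.
    """
    pos = ranking.index(task) + 1              # pos(>=_a, tau), 1-based
    return (len(ranking) - pos + 1) / len(ranking)


def team_satisfaction(rankings, team, task):
    """Product over members: a single unhappy member lowers the team's score."""
    return prod(satisfaction(rankings[a], task) for a in team)


def combined_score(affinity, team_sat, w_a=0.5, w_s=0.5):
    """ASSUMED weighted sum of a team's competence affinity and satisfaction."""
    return w_a * affinity + w_s * team_sat
```

Setting `w_a=1.0, w_s=0.0` recovers the pure competence-affinity objective, and `w_a=0.0, w_s=1.0` the pure satisfaction objective.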

7 Empirical analysis

Our empirical analysis aims to evaluate each of the core AI services that compose the AI4Citizen pilot. Unfortunately, because the School-Work Alternation programme was cancelled due to the COVID-19 pandemic, we had to fragment our evaluation and conduct separate empirical analyses on each component. Section 7.1 empirically compares two FastText-based configurations of the competence and skill extraction tool to determine which yields better performance. Then, in Section 7.2, we involve high-school students in evaluating the usability of our chatbot. Finally, in Section 7.3, we analyse the time required by our team formation service to provide allocations over real-world data, and we validate the algorithm's recommended allocations with the aid of experts. Based on the results of our empirical analysis, we conclude that the AI4Citizen pilot is ready for deployment in a real-world setting.

7.1 Evaluation of competence and skill extraction

This section evaluates the extraction capabilities of the CSE tool introduced in Section 4. Recall that the CSE tool combines the ESCO Ontology [13] and FastText [16], which allows us to relate texts taken from CVs with job offers by associating both with competencies in the ESCO Ontology.

To evaluate the CSE tool, we performed two experiments. In both, we used competencies, CVs, and job offers described in Italian. For the first experiment, we adopted the FastText similarity algorithm out of the box: (i) first, the natural-language description of the competence is converted into a vector; (ii) similarly, vectors are computed for all the competencies in ESCO written in the same language as the required competence; (iii) the cosine between the vector in (i) and each of the vectors in (ii) is calculated, and the results are ranked. The higher the cosine, the more similar the underlying texts.

Let d be the text we want to compare with the competencies in ESCO. First, all the documents (job descriptions, CVs, ESCO descriptions of competencies) are pre-processed using Tint [45], a tool for analysing texts written in Italian. With Tint, we perform pre-processing steps such as tokenisation, sentence splitting, and lemmatisationFootnote 6, so that inflected forms such as andavano and vado are both mapped to their lemma andare.

After that, a vector for each word is obtained using FastText. A document can therefore be represented as an ordered list of vectors:

\[d = \langle v_1, v_2, \ldots , v_{n_d} \rangle \]

where \(v_i\) is the vector representation of the word \(w_i\), and \(n_d\) is the length of the document in terms of words.

Following [46], all the vectors of a document can be merged into a single one by averaging them. Each document d is therefore transformed into a single vector \(v_d\) as follows:

\[v_d = \frac{1}{n_d}\sum _{i=1}^{n_d} v_i\]

Finally, we use the cosine between two vectors as the similarity function. Let d and e be two documents, and \(v_d\) and \(v_e\) their corresponding vectors. The cosine similarity between them is calculated as follows:

$$\cos (\alpha ) = \frac{v_d \cdot v_e}{\left\| v_d\right\| \left\| v_e\right\| }$$

where \(\alpha \) is the angle between \(v_d\) and \(v_e\). The smaller the angle (and therefore the larger the cosine), the more similar the two documents are.
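Steps (ii) and (iii) of the first experiment, scoring every ESCO competence against a query vector and ranking by cosine, could look like the sketch below. The competence identifiers, the toy two-dimensional vectors, and the helper names `cosine` and `rank_competences` are hypothetical; `k=10` mirrors the ten competencies later shown to teachers, and returning 0 for a zero vector is our own convention.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """cos(alpha) = (u . v) / (||u|| ||v||)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention for zero vectors (assumption)
    return dot / (norm_u * norm_v)


def rank_competences(query: Vector, esco: Dict[str, Vector], k: int = 10) -> List[Tuple[str, float]]:
    """Return the k competences most similar to the query, highest cosine first."""
    scored = [(cid, cosine(query, vec)) for cid, vec in esco.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical competence identifiers with toy 2-d vectors
esco_vectors = {"comp_a": [1.0, 0.0], "comp_b": [0.0, 1.0], "comp_c": [1.0, 1.0]}
print(rank_competences([1.0, 0.2], esco_vectors, k=2))  # top-2: comp_a, then comp_c
```

Sorting by cosine in descending order directly encodes "the higher the cosine, the more similar the texts"; for large competence sets, a partial selection such as `heapq.nlargest` would avoid sorting the whole list.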

For the second experiment, we added a pre-processing phase in which only the most relevant words are taken into account, using TF-IDF (term frequency-inverse document frequency) [47] in an approach similar to the one described in [48].

TF-IDF is a statistical measure that reflects the importance of a word in a set of documents. The intuition is that a term occurring in many documents is not a good discriminator, and hence it should be given less weight than words that occur in fewer documents. The TF-IDF function measures the importance of a term in a particular document: it increases with the number of times the term occurs in the document, and it decreases as the number of documents in the collection that contain the term grows.

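The function is the product of two components, TF and IDF. Let t be the term and d the document:

$$\textrm{tfidf}(t,d,D) = \textrm{tf}(t,d)\cdot \textrm{idf}(t,D), \qquad \textrm{idf}(t,D) = \log \frac{N}{\left| \{d' \in D : t \in d'\}\right| }$$

where \(\textrm{tf}(t,d)\) is the number of occurrences of t in d and \(N=\left| D\right| \) is the number of documents in the set D.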
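A minimal sketch of the TF-IDF computation follows. It assumes that tf(t, d) is the raw count of t in d and that the logarithm is natural; both are assumptions, since several TF-IDF variants exist. Documents are represented as lists of lemmas, such as those Tint would produce; the toy Italian lemmas and the function name `tf_idf` are made up for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Compute tfidf(t, d, D) = tf(t, d) * log(N / df(t)) for every term of every document.

    tf is the raw count of t in d and the logarithm is natural (assumptions).
    """
    n_docs = len(docs)
    # df(t): number of documents in D that contain t at least once
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({t: c * math.log(n_docs / doc_freq[t]) for t, c in counts.items()})
    return weights


corpus = [
    ["gestire", "progetto", "progetto"],
    ["gestire", "cliente"],
    ["gestire", "software"],
]
w = tf_idf(corpus)
print(w[0]["gestire"])  # 0.0: the term occurs in every document
```

The example shows the discriminative effect described above: a lemma present in every document gets weight zero, while "progetto", occurring twice in one document only, gets \(2\log 3\).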

Once we have computed the TF-IDF value for each term and document, our goal is to use it to remove from the set of words both the terms that are too rare and those that, conversely, are too common in the ESCO corpus. To this end, we set two parameters, \(f_\textrm{min}\) and \(w_\textrm{min}\): respectively, the minimum frequency of a word in the ESCO ontology and the minimum TF-IDF weight. We tested different settings over a grid of candidate values and found that the best values with respect to our evaluation are \(f_\textrm{min}=5\) and \(w_\textrm{min}=2.0\). We therefore filter out terms that appear fewer than 5 times in the whole set of documents and words whose TF-IDF value is less than 2.0.
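The two-threshold filter can then be sketched as below, with the reported best values \(f_\textrm{min}=5\) and \(w_\textrm{min}=2.0\) as defaults. The function name `filter_terms` and its input dictionaries are hypothetical: we assume the corpus frequency is the raw number of occurrences of a term across the whole collection, and that the TF-IDF weights are those of the document being filtered. A term survives only if it passes both thresholds.

```python
from typing import Dict, List


def filter_terms(
    doc_weights: Dict[str, float],
    corpus_freq: Dict[str, int],
    f_min: int = 5,
    w_min: float = 2.0,
) -> List[str]:
    """Keep the terms of a document that occur at least f_min times in the corpus
    and whose TF-IDF weight in that document is at least w_min."""
    return [
        term
        for term, weight in doc_weights.items()
        if corpus_freq.get(term, 0) >= f_min and weight >= w_min
    ]


# Toy values, not taken from the experiments
weights = {"progetto": 2.4, "gestire": 0.1, "rarissimo": 5.0}
freqs = {"progetto": 12, "gestire": 300, "rarissimo": 2}
print(filter_terms(weights, freqs))  # ['progetto']
```

Here "gestire" is dropped as too common (low TF-IDF) and "rarissimo" as too rare (corpus frequency below 5).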

After these pre-processing steps, documents are processed as in the first experiment (FastText vectors, averaging, and cosine ranking).

To evaluate the two approaches, we asked high-school teachers in Italy to annotate sentences written in Italian taken from the “Alternanza Scuola-Lavoro” project, plus a set of custom sentences that the teachers wrote from scratch. For each sentence, the first ten ESCO competencies in the ranking were shown, and teachers were asked to annotate whether each of them was relevant to the input sentence. A total of 20 teachers took part in the experiment, annotating 368 pre-defined sentences and 65 manually written sentences. Table 2 reports, for the first two ESCO competencies in the ranking, the number of sentences annotated as correct and incorrect.

Results show that both algorithms are effective on pre-defined sentences and that, in particular, pre-processing with TF-IDF increases accuracy compared with the plain use of FastText. For the sentences written by the teachers, however, the ratio between correct and incorrect annotations differs markedly from that of the pre-defined set. This is due to the freedom left to the teachers, which led them to enter texts that are too long or too short compared with the average descriptions of competencies in ESCO, yielding more challenging examples for the algorithm.

Overall, the system performs well, and our experiments allowed us to determine which algorithm performs better. Most of the inaccuracies are due to the imbalance of the ESCO ontology: some branches are very detailed, while others are sparse or missing altogether. Both approaches (with and without TF-IDF) are easy to extend to any language, as long as both FastText and ESCO are available in that language.

Since the variant with TF-IDF pre-processing outperforms FastText alone, we use it in the AI4Citizen pilot. Our implementation is publicly available on GitHub [18].

7.2 Evaluation of the chatbot usability

We evaluated the usability of the chatbot with three high-school classes from two different technical institutes, for a total of 55 students:

-

20 students from a fourth class of a technical institute (referred to as Class1)

-

17 students from a fifth class of the same institute (referred to as Class2)

-

18 students from a fourth class of another institute (referred to as Class3)

We only involved students of technical institutes because these schools require their students to spend long periods in SWA activities.

7.2.1 Procedure

The students of Class1 and Class2 were in their classroom, assisted by their professor, whereas the researchers who organised the evaluation were connected to the classroom remotely due to the constraints imposed by the pandemic.

The students of Class3 were remotely connected to a virtual meeting room. Two professors chaired the evaluation activity from the school classroom connected to the virtual meeting room.

The evaluation activity consisted of three phases:

1. Each student logged into the chatbot using their private institute credentials and worked on a given mission.

2. After completing the mission, each student filled in a questionnaire composed of the Chatbot Usability Questionnaire (CUQ) plus four open questions (see Appendix A for details about the questionnaire).

3. Finally, the researchers gave a short presentation of the AI4EU project to inform students about the context of the evaluation test.

The mission given to the students consisted of (a) finding out whether they had to do an internship in the current school year and (b) choosing three internships of interest. Class3 also had a third task: (c) looking up how to reach the companies offering the chosen internships and opening their websites.

During this real-life experimentation, we enhanced the chatbot between successive assessment sessions. Since the students interacted freely with the chatbot, we used the platform's monitoring feature to enrich intent recognition with the students' actual expressions. We also found that a few students were too restrictive in expressing their preferences, which prevented them from completing their mission (e.g., selecting three choices) and caused frustration. To alleviate this, we modified the chatbot to better guide such students towards relaxing their constraints and selecting other internships. Taking into account informal remarks from the first class, we also enabled students to open the company website and view the company's precise location. The improved results (see Table 3) show how these relatively simple enhancements markedly changed the student experience and enabled a larger proportion of students to complete their mission (see Table 4).

7.2.2 Results

The students' CUQ scores are reported in Table 3. When asked whether it was easy to solve the given mission, \(65\%\) of the participants (36 students) said yes (see Table 4 for details). When asked what they liked most about the chatbot, 21 students (\(38\%\) of the participants) answered that they liked receiving information on internship offers; 16 students (\(29\%\)) answered that they liked the interaction with the chatbot. When asked for suggestions on how to improve the chatbot, students gave many useful answers, which helped us improve it. Seventeen students recommended expanding the vocabulary of accepted inputs: they would have preferred more tolerance in the type of questions they could enter.
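For readers unfamiliar with the CUQ, the sketch below shows its 0 to 100 scoring scheme as we understand it from the questionnaire's authors: 16 items answered on a 1 to 5 Likert scale, with odd-numbered items positively worded and even-numbered items negatively worded. Treat both the item polarity and the formula as assumptions to be checked against Appendix A and the official CUQ materials; the function name `cuq_score` is hypothetical.

```python
from typing import Sequence


def cuq_score(responses: Sequence[int]) -> float:
    """Score one CUQ questionnaire on a 0-100 scale (assumed standard scheme).

    responses[i] is the 1-5 Likert answer to question i + 1. Odd-numbered
    questions are assumed positive and even-numbered ones negative.
    """
    if len(responses) != 16 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("expected 16 answers on a 1-5 scale")
    m = sum(responses[0::2])  # questions 1, 3, ..., 15 (positive items)
    n = sum(responses[1::2])  # questions 2, 4, ..., 16 (negative items)
    # Each half contributes 0..32 points; 64 is the maximum total
    return ((m - 8) + (40 - n)) / 64 * 100


print(cuq_score([5, 1] * 8))  # 100.0
print(cuq_score([3] * 16))    # 50.0
```

A neutral answer to every item yields 50, so scores well above 50 indicate a positive overall usability judgement under this scheme.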

7.2.3 Discussion of the results

The CUQ data show that usability problems remain to be solved, even though the score improved from the first to the third session (see Table 4).

Although the chatbot still has usability issues, students liked being able to use this modality to get information about their internships.

Interestingly, our results suggest that students still expect interacting with a chatbot to be the same as interacting with a person: they would like to use a completely free, unconstrained vocabulary. This issue must be considered in future versions of the chatbot.

7.3 Evaluating the team allocation algorithm

In this section, we evaluate the capability of our team allocation algorithm to cope with real-world problems, and we assess the quality of the team allocations it computes with the aid of experts. More precisely, Section 7.3.2 analyses the solution quality, runtime performance, and anytime capabilities of Edu2Com compared with CPLEX V12.10.0, a state-of-the-art linear programming solver, over synthetic data. Section 7.3.3 reports on the solution quality and time performance of Edu2Com when solving several problem instances with real-world data. Finally, Section 7.3.4 details the validation of the quality of allocations by experts.

7.3.1 Empirical settings

The implementation of Edu2Com, along with all the necessary supporting code, was written in Python 3.7. All the experiments ran on a PC with an Intel Core i7 CPU (8 cores) and 8 GB of RAM. In our experiments below, we set our algorithm's parameters as follows: similarity is computed with \(\kappa =0.35, \lambda =0.75\); one exhaustive pairing is performed every 50 single pairings; and the algorithm stops after two blocks of single pairings and exhaustive pairings have elapsed with no improvement.
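The stopping schedule above can be made concrete with the following control-flow sketch. It is only an illustration under explicit assumptions: the pairing operators and the `affinity` function are hypothetical callables supplied by the caller (Edu2Com's actual operators are not reproduced here), "two blocks with no improvement" is read as two consecutive blocks, and the best solution seen so far is tracked separately from the current one.

```python
from typing import Callable, TypeVar

S = TypeVar("S")


def run_schedule(
    initial: S,
    single_pairing: Callable[[S], S],
    exhaustive_pairing: Callable[[S], S],
    affinity: Callable[[S], float],
    singles_per_block: int = 50,
    patience: int = 2,
) -> S:
    """Alternate blocks of `singles_per_block` single pairings followed by one
    exhaustive pairing; stop after `patience` consecutive blocks without improvement."""
    best, best_value = initial, affinity(initial)
    current = initial
    stale_blocks = 0
    while stale_blocks < patience:
        improved = False
        for _ in range(singles_per_block):
            current = single_pairing(current)
            if affinity(current) > best_value:
                best, best_value, improved = current, affinity(current), True
        current = exhaustive_pairing(current)
        if affinity(current) > best_value:
            best, best_value, improved = current, affinity(current), True
        stale_blocks = 0 if improved else stale_blocks + 1
    return best


# Toy usage: integer "solutions" whose affinity is their value, capped at 120
print(run_schedule(0, lambda x: min(x + 1, 120), lambda x: x, float))  # 120
```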

7.3.2 Quality, runtime and anytime analysis

Synthetic Data Generation. To compare our heuristic algorithm against the optimal solver, we created three sets of problem instances of varying sizes (small, medium, and large), all of which CPLEX could solve within acceptable time limits. The problem instances were created as follows. First, we set the number of tasks to a value from the set \(\{10,15,20\}\). Then, for each task \(\tau \), we randomly selected: the team size \(\textrm{s}_{\tau }\sim \mathcal {U}(2,3)\), the number of required competencies \(|C_\tau | \sim \mathcal {U}(2,5)\), the set of \(|C_\tau |\) competencies from the ESCO ontology, and an importance weight for each competence \(c \in C_\tau \) as \(w_{\tau }(c)\sim \mathcal {N}(\mu ,\sigma )\) with \(\mu \sim \mathcal {U}(0,1)\) and \(\sigma \sim \mathcal {U}(0.01,0.1)\) (Footnote 7). After that, for each task \(\tau \), we created \(\textrm{s}_\tau \) agents so that each agent acquires competencies that are either a required competence in \(C_\tau \) or a child node (in ESCO) of a required competence. In total, we solved 60 problem instances of varying sizes. Specifically, we considered three data families containing 20 instances each, where each problem instance involves (1) 10 tasks and on average \(\sim 24.5\) agents in the low-size family, (2) 15 tasks and on average \(\sim 37.7\) agents in the middle-size family, and (3) 20 tasks and on average \(\sim 50.55\) agents in the large-size family.
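The sampling procedure can be sketched as below. The list `competences` and the `children` map are hypothetical stand-ins for the ESCO ontology, `generate_instance` is our own name, and two details are assumptions not fixed by the text: \(\mu \) and \(\sigma \) are drawn afresh for each weight, and each agent receives, for every required competence of its task, either that competence or one of its children. Because \(\textrm{s}_\tau \) is uniform over \(\{2,3\}\), the expected number of agents is 2.5 per task, i.e. 25, 37.5, and 50 for 10, 15, and 20 tasks, in line with the reported averages of \(\sim 24.5\), \(\sim 37.7\), and \(\sim 50.55\).

```python
import random
from typing import Dict, List


def generate_instance(
    competences: List[str],
    children: Dict[str, List[str]],
    n_tasks: int,
    rng: random.Random,
) -> dict:
    """Build one synthetic team-allocation instance with n_tasks tasks.

    `competences` and `children` are hypothetical stand-ins for ESCO.
    """
    tasks, agents = [], []
    for tau in range(n_tasks):
        size = rng.randint(2, 3)      # s_tau ~ U(2, 3); randint is inclusive
        n_req = rng.randint(2, 5)     # |C_tau| ~ U(2, 5)
        required = rng.sample(competences, n_req)
        # w_tau(c) ~ N(mu, sigma), mu ~ U(0, 1), sigma ~ U(0.01, 0.1), drawn per weight (assumption)
        weights = {c: rng.gauss(rng.uniform(0, 1), rng.uniform(0.01, 0.1)) for c in required}
        tasks.append({"id": tau, "size": size, "weights": weights})
        for _ in range(size):
            # Assumption: one competence per requirement, either the required
            # competence itself or one of its children in the hierarchy.
            held = {rng.choice([c] + children.get(c, [])) for c in required}
            agents.append({"task": tau, "competences": held})
    return {"tasks": tasks, "agents": agents}


rng = random.Random(42)
comps = [f"c{i}" for i in range(30)]             # hypothetical competence ids
kids = {"c0": ["c0.1", "c0.2"], "c1": ["c1.1"]}  # hypothetical child nodes
instance = generate_instance(comps, kids, n_tasks=10, rng=rng)
print(len(instance["tasks"]), len(instance["agents"]))
```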

Quality analysis. Figure 7 illustrates the quality of the solutions built by our algorithm, Edu2Com, over time. As quality, we consider the ratio between the overall competence affinity of a solution and the competence affinity of the optimal solution computed by CPLEX. The figure shows the average over the 20 problem instances of each data family (low-size, middle-size, large-size). As we can see, Edu2Com always reaches the optimal solution: our algorithm attains quality 1 in all instances across every data family.

Runtime analysis. The main advantage of our heuristic algorithm is its speed: it reaches optimality considerably faster than CPLEX. Table 5 shows the time saved with respect to CPLEX to reach optimality. Overall, our heuristic saves from \({\sim }52\%\) to \({\sim }65\%\) of the time required by CPLEX. Specifically, we save \(\sim 56\%\) of the time for problem instances with 10 tasks (small scenario), \(\sim 52\%\) for problem instances with 15 tasks (medium scenario), and \(\sim 65\%\) for problem instances with 20 tasks (large scenario). Note that the most time-consuming task for CPLEX is building the LP encoding of the problem.
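Assuming the reported savings are relative reductions in wall-clock time, they translate into speed-ups as follows, where \(t_{\textrm{E}}\) and \(t_{\textrm{C}}\) denote the time Edu2Com and CPLEX, respectively, need to reach optimality:

```latex
\text{saving} = 1 - \frac{t_{\textrm{E}}}{t_{\textrm{C}}}
\quad\Longrightarrow\quad
\text{speed-up} = \frac{t_{\textrm{C}}}{t_{\textrm{E}}} = \frac{1}{1 - \text{saving}},
\qquad
\frac{1}{1-0.52} \approx 2.08,\quad
\frac{1}{1-0.56} \approx 2.27,\quad
\frac{1}{1-0.65} \approx 2.86
```

Under this reading, Edu2Com is roughly two to three times faster than CPLEX across the three scenarios.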

Anytime analysis. Our algorithm reaches high-quality solutions (with quality above 0.8) in \(\sim 6.4\%\) to \(\sim 12.6\%\) of the time required by CPLEX to yield a solution. Let \(t_{opt}\) be the time in seconds that CPLEX needs to yield a solution. Edu2Com finds its first solution (1) after \(2.25\cdot 10^{-5}\cdot t_{opt}\) (=0.091) seconds, with \(32\%\) of the optimal quality, in the small-size scenario; (2) after \(3.31\cdot 10^{-3}\cdot t_{opt}\) (=1.451) seconds, with \(30\%\) of the optimal quality, in the medium-size scenario; and (3) after \(1.35\cdot 10^{-3}\cdot t_{opt}\) (=2.564) seconds, with \(28\%\) of the optimal quality, in the large-size scenario. Table 6 provides the time Edu2Com needs to reach a solution of a given quality and the corresponding fraction of \(t_{opt}\). Notably, our algorithm reaches a solution of quality above \(80\%\) in (1) \(0.064\cdot t_{opt}\) seconds in the small-size scenario, (2) \(0.149\cdot t_{opt}\) seconds in the medium-size scenario, and (3) \(0.126\cdot t_{opt}\) seconds in the large-size scenario.
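Quantities such as those in Table 6 can be derived from an anytime trace with a helper like the one below. This is a hedged sketch: the trace format (chronologically ordered pairs of elapsed seconds and overall competence affinity), the function name `time_to_quality`, and the toy numbers in the example are all hypothetical and are not experimental results. Quality is the ratio to the optimal affinity, as in the quality analysis; the threshold test uses \(\ge \), whereas the text speaks of quality "above" 0.8.

```python
from typing import List, Optional, Tuple


def time_to_quality(
    trace: List[Tuple[float, float]],
    optimal_affinity: float,
    t_opt: float,
    threshold: float = 0.8,
) -> Optional[Tuple[float, float]]:
    """Return (seconds, fraction of t_opt) at which the anytime trace first
    reaches `threshold` quality, or None if it never does.

    `trace` holds (elapsed_seconds, affinity) pairs in chronological order;
    quality is affinity / optimal_affinity.
    """
    for elapsed, affinity in trace:
        if affinity / optimal_affinity >= threshold:
            return elapsed, elapsed / t_opt
    return None


# Toy trace (made-up numbers, not experimental results)
trace = [(0.5, 30.0), (2.0, 70.0), (4.0, 85.0), (9.0, 100.0)]
print(time_to_quality(trace, optimal_affinity=100.0, t_opt=20.0))  # (4.0, 0.2)
```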

7.3.3 Solving real-world problems

In this part of the empirical analysis, we used real-world data. In more detail, we use 100 student profiles and 50 internship programs, with competencies described in the ESCO ontology [13]. The students' profiles contain 118 distinct competencies, with \(\sim 11.98\) competencies per student on average, while the internship programs' descriptions contain 34 distinct competencies, with each program requiring 4 competencies on average (minimum 2, maximum 15).