Abstract

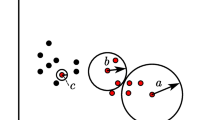

Feature selection based on neighborhood rough sets (NRSs) has become a popular research topic in data mining. However, NRSs inherently ignore the differences between classes, which limits their performance, and the feature evaluation functions used with NRSs do not effectively reflect the relevance between features and decisions. These two shortcomings restrict the effectiveness of NRSs in feature selection. This paper therefore presents a feature selection algorithm that uses rough mutual information in adaptive neighborhood rough sets (ANRSs). First, we define the boundary of each sample to granulate all samples and build the ANRS model by combining the boundaries of all samples in the same class; the model discovers the characteristics of each class from the given data and overcomes the inherent limitation of NRSs. Second, inspired by the combination of the information and algebraic views, we extend mutual information, which represents the information view, to ANRSs and combine it with roughness, which represents the algebraic view, to obtain the rough mutual information, a quantitative measure of the relevance between features and decisions. Third, we develop a maximum relevance minimum redundancy-based feature selection algorithm that uses the rough mutual information. In addition, to reduce the running time of the algorithm on high-dimensional datasets and improve its classification accuracy, the Fisher score dimensionality reduction method is incorporated into the designed algorithm. Finally, the designed ANRS-based algorithm is compared experimentally with various NRS-based algorithms on eleven datasets to demonstrate the efficiency of our algorithm.
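
The two-stage pipeline summarized above (Fisher score pre-filtering followed by greedy maximum relevance minimum redundancy selection) can be illustrated with a minimal sketch. This is not the algorithm of the paper: the rough mutual information on ANRSs is not defined in this section, so plain Shannon mutual information on equal-width discretized features is swapped in as the relevance measure. The function names, the `bins` parameter, and the difference-form score (relevance minus mean redundancy) are all illustrative assumptions.

```python
import numpy as np

def fisher_score(X, y):
    """Per-feature Fisher score: between-class scatter over within-class scatter."""
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        between += len(Xc) * (Xc.mean(axis=0) - overall_mean) ** 2
        within += len(Xc) * Xc.var(axis=0)
    return between / (within + 1e-12)  # small constant avoids division by zero

def mutual_information(a, b):
    """Shannon mutual information (nats) between two discrete 1-D vectors.
    Stand-in for the rough mutual information, which is NOT implemented here."""
    _, ai = np.unique(a, return_inverse=True)
    _, bi = np.unique(b, return_inverse=True)
    joint = np.zeros((ai.max() + 1, bi.max() + 1))
    np.add.at(joint, (ai, bi), 1)          # contingency table
    joint /= joint.sum()
    pa = joint.sum(axis=1, keepdims=True)  # marginal of a, shape (n, 1)
    pb = joint.sum(axis=0, keepdims=True)  # marginal of b, shape (1, m)
    nz = joint > 0
    return float((joint[nz] * np.log(joint[nz] / (pa @ pb)[nz])).sum())

def discretize(col, bins=5):
    """Equal-width binning of one feature column (assumed preprocessing)."""
    edges = np.linspace(col.min(), col.max(), bins + 1)[1:-1]
    return np.digitize(col, edges)

def mrmr_select(X, y, n_prefilter, n_select, bins=5):
    """Keep the n_prefilter best features by Fisher score, then pick
    n_select of them greedily by relevance minus mean redundancy."""
    order = np.argsort(fisher_score(X, y))[::-1]
    candidates = [int(j) for j in order[:n_prefilter]]
    Xd = {j: discretize(X[:, j], bins) for j in candidates}
    relevance = {j: mutual_information(Xd[j], y) for j in candidates}
    selected = []
    while candidates and len(selected) < n_select:
        def score(j):
            if not selected:
                return relevance[j]
            redundancy = np.mean(
                [mutual_information(Xd[j], Xd[s]) for s in selected]
            )
            return relevance[j] - redundancy
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected
```

Calling `mrmr_select(X, y, n_prefilter=50, n_select=10)` on a numeric matrix `X` of shape (samples, features) and a label vector `y` returns the chosen column indices in selection order. The pre-filter keeps the quadratic cost of the redundancy term bounded by `n_prefilter` rather than by the full dimensionality, which is the motivation the abstract gives for introducing the Fisher score.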

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants 61976082 and 62002103.

Author information

Contributions

Conceptualization: Jiucheng Xu; Methodology: Kanglin Qu; Writing - original draft preparation: Kanglin Qu, Ziqin Han, Shihui Xu; Writing - review and editing: Jiucheng Xu, Ziqin Han, Shihui Xu; Funding acquisition: Jiucheng Xu.

Ethics declarations

Conflict of Interests

The authors declare no conflict of interest.

Appendix

Proof of Proposition 1

From (13), (18), and (19), it follows that \(\left\lvert [a_i]_{S_1} \right\rvert \ge \left\lvert [a_i]_{S_2} \right\rvert\), \(\left\lvert \underline{R_{S_1}}(D) \right\rvert \le \left\lvert \underline{R_{S_2}}(D) \right\rvert\), and \(\left\lvert \overline{R_{S_1}}(D) \right\rvert \ge \left\lvert \overline{R_{S_2}}(D) \right\rvert\). Hence \(\alpha_{S_1}(D) \le \alpha_{S_2}(D)\), \(1 - \alpha_{S_1}(D) \ge 1 - \alpha_{S_2}(D)\), and \(\gamma_{S_1}(D) \ge \gamma_{S_2}(D)\), which establishes Proposition 1. □
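
The step from the cardinality inequalities to the accuracy inequality can be written out explicitly. The sketch below assumes that \(\alpha_{S}(D)\) takes the usual rough-set form of approximation accuracy, the ratio of the lower to the upper approximation cardinality; that definition is given in the main text, not in this appendix, so it is an assumption here. Under it, enlarging the numerator and then shrinking the denominator gives

\[
\alpha_{S_1}(D)
= \frac{\left\lvert \underline{R_{S_1}}(D) \right\rvert}{\left\lvert \overline{R_{S_1}}(D) \right\rvert}
\le \frac{\left\lvert \underline{R_{S_2}}(D) \right\rvert}{\left\lvert \overline{R_{S_1}}(D) \right\rvert}
\le \frac{\left\lvert \underline{R_{S_2}}(D) \right\rvert}{\left\lvert \overline{R_{S_2}}(D) \right\rvert}
= \alpha_{S_2}(D),
\]

and subtracting both sides from 1 reverses the inequality, giving \(1 - \alpha_{S_1}(D) \ge 1 - \alpha_{S_2}(D)\).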

Proof of Proposition 2

Proposition 2 follows directly from (21) and (26). □

Proof of Proposition 3

From (13), \(\left\lvert [a_i]_{S_1} \right\rvert \ge \left\lvert [a_i]_{S_2} \right\rvert\) and \(\left\lvert [a_i]_{D} \cap [a_i]_{S_1} \right\rvert \ge \left\lvert [a_i]_{D} \cap [a_i]_{S_2} \right\rvert\). Because both quantities shrink when passing from \(S_1\) to \(S_2\), the order relation between \(M(D;S_1)\) and \(M(D;S_2)\) is indeterminate. By Proposition 1, \(\gamma_{S_1}(D) \ge \gamma_{S_2}(D)\). Consequently, the order relation between \(\gamma_{S_1}(D) * M(D;S_1)\) and \(\gamma_{S_2}(D) * M(D;S_2)\) is also indeterminate, which establishes Proposition 3. □

About this article

Cite this article

Qu, K., Xu, J., Han, Z. et al. Maximum relevance minimum redundancy-based feature selection using rough mutual information in adaptive neighborhood rough sets. Appl Intell 53, 17727–17746 (2023). https://doi.org/10.1007/s10489-022-04398-z

DOI: https://doi.org/10.1007/s10489-022-04398-z