Abstract

Injecting prior knowledge into the learning process of a neural architecture is one of the main challenges currently faced by the artificial intelligence community, and it has also motivated the emergence of neural-symbolic models. One of the main advantages of these approaches is their capacity to learn competitive solutions with a significantly reduced amount of supervised data. In this regard, a commonly adopted solution consists of representing the prior knowledge via first-order logic formulas, then relaxing the formulas into a set of differentiable constraints by using a t-norm fuzzy logic. This paper shows that this relaxation, together with the choice of the penalty terms enforcing the constraint satisfaction, can be unambiguously determined by the selection of a t-norm generator, providing numerical simplification properties and a tighter integration between the logic knowledge and the learning objective. When restricted to supervised learning, the presented theoretical framework provides a straightforward derivation of the popular cross-entropy loss, which has been shown to provide faster convergence and to reduce the vanishing gradient problem in very deep structures. Moreover, the proposed learning formulation extends the advantages of the cross-entropy loss to the general knowledge that can be represented by neural-symbolic methods. In addition, the presented methodology allows the development of novel classes of loss functions, which are shown in the experimental results to lead to faster convergence rates than the approaches previously proposed in the literature.



1 Introduction

Deep Neural Networks [1] have been a breakthrough for several classification problems involving sequential or high-dimensional data. However, deep neural architectures strongly rely on a large amount of labeled data to develop powerful feature representations. Unfortunately, annotating such large collections of data is difficult and labor-intensive. In this regard, prior knowledge expressed by First-Order Logic (FOL) rules represents a natural solution to make learning efficient when the training data is scarce and some domain expert knowledge is available. The integration of logic inference with learning could also overcome another limitation of deep architectures, namely that they mainly act as black boxes from a human perspective, which makes their usage difficult in safety-critical applications, such as healthcare or automotive applications [2]. For these reasons, Neural-Symbolic (NeSy) approaches [3, 4] integrating logic and learning have become one of the fundamental research lines for the machine learning and artificial intelligence communities. One of the most common approaches to exploit logic knowledge to train a deep neural learner relies on mapping the FOL knowledge into differentiable constraints using t-norms. The constraints can then be enforced using gradient-based optimization techniques, as done in [5, 6]. Most works in this area have approached the problem of translating logic rules into a differentiable form by defining a collection of heuristics that often lack semantic consistency and have no clear theoretical motivation. For instance, there is no agreement on the relation between the selected t-norm and the aggregation functions corresponding to the logic quantifiers, nor on the loss chosen to enforce the constraints.

This paper first traces back the properties of t-norm fuzzy logic operators to the selection of a generator function. Then, we show that the loss function of a learning problem accounting for both supervised data and logic constraints can also be determined by the single choice of the t-norm generator. The generator determines the fuzzy relaxation of connectives and quantifiers occurring in the logic rules. As a result, a simplified and semantically consistent optimization problem can be formulated. In this framework, the classical fitting of supervised training data can be enforced by atomic logic constraints. Since the careful choice of loss functions has been crucial to the success of deep learning, this paper also investigates the relation between supervised training losses and generator choices. As a special case, we obtain a novel justification for the popular cross-entropy loss [7], which has been shown to provide faster convergence and to reduce the vanishing gradient problem in very deep structures.

Contributions

This paper introduces a theoretical framework centered around the notion of t-norm generator, unifying the choice of the logic semantics and of the loss function in neural-symbolic learners. In particular, we extend the preliminary formalization sketched in [8], together with a more comprehensive experimental validation. This unification results in a simplified learning objective that is shown to be numerically more stable, while retaining the flexibility to customize the learning process on the considered applications.

The paper is organized as follows: Section 2 presents some prior work on the integration of learning and logic inference, Section 3 presents the basic concepts about t-norms, generators and aggregator functions and Section 4 introduces a general neural-symbolic framework used to extend supervised learning with logic rules. Section 5 presents the main results of the paper, showing the link between t-norm generators and loss functions and how these can be exploited in neural-symbolic approaches. Section 6 presents the experimental results and a discussion on the presented methodology is provided in Section 7. Finally, Section 8 draws some conclusions.

2 Related works

Neural-symbolic approaches [9, 10] aim at combining symbolic reasoning with (deep) neural networks, e.g. by exploiting additional logic knowledge when available. This knowledge can be either injected into the learner internal structure (e.g. by constraining the network architecture) or enforced on the learner outputs (e.g. by adding new loss terms). In this context, First-Order Logic is commonly chosen as the declarative framework to represent the knowledge because of its flexibility and expressive power. NeSy methodologies are rooted in previous work on Statistical Relational Learning (SRL) [3, 11], which developed frameworks for performing logic inference in the presence of uncertainty. For instance, Markov Logic Networks (MLN) [12] and Probabilistic Soft Logic (PSL) [13] integrate FOL and probabilistic graphical models by using the logic rules as potential functions defining a probability distribution. MLNs have received a lot of attention from the SRL community [14,15,16] and have been widely used in different tasks like information extraction, entity resolution and text mining [17, 18]. More recently, MLNs have also been extended to work with neural potential functions in [19], showing impressive results e.g. in generating molecular data. PSL can be considered a fuzzy extension of MLNs, as it exploits a fuzzy relaxation of the logic potentials by using Łukasiewicz Logic. The framework proposed in this paper builds upon t-norm fuzzy logics; however, it is not limited to any specific t-norm. Hence, it could also be adopted to define alternative logic potential functions for PSL.

A common solution to integrate logic reasoning and deep learning relies on using deep neural networks to approximate the truth values (i.e. fuzzy semantics) or the probabilities (i.e. probabilistic semantics) of certain target predicates, and then applying logic or probabilistic inference on the network outputs [20]. In the former case, the logic rules can be relaxed according to a differentiable fuzzy logic and then the overall architecture can be optimized end-to-end. This approach is followed with minor variants by Semantic-Based Regularization (SBR) [5], Lyrics [21] and Logic Tensor Networks (LTN) [6], especially for classification problems. On the other hand, some examples of NeSy approaches based on probabilistic logic are given by Semantic Loss [22], Differentiable Reasoning [23], Deep Logic Models [24], Relational Neural Machines [25] and DeepProbLog [26]. Similarly, Lifted Relational Neural Networks [27] and Neural Theorem Provers [28, 29] realize a soft forward or backward chaining via an end-to-end gradient-based scheme. This paper investigates the link between the logic semantics selected to represent the knowledge and the loss function of the learning task. This is a common problem for all NeSy approaches that encode the logic knowledge into differentiable constraints used by a deep learner.

Learning with fuzzy logic constraints

In general, if some FOL knowledge is available for a learning problem, it is expressed in Boolean form. To define a differentiable learning objective, it is then fundamental to establish a mapping that relaxes the logic formulas into differentiable functional constraints by means of an appropriate fuzzy logic. For instance, Serafini et al. [30] introduce a learning framework where the formulas are converted according to the t-norm and t-conorm of Łukasiewicz logic. Giannini et al. [31] also propose to convert the formulas according to Łukasiewicz logic; however, they exploit the weak conjunction in place of the t-norm, thus guaranteeing convex functional constraints. A more empirical approach has been considered in SBR, where all the fundamental t-norms have been evaluated on different learning settings to select the best t-norm for each task [5]. More recent studies on the learning properties of different fuzzy logic operators have also been proposed by Van Krieken et al. [32, 33]. The authors achieved the most significant performance improvement by combining different logic semantics for the connectives, but then the dependence between the connectives no longer obeys any specific logic theory.

The relaxation of logic quantifiers has also been the subject of a wide range of studies. On the performance side, different quantifier conversions have been considered and validated. For instance, in Diligenti et al. [5] the arithmetic mean and the maximum operator have been used to convert the universal and existential quantifiers, respectively. Different possibilities have been considered for the universal quantifier in Donadello et al. [34], while the existential quantifier depends on this choice via the application of the strong negation using De Morgan's law. Among these, the arithmetic mean operator has been shown to achieve better performance in the conversion of the universal quantifier [34], with the existential quantifier implemented by Skolemization. Although these choices improve performance, the universal and existential quantifiers should be thought of as a generalized AND and OR, respectively. Therefore, converting these quantifiers using a mean operator has no direct justification inside a logic theory and spoils the original semantics.

There have been a few attempts in the literature to address the problem of choosing semantically driven loss functions to enforce the satisfaction of the logic constraints. However, these works are generally either not fully semantically coherent or too specific. A unified principle to select a suitable loss function that can be logically interpreted according to the adopted fuzzy logic semantics is still missing. For instance, both SBR [5] and LTN [30] rely on minimizing the strong negation of each logic constraint, whereas Lyrics [21] also allows the usage of the negative logarithm. A different perspective is considered in Semantic Loss [22], where the authors propose a new loss function that is very close to the negative logarithm and that achieves (near) state-of-the-art performance on semi-supervised learning tasks by combining neural networks and logic constraints. In this paper, we show that these loss functions (and infinitely many more) are special cases of t-norm generators that can be uniquely determined by the choice of a fuzzy logic relaxation.

3 Background on t-norm fuzzy logic

Many-valued logics have been introduced in order to extend the admissible set of truth values from true (1) and false (0) to a scale of truth degrees having absolutely true and absolutely false as boundary cases. A fuzzy logic is a many-valued logic whose set of truth values coincides with the real unit interval [0,1]. This section introduces the basic notions of fuzzy logic together with some illustrative examples.

T-norms [35] are a special kind of binary operations on the real unit interval [0,1], representing an extension of the Boolean conjunction.

Definition 1

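\(T:[0,1]^{2}\to [0,1]\) is a t-norm if and only if for every x,y,z ∈ [0,1]:

$$ \begin{array}{@{}ll@{}} T(x,y)=T(y,x) & \text{(commutativity)}\\ T(x,T(y,z))=T(T(x,y),z) & \text{(associativity)}\\ y\leq z \Rightarrow T(x,y)\leq T(x,z) & \text{(monotonicity)}\\ T(x,1)=x & \text{(neutral element)} \end{array} $$

T is a continuous t-norm if it is a continuous function on \([0,1]^{2}\).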

A fuzzy logic can be uniquely defined according to the choice of a certain t-norm T [36]. A wide variety of operations corresponding to different fuzzy logic connectives are defined starting from T and the strong negation “¬”, and their notation is introduced in Definition 2. Table 1 reports the algebraic semantics of these connectives for Gödel, Łukasiewicz and Product logics, which are referred to as the fundamental fuzzy logics, because all the continuous t-norms can be obtained from them by ordinal sums [37].

Definition 2

3.1 Archimedean t-norms

Continuous Archimedean t-norms [35] are special t-norms that can be constructed by means of unary monotone functions, called generators.

Definition 3

A t-norm T is Archimedean if for every x ∈ (0,1) it holds T(x,x) < x. T is said to be strict if for all x ∈ (0,1) we have 0 < T(x,x) < x, otherwise it is said to be nilpotent.

For example, Łukasiewicz (TL) and Product (TP) t-norms are nilpotent and strict respectively, while Gödel (TG) t-norm is idempotent (i.e. ∀x : TG(x,x) = x) and hence not even Archimedean. In addition, all the nilpotent and strict t-norms can be related to the Łukasiewicz and Product t-norms as follows.

Theorem 1 [35]

Any nilpotent t-norm is isomorphic to TL and any strict t-norm is isomorphic to TP.

The next theorem shows how to construct t-norms by additive generators [35].

Theorem 2

Let \(g:[0,1]\to [0,+\infty ]\) be a strictly decreasing function with g(1) = 0 and \(g(x)+g(y)\in Range(g)\cup \{g(0^{+}),+\infty \}\) for all x,y in [0,1], and let \(g^{(-1)}\) be its pseudo-inverse. Then the function \(T:[0,1]^{2}\to [0,1]\) defined as

$$ T(x,y)=g^{(-1)}\big(g(x)+g(y)\big)=g^{-1}\big(\min\{g(0^{+}),g(x)+g(y)\}\big) \qquad (1) $$

is a t-norm and g is said to be an additive generator for T. Moreover, T is strict if \(g(0^{+})=+\infty \), otherwise T is nilpotent.

Example 1

If we take g(x) = 1 − x, we get the Łukasiewicz t-norm TL.

Example 2

If we take \(g(x)=-\log (x)\), we get the Product t-norm TP.
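Examples 1 and 2 can be checked numerically. The following is a minimal Python sketch, not the implementation used in this paper: the helper `tnorm_from_generator` is hypothetical, and it assumes a continuous generator whose pseudo-inverse is obtained by clipping the sum \(g(x)+g(y)\) at \(g(0^{+})\) before applying the ordinary inverse.

```python
import math

def tnorm_from_generator(g, g_inv, g0):
    """Return T(x, y) = g^(-1)(g(x) + g(y)) for an additive generator g.

    g0 is g(0+). The pseudo-inverse is realised by clipping the sum at g0
    before applying the ordinary inverse g_inv, so sums beyond g0 map to 0
    (nilpotent case). For a strict generator, g0 = math.inf.
    """
    def T(x, y):
        return g_inv(min(g0, g(x) + g(y)))
    return T

# Example 1: g(x) = 1 - x, nilpotent since g(0+) = 1.
T_luk = tnorm_from_generator(lambda x: 1.0 - x, lambda z: 1.0 - z, 1.0)

# Example 2: g(x) = -log(x), strict since g(0+) = +inf.
T_prod = tnorm_from_generator(
    lambda x: math.inf if x == 0 else -math.log(x),  # avoid log(0) errors
    lambda z: math.exp(-z),
    math.inf,
)

print(T_luk(0.7, 0.6), max(0.0, 0.7 + 0.6 - 1.0))  # both approximately 0.3
print(T_prod(0.5, 0.4), 0.5 * 0.4)                  # both approximately 0.2
```

Passing \(g(0^{+})\) explicitly keeps a single code path for both the nilpotent and the strict case.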

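According to (1), the other fuzzy logic connectives deriving from the t-norm can be expressed with respect to the generator. For instance:

$$ \begin{array}{@{}rcl@{}} x\Rightarrow y &=& g^{(-1)}\big(\max\{0,g(y)-g(x)\}\big)\\ x\Leftrightarrow y &=& g^{(-1)}\big(|g(x)-g(y)|\big)\\ x\oplus y &=& 1-g^{(-1)}\big(g(1-x)+g(1-y)\big) \end{array} \qquad (2) $$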

3.2 Parameterized classes of t-norms

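T-norm generators can also depend on a parameter, thereby defining a parameterized class of t-norms. For instance, given a generator g of a t-norm T and λ > 0, \(T_{\lambda}\) denotes a class of t-norms, increasing with respect to λ, that correspond to the generator function \(g_{\lambda}(x)=(g(x))^{\lambda}\). In addition, letting \(T_{D}\) and \(T_{G}\) denote the Drastic (\(T_{D}(x,y)=\min\{x,y\}\) if \(\max\{x,y\}=1\), and \(T_{D}(x,y)=0\) otherwise) and Gödel t-norms respectively, we get:

$$ \lim_{\lambda\to 0^{+}}T_{\lambda}=T_{D}, \qquad \lim_{\lambda\to +\infty}T_{\lambda}=T_{G} . $$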

Over the years, several parameterized families of t-norms have been introduced and studied in the literature [35, 38]. In the following, we recall some prominent examples that we will exploit in the experimental evaluation.

Definition 4 (The Schweizer-Sklar family)

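For \(\lambda \in (-\infty ,+\infty )\), consider:

$$ g_{\lambda}^{SS}(x)=\left\{\begin{array}{ll} -\log(x) & \text{if } \lambda=0\\ \dfrac{1-x^{\lambda}}{\lambda} & \text{otherwise} \end{array}\right. $$

The t-norms corresponding to this generator are called Schweizer-Sklar t-norms, and they are defined according to:

$$ T_{\lambda}^{SS}(x,y)=\left\{\begin{array}{ll} T_{G}(x,y) & \text{if } \lambda=-\infty\\ T_{P}(x,y) & \text{if } \lambda=0\\ T_{D}(x,y) & \text{if } \lambda=+\infty\\ \big(\max\{0,x^{\lambda}+y^{\lambda}-1\}\big)^{\frac{1}{\lambda}} & \text{otherwise} \end{array}\right. $$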

The Schweizer-Sklar t-norm \(T_{\lambda }^{SS}\) is Archimedean if and only if \(\lambda >-\infty \), continuous if and only if \(\lambda <+\infty \), strict if and only if \(-\infty <\lambda \leq 0\) and nilpotent if and only if \( 0<\lambda <+\infty \). This t-norm family is strictly decreasing for λ ≥ 0 and continuous with respect to \(\lambda \in [-\infty ,+\infty ]\), in addition \(T_{1}^{SS}=T_{L}\).

Definition 5 (Frank t-norms)

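For \(\lambda \in (0,+\infty ]\), consider:

$$ g_{\lambda}^{F}(x)=\left\{\begin{array}{ll} -\log(x) & \text{if } \lambda=1\\ 1-x & \text{if } \lambda=+\infty\\ \log\left(\dfrac{\lambda-1}{\lambda^{x}-1}\right) & \text{otherwise} \end{array}\right. $$

The t-norms corresponding to this generator are called Frank t-norms, and they are strict if \(\lambda <+\infty \); for λ = 0 the family is completed by the Gödel t-norm \(T_{G}\), which has no additive generator. The overall class of Frank t-norms is decreasing and continuous.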
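The Frank generator can be checked in the same way. The sketch below assumes the generator \(\log\big((\lambda-1)/(\lambda^{x}-1)\big)\) for λ ∉ {1, +∞} and compares the resulting t-norm with the usual closed form \(\log_{\lambda}\big(1+(\lambda^{x}-1)(\lambda^{y}-1)/(\lambda-1)\big)\); it also evaluates \(T(0.5,0.5)\) for increasing λ to illustrate that the family decreases from Product (λ = 1) towards Łukasiewicz (λ = +∞). Function names are hypothetical.

```python
import math

def frank_generator(lam):
    """Frank generator for lam in (0, +inf]: returns (g, g_inv, g(0+))."""
    if lam == 1:
        return (lambda x: math.inf if x == 0 else -math.log(x),
                lambda z: math.exp(-z), math.inf)          # Product, strict
    if lam == math.inf:
        return (lambda x: 1.0 - x, lambda z: 1.0 - z, 1.0)  # Lukasiewicz, nilpotent
    def g(x):
        if x == 0:
            return math.inf                                 # strict for finite lam
        return math.log((lam - 1.0) / (lam ** x - 1.0))
    def g_inv(z):
        # solve g(x) = z  =>  lam**x = 1 + (lam - 1) * exp(-z)
        return math.log1p((lam - 1.0) * math.exp(-z)) / math.log(lam)
    return g, g_inv, math.inf

def frank_tnorm(lam):
    g, g_inv, g0 = frank_generator(lam)
    return lambda x, y: g_inv(min(g0, g(x) + g(y)))

def frank_closed(lam):
    """Closed-form Frank t-norm for lam in (0, 1) or (1, +inf)."""
    return lambda x, y: math.log1p(
        (lam ** x - 1.0) * (lam ** y - 1.0) / (lam - 1.0)) / math.log(lam)

for lam in (0.5, 1.0, 2.0, 10.0, math.inf):
    print(lam, frank_tnorm(lam)(0.5, 0.5))  # values decrease as lam grows
```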

4 Background on the integration of learning and logic reasoning

According to the learning from logical constraints paradigm [20], the available prior knowledge is represented by a set of logic rules, which are relaxed into continuous and differentiable constraints over the task functions (implementing FOL predicates). Positive and negative supervised samples can also be seen as atomic constraints, and the learning process corresponds to finding the task functions that best satisfy the constraints.

Example 3

Let us assume that the prior knowledge for an image classification task is expressed by the sentences “lions live in savanna or in zoos” and “there are no walls in the savanna” (see Fig. 1). This domain knowledge can be represented in FOL as “\(\forall x\ Lion(x)\rightarrow LiveIn(x,savanna)\vee LiveIn(x,zoo)\)” and “\(\forall x\ Wall(x)\rightarrow \neg LiveIn(x,savanna)\)”, where Lion and Wall are unary predicates, LiveIn is a binary predicate, and savanna and zoo are constants. If a neural classifier is able to correctly detect the presence of a lion and a wall in Fig. 1, it is also able to establish that the lion is living in a zoo by exploiting the symbolic knowledge.

In the following, we introduce more formally the framework where our work takes place. Let us consider a multi-task learning problem where \(\mathbf{B}_P=(P_{1},\ldots,P_{J})\) denotes the vector of real-valued functions (task functions) to be determined. Given the set \(\mathcal {X}\subseteq \mathbb {R}^{n}\) of available data, a supervised learning problem can be generally formulated as \(\min \limits _{\mathbf {B}_P}{\mathscr{L}}(\mathcal {X},\mathbf {B}_P)\) where \({\mathscr{L}}\) is a positive-valued functional denoting a certain loss. In our framework, we assume that the task functions are FOL predicates and all the available knowledge about these predicates, including supervisions, is collected into a knowledge base \(KB=\{\psi_{1},\ldots,\psi_{H}\}\) of FOL formulas. The learning task is then expressed as:

The link between FOL knowledge and learning was also presented e.g. in [21] and it can be summarized as follows.

-

Individuals are elements of specific domains, and they can be used to ground the predicates defined on such domains. Any replacement of the variables of a predicate with individuals is called a grounding.

-

Predicates express the truth degree of some property for an individual (unary predicate) or group of individuals (n-ary predicate). In particular, this paper will focus on learnable predicate functions implemented by (deep) neural networks, but other models can also be used. FOL functions can be included and learned in a similar fashion [39]. However, in this presentation, function-free FOL is used to keep the notation simpler.

-

Knowledge Base (KB) is a collection of FOL formulas expressing the learning task. The integration of learning and logical reasoning is achieved by compiling the logical rules into continuous real-valued constraints correlating all the defined elements and enforcing some expected behavior on them.

Given any rule in KB, individuals, predicates, logical connectives and quantifiers can all be seen as nodes of an expression tree [40]. Then, the translation into a functional constraint corresponds to a post-fix visit of the expression tree, consisting of the following steps:

-

visiting a variable substitutes the variable with the corresponding feature representation of the individual to which the variable is currently assigned;

-

visiting a predicate computes the output of the predicate with the current input groundings;

-

visiting a connective combines the grounded predicate values by means of the real-valued operation associated with the connective;

-

visiting a quantifier aggregates the outputs of the expressions obtained for the single individuals (variable groundings).

Thus, the compilation of the expression tree allows us to convert a formula into a real-valued function, represented by a computational graph. The different functions corresponding to predicates are composed (i.e. aggregated) by means of the truth-functions corresponding to connectives and quantifiers. Given a formula φ, we denote by fφ its corresponding real-valued functional representation. fφ tightly depends on the chosen t-norm driving the fuzzy relaxation. The expression tree corresponding to the FOL formula \(\forall x\ Wall(x)\rightarrow \neg LiveIn(x,savanna)\) is reported in Fig. 2 as an example.
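The postfix visit described above can be sketched in a few lines of Python. This is only an illustration, not the DFL compiler: the node classes `Pred`, `Not`, `Implies` and `Forall` are hypothetical, the connectives follow Product logic (strong negation 1 − x and the residuum of Example 4), and the universal quantifier is assumed to aggregate groundings with the product t-norm (replacing `math.prod` with `min` gives the infimum conversion). The scores for two images are made up.

```python
import math
from dataclasses import dataclass
from typing import Callable, Tuple

@dataclass
class Pred:
    fn: Callable[..., float]   # e.g. a neural network score in [0, 1]
    args: Tuple[str, ...]      # variable names or constants

@dataclass
class Not:
    child: object

@dataclass
class Implies:
    lhs: object
    rhs: object

@dataclass
class Forall:
    var: str
    body: object

def evaluate(node, domain, env=None):
    """Postfix visit: children are evaluated before their parent combines them."""
    env = env or {}
    if isinstance(node, Pred):
        # Variables are replaced by the individual currently assigned to them;
        # names not bound in env are treated as constants (e.g. "savanna").
        return node.fn(*(env.get(a, a) for a in node.args))
    if isinstance(node, Not):
        return 1.0 - evaluate(node.child, domain, env)       # strong negation
    if isinstance(node, Implies):
        a = evaluate(node.lhs, domain, env)
        b = evaluate(node.rhs, domain, env)
        return 1.0 if a <= b else b / a                      # Product residuum
    if isinstance(node, Forall):
        # Aggregate the groundings of the quantified variable with the t-norm.
        return math.prod(evaluate(node.body, domain, {**env, node.var: ind})
                         for ind in domain)
    raise TypeError(f"unknown node {node!r}")

# Hypothetical scores for the rule: forall x Wall(x) -> not LiveIn(x, savanna)
wall = {"img1": 0.9, "img2": 0.1}
live_in = {("img1", "savanna"): 0.2, ("img2", "savanna"): 0.8}
rule = Forall("x", Implies(Pred(wall.get, ("x",)),
                           Not(Pred(lambda i, p: live_in[(i, p)], ("x", "savanna")))))
truth = evaluate(rule, domain=["img1", "img2"])
print(truth)  # 0.8 / 0.9 for img1 times 1.0 for img2
```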

Example 4

Given two predicates P1,P2 and the formula φ(x) = P1(x) ⇒ P2(x), the functional representation of φ is given by \(f_{\varphi }(x, \mathbf {B}_P) =\min \limits \{1,1-P_{1}(x)+P_{2}(x)\}\) and \(f_{\varphi }(x, \mathbf {B}_P) =\min \limits \{1,P_{2}(x)/P_{1}(x)\}\) in the Łukasiewicz and Product logics, respectively.
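Both implications of Example 4 follow from the generator-based residuum \(x\Rightarrow y=g^{-1}(\max\{0,g(y)-g(x)\})\). The short sketch below, with a hypothetical helper `residuum`, checks this numerically against the two closed forms given in the example.

```python
import math

def residuum(g, g_inv):
    """x => y = g^{-1}(max(0, g(y) - g(x))) for a continuous Archimedean t-norm."""
    return lambda x, y: g_inv(max(0.0, g(y) - g(x)))

luk_imp = residuum(lambda x: 1.0 - x, lambda z: 1.0 - z)
prod_imp = residuum(lambda x: math.inf if x == 0 else -math.log(x),
                    lambda z: math.exp(-z))

print(luk_imp(0.8, 0.5), min(1.0, 1.0 - 0.8 + 0.5))  # both approximately 0.7
print(prod_imp(0.8, 0.5), min(1.0, 0.5 / 0.8))       # both approximately 0.625
```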

A special note concerns quantifiers. They aggregate the truth values of predicates over their corresponding domains. For instance, according to [41], which first proposed a fuzzy generalization of FOL, the universal and existential quantifiers may be converted as the infimum and supremum over a domain variable (coinciding with the minimum and maximum when dealing with finite domains). In particular, given a formula φ(x) depending on a certain variable \(x\in \mathcal {X}\), where \(\mathcal {X}\) denotes the finite set of available samples for one of the predicates involved in φ, the fuzzy semantics of the quantifiers is given by:

$$ f_{\forall x\,\varphi}(\mathcal{X},\mathbf{B}_P)=\min_{x\in\mathcal{X}} f_{\varphi}(x,\mathbf{B}_P), \qquad f_{\exists x\,\varphi}(\mathcal{X},\mathbf{B}_P)=\max_{x\in\mathcal{X}} f_{\varphi}(x,\mathbf{B}_P) . $$

As shown in the next section, this quantifier relaxation is not convenient for all the t-norms and we propose a more principled approach for the translation.

Once all the formulas in KB are converted into real-valued functions, their distance from satisfaction (i.e. distance from 1-evaluation) can be computed according to a certain decreasing mapping L expressing the penalty for the violation of any constraint. In order to satisfy all the constraints, the learning problem can be formulated as the joint minimization over the single rules using the following loss function factorization:

$$ \mathscr{L}(\mathcal{X},\mathbf{B}_P)=\sum_{\psi\in KB}\beta_{\psi}\,L\big(f_{\psi}(\mathcal{X},\mathbf{B}_P)\big) \qquad (3) $$

Here any βψ denotes the weight for the logical constraint ψ in the KB, which can be selected via cross-validation or jointly learned [24, 42], fψ is the functional representation of the formula ψ according to a certain t-norm fuzzy logic and L is a decreasing function denoting the penalty associated to the distance from satisfaction of formulas, so that L(1) = 0.

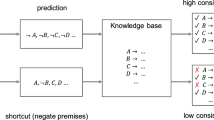

As described in Section 2, in this neural-symbolic scenario all the steps involved in the translation of FOL formulas into a loss function are treated separately, involving very heterogeneous choices. In the next section, we show instead that these steps are intrinsically connected and they can be uniformly derived from a unique global choice: the selection of a t-norm generator.

5 Loss functions by t-norms generators

This section presents a generalization of the approach introduced in [8], which was limited to supervised learning. In this paper, we present a unified principle to translate the fuzzy relaxation of FOL formulas into the loss function of general machine learning tasks. In particular, we study the mapping of FOL formulas into functional constraints by means of continuous Archimedean t-norm fuzzy logics. We adopt the t-norm generator to penalize the violation of the constraints, i.e. we take L = g. Moreover, since the quantifiers can be seen as generalized AND and OR over the grounded expressions (see Remark 1), we show that by adopting the same fuzzy conversion for connectives and quantifiers, the overall loss function expressed in (3) only depends on the chosen t-norm generator g.

Remark 1

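Given a formula φ(x) defined on the available set of samples \(\mathcal {X}=\{x_{1},\ldots ,x_{N}\}\), the roles of the quantifiers have to be interpreted as follows:

$$ \begin{array}{@{}rcl@{}} \forall x\,\varphi(x) &\equiv& \varphi(x_{1})\otimes\varphi(x_{2})\otimes\ldots\otimes\varphi(x_{N})\\ \exists x\,\varphi(x) &\equiv& \varphi(x_{1})\oplus\varphi(x_{2})\oplus\ldots\oplus\varphi(x_{N}) \end{array} $$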

5.1 General formulas

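Given a certain formula φ(x) depending on a variable x that ranges in the set \(\mathcal {X}\) and its corresponding functional representation \(f_{\varphi}(x,\mathbf{B}_P)\), the conversion of any universal quantifier may be carried out by means of an Archimedean t-norm T, while the existential quantifier may be converted by means of a t-conorm. For instance, given the formula ψ = ∀xφ(x), we have:

$$ f_{\psi}(\mathcal{X},\mathbf{B}_P)=T_{x\in\mathcal{X}}\,f_{\varphi}(x,\mathbf{B}_P)=g^{-1}\Big(\min\Big\{g(0^{+}),\sum_{x\in\mathcal{X}}g\big(f_{\varphi}(x,\mathbf{B}_P)\big)\Big\}\Big) \qquad (4) $$

where g is a generator of the t-norm T.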

Since any generator function g is decreasing and g(1) = 0, a generator is a suitable choice to map the fuzzy conversion of a formula into a constraint loss to be minimized. By exploiting the same generator of T as loss function (i.e. taking L = g) for ψ = ∀xφ(x) expressed by (4), we get the following term \(L\big (f_{\psi }(\mathcal {X},\mathbf {B}_P)\big )\) to be minimized:

$$ L\big(f_{\psi}(\mathcal{X},\mathbf{B}_P)\big)=\min\Big\{g(0^{+}),\sum_{x\in\mathcal{X}}g\big(f_{\varphi}(x,\mathbf{B}_P)\big)\Big\} \qquad (5) $$

As a consequence, the following result can be provided with respect to the convexity of the loss \(L\big (f_{\psi }(\mathcal {X},\mathbf {B}_P)\big )\).

Proposition 1

If g is a linear function and fψ is concave then \(L\big (f_{\psi }(\mathcal {X},\mathbf {B}_P)\big )\) is convex. If g is a convex function and fψ is linear then \(L\big (f_{\psi }(\mathcal {X},\mathbf {B}_P)\big )\) is convex.

Proof

Both the arguments follow since, if fψ is concave (we recall that a linear function is both concave and convex) and g is a convex non-increasing function defined over a univariate domain, then g ∘ fψ is convex. □

Proposition 1 establishes a general criterion to define convex constraints according to a certain generator, depending on the fuzzy conversion fψ and, in turn, on the logical expression ψ. In the rest of this section, we show some application cases of this proposition.

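So far, we did not make any hypothesis on the formula φ. In the following, different cases of interest for the main connective of φ are reported. Given an additive generator g for a t-norm T, additional connectives may be expressed with respect to g, as reported by (2). If P1,P2 are two unary predicate functions sharing the same input domain \(\mathcal {X}\), the formulas below yield the corresponding penalty terms, where T is assumed to be strict for simplicity:

$$ \begin{array}{@{}rcl@{}} \forall x\,P_{1}(x) &\mapsto& \sum_{x\in\mathcal{X}} g(P_{1}(x))\\ \forall x\,P_{1}(x)\wedge P_{2}(x) &\mapsto& \sum_{x\in\mathcal{X}} \max\{g(P_{1}(x)),g(P_{2}(x))\}\\ \forall x\,P_{1}(x)\vee P_{2}(x) &\mapsto& \sum_{x\in\mathcal{X}} \min\{g(P_{1}(x)),g(P_{2}(x))\}\\ \forall x\,P_{1}(x)\otimes P_{2}(x) &\mapsto& \sum_{x\in\mathcal{X}} \big(g(P_{1}(x))+g(P_{2}(x))\big)\\ \forall x\,P_{1}(x)\Rightarrow P_{2}(x) &\mapsto& \sum_{x\in\mathcal{X}} \max\{0,g(P_{2}(x))-g(P_{1}(x))\}\\ \forall x\,P_{1}(x)\Leftrightarrow P_{2}(x) &\mapsto& \sum_{x\in\mathcal{X}} |g(P_{1}(x))-g(P_{2}(x))| \end{array} $$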

Examples of derived losses

According to the selection of the generator, the same FOL formula can be mapped to different loss functions. This enables us to design customized losses that are more suitable for a specific learning problem, or to provide a theoretical justification to the losses that are already commonly utilized by the machine learning community. Examples 5–8 show some application cases. In particular, also the cross-entropy loss (see Example 6) can be justified under the same logical perspective.

Example 5

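If g(x) = 1 − x we get the Łukasiewicz t-norm, which is nilpotent. Hence, from (5) we get:

$$ L\big(f_{\psi}(\mathcal{X},\mathbf{B}_P)\big)=\min\Big\{1,\sum_{x\in\mathcal{X}}\big(1-f_{\varphi}(x,\mathbf{B}_P)\big)\Big\} $$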

In case fψ is concave (e.g. if ψ belongs to the concave fragment of Łukasiewicz logic [31]), this function is convex.

Example 6

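If \(g(x)=-\log (x)\) we get the Product t-norm, which is strict. From (5) we get a generalization of the cross-entropy loss:

$$ L\big(f_{\psi}(\mathcal{X},\mathbf{B}_P)\big)=-\sum_{x\in\mathcal{X}}\log\big(f_{\varphi}(x,\mathbf{B}_P)\big) $$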

In case fψ(x) is linear (e.g. a literal), this function is convex.
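Examples 5 and 6 can be illustrated on a toy supervised problem. The sketch below is hedged: it assumes that each supervision is encoded as the literal P(x) for positives and its strong negation 1 − P(x) for negatives, and the scores and labels are made up. With the Product generator, the penalty of the universally quantified conjunction of these literals coincides with the usual binary cross-entropy; with the Łukasiewicz generator, it is a sum of absolute errors clipped at \(g(0^{+})=1\).

```python
import math

def forall_penalty(g, g0, truths):
    """L(f_psi) = min(g(0+), sum_x g(f_phi(x))) for psi = forall x phi(x)."""
    return min(g0, sum(g(t) for t in truths))

g_prod = lambda t: -math.log(t)   # Product generator (Example 6)
g_luk = lambda t: 1.0 - t          # Lukasiewicz generator (Example 5)

scores = [0.9, 0.2, 0.7]           # hypothetical classifier outputs P(x)
labels = [1, 0, 1]                 # hypothetical supervisions
# Positives as P(x), negatives as the strong negation 1 - P(x)
literal_truths = [p if y == 1 else 1.0 - p for p, y in zip(scores, labels)]

ce = forall_penalty(g_prod, math.inf, literal_truths)
bce = -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
           for p, y in zip(scores, labels))
luk = forall_penalty(g_luk, 1.0, literal_truths)
print(ce, bce)  # the two values coincide
print(luk)      # approximately 0.1 + 0.2 + 0.3 = 0.6
```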

Example 7

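If \(g(x)=\frac {1}{x}-1\), with corresponding strict t-norm \(T(x,y)=\frac {xy}{x+y-xy}\), the penalty term obtained by applying g to the formula ψ = ∀xP1(x) ⇒ P2(x) is given by

$$ L\big(f_{\psi}(\mathcal{X},\mathbf{B}_P)\big)=\sum_{x\in\mathcal{X}}\max\Big\{0,\frac{1}{P_{2}(x)}-\frac{1}{P_{1}(x)}\Big\} $$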

Example 8

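If g(x) = 1 − x², with corresponding nilpotent t-norm \(T(x,y)=\sqrt{\max\{0,x^{2}+y^{2}-1\}}\), we get for ψ = ∀xP1(x) ⇒ P2(x)

$$ L\big(f_{\psi}(\mathcal{X},\mathbf{B}_P)\big)=\min\Big\{1,\sum_{x\in\mathcal{X}}\max\{0,P_{1}(x)^{2}-P_{2}(x)^{2}\}\Big\} $$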
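Examples 7 and 8 can be reproduced with one generic routine: with L = g, each grounding of ∀xP1(x) ⇒ P2(x) contributes \(\max\{0,g(P_2(x))-g(P_1(x))\}\), and the total is clipped at \(g(0^{+})\). The sketch below assumes made-up predicate outputs and hypothetical function names; it also checks the t-norms generated by \(1/x-1\) and \(1-x^{2}\) against their closed forms.

```python
import math

def implication_penalty(g, g0, p1, p2):
    """Penalty for psi = forall x P1(x) => P2(x) with L = g.

    Each grounding contributes max(0, g(P2(x)) - g(P1(x))); the sum is
    clipped at g0 = g(0+), which only matters for nilpotent generators.
    """
    return min(g0, sum(max(0.0, g(b) - g(a)) for a, b in zip(p1, p2)))

def g7(t):  # Example 7: strict generator 1/x - 1
    return 1.0 / t - 1.0

def g8(t):  # Example 8: nilpotent generator 1 - x^2, with g(0+) = 1
    return 1.0 - t ** 2

# t-norms generated via T(x, y) = g^-1(min(g(0+), g(x) + g(y)))
def T7(x, y):
    return 1.0 / (g7(x) + g7(y) + 1.0)                # g7^-1(z) = 1 / (z + 1)

def T8(x, y):
    return math.sqrt(1.0 - min(1.0, g8(x) + g8(y)))  # g8^-1(z) = sqrt(1 - z)

p1 = [0.9, 0.4, 0.8]  # hypothetical outputs of P1 on three groundings
p2 = [0.6, 0.5, 0.7]  # hypothetical outputs of P2 on the same groundings

print(implication_penalty(g7, math.inf, p1, p2))  # sum of max(0, 1/P2 - 1/P1)
print(implication_penalty(g8, 1.0, p1, p2))       # min(1, sum of max(0, P1^2 - P2^2))
```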

5.2 Simplification property

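An interesting property of the presented formulation consists in the fact that, in the case of compound formulas, several occurrences of the generator may be simplified. For instance, the conversion \(f_{\psi }(\mathcal {X},\mathbf {B}_P)\) of the formula ψ = ∀xP1(x) ⊗ P2(x) ⇒ P3(x) with respect to the selection of a strict t-norm generator g becomes:

$$ \begin{array}{@{}rcl@{}} f_{\psi}(\mathcal{X},\mathbf{B}_P) &=& g^{-1}\Big(\sum_{x\in\mathcal{X}} g\Big(g^{-1}\Big(\max\big\{0,g(P_{3}(x))-g\big(g^{-1}(g(P_{1}(x))+g(P_{2}(x)))\big)\big\}\Big)\Big)\Big)\\ &=& g^{-1}\Big(\sum_{x\in\mathcal{X}}\max\{0,g(P_{3}(x))-g(P_{1}(x))-g(P_{2}(x))\}\Big) \end{array} $$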

The simplification expressed on the lower side is general and can be applied to a wide range of logical operators, reducing the required number of applications of \(g^{-1}\) to just the one in front of the expression. In these cases, by applying L = g, the overall penalty of the formula can be determined by just evaluating g on the predicate functions, without applying \(g^{-1}\). Since g and \(g^{-1}\) can in general be affected by numerical issues (e.g. \(g=-\log \)), this property may allow the implementation of more numerically stable loss functions, while fully preserving the initial semantics of the formula.
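The numerical benefit can be seen on the formula ψ = ∀xP1(x) ⊗ P2(x) ⇒ P3(x) with the Product generator \(g=-\log\). The sketch below, with hypothetical function names and made-up predicate outputs, computes the same penalty twice: literally, passing every connective and the quantifier through \(g^{-1}\) and then applying g, and in simplified form, applying g only to grounded predicates. On small inputs the two agree; with many violated groundings, \(f_\psi=\exp(-\text{sum})\) underflows to 0 in floating point, so the literal version returns an infinite loss while the simplified one stays finite.

```python
import math

def g(t):  # Product generator (strict); g(0) is taken as +inf
    return math.inf if t == 0 else -math.log(t)

def g_inv(z):
    return math.exp(-z)

def naive_loss(p1, p2, p3):
    """g(f_psi) computed literally: every connective goes through g^{-1}."""
    per_x = []
    for a, b, c in zip(p1, p2, p3):
        conj = g_inv(g(a) + g(b))                # P1(x) (x) P2(x)
        imp = g_inv(max(0.0, g(c) - g(conj)))    # ... => P3(x)
        per_x.append(imp)
    f_psi = g_inv(sum(g(v) for v in per_x))      # forall x, as a t-norm
    return g(f_psi)

def simplified_loss(p1, p2, p3):
    """Same penalty with g^{-1} cancelled: g is applied to grounded predicates only."""
    return sum(max(0.0, g(c) - g(a) - g(b)) for a, b, c in zip(p1, p2, p3))

print(naive_loss([0.9, 0.8], [0.7, 0.9], [0.3, 0.95]),
      simplified_loss([0.9, 0.8], [0.7, 0.9], [0.3, 0.95]))  # equal values

n = 2000
big = ([0.99] * n, [0.99] * n, [0.01] * n)
print(naive_loss(*big), simplified_loss(*big))  # inf versus a finite value
```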

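However, this property does not hold for all the connectives that are definable upon a certain generated t-norm (see Definition 2). For instance, ∀xP1(x) ⊕ P2(x) becomes:

$$ f_{\psi}(\mathcal{X},\mathbf{B}_P)=g^{-1}\Big(\sum_{x\in\mathcal{X}} g\Big(1-g^{-1}\big(g(1-P_{1}(x))+g(1-P_{2}(x))\big)\Big)\Big) $$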

This suggests identifying the connectives that allow, on the one hand, the simplification of any occurrence of \(g^{-1}\) in \(L\big (f_{\psi }(\mathcal {X},\mathbf {B}_P)\big )\) and, on the other hand, the evaluation of g only on grounded predicates. For short, in the following we say that the formulas built upon such connectives have the simplification property.

Lemma 1

Any formula φ whose connectives are restricted to \(\{\wedge ,\vee ,\otimes ,\Rightarrow ,\sim ,\Leftrightarrow \}\) has the simplification property.

Proof

The proof is by induction with respect to the number l ≥ 0 of connectives occurring in φ.

-

If l = 0, i.e. φ = Pj(xi) for a certain j ≤ J and \(x_{i}\in \mathcal {X}\), then g(fφ) = g(Pj(xi)). Hence φ has the simplification property.

-

If l = k + 1 then φ = (α ∘ β) for \(\circ \in \{\wedge ,\vee ,\otimes ,\Rightarrow ,\sim ,\Leftrightarrow \}\) and we have the following cases.

-

If φ = (α ∧ β) then we get \(g(\min \limits \{f_{\alpha },f_{\beta }\})=\max \limits \{g(f_{\alpha }),g(f_{\beta })\}\). The claim follows by the inductive hypothesis on α,β, whose number of involved connectives is at most k. The argument still holds replacing ∧ with ∨ and \(\min \limits \) with \(\max \limits \).

-

If φ = (α ⊗ β) then we get

$$ \begin{array}{@{}rcl@{}} &&g(g^{-1}(\min\{g(0^{+}),g(f_{\alpha})+g(f_{\beta})\})) \\ &&\qquad\quad= \min\{g(0^{+}),g(f_{\alpha})+g(f_{\beta})\} \ . \end{array} $$

As in the previous case, the claim follows by the inductive hypothesis on α,β.

-

The remaining cases can be treated in the same way, noting that \(\sim \alpha =\alpha \Rightarrow 0\).

□

The simplification property provides several advantages from an implementation point of view. First, it allows the evaluation of the generator function only on grounded predicate expressions and avoids an explicit computation of the pseudo-inverse \(g^{-1}\). Second, this property provides a general method to implement n-ary t-norms, of which universal quantifiers can be seen as a special case since we only deal with finite domains (see Section 7). Moreover, it is worth noticing that this property relies neither on specific assumptions on the neural models adopted to implement the predicate functions nor on the chosen fuzzy logic exploited for the relaxation. As a result, Lemma 1 can be applied in a wide range of cases.

Finally, the simplification property yields an interesting analogy between truth-functions and loss functions. In logic, the truth degree of a formula is obtained by combining the truth degree of its sub-formulas by means of connectives and quantifiers. In the same way, the loss corresponding to a formula that satisfies the simplification property is obtained by combining the losses corresponding to its sub-formulas, while connectives and quantifiers combine losses rather than truth degrees.
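The analogy can be made concrete for a strict generator. In the sketch below (an illustration, not the DFL implementation), formulas are nested tuples with hypothetical operator tags; `truth` evaluates them in Product logic, while `loss` combines the losses of sub-formulas directly, following Lemma 1: ∧ becomes max, ∨ becomes min, ⊗ becomes a sum, ⇒ becomes max{0, loss(β) − loss(α)} and ⇔ becomes an absolute difference. The two routes agree up to rounding, i.e. `loss(f) == g(truth(f))`.

```python
import math

def g(t):  # Product-logic generator
    return math.inf if t == 0 else -math.log(t)

def truth(f):
    """Truth degree of a formula in Product logic."""
    op, *args = f
    if op == "atom":
        return args[0]
    a, b = (truth(x) for x in args)
    if op == "and":
        return min(a, b)
    if op == "or":
        return max(a, b)
    if op == "tand":                     # strong conjunction (x)
        return a * b
    if op == "imp":                      # residuum
        return 1.0 if a <= b else b / a
    if op == "iff":                      # bi-residuum
        return min(a, b) / max(a, b)
    raise ValueError(op)

def loss(f):
    """Loss combined directly from sub-formula losses (strict generator)."""
    op, *args = f
    if op == "atom":
        return g(args[0])
    a, b = (loss(x) for x in args)
    if op == "and":
        return max(a, b)
    if op == "or":
        return min(a, b)
    if op == "tand":
        return a + b
    if op == "imp":
        return max(0.0, b - a)
    if op == "iff":
        return abs(a - b)
    raise ValueError(op)

a, b, c, d = ("atom", 0.9), ("atom", 0.7), ("atom", 0.4), ("atom", 0.6)
phi = ("and", ("imp", ("tand", a, b), c), ("iff", a, d))
print(loss(phi), g(truth(phi)))  # the two values agree
```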

5.3 Manifold regularization: an example

Let us consider a simple multi-task classification problem where two objects A,B must be detected in a set of input images \(\mathcal {I}\), represented as a set of features. The learning task consists of determining the predicates PA(i), PB(i), which return true if and only if the input image i is predicted to contain the object A, B, respectively. The positive supervised examples are provided as two sets (or equivalently their membership functions) \({\mathcal {P}}_{A} \subset \mathcal {I}\), \({\mathcal {P}}_{B} \subset \mathcal {I}\) with the images known to contain the object A,B, respectively. The negative supervised examples for A,B are instead provided as two sets \({\mathcal {N}}_{A} \subset \mathcal {I}\), \({\mathcal {N}}_{B} \subset \mathcal {I}\). Furthermore, the location where the images have been taken is assumed to be known, and a predicate SameLoc(i1,i2) is used to express whether two images i1,i2 have been taken in the same location. Finally, we assume that two images taken in the same location are likely to contain the same object. This knowledge about the environment can be enforced via Manifold Regularization, which regularizes the classifier outputs over the manifold built by the image co-location defined via the SameLoc predicate.

The overall knowledge on this learning task can be expressed in FOL via the statement declarations shown in Table 2, where it is assumed that images i_23,i_60 were taken in the same location and that \({\mathcal {P}}_{A}=\{i\_10,i\_101\}, ~{\mathcal {P}}_{B}=\{i\_103\}, ~ {\mathcal {N}}_{A}=\{i\_11\}\) and \({\mathcal {N}}_{B}=\emptyset \). The statements define, as FOL rules, the constraints that the learners must respect on all the available samples. Note that the fitting of the supervisions on specific input images is also expressed as constraints.

Given the selection of a strict generator g and a set of images \(I{\subseteq \mathcal {I}}\), the FOL knowledge in Table 2 is compiled into the following optimization task:

where BP = {PA,PB}, each βi is a meta-parameter deciding how strongly the i-th contribution is weighted, and Isl is the set of image pairs sharing the same location, Isl = {(i1,i2) : SameLoc(i1,i2)}. The first four terms of the cost function express the fitting of the supervised data, while the last two express manifold regularization over co-located images.
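For the supervised part of this task, a minimal sketch (not the DFL implementation) shows how a strict generator turns the fitting statements into a familiar loss. It assumes the product t-norm generator g(x) = −log x, the standard negation ¬x = 1 − x, and universal quantifiers over the finite sets of supervised images, which a strict generator maps to sums. The predicted truth degrees are invented for illustration, and the manifold-regularization terms and the weights βi are omitted. Under these assumptions the supervised terms coincide with the binary cross-entropy on the labelled images.

```python
import math
from typing import Dict, Iterable


def g(x: float) -> float:
    """Strict generator of the product t-norm: g(x) = -log(x), g(0) = +inf."""
    return math.inf if x <= 0.0 else -math.log(x)


def fitting_loss(pred: Dict[str, float],
                 positives: Iterable[str],
                 negatives: Iterable[str]) -> float:
    """Loss of (forall i in positives: P(i)) and (forall i in negatives: not P(i)).

    With a strict generator, the conjunction and the universal quantifiers
    reduce to sums of the generator applied to each grounded atom.
    """
    return (sum(g(pred[i]) for i in positives)
            + sum(g(1.0 - pred[i]) for i in negatives))


# Supervisions of the example: P_A = {i_10, i_101}, N_A = {i_11}, P_B = {i_103}, N_B = {}.
# The predicted truth degrees below are hypothetical.
pred_a = {"i_10": 0.9, "i_101": 0.6, "i_11": 0.2}
pred_b = {"i_103": 0.7}
loss = (fitting_loss(pred_a, ["i_10", "i_101"], ["i_11"])
        + fitting_loss(pred_b, ["i_103"], []))
print(loss)
```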

6 Experimental results

The experiments have been carried out using the Deep Fuzzy Logic (DFL) software, which allows us to inject prior knowledge in the form of a set of FOL formulas into a machine learning task. The formulas are compiled into differentiable constraints using the theory of generators described in the previous sections. The learning task is then cast into an optimization problem, as shown in Section 5.3, and finally optimized using the TensorFlow (TF) environment [43]. In what follows, it is assumed that each FOL constant corresponds to a tensor storing its feature representation. Predicates are mapped to generic functions in the TF computational graph. If the function does not contain any learnable parameter in the graph, it is said to be given; otherwise the function/predicate is said to be learnable, and its parameters are optimized to maximize constraint satisfaction. Note that any learner expressed as a TF computational graph can be transparently incorporated into DFL.

6.1 The learning task

The CiteSeer dataset [44] consists of 3312 scientific papers, each assigned to one of six classes: Agents, AI, DB, IR, ML and HCI. The papers are not independent, as they are connected by a citation network with 4732 links. This dataset defines a relational learning benchmark, where it is assumed that the representation of an input document alone is not sufficient for its classification without exploiting the citation network. The citation network can be used to inject useful information into the learning task, as two papers connected by a citation often belong to the same category.

This knowledge can be expressed by a general rule of the form ∀x∀y Cite(x,y) ⇒ (P(x) ⇔ P(y)), where Cite is a binary predicate encoding the fact that x cites y, and P is a task function implementing the membership function of one of the six considered categories. This logical formula expresses a form of manifold regularization, which often emerges in relational learning tasks. Indeed, by linking the predictions on two distinct documents, the behavior of the underlying task functions is regularized, enforcing smooth transitions over the manifold induced by the Cite relation.
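Under assumptions that this section does not spell out, the grounded loss of this rule takes a simple closed form. Assume (i) a strict generator g, so that conjunctions and universal quantifiers become sums; (ii) the implication is the residuum of the t-norm, for which g(a ⇒ b) = max{0, g(b) − g(a)}; and (iii) the equivalence is the conjunction of the two implications. Since one of the two implications is always fully true, g(a ⇔ b) = |g(a) − g(b)|. Cite is a given Boolean predicate: a non-citing pair contributes max{0, g(e) − g(0)} = 0 because g(0) = +∞, while a citing pair contributes g of the equivalence because g(1) = 0. The loss is therefore the sum, over citing pairs, of |g(P(x)) − g(P(y))|. The sketch below uses the product generator with hypothetical document identifiers and predictions, and cross-checks one term against the Goguen residuum of the product t-norm.

```python
import math
from typing import Dict, Iterable, Tuple


def g(x: float) -> float:
    """Strict generator of the product t-norm: g(x) = -log(x)."""
    return math.inf if x <= 0.0 else -math.log(x)


def manifold_loss(pred: Dict[str, float],
                  cite_pairs: Iterable[Tuple[str, str]]) -> float:
    """Loss of forall x forall y: Cite(x, y) => (P(x) <=> P(y)).

    Only citing pairs contribute; each adds |g(P(x)) - g(P(y))|
    (assumes a residuum-based implication and a strict generator).
    """
    return sum(abs(g(pred[x]) - g(pred[y])) for x, y in cite_pairs)


def goguen_equivalence(a: float, b: float) -> float:
    """Truth degree of a <=> b using the residuum of the product t-norm."""
    return min(a, b) / max(a, b) if max(a, b) > 0.0 else 1.0


# Hypothetical membership degrees for one category P and two citations.
pred = {"d1": 0.9, "d2": 0.9, "d3": 0.3}
cites = [("d1", "d2"), ("d1", "d3")]
print(manifold_loss(pred, cites))  # only the (d1, d3) pair is penalized
```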

Each paper is represented via its bag-of-words, i.e. a vector of the same size as the vocabulary whose i-th element is equal to 1 or 0 depending on whether the i-th word of the vocabulary is present in or absent from the document. In particular, the dictionary in this task consists of 3703 unique words. The set of input document representations is indicated by X, which is split into a train set Xtr and a test set Xte. The percentage of documents in the two splits is varied across the different experiments. The six task functions Pi, with i ∈ {Agents,AI,DB,IR,ML,HCI}, are bound to the six outputs of a Multi-Layer Perceptron (MLP) implemented in TF. The neural architecture has 3 hidden layers with 100 ReLU units each, and a softmax activation on the output. Therefore, the task functions share the weights of the hidden layers, so that all of them can exploit a common hidden representation. The Cite predicate is a given function, which outputs 1 if the document passed as first argument cites the document passed as second argument, and 0 otherwise. Furthermore, an additional given predicate \(\mathcal {P}_{i}\) is defined for each Pi, which outputs 1 if and only if x is a positive example for the category i (i.e. it belongs to that category). \(\mathcal {P}_{i}\) is a supervision predicate, which allows us to easily introduce a supervised signal using FOL (Section 5.1). A manifold regularization learning problem [46] can be defined by providing, ∀i ∈ {Agents,AI,DB,IR,ML,HCI}, the following two FOL formulas:
\(\forall x \, \forall y \; \mathrm{Cite}(x,y) \Rightarrow (P_{i}(x) \Leftrightarrow P_{i}(y))\)   (6)

\(\forall x \; \mathcal {P}_{i}(x) \Rightarrow P_{i}(x)\)   (7)

where only positive supervisions are provided, because the trained networks for this task employ a softmax activation function on the output layer, which imposes mutual exclusivity among the task functions, reinforcing the positive class and discouraging all the others.

DFL allows the user to specify the weights of the formulas, which are treated as hyperparameters. With two formulas per predicate, the weight of the formula expressing the fitting of the supervisions (7) is fixed to 1, while the weight of the manifold regularization rule (6) is cross-validated over the grid {0.1, 0.01, 0.006, 0.003, 0.001, 0.0001}.

6.2 Results

The experimental results measure different aspects of the integration of the prior logic knowledge into a supervised learning task. In particular, different experiments have been designed to track the speed at which the training process converges to the best solution, and how the classification accuracy changes with a variable amount of training data.

Training convergence rate

This experimental setup aims at verifying the relation between the choice of the generator and the convergence speed of the training process. In particular, a simple supervised learning setup is assumed for this experiment, where the learning task enforces the fitting of the supervised examples as defined by (7). The training and test sets are composed of 90% and 10% of the total number of papers, respectively. Two parameterized families of t-norms have been considered: the SS family (Definition 4) and the Frank family (Definition 5). Their parameter λ was varied both to recover classical t-norms for some special values and to evaluate some intermediate ones. In order to keep a clear intuition behind the results, optimization was initially carried out using plain gradient descent with a fixed learning rate \(\eta = 10^{-5}\). Results are shown in Fig. 3a and b: strict t-norms clearly tend to learn faster than nilpotent ones, as they penalize highly unsatisfied ground formulas more strongly. The difference remains significant, although slightly reduced, when using the state-of-the-art adaptive learning-rate optimizer Adam [45], as shown in Fig. 3c and d. This finding is consistent with the well-known empirical observation that the cross-entropy loss performs well in supervised learning of deep architectures, as it is effective in mitigating vanishing gradients. The cross-entropy loss corresponds to a strict generator with λ = 0 and λ = 1 in the SS and Frank families, respectively. This choice yields fast and stable convergence when paired with Adam, while faster-converging solutions exist when using a fixed learning rate.
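The claim that strict t-norms penalize highly unsatisfied ground formulas more strongly can be made concrete by looking at the gradient of the generator. This sketch assumes the standard Schweizer–Sklar generator g_λ(x) = (1 − x^λ)/λ for λ ≠ 0 and g_0(x) = −log x, which may differ from Definition 4 by a positive constant (generators of the same t-norm are unique only up to such a factor, which rescales all gradients uniformly). In both cases g′_λ(x) = −x^(λ−1): for the Łukasiewicz case λ = 1 the gradient is constant, for the cross-entropy case λ = 0 it grows as 1/x, and for λ < 0 it grows even faster as x → 0. This is only a gradient-magnitude argument, not a reproduction of Fig. 3.

```python
import math


def ss_generator(x: float, lam: float) -> float:
    """Assumed Schweizer-Sklar generator on (0, 1]."""
    if lam == 0.0:
        return -math.log(x)  # product t-norm, i.e. cross-entropy
    return (1.0 - x ** lam) / lam


def ss_generator_grad(x: float, lam: float) -> float:
    """Closed-form derivative: d/dx g_lam(x) = -x**(lam - 1) for every lam."""
    return -(x ** (lam - 1.0))


if __name__ == "__main__":
    # Gradient magnitude on a ground formula whose truth degree is x.
    for lam in (1.0, 0.0, -1.0, -1.5):
        row = [abs(ss_generator_grad(x, lam)) for x in (0.5, 0.1, 0.01)]
        print(f"lambda={lam:+.1f}: " + "  ".join(f"{v:10.1f}" for v in row))
```

The very steep gradients for λ < 0 near x = 0 are also a plausible source of the training instability observed for very strict t-norms.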

Fig. 3 Learning dynamics, in terms of test accuracy on a supervised task, when choosing different t-norms generated by the parameterized SS and Frank families: (a) and (b) are learning processes optimized with standard gradient descent, while (c) and (d) are optimized with Adam [45]

Classification accuracy

The effect of the generator selection on the classification accuracy is tested on a classification problem with manifold regularization. This learning task works in a transductive setting, where all the data is available at training time, even though only the training set supervisions are used during learning. In particular, the data is split into different datasets, where {10%, 25%, 50%, 75%, 90%} of the available data is used as test set and the remaining data as training set. The fitting of the supervised data defined by (7) is enforced on the training data during learning, whereas manifold regularization (6) can be enforced on all the available data. The Adam optimizer and the SS family of parametric t-norms have been employed in this experiment. Table 3 shows the average test accuracy and its standard deviation over 10 different samples of the train/test splits. As expected, all generator selections improve the final accuracy over that obtained by pure supervised learning, as manifold regularization brings relevant information to the learner.

Table 3 also shows the test accuracy when the parameter λ of the SS parametric family is selected from the grid {−1.5, −1, 0, 1, 1.5}, where values λ ≤ 0 span strict t-norms (with λ = 0 being the product t-norm), and values greater than 0 span nilpotent t-norms (with λ = 1 being the Łukasiewicz t-norm). Strict t-norms seem to provide slightly better performance than nilpotent ones on supervised tasks for the vast majority of the splits. However, this does not hold for manifold regularization learning tasks with a limited number of supervisions, where nilpotent t-norms perform better. An explanation of this behavior can be found in the different nature of the two constraints. Indeed, while supervisions provide hard constraints that need to be strongly satisfied, manifold regularization is a general soft rule, which should allow exceptions. When the number of supervisions is small and manifold regularization drives the learning process, the milder behavior of nilpotent t-norms performs better, as it more closely models the semantics of the prior knowledge. Finally, it is worth noticing that very strict t-norms (e.g. λ = −1.5 in the considered experiment) yield higher standard deviations than the other t-norms, especially in the manifold regularization setup. This provides some evidence of a trade-off between the faster learning provided by strict t-norms and the training instability introduced by their strongly non-linear behavior.

Competitive evaluation

Table 4 compares the accuracy of the selected neural model (NN), trained only with the supervised constraint, against two other content-based classifiers, namely logistic regression (LR) and Naive Bayes (NB). These baseline classifiers are also compared against collective classification approaches exploiting the citation network: the Iterative Classification Algorithm (ICA) [47] and Gibbs Sampling (GS) [48], applied on top of the outputs of the LR and NB content-based classifiers.

Furthermore, the results are compared against the two top performers on this task: Loopy Belief Propagation (LBP) [49] and Relaxation Labeling through the Mean-Field approach (MF) [49]. Finally, the DFL results were obtained by training the same neural network with both the supervision and the manifold regularization constraints, using a generator from the SS family with λ = −1. The accuracy values are averaged over 10 folds created by random 90%/10% train/test splits of the data. Unlike the other relational approaches, which can only be executed at inference time (collective classification), DFL can distill the knowledge into the weights of the neural network. DFL achieves the highest accuracy among all the tested methodologies, even though the neural network trained only on the supervisions performs slightly worse than the other content-based competitors.

7 Discussion and practical implications

The presented framework can be contextualized among a new class of learning frameworks, which exploits the continuous relaxation of FOL to integrate logic knowledge in the learning process [5, 6, 21, 33].

Ease of design and numerical stability

Previous frameworks in this class require an a-priori definition of the operators of a given t-norm fuzzy logic. By contrast, the presented framework only requires the generator to be defined. This provides two main advantages: a minimal design effort and improved numerical stability. Indeed, by exploiting the simplification property, the penalty function (i.e. the generator) needs to be applied only to the grounded atoms, while all compositions are performed via stable operators (e.g. min, max, sum). Previous FOL relaxations, instead, correspond to an arbitrary mix of non-linear operators, which can potentially lead to numerically unstable implementations.
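A small numerical experiment illustrates the stability argument for the product t-norm (generator g(x) = −log x). Evaluating a conjunction of many atoms by first multiplying the truth degrees and then applying g underflows to 0 in double precision, so the loss becomes infinite; applying g to each grounded atom and summing, as the simplification property allows, stays finite. The 1000 atoms with truth degree 0.1 are an arbitrary illustrative choice, and `math.prod` requires Python 3.8 or later.

```python
import math
from typing import Sequence


def loss_via_truth_degree(truths: Sequence[float]) -> float:
    """Compose with the product t-norm first, apply g(x) = -log(x) last."""
    t = math.prod(truths)  # may underflow to 0.0
    return math.inf if t == 0.0 else -math.log(t)


def loss_via_generator(truths: Sequence[float]) -> float:
    """Apply g on the grounded atoms, then compose with a sum."""
    return sum(-math.log(x) for x in truths)


truths = [0.1] * 1000
print(loss_via_truth_degree(truths))  # inf: the product underflowed
print(loss_via_generator(truths))     # about 2302.6, i.e. 1000 * log(10)
```

In a gradient-based setting the first version would also produce non-finite gradients, whereas the second keeps every per-atom contribution bounded away from overflow.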

Tensor-based integration

The presented framework provides a fundamental advantage in the integration with tensor-based machine learning frameworks like TensorFlow [43] or PyTorch [50]. Modern deep learning architectures can be effectively trained by leveraging tensor operations performed on Graphics Processing Units (GPUs). However, this ability depends on the possibility of concisely expressing the operators in terms of parallelizable operations, like sums or products over n arguments, which are often implemented as atomic operations in GPU computing frameworks, without resorting to slow iterative procedures. Not all fuzzy logic operators can be easily generalized to their n-ary form. For example, the Łukasiewicz conjunction \(T_{L}(x,y) = \max \limits \{0,x+y-1\}\) can be generalized to n-ary form as \(T_{L}(x_{1},x_{2}, \dots , x_{n}) = \max \limits \{0,{\sum }_{i=1}^{n} (x_{i}) - n + 1\}\). On the other hand, for the general SS t-norm \(T^{SS}_{\lambda }(x,y) = (x^{\lambda } + y^{\lambda } -1)^{\frac {1}{\lambda }}\), with \(-\infty < \lambda < 0\), an n-ary form is not directly available from the binary definition, and a straightforward implementation must resort to an iterative application of the binary form, which is very inefficient in tensor-based computations. Previous frameworks like LTN and SBR had to limit the form of the formulas that can be expressed, or carefully select the t-norms, in order to provide efficient n-ary implementations. By contrast, the presented framework can express operators in n-ary form in terms of the generators. Thanks to the simplification property, the n-ary operator of any continuous Archimedean t-norm can always be expressed as \(T(x_{1}, x_{2}, \dots , x_{n}) = g^{-1}(\min \limits \{g(0^{+}),{\sum }_{i=1}^{n} g(x_{i})\})\) in general, and as \(T(x_{1}, x_{2}, \dots , x_{n}) = g^{-1}({\sum }_{i=1}^{n} g(x_{i}))\) if T is strict.
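The generator-based n-ary form can be sketched in a few lines. The sketch again assumes the standard Schweizer–Sklar generator g_λ(x) = (1 − x^λ)/λ (with g_0(x) = −log x), for which g(0⁺) = 1/λ for the nilpotent members (λ > 0) and +∞ for the strict ones (λ ≤ 0), and whose pseudo-inverse is max{0, 1 − λz}^(1/λ) (exp(−z) for λ = 0). A single sum over all arguments replaces the chain of binary applications; in a tensor framework that sum would be one reduction. Plain Python floats keep the sketch self-contained, and the iterated binary form is included only as a check.

```python
import math
from functools import reduce
from typing import Sequence


def g(x: float, lam: float) -> float:
    """Assumed Schweizer-Sklar generator; returns g(0+) when x == 0."""
    if x <= 0.0:
        return 1.0 / lam if lam > 0.0 else math.inf
    if lam == 0.0:
        return -math.log(x)
    return (1.0 - x ** lam) / lam


def g_pseudo_inv(z: float, lam: float) -> float:
    """Pseudo-inverse of g on [0, g(0+)]."""
    if math.isinf(z):
        return 0.0
    if lam == 0.0:
        return math.exp(-z)
    # max() guards against tiny negative bases caused by rounding when z = 1/lam.
    return max(0.0, 1.0 - lam * z) ** (1.0 / lam)


def tnorm_nary(xs: Sequence[float], lam: float) -> float:
    """T(x_1, ..., x_n) = g^(-1)(min{g(0+), sum_i g(x_i)}): a single sum-reduction."""
    g_zero = 1.0 / lam if lam > 0.0 else math.inf
    return g_pseudo_inv(min(g_zero, sum(g(x, lam) for x in xs)), lam)


def tnorm_binary(x: float, y: float, lam: float) -> float:
    """Binary SS t-norm on (0, 1], used here only to check tnorm_nary by iteration."""
    if lam == 0.0:
        return x * y
    base = x ** lam + y ** lam - 1.0
    return (max(0.0, base) if lam > 0.0 else base) ** (1.0 / lam)


xs = [0.9, 0.8, 0.7]
for lam in (-1.5, -1.0, 0.0, 1.0, 1.5):
    iterated = reduce(lambda a, b: tnorm_binary(a, b, lam), xs)
    print(lam, tnorm_nary(xs, lam), iterated)
```

The two printed columns should agree up to rounding; the generator version needs nothing but g and its pseudo-inverse, whichever continuous Archimedean t-norm is chosen.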

Limitations

Linking the loss function to the desired fuzzy semantics via the single choice of the t-norm generator guarantees logical coherence and the simplification properties, but does not guarantee the highest accuracy on a given task. Another limitation of this approach is that it may not be directly applicable to neural-symbolic models that do not relax Boolean formulas via t-norm fuzzy logic operators.

8 Conclusions

This paper presents a framework to embed prior knowledge expressed as logic statements into a learning task, yielding several important contributions. First, we showed how human knowledge in the form of logical rules can be translated into differentiable loss functions used during learning. A critical aspect of our approach is that the translation from logic formulas to loss functions is uniquely determined by the choice of a single operator, i.e. the generator of the corresponding t-norm. This feature clearly distinguishes our approach from the majority of related methods, which are often based on multiple specific choices for each of the fuzzy operators. Second, we have shown that the classical loss functions for supervised learning are naturally recovered within the theory, and that parametric t-norm generators allow the definition of entire classes of loss functions with different convergence properties. The choice of the parameter can therefore be guided by the requirements of the specific application. Third, the presented theory has led to the implementation of a general software simulator, called Deep Fuzzy Logic (DFL), which bridges logic reasoning and deep learning using the unifying concept of t-norm generator as a general abstraction to translate any FOL declarative knowledge into an optimization problem solved in TensorFlow. Finally, we designed and implemented multiple experiments in DFL, which show how the proposed method allows the definition of new loss functions with better performance both in terms of accuracy and training efficiency. Furthermore, by incorporating logical knowledge seamlessly, our method outperforms several related approaches on the task of document classification in citation networks.

As future work, we plan to extend the method by learning the parameters of the t-norm generator from data. In this regard, casting the approach presented in this paper within a Bayesian framework [24] is likely a promising direction. Furthermore, we plan to expand the range of applications of DFL to domains like visual question answering [51] and structure learning [3].

Notes

Since here we only deal with additive generators, we will drop the term “additive” for simplicity.

References

[1] LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436

[2] Selbst A, Powles J (2018) Meaningful information and the right to explanation. In: Conference on fairness, accountability and transparency. PMLR, pp 48–48

[3] De Raedt L, Dumančić S, Manhaeve R, Marra G (2021) From statistical relational to neural-symbolic artificial intelligence. In: Proceedings of the twenty-ninth international conference on international joint conferences on artificial intelligence, pp 4943–4950

[4] Garcez A, Gori M, Lamb L, Serafini L, Spranger M, Tran S (2019) Neural-symbolic computing: an effective methodology for principled integration of machine learning and reasoning. Journal of Applied Logics 6(4):611–631

[5] Diligenti M, Gori M, Sacca C (2017) Semantic-based regularization for learning and inference. Artif Intell 244:143–165

[6] Badreddine S, Garcez AD, Serafini L, Spranger M (2022) Logic tensor networks. Artif Intell 303:103649

[7] Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press

[8] Giannini F, Marra G, Diligenti M, Maggini M, Gori M (2019) On the relation between loss functions and t-norms. In: Proceedings of the conference on inductive logic programming (ILP)

[9] Garcez AD, Bader S, Bowman H, Lamb LC, De Penning L, Illuminoo B, Poon H, Gerson Zaverucha C (2022) Neural-symbolic learning and reasoning: a survey and interpretation. Neuro-Symbolic Artificial Intelligence: The State of the Art 342:1

[10] Hitzler P (2022) Neuro-symbolic artificial intelligence: the state of the art

[11] De Raedt L, Kersting K, Natarajan S, Poole D (2016) Statistical relational artificial intelligence: logic, probability, and computation. Synthesis Lectures on Artificial Intelligence and Machine Learning 10(2):1–189

[12] Richardson M, Domingos P (2006) Markov logic networks. Mach Learn 62(1):107–136

[13] Bach SH, Broecheler M, Huang B, Getoor L (2017) Hinge-loss Markov random fields and probabilistic soft logic. J Mach Learn Res 18:1–67

[14] Niu F, Ré C, Doan A, Shavlik J (2011) Tuffy: scaling up statistical inference in Markov logic networks using an RDBMS. Proceedings of the VLDB Endowment 4(6)

[15] Chekol MW, Huber J, Meilicke C, Stuckenschmidt H (2016) Markov logic networks with numerical constraints. In: Proceedings of the twenty-second European conference on artificial intelligence, pp 1017–1025

[16] Qu M, Bengio Y, Tang J (2019) GMNN: graph Markov neural networks. In: International conference on machine learning. PMLR, pp 5241–5250

[17] Khot T, Balasubramanian N, Gribkoff E, Sabharwal A, Clark P, Etzioni O (2015) Exploring Markov logic networks for question answering. In: Proceedings of the 2015 conference on empirical methods in natural language processing, pp 685–694

[18] Gayathri K, Easwarakumar K, Elias S (2017) Probabilistic ontology based activity recognition in smart homes using Markov logic network. Knowl-Based Syst 121:173–184

[19] Marra G, Kuželka O (2021) Neural Markov logic networks. In: Uncertainty in artificial intelligence. PMLR, pp 908–917

[20] Diligenti M, Giannini F, Gori M, Maggini M, Marra G (2021) A constraint-based approach to learning and reasoning. In: Neuro-symbolic artificial intelligence: the state of the art, pp 192–213

[21] Marra G, Giannini F, Diligenti M, Gori M (2019) LYRICS: a general interface layer to integrate logic inference and deep learning. In: Proceedings of the joint European conference on machine learning and knowledge discovery in databases (ECML/PKDD)

[22] Xu J, Zhang Z, Friedman T, Liang Y, Van den Broeck G (2018) A semantic loss function for deep learning with symbolic knowledge. In: International conference on machine learning. PMLR, pp 5502–5511

[23] van Krieken E, Acar E, van Harmelen F (2019) Semi-supervised learning using differentiable reasoning. Journal of Applied Logics—IfCoLog Journal of Logics and their Applications 6(4)

[24] Marra G, Giannini F, Diligenti M, Gori M (2019) Integrating learning and reasoning with deep logic models. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 517–532

[25] Marra G, Diligenti M, Giannini F, Gori M, Maggini M (2020) Relational neural machines. In: Proceedings of the European conference on artificial intelligence (ECAI)

[26] Manhaeve R, Dumancic S, Kimmig A, Demeester T, De Raedt L (2018) DeepProbLog: neural probabilistic logic programming. Adv Neural Inf Process Syst 31

[27] Sourek G, Aschenbrenner V, Zelezny F, Schockaert S, Kuzelka O (2018) Lifted relational neural networks: efficient learning of latent relational structures. J Artif Intell Res 62:69–100

[28] Rocktäschel T, Riedel S (2017) End-to-end differentiable proving. In: Advances in neural information processing systems, pp 3788–3800

[29] Minervini P, Riedel S, Stenetorp P, Grefenstette E, Rocktäschel T (2020) Learning reasoning strategies in end-to-end differentiable proving. In: ICML

[30] Serafini L, Donadello I, Garcez AD (2017) Learning and reasoning in logic tensor networks: theory and application to semantic image interpretation. In: Proceedings of the symposium on applied computing. ACM, pp 125–130

[31] Giannini F, Diligenti M, Gori M, Maggini M (2018) On a convex logic fragment for learning and reasoning. IEEE Transactions on Fuzzy Systems

[32] van Krieken E, Acar E, van Harmelen F (2020) Analyzing differentiable fuzzy implications. In: KR2020: Proceedings of the 17th Conference on Principles of Knowledge Representation and Reasoning. Rhodes, Greece. September 12–18, 2020. IJCAI Organization, pp 893–903

[33] van Krieken E, Acar E, van Harmelen F (2022) Analyzing differentiable fuzzy logic operators. Artif Intell 302:103602

[34] Donadello I, Serafini L, d’Avila Garcez A (2017) Logic tensor networks for semantic image interpretation. In: IJCAI International joint conference on artificial intelligence, pp 1596–1602

[35] Klement EP, Mesiar R, Pap E (2013) Triangular norms, vol 8

[36] Hájek P (2013) Metamathematics of fuzzy logic, vol 4

[37] Jenei S (2002) A note on the ordinal sum theorem and its consequence for the construction of triangular norms. Fuzzy Sets Syst 126(2):199–205

[38] Mizumoto M (1989) Pictorial representations of fuzzy connectives, part I: cases of t-norms, t-conorms and averaging operators. Fuzzy Sets Syst 31(2):217–242

[39] Marra G, Giannini F, Diligenti M, Gori M (2019) Constraint-based visual generation. In: International conference on artificial neural networks. Springer, pp 565–577

[40] Diligenti M, Roychowdhury S, Gori M (2018) Image classification using deep learning and prior knowledge. In: Proceedings of third international workshop on declarative learning based programming (DeLBP)

[41] Novák V, Perfilieva I, Mockor J (2012) Mathematical principles of fuzzy logic, vol 517

[42] Kolb S, Teso S, Passerini A, De Raedt L (2018) Learning SMT(LRA) constraints using SMT solvers. In: IJCAI, pp 2333–2340

[43] Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M et al (2016) TensorFlow: a system for large-scale machine learning. In: OSDI, vol 16, pp 265–283

[44] Fakhraei S, Foulds J, Shashanka M, Getoor L (2015) Collective spammer detection in evolving multi-relational social networks. In: Proceedings of the 21st ACM SIGKDD international conference on knowledge discovery and data mining. KDD ’15, pp 1769–1778. https://doi.org/10.1145/2783258.2788606

[45] Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980

[46] Belkin M, Niyogi P, Sindhwani V (2006) Manifold regularization: a geometric framework for learning from labeled and unlabeled examples. J Mach Learn Res 7(Nov):2399–2434

[47] Neville J, Jensen D (2000) Iterative classification in relational data. In: Proc. AAAI-2000 workshop on learning statistical models from relational data, pp 13–20

[48] Lu Q, Getoor L (2003) Link-based classification. In: Proceedings of the 20th international conference on machine learning (ICML-03), pp 496–503

[49] Sen P, Namata G, Bilgic M, Getoor L, Galligher B, Eliassi-Rad T (2008) Collective classification in network data. AI Mag 29(3):93

[50] Ketkar N (2017) Introduction to PyTorch. In: Deep learning with Python, pp 195–208

[51] Yi K, Wu J, Gan C, Torralba A, Kohli P, Tenenbaum JB (2018) Neural-symbolic VQA: disentangling reasoning from vision and language understanding. In: Advances in neural information processing systems (NIPS)

Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 825619. This work was also supported by TAILOR, a project funded by EU Horizon 2020 research and innovation programme under GA No 952215. Giuseppe Marra is funded by Research Foundation-Flanders (FWO-Vlaanderen, 1239422N). This project has received funding from the European Union’s Horizon-MSCA-2021 research and innovation program under grant agreement No 101073307.

Ethics declarations

Conflict of Interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Francesco Giannini, Michelangelo Diligenti and Giuseppe Marra contributed equally to this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Giannini, F., Diligenti, M., Maggini, M. et al. T-norms driven loss functions for machine learning. Appl Intell 53, 18775–18789 (2023). https://doi.org/10.1007/s10489-022-04383-6

DOI: https://doi.org/10.1007/s10489-022-04383-6