Abstract

One of the key design requirements for any portable or mobile device is low power consumption. To enable such a low-power device, we propose an embedded gesture detection system that applies spiking neural networks (SNNs) directly to the raw ADC data of a 60 GHz frequency-modulated continuous-wave (FMCW) radar. SNNs can facilitate low-power systems because they are sparse in time and space and are event-driven. Unlike earlier state-of-the-art methods, the proposed system relies solely on the target’s raw ADC data, thus avoiding the overhead of slow-time and fast-time fast Fourier transform (FFT) processing. The proposed architecture mimics the discrete Fourier transform within the SNN itself, which avoids the need for FFT accelerators and tailors the FFT processing to the specific application, in this case gesture sensing. The experimental results demonstrate that the proposed system classifies 8 different gestures with an accuracy of 98.7%. This accuracy is comparable to that of conventional approaches, while the proposed system offers lower complexity, lower power consumption and faster computation.

1 Introduction

Gesture sensing technology allows users to interact with machines more easily and intuitively than conventional mouse and touch-screen systems [1], enabling applications in gaming, TVs, smart homes, and automotive systems. Gesture sensing is primarily dominated by vision-based systems, in which advanced computer vision techniques are applied to large volumes of data obtained with camera sensors [2,3,4,5]. However, camera-based solutions raise privacy concerns because they operate on photos and videos. Furthermore, they require suitable lighting and, in outdoor scenarios, suitable weather conditions, which limits their application. Non-vision-based solutions have been devised to overcome these constraints. In non-vision-based systems, specialized sensors in the form of gloves or bands are commonly attached to human subjects to record hand motion, and gestures are recognized by analyzing the data received from these sensors [6,7,8,9,10]. Although these systems have been shown to overcome the limitations of vision-based systems, wearing such sensors is cumbersome for users.

To overcome the aforementioned limitations, contactless non-vision-based systems are becoming more prevalent. Among them, radar-based technologies are favorable because they are insensitive to lighting conditions, invariant to occlusion of the hand, and have a simple signal processing pipeline [11,12,13]. Additionally, owing to its ability to preserve privacy, work inside enclosures and detect fine motions, radar has become a sensor of choice for gesture sensing applications. There are two key research directions in radar-based gesture sensing: one is developing efficient miniature hardware that can produce high-fidelity target data [14,15,16,17,18,19,20,21], and the other is the signal processing pipeline, mostly driven by deep learning techniques [22,23,24,25,26,27,28,29,30,31].

With the current adoption of radars in IoT devices, radar-based techniques have focused on energy efficiency, the key requirement in IoT [32,33,34]. Effective gesture identification has been demonstrated with a tiny radar chip incorporated into a mobile phone and a small CNN that fits resource-constrained CPUs [35]. TinyRadarNN [36] pairs a 2D CNN with a temporal convolutional neural network (TCN) to detect gestures from range-frequency Doppler features on low-power wearable devices. Similarly, in [37], additional features including range, Doppler, elevation and azimuth are fed to a CNN paired with recurrent neural networks (RNNs) to improve robustness. In [38], a tiny CNN has been developed as an embedded solution for hand gesture recognition to minimize power consumption and computational costs. Furthermore, to capture the micro-motion dynamics of gestures [39,40,41], micro-Doppler [42] signatures are used to classify minute gestures such as finger gestures. The potential of radar is not limited to recognizing hand swipes; [43,44,45] demonstrate its capabilities in air-writing applications as well, where characters or phrases drawn on a virtual board in front of the radar are recognized and categorized.

While traditional deep neural networks (deepNets) remain the best candidates for detection and recognition, their energy efficiency during inference remains a concern [46], particularly on edge devices. The multiply-accumulate (MAC) operations between the layers of deepNets account for the bulk of the energy, and research efforts focus mainly on reducing MACs by employing smaller networks, pruning, and weight quantization.

Spiking neural networks (SNNs) [47] have recently gained popularity for their energy efficiency, owing to the availability of hardware that can run SNNs efficiently. In contrast to deepNets, SNNs communicate information through spike timing, which encompasses latencies and spike rates. Communication in an SNN is highly sparse, as information is only transmitted when the membrane potential of a node (neuron) reaches a specific threshold. Additionally, this sparse, 1-bit communication significantly reduces the volume of data exchanged between nodes. Furthermore, since nodes only integrate incoming spikes, MAC arrays can be replaced with adders, significantly reducing the amount of computation. Despite the energy efficiency of SNNs [48,49,50,51], training them is challenging because of their non-differentiable transfer function.

Since conventional backpropagation cannot be applied directly, local unsupervised learning rules, mostly spike-timing-dependent plasticity (STDP) and its variants, are used [52]. However, these methods only support small networks with few parameters. Although recent advances have shown very promising results with STDP [53], larger networks are mostly trained in the deepNet fashion, with backpropagation using differentiable approximations of the spiking neurons [54]. Among the existing SNN neuron models, the leaky integrate-and-fire (LIF) model [55] is the best known and is the one used in this paper. The LIF is a good choice for developing SNN models since it is simple and easy to implement [56], requiring few computations (floating-point operations) while retaining neuro-computational features. Applications of SNNs in radar-based gesture sensing have been shown in [57,58,59,60], where range-Doppler features are fed to SNNs for robust gesture recognition and classification.

In contrast to approaches that operate on range-Doppler features, in [61] we proposed an SNN-based gesture recognition system that works only on raw data, where the fast-time FFT is mimicked in the SNN itself. In this paper, we extend that approach so that both the fast-time and slow-time FFTs are mimicked in the SNN, allowing the system to classify up to 8 gestures. The main contributions of this paper are as follows:

-

An end-to-end radar-based gesture sensing system is proposed where the SNN takes the raw data and performs gesture recognition.

-

Unlike [57,58,59,60], which work on Doppler images, the proposed approach relies only on raw ADC data. Pre-processing steps such as slow-time and fast-time FFTs are not required, which removes their computational overhead and the need for additional computational units.

-

As an advancement over [61], which classifies only 4 gestures, the proposed approach mimics not only the fast-time FFT but also the slow-time FFT in the SNN, enabling the system to classify up to 8 gestures.

-

A novel SNN architecture is proposed in which the signal pre-processing (slow-time FFT, fast-time FFT) is mimicked in the SNN.

2 System design

2.1 Hardware

For our experiments, we use the hardware platform developed by Infineon Technologies [62], shown in Fig. 1.

The simplified internal circuitry of the radar chipset is shown in Fig. 2. It consists of 1 transmit (Tx) antenna path, 3 receive (Rx) paths, mixers and analog-to-digital converters (ADCs). An external phase-locked loop is used for linear frequency sweeping. An 80 MHz reference oscillator controls the loop, and the finite state machine (FSM) is clocked by the 80 MHz reference clock [63]. The tuning voltage of the voltage-controlled oscillator (VCO) is varied from 1 to 4.5 V to generate linear frequency sweeps from 57 GHz to 63 GHz, enabling the chipset to transmit signals with up to 6 GHz of bandwidth. A Serial Peripheral Interface (SPI) and a Queued Serial Peripheral Interface (QSPI) are provided for memory readout, with a maximum readout data rate of 200 Mb/s (4 × 50 Mb/s). Data is streamed out when the FSM issues an interrupt (IRQ) flag upon reaching a threshold set by the host. Each mixer is followed by a high-pass filter, a variable gain amplifier (VGA), an anti-aliasing filter (AAF) and an ADC driver. The multi-channel ADC (MADC) is a 12-bit successive approximation register (SAR) ADC operating at up to 4 MS/s, and a 196 kbit static random-access memory (SRAM) stores the raw data. Temperature and transmit power are read out by a sensor ADC (SADC) [63].

For further hardware details, readers can refer to [63].

2.2 System design

The experimental setup used in this paper is shown in Fig. 3. A 60 GHz radar, configured with the parameters shown in Table 1, collects the hand gesture data in the form of raw ADC samples. The raw ADC data is then fed to a PC via USB, where the signal processing steps take place.

The start and end of each gesture are detected automatically. When the radar detects the hand, recording starts, and the end of the gesture is marked once 32 consecutive frames have been recorded. Shorter, quicker gestures that span fewer than 32 frames are padded with zero-valued chirps.
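The fixed 32-frame windowing rule can be sketched in Python as follows. This is a minimal illustration rather than the authors' code: it assumes each frame contributes a single chirp (as stated in the discussion section) stored as a list of raw ADC samples, and the function name `pad_gesture` is hypothetical.

```python
N_FRAMES = 32  # fixed gesture window length (frames) used in the text


def pad_gesture(chirps, n_frames=N_FRAMES):
    """Return exactly n_frames chirps: truncate long recordings, zero-pad short ones.

    `chirps` is a list of frames, each holding one chirp of raw ADC samples
    (one chirp per frame is an assumption taken from the discussion section).
    """
    if not chirps:
        raise ValueError("a gesture needs at least one recorded chirp")
    n_samples = len(chirps[0])
    window = [list(chirp) for chirp in chirps[:n_frames]]
    # Append zero-valued chirps until the window holds n_frames rows.
    window.extend([0.0] * n_samples for _ in range(n_frames - len(window)))
    return window


short_gesture = [[1.0, 2.0, 3.0]] * 10   # toy gesture lasting 10 frames
padded = pad_gesture(short_gesture)
print(len(padded), padded[10])           # 32 [0.0, 0.0, 0.0]
```

Padding at the end keeps the recorded chirps aligned with the start of the window, so every input to the network has the same Fn = 32 frames.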

2.3 Signal processing

2.3.1 Signal model

A frequency-modulated continuous-wave (FMCW) radar transmits chirps, i.e., waves whose frequency increases linearly with time. The chirps reflected from a target are collected at a receiver antenna. A mixer at the receiver mixes the transmitted and received signals, and the low-pass filtered result is known as the intermediate frequency (IF) signal. For a chirp of bandwidth Bw and duration T, the instantaneous frequency of the FMCW waveform can be expressed as:

\[ f(t) = \nu_{c} + \frac{B_{w}}{T}\,t, \qquad 0 \le t \le T, \]

where νc is the ramp start frequency. The beat signal is formed by mixing the reflected signal with a replica of the transmitted signal. The down-converted IF signal is given as:

under the assumption τm/T ≪ 1. Here, \(\tau_{m} = \frac{2(R_{m} + v_{m}t)}{c}\) is the time taken by the transmitted signal to return to the receiver after reflecting from the mth target, located at distance Rm from the radar and moving with radial velocity vm, and the constant c is the speed of light. The IF signal IF(t) is sampled and fed to the subsequent processing steps.
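To make the signal model concrete, the following Python sketch evaluates the beat frequency that the linear chirp produces for a single static target (vm = 0), namely the chirp slope Bw/T multiplied by the round-trip delay 2R/c, together with the range resolution c/(2Bw). The 6 GHz bandwidth is the full 57 GHz to 63 GHz sweep of the chipset (Sect. 2.1); the chirp duration and the target range are hypothetical illustration values, not the Table 1 configuration.

```python
C = 299_792_458.0  # speed of light (m/s)


def beat_frequency(range_m, bandwidth_hz, chirp_s):
    """IF (beat) frequency of a static target: slope B_w/T times round-trip delay 2R/c."""
    return (bandwidth_hz / chirp_s) * (2.0 * range_m / C)


def range_from_beat(f_beat_hz, bandwidth_hz, chirp_s):
    """Invert the relation above: R = f_b * c * T / (2 * B_w)."""
    return f_beat_hz * C * chirp_s / (2.0 * bandwidth_hz)


def range_resolution(bandwidth_hz):
    """Targets closer together than c / (2 * B_w) fall into the same range bin."""
    return C / (2.0 * bandwidth_hz)


B_W = 6e9         # full 57-63 GHz sweep of the chipset (Sect. 2.1)
T_CHIRP = 100e-6  # hypothetical chirp duration, not the Table 1 value
f_b = beat_frequency(0.30, B_W, T_CHIRP)  # hand assumed 30 cm from the radar
print(f"beat frequency: {f_b / 1e3:.1f} kHz")                     # 120.1 kHz
print(f"range resolution: {range_resolution(B_W) * 100:.2f} cm")  # 2.50 cm
```

Because the beat frequency is proportional to range, a DFT over the samples of one chirp (fast time) separates targets by range; this is the transform that the first SNN layer later mimics.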

2.3.2 Raw data

The sampled IF(t), i.e., the raw ADC data from the radar chipset, is collected chirp-wise (fast time), and the chirps are stacked in rows (slow time). A frame is thus represented by a 2D array in which each row is a chirp.

2.3.3 Moving target indication filtering

In radar, signals reflected from stationary objects can have a greater magnitude than the target reflection, thus masking the reflections of the target (in our case, the hand). To eliminate these reflections from stationary objects, we use moving target indication (MTI). In MTI, a moving average filter is applied at each frame i to the fast-time data f(i), which is mathematically given as:

where α denotes the forget factor, set to 0.01. Once the data is filtered using MTI, it is fed to the target detection block.
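Because the MTI equation itself is not reproduced in this text, the following Python sketch assumes a common exponential-moving-average form: a clutter estimate c(i) = α f(i) + (1 − α) c(i − 1) is tracked per fast-time sample, and the filtered frame is f(i) − c(i). The exact form used by the authors may differ, and initializing the clutter estimate with the first frame is also an assumption.

```python
def mti_filter(frames, alpha=0.01):
    """Remove static clutter from a sequence of fast-time frames.

    Assumed form: clutter(i) = alpha * f(i) + (1 - alpha) * clutter(i - 1),
    output(i) = f(i) - clutter(i); alpha is the forget factor (0.01 in the text).
    """
    clutter = list(frames[0])  # assumption: start the estimate from the first frame
    filtered = []
    for frame in frames:
        clutter = [alpha * x + (1.0 - alpha) * c for x, c in zip(frame, clutter)]
        filtered.append([x - c for x, c in zip(frame, clutter)])
    return filtered


# A static reflector (bin 0) is suppressed; a sudden change in bin 1 passes through.
frames = [[1.0, 1.0]] * 50 + [[1.0, 5.0]]
out = mti_filter(frames)
print(out[-1])  # bin 0 stays near 0, bin 1 keeps most of the change (about 3.96)
```

A small forget factor makes the clutter estimate adapt slowly, so a moving hand changes the frame much faster than the estimate and survives the subtraction.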

2.3.4 Target detection

For hand detection, thresholding is applied to the filtered fast-time data. The threshold is the mean value of the fast-time data multiplied by a scaling factor. The threshold 𝜗 at frame j is given by:

where β is the scaling factor, set to 3. The scaling factor is obtained empirically as a trade-off between the false-alarm rate and the probability of detection. The index n runs along the range bins, Nb is the number of range bins, and fn is calculated when there is no target in the jth bin. As soon as a moving target is detected, the filtered raw data is collected and fed to the SNN block for gesture classification.
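A minimal Python sketch of this detection rule follows. Since the threshold equation is not reproduced here, it assumes that 𝜗 is β times the mean absolute value of the target-free fast-time data over the Nb bins (the use of absolute values is an assumption) and that a frame is flagged when any bin exceeds 𝜗. The function names are illustrative.

```python
def detection_threshold(background, beta=3.0):
    """theta = beta * (1 / N_b) * sum_n |f_n|, computed from target-free data."""
    return beta * sum(abs(x) for x in background) / len(background)


def target_present(frame, threshold):
    """Flag a moving target if any filtered bin exceeds the threshold."""
    return any(abs(x) > threshold for x in frame)


theta = detection_threshold([0.25, -0.125, 0.5, -0.125])  # noise-only data
print(theta)                                             # 0.75
print(target_present([0.1, 0.9, -0.2, 0.0], theta))      # True
print(target_present([0.1, 0.5, -0.7, 0.0], theta))      # False
```

Raising β lowers the false-alarm rate at the cost of missing weak hand reflections, which is the trade-off the empirical choice β = 3 balances.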

3 Spiking neural networks

Spiking neural networks (SNNs) are the third generation of neural networks; they advance on previous generations by more closely capturing the neuro-computational features of the human nervous system [47]. Neurons in SNNs are computationally more powerful because SNNs take into account not only spatial information but also temporal information (the precise timing of spikes) [64]. SNNs are well suited to large-scale data processing due to their low power consumption and ability to perform parallel analysis [65]. Their low power consumption, quick inference, and event-driven information processing make them a strong candidate for machine learning applications [57,58,59] where low energy consumption is desired.

Different neuron models exist, and their computational efficiency and biological plausibility are discussed in [66]. Among them, the LIF model is popular because it is simple, requires few computations and is biologically plausible.
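To illustrate LIF dynamics, here is a minimal discrete-time simulation in Python. It uses forward-Euler integration of τRC dv/dt = J − v with a normalized firing threshold of 1, a reset to 0 after each spike and a refractory period. The time constants (20 ms membrane, 2 ms refractory, 1 ms step) are assumed illustration values, not parameters from this paper.

```python
def simulate_lif(current, n_steps, dt=0.001, tau_rc=0.02, tau_ref=0.002):
    """Return a 0/1 spike train for a LIF neuron driven by a constant input current."""
    ref_steps = round(tau_ref / dt)   # refractory period expressed in time steps
    v, refractory, spikes = 0.0, 0, []
    for _ in range(n_steps):
        if refractory > 0:            # neuron stays silent while refractory
            refractory -= 1
            spikes.append(0)
            continue
        v += dt / tau_rc * (current - v)  # leaky integration toward the input
        if v >= 1.0:                  # threshold crossing emits a spike
            spikes.append(1)
            v = 0.0                   # reset the membrane potential
            refractory = ref_steps
        else:
            spikes.append(0)
    return spikes


for j in (0.9, 1.5, 3.0):
    print(j, sum(simulate_lif(j, 1000)), "spikes in 1 s")
```

Stronger input currents produce higher firing rates, while sub-threshold inputs (J < 1) never fire; only additions and a multiplication by the constant dt/τRC are needed per step.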

4 Proposed spiking neural network architecture

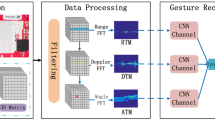

The aim of the proposed architecture is to be efficient in terms of computing power and energy. Therefore, we opted for the LIF neuron model. The proposed SNN architecture is shown in Fig. 4. Since the LIF is not differentiable, backpropagation cannot be applied directly; we therefore use a differentiable approximation of the LIF.

The proposed spiking neural network architecture, where the slow-time and fast-time FFTs are mimicked in the first layers of the SNN, followed by a convolutional layer and dense layers for classification. Block a) represents the multiplication of the input with the real coefficients of the FFT, resulting in Yr, and block b) the multiplication of the input with the imaginary coefficients of the FFT, resulting in Yi; together they mimic the range FFT. Similarly, block c) represents the multiplication of Yr with the real coefficients of the FFT, resulting in Yrr, and block d) the multiplication of Yr with the imaginary coefficients, resulting in Yri. Blocks e) and f) represent the multiplication of Yi with the real and imaginary coefficients, resulting in Yir and Yii, respectively. Subsequently, the outputs Yr, Yi, Yrr, Yri, Yir and Yii are concatenated and fed to the convolutional layer, followed by the dense and output layers.

To mimic the discrete Fourier transform (DFT) in SNN layers, we exploit the fact that the DFT is a linear transformation and can therefore be expressed as a matrix multiplication. Each DFT dimension is represented by a single dense layer whose weights are the real and imaginary parts of the DFT coefficients. Let the radar operate with Fn frames and Sn samples per chirp, so that the input data has dimension Sn × Fn. To perform the DFT on this input, the first layer contains 2 × Sn nodes that compute the real and imaginary values, giving 2Sn × Sn connections between the input nodes and the layer nodes. The connection weights are calculated from the trigonometric form of the DFT, expressed as:

where p and q take values between 0 and Sn − 1. When applied to an input Y, (6) can be written in matrix form as:

where C is the result of the transform, and WR and WI are the matrices of real and imaginary coefficients.
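The following Python sketch builds the real and imaginary weight matrices for a DFT of length Sn and checks that bias-free dense layers computing x^T WR and x^T WI reproduce the complex DFT of a single chirp. It assumes the standard sign convention e^(−j2πpq/Sn), i.e. WR[p][q] = cos(2πpq/Sn) and WI[p][q] = −sin(2πpq/Sn); in the SNN these products are followed by LIF activations, which the sketch omits.

```python
import cmath
import math


def dft_weights(n):
    """Real and imaginary DFT coefficient matrices of size n x n."""
    wr = [[math.cos(2 * math.pi * p * q / n) for q in range(n)] for p in range(n)]
    wi = [[-math.sin(2 * math.pi * p * q / n) for q in range(n)] for p in range(n)]
    return wr, wi


def dense(x, w):
    """Bias-free dense layer: y[q] = sum_p x[p] * w[p][q], i.e. x^T W."""
    return [sum(x[p] * w[p][q] for p in range(len(x))) for q in range(len(w[0]))]


def reference_dft(x):
    """Direct complex DFT, used only to check the real-valued layers."""
    n = len(x)
    return [sum(x[p] * cmath.exp(-2j * math.pi * p * q / n) for p in range(n))
            for q in range(n)]


chirp = [0.5, 1.0, -0.25, 0.75, 0.0, -1.0, 0.3, 0.1]  # toy chirp, Sn = 8
wr, wi = dft_weights(len(chirp))
yr, yi = dense(chirp, wr), dense(chirp, wi)  # together: the 2 * Sn first-layer outputs
ref = reference_dft(chirp)
print(max(abs(complex(r, i) - z) for r, i, z in zip(yr, yi, ref)))  # rounding error only
```

Stacking WR and WI side by side gives a single Sn × 2Sn weight matrix, matching the 2Sn × Sn connection count stated above; because the weights are fixed trigonometric values, they can either be frozen or used as an initialization that training adapts to the task.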

Since in radar processing the second FFT is applied across slow time, the output of the first layer is reshaped and then transposed using a transpose layer. The same formulation is then applied in the third layer, where the real and imaginary parts of the first layer's output are separately connected to real and imaginary weights calculated with the trigonometric expression (6). Let Yr denote the transformation \(Y^{T}W_{R}\) and Yi the transformation \(Y^{T}W_{I}\); then at layer 3 the following transformations occur:

LIF activations are applied to the first and third layers, transforming the output of each neuron into spikes. Let the third layer's output be represented as:

then the outputs of the first and third layers are concatenated as:

and ϕ is provided to the convolutional layer, where each transformation is represented as a channel, making ϕ a six-channel input. The convolutional layer has 16 filters of size 3 with a stride of 1. Its output is then fed to a fully connected layer of 64 neurons. Both the convolutional and the fully connected layer use LIF as the activation function. Finally, a fully connected layer with 8 neurons forms the model output.
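The slow-time stage can be checked in the same way. The Python sketch applies the fast-time weights along each frame, transposes, applies the slow-time weights to Yr and Yi separately, and verifies that the four products combine into the full 2D DFT as Yrr − Yii (real part) and Yri + Yir (imaginary part). Here x is arranged as Fn × Sn (one chirp per frame), playing the role of Y^T in the text; the sign convention and the explicit recombination are assumptions made only for this check, since the network itself keeps the six outputs as separate channels.

```python
import cmath
import math


def dft_weights(n):
    wr = [[math.cos(2 * math.pi * p * q / n) for q in range(n)] for p in range(n)]
    wi = [[-math.sin(2 * math.pi * p * q / n) for q in range(n)] for p in range(n)]
    return wr, wi


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def two_stage_dft(x):
    """x: Fn x Sn (rows = frames / slow time, columns = ADC samples / fast time)."""
    wr_s, wi_s = dft_weights(len(x[0]))   # fast-time (range) weights, Sn x Sn
    wr_f, wi_f = dft_weights(len(x))      # slow-time (Doppler) weights, Fn x Fn
    yr, yi = matmul(x, wr_s), matmul(x, wi_s)           # layer 1: Fn x Sn each
    yr_t, yi_t = transpose(yr), transpose(yi)           # transpose layer: Sn x Fn
    yrr, yri = matmul(yr_t, wr_f), matmul(yr_t, wi_f)   # layer 3 applied to Yr
    yir, yii = matmul(yi_t, wr_f), matmul(yi_t, wi_f)   # layer 3 applied to Yi
    return yr, yi, yrr, yri, yir, yii


def reference_2d_dft(x):
    """Direct 2D DFT indexed [range bin k][Doppler bin l]."""
    fn, sn = len(x), len(x[0])
    return [[sum(x[f][s] * cmath.exp(-2j * math.pi * (s * k / sn + f * l / fn))
                 for f in range(fn) for s in range(sn))
             for l in range(fn)]
            for k in range(sn)]


x = [[math.sin(0.3 * s + 0.7 * f) for s in range(6)] for f in range(4)]  # Fn=4, Sn=6
yr, yi, yrr, yri, yir, yii = two_stage_dft(x)
ref = reference_2d_dft(x)
err = max(abs(complex(yrr[k][l] - yii[k][l], yri[k][l] + yir[k][l]) - ref[k][l])
          for k in range(6) for l in range(4))
print(err)  # rounding error only
```

Keeping Yrr, Yri, Yir and Yii as separate channels, rather than forming the complex range-Doppler map explicitly, leaves it to the convolutional layer to learn which combinations matter for the gestures.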

4.1 Training

The network is trained with conventional backpropagation using NengoDL [67], which provides a differentiable approximation of the firing rate of the LIF neurons in the form of the SoftLIF [68] activation. Multi-class cross-entropy is used as the objective function for the classification probabilities.
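For reference, the rate approximation used for training can be written down directly. The Python sketch follows the SoftLIF formulation of [68] as we understand it: the steady-state LIF rate r(j) = 1/(τref + τRC log(1 + 1/ρ(j − 1))) with ρ(x) = max(x, 0), where SoftLIF replaces ρ by the smooth softplus γ log(1 + e^(x/γ)). The time constants and the smoothing parameter γ are assumed illustration values, and the NengoDL implementation may differ in detail.

```python
import math

TAU_RC = 0.02    # membrane time constant (s), assumed value
TAU_REF = 0.002  # refractory period (s), assumed value


def lif_rate(j, tau_rc=TAU_RC, tau_ref=TAU_REF):
    """Steady-state LIF firing rate for constant input j (threshold normalized to 1)."""
    if j <= 1.0:
        return 0.0
    return 1.0 / (tau_ref + tau_rc * math.log1p(1.0 / (j - 1.0)))


def soft_lif_rate(j, gamma=0.05, tau_rc=TAU_RC, tau_ref=TAU_REF):
    """SoftLIF: replace max(j - 1, 0) by the smooth gamma * log(1 + exp((j - 1) / gamma))."""
    x = (j - 1.0) / gamma
    rho = gamma * (math.log1p(math.exp(-abs(x))) + max(x, 0.0))  # stable softplus
    if rho == 0.0:  # softplus underflowed for very negative input: rate is zero
        return 0.0
    return 1.0 / (tau_ref + tau_rc * math.log1p(1.0 / rho))


for j in (0.8, 1.0, 1.2, 2.0):
    print(j, round(lif_rate(j), 2), round(soft_lif_rate(j), 2))
```

Away from the threshold both curves agree, while near j = 1 the SoftLIF rate is smooth and has a non-zero gradient, which is what makes backpropagation possible.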

As with ANNs, the learning performance of SNNs depends on a suitable optimizer and weight initialization. We employ adaptive moment estimation (Adam) [69] as the optimizer because it is computationally efficient and has proven to be a good choice for large networks [70].

A softmax classifier with a cross-entropy loss is used. For J training samples belonging to K classes, the cross-entropy is expressed as:

where \({z_{j}^{k}}\) is the true label of training example j for class k, and x is the input example to the model H with weights 𝜃.
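Since the loss equation is not reproduced here, the following Python sketch shows one standard way to compute the softmax cross-entropy for J samples and K classes: the per-sample loss −Σk z_j^k log H_k(x_j; 𝜃), averaged over the J samples. Averaging rather than summing over samples is an assumption, and `logits` stands in for the pre-softmax outputs of H.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one sample's class scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(batch_logits, one_hot_labels):
    """Mean over J samples of -sum_k z_j^k * log H_k(x_j; theta)."""
    loss = 0.0
    for logits, z in zip(batch_logits, one_hot_labels):
        probs = softmax(logits)
        loss -= sum(zk * math.log(pk) for zk, pk in zip(z, probs) if zk > 0)
    return loss / len(batch_logits)


uniform = cross_entropy([[0.0] * 8], [[1] + [0] * 7])
print(uniform)  # log(8): an untrained 8-class model guessing uniformly
```

With 8 gesture classes, a model that assigns equal probability to every class incurs a loss of log 8, which gives a useful baseline for monitoring early training.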

4.2 Testing

To make the network spiking, the SoftLIF rate neurons in the trained model are replaced with spiking LIF neurons. The connection weights and neuron biases for the spiking LIF neurons are taken from the trained model. To obtain an accurate estimate of the spiking neurons' output over time, each test sample is repeated along the time axis and presented to the network for several time steps.
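The effect of presenting an input over several time steps can be illustrated with a toy Python example: one LIF neuron per class is driven by a constant current standing in for the trained network's output-layer input (the currents are hypothetical), spikes are counted over a window, and the class with the most spikes is predicted. With too few steps no neuron has fired yet; with more steps the most strongly driven neuron dominates, mirroring the behavior discussed for Fig. 6.

```python
def lif_spike_counts(currents, n_steps, dt=0.001, tau_rc=0.02, ref_steps=2):
    """Count spikes of one LIF neuron per class, each driven by a constant current."""
    counts = []
    for current in currents:
        v, refractory, n_spikes = 0.0, 0, 0
        for _ in range(n_steps):
            if refractory > 0:          # skip integration during the refractory period
                refractory -= 1
                continue
            v += dt / tau_rc * (current - v)
            if v >= 1.0:                # threshold reached: spike, reset, go refractory
                n_spikes += 1
                v = 0.0
                refractory = ref_steps
        counts.append(n_spikes)
    return counts


def predict(currents, n_steps):
    """Return the index of the most active output neuron, or None if none has fired."""
    counts = lif_spike_counts(currents, n_steps)
    best = max(counts)
    return None if best == 0 else counts.index(best)


class_currents = [1.5, 3.0, 1.2, 2.0]  # hypothetical inputs; class 1 is driven hardest
for steps in (5, 20, 200):
    print(steps, lif_spike_counts(class_currents, steps), predict(class_currents, steps))
```

Longer presentation trades latency for a more reliable readout, which is why the number of simulation steps is a tuning knob at inference time.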

5 Results and discussion

5.1 Dataset

We use the dataset from [57], which contains a total of 4800 hand gesture samples collected from 5 people. The dataset covers 8 different gestures, shown in Fig. 5.

The dataset has 600 samples for each gesture. Both the training and validation data were collected with minimal prior supervision of the users. Furthermore, the data was gathered in a variety of settings, including different locations and environments. We performed 10 trials of the training and testing experiment and report the accuracy averaged over those trials. In each trial, 80% of the dataset is randomly selected for training and the remaining 20% for testing.
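The evaluation protocol (10 random 80/20 splits, with accuracy averaged over trials) can be sketched in Python as follows. `train_and_eval` is a hypothetical callable standing in for training the SNN on the training indices and returning its test accuracy; seeding each trial with its index is an assumption made for reproducibility, and the split is purely random rather than stratified per gesture.

```python
import random


def split_indices(n_samples, train_fraction=0.8, seed=None):
    """Randomly partition sample indices into disjoint training and test sets."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    n_train = round(train_fraction * n_samples)
    return indices[:n_train], indices[n_train:]


def mean_accuracy_over_trials(n_samples, train_and_eval, n_trials=10, train_fraction=0.8):
    """Run n_trials random splits and return the accuracy averaged over trials."""
    accuracies = []
    for trial in range(n_trials):
        train_idx, test_idx = split_indices(n_samples, train_fraction, seed=trial)
        accuracies.append(train_and_eval(train_idx, test_idx))
    return sum(accuracies) / len(accuracies)


train_idx, test_idx = split_indices(4800, seed=0)  # 8 gestures x 600 samples
print(len(train_idx), len(test_idx))               # 3840 960
```

Averaging over several random splits reduces the dependence of the reported accuracy on one particular partition of the 4800 samples.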

5.2 Results

We use classification accuracy to assess the performance of the proposed system. Over the random trials, the proposed system achieves an average accuracy of 98.7%, similar to that of state-of-the-art methods, as shown in Table 2. All methods were applied to the same dataset; [58, 59, 61] use only 4 of its 8 gestures.

5.3 Discussion

In this paper, a radar-based gesture sensing system running on an SNN is proposed. The system does not require conventional radar signal pre-processing steps such as fast-time and slow-time FFTs followed by constant false alarm rate (CFAR) detection; gesture sensing is performed using the raw ADC data only. The fast-time and slow-time FFTs are mimicked within the SNN, and CFAR and other denoising steps are learned by the SNN itself, demonstrating the capabilities of SNNs. SNNs are energy-efficient, fast, scalable and hardware-friendly, which makes them a suitable and cost-effective solution.

Besides avoiding traditional feature engineering (slow-time and fast-time FFTs), which is instead mimicked within the SNN, the proposed method works with only a single chirp per frame, whereas state-of-the-art methods rely on 32 chirps per frame. This reduces the raw data per frame by a factor of 32 and thus significantly reduces the computational cost.

Fig. 6 shows the firing behavior of the proposed system over time for examples from each class. For every example, the model initially fires with roughly similar probabilities for all classes but, after a few time steps, quickly starts firing with a higher probability for the correct class. This is because spikes are integrated over a longer time period, which yields more accurate class predictions.

Firing output of the SNN for examples taken from each class, where the class labels are: a) 0 - down up, b) 1 - up down, c) 2 - left-right, d) 3 - rubbing, e) 4 - right-left, f) 5 - diagonal southwest to northeast, g) 6 - diagonal southeast to northwest, h) 7 - clapping. After a few time steps, the SNN starts firing for the correct class.

Since we aim for a model that is both biologically plausible and computationally efficient, we opted for the LIF model because of its simplicity. As with conventional deepNets or any machine learning model, the learning performance of the SNN depends on hyperparameters. For SNNs, two types of hyperparameters need to be considered: those of the SNN model itself and those at the neuron level. The optimal parameters used for training are shown in Tables 3 and 4. These parameters were obtained by a grid search in which the network was allowed to both overfit and underfit. Furthermore, the optimal parameters used for Adam and the weight initialization of the dense and convolutional layers are shown in Table 1. The convolutional layer weights are drawn from the well-known Xavier initialization [72], which has been shown to be well suited for SNNs [73]. With the parameters given in Tables 3, 4 and 1, the proposed system classifies 8 gestures with an accuracy of 98.7%, similar to that of the state-of-the-art SNNs and deepNets in Table 2.
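As a worked example of Xavier (Glorot) uniform initialization [72], weights are drawn from U(−a, a) with a = sqrt(6/(fan_in + fan_out)). The Python sketch evaluates this bound for the convolutional layer described in Sect. 4, assuming a one-dimensional kernel of size 3 over the 6 input channels, so that fan_in = 3 × 6 and fan_out = 3 × 16. The kernel dimensionality and the choice of the uniform (rather than normal) variant are assumptions, since the text does not specify them.

```python
import math
import random


def glorot_uniform_limit(fan_in, fan_out):
    """Bound a of the Xavier/Glorot uniform distribution U(-a, a)."""
    return math.sqrt(6.0 / (fan_in + fan_out))


def glorot_uniform(fan_in, fan_out, n_weights, seed=0):
    """Draw n_weights values uniformly from [-a, a]."""
    limit = glorot_uniform_limit(fan_in, fan_out)
    rng = random.Random(seed)
    return [rng.uniform(-limit, limit) for _ in range(n_weights)]


kernel, c_in, c_out = 3, 6, 16                   # assumed 1-D kernel of size 3
fan_in, fan_out = kernel * c_in, kernel * c_out  # 18 and 48
conv_weights = glorot_uniform(fan_in, fan_out, kernel * c_in * c_out)
print(round(glorot_uniform_limit(fan_in, fan_out), 4))  # 0.3015
```

Scaling the bound by both fan-in and fan-out keeps the variance of activations and gradients roughly constant across layers, which also keeps the LIF input currents in a useful range at the start of training.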

Figure 7(c) shows the confusion matrix obtained by testing the model on the test set described above. The system classifies gestures 1 and 2 100% correctly, without any confusion. Gesture 3 is confused with gesture 5 in 1.10% of cases, and gesture 4 is confused with gestures 6 and 7 in 0.87% of cases each. The only notable misclassification, around 5.88%, occurs for gesture 5, which is confused with gesture 7. Gesture 6 is confused with gesture 7 in 1.03% of cases, and gesture 7 with gesture 6 in 0.88% of cases. Finally, gesture 8 is confused with gestures 4 and 5 in 0.98% of cases each. The confusion of the proposed system (Model_3) in Fig. 7(c) is noticeably lower than that of Model_1 and Model_2 in Figs. 7(a) and 7(b), respectively. We attribute this reduction to the mimicked FFTs in the first layers (Table 5).

Confusion matrices of the models listed in Table 6, obtained on the test dataset. Model_3 (our proposed model) performs better than the other models, which is attributed to mimicking the FFTs in the first layers. The confusion matrix values are given in percent. The axis labels correspond to the 8 gesture classes: 0 - down up, 1 - up down, 2 - left-right, 3 - rubbing, 4 - right-left, 5 - diagonal southwest to northeast, 6 - diagonal southeast to northwest, 7 - clapping

To further evaluate the performance of our proposed system, we used t-distributed stochastic neighbor embedding (t-SNE) to visualize the high-dimensional feature space. This shows how discriminative the features produced by the network are for each class in the dataset. We fed the output of the last layer before the classification layer into t-SNE together with the associated labels. We varied the number of neurons in this layer in powers of 2 from 2 to 64 and computed the t-SNE embedding for each case, as shown in Fig. 8. Increasing the number of neurons (the dimension of the embedding space) increases separability. With 32 neurons, the proposed SNN learns separable, discriminative features and produces compact clusters for the 8 gestures. This indicates that our SNN can correctly categorize the 8 gesture types with an embedding dimension as low as 32.

Furthermore, Fig. 9(c) shows the discriminative features learned layer by layer. Moving deeper into the network, the features become increasingly discriminative and form compact clusters. At the last layer, the features are well separated and hence easily classified. The importance of mimicking the range and Doppler FFTs in the SNN model is evident from Table 6, which gives the training and testing accuracy of the model with and without the FFTs. Without the FFT layers, the SNN reaches only 81.03% accuracy. Introducing the range FFT layer improves accuracy by 7.87%, and adding the Doppler FFT layer as well, i.e., our proposed method, raises it to 98.7%. The t-SNE plots of the CNN model and the range model also show that their features are less discriminative, resulting in lower performance than the proposed model.

Visualization of the high-dimensional feature spaces of the network layers using t-SNE plots. Column a) shows the t-SNE for the input layer, CNN layer and dense layer of the CNN (Model_1). Similarly, column b) shows the t-SNE for the range model (Model_2), and column c) shows the t-SNE for the layers of the proposed method (Model_3). The features become increasingly discriminative deeper in the network. The legends of the subfigures represent the classes as: 0 - down up, 1 - up down, 2 - left-right, 3 - rubbing, 4 - right-left, 5 - diagonal southwest to northeast, 6 - diagonal southeast to northwest, 7 - clapping

To further increase power efficiency, we examined the effect of post-training quantization on accuracy, as shown in Table 7. Quantization improves power efficiency in two ways: it reduces the memory footprint and the computational cost. Furthermore, quantized data with a lower bit width requires less on-chip and off-chip data movement, resulting in better energy efficiency and reduced memory bandwidth. Table 7 shows the effect of post-training quantization on all 3 models of Table 6. For 4-bit quantization, 1 bit is used for the sign, 2 bits for the integer part and 1 bit for the fractional part. For 8-bit and 16-bit quantization, 1 bit is used for the sign, 3 bits for the integer part and the remaining bits for the fractional part. As expected, accuracy drops for aggressive quantization (4 bits) for all models, and the drop is largest for our proposed model (Model_3). We attribute this to the larger number of neurons in the proposed model, which amplifies the impact of quantization. However, we believe higher accuracy can be achieved with quantization-aware training, which the NengoDL framework currently does not support. Increasing the bit width increases accuracy as expected: with 8-bit and 16-bit quantization, the proposed model achieves 92.68% and 96.46% accuracy, respectively, with good precision and recall as indicated by the F1-scores. For “f1-scores_micro”, the score is computed from the total counts of true positives, false positives and false negatives across all classes; for “f1-scores_macro”, the metric is computed for each class and their unweighted arithmetic mean is taken.
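The fixed-point formats described above can be sketched in Python as follows. The sketch assumes a sign-magnitude representation with round-to-nearest (Python's round-half-to-even) and saturation at the largest representable magnitude 2^I − 2^(−F), where I and F are the integer and fractional bit counts; the paper does not state its rounding or overflow behavior, so these choices are assumptions.

```python
# (integer bits, fractional bits) per total bit width; 1 sign bit is implicit.
FORMATS = {4: (2, 1), 8: (3, 4), 16: (3, 12)}


def quantize(x, int_bits, frac_bits):
    """Quantize x to sign-magnitude fixed point with saturation."""
    step = 2.0 ** -frac_bits           # resolution of the fractional part
    max_mag = 2.0 ** int_bits - step   # largest representable magnitude
    mag = min(round(abs(x) / step) * step, max_mag)
    return -mag if x < 0 else mag


w = 0.3
for bits, (i, f) in FORMATS.items():
    print(bits, quantize(w, i, f))  # 4 -> 0.5, 8 -> 0.3125, 16 -> 0.300048828125
```

With a single fractional bit, the 4-bit format can only represent multiples of 0.5 up to 3.5, which illustrates why accuracy drops sharply at 4 bits and largely recovers at 8 and 16 bits.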

Considering the goal of an energy-efficient system, we examined the energy consumption per classification of the proposed system. Since a hardware-based energy measurement is beyond the scope of this work, we rely on the hardware metrics of the μBrain chip reported in [74] to estimate the energy consumption. If SPN is the maximum number of spikes, SPE = 2.1 pJ is the energy per spike and LKP = 73 μW is the static leakage power, then the energy consumption CE per classification using the μBrain hardware metrics is given as:

\[ CE = SPN \times SPE + LKP \times \delta T, \]

where δT is the inference time. Assuming δT = 28 ms, the energy consumption per classification of our proposed system is approximately CE = 2.1 μJ.
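The estimate can be reproduced with a few lines of Python, assuming the energy model CE = SPN · SPE + LKP · δT (dynamic spike energy plus static leakage over the inference time). The spike count passed in is a hypothetical placeholder. The point of the sketch is that, with the μBrain metrics and δT = 28 ms, the leakage term alone contributes about 2.04 μJ, i.e. most of the quoted 2.1 μJ.

```python
SPE = 2.1e-12  # energy per spike (J), muBrain metric from [74]
LKP = 73e-6    # static leakage power (W), muBrain metric from [74]


def energy_per_classification(n_spikes, inference_time_s, spe=SPE, lkp=LKP):
    """CE = SPN * SPE + LKP * dT  (assumed dynamic-plus-static energy model)."""
    return n_spikes * spe + lkp * inference_time_s


leakage = energy_per_classification(0, 28e-3)       # static part only
print(f"leakage energy: {leakage * 1e6:.3f} uJ")    # 2.044 uJ
example = energy_per_classification(10_000, 28e-3)  # hypothetical spike count
print(f"with 10k spikes: {example * 1e6:.3f} uJ")   # 2.065 uJ
```

Because leakage dominates at this inference time, shortening δT (fewer simulation steps) would reduce the energy per classification more than reducing spike activity would.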

For the energy efficiency of SNN hardware, readers can refer to [75], where SNN hardware is compared with other deep learning hardware in terms of energy efficiency. There, SNN hardware was evaluated on a keyword spotting application in terms of the dynamic energy cost per inference, against several commercially available energy-efficient accelerators, as shown in Fig. 10. The dynamic energy cost per inference is the difference between the total energy consumed by the hardware during a single inference and the energy consumed while the hardware is idle [75]. Here, an inference means passing an input vector through a feed-forward ANN with two hidden layers to predict a probability distribution over alphabetical characters. The authors reported up to a 10× improvement in power efficiency in their experiments.

Dynamic energy cost per inference across hardware devices for a feed-forward neural network with two hidden layers in a keyword spotting application [75]

Despite its simplicity, the proposed prototype SNN solution can identify hand gestures in real time with high accuracy, comparable to state-of-the-art deepNets and SNN counterparts. Additionally, the use of SNNs makes the proposed system a low-power, hardware-friendly solution suitable for applications where low power is required.

6 Conclusion

A novel spiking neural network (SNN)-based gesture sensing system using a 60 GHz radar is proposed. Unlike existing methods that use image-based or point-cloud input data, we directly leverage raw ADC data as input to the SNN. The SNN implicitly mimics the Fourier transform processing, which not only removes the need for additional FFT accelerators but also makes the FFT pre-processing specific to the task, in this case gesture sensing. Compared to the state of the art, the proposed SNN architecture achieves similar accuracy on 8 gestures, making the system suitable for low-latency, low-power embedded implementations. As future work, we would like to investigate how to mimic non-parametric Fourier transforms. Furthermore, we would like to mimic micro-Doppler behavior, which would allow us to classify micro-Doppler gestures.

Data Availability

The data associated with this research cannot be made publicly available due to company confidentiality constraints.

References

Molchanov P et al (2015) Multi-sensor system for driver’s hand-gesture recognition. In: 11th IEEE FG, vol 1, pp 1–8. https://doi.org/10.1109/FG.2015.7163132

Zabulis X et al (2009) Vision-based hand gesture recognition for human-computer interaction. In: The universal access handbook

Ma X, Peng J (2018) Kinect sensor-based long-distance hand gesture recognition and fingertip detection with depth information. J Sens:1–9. https://doi.org/10.1155/2018/5809769

Malima AK et al (2006) A fast algorithm for vision-based hand gesture recognition for robot control. In: 14th IEEE SIU, pp 1–4. https://doi.org/10.1109/SIU.2006.1659822

Tran D-S et al (2020) Real-time hand gesture spotting and recognition using RGB-D camera and 3D convolutional neural network. Appl Sci 10:722. https://doi.org/10.3390/app10020722

Ramalingame R et al (2021) Wearable smart band for American sign language recognition with polymer carbon nanocomposite-based pressure sensors. IEEE Sens Lett 5(6):1–4. https://doi.org/10.1109/LSENS.2021.3081689

Jiang D et al (2020) Hand gesture recognition using three-dimensional electrical impedance tomography. IEEE Trans Circuits Syst II Express Briefs 67(9):1554–1558. https://doi.org/10.1109/TCSII.2020.3006430

Byun S-W, Lee S-P (2019) Implementation of hand gesture recognition device applicable to smart watch based on flexible epidermal tactile sensor array. Micromachines 10(10):692. https://doi.org/10.3390/mi10100692

Georgi M et al (2015) Recognizing hand and finger gestures with IMU-based motion and EMG-based muscle activity sensing. In: Proceedings of the International Joint Conference on BIOSTEC, vol 4, pp 99–108. https://doi.org/10.5220/0005276900990108

Ferrone A et al (2016) Wearable band for hand gesture recognition based on strain sensors. In: 6th IEEE BioRob, pp 1319–1322. https://doi.org/10.1109/BIOROB.2016.7523814

Wang F-K et al (2015) Gesture sensing using retransmitted wireless communication signals based on Doppler radar technology. IEEE TMTT 63(12):4592–4602. https://doi.org/10.1109/TMTT.2015.2495298

Fan T et al (2016) Wireless hand gesture recognition based on continuous-wave Doppler radar sensors. IEEE TMTT 64(11):4012–4020. https://doi.org/10.1109/TMTT.2016.2610427

Zhang Y et al (2021) Hand gesture recognition for smart devices by classifying deterministic Doppler signals. IEEE TMTT 69(1):365–377. https://doi.org/10.1109/TMTT.2020.3031619

Lammert V et al (2020) A 122 GHz ISM-band FMCW radar transceiver. In: 13th GeMiC, pp 96–99

Issakov V, Bilato A, Kurz V, Englisch D, Geiselbrechtinger A (2019) A highly integrated D-band multi-channel transceiver chip for radar applications. In: 2019 IEEE BiCMOS and Compound Semiconductor Integrated Circuits and Technology Symposium (BCICTS), pp 1–4. https://doi.org/10.1109/BCICTS45179.2019.8972781

Rimmelspacher J et al (2020) Low power low phase noise 60 GHz multichannel transceiver in 28 nm CMOS for radar applications. In: IEEE RFIC, pp 19–22. https://doi.org/10.1109/RFIC49505.2020.9218297

Bilato A et al (2021) A multichannel D-band radar receiver with optimized LO distribution. IEEE SSCL 4:141–144. https://doi.org/10.1109/LSSC.2021.3099069

Aguilar E et al (2020) A fundamental-frequency 122 GHz radar transceiver with 5.3 dBm single-ended output power in a 130 nm SiGe technology. In: 2020 IEEE/MTT-S IMS, pp 1215–1218. https://doi.org/10.1109/IMS30576.2020.9223903

Aguilar E et al (2020) Highly-integrated scalable D-band receiver front-end modules in a 130 nm SiGe technology for imaging and radar applications. In: 2020 GeMiC, pp 68–71

Aguilar E et al (2020) A 130 GHz fully-integrated fundamental-frequency D-band transmitter module with > 4 dBm single-ended output power. IEEE Trans Circuits Syst II Express Briefs 67(5):906–910. https://doi.org/10.1109/TCSII.2020.2984597

Issakov V et al (2019) Highly-integrated low-power 60 GHz multichannel transceiver for radar applications in 28 nm CMOS. In: 2019 IEEE MTT-S IMS, pp 650–653. https://doi.org/10.1109/MWSYM.2019.8700977

Molchanov P, Yang X, Gupta S, Kim K, Tyree S, Kautz J (2016) Online detection and classification of dynamic hand gestures with recurrent 3D convolutional neural networks. In: 2016 IEEE CVPR, pp 4207–4215. https://doi.org/10.1109/CVPR.2016.456

Rautaray S et al (2015) Vision based hand gesture recognition for human computer interaction: a survey. Artif Intell Rev

Lien J et al (2016) Soli: ubiquitous gesture sensing with millimeter wave radar. ACM Trans Graph 35(4)

Hazra S, Santra A (2018) Robust gesture recognition using millimetric-wave radar system. IEEE Sens Lett 2(4):1–4

Santra A, Hazra S (2020) Deep learning applications of short-range radars. Artech House

Zhang Z et al (2018) Latern: dynamic continuous hand gesture recognition using FMCW radar sensor. IEEE Sens J

Sun Y et al (2020) Multi-feature encoder for radar-based gesture recognition. In: IEEE RADAR, pp 351–356. https://doi.org/10.1109/RADAR42522.2020.9114664

Kern N et al (2020) Robust Doppler-based gesture recognition with incoherent automotive radar sensor networks. IEEE Sens Lett 4(11):1–4. https://doi.org/10.1109/LSENS.2020.3033586

Altmann M et al (2021) Multi-modal cross learning for an FMCW radar assisted by thermal and RGB cameras to monitor gestures and cooking processes. IEEE Access 9:22295–22303. https://doi.org/10.1109/ACCESS.2021.3056878

Ishak K et al (2020) Human gesture classification for autonomous driving applications using radars. In: IEEE MTT-S ICMIM, pp 1–4. https://doi.org/10.1109/ICMIM48759.2020.9298980

Nguyen MQ et al (2018) Range-gating technology for millimeter-wave radar remote gesture control in IoT applications. In: 2018 IEEE MTT-S IWS, pp 1–4. https://doi.org/10.1109/IEEE-IWS.2018.8400811

Gu C et al (2019) Motion sensing using radar: gesture interaction and beyond. IEEE Microw Mag 20(8):44–57. https://doi.org/10.1109/MMM.2019.2915490

Cai X et al (2021) One-shot radar-based gesture recognizer for fast prototyping. In: 2021 IEEE Sensors, pp 1–4. https://doi.org/10.1109/SENSORS47087.2021.9639694

Hayashi E et al (2021) RadarNet: efficient gesture recognition technique utilizing a miniature radar sensor. In: CHI ’21, pp 1–14

Scherer M et al (2021) TinyRadarNN: combining Spatial and temporal convolutional neural networks for embedded gesture recognition with short range radars. IEEE Internet Things J 8(13):10336–10346. https://doi.org/10.1109/JIOT.2021.3067382

Sun Y et al (2020) Real-time radar-based gesture detection and recognition built in an edge-computing platform. IEEE Sens J 20(18):10706–10716. https://doi.org/10.1109/JSEN.2020.2994292

Ren Y et al (2021) Hand gesture recognition using 802.11ad mmWave sensor in the mobile device. In: IEEE WCNCW, pp 1–6. https://doi.org/10.1109/WCNCW49093.2021.9419978

Helen Victoria A, Maragatham G (2021) Gesture recognition of radar micro-Doppler signatures using separable convolutional neural networks. Materials Today: Proceedings. https://doi.org/10.1016/j.matpr.2021.05.658

Amin MG, Zeng Z, Shan T (2019) Hand gesture recognition based on radar micro-Doppler signature envelopes. In: 2019 IEEE Radar Conference (RadarConf), pp 1–6. https://doi.org/10.1109/RADAR.2019.8835661

Ritchie M, Jones AM (2019) Micro-Doppler gesture recognition using Doppler, time and range based features. In: 2019 IEEE Radar Conference (RadarConf), pp 1–6. https://doi.org/10.1109/RADAR.2019.8835782

Chen VC, Li F, Ho S-S, Wechsler H (2006) Micro-Doppler effect in radar: phenomenon, model, and simulation study. IEEE Trans Aerospace Electr Syst 42(1):2–21. https://doi.org/10.1109/TAES.2006.1603402

Arsalan M, Santra A (2019) Character recognition in air-writing based on network of radars for human-machine interface. IEEE Sens J, PP:1–1. https://doi.org/10.1109/JSEN.2019.2922395

Arsalan M et al (2021) Air-writing with sparse network of radars using spatio-temporal learning. In: 25th ICPR, pp 8877–8884. https://doi.org/10.1109/ICPR48806.2021.9413332

Arsalan M et al (2020) Radar trajectory-based air-writing recognition using temporal convolutional network. In: 19th IEEE ICMLA, pp 1454–1459. https://doi.org/10.1109/ICMLA51294.2020.00225

Sze V et al (2017) Efficient processing of deep neural networks: a tutorial and survey. Proc of the IEEE 105(12):2295–2329. https://doi.org/10.1109/JPROC.2017.2761740

Maass W (1997) Networks of spiking neurons: the third generation of neural network models. Neural Netw 10(9):1659–1671. https://doi.org/10.1016/S0893-6080(97)00011-7

Indiveri G, Horiuchi T (2011) Frontiers in neuromorphic engineering. Front Neurosci 5:118. https://doi.org/10.3389/fnins.2011.00118

Pfeiffer M, Pfeil T (2018) Deep learning with spiking neurons: opportunities and challenges. Front Neurosci 12:774. https://doi.org/10.3389/fnins.2018.00774

Panda P et al (2020) Toward scalable, efficient, and accurate deep spiking neural networks with backward residual connections, stochastic softmax, and hybridization. Front Neurosci 14:653

Nguyen D-A et al (2021) A review of algorithms and hardware implementations for spiking neural networks. J Low Power Electron Appl 11(2). https://doi.org/10.3390/jlpea11020023

Shouval H, Wang S, Wittenberg G (2010) Spike timing dependent plasticity: a consequence of more fundamental learning rules. Front Comput Neurosci 4. https://doi.org/10.3389/fncom.2010.00019

Safa A, Ocket I, Bourdoux A, Sahli H, Catthoor F, Gielen G (2021) A new look at spike-timing-dependent plasticity networks for spatio-temporal feature learning. arXiv:2111.00791. https://doi.org/10.48550

Tavanaei A, Ghodrati M, Kheradpisheh SR, Masquelier T, Maida A (2019) Deep learning in spiking neural networks. Neural Netw 111:47–63. https://doi.org/10.1016/j.neunet.2018.12.002

Dutta S et al (2017) Leaky integrate and fire neuron by charge-discharge dynamics in floating-body MOSFET. Sci Rep 7. https://doi.org/10.1038/s41598-017-07418-y

Lehmann HM, Hille J, Grassmann C, Issakov V (2022) Leaky integrate-and-fire neuron with a refractory period mechanism for invariant spikes. In: 2022 17th conference on Ph.D research in microelectronics and electronics (PRIME), pp 365–368. https://doi.org/10.1109/PRIME55000.2022.9816777

Arsalan M, Santra A, Issakov V (2022) RadarSNN: a resource efficient gesture sensing system based on mm-wave radar. IEEE Trans Microwave Theory Techn:1–11. https://doi.org/10.1109/TMTT.2022.3148403

Arsalan M et al (2021) Resource efficient gesture sensing based on FMCW radar using spiking neural networks. In: 2021 IEEE MTT-S IMS, pp 78–81

Arsalan M et al (2021) Radar-based gesture recognition system using spiking neural network. In: 2021 26th IEEE ETFA. https://doi.org/10.1109/ETFA45728.2021.9613183

Safa A, Bourdoux A, Ocket I, Catthoor F, Gielen GGE (2021) On the use of spiking neural networks for ultralow-power radar gesture recognition. IEEE Microw Wirel Compon Lett:1–4

Arsalan M, Santra A, Issakov V (2022) Spiking neural network-based radar gesture recognition system using raw ADC data. IEEE Sensors Lett:1–1. https://doi.org/10.1109/LSENS.2022.3173589

Infineon Technologies (2011) 60 GHz radar sensor BGT60TR13C. https://www.infineon.com/cms/en/product/sensor/radar-sensors/radar-sensors-for-iot/60ghz-radar/bgt60tr13c/. Accessed 30 June 2022

Trotta S et al (2021) 2.3 Soli: a tiny device for a new human machine interface. In: 2021 IEEE ISSCC, vol 64, pp 42–44. https://doi.org/10.1109/ISSCC42613.2021.9365835

Gerstner W, Kistler WM (2002) Signal transmission and neuronal coding. Cambridge University Press. https://doi.org/10.1017/CBO9780511815706.008

Kasabov N et al (2013) Dynamic evolving spiking neural networks for on-line spatio- and spectro-temporal pattern recognition. Neural Netw

Izhikevich EM (2004) Which model to use for cortical spiking neurons? IEEE Trans Neural Netw 15(5):1063–1070. https://doi.org/10.1109/TNN.2004.832719

NengoDL (2019) https://www.nengo.ai/nengo-dl/. Accessed 30 June 2022

Hunsberger E, Eliasmith C (2016) Training spiking deep networks for neuromorphic hardware. arXiv:1611.05141. https://doi.org/10.13140/RG.2.2.10967.06566

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980. https://doi.org/10.48550

Schmidt RM, Schneider F, Hennig P (2020) Descending through a crowded valley - benchmarking deep learning optimizers. arXiv:2007.01547. https://doi.org/10.48550

Chmurski M et al (2021) Highly-optimized radar-based gesture recognition system with depthwise expansion module. Sensors 21(21). https://doi.org/10.3390/s21217298

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. J Mach Learn Res - Proc Track 9:249–256

Safa A, Corradi F, Keuninckx L, Ocket I, Bourdoux A, Catthoor F, Gielen GGE (2021) Improving the accuracy of spiking neural networks for radar gesture recognition through preprocessing. IEEE Trans Neural Netw Learn Syst:1–13. https://doi.org/10.1109/TNNLS.2021.3109958

Stuijt J et al (2021) μBrain: an event-driven and fully synthesizable architecture for spiking neural networks. Front Neurosci 15:538. https://doi.org/10.3389/fnins.2021.664208

Blouw P, Choo X, Hunsberger E, Eliasmith C (2019) Benchmarking keyword spotting efficiency on neuromorphic hardware. arXiv:1812.01739

Acknowledgements

The authors would like to acknowledge the financial support provided by the Electronic Components and Systems for European Leadership Joint Undertaking under grant agreement No 826655 (Tempo) and by the German Federal Ministry of Education and Research (BMBF) within the KI-ASIC project (16ES0992K).

Funding

Open Access funding enabled and organized by Projekt DEAL. This work has received funding from the Electronic Components and Systems for European Leadership Joint Undertaking under grant agreement No 826655 (Tempo) and by the German Federal Ministry of Education and Research (BMBF) within the KI-ASIC project (16ES0992K).

Ethics declarations

Consent for Publication

We certify that the submission is original and has not been submitted for publication anywhere else.

Conflict of Interest

The manuscript was reviewed and approved by all co-authors, and there are no conflicts of interest to report.

Additional information

Human and animal research disclosure

No animal or human subjects were used while conducting this research.

Competing interest

The authors have no competing interests to declare that are relevant to the content of this paper.

Open Access

This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Arsalan, M., Santra, A. & Issakov, V. Power-efficient gesture sensing for edge devices: mimicking Fourier transforms with spiking neural networks. Appl Intell 53, 15147–15162 (2023). https://doi.org/10.1007/s10489-022-04258-w

DOI: https://doi.org/10.1007/s10489-022-04258-w