Abstract

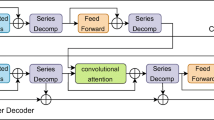

Time series forecasting provides insight into the far future by exploiting available historical observations. Recent studies have demonstrated the superiority of transformer-based models for multivariate long-sequence time series forecasting (MLTSF). However, data complexity limits the forecasting accuracy of current deep neural network models. This article proposes Waveformer, a hybrid framework that decomposes a fluctuating, complex data sequence into multiple stable and more predictable subsequences (components) and keeps this decomposition throughout the entire forecasting process. Waveformer interactively learns temporal dependencies on each pair of decomposed components, which strengthens its ability to capture those dependencies. Moreover, Waveformer treats the implicit and dynamic dependencies among variables as a set of dynamic directed graphs. On this basis, an attention adaptive graph convolution net (AAGCN) is designed, which combines self-attention and adaptive directed graph convolution to capture multivariate dynamic dependencies in a flexible manner. Experimental results on six public datasets show that Waveformer considerably outperforms a wide range of state-of-the-art benchmarks, with a relative improvement of up to 54.3%.
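To make the decomposition idea concrete, the following is a minimal sketch of a one-level discrete wavelet transform and its inverse. It assumes the Haar wavelet and a single univariate series, neither of which is specified in the abstract; the function names `haar_dwt` and `haar_idwt` are hypothetical and are not taken from the Waveformer implementation.

```python
import math


def haar_dwt(x):
    """One-level Haar DWT (assumed wavelet): returns (approximation, detail)."""
    if len(x) % 2 != 0:
        raise ValueError("sequence length must be even")
    s = math.sqrt(2.0)
    # Scaled pairwise sums give the smooth (low-frequency) component.
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    # Scaled pairwise differences give the fluctuating (high-frequency) component.
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt: interleaves the reconstructed sample pairs."""
    s = math.sqrt(2.0)
    x = []
    for a, d in zip(approx, detail):
        x.append((a + d) / s)
        x.append((a - d) / s)
    return x


# Illustrative data only, not drawn from any dataset in the article.
series = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
approx, detail = haar_dwt(series)
restored = haar_idwt(approx, detail)
```

Each component is half the length of the input, and the inverse transform recovers the original series up to floating-point error, so forecasts made per component can in principle be recombined into a forecast of the original signal.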


Data Availability

The datasets generated or analyzed during this study are available via the links given in Section 4.

Notes

Amazon Web Services https://aws.amazon.com/greengrass/

Available at https://github.com/zhouhaoyi/ETDataset.

Available at http://pems.dot.ca.gov.



Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Xiaohu Wang, Yong Wang, Jianjian Peng and Zhicheng Zhang contributed equally to this work.

Appendices

Appendix A: Visualized prediction results

Appendix B: Waveformer model implementation details

| Stage | Configuration | | | |
|---|---|---|---|---|
| STL/DWT | L = 1 | | | |
| Input | 1×3 Conv1d | Embedding (d = 512) | | |
| Encoder: | | | | |
| Dependency extraction block | Multi-head self-attention / multi-head ProbSparse self-attention / FFT fast self-attention (h = 16, d = 32) | Learning multivariate dynamic relations | GCN (d_out = 512) | 1×3 Conv1d |
| | Add, LayerNorm, Dropout (p = 0.1) | | | |
| | Position-wise FFN (d_inner = 2048), GELU | | | |
| | Add, LayerNorm, Dropout (p = 0.1) | | | |
| Distilling | 1×3 Conv1d, ELU | | | |
| | Max pooling (stride = 2) | | | |
| Fusion | Add | | | |
| Interactive learning | d_in = 512 | | | |
| Decoder: | | | | |
| Masked DEB and learning multivariate dynamic relations | Add mask on full self-attention / ProbSparse self-attention / FFT fast self-attention | | | |
| Distilling | 1×3 Conv1d, ELU | | | |
| | Max pooling (stride = 2) | | | |
| Fusion | Add | | | |
| Interactive learning | d_in = 512 | | | |
| Output decoder block | Multi-head self-attention / multi-head ProbSparse self-attention / FFT fast self-attention (h = 8, d = 64) | | | |
| | Add, LayerNorm, Dropout (p = 0.1) | | | |
| | Position-wise FFN (d_inner = 2048), GELU | | | |
| | Add, LayerNorm, Dropout (p = 0.1) | | | |
| Final: | | | | |
| Inverse STL/IDWT | L = 1 | | | |
| Outputs | FCN (d_in = d_model, d_out = m) | | | |
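The distilling stage listed above (1×3 Conv1d, ELU, max pooling with stride 2) can be sketched as follows for a single channel. This is an illustration under stated assumptions, not the authors' implementation: the pooling window of 3 with padding 1 is assumed (the table gives only the stride), the convolution weights are fixed hypothetical values rather than learned parameters, and the real model operates on 512 channels.

```python
import math


def conv1d_same(x, kernel):
    """1-D cross-correlation with zero padding; output length equals input length."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(x))
    ]


def elu(v, alpha=1.0):
    """ELU activation: identity for positive inputs, smooth saturation below zero."""
    return v if v > 0 else alpha * (math.exp(v) - 1.0)


def max_pool1d(x, kernel_size=3, stride=2, padding=1):
    """Max pooling; kernel_size and padding are assumptions, stride follows the table."""
    padded = [-math.inf] * padding + list(x) + [-math.inf] * padding
    out_len = (len(x) + 2 * padding - kernel_size) // stride + 1
    return [
        max(padded[i * stride : i * stride + kernel_size])
        for i in range(out_len)
    ]


def distill(x, kernel):
    """Conv1d (kernel width 3), then ELU, then stride-2 max pooling."""
    activated = [elu(v) for v in conv1d_same(x, kernel)]
    return max_pool1d(activated)


sequence = [0.5, -1.0, 2.0, 3.0, -0.5, 1.0, 0.0, 4.0]  # illustrative input
kernel = [0.25, 0.5, 0.25]  # hypothetical fixed weights; learned in a real model
distilled = distill(sequence, kernel)
```

With these settings the step halves the sequence length (8 inputs yield 4 outputs here), which is what lets stacked layers work on progressively shorter sequences.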


About this article

Cite this article

Wang, X., Wang, Y., Peng, J. et al. A hybrid framework for multivariate long-sequence time series forecasting. Appl Intell 53, 13549–13568 (2023). https://doi.org/10.1007/s10489-022-04110-1
