Abstract

We propose a framework for the assessment of uncertainty quantification in deep regression. The framework is based on regression problems where the regression function is a linear combination of nonlinear functions. Basically, any level of complexity can be realized through the choice of the nonlinear functions and the dimensionality of their domain. Results of an uncertainty quantification for deep regression are compared against those obtained by a statistical reference method. The reference method utilizes knowledge about the underlying nonlinear functions and is based on Bayesian linear regression using a prior reference. The flexibility, together with the availability of a reference solution, makes the framework suitable for defining benchmark sets for uncertainty quantification. Reliability of uncertainty quantification is assessed in terms of coverage probabilities, and accuracy through the size of calculated uncertainties. We illustrate the proposed framework by applying it to current approaches for uncertainty quantification in deep regression. In addition, results for three real-world regression tasks are presented.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Methods from deep learning have made tremendous progress over recent years and are meanwhile routinely applied in many different areas. Examples comprise the diagnosis of cancer [1], autonomous driving [2] or language processing [3, 4]. Physical and engineering sciences also benefit increasingly from deep learning and current applications range from optical form measurements [5] over image quality assessment in mammography [6, 7] or medical imaging in general [8] to applications in weather forecasts and climate modeling [9, 10].

Especially for safety relevant applications, such as medical diagnosis, it is crucial to be able to quantify the uncertainty associated with a prediction. Uncertainty quantification can help to understand the behavior of a trained neural net and, in particular, foster confidence in its predictions. This is especially true for deep regression, where a single point estimate of a sought function without any information regarding its accuracy can be largely meaningless.

Bayesian networks [11,12,13] lend themselves to an uncertainty quantification in a natural way. All network parameters are modeled as random variables, and their posterior distribution can be used to calculate the epistemic part of the uncertainty. However, the shear number of network parameters prohibits the application of established computation techniques such as Markov chain Monte Carlo [14], which have been successfully applied in numerous Bayesian inferences on low-dimensional models. Approximate Bayesian techniques, and in particular Variational Bayesian Inference [11], have therefore been suggested for deep learning. At the same time, these methods are closely related to classical network regularization techniques [15,16,17]. Approaches based on ensemble learning [18] comprise another branch of current uncertainty quantification methods. These techniques scale well and are easy to implement. Furthermore, they have been shown to perform very successfully compared to other methods for uncertainty quantification [19,20,21,22].

In accordance with the growing efforts on the development of methods for uncertainty quantification in deep learning, the amount of work on comparing different uncertainty quantification approaches increases as well. To assess and compare methods for uncertainty quantification two ingredients are needed: a suitable dataset, for training and evaluation, and a metric that judges the performance of an uncertainty method. Metrics for comparing uncertainties are the subject of a variety of works, cf., e.g., [21,22,23,24,25,26,27]. For benchmarking either real world data [21, 23, 24, 26, 28,29,30,31] or simulated datasets with known ground truth [19, 20, 23, 24, 32,33,34,35] are used. The existence of a ground truth allows for a more extensive and thorough analysis of the performance of an uncertainty method. However, while some simulated datasets seem to reflect a certain use case [19, 33], the choice of simulated datasets used in most of the literature for benchmarking uncertainties appears to be rather arbitrary. In particular, there seems to be no consensus on which simulated datasets to use for comparing uncertainties.

The main goal of this work is to propose a systematic, and flexible, approach to the problem of creating datasets for regression problems with a known ground truth for benchmarking uncertainties in deep learning. Namely, we propose to use regression problems of the kind

that are linear in the parameter γ = (γ1,γ2,…)T, and where G(x) = (G1(x),G2(x),…)T is a vector built of nonlinear functions G1,G2,…. Deep regression for (1) intends to infer the function G(x)Tγ with a deep neural network without making use of the specific structure of (1). As G can be chosen arbitrarily this allows for a larger flexibility compared to benchmarking frameworks that build on specific datasets. For instance, through the choice of the nonlinearity in the function G(x) as well as by the dimensionality of its input x the level of complexity can be varied.

Moreover, results of an uncertainty quantification for deep regression of problems of the kind in (1) can be compared to those obtained by a statistical reference method. The reference method utilizes knowledge about G and the linearity with respect to the unknown parameters γ. It consists of a Bayesian inference using the improper prior π(γ) ∝ 1, which coincides with the reference prior for the statistical model (1) [36]. This prior is probability matching [37] so that the proposed reference uncertainty quantification can also be recommended from the perspective of classical statistics. The statistical reference method provides an anchor solution against which different methods of uncertainty quantification for deep regression can be compared. Some of the works in the literature mentioned above also use a reference solution. In [19, 20, 32] a Hamilton Monte Carlo algorithm is used for sampling from the posterior of the network parameters, which however limits the number of network parameters that can be used in a comparison. In [19] the authors use an analytical (non-Bayesian) statistical reference solution similar to the one used here, but for a very specific use case.

In this work, the performance of uncertainty quantification is assessed in terms of reliability and accuracy. The former is quantified through coverage probabilities of interval estimates for the regression function at individual inputs x, and the latter by the size of the associated uncertainty. We concentrate on the assessment of the epistemic uncertainty which is relevant when inference of the regression function is the goal of the task. The performance metrics have been chosen on grounds of their simplicity and typical use in statistics. Nevertheless, other performance metrics, cf. for instance [20, 23], could be used as well in conjunction with the proposed framework.

The assessment of methods for uncertainty quantification in deep regression is illustrated by applying it to several current approaches and for several choices of the function G(x). Application of the anchor solution and comparison to deep regression approaches is also illustrated for three real-world examples.

The paper is structured as follows. In Section 2 we present the proposed structure of test problems along with their treatment by a Bayesian reference inference. In Section 3 we illustrate the usage of the framework from Section 2 for various choices of the underlying nonlinearity and study the performance of several deep learning methods for uncertainty quantification. Results for three real-world examples are presented in Section 4. Finally, a discussion and some conclusions and an outlook are given in Sections 5 and 6.

2 Creating reference models for uncertainty quantification

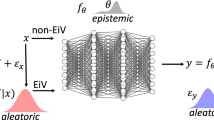

2.1 A primer on (deep) regression and uncertainty

A typical, normal regression for training data \(\mathcal {D}=\{(x_{1},y_{y}),\ldots ,(x_{N},y_{N})\}\) assumes a model of the sort

where \(\mathcal {N}\) denotes a normal distribution, f𝜃 is some function, parametrized by 𝜃, and σ2 denotes the variance of underlying noise. For simplicity we will, in this work, only consider one-dimensional outputsyi, and we will also assume knowledge about σ2. The input xi and the parameter 𝜃 will be of arbitrary dimensions. Assuming independent observations, (2) gives rise to the statistical model \(p(\mathcal {D}|\theta )={\prod }_{i=1}^{N} \mathcal {N}(y_{i}| f_{\theta }(x_{i}),\sigma ^{2})\) and inferring 𝜃 could be done, for instance, by maximizing \(p(\mathcal {D}|\theta )\) with respect to 𝜃 (maximum likelihood estimate - MLE) or by a Bayesian approach, that is by computing the posterior

where π(𝜃) denotes the prior for 𝜃. The uncertainty associated with 𝜃 might, in the Bayesian case, be taken as the variation arising from the distribution (3) while, in the frequentist case, often the variation of the MLE under the data distribution is used.

In the case of deep regression, f𝜃 is given by a neural network and the parameter 𝜃 contains all parameters of the network, such as weights and biases, and is therefore typically of a pretty high dimension (106 or more). In such cases there is usually no hope of sampling from \(\pi (\theta | \mathcal {D})\) or computing its normalization constant. Instead, one has to rely on approximations. In deep learning, many methods [38,39,40,41,42] rely on the statistical tool of variational inference, that is to approximate \(\pi (\theta | \mathcal {D})\) via a feasible distribution qϕ(𝜃), parametrized by a hyperparameter ϕ, by minimizing the Kullback-Leibler divergence with respect to ϕ. Depending on the class of variational distributions qϕ that is chosen, a variety of approximations arise without a clear gold standard. In addition to approaches based on variational inference, there are other approaches to quantify the uncertainty about 𝜃 [18, 43,44,45]. The approach of deep ensembles [18], introduced as a sort of frequentist approach, can also be viewed as a Bayesian approach [46, 47].

2.2 A generic model for benchmarking uncertainty

While for neural networks it is non-trivial to obtain an uncertainty for 𝜃 in (2), for problems that are linear in a lower dimensional parameter this is standard. In this section we will exploit this fact to propose a generic scheme to create benchmarking test problems of arbitrary complexity. Given a generally nonlinear function G(x) with p-dimensional output and a p-dimensional parameter γ, we can define the following model

where we assume σ2 to be known. We will use (4) in three different ways:

-

1.

To generate training data \(\mathcal {D}\): fix input data x = (x1,…,xN) and generate (independent) y = (y1,…,yN) according to (4). Store both, x and y, in \(\mathcal {D}=\{ (x_{i},y_{i}) | i=1,\ldots ,N\}\).

-

2.

To generate test data with a known ground truth: we fix test inputs \(\textbf {x}^{\ast }=(x_{1}^{\ast }, \ldots , x_{N^{\ast }}^{\ast })\). The values \(G(x_{i}^{\ast })\gamma \) will be taken as the (unnoisy) ground truth to judge the quality of predictions and uncertainties of the studied models.

-

3.

To create an anchor model: the test input x∗ from 2. is evaluated under a Bayesian inference, based on \(\mathcal {D}\) from 1., and the “true model” (4). This will be described in the following and is summarized by the distribution (6) below. The anchor model serves as a reference point to judge the performance of uncertainty methods for other (neural network based) models of the more general shape (2).

The map G(x) in (4) allows to tune the nonlinearity and complexity underlying the training data \(\mathcal {D}\). The linearity of (4) with respect to γ, on the other hand, allows for an explicit Bayesian inference in the anchor model (assuming knowledge about G(x)). We here propose to use the (improper) reference prior π(γ) ∝ 1 for the statistical model (4), see e.g. [36]. Taking this prior has, in our case, the convenient effect of exact probability matching, and hence the coverage probability under repeated sampling of y of credible intervals derived from the Bayesian posterior equals their credibility level. This ensures that credible and confidence intervals coincide. The according posterior distribution can be specified explicitly [48] as

where \(\hat {\gamma } = V G(\mathbf {x})\mathbf {y}, V=(G(\mathbf {x}) G(\mathbf {x})^{T})^{-1}\) and where the evaluation G(x) = (G(x1) ,…,G(xN)) \(\in \mathbb {R}^{p\times N}\) should be read element-wise. For the test points x∗, the distribution, conditional on \(\mathcal {D}\), for the prediction G(x∗)Tγ is then given by

We will refer to (6) as the anchor model in the remainder of this work and use it for benchmarking the performance of other methods on \(\mathcal {D}\). For the evaluation we will treat, for the anchor model and all other methods, σ2 as known and use the same value that was taken to generate \(\mathcal {D}\). The distribution (6) models the epistemic uncertainty for the test data points x∗, which is, as we explained in the introduction, the only uncertainty we study in this work. To consider the aleatoric uncertainty, the posterior predictive distribution \(\pi (\mathbf {y}^{\ast }| \mathcal {D}, \mathbf {x}^{\ast })=\mathcal {N}(\mathbf {y}^{\ast }| G(\mathbf {x}^{\ast })^{T}\hat {\gamma }, \sigma ^{2} G(\mathbf {x}^{\ast })^{T} V G(\mathbf {x}^{\ast })+ \sigma ^{2} \mathbf {I})\) could be used instead.

Due to the employed probability matching prior the anchor model expresses both, the Bayesian and the frequentist view, and can thus be taken as reference point for approaches from both worlds. From a frequentist point of view, the anchor model (6) is unbiased and achieves the Cramer-Rao bound. This makes the model “optimal” in the sense of the following lemma.

Lemma 1

Consider an x∗ from the domain of G and an estimator T(y;x∗) for G(x∗)Tγ, based on \(\mathbf {y} \sim \mathcal {N}(G(\mathbf {x})\gamma , \sigma ^{2} \mathbf {I})\). Consider further some non-negative function u(y;x∗). Then, at least one of the following two statements is true

-

1.

The estimator T(⋅;x∗) is biased.

-

2.

Whenever, for some \(\mathbf {y}^{\prime }\), \(u(\mathbf {y}^{\prime };x^{\ast })\) is smaller than the uncertainty of the anchor model (6), that is

$$ \begin{array}{@{}rcl@{}} u(\mathbf{y}^{\prime}; x^{\ast})^{2}< \sigma^{2} G(x^{\ast})^{T}VG(x^{\ast}) , \end{array} $$(7)then \(u(\mathbf {y}^{\prime }; x^{\ast })\) is also smaller than the root mean squared error of T(⋅;x∗) under repeated sampling, i.e. \(u(\mathbf {y}^{\prime }; x^{\ast })^{2}<\mathbb {E}_{\mathbf {y}\sim \mathcal {N}(G(\mathbf {x})^{T}\gamma , \sigma ^{2}\mathbf {I})}\left [(T(\mathbf {y}; x^{\ast })-G(x^{\ast })^{T}\gamma )^{2}\right ]\).

Proof

Assume that 1 is not true, so that T(⋅;x∗) is unbiased. Note first, that, in this case, for any \(\mathbf {y}^{\prime }\) that satisfies (7) we do have

Indeed, otherwise we could apply the Cramer-Rao inequality (in the form of [49, Theorem 3.3]) to the unbiased estimator T(⋅;x∗) and would get a contradiction to (7):

From (8) we arrive at 2, since the mean squared error is bounded from below by the variance under repeated sampling

□

For our purposes, the statement of lemma 1 can be summarized as follows: suppose for some test data point x∗ and training data y we have a prediction T(y;x∗) of a neural network, for instance the average of the outputs under an approximation to the posterior (3), and an uncertainty u(y;x∗), for instance the corresponding standard deviation. If the uncertainty is smaller than the one from the anchor model, lemma 1 tells us that the prediction is biased or/and the uncertainty is “too small”, at least compared to the root mean squared error.

3 Experiments

In this section we study various choices for the benchmarking framework from Section 2. Namely, we analyze the performance of a couple of state of the art methods for uncertainty quantification in deep learning under test scenarios created with (4) and compare them with each other and with the anchor model from (6). Methods under test are variational Bayes methods such as Bernoulli-Dropout [12] (abbreviated “BD” in the following), Concrete Dropout (CD) [40], Variational Dropout (VD) [39] as well as methods based on Deep Ensembles [18]. For the latter we used three modes of training: “standard” (Ens), with adversarial examples included in training (EnsAdvA) and trained via bootstrapping the training data (EnsBS). In both, Concrete and Variational Dropout, the dropout rate is inherently optimized during the training process. As described in Section 2 the anchor model (6) will be included as a reference model. As the anchor model is based on Bayesian linear regression we will use the abbreviation BLR.

Based on the framework from Section 2 we can test these methods on various numerical experiments, arising from different choices of the nonlinear functions G(x) in (4). In this work we will consider the following three experiments.

-

E.1

As a first choice we will consider a linear combination of p = 4 sinusoidal functions, with different phases and varying frequencies:

$$ G(x_{i}) = (\sin(2\pi f_{1} \cdot x_{i} + \rho_{1}), {\ldots} , \sin(2\pi f_{4} \cdot x_{i} + \rho_{4}))^{T} , $$(9)where the xi are univariate and where the \(\gamma \in \mathbb {R}^{4}\) in (4) are randomly chosen from [0,1] (uniform distribution with support in [0,1]). All four frequencies f1,…,f4 are equally spaced between [0.9,1.1]. The phases ρ are equidistantly chosen between [0,2π], where we will consider various choices for fmain = 1,2,3,…. The used σ for this experiment is 0.75.

-

E.2

As a second example, we will use the Styblinsky-Tang-function to scale the dimensionality in an arbitrary manner, i.e. for d = 1,2…, we define

$$ G(x_{i}) = (x_{i,1}, x_{i,1}^{2}, x_{i,1}^{4}, x_{i,2}, x_{i,2}^{2}, x_{i,2}^{4}, \ldots, x_{i,d}, x_{i,d}^{2}, x_{i,d}^{4})^{T}, $$(10)with xi,j denoting the j-th component of \(x_{i}\in \mathbb {R}^{d}\) and with

$$ \gamma=(2.5, -8, 0.5, 2.5, -8, 0.5, \ldots, 2.5, -8, 0.5)^{T}\in \mathbb{R}^{3d}. $$The used σ for this experiment is 3.

-

E.3

As a third example, we will choose a simple polynomial mapping, so that GTγ represents a linear combination of linear, quadratic and mixed terms

$$ G(x_{i}) = (1, x_{i,1}, x_{i,2}, x_{i,1}\cdot x_{i,2}, x_{i,1}^{2}, x_{i,2}^{2})^{T} $$(11)with randomly chosen coefficients \(\gamma \in \mathbb {R}^{6}\) (uniform with support in [0,1]) in (11) and \(x_{i}\in \mathbb {R}^{2}\). The used σ for this experiment is 0.5.

As stated in the introduction of this work we evaluate the performance of the different approaches for uncertainty quantification using common statistical metrics. Namely, we will base the evaluation of the experiments on the following three objectives.

-

1.

Prediction for an input: for the anchor model (BLR) and Bayesian deep learning methods (BD, VD, CD) this is the mean of the output of the regression function or the neural network, given the input, when the according parameter is drawn from the posterior distribution (or its approximation). For ensemble based methods (Ens, EnsAdvA, EnsBS) this is the average of the outputs of the single ensemble members for the given input.

We will also compute the deviation as the absolute value of the difference between the prediction and the unnoisy ground truth, as defined in Section 2.2.

-

2.

Uncertainty for an input: for the anchor model (BLR) and Bayesian deep learning methods (BD, VD, CD) this is the standard deviation of the output of the regression function or the neural network, given the input, when the according parameter is drawn from the posterior distribution (or its approximation). For ensemble based methods (Ens, EnsAdvA, EnsBS) this is the standard deviation of the outputs of the single ensemble members for the given input.

-

3.

Coverage for an input: draw repeatedly training data under the corresponding model (4), for neural network based methods retrain the involved networks, and check whether the deviation, as in point 1, is smaller than 1.96 times the computed uncertainty, as in point 2 (where the 1.96 is motivated from a normal approximation). The coverage denotes the percentage of cases where this succeeded. For the anchor model we expect a coverage of 95%.

Details on the implementation: For all experiments below, we used the same network architecture of three hidden, fully connected layers with 128, 64, 32 neurons, a single output neuron and leaky ReLU activations (with negative slope 0.01) between the layers. For the methods based on dropout, dropout layers were added to the next-to-last layer. All methods and networks, together with the benchmarking framework, are implemented by using MATLAB®; and the MATLAB®; “Deep Learning Toolbox” [50]. All networks were initialized via the Xavier initialisation. For ensemble based methods, we used 120 ensemble members. In experiments with the sinusoidal generic function E1 (9) we have |train set| = 50 elements in the training set (uniformly distributed in the training range) and |test set| = 103 elements in the test set (chosen equidistantly over the test range). For the Experiment E.2 the number of elements in the train set scaled with the input dimensionality d as |train set| = 100 ⋅ 9d− 1, d = 1,2,3,…. For the simple 2D polynomial mapping (11) we have 450 inputs in the train set. The ranges for the test set were [− 6,6] for the Experiment E.1, and [− 5,5] in every dimension of the input domain for Experiment E.2 and E.3. The range(s) for the train set are [− 4,4] (in every input dimensions) for all above listed experiments. For all methods, we scaled the number of epochs used for training as 300 ⋅ c, where c was chosen equal to fmain for E1 (9) and equal to the dimension of the input domain for (10) and (11). The mini batch size was chosen equal to |train set|, except for ensemble learning with bootstrap (EnsBS), where we took |train set|/2 to run the bootstrapping in a pull with back scenario during one epoch. We used an Adam optimizer [51] with learning rate 10− 2, an L2 regularization of 1/|train set| and momentum parameters β1 = 0.9,β2 = 0.999 for all experiments.

3.1 Results

As a first application of the benchmarking framework, we perform the Experiment E.1 with different frequencies fmain. For every choice of fmain we define a train and a test set as described in Section 2. As stated above, the input range of the test set is broader, so that we are able to observe the behavior of the methods under test for out-of-distribution data. For the evaluation, we will distinguish between the out-of-distribution range and the range used in the train set (in-distribution). Two examples are illustrated in Figs. 1 and 2. Both figures show the predictions (solid line) and associated uncertainties times 1.96 (shaded) provided by the anchor model (BLR), Bernoulli Dropout (BD), Variational Dropout (VD) and ensemble with bootstrap (EnsBS), for a “complexity level” of fmain = 2 (see Fig. 1) and fmain = 5 (see Fig. 2). The ground truth is illustrated as a dashed red line. The ground truth is completely covered by the predictions and associated uncertainties (times 1.96) of the BLR (6). In the in-distribution range nearly all methods cover the ground truth by their associated uncertainties. In the out-of-distribution range the uncertainty grows for the deep learning methods, but is often too small to cover the ground truth.

Experiment E.1 with fmain = 1. Predictions (solid lines) and calculated uncertainties times 1.96 (shaded areas) of the anchor model BLR (subplot (a)) and different methods in deep learning (subplots (b)-(d)), together with the used train set (black dots) and ground truth (red dashed line). The abbreviations for the methods are as in the beginning of Section 3

Experiment E.1 with fmain = 5. Predictions (solid lines) and calculated uncertainties times 1.96 (shaded areas) of the anchor model BLR (subplot (a)) and different methods in deep learning (subplots (b)-(d)), together with the used train set (black dots) and ground truth (red dashed line). The abbreviations for the methods are as in the beginning of Section 3

Figure 3 summarizes the deviation between the ground truth and the prediction, the uncertainty and the coverage, as specified above, for two in-distribution inputs (x = − 2.38 and x = 1.2) and one out-of-distribution input (x = − 5.11) over varying complexity, i.e increasing frequency fmain. We repeated the experiment, for every fmain, k = 50 times with the same test set but with different realization of the added noise of the train set and re-trained the neural networks in each repetition. The two left columns show the average of the deviation from the ground truth and the uncertainty together with their associated standard error for the different choices of input x. For the coverage (third column from the right) the size of the error bars were generated by using the fact that a sum of k Bernoulli distributed numbers (ground truth in or outside the area spanned by the standard deviation times 1.96 around the estimate) are Binomially distributed with variance p(1 − p)/k, where p is the success probability, for which the calculated coverage over k repetitions was taken.

Experiment E.1 for various choices of the frequency fmain. The three columns show for the anchor model and different methods from deep learning the deviation from the ground truth (left column), the uncertainty (middle column) and the coverage of the ground truth by the uncertainty times 1.96 (right column) for three different inputs x = − 2.38 (first row), x = 1.2 (second row) and x = − 5.11 (third row, out-of-distribution) and different choices of the frequency fmain (on the abscissa). The plots were generated with 50 runs. For the deviation and uncertainty the mean together with its standard errors are plotted. The abbreviations for the methods are as in the beginning of Section 3

The BLR-solution (red in Fig. 3) provides a complexity independent coverage probability of 0.95 , i.e. 95% of the ground truth is covered by the estimate ± 1.96 times the standard deviation, as expected from the theory. At the same time, the deviation of the prediction of the BLR from the ground truth is quite low. The uncertainty is also comparably low (compare Fig. 3), but large enough to ensure 95% coverage.

As motivated by lemma 1 we can, in a certain sense, consider a deep learning method as “optimal” if it reaches the BLR-solution in all three characteristics: deviation, uncertainty and coverage. Every method under test in the benchmarking framework should thus strive for the goal to reach the corner of a cube, defined by these three quantities, where the BLR-solution is located. However, since the number of parameters in a deep regression drastically exceeds that of the BLR model, and because the employed deep regression does not make use of the particular model structure, we cannot expect a deep regression approach to actually match the BLR-solution.

In Fig. 3, row 1 and 2, we observe an increasing deviation for increasing complexity for all methods, except for BLR, for inputs of the in-distribution range (row 1 → x = − 2.38, row 2 → x = 1.20). At the same time we can observe, in the subplots of the second column, that the size of the associated uncertainty over increasing frequency is only slightly increasing or constant for the approaches based on dropout (BD, VD and CD). As predictable from subplot (a) and (b) the coverage, depicted in (c), is poor for the Dropout-based methods and substantially lower as the 0.95 provided by the anchor model. The non-standard ensemble methods (EnsAdvA and EnsBS), on the other hand, deliver a coverage near 0.95 but overestimate for larger complexities their uncertainty, which be can be seen by the coverage of almost 1.0. Note, that for the considered scenario the uncertainty of the anchor model is below the uncertainties of all other models.

Row 3 of Fig. 3 shows the results for a out-of-distribution input (x = − 5.11). We encounter a slightly different behavior. The deviation from the ground truth is not increasing with increasing complexity for all methods, except for VD. The size of the associated uncertainty is not increasing and too low to deliver a sufficient coverage, except for the ensemble methods. The reason for this behavior seems to lie in the poor or not sufficient quality of the prediction in the out-of-distribution range together with an underestimated uncertainty.

Figure 4 shows the results of three other metrics for comparing uncertainty evaluation from the literature on the experiment E.1, namely the the Expected Normalized Calibration Error (ENCE) [22, 23], the Area Under Sparsification Error (AUSE) [20, 52] and the Prediction Interval Coverage Probability (PICP) [32]. While ENCE and PICP are both computed for single inputs x, the computation of AUSE takes the entire test dataset into account. The top row of Fig. 4 shows the in-distribution case, while the bottom row shows results for out-of-distribution x (for ENCE and PICP) and the entire out-of-distribution range (for AUSE). Note, that the PICP criterion yields a similar ranking to the one obtained by the coverage in Fig. 3. In all plots in Fig. 4 we observe that BLR performs best, which confirms our usage of this model as an anchor solution.

Ranking of the anchor model and different methods from deep learning on Experiment E.1 for three common used metrics for evaluating uncertainty in deep learning ENCE [22, 23], AUSE [20] and PICP [32]. The first row shows the results for in-distribution input, while the second row shows the results for out-of-distribution input. For ENCE and PICP specific inputs are used, for the computation of the AUSE the entire (in- or out-of-distribution) range was used. The abbreviations for the methods are as in the beginning of Section 3

Figures 5 and 6 shows the results of the considered uncertainty methods for the Experiment E.2. The elements of the test set were chosen to contain only inputs that are located at the diagonal of the d-dimensional hypercube from \((-5,\ldots ,-5)^{T}\in \mathbb {R}^{d}\) to \((5,\ldots ,5)^{T}\in \mathbb {R}^{d}\), i.e. xλ = (1 − λ)(− 5,− 5,…,− 5)T + λ(5,5,…,5)T with 0 ≤ λ ≤ 1. In that way, the diagonal contains both, out-of-distribution and in-distribution inputs.

Experiment E.2 in dimension d = 2. Predictions (solid lines) and according uncertainties times 1.96 (shaded areas) of the anchor model BLR (subplot (a) - uncertainties are very small, barely visible) and different methods in deep learning (subplots (b)-(d)) along a diagonal of the d-dimensional hypercube (cf. Section 3), together with the ground truth (red dashed line). The abbreviations for the methods are as in the beginning of Section 3

Experiment E.2 in dimension d = 4. Predictions (solid lines) and according expanded uncertainties (shaded areas) of the anchor model BLR (subplot (a) - uncertainties are very small, barely visible) and different methods in deep learning (subplots (b)-(d)) along a diagonal of the d-dimensional hypercube (cf. Section 3), together with the ground truth (red dashed line). The abbreviations for the methods are as in the beginning of Section 3

Figure 7 depicts the deviation from the ground truth, uncertainty and coverage for two different choices of λ, namely λ = 0.6 for in-distribution and λ = 0.07 for out-of-distribution. For both inputs xλ we observe an overestimation of the uncertainty, compared with the anchor model, for the ensemble methods with adversarial attacks (EnsAdvA) and bootstrapping (EnsBS). Both ensemble methods cover for larger dimensions the increasing deviation from the ground truth with an increasing uncertainty, but the increase of uncertainty in dependence in the increasing dimensionality seems a bit too large, especially for EnsAdvA. For CD and VD we observe a decreasing coverage over increasing dimensionality. At the same time the deviation from the ground truth is strongly increasing for CD. The increasing uncertainty over increasing dimensionality is too low, so that the uncertainty does not compensate for the increasing error for this method. For BD we observe an increasing uncertainty with increasing dimensionality and a moderately increasing deviation, which leads to a coverage of round about 0.8 over increasing dimensionality. For one representative input of the out-of-distribution range (see subplots Figs. 5 and 6) we observe for all DL-methods a poor coverage, i.e. we observe a poor behavior in the out-of-distribution range and at the same time an underestimated uncertainty.

Experiment E.2 for various choices of the dimensionality d. The three columns show for the anchor model and different methods from deep learning the deviation from the ground truth (left column), the uncertainty (middle column) and the coverage of the ground truth by the uncertainty times 1.96 (right column) for two different inputs λ = 0.5996 (first row, ind-distribution, cf. Fig. 5) and λ = 0.074 (second row, out-of-distribution, cf. Fig. 6). The abbreviations for the methods are as in the beginning of Section 3

For Experiment E.3, Fig. 8 shows the deviation from the ground truth, the uncertainty and the coverage as a heat map for every input of the test set (regular grid in x1 and x2). The in-distribution region is located at 1 ≤ x1 ≤ 4 and 1 ≤ x2 ≤ 4. Every row represents a method, the first row contains results provided by BLR, row 2, 3 and 4 contain results from BD, VD, EnsBS. The deviation and uncertainty is shown as a decadic logarithm. The BLR method shows on every position of the input grid a coverage around 0.95. BD and VD show in some regions of the input space a poor coverage, i.e. the associated uncertainties do not cover the deviations in these regions. The ensemble method using bootstrapping (EnsBS) only exhibits in the out-of-distribution range a coverage well below 0.95.

Results for the Experiment E.3 of the anchor model and different methods from deep learning for regular gridded test data. Every plot of the 9 visible plots arranged in a tabular, contains one heat map and shows the objectives introduced at the beginning of Section 3 averaged over 30 repeated samplings: the deviation from the ground truth (first column, in \(\log _{10}\) scale), uncertainty (second column, in \(\log _{10}\) scale) and the coverage (third column). The different rows correspond to different methods with abbreviations are as in the beginning of Section 3

4 Real world examples

The proposed framework for benchmarking uncertainty in deep regression is based on regression problems of the kind introduced in (1). The derived anchor solution accounts for the specific structure of these problems. We recommend using simulated example problems which ensure that the ground truth is known precisely and therefore provide a true benchmark. However, regression problems of the kind (1) are encountered frequently in real world applications as well. In the following, we apply the anchor solution to the regression tasks Boston house price (BHP) [53], wine quality (WineQ) [54] and the kin8nm dataset [55], and present the comparison of obtained uncertainties with those achieved by the deep regression approaches. In contrast to the simulated problems, assumptions, on which the anchor solution is based, cannot be strictly validated. Nevertheless, in applications where these assumptions appear to be fulfilled, supported for example by carrying out a residual analysis and statistical tests, real world examples could complement a set of benchmarking problems within the proposed framework.

The anchor solutions are based on the assumption that the three cases can be modeled by homoscedastic, Gaussian noise and that the following regression functions adequately model the data Footnote 1

-

BHP

$$ f(x) = c_{0}+\sum\limits_{i=1}^{13}c_{i}x_{i}+\sum\limits_{i,j=1,i\neq j}^{13}c_{i,j}x_{i}x_{j} $$(12) -

WineQ

$$ f(x) = c_{0}+\sum\limits_{i=1}^{11}c_{i}x_{i} $$(13) -

kin8nm

$$ f(x) = c_{0}+\sum\limits_{i=1}^{8}c_{i}x_{i}+\sum\limits_{i,j=1,i\neq j}^{8}c_{i,j}x_{i}x_{j}. $$(14)

We concentrate on a comparison of mean uncertainties over the in-distribution range. More precisely, uncertainties were calculated for predictions on the test set and then averaged. The test sets have been drawn randomly from the complete data set and were not used for training.

The mean uncertainties were then normalized by dividing them by the mean uncertainties obtained by the anchor solution. Figure 9 shows the results for the three considered real-world applications. One can observe, that the performance of the different methods varies between the examples, which exhibit a different dimensionality. This observation is in accordance with the behavior we can see in Fig. 7, where the performance also depends on the dimensionality of the input space. For the Boston house price example almost all methods appear to underrate the uncertainty, which has rarely been observed in the simulated examples. This might indicate that not all assumptions of the anchor solution are truly satisfied here. For the other two examples the anchor solution produces smaller uncertainties than the considered deep regression approaches. Furthermore, with the exception of Bernoulli dropout, the ranking of the mean uncertainties between these methods is the same for the wine quality and the kin8nm data set.

Relative mean uncertainty over different uncertainty quantification methods for three real world datasets. The abbreviations for the methods are as in the beginning of Section 3

5 Discussion

The presented framework allows us to study the performance of uncertainty methods for deep learning. While it is possible to use the framework for real world data, as we showed in Section 4, using it for simulated data gives deeper insights into the performance of different uncertainty methods as this allows us to tune complexity parameters such as the the dimension and the shape of the underlying regression function. We observe that the performance of the methods studied in this work depends on the complexity. In the in-distribution range, the deviation from the ground truth raises consistently as the complexity increases. Only those methods based on ensembles show an increase in uncertainty that leads to a sufficient coverage of this deviation. These methods, however, tend to partially overestimate their uncertainty. In the out-of-distribution range a more complex behavior of the studied methods can be observed, that seems to vary between different regression functions. For all examples we observed the anchor solution to perform best, which was also confirmed by using various criteria from the literature.

6 Conclusion and outlook

In this article we described a method to create benchmarking test problems for uncertainty quantification in deep learning. We demonstrated how the linearity of a generating model in its parameters can be exploited to create an anchor model that helps to judge the quality of the prediction and the quantified uncertainty of deep learning approaches. Within this set-up it is possible to design generic data sets with explicit control over problem complexity and input dimensionality. For various test problems we studied the performance of several approaches for uncertainty quantification in deep regression. Common statistical metrics were used to compare the performance of these methods with each other and with the anchor model. In particular we assessed the behavior of these methods under increasing problem complexity and input dimensionality. The flexibility to design test problems with specified complexity, along with the availability of a reference solution, qualifies the proposed framework to form the basis for designing a set of benchmark problems for uncertainty quantification in deep regression.

As stated above, the main focus of this work was to propose a framework for creating benchmarking problems. A more extensive comparison of the performance of various uncertainty methods on test problems created with the framework from this work could be an appealing option for future work. For example, comparing various methods that are conceptually close to each other but differ in their implementation, such as the different variants of deep ensembles from [18] and [56], for varying complexity could yield valuable insights that could help in the decision on which to use for a given practical use case. We focussed in this work on classical statistical metrics for the evaluation of the considered methods for uncertainty quantification, also some results for other metrics were shown in Fig. 4. The proposed benchmarking framework could also be used in future work to study the behavior of the different metrics and to evaluate their usefullness.

Notes

The anchor solution requires the value of the standard deviation of the noise of a data set, which has simply been taken as the estimate obtained by fitting the corresponding model to the data set.

References

Kooi T, Litjens G, van Ginneken B, Gubern-Mérida A, Sánchez CI, Mann R, den Heeten A, Karssemeijer N (2017) Large scale deep learning for computer aided detection of mammographic lesions. Medical Image Anal 35:303–312. https://doi.org/10.1016/j.media.2016.07.007

Huang Y, Chen Y (2020) Survey of state-of-art autonomous driving technologies with deep learning. In: 2020 IEEE 20th international conference on software quality, reliability and security companion (QRS-C), pp 221–228. https://doi.org/10.1109/QRS-C51114.2020.00045

Otter DW, Medina JR, Kalita JK (2021) A survey of the usages of deep learning for natural language processing. IEEE Trans Neural Netw Learn Syst 32(2):604–624. https://doi.org/10.1109/TNNLS.2020.2979670

Deng L, Liu Y (2018) Deep Learning in Natural Language Processing. Springer, Singapore

Hoffmann L, Elster C (2020) Deep neural networks for computational optical form measurements. J Sens Sens Syst 9(2):301–307. https://doi.org/10.5194/jsss-9-301-2020

Kretz T, Müller K-R, Schaeffter T, Elster C (2020) Mammography image quality assurance using deep learning. IEEE Trans Biomed Eng 67(12):3317–3326. https://doi.org/10.1109/TBME.2020.2983539

Kretz T (2020) Development of model observers for quantitative assessment of mammography image quality. Doctoral thesis, Technische Universität Berlin, Berlin. https://doi.org/10.14279/depositonce-10552

Lundervold AS, Lundervold A (2019) An overview of deep learning in medical imaging focusing on mri. Zeitschrift für Medizinische Physik. Special Issue Deep Learn Med Phys 29(2):102–127. https://doi.org/10.1016/j.zemedi.2018.11.002

Schultz M, Betancourt C, Gong B, Kleinert F, Langguth M, Leufen LH, Mozaffari A, Stadtler S (2021) Can deep learning beat numerical weather prediction? Philos Trans Royal Soc 379 (2194):20200097. https://doi.org/10.1098/rsta.2020.0097

Watson-Parris D (2021) Machine learning for weather and climate are worlds apart. Philos Trans Series A Math Phys Eng Sci 379(2194):20200098. https://doi.org/10.1098/rsta.2020.0098

Bishop CM (2006) Pattern Recognition and Machine Learning (Information Science and Statistics). Springer, Berlin

Gal Y (2016) Uncertainty in deep learning. PhD Thesis:174

Depeweg S, Hernandez-Lobato JM, Doshi-Velez F, Udluft S (2018) Decomposition of uncertainty in Bayesian deep learning for efficient and risk-sensitive learning. 35th Int Conf Mach Learn ICML 3:1920–1934. arXiv:1710.07283

Robert CP, Casella G (2005) Monte Carlo statistical methods (Springer texts in statistics). Springer, https://doi.org/10.1007/978-1-4757-4145-2

Hinton GE, Srivastava N, Krizhevsky A, Sutskever I, Salakhutdinov R (2012) Improving neural networks by preventing co-adaptation of feature detectors. CoRR 1207.0580

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Wang S, Manning C (2013) Fast dropout training. Proc 30th Int Conf Mach Learn 28 (2):118–126

Lakshminarayanan B, Pritzel A, Blundell C (2017) Simple and scalable predictive uncertainty estimation using deep ensembles. Adv Neural Inf Process Syst 2017-Decem(Nips):6403–6414. arXiv:1612.01474v3

Caldeira J, Nord B (2020) Deeply uncertain: comparing methods of uncertainty quantification in deep learning algorithms. arXiv:2004.10710, https://doi.org/10.1088/2632-2153/aba6f3

Gustafsson FK, Danelljan M, Schön TB (2019) Evaluating scalable Bayesian deep learning methods for robust computer vision. CoRR 1906.01620

Ovadia Y, Fertig E, Ren J, Nado Z, Sculley D, Nowozin S, Dillon JV, Lakshminarayanan B, Snoek J (2019) Can you trust your model’s uncertainty? Evaluating predictive uncertainty under dataset shift. arXiv:1906.02530v2

Scalia G, Grambow CA, Pernici B, Li Y-P, Green WH (2020) Evaluating scalable uncertainty estimation methods for deep learning-based molecular property prediction. J Chem Inf Model 60(6):2697–2717. https://doi.org/10.1021/acs.jcim.9b00975

Levi D, Gispan L, Giladi N, Fetaya E (2019) Evaluating and calibrating uncertainty prediction in regression tasks. arXiv:1905.11659

Wang C, Sun S, Grosse R (2021) Beyond marginal uncertainty: how accurately can bayesian regression models estimate posterior predictive correlations?. In: International conference on artificial intelligence and statistics. PMLR, pp 2476–2484

Naeini MP, Cooper G, Hauskrecht M (2015) Obtaining well calibrated probabilities using bayesian binning. In: Twenty-ninth AAAI conference on artificial intelligence

Kuleshov V, Fenner N, Ermon S (2018) Accurate uncertainties for deep learning using calibrated regression. In: International conference on machine learning. PMLR, pp 2796–2804

Henne M, Schwaiger A, Roscher K, Weiss G (2020) Benchmarking uncertainty estimation methods for deep learning with safety-related metrics. In: SafeAI@ AAAI, pp 83–90

Guo C, Pleiss G, Sun Y, Weinberger KQ (2017) On calibration of modern neural networks. In: International conference on machine learning. PMLR, pp 1321–1330

Nado Z, Band N, Collier M, Djolonga J, Dusenberry MW, Farquhar S, Feng Q, Filos A, Havasi M, Jenatton R et al (2021) Uncertainty baselines: benchmarks for uncertainty & robustness in deep learning. arXiv:2106.04015

Band N, Rudner TG, Feng Q, Filos A, Nado Z, Dusenberry MW, Jerfel G, Tran D, Gal Y (2021) Benchmarking bayesian deep learning on diabetic retinopathy detection tasks. In: NeurIPS 2021 workshop on distribution shifts: connecting methods and applications

Ståhl N, Falkman G, Karlsson A, Mathiason G (2020) Evaluation of uncertainty quantification in deep learning. In: International conference on information processing and management of uncertainty in knowledge-based systems. Springer, pp 556–568

Yao J, Pan W, Ghosh S, Doshi-velez F (2019) Quality of uncertainty quantification for Bayesian neural network inference. arXiv:1906.09686

Tran K, Neiswanger W, Yoon J, Zhang Q, Xing E, Ulissi ZW (2020) Methods for comparing uncertainty quantifications for material property predictions. Mach Learn Sci Technol 1(2):025006

Psaros AF, Meng X, Zou Z, Guo L, Karniadakis GE (2022) Uncertainty quantification in scientific machine learning: methods, metrics, and comparisons. arXiv:2201.07766

Chung Y, Char I, Guo H, Schneider J, Neiswanger W (2021) Uncertainty toolbox: an open-source library for assessing, visualizing, and improving uncertainty quantification. arXiv:2109.10254

Berger JO, Bernardo JM (1992) On the development of the reference prior method. Bayesian Statistics 4(4):35–60

Kass RE, Wasserman L (1996) The selection of prior distributions by formal rules. J Am Stat Assoc 91(435):1343–1370. https://doi.org/10.1080/01621459.1996.10477003

Graves A (2011) Practical variational inference for neural networks. In: Shawe-Taylor J, Zemel R, Bartlett P, Pereira F, Weinberger KQ (eds) Advances in neural information processing systems. Curran associates inc 57 Morehouse Lane, Red Hook NY, United States, vol 24

Kingma DP, Salimans T, Welling M (2015) Variational dropout and the local reparameterization trick. Adv Neural Inf Process Syst 2015-Janua(Mcmc):2575–2583. arXiv:1506.02557v2

Gal Y, Hron J, Kendall A (2017) Concrete dropout. In: Proceedings of the 31st international conference on neural information processing systems. NIPS’17. Curran Associates Inc., Red Hook, USA, pp 3584–3593

Blundell C, Cornebise J, Kavukcuoglu K, Wierstra D (2015) Weight uncertainty in neural networks. arXiv:1505.05424 [stat.ML]

Martin J, Elster C (2021) Errors-in-variables for deep learning: rethinking aleatoric uncertainty. arXiv:2105.09095 [cs.LG]

Pearce T, Leibfried F, Brintrup A, Zaki M, Neely A (2020) Uncertainty in neural networks: approximately Bayesian ensembling. arXiv:1810.05546 [stat.ML]

Barber D, Bishop C (1998) Ensemble learning in Bayesian neural networks. In: Generalization in neural networks and machine learning. Springer, Berlin Heidelberg, pp 215–237

Bishop C (1994) Mixture density networks. Workingpaper, Aston University

He B, Lakshminarayanan B, Teh YW (2020) Bayesian deep ensembles via the neural tangent kernel. arXiv:2007.05864 [stat.ML]

Hoffmann L, Elster C (2021) Deep ensembles from a Bayesian perspective. arXiv:2105.13283 [cs.LG]

Gelman A, Carlin JB, Stern HS, Dunson DB, Vehtari A, Rubin DB (2013) Bayesian Data Analysis. CRC Press, 6000 Broken Sound Parkway NW, Suite 300, Boca Raton, Fl, pp 33487– 2742

Shao J (2003) Mathematical statistics. Springer

Mathworks T (2020) Inc: MATLAB version 9.9.0.1467703 (r2020b). Natick, Massachusetts. The mathworks, Inc

Kingma DP, Ba J (2017) Adam: a method for stochastic optimization. arXiv:1412.6980 [cs.LG]

Ilg E, Çiçek Ö, Galesso S, Klein A, Makansi O, Hutter F, Brox T (2018) Uncertainty estimates and multi-hypotheses networks for optical flow. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y (eds) Computer vision – ECCV 2018. Springer, Cham, pp 677–693

Harrison D, Rubinfeld DL (1978) Hedonic housing prices and the demand for clean air. J Environ Econ Manag 5:81–102

Cortez P, Cerdeira A, Almeida F, Matos T, Reis J (2009) Modeling wine preferences by data mining from physicochemical properties. Decis Support Syst 47 (4):547–553. https://doi.org/10.1016/j.dss.2009.05.016. Smart business networks: concepts and empirical evidence

Ghahramani Z (1996) Kin family of datasets. https://www.cs.toronto.edu/delve/data/kin/desc.html. Accessed 29 Oct 2021

Huang G, Li Y, Pleiss G, Liu Z, Hopcroft JE, Weinberger KQ (2017) Snapshot ensembles: Train 1, get m for free. arXiv:1704.00109

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Jörg Martin and Clemens Elster contributed equally to this work

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schmähling, F., Martin, J. & Elster, C. A framework for benchmarking uncertainty in deep regression. Appl Intell 53, 9499–9512 (2023). https://doi.org/10.1007/s10489-022-03908-3

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-022-03908-3