Abstract

Video surveillance is an indispensable part of the smart city for public safety and security. Person Re-Identification (Re-ID), one of the elementary learning tasks in video surveillance, aims to track and identify a given pedestrian in a multi-camera scene. Most existing methods first adopt a CNN-based detector to obtain cropped pedestrian images and then learn a specific distance metric for retrieval. However, the unlabeled gallery images are generally overlooked and not utilized in training. On the other hand, Manifold Embedding (ME) has been applied successfully to Person Re-ID because it characterizes the geometry of the database associated with the query data. However, ME does not scale to large datasets because of the high computational complexity of graph construction and ranking. To handle this problem, we propose in this paper a novel scalable manifold embedding approach for the Person Re-ID task. The new method incorporates both graph weight construction and a manifold regularization term in the same framework. The graph we develop is discriminative and doubly-stochastic: it takes side information into account, which enhances clustering performance, and the doubly-stochastic property can guarantee that the graph is highly robust and less sensitive to the parameters. Benefiting from such a graph, we then incorporate graph construction and subspace learning into a unified loss term. Therefore, the subspace results can be utilized in graph construction, and the updated graph can in turn incorporate discriminative information for graph embedding. Extensive simulations are conducted on three benchmark Person Re-ID datasets, and the results verify that the proposed method achieves better ranking performance than other state-of-the-art graph-based methods.


1 Introduction

Smart cities are evolving along a number of paths, such as social services and governance, water and power networks, and healthcare and transportation systems. With the increasing number of vehicles, buildings and citizens, many studies have begun to develop big data processing and analysis methods for modeling human behavior, urban systems and so on, and new techniques, such as memetic computing, have been applied to these challenging tasks. Among the different real-world areas of smart cities, video surveillance is indispensable for guaranteeing public security, especially for traffic and safety management. As one of the critical learning tasks in video surveillance, Person Re-Identification (Re-ID) aims to track and identify a given pedestrian in a multi-camera scene under challenging conditions [1,2,3,4,5], such as varying camera views, person poses and illumination, background clutter, and occlusion. In general, most current state-of-the-art Person Re-ID methods first adopt a CNN-based detector to obtain cropped pedestrian images and then learn a specific distance metric for ranking, where the distance is usually measured by the similarity between two low-dimensional image features extracted by a CNN [4, 5]. For example, the work in [6] developed FastReID to provide strong baselines that achieve state-of-the-art performance: the pedestrian images are first cropped from video sequences by a human labeler or a CNN-based detector, and then CNN backbones with certain aggregation strategies and loss functions are trained to extract pedestrian image features for the distance metric. In addition, to handle real-world video Re-ID problems in surveillance applications, [1] developed a video-based Person Re-ID method that exploits useful information captured from sequences.
In detail, it first crops the image sequences belonging to a given pedestrian via a detector. It then trains a dedicated aggregation network on these sequential data to capture both spatial and temporal information for feature extraction, and the resulting features are used in a distance metric to perform the Re-ID task.

However, distance-based ranking methods focus only on the pair-wise similarity within the training dataset, while the distribution of the whole dataset is neglected. In some real-world cases, e.g., when the pose of a pedestrian changes greatly, an image of the pedestrian taken from the front may look quite different from one taken from the back. In such a case, the system may judge that they belong to unrelated pedestrians, since a distance-based ranking method considers only pair-wise similarity. If instead the similarity is measured with the distribution information or intrinsic manifold taken into account, this problem may be avoided, as images of the same person taken from different views lie on the same manifold. This is the main drawback of distance-based ranking methods: a good Re-ID method should consider the image features as well as the intrinsic structure of the image database. The key issue is therefore how to involve the data distribution or manifold information in the calculation of the similarity between any two images, so that the extracted features provide more geometrical structure to guide distance-based ranking. Motivated by this, we consider in this paper a graph embedding method that takes the underlying structure into account for distance metrics.

Graph Embedding (GE) methods [7,8,9,10] are developed to preserve the properties of a graph for feature extraction: they first construct a graph to approximate the geometrical structure of the data and then learn a projection matrix that maps the high-dimensional data to a low-dimensional subspace while preserving the geometrical information. From this point of view, GE can naturally preserve the distribution of the dataset. Many GE methods have been proposed during the past decades to discover the intrinsic geometrical structure of the data manifold [11,12,13,14,15,16,17,18,19]. Typical methods include ISOMAP [20], Locally Linear Embedding (LLE) [21] and Laplacian Eigenmap (LE) [22]. However, these methods cannot handle newly arriving samples and hence suffer from the out-of-sample problem. To solve this problem, He et al. developed Locality Preserving Projections (LPP) [23], which projects the original high-dimensional data into a low-dimensional subspace with a projection matrix. The out-of-sample problem is thus handled naturally, as the projection matrix can be applied to new samples. Cai et al. [24, 25] further pointed out that LPP is time-consuming, especially when solving the generalized eigenvalue problem for high-dimensional data. They therefore proposed Spectral Regression (SR), which first calculates the low-dimensional embedding and then obtains the projection by solving a regression problem. Recently, Nie et al. [26] analyzed in detail the equivalence between LPP and SR, and on that basis proposed a more efficient and effective method, namely Unsupervised Large Graph Embedding (ULGE), for dimensionality reduction and subspace clustering [27, 28].

On the other hand, graph neural networks (GNNs) have been one of the most popular topics in recent years and have proved effective for dealing with structured data [29,30,31,32]. For example, the works in [33, 34] applied graph convolutional networks (GCNs) to the diagnosis of COVID-19. The goal of the GNNs in [29, 30] is to integrate local geometrical structure and data features in the convolutional layers, so that both the connectivity patterns and the feature attributes of graph-structured data are preserved by the graph convolution; these methods significantly outperform many state-of-the-art methods. However, most of them can only be applied to small-scale graphs because of their computational cost. Another type of GNN is the spatial GNN [31, 32], which constructs a new feature vector for each vertex by aggregating neighborhood information. However, these methods need a predefined local graph, so they cannot learn global information. Our work can potentially be viewed as a way to let GNNs deal with large-scale graphs without local sampling.

In general, the above graph construction methods are good at characterizing the geometrical structure of the data manifold. However, they are all unsupervised, which means that they do not utilize discriminative information, such as class labels or side information, that could enhance clustering performance. Since the graph construction is unsupervised, data points belonging to two different clusters or classes may be connected. In such a case, the constructed graph is incorrect and the learned graph embedding may be misleading. Another problem is that these methods are usually two-stage approaches, i.e., graph construction and subspace learning are separated, so neither stage can use information from the other to improve the graph embedding. In practice, we hope that the geometrical information learned by graph embedding can enhance the locality-preserving ability of graph construction, and that the modified graph can in turn promote the learning of the graph embedding [35].

In this paper, to handle these problems, we develop a new spectral regression method for dimensionality reduction that integrates both graph construction and a manifold regularization term into the same framework. To handle the case in which nearby data points from different clusters may be mis-connected, we adopt side information for graph construction, which constrains pair-wise data points to have no link when they clearly belong to different clusters. In addition, we impose normalization and symmetry constraints to make the graph doubly-stochastic. As noted in [36], the doubly-stochastic property can guarantee that the graph is highly robust and less sensitive to the parameters. Benefiting from such a graph, we then incorporate graph construction and subspace learning into a unified loss term. Therefore, the subspace results can be utilized in graph construction, and the updated graph can in turn incorporate discriminative information for graph embedding. Extensive simulations have been conducted on several benchmark datasets, and the results indicate that the proposed work achieves much better clustering and image retrieval performance than other methods.

The main contributions of the proposed work are as follows:

1) we propose a discriminative and doubly-stochastic graph for characterizing the geometrical structure of the data manifold. By taking side information into consideration, the proposed graph guarantees that data points from different clusters will not be mis-connected. In addition, the normalization and symmetry constraints make the graph doubly-stochastic, so that it is highly robust and less sensitive to the parameters;

2) we incorporate graph construction and subspace learning into a unified loss term. Therefore, the subspace results can be utilized in graph construction, and the updated graph can in turn incorporate discriminative information for graph embedding;

3) we develop an iterative solution to the above optimization problem, and theoretical analysis shows its convergence;

4) we apply the proposed method to Person Re-Identification, and extensive simulation results verify its effectiveness.

This paper is structured as follows: in Section 2, we briefly review related work on graph embedding; in Section 3, we describe the proposed graph embedding method in detail; in Section 4, we conduct extensive simulations to show the effectiveness of the proposed work on Person Re-Identification; and the conclusion is drawn in Section 5.

2 Related work

In this section, we first introduce the notation used in this work and then review LE, LPP, SR and ULGE.

2.1 Notations

Specifically, let \(X = \left\{ x_{1}, x_{2}, \cdots, x_{l} \right\} \in R^{D \times l}\) be the original high-dimensional dataset, where each \(x_i\) belongs to a class \(c_{i} \in \left\{ 1, 2, \cdots, c \right\}\), \(l_i\) is the number of data points in the \(i\)-th class and \(l\) is the total number of data points over all classes. We also denote by \(Y = \left\{ y_{1}, y_{2}, \cdots, y_{l} \right\} \in R^{d \times l}\) the low-dimensional representation of X. In the graph-based subspace learning framework, a similarity matrix W is defined to measure the similarity within the dataset. In detail, denote by \(G = \left( V, E \right)\) the graph, where V is the vertex set of G representing the training set and E is the edge set of G, whose weights W encode the geometrical information. In addition, let \(L = D - W\) and \(\widetilde{L} = D^{-1/2} L D^{-1/2}\) be the graph Laplacian and the normalized graph Laplacian matrix, respectively, which approximate the geometrical structure of the dataset, where D is a diagonal matrix satisfying \(D_{jj} = \sum\nolimits_{i = 1}^{l} W_{ij}\).
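Both Laplacians can be formed directly from W. The following is a minimal NumPy sketch, assuming a dense, symmetric, non-negative W with no isolated vertices (so every \(D_{jj} > 0\)); the function name `graph_laplacians` is ours, not the paper's:

```python
import numpy as np


def graph_laplacians(W: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (L, L_norm) with L = D - W and L_norm = D^{-1/2} L D^{-1/2}.

    Assumes W is symmetric and non-negative with strictly positive degrees.
    """
    degrees = W.sum(axis=0)                 # D_jj = sum_i W_ij
    L = np.diag(degrees) - W                # unnormalized graph Laplacian
    inv_sqrt = 1.0 / np.sqrt(degrees)       # diagonal of D^{-1/2}
    L_norm = inv_sqrt[:, None] * L * inv_sqrt[None, :]
    return L, L_norm


# Example: a three-vertex path graph 0 - 1 - 2.
W = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
L, L_norm = graph_laplacians(W)
```

The rows of L sum to zero, and the eigenvalues of the normalized Laplacian lie in [0, 2].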

2.2 Review of LE, LPP and SR

The goal of LE is to calculate the low-dimensional representation as follows:
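A standard way to write this objective, assumed here because it matches the eigenvector solution described next (the constraint fixes the scale of Y and rules out the trivial solution Y = 0), is

\[
\min_{Y}\ \operatorname{Tr}\left(Y L Y^{T}\right) \quad \text{s.t.} \quad Y D Y^{T} = I,
\]

which leads to the generalized eigenproblem \(L y = \lambda D y\).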

where the optimal Y is formed by the eigenvectors associated with the d smallest nonzero eigenvalues of \(D^{-1}L\) (equivalently, by the corresponding eigenvectors of \(\widetilde{L} = D^{-1/2} L D^{-1/2}\) rescaled by \(D^{-1/2}\)). However, LE cannot calculate the embedding of newly arriving data points and hence cannot handle the out-of-sample problem. To solve this problem, LPP further assumes that the low-dimensional representation is a linear projection of the high-dimensional data, i.e., \(Y = V^{T}X\), where V is the projection matrix. It then calculates the projection matrix by minimizing the following objective function:
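In the usual formulation of LPP, assumed here to be consistent with the regularizer \(\alpha I\) and the GEVD described next, the objective reads

\[
\min_{V}\ \operatorname{Tr}\left(V^{T} X L X^{T} V\right) \quad \text{s.t.} \quad V^{T}\left(X D X^{T} + \alpha I\right) V = I,
\]

so the columns of V are generalized eigenvectors of \(X L X^{T} v = \lambda \left(X D X^{T} + \alpha I\right) v\) associated with the smallest eigenvalues.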

where I is an identity matrix that avoids an ill-posed problem and α is a small positive value. The optimal V is obtained by solving the generalized eigenvalue decomposition (GEVD) of \(\left(XDX^{T} + \alpha I\right)^{-1} XLX^{T}\). The out-of-sample problem is then handled naturally by projecting newly arriving data points into the low-dimensional embedding with the projection matrix. However, the computational cost of LPP is high because \(XDX^{T}\) and \(XLX^{T}\) are dense matrices. To solve this problem, SR first calculates the low-dimensional representation Y following (1) and then calculates the projection matrix by solving the following regression problem:
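Assuming the usual ridge form of this regression, with the embedding Y from (1) as the target,

\[
\min_{V}\ \left\| V^{T} X - Y \right\|_{F}^{2} + \alpha \left\| V \right\|_{F}^{2}.
\]

Setting its gradient \(2X\left(X^{T}V - Y^{T}\right) + 2\alpha V\) to zero gives the normal equations \(\left(XX^{T} + \alpha I\right)V = XY^{T}\).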

The optimal solution of SR is \(V = \left(XX^{T} + \alpha I\right)^{-1} XY^{T}\), which can be computed efficiently by well-studied methods such as LSQR. SR is more efficient than LPP because it only needs to calculate the eigendecomposition of the sparse matrix \(D^{-1}L\). However, SR is not equivalent to LPP in general, and neither SR nor LPP can handle large-scale datasets. As pointed out in [26], if every vertex of the similarity matrix W has degree 1, i.e., D = I, the graph Laplacian coincides with its normalized version, \(L = I - W = \widetilde{L}\), and the optimal solution of SR becomes equivalent to that of LPP.
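The regression step of SR is an ordinary ridge regression. A minimal NumPy sketch, assuming X is D × l with samples in columns and Y is a precomputed d × l embedding; it solves the normal equations instead of forming an explicit inverse (for very large sparse X, an iterative solver such as LSQR would be the natural replacement). The function name is ours:

```python
import numpy as np


def spectral_regression(X: np.ndarray, Y: np.ndarray, alpha: float = 1e-2) -> np.ndarray:
    """Return V (D x d) minimizing ||V^T X - Y||_F^2 + alpha * ||V||_F^2."""
    D = X.shape[0]
    # Normal equations: (X X^T + alpha I) V = X Y^T.
    return np.linalg.solve(X @ X.T + alpha * np.eye(D), X @ Y.T)


rng = np.random.default_rng(0)
X = rng.normal(size=(5, 40))        # D = 5 features, l = 40 samples
Y = rng.normal(size=(2, 40))        # stand-in for a d = 2 embedding
V = spectral_regression(X, Y, alpha=0.1)
y_new = V.T @ rng.normal(size=5)    # out-of-sample projection of a new point
```

Once V is known, embedding a new sample costs only one matrix-vector product, which is how the out-of-sample problem is handled.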

2.3 Unsupervised large graph embedding (ULGE) with anchor graph construction

Though the conventional graph embedding methods have achieved satisfactory results, they cannot be extended to large-scale datasets because the computational complexity of both graph construction and graph embedding is not linear in the number of data points. To handle this problem, ULGE develops an efficient SR method for graph embedding based on anchor graph construction [37].

In general, an anchor graph first seeks m anchors, where m ≪ n and n is the number of data points, and then calculates the weights between the anchors and each data point. In detail, let \(A = \left\{ a_{1}, a_{2}, \ldots, a_{m} \right\} \in R^{D \times m}\) be the set of anchor points and \(Z \in R^{m \times n}\) be the anchor-to-data affinity matrix. Each element \(Z_{ij}\) evaluates the similarity between \(x_j\) and \(a_i\), subject to \(Z_{ij} \ge 0\) and \(\sum\nolimits_{i = 1}^{m} Z_{ij} = 1\). Then, the anchor graph W is constructed from Z as follows:
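Following the standard anchor-graph construction [37], which we assume is the form intended here,

\[
W = Z^{T} \Delta^{-1} Z,
\]

with \(\Delta\) as defined next.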

where \(\Delta \in R^{m \times m}\) is a diagonal matrix satisfying \(\Delta_{ii} = \sum\nolimits_{j = 1}^{n} Z_{ij}\), and \(S = \Delta^{-1/2} Z \in R^{m \times n}\) gives the bilinear decomposition \(W = S^{T}S\). W is doubly-stochastic, since it is symmetric and \(\sum\nolimits_{j} W_{ij} = \sum\nolimits_{k} Z_{ki} \Delta_{kk}^{-1} \sum\nolimits_{j} Z_{kj} = \sum\nolimits_{k} Z_{ki} = 1\), and hence it has a probabilistic interpretation. Compared with the kNN graph, which needs \(O(n^{2}k)\) to construct, the anchor graph construction is more efficient since it only needs \(O(m^{3} + nm^{2})\) computational complexity.
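The construction and its doubly-stochastic property can be checked numerically. The sketch below is illustrative only: the anchors are a random subset of the samples (a stand-in for the k-means step), and each column of Z uses a Gaussian kernel on the sample's k nearest anchors. The kernel, `k` and `sigma` are our assumptions, not choices taken from [37] or ULGE:

```python
import numpy as np


def anchor_graph(X: np.ndarray, m: int, k: int = 3, sigma: float = 1.0, seed: int = 0):
    """Return (S, W) with S = Delta^{-1/2} Z (m x n) and W = S^T S (n x n).

    X is D x n with samples in columns; requires k <= m <= n.
    """
    rng = np.random.default_rng(seed)
    n = X.shape[1]
    A = X[:, rng.choice(n, size=m, replace=False)]            # D x m anchors (random subset)
    # Squared Euclidean distances between anchors and samples, shape m x n.
    d2 = (A ** 2).sum(0)[:, None] + (X ** 2).sum(0)[None, :] - 2 * A.T @ X
    d2 = np.maximum(d2, 0.0)
    nearest = np.argsort(d2, axis=0)[:k]                       # k nearest anchors per sample
    cols = np.arange(n)
    d2_min = d2[nearest[0], cols]                              # shift avoids exp underflow
    Z = np.zeros((m, n))
    for r in range(k):
        Z[nearest[r], cols] = np.exp(-(d2[nearest[r], cols] - d2_min) / (2 * sigma ** 2))
    Z /= Z.sum(axis=0, keepdims=True)                          # Z_ij >= 0, sum_i Z_ij = 1
    delta = Z.sum(axis=1)                                      # Delta_ii = sum_j Z_ij
    S = Z / np.sqrt(delta)[:, None]                            # S = Delta^{-1/2} Z
    return S, S.T @ S                                          # W = Z^T Delta^{-1} Z


X = np.random.default_rng(1).normal(size=(2, 50))
S, W = anchor_graph(X, m=10, k=3)
```

Because every anchor is itself a sample, each anchor is the nearest anchor of at least one sample, so every \(\Delta_{ii}\) is positive in this sketch.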

Finally, ULGE forms the graph embedding Y from the eigenvectors corresponding to the d largest eigenvalues of \(S^{T}S\), which are the leading right singular vectors of S, and calculates the projection matrix by solving a regression problem as in (3). Since \(S \in R^{m \times n}\), the cost of the singular value decomposition of S is linear in n and much smaller than that of directly calculating the eigendecomposition of \(\widetilde{L}\).
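The computational saving comes from never forming the n × n matrix W: the top eigenvectors of \(W = S^{T}S\) are the leading right singular vectors of the small m × n factor S. A NumPy sketch, assuming S is given (the random S below only mimics an anchor-graph factor); the function name is ours:

```python
import numpy as np


def ulge_embedding(S: np.ndarray, d: int):
    """Return (Y, eigvals): Y is d x n, its rows are the top-d eigenvectors of W = S^T S."""
    # Thin SVD S = U diag(sig) Vt, so W = Vt^T diag(sig**2) Vt; cost O(m^2 n) when m << n.
    _, sig, vt = np.linalg.svd(S, full_matrices=False)
    return vt[:d], sig[:d] ** 2          # singular values are sorted in descending order


rng = np.random.default_rng(0)
Z = rng.random((8, 100))
Z /= Z.sum(axis=0, keepdims=True)        # columns sum to 1, as for an anchor graph
S = Z / np.sqrt(Z.sum(axis=1))[:, None]  # S = Delta^{-1/2} Z
Y, eigvals = ulge_embedding(S, d=3)
```

Since W is doubly stochastic, its largest eigenvalue is 1 with a constant eigenvector, which carries no cluster structure and is commonly discarded in practice.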

Notes: Cai et al. [27, 38] recently developed a graph embedding method for subspace clustering, namely Large-scale Spectral Clustering (LSC), which is also based on anchor graph construction and shares a similar concept with ULGE. However, LSC only forms the graph embedding Y from the eigenvectors corresponding to the largest eigenvalues of \(S^{T}S\) and then performs conventional k-means on Y to calculate the cluster labels of the dataset. Therefore, compared with ULGE, LSC cannot obtain the graph embedding for new data, so it cannot handle the out-of-sample problem. In other words, ULGE can be viewed as an extension of LSC.

3 Semi-supervised adaptive graph embedding (SAGE)

3.1 Motivation and problem formulations

The above graph construction methods are good at capturing the geometrical information of a dataset [26]. However, they are all unsupervised and do not utilize the discriminative information provided by class labels to enhance clustering performance. For instance, if two data points \(x_i\) and \(x_j\) lie in different clusters, they should not share any common anchors, so that \({s_{i}^{T}}s_{j}=0\), where \(s_i\) denotes the i-th column of S. An illustration is shown in Fig. 1.

Motivations: the conventional anchor graph construction is unsupervised and does not utilize the discriminative information provided by class labels. As a result, nearby data points belonging to different classes may share common anchors, which causes mis-connections between them. Ideally, there should be no connection between any pair of data points that belong to different classes.

To solve the above problem, it is natural to integrate side information into the graph construction so that samples from different clusters have no link. Here, we introduce a matrix \(T \in R^{n \times n}\), where \(T_{ij} = 1\) if \(x_i\) and \(x_j\) must not be connected in the graph (i.e., the side information indicates that they belong to different classes), and \(T_{ij} = 0\) otherwise. We then constrain the graph weight matrix W by \(W_{ij}T_{ij} = 0\) during optimization. As the simulations show, more and more such links are cut as the iterations proceed, so that a clearer clustering structure emerges.
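One way to build T from partial labels is sketched below. The paper does not specify how its side information is sampled; here we assume that a given fraction of samples have their labels revealed and that every pair of revealed samples with different labels is marked as a cannot-link pair. Both helper names are hypothetical:

```python
import numpy as np


def cannot_link_matrix(labels, known_fraction: float = 0.1, seed: int = 0) -> np.ndarray:
    """Return T (n x n): T_ij = 1 if x_i and x_j both have revealed labels and the labels differ."""
    labels = np.asarray(labels)
    n = labels.size
    rng = np.random.default_rng(seed)
    known = np.zeros(n, dtype=bool)
    known[rng.choice(n, size=int(round(known_fraction * n)), replace=False)] = True
    both_known = known[:, None] & known[None, :]
    differ = labels[:, None] != labels[None, :]
    return (both_known & differ).astype(float)


def apply_cannot_link(W: np.ndarray, T: np.ndarray) -> np.ndarray:
    """Enforce W_ij * T_ij = 0 by zeroing the forbidden entries."""
    return W * (1.0 - T)


T = cannot_link_matrix([0, 0, 1, 1], known_fraction=1.0)
W = apply_cannot_link(np.full((4, 4), 0.25), T)
```

T is symmetric with a zero diagonal, so masking W with it preserves the symmetry of W.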

Another problem is that graph construction and subspace learning are separated, so the two stages do not share any information that could enhance the graph embedding. To this end, we propose to calculate simultaneously the optimal graph matrix W, the bilinear decomposition S and the graph embedding Y as follows:

where γ is a parameter balancing the trade-off between the two terms. We further add the degree normalization constraint \(D_{ii} = 1\), i.e., \(W\mathbf{1}^{T} = \mathbf{1}^{T}\), where \(\mathbf{1} \in R^{1 \times n}\) is the all-ones vector. Since W is both non-negative and symmetric, imposing \(W\mathbf{1}^{T} = \mathbf{1}^{T}\) makes it doubly-stochastic [39] and less sensitive to the parameters. From (5), we can see that graph construction and subspace learning are unified in the same objective function. The subspace results can then be used to enhance the geometry-preserving ability of graph construction, and the modified graph can in turn incorporate discriminative information for graph embedding.

3.2 Solution

We next show how to obtain the optimal W, S and Y in (5) by alternating optimization. In detail, let \(S_t\), \(W_t\) and \(Y_t\) be the values of S, W and Y at the t-th iteration. To calculate the graph embedding \(Y_{t+1}\), we first fix \(W_t\) and \(S_t\). Then (5) reduces to the conventional graph-based subspace learning problem, i.e.,

The optimal \(Y_{t+1}\) is given by the eigenvectors corresponding to the largest eigenvalues of \({S_{t}^{T}}S_{t}\).

To calculate \(S_{t+1}\), we replace the objective function of (5) with its first-order approximation around \(S_t\). Then (5) can be rewritten as:

where \({W_{t}^{Y}} \in R^{n \times n}\) has elements \(\left({W_{t}^{Y}}\right)_{ij}=\|y_{i}-y_{j}\|_{2}^{2}\), so that, with L the Laplacian of \(S^{T}S\), \(Tr(YLY^{T})=\frac{1}{2}\sum\nolimits_{ij}\|y_{i}-y_{j}\|_{2}^{2}\left(S^{T}S\right)_{ij}=\frac{1}{2}Tr(S{W_{t}^{Y}}S^{T})\), where the constant factor 1/2 can be absorbed into γ; \(Q\left(S\right)=\left\| W_{t}-S^{T}S \right\|_{F}^{2}+\gamma Tr\left(S{W_{t}^{Y}}S^{T}\right)\); \(\nabla_{S}Q\left(S_{t}\right)=S_{t}S_{t}^{T}S_{t}-S_{t}\left(W_{t}-\gamma {W_{t}^{Y}}\right)\) is the partial derivative of \(Q\left(S\right)\) w.r.t. S at \(S_t\); η is the Lipschitz parameter, which we choose as the trace of the second derivative of \(Q\left(S\right)\), i.e., \(\eta=\left\| S^{T}S \right\|_{F}^{2}\); and \(P\left(S_{t}\right)\) is a term that is constant with respect to S and can be neglected. The updated \(S_{t+1}\) is obtained by solving:
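Completing the square in the linearized objective gives the familiar projected-gradient update. We state it as an assumption, with \(\Omega\) standing for whatever feasible set (5) imposes on S:

\[
S_{t+1} = \arg\min_{S \in \Omega}\ \left\| S - \left( S_{t} - \frac{1}{\eta} \nabla_{S} Q\left(S_{t}\right) \right) \right\|_{F}^{2}.
\]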

We next fix \(Y_t\) and \(S_t\) and update \(W_{t+1}\). Then (5) becomes an instance of quadratic programming (QP):
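With \(Y_t\) and \(S_t\) fixed, only the fitting term depends on W. Assuming the constraints stated for (5), namely non-negativity, symmetry, degree normalization and the cannot-link condition, one consistent way to write this QP is

\[
\min_{W}\ \left\| W - W_{t}^{0} \right\|_{F}^{2} \quad \text{s.t.} \quad W \ge 0,\ W = W^{T},\ W\mathbf{1}^{T} = \mathbf{1}^{T},\ \operatorname{Tr}\left(WT\right) = 0,
\]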

where \({{W}_{t}^{0}}={S_{t}^{T}}S_{t}\). For efficient computation, we divide the QP problem into two convex sub-problems:
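Assuming the split separates the non-negativity constraint from the linear constraints, the first sub-problem is a projection onto the non-negative orthant:

\[
\min_{W}\ \left\| W - W_{t}^{0} \right\|_{F}^{2} \quad \text{s.t.} \quad W \ge 0,
\]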

and
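Under the same assumption, the second sub-problem keeps the linear constraints, which the Lagrangian below handles with the multipliers t and μ:

\[
\min_{W}\ \left\| W - W_{t}^{0} \right\|_{F}^{2} \quad \text{s.t.} \quad W = W^{T},\ W\mathbf{1}^{T} = \mathbf{1}^{T},\ \operatorname{Tr}\left(WT\right) = 0.
\]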

The solution \(W_{t+1}\) of (10) is obtained simply by keeping the non-negative elements of \({W_{t}^{0}}\) and setting the negative ones to zero. On the other hand, (11) is solved via its Lagrangian:

where t and \(\mu \in R^{1 \times n}\) are the Lagrange multipliers. We then set the derivative of \(J\left(W\right)\) w.r.t. W to zero, i.e.,

To fulfill \(Tr\left (WT \right )=0\), we have:

Considering the normalized constraint W1T = 1T or 1W = 1, we have

We then replace t in (15) with (14) and with some math derivations, we have

where R is a fixed matrix as:

The detailed derivation of (16) from (15) is given in Appendix A. Then, by substituting (16) for μ in (13), we obtain the updated \(W_{t+1}\). This alternating projection procedure converges by von Neumann's successive projection lemma [36, 40, 41]. We therefore iteratively update Y, S and W following (6), (8) and (9), respectively, until the change in the objective function of (5) falls below a given small tolerance. The detailed algorithm for iteratively solving (5) is given in Algorithm 1.
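The W-step can be sketched as alternating projections between the set {W ≥ 0, W_ij = 0 where T_ij = 1} and the affine set {W = W^T, W1^T = 1^T}. This is our simplified stand-in for (10) to (16), not the authors' Algorithm 1: the cannot-link condition is enforced in the non-negativity step rather than through the multiplier t, and plain (von Neumann) alternation returns a feasible doubly-stochastic point rather than necessarily the one nearest to \(W_t^0\) (Dykstra's variant would be needed for that). The closed-form affine projection assumes a symmetric input:

```python
import numpy as np


def project_affine(X: np.ndarray) -> np.ndarray:
    """Project a symmetric X onto {W : W = W^T, W 1 = 1} via W = X + mu 1^T + 1 mu^T."""
    n = X.shape[0]
    ones = np.ones(n)
    r = X.sum(axis=1)                        # current row sums
    s = (n - r.sum()) / (2 * n)              # value of 1^T mu implied by the row-sum condition
    mu = (ones - r - s) / n
    return X + np.outer(mu, ones) + np.outer(ones, mu)


def project_nonneg_masked(X: np.ndarray, T: np.ndarray) -> np.ndarray:
    """Project onto {W >= 0, W_ij = 0 wherever T_ij = 1}."""
    return np.where(T > 0, 0.0, np.maximum(X, 0.0))


def doubly_stochastic_step(W0: np.ndarray, T: np.ndarray, n_iter: int = 10000, tol: float = 1e-12):
    """Alternate the two projections until the iterate stops changing."""
    W = project_nonneg_masked((W0 + W0.T) / 2, T)
    for _ in range(n_iter):
        W_next = project_nonneg_masked(project_affine(W), T)
        if np.abs(W_next - W).max() < tol:
            return W_next
        W = W_next
    return W


rng = np.random.default_rng(0)
S = rng.random((3, 6))
T = np.zeros((6, 6))
T[0, 5] = T[5, 0] = T[1, 4] = T[4, 1] = 1.0   # two cannot-link pairs
W = doubly_stochastic_step(S.T @ S, T)
```

Both sets contain the identity matrix whenever T has a zero diagonal, so their intersection is non-empty and the alternation converges to a point in it.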

3.3 Computational complexity analysis

We next analyze the computational complexity of the proposed SAGE. Based on the basic steps in Algorithm 1, the computational cost can be divided into four parts:

1) Obtain the m anchors by k-means. If we choose the Balanced and Hierarchical K-means (BAHK) method as in [42], this needs \(O(nd\log(m)t)\), where t is the number of iterations;

2) Initialize the similarity matrix \(S_0\) and form the original graph weight matrix \(W_0\), which needs \(O(ndm)\);

3) Update the low-dimensional representation Y, the similarity matrix S and the graph weight matrix W according to Algorithm 1. In detail, to update \(Y_{t+1}\) in each iteration, we need to perform the SVD of \(S_t\), whose computational complexity is \(O(nmk)\), where k is the number of nearest neighbors; to update \(S_{t+1}\), we need to calculate \({W_{t}^{Y}}\) and \(\nabla_{S}Q(S_t)\), whose computational complexities are \(O(nd^{2})\) and \(O(nmd + nmq)\), respectively, where q is the average number of non-zero elements of \(W_t\) or \({W_{t}^{Y}}\), giving a total of \(O(nd^{2} + nmd + nmq)\); to update \(W_{t+1}\), we need to run the alternating projection procedure of (10) and (11), whose total complexity is \(O(nqt_p)\), where \(t_p\) is the number of iterations of the alternating projection procedure;

4) Calculate the projection matrix V by solving the regression problem in (3), whose computational complexity is \(O(ndm)\).

Since m ≪ n, the overall computational complexity of the proposed SAGE is O(ndm), which is linear in n. Compared with conventional spectral methods such as LPP and SR, the proposed method is therefore efficient enough to handle large-scale data.

4 Simulations

In this section, we evaluate the proposed method on one toy example and several real-world benchmark datasets, and compare its performance with other state-of-the-art methods. For the toy example, we generate a two-circle dataset with two clusters, each following a circular distribution with a different radius; for the real-world datasets, we choose four benchmark datasets, i.e., COIL100 [43], CASIA-HWDB [44], Yale-B [45] and Fashion-MNIST [46], to evaluate the graph embedding performance. To further show the effectiveness of the proposed work, we also apply it to content-based image retrieval. Our goal is to verify that distances calculated in the low-dimensional representation preserve the geometrical structure of the data manifold, which benefits real-world applications.
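A toy dataset of this kind can be generated as follows. The radii, noise level and sample counts are illustrative assumptions, since they are not reported; samples are stored as columns to match the D × l convention of Section 2.1:

```python
import numpy as np


def two_circles(n_per_class: int = 200, radii=(1.0, 2.0), noise: float = 0.05, seed: int = 0):
    """Return (X, y): X is 2 x (2 * n_per_class) with samples in columns, y holds class labels."""
    rng = np.random.default_rng(seed)
    blocks, labels = [], []
    for label, r in enumerate(radii):
        theta = rng.uniform(0.0, 2.0 * np.pi, n_per_class)
        pts = np.stack([r * np.cos(theta), r * np.sin(theta)])     # points on a circle of radius r
        blocks.append(pts + noise * rng.normal(size=pts.shape))     # isotropic Gaussian jitter
        labels.append(np.full(n_per_class, label))
    return np.concatenate(blocks, axis=1), np.concatenate(labels)


X, y = two_circles()
```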

4.1 Parameter analysis

We first verify the convergence of the proposed method. Specifically, we randomly initialize the similarity matrix \(S_0\) and the graph weight matrix \(W_0\), and fix the regularization parameter γ = 10 in (5) and the number of anchors at 500. For the matrix T, we randomly select 10% side information. We then show the convergence of the objective function value of (5) on the CASIA-HWDB [44] dataset. Figure 2 shows the iterative procedure of the proposed method for updating the graph weights. From the simulation results, we can see that, as the iterations proceed, the mis-connected links between different classes are gradually weakened until the structures of the two classes are distinctly separated. This toy example illustrates the effectiveness of the proposed method.

We next evaluate the important parameters of the proposed work. There are three main parameters: the matrix T, the number of anchors and the trade-off parameter γ. To find their optimal values, we first fix the matrix T and then set the candidate numbers of anchors to [500, 1000, 1500, 2000, 2500] and the candidate values of γ to \([10^{-3}, 10^{-2}, 10^{-1}, 1, 10, 10^{2}, 10^{3}]\). We then run the proposed method, calculate the clustering accuracy for each combination of T, number of anchors and γ, and select the values that give the best accuracy. For the matrix T, 10% side information is randomly selected. The reason is that the proposed work focuses on semi-supervised learning, which aims to utilize side information to enhance clustering or ranking performance. If we chose 0% side information, the proposed work would be a completely unsupervised method, and the parameter γ, which balances the manifold term and the side-information regularization term, would have no use; on the other hand, choosing 20% side information would use too much discriminative information. A good strategy is to use as little side information as possible while still achieving competitive results, so we randomly select 10% side information to form the matrix T. Figure 3 shows the accuracies for numbers of anchors ranging from 500 to 2500 and γ ranging from \(10^{-3}\) to \(10^{3}\). From Fig. 3, we can see that the results are satisfactory and relatively stable when γ falls in the range \([0.1, 10^{3}]\) and the number of anchors is larger than 1000.
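The selection procedure amounts to an exhaustive grid search over the two candidate lists. A minimal sketch, where `score_fn` is a hypothetical callable that would run SAGE with a fixed T and return the clustering accuracy for a given number of anchors and γ:

```python
import numpy as np

ANCHOR_CANDIDATES = [500, 1000, 1500, 2000, 2500]
GAMMA_CANDIDATES = [1e-3, 1e-2, 1e-1, 1.0, 10.0, 1e2, 1e3]


def grid_search(score_fn, anchors=ANCHOR_CANDIDATES, gammas=GAMMA_CANDIDATES):
    """Return (best_m, best_gamma, table) with table[i, j] = score_fn(anchors[i], gammas[j])."""
    table = np.array([[score_fn(m, g) for g in gammas] for m in anchors], dtype=float)
    i, j = np.unravel_index(np.argmax(table), table.shape)
    return anchors[i], gammas[j], table


# Toy stand-in for score_fn, peaked at 1500 anchors and gamma = 10.
best_m, best_gamma, table = grid_search(lambda m, g: -abs(m - 1500) - abs(np.log10(g) - 1.0))
```

Keeping the full `table` (rather than only the best pair) is what allows plotting an accuracy surface over anchors and γ.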

4.2 Subspace embedding evaluations

In this section, we evaluate the effectiveness of the proposed work on one synthetic dataset and four benchmark datasets. The synthetic dataset contains two classes, each following a circular distribution. With this toy example, we show how the proposed method updates the graph weights so that both the geometrical structure and the discriminative information are preserved. For the real-world datasets, we evaluate the performance of subspace embedding on COIL100 [43], CASIA-HWDB [44], Yale-B [45] and Fashion-MNIST [46].

- COIL100 dataset [43] is an object image dataset in which each object is viewed from varying angles at intervals of five degrees, so that each object has 72 images. The original size of each cropped image is 128 × 128, with 24-bit color per pixel. In our study, we down-sample the images to 32 × 32 and convert them to 256 gray levels.

- CASIA-HWDB dataset [44] is a handwritten image dataset that includes both isolated characters (52 categories, i.e., 26 upper-case and 26 lower-case letters) and handwritten texts (10 categories, i.e., the digits 0-9). In our study, we choose the subset containing the images of the 52 isolated characters, which has 12456 samples of size 16 × 16 in 256 gray levels.

- Yale-B dataset [45] is a well-known face dataset that contains 16128 images of 38 subjects under 64 illumination conditions and 9 poses. In our study, we resize the images to 32 × 32 pixels and choose approximately 64 near-frontal images under different illuminations for each subject.

- Fashion-MNIST dataset [46] is Zalando's clothing image dataset with 10 classes. It has 60000 training images and 10000 test images, each of size 28 × 28 in 256 gray levels.

We first use the two-circle dataset to verify the effectiveness of the proposed method, where we randomly select 10% side information to form the matrix T. Figure 2 shows the graph weight matrix updated during the iterative procedure of Algorithm 1, where the black lines are the weighted connections. From Fig. 2, we can observe that the graph weights between pairs of data points that belong to different clusters are gradually weakened, while those within the same cluster are strengthened. Therefore, the clustering structure of the data manifold becomes much more distinct, which illustrates the effectiveness of the proposed method.

We next evaluate the clustering results of the proposed method, using Clustering Accuracy (ACC) and Normalized Mutual Information (NMI) to evaluate the performance of different methods [47]. The average ACC and NMI over 20 random splits, for different numbers of clusters k, are given in Tables 1 and 2. In these tables, the left columns give the results of LE, LPP, SR, ULGE and the unsupervised version of the proposed method, while the right two columns give the results of the semi-supervised versions of SAGE with 10% and 20% side information. From the simulation results, we can make the following observations:

1) ULGE and the unsupervised version of SAGE outperform conventional graph embedding methods such as LE, LPP and SR by approximately 4-5%. The reason is that ULGE and the proposed method both use an anchor graph, and the doubly-stochastic property makes the graph highly robust and less sensitive to the parameters;

2) the unsupervised version of SAGE is superior to the other methods, with improvements of approximately 3% and 4% over ULGE and the remaining methods, respectively. This is likely because the proposed method incorporates graph construction and graph embedding learning into a unified framework;

3) by using side information, the semi-supervised version of SAGE achieves much better results than the other state-of-the-art unsupervised methods. The improvement reaches approximately 5% on the COIL100 dataset given 20% side information. This indicates that discriminative information indeed helps improve the subspace clustering results.
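The two metrics behind Tables 1 and 2 can be sketched as follows.

- **ACC** counts the fraction of samples whose cluster matches the true label after the best one-to-one mapping of cluster ids to class labels.
- **NMI** measures the mutual information between the two labelings. Here it is normalized by the geometric mean of their entropies, which is one of several normalizations in use; the section does not state which variant [47] uses.

The mapping is found by brute force over permutations, which is only practical for a handful of clusters. A full-scale implementation would use the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`). The function names are illustrative.

```python
import math
from collections import Counter
from itertools import permutations


def clustering_accuracy(y_true, y_pred):
    """ACC: best one-to-one mapping of cluster ids to class labels (brute force)."""
    classes = sorted(set(y_true))
    clusters = sorted(set(y_pred))
    if len(clusters) > len(classes):
        raise ValueError("this sketch assumes #clusters <= #classes")
    pairs = Counter(zip(y_pred, y_true))  # (cluster, class) co-occurrence counts
    best = 0
    # Each permutation assigns a distinct class label to every cluster.
    for perm in permutations(classes, len(clusters)):
        hits = sum(pairs[(c, label)] for c, label in zip(clusters, perm))
        best = max(best, hits)
    return best / len(y_true)


def nmi(y_true, y_pred):
    """Mutual information normalised by sqrt(H(true) * H(pred))."""
    n = len(y_true)
    pt, pp = Counter(y_true), Counter(y_pred)
    joint = Counter(zip(y_true, y_pred))
    mi = sum(c / n * math.log(c * n / (pt[a] * pp[b])) for (a, b), c in joint.items())
    h_t = -sum(c / n * math.log(c / n) for c in pt.values())
    h_p = -sum(c / n * math.log(c / n) for c in pp.values())
    if h_t == 0 or h_p == 0:
        # Degenerate case: a labeling with a single group has zero entropy.
        return 1.0 if h_t == h_p else 0.0
    return mi / math.sqrt(h_t * h_p)
```

For example, `clustering_accuracy([0, 0, 0, 1, 1, 1], [1, 1, 1, 0, 0, 0])` is 1.0, because swapping the two cluster ids recovers the labels exactly.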

4.3 Applications for person re-identification

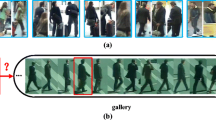

In this section, we apply the proposed method to Person Re-Identification [48, 49]. Specifically, given a query person image, we first compute its low-dimensional representation. We then compute the Euclidean distances between the query image and the database images in the low-dimensional subspace. Finally, the database images with the smallest distances to the query are returned as the relevant results. The original query data, combined with the relevant data, then form a new query set for another round of retrieval. This procedure is repeated until the retrieval performance is satisfactory to the user. In this work, we select three benchmark Re-ID datasets for evaluation, i.e., PKU [50], WARD (Wide Area Camera Network) [51] and RAiD (Re-Identification Across Indoor-Outdoor) [52].
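The retrieval loop described above can be sketched as follows, under several stated assumptions.

- **Projection.** The learned projection is taken to be a fixed linear map `P`, with each feature mapped to `P^T x`.
- **Distance to a multi-image query set.** This is taken as the distance to the *nearest* query in the set. The section does not say how multiple queries are combined, so this is a hypothetical choice.
- **Feedback.** The user's judgement is simulated by a hypothetical `is_relevant` callback.

The sketch only grows the query set. It does not model whether or how the feedback is also fed back into graph or subspace learning.

```python
import math


def project(x, P):
    """Map a d-dimensional feature x to the subspace y = P^T x (P is d x r)."""
    r = len(P[0])
    return [sum(x[i] * P[i][j] for i in range(len(x))) for j in range(r)]


def euclidean(a, b):
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def feedback_retrieval(query_feats, db_feats, P, scope, is_relevant, n_rounds):
    """Iterative retrieval with simulated relevance feedback (hypothetical helper).

    Returns the top-`scope` database indices from the final round.
    """
    db_low = [project(x, P) for x in db_feats]
    query_set = [project(q, P) for q in query_feats]
    ranked = []
    for _ in range(n_rounds):
        # Assumed rule: score each database image by its nearest query.
        dists = [min(euclidean(y, q) for q in query_set) for y in db_low]
        ranked = sorted(range(len(db_low)), key=lambda i: dists[i])[:scope]
        # Images judged relevant join the query set for the next round.
        for i in ranked:
            if is_relevant(i) and db_low[i] not in query_set:
                query_set.append(db_low[i])
    return ranked


P = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical 2-D identity projection
db = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [0.2, 0.1]]
same_person = {0, 1, 4}  # simulated ground truth standing in for the user
result = feedback_retrieval([[0.0, 0.05]], db, P, scope=3,
                            is_relevant=lambda i: i in same_person, n_rounds=2)
```

Using the nearest-query rule means an image stays highly ranked if it resembles *any* confirmed view of the person. A centroid of the query set would be a simpler alternative.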

The PKU-ReID dataset [50] contains 1824 images of 114 individuals captured from two disjoint camera views. For each person, eight images are captured from eight different orientations under each camera view and are normalized to pixels. In our study, we randomly split it into two parts: 57 individuals for training and the remaining 57 for testing.
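The image count follows from the protocol: 114 people × 2 views × 8 orientations = 1824 images. The split is by *identity*, so no person appears in both halves. The sketch below assumes each image carries an integer person id; the helper names and the image ordering are hypothetical.

```python
import random


def split_identities(person_ids, n_train, seed=None):
    """Randomly split the distinct identities into disjoint train/test sets."""
    ids = sorted(set(person_ids))
    rng = random.Random(seed)
    train = set(rng.sample(ids, n_train))
    test = set(ids) - train
    return train, test


def split_images(image_labels, train_ids):
    """Assign each image index to train or test according to its identity."""
    train_idx = [i for i, pid in enumerate(image_labels) if pid in train_ids]
    test_idx = [i for i, pid in enumerate(image_labels) if pid not in train_ids]
    return train_idx, test_idx


labels = [pid for pid in range(114) for _ in range(2 * 8)]  # 16 images per person
train_ids, test_ids = split_identities(labels, 57, seed=0)
train_idx, test_idx = split_images(labels, train_ids)
```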

The WARD dataset [51] is a Re-ID dataset collected with three non-overlapping cameras, where each person has several images per camera. In total, 4786 images of 70 different individuals were extracted. The original images are 320 × 240 pixels; in our work, we down-sample them to 96 × 48 pixels.

The RAiD dataset [52] was collected at Winston Chung Hall of UC Riverside. It is a 4-camera Re-ID dataset with 2 indoor and 2 outdoor cameras. A total of 43 people walked through these camera views, yielding 6920 images. Each cropped image is originally 128 × 64 pixels with 24-bit color. In our work, we down-sample the images to 32 × 16 pixels.
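Before embedding, each down-sampled image has to become a feature vector. The section does not say which interpolation is used, so the sketch below assumes nearest-neighbour resizing of an image stored as a list of pixel rows. If all three color channels are kept, each 32 × 16 RAiD image becomes a 32 × 16 × 3 = 1536-dimensional vector. The helper names are hypothetical.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of an image stored as a list of pixel rows."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def to_vector(img):
    """Flatten an image (pixels are ints or RGB tuples) into one feature list."""
    vec = []
    for row in img:
        for px in row:
            vec.extend(px if isinstance(px, tuple) else (px,))
    return vec


# Hypothetical 128 x 64 RGB crop, reduced to 32 x 16 as for RAiD.
raid_img = [[(r % 256, c % 256, 0) for c in range(64)] for r in range(128)]
feat = to_vector(resize_nearest(raid_img, 32, 16))
```

Nearest-neighbour sampling works for non-integer ratios such as 320 × 240 to 96 × 48. Block averaging or bilinear interpolation would reduce aliasing at the cost of a little more computation.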

4.3.1 Qualitative analysis

We first present a qualitative analysis of the proposed method for Person Re-ID. Figure 4 shows the retrieval results of the proposed method for different queries on the PKU [50], WARD [51] and RAiD [52] datasets. The results show that the proposed method achieves nearly 100% accuracy for certain queries, illustrating its effectiveness. Figures 5, 6, 7 show the image retrieval results of the proposed method with a scope of 10. In these figures, the images in the first column are the queries, and those in the following columns are the retrieval results with the top 15, 25 and 25 values under different iterations for the PKU [50], WARD [51] and RAiD [52] datasets, respectively. In each group, the image with a blue box is the query image randomly selected from the WARD dataset, images with green boxes are relevant to the query, and images with yellow boxes are not. From Figs. 5, 6, 7, we can observe that the retrieval performance for the given queries is good, reaching accuracies of nearly 90% after the fourth iteration with the user's relevance feedback. In addition, the retrieval results improve substantially as the number of iterations increases, showing that the user's relevance feedback provides useful supervised information for Person Re-ID.

4.3.2 Quantitative analysis

In this subsection, we compare the proposed method with other graph embedding methods for CBIR. The compared methods are the same as in the clustering evaluation, except that we do not compare with LE [22] and LSC [38]. These two methods cannot compute low-dimensional representations of query data because they do not handle out-of-sample data. In our study, we use MAP-scope to evaluate the performance of the proposed method and compare it with other state-of-the-art methods. Specifically, the scope is the number of top-ranked images returned for a given query, while Mean Average Precision (MAP) is the average ratio of the number of relevant images to the scope. MAP-scope therefore evaluates the accuracy of a method under different scopes.
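Read literally, the definition above averages, over all queries, the fraction of relevant images among the top `scope` results. This differs from the textbook average precision, which averages precision at each relevant rank. The sketch follows the section's wording. The rankings and relevant sets are toy data, and the function names are illustrative.

```python
def precision_at_scope(ranked_ids, relevant_ids, scope):
    """Fraction of the top-`scope` retrieved images that are relevant."""
    top = ranked_ids[:scope]
    return sum(1 for i in top if i in relevant_ids) / scope


def map_scope(rankings, relevant_sets, scope):
    """Average the precision at `scope` over all queries."""
    scores = [precision_at_scope(r, rel, scope)
              for r, rel in zip(rankings, relevant_sets)]
    return sum(scores) / len(scores)


# Two hypothetical queries: ranked database ids and their ground-truth matches.
rankings = [[3, 1, 4, 0, 2], [2, 0, 1, 4, 3]]
relevant_sets = [{1, 4}, {0, 2}]
curve = {s: map_scope(rankings, relevant_sets, s) for s in (1, 2, 3)}
```

Evaluating `map_scope` over a range of scopes gives one MAP-scope curve. Repeating this after each feedback round yields curves comparable in form to Figs. 8 and 9.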

Figure 8 shows the MAP-scope results with the number of iterations fixed at 1, 2 and 4, respectively. In addition, Fig. 9 shows MAP against the number of iterations at fixed scopes of 4, 7 and 10 for the PKU dataset, and 10, 20 and 50 for the WARD and RAiD datasets, respectively. From the results in Figs. 8 and 9, we can see that the MAP of all methods improves as more user feedback is provided. This indicates that user feedback indeed adds discriminative information for the CBIR problem. For example, in Fig. 9 the proposed method achieves a 15% improvement after the fourth iteration compared with no iteration at a scope of 20 on the RAiD dataset. In addition, the proposed method obtains better retrieval results than the compared methods over almost all scopes and iterations.

5 Conclusion

In this paper, we develop a new scalable manifold ranking method for Person Re-ID by incorporating both graph weight construction and a manifold regularization term in the same framework. The graph we develop is discriminative and doubly-stochastic, which makes it highly robust and insensitive to the dataset and parameters. In addition, by taking side information into consideration, the proposed graph ensures that data points from different clusters are not mis-connected, which enhances classification performance. Benefiting from such a graph, we integrate graph construction and subspace learning into a unified loss term. Therefore, the subspace results can be used to enhance the geometry-preserving ability of graph construction, and the modified graph can in turn incorporate discriminative information for graph embedding. Simulation results indicate that the proposed method achieves superior Person Re-ID performance.

Although the proposed method achieves satisfactory results, our future work will focus on several issues. First, graph neural networks (GNNs) have been one of the most popular topics in the past few years and have proved effective for dealing with structured data. We may extend the proposed work into a graph convolutional layer so that it can benefit from the strong ability of CNNs to capture both the geometric and the attribute features of the data. Second, we may connect it with a CNN to form an end-to-end deep learning framework, which can exploit the powerful feature extraction ability of CNNs while preserving geometric information via a GCN. This is also of great significance for improving image retrieval performance.

References

Song W, Zheng J, Wu Y, Chen C, Liu F (2021) Discriminative feature extraction for video person re-identification via multi-task network. Appl Intell 51(2):788–803

Pang Z, Guo J, Sun W, Xiao Y, Yu M (2021) Cross-domain person re-identification by hybrid supervised and unsupervised learning. Appl Intell, pp 1–15

Su J, He X, Qing L, Cheng Y, Peng Y (2021) An enhanced siamese angular softmax network with dual joint-attention for person re-identification. Appl Intell, pp 1–19

Luo H, Jiang W, Gu Y, Liu F, Liao X, Lai S, Gu J (2019) A strong baseline and batch normalization neck for deep person re-identification. IEEE Trans Multimed 22(10):2597–2609

Cheng Z, Dong Q, Gong S, Zhu X (2020) Inter-task association critic for cross-resolution person re-identification. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2605–2615

He L, Liao X, Liu W, Liu X, Cheng P, Mei T (2020) FastReID: A PyTorch toolbox for general instance re-identification. arXiv preprint arXiv:2006.02631

Zhou D, Weston J, Gretton A, Bousquet O, Schölkopf B (2003) Ranking on data manifolds. In: NIPS, vol 3

Xu B, Bu J, Chen C, Wang C, Cai D, He X (2015) EMR: A scalable graph-based ranking model for content-based image retrieval. IEEE Trans Knowl Data Eng 27(1):102–114

Wang F, Zhang C, Shen HC, Wang J (2006) Semi-supervised classification using linear neighborhood propagation. In: 2006 IEEE computer society conference on Computer vision and pattern recognition, vol 1, IEEE, pp 160–167

Yang Y, Nie F, Xu D, Luo J, Zhuang Y, Pan Y (2012) A multimedia retrieval framework based on semi-supervised ranking and relevance feedback. IEEE Trans Pattern Anal Mach Intell 34(4):723–742

Zhao M, Zhang Z, Chow TW (2012) Trace ratio criterion based generalized discriminative learning for semi-supervised dimensionality reduction. Pattern Recogn 45(4):1482–1499

Zhao M, Chow TW, Wu Z, Zhang Z, Li B (2015) Learning from normalized local and global discriminative information for semi-supervised regression and dimensionality reduction. Inf Sci 324:286–309

Zhao M, Zhang Z, Chow TW, Li B (2014) A general soft label based linear discriminant analysis for semi-supervised dimensionality reduction. Neural Netw 55:83–97

Zhang Z, Li F, Zhao M, Zhang L, Yan S (2017) Robust neighborhood preserving projection by nuclear/L2,1-norm regularization for image feature extraction. IEEE Trans Image Process 26(4):1607–1622

Gao Y, Ma J, Zhao M, Liu W, Yuille AL (2019) NDDR-CNN: Layerwise feature fusing in multi-task CNNs by neural discriminative dimensionality reduction. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3205–3214

Zhang H, Zhang Z, Zhao M, Ye Q, Zhang M, Wang M (2020) Robust triple-matrix-recovery-based auto-weighted label propagation for classification. IEEE Trans Neural Netw Learn Syst 31(11):4538–4552

Zhang Z, Jia L, Zhao M, Liu G, Wang M, Yan S (2018) Kernel-induced label propagation by mapping for semi-supervised classification. IEEE Trans Big Data 5(2):148–165

Zhao M, Lin M, Chiu B, Zhang Z, Tang XS (2018) Trace ratio criterion based discriminative feature selection via L2,p-norm regularization for supervised learning. Neurocomputing 321:1–16

Zhang Z, Li F, Zhao M, Zhang L, Yan S (2016) Joint low-rank and sparse principal feature coding for enhanced robust representation and visual classification. IEEE Trans Image Process 25(6):2429–2443

Tenenbaum JB, De Silva V, Langford JC (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290(5500):2319–2323

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290(5500):2323–2326

Belkin M, Niyogi P (2002) Laplacian eigenmaps and spectral techniques for embedding and clustering. Adv Neural Inf Process Syst 14(6):585–591

Yu W, Teng X, Liu C (2006) Face recognition using discriminant locality preserving projections. Image Vis Comput 24(3):239–248

Cai D, He X, Han J (2007) Spectral regression: a unified subspace learning framework for content-based image retrieval. In: Proceedings of the 15th international conference on multimedia 2007, Augsburg, Germany, September 24-29, 2007

Cai D (2015) Compressed spectral regression for efficient nonlinear dimensionality reduction. In: Twenty-fourth international joint conference on artificial intelligence

Nie F, Zhu W, Li X (2017) Unsupervised large graph embedding. In: Thirty-first AAAI conference on artificial intelligence

Chen X, Cai D (2011) Large scale spectral clustering with landmark-based representation. In: Twenty-fifth AAAI conference on artificial intelligence

Li Y, Nie F, Huang H, Huang J (2015) Large-scale multi-view spectral clustering via bipartite graph. In: Twenty-ninth AAAI conference on artificial intelligence

Defferrard M, Bresson X, Vandergheynst P (2016) Convolutional neural networks on graphs with fast localized spectral filtering. arXiv preprint arXiv:1606.09375

Kipf TN, Welling M (2016) Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907

Hamilton WL, Ying R, Leskovec J (2017) Inductive representation learning on large graphs. arXiv preprint arXiv:1706.02216

Veličković P, Cucurull G, Casanova A, Romero A, Lio P, Bengio Y (2017) Graph attention networks. arXiv preprint arXiv:1710.10903

Lu S, Zhu Z, Gorriz JM, Wang SH, Zhang YD (2022) NAGNN: Classification of COVID-19 based on neighboring aware representation from deep graph neural network. Int J Intell Syst 37(2):1572–1598

Wang SH, Govindaraj V, Gorriz JM, Zhang X, Zhang YD (2021) Explainable diagnosis of secondary pulmonary tuberculosis by graph rank-based average pooling neural network. J Ambient Intell Humaniz Comput, pp 1–14

Zhao M, Zhang Y, Zhang Z, Liu J, Kong W (2019) ALG: Adaptive low-rank graph regularization for scalable semi-supervised and unsupervised learning. Neurocomputing 370:16–27

Liu W, Chang SF (2009) Robust multi-class transductive learning with graphs. In: 2009 IEEE Conference on computer vision and pattern recognition, IEEE, pp 381–388

Liu W, He J, Chang SF (2010) Large graph construction for scalable semi-supervised learning. In: Proceedings of the 27th international conference on machine learning (ICML-10), pp 679–686

Cai D, Chen X (2014) Large scale spectral clustering via landmark-based sparse representation. IEEE Trans Cybern 45(8):1669–1680

Zhou D, Bousquet O, Lal TN, Weston J, Schölkopf B (2004) Learning with local and global consistency. In: Advances in neural information processing systems, pp 321–328

Von Neumann J (1950) Functional operators: Measures and integrals, vol 1, Princeton University Press

Horn RA, Johnson CR (2012) Matrix analysis. Cambridge University Press

Nie F, Zhu W, Li X (2020) Unsupervised large graph embedding based on balanced and hierarchical k-means. IEEE Trans Knowl Data Eng

Nene SA, Nayar SK, Murase H (1996) Columbia object image library (COIL-100). Technical Report CUCS-005-96, Columbia University

Liu CL, Yin F, Wang DH, Wang QF (2011) CASIA online and offline Chinese handwriting databases. In: 2011 International conference on document analysis and recognition, IEEE, pp 37–41

Lee K, Ho J, Kriegman D (2005) Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans Pattern Anal Mach Intell 27(5):947–963

Xiao H, Rasul K, Vollgraf R (2017) Fashion-MNIST: A novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747

Fan J, Tian Z, Zhao M, Chow TW (2018) Accelerated low-rank representation for subspace clustering and semi-supervised classification on large-scale data. Neural Netw 100:39–48

Zhao M, Chow TW, Zhang Z, Li B (2015) Automatic image annotation via compact graph based semi-supervised learning. Knowl-Based Syst 76:148–165

Zhao M, Liu J, Zhang Z, Fan J (2021) A scalable sub-graph regularization for efficient content based image retrieval with long-term relevance feedback enhancement. Knowl-Based Syst 212:106505

Ma L, Liu H, Hu L, Wang C, Sun Q (2016) Orientation driven bag of appearances for person re-identification. arXiv preprint arXiv:1605.02464

Martinel N, Micheloni C (2012) Re-identify people in wide area camera network. In: 2012 IEEE Computer society conference on computer vision and pattern recognition workshops. IEEE, Providence, RI, pp 31–36, https://doi.org/10.1109/CVPRW.2012.6239203

Das A, Chakraborty A, Roy-Chowdhury AK (2014) Consistent re-identification in a camera network. In: European conference on computer vision, lecture notes in computer science, vol 8690, Springer, pp 330–345

Acknowledgements

This work is partially supported by the National Natural Science Foundation of China (61971121, 72004174) and by the Science and Technology Research Project of Chongqing Education Commission under Grant KJQN202000612.

Ethics declarations

Conflict of Interests

The authors declare that they have no conflicts of interest related to this work.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Jiao Liu and Mingquan Lin contributed equally to this work.

Appendix

In this appendix, we derive (16) from (15). We first rewrite (16) as follows:

Note that in (14), \(t = \frac{-\mathrm{Tr}\left(W_{t}^{0}T\right) - 2\mu T 1^{T}}{\left|T\right|}\). Substituting it into (15), we have

Then, after some algebraic manipulation, we have:

Multiplying both sides of the first and second equations by \(1^{T}\), and substituting the resulting expressions for \(1^{T}\mu 1^{T}\) and \(1^{T}\mu T 1^{T}\) into the third equation, we have:

Finally, we prove:

where the final equalities follow from (23) and (24):

About this article

Cite this article

Liu, J., Lin, M., Zhao, M. et al. Person re-identification via semi-supervised adaptive graph embedding. Appl Intell 53, 2656–2672 (2023). https://doi.org/10.1007/s10489-022-03570-9

DOI: https://doi.org/10.1007/s10489-022-03570-9