Abstract

Industrialization and population growth have been accompanied worldwide by many problems, including waste management. Waste management and waste reduction play a vital role in national management. The present study presents a multi-objective location-routing problem for hazardous wastes. The model was solved using the Non-dominated Sorting Genetic Algorithm-II, Multi-Objective Particle Swarm Optimization, Multi-Objective Invasive Weed Optimization, Pareto Envelope-based Selection Algorithm, Multi-Objective Evolutionary Algorithm Based on Decomposition, and Multi-Objective Grey Wolf Optimizer algorithms. The findings revealed that the Multi-Objective Invasive Weed Optimization algorithm was the best and most efficient among the algorithms used in this study. The first innovation of the present study is that obtaining income from waste incineration and reducing the risk of COVID-19 infection are both considered in the presented model. The second innovation is that uncertainty was considered for some of the crucial parameters of the model, and a robust fuzzy optimization model was applied. In addition, the model was solved using several meta-heuristic algorithms, such as Multi-Objective Invasive Weed Optimization, Multi-Objective Evolutionary Algorithm Based on Decomposition, and Multi-Objective Grey Wolf Optimizer, which have rarely been used in the literature. Finally, the most efficient algorithm was identified by comparing the considered algorithms.


1 Introduction

Today, waste production is rising with increasing urbanization and industrialization. Therefore, waste management has emerged as a critical issue [1]. The basic principle of waste management is to minimize waste production. In addition, proper recycling management considerably reduces the potential hazards and damage caused by waste, especially waste that poses a dire threat to society and the environment when burned [2]. Hazardous wastes are wastes that have at least one hazardous property, such as toxicity, pathogenicity, flammability, or corrosivity, and must therefore be handled with care. Medical and industrial wastes that require special care and management belong to this category.

When managed improperly, wastes produced by healthcare and industrial facilities pose a great risk to human health and the environment and increase the risk of disease spread [3]. The process from production to disposal can be considered a supply chain. This chain is composed of the organizations, people, activities, information, and resources responsible for transferring a product or providing services from the supplier to the consumer [4]. In this cycle, hazardous wastes are treated as products that should be distributed among recycling, incineration, sterilization, and disposal centers. Consequently, this supply chain requires location decisions, routing decisions, and the allocation of wastes to routes [5]. However, it differs from other product supply chains because transportation, disposal, and storage involve risk and can affect the environment and individuals [6]. Physical transportation of hazardous wastes is one of the key activities of waste collection companies. Improving these systems reduces costs and lowers the probability of contamination and adverse environmental effects [7, 8]. Nowadays, healthcare and industrial facilities are trying to find an optimal way to dispose of their wastes at the lowest cost and with scheduled transfers. Thus, decisions on the location of recycling, incineration, sterilization, and disposal centers and on the routing of waste transfer channels play a key role in waste management. Management of hazardous wastes is one of the most vital factors in sustainable development. Such management aims to preserve the environment and to protect public health.

In recent years, some scholars have developed multi-objective optimization models in different application areas, such as hazardous waste [9]. For example, Rabbani, Heidari [10] presented a multi-objective location-routing problem for hazardous industrial wastes whose formulation has novel features, such as incompatibility between some of the wastes and the integration of routing decisions. These authors considered transportation risks and location risks and applied metaheuristic algorithms to optimize the total costs [10]. Farahbakhsh and Forghani [11] determined optimal locations for reducing environmental pollution, reducing costs, and improving the systems that provide services to society in order to create a sustainable approach. They determined the optimum locations for the collection and classification centers in the city using a geographical information system (GIS). For this purpose, they used population density, route network, distance to healthcare facilities, distance to disposal centers, waste classification culture, the size of the land, and its cost. These variables were weighted using the analytic hierarchy process (AHP). Afterward, using a routing problem, the number and capacity of the vehicles serving the determined locations were determined considering the economic, social, and environmental limitations [11]. Hu, Li [6] proposed a multi-objective optimization method to determine the optimal routes considering the traffic limitations on intercity roads. This study considered several routes between each origin-destination pair to make the solution method practically applicable.

The multi-objective location-routing model can jointly consider the vital aspects of risk, cost, and customer satisfaction in the logistics management of hazardous wastes. Asefi, Lim [12] investigated a location-routing model for the integrated management of solid wastes containing various types of municipal solid waste (MSW). Supporting an affordable integrated solid waste management system requires optimizing the number and locations of the system's components (i.e., transfer stations, recycling centers, sorting centers, and disposal centers) and the vehicle routing between the centers. Kargar, Pourmehdi [13] suggested a multi-objective linear programming model to minimize the total costs, the risk of transporting and treating infectious medical waste, and the maximum amount of uncollected waste in medical waste generation centers. These researchers applied the Revised Multi-Choice Goal Programming method to solve the problem. Araee, Manavizadeh [14] developed a multi-objective model to transfer hazardous waste using vehicle routing problems. They applied meta-heuristic algorithms to solve the model based on travel time, distance, risk, and economic aspects. Ahlaqqach, Benhra [15] proposed a multi-objective vehicle routing problem for hospital waste incineration and solved it using the genetic algorithm (GA). Saeidi, Aghamohamadi-Bosjin [16] proposed a location-routing model for hazardous waste to manage wastes and determine the best decisions considering Information and Communications Technology and Internet of Things technology. The model is solved using the non-dominated sorting genetic algorithm III. Alumur and Kara [17] suggested a multi-objective location and routing problem for hazardous wastes to minimize the cost and the risk of transfer. They determined the locations of waste sorting centers and disposal centers and the routing of the various wastes from production nodes to waste sorting centers and from sorting centers to disposal centers.
The suggested model was applied in the central Anatolia region of Turkey. Xie, Lu [18] suggested a novel multi-objective and multistate model for transferring hazardous material to optimize transfer locations and routing simultaneously. They implemented the model in two case studies to demonstrate its applications. Toumazis and Kwon [19] suggested a novel model for hazardous waste transfer to evaluate risk conditions. These researchers aimed to minimize the risk of hazardous waste transfer in places where accidents and time-dependent consequences were probable. Chauhan and Singh [20] selected a sustainable location for the disposal of hospital wastes using interpretive structural modeling (ISM), AHP, and the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS). Jabbarzadeh, Darbaniyan [21] presented a multi-objective model for determining appropriate locations to establish waste processing facilities. The main aims of their study were to minimize costs, greenhouse gas production, and fuel consumption. Asgari, Rajabi [22] suggested a location-routing model for hazardous wastes considering various separation technologies. The distribution network studied includes production nodes, separation facilities, and disposal facilities. They developed a multi-objective location-routing model with three objectives: 1) minimizing the undesirability of separation and disposal facilities, 2) minimizing the various annual costs of the problem, and 3) minimizing the transportation risks, and they solved the model using algorithms. Yilmaz, Kara [23] suggested a multi-objective mixed-integer location-routing model for minimizing the transfer costs and risks of large-scale hazardous waste management. The suggested approach was applied in a study in Turkey.
This research is distinguishable from other studies because it introduces a new definition of environmental effects that identifies sensitive environmental regions, such as water resources, agricultural lands, coastal areas, and forests, neighboring the transportation routes. Mantzaras and Voudrias [24] formulated an optimization problem to minimize the costs of collection, transportation, treatment, and disposal of infectious medical wastes. The model determines the optimal locations of separation facilities and transfer stations, the design capacities, the number and capacity of the waste collection, transportation, and transfer equipment, their optimum routing, and the minimum cost of the infectious medical waste management system. The objective function is a nonlinear equation that minimizes the total cost of collection, transportation, separation, and disposal. Sultana, Jahan [25] presented a complex linear model in their study, i.e., “Multi-objective location-routing problem for managing hazardous waste systems”. The objective of the study was to minimize the total costs, the risk for individuals along the routes of waste transportation vehicles, and the risk for individuals near the separation and disposal facilities.

Rabbani et al. [3] developed a multi-objective model for hazardous wastes. They considered time windows and workload balance and used multi-objective metaheuristic algorithms such as NSGA-II, PESA-II, and SPEA-II to solve the model. The results show that the Pareto Envelope-based Selection Algorithm II (PESA-II) and the Strength Pareto Evolutionary Algorithm II (SPEA-II) outperform the Non-dominated Sorting Genetic Algorithm-II (NSGA-II), although NSGA-II creates wider Pareto frontiers. Aydemir-Karadag [26] proposed a mathematical location-routing model for hazardous wastes considering producer levels, recycling centers, disposal centers, and interim storage warehouses. In this model, two types of products (i.e., industrial wastes and hospital wastes) were investigated in Turkey. The main objective of that study was to raise the annual income from executing the model. Other objectives were locating the recycling centers, temporary storage centers, and disposal centers at a strategic level and determining the optimum flow of products (hospital and industrial wastes) between different levels. The study also presented vehicle routing for transferring the wastes between levels. That model is single-objective and treats all parameters as deterministic. In the current research, the above model is extended to a multi-objective problem in which some important parameters are considered uncertain. Next, a robust fuzzy optimization model is developed. Finally, meta-heuristic algorithms are used to solve the model, and the most efficient algorithm is identified using various indicators.

2 Problem description

In this study, the location-routing of hazardous wastes was modeled considering the generation levels, recycling centers, incineration centers, sterilization centers, interim storage centers, and disposal centers. Two types of wastes (i.e., industrial and hospital wastes) were investigated in the model. The model has three main objectives. The first objective is to raise the annual income from executing this system. Because the world's population is increasing and technologies are developing faster than ever, the production of wastes is inevitable. Thus, communities must find suitable methods to recycle, incinerate, or dispose of the wastes with the least hazard, so that the environment is preserved and the risk to public health is reduced.

On the other hand, wastes are not entirely useless: given the world's energy demand, countries are looking for new alternative energy sources. One of these alternatives is bioenergy, in which wastes are burned to produce energy. Effective use of this continuously produced energy can supply a large portion of the world's energy demand. Therefore, one of the main objectives of this study is to increase the income from the incineration of hazardous wastes. The second objective of the model is to reduce the risk of COVID-19 infection. The novel coronavirus, which first appeared in Wuhan, China, and then spread worldwide, has claimed many lives. While governments try to prevent the outbreak from taking more lives, hospital wastes might be contaminated with the virus, and transferring these hazardous wastes can increase the risk of COVID-19 infection [27]. Consequently, this study also aims to reduce the risk of COVID-19 infection through hazardous waste transfer. The third objective of the model is to lower the CO2 produced by the transportation and transfer of the hazardous wastes. Growing waste production has negative effects on the environment, including an increase in greenhouse gases. Thus, the study also aims to reduce the production of greenhouse gases.

The study's sub-objectives included locating recycling centers, disposal centers, incineration centers, sterilization centers, and interim storage centers, determining the optimum product flow (industrial and hospital wastes), and routing between levels. Since parameters such as transportation costs and the amount of hazardous wastes produced strongly affect the model, they are treated as uncertain parameters.

Figure 1 shows the produced waste transfer from production centers to recycling centers, interim storage, incineration, sterilization, and disposal centers. In the interim storage centers, wastes are received and temporarily stored until they are sent to recycling centers and incineration centers. Some of the wastes are recycled in the recycling centers, while the rest are sent to disposal centers. In the incineration centers, wastes are burned. Then, the energy produced from them is sold (as a source of income) and the residue of the wastes is sent to disposal centers. In the sterilization centers, the wastes are disinfected in the process.

Fig. 1 Proposed framework for hazardous wastes [26]

2.1 Location-routing uncertainty model for hazardous wastes

The assumptions of the model are as follows (Table 1):

- The capacity of all levels has already been determined.
- The amounts of hazardous wastes and the transportation costs are uncertain and are modeled as trapezoidal fuzzy numbers.
- Each vehicle can only travel between two nodes.

Equation (1) determines the total annual profit (TP) achieved from the design of the supply chain network, which is equal to income minus costs. Equation (2) determines the total annual income from producing electrical energy and selling it to the local power grids. Equation (3) calculates the uncertain transportation expenses for the hazardous wastes from origin to destination nodes. Equation (4) calculates the investment cost of establishing the different centers.

Equation (5) minimizes the level of CO2 gas produced from the transportation of the hazardous wastes from the producer to other centers.

Equation (6) minimizes the risk of COVID-19 infection from transporting the hazardous wastes from the producer to other centers.

Equation (7) shows the total produced hazardous wastes that the related centers should collect.

Equation (8) shows the total amount of stored hazardous wastes in interim storage centers that will be transferred to other centers.

Equations (9) to (12) are flow balance constraints ensuring that the amount leaving a center for disposal equals the total amount of waste entering the center minus the amount reduced by processing in that center.

Equations (13) to (17) determine the maximum capacity each center can provide.

Equations (18) to (22) determine the minimum capacity of the centers such as recycling, incineration, and disposal centers.

Equation (23) determines the energy production capacity in the incineration centers.

Equation (24) shows that the decision variables are non-negative.

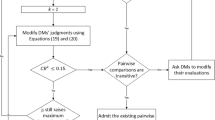

3 Methodology

In this part, the robust-fuzzy optimization model is formulated and the meta-heuristic algorithms are described. Then, the Taguchi method is used for parameter tuning.

3.1 Robust-fuzzy optimization model

Some important parameters, such as the amount of generated waste and the transportation costs, are determined outside the model and are estimated mainly from the opinions and experience of experts. Expert judgment is used because these parameters are dynamic and fluctuating and because the historical data required in the design phase are unavailable or even inaccessible. Therefore, these ambiguous parameters are formulated as uncertain data in the form of trapezoidal fuzzy numbers.

Possibilistic chance-constrained programming (PCCP) is commonly used to deal with uncertain constraints that have uncertain data on either side. With this method, the decision-maker can control the assurance level of satisfying these uncertain constraints and can set a minimum assurance level as a suitable safety margin for each of them. To this end, two standard fuzzy measures, called optimistic fuzzy and pessimistic fuzzy, are commonly used. The optimistic fuzzy measure gives the optimistic level of possibility of an uncertain event involving uncertain parameters, while the pessimistic fuzzy measure reflects pessimistic decision-making about that event. Using the pessimistic fuzzy measure is more conservative because it assumes that the decision-maker has a pessimistic (conservative) attitude toward satisfying the uncertain constraints. Therefore, the pessimistic fuzzy measure has been used to guarantee that the uncertain constraints are satisfied. Accordingly, the crisp equivalent of the original uncertain model can be formulated from the mentioned ambiguous parameters by using the expected value for the objective function and the pessimistic measure for the uncertain constraints.
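To make the two ingredients of this crisp equivalent concrete, the following is a minimal Python sketch, not the paper's own formulation. It assumes a trapezoidal fuzzy number is stored as four ordered points (a, b, c, d) and that the uncertain constraint has the form "crisp left-hand side >= fuzzy right-hand side". Under these assumptions, the expected value is the mean of the four points, and the pessimistic (necessity-based) bound at assurance level rho is (1 - rho) * c + rho * d, a form widely used in the PCCP literature. The function names and the numbers are illustrative only.

```python
from typing import Tuple

# Assumed representation: a trapezoidal fuzzy number as four ordered points.
Trapezoid = Tuple[float, float, float, float]


def expected_value(t: Trapezoid) -> float:
    """Expected value of a trapezoidal fuzzy number (mean of its four points)."""
    a, b, c, d = t
    return (a + b + c + d) / 4.0


def pessimistic_rhs(t: Trapezoid, rho: float) -> float:
    """Crisp right-hand side for 'lhs >= fuzzy t' at assurance level rho.

    Assumes the necessity-based (pessimistic) treatment; rho is restricted
    to [0.5, 1], the range stated for this coefficient in the text.
    """
    if not 0.5 <= rho <= 1.0:
        raise ValueError("rho must lie in [0.5, 1]")
    a, b, c, d = t
    return (1.0 - rho) * c + rho * d


# Hypothetical fuzzy amount of waste generated at one node (tons per year).
waste = (80.0, 90.0, 110.0, 120.0)
print(expected_value(waste))        # value used in the objective function
print(pessimistic_rhs(waste, 0.5))  # least conservative bound allowed
print(pessimistic_rhs(waste, 1.0))  # most conservative bound (worst case)
```

Raising rho moves the crisp bound from the midpoint of the upper slope toward the worst-case point d, which is the sense in which a larger assurance level is more conservative.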

Now, according to the abbreviated form, the basic model of PCCP is as follows:

where ρ controls the minimum degree of certainty with which the uncertain constraints are satisfied under a pessimistic decision-making approach. Given the trapezoidal possibility distribution of the ambiguous parameters, the general form of relations (25) to (29) is as follows:

In PCCP models, the minimum assurance level for satisfying the uncertain constraints must be set according to the decision-maker's preferences. In this approach, the objective function is not sensitive to deviation from its expected value, which means that robust solutions are not guaranteed in the PCCP model. In real-world cases, this may impose a high risk on the decision-maker, specifically in strategic decisions where the robustness of the solution is critical. Hence, to deal with this shortcoming, a robust possibilistic programming approach is used. Pishvaee, Razmi [28] first introduced the robust possibilistic programming method. This method combines the benefits of robust programming and possibilistic programming, which clearly distinguishes it from other uncertainty programming methods. This research applies robust possibilistic programming to the proposed model as follows:

In the first objective function of Eq. (35), the first term is the expected value of the first objective function, computed with the mean values of the uncertain parameters of the model. The second term is the penalty cost for deviating above the expected value of the first objective function (optimality robustness). The third term is the total penalty cost for deviation from demand. Here, ξ is the weighting coefficient of the objective function, and η is the penalty cost for not meeting demand. In addition, ρ is the coefficient that sets the assurance level applied to the fuzzy numbers and should be between 0.5 and 1. As a result, Eqs. (1–7) are rewritten as follows:

3.2 Solution representation

In this study, considering the NP-hard nature of the supply chain network model and the inefficiency of GAMS software for such problems, meta-heuristic algorithms have been used. To use these algorithms, it is necessary to design the primary chromosome. The encoding used here is known as priority-based encoding, introduced by Gen, Altiparmak [29]. In this encoding, the supply chain network is divided into its constituent levels. Then, each level is considered in the design of the primary chromosome according to the capacity, demand, and type of vehicle. For example, one stage (depots and sources) is considered in a two-tier supply chain network. According to Table 2, 4 depots and 3 sources are considered in this encoding.

The designed chromosome is a permutation whose length is the total number of nodes (depots and sources). For example, consider the random encoding (7-4-1-6-2-5-3), in which the priorities (7-4-1-6) are allocated to depots and (2-5-3) to sources. The following steps must be taken to decode this chromosome:

- Step 1. The highest priority (number) is selected from the chromosome. If this number belongs to the depot priorities, the cell holding that priority identifies the first depot for the allocation of goods. If the highest number belongs to the source priorities, the cell holding that priority identifies the first source for the allocation of goods.
- Step 2. After the first node for allocation (depot or source) has been identified, the second node (source or depot) is selected based on transportation costs or other related costs. This choice is based on the minimum shipping cost.
- Step 3. After the depot and the source have been determined, the minimum of the depot demand and the source capacity is allocated. After the allocation, the remaining depot demand and source capacity are updated.
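The three decoding steps can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the authors' implementation: the chromosome lists the depot priorities first and the source priorities second (as in the example above), a node whose demand or capacity is exhausted has its priority reset to zero so that it is not selected again, and the demand, capacity, and cost data are hypothetical.

```python
def decode_priority(chromosome, demand, capacity, cost):
    """Decode a priority-based chromosome into a source-to-depot flow matrix.

    chromosome: priorities, depots first, then sources.
    demand[d]: demand of depot d; capacity[s]: capacity of source s.
    cost[s][d]: unit shipping cost from source s to depot d.
    """
    n_dep = len(demand)
    prio, dem, cap = list(chromosome), list(demand), list(capacity)
    flow = [[0] * n_dep for _ in cap]
    while any(q > 0 for q in dem) and any(q > 0 for q in cap):
        # Exhausted nodes lose their priority (assumption of this sketch).
        for i in range(len(prio)):
            left = dem[i] if i < n_dep else cap[i - n_dep]
            if left == 0:
                prio[i] = 0
        # Step 1: pick the node with the highest remaining priority.
        idx = max(range(len(prio)), key=prio.__getitem__)
        # Step 2: pick its partner with the minimum shipping cost.
        if idx < n_dep:
            d = idx
            s = min((k for k in range(len(cap)) if cap[k] > 0),
                    key=lambda k: cost[k][d])
        else:
            s = idx - n_dep
            d = min((j for j in range(n_dep) if dem[j] > 0),
                    key=lambda j: cost[s][j])
        # Step 3: allocate min(demand, capacity) and update both.
        q = min(dem[d], cap[s])
        flow[s][d] += q
        dem[d] -= q
        cap[s] -= q
    return flow


# Hypothetical data for the 4-depot, 3-source example chromosome.
chromosome = [7, 4, 1, 6, 2, 5, 3]
demand = [30, 20, 25, 25]
capacity = [40, 35, 25]
cost = [[4, 6, 9, 5],
        [7, 3, 8, 6],
        [5, 8, 2, 7]]
flow = decode_priority(chromosome, demand, capacity, cost)
print(flow)
```

With these data, depot 1 (priority 7) is served first from its cheapest source, then depot 4 (priority 6), and so on until all demand is covered. Because every permutation decodes to a feasible allocation when total capacity covers total demand, crossover and mutation can act on the permutation without repair steps for the flows.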

3.3 Non-dominated sorting genetic algorithm

The NSGA-II algorithm, proposed by Deb, Pratap [30], is one of the most powerful algorithms for solving multi-objective optimization problems. In its general procedure, after a suitable mechanism for converting each solution to a chromosome has been identified, a set of chromosomes is created and evaluated as the initial population. The corresponding solutions are then compared in the objective (phenotype) space, the number of times each chromosome is dominated is counted, and the chromosomes are ranked accordingly. In this way, the members on the first front are not dominated by any other member of the current population. The members on the second front are dominated only by members of the first front. This process continues in the same way for the other fronts, so that every member is ranked by the number of its front. In the next step, the swarm distance (usually called the crowding distance) is calculated for each answer on each front; it measures how close the answer is to the other answers on that front. The less crowded the region in which an answer is located, the larger its swarm distance.
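The ranking into fronts described above can be sketched in Python as follows. This is a minimal version of non-dominated sorting that assumes all objectives are minimized (a maximized objective such as profit would be negated first); the objective vectors are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and better in one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def non_dominated_sort(objs):
    """Return a list of fronts, each a list of indices into objs."""
    n = len(objs)
    dominated = [[] for _ in range(n)]  # indices that solution i dominates
    count = [0] * n                     # how many solutions dominate i
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            if dominates(objs[i], objs[j]):
                dominated[i].append(j)
            elif dominates(objs[j], objs[i]):
                count[i] += 1
    fronts = []
    current = [i for i in range(n) if count[i] == 0]
    while current:
        fronts.append(current)
        nxt = []
        for i in current:
            for j in dominated[i]:
                count[j] -= 1           # remove the influence of this front
                if count[j] == 0:
                    nxt.append(j)
        current = nxt
    return fronts


# Hypothetical two-objective values (both minimized).
objs = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
print(non_dominated_sort(objs))
```

In this example the first three points are mutually non-dominated and form the first front, (3, 4) is dominated only by (2, 3) and forms the second front, and (5, 5) is dominated by every other point and forms the third.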

The swarm distance of an answer i is determined by calculating, for each objective function m, the normalized distance between its two adjacent answers within the front; the sum of these values over all objectives is denoted by di:
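A common way to compute this quantity is sketched below in Python. It follows the usual crowding-distance definition of NSGA-II, which is assumed here to match the intended equation: within a front, answers are sorted by each objective, the two boundary answers receive an infinite distance, and each interior answer accumulates the gap between its two neighbors divided by the objective's range on that front. The sample points are hypothetical.

```python
import math


def crowding_distance(front):
    """Swarm (crowding) distance of each objective vector in one front."""
    n = len(front)
    dist = [0.0] * n
    if n == 0:
        return dist
    for m in range(len(front[0])):
        order = sorted(range(n), key=lambda i: front[i][m])
        lo, hi = front[order[0]][m], front[order[-1]][m]
        # Boundary answers are always kept, so they get infinite distance.
        dist[order[0]] = dist[order[-1]] = math.inf
        if hi == lo:
            continue  # all answers share this objective value
        for k in range(1, n - 1):
            gap = front[order[k + 1]][m] - front[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist


# Hypothetical front with two objectives.
front = [(0.0, 10.0), (1.0, 6.0), (4.0, 3.0), (10.0, 0.0)]
print(crowding_distance(front))
```

Here the third answer sits in a sparser part of the front than the second, so it receives the larger distance and would be preferred when ranks are equal.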

In the NSGA-II algorithm, parents are selected using a binary tournament. In this approach, two members are randomly selected from the answers of each generation and compared. First, the ranks of the two answers are compared, and the one with the lower rank is selected; if the ranks are equal, the swarm distance becomes the criterion, and the member with the larger swarm distance is chosen as the parent. After the parents have been selected and the recombination/crossover and mutation operations have been performed, the generated answers are evaluated. Afterward, the offspring produced by the recombination/crossover and mutation operators are added to the main population, creating a larger population Rt. The members of Rt are reclassified into fronts, their swarm distances are calculated, and the population is sorted in ascending order of rank. Answers with a lower rank therefore come first, and members of equal rank are sorted by swarm distance so that answers with a larger swarm distance are placed first. Starting from the top of the ordered population Rt, as many members as the size of the initial population are then selected to form the next generation. The remaining members are discarded. Finally, the stopping condition of the algorithm is checked: if it is satisfied, the algorithm stops; otherwise, parents are selected again, and the algorithm is repeated.
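The parent selection and the truncation of Rt described above can be sketched as follows. This is a minimal Python illustration that assumes the rank (front number, starting at 0) and the swarm distance of every member have already been computed; the values used are hypothetical.

```python
import math
import random


def crowded_better(i, j, rank, crowd):
    """True if member i beats member j: lower rank, then larger distance."""
    if rank[i] != rank[j]:
        return rank[i] < rank[j]
    return crowd[i] > crowd[j]


def binary_tournament(rank, crowd, rng):
    """Pick two distinct members at random and return the winner's index."""
    i, j = rng.sample(range(len(rank)), 2)
    return i if crowded_better(i, j, rank, crowd) else j


def survivors(rank, crowd, size):
    """Sort Rt by (rank ascending, distance descending) and keep the top."""
    order = sorted(range(len(rank)), key=lambda i: (rank[i], -crowd[i]))
    return order[:size]


# Hypothetical ranks and swarm distances for a combined population Rt.
rank = [0, 0, 1, 0, 2, 1]
crowd = [math.inf, 0.8, math.inf, 1.5, math.inf, 0.3]
rng = random.Random(1)
print(binary_tournament(rank, crowd, rng))
print(survivors(rank, crowd, 4))
```

Sorting by the pair (rank, negative distance) is equivalent to filling the next generation front by front and breaking ties inside the last admitted front by swarm distance.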

3.4 Multi-objective particle swarm optimization algorithm

MOPSO was introduced by Coello, Pulido [31]. This algorithm is a generalization of PSO for solving multi-objective problems. It differs from the GA in two ways. First, each answer is represented by a particle instead of a chromosome. Second, the movement of particles through the search space to find new answers leads to the exchange of information and to convergence between population members. This convergence is due to the following three factors:

1) The behavior that the particles have already shown

2) The best position that each particle has experienced in the entire search space (p-best)

3) The best position experienced by the whole population (g-best)

The first step in applying a meta-heuristic algorithm is to define how the answers to the problem are represented. Then, a given number of particles is generated as the initial population (p0). A velocity vector is assigned to each population member and is set to zero at the beginning of the algorithm. The answers are then evaluated. At this point, the best position that any particle has experienced is its current position, so the current position of each particle is recorded as its p-best. After that, the non-dominated members of the population are identified and kept in an external archive. Then, based on the members of the external archive, the objective space of the problem (phenotype space) is divided into a grid, and the index of the grid cell (house) in which each archive member is located is identified. Each member of the population chooses a leader from the members of the external archive. In this algorithm, the selection is based on regions rather than on individuals. In other words, instead of deciding directly which member of the external archive to choose, the space is divided into cells and a cell is chosen first, in such a way that cells with fewer members have a higher probability of being chosen. Then, one of the members of that cell is chosen at random. The velocity and position of the particles in the next step are updated and evaluated according to the following equations:

where v(t) and x(t) are the current velocity and position of the particle, v(t + 1) and x(t + 1) are the updated velocity and position of the particle, respectively, and p(t) is the particle's p-best. In the next step, p-best is updated according to the new position as follows:
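A common form of these two updates is sketched below in Python. It is an illustration under assumptions rather than the paper's exact equations: the velocity update uses an inertia weight w and acceleration coefficients c1 and c2 (hypothetical parameter names), r1 and r2 are uniform random numbers drawn per dimension, the leader is the archive member chosen for this particle, and all objectives are minimized. For p-best, the new position replaces the old one if it dominates it, is rejected if it is dominated, and otherwise one of the two is kept at random.

```python
import random


def dominates(a, b):
    """True if a is no worse than b in every objective and better in one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def update_particle(x, v, pbest, leader, w, c1, c2, rng):
    """Return the new position and velocity of one particle."""
    new_x, new_v = [], []
    for xi, vi, pi, gi in zip(x, v, pbest, leader):
        r1, r2 = rng.random(), rng.random()
        # inertia + pull toward own best + pull toward the chosen leader
        nv = w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (gi - xi)
        new_v.append(nv)
        new_x.append(xi + nv)
    return new_x, new_v


def update_pbest(pbest_x, pbest_f, x, f, rng):
    """Update the personal best using Pareto dominance."""
    if dominates(f, pbest_f):
        return x, f
    if dominates(pbest_f, f):
        return pbest_x, pbest_f
    # Neither dominates: keep one of the two at random.
    return (x, f) if rng.random() < 0.5 else (pbest_x, pbest_f)


rng = random.Random(0)
x, v = [1.0, 1.0], [0.0, 0.0]
x, v = update_particle(x, v, pbest=[0.5, 1.5], leader=[0.0, 2.0],
                       w=0.4, c1=1.0, c2=1.0, rng=rng)
print(x, v)
```

The particle moves toward a random blend of its own best position and the leader, which is how information from the archive spreads through the population.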

The particle swarm optimization (PSO) algorithm converges very quickly. Therefore, it is necessary to slow this convergence to ensure that the algorithm explores the search space adequately. For this reason, mutation is applied at a relatively high rate at the beginning of the algorithm, and the rate is then gradually decreased. After a particle is mutated, its position changes, so the new position must be evaluated and the particle's personal best must be updated. As a result, new particles may be created that dominate other particles. These particles are added to the external archive, and the dominated members are removed from it. Then, the objective space of the problem is divided into a grid again, and the indexes of the grid cells in which the members of the external archive are located are identified. If the archive is full, some members are removed. This removal works in the opposite way to leader selection: members in more crowded cells are more likely to be removed than members in less populated cells. Finally, the stopping condition of the algorithm is checked: if it is satisfied, the algorithm stops; otherwise, a leader is selected from among the archive members, and the algorithm is repeated.
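The grid bookkeeping behind leader selection and archive truncation can be sketched in Python as follows. This is a minimal illustration under assumptions: the grid has the same number of divisions on every objective axis, leader selection weights each occupied cell by the inverse of its member count, and deletion weights each cell by its member count. These particular weights, the function names, and the sample archive are hypothetical choices that reproduce the stated preference for sparse cells (leaders) and crowded cells (removal).

```python
def grid_index(f, lower, upper, n_div):
    """Cell index of objective vector f on an n_div-per-axis grid."""
    idx = []
    for fi, lo, hi in zip(f, lower, upper):
        if hi == lo:
            idx.append(0)
            continue
        k = int((fi - lo) / (hi - lo) * n_div)
        idx.append(min(max(k, 0), n_div - 1))  # clamp boundary values
    return tuple(idx)


def cell_counts(archive_f, lower, upper, n_div):
    """Number of archive members in each occupied cell."""
    counts = {}
    for f in archive_f:
        cell = grid_index(f, lower, upper, n_div)
        counts[cell] = counts.get(cell, 0) + 1
    return counts


def selection_probabilities(counts, favour_crowded):
    """Roulette-wheel probability of picking each occupied cell.

    favour_crowded=False: leader selection (sparse cells preferred).
    favour_crowded=True: deletion when the archive is full.
    """
    weights = {c: (float(n) if favour_crowded else 1.0 / n)
               for c, n in counts.items()}
    total = sum(weights.values())
    return {c: w / total for c, w in weights.items()}


# Hypothetical archive in a normalised two-objective space.
archive_f = [(0.1, 0.9), (0.15, 0.85), (0.2, 0.95), (0.9, 0.1)]
counts = cell_counts(archive_f, (0.0, 0.0), (1.0, 1.0), n_div=2)
print(selection_probabilities(counts, favour_crowded=False))
print(selection_probabilities(counts, favour_crowded=True))
```

In this example the isolated member is three times as likely as the crowded cell to supply a leader, while the crowded cell is three times as likely to lose a member when the archive overflows.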

3.5 Pareto multi-objective envelope-based selection algorithm II

PESA II is one of the most well-known multi-objective algorithms. This algorithm, which uses genetic algorithm (GA) operators to produce new responses, was proposed in 2001 by Corne, Jerram [32]. The parameters and steps of this algorithm are as below:

- NE: Maximum size of the archive E of non-dominated answers
- NP: Population size
- N: Number of grid divisions on each objective-function axis

- Step 1. Start with a random initial population (P0), set the external archive E0 to empty, and set the counter t = 0.
- Step 2. Divide the objective space into n^k hypercubes, where n is the number of grid divisions on each objective-function axis and k is the number of objectives.
- Step 3. Integrate the archive of non-dominated answers Et with the new answers from Pt, treating each new answer as follows:
  - Case 1: If the new answer is dominated by at least one of the answers in the archive Et, discard the new answer.
  - Case 2: If the new answer dominates one or more answers in Et, remove the dominated answers from the archive, add the new answer to Et, and update the members of the hypercubes.
  - Case 3: If the new answer is not dominated by any answer in Et and does not dominate any answer in Et, add it to Et. If |Et| = NE + 1, select a hypercube at random (using a roulette wheel so that more crowded hypercubes are more likely to be selected), select one answer in it at random, delete it, and update the members of the hypercubes.
- Step 4. If the stop criterion is met, stop and report the final Et.
- Step 5. Set Pt = ∅ and select answers from Et for recombination/crossover and mutation according to the density of the hypercubes. This selection uses a roulette wheel in which less populated hypercubes are more likely to be selected. Use recombination/crossover and mutation to generate NP children and copy them into Pt+1.
- Step 6. Set t to t + 1 and go to Step 3.

The same method of recombination/crossover and mutation is applied in this method.
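The archive update of Step 3 can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: all objectives are minimized, answers are represented directly by their objective vectors, and the names `dominates`, `cell_of`, and `update_archive` as well as the grid bounds `lows` and `highs` are hypothetical and do not come from the original implementation.

```python
import random
from collections import defaultdict


def dominates(a, b):
    """True if objective vector a Pareto-dominates b (minimization assumed)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def cell_of(point, lows, highs, n):
    """Index of the hypercube that contains `point`, with n divisions per axis."""
    idx = []
    for x, lo, hi in zip(point, lows, highs):
        width = (hi - lo) / n or 1.0  # guard against a degenerate axis
        idx.append(min(n - 1, max(0, int((x - lo) / width))))
    return tuple(idx)


def update_archive(archive, candidate, max_size, lows, highs, n, rng=random):
    """Apply Cases 1-3 of Step 3 to one candidate and return the new archive."""
    # Case 1: the candidate is dominated by an archived answer, so discard it.
    if any(dominates(member, candidate) for member in archive):
        return archive
    # Case 2: drop every archived answer the candidate dominates, then add it.
    # (In Case 3 nothing is dropped and the candidate is simply added.)
    archive = [m for m in archive if not dominates(candidate, m)]
    archive.append(candidate)
    # Overflow: pick a hypercube by roulette wheel weighted by occupancy
    # (crowded cells are more likely) and delete one random answer from it.
    if len(archive) > max_size:
        cells = defaultdict(list)
        for m in archive:
            cells[cell_of(m, lows, highs, n)].append(m)
        keys = list(cells)
        chosen = rng.choices(keys, weights=[len(cells[k]) for k in keys])[0]
        archive.remove(rng.choice(cells[chosen]))
    return archive
```

Selection for reproduction in Step 5 would use the same cell bookkeeping with the weights inverted, so that sparsely populated hypercubes are favored.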

3.6 Multi-objective evolutionary algorithm based on decomposition

In 2007, Zhang and Li [33] introduced MOEA/D as an evolutionary algorithm. The algorithm takes a multi-objective problem as input, decomposes it into a number of sub-problems, and optimizes them simultaneously. In this method, a weight vector is defined for each sub-problem, and the objective functions are aggregated into a single objective function using this weight vector. The number of sub-problems is usually set equal to the population size, so each member of the population represents the answer generated for one aggregation vector. Theoretically, each sub-problem provides one answer on the Pareto front at the end of the search. In each generation, the population consists of the best answers found so far for each sub-problem. Neighbor relations between sub-problems are defined based on the distance between their aggregation vectors. During the search, a new answer for each sub-problem is generated through the cooperation of the members of its neighborhood. This stage is called the Cooperation stage. The new answer is then offered to every sub-problem in the current neighborhood, where it is evaluated with that sub-problem’s own weight vector. If it is better than the neighbor’s current answer, it replaces that answer. This stage is called the Competition stage. The cooperation and competition stages are carried out for all sub-problems, so information is constantly exchanged between neighborhoods in the MOEA/D algorithm. Each sub-problem is optimized using only the data of the sub-problems located in its neighborhood. Two well-known methods for decomposing multi-objective problems are the weighted sum method and the weighted Tchebycheff method.
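The two decomposition methods and the competition stage can be sketched in Python as follows. This is a minimal sketch, assuming minimization, answers represented by their objective vectors, and a reference point `z` holding the best value found for each objective; the function names are hypothetical and the code is not the implementation used in this study.

```python
def weighted_sum(f, w):
    """Weighted sum aggregation of objective vector f with weight vector w."""
    return sum(wi * fi for wi, fi in zip(w, f))


def tchebycheff(f, w, z):
    """Weighted Tchebycheff aggregation relative to the reference point z."""
    return max(wi * abs(fi - zi) for wi, fi, zi in zip(w, f, z))


def neighbors(weights, i, size):
    """Indices of the `size` weight vectors closest (Euclidean) to weights[i]."""
    def dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5
    order = sorted(range(len(weights)), key=lambda j: dist(weights[i], weights[j]))
    return order[:size]


def compete(population, child, neighborhood, weights, z):
    """Competition stage: the child replaces each neighbor it improves on,
    judged with that neighbor's own weight vector."""
    for j in neighborhood:
        if tchebycheff(child, weights[j], z) < tchebycheff(population[j], weights[j], z):
            population[j] = child
    return population
```

For example, with weight vectors (0, 1), (0.5, 0.5), and (1, 0), the neighborhood of size 2 of the first sub-problem contains the first two vectors, and a child produced there competes only against the answers of those two sub-problems.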

3.7 Multi-objective invasive weed optimization

The multi-objective invasive weed optimization was developed by Kundu et al. [34]. The invasive weed algorithm is an optimization algorithm inspired by the behavior and growth of weeds in nature. By definition, a weed is a plant that grows in unwanted places, spreads among crops, and inhibits their growth. This simple and effective algorithm models the basic natural characteristics of weeds, such as seeding, growth, and the struggle for survival. The steps of this algorithm are as follows:

Determine the initial population: First, an initial population is generated. This population is random, finite, and scattered over the solution space of the problem.

Reproduction: In this optimization method, each member of the population produces seeds according to its fitness. The number of seeds a plant can produce varies linearly from the smallest possible number of seeds to the largest, so a better-adapted weed produces more seeds. The number of seeds produced is expressed as follows:

\[ seed_n = \frac{f - f_{min}}{f_{max} - f_{min}} \left( S_{max} - S_{min} \right) + S_{min} \]

where \( seed_n \) is the number of produced seeds, f is the fitness of the current invasive weed, \( f_{max} \) and \( f_{min} \) are the maximum and minimum fitness values of the current population, respectively, and \( S_{max} \) and \( S_{min} \) are the maximum and minimum possible numbers of seeds, respectively.

Spatial distribution

Here, the produced seeds are scattered randomly in the multidimensional space of the problem according to a normal distribution. Its mean is zero, and its standard deviation varies between stages, which ensures that the randomly distributed seeds stay close to their parent plant. The standard deviation (σ) of the normal distribution decreases in each step from the defined initial value \( \sigma_{initial} \) to the final value \( \sigma_{final} \). These parameters and the standard deviation are related as follows:

\[ \sigma_{iter} = \frac{\left( iter_{max} - iter \right)^n}{\left( iter_{max} \right)^n} \left( \sigma_{initial} - \sigma_{final} \right) + \sigma_{final} \]

where iter is the current iteration, \( iter_{max} \) is the maximum number of iterations, \( \sigma_{iter} \) is the standard deviation at the current step, and n is the nonlinear modulation index.

Competitive elimination

In the invasive weed algorithm, after several iterations the number of plants in the colony reaches its maximum (Pmax) through reproduction, and a mechanism is then used to remove weak plants. Once the maximum allowed number is reached, each plant still produces new seeds according to the method described in the previous steps, and these seeds are dispersed in the space. When all the seeds have been distributed, each one is given a score. In the last step, the seeds with lower scores are removed to keep the population at the maximum size. These steps are repeated until the population gradually converges to the optimal solution.

The main advantage of the invasive weed algorithm over other meta-heuristic algorithms is that, owing to the concept of seed distribution and growth, it can find a wide and highly dispersed range of feasible solutions. Over successive iterations, it then covers the feasible space thoroughly. Overall, by covering as much of the feasible space as possible, this algorithm can be expected to find the optimal solution of the problem without being trapped in local optima, unlike some other algorithms. Following the behavior of weeds in nature, the steps of the invasive weed optimization algorithm are as follows:

- Step 1: Spread the seeds in the desired space
- Step 2: Seed growth according to desirability and environmental distribution
- Step 3: Survival of the more desirable weeds (competitive elimination)
- Step 4: Continue the process until reaching the most desirable plants
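The four steps can be sketched in Python for a single-objective case. This is a simplified sketch, not the multi-objective implementation of this study: it assumes that a higher scalar fitness is better, uses the linear seed-count rule and the nonlinear decay of the standard deviation that are standard for invasive weed optimization, and all function names and default parameter values are hypothetical. The multi-objective variant replaces the scalar fitness with a dominance-based ranking, which is not shown here.

```python
import random


def seed_count(f, f_min, f_max, s_min, s_max):
    """Number of seeds, growing linearly from s_min to s_max with fitness."""
    if f_max == f_min:  # all weeds equally fit
        return s_max
    ratio = (f - f_min) / (f_max - f_min)
    return int(s_min + ratio * (s_max - s_min))


def sigma_at(iteration, iter_max, sigma_initial, sigma_final, n):
    """Standard deviation of seed dispersal at the given iteration."""
    frac = (iter_max - iteration) / iter_max
    return frac ** n * (sigma_initial - sigma_final) + sigma_final


def iwo_step(colony, fitness, iteration, iter_max, p_max,
             s_min=0, s_max=5, sigma_initial=1.0, sigma_final=0.01, n=2,
             rng=random):
    """One iteration: reproduction, spatial distribution, elimination."""
    scores = [fitness(w) for w in colony]
    f_min, f_max = min(scores), max(scores)
    sigma = sigma_at(iteration, iter_max, sigma_initial, sigma_final, n)
    offspring = []
    for weed, f in zip(colony, scores):
        for _ in range(seed_count(f, f_min, f_max, s_min, s_max)):
            # Seeds land near the parent: zero-mean normal perturbation.
            offspring.append([x + rng.gauss(0.0, sigma) for x in weed])
    # Competitive elimination: keep only the p_max fittest plants.
    merged = sorted(colony + offspring, key=fitness, reverse=True)
    return merged[:p_max]
```

Because parents compete with their own seeds in the elimination step, the best fitness in the colony never decreases from one iteration to the next in this sketch.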

3.8 Multi-objective grey wolf optimizer

Mirjalili et al. [35] proposed the Multi-Objective Grey Wolf Optimizer (MOGWO) algorithm for solving multi-objective problems. The algorithm uses the hunting method of grey wolves to optimize problems. Wolves live in groups, and the group leader, the alpha, is responsible for making decisions such as when to attack. The hunt consists of the following three steps:

1. Tracking, chasing and approaching the prey
2. Pursuing, encircling, and harassing the prey until it stops moving
3. Attack towards the prey

To model the social behavior of wolves, a random population of solutions is generated. The fittest solution is called alpha (α), and the second and third best solutions are called beta (β) and delta (δ), respectively. The other candidate solutions are considered omega (ω) wolves. The grey wolf algorithm uses the α, β, and δ solutions to guide the hunt (optimization), and the ω solutions follow them. As mentioned above, grey wolves encircle the prey during the hunt. To model the encircling behavior mathematically, the following equations are proposed:

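\[ \overrightarrow{D} = \left| \overrightarrow{C} \cdot {\overrightarrow{X}}_P(t) - \overrightarrow{X}(t) \right| \]

\[ \overrightarrow{X}(t+1) = {\overrightarrow{X}}_P(t) - \overrightarrow{A} \cdot \overrightarrow{D} \]

\[ \overrightarrow{A} = 2\overrightarrow{a} \cdot {\overrightarrow{r}}_1 - \overrightarrow{a} \]

\[ \overrightarrow{C} = 2 \cdot {\overrightarrow{r}}_2 \]

- t: the current iteration
- \( \overrightarrow{A} \), \( \overrightarrow{C} \): coefficient vectors
- \( {\overrightarrow{X}}_P(t) \): the position vector of the prey
- \( \overrightarrow{X}(t) \): the position vector of a grey wolf
- r1, r2: random vectors with components in [0, 1]
- \( \overrightarrow{a} \): a vector whose components decrease linearly from 2 to 0 over the course of the iterations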
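The encircling behavior can be sketched in Python with the standard grey wolf optimizer update, in which A = 2a·r1 − a and C = 2·r2 are drawn per dimension. This is a minimal sketch under stated assumptions: the function names are hypothetical, and the averaging of the positions suggested by the alpha, beta, and delta wolves in `gwo_move` follows the usual GWO convention rather than anything stated in this section.

```python
import random


def a_at(t, t_max):
    """Control parameter a, decreasing linearly from 2 to 0."""
    return 2.0 * (1 - t / t_max)


def encircle(x, x_prey, a, rng=random):
    """Move wolf x toward (or around) x_prey, dimension by dimension."""
    new = []
    for xi, pi in zip(x, x_prey):
        r1, r2 = rng.random(), rng.random()
        A = 2 * a * r1 - a      # coefficient in [-a, a]
        C = 2 * r2              # coefficient in [0, 2]
        D = abs(C * pi - xi)    # distance to the (perturbed) prey
        new.append(pi - A * D)
    return new


def gwo_move(x, alpha, beta, delta, a, rng=random):
    """New position: mean of the moves suggested by the three leaders."""
    x1 = encircle(x, alpha, a, rng)
    x2 = encircle(x, beta, a, rng)
    x3 = encircle(x, delta, a, rng)
    return [(p + q + r) / 3 for p, q, r in zip(x1, x2, x3)]
```

When a reaches 0 at the end of the run, A is 0 and each wolf lands exactly on the average of the three leaders, which corresponds to the final attack on the prey.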

To develop the MOGWO algorithm, two new components are added to the Grey Wolf Optimizer (GWO) algorithm. The first component is an archive, which is responsible for saving the non-dominated Pareto optimal solutions, and the second is a leader selection strategy, which helps to choose the alpha, beta, and delta wolves from the archive. The archive is a storage unit that saves the non-dominated solutions; it controls which solutions may enter and what happens when the archive is full. Leader selection uses the roulette wheel method to choose non-dominated solutions from the least crowded regions of the archive and proposes them as the alpha, beta, and delta wolves.

4 Parameters tuning

Before solving the problem on a larger scale using metaheuristic algorithms, the parameters of the MOPSO, NSGA II, MOEA/D, MOIWO, PESA II, and MOGWO algorithms are adjusted using the Taguchi method. In the Taguchi method, the appropriate factors are identified first, followed by the levels of each factor. Next, the appropriate test design for these control factors is determined. Once the test design is determined, the experiments are performed and then analyzed to find the best combination of parameters. In this study, three levels are considered for each factor according to Table 3. For each algorithm, the design and execution of the experiment are determined according to the number of factors and their levels.

Given that the proposed model has three objective functions, the value of each experiment must first be calculated from Eq. (57). In this equation, the numerator consists of the indicators used to compare metaheuristic algorithms, including the average of the first to third objective functions, Number of Pareto Solution, Maximum Spread, Spacing Metric, Mean Ideal Distance, and Cpu-time. After determining the value of each experiment, the unscaled value of each experiment (RPD) is calculated from Eq. (58) to analyze the design of the Taguchi experiment.

After the value of each experiment is calculated and unscaled for each algorithm, the data are entered into Minitab 16 software for analysis. In this method, the parameter levels with the maximum signal-to-noise (S/N) ratio are selected. Figures 2–7 show the optimum values of the parameters of the metaheuristic algorithms obtained by the Taguchi method (Table 4).

5 Result and discussion

First, the model is validated. Then, the model is solved for small-sized problems with GAMS software, and large-sized problems are solved with the meta-heuristic algorithms. Finally, the most efficient algorithm is determined.

5.1 Model validation

In this section, to assess the model’s validity, a sample is designed considering Table 5 and the approximate size of the problem parameters according to Table 6. Due to the lack of access to real-world data, random data based on a uniform distribution function are used to determine the values of the parameters.

After designing the problem and solving the model using GAMS software and the CPLEX solver, 4 efficient answers are obtained according to Table 7. The values of the efficient answers show that as the profit from the network design increases, both the amount of greenhouse gas emissions and the COVID-19 transmission risk increase because of heavier vehicle traffic.

After verifying the validity of the model, a sensitivity analysis of the problem is performed under different rates of uncertainty to examine their effect on the values of the objective functions.

According to Table 8, as the uncertainty rate rises, the amount of waste transferred to recycling and incineration centers increases. As a result, the revenue from energy sales (first objective function), the amount of greenhouse gas emissions (second objective function), and the risk of COVID-19 infection (third objective function), which all depend on the amount of waste, increase.

Because the problem is complex and NP-hard and GAMS software cannot solve larger-scale instances, the proposed algorithms were used to solve the problem. All six algorithms are coded in MATLAB software.

5.2 Comparison metrics

Since meta-heuristic methods are approximation algorithms for optimization problems and are random in nature, solving a problem with different methods might result in different answers. Hence, many researchers have paid attention to assessing the algorithms and choosing the proper one with the help of different indicators. Although convergence to the Pareto answers and providing density and distribution in the answer sets are both objectives of such a study, they are separate issues and, to some extent, contradictory objectives in multi-objective evolutionary algorithms. Consequently, there is no absolute criterion for judging the performance of the algorithms. After a review of the literature, the following indicators were selected to assess algorithm performance in this study.

Cpu-time

The Cpu-time indicator measures the execution time of the algorithm and is one of the most important indicators for comparing different algorithms. The lower the value of the Cpu-time indicator, the more efficient the algorithm is (provided the other indicators are equal).

Number of Pareto solutions

This indicator (NPS) shows the number of Pareto answers found by the algorithm. The higher the value of the NPS indicator, the more efficient the algorithm is.

Maximum spread

The maximum spread indicator equals the sum of the Euclidean distances between the first and last points of the Pareto fronts of the objective functions. The higher the maximum spread indicator, the more efficient the algorithm is.

Spacing metric

The spacing metric is equal to the standard deviation of the distances between non-dominated answers. In other words, the spacing metric calculates the relative distance between consecutive Pareto answers. The higher the spacing metric, the more efficient the algorithm is.

In this metric, N is the number of non-dominated answers, di is the minimum distance of a Pareto optimum answer from the other answers, \( \overline{d} \) is the mean value of di over all non-dominated answers, and \( {f}_t^i \) is the value of objective function t∈{1,…,T} for Pareto optimum answer i.

Mean ideal distance

The MID indicator calculates the mean distance of the Pareto points from the ideal answer. The ideal answer consists of the best possible value of each objective function over all applied algorithms. The lower the MID indicator, the more efficient the algorithm is.

Here, n is the number of Pareto points.
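The three distribution indicators can be computed with a short Python sketch. Since the literature contains several variants of these formulas, the forms below are assumptions rather than the exact equations of this study: the spacing metric is taken as the sample standard deviation of nearest-neighbor Manhattan distances, the mean ideal distance as the average Euclidean distance to a given ideal point, and the maximum spread as the Euclidean length of the diagonal spanned by the extreme values of each objective. The function names are hypothetical.

```python
import math


def spacing_metric(front):
    """Standard deviation of each point's distance to its nearest neighbor."""
    n = len(front)
    d = [
        min(sum(abs(a - b) for a, b in zip(p, q))
            for j, q in enumerate(front) if j != i)
        for i, p in enumerate(front)
    ]
    d_bar = sum(d) / n
    return math.sqrt(sum((d_bar - di) ** 2 for di in d) / (n - 1))


def mean_ideal_distance(front, ideal):
    """Average Euclidean distance of the Pareto points from the ideal point."""
    return sum(math.dist(p, ideal) for p in front) / len(front)


def maximum_spread(front):
    """Diagonal length of the box spanned by the front in objective space."""
    n_obj = len(front[0])
    return math.sqrt(sum(
        (max(p[t] for p in front) - min(p[t] for p in front)) ** 2
        for t in range(n_obj)
    ))
```

As a usage example, a front of evenly spaced points such as (0, 2), (1, 1), (2, 0) gives a spacing metric of zero, because every point is equally far from its nearest neighbor.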

5.3 Analysis of the first objective function

Based on the obtained results, the average of the first objective function increases linearly with the problem size. Also, the NSGA II algorithm obtained the highest average of the first objective function among the solving methods. A t-test at the 95% confidence level was used to evaluate whether the averages of the first objective function differ significantly among the proposed algorithms. If the P value of the test is less than 0.05, there is a significant difference between the averages of that indicator for the two compared algorithms.

Table 9 shows the statistical comparisons of the t-test on the averages of the first objective function at 95% confidence level.

According to the results of Table 9, the P value is higher than 0.05 for most pairs of algorithms, so there is no considerable difference between their averages of the first objective function. However, the P value for the MOGWO - PESA II pair is less than 0.05, so there is a considerable difference between the averages of the first objective function of these two algorithms.

5.4 Analysis of the second objective function

Based on the obtained results, the vehicle traffic has increased and, therefore, the amount of greenhouse gas emissions has increased with increasing the size of the problem. Also, according to the results, the MOPSO algorithm has obtained the lowest average of the second objective function among the solution methods.

According to the findings of Table 10, the P value for every pair of algorithms is higher than 0.05, suggesting that there is no considerable difference between the averages of the second objective function obtained by the metaheuristic algorithms.

5.5 Analysis of the third objective function

The results show that as the size of the problem increases, the amount of vehicle traffic has increased, thereby increasing the COVID-19 transmission risk. Also, according to the results, the NSGA II algorithm has obtained the lowest average of the third objective function among the solution methods.

According to Table 11, the P value for every pair of algorithms is higher than 0.05, indicating that there is no significant difference between the averages of the third objective function obtained by the metaheuristic algorithms.

5.6 Analysis of the number of Pareto solutions

The results show no specific trend in increasing or decreasing the number of Pareto solutions by increasing the problem size. Also, the NSGA II algorithm has obtained the highest average number of efficient answers among the solving methods.

According to the findings of Table 12, the P value is higher than 0.05 only for the NSGA II - PESA II, MOPSO - MOEA/D, MOGWO - NSGA II, and MOGWO - PESA II pairs, so there is no considerable difference between the average numbers of efficient answers of these pairs of algorithms.

5.7 Analysis of the maximum spread

The results indicate no specific trend in the maximum spread indicator as the size of the problem increases. Also, according to the results, the PESA II algorithm has the highest average maximum spread among the solving methods.

According to the findings of Table 13, the P value for every pair of algorithms is higher than 0.05, suggesting that there is no significant difference between the averages of maximum spread obtained by the metaheuristic algorithms.

5.8 Spacing metric analysis

According to the obtained findings, there is no specific trend in the spacing metric as the problem size increases. In addition, the MOIWO algorithm obtained the lowest average spacing metric among the solving methods.

According to Table 14, the P value for every pair of algorithms is higher than 0.05, and therefore there is no considerable difference between the averages of the spacing metric obtained by the metaheuristic algorithms.

5.9 Mean ideal distance analysis

According to the obtained findings, there is no specific trend in increasing or decreasing the mean ideal distance with increasing the problem size. Besides, the MOIWO algorithm has obtained the lowest average mean ideal distance among the solving methods.

According to Table 15, the P value for every pair of algorithms is higher than 0.05. Therefore, there is no considerable difference between the averages of mean ideal distance obtained by the metaheuristic algorithms.

5.10 Cpu-time analysis

Based on the obtained results, the average Cpu-time obtained from metaheuristic algorithms increases exponentially by increasing problem size. Also, the MOIWO algorithm has obtained the lowest average Cpu-time among the solving methods.

According to the results of Table 16, the P value is less than 0.05 for the NSGAII-MOPSO, NSGAII-MOIWO, MOIWO-MOEA/D, MOIWO-PESAII, MOGWO-NSGAII, MOGWO-MOPSO, MOGWO-MOEA/D, MOGWO-PESA II, and MOGWO-MOIWO pairs, so there is a considerable difference between the Cpu-time averages of these pairs of algorithms.

5.11 Determination of the most efficient algorithm

Since the individual indicators do not give a conclusive view of the performance of the metaheuristic algorithms in solving the three-objective hazardous waste problem, the TOPSIS method was applied to determine the most effective method. Table 17 presents the final scores obtained from the TOPSIS method considering 6 alternatives (NSGAII, MOPSO, MOEA/D, PESAII, MOIWO, and MOGWO algorithms) and 8 criteria (average of the first to third objective functions, Number of Pareto Solution, Maximum Spread, Spacing Metric, Mean Ideal Distance, and Cpu-time).

According to the results of Table 17, the MOIWO algorithm, with a score of 0.6254, is the most efficient algorithm in solving large-sized sample problems. The MOPSO, MOGWO, PESA II, MOEA/D, and NSGA II algorithms rank second to sixth, respectively, in solving large-sized sample problems of the proposed model.
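The TOPSIS ranking procedure can be sketched in Python as follows. This is a minimal sketch of the standard method, assuming vector normalization, user-supplied criterion weights, and a flag per criterion that marks it as a benefit (higher is better) or a cost (lower is better); the weights and decision matrix of this study are not reproduced here, and the function name `topsis` is hypothetical.

```python
import math


def topsis(matrix, weights, benefit):
    """Closeness score of each alternative (row); a higher score is better."""
    n_crit = len(weights)
    # Vector-normalize each criterion column, then apply the weights.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) or 1.0
             for j in range(n_crit)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n_crit)]
         for row in matrix]
    # Positive and negative ideal points depend on the criterion type.
    best = [max(r[j] for r in v) if benefit[j] else min(r[j] for r in v)
            for j in range(n_crit)]
    worst = [min(r[j] for r in v) if benefit[j] else max(r[j] for r in v)
             for j in range(n_crit)]
    scores = []
    for r in v:
        d_best = math.dist(r, best)
        d_worst = math.dist(r, worst)
        total = d_best + d_worst
        scores.append(d_worst / total if total else 0.5)
    return scores
```

In the setting of this section, each row would hold the eight indicator values of one algorithm, with the first objective function, Number of Pareto Solution, and Maximum Spread marked as benefit criteria and Cpu-time and Mean Ideal Distance marked as cost criteria, following the directions stated in Sect. 5.2.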

6 Conclusion

This paper proposed a multi-objective location-routing problem for the transportation of hazardous waste. Hazardous wastes are one of the major causes of environmental pollution, affecting soil, surface water, and groundwater both directly and indirectly. For this reason, the disposal of these wastes must be managed properly. In recent years, environmental movements have drawn attention to waste management. Moreover, burning waste can not only generate income for countries but also reduce the amount of waste sent for disposal. Furthermore, given the critical conditions of societies facing the COVID-19 pandemic and the exponential growth of hazardous wastes, this study proposed a comprehensive network covering hazardous wastes from generation to disposal, in which economic, social, and environmental issues were considered for sustaining a healthy society. After investigating the theoretical foundations and research background and proposing the initial chromosomes, the robust-fuzzy optimization approach was used to control the uncertain parameters of the problem. The model was validated using a small-scale sample in GAMS software. After adjusting the algorithm parameters using the Taguchi method, the model was solved on a larger scale using the meta-heuristic algorithms. Next, the sets of efficient answers of the algorithms were compared with each other using the average of the first to third objective functions, Number of Pareto Solution, Maximum Spread, Spacing Metric, Mean Ideal Distance, and Cpu-time indicators. According to the results, the Multi-Objective Invasive Weed Optimization algorithm was identified as the most efficient algorithm in solving large-scale sample problems. The results show that the recent algorithms perform better than the old ones and give favorable results in some important indicators, such as time and number of Pareto solutions.
In addition to the conclusions above, this study demonstrates the application of the Multi-Objective Invasive Weed Optimization and Multi-Objective Grey Wolf Optimizer methods to location-routing problems, which is scarce in the literature and can be a new subject for interested researchers.

From the obtained results, managers can use the model to manage costs and benefits and estimate them in pessimistic and uncertain situations. Also, the results help decision makers to control and manage the environmental pollution and damages. On the other hand, the recycling system and energy production methods can be developed to reduce the amount of disposal wastes. In future studies, other meta-heuristic algorithms can be applied to solve the multi-objective model. Also, vehicle routing problems can be added to the model, and stochastic programming can be considered in the model. Furthermore, considering social aspects in the hazardous waste management problems can improve the efficiency and comprehensiveness of the results.

References

Ghoushchi SJ, Dorosti S, Moghaddam SH (2020) Qualitative and quantitative analysis of waste management literature from 2000 to 2015. Int J Environ Waste Manag 26(4):471–486

Jafarzadeh-Ghoushchi S, Dorosti S (2017) Effects of exposure to a variety of waste on human health-a review. J Liaquat Univ Med Health Sci 16(1):3–9

Rabbani M, Nikoubin A, Farrokhi-Asl H (2021) Using modified metaheuristic algorithms to solve a hazardous waste collection problem considering workload balancing and service time windows. Soft Comput 25(3):1885–1912

Fardi K, Jafarzadeh-Ghoushchi S, Hafezalkotob A (2019) An extended robust approach for a cooperative inventory routing problem. Expert Syst Appl 116:310–327

Mahajan J, Vakharia AJ (2016) Waste management: a reverse supply chain perspective. Vikalpa 41(3):197–208

Hu H, Li X, Zhang Y, Shang C, Zhang S (2019) Multi-objective location-routing model for hazardous material logistics with traffic restriction constraint in inter-city roads. Comput Ind Eng 128:861–876

Tirkolaee EB, Goli A, Mardani A (2021) A novel two-echelon hierarchical location-allocation-routing optimization for green energy-efficient logistics systems. Ann Oper Res:1–29

Tirkolaee EB, Abbasian P, Weber G-W (2021) Sustainable fuzzy multi-trip location-routing problem for medical waste management during the COVID-19 outbreak. Sci Total Environ 756:143607

Babaee Tirkolaee E, Aydın NS (2021) A sustainable medical waste collection and transportation model for pandemics. Waste Manag Res:0734242X211000437

Rabbani M, Heidari R, Farrokhi-Asl H, Rahimi N (2018) Using metaheuristic algorithms to solve a multi-objective industrial hazardous waste location-routing problem considering incompatible waste types. J Clean Prod 170:227–241

Farahbakhsh A, Forghani MA (2019) Sustainable location and route planning with GIS for waste sorting centers, case study: Kerman, Iran. Waste Manag Res 37(3):287–300

Asefi H, Lim S, Maghrebi M, Shahparvari S (2019) Mathematical modelling and heuristic approaches to the location-routing problem of a cost-effective integrated solid waste management. Ann Oper Res 273(1):75–110

Kargar S, Pourmehdi M, Paydar MM (2020) Reverse logistics network design for medical waste management in the epidemic outbreak of the novel coronavirus (COVID-19). Sci Total Environ 746:141183

Araee E, Manavizadeh N, Aghamohammadi Bosjin S (2020) Designing a multi-objective model for a hazardous waste routing problem considering flexibility of routes and social effects. J Ind Prod Eng 37(1):33–45

Ahlaqqach M et al (2020) Multi-objective optimization of heterogeneous vehicles routing in the case of medical waste using genetic algorithm. In: International conference on smart applications and data analysis. Springer

Saeidi A, Aghamohamadi-Bosjin S, Rabbani M (2020) An integrated model for management of hazardous waste in a smart city with a sustainable approach. Environ Dev Sustain:1–26

Alumur S, Kara BY (2007) A new model for the hazardous waste location-routing problem. Comput Oper Res 34(5):1406–1423

Xie Y, Lu W, Wang W, Quadrifoglio L (2012) A multimodal location and routing model for hazardous materials transportation. J Hazard Mater 227:135–141

Toumazis I, Kwon C (2013) Routing hazardous materials on time-dependent networks using conditional value-at-risk. Transp Res Part C Emerg Technol 37:73–92

Chauhan A, Singh A (2016) A hybrid multi-criteria decision making method approach for selecting a sustainable location of healthcare waste disposal facility. J Clean Prod 139:1001–1010

Jabbarzadeh A, Darbaniyan F, Jabalameli MS (2016) A multi-objective model for location of transfer stations: case study in waste management system of Tehran. J Ind Syst Eng 9(1):109–125

Asgari N, Rajabi M, Jamshidi M, Khatami M, Farahani RZ (2017) A memetic algorithm for a multi-objective obnoxious waste location-routing problem: a case study. Ann Oper Res 250(2):279–308

Yilmaz O, Kara BY, Yetis U (2017) Hazardous waste management system design under population and environmental impact considerations. J Environ Manag 203:720–731

Mantzaras G, Voudrias EA (2017) An optimization model for collection, haul, transfer, treatment and disposal of infectious medical waste: application to a Greek region. Waste Manag 69:518–534

Sultana I et al (2017) A-multi objective location-routing problem for the hazardous waste management system. J Modern Sci Technol 5(1):66–77

Aydemir-Karadag A (2018) A profit-oriented mathematical model for hazardous waste locating-routing problem. J Clean Prod 202:213–225

Wang J, Shen J, Ye D, Yan X, Zhang Y, Yang W, Li X, Wang J, Zhang L, Pan L (2020) Disinfection technology of hospital wastes and wastewater: Suggestions for disinfection strategy during coronavirus Disease 2019 (COVID-19) pandemic in China. Environ Pollut 262:114665

Pishvaee MS, Razmi J, Torabi SA (2012) Robust possibilistic programming for socially responsible supply chain network design: a new approach. Fuzzy Sets Syst 206:1–20

Gen M, Altiparmak F, Lin L (2006) A genetic algorithm for two-stage transportation problem using priority-based encoding. OR Spectr 28(3):337–354

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Coello CAC, Pulido GT, Lechuga MS (2004) Handling multiple objectives with particle swarm optimization. IEEE Trans Evol Comput 8(3):256–279

Corne DW et al (2001) PESA-II: Region-based selection in evolutionary multiobjective optimization. In: Proceedings of the 3rd annual conference on genetic and evolutionary computation

Zhang Q, Li H (2007) MOEA/D: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11(6):712–731

Kundu D, Suresh K, Ghosh S, Das S, Panigrahi BK, Das S (2011) Multi-objective optimization with artificial weed colonies. Inf Sci 181(12):2441–2454

Mirjalili S, Saremi S, Mirjalili SM, Coelho LS (2016) Multi-objective grey wolf optimizer: a novel algorithm for multi-criterion optimization. Expert Syst Appl 47:106–119

Cite this article

Raeisi, D., Jafarzadeh Ghoushchi, S. A robust fuzzy multi-objective location-routing problem for hazardous waste under uncertain conditions. Appl Intell 52, 13435–13455 (2022). https://doi.org/10.1007/s10489-022-03334-5
