Abstract

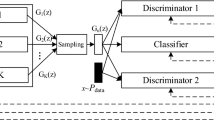

Generative adversarial networks (GANs) are one of the most widely used classes of generative models. GANs can learn complex multi-modal distributions and generate realistic samples. Despite their major success in generating synthetic data, GANs can suffer from an unstable training process and from mode collapse. In this paper, we propose a new GAN architecture called variance enforcing GAN (VARGAN), which incorporates a third network to introduce diversity in the generated samples. The third network measures the diversity of the generated samples, and this measure is used to penalize the generator's loss for low-diversity samples. The third network is trained on the available training data and on undesired distributions with limited modality. On a set of synthetic and real-world image data, VARGAN generates a more diverse set of samples than recent state-of-the-art models. Its high diversity, low computational complexity and fast convergence make VARGAN a promising model for alleviating mode collapse.
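The abstract states that the third network is trained on the available training data and on undesired distributions with limited modality. One possible way to build such a labelled training set for a 2D toy problem is sketched below. This is a hypothetical construction, not the paper's procedure: the grid layout, batch size, the range of 1 to `max_modes` modes for the limited-modality batches, and all function names are assumptions made for illustration.

```python
import random

def grid_centers(n_per_side, spacing=2.0):
    """Centres of an n x n grid of Gaussian modes around the origin (assumed layout)."""
    offset = (n_per_side - 1) / 2
    return [((i - offset) * spacing, (j - offset) * spacing)
            for i in range(n_per_side) for j in range(n_per_side)]

def draw_batch(centers, batch_size, std, rng):
    """Draw a batch of 2D points from an equal-weight Gaussian mixture over `centers`."""
    batch = []
    for _ in range(batch_size):
        cx, cy = rng.choice(centers)
        batch.append((rng.gauss(cx, std), rng.gauss(cy, std)))
    return batch

def diversity_training_set(centers, n_pairs, batch_size, max_modes, std, rng):
    """Build labelled batches for a diversity network (hypothetical construction).

    Label 1: a batch drawn from every mode, i.e. from the training distribution.
    Label 0: a batch drawn from 1..max_modes randomly chosen modes, i.e. an
    undesired distribution with limited modality.
    """
    data = []
    for _ in range(n_pairs):
        data.append((draw_batch(centers, batch_size, std, rng), 1))
        subset = rng.sample(centers, rng.randint(1, max_modes))
        data.append((draw_batch(subset, batch_size, std, rng), 0))
    return data

def modes_hit(batch, centers):
    """Number of distinct modes that are the nearest mode of at least one point."""
    nearest = {min(range(len(centers)),
                   key=lambda k: (x - centers[k][0]) ** 2 + (y - centers[k][1]) ** 2)
               for x, y in batch}
    return len(nearest)

rng = random.Random(0)
centers = grid_centers(6)  # 36 modes, matching the size of the 2D grid experiments
data = diversity_training_set(centers, n_pairs=10, batch_size=256,
                              max_modes=4, std=0.05, rng=rng)
for batch, label in data[:4]:
    print("label", label, "modes present:", modes_hit(batch, centers))
```

A learned network, rather than a hand-written statistic, would then be trained to separate label-1 from label-0 batches; how its output enters the generator's loss is defined in the main text.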

Appendices

Appendix A: Selection of MCR formulation and its parameters

We explored three formulations for the MCR values. Equation (5) defines a linear relationship between the MCR value and the percentage of covered modes.

Equation (6) models a relationship that converges early, rewarding the model with a low generator error.

Equation (7) models a relationship that avoids rewarding the generator too early, so that the generator stays motivated.

Figure 12 shows the trajectory of each formulation as a function of the percentage of covered modes.
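The three qualitative shapes described for the MCR formulations (linear, early-converging, and late-rewarding) can be illustrated with simple stand-in curves over the fraction `p` of covered modes. These functions are not Equations (5) to (7), which are defined in the main text and use the parameters L, S1 and S2; the exponent `k = 3` is an arbitrary choice made only to show the shapes.

```python
def mcr_linear(p):
    """Linear shape: the value grows in proportion to the covered-mode fraction p in [0, 1]."""
    return p

def mcr_early(p, k=3.0):
    """Concave shape: rises quickly and approaches 1 early (early convergence)."""
    return 1.0 - (1.0 - p) ** k

def mcr_late(p, k=3.0):
    """Convex shape: stays small until most modes are covered (delayed reward)."""
    return p ** k

for pct in (0, 25, 50, 75, 100):
    p = pct / 100
    print(f"{pct:>3}% covered  linear={mcr_linear(p):.3f}  "
          f"early={mcr_early(p):.3f}  late={mcr_late(p):.3f}")
```

All three curves start at 0 and end at 1; they differ only in how quickly the value approaches 1 as coverage grows, which is the property compared in the trajectories of the MCR formulations.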

First, we experimented with fixed values of L, S1 and S2 for each formulation on synthetic data with 36 modes. The results in Fig. 13 indicate that (6) provides a suitable trajectory of MCR values over the percentage of covered modes.

Finally, we varied L, S1 and S2 for (6) on the same synthetic data with 36 modes. Fig. 14 shows the hyperparameter optimization results.

Fig. 14 Comparison of different hyperparameters for (6) on synthetic 2D grid data with 36 modes, averaged over 5 repeats
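A grid search of this kind, with every (L, S1, S2) setting averaged over 5 repeats, can be sketched as follows. The `evaluate` callback is hypothetical: it stands for a full training run that returns a scalar score where lower is better. The `toy_evaluate` function is made up purely to exercise the code and says nothing about VARGAN's actual results.

```python
import itertools
import statistics

def sweep(evaluate, L_values, S1_values, S2_values, n_repeats=5):
    """Grid search over (L, S1, S2), averaging a score over n_repeats seeds.

    `evaluate(L, S1, S2, seed)` is a hypothetical callback that trains the model
    with the given MCR hyperparameters and returns a score where lower is better
    (negate metrics where higher is better, such as the number of covered modes).
    Requires n_repeats >= 2 so that a standard deviation can be computed.
    Returns the best setting and a dict mapping each setting to (mean, stdev).
    """
    results = {}
    for L, S1, S2 in itertools.product(L_values, S1_values, S2_values):
        scores = [evaluate(L, S1, S2, seed) for seed in range(n_repeats)]
        results[(L, S1, S2)] = (statistics.mean(scores), statistics.stdev(scores))
    best = min(results, key=lambda setting: results[setting][0])
    return best, results

# Toy stand-in for a training run, used only to show how the sweep is driven.
def toy_evaluate(L, S1, S2, seed):
    return (L - 2) ** 2 + abs(S1 - 5) / 10 + abs(S2 - 5) + 0.01 * seed

best, results = sweep(toy_evaluate, [1, 2], [5, 10], [5, 10])
print(best, results[best])
```

Reporting the standard deviation alongside the mean makes it possible to tell whether a better average is consistent across repeats or driven by a single run.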

Equation (6) with L = 1, S1 = 10 and S2 = 5 converges earlier on all metrics than the other formulations and hyperparameter settings. As shown in Fig. 12, this setting yields a low initial MCR value and converges to an MCR value of one at a moderate speed. In other words, assigning a large MCR value at low mode coverage, or using a fast-converging trajectory, does not improve VARGAN's performance.

Appendix B: Architecture of models

This section summarizes the GAN architectures in detail. Table 7 presents the feed-forward architecture used for all the datasets.

Table 8 shows the convolutional architecture used for the stacked MNIST dataset. For the other GAN variants, we modified this architecture to implement the specific details of each method.

Table 9 reports the convolutional architecture used for the electromagnetic engineered surface (EES) dataset. The number of convolutional layers is varied to produce both 9 × 9 and 19 × 19 designs.
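Changing the number of layers changes the output resolution because each transposed convolution enlarges its input by a fixed rule. The arithmetic below uses the standard output-size formula for a transposed convolution with dilation 1 (the one documented for PyTorch's `ConvTranspose2d`). The actual layer settings are in Table 9, which is not reproduced here; the 4 × 4 starting map, kernel size 3, stride 2 and zero padding are assumptions chosen so that one layer yields 9 × 9 and two layers yield 19 × 19.

```python
def conv_transpose_out(size, kernel, stride, padding=0, output_padding=0):
    """Spatial output size of a transposed convolution with dilation 1."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

def stack_output(size, n_layers, kernel=3, stride=2, padding=0):
    """Apply the same (assumed) transposed-convolution settings n_layers times."""
    for _ in range(n_layers):
        size = conv_transpose_out(size, kernel, stride, padding)
    return size

for n_layers in (1, 2):
    side = stack_output(4, n_layers)
    print(f"{n_layers} layer(s): {side} x {side}")
```

Under these assumed settings each extra layer maps a side of n to 2n + 1, so adding a single layer is enough to move from the 9 × 9 design to the 19 × 19 design.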

Appendix C: Detailed results

This section compares the performance of the GAN models over training epochs. Figures 15 and 16 show how the different performance metrics evolve over epochs for synthetic data with 8 and 25 modes. On the 8-mode data, VARGAN and GDPP appear to converge early, within the first epochs. VARGAN also converges early on the 25-mode data, with the remaining models trailing by a large margin.
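Mode-coverage metrics on ring and grid data of this kind are commonly computed by assigning each generated sample to its nearest mode centre. The sketch below assumes one common convention: a sample is "high quality" if it lies within three standard deviations of its nearest mode centre, and a mode counts as covered if it receives at least one high-quality sample. The ring radius, grid spacing, standard deviation and function names are assumptions; the exact metric definitions behind these figures are given in the main text.

```python
import math
import random

def ring_centers(n_modes, radius=2.0):
    """Centres of n_modes Gaussians evenly spaced on a circle (assumed layout)."""
    return [(radius * math.cos(2 * math.pi * k / n_modes),
             radius * math.sin(2 * math.pi * k / n_modes)) for k in range(n_modes)]

def grid_centers(n_per_side, spacing=2.0):
    """Centres of an n x n grid of Gaussians around the origin (assumed layout)."""
    offset = (n_per_side - 1) / 2
    return [((i - offset) * spacing, (j - offset) * spacing)
            for i in range(n_per_side) for j in range(n_per_side)]

def sample_mixture(centers, n, std, rng):
    """Draw n points from an equal-weight Gaussian mixture over `centers`."""
    points = []
    for _ in range(n):
        cx, cy = rng.choice(centers)
        points.append((rng.gauss(cx, std), rng.gauss(cy, std)))
    return points

def coverage_metrics(samples, centers, std, k=3.0):
    """Return (modes covered, fraction of high-quality samples)."""
    covered = set()
    high_quality = 0
    for x, y in samples:
        dists = [math.hypot(x - cx, y - cy) for cx, cy in centers]
        nearest = min(range(len(centers)), key=dists.__getitem__)
        if dists[nearest] <= k * std:  # within k standard deviations of its mode
            high_quality += 1
            covered.add(nearest)
    return len(covered), high_quality / len(samples)

rng = random.Random(0)
std = 0.05
grid = grid_centers(6)                               # 36 modes
real = sample_mixture(grid, 2000, std, rng)          # stands in for a good generator
collapsed = sample_mixture(grid[:4], 2000, std, rng) # stands in for mode collapse
print("real:", coverage_metrics(real, grid, std))
print("collapsed:", coverage_metrics(collapsed, grid, std))
```

Tracking both numbers over epochs separates the two failure modes: a collapsed generator can produce high-quality samples while covering few modes.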

Figure 17 shows the convergence of the performance metrics for the convolutional GAN models on the stacked MNIST data. MGGAN, VARGAN and PacVARGAN converge early on all metrics, and VARGAN and PacVARGAN also have lower standard deviations than MGGAN.
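In the usual stacked MNIST setup, each image stacks three MNIST digits as colour channels, giving 10 × 10 × 10 = 1000 modes, and a pretrained MNIST classifier labels each channel of a generated image. The sketch below assumes those per-channel predictions are already available (the classifier itself is not included) and computes two commonly reported quantities: the number of modes covered and KL(p_model || uniform) in nats. Whether these match the paper's exact metric definitions is an assumption.

```python
import itertools
import math
from collections import Counter

def stacked_mnist_mode(d_red, d_green, d_blue):
    """Map three per-channel digit labels (0-9) to one of 1000 modes (0-999)."""
    return 100 * d_red + 10 * d_green + d_blue

def mode_metrics(predicted_digits, n_modes=1000):
    """Return (modes covered, KL(p_model || uniform) in nats) for classifier outputs."""
    counts = Counter(stacked_mnist_mode(*digits) for digits in predicted_digits)
    total = sum(counts.values())
    kl = sum((c / total) * math.log((c / total) * n_modes) for c in counts.values())
    return len(counts), kl

# Every combination once: all 1000 modes covered, KL close to 0.
print(mode_metrics(itertools.product(range(10), repeat=3)))
# A fully collapsed generator: one mode, KL = log(1000).
print(mode_metrics([(1, 2, 3)] * 50))
```

Computing these per epoch and per repeat gives the mean curves and standard deviations that such convergence plots summarize.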

About this article

Cite this article

Mohammadjafari, S., Cevik, M. & Basar, A. VARGAN: variance enforcing network enhanced GAN. Appl Intell 53, 69–95 (2023). https://doi.org/10.1007/s10489-022-03199-8
