Abstract

To enhance the approximation and generalization ability of the classical artificial neural network (ANN) by employing the principles of quantum computation, a quantum-inspired neuron based on the controlled-rotation gate is proposed. In the proposed model, the discrete sequence input is represented by qubits, which, after being rotated by quantum rotation gates, serve as the control qubits of the controlled-rotation gate and control the rotation of the target qubit. The model output is described by the probability amplitude of state |1〉 in the target qubit. A quantum-inspired neural network with sequence input (QNNSI) is then designed by using quantum-inspired neurons in the hidden layer and classical neurons in the output layer. A learning algorithm for QNNSI is derived from the Levenberg–Marquardt algorithm. Experimental results on several benchmark problems show that, under certain conditions, QNNSI is clearly superior to the ANN.


1 Introduction

Over the last few decades, many researchers and publications have been dedicated to improving the performance of neural networks. Useful models for enhancing approximation and generalization abilities include: local linear radial basis function neural networks, which replace the connection weights of conventional radial basis function neural networks with a local linear model [1]; selective neural network ensembles with negative correlation, which employ a hierarchical pair competition-based parallel genetic algorithm to train the networks forming the ensemble [2]; polynomial-based radial basis function neural networks [3]; hybrid wavelet neural networks, which employ rough set theory to reduce the computational effort needed to build the network structure [4]; and simultaneous optimization of artificial neural networks, which employs a GA to optimize multiple architectural factors and feature transformations of the ANN in order to relieve the limitations of the conventional back-propagation algorithm [5].

Many neurophysiological experiments indicate that information processing in the biological nervous system has eight main characteristics: spatial aggregation, multi-factor aggregation, the temporal cumulative effect, the activation threshold, self-adaptability, excitation and inhibition, delay, and conduction and output [6]. By the definition of the M–P neuron model, the classical ANN simulates many characteristics of biological neurons well, such as spatial weighted aggregation, self-adaptability, and conduction and output, but it does not fully incorporate the temporal cumulative effect, because its outputs depend only on the inputs at the current moment and not on those at prior moments. In practical information processing, the memory and output of a biological neuron depend not only on the spatial aggregation of the input information but also on the temporal cumulative effect. Although the ANNs in Refs. [7–10] can process temporal sequences and simulate the delay characteristics of biological neurons, the temporal cumulative effect is not fully reflected in these models. A traditional ANN can only simulate a point-to-point mapping between the input space and the output space, so a single sample is described as a vector in each space. The temporal cumulative effect, however, means that multiple points in the input space are mapped to a single point in the output space. A single input sample is then described as a matrix in the input space, while a single output sample is still described as a vector in the output space. In this case, we say that the network has a sequence input.

Since Kak [11] first proposed the concept of quantum-inspired neural computation in 1995, quantum neural networks (QNNs) have attracted great attention from international scholars over the past decade, and a large number of novel techniques combining quantum computation and neural networks have been studied. For example, Purushothaman et al. [12] proposed a quantum neural network model with multilevel hidden neurons based on the superposition of quantum states. In Ref. [13], an attempt was made to reconcile the linear reversible structure of quantum evolution with the nonlinear irreversible dynamics of neural networks. Michiharu et al. [14] presented a novel learning model with qubit neurons, based on a quantum circuit, for the XOR problem and described how reducing the number of neurons influences learning. In Ref. [15], a new mathematical model of a quantum neural network was defined, building on Deutsch's model of quantum computational networks, which provides an approach for building scalable parallel computers. Fariel Shafee [16] proposed a neural network with quantum gated nodes and indicated that such a quantum network may share more advantageous features with biological systems than regular electronic devices do. In our previous work [17], we proposed a quantum BP neural network model with a learning algorithm based on single-qubit rotation gates and two-qubit controlled-rotation gates. In Ref. [18], we proposed a neural network model with quantum gated nodes and a smart algorithm for it, which shows superior performance compared with a standard error back-propagation network. Adenilton et al. [19] proposed a weightless model based on a quantum circuit. This model is not only quantum-inspired but is actually a quantum NN: it is based on Grover's search algorithm and can both perform quantum learning and simulate classical models.
However, like M–P neurons, none of the above QNN models fully incorporates the temporal cumulative effect, because a single input sample is either independent of time or related to a single moment rather than to a period of time.

In this paper, in order to fully simulate the information processing mechanisms of biological neurons and to enhance the approximation and generalization ability of ANN, we propose a qubit neural network model with sequence input based on controlled-rotation gates, called QNNSI. An important issue is how to define, configure and optimize artificial neural networks; Refs. [20, 21] study this question in depth. After repeated experiments, we opted for a three-layer model with one hidden layer, trained with the Levenberg–Marquardt algorithm. Considering both approximation ability and computational efficiency, this option is relatively ideal. The proposed approach is used to predict the annual mean sunspot number, and the experimental results indicate that, under certain conditions, QNNSI is clearly superior to the common ANN.

2 The qubit and quantum gate

2.1 Qubit

What is a qubit? Just as a classical bit has a state, either 0 or 1, a qubit also has a state. Two possible states for a qubit are |0〉 and |1〉, which, as you might guess, correspond to the states 0 and 1 of a classical bit. The notation | 〉 is called Dirac notation; it is the standard notation for states in quantum mechanics and is used throughout this paper. The difference between bits and qubits is that a qubit can be in a state other than |0〉 or |1〉: it can also be a linear combination of states, often called a superposition,

\[ \alpha|0\rangle+\beta|1\rangle=\cos\frac{\theta}{2}|0\rangle+{\rm e}^{{\rm i}\phi}\sin\frac{\theta}{2}|1\rangle,\qquad |\alpha|^{2}+|\beta|^{2}=1, \]

where the equality holds up to an unobservable global phase, and 0≤θ≤π, 0≤ϕ≤2π.

Therefore, unlike a classical bit, which can only be 0 or 1, a qubit resides in a vector space parametrized by the continuous variables θ and ϕ, so a continuum of states is allowed. The Bloch sphere representation is useful for thinking about qubits, since it provides a geometric picture of a qubit and of the transformations that can be applied to its state. Owing to the normalization condition, the qubit's state can be represented by a point on a sphere of unit radius, called the Bloch sphere. This sphere can be embedded in a three-dimensional Cartesian space with coordinates x=cosϕsinθ, y=sinϕsinθ, z=cosθ. By definition, a Bloch vector is a vector whose components (x,y,z) single out a point on the Bloch sphere; the angles θ and ϕ thus define a Bloch vector, as shown in Fig. 1(a), where the points corresponding to the following states are shown: \(|A\rangle=[1,0]^{\rm T}\), \(|B\rangle=[0,1]^{\rm T}\), \(|C\rangle=|E\rangle=[\frac{1}{\sqrt{2}},-\frac{1}{\sqrt{2}}]^{\rm T}\), \(|D\rangle=[\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}}]^{\rm T}\), \(|F\rangle=[\frac{1}{\sqrt{2}},-\frac{{\rm i}}{\sqrt{2}}]^{\rm T}\), \(|G\rangle=[\frac{1}{\sqrt{2}},\frac{{\rm i}}{\sqrt{2}}]^{\rm T}\). For convenience, in this paper we represent the qubit's state by a point on a circle of unit radius, as shown in Fig. 1(b). The correspondence between Figs. 1(a) and 1(b) can be written as

In this representation, any state of the qubit may be written as

\[ |\phi\rangle=\cos\theta\,|0\rangle+\sin\theta\,|1\rangle. \]

An n-qubit system has \(2^{n}\) computational basis states. For example, a 2-qubit system has the basis |00〉, |01〉, |10〉, |11〉. As in the single-qubit case, an n-qubit system can be in a superposition of the \(2^{n}\) basis states

\[ |\phi\rangle=\sum_{x\in\{0,1\}^{n}}a_{x}|x\rangle, \]

where \(a_{x}\) is called the probability amplitude of the basis state |x〉, and \(\{0,1\}^{n}\) denotes the set of strings of length n whose letters are each either zero or one. The condition that the probabilities \(|a_{x}|^{2}\) sum to one is expressed by the normalization condition

\[ \sum_{x\in\{0,1\}^{n}}|a_{x}|^{2}=1. \]

2.2 Quantum rotation gate

In quantum computation, logic functions are realized by applying a series of unitary transforms to qubit states, where the effect of each unitary transform is equivalent to that of a logic gate. Therefore, the quantum devices that perform logic transformations within a certain time interval are called quantum gates; they are the basis of quantum computation.

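The single-qubit rotation gate is defined as

\[ R(\theta)=\begin{bmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{bmatrix}. \]

Let the quantum state be \(|\phi\rangle=[\cos\varphi,\ \sin\varphi]^{\rm T}\); then |ϕ〉 is transformed by R(θ) as follows:

\[ R(\theta)|\phi\rangle=\begin{bmatrix}\cos(\varphi+\theta)\\ \sin(\varphi+\theta)\end{bmatrix}. \]

Clearly, R(θ) shifts the phase of |ϕ〉 by θ.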
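As a quick numerical check, the following Python sketch (assuming NumPy, real amplitudes as in the unit-circle picture of Fig. 1(b), and the standard 2×2 rotation matrix with rows [cos θ, −sin θ] and [sin θ, cos θ]) applies R(θ) to cos φ|0〉 + sin φ|1〉 and verifies both the angle shift and the unitarity condition of Section 2.3. The helper names `rotation_gate` and `qubit` are illustrative, not taken from the paper.

```python
import numpy as np


def rotation_gate(theta: float) -> np.ndarray:
    """Single-qubit rotation gate R(theta), assumed to be the standard 2x2 rotation matrix."""
    return np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta), np.cos(theta)]])


def qubit(phi: float) -> np.ndarray:
    """Real-amplitude qubit cos(phi)|0> + sin(phi)|1>."""
    return np.array([np.cos(phi), np.sin(phi)])


phi, theta = 0.3, 0.5
R = rotation_gate(theta)
rotated = R @ qubit(phi)

# R(theta) turns cos(phi)|0> + sin(phi)|1> into cos(phi+theta)|0> + sin(phi+theta)|1>.
print(np.allclose(rotated, qubit(phi + theta)))   # True
# Unitarity as defined in Section 2.3: (R^*)^T R = I.
print(np.allclose(R.conj().T @ R, np.eye(2)))     # True
```

Because successive rotations simply add their angles, a chain of rotation gates acting on one qubit is equivalent to a single rotation by the summed angle.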

2.3 Unitary operators and tensor products

A matrix U is said to be unitary if \((U^{*})^{\rm T}U=I\), where * denotes complex conjugation, T denotes transposition, and I denotes the identity matrix. Similarly, an operator U is unitary if \(U^{\dagger}U=I\), where \(U^{\dagger}\) is the adjoint of U. It is easily checked that an operator is unitary if and only if each of its matrix representations is unitary.

The tensor product is a way of putting vector spaces together to form larger vector spaces. This construction is crucial to understanding the quantum mechanics of multi-particle systems. Suppose V and W are vector spaces of dimensions m and n, respectively, and for convenience suppose that V and W are Hilbert spaces. Then V⊗W (read ‘V tensor W’) is an mn-dimensional vector space. The elements of V⊗W are linear combinations of ‘tensor products’ |v〉⊗|w〉 of elements |v〉 of V and |w〉 of W. In particular, if |i〉 and |j〉 are orthonormal bases for V and W, then |i〉⊗|j〉 is a basis for V⊗W. We often use the abbreviated notations |v〉|w〉, |v,w〉, or even |vw〉 for the tensor product |v〉⊗|w〉. For example, if V is a two-dimensional vector space with basis vectors |0〉 and |1〉, then |0〉⊗|0〉+|1〉⊗|1〉 is an element of V⊗V.
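In coordinates, the tensor product of vectors is the Kronecker product. The sketch below (assuming NumPy's `np.kron`) illustrates the dimension count mn, the element |0〉⊗|0〉+|1〉⊗|1〉 of V⊗V, and the fact that an n-qubit product state has \(2^{n}\) amplitudes satisfying the normalization condition. The vectors and angles are arbitrary illustrative values.

```python
import numpy as np

ket0 = np.array([1.0, 0.0])
ket1 = np.array([0.0, 1.0])

# dim(V ⊗ W) = m * n: np.kron forms the tensor product of coordinate vectors.
v = np.array([1.0, 2.0, 3.0])    # element of a 3-dimensional space V
w = np.array([1.0, -1.0])        # element of a 2-dimensional space W
vw = np.kron(v, w)
print(vw.shape)                  # (6,)

# |0>⊗|0> + |1>⊗|1> lies in V⊗V for the two-dimensional qubit space V.
pair = np.kron(ket0, ket0) + np.kron(ket1, ket1)
print(pair)                      # [1. 0. 0. 1.]

# An n-qubit product state has 2**n amplitudes and satisfies the normalization condition.
state = np.array([1.0])
for angle in (0.2, 0.7, 1.1):
    state = np.kron(state, np.array([np.cos(angle), np.sin(angle)]))
print(state.size, np.isclose(np.sum(np.abs(state) ** 2), 1.0))
```

Note that `pair` cannot be written as a single Kronecker product of two qubit vectors, which is exactly the entanglement property discussed in Section 2.4.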

2.4 Multi-qubit controlled-rotation gate

In a real quantum system, the state of a single qubit is often jointly controlled by several qubits. The multi-qubit controlled-rotation gate \(C^{n}(R)\) is one such control model. The multi-qubit system is described by the wave function \(|x_{1}x_{2}\cdots x_{n}\rangle\). In an (n+1)-qubit system in which the target qubit is simultaneously controlled by n input qubits, the input/output relationship can be described by the multi-qubit controlled-rotation gate shown in Fig. 2.

In Fig. 2(a), suppose we have n+1 qubits; we then define the controlled operation \(C^{n}(R)\) as

\[ C^{n}(R)|x_{1}x_{2}\cdots x_{n}\rangle|\phi\rangle=|x_{1}x_{2}\cdots x_{n}\rangle R^{x_{1}x_{2}\cdots x_{n}}|\phi\rangle, \tag{8} \]

where \(x_{1}x_{2}\cdots x_{n}\) in the exponent of R denotes the product of the bits \(x_{1},x_{2},\ldots,x_{n}\). That is, the operator R is applied to the last qubit if the first n qubits all equal one; otherwise, nothing is done.

Suppose that \(|x_{i}\rangle=\cos\theta_{i}|0\rangle+\sin\theta_{i}|1\rangle\), i=1,2,…,n, are the control qubits and \(|\phi\rangle=\cos\varphi|0\rangle+\sin\varphi|1\rangle\) is the target qubit. From Eq. (8), the output of \(C^{n}(R)\) is

A state of a composite system that cannot be written as a product of states of its component systems is called an entangled state. For reasons that nobody fully understands, entangled states play a crucial role in quantum computation and quantum information. Eq. (9) shows that the output of \(C^{n}(R)\) is an entangled state of n+1 qubits, and the probability of observing |1〉 in the target qubit state |ϕ′〉 equals

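In Fig. 2(b), the operator R is applied to the last qubit if the first n qubits all equal zero; otherwise, nothing is done. The controlled operation \(C^{n}(R)\) can then be defined as

\[ C^{n}(R)|x_{1}x_{2}\cdots x_{n}\rangle|\phi\rangle=|x_{1}x_{2}\cdots x_{n}\rangle R^{(1-x_{1})(1-x_{2})\cdots(1-x_{n})}|\phi\rangle. \]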

By an analysis similar to that for Fig. 2(a), the probability of observing |1〉 in the target qubit state |ϕ′〉 equals
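The probabilities for both gate types can be checked by brute-force state-vector simulation. In the sketch below (assuming NumPy, real amplitudes, control qubits cos θ_i|0〉 + sin θ_i|1〉, and a target cos φ|0〉 + sin φ|1〉), the rotation applied by R is given the hypothetical angle name α. The closed form it is compared against, sin²φ + h̄²(sin²(φ+α) − sin²φ) with h̄ = Π sin θ_i for Fig. 2(a) and h̄ = Π cos θ_i for Fig. 2(b), is our own derivation from the gate definitions for product-state controls; it agrees with the expression for φ(t_q) in Section 3.1.

```python
import numpy as np
from functools import reduce


def rotation_gate(alpha: float) -> np.ndarray:
    return np.array([[np.cos(alpha), -np.sin(alpha)],
                     [np.sin(alpha), np.cos(alpha)]])


def controlled_rotation(n: int, alpha: float, control_value: int) -> np.ndarray:
    """C^n(R) on n control qubits followed by one target qubit (least significant bit).

    control_value=1: R acts only on |11...1>|phi> (Fig. 2(a));
    control_value=0: R acts only on |00...0>|phi> (Fig. 2(b)).
    """
    gate = np.eye(2 ** (n + 1))
    c = 2 ** n - 1 if control_value == 1 else 0   # index of the controlling bit string
    gate[2 * c:2 * c + 2, 2 * c:2 * c + 2] = rotation_gate(alpha)
    return gate


def prob_target_one(thetas, phi, alpha, control_value) -> float:
    """P(target = |1>) after C^n(R), by full state-vector simulation."""
    qubits = [np.array([np.cos(t), np.sin(t)]) for t in thetas]
    qubits.append(np.array([np.cos(phi), np.sin(phi)]))
    state = reduce(np.kron, qubits)                 # product input state
    out = controlled_rotation(len(thetas), alpha, control_value) @ state
    return float(np.sum(out[1::2] ** 2))            # odd indices: target bit = 1


def prob_closed_form(thetas, phi, alpha, control_value) -> float:
    """Derived closed form: sin^2(phi) + hbar^2 * (sin^2(phi + alpha) - sin^2(phi))."""
    f = np.sin if control_value == 1 else np.cos
    hbar = np.prod(f(np.asarray(thetas)))           # amplitude of the controlling string
    return float(np.sin(phi) ** 2 + hbar ** 2 * (np.sin(phi + alpha) ** 2 - np.sin(phi) ** 2))


thetas, phi, alpha = [0.4, 1.0, 0.7], 0.2, 0.9
for cv in (1, 0):
    print(cv, np.isclose(prob_target_one(thetas, phi, alpha, cv),
                         prob_closed_form(thetas, phi, alpha, cv)))
```

The simulation cost grows as \(2^{n+1}\), whereas the closed form is linear in n, which is what makes the neuron model of Section 3 cheap to evaluate on a classical computer.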

In this case, after the joint control of the n input qubits, the target qubit |ϕ′〉 can be written as follows

3 The QNNSI model

3.1 The quantum-inspired neuron based on controlled-rotation gate

In this section, we first propose a quantum-inspired neuron model based on the controlled-rotation gate, as shown in Fig. 3. The model consists of quantum rotation gates and a multi-qubit controlled-rotation gate. The sequences \(\{|x_{i}(t_{r})\rangle\}\), defined on the time interval [0,T] with \(t_{r}\in[0, {\rm T}]\), denote the input sequences, and |y〉 denotes the result of spatial and temporal aggregation over [0,T]. The output is the probability amplitude of |1〉 obtained by measuring |y〉. The control parameters are the rotation angles \(\overline{\theta}_{i}(t_{r})\) and \(\overline{\varphi}(t_{r})\), i=1,2,…,n, r=1,2,…,q, where n is the dimension of the input space and q is the length of the input sequence.

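Unlike a classical neuron, whose input sample is a vector, each input sample of the quantum-inspired neuron is a matrix. For example, a single input sample can be written as

\[ \begin{bmatrix}|x_{1}(t_{1})\rangle & |x_{1}(t_{2})\rangle & \cdots & |x_{1}(t_{q})\rangle\\ |x_{2}(t_{1})\rangle & |x_{2}(t_{2})\rangle & \cdots & |x_{2}(t_{q})\rangle\\ \vdots & \vdots & \ddots & \vdots\\ |x_{n}(t_{1})\rangle & |x_{n}(t_{2})\rangle & \cdots & |x_{n}(t_{q})\rangle\end{bmatrix}. \]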

Suppose \(|x_{i}(t_{r})\rangle=\cos\theta_{i}(t_{r})|0\rangle+\sin\theta_{i}(t_{r})|1\rangle\) and \(|\phi(t_{1})\rangle=|0\rangle\). Let

According to the definitions of the quantum rotation gate and the multi-qubit controlled-rotation gate, \(|\phi'(t_{1})\rangle\) is given by

Letting \(t=t_{r}\), r=2,3,…,q, and using \(|\phi(t_{r})\rangle=|\phi'(t_{r-1})\rangle\), the aggregate result of the quantum neuron over [0,T] is finally written as

where \(\varphi(t_{q})=\arcsin(\{(\overline{h}_{q})^{2}(\sin^{2}(\varphi(t_{q-1})+\overline{\varphi}(t_{q})) - \sin^{2}(\varphi(t_{q-1})))+\sin^{2}(\varphi(t_{q-1}))\}^{1/2})\).

In this paper, the output of the quantum neuron is defined as the probability amplitude of the state in which |1〉 is observed. Let \(h(t_{r})\) denote the probability amplitude of |1〉 in \(|\phi'(t_{r})\rangle\). Using some trigonometry, the output of the quantum neuron can be rewritten as

\[ h(t_{q})=\sqrt{(\overline{h}_{q})^{2}U_{q}+(h(t_{q-1}))^{2}}, \]

where \(U_{q}=h(t_{q-1})\sqrt{1-(h(t_{q-1}))^{2}}\sin(2\overline{\varphi}(t_{q})) + (1-2(h(t_{q-1}))^{2})\sin^{2}(\overline{\varphi}(t_{q}))\), \(h(t_{1})=\overline{h}_{1}\sin(\overline{\varphi}(t_{1}))\).
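The recursion for the neuron output can be checked numerically against the arcsin expression for φ(t_q) given above: writing h = sin φ with φ in [0, π/2], sin²(φ+φ̄) − sin²φ expands to exactly U_q, so h(t_q) = sqrt(h̄_q² U_q + h(t_{q−1})²). The Python sketch below (assuming NumPy) iterates both forms from a target qubit that starts in |0〉. The h̄_r values are supplied directly as hypothetical inputs, since their defining equation is not reproduced here. Because the sketch defines h as the square root of the probability of observing |1〉, it is nonnegative, so h(t_1) = |h̄_1 sin φ̄(t_1)|.

```python
import numpy as np


def neuron_output(hbar, phibar) -> float:
    """h(t_q) via h(t_r) = sqrt(hbar_r^2 * U_r + h(t_{r-1})^2), with h(t_0) = 0."""
    h = 0.0                                   # target starts in |0>
    for hb, pb in zip(hbar, phibar):
        U = h * np.sqrt(1.0 - h ** 2) * np.sin(2 * pb) + (1.0 - 2 * h ** 2) * np.sin(pb) ** 2
        h = float(np.sqrt(np.clip(hb ** 2 * U + h ** 2, 0.0, 1.0)))
    return h


def neuron_output_angle(hbar, phibar) -> float:
    """Same output via the angle recursion for varphi(t_r) in Section 3.1."""
    phi = 0.0
    for hb, pb in zip(hbar, phibar):
        s2 = hb ** 2 * (np.sin(phi + pb) ** 2 - np.sin(phi) ** 2) + np.sin(phi) ** 2
        phi = float(np.arcsin(np.sqrt(np.clip(s2, 0.0, 1.0))))
    return float(np.sin(phi))


hbar = [0.9, -0.6, 0.8, 0.7]      # hypothetical control amplitudes hbar_r
phibar = [0.5, 1.2, -0.4, 0.3]    # hypothetical target rotation angles
print(neuron_output(hbar, phibar), neuron_output_angle(hbar, phibar))
```

The clipping only guards against floating-point round-off; mathematically the quantity under the square root is a convex combination of two squared sines and therefore already lies in [0, 1].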

3.2 The QNNSI model

The QNNSI model is shown in Fig. 4. The hidden layer consists of p quantum-inspired neurons based on controlled-rotation gates (Type I for neurons with odd serial numbers and Type II for neurons with even serial numbers); \(\{|x_{1}(t_{r})\rangle\},\{|x_{2}(t_{r})\rangle\},\ldots,\{|x_{n}(t_{r})\rangle\}\) denote the input sequences; \(h_{1},h_{2},\ldots,h_{p}\) denote the hidden outputs; and the hidden-layer activation function is given by Eq. (18). The output layer consists of m classical neurons, \(w_{jk}\) denote the output-layer connection weights, \(y_{1},y_{2},\ldots,y_{m}\) denote the network outputs, and the output-layer activation function is the Sigmoid function.

For the lth sample, suppose \(|x_{i}^{l}(t_{r})\rangle=\cos\theta_{i}^{l}(t_{r})|0\rangle+\sin\theta_{i}^{l}(t_{r})|1\rangle\), let \(0=t_{1}<t_{2}<\cdots<t_{q}={\rm T}\) denote the discrete sampling time points, and set \(|\phi_{j}^{l}(t_{1})\rangle=|0\rangle\), j=1,2,…,p. Let

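According to the input/output relationship of the quantum neuron, the output of the jth quantum neuron in the hidden layer can be written as

\[ h_{j}^{l}=h_{j}^{l}(t_{q})=\sqrt{(\overline{h}_{jq}^{l})^{2}U_{jq}^{l}+(h_{j}^{l}(t_{q-1}))^{2}}, \]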

where \(U_{jq}^{l}=h_{j}^{l}(t_{q-1})\sqrt{1-(h_{j}^{l}(t_{q-1}))^{2}}\sin(2\overline{\varphi}_{j}(t_{q}))+ (1-2(h_{j}^{l}(t_{q-1}))^{2})\sin^{2}(\overline{\varphi}_{j}(t_{q}))\) and \(h_{j}^{l}(t_{1})=\overline{h}_{j1}^{l}\sin(\overline{\varphi}_{j}(t_{1}))\).

The kth output of the output layer can be written as

\[ y_{k}^{l}=\frac{1}{1+{\rm e}^{-\sum_{j=1}^{p}w_{jk}h_{j}^{l}}}, \]

where i=1,2,…,n, j=1,2,…,p, k=1,2,…,m, l=1,2,…,L, L denotes the total number of samples.
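A complete forward pass can be sketched as follows, with loudly stated assumptions. The defining equations for h̄ are not reproduced here, so the sketch uses a hypothetical `control_amplitude` that follows from the gate definitions: each control qubit cos a|0〉 + sin a|1〉 is rotated by R(θ_ij(t_r)), giving angle a + θ_ij(t_r), and we assume Type I neurons control on |1…1〉 as in Fig. 2(a) (product of sines) while Type II neurons control on |0…0〉 as in Fig. 2(b) (product of cosines). Input angles are assumed to be already converted from the samples, and the output layer is a Sigmoid with no bias, matching the parameter list of Section 4.2.

```python
import numpy as np


def control_amplitude(x_angles, theta, type_one):
    """Hypothetical hbar_r (r = 1..q) for one hidden neuron.

    x_angles, theta: (n, q) arrays of input angles and neuron rotation angles.
    Type I fires on |1...1> (product of sines), Type II on |0...0> (product of cosines).
    """
    f = np.sin if type_one else np.cos
    return np.prod(f(x_angles + theta), axis=0)


def neuron_output(hbar, phibar):
    """Recursion h(t_r) = sqrt(hbar_r^2 * U_r + h(t_{r-1})^2), starting from h = 0."""
    h = 0.0
    for hb, pb in zip(hbar, phibar):
        U = h * np.sqrt(1.0 - h ** 2) * np.sin(2 * pb) + (1.0 - 2 * h ** 2) * np.sin(pb) ** 2
        h = float(np.sqrt(np.clip(hb ** 2 * U + h ** 2, 0.0, 1.0)))
    return h


def qnnsi_forward(x_angles, theta, phibar, W):
    """x_angles: (n, q); theta: (p, n, q); phibar: (p, q); W: (p, m). Returns (h, y)."""
    p = theta.shape[0]
    # Zero-based j = 0, 2, 4, ... are the paper's odd serial numbers, i.e. Type I neurons.
    h = np.array([neuron_output(control_amplitude(x_angles, theta[j], j % 2 == 0), phibar[j])
                  for j in range(p)])
    y = 1.0 / (1.0 + np.exp(-(h @ W)))       # Sigmoid output layer, no bias term
    return h, y


rng = np.random.default_rng(1)
n, q, p, m = 4, 8, 10, 1
x = rng.uniform(0.0, np.pi / 2, (n, q))                 # assumed pre-converted input angles
theta = rng.uniform(-np.pi / 2, np.pi / 2, (p, n, q))   # initialization range from Section 4.4
phibar = rng.uniform(-np.pi / 2, np.pi / 2, (p, q))
W = rng.uniform(-1.0, 1.0, (p, m))
h, y = qnnsi_forward(x, theta, phibar, W)
print(h.shape, y.shape)   # (10,) (1,)
```

Each hidden neuron carries n·q + q rotation angles, so a QNNSI with p hidden and m output neurons has p(n+1)q + pm adjustable parameters in this sketch.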

4 The learning algorithm of QNNSI

4.1 The pretreatment of the input and output samples

Set the sampling time points \(0=t_{1}<t_{2}<\cdots<t_{q}={\rm T}\). Suppose the lth sample in the n-dimensional input space is \(\{\overline{X}^{l}(t_{r})\}=[\{\overline{x}_{1}^{l}(t_{r})\},\ldots,\{\overline{x}_{n}^{l}(t_{r})\}]^{\rm T}\), where r=1,2,…,q, l=1,2,…,L. Let

These samples can be converted into quantum states as follows

where \(|x_{i}^{l}(t_{r})\rangle=\cos(\theta_{i}^{l}(t_{r}))|0\rangle+\sin(\theta_{i}^{l}(t_{r}))|1\rangle\).

It is worth pointing out that an n-qubit system has \(2^{n}\) computational basis states and can form superpositions of these \(2^{n}\) basis states. Although there are infinitely many such superpositions, in our approach the superposition is uniquely determined by the method used to convert input samples into quantum states. Hence, our approach differs from an ANN with n input nodes, no hidden layer, and a single output neuron in two respects. (1) In our approach the input sample is a specific quantum superposition state, whereas in the ANN it is a specific real-valued vector. (2) In our approach the activation functions are designed according to the principles of quantum computing, whereas the ANN uses classical Sigmoid activation functions.

Similarly, suppose the lth output sample is \(\{\overline{Y}^{l}\}=[\{\overline{y}_{1}^{l}\},\{\overline{y}_{2}^{l}\},\ldots,\{\overline{y}_{m}^{l}\}]^{\rm T}\), where l=1,2,…,L. Let

then, these output samples can be normalized by the following equation

where k=1,2,…,m.

4.2 The adjustment of QNNSI parameters

The adjustable parameters of QNNSI are: (1) the rotation angles of the quantum rotation gates in the hidden layer, \(\theta_{ij}(t_{r})\) and \(\overline{\varphi}_{j}(t_{r})\); (2) the connection weights in the output layer, \(w_{jk}\).

Because the number of parameters is large and the gradient calculation is complicated, the standard gradient descent algorithm converges with difficulty. Hence, we employ the Levenberg–Marquardt algorithm of Ref. [22] to adjust the QNNSI parameters. Suppose \(\overline{y}_{1}^{l}, \overline{y}_{2}^{l}, \ldots, \overline{y}_{m}^{l}\) denote the normalized desired outputs of the lth sample and \(y_{1}^{l}, y_{2}^{l}, \ldots, y_{m}^{l}\) denote the corresponding actual outputs. The evaluation function is defined as follows

Let \({\bf p}\) denote the parameter vector, \({\bf e}\) denote the error vector, and \({\bf J}\) denote the Jacobian matrix. \({\bf p}\), \({\bf e}\) and \({\bf J}\) are respectively defined as follows

where the gradient calculations for \({\bf J}({\bf p})\) are given in the Appendix.

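According to the Levenberg–Marquardt algorithm, the iterative equation for adjusting the QNNSI parameters is

\[ {\bf p}_{t+1}={\bf p}_{t}-\left({\bf J}^{\rm T}({\bf p}_{t}){\bf J}({\bf p}_{t})+\mu_{t}{\bf I}\right)^{-1}{\bf J}^{\rm T}({\bf p}_{t}){\bf e}({\bf p}_{t}), \]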

where t denotes the iteration step, \({\bf I}\) denotes the identity matrix, and \(\mu_{t}\) is a small positive number that ensures that the matrix \({\bf J}^{\rm T}({\bf p}_{t}){\bf J}({\bf p}_{t})+\mu_{t}{\bf I}\) is invertible.

4.3 The stopping criterion of QNNSI

If the evaluation function E reaches the predefined precision within the preset maximum number of iterative steps, the algorithm stops; otherwise, it continues until the predefined maximum number of iterative steps is reached.
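The update rule and stopping criterion can be sketched generically in Python (assuming NumPy). Several details are assumptions rather than the paper's choices: the evaluation function is taken as E = ½Σe², J is the Jacobian of the error vector e with respect to p, J is approximated by forward differences instead of the analytic gradients in the Appendix, and μ is adapted by the common heuristic of dividing by 10 after a successful step and multiplying by 10 after a rejected one. The exponential-fit problem is a toy example, not one of the paper's benchmarks.

```python
import numpy as np


def numerical_jacobian(residual, p, step=1e-7):
    """Forward-difference Jacobian J[i, k] = d e_i / d p_k."""
    e0 = residual(p)
    J = np.empty((e0.size, p.size))
    for k in range(p.size):
        dp = np.zeros_like(p)
        dp[k] = step
        J[:, k] = (residual(p + dp) - e0) / step
    return J


def levenberg_marquardt(residual, p, eps, max_steps, mu=1e-2):
    """Run LM updates until E <= eps (precision reached) or max_steps iterations."""
    p = np.asarray(p, dtype=float)
    E = 0.5 * np.sum(residual(p) ** 2)        # assumed evaluation function
    t = 0
    while E > eps and t < max_steps:
        e = residual(p)
        J = numerical_jacobian(residual, p)
        delta = np.linalg.solve(J.T @ J + mu * np.eye(p.size), J.T @ e)
        p_new = p - delta
        E_new = 0.5 * np.sum(residual(p_new) ** 2)
        if E_new < E:        # accept the step and lean toward Gauss-Newton
            p, E, mu = p_new, E_new, mu / 10
        else:                # reject the step and lean toward gradient descent
            mu *= 10
        t += 1               # counts every trial step, accepted or rejected
    return p, E, t


# Toy problem (not from the paper): fit a * exp(b * x) to noise-free data.
x = np.linspace(0.0, 1.0, 20)
data = 2.0 * np.exp(-1.5 * x)


def residual(p):
    return p[0] * np.exp(p[1] * x) - data


p_fit, E, steps = levenberg_marquardt(residual, [1.0, 0.0], eps=1e-10, max_steps=100)
print(p_fit, E, steps)
```

Solving the damped normal equations with `np.linalg.solve` avoids forming the matrix inverse explicitly; a large μ shrinks the step toward a short gradient-descent step, and a small μ recovers the Gauss–Newton step.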

4.4 Learning algorithm description

The learning procedure of QNNSI is summarized below.

Procedure QNNSI

Begin

t←0

(1) Pretreat the input and output samples.

(2) Initialize QNNSI, including

(a) the predefined precision ε,

(b) the predefined maximum number of iterative steps N,

(c) the parameter \(\mu_{t}\) of the Levenberg–Marquardt algorithm,

(d) the parameters of QNNSI, \(\{\theta_{ij}(t_{r}),\overline{\varphi}_{j}(t_{r})\}\in (-\frac{\pi}{2},\frac{\pi}{2})\) and \(\{w_{jk}\}\in(-1,1)\).

(3) While (not termination-condition)

Begin

(a) compute the actual outputs of all samples by Eqs. (19)–(21),

(b) compute the value of the evaluation function E by Eq. (27),

(c) adjust the parameters \(\{\theta_{ij}(t_{r})\}\), \(\{\overline{\varphi}_{j}(t_{r})\}\), \(\{w_{jk}\}\) by Eq. (31),

(d) t←t+1.

End

End

4.5 Diagnostic explanatory capabilities

Finally, we briefly discuss the diagnostic explanatory capabilities of QNNSI, namely, given such a complex model, how one can explain a prediction, inference, or classification produced by QNNSI. We believe that any prediction, inference, or classification can be seen as an approximation problem from the input space to the output space. In this sense, the problem reduces to designing multi-dimensional sequence samples. Our approach is as follows. For an n-dimensional sample X of a classical ANN, if n is a prime number, we extend X to m=n+1 dimensions by setting X(m) equal to X(n); otherwise, m=n and nothing is done. We then decompose m into the product of \(m_{1}\) and \(m_{2}\), choosing the two factors as close to each other as possible. In this way, an n-dimensional ANN sample X is converted into an \(m_{1}\)-dimensional QNNSI sequence sample with sequence length \(m_{2}\), or into an \(m_{2}\)-dimensional QNNSI sequence sample with sequence length \(m_{1}\).
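The conversion rule above is short enough to state as code. In this Python sketch (assuming NumPy; the function names are hypothetical), a prime-length sample is padded by repeating its last component, the length is split into the closest factor pair \(m_{1}\le m_{2}\), and the vector is reshaped into an \(m_{1}\times m_{2}\) matrix. The row-major fill order, with rows as dimensions and columns as sequence points, is our assumption, since the text does not specify how components are assigned to positions.

```python
import math

import numpy as np


def is_prime(n: int) -> bool:
    return n >= 2 and all(n % d for d in range(2, math.isqrt(n) + 1))


def to_sequence_sample(x) -> np.ndarray:
    """Convert an n-dimensional ANN sample into an m1 x m2 QNNSI sequence sample."""
    x = np.asarray(x, dtype=float)
    if is_prime(x.size):
        x = np.append(x, x[-1])        # m = n + 1, with X(m) = X(n)
    m = x.size
    # m1 <= m2 with m1 * m2 = m and the two factors as close as possible
    m1 = max(d for d in range(1, math.isqrt(m) + 1) if m % d == 0)
    m2 = m // m1
    # Assumption: row-major fill, rows = m1 dimensions, columns = m2 sequence points.
    return x.reshape(m1, m2)


for n in (7, 32, 49, 50, 85):
    print(n, to_sequence_sample(np.arange(n)).shape)
```

For example, a 32-dimensional sample becomes a 4×8 matrix, the shape of QNNSI4_8 in Section 5.1; transposing the result gives the alternative \(m_{2}\)-dimensional sample with sequence length \(m_{1}\).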

5 Simulations

To experimentally illustrate the effectiveness of the proposed QNNSI, in this section we use four examples to compare it with an ANN with one hidden layer. In these experiments, QNNSI is implemented and evaluated in Matlab (Version 7.1.0.246) on a Windows PC with a 2.19 GHz CPU and 1.00 GB of RAM. QNNSI has the same structure and parameters as the ANN in these experiments, and the same Levenberg–Marquardt algorithm of Ref. [22] is applied to both models. Some relevant concepts are defined as follows.

Approximation error

Suppose \([\overline{y}_{1}^{l}, \overline{y}_{2}^{l}, \ldots, \overline{y}_{m}^{l}]\) and \([y_{1}^{l}, y_{2}^{l},\allowbreak \ldots ,y_{m}^{l}]\) denote the lth desired output and the corresponding actual output after training, respectively. The approximation error is defined as

where L denotes the number of the training samples, and m denotes the dimension of the output space.

Average approximation error

Suppose \(E_{1},E_{2},\ldots,E_{N}\) denote the approximation errors over N training trials. The average approximation error is defined as

\[ \text{Average approximation error}=\frac{1}{N}\sum_{i=1}^{N}E_{i}. \]

Convergence ratio

Suppose E denotes the approximation error after training and ε denotes the target error. If E<ε, the network training is considered to have converged. Suppose N denotes the total number of training trials and C denotes the number of convergent trials. The convergence ratio is defined as

\[ \text{Convergence ratio}=\frac{C}{N}\times 100\%. \]

Iterative steps

In a training trial, the number of times all network parameters are adjusted is called the number of iterative steps.

Average iterative steps

Suppose \(S_{1},S_{2},\ldots,S_{N}\) denote the iterative steps over N training trials. The average iterative steps are defined as

\[ \text{Average iterative steps}=\frac{1}{N}\sum_{i=1}^{N}S_{i}. \]

Average running time

Suppose \(T_{1},T_{2},\ldots,T_{N}\) denote the running times over N training trials. The average running time is defined as

\[ \text{Average running time}=\frac{1}{N}\sum_{i=1}^{N}T_{i}. \]
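The four training indicators can be computed from per-trial records as in the Python sketch below (assuming NumPy). The trial numbers are invented purely for illustration, and the function name `summarize_trials` is hypothetical. Note the strict inequality E < ε in the convergence test, so a trial that ends exactly at the target error does not count as converged.

```python
import numpy as np


def summarize_trials(errors, steps, times, target_error):
    """Average indicators over N training trials plus the convergence ratio."""
    errors, steps, times = (np.asarray(a, dtype=float) for a in (errors, steps, times))
    N = errors.size
    C = int(np.sum(errors < target_error))     # converged trials: E < epsilon
    return {
        "average approximation error": errors.mean(),
        "average iterative steps": steps.mean(),
        "average running time": times.mean(),
        "convergence ratio (%)": 100.0 * C / N,
    }


# Hypothetical results of N = 4 trials (illustrative numbers only).
summary = summarize_trials(errors=[0.04, 0.06, 0.03, 0.05],
                           steps=[12, 100, 9, 100],
                           times=[1.5, 9.8, 1.1, 9.9],
                           target_error=0.05)
print(summary)
```

With a cap of 100 iterations, non-converged trials contribute the full 100 steps, so the average iterative steps and the convergence ratio are closely linked.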

5.1 Time series prediction for Mackey–Glass

The Mackey–Glass time series can be generated by the following iterative equation

\[ x(t+1)=x(t)+a\frac{x(t-\tau)}{1+x^{10}(t-\tau)}-b\,x(t), \]

where t and τ are integers, a=0.2, b=0.1, τ=17, and x(0)∈(0,1).

From the above equation, we obtain the time sequence \(\{x(t)\}^{1000}_{t=1}\). We take the first 800 values, \(\{x(t)\}^{800}_{t=1}\), as the training set and the remaining 200, \(\{x(t)\}^{1000}_{t=801}\), as the testing set. Our prediction scheme uses n adjacent values to predict the next value; that is, each input window contains n consecutive values. Therefore, each sample consists of n input values and one output value, so both QNNSI and ANN have a single output node. To fully compare the approximation ability of the two models, the number of hidden nodes is set to 10,11,…,30 in turn. The predefined precision is set to 0.05, and the maximum number of iterative steps is set to 100. The QNNSI rotation angles in the hidden layer are initialized to random numbers in (−π/2,π/2), and the output-layer connection weights are initialized to random numbers in (−1,1). For ANN, all weights are initialized to random numbers in (−1,1), and Sigmoid functions are used as the activation functions in both the hidden and output layers.
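The data preparation for this experiment can be sketched in Python (assuming NumPy). The sketch iterates the discrete Mackey–Glass equation with a=0.2, b=0.1, τ=17 and builds sliding-window samples of n=32 inputs plus one target. Two details are assumptions not fixed by the text: the initial history x(t)=x0 for all t≤0 (with x0=0.5 in (0,1)), and building windows separately within the training and testing sets. The reshape into a 4×8 QNNSI sample assumes a row-major fill.

```python
import numpy as np


def mackey_glass(length=1000, a=0.2, b=0.1, tau=17, x0=0.5):
    """Iterate x(t+1) = x(t) + a*x(t-tau)/(1 + x(t-tau)**10) - b*x(t).

    Assumption: x(t) = x0 for all t <= 0 (constant initial history).
    Returns x(1), ..., x(length).
    """
    x = np.full(length + tau + 1, x0)     # array index k holds x(k - tau)
    for k in range(tau, length + tau):
        x_del = x[k - tau]
        x[k + 1] = x[k] + a * x_del / (1.0 + x_del ** 10) - b * x[k]
    return x[tau + 1:]


def make_windows(series, n):
    """Sliding windows: n adjacent values as input, the next value as the target."""
    X = np.array([series[i:i + n] for i in range(len(series) - n)])
    y = np.asarray(series[n:])
    return X, y


series = mackey_glass()
train, test = series[:800], series[800:]
X_train, y_train = make_windows(train, 32)     # ANN32: each sample is a 32-vector
X_test, y_test = make_windows(test, 32)
# One QNNSI sample with 4 input nodes and sequence length 8 (row-major fill assumed).
qnnsi_sample = X_train[0].reshape(4, 8)
print(X_train.shape, X_test.shape, qnnsi_sample.shape)
```

The ANN consumes each 32-value window directly as a vector, whereas QNNSI sees the same 32 values arranged as an n×q matrix; the numerical content of a sample is identical, and only its arrangement differs.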

Clearly, ANN has n input nodes, and an ANN input sample can be described as an n-dimensional vector. For the number of input nodes of QNNSI, we employ the six settings shown in Table 1. For each of these settings, a single QNNSI input sample can be described as a matrix.

It is worth noting that, in QNNSI, a single sequence sample can be described by an n×q matrix. In general, ANN cannot deal directly with a single n×q sequence sample; it usually regards an n×q matrix as q n-dimensional vector samples. For a fair comparison, we convert each n×q sequence sample into an nq-dimensional vector sample for ANN. Therefore, the sequence lengths for ANN in Table 1 do not change, and there is in fact only one kind of ANN in Table 1, namely ANN32.

Our experimental scheme is as follows: for each combination of input nodes and hidden nodes, each of the six QNNSIs and the ANN is run 10 times. We then use four indicators, namely the average approximation error, the average iterative steps, the average running time, and the convergence ratio, to compare QNNSI with ANN. The training results are compared in Tables 2, 3, 4 and 5, where QNNSIn_q denotes a QNNSI with n input nodes and sequence length q.

Tables 2–5 show that when the number of input nodes is 4 or 8, the QNNSIs clearly outperform the ANN and are more stable than the ANN as the number of hidden nodes changes. The same results are also illustrated in Figs. 5, 6, 7 and 8.

Next, we investigate the generalization ability of QNNSI. Based on the above experimental results, we investigate only QNNSI4_8 and QNNSI8_4. Our experimental scheme is that the two QNNSIs and the ANN are each trained 10 times on the training set, and their generalization ability is evaluated on the testing set immediately after each training. The average results of the 10 tests are used as the evaluation indexes, which we first define as follows.

Average prediction error

Suppose \([\overline{y}_{1}^{l}, \overline{y}_{2}^{l}, \ldots, \overline{y}_{m}^{l}]\) and \([\widehat{y}_{1}^{l}(t), \widehat{y}_{2}^{l}(t), \ldots, \widehat{y}_{m}^{l}(t)]\) denote the desired output of the lth sample and the corresponding prediction output in the tth test, respectively. The average prediction error over N tests is defined as

where m denotes the dimension of the output space, L denotes the number of the testing samples.

Average error mean

Suppose \(\overline{y}^{l}=[\overline{y}_{1}^{l}, \overline{y}_{2}^{l}, \ldots, \overline{y}_{m}^{l}]\) and \(\widehat{y}^{l}(t)=[\widehat{y}_{1}^{l}(t), \widehat{y}_{2}^{l}(t), \ldots, \widehat{y}_{m}^{l}(t)]\) denote the desired output of the lth sample and the corresponding prediction output in the tth test, respectively. The average error mean over N tests is defined as

Average prediction variance

Suppose \(\overline{y}^{l}=[\overline{y}_{1}^{l}, \overline{y}_{2}^{l}, \ldots, \overline{y}_{m}^{l}]\) and \(\widehat{y}^{l}(t)=[\widehat{y}_{1}^{l}(t), \widehat{y}_{2}^{l}(t), \ldots, \widehat{y}_{m}^{l}(t)]\) denote the desired output of the lth sample and the corresponding prediction output in the tth test, respectively. The average error variance over N tests is defined as

Table 6 compares the evaluation indexes of the QNNSIs and the ANN. Taking 24 hidden nodes as an example, Fig. 9 compares the average prediction results over the 10 tests. The experimental results show that the generalization ability of the two QNNSIs is clearly superior to that of the ANN.

These experimental results can be explained as follows. QNNSI and ANN take two different approaches to processing input information. QNNSI directly receives a discrete input sequence and, using a quantum information processing mechanism, iteratively maps the input to the outputs of the quantum controlled-rotation gates in the hidden layer. Because the output of a controlled-rotation gate is an entangled state of multiple qubits, this mapping is highly nonlinear, which gives QNNSI a stronger approximation ability. In addition, each QNNSI input sample can be described as a matrix with n rows and q columns. It is clear from the QNNSI algorithm that the output of the quantum-inspired hidden neurons differs for different combinations of n and q. In fact, the number of discrete points q represents the depth of pattern memory, and the number of input nodes n represents its breadth. When depth and breadth are appropriately matched, QNNSI performs excellently. ANN, by contrast, can only describe its input as an nq-dimensional vector and cannot directly process a discrete input sequence; that is, it obtains sample characteristics only by way of breadth, not depth. Hence, some sample characteristics are inevitably lost in ANN information processing, which affects its approximation and generalization ability.

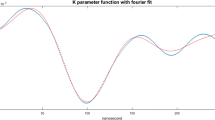

5.2 Prediction of annual average sunspot number

In this section, we take the measured annual average sunspot numbers from 1749 to December 2007 as the experimental data and investigate the prediction ability of the proposed model. All sample data are shown in Fig. 10. We use the data of the first 200 years (1749–1948) to train the network and the data of the remaining 59 years (1949–2007) to test the generalization of the proposed model. For the input nodes and the sequence length, we employ the seven settings shown in Table 7. In this experiment, the number of hidden nodes is set to 20,21,…,40 in turn. The target error is set to 0.05, and the maximum number of iterative steps is set to 100. The other parameters of the QNNSIs and ANNs are set in the same way as in the previous experiment.

Seven QNNSIs and two ANNs are each run 10 times for each setting of hidden nodes, and we then use the same evaluation indicators as in the previous experiment to compare the QNNSIs with the ANNs. The training results are compared in Tables 8, 9, 10 and 11.

Tables 8–11 show that QNNSI5_10, QNNSI7_7 and QNNSI10_5 clearly outperform the two ANNs. The convergence ratio of these three QNNSIs reaches 100% for various numbers of hidden nodes. Overall, these three QNNSIs are also better than the two ANNs on the other three indicators, and they remain stable as the number of hidden nodes changes. The same results are also illustrated in Figs. 11, 12, 13 and 14.

Next, we investigate the generalization ability of QNNSI. Based on the above experimental results, we investigate only QNNSI5_10, QNNSI7_7 and QNNSI10_5. The three QNNSIs and two ANNs are each trained 10 times on the data of the first 200 years (1749–1948) and tested on the data of the remaining 59 years (1949–2007) immediately after each training. The average prediction error of the 10 tests is used as the evaluation index. Table 12 compares the average prediction errors of the QNNSIs and ANNs. Taking 35 hidden nodes as an example, Fig. 15 compares the average prediction results. The experimental results show that the generalization ability of the three QNNSIs is clearly superior to that of the corresponding ANNs.

5.3 Caravan insurance policy prediction

In this experiment, we predict who would be interested in buying a caravan insurance policy. The data set, used in the CoIL 2000 Challenge, contains information on the customers of an insurance company and is available at http://kdd.ics.uci.edu/databases/tic/tic.html. The data were supplied by the Dutch data mining company Sentient Machine Research and are based on a real-world business problem. The training set contains 5822 customer descriptions. Each record consists of 86 attributes: sociodemographic data (attributes 1–43) and product ownership (attributes 44–86). The sociodemographic data are derived from zip codes, so all customers living in areas with the same zip code have the same sociodemographic attributes. Attribute 86 is the target variable; it equals 0 or 1 and indicates whether or not the customer has a caravan insurance policy. The prediction data set contains 4000 customer records, for which only the organisers know whether the customer has a caravan insurance policy. It has the same format as the training set, except that the target is missing. Participants were required to return only the list of predicted targets.

Since each customer is described by 85 feature attributes, we employ the four settings of the number of input nodes and the sequence length shown in Table 13. The number of hidden nodes is set to 10, 11, …, 20 in turn, and the maximum number of iterations is set to 100. The other parameters of the QNNSIs and the ANN are set in the same way as in the previous experiment. In this experiment, no target error is set; training stops only when the predefined maximum number of iterations is reached.
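Each customer record has to be rearranged into n sequences of length q with nq = 85. Since 85 = 5 × 17, the only factorizations are 85 × 1, 17 × 5, 5 × 17 and 1 × 85, consistent with the four QNNSIs compared in Table 14. The sketch assumes that the 85 features are taken in their original attribute order and split row-major into consecutive chunks. The mapping actually used is not given in this section, and the helper names are hypothetical.

```python
import numpy as np

N_FEATURES = 85  # attributes 1-85 are features; attribute 86 is the 0/1 target

def split_record(record: np.ndarray):
    """Separate one 86-attribute record into its 85 features and the target."""
    return record[:N_FEATURES], int(record[N_FEATURES])

def factor_pairs(total: int):
    """All (n, q) with n * q == total, largest n first."""
    return [(n, total // n) for n in range(total, 0, -1) if total % n == 0]

def to_sequence_input(features: np.ndarray, n: int, q: int) -> np.ndarray:
    """Reshape (..., n*q) features into (..., n, q); row i is the sequence for input node i.
    Row-major chunking is an assumption."""
    if features.shape[-1] != n * q:
        raise ValueError(f"expected {n * q} features, got {features.shape[-1]}")
    return features.reshape(*features.shape[:-1], n, q)

print(factor_pairs(N_FEATURES))  # [(85, 1), (17, 5), (5, 17), (1, 85)]
record = np.arange(86.0)
record[85] = 1.0
feats, target = split_record(record)
x = to_sequence_input(feats, 17, 5)  # 17 input nodes, sequences of length 5
```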

For each number of hidden nodes, the QNNSIs and the ANN are each trained 10 times on the training set and tested on the testing set immediately after each training run. The evaluation indicators used in this experiment are defined as follows.

The number of correct prediction results

Suppose \(\overline{y}^{1}, \overline{y}^{2}, \ldots, \overline{y}^{M}\) denote the desired outputs of the M samples in the training set, and \(y^{1}, y^{2}, \ldots, y^{M}\) denote the corresponding actual outputs. The number of correct prediction results for the training set is defined as

where N denotes the total number of training trials, and \([y^{m}] = 1\) if \(y^{m} \ge 0.5\), otherwise \([y^{m}] = 0\). Similarly, the number of correct prediction results for the testing set is defined as

where \(\overline{M}\) denotes the number of samples in the testing set.

The ratio of correct prediction results

The ratio of correct prediction results for the training set is defined as

Similarly, the ratio of correct prediction results for the testing set is defined as

We then use these four indicators and the average running time \(T_{avg}\) to compare the QNNSIs with the ANN. The experimental results are compared in Table 14.
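The equations defining the correct-prediction indicators are missing from this version of the text. The sketch below therefore assumes one reading consistent with the surrounding definitions. The number of correct predictions is counted per trial using the threshold rule for \([y^{m}]\) and averaged over the N trials. The ratio divides that count by the number of samples (M or \(\overline{M}\)). The function names are hypothetical.

```python
import numpy as np

def binarize(outputs: np.ndarray) -> np.ndarray:
    """[y] = 1 if y >= 0.5, otherwise 0 (the threshold rule from the text)."""
    return (outputs >= 0.5).astype(int)

def correct_count(desired: np.ndarray, outputs: np.ndarray) -> float:
    """Assumed form: correct predictions per trial, averaged over N trials.

    desired: shape (M,), 0/1 targets; outputs: shape (N, M), one row per trial.
    """
    hits = binarize(outputs) == desired[np.newaxis, :]
    return float(hits.sum(axis=1).mean())

def correct_ratio(desired: np.ndarray, outputs: np.ndarray) -> float:
    """Assumed form: average correct count divided by the number of samples."""
    return correct_count(desired, outputs) / desired.shape[0]

# Two trials on four samples (illustrative numbers).
desired = np.array([1, 0, 1, 0])
outputs = np.array([[0.9, 0.2, 0.4, 0.1],
                    [0.6, 0.7, 0.8, 0.3]])
print(correct_count(desired, outputs), correct_ratio(desired, outputs))  # 3.0 0.75
```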

Table 14 shows that the average running time of QNNSI85_1 is the shortest, so it is the most efficient. The \(R_{te}\) of QNNSI17_5 is the greatest, so its generalization ability is the strongest. Although ANN85 has the greatest \(R_{tr}\) of the five models, its generalization ability is inferior to that of QNNSI17_5 and QNNSI5_17. In addition, for the four QNNSIs, almost all of the \(R_{te}\) values are greater than the corresponding \(R_{tr}\) values, which suggests that QNNSI has stronger generalization ability than ANN.

5.4 Breast cancer prediction

In this experiment, we use QNNSI and ANN to predict breast cancer. The features are computed from a digitized image of a fine needle aspirate (FNA) of a breast mass and describe characteristics of the cell nuclei present in the image. A few of the images can be found at http://www.cs.wisc.edu/~street/images/. The dataset is linearly separable using all 30 input features, and the two target classes are benign and malignant. The dataset contains 569 instances, of which 357 are benign and 212 are malignant. The best predictive accuracy was obtained using one separating plane in the 3-D space of Worst Area, Worst Smoothness and Mean Texture. This separating plane was obtained using the Multi-surface Method-Tree (MSM-T), a classification method that uses linear programming to construct a decision tree; the actual linear program used to obtain the separating plane in the 3-D space is described in Ref. [23]. This classifier had correctly diagnosed 176 consecutive new patients as of November 1995.

We use the first 400 instances (227 of them benign) to train the network and the remaining 169 instances (130 of them benign) to test the generalization of the proposed model. For the number of input nodes and the sequence length, we employ the eight settings shown in Table 15. The number of hidden nodes is set to 5, 6, …, 15 in turn, and the maximum number of iterations is set to 100. The other parameters of the QNNSIs and the ANN are set in the same way as in the previous experiment. As before, no target error is set; training stops only when the predefined maximum number of iterations is reached.
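The class counts implied by this split follow directly from the figures above. The short check below derives the malignant counts and the benign share of each subset. `class_split` is a hypothetical helper.

```python
def class_split(total: int, benign: int, n_train: int, train_benign: int) -> dict:
    """Derive per-subset class counts for a first-n_train / remainder split."""
    n_test = total - n_train
    test_benign = benign - train_benign
    return {
        "train": {"benign": train_benign, "malignant": n_train - train_benign,
                  "benign_share": train_benign / n_train},
        "test": {"benign": test_benign, "malignant": n_test - test_benign,
                 "benign_share": test_benign / n_test},
    }

counts = class_split(total=569, benign=357, n_train=400, train_benign=227)
print(counts["train"]["malignant"], counts["test"]["malignant"])  # 173 39
```

The training set therefore has 173 malignant cases (about 57% benign), while the testing set has 39 malignant cases (about 77% benign).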

The experimental scheme is as follows. For each number of hidden nodes, the QNNSIs and the ANN are each trained 10 times on the training set and tested on the testing set immediately after each training run. We then use the same evaluation indicators as in the previous experiment to compare the QNNSIs with the ANN. The results are compared in Table 16.

Table 16 shows that, in terms of approximation and generalization ability, QNNSI1_30 and QNNSI30_1 are clearly inferior to ANN30; QNNSI2_15 and QNNSI15_2 are roughly equal to ANN30; QNNSI3_10 and QNNSI10_3 are slightly superior to ANN30; and QNNSI5_6 and QNNSI6_5 are clearly superior to ANN30. In terms of average running time, QNNSI1_30, QNNSI2_15 and QNNSI3_10 take clearly longer than ANN30; QNNSI5_6 and QNNSI6_5 take slightly longer; and QNNSI10_3, QNNSI15_2 and QNNSI30_1 take roughly the same time. Taking both aspects together, QNNSI performs better than ANN when the number of input nodes is close to the sequence length.

Next, we give a theoretical explanation of these results. Let n denote the number of input nodes, q the sequence length, p the number of hidden nodes, and m the number of output nodes, and assume that the product nq is approximately constant.

Clearly, QNNSI and ANN (with nq input nodes) have the same number of adjustable parameters, namely npq+pm, and the same weight-update formula in the output layer. However, the adjustment of the hidden-layer parameters is completely different, and it is much more complex in QNNSI than in ANN. In ANN, each hidden parameter adjustment involves only two derivative calculations, whereas in QNNSI each hidden parameter adjustment involves at least two and at most q+1 derivative calculations.
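The parameter-count argument is easy to verify numerically. The sketch below counts npq + pm for QNNSI, as stated above. It counts (nq)p + pm for an ANN with nq inputs, assuming that bias terms are not counted, since the stated count implies as much. The helper names and the values p = 10, m = 1 are illustrative.

```python
def qnnsi_param_count(n: int, q: int, p: int, m: int) -> int:
    """Adjustable parameters of QNNSIn_q as stated in the text: n*p*q hidden + p*m output."""
    return n * p * q + p * m

def ann_param_count(n_inputs: int, p: int, m: int) -> int:
    """Fully connected ANN with one hidden layer; biases excluded (assumption)."""
    return n_inputs * p + p * m

# With nq = 30, p = 10 hidden nodes and m = 1 output (illustrative values),
# every factorization of 30 gives the same count as ANN30.
settings = [(1, 30), (2, 15), (3, 10), (5, 6), (6, 5), (10, 3), (15, 2), (30, 1)]
counts = {s: qnnsi_param_count(*s, p=10, m=1) for s in settings}
print(set(counts.values()), ann_param_count(30, p=10, m=1))  # {310} 310
```

Because the counts agree, any difference in performance between QNNSIn_q and ANN30 cannot be attributed to model size. It comes from how the hidden-layer parameters are used and updated.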

In QNNSI, when q=1, the number of input nodes is as large as possible, but the calculation of the hidden-layer output and of the hidden parameter adjustment is also the simplest, which directly reduces the approximation ability. When n=1, the calculation of the hidden-layer output is the most complex, which gives QNNSI its strongest nonlinear mapping ability. At the same time, however, the calculation of the hidden parameter adjustment is also very complex. The large number of derivative calculations can drive the parameter adjustments toward zero or toward infinity, which hinders the convergence of training and reduces the approximation ability. Hence, when q=1 or n=1, the approximation ability of QNNSI is inferior to that of ANN. When n>1 and q>1, the approximation ability of QNNSI tends to improve and, under certain conditions, exceeds that of ANN. This analysis is consistent with the experimental results.
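The argument about long derivative chains can be illustrated with a toy calculation. This is not the QNNSI gradient itself. The chain rule multiplies derivative factors. A product of up to q + 1 factors whose magnitudes stay below 1 shrinks toward zero, and one whose magnitudes stay above 1 grows without bound. The constant factors 0.5 and 1.5 are arbitrary illustrative values.

```python
import math
from typing import Iterable

def chain_magnitude(factors: Iterable[float]) -> float:
    """Magnitude of a chain-rule product of derivative factors."""
    return abs(math.prod(factors))

for q in (1, 5, 10, 30):
    shrinking = chain_magnitude([0.5] * (q + 1))  # q + 1 factors of magnitude 0.5
    growing = chain_magnitude([1.5] * (q + 1))    # q + 1 factors of magnitude 1.5
    print(f"q={q:2d}  shrinking={shrinking:.3e}  growing={growing:.3e}")
```

With q = 1, the chain stays short and well scaled. At q = 30, the same per-factor values yield updates that are negligible or huge, which is the behavior the text associates with n = 1 (the largest q).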

This raises the question of which relationship between n and q gives QNNSI the strongest approximation ability. The answer requires further study and usually depends on the specific problem. Our empirical conclusion is as follows: when q/2≤n≤2q, QNNSIn_q is superior to the ANN with nq input nodes.
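The empirical rule can be checked against the settings named in this section. The sketch encodes q/2 ≤ n ≤ 2q without division. It applies the rule to the eight breast-cancer settings of Table 16 and to the three sunspot QNNSIs singled out in Tables 8–11. It only restates the rule and does not derive it.

```python
def in_preferred_range(n: int, q: int) -> bool:
    """Empirical rule from the text: q/2 <= n <= 2q, i.e. q <= 2n <= 4q."""
    return q <= 2 * n <= 4 * q

BREAST_CANCER = [(1, 30), (30, 1), (2, 15), (15, 2), (3, 10), (10, 3), (5, 6), (6, 5)]
SUNSPOT_BEST = [(5, 10), (7, 7), (10, 5)]

print([s for s in BREAST_CANCER if in_preferred_range(*s)])  # [(5, 6), (6, 5)]
print(all(in_preferred_range(*s) for s in SUNSPOT_BEST))      # True
```

Among the breast-cancer settings, only QNNSI5_6 and QNNSI6_5 fall inside the range, and these are the two reported as clearly superior to ANN30. All three sunspot models also fall inside it.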

It is worth pointing out that QNNSI is potentially much more computationally efficient than all the models cited in the Introduction. The efficiency of many quantum algorithms comes directly from quantum parallelism, a fundamental feature of many quantum algorithms. Heuristically, and at the risk of over-simplifying, quantum parallelism allows a quantum computer to evaluate a function f(x) for many different values of x simultaneously. Although simulating quantum computation on a classical computer generally requires considerable resources, quantum parallelism yields very high computational efficiency by exploiting the superposition of quantum states. In QNNSI, the input samples are converted into the corresponding quantum superposition states during preprocessing. Hence, the many quantum rotation gates and controlled-rotation gates used in QNNSI could process information simultaneously, which would greatly improve computational efficiency. Because the four experiments above were performed on a classical computer, quantum parallelism was not exploited. We expect, however, that the computational efficiency of QNNSI would become apparent on future quantum computers.
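The resource trade-off mentioned above can be seen in a small state-vector simulation. Applying a Hadamard gate to each of n qubits prepared in |0〉 produces an equal superposition of all 2^n basis states, so a subsequent gate acts on all of them at once. A classical simulation, however, must store all 2^n amplitudes. The Hadamard gate is used here only as the standard textbook illustration of superposition; it is not one of the gates of QNNSI.

```python
import numpy as np

HADAMARD = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2.0)
KET0 = np.array([1.0, 0.0])

def uniform_superposition(n_qubits: int) -> np.ndarray:
    """State vector after applying H to each of n qubits initialised to |0>."""
    single = HADAMARD @ KET0            # (|0> + |1>) / sqrt(2)
    state = np.array([1.0])
    for _ in range(n_qubits):
        state = np.kron(state, single)  # tensor product: dimension doubles per qubit
    return state

for n in (1, 4, 10, 20):
    print(n, uniform_superposition(n).size)  # 2**n amplitudes to store classically
```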

6 Conclusions

This paper proposes a quantum-inspired neural network model with sequence input based on the principles of quantum computing. The proposed model has three layers: the hidden layer consists of quantum-inspired neurons, and the output layer consists of classical neurons. An obvious difference from a classical ANN is that each dimension of a single input sample is a discrete sequence rather than a single value. The activation function of the hidden layer is redesigned according to the principles of quantum computing, and the Levenberg–Marquardt algorithm is employed for learning. By exploiting the information processing mechanism of quantum controlled-rotation gates, the proposed model can effectively capture sample characteristics in both breadth and depth. The experimental results show that when the number of input nodes differs greatly from the sequence length, the proposed model performs worse than the classical ANN. Conversely, when the number of input nodes is close to the sequence length, the approximation and generalization ability of the proposed model is clearly enhanced. Issues such as continuity, computational complexity, and improvement of the learning algorithm are subjects of further research.

References

Nekoukar V, Beheshti MTH (2010) A local linear radial basis function neural network for financial time-series forecasting. Appl Intell 33:352–356

Lee H, Kim E, Pedrycz W (2012) A new selective neural network ensemble with negative correlation. Appl Intell 37:488–498

Park B-J, Pedrycz W, Oh S-K (2010) Polynomial-based radial basis function neural networks (P-RBF NNs) and their application to pattern classification. Appl Intell 32:27–46

Hassan YF (2011) Rough sets for adapting wavelet neural networks as a new classifier system. Appl Intell 35:260–268

Kim K-j, Ahn H (2012) Simultaneous optimization of artificial neural networks for financial forecasting. Appl Intell 36:887–898

Tsoi AC, Back AD (1994) Locally recurrent globally feedforward networks: a critical review of architectures. IEEE Trans Neural Netw 5(2):229–239

Kleinfeld D (1986) Sequential state generation by model neural network. In: Proceedings of the national academy of sciences of the United States of America, pp 9469–9473

Waibel A, Hanazawa T, Hinton G, Shikano K, Lang KJ (1989) Phoneme recognition using time-delay neural networks. IEEE Trans Acoust Speech Signal Process 37(3):328–339

Lippmann RP (1989) Review of neural networks for speech recognition. Neural Comput 1(1):1–38

Moustra M, Avraamides M, Christodoulou C (2011) Artificial neural network for earthquake prediction using time series magnitude data or seismic electric signals. Expert Syst Appl 38:15032–15039

Kak S (1995) On quantum neural computing. Inf Sci 83(3–4):143–160

Purushothaman G, Karayiannis NB (1997) Quantum neural networks (QNNs): inherently fuzzy feedforward neural networks. IEEE Trans Neural Netw 8(3):679–693

Zak M, Williams CP (1998) Quantum neural nets. Int J Theor Phys 37(2):651–684

Maeda M, Suenaga M, Miyajima H (2007) Qubit neuron according to quantum circuit for XOR problem. Appl Math Comput 185(2):1015–1025

Gupta S, Zia RKP (2001) Quantum neural network. J Comput Syst Sci 63(3):355–383

Shafee F (2007) Neural network with quantum gated nodes. Eng Appl Artif Intell 20(4):429–437

Li PC, Li SY (2008) Learning algorithm and application of quantum BP neural network based on universal quantum gates. J Syst Eng Electron 19(1):167–174

Li PC, Song KP, Yang EL (2010) Model and algorithm of neural network with quantum gated nodes. Neural Netw World 11(2):189–206

da Silva AJ, de Oliveira WR, Ludermir TB (2012) Classical and superposed learning for quantum weightless neural network. Neurocomputing 75(1):52–60

Gonzalez-Carrasco I, Garcia-Crespo A, Ruiz-Mezcua B, Lopez-Cuadrado JL (2011) Dealing with limited data in ballistic impact scenarios: an empirical comparison of different neural network approaches. Appl Intell 35:89–109

Gonzalez-Carrasco I, Garcia-Crespo A, Ruiz-Mezcua B, Lopez-Cuadrado JL (2012) An optimization methodology for machine learning strategies and regression problems in ballistic impact scenarios. Appl Intell 36:424–441

Hagan MT, Demuth HB, Beale MH (1996) Neural network design. PWS Publishing Company, USA

Bennett KP, Mangasarian OL (1992) Robust linear programming discrimination of two linearly inseparable sets. Optim Methods Softw 1(1):23–34

Acknowledgements

We thank the three anonymous reviewers sincerely for their many constructive comments and suggestions, which have tremendously improved the presentation and quality of this paper. This work was supported by the National Natural Science Foundation of China (Grant No. 61170132).


Appendix: The gradient calculation in Levenberg–Marquardt algorithm


According to the gradient descent algorithm in Ref. [22], the gradients with respect to the rotation angles of the quantum rotation gates in the hidden layer and the connection weights in the output layer can be calculated as follows

where

Based on the above Eq. (45), we obtain

where \(j = 1, 2, \ldots, p\), \(k = 1, 2, \ldots, m\), \(r = 1, 2, \ldots, q\), and \(l = 1, 2, \ldots, L\).

The gradient of the connection weights in the output layer can be calculated as follows
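The gradients in this appendix are consumed by a Levenberg–Marquardt update. For reference, the standard LM step for a residual vector e = y − f(w) with Jacobian J = ∂f/∂w is \(\Delta w = (J^{T}J + \mu I)^{-1} J^{T} e\). The damping μ is decreased after a successful step and increased after a failed one. The sketch below applies this generic update to a toy exponential curve fit. The toy model, starting point and μ schedule are illustrative assumptions, and the QNNSI-specific gradients are not implemented here.

```python
import numpy as np

def lm_fit(w0, residual_fn, jacobian_fn, mu=1e-2, factor=10.0, max_iter=100, tol=1e-12):
    """Generic Levenberg-Marquardt: residual r(w) = y - f(w), Jacobian J = df/dw."""
    w = np.asarray(w0, dtype=float)
    cost = 0.5 * np.sum(residual_fn(w) ** 2)
    for _ in range(max_iter):
        r, J = residual_fn(w), jacobian_fn(w)
        # Damped normal equations: (J^T J + mu I) dw = J^T r
        dw = np.linalg.solve(J.T @ J + mu * np.eye(w.size), J.T @ r)
        w_new = w + dw
        new_cost = 0.5 * np.sum(residual_fn(w_new) ** 2)
        if new_cost < cost:
            w, cost = w_new, new_cost
            mu = max(mu / factor, 1e-12)  # success: behave more like Gauss-Newton
            if np.linalg.norm(dw) < tol:
                break
        else:
            mu = min(mu * factor, 1e12)   # failure: behave more like gradient descent
    return w

# Toy problem (illustrative): fit y = a * exp(b * x) to noise-free data with a=2, b=-1.5.
x = np.linspace(0.0, 1.0, 20)
y = 2.0 * np.exp(-1.5 * x)

def residual(w):
    return y - w[0] * np.exp(w[1] * x)

def jacobian(w):
    e = np.exp(w[1] * x)
    return np.column_stack([e, w[0] * x * e])  # columns: df/da, df/db

w_hat = lm_fit([1.0, 0.0], residual, jacobian)
print(w_hat)
```

Small μ makes the step close to Gauss–Newton, and large μ makes it a short gradient-descent step. This interpolation is why LM is usually more robust than either method alone.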

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Li, P., Xiao, H. Model and algorithm of quantum-inspired neural network with sequence input based on controlled rotation gates. Appl Intell 40, 107–126 (2014). https://doi.org/10.1007/s10489-013-0447-3
