Abstract

Programmatic cost assessment of clinical interventions can inform future dissemination and implementation efforts. We conducted a randomized trial of Project ImPACT (Improving Parents As Communication Teachers) in which community early intervention (EI) providers coached caregivers in techniques to improve young children’s social communication skills. We estimated implementation and intervention costs while demonstrating an application of Time-Driven Activity-Based Costing (TDABC). We defined Project ImPACT implementation and intervention as processes that can be broken down successively into a set of procedures, and we created process maps for both implementation and intervention delivery. We determined resource use and costs per unit procedure in the first year of the program from a payer perspective. We estimated total implementation cost per clinician and per site and intervention cost per child, and we provide estimates of total hours spent and associated costs for implementation strategies, intervention activities, and their detailed procedures. Total implementation cost was $43,509 per clinic and $14,503 per clinician. Clinician time (60%) and coach time (12%) were the most expensive personnel resources. Implementation coordination and monitoring (47%), ongoing consultation (26%), and clinician training (19%) comprised most of the implementation cost, followed by fidelity assessment (7%) and stakeholder engagement (1%). Per-child intervention costs were $2619 for Project ImPACT delivered at one hour per week and $9650 for Project ImPACT delivered at four hours per week. Clinician and clinic leader time accounted for 98% of per-child intervention costs. The highest-cost intervention activity was ImPACT delivery to parents (89%), followed by assessment of the child’s eligibility for ImPACT (10%). The findings can be used to inform funding and policy decision-making to enhance early intervention options for young children with autism.
Clinicians’ uncompensated time costs are large, which raises practical and ethical concerns; these costs should be considered when planning implementation initiatives. In program budgeting, decision-makers should anticipate resource needs for coordination and monitoring activities. TDABC may encourage researchers to assess costs more systematically, relying on process mapping and gathering prospective data on resource use and costs concurrently with their collection of other trial data.

Introduction

Early intervention (EI) services are critical for improving long-term learning outcomes for children with autism spectrum disorder (ASD). Interventions for ASD initiated at earlier ages improve children’s cognition, language, behavior, and development (Boyd et al., 2010; Bradshaw et al., 2015; Rogers, 1996). Caregiver-mediated interventions are gaining widespread recognition as an effective and feasible approach to EI for young children with ASD (Green et al., 2015; Kasari et al., 2014; Pickard et al., 2016). In addition to improving child outcomes across a range of developmental domains and reducing challenging behavior, they improve parental self-efficacy and treatment engagement and reduce parental stress (Green et al., 2010; Kasari et al., 2014; Rogers et al., 2012; Stadnick et al., 2015; Wetherby & Woods, 2006).

Project ImPACT (Improving Parents As Communication Teachers) is an evidence-based caregiver coaching model for families of young children with ASD (Ingersoll & Dvortcsak, 2019). It is a manualized, caregiver-mediated, naturalistic developmental behavioral intervention (Schreibman et al., 2015) that includes two core components: (1) a child-directed curriculum to guide caregivers in supporting their child’s social communication; and (2) guidelines to help EI providers coach caregivers in using the intervention strategies. Project ImPACT has demonstrated efficacy in improving caregiver and child outcomes, including caregivers’ treatment adherence and responsiveness and children’s language, communication, and behavior (Stadnick et al., 2015; Stahmer et al., 2020; Yoder et al., 2021).

We collaborated with the Philadelphia Infant Toddler Early Intervention System (Philadelphia EI system) to conduct a two-year, randomized, implementation-intervention (hybrid) trial to determine the effectiveness of Project ImPACT in community settings while also examining the feasibility and potential utility of the implementation strategies employed (hybrid type II trial; Curran et al., 2012). Philadelphia is one of the first EI systems to implement Project ImPACT system-wide. Because cost is a leading barrier to the adoption and sustainability of evidence-based practices (EBP) (Aarons et al., 2009; Bond et al., 2014), decision-makers would greatly benefit from learning about the budgetary impact of preparing to implement Project ImPACT in their agencies. Uncertainty regarding the costs and cost-effectiveness of such programs can deter their implementation. A comprehensive analysis of programmatic costs can mitigate this barrier and inform dissemination efforts by presenting the resources and associated costs required for implementation and intervention. Such information is also useful for comparing the costs of early intervention programs.

Rigorous, detailed, and transparent resource use and cost estimates are not typically reported in implementation studies (Bowser et al., 2021; Eisman et al., 2020; Gold et al., 2016; Powell et al., 2019). This is at least partly due to a lack of clearly defined and standardized costing methods for use in implementation science (Bowser et al., 2021; Dopp et al., 2019a; Roberts et al., 2019). Recently, an approach to costing implementation strategies has been proposed that combines Time-Driven Activity-Based Costing (TDABC) with the Proctor et al. rubric, a set of guidelines for specifying and reporting implementation strategies (Cidav et al., 2020). Blending the two approaches allows researchers to routinely estimate the resource use and costs of implementation strategies alongside the other rubric elements. The method has been demonstrated with synthetic data generated to exemplify it but has not been applied in a real-world implementation initiative. In addition, the synthetic example focuses on estimating implementation strategy costs; the viability of the method for estimating the cost of the intervention being implemented has not been explored.

In this study, we aim to estimate Project ImPACT implementation and intervention delivery costs while demonstrating an application of the TDABC-Proctor rubric approach in a hybrid trial setting with real-world data. Our cost estimates will be used in a future economic evaluation study to examine intervention outcomes relative to their costs.

Methods

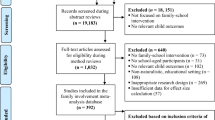

Project ImPACT

Project ImPACT is a parent-mediated intervention for young children with autism that blends developmental and naturalistic behavioral intervention techniques to teach core social communication skills (social engagement, language, social imitation, and play) during play and daily routines. To study the effects of Project ImPACT on children’s social communication outcomes and on their parents’ responsiveness and self-efficacy, as well as the strategies used to implement Project ImPACT, we are conducting a two-year hybrid type II (Curran et al., 2012) randomized controlled trial in which 6 community early intervention agencies in Philadelphia were blocked into two groups of 3 based on agency size. Within each group, each agency was randomized to one of three conditions: (1) ImPACT at a dose of 1 h per week over six months (low dose Project ImPACT, 24 sessions in total); (2) ImPACT at 4 h per week over six months (high dose Project ImPACT, 96 sessions in total); or (3) treatment as usual.

These agencies are representative of the broader service system regarding the number of clinicians employed (23 per agency), and the number of children with or at risk for ASD they serve (36 per agency per year). All clinicians in these agencies have a Bachelor’s or Master’s degree in a relevant field (e.g., psychology, education, speech pathology). Most are independent contractors, provide home-based services, use an interdisciplinary approach, and have a treatment philosophy that includes caregivers engaging with their children.

From each of the 6 participating community agencies, 3 clinicians (18 total) are trained in Project ImPACT via a self-paced online tutorial, virtual interactive workshops, and virtual group and individual consultations. From each clinician’s caseload, 3 children younger than 30 months of age (54 total) and their families are recruited. Although Project ImPACT was developed as an in-home intervention, COVID-19-related challenges required the intervention protocol to be changed so that Project ImPACT was delivered virtually over the Zoom platform. Clinicians in the active treatment groups meet with families virtually every week for six months to deliver Project ImPACT for either 1 h or 4 h, depending on the assigned randomization group. Clinicians in the treatment as usual group meet with families virtually for 1 to 3 h each week to deliver standard early intervention focused on meeting each child’s individual developmental and communication goals using play-based therapeutic interactions.

Child and parent outcomes are measured through direct observation and self-report at baseline and 6 months. In addition to intervention effectiveness, implementation outcomes will be examined (implementation-intervention hybrid type II trial) to determine the feasibility and potential utility of the clinician training and education, ongoing consultation, and fidelity monitoring strategies. Various qualitative and quantitative measures of the implementation outcomes of acceptability, adoption, feasibility, and clinician and parent fidelity are collected. Analyses of Project ImPACT implementation and effectiveness outcomes in a publicly funded EI setting are ongoing, and research publications are underway.

TDABC and Proctor et al. Framework for Estimating Implementation Strategy and Intervention Costs

TDABC is based on process mapping, which requires a systematic, detailed, and clear specification of processes that can be broken down successively into a set of exact steps needed to complete each process.

The Proctor rubric for implementation strategy specification and reporting (Cidav et al., 2020) recommends that, when studying implementation strategies, the actor (who), action (does what), temporality (when), dosage (frequency and duration), action target, justification, and outcomes be clearly specified. Cidav et al. (2020) proposed a 5-step approach to applying TDABC in implementation science, conceptualizing an implementation strategy as a process of executing a series of specific procedures using personnel resources and non-personnel resources such as equipment and supplies. The Proctor rubric is then used in TDABC’s process-mapping step to operationalize implementation strategies. By conceptualizing the intervention as a process as well, this approach can be extended to estimating intervention costs and to hybrid trial settings.
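Under this conceptualization, the cost roll-up carried out in Steps 2 to 5 below can be summarized in a few equations. The notation is our own sketch, not notation taken from Cidav et al. (2020): $f_a$ is the time-driver frequency of action $a$, $t_a$ its unit time in hours, $n_{a,k}$ the number of actors of job category $k$ present in one occurrence, $w_k$ the benefit-loaded hourly rate of category $k$, $s$ indexes implementation strategies and intervention activities, and $NP$ denotes non-personnel expenses.

$$
T_{a,k} = f_a \, t_a \, n_{a,k}, \qquad
C_a = \sum_{k} T_{a,k} \, w_k, \qquad
C_s = \sum_{a \in s} C_a, \qquad
C_{\text{total}} = \sum_{s} C_s + NP
$$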

Data Collection and Cost Estimation

We defined two overarching processes: (1) Project ImPACT implementation and (2) Project ImPACT intervention. We defined the implementation process as the set of actions and methods used to enable clinicians and clinics to take up Project ImPACT, introduce it into clinicians’ daily practice, and establish it in clinics. We defined the intervention process as the set of actions that comprise the intervention being implemented, that is, what the children/families receive as opposed to “what otherwise would have happened” (Ovretveit, 2014). In our specific context, Project ImPACT implementation actions are not permanent: they are envisioned to be in place only for a certain period (although they might be reintroduced in the future based on a clinic’s specific needs), whereas intervention actions are permanent and would remain in place as long as the clinic provides Project ImPACT. Hence, implementation costs are one-time start-up costs incurred in the first year of the program, while intervention costs are ongoing costs.

Our costing and data collection approach involved the following:

- Step 1: Name/identify the implementation strategies and intervention activities and list the associated actions, actors, and temporality.

For TDABC's process mapping step, we conducted interviews with the key personnel (e.g., study supervisor, project coordinator) on operational details to fully understand and document the implementation and intervention process and to create a blueprint of the clinical trial. We identified core implementation strategies, intervention activities and their specific workflows through the study protocol, in close collaboration with the key study personnel. In naming implementation strategies, we used existing taxonomies in the literature (Dopp et al., 2019b; Pinnock et al., 2017; Powell et al., 2015). For each core implementation strategy and intervention activity, we delineated specific actions. Then for each action, we listed the actors necessary for carrying out a single occurrence of the action and determined their temporality. In this way, we created a map of the implementation strategies, intervention activities, their associated actions, actors, and temporality and validated them with the key study personnel.
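The resulting map can be held in a simple nested structure in which each strategy or activity owns its actions and each action records how many people from each job category take part in one occurrence. The Python sketch below is illustrative only: the class, field, and function names are hypothetical, and the single entry reuses the actor composition reported for the interactive educational meeting in the Results, with an assumed phase label.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """A single mapped action (Proctor rubric: actor, action, temporality)."""
    name: str
    actors: dict[str, int]  # job category -> people present in ONE occurrence
    temporality: str        # e.g., trial phase in which the action occurs


@dataclass
class Process:
    """An implementation strategy or intervention activity and its actions."""
    name: str
    kind: str  # "implementation strategy" or "intervention activity"
    actions: list[Action] = field(default_factory=list)


def headcount(action: Action) -> int:
    """Total number of people involved in one occurrence of an action."""
    return sum(action.actors.values())


# Illustrative entry; the phase label is an assumption, not taken from the tables.
training = Process(
    name="Clinician training and education",
    kind="implementation strategy",
    actions=[
        Action(
            name="Interactive educational meeting",
            actors={"clinician": 12, "consultant": 1,
                    "external trainer": 1, "project coordinator": 2},
            temporality="pre-intervention phase",
        )
    ],
)

print(headcount(training.actions[0]))  # 16
```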

We defined research activities as activities that would not typically occur if the initiative did not have an embedded research component. Based on input from the EI system partners and intervention developers, we excluded from process mapping any data collection conducted solely for research purposes, as well as the management and analysis of these data.

- Step 2: Determine the frequency and average duration of each implementation and intervention action by actors, and calculate actors’ total time spent on each action.

For each action, we defined a “time driver”: a feature that causally affects the time required to perform the action and measures the volume of the action (e.g., number of consultation sessions). We then determined the “unit time”, the time required to perform a single occurrence of the time driver (e.g., duration of a single consultation session). We defined the whole process at the level of time drivers and unit times, allowing each element to be costed on a per-instance basis.
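Once time drivers and unit times are defined, each job category’s time on an action follows from multiplying the time-driver count by the unit time and by the number of people from that category present at each occurrence. A minimal Python sketch; the function name and the example values (10 group consultation sessions of 1.5 h attended by 12 clinicians) are hypothetical.

```python
def actor_hours(frequency: float, unit_time_h: float, n_actors: int = 1) -> float:
    """Hours one job category spends on an action.

    frequency   -- time-driver count (e.g., number of consultation sessions)
    unit_time_h -- hours for a single occurrence (e.g., length of one session)
    n_actors    -- people from this job category present at each occurrence
    """
    return frequency * unit_time_h * n_actors


# Hypothetical: 10 group consultation sessions of 1.5 h attended by 12 clinicians.
print(actor_hours(10, 1.5, 12))  # 180.0
```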

For all actions except those related to communication (meetings, phone calls, emails, and texts), time driver frequencies and unit durations were collected in real time as part of the trial data collection, and their actual values were documented. For communication-related actions, real-time documentation was not feasible. Meetings were pre-scheduled, recurring, and standardized (e.g., monthly meetings), which allowed us to estimate their time driver frequencies and durations. For phone calls, emails, and texts, we relied on vignette-based data collection (Quigley et al., 2020): we observed the frequencies and unit durations of these actions over a pre-determined period and then extrapolated them to estimate frequencies and durations over the study period.

Communication actions also included communication among team members for managing research activities. Based on the study team’s input, we assumed that 20% of communication actions were solely for research purposes and therefore excluded them from the analysis.
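The vignette-based extrapolation and the research-share exclusion can be combined as below. This sketch assumes that observed counts scale linearly with time from the observation window to the study period; the function names and example inputs (30 emails observed over 2 weeks, 15 minutes per email, a 52-week period) are hypothetical, and only the 20% research share comes from the text.

```python
RESEARCH_SHARE = 0.20  # share of communication assumed to serve research only (from the text)


def extrapolate(observed_count: float, observed_weeks: float, study_weeks: float) -> float:
    """Scale a count observed over a short window to the full study period.

    Assumes the action occurs at a constant rate over time (an assumption).
    """
    return observed_count * study_weeks / observed_weeks


def exclude_research(count: float, research_share: float = RESEARCH_SHARE) -> float:
    """Keep only the share of communication attributed to program delivery."""
    return count * (1.0 - research_share)


# Hypothetical example: 30 emails observed over 2 weeks, 15 minutes (0.25 h) each.
emails = exclude_research(extrapolate(30, observed_weeks=2, study_weeks=52))
email_hours = emails * 0.25
print(round(emails), round(email_hours))  # 624 156
```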

- Step 3: Determine the price per hour of each actor.

We used the actual wage rates of the involved personnel. We increased the wage rates by 30%, reflecting average employer costs for employee compensation (https://www.bls.gov/), to incorporate workplace benefits, such as health insurance, paid by the employer.
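The benefit adjustment is a single multiplicative load on each actor’s hourly wage. A minimal Python sketch; the 30% load comes from the text, while the function name and the $30 example wage are hypothetical.

```python
BENEFITS_LOAD = 0.30  # employer benefit costs as a share of wages (from the text)


def loaded_hourly_rate(hourly_wage: float, load: float = BENEFITS_LOAD) -> float:
    """Hourly wage inflated to include employer-paid benefits."""
    return hourly_wage * (1.0 + load)


# Hypothetical wage of $30/h.
print(round(loaded_hourly_rate(30.0), 2))  # 39.0
```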

- Step 4: Determine non-personnel resources and their associated expenses.

We itemized non-personnel resources and determined the expense for each item using the project budget and expense reports.

- Step 5: Calculate total costs.

We calculated actor-level action costs by multiplying the time each actor spent on the action by the actor’s wage rate. Summing these actor-level costs gave the cost of each action, and summing action costs gave the cost of each implementation strategy and intervention activity. The sum of all action costs yielded the total personnel time cost. We added non-personnel costs to obtain the total cost of Project ImPACT during the study period.
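The aggregation in Step 5 can be sketched as a single pass over actor-level rows. This is a minimal illustration rather than the study’s actual workbook: the rows, hours, loaded rates, and the $500 non-personnel amount are all hypothetical.

```python
from collections import defaultdict

# One row per actor category per action (hypothetical values):
# (strategy or activity, action, actor category, hours, loaded hourly rate)
rows = [
    ("Ongoing consultation", "Group consultation", "clinician", 180.0, 40.0),
    ("Ongoing consultation", "Group consultation", "consultant", 15.0, 80.0),
    ("Ongoing consultation", "Individual consultation", "clinician", 24.0, 40.0),
    ("Fidelity assessment", "Video rating", "consultant", 10.0, 80.0),
]


def roll_up(rows, non_personnel=0.0):
    """Aggregate actor-level costs to actions, strategies/activities, and totals."""
    action_cost = defaultdict(float)
    process_cost = defaultdict(float)
    for process, action, _actor, hours, rate in rows:
        cost = hours * rate                     # actor-level action cost
        action_cost[(process, action)] += cost  # summed over actors -> action cost
        process_cost[process] += cost           # summed over actions -> strategy/activity cost
    personnel = sum(action_cost.values())       # total personnel time cost
    return dict(action_cost), dict(process_cost), personnel, personnel + non_personnel


actions, processes, personnel, total = roll_up(rows, non_personnel=500.0)
print(processes)         # {'Ongoing consultation': 9360.0, 'Fidelity assessment': 800.0}
print(personnel, total)  # 10160.0 10660.0
```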

Analysis

We conducted a “programmatic (i.e., implementation and intervention) cost” study, which aims to estimate the costs that would fall on those paying for the program; thus, we excluded costs such as broader healthcare system costs and caregiver time costs. We did, however, include the opportunity cost of clinician time to capture true costs, although payers may not compensate clinicians for this time.

We assessed costs from the payer perspective. The payers of the implementation and intervention delivery were, respectively, the Eagles Autism Foundation and the Philadelphia EI system. The time horizon was the first year of the study. Since our focus is on estimating Project ImPACT programmatic costs, we only included Project ImPACT participants (clinicians and children/caregivers) and excluded usual care arm participants. Programmatic costs, as defined here, are $0 for those who did not participate in the program.

We estimated total implementation cost per clinician and per site, and Project ImPACT intervention cost per child and per hour. We provided detailed estimates of total hours spent and associated costs by actor, action, implementation strategy, and intervention activity, and compared them with the total overall cost. When possible, we provided standard deviations for action frequencies and unit durations.

For sensitivity analysis, we estimated costs (1) assuming perfect caregiver attendance at Project ImPACT sessions; (2) using United States national average wage rates (https://www.bls.gov/) for the occupations likely to carry out the activities performed by the study personnel; (3) including travel to and from the child’s home as an additional intervention action, to estimate the costs that would be incurred if Project ImPACT were delivered in the home; and (4) using different action frequencies, as these are likely to vary across settings and time periods. For the last analysis, we assumed that 50% of communication actions would not occur in the long term, when the intervention matures, or in implementation initiatives without an embedded research component, and we adjusted action frequencies accordingly.
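The fourth sensitivity analysis amounts to re-running the cost calculation with communication frequencies scaled down. The Python sketch below is a simplification under stated assumptions: each action has a single actor, and the action names, frequencies, unit times, and rates are hypothetical; only the 50% reduction comes from the text.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CostedAction:
    """An action priced as time-driver frequency x unit time x loaded hourly rate."""
    name: str
    frequency: float       # time-driver count over the costing period
    unit_time_h: float     # hours per occurrence
    rate: float            # loaded hourly rate of the (single, assumed) actor
    communication: bool = False

    @property
    def cost(self) -> float:
        return self.frequency * self.unit_time_h * self.rate


def scenario_cost(actions, comm_factor=1.0):
    """Total cost after scaling the frequency of communication actions by comm_factor."""
    total = 0.0
    for action in actions:
        if action.communication:
            action = replace(action, frequency=action.frequency * comm_factor)
        total += action.cost
    return total


# Hypothetical inputs: one communication action and one consultation action.
mapped_actions = [
    CostedAction("Email exchanges", frequency=600, unit_time_h=0.25, rate=40.0, communication=True),
    CostedAction("Group consultation", frequency=20, unit_time_h=1.5, rate=40.0),
]
print(scenario_cost(mapped_actions))                   # 7200.0
print(scenario_cost(mapped_actions, comm_factor=0.5))  # 4200.0
```

Keeping the records immutable and deriving each scenario with `replace` leaves the base-case inputs untouched, so several scenarios can be run from the same list.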

Results

Detailed estimates of resource use and costs of implementation and of low dose and high dose intervention can be found in Tables S1-S3 in the Supplementary File. Tables 1, 2, 3 are simplified versions of these tables. We organize our results by the TDABC steps presented above.

- Step 1: Name/identify the implementation strategies and intervention activities and list the associated actions, actors, and temporality.

We defined two phases of the trial, a pre-intervention phase and an intervention phase, which show the timeline and flow of the processes. Fig. 1 depicts the process map of the program by phase, delineating the implementation strategies, intervention activities, and their specific actions, which are listed in Columns A and B of Tables 1, 2, 3. Action descriptions and temporality are presented in Tables S1-S3. The implementation process consists of 5 discrete implementation strategies: stakeholder engagement, clinician training and education, ongoing consultation, fidelity assessment (with 3 discrete sub-strategies: fidelity assessment for direct Project ImPACT delivery, for goal setting, and for parent coaching), and implementation coordination and monitoring. Stakeholder engagement involved meeting with clinics individually and as a group. Clinician training and education involved a self-paced online training and interactive educational meetings. Ongoing consultation involved individual and group consultation sessions. Clinician fidelity assessment involved watching recorded videos, rating them for clinician fidelity, and evaluating clinicians’ own fidelity ratings. Implementation coordination and monitoring involved communicating via virtual meetings, ad hoc phone calls, email, and text.

The intervention process consists of 2 activities: assessment for Project ImPACT eligibility and Project ImPACT delivery. Assessment for Project ImPACT eligibility involved conducting a developmental assessment. Project ImPACT delivery involved conducting caregiver coaching sessions, completing administrative work, and conducting case reviews. In the sensitivity analyses, this activity also included travel to and from the child’s home.

During the first year of the study, overall personnel consisted of 25 individuals (actors): 1 project manager, 2 project coordinators, 1 external trainer, 1 consultant, 12 clinicians, and 8 clinic leaders from 4 clinics. We report actors on a per-action basis; that is, we list the job category and the number of actors within that category involved in a single occurrence of each action (Tables S1-S3). For example, a single occurrence of the interactive educational meeting was attended by 12 clinicians, 1 consultant, 1 external trainer, and 2 project coordinators (Table S1).

- Step 2: Determine the frequency and average duration of each implementation and intervention action by actors, and calculate actors’ total time spent on each action.

Column D in Tables 1, 2, 3 shows total time spent on each action by each actor. For simplicity, time drivers, their frequencies and unit durations are presented in Tables S1-S3.

- Step 3: Determine the price per hour of each actor.

Column E in Tables 1, 2, 3 presents hourly wage rates for clinic leaders, clinicians, consultant, external trainer, project coordinator and project manager.

- Step 4: Determine non-personnel resources and their associated expenses.

Non-personnel resources that are not time-driven and are shared across actions included the Project ImPACT manuals for clinicians and caregivers (Tables 2 and 3). No non-personnel costs other than the manuals were incurred. The technology used was the Zoom app, which all caregivers and clinicians accessed on their own devices (computer, phone, or iPad) using their own internet service. No technology costs were incurred in this study.

- Step 5: Calculate total costs.

Action costs by actor, total time spent on each action, total cost of each action, and total time spent on each implementation strategy and intervention activity are given in Columns F-J of Tables 1, 2, 3. At the bottom of Tables 1, 2, 3, we present the total personnel and non-personnel costs of Project ImPACT implementation and intervention.

In the first year of the program, estimated total personnel hours devoted to implementation were 2168, with an associated total implementation cost of $174,038 (2021 price year), or $43,509 per clinic and $14,503 per clinician, all of which were personnel time costs (Table 1). Only 40% of these costs were compensated by the Philadelphia EI system; the remainder was uncompensated clinician time cost (60% of total costs). Consultant time (12%) was the next most expensive personnel resource, followed by project manager (10%), project coordinator (9%), external trainer (6%), and clinic leader (3%) time. Implementation coordination and monitoring (47%), ongoing consultation (26%), and clinician training (19%) represented most of the implementation cost, followed by fidelity assessment (7%) and stakeholder engagement (1%). The highest-cost implementation actions were communicating via email (21%), conducting group consultation (17%), and communicating via phone/virtual meetings (15%), followed by interactive educational meetings (13%), individual consultation (9%), and communicating via text (7%). The remaining implementation action costs were relatively small (< 3% each). Implementation costs did not vary between the low dose and high dose intervention arms, since all clinicians completed the same implementation tasks under the Project ImPACT training protocol regardless of their assignment to intervention arms.
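The per-clinic and per-clinician figures follow from dividing the total by the 4 participating clinics and 12 trained clinicians (3 per clinic) reported in Step 1. The Python sketch below assumes an even split across clinics and clinicians; dropping cents reproduces the reported values, and the average cost per personnel hour and the uncompensated clinician amount are derived here for illustration rather than reported in the text.

```python
TOTAL_IMPLEMENTATION = 174_038  # first-year implementation cost (2021 USD), from the text
TOTAL_HOURS = 2168              # personnel hours devoted to implementation, from the text
N_CLINICS = 4                   # clinics in the two Project ImPACT arms
CLINICIANS_PER_CLINIC = 3
CLINICIAN_SHARE = 0.60          # share of cost that was uncompensated clinician time

per_clinic = TOTAL_IMPLEMENTATION / N_CLINICS
per_clinician = TOTAL_IMPLEMENTATION / (N_CLINICS * CLINICIANS_PER_CLINIC)
per_hour = TOTAL_IMPLEMENTATION / TOTAL_HOURS         # derived average, not reported
uncompensated = CLINICIAN_SHARE * TOTAL_IMPLEMENTATION

# Dropping cents reproduces the reported per-clinic and per-clinician figures.
print(int(per_clinic), int(per_clinician), round(per_hour, 2))  # 43509 14503 80.28
print(round(uncompensated))                                     # 104423
```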

For low dose Project ImPACT delivered virtually over six months, the per-child intervention cost was $2576 (21 h of personnel time, $125 per hour), of which 1% was the expense for Project ImPACT manuals (Table 2) and the remainder was the cost of clinician (98%) and clinic leader (1%) time. For high dose Project ImPACT delivered virtually over six months, the per-child cost was $9650 (77 h of personnel time, $125 per hour) (Table 3). The highest-cost intervention activity was Project ImPACT delivery to caregivers (89%), followed by assessment of the child’s ImPACT eligibility (10%). The highest-cost intervention actions were conducting caregiver coaching sessions (68%) and completing administrative work (17%), followed by conducting developmental assessments (10%) and conducting case reviews (5%).

Regarding variance estimates, cost variation could arise from variation in activity frequencies and unit durations across or within actors in the same job category who perform a specific task. In most cases, the small number of actors (1 project manager, 2 project coordinators, 1 external trainer, 1 consultant) left too little variation to estimate. In addition, implementation actions for clinicians were protocolized for Project ImPACT certification purposes: the same implementation tasks were assigned to all clinicians at the same frequency and duration, and each clinician had to complete them to obtain certification.

Among intervention activities, developmental assessment, administrative work, and case review were highly standardized actions whose frequencies and unit durations did not vary meaningfully across or within clinicians or clinic leaders. Caregiver coaching sessions are designed and manualized as 1-h sessions. They were scheduled with caregivers for an hour, and there was little room for flexibility in duration, since the providers were clinicians with large workloads, mostly contract-based, who had other appointments to attend. The number of caregiver coaching sessions per child, however, varied across clinicians: the mean number was 14 (SD = 7) for low dose Project ImPACT and 59 (SD = 21) for high dose Project ImPACT.

Sensitivity Analysis

Assuming perfect caregiver attendance at Project ImPACT coaching sessions, the per-child intervention cost was $4181 for low dose Project ImPACT and $15,431 for high dose Project ImPACT.

Using United States national average wage rates (https://www.bls.gov/) for the occupations likely to carry out the activities performed by the study personnel (social and community service manager for clinic leader, $47; therapist for clinician, $39; psychologist for consultant and external trainer, $62; administrative support worker for project coordinator, $26; medical and health services manager for project manager, $74), the first-year, one-time implementation cost was $8270 per clinician and $24,810 per site. Using national average wage rates, the per-child intervention cost was $842 for low dose intervention and $3055 for high dose intervention.

If we include travel to and from the child’s home as an additional intervention action, to estimate the costs incurred if Project ImPACT were delivered in the home, the per-child cost would be $4369 for low dose intervention and $17,025 for high dose intervention.

When we decreased the frequency of communication actions by 50%, the first-year, one-time implementation cost was $11,520 per clinician and $34,561 per site.

Discussion

Estimating the costs of implementing and delivering EI programs supports policy makers and health administrators in making budgeting decisions and allocating scarce healthcare resources efficiently. In this study, we estimated the implementation and intervention delivery costs of Project ImPACT while demonstrating an application of the TDABC-Proctor rubric approach in a hybrid trial setting with real-world data. In the first year of the program, estimated total personnel hours devoted to implementation were 2168, with an associated total implementation cost of $174,038 (2021 price year), or $43,509 per clinic and $14,503 per clinician, all of which were personnel time costs. For low dose Project ImPACT, the per-child intervention cost was $2576 (21 h of personnel time), of which 1% was the expense for Project ImPACT manuals and the remainder was time cost. For high dose Project ImPACT, the per-child cost was $9650 (77 h of personnel time).

Our costing approach yielded useful information about which programmatic factors have an important impact on implementation and intervention costs. In this case, per-clinician and per-child costs are affected by (1) the specific activities performed, (2) the frequency of activities, (3) the average duration per activity, (4) the personnel involved in activities, and (5) personnel wage rates. A change in any of these factors has cost implications. An inherent challenge in randomized controlled trials is the generalizability of findings (not only cost-related findings but also effectiveness results) to other settings. In implementation settings that do not have an embedded research component, the intervention might be carried out differently than in controlled trials; for example, it might have to be tailored or adapted to meet the community’s needs and available resources.

Reporting resource use in units together with unit prices, rather than as lump-sum dollar totals, helps address the challenge of generalizing cost estimates to other settings. When costs are disaggregated and reported in this way, a user interested in replicating an intervention in their local setting could apply unit prices specific to their setting, change resource units according to their needs (e.g., more clinician hours when their setting poses specific challenges), or change a specific component of the implementation strategy (e.g., provide only didactic training, or drop ongoing consultation if that fits their context) and see the cost implications of these changes.
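With resource units reported separately from prices, re-pricing for a new setting and switching components off become simple operations. The Python sketch below is hypothetical throughout: the hours by strategy and actor, the local hourly rates, and the function name are illustrative assumptions, not values from Tables 1, 2, 3.

```python
# Resource units: hours by (implementation strategy, actor category). Hypothetical values.
units = {
    ("Clinician training", "clinician"): 100.0,
    ("Clinician training", "consultant"): 20.0,
    ("Ongoing consultation", "clinician"): 150.0,
    ("Ongoing consultation", "consultant"): 40.0,
}


def local_cost(units, local_rates, drop=()):
    """Re-price reported resource units with local hourly rates, optionally dropping strategies."""
    return sum(
        hours * local_rates[actor]
        for (strategy, actor), hours in units.items()
        if strategy not in drop
    )


rates = {"clinician": 40.0, "consultant": 60.0}  # hypothetical local rates ($/h)
print(local_cost(units, rates))                                 # 13600.0
print(local_cost(units, rates, drop={"Ongoing consultation"}))  # 5200.0
```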

Our implementation cost estimates account for opportunity costs, that is, the effort and time invested by community partners for which they may not be compensated, and therefore represent true economic costs. These costs are not trivial: of the twelve clinicians, ten were contract employees who were not compensated for their time spent on training, consultation, and other implementation activities. The cost of their uncompensated time constituted the largest cost of implementation. Uncompensated labor exacerbates economic precarity, financial strain, and job-related stress among public mental health clinicians (Last et al., 2022). It also places a burden on the publicly funded EI system and on EI agencies, which themselves face financial constraints. These costs should be routinely captured in costing studies and considered when planning implementation initiatives and developing policies to support and retain the public mental health workforce.

It is difficult to compare our implementation cost estimates with those of other parent-mediated programs because cost studies in this area are scarce. Our per-clinician implementation cost estimate of $14,503 is almost twice the per-therapist cost reported for Parent–Child Interaction Therapy (PCIT) training and consultation in the Philadelphia behavioral health system (Okamura et al., 2017). However, these costs are not directly comparable because of methodological differences in cost estimation. In future studies, transparent and detailed descriptions of the costing methods used, along with detailed activity-level resource use and costs, would facilitate cross-study comparisons and increase the potential for findings to inform policy decisions.

Implementation coordination and monitoring costs, which arose from email and text correspondence and from virtual or phone meetings, constituted almost half of the implementation costs. High administrative costs have also been observed in other studies, which emphasize the importance of communication costs (Cidav et al., 2021; Ingels et al., 2016; Smith et al., 2020; Subramanian et al., 2011). Although we attempted to account for this in our sensitivity analysis, these large costs may still partly reflect the fact that the analysis took place alongside a research study, in which study personnel made greater-than-usual communication efforts to ensure that trial activities were performed per protocol. Nevertheless, in budgeting for future programs, decisionmakers should anticipate resource needs for coordination and monitoring activities and assess how these activities can be performed most efficiently (Dopp et al., 2020, 2021). As programs mature, the proportion of total costs devoted to such activities may decrease owing to more efficient program management and the routinization of certain procedures.

The intervention (ongoing) cost of Project ImPACT was low relative to its implementation (start-up) cost. Many studies have noted substantial start-up costs that are separate from the ongoing costs of delivering the interventions themselves (Bowser et al., 2021). In addition to informing program design, these cost estimates may be useful for comparison with the costs of other EI programs. The costs of EI programs for ASD are substantial; most US studies indicate that the annual cost of such programs ranges between $40,000 and $80,000 per child (Rogge & Janssen, 2019). At $125 per hour, delivering high-dose Project ImPACT (four hours per week) for 52 weeks, a total of 208 h, would cost $26,000 per year. Such comparisons should be made with caution. In most cases, direct comparison is not possible because of differences in methodological approach and underlying assumptions. For accurate cost comparisons across studies, standardized costing methods and transparent presentation of the cost analysis are essential. Future cost studies conducted prospectively to capture detailed activity-level costs would make such meaningful comparisons possible. Given previous evidence on Project ImPACT's efficacy (Stadnick et al., 2015; Stahmer et al., 2020; Yoder et al., 2021) and our cost estimates, it might be possible to provide high-quality EI for young children with ASD at significantly lower cost. However, more evidence on Project ImPACT's effects on child outcomes, and on its costs in different settings and populations, is needed to explore this possibility.
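The annual figure in the preceding paragraph is simple arithmetic, and a short check reproduces it. The only inputs are those stated in the text ($125 per hour, four hours per week at the high dose, and the hypothetical 52-week delivery scenario); the comparison against the reported EI range is a numeric check only, not a like-for-like cost comparison, for the methodological reasons given above.

```python
HOURLY_COST = 125     # dollars per hour of delivery (from the text)
HOURS_PER_WEEK = 4    # high-dose Project ImPACT
WEEKS = 52            # hypothetical year-round delivery, as in the text's scenario

annual_hours = HOURS_PER_WEEK * WEEKS
annual_cost = annual_hours * HOURLY_COST

# Annual per-child range reported for other US EI programs for ASD (Rogge & Janssen, 2019).
EI_LOW, EI_HIGH = 40_000, 80_000

print(f"{annual_hours} h x ${HOURLY_COST}/h = ${annual_cost:,}")
print("below the reported EI range:", annual_cost < EI_LOW)
```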

We found substantial travel costs associated with in-home delivery of Project ImPACT. We estimated that delivering Project ImPACT in the home would cost almost twice as much as delivering it virtually. This is consistent with previous findings that outpatient and in-home models were 2.62 and 2.64 times as expensive as telehealth, respectively (Little et al., 2018). Early intervention systems may be able to serve more families, especially in rural and underserved areas, and reduce program costs by using innovative service delivery models, such as combining face-to-face interactions with telehealth sessions (Little et al., 2018; Pickard et al., 2016). Recent research comparing in-home and telehealth delivery of Project ImPACT found no differences in parent or child outcomes (Hao et al., 2021), suggesting that virtual delivery may be a cost-effective option. To explore this possibility, future studies should evaluate the cost-effectiveness of different delivery modes.

This study has several important strengths. To our knowledge, it is the first to examine the implementation resources and costs of a manualized EI program in a community setting while also providing resource use and cost estimates for the intervention itself. We demonstrated an application of TDABC alongside the Proctor rubric in a hybrid trial setting to estimate both implementation and intervention costs. We carefully described our methods, including the TDABC setup, data collection, and the assignment of dollar values to the resources used, and we provided a transparent, detailed breakdown of resource use and costs across granular implementation and intervention tasks. This level of detail is often missing, which makes it difficult to compare results across studies and to evaluate the quality of cost collection and valuation (Eisman et al., 2021; Gold et al., 2022). Disaggregated, detailed information helps decision makers understand the true cost to their organizations of implementing and delivering Project ImPACT.

Another strength is that costs were estimated prospectively rather than from retrospective self-reports, avoiding potential recall bias. Once the Project ImPACT implementation and intervention processes were mapped, process data (e.g., the number of group consultation sessions and the duration of one group session) were captured in real time from trial documentation. This minimized the data collection burden on study personnel. Previous TDABC applications have shown that determining activity frequencies and establishing unit durations is less burdensome than asking personnel to log their time and activities (Kaplan & Anderson, 2007; Keel et al., 2017; Quigley et al., 2020; Silva Etges et al., 2019). Only for as-needed or ad hoc communication activities (e.g., phone calls, emails) did we need to rely on vignette-based estimation, in which staff tracked the frequency and duration of their ad hoc communication during two typical work weeks. Nonetheless, most implementation and intervention actions were relatively straightforward to capture using this method.

Another methodological strength is that this costing approach allows estimation of the replication costs that would be incurred in other settings or under different conditions, that is, when the implementation strategies or the Project ImPACT intervention must be tailored or adapted to the community's needs and available resources. For example, the number of individual consultation sessions may have to be reduced in low-resource settings; in that case, one would replace the frequency of individual consultation sessions in Table S1 and recalculate the total costs. If a provider expects to pay consultants and external trainers a different salary, multiplying the updated hourly rate by the time reported in Table S1 gives an estimated total personnel cost.
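The two adaptations described above amount to editing one input and recomputing. The sketch below assumes a simplified table of (activity, personnel, sessions, hours per session) rows; these rows and the hourly rates are hypothetical stand-ins and do not reproduce Table S1, and the helper names `personnel_cost` and `with_sessions` are illustrative.

```python
# Hypothetical rows standing in for Table S1: (activity, personnel, sessions, hours per session).
BASELINE = [
    ("individual consultation", "consultant", 20, 1.0),
    ("group consultation", "consultant", 12, 1.0),
    ("didactic training", "external trainer", 2, 6.0),
]
BASE_RATES = {"consultant": 100.0, "external trainer": 150.0}  # hypothetical $/h


def personnel_cost(rows, hourly_rates):
    """Sum of sessions x hours per session x hourly rate over all rows."""
    return sum(sessions * hours * hourly_rates[who] for _, who, sessions, hours in rows)


def with_sessions(rows, activity, new_sessions):
    """Copy of rows with the session count of one activity replaced."""
    return [
        (act, who, new_sessions if act == activity else sessions, hours)
        for act, who, sessions, hours in rows
    ]


baseline = personnel_cost(BASELINE, BASE_RATES)
# Low-resource setting: halve the number of individual consultation sessions.
fewer_sessions = personnel_cost(with_sessions(BASELINE, "individual consultation", 10), BASE_RATES)
# Different salary: update the consultant rate and multiply by the same time.
repriced = personnel_cost(BASELINE, {**BASE_RATES, "consultant": 90.0})

print(baseline, fewer_sessions, repriced)
```

Keeping frequencies and rates as separate inputs means each scenario changes exactly one quantity, so the cost effect of that change is easy to isolate.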

This study has limitations. First, it was conducted as part of a randomized field trial, so the results may not be applicable to non-trial settings. However, we provided sufficient information to estimate replication costs, as described above. Second, implementation coordination and monitoring costs may have been overestimated because some communication may have occurred only because it was part of the study, although we tried to account for this in our sensitivity analysis. However, given the granular information provided, these costs can easily be recalculated for different communication frequencies and unit durations. Third, the frequency and unit duration of communication activities were derived from vignette-based estimates because these data were not collected as part of other trial data collection, and it was not feasible for personnel to record every instance of communication. Fourth, insufficient variation in our data precluded us from estimating the variability of our cost estimates and examining uncertainty with a probabilistic costing approach. Lastly, we performed this analysis from a programmatic perspective. A more comprehensive cost estimation from a wider perspective would include the time costs of caregivers participating in Project ImPACT (e.g., time spent on program activities) and costs associated with changes in children's use of other services. In addition, we did not conduct a full economic evaluation in which the outcomes and costs observed in the intervention arm are compared with those of the usual care arm. Establishing the economic value of Project ImPACT requires examining its benefits in relation to its costs; our cost estimates are the first step in determining that value. Studies are currently underway to broaden the perspective of our cost analysis to account for possible changes in children's use of healthcare services and to assess the program's cost-effectiveness.

Conclusions

Our resource use and cost estimates can serve as a reference point for publicly funded EI systems that may wish to adopt Project ImPACT. The specific implementation and intervention procedures and staffing arrangements we identified in this study can serve as a model for implementing the program. However, cost is only one piece of the information needed to make decisions about community-based implementation of Project ImPACT; outcome information is also necessary. Efficacy evaluations of Project ImPACT suggest that the program improves caregiver and child outcomes (Stadnick et al., 2015; Stahmer et al., 2020; Yoder et al., 2021), and data on its effectiveness in community settings are currently being collected. Combining cost data with forthcoming outcome data in economic evaluations should be useful to decisionmakers working with scarce resources.

Use of TDABC with the Proctor rubric may encourage implementation researchers to conduct costing studies more regularly, relying on process mapping and collecting prospective cost data concurrently with other trial data. This may strengthen the knowledge base in this area with standardized, detailed, transparent, high-quality cost information and evaluation. Findings on a program's clinical effectiveness could then be presented alongside its cost findings. This would inform the replication, dissemination, adoption, implementation, and economic evaluation of new interventions and contribute to methodological advances in implementation science regarding standardized methods for tracking and reporting implementation strategies.

References

Aarons, G. A., Wells, R. S., Zagursky, K., Fettes, D. L., & Palinkas, L. A. (2009). Implementing evidence-based practice in community mental health agencies: A multiple stakeholder analysis. American Journal of Public Health, 99, 2087–2095.

Bond, G. R., Drake, R. E., McHugo, G. J., Peterson, A. E., Jones, A. M., & Williams, J. (2014). Long-term sustainability of evidence-based practices in community mental health agencies. Administration and Policy in Mental Health, 41, 228–236.

Bowser, D. M., Henry, B. F., & McCollister, K. E. (2021). Cost analysis in implementation studies of evidence-based practices for mental health and substance use disorders: A systematic review. Implementation Science, 16, 26.

Boyd, B. A., Odom, S. L., Humphreys, B. P., & Sam, A. M. (2010). Infants and toddlers with autism spectrum disorder: Early identification and early intervention. Journal of Early Intervention, 32, 75–98.

Bradshaw, J., Steiner, A. M., Gengoux, G., & Koegel, L. K. (2015). Feasibility and effectiveness of very early intervention for infants at-risk for autism spectrum disorder: A systematic review. Journal of Autism and Developmental Disorders, 45, 778–794.

Cidav, Z., Mandell, D., Pyne, J., Beidas, R., Curran, G., & Marcus, S. (2020). A pragmatic method for costing implementation strategies using time-driven activity-based costing. Implementation Science, 15, 28.

Cidav, Z., Marcus, S., Mandell, D., et al. (2021). Programmatic costs of the telehealth ostomy self-management training: An application of time-driven activity-based costing. Value in Health, 24, 1245–1253.

Curran, G. M., Bauer, M., Mittman, B., Pyne, J. M., & Stetler, C. (2012). Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care, 50, 217–226.

da Silva Etges, A. P. B., Cruz, L. N., Notti, R. K., et al. (2019). An 8-step framework for implementing time-driven activity-based costing in healthcare studies. The European Journal of Health Economics, 20, 1133–1145.

Dopp, A. R., Kerns, S. E. U., Panattoni, L., et al. (2021). Translating economic evaluations into financing strategies for implementing evidence-based practices. Implementation Science, 16, 66.

Dopp, A. R., Mundey, P., Beasley, L. O., Silovsky, J. F., & Eisenberg, D. (2019a). Mixed-method approaches to strengthen economic evaluations in implementation research. Implementation Science, 14, 2.

Dopp, A. R., Parisi, K. E., Munson, S. A., & Lyon, A. R. (2019b). A glossary of user-centered design strategies for implementation experts. Translational Behavioral Medicine, 9, 1057–1064.

Dopp, A. R., Narcisse, M.-R., Mundey, P., et al. (2020). A scoping review of strategies for financing the implementation of evidence-based practices in behavioral health systems: State of the literature and future directions. Implementation Research and Practice, 1, 2633489520939980.

Eisman, A. B., Kilbourne, A. M., Dopp, A. R., Saldana, L., & Eisenberg, D. (2020). Economic evaluation in implementation science: Making the business case for implementation strategies. Psychiatry Research, 283, 112433.

Eisman, A. B., Quanbeck, A., Bounthavong, M., Panattoni, L., & Glasgow, R. E. (2021). Implementation science issues in understanding, collecting, and using cost estimates: A multi-stakeholder perspective. Implementation Science, 16, 75.

Gold, R., Bunce, A. E., Cohen, D. J., et al. (2016). Reporting on the strategies needed to implement proven interventions: An example from a “Real-World” cross-setting implementation study. Mayo Clinic Proceedings, 91, 1074–1083.

Gold, H. T., McDermott, C., Hoomans, T., & Wagner, T. H. (2022). Cost data in implementation science: Categories and approaches to costing. Implementation Science, 17, 11.

Green, J., Charman, T., McConachie, H., et al. (2010). Parent-mediated communication-focused treatment in children with autism (PACT): A randomised controlled trial. Lancet, 375, 2152–2160.

Green, J., Charman, T., Pickles, A., et al. (2015). Parent-mediated intervention versus no intervention for infants at high risk of autism: A parallel, single-blind, randomised trial. Lancet Psychiatry, 2, 133–140.

Hao, Y., Franco, J. H., Sundarrajan, M., & Chen, Y. (2021). A pilot study comparing tele-therapy and in-person therapy: Perspectives from parent-mediated intervention for children with autism spectrum disorders. Journal of Autism and Developmental Disorders, 51, 129–143.

Ingels, J. B., Walcott, R. L., Wilson, M. G., et al. (2016). A prospective programmatic cost analysis of Fuel Your Life: A worksite translation of DPP. Journal of Occupational and Environmental Medicine, 58, 1106–1112.

Ingersoll, B., & Dvortcsak, A. (2019). Teaching Social Communication to Children with Autism (p. 386). Guilford Press.

Kaplan, R. S., & Anderson, S. R. (2007). Time-driven activity-based costing (p. 266). Harvard Business Press.

Kasari, C., Lawton, K., Shih, W., et al. (2014). Caregiver-mediated intervention for low-resourced preschoolers with autism: An RCT. Pediatrics, 134, e72–e79.

Keel, G., Savage, C., Rafiq, M., & Mazzocato, P. (2017). Time-driven activity-based costing in health care: A systematic review of the literature. Health Policy, 121, 755–763.

Last, B. S., Schriger, S. H., Becker-Haimes, E. M., et al. (2022). Economic precarity, financial strain, and job-related stress among Philadelphia's public mental health clinicians. Psychiatric Services, 73, 774–786.

Little, L. M., Wallisch, A., Pope, E., & Dunn, W. (2018). Acceptability and cost comparison of a telehealth intervention for families of children with autism. Infants & Young Children, 31, 275–286.

Okamura, K. H., Benjamin Wolk, C. L., Kang-Yi, C. D., et al. (2017). The price per prospective consumer of providing therapist training and consultation in seven evidence-based treatments within a large public behavioral health system: An example cost-analysis metric. Frontiers in Public Health, 5, 356.

Ovretveit, J. (2014). Evaluating improvement and implementation for health. McGraw-Hill Education (UK).

Pickard, K. E., Wainer, A. L., Bailey, K. M., & Ingersoll, B. R. (2016). A mixed-method evaluation of the feasibility and acceptability of a telehealth-based parent-mediated intervention for children with autism spectrum disorder. Autism, 20, 845–855.

Pinnock, H., Barwick, M., Carpenter, C. R., et al. (2017). Standards for Reporting Implementation Studies (StaRI) Statement. BMJ, 356, i6795.

Powell, B. J., Fernandez, M. E., Williams, N. J., et al. (2019). Enhancing the impact of implementation strategies in healthcare: A research agenda. Frontiers in Public Health, 7, 3.

Powell, B. J., Waltz, T. J., Chinman, M. J., et al. (2015). A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10, 21.

Quigley, E., O’Donnell, S., & Doyle, G. (2020). Time-Driven Activity-Based Costing: A Step-by-Step Guide to Collecting Patient-Level Health Care Costs. SAGE Publications Ltd.

Roberts, S. L. E., Healey, A., & Sevdalis, N. (2019). Use of health economic evaluation in the implementation and improvement science fields: A systematic literature review. Implementation Science, 14, 72.

Rogers, S. J. (1996). Brief report: Early intervention in autism. Journal of Autism and Developmental Disorders, 26, 243–246.

Rogers, S. J., Estes, A., Lord, C., et al. (2012). Effects of a brief Early Start Denver model (ESDM)-based parent intervention on toddlers at risk for autism spectrum disorders: A randomized controlled trial. Journal of the American Academy of Child and Adolescent Psychiatry, 51, 1052–1065.

Rogge, N., & Janssen, J. (2019). The economic costs of autism spectrum disorder: A literature review. Journal of Autism and Developmental Disorders, 49, 2873–2900.

Schreibman, L., Dawson, G., Stahmer, A. C., et al. (2015). Naturalistic developmental behavioral interventions: Empirically validated treatments for autism spectrum disorder. Journal of Autism and Developmental Disorders, 45, 2411–2428.

Smith, M. J., Graham, A. K., Sax, R., et al. (2020). Costs of preparing to implement a virtual reality job interview training programme in a community mental health agency: A budget impact analysis. Journal of Evaluation in Clinical Practice, 26, 1188–1195.

Stadnick, N. A., Stahmer, A., & Brookman-Frazee, L. (2015). Preliminary effectiveness of project ImPACT: A parent-mediated intervention for children with autism spectrum disorder delivered in a community program. Journal of Autism and Developmental Disorders, 45, 2092–2104.

Stahmer, A. C., Rieth, S. R., Dickson, K. S., et al. (2020). Project ImPACT for Toddlers: Pilot outcomes of a community adaptation of an intervention for autism risk. Autism, 24, 617–632.

Subramanian, S., Tangka, F. K., Hoover, S., Degroff, A., Royalty, J., & Seeff, L. C. (2011). Clinical and programmatic costs of implementing colorectal cancer screening: Evaluation of five programs. Evaluation and Program Planning, 34, 147–153.

U.S. Bureau of Labor Statistics. https://www.bls.gov/ Last Accessed October 12.

Wetherby, A. M., & Woods, J. J. (2006). Early social interaction project for children with autism spectrum disorders beginning in the second year of life: A preliminary study. Topics in Early Childhood Special Education, 26, 67–82.

Yoder, P. J., Stone, W. L., & Edmunds, S. R. (2021). Parent utilization of ImPACT intervention strategies is a mediator of proximal then distal social communication outcomes in younger siblings of children with ASD. Autism, 25, 44–57.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cidav, Z., Mandell, D., Ingersoll, B. et al. Programmatic Costs of Project ImPACT for Children with Autism: A Time-Driven Activity Based Costing Study. Adm Policy Ment Health 50, 402–416 (2023). https://doi.org/10.1007/s10488-022-01247-6


DOI: https://doi.org/10.1007/s10488-022-01247-6