Abstract

In this paper, we introduce differential exponential maps in Cartesian differential categories, which generalize the exponential function \(e^x\) from classical differential calculus. A differential exponential map is an endomorphism which is compatible with the differential combinator in such a way that generalizations of \(e^0 = 1\), \(e^{x+y} = e^x e^y\), and \(\frac{\partial e^x}{\partial x} = e^x\) all hold. Every differential exponential map induces a commutative rig, which we call a differential exponential rig, and conversely, every differential exponential rig induces a differential exponential map. In particular, differential exponential maps can be defined without the need of limits, converging power series, or unique solutions of certain differential equations, which most Cartesian differential categories do not necessarily have. That said, we do explain how every differential exponential map does provide solutions to certain differential equations, and conversely how in the presence of unique solutions, one can derive a differential exponential map. Examples of differential exponential maps in the Cartesian differential category of real smooth functions include the exponential function, the complex exponential function, the split complex exponential function, and the dual numbers exponential function. As another source of interesting examples, we also study differential exponential maps in the coKleisli category of a differential category.


1 Introduction

Cartesian differential categories [3], introduced by Blute, Cockett, and Seely, come equipped with a differential combinator \({\mathsf {D}}\) which provides a categorical axiomatization of the differential from multivariable differential calculus. Important examples of Cartesian differential categories include the category of real smooth functions (Example 1), the coKleisli category of a differential category [5], the differential objects of a tangent category [12], and categorical models of Ehrhard and Regnier’s differential \(\lambda \)-calculus [16] (which are in fact called Cartesian closed differential categories [21]). Other interesting (and surprising) examples include abelian functor calculus [2] and cofree Cartesian differential categories [14, 19]. Since their introduction, Cartesian differential categories have developed a rich literature and have been successful in generalizing many concepts from classical differential calculus. More recently, Cartesian differential categories have also started to find their way into applications.

In particular, Cockett and Cruttwell have introduced the notion of dynamical systems and their solutions in tangent categories [9], which generalize ordinary differential equations in this context, specifically initial value problems. Since every Cartesian differential category is a tangent category, this implies that dynamical systems allow one to study differential equations in a Cartesian differential category. In classical differential calculus, one of the most important tools used for solving differential equations is the exponential function \(e^x\). Therefore, it is desirable to generalize the exponential function for Cartesian differential categories.

The exponential function \(e^x\) admits numerous equivalent characterizations. It can either be defined as the inverse of the natural logarithm function \(\ln (x)\), or as the limit:

\[ e^x = \lim _{n \rightarrow \infty } \left( 1 + \frac{x}{n} \right) ^n \]

or as the convergent power series:

\[ e^x = \sum _{n=0}^{\infty } \frac{x^n}{n!} \]

or even as the solution to \(f^\prime (x) = f(x)\) with initial condition \(f(0) = 1\). However, in arbitrary Cartesian differential categories, functions need to be defined at zero (which excludes \(\ln (x)\)) and one does not necessarily have a notion of convergence, infinite sums, or even (unique) solutions to initial value problems. Therefore one must look for a more algebraic characterization of the exponential function. In classical algebra, an exponential ring [26] is a ring equipped with an endomorphism e which is a monoid morphism from the additive structure to the multiplicative structure, that is:

\[ e(x+y) = e(x)e(y) \qquad e(0) = 1 \]

The canonical example of an exponential ring is the field of real numbers \({\mathbb {R}}\) with the exponential function \(e^x\). While this seems promising, arbitrary objects in a Cartesian differential category do not necessarily come equipped with a multiplication. Rather than requiring this extra ring structure on objects, it turns out that the differential combinator \({\mathsf {D}}\) will allow us to bypass the need for a multiplication.

In the category of real smooth functions, which is the canonical example of a Cartesian differential category, the differential combinator \({\mathsf {D}}\) applied to the exponential function \(e^x\) is the smooth function \({\mathbb {R}} \times {\mathbb {R}} \xrightarrow {{\mathsf {D}}[e^x]} {\mathbb {R}}\) defined as:

\[ {\mathsf {D}}[e^x](x,y) = e^x y \]

Thus the multiplication of \({\mathbb {R}}\) appears in \({\mathsf {D}}[e^x]\). Inspired by this observation, the generalization of the exponential function in a Cartesian differential category can be defined simply in terms of an endomorphism \(A \xrightarrow {e} A\) which is compatible with the differential combinator \({\mathsf {D}}\) in the sense that

\[ \langle 0, 1 \rangle {\mathsf {D}}[e] = 1 \qquad (1 \times e) {\mathsf {D}}[e] = (\pi _0 + \pi _1) e \]

We call such endomorphisms differential exponential maps, which are the main novel notion of study in this paper. Differential exponential maps generalize the exponential function for Cartesian differential categories. Indeed for \(e^x\), the differential exponential map axioms correspond precisely to \(e^0 x = x\) and \(e^x e^y =e^{x+y}\) respectively.
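
As an informal numerical sanity check of these two identities in the category of real smooth functions, the following Python sketch computes \({\mathsf {D}}[e^x](x,y) = e^x y\) with a small dual-number helper and measures how far \(e^0 y = y\) and \(e^x e^y = e^{x+y}\) are from holding in floating-point arithmetic. The names `Dual`, `dual_exp`, `D_exp`, and the sample points are illustrative assumptions and do not come from the paper.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A number val + tan*eps with eps**2 == 0 (forward-mode derivative)."""
    val: float
    tan: float


def dual_exp(d: Dual) -> Dual:
    # exp(a + b*eps) = exp(a) + exp(a)*b*eps
    return Dual(math.exp(d.val), math.exp(d.val) * d.tan)


def D_exp(x: float, y: float) -> float:
    """D[e^x](x, y): derivative of exp at x applied to the direction y."""
    return dual_exp(Dual(x, y)).tan


def first_axiom_gap(y: float) -> float:
    # e^0 * y = y: feeding (0, y) into D[e^x] should return y.
    return abs(D_exp(0.0, y) - y)


def second_axiom_gap(x: float, y: float) -> float:
    # e^x * e^y = e^(x+y): D[e^x](x, e^y) should equal e^(x+y).
    return abs(D_exp(x, math.exp(y)) - math.exp(x + y))


# Hypothetical sample points chosen only for illustration.
samples = [(-1.5, 0.25), (0.0, 2.0), (0.7, -0.3)]
worst_gap = max(
    max(first_axiom_gap(y), second_axiom_gap(x, y)) for x, y in samples
)
print(f"largest deviation from the two axioms: {worst_gap:.2e}")
```

Such a check only samples a few points and is no substitute for the algebraic argument; it merely illustrates how the multiplication enters through the second argument of the derivative.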

Previously, we mentioned that not every object in a Cartesian differential category has a multiplication. However, it turns out that every differential exponential map \(A \xrightarrow {e} A\) does induce a commutative rig structure on A, and thus A does come equipped with a multiplication. The construction is once again inspired by the classical exponential function \(e^x\). Applying the differential combinator on \(e^x\) twice we obtain:

\[ {\mathsf {D}}^2[e^x]\left( (x,y), (z,w) \right) = e^x y z + e^x w \]

Setting \(x=0\) and \(w=0\), one re-obtains precisely the multiplication of \({\mathbb {R}}\):

\[ {\mathsf {D}}^2[e^x]\left( (0,y), (z,0) \right) = yz \]
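
The recovery of the multiplication from the second derivative can be illustrated numerically. The sketch below starts from \({\mathsf {D}}[e^x](x,y) = e^x y\), estimates the second derivative by a central difference (a stand-in for exact differentiation, chosen here only for brevity), and evaluates it at \(x=0\), \(w=0\). The function names and the step size `h` are assumptions made for this illustration.

```python
import math


def D_exp(x, y):
    """First derivative D[e^x](x, y) = e^x * y (linear in y)."""
    return math.exp(x) * y


def D2_exp(point, direction, h=1e-5):
    """Central-difference estimate of D^2[e^x]((x, y), (z, w)).

    Differentiates D_exp at point = (x, y) along direction = (z, w).
    """
    (x, y), (z, w) = point, direction
    forward = D_exp(x + h * z, y + h * w)
    backward = D_exp(x - h * z, y - h * w)
    return (forward - backward) / (2 * h)


def recovered_product(y, z):
    """Evaluate D^2[e^x] at x = 0 and w = 0; this approximates y * z."""
    return D2_exp((0.0, y), (z, 0.0))


print(recovered_product(3.0, -2.0))  # approximately 3.0 * -2.0
```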

The unit for the multiplication is, of course, obtained by evaluating \(e^x\) at 0, \(e^0 = 1\). This construction is easily generalized to an arbitrary Cartesian differential category and one can show that every differential exponential map induces a commutative rig. Commutativity of the multiplication follows from the symmetry of the partial derivatives axiom [CD.7] of the differential combinator. The unit identities for the multiplication will follow from both differential exponential map axioms. Proving associativity is a bit trickier but essentially follows from the observation that:

and then using both the differential combinator axioms and differential exponential map axioms, one shows that both sides of the associativity law are equal to the third-order partial derivative.

Conversely, it is possible to obtain differential exponential maps from special kinds of commutative rigs. Indeed, one can alternatively axiomatize an object equipped with a differential exponential map instead as a commutative rig equipped with an endomorphism which satisfies the three fundamental properties of the exponential function that \(e^0 = 1\), \(e^{x+y} = e^x e^y\), and \(\frac{\partial e^x}{\partial x} = e^x\). We call such rigs differential exponential rigs. One of the main results of this paper is that there is a bijective correspondence between differential exponential maps and differential exponential rigs. In the category of real smooth functions, interesting examples of differential exponential rigs include \({\mathbb {R}}\) with the exponential function \(e^x\), \({\mathbb {R}}^2\) equipped with the complex numbers multiplication and the complex exponential function \(e^{x+iy} = e^x\cos (y) + i e^x\sin (y)\), \({\mathbb {R}}^2\) equipped with the split complex numbers multiplication and the split complex exponential function \(e^{x+jy} = e^x\cosh (y) + j e^x\sinh (y)\) [24], and also \({\mathbb {R}}^2\) equipped with the dual numbers multiplication and the dual numbers exponential function \(e^{x+y\varepsilon } = e^x + e^x y \varepsilon \) [25].
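
To make the three examples on \({\mathbb {R}}^2\) concrete, the following Python sketch encodes each multiplication and each exponential function as an operation on pairs and checks \(e^0 = 1\) and \(e^{u+v} = e^u e^v\) at one pair of sample points. It does not check the derivative condition. All function names, the `RIGS` table, and the sample points are assumptions introduced for this illustration.

```python
import math


def mul_complex(p, q):
    (a, b), (c, d) = p, q
    return (a * c - b * d, a * d + b * c)  # i * i = -1


def mul_split(p, q):
    (a, b), (c, d) = p, q
    return (a * c + b * d, a * d + b * c)  # j * j = +1


def mul_dual(p, q):
    (a, b), (c, d) = p, q
    return (a * c, a * d + b * c)  # eps * eps = 0


def exp_complex(p):
    x, y = p
    return (math.exp(x) * math.cos(y), math.exp(x) * math.sin(y))


def exp_split(p):
    x, y = p
    return (math.exp(x) * math.cosh(y), math.exp(x) * math.sinh(y))


def exp_dual(p):
    x, y = p
    return (math.exp(x), math.exp(x) * y)


def homomorphism_gap(exp_fn, mul_fn, u, v):
    """Largest coordinate gap between e(u + v) and e(u) * e(v)."""
    lhs = exp_fn((u[0] + v[0], u[1] + v[1]))
    rhs = mul_fn(exp_fn(u), exp_fn(v))
    return max(abs(l - r) for l, r in zip(lhs, rhs))


RIGS = {
    "complex": (exp_complex, mul_complex),
    "split complex": (exp_split, mul_split),
    "dual": (exp_dual, mul_dual),
}

# Hypothetical sample points in R^2.
u, v = (0.3, -1.2), (-0.8, 0.5)
gaps = {name: homomorphism_gap(e, m, u, v) for name, (e, m) in RIGS.items()}
for name, gap in gaps.items():
    print(f"{name}: |e(u+v) - e(u)e(v)| = {gap:.2e}")
```

In each case the multiplicative unit is the pair `(1.0, 0.0)`, which is also the value of the exponential at `(0.0, 0.0)`.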

As one of the main motivations for their development, differential exponential maps allow one to solve a certain class of linear dynamical systems in any Cartesian differential category. Specifically, one can solve the dynamical systems which generalize the initial value problems of the form \(f^\prime (x) = f(x)a\) with initial condition \(f(0) = b\) (for some constants a and b), whose classical solution is \(f(x) = e^{ax} b\). These types of differential equations are of particular interest in control systems theory [1, Chapter 5]. Furthermore, it turns out that a differential exponential map is indeed a solution to the dynamical system which generalizes the initial value problem \(f^\prime (x) = f(x)\) with initial condition \(f(0) = 1\). In future work on solving differential equations in a Cartesian differential category, differential exponential maps will hopefully play a key role. Such an application can be found in [11, Section 5], where it is shown that in a tangent category, a differential curve object admits a canonical differential exponential map, which induces solutions to many interesting dynamical systems including one which in turn induces an action on differential bundles.
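
For the classical case, the claim that \(f(x) = e^{ax} b\) solves \(f^\prime (x) = f(x)a\) with \(f(0) = b\) can be checked numerically. The sketch below is only an illustration for real-valued functions: the constants, sample points, and helper names are assumptions, and the derivative is estimated by a central difference rather than computed exactly.

```python
import math


def solution(a, b):
    """Classical solution f(x) = e^(a x) * b of f' = f * a with f(0) = b."""
    return lambda x: math.exp(a * x) * b


def central_difference(f, x, h=1e-5):
    """Numerical estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


# Hypothetical constants and sample points.
a, b = 0.75, -2.0
f = solution(a, b)
residuals = [
    abs(central_difference(f, x) - f(x) * a) for x in (-1.0, 0.0, 0.5, 2.0)
]
print(f"f(0) = {f(0.0)}, largest residual of f' = f*a: {max(residuals):.2e}")
```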

An important example of a Cartesian differential category is the coKleisli category of the comonad \(!\) of a differential category (with finite products). In this setting, a differential exponential map is an endomorphism in the coKleisli category and therefore a map of type \(!A \xrightarrow {e} A\) in the base category. In a differential storage category, that is, when \(!\) has the Seely isomorphisms, the differential structure is captured by the codereliction map \(A \xrightarrow {\eta } !A\) and it turns out that a commutative rig in the coKleisli category is precisely a commutative monoid (over the tensor product) in the base category. As such, a differential exponential map in the coKleisli category can alternatively be given by a commutative monoid A in the base category equipped with a monoid morphism \(!A \xrightarrow {e} A\) which is a retract of the codereliction. We call such commutative monoids \(!\)-differential exponential algebras, and there is a bijective correspondence between \(!\)-differential exponential algebras and differential exponential maps in the coKleisli category. Interesting examples of differential storage categories include both the category of sets and relations and the category of vector spaces (over a field of characteristic 0), where the comonad \(!\) is given by the free exponential modality. In both of these examples, \(!\)-differential exponential algebras correspond precisely to commutative monoids.

Outline and Main Results Section 2 is a background section on Cartesian differential categories where we briefly review the basic definitions and introduce the notation and terminology used in this paper. In particular, we review the canonical commutative monoid structure \(\oplus \) on every object in a Cartesian differential category (Lemma 3), which plays a key role throughout this paper. In Sect. 3 we introduce differential exponential maps (Definition 4) and in particular show that the category of differential exponential maps is a Cartesian tangent category (Proposition 2). In Example 3 we provide examples of differential exponential maps in the category of smooth real functions, which include the classical exponential function, the complex exponential function, the split complex exponential function, and the dual number exponential function. In Sect. 4 we introduce differential exponential rigs. We show that every differential exponential rig induces a differential exponential map (Proposition 3) and conversely that every differential exponential map induces a differential exponential rig (Proposition 4), and show that these constructions are inverses of each other (Theorem 1). As an immediate consequence, the category of differential exponential rigs is also a Cartesian tangent category (Proposition 5). In Sect. 5 we explain the relationship between differential exponential maps and solutions to differential equations in arbitrary Cartesian differential categories. In particular, we show that every differential exponential map is a solution to the expected differential equation (Proposition 6) and that a certain class of dynamical systems admit a solution (Proposition 7). We also show that in the presence of unique solutions to differential equations, one can build a differential exponential map (Proposition 8). In Sect. 6 we study differential exponential maps in the coKleisli category of a differential (storage) category and give equivalent characterizations in these cases (Propositions 10 and 11). We also introduce \(!\)-differential exponential algebras (Definition 14) for differential storage categories. We show that every \(!\)-differential exponential algebra induces a differential exponential map in the coKleisli category (Proposition 12), and conversely that every differential exponential map in the coKleisli category induces a \(!\)-differential exponential algebra (Proposition 12), and that these constructions are inverses of each other (Theorem 2). We conclude this paper with Sect. 7 which discusses some interesting potential future work to do with differential exponential maps.

Conventions We use diagrammatic order notation for composition: this means that the composite map fg is the map which first does f then g.

2 Background: Cartesian Differential Categories

In this section, if only to introduce notation, we briefly review Cartesian differential categories, their underlying Cartesian left additive structure, and their induced Cartesian tangent category structure. That said, we assume that the reader is familiar with the theory of Cartesian differential categories. For a more in-depth discussion we refer the reader to [3, 12].

For a category with finite products, we denote the binary product of objects A and B by \(A \times B\) with projection maps \(A \times B \xrightarrow {\pi _0} A\) and \(A \times B \xrightarrow {\pi _1} B\), pairing operation \(\langle -, - \rangle \) and thus \(f \times g = \langle \pi _0 f, \pi _1 g \rangle \), and we denote the chosen terminal object as \(\top \). Also, an important map which we will use throughout this paper is the canonical interchange map \((A \times B) \times (C \times D) \xrightarrow {c} (A \times C) \times (B \times D)\) defined as follows:

\[ c := \langle \pi _0 \times \pi _0, \pi _1 \times \pi _1 \rangle \]

which is a natural isomorphism such that \(cc=1\).
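
On elements, the interchange map simply swaps the two middle components of a nested pair. A minimal Python sketch on tuples (the name `interchange` and the sample value are assumptions for illustration) makes the identity \(cc = 1\) easy to see:

```python
def interchange(p):
    """Send ((a, b), (c, d)) to ((a, c), (b, d))."""
    (a, b), (c, d) = p
    return ((a, c), (b, d))


sample = (("a", "b"), ("c", "d"))
print(interchange(sample))
print(interchange(interchange(sample)) == sample)
```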

Definition 1

A left additive category [3, Definition 1.1.1] is a category \({\mathbb {X}}\) such that each hom-set \({\mathbb {X}}(A,B)\) is a commutative monoid with addition \({\mathbb {X}}(A,B) \times {\mathbb {X}}(A,B) \xrightarrow {+} {\mathbb {X}}(A,B)\), \((f,g) \mapsto f +g\), and zero \(0 \in {\mathbb {X}}(A,B)\), such that composition on the left preserves the additive structure, that is:

\[ f(g+h) = fg + fh \qquad f0 = 0 \]

A map k in a left additive category is additive [3, Definition 1.1.1] if composition on the right by k preserves the additive structure, that is:

\[ (f+g)k = fk + gk \qquad 0k = 0 \]

A Cartesian left additive category [3, Definition 1.2.1] is a left additive category \({\mathbb {X}}\) with finite products \(\times \) and terminal object \(\top \) such that all projection maps \(A \times B \xrightarrow {\pi _0} A\) and \(A \times B \xrightarrow {\pi _1} B\) are additive.

We note that the definition of a Cartesian left additive category presented here is not precisely that given in [3, Definition 1.2.1], but was shown to be equivalent in [19, Lemma 2.4]. Also note that in a Cartesian left additive category, the unique map from an object A to the terminal object \(\top \) is in fact the zero map \(A \xrightarrow {0} \top \).

Definition 2

A Cartesian differential category [3, Definition 2.1.1] is a Cartesian left additive category \({\mathbb {X}}\) equipped with a differential combinator \({\mathsf {D}}\), which is a family of operators \({{\mathbb {X}}(A,B) \xrightarrow {{\mathsf {D}}} {\mathbb {X}}(A \times A,B)}\), \(f \mapsto {\mathsf {D}}[f]\), such that the following axioms hold:

- [CD.1]: \({\mathsf {D}}[f+g] = {\mathsf {D}}[f] + {\mathsf {D}}[g]\) and \({\mathsf {D}}[0]=0\);
- [CD.2]: \(\left( 1 \times (\pi _0 + \pi _1) \right) {\mathsf {D}}[f] = (1 \times \pi _0){\mathsf {D}}[f] + (1 \times \pi _1){\mathsf {D}}[f]\) and \(\langle 1, 0 \rangle {\mathsf {D}}[f]=0\);
- [CD.3]: \({\mathsf {D}}[1]=\pi _1\), \({\mathsf {D}}[\pi _0] = \pi _1\pi _0\) and \({\mathsf {D}}[\pi _1] = \pi _1\pi _1\);
- [CD.4]: \({\mathsf {D}}[\langle f, g \rangle ] = \langle {\mathsf {D}}[f] , {\mathsf {D}}[g] \rangle \);
- [CD.5]: \({\mathsf {D}}[fg] = \langle \pi _0 f, {\mathsf {D}}[f] \rangle {\mathsf {D}}[g]\);
- [CD.6]: \(\left( \langle 1,0 \rangle \times \langle 0,1 \rangle \right) {\mathsf {D}}^2[f] = {\mathsf {D}}[f]\);
- [CD.7]: \(c {\mathsf {D}}^2[f] = {\mathsf {D}}^2[f]\).

It is important to note that unlike in [3, 12, 13], we use the convention used in the more recent work on Cartesian differential categories where the linear argument of \({\mathsf {D}}[f]\) is its second argument rather than its first argument. We also note that the definition of a Cartesian differential category given here is not precisely that given in [3, Definition 2.1.1] but rather an equivalent version found in [13, Section 3.4]. A discussion on the intuition for the differential combinator axioms can be found in [3, Remark 2.1.3]. The canonical example of a Cartesian differential category is the category of Euclidean spaces and smooth maps between them—which will also be our main example throughout this paper.

Example 1

Let \({\mathbb {R}}\) be the set of real numbers and let \(\mathsf {SMOOTH}\) be the category whose objects are the Euclidean vector spaces \({\mathbb {R}}^n\) (including the singleton \({\mathbb {R}}^0 = \lbrace *\rbrace \)) and whose maps are smooth functions \({{\mathbb {R}}^n \xrightarrow {F} {\mathbb {R}}^m}\), which of course are in fact tuples \(F = \langle f_1, \ldots , f_m \rangle \) for some smooth functions \({{\mathbb {R}}^n \xrightarrow {f_i} {\mathbb {R}}}\). \(\mathsf {SMOOTH}\) is a Cartesian differential category where the finite product structure and additive structure are defined in the obvious way, and whose differential combinator is given by the standard derivative of smooth functions. Explicitly, recall that for a smooth map \({\mathbb {R}}^n \xrightarrow {f} {\mathbb {R}}\), its gradient \({\mathbb {R}}^n \xrightarrow {\nabla (f)} {\mathbb {R}}^n\) is defined as:

\[ \nabla (f)(\mathbf {x}) := \left( \frac{\partial f}{\partial x_1}(\mathbf {x}), \ldots , \frac{\partial f}{\partial x_n}(\mathbf {x}) \right) \]

Then its derivative \({\mathbb {R}}^n \times {\mathbb {R}}^n \xrightarrow {{\mathsf {D}}[f]} {\mathbb {R}}\) is defined as:

\[ {\mathsf {D}}[f](\mathbf {x}, \mathbf {y}) := \nabla (f)(\mathbf {x}) \cdot \mathbf {y} \]

where \(\cdot \) is the dot product of vectors. In the case of \({\mathbb {R}}^n \xrightarrow {F} {\mathbb {R}}^m\), which is in fact a tuple of smooth functions \(F = \langle f_1, \ldots , f_m \rangle \), we define \({\mathbb {R}}^n \times {\mathbb {R}}^n \xrightarrow {{\mathsf {D}}[F]} {\mathbb {R}}^m\) as \({\mathsf {D}}[F] = \langle {\mathsf {D}}[f_1], \ldots , {\mathsf {D}}[f_m] \rangle \). It is also possible to define \({\mathsf {D}}[F]\) in terms of the Jacobian matrix of F.
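
As a small illustration of the differential combinator of \(\mathsf {SMOOTH}\), the following Python sketch implements \({\mathsf {D}}[f]\) as the gradient at the first argument dotted with the second argument, for one hand-picked smooth map \({\mathbb {R}}^2 \rightarrow {\mathbb {R}}\), and compares it against a central-difference directional derivative. The map `f`, its hand-written gradient, and the sample points are hypothetical choices made only for this example.

```python
import math


def f(x):
    """Hypothetical smooth map R^2 -> R used only for illustration."""
    x0, x1 = x
    return x0 ** 2 * x1 + math.sin(x1)


def grad_f(x):
    """Gradient of f, written out by hand."""
    x0, x1 = x
    return (2 * x0 * x1, x0 ** 2 + math.cos(x1))


def D_f(x, y):
    """D[f](x, y) = grad f(x) . y, linear in the second argument y."""
    return sum(g * v for g, v in zip(grad_f(x), y))


def directional_difference(func, x, y, h=1e-5):
    """Central-difference estimate of the derivative of func at x along y."""
    plus = tuple(a + h * b for a, b in zip(x, y))
    minus = tuple(a - h * b for a, b in zip(x, y))
    return (func(plus) - func(minus)) / (2 * h)


x, y = (1.5, -0.4), (0.3, 2.0)
gap = abs(D_f(x, y) - directional_difference(f, x, y))
print(f"|D[f](x, y) - finite difference| = {gap:.2e}")
```

Note that `D_f` is additive in its second argument and vanishes when that argument is zero, which is the content of axiom [CD.2] in this example.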

Many other interesting examples of Cartesian differential categories can be found throughout the literature such as categorical models of the differential \(\lambda \)-calculus [16], which are called Cartesian closed differential categories [21], the subcategory of differential objects of a Cartesian tangent category [12], and the coKleisli category of a differential category [3, 5]. We will take a closer look at the coKleisli category of a differential category in Sect. 6.

An important class of maps in a Cartesian differential category is the class of linear maps. Later in Sect. 4, we will also discuss bilinear maps.

Definition 3

In a Cartesian differential category, a map f is said to be linear [3, Definition 2.2.1] if \({\mathsf {D}}[f]= \pi _1 f\).

Example 2

In \(\mathsf {SMOOTH}\), a map \({\mathbb {R}}^n \xrightarrow {F} {\mathbb {R}}^m\) is linear in the Cartesian differential sense precisely when it is \({\mathbb {R}}\)-linear in the classical sense, that is, \(F(s \mathbf {a} + t \mathbf {b}) = sF(\mathbf {a}) + t F(\mathbf {b})\) for all \(s,t \in {\mathbb {R}}\) and \(\mathbf {a}, \mathbf {b} \in {\mathbb {R}}^n\).

Here are now some useful properties about linear maps for this paper:

Lemma 1

[3, Lemma 2.2.2] In a Cartesian differential category:

- (i): If f is linear then f is additive;
- (ii): Identity maps \(A \xrightarrow {1} A\) and projection maps \(A \times B \xrightarrow {\pi _0} A\) and \(A \times B \xrightarrow {\pi _1} B\) are linear;
- (iii): If \(A \xrightarrow {f} B\) and \(B \xrightarrow {g} C\) are linear then their composition \(A \xrightarrow {fg} C\) is linear;
- (iv): If \(A \xrightarrow {f} B\) is an isomorphism and is linear then its inverse \(B \xrightarrow {f^{-1}} A\) is linear;
- (v): If \(C \xrightarrow {f} A\) and \(C \xrightarrow {g} B\) are linear then their pairing \(C \xrightarrow {\langle f, g \rangle } A \times B\) is linear;
- (vi): If \(A \xrightarrow {f} B\) and \(C \xrightarrow {g} D\) are linear then their product \(A \times C \xrightarrow {f \times g} B \times D\) is linear;
- (vii): Zero maps \(A \xrightarrow {0} B\) are linear;
- (viii): If \(A \xrightarrow {f} B\) and \(A \xrightarrow {g} B\) are linear then their sum \(A \xrightarrow {f+g} B\) is linear;
- (ix): If \(A \xrightarrow {f} B\) is linear then \({\mathsf {D}}[fg] = (f \times f) {\mathsf {D}}[g]\) and \({\mathsf {D}}[kf] = {\mathsf {D}}[k]f\);
- (x): The interchange map \((A \times B) \times (C \times D) \xrightarrow {c} (A \times C) \times (B \times D)\) is linear.

For a Cartesian differential category \({\mathbb {X}}\), define its subcategory of linear maps \(\mathsf {LIN}[{\mathbb {X}}]\) to be the category whose objects are the same as \({\mathbb {X}}\) and whose maps are linear in \({\mathbb {X}}\). Lemma 1 tells us that \(\mathsf {LIN}[{\mathbb {X}}]\) is a well-defined category and also that it has finite biproducts, and thus is a Cartesian left additive category where every map is additive. \(\mathsf {LIN}[{\mathbb {X}}]\) also inherits the differential combinator from \({\mathbb {X}}\) and so \(\mathsf {LIN}[{\mathbb {X}}]\) is a Cartesian differential category where every map is linear. Therefore the obvious forgetful functor \(\mathsf {LIN}[{\mathbb {X}}] \xrightarrow {{\mathsf {U}}} {\mathbb {X}}\) preserves the Cartesian differential structure strictly.

The differential combinator of a Cartesian differential category induces an endofunctor and this endofunctor makes a Cartesian differential category a Cartesian tangent category. We will not review the full definition of a tangent category here but we will highlight certain properties that will be important for this paper. We invite the reader to read the full definition of a tangent category in [12, 13].

Proposition 1

[12, Proposition 4.7] Every Cartesian differential category \({\mathbb {X}}\) is a Cartesian tangent category where the tangent functor \({\mathsf {T}}: {\mathbb {X}} \rightarrow {\mathbb {X}}\) is defined on objects as \({\mathsf {T}}(A) := A \times A\) and on morphisms as \({\mathsf {T}}(f) := \langle \pi _0 f, {\mathsf {D}}[f] \rangle \).
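
For one-variable real smooth functions, the tangent functor sends a point and a tangent vector \((x, y)\) to \((f(x), f^\prime (x) y)\), and its functoriality is the classical chain rule. The sketch below checks \({\mathsf {T}}(fg) = {\mathsf {T}}(f){\mathsf {T}}(g)\) at one sample point for \(f = \sin \) and \(g = \exp \), composing in diagrammatic order. The helper names `tangent` and `then` and the sample point are assumptions for this illustration, and the derivatives are supplied by hand.

```python
import math


def tangent(f, df):
    """T(f) = <pi_0 f, D[f]> for a one-variable map f with derivative df."""
    return lambda p: (f(p[0]), df(p[0]) * p[1])


def then(f, g):
    """Diagrammatic composition: first f, then g."""
    return lambda x: g(f(x))


T_sin = tangent(math.sin, math.cos)
T_exp = tangent(math.exp, math.exp)

# sin followed by exp, with the derivative given by the classical chain rule.
composite = then(math.sin, math.exp)
d_composite = lambda x: math.exp(math.sin(x)) * math.cos(x)
T_composite = tangent(composite, d_composite)

point = (0.4, 1.5)  # hypothetical (base point, tangent vector)
lhs = T_composite(point)
rhs = then(T_sin, T_exp)(point)
print(lhs, rhs)
```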

Corollary 1

For a Cartesian differential category \({\mathbb {X}}\), \(\mathsf {LIN}[{\mathbb {X}}]\) is a Cartesian tangent category where the tangent functor \({\mathsf {T}}: \mathsf {LIN}[{\mathbb {X}}] \rightarrow \mathsf {LIN}[{\mathbb {X}}]\) is defined on objects as \({\mathsf {T}}(A) := A \times A\) and on morphisms as \({\mathsf {T}}(f) := f \times f\). Furthermore, the forgetful functor \(\mathsf {LIN}[{\mathbb {X}}] \xrightarrow {{\mathsf {U}}} {\mathbb {X}}\) preserves the Cartesian tangent structure strictly.

Here are now some useful properties involving the tangent functor (which we leave to the reader to check for themselves):

Lemma 2

In a Cartesian differential category:

- (i): \({\mathsf {D}}[fg] = {\mathsf {T}}(f) {\mathsf {D}}[g]\);
- (ii): \(\langle 1, 0 \rangle {\mathsf {T}}(f) = f \langle 1, 0 \rangle \);
- (iii): If f is linear then \({\mathsf {T}}(f) = f \times f\);
- (iv): \({\mathsf {T}}(\langle f,g \rangle ) = \langle {\mathsf {T}}(f), {\mathsf {T}}(g) \rangle c\);
- (v): \({\mathsf {D}}[f \times g] = c\left( {\mathsf {D}}[f] \times {\mathsf {D}}[g] \right) \) and \({\mathsf {T}}(f \times g) c = c ({\mathsf {T}}(f) \times {\mathsf {T}}(g))\);
- (vi): \({\mathsf {T}}(f+g) = {\mathsf {T}}(f) + {\mathsf {T}}(g)\) and \({\mathsf {T}}(0) = 0\);
- (vii): \({\mathsf {D}}\left[ {\mathsf {T}}(f) \right] = c {\mathsf {T}}({\mathsf {D}}[f])\).

We conclude this section with the observation that in a Cartesian differential category, the additive structure induces a canonical commutative monoid structure on every object. Cartesian left additive categories can be axiomatized in terms of equipping each object with a commutative monoid structure such that the projection maps are monoid morphisms [3, Proposition 1.2.2].

In a Cartesian differential category, a commutative monoid is a triple consisting of an object A, a map of type \(A \times A \rightarrow A\), and a point \(\top \xrightarrow {i} A\) such that the usual associativity, unit, and commutativity diagrams commute.

Lemma 3

In a Cartesian differential category, for an object A define the map \(A \times A \xrightarrow {\oplus } A\) as \(\oplus = \pi _0 + \pi _1\). Then the triple \((A, \oplus , 0)\) is a commutative monoid and furthermore:

- (i): \(\oplus \) is linear, that is, \({\mathsf {D}}[\oplus ] = \pi _1 \oplus \);
- (ii): \({\mathsf {T}}(\oplus ) = \oplus \times \oplus \);
- (iii): \(c {\mathsf {T}}(\oplus ) = \oplus \);
- (iv): A map f is additive if and only if \(\oplus f = (f \times f) \oplus \) and \(0f = 0\).

3 Differential Exponential Maps

In this section, we introduce differential exponential maps, which generalize the notion of the classical exponential function \(e^x\) for arbitrary Cartesian differential categories.

Definition 4

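A differential exponential map in a Cartesian differential category is a map \(A \xrightarrow {e} A\), such that the following diagrams commute, written here as equalities:

\[ \langle 0, 1 \rangle {\mathsf {D}}[e] = 1 \qquad \qquad (1 \times e) {\mathsf {D}}[e] = \oplus e \tag{5} \]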

where \(\oplus \) is defined as in Lemma 3.

The intuition for a differential exponential map is best explained in Example 3.i, which shows that the classical exponential function \(e^x\) (which is, of course, the main motivating example) is a differential exponential map. Briefly, since \(e^x\) is its own derivative, applying the differential combinator on \(e^x\) results in the smooth function \({\mathbb {R}} \times {\mathbb {R}} \xrightarrow {{\mathsf {D}}[e^x]} {\mathbb {R}}\) defined as:

\[ {\mathsf {D}}[e^x](x,y) = e^x y \]

The left diagram of (5) generalizes that \(e^0 y = y\) (since \(e^0 =1\)), while the right diagram generalizes that \(e^{x}e^y=e^{x+y}\). The differential combinator is the key piece that allows one to bypass the need for a multiplication operation and a multiplicative unit in the definition of a differential exponential map. That said, in Sect. 4 we will see that every differential exponential map does induce a multiplication and that analogues of the three essential properties of the classical exponential function are satisfied (Proposition 4). And conversely, we will also see how one can also axiomatize differential exponential maps in terms of rig structure and analogues of the three essential properties of the classical exponential function (Proposition 3). And, as mentioned in the introduction, we also highlight that the definition of a differential exponential map does not require any added structure or property on the Cartesian differential category such as a notion of converging limits or infinite sums. Before giving examples of differential exponential maps, which can be found in Example 3, let us first consider the category of differential exponential maps and constructions of differential exponential maps.

For a Cartesian differential category \({\mathbb {X}}\), define its category of differential exponential maps as the category \(\mathsf {DEM}[{\mathbb {X}}]\) whose objects are pairs (A, e) consisting of an object \(A \in {\mathbb {X}}\) and a differential exponential map \(A \xrightarrow {e} A\), and where a map \((A,e) \xrightarrow {f} (B,e^\prime )\) is a linear map \(A \xrightarrow {f} B\) in \({\mathbb {X}}\) such that the following diagram commutes:

\[ e f = f e^\prime \]

and where composition and identity maps are as in \({\mathbb {X}}\). The reason why maps in \(\mathsf {DEM}[{\mathbb {X}}]\) are restricted to being linear will become apparent in the proof of Theorem 1. There is the obvious forgetful functor \(\mathsf {DEM}[{\mathbb {X}}] \xrightarrow {{\mathsf {U}}} \mathsf {LIN}[{\mathbb {X}}]\) which maps \({\mathsf {U}}(A,e) = A\) and \({\mathsf {U}}(f) = f\).

Lemma 4

The forgetful functor \(\mathsf {DEM}[{\mathbb {X}}] \xrightarrow {{\mathsf {U}}} \mathsf {LIN}[{\mathbb {X}}]\) creates all limits.

Proof

Let \({\mathbb {D}} \xrightarrow {{\mathsf {F}}} \mathsf {DEM}[{\mathbb {X}}]\) be a functor such that the limit of the composite \({\mathbb {D}} \xrightarrow {{\mathsf {F}}} \mathsf {DEM}[{\mathbb {X}}] \xrightarrow {{\mathsf {U}}} \mathsf {LIN}[{\mathbb {X}}]\) exists in \( \mathsf {LIN}[{\mathbb {X}}]\) which we denote \(\lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \) with projections \(\lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \xrightarrow {\pi _X} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \). Note that \({\mathsf {U}}\left( {\mathsf {F}}(X) \right) \) is the underlying object of \({\mathsf {F}}(X)\), and so it comes equipped with a differential exponential map \({\mathsf {U}}\left( {\mathsf {F}}(X) \right) \xrightarrow {e_X} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \). Therefore \({\mathsf {F}}(X) = \left( {\mathsf {U}}\left( {\mathsf {F}}(X) \right) , e_X \right) \). Now for every map \(X \xrightarrow {f} Y\) in \({\mathbb {D}}\), since \({\mathsf {F}}(f)\) is a map in \(\mathsf {DEM}[{\mathbb {X}}]\), the following diagram commutes:

\[ e_X \, {\mathsf {U}}\left( {\mathsf {F}}(f) \right) = {\mathsf {U}}\left( {\mathsf {F}}(f) \right) e_Y \]

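By the universal property of \(\lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \), there is a unique map:

\[ \lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \xrightarrow {\lim \limits _{X \in {\mathbb {D}}} e_X} \lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \]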

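which makes the following diagram commute for each \(X \in {\mathbb {D}}\):

\[ \left( \lim \limits _{X \in {\mathbb {D}}} e_X \right) \pi _X = \pi _X e_X \]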

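We wish to show that \(\lim \limits _{X \in {\mathbb {D}}} e_X\) is a differential exponential map. To do so, first note that for each \(X \in {\mathbb {D}}\), \(\pi _X\) is linear and so by Lemma 1 it follows that:

\[ {\mathsf {D}}\left[ \lim \limits _{X \in {\mathbb {D}}} e_X \right] \pi _X = {\mathsf {D}}\left[ \left( \lim \limits _{X \in {\mathbb {D}}} e_X \right) \pi _X \right] = {\mathsf {D}}[\pi _X e_X] = (\pi _X \times \pi _X) {\mathsf {D}}[e_X] \]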

Now since \(\pi _X\) is linear, it is also additive (Lemma 1) and therefore we obtain the following:

\[ \oplus \pi _X = (\pi _X \times \pi _X) \oplus \]

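Therefore for each \(X \in {\mathbb {D}}\), we have that:

\[ \langle 0, 1 \rangle {\mathsf {D}}\left[ \lim \limits _{X \in {\mathbb {D}}} e_X \right] \pi _X = \langle 0, 1 \rangle (\pi _X \times \pi _X) {\mathsf {D}}[e_X] = \pi _X \langle 0, 1 \rangle {\mathsf {D}}[e_X] = \pi _X \]

\[ \left( 1 \times \lim \limits _{X \in {\mathbb {D}}} e_X \right) {\mathsf {D}}\left[ \lim \limits _{X \in {\mathbb {D}}} e_X \right] \pi _X = (\pi _X \times \pi _X)(1 \times e_X) {\mathsf {D}}[e_X] = (\pi _X \times \pi _X) \oplus e_X = \oplus \pi _X e_X = \oplus \left( \lim \limits _{X \in {\mathbb {D}}} e_X \right) \pi _X \]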

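Then by the universal property of the limit, it follows that:

\[ \langle 0, 1 \rangle {\mathsf {D}}\left[ \lim \limits _{X \in {\mathbb {D}}} e_X \right] = 1 \qquad \left( 1 \times \lim \limits _{X \in {\mathbb {D}}} e_X \right) {\mathsf {D}}\left[ \lim \limits _{X \in {\mathbb {D}}} e_X \right] = \oplus \lim \limits _{X \in {\mathbb {D}}} e_X \]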

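and so we conclude that \(\lim \limits _{X \in {\mathbb {D}}} e_X\) is a differential exponential map. From here, it is straightforward to conclude that the limit of \({\mathbb {D}} \xrightarrow {{\mathsf {F}}} \mathsf {DEM}[{\mathbb {X}}]\) is the pair:

\[ \left( \lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) , \lim \limits _{X \in {\mathbb {D}}} e_X \right) \]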

with projections \((\lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) , \lim \limits _{X \in {\mathbb {D}}} e_X) \xrightarrow {\pi _X} \left( {\mathsf {U}}\left( {\mathsf {F}}(X) \right) , e_X \right) \). Furthermore, \({\mathsf {U}}(\lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) , \lim \limits _{X \in {\mathbb {D}}} e_X) = \lim \limits _{X \in {\mathbb {D}}} {\mathsf {U}}\left( {\mathsf {F}}(X) \right) \) and \({\mathsf {U}}(\pi _X) = \pi _X\), and it follows from the definition of \(\lim \limits _{X \in {\mathbb {D}}} e_X\) that this is the unique cone over \({\mathsf {F}}\) with this property. Therefore, we conclude that \(\mathsf {DEM}[{\mathbb {X}}] \xrightarrow {{\mathsf {U}}} \mathsf {LIN}[{\mathbb {X}}]\) creates all limits. \(\square \)

By Lemma 1, the projection maps of the product are linear. As such, an immediate consequence of Lemma 4 is that the product of differential exponential maps is again a differential exponential map. Therefore, the category of differential exponential maps has finite products.

Corollary 2

In a Cartesian differential category:

- (i):

-

For the terminal object \(\top \), \(\top \xrightarrow {1} \top \) is a differential exponential map;

- (ii):

-

If \(A \xrightarrow {e} A\) and \(B \xrightarrow {e^\prime } B\) are differential exponential maps, then their product \(A \times B \xrightarrow {e \times e^\prime } A \times B\) is a differential exponential map.

Furthermore, for a Cartesian differential category \({\mathbb {X}}\), \(\mathsf {DEM}[{\mathbb {X}}]\) has finite products where the terminal object is \((\top , 1)\), and where the product of (A, e) and \((B, e^\prime )\) is \((A \times B, e \times e^\prime )\) with projection maps \((A \times B, e \times e^\prime ) \xrightarrow {\pi _0} (A,e)\) and \((A \times B, e \times e^\prime ) \xrightarrow {\pi _1} (B,e^\prime )\).

It is important to note that a differential exponential map for \(A \times B\) is not necessarily the product of differential exponential maps, that is, of the form \(e \times e^\prime \). See Example 3 for three examples of differential exponential maps which are not the products of differential exponential maps.

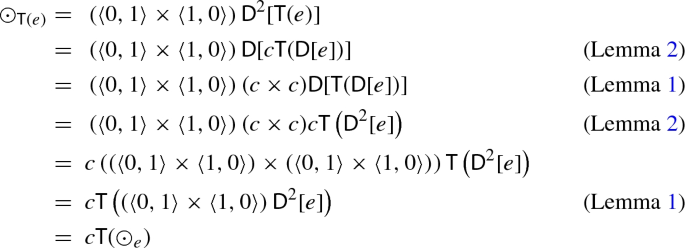

Our next observation is that the category of differential exponential maps is also a Cartesian tangent category. We first show that the tangent functor maps differential exponential maps to differential exponential maps.

Lemma 5

If \(A \xrightarrow {e} A\) is a differential exponential map, then \(A \times A \xrightarrow {{\mathsf {T}}(e)} A \times A\) is a differential exponential map.

Proof

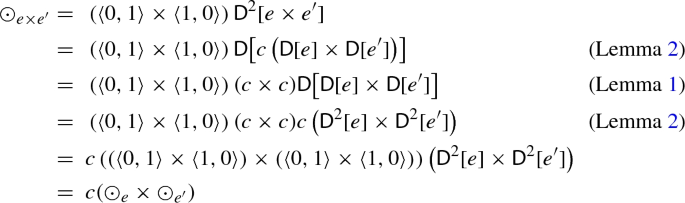

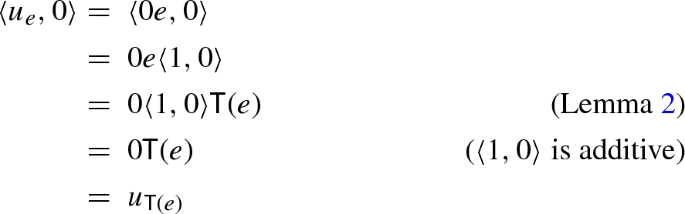

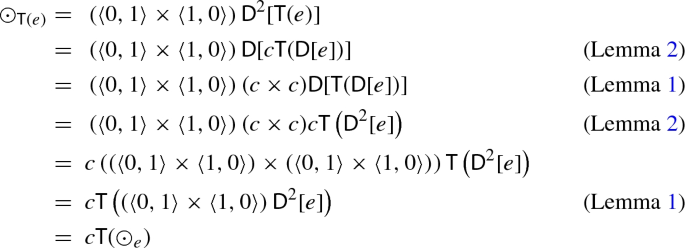

We first show that \(\langle 0, 1 \rangle {\mathsf {D}}\left[ {\mathsf {T}}(e) \right] = 1\):

Next we show that \((1 \times {\mathsf {T}}(e)) {\mathsf {D}}\left[ {\mathsf {T}}(e) \right] = \oplus {\mathsf {T}}(e)\):

So we conclude that \({\mathsf {T}}(e)\) is a differential exponential map. \(\square \)

Proposition 2

For a Cartesian differential category \({\mathbb {X}}\), \(\mathsf {DEM}[{\mathbb {X}}]\) is a Cartesian tangent category where the tangent functor \({\mathsf {T}}: \mathsf {DEM}[{\mathbb {X}}] \rightarrow \mathsf {DEM}[{\mathbb {X}}]\) is defined on objects as \({\mathsf {T}}(A, e) := (A \times A, {\mathsf {T}}(e))\) and on maps as \({\mathsf {T}}(f) = f \times f\), and where the remaining tangent structure is the same as for \(\mathsf {LIN}[{\mathbb {X}}]\) (which is the same as for \({\mathbb {X}}\) and can be found in [12, Proposition 4.7]).

Proof

The tangent functor \({\mathsf {T}}\) is well defined by Lemma 5. Since all the maps of the tangent structure of \({\mathbb {X}}\) are linear, it follows that by their respective naturality with the tangent functor of \({\mathbb {X}}\), they are also maps in \(\mathsf {DEM}[{\mathbb {X}}]\) which are natural for its tangent functor. The existence of the necessary limits for tangent structure in \(\mathsf {DEM}[{\mathbb {X}}]\) will follow from Corollary 1 and Lemma 4. And lastly, the required equalities for tangent structure will hold since they hold in \(\mathsf {LIN}[{\mathbb {X}}]\). So we conclude that \(\mathsf {DEM}[{\mathbb {X}}]\) is a tangent category. Furthermore, since \({\mathbb {X}}\) is a Cartesian tangent category, it follows that \(\mathsf {DEM}[{\mathbb {X}}]\) is also a Cartesian tangent category. \(\square \)

It may be tempting to think that \(\mathsf {DEM}[{\mathbb {X}}]\) is also a Cartesian differential category, but this is not the case. Indeed, note that even if \((A, e) \xrightarrow {f} (B, e^\prime )\) and \((A, e) \xrightarrow {g} (B, e^\prime )\) are maps in \(\mathsf {DEM}[{\mathbb {X}}]\), their sum \(f +g\) is not (in general) a map in \(\mathsf {DEM}[{\mathbb {X}}]\), since it is not necessarily the case that \(e(f+g)\) equals \((f+g)e^\prime \). This implies that \(\mathsf {DEM}[{\mathbb {X}}]\) is not a Cartesian left additive category, and so in particular not a Cartesian differential category.
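
To make this failure concrete, the following Python sketch works in \(\mathsf {SMOOTH}\) with the classical exponential on \({\mathbb {R}}\) and takes both maps to be the identity, which is linear and trivially commutes with the exponential. The function names are illustrative assumptions and are not notation from the paper. The sum of the two identities is the doubling map, and the sketch compares the composite "first exponentiate, then double" with "first double, then exponentiate".

```python
import math


def e(x: float) -> float:
    """The classical exponential, viewed as a differential exponential map on R."""
    return math.exp(x)


def f(x: float) -> float:
    """The identity on R: linear, and it commutes with e."""
    return x


def g(x: float) -> float:
    """A second copy of the identity on R."""
    return x


def f_plus_g(x: float) -> float:
    """The pointwise sum f + g, that is, the doubling map x -> 2x."""
    return f(x) + g(x)


def e_then_sum(x: float) -> float:
    """Apply e first and f + g second: x -> 2 * exp(x)."""
    return f_plus_g(e(x))


def sum_then_e(x: float) -> float:
    """Apply f + g first and e second: x -> exp(2x)."""
    return e(f_plus_g(x))


if __name__ == "__main__":
    x = 1.0
    # The two composites disagree, so f + g does not commute with e.
    print(e_then_sum(x), sum_then_e(x))
```

At \(x = 1\) the two composites are \(2e\) and \(e^2\), which differ, so the doubling map is linear but is not a map of differential exponential maps.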

Example 3

Here are now examples of differential exponential maps in the Cartesian differential category \(\mathsf {SMOOTH}\) (as defined in Example 1).

- (i):

-

Consider the exponential function \({\mathbb {R}} \xrightarrow {e^x} {\mathbb {R}}\), \(x \mapsto e^x\). Since \(\frac{\partial e^x}{\partial x}(a) = e^a\), we have that:

$$\begin{aligned} {\mathsf {D}}[e^x](a,b) = \frac{\partial e^x}{\partial x}(a)b = e^a b \end{aligned}$$The exponential function satisfies the left diagram of (5) since \(e^0=1\):

$$\begin{aligned} {\mathsf {D}}[e^x](0,a) = e^0 a = a \end{aligned}$$while the right diagram of (5) is also satisfied since \(e^a e^b = e^{a+b}\):

$$\begin{aligned} {\mathsf {D}}[e^x](a,e^b) = e^a e^b = e^{a+b} \end{aligned}$$Then the exponential function \({\mathbb {R}} \xrightarrow {e^x} {\mathbb {R}}\) is a differential exponential map.

- (ii):

-

Applying Corollary 2.ii to the exponential function \({\mathbb {R}} \xrightarrow {e^x} {\mathbb {R}}\), the point-wise exponential functions \({\mathbb {R}}^{n} \xrightarrow {e^x \times \ldots \times e^x} {\mathbb {R}}^n\):

$$\begin{aligned} (x_1, \ldots , x_n) \mapsto (e^{x_1}, \ldots , e^{x_n}) \end{aligned}$$are differential exponential maps in \(\mathsf {SMOOTH}\).

- (iii):

-

Applying Lemma 5 to the exponential function \({\mathbb {R}} \xrightarrow {e^x} {\mathbb {R}}\), the smooth function \({{\mathbb {R}}^{2} \xrightarrow {{\mathsf {T}}(e^x)} {\mathbb {R}}^2}\), which is worked out to be:

$$\begin{aligned} (x,y) \mapsto (e^x, e^xy) \end{aligned}$$is a differential exponential map. To better understand \({\mathsf {T}}(e^x)\), consider the ring of dual numbers \({\mathbb {R}}[\varepsilon ]\) [25, Section 1.1.3]:

$$\begin{aligned} {\mathbb {R}}[\varepsilon ] = \lbrace x + y\varepsilon \vert ~ x,y \in {\mathbb {R}}, \varepsilon ^2=0 \rbrace \end{aligned}$$As explained in [25, Section 1.1.5], the dual number exponential function is \(e^{x+y\varepsilon } = e^x + e^x y \varepsilon \). It may be useful to the reader to work out this example using the power series definition of the exponential function:

$$\begin{aligned} e^{x+y\varepsilon } = \sum \limits ^{\infty }_{n =0} \frac{(x + y \varepsilon )^n}{n!} \end{aligned}$$Note that by the binomial theorem and power series multiplication, one can still derive that \(e^{x+y\varepsilon } = e^xe^{y\varepsilon }\). Therefore, it remains to compute \(e^{y\varepsilon }\). Since \(\varepsilon ^{n} =0\) for all \(n \ge 2\), we obtain:

$$\begin{aligned} e^{y\varepsilon } = \sum \limits ^{\infty }_{n =0} \frac{(y \varepsilon )^n}{n!} = \sum \limits ^{\infty }_{n =0} \frac{y^n \varepsilon ^n}{n!} = 1 + y \varepsilon \end{aligned}$$So \(e^{y\varepsilon } = 1 + y \varepsilon \). Therefore,

$$\begin{aligned} e^{x+y\varepsilon } = e^xe^{y\varepsilon } = e^x(1+y\varepsilon ) = e^x + e^x y \varepsilon \end{aligned}$$Writing dual numbers \(x+y\varepsilon \) instead as (x, y), it becomes clear that \({\mathsf {T}}(e^x)\) is the real smooth function associated to the dual numbers exponential function. This relation was to be expected since tangent categories are closely related to dual numbers and Weil algebras [20]. Furthermore, note that in dual number notation, \({\mathsf {D}}[{\mathsf {T}}(e^x)]\left( a+b\varepsilon , c+d\varepsilon \right) = e^{a+b\varepsilon }(c+d\varepsilon )\).

- (iv):

-

Let \({\mathbb {C}}\) be the field of complex numbers:

$$\begin{aligned} {\mathbb {C}} = \lbrace x + iy \vert ~ x,y \in {\mathbb {R}}, i^2=-1 \rbrace \end{aligned}$$The complex exponential function is \(e^{x+iy} = e^x\cos (y) + i e^x\sin (y)\), where \(\cos \) and \(\sin \) are the trigonometric cosine and sine functions respectively. The complex exponential function is of course derived from the power series definition:

$$\begin{aligned} e^{x+iy} = \sum \limits ^{\infty }_{n =0} \frac{(x + iy)^n}{n!} \end{aligned}$$and then simplifying by using that \(e^{x+iy}= e^x e^{iy}\), \(i^2=-1\), and the Taylor series expansions of \(\sin (x)\) and \(\cos (x)\). The complex exponential function can be expressed as the smooth real function \({\mathbb {R}}^2 \xrightarrow {\epsilon } {\mathbb {R}}^2\),

$$\begin{aligned} (x,y) \mapsto (e^x \cos (y), e^x \sin (y)) \end{aligned}$$By the Leibniz rule and the derivative identities for both the exponential and trigonometric functions, we have that:

$$\begin{aligned} {\mathsf {D}}[\epsilon ]\left( (a,b), (c,d) \right) = \left( e^a\cos (b)c - e^a \sin (b)d, e^a\sin (b)c + e^a \cos (b)d\right) \end{aligned}$$Or using complex number notation: \({\mathsf {D}}[\epsilon ]\left( a+ib , c+id \right) = e^{a+ib}(c+id)\). It is well known that the complex exponential function satisfies the same basic properties as the real exponential function, that is, \(e^0 = 1\) and \(e^{z+w} = e^z e^w\) for \(z,w \in {\mathbb {C}}\). As such, we can easily compute that (using complex number notation):

$$\begin{aligned} {\mathsf {D}}[\epsilon ]\left( 0, a+ib \right)&= e^{0}(a+ib) = a+ib \\ {\mathsf {D}}[\epsilon ]\left( a+ib , e^{c+id} \right)&= e^{a+ib}e^{c+id} = e^{(a+ib) + (c + id)} \end{aligned}$$Therefore, it follows that \({\mathbb {R}}^2 \xrightarrow {\epsilon } {\mathbb {R}}^2\) is a differential exponential map.

- (v):

-

Let \({\mathbb {C}}^\prime \) be the ring of split-complex numbers [25, Section 1.1.2] (also known as hyperbolic twocomplex numbers [24]):

$$\begin{aligned} {\mathbb {C}}^\prime = \lbrace x + jy \vert ~ x,y \in {\mathbb {R}}, j^2= 1 \rbrace \end{aligned}$$The split complex exponential function [24, Section 1.3] is instead defined using the hyperbolic cosine and sine functions \(\cosh \) and \(\sinh \), that is, \(e^{x+jy} = e^x\cosh (y) + j e^x\sinh (y)\). As in the previous example, the split complex exponential function is derived from its power series definition:

$$\begin{aligned} e^{x+jy} = \sum \limits ^{\infty }_{n =0} \frac{(x + jy)^n}{n!} \end{aligned}$$and then simplifying by using that \(e^{x+jy}= e^x e^{jy}\), \(j^2=1\), and the Taylor series expansions of \(\sinh (x)\) and \(\cosh (x)\), as done in [24, Section 1.3]. Similar to the complex exponential function, the split complex exponential function can be expressed as the smooth real function \({\mathbb {R}}^2 \xrightarrow {\epsilon ^\prime } {\mathbb {R}}^2\):

$$\begin{aligned} (x,y) \mapsto (e^x \cosh (y), e^x \sinh (y)) \end{aligned}$$By the Leibniz rule and the derivative identities for both the exponential and hyperbolic functions, we have that:

$$\begin{aligned} {\mathsf {D}}[\epsilon ^\prime ]\left( (a,b), (c,d) \right) = \left( e^a\cosh (b)c + e^a \sinh (b)d, e^a\sinh (b)c + e^a \cosh (b)d\right) \end{aligned}$$Or using split complex number notation: \({\mathsf {D}}[\epsilon ^\prime ]\left( a+jb , c+jd \right) = e^{a+jb}(c+jd)\). As explained in [24], the split complex exponential function satisfies \(e^0=1\) and \(e^{u+v} = e^u e^v\) for \(u,v \in {\mathbb {C}}^\prime \). Then we can compute that (using split complex number notation):

$$\begin{aligned} {\mathsf {D}}[\epsilon ^\prime ]\left( 0, a+jb \right)&= e^{0}(a+jb) = a+jb \\ {\mathsf {D}}[\epsilon ^\prime ]\left( a+jb , e^{c+jd} \right)&= e^{a+jb}e^{c+jd} = e^{(a+jb) + (c + jd)} \end{aligned}$$Therefore, \({\mathbb {R}}^2 \xrightarrow {\epsilon ^\prime } {\mathbb {R}}^2\) is a differential exponential map.

Note that Example 3.(iii), (iv), and (v) are not the product of differential exponential maps in the sense of Corollary 2.ii. That said, one could take the product of any of these differential exponential maps to obtain a multitude of other examples.
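
As a numerical sanity check of Example 3.(iii), (iv), and (v), the following Python sketch evaluates the two differential exponential map identities, \({\mathsf {D}}[e](0,a) = a\) and \({\mathsf {D}}[e](a, e(b)) = e(a+b)\), at sample points of \({\mathbb {R}}^2\). The derivative formulas are transcribed from the example, with the dual number one expanded from \(e^{a+b\varepsilon }(c+d\varepsilon )\). The function names and the helper `satisfies_axioms` are hypothetical and introduced only for this illustration. A floating-point check at a few points is of course not a proof.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, float]


def exp_dual(p: Vec) -> Vec:
    """T(e^x): (x, y) -> (e^x, e^x y), the dual numbers exponential."""
    x, y = p
    return (math.exp(x), math.exp(x) * y)


def d_exp_dual(p: Vec, v: Vec) -> Vec:
    """D[T(e^x)](a + b eps, c + d eps) = e^(a + b eps) (c + d eps)."""
    (a, b), (c, d) = p, v
    ea = math.exp(a)
    return (ea * c, ea * b * c + ea * d)


def exp_complex(p: Vec) -> Vec:
    """(x, y) -> (e^x cos y, e^x sin y), the complex exponential."""
    x, y = p
    return (math.exp(x) * math.cos(y), math.exp(x) * math.sin(y))


def d_exp_complex(p: Vec, v: Vec) -> Vec:
    """D of the complex exponential at point (a, b) applied to vector (c, d)."""
    (a, b), (c, d) = p, v
    ea = math.exp(a)
    return (ea * math.cos(b) * c - ea * math.sin(b) * d,
            ea * math.sin(b) * c + ea * math.cos(b) * d)


def exp_split(p: Vec) -> Vec:
    """(x, y) -> (e^x cosh y, e^x sinh y), the split complex exponential."""
    x, y = p
    return (math.exp(x) * math.cosh(y), math.exp(x) * math.sinh(y))


def d_exp_split(p: Vec, v: Vec) -> Vec:
    """D of the split complex exponential at (a, b) applied to (c, d)."""
    (a, b), (c, d) = p, v
    ea = math.exp(a)
    return (ea * math.cosh(b) * c + ea * math.sinh(b) * d,
            ea * math.sinh(b) * c + ea * math.cosh(b) * d)


def close(p: Vec, q: Vec, tol: float) -> bool:
    """Componentwise comparison with a mixed absolute/relative tolerance."""
    return all(abs(s - t) <= tol * max(1.0, abs(s), abs(t)) for s, t in zip(p, q))


def satisfies_axioms(e: Callable[[Vec], Vec],
                     de: Callable[[Vec, Vec], Vec],
                     a: Vec, b: Vec, tol: float = 1e-9) -> bool:
    """Check D[e](0, a) = a and D[e](a, e(b)) = e(a + b) at the points a, b."""
    zero = (0.0, 0.0)
    a_plus_b = (a[0] + b[0], a[1] + b[1])
    unit_law = close(de(zero, a), a, tol)
    addition_law = close(de(a, e(b)), e(a_plus_b), tol)
    return unit_law and addition_law


EXAMPLES = {
    "dual": (exp_dual, d_exp_dual),
    "complex": (exp_complex, d_exp_complex),
    "split complex": (exp_split, d_exp_split),
}

if __name__ == "__main__":
    for name, (e, de) in EXAMPLES.items():
        print(name, satisfies_axioms(e, de, (0.3, -1.1), (0.8, 0.4)))
```

For contrast, passing the identity map together with its derivative \((a, b) \mapsto b\) makes the second identity fail, in line with Lemma 6.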

Example 4

Another example of a differential exponential map can be found in [11, Definition 5.20]. Briefly, in a Cartesian tangent category, a differential curve object [11, Definition 5.14] (viewed as an object in the Cartesian differential category of differential objects [12]) admits a canonical differential exponential map which arises as the solution to the well known associated differential equations. As a particular example, in the tangent category of real smooth manifolds, the differential curve object is \({\mathbb {R}}\) and its induced differential exponential map is the canonical exponential function \(e^x\). In Sect. 5 we will discuss the link between differential exponential maps and differential equations, and in particular, we will see in Proposition 8 how a differential exponential map arises as the solution of a certain initial value problem.

Corollary 2.i tells us that the identity map of the terminal object is a differential exponential map. So we conclude this section with the observation that a differential exponential map is linear if and only if it is the identity map of a terminal object.

Lemma 6

A differential exponential map \(A \xrightarrow {e} A\) is reduced (i.e. \(0e=0\)) if and only if A is a terminal object. Therefore, a differential exponential map \(A \xrightarrow {e} A\) is additive or linear if and only if A is a terminal object.

Proof

Suppose that \(A \xrightarrow {e} A\) is a differential exponential map which satisfies \(0e=0\). Then we have that:

So \(e=0\). Now note that in a Cartesian left additive category, A is a terminal object if and only if \(1_A = 0\) (we leave this to the reader to check for themselves). Then we have that:

So \(1_A = 0\), and so A is a terminal object. Conversely, if A is a terminal object, then we must have that \(e = 1_A = 0\), and so clearly e is reduced. For the second statement, note that by definition every additive map is reduced, and since linear maps are additive (Lemma 1), they are also reduced. So if e is additive or linear, it is reduced and therefore A is a terminal object. Conversely, if A is a terminal object, then e must be the identity, and identity maps are always linear and additive (Lemma 1). \(\square \)
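
For the reader's convenience, the two computations in the proof above can be sketched as follows. This is a reconstruction which assumes that only the two differential exponential map identities, the additivity of \({\mathsf {D}}[e]\) in its second argument, and \({\mathsf {D}}[0] = 0\) are used; it is not a quotation of the original displays. If \(0e=0\), then:

$$\begin{aligned} e = \langle 1, 0 \rangle \oplus e = \langle 1, 0 \rangle (1 \times e) {\mathsf {D}}[e] = \langle 1, 0e \rangle {\mathsf {D}}[e] = \langle 1, 0 \rangle {\mathsf {D}}[e] = 0 \end{aligned}$$

and consequently:

$$\begin{aligned} 1_A = \langle 0, 1 \rangle {\mathsf {D}}[e] = \langle 0, 1 \rangle {\mathsf {D}}[0] = 0 \end{aligned}$$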

Example 5

Every category with finite biproducts is a Cartesian differential category with differential combinator defined as \({\mathsf {D}}[f] = \pi _1 f\), which means that every map is linear. Therefore, in this case, the only differential exponential maps are the identity maps on terminal objects.

Note that for a Cartesian differential category \({\mathbb {X}}\), Lemma 6 also implies that the only differential object [12] in the Cartesian tangent category \(\mathsf {DEM}[{\mathbb {X}}]\) is the terminal object.

4 Differential Exponential Rigs

In this section, we introduce differential exponential rigs, which provide an equivalent alternative characterization of differential exponential maps. We will show that every differential exponential map induces a differential exponential rig (Proposition 4) and that conversely, every differential exponential rig induces a differential exponential map (Proposition 3). We will also show that for a Cartesian differential category, its category of differential exponential maps is isomorphic to its category of differential exponential rigs (Theorem 1). We begin by reviewing differential rigs, which are rigs in a Cartesian differential category whose multiplication is bilinear in the differential sense.

Definition 5

A differential rig in a Cartesian differential category is a triple \((A, \odot , u)\) consisting of an object A and two maps \(A \times A \xrightarrow {\odot } A\) and \(\top \xrightarrow {u} A\) such that \((A, \odot , u)\) is a commutative monoid and \(A \times A \xrightarrow {\odot } A\) is bilinear, that is, the following equality holds:

We should justify the use of the term rig in differential rig. Indeed, the term (commutative) rig should imply that there are two (commutative) monoid structures that satisfy the expected distributivity axioms. But we know that in a Cartesian differential category, as discussed in Lemma 3, every object A already comes equipped with a commutative monoid structure \((A, \oplus , 0)\). So every differential rig \((A, \odot , u)\) does come with two commutative monoid structures. The required distributivity axioms are captured by the fact that \(\odot \) is bilinear, and therefore additive in each argument, which is an equivalent way of saying that \(\odot \) distributes over \(\oplus \) in the rig sense.

Lemma 7

If \((A, \odot , u)\) is a differential rig, then \((A, \odot , u, \oplus , 0)\) is a commutative rig, that is, the following diagrams commute:

where \(\oplus \) is defined as in Lemma 3.

A differential exponential rig is a differential rig equipped with an endomorphism which satisfies analogues of the three essential properties of the classical exponential function. This endomorphism will, of course, turn out to be a differential exponential map.

Definition 6

A differential exponential rig in a Cartesian differential category is a quadruple \((A, \odot , u, e)\) consisting of a differential rig \((A, \odot , u)\) and a map \(A \xrightarrow {e} A\), such that the following diagrams commute:

where \(\oplus \) is defined as in Lemma 3.

Examples of differential exponential rigs can be found below in Example 6, after we have proven in Proposition 4 that every differential exponential map induces a differential exponential rig. If one keeps in mind the classical exponential function \(e^x\), then the axioms of a differential exponential rig are straightforward to understand. The leftmost diagram of (11) generalizes that \({\mathsf {D}}[e^x](x,y)=e^xy\), the middle diagram generalizes that \(e^0=1\), and lastly the rightmost diagram generalizes that \(e^{x+y}=e^xe^y\). Also note that the two rightmost diagrams of (11) say that e is a monoid morphism from \((A, \oplus , 0)\) to \((A, \odot , u)\). It is also worth discussing why the generalization of \(e^{x+y}=e^xe^y\) is included in the axioms of a differential exponential rig, apart from being a desirable and useful identity and one which is often included in algebraic generalizations of exponential functions [26]. Indeed in the classical case, the two leftmost diagrams of (11) are sufficient for characterizing the exponential function since \(e^x\) is the unique solution to the initial value problem \(f^\prime (x) = f(x)\) with \(f(0)=1\), and from that definition it is possible to prove that \(e^{x+y}=e^xe^y\). In an arbitrary Cartesian differential category, however, it is not necessarily the case that \({\mathsf {D}}[e] = (e \times 1) \odot \) and \(0e=u\) imply \(\oplus e = (e \times e) \odot \). Furthermore, all three identities are necessary in proving that there is a bijective correspondence between differential exponential maps and differential exponential rigs. In turn, this allows us to drop the extra requirement for differential rig structure in characterizing these abstract exponential functions.
That said, as we will see in Proposition 8, if one assumes uniqueness of solutions to certain initial value problems as in the classical case, then it is possible to derive \(\oplus e = (e \times e) \odot \) from \({\mathsf {D}}[e] = (e \times 1) \odot \) and \(0e=u\).
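
To illustrate the three identities of a differential exponential rig on a concrete instance, the following Python sketch takes the split complex numbers, with exponential \(e^{x+jy} = e^x\cosh (y) + j e^x\sinh (y)\) and multiplication determined by \(j^2 = 1\). Here \({\mathsf {D}}[e](a,b)\) is approximated by a central finite difference rather than computed symbolically, so the first identity is only checked up to a tolerance. The names `split_exp`, `split_mul`, `directional_derivative`, and `check_rig_identities` are hypothetical helpers for this sketch.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, float]
UNIT: Vec = (1.0, 0.0)  # the split complex number 1 + 0j


def split_exp(p: Vec) -> Vec:
    """Split complex exponential: (x, y) -> (e^x cosh y, e^x sinh y)."""
    x, y = p
    return (math.exp(x) * math.cosh(y), math.exp(x) * math.sinh(y))


def split_mul(p: Vec, q: Vec) -> Vec:
    """Split complex multiplication, using j^2 = 1."""
    (x1, y1), (x2, y2) = p, q
    return (x1 * x2 + y1 * y2, x1 * y2 + x2 * y1)


def directional_derivative(f: Callable[[Vec], Vec], a: Vec, b: Vec,
                           h: float = 1e-5) -> Vec:
    """Central-difference approximation of D[f](a, b): point a, direction b."""
    plus = f((a[0] + h * b[0], a[1] + h * b[1]))
    minus = f((a[0] - h * b[0], a[1] - h * b[1]))
    return ((plus[0] - minus[0]) / (2 * h), (plus[1] - minus[1]) / (2 * h))


def close(p: Vec, q: Vec, tol: float) -> bool:
    """Componentwise comparison with a mixed absolute/relative tolerance."""
    return all(abs(s - t) <= tol * max(1.0, abs(s), abs(t)) for s, t in zip(p, q))


def check_rig_identities(a: Vec, b: Vec) -> Tuple[bool, bool, bool]:
    """Return the truth values of the three identities at the points a, b."""
    # D[e](a, b) = e(a) * b  (approximate, because of the finite difference)
    derivative_ok = close(directional_derivative(split_exp, a, b),
                          split_mul(split_exp(a), b), 1e-6)
    # e(0) = u
    unit_ok = close(split_exp((0.0, 0.0)), UNIT, 1e-12)
    # e(a + b) = e(a) * e(b)
    sum_ok = close(split_exp((a[0] + b[0], a[1] + b[1])),
                   split_mul(split_exp(a), split_exp(b)), 1e-9)
    return derivative_ok, unit_ok, sum_ok


if __name__ == "__main__":
    print(check_rig_identities((0.3, -0.7), (1.2, 0.5)))
```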

Proposition 3

Let \((A, \odot , u, e)\) be a differential exponential rig. Then \(A \xrightarrow {e} A\) is a differential exponential map.

Proof

We first show that \(\langle 0,1 \rangle {\mathsf {D}}[e] = 1\):

Next we show that \((1 \times e) {\mathsf {D}}[e] = \oplus e\):

So we conclude that e is a differential exponential map. \(\square \)

In order to show the converse of Proposition 3, consider the classical exponential function \(e^x\) and note that its second order derivative is:

Setting \(x=0\) and \(w=0\), one obtains precisely the multiplication of real numbers:

The unit for this multiplication is obtained from \(e^0 = 1\). Generalizing this construction allows one to show how a differential exponential map induces a differential exponential rig.

Before doing the proof in an arbitrary Cartesian differential category, it may be worth mapping out how the proof will go using \(e^x\). Commutativity of the multiplication, which is:

follows from [CD.7], which allows one to swap the middle two arguments of the second derivative. To show that \(e^0\) is the unit, that is:

we first observe that:

and then use the differential exponential map axiom to conclude that \(e^0\) is indeed the unit. Associativity of the multiplication, which is that:

is the trickiest part of the proof. Essentially, using the differential combinator axioms and the differential exponential map axioms, one can simplify both sides of the associativity law to the third order derivative of \(e^x\) (with 0 evaluated in the appropriate variables):

which itself turns out to be the multiplication of three real numbers.
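
For concreteness, the classical computation behind this outline can be sketched as follows, assuming the convention \({\mathsf {D}}[f](a,b) = f^\prime (a)b\) used in Example 3. The variable names x, y, z, w follow the text above, and the displayed formulas are a reconstruction from that convention rather than a quotation:

$$\begin{aligned} {\mathsf {D}}\left[ {\mathsf {D}}[e^x] \right] \left( (x,y), (z,w) \right)&= e^x y z + e^x w \\ {\mathsf {D}}\left[ {\mathsf {D}}[e^x] \right] \left( (0,y), (z,0) \right)&= e^0 y z = yz \end{aligned}$$

In particular, the resulting expression is symmetric in y and z, and taking \(y = e^0 = 1\) returns z, which are the classical shadows of the commutativity and unit laws.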

Proposition 4

Let \(A \xrightarrow {e} A\) be a differential exponential map, and define the maps \({A \times A \xrightarrow {\odot _e} A}\) and \(\top \xrightarrow {u_e} A\) respectively as follows:

Then \((A, \odot _e, u_e, e)\) is a differential exponential rig.

Proof

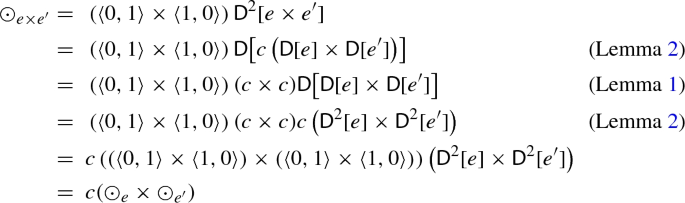

We will first prove that e satisfies the three identities of (11), as these will help simplify the proof that \((A, \odot _e, u_e)\) is a differential rig. We first prove that \({\mathsf {D}}[e] = (e \times 1) \odot _e\):

Using the above equality, we can easily show that \(\oplus e = (e \times e) \odot _e\):

The remaining identity, \(0 e = u_e\), is automatic by construction. So e satisfies the three identities of (11). Next we show that \((A, \odot _e, u_e)\) is a differential rig.

We first explain why \(\odot _e\) is bilinear. In [14, Section 3] it was shown that for every map \({A \xrightarrow {f} B}\), its second order partial derivative:

was bilinear in context A, so bilinear in its last two arguments A or equivalently bilinear with respect to the differential combinator of the simple slice category over A [3, Section 4.5]. By [6, Proposition 4.1.3], bilinear maps in context are preserved by pre-composition with maps which leave the bilinear arguments unaffected, that is, by pre-composition by maps of the form \((g \times 1) \times 1\). Therefore the composite:

is bilinear in context \(\top \). However maps which are bilinear in context \(\top \) correspond precisely to bilinear maps without context. In this case, we obtain that the composite:

is bilinear. Setting \(f=e\), we conclude that \(\odot _e\) is bilinear.

Now we show that \((A, \odot _e, u_e)\) is a commutative monoid by following the intuition provided above. First that \(\odot _e\) is commutative, \(\langle \pi _1, \pi _0 \rangle \odot _e = \odot _e\), follows from [CD.7]:

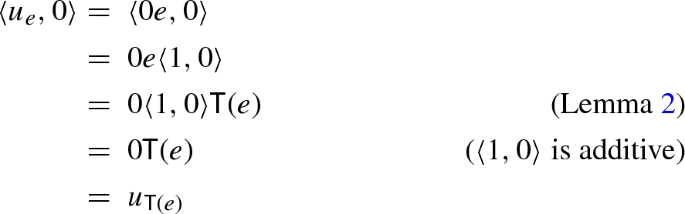

By commutativity, we need only show one of the unit identities, \(\langle 0u_e, 1 \rangle \odot _e = 1\):

Finally we prove associativity, which in the author’s opinion is the most complex proof in this paper. Let \((A \times A) \times A \xrightarrow {\alpha } A \times (A \times A)\) be the canonical associativity isomorphism \(\alpha = \langle \pi _0, \pi _1 \times 1 \rangle \). By Lemma 1, \(\alpha \) is linear and so is its inverse \(\alpha ^{-1}\). As suggested above, our goal will be to show that the third-order derivative gives the three-fold multiplication, that is, we will show that we have the following equality:

To do so, first note that by [CD.5] and [CD.6], one can show that we have the following equality (which we leave as an exercise to the reader):

Using the above identity, we compute that:

So we have that:

Using this identity, we can simplify \(\alpha (1 \times \odot _e) \odot _e\):

So we have that:

Now using the above identity and that we’ve already shown that \(\odot _e\) is commutative, we finally can show that \(\odot _e\) is associative, \((\odot _e \times 1) \odot _e= \alpha (1 \times \odot _e) \odot _e\):

So \((A, \odot _e, u_e)\) is a differential rig, and therefore we conclude that \((A, \odot _e, u_e)\) is a differential exponential rig. \(\square \)

In the proof of Theorem 1, we will show that the constructions of Proposition 3 and Proposition 4 are in fact inverses of each other. The construction from Proposition 4 is also compatible with some of the constructions of differential exponential maps in the following sense:

Lemma 8

In a Cartesian differential category:

- (i):

-

For the terminal object \(\top \), the following equality holds:

$$\begin{aligned} \odot _{1_\top } = 0 \quad \quad \quad u_{1_\top }= 1_\top \end{aligned}$$

- (ii):

-

If \(A \xrightarrow {e} A\) and \(B \xrightarrow {e^\prime } B\) are differential exponential maps, then the following equality holds for the differential exponential map \(A \times B \xrightarrow {e \times e^\prime } A \times B\):

$$\begin{aligned} \odot _{e \times e^\prime } = c (\odot _e \times \odot _{e^\prime }) \quad \quad \quad u_{e \times e^\prime } = \langle u_e, u_{e^\prime } \rangle \end{aligned}$$

- (iii):

-

If \(A \xrightarrow {e} A\) is a differential exponential map, then the following equality holds for the differential exponential map \(A \times A \xrightarrow {{\mathsf {T}}(e)} A \times A\):

$$\begin{aligned} \odot _{{\mathsf {T}}(e)} = c {\mathsf {T}}(\odot _e) \quad \quad \quad u_{{\mathsf {T}}(e)} = \langle u_e, 0 \rangle \end{aligned}$$

Proof

- (i):

-

This is automatic by uniqueness of maps into the terminal object.

- (ii):

-

For the unit, this is mostly straightforward:

$$\begin{aligned} u_{e \times e^\prime }&=~0 (e \times e^\prime ) \\&=~\langle 0 e, 0 e^\prime \rangle \\&=~ \langle u_e, u_{e^\prime } \rangle \end{aligned}$$For the multiplication, we have that:

- (iii):

-

We first show the equality for the unit:

For the multiplication, we have that:

\(\square \)

Example 6

Here we apply Proposition 4 to the examples of differential exponential maps from the previous section to construct examples of differential exponential rigs in \(\mathsf {SMOOTH}\) (which are in fact rings, since \(\mathsf {SMOOTH}\) has additive inverses).

- (i):

-

For the exponential function \({\mathbb {R}} \xrightarrow {e^x} {\mathbb {R}}\), the induced multiplication is precisely given by the standard multiplication of real numbers, that is:

$$\begin{aligned} \odot _{e^x}(x,y) = xy \end{aligned}$$and \(u_{e^x}(*) = 1\).

- (ii):

-

Applying Lemma 8.ii to the point-wise exponential \({\mathbb {R}}^{n} \xrightarrow {e^x \times \ldots \times e^x} {\mathbb {R}}^n\), we obtain the point-wise multiplication of vectors, that is,

$$\begin{aligned} \odot _{e^x \times \ldots \times e^x}\left( (x_1, \ldots , x_n), (y_1, \ldots , y_n) \right) = (x_1 y_1, \ldots , x_n y_n) \end{aligned}$$and \(u_{e^x \times \ldots \times e^x}(*) = (1, \ldots , 1)\).

- (iii):

-

Applying Lemma 8.iii to the tangent exponential function \({{\mathbb {R}}^{2} \xrightarrow {{\mathsf {T}}(e^x)} {\mathbb {R}}^2}\), we obtain the multiplication:

$$\begin{aligned} \odot _{{\mathsf {T}}(e)}\left( (x_1,y_1), (x_2,y_2) \right) = \left( x_1x_2, x_1y_2 + y_1x_2 \right) \end{aligned}$$with unit \(u_{{\mathsf {T}}(e)}(*) = (1, 0)\). Observe that this ring structure on \({\mathbb {R}}^2\) is precisely that of the ring of dual numbers \({\mathbb {R}}[\varepsilon ]\). Indeed, writing (x, y) as \(x + y \varepsilon \) with \(\varepsilon ^2=0\), we see that \(\odot _{{\mathsf {T}}(e)}\) is precisely the multiplication of dual numbers:

$$\begin{aligned} (x_1 + y_1\varepsilon )(x_2 +y_2 \varepsilon ) = x_1x_2 + (x_1y_2 + y_1x_2)\varepsilon \end{aligned}$$

- (iv):

-

For the complex exponential function \({\mathbb {R}}^2 \xrightarrow {\epsilon } {\mathbb {R}}^2\), we obtain the multiplication:

$$\begin{aligned} \odot _{\epsilon }\left( (x_1,y_1), (x_2,y_2) \right) = \left( x_1x_2 - y_1y_2, x_1y_2 + x_2y_1 \right) \end{aligned}$$with unit \(u_{\epsilon }(*) = (1, 0)\). Unsurprisingly, this ring structure on \({\mathbb {R}}^2\) is that of complex numbers \({\mathbb {C}}\). Indeed, writing (x, y) as \(x+iy\) with \(i^2=-1\), \(\odot _{\epsilon }\) gives precisely complex number multiplication:

$$\begin{aligned} (x_1+iy_1)(x_2+iy_2) = (x_1x_2 - y_1y_2) + i(x_1y_2 + x_2y_1) \end{aligned}$$

- (v):

-

For the split complex exponential function \({\mathbb {R}}^2 \xrightarrow {\epsilon ^\prime } {\mathbb {R}}^2\), we obtain the multiplication:

$$\begin{aligned} \odot _{\epsilon ^\prime }\left( (x_1,y_1), (x_2,y_2) \right) = \left( x_1x_2 + y_1y_2, x_1y_2 + x_2y_1 \right) \end{aligned}$$with unit \(u_{\epsilon ^\prime }(*) = (1, 0)\). This ring structure on \({\mathbb {R}}^2\) is that of split complex numbers \({\mathbb {C}}^\prime \). Indeed, writing (x, y) as \(x+jy\) with \(j^2=1\), \(\odot _{\epsilon ^\prime }\) gives split complex number multiplication:

$$\begin{aligned} (x_1+jy_1)(x_2+jy_2) = (x_1x_2 + y_1y_2) + j(x_1y_2 + x_2y_1) \end{aligned}$$
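
The multiplications listed in this example can also be recovered numerically. The Python sketch below assumes, following the classical discussion before Proposition 4, that the induced multiplication is the second derivative evaluated with the point and the last argument set to zero, that is, \((y,z) \mapsto {\mathsf {D}}\left[ {\mathsf {D}}[e] \right] \left( (0,y),(z,0) \right) \). Derivatives are approximated by nested central differences, so agreement is only up to a tolerance. The names `differential` and `induced_mul`, and the flat-tuple encoding of pairs, are assumptions made for this illustration.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, ...]
Map = Callable[[Vec], Vec]


def differential(f: Map, h: float = 1e-4) -> Map:
    """Approximate D[f]; its input is the flat tuple (point a, vector b)."""
    def df(ab: Vec) -> Vec:
        n = len(ab) // 2
        a, b = ab[:n], ab[n:]
        plus = f(tuple(ai + h * bi for ai, bi in zip(a, b)))
        minus = f(tuple(ai - h * bi for ai, bi in zip(a, b)))
        return tuple((p - m) / (2 * h) for p, m in zip(plus, minus))
    return df


def induced_mul(e: Map, n: int, h: float = 1e-4) -> Callable[[Vec, Vec], Vec]:
    """Approximate (y, z) -> D[D[e]]((0, y), (z, 0)) for e on R^n."""
    d2 = differential(differential(e, h), h)
    zero = (0.0,) * n

    def mul(y: Vec, z: Vec) -> Vec:
        # Flat encoding: point (0, y) followed by vector (z, 0).
        return d2(zero + tuple(y) + tuple(z) + zero)
    return mul


def exp_real(p: Vec) -> Vec:
    return (math.exp(p[0]),)


def exp_dual(p: Vec) -> Vec:
    return (math.exp(p[0]), math.exp(p[0]) * p[1])


def exp_complex(p: Vec) -> Vec:
    return (math.exp(p[0]) * math.cos(p[1]), math.exp(p[0]) * math.sin(p[1]))


def exp_split(p: Vec) -> Vec:
    return (math.exp(p[0]) * math.cosh(p[1]), math.exp(p[0]) * math.sinh(p[1]))


# Closed-form multiplications as listed in the example.
def mul_dual(p: Vec, q: Vec) -> Vec:
    return (p[0] * q[0], p[0] * q[1] + p[1] * q[0])


def mul_complex(p: Vec, q: Vec) -> Vec:
    return (p[0] * q[0] - p[1] * q[1], p[0] * q[1] + q[0] * p[1])


def mul_split(p: Vec, q: Vec) -> Vec:
    return (p[0] * q[0] + p[1] * q[1], p[0] * q[1] + q[0] * p[1])


PAIRS = [(exp_dual, mul_dual), (exp_complex, mul_complex), (exp_split, mul_split)]

if __name__ == "__main__":
    y, z = (0.7, -1.3), (2.0, 0.5)
    for e, mul in PAIRS:
        print(e.__name__, induced_mul(e, 2)(y, z), mul(y, z))
```

Nested finite differences lose several digits of accuracy; an automatic differentiation library would be the more robust choice in practice, but the plain version keeps the sketch free of dependencies.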

Example 7

As briefly discussed in Example 4, a differential curve object with solutions to linear systems admits a differential exponential map, and so by applying Proposition 4, a differential curve object is also a differential exponential rig [11, Corollary 5.26]. As an interesting application of this induced rig structure, it turns out that every differential bundle [13] is a module of the differential curve object [11, Proposition 5.4].

For a Cartesian differential category \({\mathbb {X}}\), define its category of differential exponential rigs as the category \(\mathsf {DES}[{\mathbb {X}}]\) whose objects are differential exponential rigs \((A,\odot , u, e)\) and where a map \((A,\odot , u, e) \xrightarrow {f} (B,\odot ^\prime , u^\prime , e^\prime )\) is a linear map \(A \xrightarrow {f} B\) in \({\mathbb {X}}\) such that the following diagrams commute:

and where composition and identity maps are as in \({\mathbb {X}}\). Note that the two rightmost diagrams above imply that f is a monoid morphism.

Theorem 1

For a Cartesian differential category \({\mathbb {X}}\), its category of differential exponential maps \(\mathsf {DEM}[{\mathbb {X}}]\) is isomorphic to its category of differential exponential rigs \(\mathsf {DES}[{\mathbb {X}}]\) via the inverse functors \(\mathsf {DEM}[{\mathbb {X}}] \xrightarrow {{\mathsf {E}}} \mathsf {DES}[{\mathbb {X}}]\) and \(\mathsf {DES}[{\mathbb {X}}] \xrightarrow {{\mathsf {E}}^{-1}} \mathsf {DEM}[{\mathbb {X}}]\) defined respectively as follows:

Proof

We first need to show that \({\mathsf {E}}\) and \({\mathsf {E}}^{-1}\) are well-defined functors. By Proposition 3, \({\mathsf {E}}^{-1}\) is well-defined on objects, while \({\mathsf {E}}\) is well-defined on objects by Proposition 4. If f is a map in \(\mathsf {DES}[{\mathbb {X}}]\) then by definition it is also a map in \(\mathsf {DEM}[{\mathbb {X}}]\), so \({\mathsf {E}}^{-1}\) is well-defined on maps. Furthermore, \({\mathsf {E}}^{-1}\) clearly preserves composition and identity, and therefore \({\mathsf {E}}^{-1}\) is a well-defined functor. On the other hand, if \((A,e) \xrightarrow {f} (B,e^\prime )\) is a map in \(\mathsf {DEM}[{\mathbb {X}}]\), we must show that f also satisfies the three identities (15). By definition, one already has that \(ef= fe^\prime \), and so it remains to show that f is also a monoid morphism. Recall that since f is linear, f is also additive (Lemma 1). Now we first show that \(u_e f= u_{e^\prime }\):

Next we show that \(\odot _e f = (f \times f) \odot _{e^\prime }\):

Therefore f is a map in \(\mathsf {DES}[{\mathbb {X}}]\), so \({\mathsf {E}}\) is well-defined on maps. Clearly \({\mathsf {E}}\) preserves composition and identity, and therefore \({\mathsf {E}}\) is also a well-defined functor.

Next we show that \({\mathsf {E}}\) and \({\mathsf {E}}^{-1}\) are inverses of each other. Clearly we have both that \({\mathsf {E}}^{-1}{\mathsf {E}}(A,e) = (A,e)\) and \({\mathsf {E}}^{-1}{\mathsf {E}}(f) = f\). For the other direction, clearly \({\mathsf {E}}{\mathsf {E}}^{-1}(f) = f\) and so it remains to show that we also have that \({\mathsf {E}}{\mathsf {E}}^{-1}(A, \odot , u, e) = (A, \odot , u, e)\), that is, we must show that \(\odot = \odot _e\) and \(u_e = u\). Starting with the unit:

Next for the multiplication, we first observe that:

So we have that:

Using the above identity, we can easily show that \(\odot _e = \odot \):

So \((A, \odot , u, e)= (A, \odot _e, u_e, e)\). Therefore we conclude that \({\mathsf {E}}\) and \({\mathsf {E}}^{-1}\) are inverse functors and that \(\mathsf {DEM}[{\mathbb {X}}]\) is isomorphic to \(\mathsf {DES}[{\mathbb {X}}]\). \(\square \)

We conclude this section by observing that, as an immediate consequence of Theorem 1 and Lemma 8, the category of differential exponential rigs is a Cartesian tangent category, and that it is isomorphic as a Cartesian tangent category to the category of differential exponential maps.

Proposition 5

For a Cartesian differential category \({\mathbb {X}}\), \(\mathsf {DES}[{\mathbb {X}}]\) has finite products where the terminal object is \((\top , 0, 1_\top , 1_\top )\), and where the product of \((A, \odot , u, e)\) and \((B, \odot ^\prime , u^\prime , e^\prime )\) is \((A \times B, c (\odot \times \odot ^\prime ), \langle u, u^\prime \rangle , e \times e^\prime )\) with the obvious projection maps. \(\mathsf {DES}[{\mathbb {X}}]\) is also a Cartesian tangent category where the tangent functor \({\mathsf {T}}: \mathsf {DES}[{\mathbb {X}}] \rightarrow \mathsf {DES}[{\mathbb {X}}]\) is defined as follows:

and where the remaining tangent structure is the same as for \({\mathbb {X}}\) (which can be found in [12, Proposition 4.7]). Furthermore, both \(\mathsf {DEM}[{\mathbb {X}}] \xrightarrow {{\mathsf {E}}} \mathsf {DES}[{\mathbb {X}}]\) and \(\mathsf {DES}[{\mathbb {X}}] \xrightarrow {{\mathsf {E}}^{-1}} \mathsf {DEM}[{\mathbb {X}}]\) preserve the Cartesian tangent structure strictly.

5 Solutions to Dynamical Systems

As introduced in [9, Section 5], ordinary differential equations in a Cartesian differential category are described as dynamical systems, while solutions of these differential equations are described as morphisms between these dynamical systems. In the classical case, the exponential function \(e^x\) can be defined as the unique solution to the initial value problem \(f^\prime (x) = f(x)\) with \(f(0)=1\). In this section, we explain how differential exponential maps provide solutions to certain (parametrized) dynamical systems and, conversely, how one can obtain a differential exponential map if one assumes that solutions are unique. See [11, Section 5] for more applications of differential exponential maps with regard to solving differential equations.

We note that in [9, 11], dynamical systems were defined for tangent categories and thus involve the tangent functor. Here we present the resulting definition specific to Cartesian differential categories, where dynamical systems can be described in terms of the differential combinator.

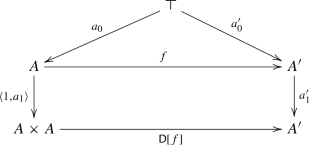

Definition 7

In a Cartesian differential category,

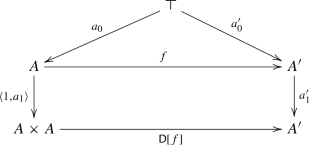

- (i): A dynamical system [9, Definition 5.15] is a triple \((A, a_0, a_1)\) consisting of an object A, a point \(\top \xrightarrow {a_0} A\), and an endomorphism \(A \xrightarrow {a_1} A\);

- (ii): A morphism of dynamical systems \((A, a_0, a_1) \xrightarrow {f} (A^\prime , a^\prime _0, a^\prime _1)\) is a map \(A \xrightarrow {f} A^\prime \) such that the following diagram commutes:

(17)

- (iii): If \((A, a_0, a_1) \xrightarrow {f} (A^\prime , a^\prime _0, a^\prime _1)\) is a morphism of dynamical systems, we say that f is an \((A, a_0, a_1)\)-solution of \((A^\prime , a^\prime _0, a^\prime _1)\).

Example 8

A dynamical system in \(\mathsf {SMOOTH}\) can be seen as a triple \(({\mathbb {R}}^n, \mathbf {a}, F)\) where \({\mathbf {a} \in {\mathbb {R}}^n}\) is a point and \({\mathbb {R}}^n \xrightarrow {F} {\mathbb {R}}^n\) is a smooth function. If \(({\mathbb {R}}^n, \mathbf {a}, F)\) and \(({\mathbb {R}}^m, \mathbf {b}, G)\) are dynamical systems, then a \(({\mathbb {R}}^n, \mathbf {a}, F)\)-solution of \(({\mathbb {R}}^m, \mathbf {b}, G)\) is a smooth function \({\mathbb {R}}^n \xrightarrow {H} {\mathbb {R}}^m\) such that:

which amounts to saying that H is a solution to a certain (large) system of differential equations. For a more explicit example, let \({\mathbb {R}} \xrightarrow {{\overline{c}}} {\mathbb {R}}\) be a non-zero constant function \({\overline{c}}(x) = c\), \(c\ne 0\), and define the smooth function \({\mathbb {R}} \xrightarrow {g} {\mathbb {R}}\) as \(g(x) = -rx\) for some \(r \in {\mathbb {R}}\). Then a \(({\mathbb {R}}, 0, {\overline{c}})\)-solution of \(({\mathbb {R}}, a, g)\) is a smooth function \({\mathbb {R}} \xrightarrow {f} {\mathbb {R}}\) such that:

which is equivalent to saying that f is a solution to the following linear differential equation:

where \(\lambda = \frac{r}{c}\). See [9, 11] for more details and intuition on dynamical systems.
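As a numerical sanity check of this explicit example, the following Python sketch assumes that the two solution conditions read \(f(0) = a\) and \({\mathsf {D}}[f](x, c) = g(f(x))\), where \({\mathsf {D}}[f](x,y) = f^\prime (x) y\), and tests the candidate \(f(x) = a e^{-\lambda x}\). The sample values of a, r, and c, the helper names, and the finite-difference step are hypothetical choices made for illustration only.

```python
import math

def directional_derivative(f, x, v, h=1e-5):
    """Central-difference estimate of D[f](x, v) = f'(x) * v for f: R -> R."""
    return (f(x + h * v) - f(x - h * v)) / (2 * h)

# Hypothetical sample values, chosen only for illustration.
a, r, c = 2.0, 3.0, 1.5
lam = r / c

def g(x):
    # The endomorphism g(x) = -r x of the target dynamical system (R, a, g).
    return -r * x

def f(x):
    # Candidate solution a * exp(-lambda * x).
    return a * math.exp(-lam * x)

def max_residual(points):
    """Largest |D[f](x, c) - g(f(x))| over the sample points."""
    return max(abs(directional_derivative(f, x, c) - g(f(x))) for x in points)

# Assumed initial condition f(0) = a.
initial_value_error = abs(f(0.0) - a)
# Assumed flow condition D[f](x, c) = g(f(x)).
residual = max_residual([-1.0, -0.5, 0.0, 0.5, 1.0])
```

Both `initial_value_error` and `residual` should be no larger than the finite-difference error, which is consistent with (but of course does not prove) \(f\) being a \(({\mathbb {R}}, 0, {\overline{c}})\)-solution of \(({\mathbb {R}}, a, g)\).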

For any differential rig \((A, \odot , u)\), there is a canonical dynamical system \((A, 0, {\overline{u}})\) where \(A \xrightarrow {{\overline{u}}} A\) is defined as follows:

and we can ask that \((A, 0, {\overline{u}})\)-solutions be compatible with the multiplication.

Definition 8

Let \((A, \odot , u)\) be a differential rig and \((A, a_0, a_1)\) a dynamical system. An \((A, \odot , u)\)-solution of \((A, a_0, a_1)\) is a map \(A \xrightarrow {f} A\) such that the following diagrams commute:

Lemma 9

Let \((A, \odot , u)\) be a differential rig, \((A, a_0, a_1)\) a dynamical system, \({A \xrightarrow {f} A}\) an endomorphism. Then f is an \((A, \odot , u)\)-solution of \((A, a_0, a_1)\) if and only if f is an \((A, 0, {\overline{u}})\)-solution of \((A, a_0, a_1)\) such that the following diagram commutes:

Proof

Suppose that f is an \((A, \odot , u)\)-solution of \((A, a_0, a_1)\). We first show that f is also an \((A, 0, {\overline{u}})\)-solution of \((A, a_0, a_1)\). The top triangle of (17) is precisely the left diagram of (19). So it remains to show that f also satisfies the bottom square of (17):

So f is an \((A, 0, {\overline{u}})\)-solution of \((A, a_0, a_1)\). As an immediate consequence, it follows that:

So f also satisfies (20). Conversely, suppose that f is an \((A, 0, {\overline{u}})\)-solution of \((A, a_0, a_1)\) which satisfies (20). We must show that f satisfies the two diagrams of (19). As before, the left diagram of (19) is the same as the top triangle of (17). So it remains to show that f also satisfies the right diagram of (19):

So we conclude that f is an \((A, \odot , u)\)-solution of \((A, a_0, a_1)\). \(\square \)

Example 9

In \(\mathsf {SMOOTH}\), consider the differential rig induced from the exponential function \(e^x\) as defined in Example 6.i, that is, \({\mathbb {R}}\) with the standard multiplication of real numbers. Its canonical dynamical system as defined above is \(({\mathbb {R}}, 0, \overline{u_{e^x}})\) since \(\overline{u_{e^x}}(x) = 1\). A \(({\mathbb {R}}, \odot _{e^x}, u_{e^x})\)-solution of a dynamical system \(({\mathbb {R}}, a, g)\), where \(a \in {\mathbb {R}}\) and \({\mathbb {R}} \xrightarrow {g} {\mathbb {R}}\), is a smooth function \({\mathbb {R}} \xrightarrow {f} {\mathbb {R}}\) such that:

By Lemma 9, setting \(y = 1\), we see that f is also a solution to the differential equation \({f^\prime (x) = g(f(x))}\) with initial value \(f(0) = a\), or in other words, f is a \(({\mathbb {R}}, 0, \overline{u_{e^x}})\)-solution of \(({\mathbb {R}}, a, g)\) such that f satisfies (20):

In fact, note that every arbitrary smooth function \({\mathbb {R}} \xrightarrow {f} {\mathbb {R}}\) satisfies (20) since:

Then in this case, every \(({\mathbb {R}}, 0, \overline{u_{e^x}})\)-solution of \(({\mathbb {R}}, a, g)\) is also a \(({\mathbb {R}}, \odot _{e^x}, u_{e^x})\)-solution of \(({\mathbb {R}}, a, g)\).
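The observation that every smooth function \({\mathbb {R}} \xrightarrow {f} {\mathbb {R}}\) satisfies (20) can be probed numerically. The sketch below assumes that, in \(\mathsf {SMOOTH}\) with the standard multiplication, (20) amounts to \({\mathsf {D}}[f](x,y) = {\mathsf {D}}[f](x,1) \cdot y\). The test functions, sample points, and helper names are hypothetical.

```python
import math

def directional_derivative(f, x, v, h=1e-5):
    """Central-difference estimate of D[f](x, v) = f'(x) * v for f: R -> R."""
    return (f(x + h * v) - f(x - h * v)) / (2 * h)

def linearity_gap(f, x, y):
    """|D[f](x, y) - D[f](x, 1) * y|, the assumed reading of condition (20)."""
    lhs = directional_derivative(f, x, y)
    rhs = directional_derivative(f, x, 1.0) * y
    return abs(lhs - rhs)

def cubic(x):
    # Hypothetical polynomial test function.
    return x ** 3 - 2.0 * x

samples = [(x, y) for x in (-1.0, 0.0, 0.7) for y in (-2.0, 0.5, 3.0)]
worst_gap_sin = max(linearity_gap(math.sin, x, y) for x, y in samples)
worst_gap_cubic = max(linearity_gap(cubic, x, y) for x, y in samples)
```

The gaps are small for both test functions because \({\mathsf {D}}[f](x,y) = f^\prime (x) y\) is linear in its second argument, which is the property the example relies on.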

For a differential exponential rig, its differential exponential map is the solution to the dynamical system which generalizes the initial value problem \(f^\prime (x) = f(x)\) with \(f(0) = 1\).

Proposition 6

Let \((A, \odot , u, e)\) be a differential exponential rig. Then the differential exponential map e is an \((A, \odot , u)\)-solution of the dynamical system (A, u, 1), and therefore e is also an \((A, 0, {\overline{u}})\)-solution of (A, u, 1).

Proof

The left diagram of (19) is precisely the middle diagram of (11), which states that \(0e=u\), while the right diagram of (19) is precisely the left diagram of (11), which states that \({\mathsf {D}}[e] = (e \times 1) \odot \). Therefore, e is an \((A, \odot , u)\)-solution of (A, u, 1). By Lemma 9, it follows that e is also an \((A, 0, {\overline{u}})\)-solution of (A, u, 1). \(\square \)
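To illustrate the two identities used in this proof on a case where the multiplication is not the usual product of real numbers, the following Python sketch checks them numerically for the dual numbers exponential in \(\mathsf {SMOOTH}\). It assumes the standard formulas on \({\mathbb {R}}^2\): multiplication \((a,b) \odot (c,d) = (ac, ad + bc)\), unit \((1,0)\), and exponential \(e(x,y) = (e^x, e^x y)\). These formulas, the sample points, and the helper names are assumptions made for illustration and are not taken from this section.

```python
import math

def dual_exp(p):
    """Assumed dual numbers exponential: (x, y) |-> (e^x, e^x * y)."""
    x, y = p
    return (math.exp(x), math.exp(x) * y)

def dual_mul(p, q):
    """Assumed dual numbers multiplication: (a, b)(c, d) = (ac, ad + bc)."""
    a, b = p
    c, d = q
    return (a * c, a * d + b * c)

def directional_derivative(F, p, v, h=1e-5):
    """Central-difference estimate of D[F](p, v) for F: R^2 -> R^2."""
    plus = F(tuple(pi + h * vi for pi, vi in zip(p, v)))
    minus = F(tuple(pi - h * vi for pi, vi in zip(p, v)))
    return tuple((a - b) / (2 * h) for a, b in zip(plus, minus))

unit = (1.0, 0.0)

# Identity 0e = u: the exponential sends zero to the unit.
value_at_zero = dual_exp((0.0, 0.0))

# Identity D[e] = (e x 1) then multiply: D[e](p, v) = e(p) * v in the rig.
p, v = (0.3, -1.2), (0.8, 2.0)  # hypothetical sample point and direction
lhs = directional_derivative(dual_exp, p, v)
rhs = dual_mul(dual_exp(p), v)
gap = max(abs(a - b) for a, b in zip(lhs, rhs))
```

Here `value_at_zero` should equal `unit`, and `gap` should be of the order of the finite-difference error.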

Example 10

In \(\mathsf {SMOOTH}\), \({\mathbb {R}} \xrightarrow {e^x} {\mathbb {R}}\) is an \(({\mathbb {R}}, \odot _{e^x}, u_{e^x})\)-solution of \(({\mathbb {R}}, u, 1)\). In other words, \(e^x\) is a solution to the following initial value problem:

In fact, \(e^x\) is the unique \(({\mathbb {R}}, \odot _{e^x}, u_{e^x})\)-solution of \(({\mathbb {R}}, u, 1)\). By Proposition 6, setting \(y=1\), \(e^x\) is also a \(({\mathbb {R}}, 0, \overline{u_{e^x}})\)-solution of \(({\mathbb {R}}, u, 1)\), that is, \(e^x\) is the unique solution to the initial value problem \(f^\prime (x) = f(x)\) with \(f(0) = 1\).
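The classical initial value problem \(f^\prime (x) = f(x)\) with \(f(0) = 1\) can also be integrated numerically and compared against \(e^x\). The following Python sketch uses the classical fourth-order Runge-Kutta scheme; the scheme, the step count, and the function names are hypothetical choices for illustration and play no role in the categorical argument.

```python
import math

def rk4_solve(rhs, y0, t_end, steps):
    """Integrate y' = rhs(y), y(0) = y0 up to t_end with classical RK4."""
    h = t_end / steps
    y = y0
    for _ in range(steps):
        k1 = rhs(y)
        k2 = rhs(y + 0.5 * h * k1)
        k3 = rhs(y + 0.5 * h * k2)
        k4 = rhs(y + h * k3)
        y += (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return y

# Dynamical system (R, 1, identity): f' = f with f(0) = 1, evaluated at x = 1.
approx_e = rk4_solve(lambda y: y, 1.0, 1.0, 1000)
error_at_one = abs(approx_e - math.exp(1.0))
```

The numerical solution at \(x = 1\) agrees with \(e\) up to the discretization error, in line with \(e^x\) being the unique solution in this setting.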

Note, however, that Proposition 6 does not say that a differential exponential map is a unique solution. Indeed, in an arbitrary Cartesian differential category, solutions of dynamical systems need not be unique, and so it is possible that for a differential rig \((A, \odot , u)\), there are multiple \((A, 0, {\overline{u}})\)-solutions of (A, u, 1). Furthermore, as discussed in Sect. 4, an \((A, 0, {\overline{u}})\)-solution of (A, u, 1) is not necessarily a differential exponential map since \(\oplus e = (e \times e) \odot \) does not necessarily hold. That said, as we will see in Proposition 8, under the extra assumption that solutions are unique, an \((A, 0, {\overline{u}})\)-solution of (A, u, 1) is a differential exponential map. To prove this, we first discuss the notion of solutions of parametrized dynamical systems.

Definition 9

In a Cartesian differential category,

- (i): A parametrized dynamical system (over X or in context X) is a triple \((B, b_0, b_1)\) consisting of an object B, a map \(X \xrightarrow {b_0} B\), and an endomorphism \(B \xrightarrow {b_1} B\);

- (ii): If \((A, a_0, a_1)\) is a dynamical system and \((B, b_0, b_1)\) a parametrized dynamical system, then a parametrized \((A, a_0, a_1)\)-solution of \((B, b_0, b_1)\) is a map \(A \times X \xrightarrow {f} B\) such that the following diagram commutes:

(21)

- (iii): For a dynamical system \((A, a_0, a_1)\), an endomorphism \(B \xrightarrow {b_1} B\) is said to be \((A, a_0, a_1)\)-complete if for every map \(X \xrightarrow {b_0} B\), there is a parametrized \((A, a_0, a_1)\)-solution of the parametrized dynamical system \((B, b_0, b_1)\).

Note that dynamical systems can be described as parametrized dynamical systems over the terminal object \(\top \) and in this case (17) is the same as (21), modulo the isomorphism \(A \cong A \times \top \).

Example 11

In \(\mathsf {SMOOTH}\), a parametrized dynamical system is simply a triple \(({\mathbb {R}}^m, K, G)\) with smooth functions \({\mathbb {R}}^k \xrightarrow {K} {\mathbb {R}}^m\) and \({\mathbb {R}}^m \xrightarrow {G} {\mathbb {R}}^m\). If \(({\mathbb {R}}^n, \mathbf {a}, H)\) is a dynamical system, then a parametrized \(({\mathbb {R}}^n, \mathbf {a}, H)\)-solution of \(({\mathbb {R}}^m, K, G)\) is a smooth function \({\mathbb {R}}^n \times {\mathbb {R}}^k \xrightarrow {F} {\mathbb {R}}^m\) such that:

As a particular example, let \(m=1\) with smooth functions \({\mathbb {R}}^k \xrightarrow {h} {\mathbb {R}}\) and \({\mathbb {R}} \xrightarrow {g} {\mathbb {R}}\). A parametrized \(({\mathbb {R}}, 0, \overline{u_{e^x}})\)-solution of \(({\mathbb {R}}, h, g)\) is a smooth function \({\mathbb {R}} \times {\mathbb {R}}^k \xrightarrow {f} {\mathbb {R}}\) such that:
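One reading of these conditions, taken here as an assumption, is \(f(0, \mathbf {z}) = h(\mathbf {z})\) and \(\frac{\partial f}{\partial x}(x, \mathbf {z}) = g(f(x, \mathbf {z}))\), since the constant map \(\overline{u_{e^x}}\) picks out the direction \((1, \mathbf {0})\) in \({\mathbb {R}} \times {\mathbb {R}}^k\). The Python sketch below checks this numerically for \(k = 2\), \(g\) the identity, and the candidate \(f(x, \mathbf {z}) = h(\mathbf {z}) e^x\). The choice of \(h\), the sample points, and the helper names are hypothetical.

```python
import math

def h_init(z):
    # Hypothetical initial-condition map h: R^2 -> R.
    z1, z2 = z
    return z1 + z2 * z2

def g(y):
    # Hypothetical endomorphism of the target system: the identity on R.
    return y

def f(x, z):
    # Candidate parametrized solution f(x, z) = h(z) * e^x.
    return h_init(z) * math.exp(x)

def partial_x(func, x, z, step=1e-5):
    """Central-difference estimate of the partial derivative in x."""
    return (func(x + step, z) - func(x - step, z)) / (2 * step)

params = [(0.5, -1.0), (2.0, 0.3)]
points = [-0.5, 0.0, 1.0]

# Assumed initial condition f(0, z) = h(z).
initial_gap = max(abs(f(0.0, z) - h_init(z)) for z in params)
# Assumed flow condition: d/dx f(x, z) = g(f(x, z)).
flow_gap = max(abs(partial_x(f, x, z) - g(f(x, z))) for x in points for z in params)
```

For each fixed parameter \(\mathbf {z}\), the function \(x \mapsto f(x, \mathbf {z})\) is then a solution of the unparametrized system with initial value \(h(\mathbf {z})\), which is the intuition behind working in context.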

As before, in the case of a differential rig, one can also ask for parametrized solutions to be compatible with the rig multiplication.

Definition 10

Let \((A, \odot , u)\) be a differential rig and \((A, a_0, a_1)\) be a parametrized dynamical system over X.

- (i): A parametrized \((A, \odot , u)\)-solution of \((A, a_0, a_1)\) is a map \(A \times X \xrightarrow {f} A\) such that the following diagrams commute:

(22)

- (ii): An endomorphism \(A \xrightarrow {a_1} A\) is \((A, \odot , u)\)-complete if for every map \(X \xrightarrow {a_0} A\), there is a parametrized \((A, \odot , u)\)-solution of the parametrized dynamical system \((A, a_0, a_1)\).

Lemma 10

Let \((A, \odot , u)\) be a differential rig.

- (i): Let \((A, a_0, a_1)\) be a parametrized dynamical system over X. Then a map \({A \times X \xrightarrow {f} A}\) is a parametrized \((A, \odot , u)\)-solution of \((A, a_0, a_1)\) if and only if f is a parametrized \((A, 0, {\overline{u}})\)-solution of \((A, a_0, a_1)\) such that the following diagram commutes:

(23)

- (ii): If an endomorphism \(A \xrightarrow {a_1} A\) is \((A, \odot , u)\)-complete then \(a_1\) is \((A, 0, {\overline{u}})\)-complete.

Proof

Note that (i) is a generalization of Lemma 9 and is proved by similar calculations. For (ii), suppose that an endomorphism \(A \xrightarrow {a_1} A\) is \((A, \odot , u)\)-complete. Then for every map \(X \xrightarrow {a_0} A\), there is a parametrized \((A, \odot , u)\)-solution of \((A, a_0, a_1)\), which by (i) is also a parametrized \((A, 0, {\overline{u}})\)-solution of \((A, a_0, a_1)\). So we conclude that \(a_1\) is also \((A, 0, {\overline{u}})\)-complete. \(\square \)

Example 12