Abstract

Approximately sixty years ago two seminal findings, the cutting plane and the subgradient methods, radically changed the landscape of mathematical programming. They provided, for the first time, the practical chance to optimize real functions of several variables characterized by kinks, namely by discontinuities in their derivatives. Convex functions, for which a superb body of theoretical research was growing in parallel, naturally became the main application field of choice. The aim of the paper is to give a concise survey of the key ideas underlying successive development of the area, which took the name of numerical nonsmooth optimization. The focus will be, in particular, on the research mainstreams generated under the impulse of the two initial discoveries.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Nonsmooth optimization (NSO), sometimes referred to as Nondifferentiable optimization (NDO), deals with problems where the objective function exhibits kinks. Even though smoothness, that is the continuity of the derivatives, is present in most of the functions describing real world decision making processes, an increasing number of modern and sophisticated applications of optimization are inherently nonsmooth. The most common source of nonsmoothness is in the choice of the worst-case analysis as a modeling paradigm. It results in choosing objective functions of the max or, alternatively, of the min type, thus in stating minmax or maxmin problems, respectively. Nonsmoothness typically occurs whenever solution of the inner maximization (or minimization) is not unique. Although such phenomenon is apparently rare, nevertheless its occurrence might cause failure of the traditional differentiable optimization methods when applied to nonsmooth problems. Among other areas where nonsmooth optimization problems arise we mention here:

-

Minmaxmin models, coming from worst–case–oriented formulations of problems where two types of decision variables are considered, “here and now” and “wait and see”, respectively, with in the middle the realization of a scenario taken from a set of possible ones.

-

Right-hand-side decomposition of large scale problems (e.g., multicommodity flow optimization) where the decomposition into subproblems is controlled by a master problem which assigns resources to each of them. In such framework, the objective function of the master is typically nonsmooth.

-

Lagrangian relaxation of Integer or Mixed-Integer programs, where the Lagrangian dual problem, tackled both for achieving good quality bounds and for constructing efficient Lagrangian heuristics, consists in the optimization of a piecewise affine (hence nonsmooth) function of the multipliers.

-

Variational inequalities and nonlinear complementarity problems, which benefit from availability of effective methods to deal with systems of nonsmooth equations.

-

Bilevel problems, based on the existence, as in Stackelberg’s games, of a hierarchy of two autonomous decision makers. The related optimization problems are non-differentiable.

Although the history of nonsmooth optimization dates back to Chebyshëv and his contribution to function approximation (Chebyshëv 1961), it was in the sixties of last century when mathematicians, mainly from former Soviet Union, started to tackle the design of algorithms able to numerically locate the minima of functions of several variables, under no differentiability assumption. The subgradient was the fundamental mathematical tool adopted in such context. We recall here the contributions by Shor (1985), Demyanov and Malozemov (1974), Polyak (1987), and Ermoliev (1966).

Based on quite a different philosophy, as it will be apparent in the following, a general method able to cope with nondifferentiability was devised, independently, by Kelley (1960) and by Cheney and Goldstein (1959). Instead of trusting on a unique subgradient, the approach consisted in the simultaneous use of the information provided by many subgradients. The parallel development of convex analysis, thanks to contributions by Fenchel, Moreau and Rockafellar, was providing, at that time, the necessary theoretical support.

A real breakthrough took place approximately in the mid seventies, when the idea of an iterative process based on information accumulation did materialize in the methods independently proposed by Lemaréchal (1974) and Wolfe (1975). From those seminal papers an incredibly large number of variants flourished, under the common label of bundle type methods. This family of methods, originally conceived for treatment of the convex case, was appropriately enriched by features able to cope with non convexity.

In more recent years, motivated by the interest in solving problems where exact calculation of the objective function is either impossible or computationally costly, several methods based on its approximate computation were devised. At this time the derivative free philosophy is successfully stepping in the nonsmooth optimization world.

Establishing a taxonomy of methods in such a rich area is a difficult and somehow arbitrary task. We will adopt the following, imperfect scheme, defining a classification in terms of methods based on single-point information and those grounded on multi-point models. All subgradient-related methods, ranging from classic fixed step one to recent accelerated versions, belong to the first group, while in the second group we will comprise the cutting-plane related approaches, including bundle methods and their variants. We will see, however, that even in the multi-point approaches, to paraphrase Orwell in his Animal farm, all points are equal, but some points are more equal than others.

The methods grounded on inexact function and/or subgradient evaluation will be also cast in the above framework. Some other methods, that can hardly fit the proposed scheme, will be treated separately.

We confine ourselves to the treatment of convex unconstrained optimization problems. When appropriate, we will also focus on the extension of some algorithms to nonconvex Lipschitz functions, or to special classes of nonconvex functions, such as the Difference-of-Convex (DC) ones.

The paper is organized as follows. After stating the main NSO problem, the relevant notation, and some basic theoretical background in Sect. 2, we introduce the NSO mainstreams in Sect. 3. In Sects. 4 and 5 we discuss, respectively, about the methods based on single-point and multi-point models. Some classes of algorithms hard to classify into the two mainstreams are surveyed in Sect. 6. Motivations and issues related to the use of inexact calculations are discussed in Sect. 7, while in Sect. 8 some possible extensions of convex methods to the nonconvex case are reported. We give only the strictly necessary references in the main body of this survey, postponing to the final Sect. 9 more detailed bibliographic notes and complementary information, along with few relevant reading suggestions.

The paper is a slightly revised version of Gaudioso et al. (2020c).

2 Preliminaries

We consider the following unconstrained minimization problem

where the real-valued function \(f:{\mathbb {R}}^n \rightarrow {\mathbb {R}}\) is assumed to be convex and not necessarily differentiable (nonsmooth), unless otherwise stated. We assume that f is finite over \({\mathbb {R}}^n\), hence it is proper. Besides, in order to simplify the treatment, we assume that f has finite minimum \(f^*\) which is attained at a nonempty convex compact set \(M^* \subset {\mathbb {R}}^n\). An unconstrained minimizer of f, namely any point in \(M^*\), will be denoted by \(\mathbf {x}^*\). For a given \(\epsilon >0\), an \(\epsilon \)-approximate solution of (1) is any point \(\mathbf {x} \in {\mathbb {R}}^n\) such that \(f(\mathbf {x}) < f^* + \epsilon \). Throughout the paper, the symbol \(\Vert \cdot \Vert \) will indicate the \(\ell _2\) norm, while for any given two vectors \(\mathbf {a},\mathbf {b}\), their inner product will be denoted by \(\mathbf {a}^\top \mathbf {b}\).

Next, the fundamental tools of nonsmooth optimization are briefly summarized. Further definitions and relevant findings will be recalled at later stages as they will be necessary.

Given any point \(\mathbf {x} \in {\mathbb {R}}^n\), a subgradient of f at \(\mathbf {x}\) is any vector \(\mathbf {g} \in {\mathbb {R}}^n\) satisfying the following (subgradient-)inequality

The subdifferential of f at \(\mathbf {x} \in {\mathbb {R}}^n\), denoted by \(\partial f(\mathbf {x})\), is the set of all the subgradients of f at \(\mathbf {x}\), i.e.,

At any point \(\mathbf {x}\) where f is differentiable, the subdifferential reduces to a singleton, its unique element being the ordinary gradient \(\nabla f(\mathbf {x})\).

Previous definitions are next generalized for any nonnegative scalar \(\epsilon \). An \(\epsilon \)-subgradient of f at \(\mathbf {x}\), is any vector \(\mathbf {g} \in {\mathbb {R}}^n\) fulfilling

and the \(\epsilon \)-subdifferential of f at \(\mathbf {x}\), denoted by \(\partial f_\epsilon (\mathbf {x})\), is the set of all the \(\epsilon \)-subgradients of f at \(\mathbf {x}\), i.e.,

In case \(\epsilon = 0\) it obviously holds that \(\partial _0 f(\mathbf {x})=\partial f(\mathbf {x})\).

Since f is convex and finite over \({\mathbb {R}}^n\), the subdifferential \( \partial f(\cdot )\) is a convex, bounded and closed set; hence, for the directional derivative \(f'(\mathbf {x},\mathbf {d})\) at any \(\mathbf {x}\), along the direction \(\mathbf {d} \in {\mathbb {R}}^n\), it holds that

In particular, at a point \(\mathbf {x}\) where f is differentiable, the formula of classic calculus

easily follows from (6) and recalling that \(\partial f(\mathbf {x})= \{\nabla f(\mathbf {x})\}\).

Any direction \(\mathbf {d} \in {\mathbb {R}}^n\) is defined as a descent direction at \(\mathbf {x}\) if there exists a positive threshold \(\bar{t}\) such that

Furthermore, we remark that for convex functions the following equivalence holds true

At a later stage we will sometimes relax the convexity assumption on f, only requiring that f be locally Lipschitz, i.e., Lipschitz on every bounded set. Under such assumption, f is still differentiable almost everywhere, and it is defined at each point \(\mathbf {x}\) the generalized gradient (Clarke 1983) (or Clarke’s gradient, or subdifferential)

\(\varOmega _f\) being the set (of zero measure) where f is not differentiable. Any point \(\mathbf {x}\) with \(0 \in \partial _C f(\mathbf {x}) \) will be referred to as a Clarke stationary point.

In the rest of the article, it will be referred to as an oracle any black-box algorithm capable to provide, given any point \(\mathbf {x}\), the objective function value \(f(\mathbf {x})\) and, in addition, a subgradient in \(\partial f(\mathbf {x})\) or in \(\partial _C f(\mathbf {x})\), depending on whether f is convex or just locally Lipschitz.

3 Nonsmooth optimization mainstreams

In order to understand the main difference between smooth (i.e., differentiable) and nonsmooth functions, in an algorithmic perspective, we focus on comparing equations (6) and (7). On one hand, for smooth functions, at any point \(\mathbf {x}\) the gradient \(\nabla f(\mathbf {x})\) provides complete information about the directional derivative, along every possible direction, through the formula \(f'(\mathbf {x},\mathbf {d})=\nabla f(\mathbf {x})^\top \mathbf {d}\), see (7). On the other hand, for nonsmooth functions, at a point \(\mathbf {x}\) where f is not differentiable, the directional derivative, along any given direction, can only be calculated via a maximization process over the entire subdifferential, see (6), thus making any single subgradient unable to provide complete information about \(f'(\mathbf {x},\cdot )\). From an algorithmic viewpoint, such a difference has relevant implications that make not particularly appealing the idea of extending classic descent methods to NSO (although some elegant results for classes of nonsmooth nonconvex functions can be found in Demyanov and Rubinov (1995)). In the following remark, we highlight why most of the available NSO methods do not follow a steepest descent philosophy.

Remark 1

Let \(\mathbf {x} \in {\mathbb {R}}^n\) be given, and assume there exists a descent direction at \(\mathbf {x}\). The steepest descent direction \(\mathbf {d}^*\) at \(\mathbf {x}\) is the one where the directional derivative is minimized over the unit ball, i.e.,

We observe that \(\mathbf {d}^*\) is well defined both in the smooth and in the nonsmooth case. As for the former, it simply holds that \(\mathbf {d}^*= - \nabla f(\mathbf {x})/\Vert \nabla f(\mathbf {x})\Vert \). As for the latter, it holds that \(\mathbf {d}^*\) is the solution of the following minmax optimization problem

By applying the minmax-maxmin theorem it easily follows that

Hence, in the nonsmooth case, the steepest descent direction can only be determined, if the complete knowledge of the subdifferential is available, by finding the minimum norm point in a compact convex set.

As already mentioned, our review of the main classes of iterative algorithms for NSO is based on the distinction between single-point and multi-point models. In the rest of the article, we will denote by \(\mathbf {x}_k\) the estimate of a minimizer of f at the (current) iteration k. Methods based on single-point models look for the new iterate \(\mathbf {x}_{k+1}\) by only exploiting the available information on the differential properties of the function at \(\mathbf {x}_k\). Such information consist either of a single subgradient or of a larger subset of the subdifferential, possibly coinciding with the entire subdifferential. Sometimes, an appropriate metric is also associated to \(\mathbf {x}_k\). The aim is to define a local approximation of f around \(\mathbf {x}_k\) to suggest a move towards \(\mathbf {x}_{k+1}\), possibly obtained via a univariate minimization (line search).

Methods based on multi-point models exploit similar local information about \(\mathbf {x}_k\), which are enriched by data coming from several other points (typically the iterates \(\mathbf {x}_1, \ldots , \mathbf {x}_{k-1}\)), no matter how far from \(\mathbf {x}_k\) they are. Here the aim is no longer to obtain a local approximation of f, but to construct an (outer) approximation of the entire level set of f at \(\mathbf {x}_k\), that is, of the set

In the next two sections we will survey the two classes of methods. We wish, however, to remark that the intrinsic difficulty in calculating a descent direction for a nonsmooth function, suggests to look for iterative methods that do not require at each iteration decrease of the objective function. In other words, monotonicity is not necessarily a “pole star” for designing NSO algorithms (note, in passing, that also in smooth optimization the monotonicity of objective function values is not a must (Barzilai and Borwein 1988; Grippo et al. 1991)).

4 Methods based on single-point models

The focus of this section in on the celebrated subgradient method, introduced by N. Z. Shor in the early 60s of the last century, see (Shor 1962). In particular, we aim to review the convergence properties of the classic versions of the method, next giving some hints on recent improvements. In its simplest configuration the subgradient method works according to the following iteration scheme

where \(\mathbf {g}_k \in \partial f(\mathbf {x}_k)\) and \(t > 0\) is a constant stepsize. In order to develop a convergence theory it is crucial to introduce the concept of minimum approaching direction at any point \(\mathbf {x}\), as a direction along which there exist points which are closer to a minimizer than \(\mathbf {x}\). More specifically, a direction \(\mathbf {d}\) is defined as a minimum approaching direction at \(\mathbf {x}\), if there exists a positive threshold \(\bar{t}\) such that

As previously pointed out, see (6)–(8), taking an anti–subgradient direction \(\mathbf {d}=-\mathbf {g}\), for any \(\mathbf {g} \in \partial f(\mathbf {x})\), thus satisfying the condition \(\mathbf {g}^\top \mathbf {d} < 0\), does not guarantee that \(\mathbf {d}\) is a descent direction at \(\mathbf {x}\). On the other hand, it can be easily proved that such \(\mathbf {d}\) is a minimum approaching direction at \(\mathbf {x}\). In fact, for any \(\mathbf {x} \notin M^*\), the convexity of f implies that

As a consequence, by letting

from inequality (11) it follows that

for every \(t \in (0,\bar{t})\), namely, that \(\mathbf {d}=-\mathbf {g}\) is a minimum approaching direction at \(\mathbf {x}\).

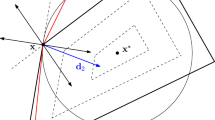

Different types of directions are depicted in Fig. 1, where the contour lines of a convex, piecewise affine function with minimum at \(\mathbf {x}^*\) are represented. Note in fact that, at point \(\mathbf {x}\), direction \(\mathbf {d}_2\) is both a descent and a minimum approaching one, since it points inside both the contour at \(\mathbf {x}\) and the sphere of radius \(\Vert \mathbf {x}-\mathbf {x}^*\Vert \) centered at \(\mathbf {x}^*\). Note also that \(\mathbf {d}_1\) is minimum approaching but not descent, while \(\mathbf {d}_3\) is descent but not minimum approaching.

In the following, taking any subgradient \(\mathbf {g} \in \partial f(\mathbf {x})\), we will indicate by \(\mathbf {d}=-\mathbf {g}\) an anti–subgradient direction, possibly normalized by setting \(\mathbf {d}=- \frac{\mathbf {g}}{\Vert \mathbf {g}\Vert }\).

The property of the anti–subgradient directions of being minimum approaching ones is crucial for ensuring convergence of the constant stepsize method based on the iteration scheme (10), as we show in the following theorem (Shor 1985).

Theorem 1

Let f be convex and assume that \(M^*\), the set of minima of f, is nonempty. Then, for every \(\epsilon >0\) and \(\mathbf {x}^* \in M^*\) there exist a point \(\bar{\mathbf {x}}\) and an index \(\bar{k}\) such that

and

Proof

Let \(U_k = \{\mathbf {x}\mid f(\mathbf {x})=f(\mathbf {x}_k)\}\) and \(L_k = \{\mathbf {x}\mid \mathbf {g}_k^\top (\mathbf {x}-\mathbf {x}_k)=0\}\) be, respectively, the contour line passing through \(\mathbf {x}_k\) and the supporting hyperplane at \(\mathbf {x}_k\) to the level set \(S_k = \{\mathbf {x} \mid f(\mathbf {x})\le f(\mathbf {x}_k)\}\), with normal \(\mathbf {g}_k\).

Consider now, see Fig. 2, \(a_k(\mathbf {x}^*)=\Vert \mathbf {x}^*-\mathbf {x}_P^*\Vert \), the distance of any point \(\mathbf {x}^* \in M\) from its projection \(\mathbf {x}_P^*\) onto \(L_k\), and observe that \(a_k(\mathbf {x}^*) \ge b_k(\mathbf {x}^*)=\Vert \mathbf {x}^*-\mathbf {x}^*_L\Vert \). Note also that \(b_k(\mathbf {x}^*)\) is an upper bound on \(dist(\mathbf {x}^*,U_k)\), the distance of \(\mathbf {x}^*\) from contour line \(U_k\). It is easy to verify that

and, as a consequence, that

Now, observe that from (10) and (13) it follows that

Next, suppose for a contradiction that \(b_k(\mathbf {x}^*) \ge \displaystyle t(1+\epsilon )/2\) for every k. Denoting by \(\mathbf {x}_1\) the starting point of the algorithm, and repeatedly applying the inequality (14), we have that for every k it holds

which contradicts \(\Vert \mathbf {x}_{k+1}-\mathbf {x}^*\Vert ^2 \ge 0\) for all k. \(\square \)

Remark 2

Note that the above theorem does not ensure that the method generates a point arbitrarily close to a minimum. In fact, it only allows to guarantee that a contour line is reached whose distance from any minimizer is arbitrarily small.

The constant stepsize subgradient method is interesting from the historical point of view but its numerical performance is strongly affected by the choice of t. Classic subgradient method (SM in the following), instead, is based on adjustable stepsize and works according to the iterative scheme

where \(\mathbf {g}_k \in \partial f(\mathbf {x}_k)\) and \(t_k > 0\). The following theorem guarantees standard convergence of SM under appropriate conditions on the stepsize \(t_k\) (Shor 1985).

Theorem 2

Let f be convex and assume that \(M^*\) is bounded and nonempty, with \(f^*=f(\mathbf {x}^*)\). If the stepsize sequence \(\{t_k\}\) in (15) satisfies the conditions

then either there exists an index \(k^*\) such that \(\mathbf {x}_{k^*} \in M^*\) or \(\lim _{k\rightarrow \infty }f(\mathbf {x}_k)=f^*\).

Remark 3

A possible choice of \(t_k\) satisfying (16) is \(t_k =\displaystyle c/k\), where c is any positive constant. The choice

known as Polyak stepsize (see Polyak 1987 for an alternative proof of convergence) is particularly popular in the area of application of nonsmooth convex optimization to solution of Integer Linear Programming (ILP) problems via Lagrangian relaxation (Gaudioso 2020). In the fairly common case when \(f^*\) is unknown, it is usually replaced in (17) by any lower bound on the optimal objective function value.

The following proposition provides an evaluation of the convergence speed of the subgradient method and an estimate of the number of iterations, under some simplifying assumptions. Detailed discussions can be found in Goffin (1977), Shor (1985).

Proposition 1

Assume that

-

(i)

f admits a sharp minimum, i.e., there exists \(\mu >0\) such that \(f(\mathbf {x}) \ge f^* + \mu \Vert \mathbf {x}-\mathbf {x}^*\Vert \), for every \(\mathbf {x} \in {\mathbb {R}}^n\), and

-

(ii)

the minimum value \(f^*\) is known, so that the Polyak stepsize (17) can be calculated.

Then, the subgradient method has linear convergence rate \(q=\sqrt{ 1- \frac{\mu ^2}{c^2}}\), where c is any upper bound on the norm of \(\mathbf {g}_k\). Moreover an \(\epsilon \)-approximate solution is achieved in \( O(\frac{1}{\epsilon ^2})\) iterations.

Proof

Note first that convexity of f implies

From (15), by adopting the Polyak stepsize (17) and taking into account (18), it follows that

hence that

The latter inequality implies boundedness of the sequence \(\{\mathbf {x}_k\}\), which in turn implies boundedness of the corresponding sequence of subgradients \(\{\mathbf {g}_k\}\), say \(\Vert \mathbf {g}_k\Vert \le c\), for every k, for some positive constant c. Taking into account assumption i) we rewrite (19) as

which proves first part of the Proposition. Next, let \( f^*_k=\min _{1 \le i \le k} f(\mathbf {x}_i)\), the best objective function value obtained up to iteration k, let \(R=\Vert \mathbf {x}_1-\mathbf {x}^*\Vert \), and observe that

From iterated application of inequality (19), for \(i=1,\ldots ,k\), since \(\Vert \mathbf {x}_{k+1}-\mathbf {x}^*\Vert \ge 0\) and \(\Vert \mathbf {g}_i\Vert \le c\), we obtain that

hence that

The latter inequality implies that an \(\epsilon \)-optimal solution is obtained in a number of iterations \(k \ge \frac{R^2c^2 }{\epsilon ^2}\) and the proof is complete. \(\square \)

Remark 4

We observe that monotonicity of the sequence \(\{f(\mathbf {x}_k)\}\) is not ensured, while the minimum approaching nature of the anti-subgradient direction is apparent from (19). On the other hand, we note that the convergence rate q can be arbitrarily close to 1.

Slow convergence of the subgradient method has stimulated several improvement attempts in more recent years. Starting from observation that the method is a black box one, as no problem structure is exploited, the newly introduced approaches have been designed for classes of weakly structured problems, still covering most of convex nonsmooth optimization programs of practical interest.

Here, we recall Nesterov’s smoothing method (Nesterov 2005), where the bound on the number of iterations improves from \( O(\frac{1}{\epsilon ^2})\) to \( O(\frac{1}{\epsilon })\). Denoting by \(S_1\) and \(S_2\) two convex and compact subsets of \(\mathbb {R}^n\) and \(\mathbb {R}^m\), respectively, the problem addressed in Nesterov (2005) is of type

with

where A is a matrix of appropriate dimension, and \(\phi : \mathbb {R}^m \rightarrow {\mathbb {R}}\) is a convex function. Note that f is convex, being the pointwise maximum of (an infinite number of) convex functions, and nonsmoothness of f occurs at those point \(\mathbf {x}\) where the maximum is not unique. In fact, smoothing of f is pursued in Nesterov (2005) by forcing such maximum to be unique. In particular, the following perturbation \(f_{\mu }\) of f is introduced

where \(\mu >0\) is the perturbation parameter, and \(\omega :\mathbb {R}^m \rightarrow \mathbb {R}\) is a strongly convex continuously differentiable function, i.e., for every \(\mathbf {v} \in {\mathbb {R}}^m\) it satisfies the condition

for some \(\sigma >0\). Minimization of the smooth function \(f_{\mu }(\mathbf {x})\) is then pursued via a gradient-type method (see also Frangioni et al. 2018 for a discussion on tuning of the smoothing parameter \(\mu \).)

The Mirror Descent Algorithm (MDA) Nemirovski and Yudin (1983) is yet another method inspired by SM. We give here its basic elements, following the presentation of Beck and Teboulle (2003), and confine ourselves to treatment of the unconstrained problem (1). Consider the following iteration scheme for passing from \(\mathbf {x}_k\) to \(\mathbf {x}_{k+1}\), once an oracle has provided both \(f(\mathbf {x}_k)\) and \(\mathbf {g}_k \in \partial f(\mathbf {x}_k)\),

where \(\gamma _k>0\). Simple calculation provides

which coincides with (15) when \( \gamma _k=\frac{t_k}{\Vert \mathbf {g}_k\Vert }\). Note that \(\mathbf {x}_{k+1}\) is obtained as the minimizer of the linearization of f rooted at \(\mathbf {x}_k\), augmented by a proximity term which penalizes long steps away from \(\mathbf {x}_k\), on the basis of the proximity parameter \(\gamma _k\). Now consider, as in previous Nesterov’s method, any strongly convex continuously differentiable function \(\omega :\mathbb {R}^n \rightarrow \mathbb {R}\) and let

Function \(\alpha _{k}(\mathbf {x})\) measures the error at \(\mathbf {x}\) associated to the linearization of \(\omega \) rooted at \(\mathbf {x}_k\), and resumes information about the curvature of \(\omega \) along the direction \((\mathbf {x}-\mathbf {x}_k)\). Moreover \(\alpha _{k}\) can be considered as a distance-like function, since strong convexity of \(\omega \) implies \(\alpha _{k}(\mathbf {x})>0\) for \(\mathbf {x} \ne \mathbf {x}_k\). On the basis of the definition of \(\alpha _{k}\) the iterative scheme (22) is generalized by setting:

Note that, letting \(\omega (\mathbf {x})=\frac{1}{2 }\Vert \mathbf {x}\Vert ^2\), it is easy to verify that

and the two iterative schemes (22) and (24) coincide. Hence, we conclude that the SM iteration scheme (22) is a special case of (24) which is, in fact, MDA (see Beck and Teboulle 2003). The function \(\alpha _k\) is usually referred to as a Bregman-like distance generated by function \(\omega \).

5 Methods based on multi-point models

As previously mentioned, here we deal with those iterative methods for NSO where the next iterate \(\mathbf {x}_{k+1}\) is calculated on the basis of information related to both the current iterate \(\mathbf {x}_k\) and to several other points (e.g., previous estimates of an optimal solution).

The fundamental leverage in constructing such class of methods is that a convex function is the pointwise supremum of affine ones, namely, for any convex function \(f: {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) and every \(\mathbf {x} \in {\mathbb {R}}^n\) it holds that

where \(\mathbf {g}(\mathbf {y}) \in \partial f(\mathbf {y})\), see (Hiriart-Urruty and Lemaréchal (1993), Th. 1.3.8). The latter formula has some relevant consequences. In fact, taking any finite set of points \(\mathbf {x}_1,\mathbf {x}_2, \ldots , \mathbf {x}_k \in {\mathbb {R}}^n\), letting

for every \(i \in \{1,\ldots ,k\}\), with \(\mathbf {g}_i \in \partial f(\mathbf {x}_i)\), and defining

it holds that

Thus, function \(f_k\) is a global approximation of f, as it minorizes f everywhere, while interpolating it at points \(\mathbf {x}_1, \ldots , \mathbf {x}_k\). Note, in addition, that \(f_k\) is convex and piecewise affine, being the pointwise maximum of the affine functions \(\ell _i(\mathbf {x})\), the linearizations of f rooted at \(\mathbf {x}_1, \ldots , \mathbf {x}_k\). We observe in passing that, even for the same set of points \(\mathbf {x}_1, \ldots , \mathbf {x}_k\), the model function \(f_k\) can be not unique, since the subdifferential \(\partial f(\cdot )\) is a multifunction. In the following, we will refer to \(f_k\) as to the cutting plane function, a term which deserves some explanation.

Consider the epigraph of f, namely, the subset of \({\mathbb {R}}^{n+1}\) defined as

and define the set of halfspaces \(H_i \subset {\mathbb {R}}^{n+1}\), for every \(i \in \{1,\ldots ,k\}\):

Observing that for every \(\mathbf {x} \in {\mathbb {R}}^n\) there holds

and that

it is easy to see that \(\big (\mathbf {x}, f(\mathbf {x})\big ) \in \bigcap _{i=1}^k H_i\) and, consequently, that

Now take any point \(\mathbf {x}_{k+1}\) such that \(f(\mathbf {x}_{k+1})>f_k(\mathbf {x}_{k+1})\), define the corresponding halfspace

and the new approximation

Observe that, while \(\big (\mathbf {x}_{k+1},f_k(\mathbf {x}_{k+1})\big ) \in epi f_k\), we have \(\big (\mathbf {x}_{k+1},f_k(\mathbf {x}_{k+1})\big ) \notin epi f_{k+1}\) and

In other words, the hyperplane

separates point \(\big (\mathbf {x}_{k+1},f_k(\mathbf {x}_{k+1})\big )\) from \(epi f_{k+1}\), thus it represents a cut for \(epi f_{k}\). Note that the bigger is the difference \(f(\mathbf {x}_{k+1}) -f_k(\mathbf {x}_{k+1})\), the deeper is the cut defined by \(L_{k+1}\), see Fig. 3. The definition of the cutting plane function \(f_k\) provides a natural way to select the next trial point by setting

which is exactly the iteration scheme of the classic cutting plane method, see (Cheney and Goldstein 1959; Kelley 1960). Problem (30) is still nondifferentiable, but it is equivalent to the following linear program, defined in \({\mathbb {R}}^{n+1}\), thanks to the introduction of the additional scalar variable w

whose optimal solution is denoted by \((\mathbf {x}_{k+1}, w_k)\), with \(w_k=f_k(\mathbf {x}_{k+1}\)). Note that, since the feasible region is the nonempty set \(epi f_k\), boundedness of (31) requires feasibility of its dual which, denoting by \(\varvec{\lambda } \in {\mathbb {R}}^k\) the vector of dual variables, can be formulated as the following program

We observe that feasibility of (32) is equivalent to the condition

Hence, the boundedness of problem (31), which allows to calculate \(\mathbf {x}_{k+1}\), requires a kind of hard-to-test qualification of points \(\mathbf {x}_1, \ldots ,\mathbf {x}_k\), expressed in terms of the corresponding subgradients. We show in Fig. 4 an example where the cutting plane function is unbounded since the derivatives \(f'(\mathbf {x}_1)\) and \(f'(\mathbf {x}_2)\) are both negative, thus \(0 \notin [f'(\mathbf {x}_1),f'(\mathbf {x}_2) ]\)).

To avoid the difficulties related to possible unboundedness of the cutting plane function, we put the original problem (1) in an (artificial) constrained optimization setting. In fact, we consider the problem

where Q is a nonempty compact convex subset of \({\mathbb {R}}^{n}\). One would think of Q as a set defined by simple constraints (e.g., box constraints) and sufficiently large to contain \(M^*\). We further assume that for each \(\mathbf {x} \in Q\) both the objective function value \(f(\mathbf {x})\) and a subgradient \(g\in \partial f(\mathbf {x})\) can be computed. We also let \(L_Q\) denote the Lipschitz constant of f on Q. Thus, the cutting-plane iteration becomes

whose well-posedness is guaranteed by the continuity of \(f_k\), together with compactness of Q. Moreover, we note that, since by convexity \(f_k(\mathbf {x}) \le f(\mathbf {x})\) for every \(\mathbf {x} \in Q\), the optimal value \(f_{k}^{*}\) of \(f_k(\mathbf {x})\) provides a lower bound on \(f^*\), the optimal value of f. In addition, since

the sequence \( \{f_{k}^{*} \}\) is monotonically nondecreasing and thus the lower bound becomes increasingly better.

We state now the convergence of a slightly more general cutting plane-like method, presented in Algorithm 1, which comprises the classic version where the iteration scheme (35) is adopted, see (Polyak 1987).

We note that \(f_k(\mathbf {x}) \le f(\mathbf {x})\), for every \(\mathbf {x} \in {\mathbb {R}}^n\), ensures that selection of \(\mathbf {x}_{k+1}\) as in (35) perfectly fits with the condition \(\mathbf {x}_{k+1} \in S_k^*\) at Step 2 of GCPM. The rationale of the definition of \(S_k^*\) is to take \(\mathbf {x}_{k+1}\) well inside into the level set of \(f_k\). This allows to accommodate, at least in principle, possible inexact solution of the program

which is still linear provided Q has a polyhedral structure. GCPM with \(\mathbf {x}_{k+1}\) selected as in (35) will be simply referred in the following as Cutting Plane Method (CPM).

Remark 5

GCPM is an intrinsically nonmonotone method as no objective function decrease is guaranteed at each iteration

The proof of the convergence of Algorithm 1 is rather simple and relies on convexity of f and on compactness of Q.

Theorem 3

GCPM terminates at an \(\epsilon \)-optimal point.

Proof

We observe first that, since \(f_k(\mathbf {x}_{k+1)} \le f^*\), satisfaction of the stopping condition at Step 3 of GCPM implies that

i.e., that the point \(\mathbf {x}_{k+1}\) is \(\epsilon \)-optimal. Now, assume for a contradiction that the stopping condition is not satisfied for infinitely many iterations and, consequently, that

holds for every k. Convexity of f, along with (37) and (25), ensure that the following inequalities hold for every \( i \in \{1,\dots ,k\}\)

which imply

A consequence of (38) is that the sequence of points generated by the algorithm does not have an accumulation point, which contradicts compactness of Q. \(\square \)

While cutting plane method represents an elegant way to handle convex optimization, it exhibits some major drawbacks. We observe first that the convergence proof is based on the hypothesis of infinite storage capacity. In fact, the size of the linear program to be solved increases at each iteration as consequence of the introduction of a new constraint. A second drawback of the method is related to its numerical instability. In fact, not only monotonicity of the sequence \(\{f(\mathbf {x}_k)\}\) is not ensured (this being a fairly acceptable feature of the method, though) but it may happen that after the iterate sequence gets to points very close to the minimizer, some successive iterate points might roll very far away from it, as we show in the following simple example.

Example 1

Consider the one-dimensional quadratic program \(\min \{\frac{1}{2} x^2: x \in {\mathbb {R}}\}\), whose minimizer is \(x^* = 0\). Assume that \(k=2\), let \(x_1=-1\) and \(x_2=0.01\), with point \(x_2\) being rather close to the minimizer. It is easy to verify that \(x_3= \arg \min \{f_2(x): x \in {\mathbb {R}}\}=-0.495\), with the algorithm jumping to a point whose distance from the minimizer is much bigger than 0.01. Illustrative examples of such poor behavior of the method can be found in (Hiriart-Urruty and Lemaréchal (1993), Chapter XV.1).

5.1 Bundle methods (BM)

Bundle methods are a family of algorithms originating from the pioneering work by Lemaréchal (1975). They can be considered as a natural evolution of CPM which provides an effective answer to the previously mentioned drawbacks. The term bundle is meant to recall that, similarly to CPM, at each iteration a certain amount of cumulated information about points scattered throughout the function domain is necessary to create a model-function, whose minimization delivers the new iterate. In particular, we denote by \(B_k\) the bundle of the cumulative information available at iteration k, where \(B_k\) is the following set of point/function/subgradient triplets

In bundle methods, however, one among points \(\mathbf {x}_i\) is assigned the special role of stability center. One may think of such point as the best in terms of objective function value, but this is not strictly necessary. In the following, we will denote by \(\overline{\mathbf {x}}_k\) the current stability center, singled out from the set of iterates \(\{\mathbf {x}_1\ldots \mathbf {x}_k\}\). Adopting a term commonly used in discrete optimization, it will be referred to as the incumbent, \(f(\overline{\mathbf {x}}_k)\) being the incumbent value.

Once the stability center \(\overline{\mathbf {x}}_k\) has been fixed, the change of variables \(\mathbf {x}=\overline{\mathbf {x}}_k+\mathbf {d}\) is introduced. It expresses every point of function domain in terms of its displacement \(\mathbf {d} \in {\mathbb {R}}^n\) with respect to the stability center, and allows to rewrite the cutting plane function \(f_k(\mathbf {x})\) in the form of difference function as

where \(\alpha _i\), for every \(i \in \{1,\ldots ,k\}\), is the linearization error, see (23), associated to the affine expansion \(\ell _i(\mathbf {x})\) at \(\overline{\mathbf {x}}_k\), and is defined as

Note that convexity of f guarantees nonnegativity of the linearization error. Moreover, for every \(\mathbf {x} \in {\mathbb {R}}^n\) and \(i \in \{1,\ldots ,k\}\), since \(\mathbf {g}_i \in \partial f(\mathbf {x}_i)\), it holds that

i.e.,

The latter property, often referred to as subgradient transport, is both conceptually and practically important; it indicates that even points which are far from the stability center provide approximate information on its differential properties.

Note also that points \(\mathbf {x}_i\) do not play any role in the difference function (39), thus the bundle \(B_k\), instead of triplets, can be considered as made up of couples as follows

Note that the definition of the linearization errors is related to the current stability center. In case a new one is selected, say \(\overline{\mathbf {x}}_{k+1}\), the \(\alpha _i\) need to be updated. In fact, denoting by \(\alpha _i^+\), for each \(i \in \{1,\ldots ,k\}\), the new linearization errors updated with respect to \(\overline{\mathbf {x}}_{k+1}\), it is easy to obtain the following update formula

which is independent of the explicit knowledge of points \(\mathbf {x}_i\).

Under the transformation of variables introduced above, problem (31) becomes

whose optimal solution \((\mathbf {d}_k,v_k)\) is related to the optimal solution \((\mathbf {x}_{k+1},w_k)\) of (31) by the relations:

Note that from nonnegativity of the linearization errors it follows that the point \((\mathbf {d},v)=(\mathbf {0},0)\) is feasible in (44), hence \(v_k \le 0\) represents the predicted reduction returned by the model at point \(\mathbf {x}_{k+1}=\overline{\mathbf {x}}_k+\mathbf {d}_k\).

Bundle methods elaborate on CPM as they ensure:

-

(i)

Well-posedness of the optimization subproblem to be solved at each iteration;

-

(ii)

Stabilization of the next iterate.

A conceptual and very general scheme of a bundle method is now given, aiming at highlighting the main differences with CPM.

The schema of Algorithm 2 provides just the backbone of most bundle methods, as it leaves open a number of algorithmic decisions that can lead to fairly different methods. In fact, the body of literature devoted to BM is huge, as a vast number of variants have been proposed over time by many scientists, in order to implement such decisions. We postpone to Sect. 9 some bibliographic notes.

We give in the following a general classification of bundle methods, mainly based on the different variants of the subproblem (44) to be solved at Step 3, in order to satisfactorily deal with the aforementioned issues of well-posedness and stabilization. The approaches are substantially three:

-

Proximal BM;

-

Trust region BM;

-

Level BM.

They share the same rationale of inducing some limitation on the distance between two successive iterates \(\mathbf {x}_{k+1}\) and \(\mathbf {x}_k\) gathering, at the same time, well-posedness and stability with respect to CPM. As it will be clarified in the following, the actual magnitude of such limitation is controlled by an approach-specific parameter (to be possibly updated at each iteration). Its appropriate tuning is the real crucial point affecting numerical performance of all BM variants.

For a better understanding of the impact on the performance of the stabilization strategies, we report the results of an instructive experiment described in Frangioni (2020). For a given nonsmooth optimization problem, the Lagrangian dual of a Linear Program, the minimizer \(\mathbf {x}^*\) has been first calculated by standard CPM. Then, such an optimal point has been given as the starting point both to standard CPM and to a variant of CPM equipped with a constraint of the type \(\Vert \overline{\mathbf {x}}_{k} + \mathbf {d}-\mathbf {x}^*\Vert _{\infty } \le \varDelta \), for different values of \(\varDelta \). In Table 1, for decreasing values of \(\varDelta \), the ratio between the number of iterations upon termination of the modified CPM, \(N_{it}^{\varDelta }\), and that of the standard CPM, \(N_{it}\), is reported. The impressive effect of making more and more stringent the constraint on distance of two successive iterates is apparent.

5.1.1 Proximal BM (PBM)

The proximal point variant of BM is probably the one that attracted most of the research efforts. It has solid theoretical roots in both the properties of the Moreau-Yosida Regularization (Hiriart-Urruty and Lemaréchal 1993) and Rockafellar’s Proximal Point Algorithm (Rockafellar 1976). In such class of methods the variant of subproblem (44), to be solved at Step 3 of Algorithm 2, is

where \(\gamma _k>0\) is the adjustable proximity parameter. The latter problem can be rewritten, taking into account (39), in an equivalent unconstrained form as

hence it has a unique minimizer as a consequence of strict convexity of the objective function.

It is worth observing that in PBM the subproblem (45) is a quadratic program (QP), whose solution can be found either by applying any QP algorithm in \({\mathbb {R}}^n\), or by working in the dual space \({\mathbb {R}}^k\). In fact, the standard definition of Wolfe’s dual for problem (45) is

where \(\varvec{\lambda } = (\lambda _1,\ldots ,\lambda _k)^\top \) is the vector of dual variables (or multipliers), and \(\mathbf {e}\) is a vector of ones of appropriate size. Taking into account that

it is possible to eliminate \(\mathbf {d}\) and to restate the dual of problem (45) as follows:

Letting \((\mathbf {d}_k,v_k)\) and \(\varvec{\lambda }^{(k)}\) be, respectively, optimal solutions to (45) and (48), the following relations hold:

and

which allow to equivalently solve either problem (45) or problem (48) at Step 3 of the Conceptual BM. Note that \(\mathbf {d}_k\) is the opposite of a (scaled) convex combination of the \(\mathbf {g}_i\)s, and it reduces to the anti-subgradient with stepsize \(\frac{1}{\gamma _k}\) in case the bundle is the singleton \(\{ (\mathbf {g}_k,0)\}\), where \(\mathbf {g}_k \in \partial f(\overline{\mathbf {x}}_k)\).

Working in the dual space is, in general, preferred for both practical and theoretical reasons. In fact, problem (48) has a nice structure, being the minimization of a convex quadratic function over the unit simplex, for which powerful ad hoc algorithms are available in the literature (e.g., Kiwiel 1986; Monaco 1987; Frangioni 1996). On the other hand, relations (49)-(50) provide the theoretical basis for possibly certifying (approximate) optimality of the current stability center at Step 4 of Conceptual BM. Let \(\mathbf {g}(\varvec{\lambda })= \sum _{i=1}^k \lambda _i^{(k)} \mathbf {g}_{i}\) and suppose the following holds

for some small \(\epsilon >0\). Thus, from (49)–(50) it follows that

and

Moreover, condition (52), taking into account (42), implies that

i.e., \(\mathbf {g}(\varvec{\lambda })\) is in the \(\epsilon \)-subdifferential of f at \(\overline{\mathbf {x}}_k\). On the other hand, condition (51) provides an upper bound on the norm of \(\mathbf {g}(\varvec{\lambda })\) and hence, taking into account the inequality (4) and letting \(\delta =\sqrt{\gamma _k \epsilon }\) we obtain

which can be interpreted as an approximate optimality condition at point \(\overline{\mathbf {x}}_k\), provided that \(\delta \) is not too big. Note, however, that the magnitude of \(\delta \), once \(\epsilon \) has been fixed, depends on the adjustable proximity parameter \(\gamma _k\) and, consequently, (53) is a sound approximate optimality condition only if the sequence \(\{\gamma _k\}\) is bounded from above. Such condition is intuitively aimed at avoiding shrinking of the model around \(\overline{\mathbf {x}}_k\), which would lead to both a very small \(\mathbf {d}_k\) and to an artificial satisfaction of the condition \(v_k \ge - \epsilon \). A complementary reasoning suggests to keep the sequence \(\{\gamma _k\}\) bounded away from zero, in order for the algorithm to avoid behaving in a way very similar to standard CPM.

We have described, so far, the two possible outcomes from solving at Step 3 of Algorithm 2 problem (45) or, better, problem (48). In fact, in the PBM approach a significant displacement \(\mathbf {d}_k\) is obtained if \(v_k < - \epsilon \), while termination occurs in the opposite case when \(v_k \ge - \epsilon \).

Now suppose that Step 6 has been reached, the point \( \overline{\mathbf {x}}_k+\mathbf {d}_k\) being available as a possible candidate to become the new stability center. The predicted reduction at such point is \(v_k\), which is to be compared with the actual reduction \(f(\overline{\mathbf {x}}_k+\mathbf {d}_k)-f(\overline{\mathbf {x}}_k)\). Reasonable agreement between the two values indicates that the current cutting plane model is of good quality. Since at this stage \(v_k < -\epsilon \), the agreement test at Step 8 is generally aimed at verifying that the actual reduction is just a fixed fraction of the predicted one, as shown in the following inequality

where \(m \in (0,1)\) is the sufficient decrease parameter. Hence, (54) also plays the role of the sufficient decrease condition to be checked at Step 9. In fact, if condition (54) is fulfilled, the algorithm can proceed through Steps 10 to 13, where the stability center is updated by setting \(\overline{\mathbf {x}}_{k+1}=\overline{\mathbf {x}}_k+\mathbf {d}_k\) and a new iteration starts after updating the bundle. Such an exit is usually referred to as Serious Step. If, instead, there is poor agreement between actual and predicted reduction (i.e., a sufficient decrease has not been attained), it holds

and two possible implementations of the Conceptual BM are available, depending on whether or not a line search strategy is adopted.

In case no line-search approach is embedded in the algorithm, Conceptual BM proceeds to Steps 15 to 18, as the attempt to find a better stability center failed, and the stability center remains unchanged (i.e., a Null Step has occurred). Letting \(\mathbf {x}_{k+1}=\overline{\mathbf {x}}_k+\mathbf {d}_k\), the new couple \((\mathbf {g}_{k+1}, \alpha _{k+1})\) is joined to the bundle, where \(\mathbf {g}_{k+1}\in \partial f(\mathbf {x}_{k+1})\), and \(\alpha _{k+1}= f(\overline{\mathbf {x}}_k)-f(\mathbf {x}_{k+1})+\mathbf {g}_{k+1}^\top \mathbf {d}_k\).

In case a line-search strategy is adopted, the algorithm remains at Step 8, \(\mathbf {d}_k\) is taken as a search direction and a line search (LS) is executed by checking at points \(\overline{\mathbf {x}}_k+t\mathbf {d}_k\), with \(t \in (0,1]\), the objective function sufficient decrease condition

skipping to Step 15 as soon as t falls below a given threshold \(\eta \in (0,1)\). Checks are performed for decreasing values of t, starting from \(t=1\), according to classic Armijo’s rule (Armijo 1966).

Detailed presentation of nonsmooth LS algorithms (that is, the minimization of a nonsmooth function of one variable) is beyond the scope for this paper. We wish, however, to point out the fundamental difference between the smooth and the nonsmooth case. In the former case, once at any point \(\mathbf {x}\) a search direction \(\mathbf {d}\) is given within a descent algorithm, a trusted model, constituted by the negative directional derivative along \(\mathbf {d}\) is available. It ensures that there exists a positive threshold \(\bar{t}\) such that \(f(\mathbf {x}+t\mathbf {d}) < f(\mathbf {x})\), for every \(t \in (0,\bar{t})\). In the nonsmooth framework, instead, the cutting plane model is “untrusted”, to recall the evocative term used in Frangioni (2020). In fact, in the Conceptual BM the directional derivative at \(\overline{\mathbf {x}}_k\) along \(\mathbf {d}_k\) is not necessarily known, since \(v_k\) is just an approximation. Thus, the possibility that \(\mathbf {d}_k\) is not a descent direction has to be accommodated by the algorithm.

We have now completed the discussion on the two possible implementations of Step 8 within the proximal version of Conceptual BM. We observe that null step is a result which can occur in both cases. It corresponds to the fact that the cutting plane has revealed a poor approximation of the objective function. Consequently, whenever a null step occurs, the stability center remains unchanged, and a new couple subgradient/linearization-error is added to the bundle, with the aim of improving the model. As for the latter, some explanations are in order. Consider, for an example, the null-step occurring when

with no line search performed. In such a case, after generating the new iterate \(\mathbf {x}_{k+1}=\overline{\mathbf {x}}_{k}+\mathbf {d}_k\), the couple \(\big (\mathbf {g}_{k+1}, \alpha _{k+1}\big )\) is appended to the bundle, where \(\mathbf {g}_{k+1} \in \partial f(\mathbf {x}_{k+1})\) and \(\alpha _{k+1} = f(\overline{\mathbf {x}}_{k})-f(\mathbf {x}_{k+1})+\mathbf {g}_{k+1}^\top \mathbf {d}_{k}\). The updated model in terms of difference function, see (39), becomes

Observe that, for \(\mathbf {d}=\mathbf {d}_k\) there hold

and

which combined means that the updated model provides a more accurate estimate of the objective function f, at least around point \(\mathbf {x}_{k+1}\). Perfectly analogous considerations can be made in case a line search scheme is adopted at Step 8.

We have presented, so far, some general ideas on how the Conceptual BM works in case the proximal approach is adopted. We do not enter into the details of convergence proofs, which depend on the different strategies adopted at various steps. We only wish to sketch how a typical convergence proof works, under the assumptions that f has a finite minimum, that the proximity parameter \(\gamma _k\) stays within a range \(0 < \gamma _{min} \le \gamma _k \le \gamma _{max}\), possibly being adjusted upon modification of the stability center only. As already mentioned, such tuning is a crucial issue in view of granting numerical efficiency to the method.

The proof is based on the following three facts:

-

(a)

The objective function reduction, every time the stability center is updated, is bounded away from zero. This is a consequence of \(v_k < -\epsilon \) and of the sufficient decrease condition (54), in case no line search strategy is adopted. Whenever, instead, a line search is performed, objective function reduction is still bounded away from zero as a consequence, again, of \(v_k < -\epsilon \), of condition (55), and of the lower bound \(\eta \) on the stepsize length t.

-

(b)

Since it has been assumed that the function has finite minimum, from a) it follows that only a finite number of stability center updates may take place.

-

(c)

The Conceptual BM cannot loop infinitely many times through Step 18, that is an infinite sequence of null steps cannot occur. To prove this fact it is necessary to observe that, being the proximity parameter constant by assumption, the sequence \(\{v_k\}\) is monotonically increasing, see (58), and bounded from above by zero, hence it is convergent. The core of the proof consists in showing that \(\{v_k\} \rightarrow 0\) and, consequently, the stopping test \(v_k \ge -\epsilon \) is satisfied after a finite number of null steps.

5.1.2 Trust region BM (TBM)

The approach consists in solving at Step 3 of the Conceptual BM the following variant of problem (44), obtained through the addition of a trust region constraint

where \(\varDelta _k > 0\). Well-posedness is a consequence of continuity of the objective function, problem (60) being in fact a finite min-max, and compactness of the feasible region.

A first issue about the statement of problem (60) is the choice of the norm in the trust region constraint. It is in general preferred to adopt the \(\ell _1\) or the \(\ell _{\infty }\) norm, so that (60) is still a Linear Program. A second relevant point is the setting of the trust region parameter. Intuitively, \(\varDelta _k\) must not be too small, which would result in slow convergence due to shrinking of the next iterate close to the stability center. On the other hand, a too large \(\varDelta _k\) would kill the stabilizing effect of the trust region. A simple approach is to provide two thresholds \(\varDelta _{min}\) and \(\varDelta _{max}\), letting \(\varDelta _k \in [\varDelta _{min}, \varDelta _{max}]\). Such choice is necessary to guarantee convergence of the algorithm, but the type of heuristics adopted for tuning \(\varDelta _k\) within the prescribed interval strongly affects both convergence and the overall performance of the algorithm (see the discussion about the effect of the proximity parameter \(\gamma _k\) in PBM).

Also for the trust region approach the two classes of variants, with or without line search, can be devised. Moreover, the interplay serious-step/null-step is still embedded into the conceptual scheme.

5.1.3 Proximal level BM (PLBM)

The level set approach to BM stems from the general setting of CPM we gave earlier in this section, where point \(\mathbf {x}_{k+1}\) calculated at Step 2 of GCPM was not necessarily a minimizer of \(f_k\), convergence being ensured provided it was sufficiently inside the level set of function \(f_k\) at point \(\mathbf {x}_k\).

The approach consists in finding the closest point to the current stability center \(\overline{\mathbf {x}}_k\) where the difference function (39) takes a sufficiently negative value. In fact, problem (44) is modified as follows

where the adjustable parameter \(\theta _k >0\) indicates the desired reduction in the cutting plane function. In fact, letting, as usual, the stability center \(\overline{\mathbf {x}}_k\) be the incumbent, and denoting by \(\mathbf {d}_k\) the optimal solution of (61), the point \(\mathbf {x}_{k+1}=\overline{\mathbf {x}}_k+\mathbf {d}_k\) belongs to the following level set

of the cutting plane function \(f_k\). Note that an appropriate choice of \(\theta _k\) provides the required stabilization effect, as a small value of \(\theta _k\) results in small \(\Vert \mathbf {d}_k\Vert \).

The approach is known as Proximal Level Bundle Method (PLBM) and indeed the setting of \(\theta _k\) is the key issue to address. To this aim, the optimal value of the model function \(f_k\), say \(f_k^*\), is required. Consequently, we stay in the same constrained context (34) adopted in stating CPM, so that problem

is well posed, being the convex set Q nonempty and compact. Since the incumbent value \(f_k(\overline{\mathbf {x}}_k)\) and \(f^*_k\) are, respectively, an upper and a lower bound on \(f^*\), it is quite natural to set \(\theta _k\) on the basis of the gap

which is a nonincreasing function of the bundle size k. A possible choice is to set \(\theta _k=\mu \varGamma (k)\), for some \(\mu \in (0,1)\), but modifications of such criterion are to be accommodated on the basis of comparison with the previous value of the gap. Note that \(\varGamma (k) \le \epsilon \) provides an obvious stopping criterion for PLBM, since from \(f_k(\overline{\mathbf {x}}_k)=f(\overline{\mathbf {x}}_k)\) it follows that \(f(\overline{\mathbf {x}}_k)-f^* \le \varGamma (k)\). In terms of the Conceptual BM, Step 8 is neglected, and the test at Step 9, for possibly updating the stability center, is based on the simple reduction of the incumbent value. As for method implementation, further observations are in order.

-

Compared to PBM and TBM, setting of \(\theta _k\) appears definitely more amenable than choosing \(\gamma _k\) and \(\varDelta _k\), respectively, as it simply refers to function values, while \(\gamma _k\) and \(\varDelta _k\) are meant to capture some kind of second order behavior of f, an ambitious and fairly hard objective.

-

Unlike PBM and TBM, two distinct optimization subproblems are to be solved at each iteration: the quadratic problem (61), which consists in projecting \(\overline{\mathbf {x}}_k\) onto \(S_k(\theta _k)\), and (62), which is a linear program, in case Q has a simple structure (e.g., it is a hyper-interval).

The following theoretical result, see (Lemaréchal et al. (1995), Th. 2.2.2), provides a bound on the number of iterations needed to get an \(\epsilon \)-approximate solution.

Theorem 4

Let \(L_Q\) be the Lipschitz constant of f on Q, denote by D the diameter of Q, and by c a constant depending on parameter \(\mu \). For any given \(\epsilon >0\) it holds that

5.2 Making BM implementable

The algorithms we have described in this section suffer from a major drawback. They are all based on unlimited accumulation of information, in terms of number of generated linearization or, equivalently, of bundle size. Convergence properties we have discussed are in fact valid under such hypothesis. This makes such methods, at least in theory, not implementable. In the sequel, focusing in particular on PBM, we briefly review two strategies to overcome such difficulty, introduced in Kiwiel (1983), Kiwiel (1985), named subgradient selection and aggregation, respectively.

The strategies are both based on thorough analysis of the dual formulation (48) of the problem to be solved at Step 3 of Conceptual BM. Observe, in fact, that strict convexity of problem (45) ensures that the optimal solution \(\mathbf {d}_k\) is unique and it is a (scaled) convex combination of the \(\mathbf {g}_i\)s, see (49). Note also that the optimal solution of the dual (48) is not necessarily unique, but there exists (by Carathéodory’s Theorem) a set of at most \(n+1\) optimal dual multipliers \(\lambda _i^{(k)} > 0\), \(i \in I_k\), \(|I_k| \le n+1\) such that

They can be calculated, in fact, by finding an optimal basic solution of the following linear program

which is characterized by \((n+1)\) constraints.

On the basis of previous observation there is an obvious possibility, once such set of subgradients has been detected, to select the corresponding bundle couples and to cancel the remaining ones, while the solutions of (48) and (45) remain unchanged. In this way the bundle size can be kept finite, without impairing overall convergence. It is worth noting that ad hoc algorithms for solving (48) are designed to automatically satisfy the condition that no more than \((n+1)\) subgradients are “active” in the definition of \(d_k\), so that solution of problem (63) is not necessary for subgradient selection purposes.

In many practical cases, however, \(n+1\) is still too large in view of the need of solving at each iteration the quadratic program (48) of corresponding size. In such a case, a very strong reduction in bundle size can be obtained by means of the subgradient aggregation mechanism. Once the optimal solution \(\varvec{\lambda }^{(k)}\) to (48) has been found, the aggregate couple \((\mathbf {g}_a, \alpha _a)\) is obtained by letting

In addition, define the single-constraint aggregate quadratic program

and observe that it is equivalent to the simple unconstrained quadratic problem

Hence, the optimal solution \((\mathbf {d}_a,v_a)\) to (64) coincides with the solution to (48) since it can be obtained in closed form as

and

Summing up, the aggregate problem (64) retains the fundamental properties of (48), so that, when point \(\mathbf {x}_{k+1}\) is generated, all past bundle couples \((\mathbf {g}_i, \alpha _i)\) can be replaced by the unique \((\mathbf {g}_a,\alpha _a)\), and the new couple \((\mathbf {g}_{k+1}, \alpha _{k+1})\), with \(\mathbf {g}_{k+1} \in \partial f(\mathbf {x}_{k+1})\) is added to the bundle. Under such aggregation scheme, with the bundle containing just two elements, it is possible to show convergence. Such version of proximal BM is sometimes referred to as the “poorman” bundle. Of course many other selection-aggregation schemes have been discussed in the literature. Their treatment is, however, beyond the scope of this paper.

6 Miscellaneous algorithms

In Sect. 5 we have mostly discussed about bundle methods, a family of NSO algorithms related to cutting plane, which is a model function grounded on information coming from many points spread throughout the objective function domain. Such a feature keeps bundle methods somehow apart from the smooth optimization mainstream, where most of the popular iterative methods (Gradient type, Conjugate Gradient, Newton, quasi–Newton etc.) are based on information on the objective function related to the current iterate or, sometimes, also to the previous one. Several scientists have thus tried to convey to NSO, and in particular to cutting-plane based area, some ideas coming from smooth optimization, upon appropriate modifications to cope with nonsmoothness. In this section we briefly survey some of such attempts.

6.1 Variable metric

In discussing the proximal BM we have already observed that tuning of the proximity parameter \(\gamma _k\) in problem (45) has a strong impact on the numerical performance of such class of algorithms. The problem has been addressed by many authors (see, e.g., Kiwiel 1990) and several heuristic techniques are available. More in general, setting of \(\gamma _k\) is related to the attempt of capturing some kind of second order approximation of the objective function. After all, the quadratic term \(\frac{1}{2}\gamma _k\Vert \mathbf {d}\Vert ^2\), in case f is twice differentiable, would be seen as a single–parameter positive definite approximation \(\gamma _k I\) of the Hessian at point \(\overline{\mathbf {x}}_k\), I being the identity matrixFootnote 1.

Thus, the simplest idea, see (Lemaréchal 1978), is to replace (45) with the following problem

where \(B_k\) is a positive definite matrix in \({\mathbb {R}}^{n \times n}\) to be updated any time the stability center changes, according to some rule inspired by the Quasi–Newton updates for smooth minimization. We recall that in all Quasi–Newton methods the Hessian (or its inverse) approximation is updated, in correspondence to the iterate \(\overline{\mathbf {x}}_k\), on the basis of the following differences in points and gradients between two successive iterates

A straightforward and practical way to adopt a Quasi-Newton approach in the nonsmooth environment would be to use any classic variable metric algorithm based on updating formulae (e.g., DFP, BFGS, etc.), with \(\mathbf {q}_k\) defined as difference of subgradients instead of gradients. Note, in passing, that due to possible discontinuities in derivatives, large \(\mathbf {q}_k\) may correspond to small \(\mathbf {s}_k\). This, however, is not a reportedly serious drawback in terms of practical applications (see classic Lemaréchal 1982 and Vlček and Luksǎn 2001 for an accurate analysis).

As a consequence of previous observation, research has focused on the definition of some differentiable object, related to f, thus suitable for application of Quasi-Newton methods. Such an object, the Moreau-Yosida regularization of f, is the function \(\phi _{\rho }:{\mathbb {R}}^n \rightarrow {\mathbb {R}}\), defined as

for some \(\rho >0\), whose minimizer is denoted by

and referred to as the proximal point of \(\mathbf {x}\), see (Rockafellar 1976). Function \(\phi _{\rho }\) enjoys the following properties:

-

The sets of minima of f and \(\phi _{\rho }\) coincide;

-

\(\phi _{\rho }\) is differentiable (see Hiriart-Urruty and Lemaréchal 1993);

-

\(\nabla \phi _{\rho }(\mathbf {x})=\rho (\mathbf {x}-p_{\rho }(\mathbf {x})) \in \partial f(p_{\rho }(\mathbf {x}))\), since at \(p_\rho (\mathbf {x})\) it is \(0 \in \partial h(\mathbf {y})\), where \(h(\mathbf {y}) = f(\mathbf {y})+ \frac{\rho }{2}\Vert \mathbf {y}-\mathbf {x}\Vert ^2\) is a strictly convex function.

The latter properties allow to find a minimum of f by solving the following (smooth) problem

Here, we note that smoothness is not gathered for free, as calculation of the new objective function \(\phi _\rho \) requires solution of a convex (nonsmooth) optimization problem.

Straightforward application of any Quasi-Newton paradigm (equipped with a line search) to minimize \(\phi _\rho \) leads to the following iteration scheme:

where \(B_k\) is the classic approximation of the Hessian, and a line search is accommodated into the iteration scheme to fix the stepsize \(t_k>0\) along the Quasi–Newton direction \(\mathbf {d}_k=- B_k^{-1} \nabla \phi _{\rho } (\overline{\mathbf {x}}_k)\)

Matrix \(B_k\) comes from updating of \(B_{k-1}\) (usually choosing \(B_1=I\)), by means of one of the effective Quasi–Newton formulae. A popular one is BFGS, according to which it is

with \(\mathbf {s}_k=\overline{\mathbf {x}}_k- \overline{\mathbf {x}}_{k-1}\) and \(\mathbf {q}_k=\nabla \phi _{\rho }(\overline{\mathbf {x}}_k)- \nabla \phi _{\rho }(\overline{\mathbf {x}}_{k-1})\). In fact, matrix \(B_k\) satisfies the secant equation

A (simplified) algorithmic scheme is reported in Algorithm 3.

The QN scheme of Algorithm 3 leaves open several relevant issues. We note first that the inner loop deals with minimization of a (strictly) convex nonsmooth function. Thus, it is quite natural to apply in such framework the machinery we have discussed in previous sections (e.g., any bundle-type algorithm would be in order). On the other hand, the idea of exactly solving at each iteration a problem of the same difficulty as the original one does not appear viable in terms of computation costs. In fact, it is appropriate to settle for an approximate solution of problem (66) in the inner loop, which results in inexact calculation of \(\overline{\mathbf {x}}_{k+1}\) as, instead of the exact optimality condition \(\rho (\overline{\mathbf {x}}_{k+1} - p_{\rho }(\overline{\mathbf {x}}_{k+1})) \in \partial f(p_{\rho }(\overline{\mathbf {x}}_{k+1})\), the approximate one \(\rho (\overline{\mathbf {x}}_{k+1} - p_{\rho }(\overline{\mathbf {x}}_{k+1})) \in \partial _{\epsilon } f(p_{\rho }(\overline{\mathbf {x}}_{k+1})\), for some \(\epsilon >0\), is enforced. We note in passing that the Quasi–Newton framework is one of the areas that have solicited the development of a convergence theory for NSO algorithms with inexact calculation of function and/or subgradient (see Sect. 7).

The need of accommodating for inexact calculation of the Moreau-Yosida regularization \(\phi _{\rho }\) (consider that also tuning of \(\rho \) is a significant issue), has also an impact on the implementation of the choice of \(\overline{\mathbf {x}}_{k+1}\) in the outer loop, irrespective of whether a line search is executed, as evoked by formula (68), or the constant stepsize \(t_k=1\) is adopted. We do not enter into the technicalities of the above mentioned issues. Possible choices are relevant in establishing the theoretical convergence rate of QN type algorithms. Discussion on such topics can be found in Bonnans et al. (1995), Lemaréchal and Sagastizábal (1997), Chen and Fukushima (1999).

6.2 Methods of centers (MoC)

We have already seen how fecund was the cutting plane idea of using many linearizations, generated all over the function domain, in order to obtain a global, not just local, model of the objective function. Yet another approach deriving from cutting plane is a class of methods known as Methods of Centres, whose connection with interior methods for Linear Programming is apparent. To explain the basic ideas it is convenient to assume a set-oriented viewpoint instead of a function-oriented one.

In solving the (constrained) problem (34), the same framework as CP (or BM) is adopted. Given the cutting plane function \(f_k\), available at iteration k, we denote by \(F_k(z_k)\) the following subset of \(\mathbb {R}^{n+1}\)

where \(z_k\) is any upper bound on the optimal value of \(f_k\) (e.g., the value of f calculated at any feasible point). The set \(F_k(z_k)\), next referred to as the localization set, is contained in \(epi f_k\), being obtained by horizontally cutting \(epi f_k\), and it contains the point \((\mathbf {x}^*, f^*)\).

The basic idea of MoC is to construct a nested sequence of sets \(F_k(z_k)\) shrinking as fast as possible around the point \((\mathbf {x}^*,f^*)\), by introducing a cut at each iteration. To obtain substantial volume reduction in passing from \(F_k(z_k)\) to \(F_{k+1}(z_{k+1})\), one looks for a central cut, i.e., a cut generated on the basis of some notion of center of \(F_k(z_k)\). Several proposals in this context can be found in the literature, stemming from Levin’s “Center of Gravity” method (Levin 1965), which is based on the property that for a given convex set C with nonempty interior, any hyperplane passing through the center of gravity generates a cut which reduces the volume of C by a factor of at least \( (1- e^{-1})\). However, such substantial reduction in the volume of \(F_k\) can only be obtained by solving the hard problem of locating the center of gravity.

Next we particularly focus on a more practical proposal, the Analytic Center Cutting Plane Method (ACCPM), see (Goffin et al. 1992, 1997; Ouorou 2009), which is based on the notion of “analytic center” introduced in Sonnevend (1985) as a point that maximizes the product of distances to all faces of \(F_k(z_k)\).

Thus, in the ACCPM the required central point of the localization set is calculated as the unique maximizer of the potential function

Once the analytic center

has been obtained, function \(f_k\) is updated thanks to the new cut generated at \(\mathbf {x}_{k+1}\), and the value \(z_k\) is possibly updated. A stopping condition is tested, which is based on the difference between the upper bound and a lower bound obtained by minimizing \(f_{k+1}\) over Q, and the procedure possibly iterated. Calculation of the analytic center can be performed by adapting interior point algorithms for Linear Programming based on the use of potential functions (see, e.g., de Ghellinck and Vial 1986). Complexity estimates of the method, with possible embedding of a proximal term in calculating the analytic center, are presented in Nesterov (1995)

Yet another possibility is to adopt, instead of the analytic center, the Chebyshëv center, defined as the center of the largest sphere contained in \(F_k(z_k)\). The approach, originally proposed in Elzinga and Moore (1975), has been equipped with a quadratic stabilizing term in Ouorou (2009).

An original approach somehow related to this area can be finally found in Bertsimas and Vempala (2004).

6.3 Gradient sampling

The fundamental fact behind most of NSO method is that satisfaction of an angle condition, that of forming an obtuse angle with a subgradient, is not enough for a direction to be a descent one. The angle condition, in fact, must be robust, that is the direction has to make an obtuse angle with many subgradients around the point. Based on this observation, and considering that for most practical problems the objective function is differentiable almost everywhere, gradient sampling algorithms have been introduced, see Kiwiel (2007), whose key feature is the evaluation of subgradient (i.e., gradient with probability 1) on a set of random points close to the current iterate. All such gradients are then used to obtain a search direction.

A sketch of an iteration of gradient sampling algorithm is reported in Algorithm 4, see (Burke et al. 2005, 2020). We do not report, for simplicity of notation, the iteration counter and thus we indicate by \(\mathbf {x}\) the current iterate. The algorithm works on the basis of two couples of stationarity/sampling-radius tolerances, the overall \((\eta , \epsilon )\) and the iteration–dependent \((\theta , \delta )\), respectively.

It can be proved that an algorithm based on the above iteration scheme provides a sequence of points \(\{\mathbf {x}_k\}\) converging to a Clarke stationary point with probability 1, unless \(f(\mathbf {x}_k) \rightarrow -\infty \). A necessary assumption is that the set of points where f is continuously differentiable is open, dense and full measure in \({\mathbb {R}}^n\), while no convexity assumption is required for ensuring convergence.

7 Inexact calculation of function and/or subgradient

We have already seen a case where it is advisable to dispose of a method for minimizing a convex function without requiring its exact calculation, see Sect. 6.1. This is a typical case in the wide application field of Lagrangian relaxation for hard ILP problems.

Next we briefly recall some basic facts, see (Gaudioso 2020). Suppose the following ILP problem is to be solved

with \( \mathbf {c} \in {\mathbb {R}}^n \), \(A \in {\mathbb {R}}^{m \times n}\), \(B \in {\mathbb {R}}^{p \times n}\), \(\mathbf {b} \in {\mathbb {R}}^m\), \(\mathbf {d} \in {\mathbb {R}}^p\), and \(\mathbb {Z}^n\) denoting the set of n-dimensional vectors. We assume that the problem is feasible and that the set

is finite, that is \(X= \{\mathbf {x}_1,\,\mathbf {x}_2, \ldots , \mathbf {x}_K\}\) and \(\mathcal {K} = \{1,2,\ldots , K \}\) is the corresponding index set.

Assume also that constraints are partitioned into two families, those defined through \(A\mathbf {x}= \mathbf {b}\) being the complicating ones. A Lagrangian relaxation of (69) is obtained by relaxing complicating constraints as follows

where \(\varvec{\lambda } \in {\mathbb {R}}^m\). Problem (70), which is still an ILP, provides an upper bound for problem (69), namely,

Moreover, denoting by \(\mathbf {x}(\varvec{\lambda }) \in \{\mathbf {x}_1,\,\mathbf {x}_2, \ldots , \mathbf {x}_K\}\) the optimal solution of (70), it holds that

\(z(\varvec{\lambda })\) being often referred to as the dual function. We note that, in case \(\mathbf {x}(\varvec{\lambda })\) is feasible (i.e., \(A\mathbf {x}(\varvec{\lambda })=\mathbf {b}\)), then it is also optimal for (69).

Aiming for the best among the upper bounds (i.e., the one closest to \(z_{I}\)), we define the Lagrangian dual problem as

\(z_{LD}\) being the best upper bound obtainable through Lagrangian relaxation.

Problem (72) consists in the minimization of a convex function defined as the pointwise maximum of K affine functions of \(\varvec{\lambda }\), one for each feasible point in X. In fact, it is a convex nonsmooth optimization problems which can be tackled by means of any of the methods described in previous sections.

Very often, once the complicating constraints have been removed, the Lagrangian relaxation is easy to solve. If this is not the case, however, any iterative NSO method which requires at each iteration its exact solution may lead to prohibitive computation time. Now suppose we are able to solve approximately the Lagrangian relaxation (70), that is, we are able to obtain for any given \(\bar{\varvec{\lambda }}\) an approximation of \(z(\bar{\varvec{\lambda }})\), say \(\tilde{z}(\bar{\varvec{\lambda }}) = z(\bar{\varvec{\lambda }})-\epsilon \), for some \(\epsilon \ge 0\). Suppose, in particular, that

for some \(\widetilde{\mathbf {x}}(\bar{\varvec{\lambda }}) \in \{\mathbf {x}_1,\,\mathbf {x}_2, \ldots , \mathbf {x}_K\}\). Hence, for every \(\varvec{\lambda } \in {\mathbb {R}}^m\) the following inequality holds

which indicates that \(\big (\mathbf {b} - A\widetilde{\mathbf {x}}(\bar{\varvec{\lambda }})\big ) \in \partial _{\epsilon } z (\bar{\varvec{\lambda }})\).

Lagrangian relaxation and corresponding solution of the (convex and nonsmooth) Lagrangian dual problem is a very common example of the general case where, in minimizing a convex function f, at any point \(\mathbf {x}\) we have at hand both an approximate value of the function \(\tilde{f}(\mathbf {x})=f(\mathbf {x})+\epsilon _f\), and an approximate subgradient \(\tilde{g}(\mathbf {x}) \in \partial _{\epsilon _g} f(\mathbf {x})\), for some positive \(\epsilon _f\) and \(\epsilon _g\). Convergence analysis of algorithms based on such an approximation has been extensively used both in subgradient (see Kiwiel 2004; D’Antonio and Frangioni 2009; Astorino et al. 2019) and in bundle methods (see Hintermüller 2001; Kiwiel 2006; de Oliveira et al. 2014; van Ackooij and Sagastizábal 2014). In particular, in de Oliveira et al. (2014) a taxonomy of possible kinds of inexactness in function and/or subgradient evaluation is provided, together with a classification of the methods. It is relevant, in fact, the distinction between cases where \(\epsilon _f\) and \(\epsilon _g\) are completely unknown and those where such errors can be estimated or, sometimes, even controlled.

8 Nonconvex NSO: a bundle view

The extension of the cutting plane idea and, consequently, of bundle methods to (local) minimization of nonconvex functions is not straightforward. In fact, in such a case it is still possible to define the convex piecewise affine function \(f_k\), exactly as in (25), provided that vectors \(\mathbf {g}_i\) are now elements of Clarke’s subdifferential \(\partial _C f(\mathbf {x})\). Nevertheless, two fundamental properties valid in the convex framework get lost:

-

it is no longer ensured that \(f_k\) is a lower approximation of f;

-

\(f_k\) does not necessarily interpolates f at points \(\mathbf {x}_i\), \(i \in \{1,\ldots ,k\}\).

If we adopt the stability center viewpoint and rewrite \(f_k\), see (39), as