Abstract

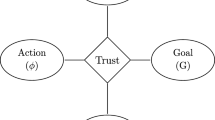

As artificial intelligence (AI) becomes more pervasive, the question of how users come to trust artificial agents is more important than ever. In this research, we seek to understand trust formation between humans and artificial agents from the perspective of morality and uncanny theory. We conducted three studies to examine the effects of two moral foundations (perceptions of harm and perceptions of injustice) and of reported wrongdoing on uncanniness, and the effect of uncanniness on trust in artificial agents. In Study 1, we found that perceived injustice was the primary determinant of uncanniness and that uncanniness had a negative effect on trust. Studies 2 and 3 extended these findings using two different scenarios of wrongful acts involving an artificial agent. In addition to explaining the contribution of moral appraisals to the feeling of uncanniness, the latter studies also uncovered substantial contributions of both perceived harm and perceived injustice. The results provide a foundation for establishing trust in artificial agents and for designing AI systems that are instilled with moral values.

References

Abrardi, L., Cambini, C., & Rondi, L. (2019). The economics of artificial intelligence: A survey. In EUI working papers, Robert Schuman centre for advanced studies Florence school of regulation.

Al-Natour, S., Benbasat, I., & Cenfetelli, R. (2011). The adoption of online shopping assistants: Perceived similarity as an antecedent to evaluative beliefs. Journal of the Association for Information Systems, 12(5), 347–374.

Awad, E., Dsouza, S., Kim, R., Schulz, J., Henrich, J., Shariff, A., et al. (2018). The moral machine experiment. Nature, 563(7729), 59–64.

Ba, S., & Pavlou, P. A. (2002). Evidence of the effect of trust building technology in electronic markets: Price premiums and buyer behavior. MIS Quarterly, 26(3), 243–268.

Baron, R. M., & Kenny, D. A. (1986). The moderator–mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology, 51(6), 1173–1182.

Beck, J. C., & Smith, B. M. (2009). Introduction to the special volume on constraint programming, artificial intelligence, and operations research. Annals of Operations Research, 171(1), 1–2.

Bigman, Y. E., Waytz, A., Alterovitz, R., & Gray, K. (2019). Holding robots responsible: The elements of machine morality. Trends in Cognitive Sciences, 23(5), 365–368.

Billings, D. R., Schaefer, K. E., Chen, J. Y., & Hancock, P. A. (2012). Human–robot interaction: Developing trust in robots. In Proceedings of the seventh annual ACM/IEEE international conference on human–robot interaction, March 2012, 109–110.

Bonnefon, J. F., Shariff, A., & Rahwan, I. (2016). The social dilemma of autonomous vehicles. Science, 352(6293), 1573–1576.

Broadbent, E. (2017). Interactions with robots: The truths we reveal about ourselves. Annual Review of Psychology, 68, 627–652.

Brucker, P., & Knust, S. (2002). Lower bounds for scheduling a single robot in a job-shop environment. Annals of Operations Research, 115, 147–172.

Fornell, C., & Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement errors. Journal of Marketing Research, 18(1), 39–50.

Fragapane, G., Ivanov, D., Peron, M., Sgarbossa, F., & Strandhagen, J. O. Increasing flexibility and productivity in industry 4.0 production networks with autonomous mobile robots and smart intralogistics. Annals of Operations Research (Forthcoming).

Graham, J., Haidt, J., Koleva, S., Motyl, M., Iyer, R., Wojcik, S. P., et al. (2013). Moral foundations theory: The pragmatic validity of moral pluralism. Advances in Experimental Social Psychology, 47, 55–130.

Gray, K., & Schein, C. (2012). Two minds vs. two philosophies: Mind perception defines morality and dissolves the debate between deontology and utilitarianism. Review of Philosophy and Psychology, 3, 405–423.

Gray, K., Schein, C., & Ward, A. F. (2014). The myth of harmless wrongs in moral cognition: Automatic dyadic completion from sin to suffering. Journal of Experimental Psychology: General, 143, 1600–1615.

Gray, K., & Wegner, D. M. (2011). Dimensions of moral emotions. Emotion Review, 3(3), 258–260.

Gray, K., & Wegner, D. M. (2012). Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition, 125(1), 125–130.

Gray, K., Young, L., & Waytz, A. (2012). Mind perception is the essence of morality. Psychological Inquiry, 23(2), 101–124.

Haidt, J., & Bjorklund, F. (2008). Social intuitionists answer six questions about moral psychology. In W. Sinnott-Armstrong (Ed.), Moral psychology: The cognitive science of morality: Intuition and diversity (Vol. 2, pp. 181–217). Cambridge, MA: MIT Press.

Han, B. T., & Cook, J. S. (1998). An efficient heuristic for robot acquisition and cell formation. Annals of Operations Research, 77, 229–252.

Hancock, P. A., Billings, D. R., & Schaefer, K. E. (2011a). Can you trust your robot? Ergonomics in Design, 19(3), 24–29.

Hancock, P. A., Billings, D. R., Schaefer, K. E., Chen, J. Y., De Visser, E. J., & Parasuraman, R. (2011b). A meta-analysis of factors affecting trust in human–robot interaction. Human Factors, 53(5), 517–527.

Hengstler, M., Enkel, E., & Duelli, S. (2016). Applied artificial intelligence and trust—The case of autonomous vehicles and medical assistance devices. Technological Forecasting and Social Change, 105, 105–120.

IBM. (2019). Building trust in AI. Retrieved December 3, 2019, from https://www.ibm.com/watson/advantage-reports/future-of-artificial-intelligence/building-trust-in-ai.html.

Kats, V., & Levner, E. (1997). Minimizing the number of robots to meet a given cyclic schedule. Annals of Operations Research, 69, 209–226.

Kim, D. J., Song, Y. I., Braynov, S. B., & Rao, H. R. (2005). A multidimensional trust formation model in B-to-C e-commerce: A conceptual framework and content analyses of academia/practitioner perspectives. Decision Support Systems, 40(2), 143–165.

Komiak, S. Y. X., & Benbasat, I. (2006). The effects of personalization and familiarity on trust and adoption of recommendation agents. MIS Quarterly, 30(4), 941–960.

Lankton, N. K., McKnight, D. H., & Tripp, J. (2015). Technology, humanness, and trust: Rethinking trust in technology. Journal of the Association for Information Systems, 16(10), 880–918.

Lee, M. K. (2018). Understanding perception of algorithmic decisions: Fairness, trust, and emotion in response to algorithmic management. Big Data and Society, 5(1), 1–16.

Li, X., Hess, T. J., & Valacich, J. S. (2008). Why do we trust new technology? A study of initial trust formation with organizational information systems. The Journal of Strategic Information Systems, 17(1), 39–71.

MacDorman, K. F., & Ishiguro, H. (2006). The uncanny advantage of using androids in cognitive and social science research. Interaction Studies, 7, 297–337.

Malle, B. F., Guglielmo, S., & Monroe, A. E. (2014). A theory of blame. Psychological Inquiry, 25(2), 147–186.

Mayer, R. C., Davis, J. H., & Schoorman, F. D. (1995). An integrative model of organizational trust. Academy of Management Review, 20(3), 709–734.

McAndrew, F. T., & Koehnke, S. S. (2016). On the nature of creepiness. New Ideas in Psychology, 43, 10–15.

McKnight, D. H., Carter, M., Thatcher, J. B., & Clay, P. F. (2011). Trust in a specific technology: An investigation of its components and measures. ACM Transactions on Management Information Systems (TMIS), 2(2), 1–25.

Mori, M. (1970). The uncanny valley. Energy, 7(4), 33–35.

Nissen, M. E., & Sengupta, K. (2006). Incorporating software agents into supply chains: Experimental investigation with a procurement task. MIS Quarterly, 30(1), 145–166.

Pavlou, P. A., & Gefen, D. (2005). Psychological contract violation in online marketplaces: Antecedents, consequences, and moderating role. Information Systems Research, 16(4), 372–399.

Petrovic, S. (2019). “You have to get wet to learn how to swim” applied to bridging the gap between research into personnel scheduling and its implementation in practice. Annals of Operations Research, 275(1), 161–179.

Petter, S., Straub, D. W., & Rai, A. (2007). Specifying formative constructs in information systems research. MIS Quarterly, 31(4), 623–656.

Piazza, J., & Sousa, P. (2016). When injustice is at stake, moral judgements are not parochial. Proceedings of the Royal Society of London B, 283, 20152037.

Piazza, J., Sousa, P., Rottman, J., & Syropoulos, S. (2019). Which appraisals are foundational to moral judgment? Harm, injustice, and beyond. Social Psychological and Personality Science, 10(7), 903–913.

Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88(5), 879–903.

PwC. (2017). Sizing the prize: What’s the real value of AI for your business and how can you capitalize? White Paper. Retrieved December 2, 2019, from https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html.

Ringle, C. M., Wende, S., & Becker, J.-M. (2015). SmartPLS 3. Boenningstedt: SmartPLS GmbH.

Rousseau, D. M., Sitkin, S. B., Burt, R. S., & Camerer, C. (1998). Not so different after all: A cross-discipline view of trust. Academy of Management Review, 23(3), 393–404.

Russell, P. S., & Giner-Sorolla, R. (2011). Moral anger, but not moral disgust, responds to intentionality. Emotion, 11, 233–240.

Saif, I., & Ammanath, B. (2020). ‘Trustworthy AI’ is a framework to help manage unique risk. MIT Technology Review (March).

Seeber, I., Waizenegger, L., Seidel, S., Morana, S., Benbasat, I., & Lowry, P. B. Collaborating with Technology-Based Autonomous Agents: Issues and Research Opportunities. Internet Research (Forthcoming).

Shank, D. B., & DeSanti, A. (2018). Attributions of morality and mind to artificial intelligence after real-world moral violations. Computers in Human Behavior, 86(September), 401–411.

Shank, D. B., Graves, C., Gott, A., Gamez, P., & Rodriguez, S. (2019). Feeling our way to machine minds: People’s emotions when perceiving mind in artificial intelligence. Computers in Human Behavior, 98, 256–266.

Siemens. (2019). Artificial intelligence in industry: Intelligent production. Retrieved December 2, 2019, from https://new.siemens.com/global/en/company/stories/industry/ai-in-industries.html.

Sousa, P., & Piazza, J. (2014). Harmful transgressions qua moral transgressions: A deflationary view. Thinking and Reasoning, 20(1), 99–128.

Srivastava, S. C., & Chandra, S. (2018). Social presence in virtual world collaboration: An uncertainty reduction perspective using a mixed methods approach. MIS Quarterly, 42(3), 779–803.

Talbi, E.-G. (2016). Combining metaheuristics with mathematical programming, constraint programming and machine learning. Annals of Operations Research, 240(1), 171–215.

Umphress, E. E., Simmons, A. L., Folger, R., Ren, R., & Bobocel, R. (2013). Observer reactions to interpersonal injustice: The roles of perpetrator intent and victim perception. Journal of Organizational Behavior, 34(3), 327–349.

Wallach, W. (2010). Robot minds and human ethics: the need for a comprehensive model of moral decision making. Ethics and Information Technology, 12(3), 243–250.

Waytz, A., Heafner, J., & Epley, N. (2014). The mind in the machine: Anthropomorphism increases trust in an autonomous vehicle. Journal of Experimental Social Psychology, 52(May), 113–117.

Zhong, C. B., & Leonardelli, G. J. (2008). Cold and lonely: Does social exclusion literally feel cold? Psychological Science, 19(9), 838–842.

Zuboff, S. (1988). In the age of the smart machine: The future of work and power. New York, NY: Basic Books.

Appendices

Appendix A: Measurement items

Trust in Artificial Agent (adapted from McKnight et al. 2011).

1. [The artificial agent] seems to be a very reliable artificial agent.
2. If I were to work with [the artificial agent], I can trust the agent.
3. [The artificial agent] seems to be very dependable.
4. If you were to work with [The artificial agent], how safe you would feel? (1 = not at all to 7 = extremely safe).

Uncanniness (adapted from Shank et al. 2019).

1. I felt uneasy toward [the artificial agent]
2. I felt unsecure around [the artificial agent]

Perceptions of harm (adapted from Piazza et al. 2019).

1. George’s action was harmful
2. George’s action negatively affected the wellbeing of Lucy

Perceptions of injustice (adapted from Piazza et al. 2019).

1. George’s action was unjust
2. George’s action was unfair

Reported Wrongdoing (adapted from Russell and Giner-Sorolla 2011).

How wrong was the artificial agent’s action? (1 = not wrong at all to 7 = extremely wrong).

Appendix B: PLS cross-loading (Study 1)

| Item | Perceived harm | Trust | Uncanny | Injustice | Reported wrongdoing |
|---|---|---|---|---|---|
| Harm1 | 0.96 | −0.18 | 0.23 | 0.52 | 0.29 |
| Harm2 | 0.98 | −0.19 | 0.35 | 0.53 | 0.31 |
| Trust1 | −0.15 | 0.90 | −0.31 | −0.36 | −0.29 |
| Trust2 | −0.15 | 0.93 | −0.38 | −0.40 | −0.35 |
| Trust3 | −0.15 | 0.91 | −0.29 | −0.30 | −0.24 |
| Trust4 | −0.23 | 0.90 | −0.44 | −0.39 | −0.35 |
| Uncanny1 | 0.26 | −0.37 | 0.95 | 0.48 | 0.43 |
| Uncanny2 | 0.33 | −0.38 | 0.95 | 0.51 | 0.40 |
| Injustice1 | 0.53 | −0.38 | 0.48 | 0.96 | 0.45 |
| Injustice2 | 0.52 | −0.40 | 0.51 | 0.97 | 0.46 |
| Wrong_AI | 0.31 | −0.34 | 0.44 | 0.47 | 1.00 |
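Cross-loading tables of this kind are usually read by checking that each item loads more strongly on its own construct than on any other construct. The appendix itself does not state this criterion, so the check below is only a minimal sketch under that assumption. The values are transcribed from the Study 1 table; the item-to-construct mapping and the helper name `check_cross_loadings` are hypothetical and introduced for illustration only.

```python
# Column order of the Study 1 cross-loading table (Appendix B).
CONSTRUCTS = ["Perceived harm", "Trust", "Uncanny", "Injustice", "Reported wrongdoing"]

# Item -> (construct it is assumed to measure, loadings in CONSTRUCTS order).
# The mapping is an assumption inferred from the item names.
LOADINGS = {
    "Harm1": ("Perceived harm", [0.96, -0.18, 0.23, 0.52, 0.29]),
    "Harm2": ("Perceived harm", [0.98, -0.19, 0.35, 0.53, 0.31]),
    "Trust1": ("Trust", [-0.15, 0.90, -0.31, -0.36, -0.29]),
    "Trust2": ("Trust", [-0.15, 0.93, -0.38, -0.40, -0.35]),
    "Trust3": ("Trust", [-0.15, 0.91, -0.29, -0.30, -0.24]),
    "Trust4": ("Trust", [-0.23, 0.90, -0.44, -0.39, -0.35]),
    "Uncanny1": ("Uncanny", [0.26, -0.37, 0.95, 0.48, 0.43]),
    "Uncanny2": ("Uncanny", [0.33, -0.38, 0.95, 0.51, 0.40]),
    "Injustice1": ("Injustice", [0.53, -0.38, 0.48, 0.96, 0.45]),
    "Injustice2": ("Injustice", [0.52, -0.40, 0.51, 0.97, 0.46]),
    "Wrong_AI": ("Reported wrongdoing", [0.31, -0.34, 0.44, 0.47, 1.00]),
}


def check_cross_loadings(loadings, constructs):
    """Return (item, own loading, largest cross-loading) for every item whose
    own-construct loading does not exceed all of its cross-loadings in
    absolute value."""
    violations = []
    for item, (own_construct, row) in loadings.items():
        own_idx = constructs.index(own_construct)
        own_loading = abs(row[own_idx])
        largest_cross = max(abs(v) for i, v in enumerate(row) if i != own_idx)
        if own_loading <= largest_cross:
            violations.append((item, own_loading, largest_cross))
    return violations


violations = check_cross_loadings(LOADINGS, CONSTRUCTS)
print(violations)  # an empty list means no item violates the assumed criterion
```

The same function can be applied to the Study 2 and Study 3 tables by transcribing their rows into a dictionary of the same shape.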

Appendix C: PLS cross-loading (Study 2)

| Item | Perceived harm | Trust | Uncanny | Injustice | Reported wrongdoing |
|---|---|---|---|---|---|
| Harm1 | 0.62 | −0.20 | 0.14 | 0.13 | 0.04 |
| Harm2 | 0.82 | −0.23 | 0.24 | 0.17 | 0.18 |
| Trust1 | −0.21 | 0.84 | −0.36 | −0.25 | −0.32 |
| Trust2 | −0.24 | 0.92 | −0.53 | −0.35 | −0.37 |
| Trust3 | −0.22 | 0.87 | −0.41 | −0.30 | −0.38 |
| Trust4 | −0.33 | 0.85 | −0.54 | −0.45 | −0.44 |
| Uncanny1 | 0.27 | −0.53 | 0.94 | 0.42 | 0.27 |
| Uncanny2 | 0.24 | −0.48 | 0.95 | 0.56 | 0.44 |
| Injustice1 | 0.19 | −0.34 | 0.50 | 0.96 | 0.50 |
| Injustice2 | 0.21 | −0.43 | 0.51 | 0.97 | 0.59 |
| Wrong_AI | 0.16 | −0.44 | 0.38 | 0.57 | 1.00 |

Appendix D: PLS cross-loading (Study 3)

| Item | Perceived harm | Trust | Uncanny | Injustice | Reported wrongdoing |
|---|---|---|---|---|---|
| Harm1 | 0.91 | −0.11 | 0.40 | 0.21 | 0.13 |
| Harm2 | 0.65 | −0.04 | 0.22 | 0.05 | −0.05 |
| Trust1 | −0.17 | 0.92 | −0.40 | −0.12 | −0.16 |
| Trust2 | −0.07 | 0.96 | −0.39 | −0.19 | −0.15 |
| Trust3 | −0.07 | 0.90 | −0.33 | −0.10 | −0.13 |
| Trust4 | −0.06 | 0.92 | −0.42 | −0.26 | −0.23 |
| Uncanny1 | 0.39 | −0.38 | 0.95 | 0.41 | 0.31 |
| Uncanny2 | 0.38 | −0.41 | 0.95 | 0.42 | 0.36 |
| Injustice1 | 0.14 | −0.15 | 0.34 | 0.93 | 0.40 |
| Injustice2 | 0.20 | −0.20 | 0.47 | 0.96 | 0.55 |
| Wrong_AI | 0.08 | −0.18 | 0.35 | 0.51 | 1.00 |

Cite this article

Sullivan, Y., de Bourmont, M. & Dunaway, M. Appraisals of harms and injustice trigger an eerie feeling that decreases trust in artificial intelligence systems. Ann Oper Res 308, 525–548 (2022). https://doi.org/10.1007/s10479-020-03702-9

DOI: https://doi.org/10.1007/s10479-020-03702-9