Abstract

We consider a K-competing queues system with the additional feature of customer abandonment. Without abandonment, it is optimal to allocate the server to a queue according to the \(c \mu \)-rule. To derive a similar rule for the system with abandonment, we model the system as a continuous-time Markov decision process. Due to impatience, the Markov decision process has unbounded jump rates as a function of the state. Hence it is not uniformisable, and so far there has been no systematic direct way to analyse this. The Smoothed Rate Truncation principle is a technique designed to make an unbounded rate process uniformisable, while preserving the properties of interest. Together with theory securing continuity in the limit, this provides a framework to analyse unbounded rate Markov decision processes. With this approach, we have been able to find close-fitting conditions guaranteeing optimality of a strict priority rule.


1 Introduction

In this paper, we consider a server assignment problem. There are K customer classes, and each customer class \(1\le i\le K\) has holding costs \(c_i\) per unit time, per customer. There is a single server that can serve class i at rate \(\mu _i\). Arrivals occur according to independent Poisson streams, independently of the service process. Each class i customer abandons the system at rate \(\beta _i\), \(1\le i\le K\), independently of whether the customer is being served or waiting in the queue. The question we address in this paper is: what service policy minimises the expected total discounted cost and the expected average cost?

In the K-competing queues model without abandonments, it is well-known that the \(c \mu \)-rule is optimal. The \(c \mu \)-rule gives full priority to the queue with the highest index \(c_i\mu _i\), that is, the queue that gives the highest cost reduction per unit time. This rule was shown to be optimal simultaneously by Baras et al. (1985) and by Buyukkoc et al. (1985).
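As an illustration only, the \(c \mu \)-rule amounts to a single index comparison over the non-empty queues. The sketch below is not taken from the cited papers; the function name `c_mu_rule`, the 0-based class numbering and the convention of returning `None` for an empty system are assumptions made for this example.

```python
from typing import Optional, Sequence


def c_mu_rule(x: Sequence[int], c: Sequence[float], mu: Sequence[float]) -> Optional[int]:
    """Return the (0-based) class to serve under the c-mu rule, or None if the system is empty."""
    non_empty = [i for i, x_i in enumerate(x) if x_i > 0]
    if not non_empty:
        return None
    # Highest cost reduction per unit time: maximise c_i * mu_i over non-empty queues.
    return max(non_empty, key=lambda i: c[i] * mu[i])
```

For instance, with \(c=(1,3)\) and \(\mu =(2,1)\) the indices are 2 and 3, so the second class is served whenever it is present.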

Recently, there has been a revived interest in the K-competing queues model, with the additional feature of customer abandonment due to impatience. In this case the \(c \mu \)-rule is not always optimal. When we model this problem as a continuous-time Markov decision process (MDP) the abandonments induce unboundedness of the transition rates as a function of the state. Hence, uniformisation is not possible and the standard (discrete time) techniques are not available. In the literature several approaches have been tried to deal with this difficulty. We may categorise them in three main approaches.

1. Study of a relaxation or approximate version of the original problem (see e.g. Atar et al. 2010; Ayesta et al. 2011; Larrañaga et al. 2013, 2015). The obtained policies may serve as heuristics.

2. Application of specific coupling techniques to obtain an optimal policy. Typically these papers (see e.g. Salch et al. 2013; Down et al. 2011, see also Ertiningsih et al. 2015) are limited to special cases, as the coupling gets more tedious in a more general setting. On the other hand, non-Markovian service time distributions and/or a non-Markovian arrival process may be handled.

3. Truncation of the process to make it uniformisable, followed by the use of discrete-time techniques to derive properties of the optimal policy (see e.g. Down et al. 2011; Bhulai et al. 2014; Blok and Spieksma 2015). This is the solution method that we will follow in this paper.

The first approach is most prominent in the literature. Atar et al. (2010) consider a K-competing queues problem with many servers and introduce the \(c \mu /\beta \)-rule, which prioritises the queue with the highest index \(c_i\mu _i/\beta _i\). They show that following the \(c \mu /\beta \)-rule is asymptotically optimal in the overloaded regime, as the number of servers tends to infinity.

Ayesta et al. (2011) studied the problem as well. They derive priority rules similar to the \(c \mu /\beta \)-rule by analytically solving the case with one or two customers initially present and without arrivals.

Larrañaga et al. (2013) have studied a fluid approximation of the multi-server variant of the competing queues problem. In this fluid approximation, the \(c \mu /\beta \)-rule is shown to be optimal in the overloaded regime, and for \(K=2\) a switching curve policy is shown to be optimal in the underloaded regime. In Larrañaga et al. (2015) the same authors study asymptotic optimality in the multi-server competing queues problem for the average expected cost criterion. They consider the problem as a restless multi-armed bandit problem, compute the Whittle index, and show that the resulting index policy is asymptotically optimal for convex holding cost. The asymptotics concern large states, and light and heavy traffic regimes. The paper also connects the \(c \mu /\beta \)-rule to the Whittle index for fluid approximations.

Other papers do not focus on heuristics, but try to find a subset of the input parameters for which a strict priority rule can be proven to be optimal. Salch et al. (2013) study the competing queues system with a restriction to a maximum of K arrivals. Customers may be impatient, but do not leave the system when they become impatient. Thus, the model is, in fact, a scheduling problem, and the criterion is to minimise the expected weighted number of impatient customers. With the use of a coupling and an interchange argument optimality of a priority policy is proved, provided a set of three conditions on the service, impatience and cost rates holds.

The paper of Down et al. (2011) considers a two-competing queues reward system, where the two classes have equal service rates. A coupling argument is employed to show that if type 1 customers have the largest abandonment rate and reward per unit time, then prioritising these customers is optimal.

The approach that we will carry out is the following. First, we model the problem as a continuous-time MDP. To make the MDP uniformisable, a truncation is necessary. After uniformisation, the truncated processes can be analysed by value iteration. To justify appropriate convergence of the truncated processes to the original model, a limit theorem is required. To our knowledge, so far such a theorem is available only for the discounted cost criterion, see Blok and Spieksma (2015). Via a vanishing discount approach, the results are transferred to the average cost criterion (see Blok and Spieksma 2017 for the justification). Therefore, we will first show for the discounted cost criterion that prioritising type i customers is optimal, if type i has maximum index with respect to c, \(c \mu \) and \(c\mu /\beta \). These conditions are similar to those of Salch et al. (2013); however, the conditions of Salch et al. (2013) are implied by ours. Since the resulting index policy is optimal for all small discount factors, even strong Blackwell optimality of this policy follows.

In the paper of Down et al. (2011) a similar approach is used. The limit argument relies on specific properties of the model and a special truncation that does not affect optimality of the aforementioned priority policy. Due to the involved nature of the truncation, it seems unlikely that Down et al. (2011) can be extended to more dimensions or to heterogeneous service rates. The results of our paper can therefore be viewed as an extension of Down et al. (2011). In this paper, we use a different truncation technique called Smoothed Rate Truncation (SRT). This technique has been introduced by Bhulai et al. (2014) and can be utilised to make a process uniformisable while keeping the structural properties intact.

The paper is organised as follows. In Sect. 2 we give a complete description of the model, and we present the main results. Section 3 contains the core of our analysis. First, it describes the Smoothed Rate Truncation in more detail, then the structural properties of the value function are derived. In Sect. 4 we prove the main theorem. This can be done by invoking the limit theorems of Blok and Spieksma (2017) and Blok and Spieksma (2015). Section 5 presents some numerical examples that show that none of the used conditions are redundant. In the “Appendix”, we provide the proofs of the propositions in Sect. 3.

2 Modelling and main result

2.1 Problem formulation

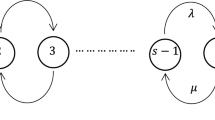

We consider K stations that are served by a single server. Customers arrive to the stations according to independent Poisson processes with rates \(\lambda _i> 0\) for \(i=1,\ldots , K\), respectively. The service requirements of class i customers are exponentially distributed with parameter \(\mu _i>0\). Customers have limited patience: they are willing to wait an exponential time with parameter \(\beta _i>0\) for class i. We allow abandonment during service as well, resulting in an abandonment rate in station i of \(\beta _i x_i\) if there are \(x_i\) customers present at station i. In Sect. 2.2 we will also discuss alternative modelling choices.

The service requirements, abandonments and arrivals are all stochastically independent of each other. Class i customers carry holding costs \(c_i\ge 0\) per unit time, \(i=1,\ldots , K\). The service regime is pre-emptive.

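We will study this problem in the framework of Markov decision theory. To this end, let the state space be \({S}={\mathbb {N}}_0^K\). The action space is \({\mathcal {A}}=\{1,\ldots , K\}\), where action \(a=i\) corresponds to assigning the server to station i. Thus, we only allow idling if one or more queues are empty. By \( {\varPi }=\{\pi :S\rightarrow {\mathcal {A}}\}\) denote the collection of stationary deterministic policies. For \(\pi \in {\varPi }\), a rate matrix \(Q(\pi )=(q_{xy}(\pi ))_{x,y\in S}\) and cost rate \(c(\pi )=(c_x(\pi ))_{x\in S}\) are given by

$$\begin{aligned} q_{xy}(\pi )=\left\{ \begin{array}{ll} \lambda _i, &{} \quad y=x+e_i,\ 1\le i\le K,\\ \beta _i x_i+\mu _i{\mathbf {1}}_{\{\pi (x)=i\}}, &{} \quad y=x-e_i,\ x_i>0,\ 1\le i\le K,\\ -\sum _{i=1}^K(\lambda _i+\beta _i x_i)-\mu _{\pi (x)}{\mathbf {1}}_{\{x_{\pi (x)}>0\}}, &{} \quad y=x,\\ 0, &{} \quad \text {else,} \end{array} \right. \qquad c_x(\pi )=\sum _{i=1}^K c_ix_i, \end{aligned}$$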

where \(e_i\) stands for the i-th unit vector. One can then define a measurable space \(({\varOmega },{{{\mathcal {F}}}})\), a stochastic process \(X=\{X_t\}_{t\ge 0}\), a filtration \(\{{{{\mathcal {F}}}}_t\}_{t\ge 0}\) to which X is adapted, and a probability distribution \({\mathbb {P}}^\pi _\nu \) on \(({\varOmega },{{{\mathcal {F}}}})\), such that X is the minimal Markov process with q-matrix \(Q(\pi )\), for each initial distribution \(\nu \) on \(S\), and each policy \(\pi \in {\varPi }\). By \(P_t(\pi )\), \(t\ge 0\), we denote the corresponding minimal transition function and by \({\mathbb {E}}^\pi _\nu \) the expectation operator corresponding to \({\mathbb {P}}^\pi _\nu \). We write \({\mathbb {P}}^\pi _x\) and \({\mathbb {E}}^\pi _x\) when \(\nu =\delta _x\) is the Dirac measure at state x.

The problem of interest is finding the policy \(\pi \in {\varPi }\) that minimises the total expected discounted cost and the expected average cost. To this end, let \(\alpha >0\) and define

$$\begin{aligned} V_\alpha ^\pi (x)={\mathbb {E}}^\pi _x\Big [\int _0^\infty e^{-\alpha t}\,c_{X_t}(\pi )\,dt\Big ] \end{aligned}$$

to be the total expected \(\alpha \)-discounted cost under policy \(\pi \), given that the system is in state x initially, \(x\in S\). Then the minimum total expected cost value function \(V_\alpha \) is defined by

$$\begin{aligned} V_\alpha (x)=\inf _{\pi \in {\varPi }}V_\alpha ^\pi (x),\quad x\in S. \end{aligned}$$

If \(V_\alpha ^\pi =V_\alpha \), then \(\pi \) is said to be an \(\alpha \)-discount optimal policy. If there exists \(\alpha _0>0\), such that \(\pi \) is \(\alpha \)-discount optimal for \(\alpha \in (0,\alpha _0)\), then \(\pi \) is called a strongly Blackwell optimal policy.

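By \(g^\pi \) given by

$$\begin{aligned} g^\pi (x)=\limsup _{T\rightarrow \infty }\frac{1}{T}\,{\mathbb {E}}^\pi _x\Big [\int _0^T c_{X_t}(\pi )\,dt\Big ] \end{aligned}$$

we denote the expected average cost under policy \(\pi \), given the initial state x, \(x\in S\). Again, the minimum expected average cost is defined by

$$\begin{aligned} g(x)=\inf _{\pi \in {\varPi }}g^\pi (x),\quad x\in S, \end{aligned}$$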

and if \(g^\pi =g\), then \(\pi \) is an optimal policy.

It is not to be expected that the optimal policy has a simple description in general. In this paper, we restrict ourselves to providing sufficient conditions for optimality of an index policy.

2.2 Main result

The two main results of our paper are Theorems 1 and 2, providing sufficient conditions for optimality of the Smallest Index Policy.

Definition 1

The Smallest Index Policy assigns the server to the non-empty station with the smallest index. The policy idles only if no customers are present.

Theorem 1

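Suppose that the stations can be ordered such that, for \(1\le i\le j\le K,\) the following three conditions hold:

$$\begin{aligned} c_i\ge c_j,\qquad c_i\mu _i\ge c_j\mu _j,\qquad \frac{c_i\mu _i}{\beta _i}\ge \frac{c_j\mu _j}{\beta _j}. \qquad (1) \end{aligned}$$

Then the Smallest Index Policy is \(\alpha \)-discount optimal for any \(\alpha >0\), and hence also strongly Blackwell optimal.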

Theorem 2

Under the conditions of Theorem 1, the Smallest Index Policy is average cost optimal.

The proofs are postponed until Sect. 4. In Sect. 5 we give examples showing that, if any of the three conditions of (1) is omitted, the Smallest Index Policy can fail to be optimal.
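To make the statement concrete, the following sketch checks the three ordering conditions, in the adjacent-class form \(c_i\ge c_{i+1}\), \(c_i\mu _i\ge c_{i+1}\mu _{i+1}\), \(c_i\mu _i/\beta _i\ge c_{i+1}\mu _{i+1}/\beta _{i+1}\) used in Proposition 4 and Corollary 1, and implements the Smallest Index Policy of Definition 1. The function names, the dictionary keys and the 0-based class numbering are assumptions of this example, not notation from the paper.

```python
from typing import Dict, Optional, Sequence


def check_conditions(c: Sequence[float], mu: Sequence[float], beta: Sequence[float]) -> Dict[str, bool]:
    """Check the three monotonicity conditions for classes already listed in priority order."""
    pairs = range(len(c) - 1)  # adjacent pairs suffice, since each condition is transitive
    return {
        "c": all(c[i] >= c[i + 1] for i in pairs),
        "c_mu": all(c[i] * mu[i] >= c[i + 1] * mu[i + 1] for i in pairs),
        "c_mu_over_beta": all(
            c[i] * mu[i] / beta[i] >= c[i + 1] * mu[i + 1] / beta[i + 1] for i in pairs
        ),
    }


def smallest_index_policy(x: Sequence[int]) -> Optional[int]:
    """Serve the non-empty station with the smallest index; None means the server idles."""
    for i, x_i in enumerate(x):
        if x_i > 0:
            return i
    return None
```

If all three entries returned by `check_conditions` are true, the theorems apply to the given ordering; a false entry only means that the sufficient conditions fail, not that the policy is suboptimal.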

Alternative modelling choices. In our model the cost function is a holding cost \(\sum _i{c_ix_i}\) per unit time, when the system is in state x. In many applications a penalty (say \(P_i\) for class i) is charged if a customer abandons the system due to impatience. Then the cost per unit time is given by \(\sum _i P_i\beta _i x_i\). Substituting \(c_i=P_i\beta _i\), \(i=1,\ldots , K\), shows that these cost structures are equivalent.

We modelled the system such that customers can leave the system while being in service. In some models, it may be more realistic that abandonment does not take place after service has started. However, if the abandonment rates are smaller than the service rates, i.e., \(\beta _i< \mu _i\) for all i, then our analysis is still valid after an appropriate parameter change. That is, we consider the system with service rates \({\hat{\mu }}_i=\mu _i-\beta _i>0\). Abandonments during service or service completions in the revised model correspond to a service completion in the original one.

If, for one or more classes, the abandonment rates are greater than or equal to the associated service rates, then this substitution is clearly not possible. However, serving that customer class delays the process of emptying the system. It follows directly that in this case, it can never be optimal to serve these classes of customers. Hence, when there are only customers of that type present then the server should idle in order to minimise the expected average cost. Therefore, the optimal policy never serves class i if \(\mu _i\le \beta _i\). For the remaining customer classes with \(\mu _i>\beta _i\), the Smallest Index Policy is optimal, whenever these classes can be ordered, such that \(c\searrow ,\ c{\hat{\mu }}\searrow ,\ c{\hat{\mu }}/\beta \searrow \).
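The parameter change described above is easy to state in code. The sketch below is only an illustration; the function name and the use of `None` to mark a class that is never served are assumptions of this example.

```python
from typing import List, Optional, Sequence


def effective_service_rates(mu: Sequence[float], beta: Sequence[float]) -> List[Optional[float]]:
    """Return mu_hat_i = mu_i - beta_i where this is positive, and None for classes with mu_i <= beta_i."""
    return [m - b if m > b else None for m, b in zip(mu, beta)]
```

The ordering conditions are then checked with \({\hat{\mu }}\) in place of \(\mu \), restricted to the classes for which a rate is returned.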

Finally, it is possible to allow idling at all times. However, it can easily be shown that it is not optimal to have unforced idling. Therefore, we ignore this option for the sake of notational convenience.

2.3 Structural properties

As mentioned in the introduction, Sect. 1, we will first study the \(\alpha \)-discounted cost problem. Crucial in establishing optimality of the Smallest Index Policy are certain properties of the value function. If \(V_\alpha \) is non-decreasing (I) and weighted Upstream Increasing (wUI), then optimality of the Smallest Index Policy can be directly deduced from the \(\alpha \)-discounted cost optimality equation under certain conditions on the Markov decision problem (cf. Blok and Spieksma 2015, 2017) that we will not discuss explicitly in this paper. We will next define the structural properties (I) and (wUI).

Definition 2

The function \(f:{S}\rightarrow {\mathbb {R}}\) is called weighted Upstream Increasing (wUI) if \(f\in wUI\), with wUI defined by

The function \(f:{S}\rightarrow {\mathbb {R}}\) is called non-decreasing (I) if \(f\in I\), with I defined by

The following lemma makes the connection between the structural properties of the \(\alpha \)-discounted cost value function and optimality of the Smallest Index Policy.

Lemma 1

Let the discount factor \(\alpha >0\). Then, the \(\alpha \)-discounted cost value function \(V_\alpha \) is well-defined and finite. Suppose \(V_\alpha \in wUI \cap I\), then the Smallest Index Policy is \(\alpha \)-discount optimal.

Proof

One can view the MDP as a negative dynamic programming problem (cf. Strauch 1966), for which simple conditions allow us to draw the conclusions that we aim for. Since later on we will have to include perturbations, we will use (Blok and Spieksma 2015, Theorem 4.2). The conditions in that theorem are all easily verified, except for the following two conditions:

- P1: There exist a function \(F:S\rightarrow (0,\infty )\) and a constant \(\gamma <\alpha \) with the properties that

$$\begin{aligned} \sum _{y\in S}q_{xy}(\pi )F_y\le \gamma F_x,\quad x\in S,\ \pi \in {\varPi },\qquad \sup _{x\in S}\frac{c_x(\pi )}{F_x}<\infty . \end{aligned}$$

If F satisfies the first property, then F is called a \(\gamma \)-drift function for the MDP.

- P2: There exist a function \(G:S\rightarrow (0,\infty )\) and a constant \(\xi \), such that the following properties are satisfied.

  - G is a \(\xi \)-drift function for the MDP.

  - G is an F-moment function, i.e. there exists an increasing sequence \(\{K_n\}_n\), \(K_n\subset S\), \(|K_n|<\infty \), \(K_n\uparrow S\), \(n\rightarrow \infty \), such that $$\begin{aligned} \inf _{x\not \in K_n}\frac{G_x}{F_x}\rightarrow \infty ,\quad n\rightarrow \infty . \end{aligned}$$

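We check property P1. Take \(F_x=e^{\epsilon (x_1+\cdots +x_K)}\), with \(\epsilon \) to be determined. Then, for every \(x\in S\) and \(\pi \in {\varPi }\),

$$\begin{aligned} \sum _{y\in S}q_{xy}(\pi )F_y&=F_x\Big [\sum _{i=1}^K\lambda _i(e^{\epsilon }-1)+\Big (\sum _{i=1}^K\beta _ix_i+\mu _{\pi (x)}{\mathbf {1}}_{\{x_{\pi (x)}>0\}}\Big )(e^{-\epsilon }-1)\Big ]\\&\le F_x\sum _{i=1}^K\lambda _i(e^{\epsilon }-1). \end{aligned}$$

Clearly, one can choose \(\epsilon >0\) sufficiently small, so that

$$\begin{aligned} \gamma :=\sum _{i=1}^K\lambda _i(e^{\epsilon }-1)<\alpha . \end{aligned}$$

Since F increases exponentially fast in \(x_i\), \(1\le i\le K\), and c is linear in \(x_i\), the second condition in P1 is easily verified.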

Property P2 immediately follows by setting \(G_x=e^{\epsilon '(x_1+\cdots +x_K)}\), for any \(\epsilon '>\epsilon \).
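The drift property of the exponential function F can also be checked numerically. The sketch below assumes the rate structure read off from the model description in Sect. 2.1 (arrivals at rate \(\lambda _i\), departures at rate \(\beta _ix_i\), plus \(\mu _a\) for the served station a when it is non-empty); the function names and the particular choice of \(\epsilon \) are assumptions of this example.

```python
import math
from itertools import product


def drift_ratio(x, action, lam, mu, beta, eps):
    """Return sum_y q_xy(action) * F_y / F_x for F_x = exp(eps * (x_1 + ... + x_K))."""
    up_rate = sum(lam)                                   # every arrival multiplies F by exp(eps)
    down_rate = sum(b * x_i for b, x_i in zip(beta, x))  # abandonments
    if x[action] > 0:
        down_rate += mu[action]                          # service completion at the served station
    # Every departure multiplies F by exp(-eps); the diagonal term contributes the -1.
    return up_rate * (math.exp(eps) - 1.0) + down_rate * (math.exp(-eps) - 1.0)


def drift_epsilon(lam, alpha):
    """One admissible choice: eps > 0 with sum(lam) * (exp(eps) - 1) = alpha / 2 < alpha."""
    return math.log(1.0 + alpha / (2.0 * sum(lam)))
```

Since the departure term is non-positive, the ratio never exceeds \(\sum _i\lambda _i(e^{\epsilon }-1)\), whatever the state and action.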

Let \(V_\alpha \in wUI \cap I\), let \(1\le {j_1}\le j_2 \le K\). Suppose x is such that \(x_{j_1}, x_{j_2} >0\), then \(V_\alpha \in wUI\) implies

Now by virtue of (Blok and Spieksma 2015, Theorem 4.2), \(V_\alpha \) is a solution to the Discounted Cost Optimality Equation (DCOE), i.e.

The DCOE yields that if class \({j_1}\) and \({j_2}\) customers are both present, then it is optimal to serve class \({j_1}\) rather than class \({j_2}\).

Further, since \(V_\alpha \) is non-decreasing we have for \(1\le j\le K\), and x with \(x_j>0\) that

with 0 corresponding to the cost if an empty queue is served. Hence idling is never optimal; it is optimal to serve a customer whenever possible. We conclude that the Smallest Index Policy is optimal. \(\square \)

3 Discrete time discounted cost analysis

3.1 Smoothed Rate Truncation

The abandonment rates increase linearly in the number of waiting customers. Hence the transition rates are unbounded as a function of the state. Thus, the system is not uniformisable and so there is no discrete-time equivalent to the continuous-time problem. To make discrete-time theory available, we approximate the MDP with a sequence of (essentially) finite state MDPs. Unfortunately, standard state space truncations generally destroy the structural properties of interest due to boundary effects.

To this end, we have developed the Smoothed Rate Truncation (SRT). This perturbation technique was first introduced in Bhulai et al. (2014). In that paper, SRT is applied to a Markov cost process, and properties of the value function are proven. The distinguishing feature of SRT is that the transition rates are decreased in all states, also close to the origin. This makes the jump rates highly state dependent and complicates the analysis, but it is the key feature of SRT that ensures that the properties are preserved.

The idea of SRT is as follows. Every transition that moves the system into a higher state in one or more dimensions is linearly decreased as a function of these coordinates. This naturally generates a finite subset of the space, that cannot be left with positive probability under any policy. As a consequence, recurrent classes under any policy are always finite. As we get closer to the boundary of the finite set, the rates are smoothly truncated to 0. On the finite state space, the transition rates are bounded. Outside the finite set, the rates can be arbitrarily chosen, since these states are inessential. In particular, they can be chosen such that the jump rates are uniformly bounded.

In our model, a truncation parameter \(N=(N_1,\ldots , N_K)\in {\mathcal {N}}=(\mathbb {N}\cup \{\infty \})^K\) defines the size of the state space. Since the empty state can always be reached, and there is a positive probability of an arrival in any queue within the finite set (clearly not on the boundary), the set of essential states is given by \(S^N=\{x\in S\,|\, x_i\le N_i,\ i=1,\ldots ,K\}\).

SRT prescribes a truncation of all transitions that move the system into a ‘larger’ state. In this model only arrivals move the system to a larger state, hence for all i the arrival rates \(\lambda _i\) are replaced by new rates \(\lambda _i^N(x)\) in state x. The smoothed arrival rates are given by

$$\begin{aligned} \lambda _i^N(x)=\lambda _i\Big (1-\frac{x_i}{N_i}\Big )^+,\quad 1\le i\le K. \end{aligned}$$

The result is a uniformisable MDP for each \(N\in {\mathcal {N}}\), which leads to a collection of parametrised MDPs \(\{X^N\}_{N\in {\mathcal {N}}}\). Here \(N=\infty ^K\in {\mathcal {N}}\) corresponds to the original model. For \(\pi \in {\varPi },\ N\in {\mathcal {N}}\) the transition rate matrix \(Q^N(\pi )\) is given by

As has been mentioned already, outside \(S^N\) it is possible to choose the rates as we like, for these states are inessential. In particular, we can choose the new abandonment rates of class i to be bounded by \(N_i\beta _i\).

Furthermore, the perturbed MDP is easily checked to satisfy the conditions of (Blok and Spieksma 2015, Theorems 4.2 and 5.1). The main ingredients of its verification are analogous to the proof of Lemma 1. The results in Blok and Spieksma (2015) guarantee that the value function \(V^{(N)}_\alpha \) of the N-perturbed MDP is well-defined with \(V^{(N)}_\alpha \rightarrow V_\alpha \), \(N\rightarrow \infty ^K\), and any limit point of \(\alpha \)-discount optimal policies for the N-perturbation, \(N\rightarrow \infty ^K\), is \(\alpha \)-discount optimal for the original MDP.
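A minimal sketch of the smoothing, assuming the linear form \(\lambda _i(1-x_i/N_i)^+\) that can be read off from the smoothed arrivals operator in Definition 3. The function names are assumptions of this example; the second function computes the bound on the total jump rate on \(S^N\) that makes uniformisation possible.

```python
from typing import Sequence


def smoothed_arrival_rate(lam_i: float, x_i: int, N_i: float) -> float:
    """Arrival rate of class i in a state with x_i class-i customers, truncated linearly at N_i."""
    # N_i = float("inf") gives x_i / N_i = 0.0, i.e. the original, untruncated rate.
    return lam_i * max(0.0, 1.0 - x_i / N_i)


def jump_rate_bound(lam: Sequence[float], mu: Sequence[float],
                    beta: Sequence[float], N: Sequence[int]) -> float:
    """Upper bound on the total jump rate in any state of S^N (finite N only)."""
    return sum(lam) + sum(b * n for b, n in zip(beta, N)) + max(mu)
```

The arrival rate decreases in every state with \(x_i>0\), not only near the boundary, which is the distinguishing feature of SRT noted earlier in this section.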

3.2 Dynamic programming

Apart from the parameter space \({\mathcal {N}}\), we will need to introduce a special subset \({\mathcal {N}}(\lambda )\), given by

Throughout the rest of this section, we fix the truncation parameter \(N\in {\mathcal {N}}\) and discount factor \(\alpha >0\). Our goal is to show that \(V_\alpha ^{(N)}\in wUI\cap I\) for all \(\alpha >0\) and \(N\in {\mathcal {N}}(\lambda )\). We use the following short-hand notation

Without loss of generality, we may assume that \({\bar{\lambda }} + \beta _N +\mu = 1\). The discrete-time uniformised MDP is defined by

Let \(V_{{\bar{\alpha }}}^{(N,d)}\) denote the expected discrete-time \({\bar{\alpha }}\)-discounted optimal cost:

Then, \({V}_{{\bar{\alpha }}}^{(N,d)}=V_{\alpha }^{(N)}\) (cf. Serfozo 1979). Moreover, we can approximate \({V}_{{\bar{\alpha }}}^{(N,d)}\) by using the value iteration algorithm. Indeed, the uniformised N-perturbed MDP in discrete time satisfies the conditions from Wessels (1977) for value iteration to converge. This is easily deduced from the fact that the N-perturbed MDP in continuous time satisfies the conditions of (Blok and Spieksma 2015, Theorems 4.2 and 5.1), which are the continuous time versions of the conditions developed by Wessels in discrete time.

Let \(v_{n,{\bar{\alpha }}}^{(N,d)}: {S}\rightarrow {\mathbb {R}}\) for \(n\ge 0\) be given by the following iteration scheme. Put \(v_{0,{\bar{\alpha }}}^{(N,d)}\equiv 0\), and

We will prove by induction that \(v_{n,{\bar{\alpha }}}^{(N,d)}\in wUI \cap I\) on \({S}^N\), for all \(n\ge 0\).

To employ the induction argument, we need three additional structural properties: convexity, supermodularity and bounded increasingness. We will specify these hereafter. The initial function \(v_{0,{\bar{\alpha }}}^{(N,d)}\equiv 0\) trivially satisfies all these properties, which gives the induction base. For the induction step, we will use Event Based Dynamic Programming (EBDP). This method uses event operators, representing arrivals, departures or cost, as building blocks to construct the iteration step of the value iteration algorithm.

Definition 3

Let \(f:{S}\rightarrow {\mathbb {R}}\), then define

1. (a) The total smoothed arrivals operator

$$\begin{aligned} {\mathcal {T}}_{SA}^{N}f:={\bar{\lambda }}^{-1}\sum _{i=1}^K \lambda _i {\mathcal {T}}_{SA(i)}^Nf, \end{aligned}$$

using

(b) the smoothed arrivals operator given by

$$\begin{aligned} {\mathcal {T}}_{SA(i)}^Nf(x):=\left\{ \begin{array}{ll} \left( 1-\frac{x_i}{N_i}\right) f(x+e_i) +\frac{x_i}{N_i}f(x), &{} \quad x_i\le N_i,\\ f(x), &{} \quad \text {else.} \end{array} \right. \end{aligned}$$

2. (a) The total increasing departures operator

$$\begin{aligned} {\mathcal {T}}_{ID}^{N}f:=\beta _N^{-1}\sum _{i=1}^K \beta _i N_i {\mathcal {T}}_{ID(i)}^Nf, \end{aligned}$$

using

(b) the increasing departures operator

$$\begin{aligned} {\mathcal {T}}_{ID(i)}^Nf(x):=\left\{ \begin{array}{ll} \frac{x_i}{N_i}f(x-e_i)+ \left( 1-\frac{x_i}{N_i}\right) f(x), &{} \quad x_i \le N_i,\\ f(x-e_i), &{} \quad \text {else.} \end{array} \right. \end{aligned}$$

3. The cost operator

$$\begin{aligned} {\mathcal {T}}_Cf(x):= \sum _{i=1}^K c_ix_i +f(x). \end{aligned}$$

4. The cost + increasing departures operator

$$\begin{aligned} {\mathcal {T}}_{CID}^{N}:=\beta _N^{-1} {\mathcal {T}}_C(\beta _N {\mathcal {T}}_{ID}^{N}). \end{aligned}$$

5. The movable server operator

$$\begin{aligned} {\mathcal {T}}_{MS}f(x):= \min _{1\le j\le K} \Big \{\frac{\mu _j}{\mu } f((x-e_j)^+) + \Big (1-\frac{\mu _j}{\mu }\Big )f(x)\Big \}. \end{aligned}$$

6. For \(f_1,f_2,f_3:{S}\rightarrow {\mathbb {R}}\), the uniformisation operator

$$\begin{aligned} {\mathcal {T}}_{UNIF}^N(f_1,f_2,f_3):= {\bar{\lambda }} f_1 +\beta _N f_2 + \mu f_3. \end{aligned}$$

7. The discount operator

$$\begin{aligned} {\mathcal {T}}_{DISC}^{{\bar{\alpha }}}f:= {\bar{\alpha }}f. \end{aligned}$$

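Now, \(v_{n+1,{\bar{\alpha }}}^{(N,d)}\) is constructed from \(v_{n,{\bar{\alpha }}}^{(N,d)}\) as follows:

$$\begin{aligned} v_{n+1,{\bar{\alpha }}}^{(N,d)}={\mathcal {T}}_{DISC}^{{\bar{\alpha }}}\Big ({\mathcal {T}}_{UNIF}^N\big ({\mathcal {T}}_{SA}^{N}v_{n,{\bar{\alpha }}}^{(N,d)},\ {\mathcal {T}}_{CID}^{N}v_{n,{\bar{\alpha }}}^{(N,d)},\ {\mathcal {T}}_{MS}v_{n,{\bar{\alpha }}}^{(N,d)}\big )\Big ). \end{aligned}$$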
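A minimal sketch of one such value-iteration step on the finite box \(S^N\), composing the operators of Definition 3. Several choices here are assumptions of this example rather than statements from the paper: \(\mu \) is taken to be \(\max _j\mu _j\), the rates are not rescaled to sum to one (so the step divides by \(\alpha \) plus the total rate, which reduces to multiplying by \({\bar{\alpha }}=1/(\alpha +1)\) under the normalisation used in the text), value functions are stored as dictionaries keyed by state tuples, and all function names are hypothetical.

```python
from itertools import product


def shift(x, i, d):
    """Return the state x + d * e_i as a tuple."""
    y = list(x)
    y[i] += d
    return tuple(y)


def value_iteration_step(v, lam, mu, beta, c, N, alpha):
    """Apply cost, smoothed arrivals, increasing departures and movable server once."""
    K = len(lam)
    mu_max = max(mu)  # assumption: mu denotes the largest service rate
    total = sum(lam) + sum(b * n for b, n in zip(beta, N)) + mu_max
    new_v = {}
    for x in product(*(range(n + 1) for n in N)):
        acc = sum(c_i * x_i for c_i, x_i in zip(c, x))  # holding cost in state x
        for i in range(K):
            frac = x[i] / N[i]
            # Smoothed arrival: move up with weight 1 - x_i/N_i, otherwise stay.
            up = v[shift(x, i, 1)] if x[i] < N[i] else v[x]
            acc += lam[i] * ((1.0 - frac) * up + frac * v[x])
            # Increasing departure: move down with weight x_i/N_i, otherwise stay.
            down = v[shift(x, i, -1)] if x[i] > 0 else v[x]
            acc += beta[i] * N[i] * (frac * down + (1.0 - frac) * v[x])
        # Movable server: pick the class whose service gives the smallest value;
        # serving an empty queue leaves the state unchanged.
        acc += min(
            mu[j] * (v[shift(x, j, -1)] if x[j] > 0 else v[x]) + (mu_max - mu[j]) * v[x]
            for j in range(K)
        )
        new_v[x] = acc / (alpha + total)
    return new_v


def value_iteration(lam, mu, beta, c, N, alpha, steps):
    """Start from v_0 = 0 and apply the step the given number of times."""
    v = {x: 0.0 for x in product(*(range(n + 1) for n in N))}
    for _ in range(steps):
        v = value_iteration_step(v, lam, mu, beta, c, N, alpha)
    return v
```

Starting from \(v_0\equiv 0\), the first iterate is simply the holding cost divided by \(\alpha \) plus the total rate, which gives a quick sanity check of an implementation.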

As has been mentioned, it is sufficient to verify that \( v_{n,{\bar{\alpha }}}^{(N,d)}\) has the desired structural properties on the finite set \({S}^N\). Therefore, we define the following collections of functions restricted to \({S}^N\).

Definition 4

(Properties on \({S}^N\))

1. Weighted upstream increasing functions on \({S}^N\)

$$\begin{aligned}&wUI_N= \{f:{S}\rightarrow {\mathbb {R}}\ |\quad \mu _i(f(x+e_i+e_{i+1})-f(x+e_{i+1}))\\&\quad -\mu _{i+1}(f(x+e_i+e_{i+1})-f(x+e_i)) \ge 0,\\&\qquad \text { for all } x,x+e_i+e_{i+1} \in {S}^N,\ 1\le i< K\}. \end{aligned}$$

2. Increasing functions on \({S}^N\)

$$\begin{aligned} I_N=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+e_i)-f(x)\ge 0,\text { for all } x,x+e_i \in {S}^N,\ 1\le i \le K\}. \end{aligned}$$

3. Supermodular functions on \({S}^N\)

$$\begin{aligned}&\,{Super}_N=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+e_i+e_j)-f(x+e_i)-f(x+e_j)+f(x)\ge 0,\\&\quad \text { for all } x,x+e_i+e_{j} \in {S}^N,\ 1\le i<j\le K\}. \end{aligned}$$

4. Convex functions on \({S}^N\)

$$\begin{aligned}&Cx_N=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+2e_i)\\&\quad -2f(x+e_i)+f(x)\ge 0,\text { for all } x,x+2e_i \in {S}^N,\ 1\le i \le K\}. \end{aligned}$$

5. Bounded increasing functions on \({S}^N\)

$$\begin{aligned} Bd_N=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+e_i)-f(x)\le \frac{c_i}{\beta _i},\text { for all } x,x+e_i \in {S}^N,\ 1\le i \le K\}. \end{aligned}$$
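The membership conditions of Definition 4 can be tested directly on a finite box. The sketch below does so for \(I_N\) and \(wUI_N\) only; the function names, the dictionary representation of f and the numerical tolerance are assumptions of this example.

```python
from itertools import product


def box(N):
    """All states x with 0 <= x_i <= N_i."""
    return list(product(*(range(n + 1) for n in N)))


def step(x, i):
    """Return the state x + e_i as a tuple."""
    y = list(x)
    y[i] += 1
    return tuple(y)


def is_increasing(f, N, tol=1e-12):
    """Check f(x + e_i) - f(x) >= 0 whenever x and x + e_i lie in S^N."""
    return all(
        f[step(x, i)] - f[x] >= -tol
        for x in box(N)
        for i in range(len(N))
        if x[i] < N[i]
    )


def is_wui(f, mu, N, tol=1e-12):
    """Check the weighted upstream increasing inequality for adjacent classes i, i+1."""
    for x in box(N):
        for i in range(len(N) - 1):
            if x[i] < N[i] and x[i + 1] < N[i + 1]:
                top = step(step(x, i), i + 1)
                lhs = mu[i] * (f[top] - f[step(x, i + 1)]) - mu[i + 1] * (f[top] - f[step(x, i)])
                if lhs < -tol:
                    return False
    return True
```

For the linear function \(f(x)=\sum _ic_ix_i\), the \(wUI_N\) inequality reduces to \(c_i\mu _i\ge c_{i+1}\mu _{i+1}\), which shows how the property encodes the \(c \mu \) ordering.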

The following propositions are sufficient for the desired structural properties to propagate through the induction step.

Proposition 1

The smoothed arrivals operator has the following propagation properties

(i)

$$\begin{aligned} {\mathcal {T}}_{SA}^{N}: I_N\rightarrow I_N, Cx_N\rightarrow Cx_N, \,{Super}_N\rightarrow \,{Super}_N, Bd_N\rightarrow Bd_N. \end{aligned}$$

(ii) If moreover \(N\in {\mathcal {N}}(\lambda )\), then

$$\begin{aligned} {\mathcal {T}}_{SA}^{N}: I_N\cap wUI_N\rightarrow wUI_N. \end{aligned}$$

Proposition 2

The increasing departure operator has the following propagation properties

Proposition 3

The cost operator has the following propagation properties

Proposition 4

The cost + increasing departures operator has the following propagation properties

(i)

$$\begin{aligned} {\mathcal {T}}_{CID}^{N}: Bd_N\rightarrow Bd_N. \end{aligned}$$

(ii) If moreover, for all \(1\le i< K\), it holds that \(c_i\ge c_{i+1},\ c_i\mu _i\ge c_{i+1}\mu _{i+1},\ {c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\), then

$$\begin{aligned} {\mathcal {T}}_{CID}^{N}: I_N\cap wUI_N\cap \,{Super}_N\cap Bd_N\rightarrow wUI_N.\end{aligned}$$

Proposition 5

The movable server operator has the following propagation properties

(i)

$$\begin{aligned}&{\mathcal {T}}_{MS}: I_N\cap wUI_N\rightarrow I_N\cap wUI_N,\\&I_N\cap wUI_N\cap Cx_N\cap \,{Super}_N\rightarrow Cx_N\cap \,{Super}_N. \end{aligned}$$

(ii) If moreover, for all \(1\le i< K,\ {c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\), then

$$\begin{aligned} {\mathcal {T}}_{MS}:I_N\cap wUI_N\cap Bd_N\rightarrow Bd_N. \end{aligned}$$

Proposition 6

The uniformisation operator has the following propagation properties:

Proposition 7

The discount operator has the following propagation properties:

The proofs of the propositions are provided in the “Appendix”.

Corollary 1

Let \(N\in {\mathcal {N}}(\lambda )\), \(0<{\bar{\alpha }}<1\) and for \(1\le i <K \), \(c_i\ge c_{i+1},\ c_i\mu _i\ge c_{i+1}\mu _{i+1},\ {c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\).

(i) Then, for all \(n\ge 0\),

$$\begin{aligned} v_{n,{\bar{\alpha }}}^{(N,d)}\in wUI_N\cap I_N\cap Cx_N\cap \,{Super}_N\cap Bd_N; \end{aligned}$$

(ii) consequently,

$$\begin{aligned} V_{{\bar{\alpha }}}^{(N,d)}\in wUI_N\cap I_N\cap Cx_N\cap \,{Super}_N\cap Bd_N. \end{aligned}$$

Proof

Denote \({\mathcal {A}}= wUI_N\cap I_N\cap Cx_N\cap \,{Super}_N\cap Bd_N\). First notice that \(v_{0,{\bar{\alpha }}}^{(N,d)}\in {\mathcal {A}}\). Further, under the above conditions we have that \({\mathcal {T}}_{SA}^{N},\ {\mathcal {T}}_{CID}^{N},\ {\mathcal {T}}_{MS},\ {\mathcal {T}}_{DISC}^{{\bar{\alpha }}}: {\mathcal {A}} \rightarrow {\mathcal {A}}\) and \({\mathcal {T}}_{UNIF}^N: {\mathcal {A}}^3 \rightarrow {\mathcal {A}}\). This means that

Now suppose that \(v_{n,{\bar{\alpha }}}^{(N,d)}\in {\mathcal {A}}\). Then also \(v_{n+1,{\bar{\alpha }}}^{(N,d)} = {\mathcal {T}}_{DISC}^{{\bar{\alpha }}}\big ({\mathcal {T}}_{UNIF}^N({\mathcal {T}}_{SA}^{N}v_{n,{\bar{\alpha }}}^{(N,d)},{\mathcal {T}}_{CID}^{N}v_{n,{\bar{\alpha }}}^{(N,d)},{\mathcal {T}}_{MS}v_{n,{\bar{\alpha }}}^{(N,d)})\big ) \in {\mathcal {A}}\). Assertion (i) follows by induction.

Assertion (ii) immediately follows from (i) due to convergence of value iteration (see Wessels 1977; Blok and Spieksma 2017, Theorem 5.2). \(\square \)

4 Proof of main theorems

Proof of Theorem 1

Suppose that for \(1\le i <K \), \(c_i\ge c_{i+1}\), \( c_i\mu _i\ge c_{i+1}\mu _{i+1}\), \( {c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\). Let the continuous-time discount factor \(\alpha >0\), then the discrete-time discount factor \({\bar{\alpha }}= 1/(\alpha +1)\) satisfies \(0<{\bar{\alpha }}<1\). Take \(N\in {\mathcal {N}}(\lambda )\), then Corollary 1 implies that \(V_{{\bar{\alpha }}}^{(N,d)}=V_{\alpha }^{(N)}\in wUI_N\cap I_N\). This model satisfies the conditions of the parametrised Markov processes theorem (Blok and Spieksma 2015, Theorem 5.1), which implies continuity in the truncation parameter. This means that \(V_{\alpha }^{(N)}\rightarrow V_{\alpha }\) as \(N\rightarrow \infty ^K\). Hence, \(V_{\alpha }\in wUI \cap I\) and so by virtue of Lemma 1, the Smallest Index Policy is \(\alpha \)-discount optimal. \(\square \)

Proof of Theorem 2

Suppose that for \(1\le i <K \), \(c_i\ge c_{i+1}\), \( c_i\mu _i\ge c_{i+1}\mu _{i+1}\), \( {c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\). By Theorem 1 the Smallest Index Policy, \(\pi ^\alpha \) say, is \(\alpha \)-discount optimal, for all \(\alpha >0\).

Notice that the model satisfies the assumptions of (Blok and Spieksma 2017, Theorem 5.7). This theorem implies the existence of a sequence \((\alpha _m)\) with \(\lim _{m\rightarrow \infty }\alpha _m=0\), such that the limit \(\lim _{m\rightarrow \infty } \pi ^{\alpha _m}\) is average optimal. Since \(\pi ^\alpha \) is the Smallest Index Policy for all \(\alpha >0\), so is the limit policy. Hence the Smallest Index Policy is average optimal. \(\square \)

5 Numerical results

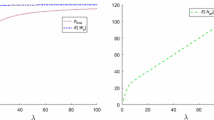

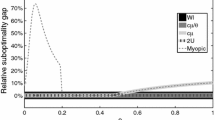

The triple inequality on the input parameters that guarantees optimality of the Smallest Index Policy leaves many parameter configurations outside the scope of the theorems. This naturally raises the question of whether all three inequalities are necessary.

Numerical calculations indicate that none of the three conditions can be omitted: if one of the inequalities is violated, then the examples below show that the Smallest Index Policy need not be optimal. We carried out the calculations for \(K=2\).

1. Consider the following parameter setting

We see that the first condition is violated, \(c_1<c_2\), while the other conditions are satisfied. The optimal policy is a switching curve policy: for ‘small’ states action 2 is optimal and for ‘large’ states action 1 is optimal, see Fig. 1. Green corresponds to action 1 (serving queue 1) and red to action 2 (serving queue 2).

2. The next parameter setting is given by

Observe that the first and the third condition hold, but the second condition is violated. In Fig. 2 the optimal policy is displayed. We see that the Smallest Index Policy need not be optimal: there is a small region, with only a few customers, where it is optimal to take action 2. In the larger states action 1 is optimal, in agreement with the Smallest Index Policy.

3. The final parameter setting is given by

Here only the first and second conditions are satisfied. Figure 3 shows that it can be optimal to serve the station with the highest index instead of the smallest index.

Another observation can be made, based on these examples. In all cases a switching curve policy is optimal. Since an index policy can be viewed as a degenerate switching curve policy, we conjecture that a switching curve policy is always optimal.
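The text does not state how the optimal policies in Figs. 1–3 were computed. A minimal sketch of one way to obtain such a policy for \(K=2\) is discounted value iteration on a uniformised, truncated model. The sketch below makes several assumptions that are not taken from the paper: arrivals at the boundary of the grid are simply blocked (naive truncation, not Smoothed Rate Truncation), idling is not allowed when a queue is non-empty, queues are indexed from 0, and the function name `solve_truncated_model` is hypothetical.

```python
from itertools import product


def solve_truncated_model(lam, mu, beta, c, alpha, N, iterations=2000):
    """Discounted value iteration for K = 2 on the grid {0..N[0]} x {0..N[1]}.

    Assumption: arrivals that would leave the grid are blocked (naive truncation).
    Returns (v, policy); policy[x1][x2] is the queue served (0 or 1),
    or None in the empty state.
    """
    n1, n2 = N
    # Uniformisation constant: an upper bound on the total jump rate on the grid.
    big_lambda = sum(lam) + max(mu) + beta[0] * n1 + beta[1] * n2
    v = [[0.0] * (n2 + 1) for _ in range(n1 + 1)]
    policy = [[None] * (n2 + 1) for _ in range(n1 + 1)]

    def moved(x, i, delta):
        y = list(x)
        y[i] += delta
        return y

    for _ in range(iterations):
        new_v = [[0.0] * (n2 + 1) for _ in range(n1 + 1)]
        for x in product(range(n1 + 1), range(n2 + 1)):
            here = v[x[0]][x[1]]
            total = c[0] * x[0] + c[1] * x[1]   # holding cost rate
            used = 0.0                           # arrival and abandonment rate used
            for i in range(2):
                if x[i] < N[i]:                  # arrival of class i
                    y = moved(x, i, +1)
                    total += lam[i] * v[y[0]][y[1]]
                    used += lam[i]
                if x[i] > 0:                     # abandonment of class i
                    y = moved(x, i, -1)
                    total += beta[i] * x[i] * v[y[0]][y[1]]
                    used += beta[i] * x[i]
            # Service: among non-empty queues, minimise mu_a * (v(x - e_a) - v(x));
            # ties go to the smaller index.
            best_gain, best_action = 0.0, None
            for a in range(2):
                if x[a] > 0:
                    y = moved(x, a, -1)
                    gain = mu[a] * (v[y[0]][y[1]] - here)
                    if best_action is None or gain < best_gain:
                        best_gain, best_action = gain, a
            total += (big_lambda - used) * here + best_gain
            new_v[x[0]][x[1]] = total / (alpha + big_lambda)
            policy[x[0]][x[1]] = best_action
        v = new_v
    return v, policy
```

A call such as `solve_truncated_model((1.0, 1.0), (2.0, 1.0), (1.0, 1.0), (3.0, 1.0), 1.0, (20, 20))` returns the value function and the served queue per state; these parameter values are placeholders and are not the settings used for the figures. Near the boundary of the grid the naive truncation may distort the policy, which is one reason the paper works with Smoothed Rate Truncation instead.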

References

Atar, R., Giat, C., & Shimkin, N. (2010). The \(c \mu /\theta \) rule for many-server queues with abandonment. Operations Research, 58(5), 1427–1439.

Ayesta, U., Jacko, P., & Novak, V. (2011). A nearly-optimal index rule for scheduling of users with abandonment. In 2011 IEEE Proceedings of INFOCOM (pp. 2849–2857). IEEE.

Baras, J., Ma, D., & Makowski, A. (1985). K competing queues with geometric service requirements and linear costs: The \(\mu c\)-rule is always optimal. Systems & Control Letters, 6(3), 173–180.

Bhulai, S., Brooms, A., & Spieksma, F. (2014). On structural properties of the value function for an unbounded jump Markov process with an application to a processor sharing retrial queue. Queueing Systems, 76(4), 425–446.

Blok, H., & Spieksma, F. (2015). Countable state Markov decision processes with unbounded jump rates and discounted cost: Optimality and approximations. Advances in Applied Probability, 47(4), 1088–1107.

Blok, H., & Spieksma, F. (2017). Structures of optimal policies in Markov Decision Processes with unbounded jumps: The State of our Art, chap. 5 (pp. 139–196). Berlin: Springer.

Buyukkoc, C., Varaiya, P., & Walrand, J. (1985). The c\(\mu \) rule revisited. Advances in Applied Probability, 17(1), 237–238.

Down, D., Koole, G., & Lewis, M. (2011). Dynamic control of a single-server system with abandonments. Queueing Systems, 67(1), 63–90.

Ertiningsih, D., Bhulai, S., & Spieksma, F. M. (2015). Monotonicity properties of the single server queue with abandonments and retrials by coupling. Tech. rep., Mathematisch Instituut Leiden.

Larrañaga, M., Ayesta, U., & Verloop, I. (2013). Dynamic fluid-based scheduling in a multi-class abandonment queue. Performance Evaluation, 70(10), 841–858.

Larrañaga, M., Ayesta, U., & Verloop, I. (2015). Asymptotically optimal index policies for an abandonment queue with convex holding cost. Queueing Systems, 81(2), 99–169.

Salch, A., Gayon, J. P., & Lemaire, P. (2013). Optimal static priority rules for stochastic scheduling with impatience. Operations Research Letters, 41(1), 81–85.

Serfozo, R. (1979). An equivalence between continuous and discrete time Markov decision processes. Operations Research, 27(3), 616–620.

Strauch, R. (1966). Negative dynamic programming. The Annals of Mathematical Statistics, 37(4), 871–890.

Wessels, J. (1977). Markov programming by successive approximations with respect to weighted supremum norms. Journal of Mathematical Analysis and Applications, 58(2), 326–335.

Appendix

In the appendix we will provide the proofs of Propositions 1–7. In this section we use the following notation.

Definition 5

1. For \(1\le i< K\),

$$\begin{aligned}&wUI_N(i)= \{f:{S}\rightarrow {\mathbb {R}}\ | \quad \mu _i[f(x+e_i+e_{i+1})-f(x+e_{i+1})]\\&\quad -\mu _{i+1}[f(x+e_i+e_{i+1})-f(x+e_i)] \ge 0,\\&\qquad \text { for all } x,x+e_i+e_{i+1} \in {S}^N\}. \end{aligned}$$

2. For \(1\le i \le K\),

$$\begin{aligned} I_N(i)=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+e_i)-f(x)\ge 0,\text { for all } x,x+e_i \in {S}^N\}. \end{aligned}$$

3. For \(1\le i \le K\),

$$\begin{aligned} Cx_N(i)=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+2e_i)-2f(x+e_i)+f(x)\ge 0,\text { for all } x,x+2e_i \in {S}^N\}. \end{aligned}$$

4. For \(1\le i \ne j\le K\),

$$\begin{aligned}&Super_N(i,j)=\{f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+e_i+e_j)-f(x+e_i)-f(x+e_j)+f(x)\ge 0,\\&\quad \text { for all } x,x+e_i+e_{j} \in {S}^N\}. \end{aligned}$$

5. For \(1\le i \le K\),

$$\begin{aligned} Bd_N(i)=\left\{ f:{S}\rightarrow {\mathbb {R}}\ |\ f(x+e_i)-f(x)\le \frac{c_i}{\beta _i},\text { for all } x,x+e_i \in {S}^N\right\} . \end{aligned}$$
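To make the five function classes concrete, the following Python sketch tests the defining inequalities of Definition 5 numerically for \(K=2\). It is only an illustration under stated assumptions: the truncated state space \({S}^N\) is taken to be the rectangle \(\{0,\ldots ,N_1\}\times \{0,\ldots ,N_2\}\), the function f is stored as a nested list `f[x1][x2]`, queues are indexed from 0, and the names `shift` and `check_definition5` are hypothetical.

```python
from itertools import product


def shift(x, *dirs):
    """Return x plus one unit vector e_k for every k in dirs."""
    y = list(x)
    for k in dirs:
        y[k] += 1
    return tuple(y)


def check_definition5(f, N, mu, c, beta):
    """Report which of wUI_N, I_N, Cx_N, Super_N, Bd_N hold for f (K = 2)."""
    def inside(x):
        return x[0] <= N[0] and x[1] <= N[1]

    def val(x):
        return f[x[0]][x[1]]

    ok = {"wUI": True, "I": True, "Cx": True, "Super": True, "Bd": True}
    for x in product(range(N[0] + 1), range(N[1] + 1)):
        both = shift(x, 0, 1)
        if inside(both):
            a = val(both) - val(shift(x, 1))  # f(x+e_1+e_2) - f(x+e_2)
            b = val(both) - val(shift(x, 0))  # f(x+e_1+e_2) - f(x+e_1)
            if mu[0] * a - mu[1] * b < 0:
                ok["wUI"] = False
            if val(both) - val(shift(x, 0)) - val(shift(x, 1)) + val(x) < 0:
                ok["Super"] = False
        for i in range(2):
            up = shift(x, i)
            if inside(up):
                diff = val(up) - val(x)
                if diff < 0:
                    ok["I"] = False
                if diff > c[i] / beta[i]:
                    ok["Bd"] = False
            up2 = shift(x, i, i)
            if inside(up2) and val(up2) - 2 * val(up) + val(x) < 0:
                ok["Cx"] = False
    return ok
```

For instance, a linear function \(f(x)=a_1x_1+a_2x_2\) passes all five checks whenever \(0\le a_i\le c_i/\beta _i\) and \(\mu _1a_1\ge \mu _2a_2\).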

It is straightforward that

Proof of Proposition 1

First, we show that \({\mathcal {T}}_{SA}^{N}: I_N\rightarrow I_N\). Suppose \(f \in I_N\), let \(1\le i\le K\), then for all \(x,x+e_i \in {S}^N\), we have

The inequality is due to \(f\in I_N(i)\). Hence we obtain \({\mathcal {T}}_{SA(i)}^Nf \in I_N(i)\). Notice that \({\mathcal {T}}_{SA(i)}^Nf \in I_N(j)\) for \(i\ne j\) is trivial. Hence we conclude that \({\mathcal {T}}_{SA(i)}^N: I_N\rightarrow I_N\). This yields \({\mathcal {T}}_{SA}^{N}: I_N\rightarrow I_N\).

Secondly, we prove \({\mathcal {T}}_{SA}^{N}: Cx_N\rightarrow Cx_N\). Assume that \(f\in Cx_N\). Let \(1\le i \le K\), then \(x, x+2e_i \in {S}^N\) implies

The inequality follows from \(f\in Cx_N(i)\). Hence \({\mathcal {T}}_{SA(i)}^Nf\in Cx_N(i)\). Trivially, \({\mathcal {T}}_{SA(i)}^Nf \in Cx_N(j)\), for \(j\ne i\). So, \({\mathcal {T}}_{SA(i)}^Nf\in Cx_N\) for any i, and we conclude that \({\mathcal {T}}_{SA}^{N}: Cx_N\rightarrow Cx_N\).

Thirdly, we prove \( {\mathcal {T}}_{SA}^{N}: \,{Super}_N\rightarrow \,{Super}_N\). Suppose that \(f \in \,{Super}_N\). Let \(1\le i \ne j \le K\) be arbitrary, then for all x with \(x,x+e_i+e_{j} \in {S}^N\) we have

We use \(f\in Super_N(i,j)\) on the terms between square brackets to get the inequality. Thus, \({\mathcal {T}}_{SA(i)}^Nf \in Super_N(i,j)\). Clearly, \({\mathcal {T}}_{SA(i)}^Nf \in Super_N(j,k)\), if \(i\ne j, k\). Hence, \( {\mathcal {T}}_{SA(i)}^N: \,{Super}_N\rightarrow \,{Super}_N\) for any i, implying that \( {\mathcal {T}}_{SA}^{N}: \,{Super}_N\rightarrow \,{Super}_N\).

Fourth, we prove \({\mathcal {T}}_{SA}^{N}: Bd_N\rightarrow Bd_N\). Suppose that \(f \in Bd_N\). Let \(1\le i\le K\) be arbitrary, then for all x with \(x,x+e_i \in {S}^N\), we have

We use \(f\in Bd_N(i)\) on the terms in square brackets, to obtain the first inequality. Hence, \({\mathcal {T}}_{SA(i)}^Nf \in Bd_N(i)\). It easily follows, that \({\mathcal {T}}_{SA(i)}^N: Bd_N\rightarrow Bd_N\), and so \({\mathcal {T}}_{SA}^{N}: Bd_N\rightarrow Bd_N\).

For the proof of ii), assume that \(N\in {\mathcal {N}}(\lambda )\), so that \({\lambda _{i+1}}/{N_{i+1}} \ge {\lambda _{i}}/{N_{i}}\) for all \(1\le i < K\). We will prove, that \( {\mathcal {T}}_{SA}^{N}: I_N\cap wUI_N\rightarrow wUI_N\). The property \(wUI_N\) does not propagate through one individual smoothed arrivals operator, so it is necessary to look at the combined smoothed arrivals operator \({\mathcal {T}}_{SA}^{N}\). Supposing that \(f \in I_N\cap wUI_N\), it suffices to show that \({\mathcal {T}}_{SA}^{N}f \in wUI_N(i)\), for an arbitrary \(1\le i< K\). First we look at the \({\mathcal {T}}_{SA(i)}^N\) operator, for all x with \(x,x+e_i+e_{i+1} \in {S}^N\). Then, we have

The terms between square brackets are greater than or equal to zero because \(f\in wUI_N(i)\). Notice that the resulting term is smaller than or equal to zero, since \(f\in I_N\). Therefore we combine this with the \({\mathcal {T}}_{SA(i+1)}^N\) operator; similarly to the above, we get

Then, we obtain for the total smoothed arrivals operator

The first inequality is due to the fact that \({\mathcal {T}}_{SA(j)}^N\) for \(j\ne i, i+1\) trivially propagates \(wUI_N(i)\). The second inequality follows from Inequalities (2) and (3). The third inequality follows from \(f\in I_N\cap wUI_N\) and \({\lambda _{i+1}}/{N_{i+1}} \ge {\lambda _{i}}/{N_{i}}\). Therefore, we have \({\mathcal {T}}_{SA}^{N}f \in wUI_N(i)\) for every \(1\le i<K\), hence, \( {\mathcal {T}}_{SA}^{N}: I_N\cap wUI_N\rightarrow wUI_N\). \(\square \)

Proof of Proposition 2

We start with the proof of \({\mathcal {T}}_{ID}^{N}: I_N\rightarrow I_N\). Suppose, that \(f \in I_N\). Let \(1\le i\le K\) be arbitrary, then for all x with \(x,x+e_i \in {S}^N\), we have

Here the inequality follows from \(f\in I_N(i)\). Hence, \({\mathcal {T}}_{ID(i)}^N: I_N(i)\rightarrow I_N(i)\). Moreover, for \(j\ne i\) trivially we have \({\mathcal {T}}_{ID(i)}^N: I_N(j)\rightarrow I_N(j)\) as well. This implies that \({\mathcal {T}}_{ID(i)}^Nf \in I_N\), and since \(1\le i\le K\) was chosen arbitrarily, we have \({\mathcal {T}}_{ID}^{N}: I_N\rightarrow I_N\).

We continue with the proof of \({\mathcal {T}}_{ID}^{N}: Cx_N\rightarrow Cx_N\). Suppose that \(f\in Cx_N\). Let \(1\le i \le K\) be arbitrary. Let x be such that \(x,x+2e_i \in {S}^N\). Then,

The inequality follows from \(f\in Cx_N(i)\). We may conclude that \({\mathcal {T}}_{ID(i)}^N:Cx_N(i)\rightarrow Cx_N(i)\). Further, \({\mathcal {T}}_{ID(i)}^N:Cx_N(j)\rightarrow Cx_N(j)\) is trivial for \(j\ne i\). Hence, \({\mathcal {T}}_{ID(i)}^Nf\in Cx_N\) and, since i is arbitrary, also \({\mathcal {T}}_{ID}^{N}:Cx_N\rightarrow Cx_N\).

Next, we prove \( {\mathcal {T}}_{ID}^{N}: \,{Super}_N\rightarrow \,{Super}_N\). Suppose that \(f \in \,{Super}_N\). Let \(1\le i \ne j \le K\). Then, for x with \(x,x+e_i+e_j \in {S}^N\), it holds that

The inequality follows from \(f\in Super_N(i,j)\). Thus, \({\mathcal {T}}_{ID(i)}^Nf\in Super_N(i,j)\). It easily follows, that \( {\mathcal {T}}_{ID(i)}^N: \,{Super}_N\rightarrow \,{Super}_N\), so that also \( {\mathcal {T}}_{ID}^{N}: \,{Super}_N\rightarrow \,{Super}_N\). \(\square \)

Proof of Proposition 3

First, we prove \( {\mathcal {T}}_C: I_N\rightarrow I_N\). Let \(1\le i\le K\), then for x with \(x,x+e_i\in {S}^N\) it holds that

The inequality follows from the assumption that \(f\in I_N(i)\) and \(c_i\ge 0\). Hence, \({\mathcal {T}}_Cf \in I_N(i)\), and so \({\mathcal {T}}_C: I_N\rightarrow I_N\).

Next, we prove the propagation of convexity. Let \(1\le i\le K\). For x with \(x,x+2e_i\in {S}^N\), it holds that

The inequality follows directly from the assumption that \(f\in Cx_N(i)\). We conclude, that \({\mathcal {T}}_Cf \in Cx_N(i)\) implying in turn \({\mathcal {T}}_C:Cx_N\rightarrow Cx_N\).

For the propagation of supermodularity \({\mathcal {T}}_C:\,{Super}_N\rightarrow \,{Super}_N\), let \(1\le i\ne j\le K\). Then for x with \(x,x+e_i+e_j\in {S}^N\), it holds that

The inequality follows from \(f\in Super_N(i,j)\). Hence, \({\mathcal {T}}_Cf \in Super_N(i,j)\) for any \(1\le i\ne j\le K\), and so \({\mathcal {T}}_C: \,{Super}_N\rightarrow \,{Super}_N\). \(\square \)

Proof of Proposition 4, part (i)

First we prove \({\mathcal {T}}_{CID}^{N}: Bd_N\rightarrow Bd_N\). It is necessary to take the combination of several operators, because \({\mathcal {T}}_C\) alone does not propagate \(Bd_N\). First, we derive the following inequalities for the increasing departure operators. Let \(f\in Bd_N\), and let \(1\le i\le K\). For x with \(x,x+e_i \in {S}^N\), we have

The inequality follows from \(f\in Bd_N(i)\). Further, for \(j\ne i\) trivially

Hence, for the operator \({\mathcal {T}}_{CID}^{N}\) we obtain

Here the inequality follows from Inequalities (4) and (5). This yields \({\mathcal {T}}_{CID}^{N}f \in Bd_N(i)\), for all i. From this the propagation of \(Bd_N\) through \({\mathcal {T}}_{CID}^{N}\) follows. \(\square \)

Proof of Proposition 4, part (ii)

Suppose that \(c_i\ge c_{i+1}\), \( c_i\mu _i\ge c_{i+1}\mu _{i+1}\), and \({c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\), for all \(1\le i< K\). We will prove that \({\mathcal {T}}_{CID}^{N}: I_N\cap wUI_N\cap \,{Super}_N\cap Bd_N\rightarrow wUI_N\). Let \(f\in I_N\cap wUI_N\cap \,{Super}_N\cap Bd_N\), take \(1\le i <K\), and let x be such that \(x,x+e_i+e_{i+1}\in {S}^N\). First, observe that for \(j\ne i, i+1\), we have

Further, we get the following inequality for \({\mathcal {T}}_{ID(i)}^N\)

The inequality is due to \(f\in wUI_N\). For \({\mathcal {T}}_{ID(i+1)}^N\) it holds that

The inequality follows from \(wUI_N\). Combining the above three Inequalities (6), (7) and (8) gives

Hence,

We wish to argue that (9) is non-negative. To this end, we distinguish four cases with respect to the parameters.

1. Suppose that \(\mu _i \le \mu _{i+1}\) and \(\beta _i\le \beta _{i+1}\). Then,

$$\begin{aligned} (9)= & {} \beta _i \big ((\mu _{i+1}-\mu _i)(f(x+e_i+e_{i+1})-f(x+e_{i+1}))-\mu _{i+1}(f(x+e_i)-f(x))\big )\\&+\,\beta _{i+1}\mu _i(f(x+e_i)-f(x))+ \mu _i c_i-\mu _{i+1} c_{i+1}\\\ge & {} \beta _i(\mu _{i+1}-\mu _i)[f(x+e_i+e_{i+1})-f(x+e_i)-f(x+e_{i+1})+f(x)]\\&+\,\mu _i(\beta _{i+1}-\beta _i)[f(x+e_i)-f(x)]\\\ge & {} 0. \end{aligned}$$

The first inequality is due to \(c_i\mu _i\ge c_{i+1}\mu _{i+1}\). The second inequality follows from \(\mu _i \le \mu _{i+1}\) and \(f\in Super_N(i,i+1)\), together with \(\beta _i\le \beta _{i+1}\) and \(f\in I_N(i)\).

2. Suppose that \(\mu _i \le \mu _{i+1}\) and \(\beta _i\ge \beta _{i+1}\). Then,

$$\begin{aligned} (9)= & {} \beta _i \big ((\mu _{i+1}-\mu _i)(f(x+e_i+e_{i+1})-f(x+e_{i+1}))-\mu _{i+1}(f(x+e_i)-f(x))\big )\\&+\,\beta _{i+1}\mu _i(f(x+e_i)-f(x))+ \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} \beta _i(\mu _{i+1}-\mu _i)[f(x+e_i+e_{i+1})-f(x+e_i)-f(x+e_{i+1})+f(x)]\\&-\,\mu _i(\beta _{i}-\beta _{i+1})[f(x+e_i)-f(x)]+ \mu _i c_i-\mu _{i+1} c_{i+1}\\\ge & {} -\,\mu _i(\beta _{i}-\beta _{i+1})[f(x+e_i)-f(x)]+ \mu _i c_i-\mu _{i+1} c_{i+1}\\\ge & {} -\,\mu _i(\beta _{i}-\beta _{i+1}) \frac{c_i}{\beta _i}+ \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} -\,\mu _i c_i +\beta _{i+1}\frac{\mu _i c_i}{\beta _i}+ \mu _i c_i-\mu _{i+1} c_{i+1}\\\ge & {} -\,\mu _i c_i +\beta _{i+1}\frac{\mu _{i+1} c_{i+1}}{\beta _{i+1}}+ \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} 0. \end{aligned}$$

The first inequality is due to \(f\in Super_N(i,i+1)\) together with \(\mu _i \le \mu _{i+1}\). The second inequality comes from \(\beta _i\ge \beta _{i+1}\) and \(f\in Bd_N(i)\). The last inequality is due to \({c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\).

3. Next, we assume that \(\mu _i \ge \mu _{i+1}\) and \( {\mu _i}/{\beta _i}\ge {\mu _{i+1}}/{\beta _{i+1}}\). Then,

$$\begin{aligned} (9)= & {} \beta _i \big ((\mu _{i+1}-\mu _i)(f(x+e_i+e_{i+1})-f(x+e_{i+1}))-\mu _{i+1}(f(x+e_i)-f(x))\big )\\&+\,\beta _{i+1}\mu _i(f(x+e_i)-f(x))+ \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} (\beta _{i+1}\mu _i-\beta _i\mu _{i+1})[f(x+e_i)-f(x)]\\&-\,\beta _i(\mu _i-\mu _{i+1})[f(x+e_i+e_{i+1})-f(x+e_{i+1})]+ \mu _i c_i-\mu _{i+1} c_{i+1}\\\ge & {} -c_i(\mu _i-\mu _{i+1})+ \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} \mu _{i+1}(c_i-c_{i+1})\\\ge & {} 0. \end{aligned}$$

The first inequality follows from \({\mu _i}/{\beta _i}\ge {\mu _{i+1}}/{\beta _{i+1}}\) and \(f\in I_N(i)\), together with \(\mu _i \ge \mu _{i+1}\) and \(f\in Bd_N(i)\). The second inequality follows from \(c_i\ge c_{i+1}\).

4. Finally, assume that \(\mu _i \ge \mu _{i+1}\) and \({\mu _i}/{\beta _i}\le {\mu _{i+1}}/{\beta _{i+1}}\). Then,

$$\begin{aligned} (9)= & {} \beta _i \big ((\mu _{i+1}-\mu _i)(f(x+e_i+e_{i+1})-f(x+e_{i+1}))-\mu _{i+1}(f(x+e_i)-f(x))\big )\\&+\,\beta _{i+1}\mu _i(f(x+e_i)-f(x))+ \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} (\beta _i\mu _{i+1}-\beta _{i+1}\mu _i)[f(x+e_i+e_{i+1})-f(x+e_i)-f(x+e_{i+1})+f(x)]\\&-\,(\beta _i-\beta _{i+1})\mu _i[f(x+e_i+e_{i+1})-f(x+e_{i+1})]+ \mu _i c_i-\mu _{i+1} c_{i+1}\\\ge & {} -\,(\beta _i-\beta _{i+1})\mu _i \frac{c_i}{\beta _i} + \mu _i c_i-\mu _{i+1} c_{i+1}\\= & {} +\, \beta _{i+1}\frac{c_i\mu _i}{\beta _i} - c_{i+1}\mu _{i+1}\\\ge & {} +\, \beta _{i+1}\frac{c_{i+1}\mu _{i+1}}{\beta _{i+1}} - c_{i+1}\mu _{i+1}\\= & {} 0. \end{aligned}$$

The first inequality follows from \({\mu _i}/{\beta _i}\le {\mu _{i+1}}/{\beta _{i+1}}\) and \(f\in Super_N(i,i+1)\), together with \(\beta _i \ge \beta _{i+1}\) and \(f\in Bd_N(i)\). The last inequality is due to \({c_i\mu _i}/{\beta _i}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\).

So, for any \(1\le i < K\), we have \({\mathcal {T}}_{CID}^{N}f\in wUI_N(i)\). Hence, we conclude that \({\mathcal {T}}_{CID}^{N}: I_N\cap wUI_N\cap \,{Super}_N\cap Bd_N\rightarrow wUI_N\). \(\square \)
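The four cases rest on rewriting expression (9) in different but algebraically equal forms. As a sanity check, and not as part of the proof, the Python sketch below compares these rearrangements on random integer inputs. Here `A` stands for \(f(x+e_i+e_{i+1})-f(x+e_{i+1})\), `D` for \(f(x+e_i)-f(x)\), the suffix `j` for index \(i+1\), and the function names are hypothetical.

```python
import random


def expression9(A, D, mu_i, mu_j, beta_i, beta_j, c_i, c_j):
    """Expression (9) as written in the first line of each case."""
    return (beta_i * ((mu_j - mu_i) * A - mu_j * D)
            + beta_j * mu_i * D + mu_i * c_i - mu_j * c_j)


def rearrangements(A, D, mu_i, mu_j, beta_i, beta_j, c_i, c_j):
    """The rewritten forms used in cases 2, 3 and 4, in that order."""
    S = A - D  # f(x+e_i+e_j) - f(x+e_i) - f(x+e_j) + f(x)
    const = mu_i * c_i - mu_j * c_j
    case2 = beta_i * (mu_j - mu_i) * S - mu_i * (beta_i - beta_j) * D + const
    case3 = (beta_j * mu_i - beta_i * mu_j) * D - beta_i * (mu_i - mu_j) * A + const
    case4 = (beta_i * mu_j - beta_j * mu_i) * S - (beta_i - beta_j) * mu_i * A + const
    return case2, case3, case4


def identities_hold(trials=1000, seed=0):
    """Compare all forms on random integers, so the comparison is exact."""
    rng = random.Random(seed)
    for _ in range(trials):
        args = [rng.randint(-20, 20) for _ in range(8)]
        target = expression9(*args)
        if any(value != target for value in rearrangements(*args)):
            return False
    return True
```

The identities hold for arbitrary real inputs; the sign arguments in each case then depend only on the parameter ordering and on the membership of f in the relevant function classes.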

Proof of Proposition 5, part (i)

Before starting with the proofs, the following remark is due. By Lemma 1, the Smallest Index Policy is optimal, if \(v^\alpha \in wUI\cap I\). The same holds true, if \(f\in wUI_N\cap I_N\). Then for \(x\in {S}^N\) the action that chooses the smallest index minimises the \({\mathcal {T}}_{MS}\) operator. We will use this several times below.

First, we prove that \({\mathcal {T}}_{MS}: I_N\cap wUI_N\rightarrow I_N\). Assume, that \(f\in I_N\cap wUI_N\). Let \(1\le i\le K\) be arbitrary. It suffices to show that \({\mathcal {T}}_{MS}f \in I_N(i)\). First, suppose \(x=0\), then

The optimal policy is non-idling, because \(f\in I_N\). Hence, in state \(e_i\) the system is minimised by serving station i, the only non-empty queue. The second term corresponds to the system being empty, so nobody is served, and the inequality follows from \(f\in I_N(i)\).

Next, suppose that \(x\ne 0\), such that \(x,x+e_i \in {S}^N\). Let \(j^*\) be the optimal action in state x. If \(j^*\le i\), then \(wUI_N\) implies that this is also the optimal action in state \(x+e_i\), and so the inequality follows straightforwardly. If \(j^*>i\), then \(wUI_N\) implies that serving station i is the optimal action in state \(x+e_i\). We obtain,

The last inequality follows from \(f\in I_N\). We conclude, that \({\mathcal {T}}_{MS}f \in I_N(i)\).

We continue with the proof of \({\mathcal {T}}_{MS}: I_N\cap wUI_N\rightarrow wUI_N\). Suppose that \(f\in I_N\cap wUI_N\), so that the Smallest Index Policy is optimal. Let \(1\le i<K\) be arbitrary. Let x be such that \(x, x+e_i+e_{i+1}\in {S}^N\), and let \(j^*\) be the optimal action in state \(x+e_{i+1}\). Suppose \(j^*\le i\). Then the Smallest Index Policy implies that \(j^*\) is optimal in states \(x+e_i,\ x+e_{i+1}\) and \(x+e_i+e_{i+1}\) as well. In this case the propagation of \(wUI_N\) is trivial. Supposing that \(j^*> i\), action i is optimal in states \(x+e_i\) and \(x+e_i+e_{i+1}\), while in state \(x+e_{i+1}\) action \(i+1\) is optimal. We get,

The inequality follows from the assumption \(f\in wUI_N(i)\). We conclude that \({\mathcal {T}}_{MS}: I_N\cap wUI_N\rightarrow wUI_N(i)\), thus implying \({\mathcal {T}}_{MS}: I_N\cap wUI_N\rightarrow wUI_N\).

We prove \({\mathcal {T}}_{MS}: I_N\cap wUI_N\cap Cx_N\cap \,{Super}_N\rightarrow Cx_N\cap \,{Super}_N\). To this end, assume \(f\in I_N\cap wUI_N\cap Cx_N\cap \,{Super}_N\). Let \(1\le i\ne j \le K\) be arbitrary, w.l.o.g. assume that \(i<j\). First, suppose that \(x=0\). Then, in state \(x+e_i\) and \(x+e_i+e_j\) it is optimal to serve station i, while in state \(x+e_j\) it is optimal to do action j. Therefore,

The inequality follows from \(f \in I_N(j)\cap Super_N(i,j)\).

Next, suppose that \(x\ne 0\), with \(x,x+e_i+e_j\in {S}^N\). Let the optimal action in state x be \(j^*\). We will make three case distinctions. Suppose that \(j^*\le i\). Then \(f \in I_N\cap wUI_N\) implies that \(j^*\) is also optimal in \(x+e_i,\ x+e_j\) and \(x+e_i+e_j\). The same action is optimal in every state, which implies that \(Super_N(i,j)\) is propagated trivially.

If \(i < j^* \le j\), then \( I_N\cap wUI_N\) implies that \(j^*\) is also optimal in \( x+e_j\), and action i is optimal in \(x+e_i\) and \(x+e_i+e_j\). We obtain

The inequality follows from \(f \in \,{Super}_N\cap Cx_N\).

If \( j^* >j\), then by \( I_N\cap wUI_N\), action i is optimal in \(x+e_i\) and \(x+e_i+e_j\). Serving station j is optimal in state \(x+e_j\), but if we choose suboptimal action \(j^*\) instead, this makes the expression only smaller. Then we are in the same situation as above, for which we have already derived non-negativity. Hence, \({\mathcal {T}}_{MS}f \in Super_N(i,j)\). We conclude, that \({\mathcal {T}}_{MS}f \in \,{Super}_N\) as well.

This only leaves to prove that \({\mathcal {T}}_{MS}f \in Cx_N\). First, consider the case that \(x=0\). Then, in states \(x+e_i\) and \(x+2e_i\) action i is optimal. Hence,

The inequality follows from \(f\in I_N(i)\cap Cx_N(i)\).

Now, consider the case that \(x\ne 0\), such that \(x,x+2e_i\in {S}^N\). Let \(j^*\) be the optimal action in state x. If \({j^*}\le i\), then in states \(x+e_i\) and \(x+2e_i\) the optimal actions are equal to \(j^*\) as well. The propagation is trivial in that case. If \({j^*}> i\), then the optimal action in states \(x+e_i\) and \(x+2e_i\) is action i. We obtain the following inequality

The first inequality is due to \(f\in wUI_N\), the second comes from \(f\in \,{Super}_N\cap Cx_N\). Hence, \({\mathcal {T}}_{MS}f \in Cx_N(i)\). We conclude, that \({\mathcal {T}}_{MS}: I_N\cap wUI_N\cap Cx_N\cap \,{Super}_N\rightarrow Cx_N\cap \,{Super}_N\). \(\square \)

Proof of Proposition 5, part (ii)

Assume, that \({c_{i}\mu _{i}}/{\beta _{i}}\ge {c_{i+1}\mu _{i+1}}/{\beta _{i+1}}\), for all \(1\le i <K\). We will prove, that \({\mathcal {T}}_{MS}: I_N\cap wUI_N\cap Bd_N\rightarrow Bd_N\). Let \(f\in I_N\cap wUI_N\cap Bd_N\), and let \(1\le i\le K\) be arbitrary. Again we make two case distinctions. First, suppose that \(x=0\). Then,

For the second inequality we use that \(f\in Bd_N(i)\).

Next, suppose that \(x\ne 0\). Let \(j^*\) be the optimal action in state x. If \(j^*\le i\), then as a result of \(f\in I_N\cap wUI_N\), the optimal actions in states x and \(x+e_i\) are equal, namely \(j^*\). As a consequence, the propagation is trivial. If \(j^*> i\) then the optimal actions are not equal, because \(wUI_N\) implies that in state \(x+e_i\) it is optimal to serve station i. We obtain

The first inequality follows from \(f\in Bd_N\), the second follows from \({c_{i}\mu _{i}}/{\beta _{i}}\ge {c_{j^*}\mu _{j^*}}/{\beta _{j^*}}\) for \(i< j^*\). We conclude that \({\mathcal {T}}_{MS}f \in Bd_N(i)\) and thus \({\mathcal {T}}_{MS}: I_N\cap wUI_N\cap Bd_N\rightarrow Bd_N\). \(\square \)

Proof of Proposition 6

It follows directly that \({\mathcal {T}}_{UNIF}^N: I_N^3 \rightarrow I_N, wUI_N^3 \rightarrow wUI_N, Cx_N^3 \rightarrow Cx_N, \,{Super}_N^3 \rightarrow \,{Super}_N\), since convex combinations of nonnegative terms are nonnegative.

The propagation \({\mathcal {T}}_{UNIF}^N: Bd_N^3\rightarrow Bd_N\) is also straightforward. We have that \(\lambda + \beta _N +\mu =1\), hence if \(f_1, f_2, f_3\in Bd_N\), then \({\mathcal {T}}_{UNIF}^N(f_1,f_2,f_3):= \lambda f_1 +\beta _N f_2 + \mu f_3 \in Bd_N\). \(\square \)
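The step behind this claim can be spelled out as follows; the only assumption added here is that the weights \(\lambda \), \(\beta _N\) and \(\mu \) are non-negative, which is natural since they are rates. For \(f_1,f_2,f_3\in Bd_N\), \(1\le i\le K\) and x with \(x,x+e_i\in {S}^N\),

$$\begin{aligned} {\mathcal {T}}_{UNIF}^N(f_1,f_2,f_3)(x+e_i)-{\mathcal {T}}_{UNIF}^N(f_1,f_2,f_3)(x)= & {} \lambda [f_1(x+e_i)-f_1(x)]+\beta _N[f_2(x+e_i)-f_2(x)]\\&+\,\mu [f_3(x+e_i)-f_3(x)]\\\le & {} (\lambda +\beta _N+\mu )\frac{c_i}{\beta _i}\\= & {} \frac{c_i}{\beta _i}. \end{aligned}$$

The inequality applies \(f_k\in Bd_N(i)\) to each bracket, and the final equality uses \(\lambda + \beta _N +\mu =1\).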

Proof of Proposition 7

Recalling that \({\mathcal {T}}_{DISC}^{{\bar{\alpha }}}f = (1-{\bar{\alpha }})f\), clearly \({\mathcal {T}}_{DISC}^{{\bar{\alpha }}}:wUI_N\rightarrow wUI_N,\ I_N\rightarrow I_N,\ Cx_N\rightarrow Cx_N, \,{Super}_N\rightarrow \,{Super}_N\).

Further, suppose that \(f\in Bd_N\). Let \(1\le i\le K\) be arbitrary, and let x be such that \(x,x+e_i\in {S}^N\). Then,

The first inequality is due to \(f\in Bd_N(i)\). This implies \({\mathcal {T}}_{DISC}^{{\bar{\alpha }}}f \in Bd_N(i)\). Hence \({\mathcal {T}}_{DISC}^{{\bar{\alpha }}}: Bd_N\rightarrow Bd_N\). \(\square \)

Rights and permissions

OpenAccess This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bhulai, S., Blok, H. & Spieksma, F.M. K competing queues with customer abandonment: optimality of a generalised \(c \mu \)-rule by the Smoothed Rate Truncation method. Ann Oper Res 317, 387–416 (2022). https://doi.org/10.1007/s10479-019-03131-3

DOI: https://doi.org/10.1007/s10479-019-03131-3

Keywords

- Competing queues

- Abandonments

- Unbounded transition rates

- Smoothed Rate Truncation

- Markov decision processes

- Generalised \(c \mu \)-rule

- Uniformisation
