Abstract

In this paper, we focus on multiplicative parameters of random variables and their estimators. We study the properties of the multiplicative expectation and the multiplicative variance as well as their estimators. For distributions applied in finance and insurance we provide their multiplicative parameters and their properties. We consider, among others, heavy-tailed distributions such as lognormal and Pareto distributions, applied to the modelling of large losses. We discuss multiplicative models, in which the geometric mean and the geometric standard deviation are more natural than their arithmetic counterparts. We provide two illustrative examples: one from the Warsaw Stock Exchange in 1995–2009 and one from bids for 52-week treasury bills in Poland in 1992–2009.

1 Introduction

Two measures frequently used in descriptive statistics are the arithmetic mean and the standard deviation. The geometric mean is used less often, while the geometric standard deviation connected with the geometric mean is used even more rarely.

When is it better to use arithmetic (additive) parameters and when geometric (multiplicative) ones? Much attention has been paid to this question in the economics and finance literature. One of the first papers on this topic was the article by Latané (1959), who introduced the geometric-mean investment strategy into the finance and economics literature. Weide, Peterson and Maier wrote in their paper (1977):

Most of this work has been devoted to the investigation of various properties of the geometric mean strategy. Among the properties of optimal geometric-mean portfolios recently discovered are (i) they maximize the probability of exceeding a given wealth level in a fixed amount of time, (ii) they minimize the long-run probability of ruin, and (iii) they maximize the expected growth rate of wealth.

In the paper (Weide et al. 1977), the authors consider both the computational problem of finding the optimal geometric mean portfolio and the question of the existence of such a portfolio. They analysed both of these problems under various assumptions about the investor’s opportunity set and the form of his/her subjective probability distribution of holding period returns.

Let us assume that the gross return R in a single period has a lognormal distribution. The unknown parameter is \(a={{\text {E}}}\left( R\right) =e^{m+\sigma ^2/2}\). To estimate this parameter one can use the arithmetic mean of the gross returns \(R_1,\dots ,R_n\):

\[\overline{R}=\frac{1}{n}\sum _{i=1}^{n}R_i.\]

It is an unbiased estimator of the parameter a. Another unknown parameter considered in (Cooper 1996) is the geometric mean of the gross return \(b={{\text {E}}}_{\mathrm {G}}\left( R\right) =e^m\). The parameter b can be estimated as the geometric mean

\[\overline{R}_{{\text {G}}}=\left( \prod _{i=1}^{n}R_i\right) ^{1/n}.\]

In (Cooper 1996; Jacquier et al. 2003, 2005), the expected value \({{\text {E}}}\left( \overline{R}_{{\text {G}}}\right) \) is calculated, and it is shown that \(\overline{R}_{{\text {G}}}\) is an asymptotically unbiased estimator of b. Moreover, the variance \({{\text {D}}}^2\left( \overline{R}_{{\text {G}}}\right) \), which tends to zero, is determined. In our paper, we point out that the quality of the geometric estimator should be examined by the geometric mean and variance, not by their arithmetic counterparts as in (Cooper 1996; Jacquier et al. 2003, 2005).

In the paper (Hughson et al. 2006), the authors point out that forecasting a typical future cumulative return should focus on estimating the median of the future cumulative return rather than the expected cumulative return. The expectation of the cumulative return is always higher than the median of the cumulative return. The probability distribution of returns from risky ventures is positively skewed. It is frequently assumed that returns have lognormal distributions. For a lognormal distribution, the median and the geometric expectation are equal. Another distribution frequently used in finance and insurance is the Pareto distribution, in which the geometric mean is close to the median and far from the arithmetic mean.

Arithmetic and geometric means are somewhat controversial measurements of the past and future investment returns. Critical remarks on this topic are given in the paper (Missiakoulis et al. 2007). A review of basic equalities and inequalities in the context of a gross income from the investment in a discrete time can be found in the article (Cate 2009).

Properties of various kinds of means can be found in the review paper (Ostasiewicz and Ostasiewicz 2000).

In this paper, unlike in the results discussed above, the issue concerning multiplicative parameters, including a geometric mean, is also extended with interpretations and applications of multiplicative variance as a measure of dispersion. Such a measure, as we justify in more detail in the next sections, is a better and more natural measure of deviation between random variables and their geometric mean.

The geometric variance is invariant with respect to multiplication by a constant. It follows that the geometric variance of an economic quantity does not depend on the choice of the monetary unit. For example, the geometric variance is the same whether the monetary unit is $1 or $100. Moreover, the geometric variance is a dimensionless measure of variability. For example, it allows one to compare the variability of exchange rates between different currencies.

In Sect. 2.1 we give definitions and properties of multiplicative parameters. We discuss multiplicative models, in which the geometric mean and the geometric standard deviation are more natural than their arithmetic counterparts. In Sect. 2.2 we introduce typical distributions for which the multiplicative parameters are more natural than the additive ones. In Sect. 2.3 we provide estimators of the multiplicative parameters considered in Sect. 2.1 and their properties. In Sect. 3 we give real examples of applications. These examples indicate the real benefits of applying the geometric parameters instead of arithmetic ones in real situations in economics and finance.

2 Parameters and models

2.1 Multiplicative parameters and models

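Let us define the multiplicative (geometric) mean by

\[{{\text {E}}}_{\mathrm {G}}\left( X\right) =e^{{{\text {E}}}\left( \ln X\right) },\qquad (1)\]

where \(\Pr \left( X>0\right) =1\). From Jensen’s inequality it is easy to see that

\[{{\text {E}}}_{\mathrm {G}}\left( X\right) \le {{\text {E}}}\left( X\right) .\]

Below we give some obvious properties of the geometric mean. Eq. (1) implies the formula

\[{{\text {E}}}_{\mathrm {G}}\left( \prod _{i=1}^{n}X_i\right) =\prod _{i=1}^{n}{{\text {E}}}_{\mathrm {G}}\left( X_i\right) ,\qquad (2)\]

provided multiplicative expectations of random variables \(X_i\) exist. In this formula the random variables \(X_i\) may be dependent. Moreover, for every \(a>0\)

\[{{\text {E}}}_{\mathrm {G}}\left( aX\right) =a\,{{\text {E}}}_{\mathrm {G}}\left( X\right) \]

and for every \(a\in {\mathbb {R}}\)

\[{{\text {E}}}_{\mathrm {G}}\left( X^a\right) =\left( {{\text {E}}}_{\mathrm {G}}\left( X\right) \right) ^a.\qquad (3)\]

From (3) for \(a=-1\) we obtain

\[{{\text {E}}}_{\mathrm {G}}\left( \frac{1}{X}\right) =\frac{1}{{{\text {E}}}_{\mathrm {G}}\left( X\right) }.\qquad (4)\]

Hence, from (2) and (4) we have

\[{{\text {E}}}_{\mathrm {G}}\left( \frac{X}{Y}\right) =\frac{{{\text {E}}}_{\mathrm {G}}\left( X\right) }{{{\text {E}}}_{\mathrm {G}}\left( Y\right) }.\]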

Property 1

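If \({{\text {E}}}_{\mathrm {G}}\left( X+Y\right) \) exists then

\[{{\text {E}}}_{\mathrm {G}}\left( X+Y\right) \ge {{\text {E}}}_{\mathrm {G}}\left( X\right) +{{\text {E}}}_{\mathrm {G}}\left( Y\right) .\qquad (5)\]

Proof

The formula (5) is by definition equivalent to

\[e^{{{\text {E}}}\left( \ln \left( X+Y\right) \right) }\ge e^{{{\text {E}}}\left( \ln \left( X\right) \right) }+e^{{{\text {E}}}\left( \ln \left( Y\right) \right) }.\qquad (6)\]

Dividing both sides of (6) by \(e^{{{\text {E}}}\left( \ln \left( X\right) \right) }\) we obtain an equivalent inequality

\[e^{{{\text {E}}}\left( \ln \left( 1+Y/X\right) \right) }\ge 1+e^{{{\text {E}}}\left( \ln \left( Y/X\right) \right) }.\]

Let \(T=Y/X\). Then, it is sufficient to prove the inequality

\[e^{{{\text {E}}}\left( \ln \left( 1+T\right) \right) }\ge 1+e^{{{\text {E}}}\left( \ln T\right) }.\]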

Let us assume that T is a discrete random variable and \(\Pr \left( T=x_i\right) =p_i\). From the inequality (7.1) from the book (Mitrinović et al. 1993), p. 6, we obtain, after the substitution \(f\left( x\right) =\ln \left( 1+e^x\right) \), the inequality

Substituting \(x_i=\ln a_i\) we obtain

which completes the proof of (5) for discrete X and Y. For any X and Y in the inequality (5) we approximate X and Y by discrete random variables. \(\square \)
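The properties of the geometric mean discussed in this section can be checked numerically on a small discrete example. The sketch below treats a finite sample with equal weights as a discrete distribution; the helper name `geometric_mean` and the data are assumptions made only for illustration.

```python
import math

def geometric_mean(values):
    """Geometric mean exp(mean(ln x)) of strictly positive numbers."""
    if any(v <= 0 for v in values):
        raise ValueError("all values must be strictly positive")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical paired observations with equal weights; X and Y are dependent.
x = [1.0, 4.0, 0.5, 2.0]
y = [3.0, 0.2, 5.0, 1.0]

gx, gy = geometric_mean(x), geometric_mean(y)

# Jensen's inequality: the geometric mean never exceeds the arithmetic mean.
print(gx <= sum(x) / len(x))

# Products, quotients and inverses pass through the geometric mean.
print(math.isclose(geometric_mean([a * b for a, b in zip(x, y)]), gx * gy))
print(math.isclose(geometric_mean([a / b for a, b in zip(x, y)]), gx / gy))
print(math.isclose(geometric_mean([1 / a for a in x]), 1 / gx))

# Property 1: the geometric mean of a sum is at least the sum of geometric means.
print(geometric_mean([a + b for a, b in zip(x, y)]) >= gx + gy)
```

Each check is expected to print `True`. Equality in the last check holds when Y is a constant multiple of X.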

The square multiplicative divergence between positive t and 1 is a function \(f\left( t\right) \) defined by the following conditions:

1. \(f\left( t\right) \ge 1\) and \(f\left( 1\right) =1\),

2. \(f\left( t\right) =f\left( \frac{1}{t}\right) \),

3. \(f\left( t\right) \) is an increasing function for \(t\ge 1\).

Condition 2 means that for any two positive numbers u and v:

\[f\left( \frac{u}{v}\right) =f\left( \frac{v}{u}\right) .\]

The function

\[f\left( t\right) =e^{\ln ^2 t}\qquad (7)\]

fulfils the above conditions and plays the same role for quotients as \(t^2\) for differences. It means that \(f\left( u/v\right) \) is a square multiplicative deviation of u / v from 1.
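The three conditions are easy to verify numerically. The sketch below takes function (7) to be \(f(t)=e^{\ln ^2 t}\), the form used later in this section; the function name `sq_mult_deviation` and the test grid are our own choices.

```python
import math

def sq_mult_deviation(t):
    """Square multiplicative deviation f(t) = exp(ln(t)**2) for t > 0."""
    return math.exp(math.log(t) ** 2)

grid = [1.0, 1.25, 2.0, 4.0, 10.0]          # points with t >= 1
values = [sq_mult_deviation(t) for t in grid]

# Condition 1: f(1) = 1 and f(t) >= 1.
print(values[0] == 1.0, all(v >= 1.0 for v in values))

# Condition 2: f(t) = f(1/t), hence f(u/v) = f(v/u).
print(all(math.isclose(sq_mult_deviation(t), sq_mult_deviation(1 / t)) for t in grid))

# Condition 3: f is increasing for t >= 1.
print(all(a < b for a, b in zip(values, values[1:])))
```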

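We define the geometric variance as the multiplicative mean of the square multiplicative deviation of the random variable X from its geometric mean:

\[{{\text {D}}}^2_{\mathrm {G}}\left( X\right) ={{\text {E}}}_{\mathrm {G}}\left( e^{\ln ^2\left( X/{{\text {E}}}_{\mathrm {G}}\left( X\right) \right) }\right) .\qquad (8)\]

From definition (8) we have

\[{{\text {D}}}^2_{\mathrm {G}}\left( X\right) =e^{{{\text {E}}}\left( \ln X-{{\text {E}}}\left( \ln X\right) \right) ^2}=e^{{{\text {D}}}^2\left( \ln X\right) }.\]

The multiplicative (geometric) standard deviation is defined by:

\[\sigma _{{\text {G}}}\left( X\right) =e^{\sqrt{{{\text {D}}}^2\left( \ln X\right) }}=e^{\sigma \left( \ln X\right) }.\]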

Note that \(\sigma _{{\text {G}}}\left( X\right) =e^{\sqrt{\ln {{\text {D}}}^2_{\mathrm {G}}\left( X\right) }}\), so \(\sigma _{{\text {G}}}\left( X\right) \ne \sqrt{{{\text {D}}}^2_{\mathrm {G}}\left( X\right) }\) unless \({{\text {D}}}^2_{\mathrm {G}}\left( X\right) =1\) or \({{\text {D}}}^2_{\mathrm {G}}\left( X\right) =e^4\). A counterpart of

is given by the equation

However, one cannot compare \({{\text {D}}}^2\left( X\right) \) and \({{\text {D}}}^2_{\mathrm {G}}\left( X\right) \) because \(\sigma \left( X\right) =\sqrt{{{\text {D}}}^2\left( X\right) }\) is represented in the same units as X (e.g. in euro or units of weights or sizes) but \(\sigma _{{\text {G}}}\left( X\right) \) is dimensionless (may be expressed in percent after multiplying by 100).
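The difference in units can be illustrated with a short sketch. It assumes that the geometric variance and the geometric standard deviation of a sample are the exponentials of, respectively, the variance and the standard deviation of the logarithms; the function names and the price data are hypothetical.

```python
import math
import statistics

def geometric_variance(values):
    """exp of the population variance of ln x (assumed form)."""
    return math.exp(statistics.pvariance([math.log(v) for v in values]))

def geometric_sd(values):
    """exp of the population standard deviation of ln x (assumed form)."""
    return math.exp(statistics.pstdev([math.log(v) for v in values]))

prices_in_dollars = [12.0, 15.5, 9.8, 20.1, 14.3]   # hypothetical data
prices_in_cents = [100 * p for p in prices_in_dollars]

# The arithmetic standard deviation changes with the unit ...
print(statistics.pstdev(prices_in_dollars), statistics.pstdev(prices_in_cents))

# ... whereas the geometric standard deviation is dimensionless.
print(geometric_sd(prices_in_dollars), geometric_sd(prices_in_cents))
```

The first line prints two values that differ by a factor of 100, while the two values in the second line agree up to rounding error.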

Apart from function (7) the function

also fulfils the above conditions (Saaty and Vargas 2007). Note, however, that the function defined by (10) is a multiplicative counterpart of \({{\text {E}}}\left| X-{{\text {E}}}X\right| \), not of the variance \({{\text {D}}}^2\left( X\right) \).

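Below we give some properties of the multiplicative variance. Eq. (8) implies the formula

\[{{\text {D}}}^2_{\mathrm {G}}\left( \prod _{i=1}^{n}X_i\right) =\prod _{i=1}^{n}{{\text {D}}}^2_{\mathrm {G}}\left( X_i\right) ,\qquad (11)\]

provided multiplicative variances of random variables \(X_i\) exist and \(X_i\) are independent. Moreover, for every \(a>0\)

\[{{\text {D}}}^2_{\mathrm {G}}\left( aX\right) ={{\text {D}}}^2_{\mathrm {G}}\left( X\right) \]

and for every \(a\in {\mathbb {R}}\)

\[{{\text {D}}}^2_{\mathrm {G}}\left( X^a\right) =\left( {{\text {D}}}^2_{\mathrm {G}}\left( X\right) \right) ^{a^2}.\qquad (12)\]

From (12) for \(a=-1\) we obtain

\[{{\text {D}}}^2_{\mathrm {G}}\left( \frac{1}{X}\right) ={{\text {D}}}^2_{\mathrm {G}}\left( X\right) .\qquad (13)\]

Hence, if X and Y are independent then from (11) and (13) we have

\[{{\text {D}}}^2_{\mathrm {G}}\left( \frac{X}{Y}\right) ={{\text {D}}}^2_{\mathrm {G}}\left( X\right) {{\text {D}}}^2_{\mathrm {G}}\left( Y\right) .\]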

The multiplicative variance and standard deviation are quotient measures of the deviation between a random variable and its multiplicative mean \(m_{\mathrm {G}}={{\text {E}}}_{\mathrm {G}}\left( X\right) \), whereas the additive variance and standard deviation are difference measures of the deviation between a random variable and its additive mean m. Just as in the additive case it is useful to define the kth interval of the form

\[\left( m-k\sigma ,\,m+k\sigma \right) ,\]

in the multiplicative case we have the counterpart of the form

\[\left( m_{\mathrm {G}}\,\sigma _{{\text {G}}}^{-k},\,m_{\mathrm {G}}\,\sigma _{{\text {G}}}^{k}\right) .\qquad (14)\]

Let \(\left( X,Y\right) \) be a two-dimensional random vector. We will find the best exponential approximation of a random variable Y by a random variable X. To achieve that we will find a multiplicative counterpart of the equation

The measure of the distance between a random variable Y and the exponential function of a random variable X of the form \(e^{\alpha X+\beta }\) will be, according to Eq. (7), the geometric expectation of the random variable \(e^{\ln ^2 T}\), where

Note that

Instead of minimizing the expression \({{\text {E}}}\left( Y-\left( aX+b\right) \right) ^2\) we will minimise the expression

Therefore,

for

Formulae (15) and (16) imply that the function that is the best approximation of the random variable Y has the form

Note that in Eq. (17) the parameters of the random variable X are additive whereas the parameters of the random variable Y are multiplicative.
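In practice the exponential approximation can be obtained by ordinary least squares applied to \(\ln Y\). The sketch below assumes that this is what the minimisation above reduces to for sample data; the function name `fit_exponential` and the data are hypothetical.

```python
import math

def fit_exponential(xs, ys):
    """Least-squares fit of ln(y) = alpha * x + beta; returns (alpha, beta)."""
    n = len(xs)
    logs = [math.log(y) for y in ys]
    mean_x = sum(xs) / n
    mean_log = sum(logs) / n
    # Sample covariance of (x, ln y) and sample variance of x (divisor n).
    cov = sum((x - mean_x) * (l - mean_log) for x, l in zip(xs, logs)) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / n
    alpha = cov / var_x
    beta = mean_log - alpha * mean_x
    return alpha, beta

# Hypothetical data lying exactly on y = 2 * exp(0.3 * x).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * math.exp(0.3 * x) for x in xs]

alpha, beta = fit_exponential(xs, ys)
print(alpha, math.exp(beta))   # slope and multiplicative intercept

# At the arithmetic mean of x the fitted curve passes through the
# geometric mean of y: additive parameters for X, multiplicative for Y.
mean_x = sum(xs) / len(xs)
geo_mean_y = math.exp(sum(math.log(y) for y in ys) / len(ys))
print(math.isclose(math.exp(alpha * mean_x + beta), geo_mean_y))
```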

The multiplicative econometric model with one explanatory variable is of the form

where \(\varepsilon \) is a random component. It is frequently assumed that \(\varepsilon \) has a lognormal distribution with parameters \(m=0\) and \(\sigma \). Let \(Z=\ln Y\). Then

is an additive model with a random component \(\eta =\ln \varepsilon \) with a normal distribution \({{\text {N}}}\left( 0,\sigma \right) \). We will denote its trend by z, where

An exponential trend is defined by the formula

The trend in the multiplicative model is given by

The behaviour of the variable y in the multiplicative model is reflected by its geometric mean.

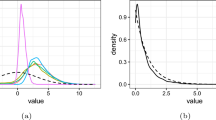

2.2 Parameters of selected distributions

In this section we determine multiplicative parameters of distributions frequently applied to the modelling of financial risk. Two heavy-tailed distributions, namely the lognormal and Pareto distributions, used to estimate large losses on financial and insurance markets, are especially important.

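A random variable X has a lognormal distribution if \(Y=\ln X\) has a normal distribution, \(Y\sim {{\text {N}}}\left( m,\sigma \right) \), \({{\text {E}}}Y=m\), \({{\text {D}}}^2Y=\sigma ^2\). Then, the expectation is

\[{{\text {E}}}\left( X\right) =e^{m+\sigma ^2/2}\]

and the variance

\[{{\text {D}}}^2\left( X\right) =e^{2m+\sigma ^2}\left( e^{\sigma ^2}-1\right) .\]

Multiplicative parameters are the following:

\[{{\text {E}}}_{\mathrm {G}}\left( X\right) =e^{m},\qquad {{\text {D}}}^2_{\mathrm {G}}\left( X\right) =e^{\sigma ^2},\]

where the median \({{\text {Me}}}\left( X\right) = {{\text {E}}}_{\mathrm {G}}\left( X\right) \) and \({{\text {D}}}^2_{\mathrm {G}}\left( X\right) \) depend only on m and \(\sigma \), respectively.

The divergence between the means \({{\text {E}}}\left( X\right) \) and \({{\text {E}}}_{\mathrm {G}}\left( X\right) \), measured by their ratio d, is given by

\[d=\frac{{{\text {E}}}\left( X\right) }{{{\text {E}}}_{\mathrm {G}}\left( X\right) }=e^{\sigma ^2/2}\]

and increases exponentially with \(\sigma ^2\).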
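To get a feeling for how quickly the two means drift apart, one can tabulate the ratio of the arithmetic to the geometric mean for a few values of \(\sigma \). The sketch uses the standard closed forms for the lognormal distribution; the function name and the chosen parameter values are ours.

```python
import math
from statistics import NormalDist

def lognormal_parameters(m, sigma):
    """Additive and multiplicative parameters of a lognormal variable."""
    mean = math.exp(m + sigma ** 2 / 2)
    geo_mean = math.exp(m)
    geo_var = math.exp(sigma ** 2)
    # Median of X = exp(median of ln X), taken from the normal quantile.
    median = math.exp(NormalDist(m, sigma).inv_cdf(0.5))
    return {"mean": mean, "geo_mean": geo_mean, "geo_var": geo_var,
            "median": median, "ratio": mean / geo_mean}

# Hypothetical parameter values; m only rescales both means.
for sigma in (0.25, 0.5, 1.0, 2.0):
    p = lognormal_parameters(m=0.1, sigma=sigma)
    print(sigma, round(p["ratio"], 4), round(p["geo_var"], 4))

ratios = [lognormal_parameters(0.1, s)["ratio"] for s in (0.25, 0.5, 1.0, 2.0)]
```

The ratio does not depend on m, and the median coincides with the geometric mean for every choice of parameters.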

In this context, an interesting distribution is the Pareto distribution, with a cumulative distribution function

where \(\alpha >0\), \(\beta >0\).

The additive parameters of the random variable X are:

for \(\alpha >1\) and

for \(\alpha >2\).

The multiplicative parameters are:

and exist for any \(\alpha >0\). The median \({{\text {Me}}}(X)\) exists for any \(\alpha \) and is given by

Since

for large \(\alpha \) we have \({{\text {E}}}\left( X\right) \approx {{\text {E}}}_{\mathrm {G}}\left( X\right) \).
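The following sketch illustrates these relations under an explicit assumption: it uses the classical (type I) Pareto form \(F(x)=1-(\beta /x)^{\alpha }\) for \(x\ge \beta \), which may differ from the parametrisation intended above. Under this assumption the geometric mean is \(\beta e^{1/\alpha }\) and the median is \(\beta \,2^{1/\alpha }\); the closed form for the geometric mean is cross-checked by numerical integration of the quantile function. All function names are hypothetical.

```python
import math

def pareto_summary(alpha, beta):
    """Closed forms for the assumed cdf F(x) = 1 - (beta / x)**alpha, x >= beta."""
    mean = alpha * beta / (alpha - 1) if alpha > 1 else math.inf
    geo_mean = beta * math.exp(1 / alpha)
    median = beta * 2 ** (1 / alpha)
    return mean, geo_mean, median

def geo_mean_numeric(alpha, beta, steps=20000):
    """exp(E ln X) via the midpoint rule applied to the quantile function."""
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) / steps
        total += math.log(beta * (1 - u) ** (-1 / alpha))
    return math.exp(total / steps)

mean, geo_mean, median = pareto_summary(alpha=1.5, beta=1.0)
print(mean, geo_mean, median)            # the geometric mean lies nearer the median

# The geometric mean exists even when the arithmetic mean does not.
print(pareto_summary(alpha=0.5, beta=1.0))

# For large alpha the two means nearly coincide.
big_mean, big_geo_mean, _ = pareto_summary(alpha=50.0, beta=1.0)
print(big_mean / big_geo_mean)
```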

2.3 Estimation of multiplicative parameters

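Let us define the following empirical parameters: the geometric mean

\[\overline{x}_{{\text {G}}}=\left( \prod _{i=1}^{n}x_i\right) ^{1/n}=\exp \left( \frac{1}{n}\sum _{i=1}^{n}\ln x_i\right) \]

and geometric variances

\[s^2_{{\text {G}}}=\exp \left( \frac{1}{n}\sum _{i=1}^{n}\ln ^2\frac{x_i}{\overline{x}_{{\text {G}}}}\right) ,\qquad {\hat{s}}^2_{{\text {G}}}=\exp \left( \frac{1}{n-1}\sum _{i=1}^{n}\ln ^2\frac{x_i}{\overline{x}_{{\text {G}}}}\right) .\]

Then, empirical standard deviations are defined as

\[s_{{\text {G}}}=\exp \left( \sqrt{\ln s^2_{{\text {G}}}}\right) ,\qquad {\hat{s}}_{{\text {G}}}=\exp \left( \sqrt{\ln {\hat{s}}^2_{{\text {G}}}}\right) .\]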

Now we can derive from Sect. 2.1 the equations for estimators of the multiplicative parameters and their properties.
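For a concrete sample the empirical parameters can be computed from the logarithms of the observations. The sketch below assumes that the two empirical geometric variances are the exponentials of the mean squared log-deviation with divisors n and n − 1, respectively; the function name and the data are hypothetical.

```python
import math

def geometric_estimates(sample):
    """Return (geometric mean, s2_G, s2_G_hat, s_G_hat) of a positive sample."""
    n = len(sample)
    logs = [math.log(x) for x in sample]
    mean_log = sum(logs) / n
    ss = sum((l - mean_log) ** 2 for l in logs)   # sum of squared log-deviations
    x_bar_g = math.exp(mean_log)
    s2_g = math.exp(ss / n)                       # divisor n
    s2_g_hat = math.exp(ss / (n - 1))             # divisor n - 1
    s_g_hat = math.exp(math.sqrt(ss / (n - 1)))   # geometric standard deviation
    return x_bar_g, s2_g, s2_g_hat, s_g_hat

sample = [1.12, 0.85, 1.30, 1.05, 0.95, 1.20]     # hypothetical gross returns
x_bar_g, s2_g, s2_g_hat, s_g_hat = geometric_estimates(sample)
print(x_bar_g, s2_g, s2_g_hat, s_g_hat)
```

The two variance estimates are linked by a power rather than by a factor: the second equals the first raised to the power n/(n − 1).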

Let \(X_1,X_2,\dots ,X_n\) be a random sample for a population with cdf \(F\left( x\right) \). Let \(\theta \) be a multiplicative parameter of \(F\left( x\right) \), e.g. \(\theta ={{\text {E}}}_{\mathrm {G}}\left( X\right) \) or \(\theta ={{\text {D}}}^2_{\mathrm {G}}\left( X\right) \). Below we formulate the basic properties of the multiplicative estimators of such parameters.

The statistic \(Z_n=f\left( X_1,\dots ,X_n\right) \) is a multiplicative unbiased estimator of \(\theta \) if \({{\text {E}}}_{\mathrm {G}}\left( Z_n\right) =\theta \). The statistic \(Z_n\) is a multiplicative, asymptotically unbiased estimator of \(\theta \) if \(\lim _{n\rightarrow \infty }{{\text {E}}}_{\mathrm {G}}\left( Z_n\right) =\theta \). The statistic \(Z_n\) is a multiplicative consistent estimator of \(\theta \) if \(Z_n/\theta \) converges in probability to 1, denoted as \(Z_n/\theta \mathop {\longrightarrow }\limits ^{P}1\), i.e.

\[\lim _{n\rightarrow \infty }\Pr \left( \left| \frac{Z_n}{\theta }-1\right| <\varepsilon \right) =1\]

for any \(\varepsilon >0\).

Theorem 1

Let \(X_1,X_2,\dots ,X_n\) be a random sample with the multiplicative mean \({{\text {E}}}_{\mathrm {G}}X_i=m_{{\text {G}}}\). The statistic \(\overline{X}_{{\text {G}}}\) is a multiplicative unbiased estimator of \(m_{{\text {G}}}\).

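Proof

By the definition of \(\overline{X}_{{\text {G}}}\),

\[{{\text {E}}}\left( \ln \overline{X}_{{\text {G}}}\right) =\frac{1}{n}\sum _{i=1}^{n}{{\text {E}}}\left( \ln X_i\right) =\ln m_{{\text {G}}}.\]

Then, \({{\text {E}}}_{\mathrm {G}}\left( \overline{X}_{{\text {G}}}\right) =m_{{\text {G}}}\). \(\square \)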

Moreover, one can easily calculate the following:

Property 2

If \(X_1,X_2,\dots ,X_n\) are independent, identically distributed random variables and have the multiplicative expectations \(m_{\mathrm {G}}\) and variances \(\sigma _{{\text {G}}}^2\) then

Proof

\(\square \)

Note that \({{\text {D}}}^2_{\mathrm {G}}\left( \overline{X}_{{\text {G}}}\right) \rightarrow 1\) while \(n\rightarrow \infty \).

Theorem 2

If \(X_1,X_2,\dots ,X_n\) are independent, identically distributed random variables and have the multiplicative expectations \(m_{\mathrm {G}}\) and variances \(\sigma _{{\text {G}}}^2\) then \({\overline{X}}_G\) is a consistent estimator of \(m_G\).

Proof

From the Law of Large Numbers for the sequence \(\ln X_1,\ln X_2,\dots ,\ln X_n\) we have

\[\frac{1}{n}\sum _{i=1}^{n}\ln X_i\mathop {\longrightarrow }\limits ^{P}{{\text {E}}}\left( \ln X_1\right) =\ln m_G.\]

For any continuous \(g\left( x\right) \)

\[g\left( \frac{1}{n}\sum _{i=1}^{n}\ln X_i\right) \mathop {\longrightarrow }\limits ^{P}g\left( \ln m_G\right) .\]

Taking \(g\left( x\right) =e^x\) we have \({\overline{X}}_G\mathop {\longrightarrow }\limits ^{P}m_G\,\). Hence, \({\overline{X}}_G\) is a consistent estimator of \(m_G\,\). \(\square \)

Theorem 3

Let \(X_1,X_2,\dots ,X_n\) be independent, identically distributed random variables. The statistic \({\hat{S}}^2_{{\text {G}}}\) is a multiplicative unbiased estimator of \(\sigma _{{\text {G}}}^2\) and \(S^2_{{\text {G}}}\) is a multiplicative asymptotically unbiased estimator of \(\sigma _{{\text {G}}}^2\).

Proof

To prove that \({\hat{S}}^2_{{\text {G}}}\) is a multiplicative unbiased estimator of \(\sigma _{{\text {G}}}^2\) we have to calculate the term

Let \(y_i=\ln x_i\). Similarly to proving that

is an unbiased estimator of \({{\text {D}}}^2\left( X\right) \) we can prove that \({\hat{S}}^2_{{\text {G}}}\) is a multiplicative unbiased estimator of \(\sigma _{{\text {G}}}^2\). Hence, we omit details. As a simple conclusion we obtain that \({S^2_{{\text {G}}}}\) is a multiplicative asymptotically unbiased estimator of \(\sigma _{{\text {G}}}^2\,\). \(\square \)

Theorem 4

Let \(X_1,X_2,\dots ,X_n\) be independent, identically distributed random variables. Then \(S^2_{{\text {G}}}\) and \({\hat{S}}^2_{{\text {G}}}\) are consistent estimators of \(\sigma _{{\text {G}}}^2\,\).

Proof

Since

we have

From the facts

we obtain by easy calculations

which completes the proof. \(\square \)

Estimators \({\hat{\alpha }}\) and \({\hat{\beta }}\) of the parameters \({\widetilde{\alpha }}\) and \({\widetilde{\beta }}\) given by Eqs. (15) and (16) are given respectively by

The estimator of the trend y given by (20) has the form

where \({\hat{z}}={\hat{\alpha }}x+{\hat{\beta }}\).

3 Applications of the multiplicative model

Many applications of the geometric mean in economics can be found in the papers (Hughson et al. 2006; Jacquier et al. 2003). The future portfolio of shares in (Jacquier et al. 2003) and the expected gross return in (Hughson et al. 2006) were estimated by the geometric mean. Cooper in (1996) provided some interesting considerations on how one can apply the geometric or the arithmetic mean to the estimation of the discount rate of planned investments.

However, these applications have nearly always been restricted to the multiplicative mean. Only in (Saaty and Vargas 2007) was the multiplicative dispersion given by (10) applied, but, as explained in Sect. 2.1, that dispersion differs from our standard deviation.

In insurance and finance huge losses are modelled by Pareto or lognormal distributions. Such distributions are positively skewed, so their arithmetic expected values are very far from their medians. Therefore, the expected values do not reflect the central tendency of these distributions. Geometric means of these distributions do not have such defects. Moreover, the dispersion around \({{\text {E}}}_{\mathrm {G}}X\) should be measured by \({{\text {D}}}^2_{\mathrm {G}}X\), not by \({{\text {D}}}^2X\).

Let us only point out that also in other fields of science, multiplicative parameters give a better description of some phenomena than additive ones—see, for example, (Zacharias et al. 2011) and references therein.

In the next sections we provide two examples of applications of multiplicative parameters. Both come from the Polish financial market: the first concerns the Warsaw Stock Exchange and the second concerns 52-week treasury bills.

3.1 Return index rates

Return rates \(100\,i_r\)% of the WIG20 index from the Warsaw Stock Exchange in the years 1995–2009 are given in Table 1, \(r=1995,\dots ,2009\). The accumulation coefficients \(a_r=1+i_r\) are given in the third column.

The total return at the end of 2009 on an initial capital \(p=1\) invested at the beginning of 1995 (future value \( FV \)) is given by the formula:

\[ FV =p\prod _{r=1995}^{2009}a_r=p\left( \overline{a}_{{\text {G}}}\right) ^{15}.\]

Since \(\overline{a}_{{\text {G}}}=1.0820\),

\[ FV =1.0820^{15}\approx 3.26.\]

Using the arithmetic mean \(\overline{a}=1.1295\) instead of the geometric mean we obtain

\[1.1295^{15}\approx 6.21,\]

which overstates the quantity \( FV \) by a factor of almost two.

Next, we calculate \({\hat{s}}_{{\text {G}}}=1.1600\). Using Eq. (14) we have the kth interval for \(\overline{a}_{{\text {G}}}\): \(\left( 0.9328,1.2550\right) \), \(\left( 0.8042,1.4561\right) \) and \(\left( 0.6933,1.6890\right) \) for \(k=1\), \(k=2\) and \(k=3\), respectively.
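The three intervals can be recomputed from the two reported statistics. The sketch assumes that the kth multiplicative interval is obtained by dividing and multiplying the geometric mean by the kth power of the geometric standard deviation; the function name is ours, and small differences in the last digit arise because the inputs are rounded to four decimals.

```python
def multiplicative_interval(geo_mean, geo_sd, k):
    """k-th multiplicative interval (geo_mean / geo_sd**k, geo_mean * geo_sd**k)."""
    factor = geo_sd ** k
    return geo_mean / factor, geo_mean * factor

a_bar_g = 1.0820   # geometric mean of the accumulation coefficients (reported)
s_g_hat = 1.1600   # geometric standard deviation (reported)

intervals = {k: multiplicative_interval(a_bar_g, s_g_hat, k) for k in (1, 2, 3)}
for k, (low, high) in intervals.items():
    print(k, round(low, 4), round(high, 4))
```

Unlike their additive counterparts, these intervals are not symmetric around the mean in the usual sense: the lower and upper ends are equally distant from \(\overline{a}_{{\text {G}}}\) as quotients, not as differences.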

If we calculate \(\overline{a}_{{\text {G}}}=1.1036\) from the 10 years 1995–2004 only, then the total forecasted return of the capital with the investment of initial capital \(p=1\) at the beginning of the year 2005 is equal to 1.6370. The forecast using the arithmetic mean \(\overline{a}=1.1447\) from the years 1995–2004 is equal to 1.9653. The true value of the total return is equal to 1.2185. Therefore, it is more precisely estimated by the geometric mean than by the arithmetic mean.
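The compounding arithmetic behind these figures is short enough to reproduce directly. The sketch below uses only the means reported above (rounded to four decimals), so the results may differ from the reported ones in the last digit; the function name `compound` is ours.

```python
def compound(growth_factor, years, principal=1.0):
    """Value of `principal` after `years` periods at a constant growth factor."""
    return principal * growth_factor ** years

# Whole period 1995-2009 (15 years).
fv_geometric = compound(1.0820, 15)
fv_arithmetic = compound(1.1295, 15)

# Forecast for 2005-2009 (5 years) from the means of 1995-2004.
forecast_geometric = compound(1.1036, 5)
forecast_arithmetic = compound(1.1447, 5)
true_value = 1.2185   # realised total return reported in the text

print(round(fv_geometric, 2), round(fv_arithmetic, 2))
print(round(forecast_geometric, 4), round(forecast_arithmetic, 4))
print(abs(forecast_geometric - true_value) < abs(forecast_arithmetic - true_value))
```

Both forecasts exceed the realised value, but the one based on the geometric mean is considerably closer to it.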

An analogous conclusion can be drawn from determining the present value \( PV \) by the geometric and arithmetic means of the discount factor \(v_r=1/a_r\). Namely,

3.2 The mean annual rate of profitability of treasury bills

A multiplicative model will be used here to describe the annual market rate of return on 52-week treasury bills in Poland. The use of a multiplicative model is justified by the fact that capital accumulates through the multiplication, not the addition, of gross returns from an investment. Let R denote the annual rate for the 52-week treasury bills and \(f\left( t\right) =ab^t\) be an exponential function of trend. Assume that (see Eq. (20))

where the random component \(\varepsilon \) has a lognormal distribution \({{\text {LN}}}\left( 0,\sigma \right) \).

To estimate the unknown parameters a and b (see Eqs. (28) and (29)) of the trend function of the annual rate of interest we use the observations of the average profitabilities from weekly bids in the years 1992–2009. In the observed years, there were from 18 to 56 bids per year. For each year, the arithmetic mean, the geometric mean and the median of the profitabilities were computed (see Table 2).

Since differences between them are small, we take as \(r_i\) the arithmetic mean from the annual profitabilities of bids in a particular year.

We will test the hypothesis of normality of \(\ln \varepsilon \) using the modified Jarque–Bera test. Let n be the sample size, \(b_1^{1/2}=m_3/m_2^{3/2}\), \(b_2=m_4/m_2^2\), where \(m_i\) is the i-th central moment of the observations \(m_i=\sum \left( c_j-{\overline{x}}\right) ^i/n\), and \({\overline{x}}\) the sample mean. For testing normality we use the Jarque–Bera test modified by Urzúa (1996) (see also Thadewald and Büning (2004)):

Here the parameters \(c_i\), \(i=1,2,3\), are given by

For our data, we have \(m_2=0.046825093\), \(m_3=-0.003081238\), \(m_4=0.006135356\), and \(n=18\). Hence, we can calculate that \(\textit{ALM}=0.4062\). The statistic (32) has an asymptotic \(\chi ^2\) distribution. Würtz and Katzgraber (2005), using a Monte Carlo simulation, provide precise quantiles for small samples. For the sample size \(n=20\) and the levels 0.01 and 0.05 they obtain critical values 18.643 and 6.9317, respectively. Therefore, for such critical values one cannot reject the null hypothesis of normality.
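The reported value can be retraced from the quoted moments. The sketch below is built on an assumption, since the formulas are not reproduced in this text: it takes the finite-sample constants \(c_1=6(n-2)/((n+1)(n+3))\), \(c_2=3(n-1)/(n+1)\) and \(c_3=24n(n-2)(n-3)/((n+1)^2(n+3)(n+5))\) as we recall them from Urzúa (1996). With these constants the sum of the standardized skewness and kurtosis terms is about 0.4062, which matches the reported value; any further scaling in statistic (32) is not visible here and is left out of the sketch. The function name is hypothetical.

```python
def standardized_moment_terms(m2, m3, m4, n):
    """Sum of the standardized skewness and kurtosis terms (assumed constants)."""
    sqrt_b1 = m3 / m2 ** 1.5          # sample skewness
    b2 = m4 / m2 ** 2                 # sample kurtosis
    # Assumed finite-sample constants, recalled from Urzua (1996).
    c1 = 6 * (n - 2) / ((n + 1) * (n + 3))
    c2 = 3 * (n - 1) / (n + 1)
    c3 = 24 * n * (n - 2) * (n - 3) / ((n + 1) ** 2 * (n + 3) * (n + 5))
    return sqrt_b1 ** 2 / c1 + (b2 - c2) ** 2 / c3

value = standardized_moment_terms(
    m2=0.046825093, m3=-0.003081238, m4=0.006135356, n=18)
print(round(value, 4))
```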

Figure 1 shows the average annual profitabilities as well as their exponential approximation

given by (20).

The geometric (multiplicative) mean \(\overline{r}_{{\text {G}}}=0.1245\) was used here to determine the exponential (that is multiplicative) trend of profitability R (see formula (17)). For comparison, the arithmetic mean amounts to \(\overline{r}=0.1661\), and therefore, since it is significantly greater than \(\overline{r}_{{\text {G}}}\), it overestimates the long-run returns (see, e.g., (Cooper 1996) and (Jacquier et al. 2003)).

References

Cate, A. (2009). Arithmetic and geometric mean rates of return in discrete time. CPB Memorandum. www.scribd.com/doc/28483920/Arithmetic-and-geometric-mean-rates-of-return-in-discrete-time.

Cooper, I. (1996). Arithmetic versus geometric mean estimators: Setting discount rates for capital budgeting. European Financial Management, 2, 157–167.

Hughson, E., Stutzer, M., & Yung, C. (2006). The misuse of expected returns. Financial Analysts Journal, 62(6), 88–96.

Jacquier, E., Kane, A., & Marcus, A. J. (2003). Geometric or arithmetic mean: A reconsideration. Financial Analysts Journal, 59(6), 46–53.

Jacquier, E., Kane, A., & Marcus, A. J. (2005). Optimal estimation of the risk premium for the long run and asset allocation: A case of compounded estimation risk. Journal of Financial Econometrics, 3, 37–55.

Latané, H. (1959). Criteria for choice among risky ventures. The Journal of Political Economy, 67(2), 144–155.

Missiakoulis, S., Vasiliou, D., & Eriotis, N. (2007). A requiem for the use of the geometric mean in evaluating portfolio performance. Applied Financial Economics Letters, 3, 403–408.

Mitrinović, D. S., Pečarić, J. E., & Fink, A. M. (1993). Classical and New Inequalities in Analysis. Dordrecht: Kluwer Academic Publishers.

Ostasiewicz, S., & Ostasiewicz, W. (2000). Means and their applications. Annals of Operations Research, 97, 337–355.

Saaty, T. L., & Vargas, L. G. (2007). Dispersion of group judgments. Mathematical and Computer Modelling, 46, 918–925.

Thadewald, T., & Büning, H. (2004). Jarque-Bera test and its competitors for testing normality: A power comparison. School of Business & Economics Discussion Paper: Economics 2004/9, Berlin, http://hdl.handle.net/10419/49919.

Urzúa, C. M. (1996). On the correct use of omnibus tests for normality. Economics Letters, 53, 247–251 (corrigendum, 1997, 54:301).

Weide, J. H. V., Peterson, D. W., & Maier, S. F. (1977). A strategy which maximizes the geometric mean return on portfolio investments. Management Science, 23, 1117–1123.

Würtz, D., & Katzgraber, H. G. (2005). Precise finite-sample quantiles of the Jarque-Bera adjusted Lagrange multiplier test. arXiv:math/0509423v1 [math.ST].

Zacharias, N., Sielużycki, C., Kordecki, W., König, R., & Heil, P. (2011). The M100 component of evoked magnetic fields differs by scaling factors: Implications for signal averaging. Psychophysiology, 48, 1069–1082.

Acknowledgments

We are grateful to the anonymous reviewer for constructive criticism and to Dr Cezary Sielużycki for discussions on the final version of the manuscript.

Additional information

This work was supported by National Science Centre, Poland.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Jasiulewicz, H., Kordecki, W. Multiplicative parameters and estimators: applications in economics and finance. Ann Oper Res 238, 299–313 (2016). https://doi.org/10.1007/s10479-015-2035-x

DOI: https://doi.org/10.1007/s10479-015-2035-x