Abstract

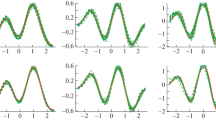

We study the nonparametric regression estimation problem with a random design in \({\mathbb{R}}^{p}\) with \(p\ge 2\), using a projection estimator obtained by least squares minimization. Our contribution is to consider non-compact estimation domains in \({\mathbb {R}}^{p}\), on which we recover the function, and to provide a theoretical study of the risk of the estimator relative to a norm weighted by the distribution of the design. We propose a model selection procedure in which the model collection is random and takes into account the discrepancy between the empirical norm and the norm associated with the distribution of the design. We prove that the resulting estimator automatically optimizes the bias-variance trade-off in both norms, and we illustrate the numerical performance of our procedure on simulated data.

Notes

In general, it is a semi-norm, but we only consider subspaces on which it is a norm.

References

Arlot, S., Massart, P. (2009). Data-driven calibration of penalties for least-squares regression. Journal of Machine Learning Research, 10(10), 245–279.

Baraud, Y. (2000). Model selection for regression on a fixed design. Probability Theory and Related Fields, 117(4), 467–493.

Baraud, Y. (2002). Model selection for regression on a random design. ESAIM: Probability and Statistics, 6, 127–146.

Barron, A., Birgé, L., Massart, P. (1999). Risk bounds for model selection via penalization. Probability Theory and Related Fields, 113(3), 301–413.

Birgé, L., Massart, P. (1998). Minimum contrast estimators on sieves: Exponential bounds and rates of convergence. Bernoulli, 4(3), 329–375.

Cohen, A., Davenport, M. A., Leviatan, D. (2013). On the stability and accuracy of least squares approximations. Foundations of Computational Mathematics, 13(5), 819–834.

Comte, F., Genon-Catalot, V. (2018). Laguerre and Hermite bases for inverse problems. Journal of the Korean Statistical Society, 47(3), 273–296.

Comte, F., Genon-Catalot, V. (2020a). Regression function estimation as a partly inverse problem. Annals of the Institute of Statistical Mathematics, 72(4), 1023–1054.

Comte, F., Genon-Catalot, V. (2020b). Regression function estimation on non compact support in an Heteroscesdastic model. Metrika, 83(1), 93–128.

Comte, F., Marie, N. (2021). On a Nadaraya-Watson estimator with two bandwidths. Electronic Journal of Statistics, 15(1), 2566–2607.

Efromovich, S. (1999). Nonparametric curve estimation: Methods, theory and applications. Springer series in statistics, New York: Springer.

Gittens, A., Tropp, J. A. (2011). Tail bounds for all eigenvalues of a sum of random matrices. arXiv:1104.4513 [math].

Györfi, L., Kohler, M., Krzyżak, A., Walk, H. (2002). A distribution-free theory of nonparametric regression. Springer series in statistics, New York, NY: Springer New York.

Härdle, W., Marron, J. S. (1985). Optimal bandwidth selection in nonparametric regression function estimation. The Annals of Statistics, 13(4), 1465–1481.

Köhler, M., Schindler, A., Sperlich, S. (2014). A review and comparison of bandwidth selection methods for Kernel regression: Review of bandwidth selection for regression. International Statistical Review, 82(2), 243–274.

Lacour, C., Massart, P., Rivoirard, V. (2017). Estimator selection: A new method with applications to Kernel density estimation. Sankhya A, 79(2), 298–335.

Mabon, G. (2017). Adaptive deconvolution on the non-negative real line: Adaptive deconvolution on R+. Scandinavian Journal of Statistics, 44(3), 707–740.

Nadaraya, E. A. (1964). On estimating regression. Theory of Probability & Its Applications, 9(1), 141–142.

Sacko, O. (2020). Hermite density deconvolution. Latin American Journal of Probability and Mathematical Statistics, 17(1), 419–443.

Tao, T. (2008). The divisor bound. https://terrytao.wordpress.com/2008/09/23/the-divisor-bound.

Tropp, J. A. (2012). User-friendly tail bounds for sums of random matrices. Foundations of Computational Mathematics, 12(4), 389–434.

Tsybakov, A. B. (2009). Introduction to nonparametric estimation. Series in statistics. London: Springer.

Watson, G. S. (1964). Smooth regression analysis. Sankhyā: The Indian Journal of Statistics, Series A, 26(4), 359–372.

Acknowledgements

I want to thank Fabienne Comte and Céline Duval for their helpful advice and their support of my work. I also want to thank Florence Merlevède for her help with the second inequality of the Matrix Chernoff bound. Finally, I want to thank Herb Susmann for proofreading this article.

Ethics declarations

Conflict of interest

The author declares that they have no conflict of interest.

Additional information

This work was supported by a grant from Région Île-de-France.

Appendices

A Linear algebra

Lemma 8

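Let E be a Euclidean vector space and let \(\ell :E\rightarrow {\mathbb {R}}^n\) be an injective linear map. For \(y\in {\mathbb {R}}^n\), the solution of the problem:

\[\min _{a\in E} \left| \left| y - \ell (a) \right| \right| _{{\mathbb {R}}^n}^2\]

is given by:

\[{\hat{a}} = (\ell ^*\ell )^{-1}\ell ^*(y),\]

where \(\ell ^*:{\mathbb {R}}^n\rightarrow E\) is the adjoint of \(\ell \), characterized by the relation \({\langle }{ y, \ell (a) }{\rangle }_{{\mathbb {R}}^n} = {\langle }{ \ell ^*(y), a }{\rangle }_{E}\) for all \(a\in E\) and \(y\in {\mathbb {R}}^n\). The map \(\ell ^*\ell \) is invertible because \(\ell \) is injective.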
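The least-squares characterization of Lemma 8 can be checked numerically in the matrix case. The following is a minimal sketch, assuming \(E = {\mathbb {R}}^d\) with the standard inner product and \(\ell (a) = Xa\) for a matrix `X` with full column rank, so that \(\ell ^*\) is multiplication by the transpose of `X`. The function name `least_squares_via_adjoint` and the simulated data are illustrative and do not come from the article.

```python
import numpy as np

def least_squares_via_adjoint(X, y):
    """Solve min_a ||y - X a||^2 through the normal equations (X^T X) a = X^T y."""
    gram = X.T @ X   # matrix of l* l, invertible when X has full column rank
    rhs = X.T @ y    # l*(y)
    return np.linalg.solve(gram, rhs)

rng = np.random.default_rng(0)
X = rng.standard_normal((50, 4))   # n = 50, dim E = 4 (hypothetical sizes)
y = rng.standard_normal(50)

a_hat = least_squares_via_adjoint(X, y)

# Reference solution from a generic least-squares solver.
a_ref, *_ = np.linalg.lstsq(X, y, rcond=None)

# At the minimizer, the residual is orthogonal to the range of X.
residual = y - X @ a_hat
```

Both solutions should agree up to floating-point error, and `X.T @ residual` should vanish, which is the orthogonality condition behind the formula.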

Lemma 9

Let \(\textbf{A}\), \(\textbf{B}\) be square matrices. If \(\textbf{A}\) is invertible and \(\left| \left| \textbf{A}^{-1}\textbf{B} \right| \right| _{\textrm{op}}<1\), then \(\textbf{A} +\textbf{B}\) is invertible and it holds:
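The invertibility claim follows from writing \(\textbf{A}+\textbf{B} = \textbf{A}(\textbf{I}+\textbf{A}^{-1}\textbf{B})\) and expanding \((\textbf{I}+\textbf{A}^{-1}\textbf{B})^{-1}\) as a Neumann series. The sketch below illustrates this numerically, together with one standard consequence, \(\Vert (\textbf{A}+\textbf{B})^{-1}-\textbf{A}^{-1}\Vert _{\textrm{op}} \le \rho \Vert \textbf{A}^{-1}\Vert _{\textrm{op}}/(1-\rho )\) with \(\rho = \Vert \textbf{A}^{-1}\textbf{B}\Vert _{\textrm{op}}\). This inequality is a textbook perturbation bound used here as an assumption; it is not necessarily the exact inequality of Lemma 9. The matrices and names are illustrative.

```python
import numpy as np

def op_norm(M):
    """Operator (spectral) norm, i.e. the largest singular value."""
    return np.linalg.norm(M, 2)

rng = np.random.default_rng(1)
d = 5
A = np.diag(np.arange(1.0, d + 1.0)) + 0.1 * rng.standard_normal((d, d))
A_inv = np.linalg.inv(A)

# Rescale a random perturbation so that ||A^{-1} B||_op equals 0.5 < 1.
G = rng.standard_normal((d, d))
B = 0.5 * G / op_norm(A_inv @ G)
rho = op_norm(A_inv @ B)

# Truncated Neumann series: (A + B)^{-1} = sum_k (-A^{-1} B)^k A^{-1}.
term = A_inv.copy()
neumann = np.zeros((d, d))
for _ in range(80):
    neumann += term
    term = -(A_inv @ B) @ term

inv_sum = np.linalg.inv(A + B)
gap = op_norm(inv_sum - A_inv)
bound = rho * op_norm(A_inv) / (1.0 - rho)
```

With \(\rho = 0.5\) the series converges geometrically, so 80 terms are far more than needed for agreement with the direct inverse.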

B Concentration inequalities

Proofs of the following bounds can be found in Tropp (2012) and Gittens and Tropp (2011).

Theorem 5

(Matrix Chernoff bound) Let \({\textbf{Z}}_1, \dotsc , {\textbf{Z}}_n\) be independent random self-adjoint positive semi-definite matrices with dimension d, such that \(\sup _k \lambda _{\max }({\textbf{Z}}_k) \le R\) a.s. If we define:

then we have:
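A Chernoff-type bound of this kind can be checked by simulation. The sketch below assumes the lower-tail form stated in Tropp (2012, Theorem 1.1), namely \({\mathbb {P}}[\lambda _{\min }(\sum _k {\textbf{Z}}_k) \le (1-\delta )\mu _{\min }] \le d\,[e^{-\delta }/(1-\delta )^{1-\delta }]^{\mu _{\min }/R}\) for \(\delta \in [0,1)\), with \(\mu _{\min } = \lambda _{\min }(\sum _k {\mathbb {E}}[{\textbf{Z}}_k])\). The version used in the article may be stated differently. The rank-one matrices, the sample sizes and all names are illustrative choices.

```python
import numpy as np

def chernoff_lower_tail_bound(d, mu_min, R, delta):
    """Upper bound on P(lambda_min(sum Z_k) <= (1 - delta) * mu_min), Tropp (2012, Thm 1.1)."""
    return d * (np.exp(-delta) / (1.0 - delta) ** (1.0 - delta)) ** (mu_min / R)

rng = np.random.default_rng(2)
d, n, delta, n_rep = 3, 60, 0.5, 2000

# Z_k = x_k x_k^T with x_k uniform on the sphere of radius sqrt(d):
# E[Z_k] is the identity and lambda_max(Z_k) = d, so R = d and mu_min = n.
R, mu_min = float(d), float(n)

hits = 0
for _ in range(n_rep):
    g = rng.standard_normal((n, d))
    x = np.sqrt(d) * g / np.linalg.norm(g, axis=1, keepdims=True)
    S = x.T @ x                           # sum over k of x_k x_k^T
    lam_min = np.linalg.eigvalsh(S)[0]    # eigenvalues are returned in ascending order
    hits += int(lam_min <= (1.0 - delta) * mu_min)

empirical = hits / n_rep
bound = chernoff_lower_tail_bound(d, mu_min, R, delta)
```

The empirical frequency `empirical` should fall below `bound`, typically by a wide margin, since the dimensional factor d makes the inequality conservative.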

Theorem 6

(Matrix Bernstein bound) Let \({\textbf{Z}}_1, \dotsc , {\textbf{Z}}_n\) be independent random self-adjoint matrices with dimension d, such that \({\mathbb {E}}[{\textbf{Z}}_k] = \textbf{0}\) and \(\sup _k \lambda _{\max }({\textbf{Z}}_k) \le R\) a.s. If \(v>0\) is such that:

then for all \(x>0\) we have:

C Combinatorics

Proposition 4

For \(n\ge 1\) and \(p\ge 2\) we have:

where \(H_n {:}{=} \sum _{k=1}^{n} \frac{1}{k}\) is the n-th harmonic number.

Proof

We compute:

\(\square \)

Theorem 7

(Divisor bound) Let \(N\in {\mathbb {N}}_+\) and let \(\textrm{div}(N)\) be the set of divisors of N. We have for all \(\epsilon >0\):

As a consequence, we have for all \(\epsilon >0\):

A proof of this result can be found in Tao (2008).
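The qualitative content of the divisor bound, that the number of divisors of N grows more slowly than any positive power of N, can be explored numerically. The following sketch tabulates the divisor counts with a sieve and reports the largest ratio of the count to \(N^{\epsilon }\) over a finite range. The range limit and the function names are illustrative, and a finite table only illustrates the statement; it proves nothing.

```python
def divisor_counts(n_max):
    """Return a list c with c[N] = number of divisors of N, for 1 <= N <= n_max."""
    counts = [0] * (n_max + 1)
    for k in range(1, n_max + 1):
        # k divides every multiple of k up to n_max.
        for multiple in range(k, n_max + 1, k):
            counts[multiple] += 1
    return counts

def max_ratio(counts, eps):
    """Largest value of card(div(N)) / N**eps over the tabulated range."""
    return max(counts[N] / N ** eps for N in range(1, len(counts)))

counts = divisor_counts(20000)
ratio_half = max_ratio(counts, 0.5)
ratio_quarter = max_ratio(counts, 0.25)
```

For \(\epsilon = 1/2\) the ratio stays below 2, in line with the elementary pairing argument that gives at most \(2\sqrt{N}\) divisors. Smaller values of \(\epsilon \) give a larger constant over the same range.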

About this article

Cite this article

Dussap, F. Nonparametric multiple regression by projection on non-compactly supported bases. Ann Inst Stat Math 75, 731–771 (2023). https://doi.org/10.1007/s10463-022-00863-1

DOI: https://doi.org/10.1007/s10463-022-00863-1