Abstract

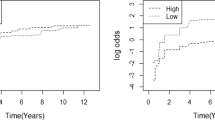

Broken adaptive ridge (BAR) is a computationally scalable surrogate for \(L_0\)-penalized regression. It iteratively performs reweighted \(L_2\)-penalized regressions and enjoys some appealing properties of both \(L_0\)- and \(L_2\)-penalized regression while avoiding some of their limitations. In this paper, we extend the BAR method to the semi-parametric accelerated failure time (AFT) model for right-censored survival data. Specifically, we propose a censored BAR (CBAR) estimator by applying the BAR algorithm to Leurgans' synthetic data and show that the resulting CBAR estimator is consistent for variable selection, possesses an oracle property for parameter estimation and enjoys a grouping property for highly correlated covariates. Both low- and high-dimensional covariates are considered. The effectiveness of our method is demonstrated and compared with some popular penalization methods using simulations. Real data illustrations are provided on a diffuse large-B-cell lymphoma data set and a glioblastoma multiforme data set.


References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control, 19, 716–723.

Box, J. K., Paquet, N., Adams, M. N., Boucher, D., Bolderson, E., O'Byrne, K. J., Richard, D. J. (2016). Nucleophosmin: From structure and function to disease development. BMC Molecular Biology, 17(19), 1–12.

Breheny, P., Huang, J. (2011). Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection. The Annals of Applied Statistics, 5(1), 232–253.

Breiman, L. (1996). Heuristics of instability and stabilization in model selection. Annals of Statistics, 24, 2350–2383.

Buckley, J., James, I. (1979). Linear regression with censored data. Biometrika, 66(3), 429–436.

Cai, T., Huang, J., Tian, L. (2009). Regularized estimation for the accelerated failure time model. Biometrics, 65(2), 394–404.

Chen, J., Chen, Z. (2008). Extended Bayesian information criteria for model selection with large model spaces. Biometrika, 95, 759–771.

Cox, D. R. (1972). Regression models and life-tables. Journal of the Royal Statistical Society: Series B (Methodological), 34(2), 187–220.

Cui, H., Li, R., Zhong, W. (2015). Model-free feature screening for ultrahigh dimensional discriminant analysis. Journal of the American Statistical Association, 110(510), 630–641.

Dai, L., Chen, K., Sun, Z., Liu, Z., Li, G. (2018). Broken adaptive ridge regression and its asymptotic properties. Journal of Multivariate Analysis, 168, 334–351.

Dai, L., Chen, K., Li, G. (2020). The broken adaptive ridge procedure and its applications. Statistica Sinica, 30(2), 1069–1094.

Datta, S., Le-Rademacher, J., Datta, S. (2007). Predicting patient survival from microarray data by accelerated failure time modeling using partial least squares and lasso. Biometrics, 63(1), 259–271.

Eirín-López, J. M., Frehlick, L. J., Ausió, J. (2006). Long-term evolution and functional diversification in the members of the nucleophosmin/nucleoplasmin family of nuclear chaperones. Genetics, 173(4), 1835–1850.

Fan, J., Li, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96(456), 1348–1360.

Fan, J., Li, R. (2002). Variable selection for Cox's proportional hazards model and frailty model. Annals of Statistics, 30(1), 74–99.

Fan, J., Lv, J. (2008). Sure independence screening for ultrahigh dimensional feature space. Journal of the Royal Statistical Society: Series B (Methodological), 70(5), 849–911.

Foster, D., George, E. (1994). The risk inflation criterion for multiple regression. Annals of Statistics, 22, 1947–1975.

Friedman, J., Hastie, T., Tibshirani, R. (2010). Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software, 33(1), 1–22.

Huang, J., Ma, S. (2010). Variable selection in the accelerated failure time model via the bridge method. Lifetime Data Analysis, 16(2), 176–195.

Huang, J., Ma, S., Xie, H. (2006). Regularized estimation in the accelerated failure time model with high-dimensional covariates. Biometrics, 62(3), 813–820.

Johnson, B. A. (2009). On lasso for censored data. Electronic Journal of Statistics, 3, 485–506.

Johnson, B. A., Lin, D. Y., Zeng, D. (2008). Penalized estimating functions and variable selection in semiparametric regression models. Journal of the American Statistical Association, 103(482), 672–680.

Johnson, K. D., Lin, D., Ungar, L. H., Foster, D., Stine, R. (2015). A risk ratio comparison of \(l_0\) and \(l_1\) penalized regression. arXiv:1510.06319 [math.ST].

Kalbfleisch, J. D., Prentice, R. L. (2002). The statistical analysis of failure time data (2nd ed.). Hoboken: Wiley.

Kawaguchi, E. S., Suchard, M. A., Liu, Z., Li, G. (2020). A surrogate \(l_0\) sparse Cox's regression with applications to sparse high-dimensional massive sample size time-to-event data. Statistics in Medicine, 39(6), 675–686.

Koul, H., Susarla, V., Ryzin, J. V. (1981). Regression analysis with randomly right-censored data. Annals of Statistics, 9(6), 1276–1288.

Leurgans, S. (1987). Linear models, random censoring and synthetic data. Biometrika, 74(2), 301–309.

Li, Y., Dicker, L., Zhao, S. D. (2014). The Dantzig selector for censored linear regression models. Statistica Sinica, 24(1), 251–268.

Liu, Y., Chen, X., Li, G. (2020). A new joint screening method for right-censored time-to-event data with ultra-high dimensional covariates. Statistical Methods in Medical Research, 29(6), 1499–1513.

Mallows, C. (1973). Some comments on \(c_p\). Technometrics, 15, 661–675.

Mummenhoff, J., Houweling, A. C., Peters, T., Christoffels, V. M., Rüther, U. (2001). Expression of Irx6 during mouse morphogenesis. Mechanisms of Development, 103(1–2), 193–195.

Nachmani, D., Bothmer, A. H., Grisendi, S., Mele, A., Pandolfi, P. P. (2019). Germline NPM1 mutations lead to altered rRNA 2′-O-methylation and cause dyskeratosis congenita. Nature Genetics, 51(10), 1518–1529.

Nardi, Y., Rinaldo, A. (2008). On the asymptotic properties of the group lasso estimator for linear models. Electronic Journal of Statistics, 2, 605–633.

Schwarz, G. (1978). Estimating the dimension of a model. Annals of Statistics, 6, 461–464.

Shen, X., Pan, W., Zhu, Y. (2012). Likelihood-based selection and sharp parameter estimation. Journal of the American Statistical Association, 107, 223–232.

Stute, W. (1993). Consistent estimation under random censorship when covariables are present. Journal of Multivariate Analysis, 45(1), 89–103.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological), 58(1), 267–288.

Tibshirani, R. (1997). The lasso method for variable selection in the Cox model. Statistics in Medicine, 16(4), 385–395.

Wang, S., Nan, B., Zhu, J., Beer, D. G. (2008). Doubly penalized Buckley–James method for survival data with high-dimensional covariates. Biometrics, 64(1), 132–140.

Yuan, M., Lin, Y. (2006). Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 68(1), 49–67.

Zhang, C. H. (2010). Nearly unbiased variable selection under minimax concave penalty. Annals of Statistics, 38(2), 894–942.

Zhao, H., Wu, Q., Li, G., Sun, J. (2019). Simultaneous estimation and variable selection for interval-censored data with broken adaptive ridge regression. Journal of the American Statistical Association, 115(529), 204–216.

Zhou, M. (1992). Asymptotic normality of the synthetic data regression estimator for censored survival data. Annals of Statistics, 20(2), 1002–1021.

Zhu, L., Li, L., Li, R., Zhu, L. (2011). Model-free feature screening for ultrahigh dimensional data. Journal of the American Statistical Association, 106(496), 1464–1475.

Zou, H. (2006). The adaptive lasso and its oracle properties. Journal of the American Statistical Association, 101(476), 1418–1429.

Zou, H., Hastie, T. (2005). Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 67(2), 301–320.

Acknowledgements

We are grateful to the referees, the associate editor and the editor for their helpful comments. The glioblastoma multiforme data used in Sect. 4.2 were generated by the TCGA Research Network: https://www.cancer.gov/tcga.


The research of Gang Li was partly supported by National Institutes of Health Grants P30 CA-16042, P50 CA211015, and UL1TR000124-02. The research of Zhihua Sun was partly supported by Natural Science Foundation of China 11871444. The research of Yi Liu was partly supported by Natural Science Foundation of China 11801567.

Appendix: Proofs of the theorems


We first introduce notations and lemmas used to prove Theorem 1.

Using the method of Leurgans (1987), we transform \({\mathbf{Y}}\) into synthetic data \({\mathbf{Y}}^*\). Let \({\varvec{\beta }}=( {\varvec{\alpha }}^{\top }, {\varvec{\gamma }}^{\top })^{\top }\), where \({\varvec{\alpha }}\) and \({\varvec{\gamma }}\) are \(q_n\times 1\) and \((p_n-q_n) \times 1\) vectors, respectively, and let \({\varvec{\Sigma }}_n={\mathbf{x}}^{\top } {\mathbf{x}}/n\).
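As a rough illustration of the synthetic-data step, the sketch below computes \(Y_i^* = \int _0^{Z_i} \mathrm{d}t/(1-{\hat{G}}(t))\), the form Leurgans' transformation takes for nonnegative responses, where \(Z_i\) is the observed (possibly censored) response and \({\hat{G}}\) is the Kaplan-Meier estimate of the censoring distribution. The function names, the simple handling of ties, and the omission of any truncation at an upper limit are assumptions of this sketch rather than details taken from the paper.

```python
import numpy as np

def censoring_survival(z, delta):
    """Kaplan-Meier estimate of the censoring survival function 1 - G.

    Censoring "events" are the observations with delta == 0.
    Returns the sorted distinct times and the estimate just after each time.
    """
    z = np.asarray(z, dtype=float)
    delta = np.asarray(delta, dtype=int)
    times = np.unique(z)
    surv = np.empty_like(times)
    s = 1.0
    for k, t in enumerate(times):
        at_risk = np.sum(z >= t)                      # still under observation at t
        censored = np.sum((z == t) & (delta == 0))    # censored exactly at t
        s *= 1.0 - censored / at_risk
        surv[k] = s
    return times, surv

def leurgans_synthetic(z, delta):
    """Synthetic responses Y*_i = integral_0^{Z_i} dt / (1 - G_hat(t)), Z_i >= 0."""
    z = np.asarray(z, dtype=float)
    times, surv = censoring_survival(z, delta)
    # On [times[k-1], times[k]) the step function equals surv[k-1];
    # before the first observed time it equals 1.
    left_value = np.concatenate(([1.0], surv[:-1]))
    widths = np.diff(np.concatenate(([0.0], times)))
    cumulative = np.cumsum(widths / left_value)       # integral up to each time
    return cumulative[np.searchsorted(times, z)]

z = np.array([1.0, 2.0, 3.0])   # observed responses min(T, C)
delta = np.array([1, 0, 1])     # 1 = event observed, 0 = censored
y_star = leurgans_synthetic(z, delta)
```

In this toy input the censored observation at time 2 halves the estimated censoring survival, so the last response is stretched from 3 to 4 while the first two are unchanged. With no censoring at all, the transformation returns the responses as they are.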

For simplicity, we write \({\varvec{\alpha }}^*( {\varvec{\beta }})\) and \({\varvec{\gamma }}^*( {\varvec{\beta }})\) as \({\varvec{\alpha }}^*\) and \({\varvec{\gamma }}^*\) hereafter. The matrix \({\varvec{\Sigma }}_n^{-1}\) can be partitioned as

\[ {\varvec{\Sigma }}_n^{-1} = \begin{pmatrix} \mathbf{A}_{11} & \mathbf{A}_{12} \\ \mathbf{A}_{12}^{\top } & \mathbf{A}_{22} \end{pmatrix}, \]

where \(\mathbf{A}_{11}\) is a \(q\times q\) matrix. Multiplying equation (10) by \(( {\mathbf{x}}^{\top } {\mathbf{x}})^{-1}( {\mathbf{x}}^{\top } {\mathbf{x}}+\lambda _n {\mathbf{D}}( {\varvec{\beta }}))\) gives

where \({\varvec{\varepsilon }}^*={\mathbf{Y}}^*-{\mathbf{x}}{{\varvec{\beta }}_0}\), \({\hat{{\varvec{\beta }}}}_\mathrm{Z}=({\mathbf{x}}^{\top }{} {\mathbf{x}})^{-1}{} {\mathbf{x}}^{\top }{} {\mathbf{Y}}^*\), \({\mathbf{D}}_1( {\varvec{\alpha }})={\text{ diag }} (\alpha _1^{-2},...,\alpha _{{q}}^{-2})\) and \({\mathbf{D}}_2( {\varvec{\gamma }})={\text{ diag }} (\gamma _1^{-2},...,\gamma _{p_n-{q}}^{-2})\).
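For orientation, the displays referred to as (10) and (11) can be sketched as follows. This is a reconstruction, not a verbatim restoration: it assumes that \(g\) is the BAR update map with \({\mathbf{D}}( {\varvec{\beta }})={\text{ diag }}(\beta _1^{-2},\ldots ,\beta _{p_n}^{-2})\), that \({\varvec{\beta }}_0=({\varvec{\beta }}_{01}^{\top }, \mathbf{0}^{\top })^{\top }\), and that \({\varvec{\Sigma }}_n^{-1}\) has blocks \(\mathbf{A}_{11}\), \(\mathbf{A}_{12}\), \(\mathbf{A}_{12}^{\top }\) and \(\mathbf{A}_{22}\).

\[
g({\varvec{\beta }}) = \begin{pmatrix} {\varvec{\alpha }}^*({\varvec{\beta }}) \\ {\varvec{\gamma }}^*({\varvec{\beta }}) \end{pmatrix}
= \{ {\mathbf{x}}^{\top } {\mathbf{x}}+\lambda _n {\mathbf{D}}({\varvec{\beta }})\}^{-1} {\mathbf{x}}^{\top } {\mathbf{Y}}^* .
\]

Since \(({\mathbf{x}}^{\top } {\mathbf{x}})^{-1}\{ {\mathbf{x}}^{\top } {\mathbf{x}}+\lambda _n {\mathbf{D}}({\varvec{\beta }})\} = \mathbf{I}+(\lambda _n/n){\varvec{\Sigma }}_n^{-1}{\mathbf{D}}({\varvec{\beta }})\), multiplying through and subtracting \({\varvec{\beta }}_0\) from both sides would give

\[
\begin{pmatrix} {\varvec{\alpha }}^*-{\varvec{\beta }}_{01} \\ {\varvec{\gamma }}^* \end{pmatrix}
+ \frac{\lambda _n}{n}
\begin{pmatrix}
\mathbf{A}_{11}{\mathbf{D}}_1({\varvec{\alpha }}){\varvec{\alpha }}^* + \mathbf{A}_{12}{\mathbf{D}}_2({\varvec{\gamma }}){\varvec{\gamma }}^* \\
\mathbf{A}_{12}^{\top }{\mathbf{D}}_1({\varvec{\alpha }}){\varvec{\alpha }}^* + \mathbf{A}_{22}{\mathbf{D}}_2({\varvec{\gamma }}){\varvec{\gamma }}^*
\end{pmatrix}
= {\hat{{\varvec{\beta }}}}_\mathrm{Z}-{\varvec{\beta }}_0
= ({\mathbf{x}}^{\top } {\mathbf{x}})^{-1} {\mathbf{x}}^{\top } {\varvec{\varepsilon }}^* .
\]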

Lemma 1

Let \(\delta _n\) be a sequence of positive real numbers satisfying \(\delta _n \rightarrow \infty\) and \(p_n\delta _n^2/\lambda _n \rightarrow 0\). Define \(\mathbf{H}_n = \{ {\varvec{\beta }}\in {\mathbb {R}}^{p_n}: \Vert {\varvec{\beta }}-{\varvec{\beta }}_0\Vert \le \delta _n\sqrt{p_n/n}\}\) and \(\mathbf{H}_{n1} = \{ {\varvec{\alpha }}\in {\mathbb {R}}^{{q}}: \Vert {\varvec{\alpha }}-{\varvec{\beta }}_{01}\Vert \le \delta _n\sqrt{p_n/n}\}\). Assume conditions (C1)–(C5) hold. Then, with probability tending to 1, we have

(a) \(\sup _{ {\varvec{\beta }} \in \mathbf{H}_n} {\Vert {\varvec{\gamma }}^*\Vert }/{\Vert {\varvec{\gamma }}\Vert }< {1}/{C_0}, {\text{ for some constant }} C_0>1\);

(b) \(g\) is a mapping from \(\mathbf{H}_n\) to itself.

Proof

We first prove part (a).

First, under \(\lambda _n/\sqrt{n} \rightarrow 0\) and \(p_n\delta _n^2/\lambda _n \rightarrow 0\), we have \(\delta _n\sqrt{p_n/n} \rightarrow 0\).

Let \({\hat{{\varvec{\beta }}}}_\mathrm{Z}=({\mathbf{x}}^{\top }{} {\mathbf{x}})^{-1}{} {\mathbf{x}}^{\top }{} {\mathbf{Y}}^*\), \(\omega _{ji}=(({\mathbf{x}}^{\top } {\mathbf{x}})^{-1}{} {\mathbf{x}}^{\top } )_{ji}\), \(\mu _j^*=\sum _i \omega _{ji}\int _{0}^{T_n}{F_i \mathrm{d}t}\) and \({\varvec{\mu }} =(\mu _1^*, \mu _2^*, ..., \mu _{p_n}^*)\). For any \(p_n\)-vector \({\mathbf{b}}_n\) satisfying \(\Vert {\mathbf{b}}_n\Vert \le 1\), define \(t_n^2= {\mathbf{b}}_n^{\top } {\varvec{\Omega }}(\infty ) {\mathbf{b}}_n\). Then, we have \(\sqrt{n} \, t_n^{-1} {\mathbf{b}}_n^{\top } ({\hat{{\varvec{\beta }}}}_\mathrm{Z}-{\varvec{\mu }}) \rightarrow _D N(0,1).\) This result can be proved using techniques similar to those in the proof of Theorem 3.1 of Zhou (1992), as outlined below. First, we decompose \({\mathbf{b}}_n^{\top } ({\hat{{\varvec{\beta }}}}_\mathrm{Z}-{\varvec{\mu }})\), as in (3.6) of Zhou (1992), into a main term \(S_{{\varvec{\beta }}}(T^n)\) and a remainder term \(SS_{{\varvec{\beta }}}(T^n)\), i.e., \({\mathbf{b}}_n^{\top } ({\hat{{\varvec{\beta }}}}_\mathrm{Z}-{\varvec{\mu }})=S_{{\varvec{\beta }}}(T^n)+SS_{{\varvec{\beta }}}(T^n)\), where \(S_{{\varvec{\beta }}}(T^n)\) is a weighted sum of \({{\hat{H}}}(t)-H(t)\) and \({{\hat{G}}}(t)-G(t)\), and \(SS_{{\varvec{\beta }}}(T^n)\) is a weighted sum of \(({{\hat{H}}}(t)-H(t))({{\hat{G}}}(t)-G(t))\) and \(({{\hat{H}}}(t)-H(t))({{\hat{H}}}(t)-H(t))\). Second, under conditions (C2) and (C3), one can show that \(\sqrt{n}SS_{{\varvec{\beta }}}(T^n)\) is negligible. Finally, by applying the martingale central limit theorem and conditions (C1) and (C4), we establish the asymptotic normality of \(\sqrt{n}S_{{\varvec{\beta }}}(T^n)\). By conditions (C1) and (C2), we have \(\sqrt{n}t_n^{-1}{} {\mathbf{b}}_n^{\top }({\varvec{\beta }}_0 - {\varvec{\mu }}) = o_p(1)\), for \({\mathbf{b}}_n={\mathbf{e}}_i=(0,...,1,0,...,0)\). Hence, we have \(\Vert {\hat{{\varvec{\beta }}}}_{\mathrm{Z}}-{\varvec{\beta }}_0\Vert ^2=O_p(p_n/n)\).

It then follows from (11) that

Note that \(\Vert {\varvec{\alpha }} - {\varvec{\beta }}_{01}\Vert \le \delta _n(p_n/n)^{1/2}\) and \(\Vert {\varvec{\alpha }}^*\Vert \le \Vert g( {\varvec{\beta }})\Vert \le \Vert {\hat{{\varvec{\beta }}}}_\mathrm{Z}\Vert =O_p({\sqrt{p_n}})\). By assumptions (C4) and (C5), we have

where the second inequality uses the fact \(\Vert \mathbf{A}_{12}^{\top } \Vert \le \sqrt{2}\, {\tilde{C}}\), which follows from the inequality \(\Vert \mathbf{A}_{12}{} \mathbf{A}_{12}^{\top } \Vert -\Vert \mathbf{A}_{11}^2\Vert \le \Vert \mathbf{A}_{11}^2+\mathbf{A}_{12}{} \mathbf{A}_{21}\Vert \le \Vert {\varvec{\Sigma }}_n^{-2}\Vert <{\tilde{C}}^2.\) Combining (12) and (13) gives

Note that \(\mathbf{A}_{22}\) is positive definite and, by the singular value decomposition, \(\mathbf{A}_{22}=\sum _{i=1}^{p_n-{q}}\tau _{2i} {\mathbf{u}}_{2i} {\mathbf{u}}_{2i}^{\top }\), where \(\tau _{2i}\) and \({\mathbf{u}}_{2i}\) are the eigenvalues and eigenvectors of \(\mathbf{A}_{22}\). Then, since \(1/{\tilde{C}}<\tau _{2i}< {\tilde{C}}\), we have

This, together with (14) and (C4), implies that with probability tending to 1,

Let \({\mathbf{D}}_{\gamma */\gamma }=(\gamma ^*_1/\gamma _1, \ldots ,\gamma ^*_{p_n-{q}}/\gamma _{p_n-{q}})^{\top }\). Because \(\Vert {\varvec{\gamma }}\Vert \le \delta _n\sqrt{p_n/n}\), we have

and

Combining (15), (16) and (17), we have that with probability tending to 1,

for some constant \(C_0 > 1\) provided that \(\lambda _n/({p_n}\delta _n^2) \rightarrow \infty\).

It is worth noting that \(\Pr (\Vert {\mathbf{D}}_{{\varvec{\gamma }}*/{\varvec{\gamma }}}\Vert \rightarrow 0) \rightarrow 1\), as \(n \rightarrow \infty\). Furthermore, with probability tending to 1,

This proves part (a).

Next we prove part (b). First, it is easy to see from (17) and (18) that, as \(n \rightarrow \infty\),

Then, by (11), we have

Similar to (13), it is easy to verify that

Moreover, with probability tending to 1,

where the last step follows from (15), (19), and the fact that \(\Vert \mathbf{A}_{12}\Vert \le \sqrt{2}{\tilde{C}}\). It follows from (20), (21) and (22) that with probability tending to 1,

Because \(\delta _n\sqrt{p_n}/\sqrt{n} \rightarrow 0\), we have, as \(n \rightarrow \infty\),

Combining (19) and (24) completes the proof of part (b).\(\square\)

Lemma 2

Assume that (C1)–(C5) hold. For any q-vector \({\mathbf{c}}\) satisfying \(\Vert {\mathbf{c}}\Vert \le 1\), define \({z^2}= {\mathbf{c}}^{\top } {\varvec{\Omega }}_{1} \mathbf{c}\) as in Theorem 1. Define

Then, with probability tending to 1,

(a) \(f( {\varvec{\alpha }})\) is a contraction mapping from \({\mathbf{b}}_{n} \equiv \{ {\varvec{\alpha }}\in {\mathbb {R}}^{{q}}: \Vert {\varvec{\alpha }}-{\varvec{\beta }}_{01}\Vert \le \delta _n\sqrt{p_n/n}\}\) to itself;

(b) \(\sqrt{n} \, {z^{-1} \mathbf{c}^{\top }}(\hat{ {\varvec{\alpha }}}^{\circ }- {\varvec{\beta }}_{01}) \rightsquigarrow {\mathcal {N}}(0,1),\) where \(\hat{ {\varvec{\alpha }}}^{\circ }\) is the unique fixed point of \(f({\varvec{\alpha }})\) defined by

Proof

We first prove part (a). Note that (25) can be rewritten as

where \({\hat{{\varvec{\beta }}}}_{1\mathrm Z}=( {\mathbf{x}}_1^{\top } {\mathbf{x}}_1)^{-1} {\mathbf{x}}_1^{\top } {\mathbf{Y}}^*\). Then,

It follows from (26) and (27) that

where \(\delta _n \rightarrow \infty\) and \(\delta _n{/\sqrt{n}}\rightarrow 0\). Then we can get

This means that f is a mapping from the region \({\mathbf{b}}_n\) to itself.

Rewriting (25) as \(\{ {\mathbf{x}}_{1}^{\top }{ {\mathbf{x}}_1}+\lambda _n {\mathbf{D}}_1( {\varvec{\alpha }})\}f( {\varvec{\alpha }})= {\mathbf{x}}_{1}^{\top } {\mathbf{Y}}^*\) and differentiating with respect to \({\varvec{\alpha }}^{\top }\), we have

where \({\dot{f}}( {\varvec{\alpha }})={\partial f( {\varvec{\alpha }})}/{\partial { {\varvec{\alpha }}^{\top }}}\) and \({\text{ diag }} \{\frac{-2f_j( {\varvec{\alpha }})}{\alpha _j^3}\}= {\text{ diag }} \{\frac{-2f_1( {\varvec{\alpha }})}{\alpha _1^3},...,\frac{-2f_{{q}}( {\varvec{\alpha }})}{\alpha _{{q}}^3}\}.\) With the assumption \(\lambda _n/\sqrt{n}\rightarrow 0\),

Write \({\varvec{\Sigma }}_{n1} = \sum _{i=1}^{{q}}\tau _{1i} {\mathbf{u}}_{1i} {\mathbf{u}}_{1i}^{\top }\), where \(\tau _{1i}\) and \({\mathbf{u}}_{1i}\) are eigenvalues and eigenvectors of \({\varvec{\Sigma }}_{n1}\). Then, by (C4), \(1/{\tilde{C}}<\tau _{1i}< {\tilde{C}}\) for all i and

Therefore, it follows from \({\varvec{\alpha }}\in {\mathbf{b}}_n\), (32) and (C4) that

This, together with (31) and the fact \(\lambda _n/n\rightarrow 0\), implies that

Finally, we can get the conclusion in part (a) from (29) and (33).

Next we prove part (b). Write

By the first order resolvent expansion formula

the first term on the right-hand side of equation (34) can be rewritten as

Hence, by assumptions (C4) and (C5), we have

Furthermore, applying the first order resolvent expansion formula, it can be shown that

where \({\varvec{\mu }}_1 =(\mu _1^*, \mu _2^*, ..., \mu _{q}^*)\). \(I_2\) converges in distribution to N(0, 1) by the Lindeberg–Feller central limit theorem. Finally, combining (34), (35), and (36) proves part (b). \(\square\)

Proof of Theorem 1

Given the initial ridge estimator \({\hat{{\varvec{\beta }}}}^{(0)}\) in (4), we have

By the first-order resolvent expansion formula and \(\xi _n/\sqrt{n}\rightarrow 0\),

It is easy to see that \(\Vert {\mathbf{T}}_2\Vert =O_p(\sqrt{{p_n}/{n}}).\) Thus \(\Vert {\hat{{\varvec{\beta }}}}^{(0)}- {\varvec{\beta }}_0\Vert =O_p((p_n/n)^{1/2})\). This, combined with part (a) of Lemma 1, implies that

Hence, to prove part (i) of Theorem 1, it is sufficient to show that

where \(\hat{ {\varvec{\alpha }}}^\circ\) is the fixed point of \(f({\varvec{\alpha }})\) defined in part (b) of Lemma 2.

Define \({\varvec{\gamma }}^*= 0\) if \({\varvec{\gamma }} = 0\). Then, for any \({\varvec{\alpha }}\in {\mathbf{b}}_n\),

Combining (41) with the fact

implies that for any \({\varvec{\alpha }}\in {\mathbf{b}}_n\),

Therefore, \(g(\cdot )\) is continuous and thus uniformly continuous on the compact set \(\mathbf{H}_n\). This, together with (39) and (42), implies that as \(k\rightarrow \infty\),

with probability tending to 1.

Note that

where the last step follows from \(\Vert f({\hat{ {\varvec{\alpha }}}^{(k)}})-\hat{{\varvec{\alpha }}}^\circ \Vert =\Vert f({\hat{ {\varvec{\alpha }}}^{(k)}})-f(\hat{ {\varvec{\alpha }}}^\circ )\Vert \le (1/{\tilde{C}})\Vert {\hat{ {\varvec{\alpha }}}^{(k)}}-\hat{ {\varvec{\alpha }}}^\circ \Vert\). Let \(a_k=\Vert {\hat{ {\varvec{\alpha }}}^{(k)}}-\hat{{\varvec{\alpha }}}^\circ \Vert\), for all \(k\ge 0\). From (43), we can deduce that, with probability tending to 1, for any \(\epsilon >0\), there exists a positive integer N such that for all \(k> N\), \(|\eta _k|<\epsilon\) and

This proves (40).

Therefore, it immediately follows from (39) and (40) that, with probability tending to 1, \(\lim _{k\rightarrow \infty } {\varvec{\beta }}^{(k)}= \lim _{k\rightarrow \infty } (\hat{ {\varvec{\alpha }}}^{(k)\top } , \hat{ {\varvec{\gamma }}}^{(k)\top })^{\top }=(\hat{ {\varvec{\alpha }}}^{\circ \top } , 0)^{\top }\), which completes the proof of part (i). This, in addition to part (b) of Lemma 2, proves part (ii) of Theorem 1. \(\square\)
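The limit described in Theorem 1 can be illustrated numerically with a minimal sketch of the BAR iteration: an initial ridge fit followed by repeated reweighted ridge updates \({\varvec{\beta }} \mapsto \{ {\mathbf{x}}^{\top } {\mathbf{x}}+\lambda _n {\mathbf{D}}({\varvec{\beta }})\}^{-1} {\mathbf{x}}^{\top } {\mathbf{Y}}^*\) with \({\mathbf{D}}({\varvec{\beta }})={\text{ diag }}(\beta _j^{-2})\). Several things here are assumptions of the sketch and not taken from the paper: the function name `bar_fit`, the default tuning values, the algebraically equivalent rescaled update used to avoid dividing by coefficients near zero, and the use of simulated uncensored responses as a stand-in for synthetic data.

```python
import numpy as np

def bar_fit(x, y_star, lam, xi=1.0, max_iter=500, tol=1e-10):
    """Sketch of broken adaptive ridge: ridge start, then reweighted ridge steps."""
    p = x.shape[1]
    gram = x.T @ x
    xty = x.T @ y_star
    # Initial ridge estimator with tuning parameter xi.
    beta = np.linalg.solve(gram + xi * np.eye(p), xty)
    for _ in range(max_iter):
        # With B = diag(beta):
        #   (x'x + lam * B^{-2})^{-1} x'y = B (B x'x B + lam I)^{-1} B x'y,
        # so a coefficient that reaches zero stays at zero and no division occurs.
        scaled = gram * np.outer(beta, beta) + lam * np.eye(p)
        new_beta = beta * np.linalg.solve(scaled, beta * xty)
        converged = np.max(np.abs(new_beta - beta)) < tol
        beta = new_beta
        if converged:
            break
    return beta

# Toy data (hypothetical): two active covariates, four inactive ones.
rng = np.random.default_rng(0)
n, p = 200, 6
x = rng.standard_normal((n, p))
beta_true = np.array([2.0, -1.5, 0.0, 0.0, 0.0, 0.0])
y_star = x @ beta_true + 0.5 * rng.standard_normal(n)
beta_hat = bar_fit(x, y_star, lam=np.log(n))
```

In this setting the coefficients of the inactive covariates should shrink to numerical zero across iterations while the active ones settle near their true values, mirroring the limit \((\hat{ {\varvec{\alpha }}}^{\circ \top }, 0)^{\top }\) above. The choice `lam=np.log(n)` is only a convenient value for the toy example.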

Proof of Theorem 2

Recall that \({\hat{{\varvec{\beta }}}}^* =\lim _{k\rightarrow \infty }\hat{ {\varvec{\beta }}}^{(k+1)}\) and \(\hat{ {\varvec{\beta }}}^{(k+1)}=\arg \min _{ {\varvec{\beta }}} \{ Q({\varvec{\beta }}| \hat{ {\varvec{\beta }}}^{(k)})\}\), where

If \({\hat{\beta }}_\ell ^*\ne 0\) for \(\ell \in \{ i,j\}\), then \({\hat{{\varvec{\beta }}}}^*\) must satisfy the following normal equations for \(\ell \in \{ i,j\}\):

Thus, for \(\ell \in \{ i, j \}\),

where \(\hat{{\varvec{\varepsilon }}}^{*(k+1)}= {\mathbf{Y}}^*- {\mathbf{x}}\hat{ {\varvec{\beta }}}^{(k+1)}\). Moreover, because

we have

Letting \(k\rightarrow \infty\) in (45) and (46), we have \(\Vert \hat{{\varvec{\varepsilon }}}^{*}\Vert \le \Vert {\mathbf{Y}}^*\Vert\) and, for \(\ell \in \{i, j\}\), \({\hat{\beta }}_\ell ^{*-1}= {\mathbf{x}}_\ell ^{\top } \hat{{\varvec{\varepsilon }}}^{*}/{\lambda _n}\), where \(\hat{{\varvec{\varepsilon }}}^{*} = {\mathbf{Y}}^*- {\mathbf{x}}{\hat{{\varvec{\beta }}}}^*\). Therefore,

\(\square\)
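The chain of steps in this proof can be written out as a hedged sketch. It assumes that the objective has the usual BAR form \(Q({\varvec{\beta }}| \hat{ {\varvec{\beta }}}^{(k)})=\Vert {\mathbf{Y}}^*-{\mathbf{x}}{\varvec{\beta }}\Vert ^2+\lambda _n\sum _j \beta _j^2/({\hat{\beta }}_j^{(k)})^2\) and that the columns of \({\mathbf{x}}\) are standardized so that \(\Vert {\mathbf{x}}_i\Vert =\Vert {\mathbf{x}}_j\Vert =1\) with sample correlation \(\rho _{ij}={\mathbf{x}}_i^{\top }{\mathbf{x}}_j\); both are assumptions of the sketch, and the displays are not verbatim restorations.

\[
\begin{aligned}
&-2\,{\mathbf{x}}_\ell ^{\top }\big({\mathbf{Y}}^*-{\mathbf{x}}\hat{{\varvec{\beta }}}^{(k+1)}\big)+2\lambda _n\frac{{\hat{\beta }}_\ell ^{(k+1)}}{({\hat{\beta }}_\ell ^{(k)})^2}=0
\quad \Longrightarrow \quad
\frac{{\hat{\beta }}_\ell ^{(k+1)}}{({\hat{\beta }}_\ell ^{(k)})^2}=\frac{{\mathbf{x}}_\ell ^{\top }\hat{{\varvec{\varepsilon }}}^{*(k+1)}}{\lambda _n},\\
&\Vert \hat{{\varvec{\varepsilon }}}^{*(k+1)}\Vert ^2\le Q\big(\hat{{\varvec{\beta }}}^{(k+1)}| \hat{{\varvec{\beta }}}^{(k)}\big)\le Q\big(\mathbf{0}| \hat{{\varvec{\beta }}}^{(k)}\big)=\Vert {\mathbf{Y}}^*\Vert ^2,\\
&\Big|\frac{1}{{\hat{\beta }}_i^{*}}-\frac{1}{{\hat{\beta }}_j^{*}}\Big|
=\frac{|({\mathbf{x}}_i-{\mathbf{x}}_j)^{\top }\hat{{\varvec{\varepsilon }}}^{*}|}{\lambda _n}
\le \frac{\Vert {\mathbf{x}}_i-{\mathbf{x}}_j\Vert \,\Vert \hat{{\varvec{\varepsilon }}}^{*}\Vert }{\lambda _n}
\le \frac{\Vert {\mathbf{Y}}^*\Vert \sqrt{2(1-\rho _{ij})}}{\lambda _n}.
\end{aligned}
\]

Under these assumptions the bound vanishes as \(\rho _{ij}\rightarrow 1\), which is the sense in which highly correlated covariates receive nearly equal coefficients.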

About this article

Cite this article

Sun, Z., Liu, Y., Chen, K. et al. Broken adaptive ridge regression for right-censored survival data. Ann Inst Stat Math 74, 69–91 (2022). https://doi.org/10.1007/s10463-021-00794-3
