Abstract

Agriculture is one of the most crucial sectors, meeting the fundamental food needs of humanity. Plant diseases heighten economic and food security concerns for countries and disrupt their agricultural planning. Traditional methods for detecting plant diseases require a lot of labor and time. Consequently, many researchers and institutions strive to address these issues using advanced technological methods. Deep learning-based plant disease detection offers considerable progress and hope compared to classical methods. When trained with large and high-quality datasets, these technologies robustly detect diseases on plant leaves in early stages. This study systematically reviews the application of deep learning techniques in plant disease detection by analyzing 160 research articles from 2020 to 2024. The studies are examined in three areas: classification, detection, and segmentation of diseases on plant leaves. Publicly available datasets are also thoroughly reviewed. This systematic review offers a comprehensive assessment of the current literature, detailing the most popular deep learning architectures, the most frequently studied plant diseases, datasets, encountered challenges, and various perspectives. It provides new insights for researchers working in the agricultural sector. Moreover, it addresses the major challenges in the field of disease detection in agriculture. Thus, this study offers valuable information and a suitable solution based on deep learning applications for agricultural sustainability.

1 Introduction

Agriculture remains a vital economic sector for developing countries and provides an essential source of livelihood for rural communities. Agricultural productivity must therefore increase to meet the demands of a growing population (Mueller et al. 2020; Pooniya et al. 2022; Thai et al. 2023). However, the agricultural sector faces many challenges, such as diseases, pests, and ever-changing weather conditions. Pests and weather conditions in turn affect the spread of diseases and increase food safety concerns.

Plant diseases are major obstacles to agricultural production. According to Food and Agriculture Organization (FAO) reports, plant diseases and pests cause a loss of 20–40% in global food production (Oerke and Dehne 2004; Loey et al. 2020). Generally, the severity of plant diseases can be reduced by early detection, but this is difficult to achieve with manual methods. Usually, the effects of plant diseases first appear on the lower parts of plants and then spread throughout the crop. Therefore, continuous plant monitoring is vital to quickly identify diseases and prevent their spread, with particular emphasis on visually inspecting foliar diseases. In recent years, computer vision, machine learning, and deep learning methods have emerged as promising tools for disease diagnosis and management (Martinelli et al. 2015).

Timely intervention to reduce agricultural losses is of great importance for economic prosperity, food security, and ecological balance (Sunil et al. 2023b). Therefore, increasing interest in precision agriculture stimulates productivity and promotes sustainable growth. Various techniques are used for the early diagnosis of field diseases. Traditional diagnostic methods based on visual inspection are laborious, expensive, and relatively insensitive, which can lead to significant yield losses, especially for rural farmers. Recently, non-invasive methods have gained popularity and paved the way for automatic, fast, and accurate solutions (Kunduracioglu 2018). Among these solutions, image processing techniques using advanced cameras equipped with sensitive sensors are achieving promising results in disease diagnosis and management.

With the rapid growth of the global population, maximizing agricultural productivity becomes essential. In this context, precision agriculture practices significantly assist farmers in increasing productivity. Advanced remote sensing and sensor technologies play an important role in disease diagnosis and management (Shruthi et al. 2019; Umamaheswari et al. 2018).

To summarize, plant diseases have a significant impact on agricultural production and pose threats to global food security and economic stability. Early detection and efficient management are important in reducing these threats, and modern technologies allow these goals to be achieved more effectively. As a result, many automation models using Machine Learning, Deep Learning, and Image Processing methodologies have been developed to address these challenges.

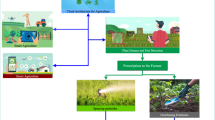

Advances in existing technologies, which make technological products easily accessible, have facilitated the development and widespread use of technology applications in various fields (Kunduracıoğlu and Durak 2018). Integrating IoT, sensors, drones, artificial intelligence, and blockchain technologies is revolutionizing automated farming (Rehman et al. 2022; Dhanaraju et al. 2022; Yao et al. 2022). Using IoT devices and various sensors, farms constantly collect information about machine operations, soil moisture levels, fertilizer levels, and weather conditions. Technology integration promotes agricultural sustainability. Blockchain technology has made the agricultural supply chain transparent and trustworthy, enabling customers to track and verify their food’s path from farm to table (Alahi et al. 2023). Automated processes should also minimize the wastage of resources, which benefits the environment. Overall, automated farming will be crucial to meeting the world’s food production needs and tackling issues such as resource shortages and climate change (Javaid et al. 2022).

Recent advancements in deep learning have transformed plant disease research, attracting much interest from researchers. Deep learning's flexibility and its ability to exploit large datasets make it particularly useful for tackling plant diseases. Convolutional neural networks (CNNs), a leading architecture in this field, have proven to be very accurate in detecting plant diseases. Additionally, the Vision Transformer (ViT) has recently gained popularity and is now widely used. It is reported that both CNN and ViT models excel at classifying, detecting, and segmenting plant diseases (Carion et al. 2020; Chen et al. 2018; Ramachandran et al. 2019; Vaswani et al. 2021; Aslan and ÖZÜPAK 2024). These deep learning methods can automatically identify important features in the data, automating the traditionally labor-intensive process of manual feature extraction. This ability to handle large datasets effectively is a significant advancement in agriculture. Traditional methods often lack reliability and performance, but the progress in deep learning offers highly accurate and autonomous detection of plant leaf diseases, significantly benefiting farmers (Chougui et al. 2022). The accurate and autonomous diagnosis provided by deep learning represents a significant step forward, especially for sustainable agriculture. Deep learning can boost productivity and support economic growth by helping countries with advanced agricultural planning and early disease detection (Arjunagi and Patil 2023; He et al. 2023). Early detection, increased productivity, and better food planning highlight the importance of deep learning in agriculture. Beyond agriculture, deep learning has also been successful in fields like medicine, defense, finance, and generative artificial intelligence. Overall, the successful use of this technology is crucial for enhancing agricultural production, ensuring sustainability, and securing food safety.

This comprehensive review covers many studies and many aspects, such as creating plant disease datasets, building disease detection models, and predicting disease severity. Our analysis sheds light on the evolution of deep learning models in plant disease detection over time and encompasses the use of various data collection platforms. Despite prior reviews and surveys, no single source encapsulates all facets of research in plant disease detection, which leaves evident research gaps. Alongside evaluating existing techniques and methodologies for crop management and yield enhancement, we discuss challenges and propose remedies for early disease detection and prevention. We emphasize the urgent need for a comprehensive assessment of the current status, identifying developing trends, examining the methodology used, and determining the overall efficacy of various approaches in plant disease detection. This systematic review aims to fill this information gap by thoroughly and carefully assessing the available literature on the uses of machine learning and deep learning in plant disease detection.

2 Methodology

This literature review aims to examine the use of deep learning techniques for detecting plant diseases through plant leaf analysis. We reviewed 160 articles published between 2020 and 2024 from trusted academic sources such as IEEE Xplore, Springer, Google Scholar, and SCOPUS. Using specific keywords, we first searched for articles including the latest work available in the literature. These key terms are “plant disease detection,” “plant disease classification,” “deep learning,” “classification,” “CNN,” and “transfer learning.” This study followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Fig. 1). These guidelines provide a methodological way to ensure complete and transparent quality control throughout the review process. The Research Questions (RQ) are as follows.

RQ1: What are the popular deep learning-based models for the classification, detection, and segmentation of plant diseases?

RQ2: How do different models perform in the classification, detection, and segmentation of plant diseases?

RQ3: What are the popular computer vision methods used in deep learning models for plant diseases?

RQ4: What are the commonly preferred datasets in deep learning models for plant diseases?

RQ5: Which plants or crops are most frequently studied in the selected research?

RQ6: What are the most commonly used approaches for the classification, detection, and segmentation of plant diseases?

RQ7: What is the diversity of research on the classification, detection, and segmentation of plant diseases?

RQ8: What challenges or areas for improvement exist in the research on the classification, detection, and segmentation of plant diseases?

Fig. 1 Flow map of the systematic review process based on PRISMA (Page et al. 2021)

According to the inclusion criteria, selected documents should discuss the use of deep learning techniques in classifying plant foliar diseases. The research must have been published between 2020 and 2024 and include performance statistics based on at least one deep learning algorithm. Preprints and articles that did not specify performance metrics were excluded from our study. We categorized the techniques as detection, classification, identification, and segmentation. For each article, the deep learning models, the plants studied, the datasets used, and the accuracy values were recorded, and the results obtained from the models were noted. The research was reviewed, the literature was compiled, and the outcomes were assessed as shown in Fig. 2.

The article selection process involved a three-stage screening process. Initially, duplicate records in the databases were removed. Subsequently, articles were screened based on their titles, followed by an assessment of abstracts. Finally, eligible articles underwent a full-text examination. The lead researcher carried out all these processes.

This study aimed to examine the use of CNN-based and ViT-based deep learning techniques in detecting plant diseases and evaluate their performance in this field.

2.1 Overview of deep learning

Machine learning, a branch of artificial intelligence, has significantly contributed to the progress and modernization of our world in recent years. With the advancement of technology, smart devices have become an integral part of our daily lives and find applications in various fields (Fernandes et al. 2024a). Machine learning applications not only convert spoken words into written text and identify features in photos but also adapt to user preferences for tailored news or social media feeds. Deep learning methodologies, a specialized subset of machine learning involving deep neural networks, are at the heart of many such applications, driving innovation across different domains. Deep learning uses computational models composed of multiple processing layers to learn representations of data at multiple levels of abstraction. It can find complex structures in large data sets by applying the backpropagation algorithm. In various fields, these methods have accelerated the advancement of technology. For example, deep convolutional networks have proved important in object detection, image recognition, and speech recognition tasks, while recurrent networks facilitate the analysis of sequential data (LeCun et al. 2015).

Deep learning was introduced in 2006 (Hinton 2006) and quickly acquired prominence, notably through the ImageNet contest. This contest provided a venue for demonstrating image recognition systems, with deep learning architectures standing out. These architectures have produced impressive results in object identification and are used in various industries, including the military, medicine, and finance. Research indicates that deep learning architectures outperform traditional approaches (Fernandes et al. 2024b).

According to the Google Trends report, the search phrase “deep learning” has seen a noticeable trend over time. Interest in deep learning started to grow in 2013, but it was not until roughly 2015 that searches rose sharply, reflecting a growing awareness of its importance and applicability in various sectors. Deep learning’s popularity grew significantly in 2016 and beyond, in line with its extensive adoption in industries such as technology, healthcare, and finance (see Fig. 3). The ongoing interest and engagement with the subject indicate a continual appreciation for the capabilities and possibilities of deep learning algorithms and methodologies. Notably, search interest peaked in 2022, which underscores the continued relevance of deep learning in addressing complex challenges and driving innovation across various domains.

2.2 Convolutional neural network

CNN is an essential artificial neural network model in deep learning. CNN has a specific structure that acts on input data via convolutional, pooling, and fully connected layers. Convolutional layers use filters to detect and extract features from data. Pooling layers reduce the output size while preserving the dominant features, whereas fully connected layers are employed for classification and prediction.

A convolutional layer is a collection of trainable filters used for feature extraction. Given input data X and a convolutional layer containing k filters, the output of the convolutional layer is determined by Eq. (1). Here, \(w_j\) and \(b_j\) indicate the weight and bias of the j-th filter, whereas f is an activation function. The * symbol represents the convolution operation.
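Eq. (1) itself is not reproduced in this text. A form consistent with the symbols defined above, and with the usual definition of a convolutional layer, would be the following, where \(y_j\) is an assumed name for the j-th output feature map:

```latex
y_j = f\left( X * w_j + b_j \right), \qquad j = 1, \dots, k \tag{1}
```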

The pooling layer is used to reduce the size of the resulting feature maps and the number of network parameters. The most popular approaches for this purpose are maximum and average pooling. Assuming a p × p pooling window S, the average pooling operation is given by Eq. (2), where \(x_{ij}\) denotes the activation value at location (i, j) and N indicates the total number of elements in S.
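Eq. (2) is likewise missing from this text. Assuming S is the set of positions inside one p × p window and \(y_S\) is an assumed name for its pooled output, average pooling is conventionally written as:

```latex
y_S = \frac{1}{N} \sum_{(i,j) \in S} x_{ij}, \qquad N = |S| = p \times p \tag{2}
```

Maximum pooling replaces the mean with \(\max_{(i,j) \in S} x_{ij}\).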

The fully connected layer converts the feature maps into a one-dimensional feature vector. Equation (3) describes this transformation, where γ and z represent the output vector and the input features, respectively, w stands for the weight, and b represents the bias of the fully connected layer.
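Eq. (3) is not shown in this text. Using only the symbols named above, the standard affine form of a fully connected layer would be the following sketch. Whether the original equation also wraps the right-hand side in an activation function is not recoverable from the text, so none is assumed here:

```latex
\gamma = w\,z + b \tag{3}
```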

CNN training optimizes the network parameters to minimize an error function, which improves performance. As a result, CNN is a sophisticated artificial neural network model that can reach high levels of accuracy in image processing and recognition.

In summary, a CNN includes convolutional layers that use filters to identify features in input data, an activation function that applies a nonlinear transformation to the convolution results, pooling layers that reduce the size of the results while preserving features, and fully connected layers that follow the convolution, activation, and pooling layers for prediction and classification tasks (see Fig. 4). CNN training involves reducing the error between predicted and real values.
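The layer sequence described above can be illustrated with a minimal, dependency-free sketch of a single forward pass: one filter, ReLU as the activation, average pooling, then one fully connected layer. All function names, the choice of ReLU, and the toy values are assumptions made for illustration. Real models use many filters and an optimized framework.

```python
def conv2d(x, w, b):
    """'Valid' 2D convolution as used in CNNs (cross-correlation) with one filter."""
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + u][j + v] * w[u][v] for u in range(kh) for v in range(kw)) + b
             for j in range(out_w)] for i in range(out_h)]


def relu(feature_map):
    """Element-wise nonlinearity applied to the convolution result."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def avg_pool(feature_map, p):
    """Non-overlapping p x p average pooling."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [[sum(feature_map[i + u][j + v] for u in range(p) for v in range(p)) / (p * p)
             for j in range(0, cols - p + 1, p)]
            for i in range(0, rows - p + 1, p)]


def fully_connected(z, w, b):
    """Affine map from the flattened feature vector z to one score per class."""
    return [sum(wi * zi for wi, zi in zip(row, z)) + bi for row, bi in zip(w, b)]


def forward(x, conv_w, conv_b, fc_w, fc_b, p=2):
    """Convolution -> activation -> pooling -> flatten -> fully connected."""
    pooled = avg_pool(relu(conv2d(x, conv_w, conv_b)), p)
    z = [v for row in pooled for v in row]  # flatten to a 1-D feature vector
    return fully_connected(z, fc_w, fc_b)


# Toy usage: a 5x5 image of ones, one 2x2 filter, two output classes.
image = [[1.0] * 5 for _ in range(5)]
scores = forward(image, [[1.0, 1.0], [1.0, 1.0]], 0.0,
                 [[0.25] * 4, [-0.25] * 4], [0.0, 1.0])
print(scores)  # [4.0, -3.0]
```

Training would adjust `conv_w`, `conv_b`, `fc_w`, and `fc_b` to reduce the error between these scores and the true labels. That step is omitted here.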

VGG is a CNN model created by Simonyan and Zisserman of the Visual Geometry Group at Oxford University for image recognition and classification applications. It comprises convolutional and max pooling layers followed by three fully connected classifier layers. VGG’s strong performance has been demonstrated on several image classification datasets, including ImageNet, and it is extensively used as the foundation for various CNN models. ResNet is a CNN model developed to overcome the vanishing gradient problem in deep networks. It avoids this problem using a special type of connection called a residual (skip) connection. Residual connections carry a layer's input forward and add it to the output of later layers, allowing the network to maintain information flow even in its deeper layers. As a result, ResNet performs strongly on ImageNet and other image classification tasks. Unlike ResNet, DenseNet connects each layer to all subsequent layers, so each layer performs feature extraction using information from all previous layers. In this way, DenseNet achieves higher performance with fewer parameters through efficient parameter use and continuous feature reuse. These results have made DenseNet a very popular model in computer vision applications. Xception (Chollet 2017) is a CNN model based on the Inception architecture. It replaces standard convolution layers with depthwise separable convolutions, which split the convolution into a depthwise convolution followed by a pointwise convolution. This technique increases accuracy while significantly reducing the number of parameters and computations the network must perform. The model is widely used in computer vision applications and has shown impressive performance on various image classification benchmarks.
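The parameter saving attributed to Xception-style separable convolutions can be checked with simple arithmetic. The sketch below counts weights only (biases ignored) for a standard convolution and for its depthwise plus pointwise replacement. The function names and the example layer sizes are illustrative assumptions, not values taken from any reviewed study.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution: every output channel sees every input channel."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Weights in a depthwise separable convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Example layer: 3 x 3 kernel, 128 input channels, 256 output channels.
print(standard_conv_params(3, 128, 256))   # 294912
print(separable_conv_params(3, 128, 256))  # 33920
```

For this example layer the separable form needs roughly one ninth of the weights, which is the kind of reduction that also motivates lightweight models such as MobileNet.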

New architectures continue to emerge. For example, MobileNet is designed to operate swiftly and effectively in resource-constrained environments, particularly on mobile devices, thanks to an architecture that is lighter and has fewer parameters than standard CNN models. SqueezeNet is recognized for its compressed architecture, particularly the Fire module, which uses fewer parameters to provide faster inference. EfficientNet uses a compound scaling strategy that adjusts network depth, width, and input resolution through scaling coefficients to obtain more efficient and effective models. NASNet uses automatic architecture search to find the most suitable network architecture and generates dataset-specific optimized models. ShuffleNet offers a lightweight and efficient architecture that uses group convolutions and channel shuffle operations to deliver high performance with fewer parameters. SENet learns the importance of each channel using a channel attention mechanism, enabling the network to perform better feature extraction.
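As one concrete illustration, the channel shuffle operation mentioned for ShuffleNet can be sketched on a plain list of channels. The idea is to view the channels as a (groups × channels-per-group) grid, transpose it, and flatten it again, so that the next group convolution receives channels from every previous group. The function name and the use of integers to stand in for feature maps are assumptions made for brevity.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups (reshape -> transpose -> flatten)."""
    n = len(channels)
    if n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # Position (g, i) in the grouped layout moves to position (i, g).
    return [channels[g * per_group + i]
            for i in range(per_group)
            for g in range(groups)]


# Six channels in two groups: [0, 1, 2] and [3, 4, 5].
print(channel_shuffle([0, 1, 2, 3, 4, 5], groups=2))  # [0, 3, 1, 4, 2, 5]
```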

2.3 Vision transformer

A deep learning model named the Vision Transformer (ViT) has recently attracted interest in computer vision. Conventional image classification models mainly depend on CNNs, whereas ViT uses a non-convolutional technique to analyze visual input. ViT is built on the Transformer architecture, which was originally designed for natural language processing (NLP) workloads (Pacal and Kılıcarslan 2024b). The self-attention mechanism is a key component of ViT, modeling interactions between different parts of the input image and focusing on relevant regions. The input image is split into fixed-size patches, which interact with one another via attention heads and fully connected layers within transformer blocks. The output layer then generates the result for classification or another task (see Fig. 5).
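The patch-splitting step can be sketched without any library. The function below cuts a single-channel image, stored as a nested list, into non-overlapping square patches and flattens each one in row-major order, giving the token sequence that a ViT would then embed. The function name and the requirement that the image size be divisible by the patch size are simplifying assumptions.

```python
def split_into_patches(image, patch):
    """Return flattened, non-overlapping patch x patch tiles in row-major order."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image dimensions must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([image[top + i][left + j]
                            for i in range(patch)
                            for j in range(patch)])
    return patches


# A 4x4 image with pixel values 0..15 yields four 2x2 patches.
image = [[4 * r + c for c in range(4)] for r in range(4)]
for p in split_into_patches(image, 2):
    print(p)
```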

Among the Vision Transformer’s primary advantages is its ability to analyze images of various sizes and manage data efficiently. However, it can be computationally costly and require significant memory and computing power. The ViT architecture divides the input image into fixed-size patches, which are then turned into continuous vectors via a method known as linear embedding. Positional embeddings are added to the patch representations to retain positional information. The resulting sequence of patch embeddings is then fed to the transformer encoder (Eq. 4). The encoder is made up of alternating layers of Multi-Head Self-Attention (MSA) (Vaswani et al. 2017) (Eq. 5) and multi-layer perceptron (MLP) (Eq. 6) blocks. Layer Normalization (LN) (Lei Ba et al. 2016) is applied before each MSA and MLP block to shorten training time and stabilize training. Residual connections are applied after each block to improve the final result.
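Eqs. (4) to (6) are not reproduced in this text. The standard ViT formulation, which matches the symbols used in the surrounding paragraphs, is sketched below. The names \(x_{class}\) (a learnable class token), \(x_p^i\) (the i-th flattened patch), and N (the number of patches) come from that standard formulation and are assumptions here, since the text does not define them.

```latex
\begin{align}
z_0 &= [\, x_{class};\; x_p^{1}E;\; x_p^{2}E;\; \dots;\; x_p^{N}E \,] + E_{pos} \tag{4} \\
z'_l &= \mathrm{MSA}\left(\mathrm{LN}(z_{l-1})\right) + z_{l-1}, \qquad l = 1, \dots, L \tag{5} \\
z_l &= \mathrm{MLP}\left(\mathrm{LN}(z'_l)\right) + z'_l, \qquad l = 1, \dots, L \tag{6}
\end{align}
```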

In Eq. 4, E denotes the patch embedding projection, whereas \(E_{pos}\) denotes the positional embedding.

In Eq. 5, \(z'_l\) represents the output of the MSA layer, which is applied after LN. It includes a residual connection to \(z_{l-1}\), the output of the (l-1)-th layer (\(z_0\) for the first layer). L denotes the total number of layers in the transformer.
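The attention sub-layer described for Eq. 5 can be sketched in plain Python as layer normalization, scaled dot-product self-attention, and a residual addition. This is a deliberately reduced illustration: it uses a single attention head, omits the output projection, and applies LN without learnable scale and shift, all of which are simplifications relative to a real MSA block. The function and parameter names are assumptions.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of attention scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(tokens, eps=1e-6):
    """Normalize each token vector to zero mean and unit variance."""
    out = []
    for t in tokens:
        mean = sum(t) / len(t)
        var = sum((v - mean) ** 2 for v in t) / len(t)
        out.append([(v - mean) / math.sqrt(var + eps) for v in t])
    return out


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product attention; returns (output, weights)."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v), weights


def attention_sublayer(z_prev, wq, wk, wv):
    """z'_l = Attention(LN(z_{l-1})) + z_{l-1}, the residual form of Eq. 5."""
    attended, _ = self_attention(layer_norm(z_prev), wq, wk, wv)
    return [[a + r for a, r in zip(a_row, r_row)]
            for a_row, r_row in zip(attended, z_prev)]


# Toy usage: three tokens of dimension two, identity projection matrices.
tokens = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(attention_sublayer(tokens, identity, identity, identity))
```

Each row of the attention weights sums to one, so every output token is a weighted average of the value vectors plus the original token carried by the residual connection.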

In Eq. 6, \(z_l\) is the output of the MLP layer applied after LN, with a residual connection to \(z'_l\), the output of the MSA layer within the same transformer layer.

When pre-trained weights and transfer learning are paired with large-scale datasets, ViT can produce powerful results in various computer vision applications. In addition, since no convolutions are used, large images can be handled more effectively. Different variants, such as patch-based, hybrid, token-based, scale-based, and mobile models, are also available, each offering a different way to improve functionality and efficiency. Mehta and Rastegari (2021) presented MobileViT as an extension of ViT for mobile applications. It offers high inference speed and a low parameter count, and it incorporates convolution layers. DeiT (Data-efficient Image Transformer) is a ViT-based architecture that delivers more effective results with less data. Effective techniques such as interpolation and distillation have been used to increase the performance and efficiency of the architecture (Touvron et al. 2021). The Swin architecture, one of the important ViT-based architectures, uses a series of hierarchical blocks that offer a mixture of scalability and parallelism techniques. In addition, it seeks to optimize performance with window-based and shifted window-based attention mechanisms (Liu et al. 2021). MaxViT aims to increase performance by encapsulating multi-level representations of images using different axes and attention mechanisms to effectively extract local and global features (Tu et al. 2022). Multiscale Vision Transformers (MViT), introduced by Fan et al. (2021), use channel resolution scaling stages to create a multiscale feature pyramid designed to model visual data spanning images and videos. In short, vision transformer structures have been reported to show higher performance than CNN-based architectures, and many additional variants have been presented.

Many different architectures have been presented in the literature, including different attention mechanisms, patches, and window sizes. To give a few examples, mobile-based ViTs are lighter versions of ViT built for resource-constrained situations, reducing computing needs while maintaining performance. TNT (Transformer in Transformer) applies an inner transformer to the sub-patches within each patch in addition to the outer patch-level transformer, which captures finer local detail. PVT (Pyramid Vision Transformer) uses a pyramid of progressively smaller feature maps to extract features at multiple scales. CoaT (Co-scale Conv-Attentional Image Transformer) combines co-scale and conv-attentional mechanisms to improve feature extraction performance. DeiT uses distillation to achieve high accuracy with less training data. The Twins models revisit the design of spatial attention by combining local and global attention. CCT (Compact Convolutional Transformer) is a compact ViT variant that uses convolutional tokenization and is designed to train well on small datasets.

2.4 Deep learning frameworks

Deep learning frameworks resemble specialized tool kits designed to simplify the challenging task of building deep neural networks. They facilitate the development, training, and deployment of deep learning models by offering researchers and developers a variety of powerful tools and abstractions. The most popular frameworks are outlined below. TensorFlow, created by Google Brain, is regarded as one of the finest options. It provides a full suite of tools for constructing neural networks and other machine learning models.

PyTorch, developed by Facebook’s AI Research lab (FAIR), stands out because its dynamic computational graph provides greater flexibility and makes debugging easier than in frameworks with static graphs. PyTorch is a popular and easy-to-use tool with a Python-based interface. Keras was initially intended as a standalone high-level neural network API in Python and, with the release of TensorFlow 2.0, became TensorFlow's official high-level API. Neural network creation is easy and accessible using Keras. MXNet is an open-source deep learning framework developed under the Apache Software Foundation and is renowned for its scalability and versatility. It supports numerous programming languages, including Python, R, Scala, and Julia, and provides many capabilities for developing and deploying deep learning models. While these frameworks have advantages and disadvantages, they all aim to give researchers and developers the resources they need to create and test deep learning models.

2.5 Overview of plant disease databases

The significance of datasets in the success of deep learning architectures cannot be denied. The availability of representative datasets is paramount to the development of accurate plant disease detection models using image-based approaches. These datasets should contain many images, typically in the thousands, to effectively support the implementation of deep learning techniques. In contrast to classical machine learning approaches, deep learning architectures rely on large-scale datasets and automated feature extraction. This stems from the inherent need for data-driven features in deep learning architectures (Pacal 2024). Information about some datasets used in the examined studies is provided in Table 1. Among these datasets, PlantVillage emerges as a prevalent choice among researchers. However, as observed in various studies, many researchers prefer to create their own datasets, which may not be publicly accessible. This trend indicates a growing inclination towards developing specialized datasets tailored to specific research requirements within the domain of plant disease detection.

The plant disease datasets listed in Table 1 have been created for different purposes related to classifying and diagnosing plant diseases. The PlantVillage dataset developed by Hughes and Salathé (2015) provides a comprehensive database for disease diagnosis, while the AI Challenger (2018) dataset can be used for various artificial intelligence and machine learning tasks. The Cassava Leaf Disease Classification dataset (2019) compiles diseases specific to the cassava plant. In addition, datasets with data specific to particular plants for comparable purposes include Citrus Leaves (2020) and Grapevine (Koklu et al. 2022). Scientists studying plant diseases can find a wealth of information in these datasets, which therefore play a significant role in agricultural disease diagnosis and management programs.

3 Results

In this study, we evaluated many research articles in the literature. For a more effective understanding, we divided them into three groups: detection, classification, and segmentation. Studies on plant diseases within each group can thus be compared fairly and examined more systematically and in greater detail.

3.1 Classification-based studies on plant diseases

Classification is a process that assigns input data to a specific category or class. It can be considered a model predicting the correct label for a given input. Each class represents a specific feature or behavior group within the dataset. In essence, identification seeks to establish the unique properties or traits that differentiate one entity from another, thereby enabling accurate classification or categorization. Deep learning models are commonly employed to solve classification problems and exhibit various applications. Table 2 presents the classification studies of plant diseases in the literature. To enhance the readability of the tables, when multiple CNN-based architectures were employed, we labeled the model column as ‘CNN-based.’ Conversely, if a specific model was predominantly used, we detailed the model’s name in the column. Additionally, when modifications or improvements were made to the model, the term ‘based’ was still used (e.g., Inception-v3-based).
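The accuracy rates reported for the classification studies follow directly from this definition: the model produces a score per class, the highest-scoring class becomes the predicted label, and accuracy is the fraction of predictions that match the true labels. The sketch below illustrates this with hypothetical class names and scores. None of the values come from the reviewed studies.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to one."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, class_names):
    """Return the class with the highest predicted probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best]


def accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted label equals the true label."""
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Hypothetical three-class leaf problem.
classes = ["healthy", "early_blight", "late_blight"]
print(predict([0.1, 2.0, -1.0], classes))  # early_blight
print(accuracy(["healthy", "late_blight", "healthy", "early_blight"],
               ["healthy", "late_blight", "early_blight", "early_blight"]))  # 0.75
```

Accuracy alone can be misleading on imbalanced datasets, which is one reason the accuracy values reported across studies that use different datasets are not directly comparable.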

Afifi et al. (2020) employed ResNet18, ResNet34, and ResNet50 models to identify coffee leaves from the PlantVillage and Coffee Leaf datasets. Their proposed model achieved an accuracy rate of 99%. Agarwal et al. (2020) employed CNN models to detect multiple plant species in the PlantVillage dataset, achieving a 91.2% accuracy rate. Ahmad et al. (2020a) achieved a 99.60% accuracy rate in identifying tomatoes in a self-collected dataset using a CNN model. Ahmad et al. (2020a) used an Inception-v3 model to identify diseases in peach leaves with a 92.00% accuracy rate in a self-collected dataset. Anagnostis et al. (2020) utilized a CNN model to identify diseases in a walnut leaf dataset they collected, reporting an accuracy rate of 98.7%. Using a combined model with the FSL method and SVM classifiers, Argüeso et al. (2020) classified various plants in the PlantVillage dataset, achieving an accuracy rate of 94.0%. In recent studies, various machine learning models have been applied to different datasets to classify plant diseases accurately. Chen et al. (2020) used VGGNet to identify rice leaves in the ImageNet and PlantVillage datasets, achieving a 92% accuracy rate. Chohan et al. (2020) employed CNN models to recognize apple images in both the PlantVillage and self-collected datasets, achieving an accuracy rate of 98.3%. Darwish et al. (2020) achieved a 98.2% accuracy rate using a CNN model to identify corn leaves in the Kaggle dataset. Esgario et al. (2020) employed the ResNet50 model to classify coffee diseases in a dataset of coffee leaves they collected, reaching an accuracy rate of 95.24%. Gayathri et al. (2020) recognized tea plants with the LeNet model, achieving an accuracy of 90.23%. Hernandez and Lopez (2020) utilized a VGG16-based model to classify plants in the PlantVillage dataset, with a 96.0% accuracy rate. Hu et al. (2020) combined the MDFC and ResNet models to identify various plants in the AI Challenger dataset, achieving an 85.22% accuracy rate. 
Huang et al. (2020) examined grape plants in the PlantVillage dataset using several models, reporting accuracies of 98% for VGG16, 77% for AlexNet, and 97% for MobileNet. Jasim and Al-Tuwaijari (2020) used a CNN to identify and classify various plants in the PlantVillage database, achieving an accuracy rate of 98.29%. Jiang et al. (2020) used the Leaky-ReLU model to classify tomatoes, achieving a 98% accuracy rate. Karthik et al. (2020) detected tomatoes with 98% accuracy using a CNN model in the PlantVillage dataset. Kaushik et al. (2020) applied the ResNet50 model to detect tomato leaf disease, achieving 97% accuracy. Khamparia et al. (2020) used a CNN model to classify various plants in the PlantVillage dataset, achieving a 97.5% accuracy rate. Li et al. (2020) reached a 98.44% accuracy rate using the VGG16 and Inception V3 models to classify ginkgo leaves in a dataset they collected themselves. Liu et al. (2020) employed the Xception model in the PlantVillage dataset to identify grapes, achieving a 98.7% accuracy rate. Ramesh and Vydeki (2020) combined the DCNN and AlexNet models to classify rice diseases in their dataset, achieving a 90.57% accuracy rate. Saleem et al. (2020a) used the Xception model to classify various plants in the PlantVillage dataset, achieving a 99.81% accuracy rate. Shrestha et al. (2020) used a CNN model to recognize potatoes, tomatoes, and peppers in a self-collected dataset, reporting an accuracy rate of 88.80%. Sun and Wei (2020) used the Inception V4 model on the AI Challenger dataset to identify corn plants, achieving an 81% accuracy rate. Wu et al. (2020) applied the GoogleNet model to the PlantVillage dataset to identify tomato plants, achieving a 94.33% accuracy rate. Yan et al. (2020) used a VGG16-based model on the AI Challenger dataset to identify apple plants, achieving a 99.01% accuracy rate.
Lastly, Zhong and Zhao (2020) used the DenseNet121 model on the AI Challenger dataset to identify apple plants, achieving a 93.51% accuracy rate.

Abbas et al. (2021) applied the DenseNet121 model to identify tomatoes within the PlantVillage dataset and achieved an impressive accuracy of 99.51%. Ahmed and Reddy (2021) employed a CNN model in a self-collected dataset to detect multiple plants and reported an accuracy rate of 93.6%. Akshai and Anitha (2021) employed a CNN model to classify grape leaves in the PlantVillage dataset, achieving an accuracy rate of 98.27%. Achieving an accuracy rate of 98.42%, Atila et al. (2021) utilized the EfficientNet model to classify various plants in the PlantVillage dataset. Ayu et al. (2021) utilized a MobileNetV2 model to classify leaves in the cassava dataset obtained from Kaggle, achieving the lowest accuracy of 65.6%. With a combined Convolutional Autoencoder (CAE) and CNN model, Bedi and Gole (2021) identified peach leaves within the PlantVillage dataset and achieved an accuracy rate of 98.38%. Identifying spice, wheat, and rice plants in PlantVillage, Chen et al. (2021a) achieved the highest accuracy rate of 99.71% using various CNN models. Using the MobileNetV2 model, Enkvetchakul and Surinta (2021) detected cassava leaf spots with an accuracy rate of 96.15% using the Icassava 2019 dataset. Hassan et al. (2021) used the CNN model to identify multiple plant species with 99.56% accuracy. Hemalatha and Vijayakumar (2021) effectively classified tomato leaves with an accuracy rate of 99.86% using the transfer learning approach. Using CNN, Khattak et al. (2021) detected citrus trees with 94.55% accuracy in the small-scale PlantVillage dataset. For the large-scale PlantVillage dataset, Kibriya et al. (2021) achieved an accuracy rate of 99.23% using GoogleNet and 98% accuracy using VGG16 in tomato plant detection. Using the PlantVillage dataset, Lakshmanarao et al. (2021) classified potatoes, peppers, and tomatoes using ConvNets, achieving accuracy rates of 98.3%, 98.5%, and 95.0%, respectively. In the CIFAR-10 dataset, Latha et al. 
(2021) achieved a 94.45% accuracy rate for CNN-based tea plant detection. Using a self-collected dataset, Maqsood et al. (2021) classified wheat rot with an accuracy rate of 83% using CNN and SRGAN models. Qi et al. (2021) identified peanut disorders using CNN (ResNet50 and DenseNet121) models, with 97.59% and 90.50% accuracy rates, respectively. Rajbongshi et al. (2021) identified mango trees using the DenseNet201 model with a 98.00% accuracy rate in a self-collected mango dataset. Using AlexNet on the PlantVillage dataset, Rao et al. (2021) obtained accuracy rates of 99% for grape and 89% for mango plants. In the extensive Kaggle dataset, Sambasivam and Opiyo (2021) used CNN to recognize and classify cassava plants with a 93% accuracy rate. Singh et al. (2021) identified tomato plants in the PlantVillage dataset with a 99.1% accuracy rate using a CNN model influenced by AlexNet. Sujatha et al. (2021) employed an Inception-v3 model for detecting cassava plants in a self-collected dataset, reporting an accuracy rate of 89%. Sunil et al. (2021) achieved an accuracy rate of 98.26% in classifying cardamom plants using the EfficientNetV2 model on a self-collected dataset. Tan et al. (2021) classified tomato plants in the PlantVillage dataset with 99.7% accuracy using the ResNet34 model. ResNet with the SGD was utilized by Thangaraj et al. (2021) to categorize tomato plants with 99.55% accuracy on the PlantVillage dataset. Tiwari et al. (2021) achieved a 99.19% accuracy rate for multi-plant detection using DCNN in the large-scale self-collected dataset. Trivedi et al. (2021) attained a 98.49% accuracy rate for tomato plant detection using a VGG-based model in the self-collected dataset. Uğuz and Uysal (2021) achieved a 95.00% accuracy rate in classifying olive trees using a CNN-based model they had personally gathered. Yadav et al. (2021) identified peanut plants with a 98.75% accuracy rate using a CNN-based algorithm. 
Yatoo and Sharma (2021) used a VGG16 model for detecting a plant with unspecified labeling in the PlantPathology dataset and achieved an accuracy rate of 85%. With an accuracy rate of 93.76%, Zhang et al. (2021a) identified apple diseases in the Pathology Challenge 2020 dataset using a ResNet34-based model. Zia-Ur-Rehman et al. (2021) classified citrus plants using MobileNetv2 and DenseNet201 models, achieving a 95.7% accuracy rate on a self-collected dataset.

Acar et al. (2022) classified cassava leaves using a dataset of 10,239 leaf photos from the Kaggle website using k-NN classifiers. The range of the model’s accuracy is 81.55–89.7%. Bi et al. (2022) reported a 77.65% accuracy rate using a CNN model for disease identification in an apple leaf dataset. Chakraborty et al. (2022) obtained a 97.21% accuracy rate on a dataset of 12,000 tomato leaf pictures using a CNN-based model designed for disease identification. With an accuracy rating of 99.81%, Eunice et al. (2022) identified different plants in the PlantVillage dataset using the DenseNet-121 model. With a 96% accuracy rate, Dawod et al. (2022) classified illnesses in the sunflower photos they gathered using the Faster R-CNN and Mask R-CNN models. Haque et al. (2022) obtained an accuracy rate of 95.29% by using a CNN model to identify illnesses in their collected photographs. Using a CNN model, Hassan and Maji (2022) identified several plant species with a 99.39% accuracy rate. Using their olive dataset, Ksibi et al. (2022) reported 97.08% accuracy for olive plant recognition using MobiResNet. Using their dataset of olive leaves, Lachgar et al. (2022) classified olive illnesses using the MobileNet model, achieving a 92.59% accuracy rate. Using CNN-based models, Latif et al. (2022) detected rice plants with a 96.08% accuracy rate in the Kaggle dataset. Pan et al. (2022) identified corn leaf spots in a corn leaf dataset they obtained using the AlexNet, GoogleNet, VGG16, and VGG19 models, with 99.94% accuracy rates. Using a CNN-based model, Sharma et al. (2022) detected mango plants with an accuracy rate of 90.36% in the small-scale SKUAST-J dataset. In the large-scale self-collected dataset, Singh et al. (2022b) used CNN, k-NN classifiers, SVM classifiers, and random forest classifiers to achieve a 99.20% accuracy rate for apple plant detection. Using a CNN model, Singh et al. (2022c) classified maize with 99.16% accuracy using a dataset they gathered. Sunil et al. 
(2022) reached a perfect accuracy rate of 100% in classifying various plants using a CNN-based model, once again utilizing a self-collected dataset. Verma et al. (2022) identified tomato, strawberry, pepper, peach, and potato plants in the PlantVillage dataset with a 99.12% accuracy rate using a CNN-based model. In a self-collected dataset, Yakkundimath et al. (2022) classified rice plants using VGG-16 and GoogleNet models, achieving 92.24% and 91.28% accuracy rates, respectively. With a 99.43% accuracy rate, Zeng et al. (2022) identified rubber trees in the PlantVillage dataset using a CNN-based model.

Grijalva et al. (2023) used a CNN model to classify sugarcane images from Kaggle, achieving an 86% accuracy rate. Kulkarni et al. (2023) classified coffee plants with an accuracy of 98.10% using the EfficientNetV2 model on the JMuBEN Mendeley dataset. Liu et al. (2023) used a MobileNetV2-based model to identify apple diseases in a dataset they collected, achieving a 96.2% accuracy rate. Mahum et al. (2023) achieved a 97.2% accuracy rate for potato plant detection using Efficient DenseNet on the small-scale PlantVillage dataset. Mustafa et al. (2023) achieved 99.99% accuracy for multi-plant detection using a CNN-based model on the large-scale PlantVillage dataset. Panchal et al. (2023) used a VGG-based model trained on the sizable PlantVillage dataset to achieve 93.5% accuracy in multiple plant identification. Abouelmagd et al. (2024) employed the CapsNet model to classify the Kaggle Plant Diseases database into tomato groups, aiming to detect tomato diseases on a large scale and achieving an accuracy of 96.39%. Andrushia et al. (2024) successfully classified grapes in a small-scale dataset using the CapsNet model, achieving an accuracy of 98.7%. Banarase and Shirbahadurkar (2024) achieved 99.36% accuracy on a smaller dataset when they used the MobileNetV2 model to identify apple photographs in the PlantVillage database. Biswas et al. (2024) utilized a CNN-based model to identify multiple plant species in PlantVillage, achieving a 95.17% accuracy rate on a large-scale dataset. Dubey and Choubey (2024) employed a hybrid model to classify rice plants in the PlantVillage dataset, achieving an accuracy of 98.86% on a small-scale dataset. Using CNN, G et al. (2024) achieved an astounding 99.67% accuracy rate in detecting grape leaves. Gautham et al. (2024) classified mangos in a small-scale dataset using a DNN-based model, achieving a 98.57% accuracy rate and demonstrating success in object detection. With an accuracy rate of 86.4%, Hu et al. 
(2024) used the CNN model to recognize apple images in a new database of plant diseases. Using CNN in the PlantVillage database, Kansal et al. (2024) identified tomatoes with an accuracy of 97.35%. Using CNN and MobileNet models, Khalid and Karan (2024) identified several plant species on a large scale in the PlantVillage database with accuracy ratios of 89% and 96%. Khanna et al. (2024) used the PlaNet model to classify multiple plant species from various dataset sources, achieving an accuracy of 97.95% on a large-scale dataset. Kini et al. (2024) classified peppers in a small-scale dataset using a CNN model, achieving an accuracy of 99.67%. Kunduracioglu and Pacal (2024) used both CNN and ViT models to classify grape images in the PlantVillage and Grapevine datasets, achieving perfect 100% accuracy rates and an extremely impressive success. Mahmood and Alsalem (2024) achieved 99.72% accuracy in detecting olive leaves using a small sample. Using a CNN-based model, Najim et al. (2024) detected tomato diseases on the PlantVillage dataset and achieved a remarkable 96% accuracy rate. Nawaz et al. (2024) used a ResNet-50-based model to classify Arabica coffee leaves in the PlantVillage dataset, achieving an accuracy of 98.54% on a small-scale dataset. Pacal (2024) also employed CNN and ViT models to identify corn plants in datasets from PlantVillage, PlantDoc, and CD&S, achieving an accuracy of 99.24% on a small-scale dataset. Pacal & Kunduracioglu (2024a) classified sugarcane plants with an accuracy of 93.79% using DeiT-based models on the PlantVillage dataset. Paçal & Kunduracıoğlu (2024b) successfully classified potato plants with an accuracy of 99.70% using a modified EfficientNetV2-based model with an ECA module on the PlantVillage dataset. Reis and Turk (2024) employed a hybrid model to classify wheat leaves, achieving an accuracy of 99.43% on a small-scale dataset. In the PlantVillage dataset, Singla et al. 
(2024) used a CNN-based model to detect multiple plant species and achieved 99.39% accuracy at a large scale. Sofuoglu and Birant (2024) utilized a CNN-based model for detecting potato diseases in the PlantVillage dataset, achieving a 98.28% accuracy rate in a small-scale dataset. Wajid et al. (2024) detected tomato disease in a large-scale self-collected dataset, achieving a 96% accuracy rate.

3.2 Detection-based studies on plant diseases

In this review article, the second group we examine is the detection of diseases in plant leaves. Detection, as in general object detection, provides both the location of the affected region in the image and the type of disease in the plant. In other words, it indicates where the disease appears in the picture as well as what it is. YOLO-based or other single-stage approaches, which are among the most successful object detection algorithms, are frequently used deep learning approaches for disease detection in plant leaves. Table 3 provides an overview of plant disease detection studies as documented in the literature.
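Because a detection combines a location with a disease type, it is usually judged on both. The sketch below shows one common way to do this, assuming boxes are given as (x_min, y_min, x_max, y_max) and that a prediction counts as correct when its label matches and its intersection over union (IoU) with the ground-truth box reaches a threshold. The 0.5 threshold and the dictionary layout are illustrative assumptions, not values taken from the reviewed studies.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_correct_detection(pred, truth, iou_threshold=0.5):
    """A prediction is correct if both the label and the location agree."""
    same_label = pred["label"] == truth["label"]
    return same_label and box_iou(pred["box"], truth["box"]) >= iou_threshold

# Hypothetical prediction and annotation, for illustration only.
pred = {"label": "leaf_rust", "box": (12, 10, 52, 50)}
truth = {"label": "leaf_rust", "box": (10, 10, 50, 50)}
```

Here `is_correct_detection(pred, truth)` holds because the two boxes overlap almost entirely and the labels match.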

Liu and Wang (2020) identified tomato plants with 92.39% accuracy using YOLOv3 in a large self-collected database. In the MS COCO and PlantVillage datasets, Saleem et al. (2020a) utilized the SSD Inception-v2 model to identify various plants, achieving a 73.07% accuracy rate. Xie et al. (2020) identified grape plants with 81.1% accuracy using Faster DR-IACNN-based model on the self-collected dataset. Using the Faster R-CNN model, Bari et al. (2021) identified diseases in a rice leaf dataset they collected, achieving an accuracy rate of 99.25%. Barman and Choudhury (2022) investigated disease detection on a self-collected dataset of citrus leaves, utilizing K-Nearest Neighbors (KNN) and Deep Neural Network (DNN) models, resulting in accuracy rates of 99.89% for KNN and 89.89% for DNN. Bazame et al. (2021) utilized the YOLOv3-tiny model to detect coffee plants, achieving an accuracy of 84% in a dataset they collected themselves. In the self-collected dataset, Chen et al. (2021b) applied the Des-YOLOv4 model to successfully identify apple plants, reporting a high accuracy rate of 93.1%. Mirhaji et al. (2021) used YOLOv4 on the COCO dataset to detect oranges, where they achieved a notable 91.4% accuracy. With YOLOv4, Roy and Bhaduri (2021) could recognize apple plants in the PlantPathology dataset with an accuracy rating of 91.2%. Schirrmann et al. (2021) achieved a 95% accuracy rate for winter wheat stripe rust detection using ResNet in the self-collected dataset.

With a good accuracy rate of 99.98%, Albattah et al. (2022) used custom CenterNet to detect multiplants in the PlantVillage dataset. An et al. (2022), using a YOLOX-based model, identified strawberry plants with an impressive 94.3% accuracy on a self-collected dataset. Chen et al. (2022) employed the Improved-YOLOv5s model to identify apple flowers, yielding an accuracy rate of 77.5% in their self-collected dataset. Dawod et al. (2022) used Faster R-CNN and Mask R-CNN models to segment and classify diseases in sunflower images they collected, achieving an accuracy rate of 96%. Ge et al. (2022) applied the YOLO-DeepSort model on tomato plants, reaching a high accuracy of 95.8%. Using their self apple dataset, Khan et al. (2022) employed the Faster-RCNN model to recognize apple plants with an accuracy rate of 87.9%. Pandian et al. (2022) achieved a 99.96% accuracy rate for multi-plant detection using DCNN in the small-scale self-collected dataset. With an accuracy rate of 96.10%, Singh et al. (2022b, c) employed a hybrid model to identify different plants in the PlantVillage dataset. Using Faster-RCNN, Syeh-Ab-Rahman et al. (2022) detected citrus plants with an accuracy rate of 94.27% on the small-scale Kaggle Citrus Leaves dataset. Xu et al. (2022) demonstrated a strong performance with the YOLOv5 model, identifying melon plants with 96.7% accuracy in a self-collected dataset. Zhang et al. (2022) achieved a 95.7% accuracy in identifying apple plants using the YOLOv4 model on a self-collected dataset. Zhao et al. (2022) reported a 90.5% accuracy using YOLOv5 for wheat plant detection in their collected dataset.

Anim-Ayeko et al. (2023) used a ResNet-9 model and saliency maps for detecting potato and tomato leaves in the PlantVillage dataset, achieving an accuracy rate of 99.25%. Within the PlantVillage dataset, Khalid et al. (2023) achieved a 93% accuracy rate by opting for a YOLOv5 model to detect various plant species. Kumar and Kumar (2023) utilized the Imp-YOLOv7 model to recognize apple plants, achieving an accuracy rate of 91% with their self-collected data. Sunil et al. (2023a) achieved a remarkably high accuracy of 99.83% in detecting tomato plants using the ACSPAM-MFFN model on the PlantVillage dataset. Wu et al. (2023) successfully identified grape plants in the WGISD dataset using YOLOv5, achieving 90% accuracy. Lastly, Zhang et al. (2023) reported a 95.1% accuracy in maize plant detection using the YOLOv4 model on the COCO dataset. Dai et al. (2024) utilized the DFN-PSAN model to detect various plants across the PlantVillage, BARI-Sunflower, and FGVC8 datasets, achieving an accuracy rate of 95.27%, demonstrating the model’s effectiveness across a wide range of datasets. Using the LDA technique, Butt et al. (2024) identified citrus leaf disease in a smaller database and achieved an astonishing accuracy rate of 99.6%.

3.3 Segmentation-based studies on plant diseases

Segmentation can be defined as the process of partitioning input data into different regions or segments. Segmentation relies on dissecting input data, with each region or segment characterized by specific attributes or properties. This process is commonly employed in object recognition, medical image analysis, and autonomous driving applications. Segmentation is a detailed and precise partitioning and classification process on complex datasets or image data. This process is essential for enhancing understanding and conducting in-depth data analysis. Table 4 presents the segmentation studies of plant diseases in the literature.
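To make the idea of partitioning an image into regions concrete, a segmentation output can be represented as a binary mask (1 for diseased pixels, 0 otherwise) and compared with a ground-truth mask. The sketch below computes two widely used overlap measures, IoU and the Dice coefficient, on small hypothetical masks. It is an illustration of the general technique and not the evaluation protocol of any particular study in Table 4.

```python
def mask_overlap(pred_mask, true_mask):
    """Return (IoU, Dice) for two binary masks given as lists of rows of 0/1."""
    inter = pred_area = true_area = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            inter += p * t      # pixel marked diseased in both masks
            pred_area += p
            true_area += t
    union = pred_area + true_area - inter
    # Two empty masks agree perfectly, so both scores are defined as 1.0.
    iou = inter / union if union else 1.0
    dice = 2 * inter / (pred_area + true_area) if (pred_area + true_area) else 1.0
    return iou, dice

# Hypothetical 2x3 masks, for illustration only.
predicted = [[0, 1, 1],
             [0, 1, 0]]
annotated = [[0, 1, 0],
             [0, 1, 1]]
iou, dice = mask_overlap(predicted, annotated)
```

Unlike plain pixel accuracy, both measures ignore the large background region, which matters when lesions cover only a small part of the leaf.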

Das et al. (2020) used an SVM model to identify tomato leaves in the Kaggle dataset, reporting an accuracy rate of 87.6%. Gangadharan et al. (2020) combined FFTA and BPNN models to detect various plant species, reaching a maximum accuracy of 97.69%. Pham et al. (2020) used an ANN model to classify mango leaves in the PlantVillage dataset, achieving an 89.41% accuracy rate. Su et al. (2020) employed a U-Net model to identify wheat rust in a self-collected dataset, achieving an accuracy rate of 91.3%. Xiong et al. (2020) utilized the MobileNet model on the PlantVillage dataset to identify various plants with segmentation, achieving an accuracy rate of 84.83%. Tomato identification by Chowdhury et al. (2021) was examined by various EfficientNet-based U-Net models, and the highest accuracy rate reached 99.89%. With a 98% accuracy rate, Pan et al. (2021) identified wheat rust in a dataset of wheat leaves that they had gathered themselves using the PSPNet model. Zhang et al. (2021b) employed an Ir-UNet model for detecting wheat rust disease in a self-collected dataset that used segmentation, achieving an accuracy rate of 97.13%.

In the small-scale self-collected dataset, Abisha and Jayasree (2022) obtained 91% and 98.1% accuracy rates for brinjal plant detection using FFNN and CFNN, respectively. In the PlantVillage dataset, Ashwinkumar et al. (2022) used MobileNet to recognize tomato plants with 98% accuracy. Using the MIB Classifier, Brindha et al. (2022) claimed a 98% accuracy rate for disease diagnosis in citrus leaves using a self-collected dataset. Using the PlantVillage dataset, Harakannanavar et al. (2022) utilized a CNN model modified with DWT-PCA-GLCM to recognize tomatoes with 99.6% accuracy. Li et al. (2022) showed a 90.7% accuracy rate when using an ANN model to identify germ illnesses in a dataset they gathered on cancer. Shoaib et al. (2022) used the Modified U-net with InceptionNet segmentation models to classify tomato plants in the PlantVillage dataset, achieving an accuracy rate of 99.97%. Divyanth et al. (2023) utilized the UNet-DeepLabV3 model to identify corn in a corn leaf dataset they collected, achieving an accuracy rate of 92%. Zhang and Zhang (2023) used the U-Net architecture to achieve an accuracy of 92.55% for grape diseases using their self-collected database. Anand et al. (2024) used a DNN model to classify potatoes in the PlantVillage database, with an accuracy rate of 98.52%. Kaur et al. (2024) used a hybrid-DSCNN model to detect tomato diseases using the PlantVillage database, achieving 98.24% accuracy on a small-scale database. Nain et al. (2024) used the HSL-CNN model to segment many plant species using the PlantVillage database with 98.97% accuracy. Rai and Pahuja (2024) conducted segmentation of corn leaves in a small-scale dataset they collected, achieving an accuracy rate of 98.98%. Shwetha et al. (2024) presented a U-Net model, which achieved an accuracy of 91% for jasmine diseases using a self-collected dataset. Zhang et al.
(2024) introduced the UPFormer model, achieving an accuracy of 89.49% on grape diseases using datasets such as PlantVillage, Field-PV, and Syn-PV.

3.4 Discussion of current research studies

The numbers of studies examined within the scope of this review are as follows: 108 on classification, 30 on detection, and 22 on segmentation (see Fig. 6). Classification studies therefore outnumber the other two categories combined, while detection and segmentation studies are comparatively few. These findings indicate intensive research activity on plant diseases in general, and on classification in particular, with deep learning techniques being a significant focus in this field.

The studies examined in this review use a wide variety of datasets (see Fig. 7). PlantVillage stands out as the most commonly used dataset, being utilized in a total of 67 works. Self-collected datasets follow, having been used in 56 studies. Among other publicly available datasets, AI Challenger and Kaggle Plant Diseases are notable, employed in 5 and 4 studies, respectively. Some other datasets have been used less frequently; for instance, the Cassava Leaf Disease Classification, Citrus Leaves, PlantDoc, and PlantPathology datasets have been used in 2 studies each. The remaining datasets have been grouped under the “others” category, encompassing 14 different datasets. These findings underscore the significant role of datasets obtained from various sources in research on the detection of plant diseases, highlighting their wide range of applications.

Upon examining the accuracy rates in classification articles, it becomes apparent that there are discernible fluctuations across different years. In 2020, accuracy rates spanned from 81% to 99.81%, with an average accuracy of 95.02%. A slight increase was observed in 2021, with rates ranging from 65.60% to 99.97% and an average accuracy of 95.37%. In 2022, the accuracy rates varied between 77.65% and 100%, with an average accuracy of 95.61%. In 2023, the accuracy rate ranged between 86% and 99.99%, and the average accuracy was 94.58%; however, there are not enough articles from that year to generalize. Finally, in 2024, rates varied between 86.4% and 100%, averaging 97.65%. Overall, the data indicate that the accuracy levels in classification articles vary from year to year, showing improvements in some years and consistency in others (see Fig. 8).

Considering historical data, an increasing trend in accuracy rates for detection articles between 2020 and 2024 can be observed. Accuracy rates have risen since 2020, with a marked increase in 2021 and 2022, and have continued to rise from already fairly high levels in 2023 and 2024. The average accuracy rate over these two years was significantly higher than 94.70%. The average accuracy rate of detection articles is 92.07%, and the maximum recorded value is 99.98%. These findings demonstrate the increasing precision and efficiency of research in this area and the positive trend of increased accuracy in detection investigations over time. In particular, the significant increase in accuracy rates in 2023 and 2024 shows that much work has been done in agricultural research on plant health and disease control. These advancements align closely with the ongoing development of deep learning and artificial intelligence technologies (see Fig. 9).

When evaluating the accuracy rates in segmentation articles, varying results are observed across different years. In 2020, the accuracy rates were recorded as 84.83–90.17%, remaining constant in 2021 and 2023. However, in 2022, the accuracy rates ranged from 90.7% to 99.97%, with an average accuracy of 96.21%. In 2023, rates ranged from 92% to 92.55%, with an average accuracy of 92.28%. Similarly, in 2024, rates ranged from 89.49% to 98.98%, with an average accuracy of 95.87%. Overall, it can be seen that the accuracy levels in segmentation articles vary, but they are generally high. However, a firm conclusion cannot be drawn due to the limited number of segmentation articles (see Fig. 10).

Fig. 11 shows how frequently the various deep learning algorithms were used. CNN-based models stand out as the most commonly preferred choice, having been used in 51 classification studies. YOLO algorithms are also prominent, appearing in 15 detection studies. Additionally, the U-Net model was used in 8 segmentation studies. Other important deep learning algorithms such as VGG, MobileNet, ResNet, and DenseNet have also been frequently utilized. Moreover, less commonly used but still significant algorithms such as Inception and Faster-RCNN are also listed. Algorithms other than these are noticeably less preferred. This diversity indicates that researchers evaluate different deep learning architectures for detecting and managing plant diseases.

According to Fig. 12, it is observed that focusing on multiple plant species simultaneously is quite common in the articles; multiple plant species were utilized in 32 studies. A total of 25 different plant species were encountered in the studies. Additionally, when examining studies focused on a single plant species, it is seen that 25 studies concentrated on tomatoes, indicating that tomatoes emerged as the most popular plant species. Apples were another significant plant species examined in 11 studies. Studies focused on specific plant species such as grapes, citrus, potatoes, and wheat were also considerable in number. This diversity indicates that deep learning techniques could greatly impact the detection and management of plant diseases. Furthermore, research conducted on different plant species suggests the potential to develop more comprehensive and effective solutions for diagnosing and controlling plant diseases.

When evaluating the performance of deep learning models for plant disease detection in various plant species, presenting the minimum, maximum, and average accuracy values for each plant species, as shown in Fig. 13, provides valuable information. Particularly, the most striking observation is that a 100% accuracy rate in grape disease detection was achieved, underlining the extraordinary performance potential of these models in detecting grape diseases. When all the plants are examined, it is seen that the lowest rate is 73%, and when the averages are examined, it is 92% or above. Such findings facilitate a comparative analysis of the overall effectiveness of deep learning models in detecting plant diseases and their performance in various plant species. It appears helpful in developing more effective solutions for disease management and improving plant health in the agricultural sector.

The findings in Fig. 13 demonstrate the striking differences in accuracy values reported for different plant species. Results differ when plant species are assessed independently. For example, while the maximum accuracy rate in detecting grape diseases stands out as the highest at 100%, in articles on mango plants this rate is relatively lower, with a maximum of 98.57%. On average, the accuracy rate was highest in tomato plants (97.49%) and lowest in apple plants (92.95%). Looking at the minimum values, the lowest result (73.07%) is observed in studies conducted on multiple plants. This is to be expected, considering the multitude of plant species and the numerous classes being compared. However, upon closer examination of the averages, it is interesting that the average for apples is lower than that for multiple plants. These findings indicate that the reviewed methods achieve varying levels of success for different plant species. Nevertheless, the average accuracy values for each plant species are quite high, indicating that the reported methods are generally effective and reliable. These results underscore the importance of disease detection and management methods in agricultural production.

3.5 Limitations

Deep learning stands out by automatically providing highly accurate results in diagnosing various plant diseases. However, multiple challenges are encountered while performing this task. These may arise from the data, model complexity, or the computations required for training.

The most common challenge is limited data. Obtaining high-quality, labeled images is both time-consuming and expensive. The resulting data scarcity makes it challenging to train deep learning models, which often need large amounts of data to learn effectively. As a result of insufficient data, models perform poorly.

In addition to limited data, class imbalance in these data sets poses a separate problem. Due to the unbalanced class distribution encountered, especially in plant disease datasets, the model may perform very well in some classes but poorly in others. This calls into question the generalization ability of the model.
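The effect of class imbalance can be illustrated with a small calculation. In the sketch below, a hypothetical test set contains 90 healthy leaves and 10 rust-infected leaves; the labels and predictions are invented for illustration and are not results from any reviewed study. Overall accuracy looks high even though most diseased leaves are missed, which is why per-class recall or balanced accuracy (the mean of per-class recalls) is often reported alongside accuracy.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_recall(y_true, y_pred):
    """Recall for each class: correct predictions divided by class size."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}

def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recalls, so every class counts equally."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)

# Hypothetical imbalanced test set: the model finds only 2 of 10 rust leaves.
y_true = ["healthy"] * 90 + ["rust"] * 10
y_pred = ["healthy"] * 90 + ["rust"] * 2 + ["healthy"] * 8

overall = accuracy(y_true, y_pred)            # 0.92
recalls = per_class_recall(y_true, y_pred)    # healthy: 1.0, rust: 0.2
balanced = balanced_accuracy(y_true, y_pred)  # 0.6
```

The gap between 0.92 and 0.6 shows how a single accuracy figure can hide poor performance on the minority class.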

Another problem is the image quality of the collected data. The quality of the training images is very important for accurate diagnosis, and it depends on many factors, such as lighting conditions, stages of diseases, and image resolution. In addition, deep learning models such as CNNs have complex architectures. This complexity poses a challenge in resource-constrained environments or real-time applications.

Overfitting, a common challenge in deep learning models, means that the model memorizes the training data instead of learning patterns that generalize. An overfitted model may achieve excellent results in classifying its training data, yet produce inconsistent or poor results when new data arrives.
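Overfitting typically shows up as validation loss that stops improving while training continues. One commonly used safeguard is early stopping, sketched below. This is offered as a general illustration and not as a method attributed to the reviewed studies; the `patience` value and the loss curve are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once `patience` consecutive epochs have passed without
    a new lowest validation loss. Epochs are numbered from 0.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch  # stop here, keep weights from best_epoch
    return best_epoch, len(val_losses) - 1  # never triggered: ran to the end

# Hypothetical validation losses: improvement ends after epoch 2.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70, 0.80]
best_epoch, stop_epoch = early_stopping_epoch(val_losses, patience=3)
```

With these values training would stop at epoch 5 and the weights from epoch 2 would be kept, limiting how far the model can drift toward memorizing the training set.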

Most studies typically use only a single dataset, which can limit the accuracy of the models when applied in real-world scenarios. A single dataset may not fully represent the diverse conditions and diseases, reducing the generalizability of the results. Therefore, to achieve higher accuracy in real-world applications, it is necessary to create more realistic and diverse datasets. This will help improve the generalization capability of models and lead to more robust results.

4 Conclusion and future directions

This review provides a broad perspective on the application of deep learning in agriculture and is intended to motivate researchers to investigate deep learning and apply it to various agricultural problems. Future research is expected to draw on accumulated experience and deep learning best practices to offer guidance to agricultural areas that have not yet fully embraced this technology. The general advantages of deep learning may support the development of more intelligent and environmentally friendly agricultural methods. This review is expected to stimulate future research and encourage the use of deep learning algorithms in farming to achieve sustainable and efficient development.

In future research, there is a pressing need to increase the focus on detection and segmentation studies, as these are critical components for improving plant disease diagnosis. Despite the frequent use of the PlantVillage dataset, it is essential to develop and utilize a variety of alternative datasets to enhance the generalizability and accuracy of the models. By creating diverse datasets that better represent real-world conditions, we can improve model performance and applicability. Additionally, current research heavily relies on standard models. We recommend advancing these models by incorporating innovative techniques and enhancements to develop more robust and effective solutions. This approach will contribute to achieving higher accuracy and better performance in practical applications.

We recommend that future studies validate models with real-world data to ensure their effectiveness and reliability. Existing research often uses synthetic or limited datasets; applying models to diverse and realistic data would give a more accurate assessment of their performance in practice. Such validation is crucial for understanding how well models generalize to field conditions and for identifying issues that may arise in deployment. By emphasizing it, researchers can improve the robustness of their models and ensure that their findings are applicable and beneficial in practice.

The study concluded that effective plant disease analysis should involve early-stage detection across multiple plants and their respective diseases, together with an accurate estimation of disease severity. These findings are crucial for developing an automated end-to-end plant disease detection system. More specifically, as emphasized in the review, there is a significant reliance on applying existing deep learning models and a lack of custom solutions that address open challenges related to standardization and benchmarking. Future studies are invited to fill this gap with modern approaches that improve the reliability and scalability of deep learning for real-time monitoring.

To sum up, this review underscores the significant potential of deep learning techniques in transforming the agricultural sector. The analysis indicates that deep learning can substantially enhance productivity and economic growth in the agricultural sector. This study also identified key research gaps, particularly in developing new deep learning techniques to improve model performance and reduce inference times for practical applications. Continued research in this area is believed to be essential for fully leveraging the potential of deep learning for smart farming and achieving sustainable agricultural development.

Data availability

No datasets were generated or analysed during the current study.

References

Abbas A, Jain S, Gour M, Vankudothu S (2021) Tomato plant disease detection using transfer learning with C-GAN synthetic images. Comput Electron Agric 187:106279

Abisha S, Jayasree T (2022) Application of image processing techniques and artificial neural network for detection of diseases on brinjal leaf. IETE J Res 68(3):2246–2258

Abouelmagd LM, Shams MY, Marie HS, Hassanien AE (2024) An optimized capsule neural networks for tomato leaf disease classification. EURASIP J Image Video Process 1:2

Acar E, Ertugrul OF, Aldemir E, Oztekin A (2022) Automatic identification of cassava leaf diseases utilizing morphological hidden patterns and multi-feature textures with a distributed structure-based classification approach. J Plant Dis Prot 129(3):605–621

Afifi A, Alhumam A, Abdelwahab A (2020) Convolutional neural network for automatic identification of plant diseases with limited data. Plants 10(1):28

Agarwal M, Singh A, Arjaria S, Sinha A, Gupta S (2020) ToLeD: Tomato leaf disease detection using convolution neural network. Procedia Comput Sci 167:293–301

Ahmad J, Jan B, Farman H, Ahmad W, Ullah A (2020) Disease detection in plum using convolutional neural network under true field conditions. Sensors 20(19):5569

Ahmad I, Hamid M, Yousaf S, Shah ST, Ahmad MO (2020a) Optimizing pretrained convolutional neural networks for tomato leaf disease detection. Complexity 2020:1–6

Ahmed AA, Reddy GH (2021) A mobile-based system for detecting plant leaf diseases using deep learning. AgriEngineering 3(3):478–493

Akshai KP, Anitha J (2021) Plant disease classification using deep learning. In: 2021 3rd International conference on signal processing and communication (ICPSC). IEEE, pp 407–411

Alahi MEE, Sukkuea A, Tina FW, Nag A, Kurdthongmee W, Suwannarat K, Mukhopadhyay SC (2023) Integration of IoT-enabled technologies and artificial intelligence (AI) for smart city scenario: recent advancements and future trends. Sensors 23(11):5206

Albattah W, Nawaz M, Javed A, Masood M, Albahli S (2022) A novel deep learning method for detection and classification of plant diseases. Complex Intell Syst 1–18

An Q, Wang K, Li Z, Song C, Tang X, Song J (2022) Real-time monitoring method of strawberry fruit growth state based on YOLO improved model. IEEE Access 10:124363–124372

Anagnostis A, Asiminari G, Papageorgiou E, Bochtis D (2020) A convolutional neural networks based method for anthracnose infected walnut tree leaves identification. Appl Sci 10(2):469

Anand S, Pillai B, Gupta N (2024) Identification of potato plant diseases using deep neural network model and image segmentation. Int J Innov Res Technol Sci 12(2):338–344

Andrushia AD, Neebha TM, Patricia AT, Sagayam KM, Pramanik S (2024) Capsule network-based disease classification for Vitis vinifera leaves. Neural Comput Appl 36(2):757–772

Anim-Ayeko AO, Schillaci C, Lipani A (2023) Automatic blight disease detection in potato (Solanum tuberosum L.) and tomato (Solanum lycopersicum L. 1753) plants using deep learning. Smart Agric Technol 4:100178

Argüeso D, Picon A, Irusta U, Medela A, San-Emeterio MG, Bereciartua A, Alvarez-Gila A (2020) Few-shot learning approach for plant disease classification using images taken in the field. Comput Electron Agric 175:105542

Arjunagi S, Patil NB (2023) Optimized convolutional neural network for identification of maize leaf diseases with adaptive ageist spider monkey optimization model. Int J Inform Technol 15(2):877–891

Ashwinkumar S, Rajagopal S, Manimaran V, Jegajothi B (2022) Automated plant leaf disease detection and classification using optimal MobileNet based convolutional neural networks. Mater Today: Proc 51:480–487

Aslan E, Özüpak Y (2024) Diagnosis and accurate classification of apple leaf diseases using Vision transformers. Comput Decis Making: Int J 1:1–12

Atila Ü, Uçar M, Akyol K, Uçar E (2021) Plant leaf disease classification using EfficientNet deep learning model. Ecol Inf 61:101182

Ayu HR, Surtono A, Apriyanto DK (2021) Deep learning for detection cassava leaf disease. J Phys: Conf Ser 1751(1):012072

Banarase S, Shirbahadurkar S (2024) The Orchard Guard: deep learning powered apple leaf disease detection with MobileNetV2 model. J Integr Sci Technol 12(4):799

Bari BS, Islam MN, Rashid M, Hasan MJ, Razman MAM, Musa RM, Nasir AFA, Majeed APA (2021) A real-time approach of diagnosing rice leaf disease using deep learning-based faster R-CNN framework. PeerJ Comput Sci 7:e432

Barman U, Choudhury RD (2022) Smartphone assist deep neural network to detect the citrus diseases in agri-informatics. Global Transit Proc 3(2):392–398

Bazame H, Molin JP, Althoff D, Martello M (2021) Detection, classification, and mapping of coffee fruits during harvest with computer vision. Comput Electron Agric 183:106066

Bedi P, Gole P (2021) Plant disease detection using hybrid model based on convolutional autoencoder and convolutional neural network. Artif Intell Agric 5:90–101

Bi C, Wang J, Duan Y, Fu B, Kang JR, Shi Y (2022) MobileNet based apple leaf diseases identification. Mob Netw Appl 1–9

Biswas S, Saha I, Deb A (2024) Plant disease identification using a novel time-effective CNN architecture. Multimedia Tools Appl 1–23

Brindha GM, Karishma KK, Nivetha J, Vidhya B (2022) Automatic detection of citrus fruit diseases using mib classifier. In: 2022 3rd International conference on electronics and sustainable communication systems (ICESC). IEEE, pp 1111–1116

Butt N, Iqbal MM, Ahmad I, Akbar H, Khadam U (2024) Citrus diseases detection using deep learning. J Comput Biomed Inform 23–33

Carion N, Massa F, Synnaeve G, Usunier N, Kirillov A, Zagoruyko S (2020) End-to-end object detection with transformers. In: European conference on computer vision. Springer, Cham, pp 213–229

Chakraborty S, Kodamana H, Chakraborty S (2022) Deep learning aided automatic and reliable detection of tomato begomovirus infections in plants. J Plant Biochem Biotechnol 31(3):573–580

Chen Y, Kalantidis Y, Li J, Yan S, Feng J (2018) A^2-nets: double attention networks. Advances in neural information processing systems 31

Chen J, Chen J, Zhang D, Sun Y, Nanehkaran YA (2020) Using deep transfer learning for image-based plant disease identification. Comput Electron Agric 173:105393

Chen J, Zhang D, Suzauddola M, Zeb A (2021a) Identifying crop diseases using attention embedded MobileNet-V2 model. Appl Soft Comput 113:107901

Chen W, Zhang J, Guo B, Wei Q, Zhu Z (2021b) An apple detection method based on Des-YOLO v4 algorithm for harvesting robots in complex environment. Math Prob Eng 2021:1–12

Chen Z, Su R, Wang Y, Chen G, Wang Z, Yin P, Wang J (2022) Automatic estimation of apple orchard blooming levels using the improved YOLOv5. Agronomy 12(10)

Chohan M, Khan A, Chohan R, Hassan S, Mahar M (2020) Plant disease detection using deep learning. Int J Recent Technol Eng 9:909–914

Chollet F (2017) Xception: Deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 1251–1258

Chougui A, Moussaoui A, Moussaoui A (2022) Plant-leaf diseases classification using cnn, cbam and vision transformer. In: 2022 5th International symposium on informatics and its applications (ISIA). IEEE, pp 1–6

Chowdhury ME, Rahman T, Khandakar A, Ayari MA, Khan AU, Khan MS, Al-Emadi N, Reaz M, Islam M, Ali S (2021) Automatic and reliable leaf disease detection using deep learning techniques. AgriEngineering 3(2):294–312

Dai G, Tian Z, Fan J, Sunil CK, Dewi C (2024) DFN-PSAN: multi-level deep information feature fusion extraction network for interpretable plant disease classification. Comput Electron Agric 216:108481

Darwish A, Ezzat D, Hassanien AE (2020) An optimized model based on convolutional neural networks and orthogonal learning particle swarm optimization algorithm for plant diseases diagnosis. Swarm Evol Comput 52:100616

Das D, Singh M, Mohanty SS, Chakravarty S (2020) Leaf disease detection using support vector machine. In: 2020 International conference on communication and signal processing (ICCSP). IEEE, pp 1036–1040

Dawod RG, Dobre C (2022) Automatic segmentation and classification system for foliar diseases in sunflower. Sustainability 14(18):11312

Dhanaraju M, Chenniappan P, Ramalingam K, Pazhanivelan S, Kaliaperumal R (2022) Smart farming: internet of things (IoT)-based sustainable agriculture. Agriculture 12(10):1745

Divyanth LG, Ahmad A, Saraswat D (2023) A two-stage deep-learning based segmentation model for crop disease quantification based on corn field imagery. Smart Agric Technol 3:100108

Dubey RK, Choubey DK (2024) An efficient adaptive feature selection with deep learning model-based paddy plant leaf disease classification. Multimedia Tools Appl 83(8):22639–22661

Enkvetchakul P, Surinta O (2021) Effective data augmentation and training techniques for improving deep learning in plant leaf disease recognition. Appl Sci Eng Progress 15(3):3810

Esgario JG, Krohling RA, Ventura JA (2020) Deep learning for classification and severity estimation of coffee leaf biotic stress. Comput Electron Agric 169:105162

Eunice J, Popescu DE, Chowdary MK, Hemanth J (2022) Deep learning-based leaf disease detection in crops using images for agricultural applications. Agronomy 12(10):2395

Fan H, Xiong B, Mangalam K, Li Y, Yan Z, Malik J, Feichtenhofer C (2021) Multiscale vision transformers. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 6824–6835

Fernandes R, Pessoa A, Nogueira J, Paiva A, Paçal I, Salgado M, Cunha A (2024a) Evaluation of deep learning models in search by example using capsule endoscopy images. Procedia Comput Sci 239:2065–2073

Fernandes R, Pessoa A, Salgado M, De Paiva A, Pacal I, Cunha A (2024b) Enhancing image annotation with object tracking and image retrieval. A systematic review. IEEE Access

G O, Billa SR, Malik V, Bharath E, Sharma S (2024) Grapevine fruits disease detection using different deep learning models. Multimedia Tools Appl 1–26

Gangadharan K, Kumari GRN, Dhanasekaran D, Malathi K (2020) Automatic detection of plant disease and insect attack using effta algorithm. Int J Adv Comput Sci Appl 11(2)

Gautam V, Ranjan RK, Dahiya P, Kumar A (2024) ESDNN: a novel ensembled stack deep neural network for mango leaf disease classification and detection. Multimedia Tools Appl 83(4):10989–11015

Gayathri S, Wise DJW, Shamini PB, Muthukumaran N (2020) Image analysis and detection of tea leaf disease using deep learning. In: 2020 International conference on electronics and sustainable communication systems (ICESC). IEEE, pp 398–403

Ge Y, Lin S, Zhang Y, Li Z, Cheng H, Dong J, Shao S, Zhang J, Qi X, Wu Z (2022) Tracking and counting of Tomato at different growth period using an improving YOLO-Deepsort Network for Inspection Robot. Machines 10(6):489