Abstract

Multi-modal (vision-language) models, such as CLIP, are replacing traditional supervised pre-training models (e.g., ImageNet-based pre-training) as the new generation of visual foundation models. These models learn robust and aligned semantic representations from billions of internet image-text pairs and can be applied to various downstream tasks in a zero-shot manner. However, in some fine-grained domains, such as medical imaging and remote sensing, the performance of multi-modal foundation models often leaves much to be desired. Consequently, many researchers have begun to explore few-shot adaptation methods for these models, giving rise to three main technical approaches: (1) prompt-based methods, (2) adapter-based methods, and (3) external knowledge-based methods. Nevertheless, this rapidly developing field has produced numerous results, yet no comprehensive survey has systematically organized its research progress. Therefore, in this survey, we introduce and analyze the research advancements in few-shot adaptation methods for multi-modal models, summarize commonly used datasets and experimental setups, and compare the results of different methods. In addition, because existing methods lack reliable theoretical support, we derive a generalization error bound for few-shot adaptation of multi-modal models. The theorem reveals that the generalization error of multi-modal foundation models is constrained by three factors: domain gap, model capacity, and sample size. Based on this, we propose three possible solutions from the following aspects: (1) adaptive domain generalization, (2) adaptive model selection, and (3) adaptive knowledge utilization.

1 Introduction

Artificial intelligence is increasingly being applied across a wide range of key applications and industries, including voice recognition, image recognition, autonomous driving, intelligent manufacturing, medical diagnosis, and financial risk control. In bringing artificial intelligence to these fields, a recurring challenge is that demands are fragmented and diverse. In the past, models typically had small parameter sizes and limited generalization capability, so each model could handle only a single scenario, resulting in high costs and poor generalization performance. Recently, an increasing number of researchers have started focusing on pre-trained foundation models with stronger generalization ability.

Since 2018, the training data and parameter sizes of foundation models such as BERT (Devlin et al. 2019), Pangu (Zeng et al. 2021), PaLM (Chowdhery et al. 2022), and GPT-4 (OpenAI 2023) have grown exponentially, resulting in significant performance improvements on various natural language understanding tasks. Meanwhile, foundation models are gradually evolving from single modalities, such as text, speech, and vision, toward multi-modal fusion. More and more research organizations have turned their attention to multi-modal pre-trained foundation models, such as ViLBERT (Lu et al. 2019), CLIP (Radford et al. 2021), DeCLIP (Li et al. 2022), FILIP (Yao et al. 2022), PyramidCLIP (Gao et al. 2022), OFA (Cai et al. 2020), BEiT-3 (Wang et al. 2022), ERNIE-ViL (Shan et al. 2022), and Data2vec (Baevski et al. 2022).

In early 2021, OpenAI released CLIP, a large-scale multi-modal model that aligns images and texts. It is pre-trained with contrastive learning on about 400 million image-text pairs collected from the internet, from which it acquires rich vision-language knowledge. Although the pre-trained CLIP model can make zero-shot predictions by using text features as classification weights at inference time, this approach typically excels only in general domains such as ImageNet and tends to underperform on data from certain fine-grained domains. The reason is that such models are pre-trained primarily on general-domain data, whereas the data distribution of a specific downstream task often diverges from the pre-training data. Hence, the model needs to be fine-tuned on data from the downstream task. To improve generalization through fine-tuning, researchers first proposed prompt-based fine-tuning adaptation methods (e.g., CoOp; Zhou et al. 2022), which treat the fixed text input on the CLIP text side as learnable vectors and fine-tune them with a small number of samples to adapt to downstream tasks. Another common way to enhance few-shot adaptation is adapter-based fine-tuning, such as CLIP-Adapter (Gao et al. 2021), which adds simple adapter structures to the pre-trained model and fine-tunes only the adapter parameters on a small number of samples, enabling the foundation model to adapt to downstream tasks. In addition, methods that introduce large language models (e.g., CuPL; Pratt et al. 2022) or other external knowledge such as knowledge graphs can help the model handle unseen samples better, enhance its semantic comprehension and robustness, and thus improve its few-shot adaptation performance.
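
The zero-shot mechanism described above, in which text features act as classification weights, can be illustrated with a minimal NumPy sketch. It is not CLIP's code: the random embeddings stand in for encoder outputs, and the logit scale of 100 is an assumed temperature.

```python
import numpy as np

def l2_normalize(x, axis=-1):
    """Scale vectors to unit L2 norm so that dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=axis, keepdims=True)

def zero_shot_logits(image_feats, class_text_feats, logit_scale=100.0):
    """CLIP-style zero-shot logits.

    image_feats:      (N, D) image embeddings from the image encoder.
    class_text_feats: (C, D) embeddings of prompts such as "a photo of a {class}".
    The normalized text embeddings play the role of a linear classifier's weights.
    """
    img = l2_normalize(image_feats)
    txt = l2_normalize(class_text_feats)
    return logit_scale * img @ txt.T  # (N, C) scaled cosine similarities

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

# Toy example with random stand-in embeddings (not real CLIP features).
rng = np.random.default_rng(0)
text_feats = rng.normal(size=(3, 8))                               # 3 classes, 8-dim
image_feats = text_feats[[2, 0]] + 0.05 * rng.normal(size=(2, 8))  # images near classes 2 and 0
probs = softmax(zero_shot_logits(image_feats, text_feats))
print(probs.argmax(axis=1))  # predicted class index for each image
```

No training is involved: changing the class names only changes the rows of `class_text_feats`, which is why the same model can classify new label sets without fine-tuning.
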
These three kinds of methods have been widely used in various downstream adaptation tasks, but no comprehensive survey has yet systematically organized them. Therefore, we describe and compare these methods in detail and explore future directions for further improving the performance and generalization ability of pre-trained models. The contributions of this paper are as follows:

- We comprehensively review and organize multi-modal few-shot adaptation methods and classify existing methods into prompt-based fine-tuning adaptation methods, adapter-based fine-tuning adaptation methods, adaptation methods based on external knowledge, and other methods. We further subdivide the prompt-based methods into textual prompt, visual prompt, multi-modal prompt, and multi-task prompt methods; the adapter-based methods into single-modal and multi-modal adapter methods; and the external knowledge-based methods into pre-training methods with external knowledge and downstream adaptation methods that leverage external knowledge.

- We review 11 commonly used datasets for evaluating the downstream generalization performance of multi-modal foundation models and describe in detail four experimental setups for verifying their adaptation performance under few-shot conditions. We present and compare the experimental results under the four setups and highlight why different types of methods can effectively enhance the generalization performance of multi-modal foundation models.

- We discuss the common shortcomings of existing few-shot adaptation methods for multi-modal foundation models and analyze the domain adaptation problem. Starting from the cross-domain generalization error bound in statistical machine learning theory, we derive an error bound for few-shot adaptation of multi-modal foundation models, which reveals that the main challenges faced by existing methods are ineffective adaptation between upstream and downstream domain distributions, a lack of adaptability in model selection, and insufficient utilization of data and knowledge.

2 Pre-training of multi-modal foundation models

In recent years, large-scale pre-training models have received extensive attention from academia and industry. Initially, work on foundation model pre-training focused mainly on natural language processing, where self-supervised language models such as BERT (Devlin et al. 2019) and GPT (Radford and Narasimhan 2018) showed stronger natural language understanding and generation capabilities than traditional methods. In computer vision, the paradigm has likewise shifted from supervised to self-supervised pre-training. The performance of self-supervised pre-trained visual models has improved significantly, evolving from early methods based on data augmentation, such as SimCLR (Chen et al. 2020) and MoCo (He et al. 2020), to more recent methods based on random masking, such as MAE (He et al. 2022) and BEiT (Bao et al. 2022). However, pre-trained language models cannot take visual inputs, so their advantage in language understanding cannot be extended to multi-modal downstream tasks such as visual question answering (VQA). On the other hand, the supervisory signals used for visual pre-training are often limited to data augmentation and random masking, which prevents these models from learning richer semantic representations of the open world. As a result, we have recently witnessed a surge in the development of large-scale pre-trained multi-modal models that combine the visual and language modalities, as illustrated in Table 1.

A notable characteristic of these multi-modal pre-trained foundation models is their ability to efficiently learn visual concepts from large-scale natural language supervision and to embed image and text features into a shared semantic space, which gives them zero-shot prediction capability. However, when the downstream data come from specific domains that differ greatly from the pre-training data, such as remote sensing, healthcare, and e-commerce, the zero-shot prediction accuracy of multi-modal foundation models drops sharply. In this case, the model needs to be fine-tuned on downstream task data, for example, with linear probing or full fine-tuning. However, such methods usually require a large number of samples for effective training, whereas the number of samples available in real downstream tasks is often limited by labeling costs. To address this problem, the academic community has begun to explore fine-tuning multi-modal foundation models with small amounts of data so that they can generalize efficiently to specific downstream applications. For example, some works (Lin et al. 2023; Gao et al. 2021) fine-tune CLIP with linear classifiers or adapter layers. Such CLIP fine-tuning methods achieve strong results on few-shot image recognition tasks, in some cases even surpassing algorithms designed specifically for few-shot learning.

3 Few-shot adaptation methods for multi-modal foundation models

To effectively enhance the generalization performance of models in specific domains, multi-modal foundation models need to be fine-tuned with limited samples so that they can be applied more broadly. We refer to such methods as few-shot adaptation methods for multi-modal foundation models. This section is divided into four subsections that review existing methods in detail: prompt-based fine-tuning adaptation methods, adapter-based fine-tuning adaptation methods, adaptation methods based on external knowledge, and other methods.

3.1 Prompt-based fine-tuning adaptation methods

In this section, we classify prompt-based fine-tuning adaptation approaches into three categories according to the modality they act on (textual, visual, and multi-modal) and introduce representative methods in each category.

3.1.1 Textual prompt-based fine-tuning adaptation

In the field of natural language processing, prompt-based fine-tuning adaptation (Lester et al. 2021; Shin et al. 2020; Li and Liang 2021; Reynolds and McDonell 2021; Liu et al. 2021) is a classic approach to few-shot generalization in large language models. It replaces part of the otherwise fixed text input with learnable vectors and fine-tunes only these vectors on downstream task data, enabling the model to adapt to specific downstream tasks. This avoids the manual design of textual prompts, and because only a small portion of the model input is fine-tuned, it effectively mitigates the risk of overfitting. Inspired by this, some researchers have begun to design prompt-based fine-tuning adaptation methods for multi-modal foundation models. CoOp (Zhou et al. 2022) was the first to incorporate prompt learning into downstream task adaptation for multi-modal pre-trained foundation models. It uses learnable word embeddings to construct context prompts automatically instead of manually designing a prompt template for each task. As illustrated in Fig. 1, the category label \(\{\text{object}\}\) is extended into a full textual prompt "\([V]_1, [V]_2, \ldots, [V]_m, \{\text{object}\}\)", where each \([V]_i\) is a learnable word vector. These word vectors are then fine-tuned by minimizing the classification loss on downstream task data, so that the model automatically acquires text inputs adapted to the downstream task.
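
To make context optimization concrete, the toy sketch below learns shared context vectors \([V]_1, \ldots, [V]_m\) while everything else stays frozen. It rests on loudly stated assumptions: the "text encoder" is a hypothetical mean-pooling layer followed by a frozen linear map and tanh (CoOp itself uses CLIP's frozen Transformer text encoder), class-name embeddings and image features are random, and the gradient is derived by hand for this toy model only.

```python
import numpy as np

rng = np.random.default_rng(0)
E, D, C, M = 16, 8, 4, 4  # token-embedding dim, feature dim, number of classes, context length m

# Frozen pieces (hypothetical stand-ins for CLIP's text encoder and class-name embeddings).
W_frozen = rng.normal(size=(E, D)) / np.sqrt(E)
class_tokens = rng.normal(size=(C, E))
# Learnable context vectors [V]_1..[V]_m, shared by all classes.
ctx = 0.02 * rng.normal(size=(M, E))

def encode_prompts(ctx):
    """Encode "[V]_1 ... [V]_m {object}" for every class with the toy frozen encoder."""
    seq = np.concatenate([np.repeat(ctx[None], C, axis=0), class_tokens[:, None]], axis=1)
    pooled = seq.mean(axis=1)            # (C, E): mean over the M + 1 tokens
    return np.tanh(pooled @ W_frozen)    # (C, D): one classifier weight per class

def loss_and_ctx_grad(ctx, img_feats, labels):
    """Cross-entropy loss and its gradient with respect to the context vectors only."""
    text = encode_prompts(ctx)
    logits = img_feats @ text.T
    logits = logits - logits.max(axis=1, keepdims=True)
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    n = len(labels)
    loss = -np.log(p[np.arange(n), labels]).mean()
    d_logits = p.copy()
    d_logits[np.arange(n), labels] -= 1.0
    d_logits /= n
    d_text = d_logits.T @ img_feats           # (C, D)
    d_pre = d_text * (1.0 - text ** 2)        # back through tanh
    d_pooled = d_pre @ W_frozen.T             # (C, E)
    g = d_pooled.sum(axis=0) / (M + 1)        # each context token enters every prompt with weight 1/(M+1)
    return loss, np.repeat(g[None], M, axis=0)

# Toy few-shot training set: 2 random "image features" per class.
img_feats = rng.normal(size=(2 * C, D))
labels = np.repeat(np.arange(C), 2)

initial_loss, _ = loss_and_ctx_grad(ctx, img_feats, labels)
for _ in range(300):
    _, grad = loss_and_ctx_grad(ctx, img_feats, labels)
    ctx -= 0.1 * grad  # only ctx is updated; W_frozen and class_tokens never change
final_loss, _ = loss_and_ctx_grad(ctx, img_feats, labels)
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

Because the toy encoder mean-pools its tokens, all context vectors receive the same gradient here; with a real Transformer encoder, each context position would be updated differently.
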

Subsequently, Zhou et al. (2022) introduced Conditional Context Optimization (CoCoOp), which adds a meta-network that learns features from each image. These image-conditional features are combined with the prompt vectors to improve CoOp's generalization to new categories. To effectively leverage the zero-shot ability of pre-trained models, Huang et al. (2022) proposed Unsupervised Prompt Learning (UPL), which selects zero-shot predictions with high confidence as pseudo-labels to supervise prompt learning. Similarly, Prompt-aligned Gradient (ProGrad) (Zhu et al. 2022) uses zero-shot predictions to constrain the direction of gradient updates, avoiding conflicts between the few-shot model and general knowledge and thus mitigating overfitting. However, because visual information is highly diverse, a single textual prompt can hardly match complex visual data. To address this, Chen et al. (2023) proposed Prompt Learning with Optimal Transport (PLOT), which learns multiple distinct textual prompts, regards them as descriptions of different image regions, and uses optimal transport to match the textual prompts with local image features. Lu et al. (2022) introduced Prompt Distribution Learning (ProDA), which learns a distribution of prompts and samples different textual prompts from it. In addition, to exploit the correlations among multi-task data, Ding et al. (2022) proposed Soft Context Sharing for Prompt Tuning (SoftCPT), which uses a meta-network shared across tasks that takes the concatenation of predefined task names and learnable meta-prompts as input, so that prompts are fine-tuned with the help of multi-task data.
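
The gradient-alignment idea behind ProGrad can be sketched as a projection. This follows our reading of the method and is not its released code: `g_domain` is the gradient of the few-shot cross-entropy loss, `g_general` is the gradient of a term that keeps predictions close to zero-shot CLIP, and when the two conflict the conflicting component of `g_domain` is removed. The function name and the `lam` weight are illustrative.

```python
import numpy as np

def prograd_direction(g_domain, g_general, lam=1.0):
    """Return the update direction for the prompt vectors.

    If the two gradients agree (non-negative dot product), g_domain is used as is.
    Otherwise the component of g_domain along g_general is removed (scaled by lam),
    so the update no longer works against the general zero-shot knowledge.
    """
    gd = np.ravel(g_domain)
    gg = np.ravel(g_general)
    dot = float(gd @ gg)
    if dot >= 0.0:
        return np.asarray(g_domain, dtype=float)
    projected = gd - lam * dot / float(gg @ gg) * gg
    return projected.reshape(np.shape(g_domain))

g_d = np.array([1.0, -2.0])  # toy gradients that conflict: their dot product is -2
g_g = np.array([0.0, 1.0])
g = prograd_direction(g_d, g_g)
print(g, g @ g_g)            # with lam = 1 the result is orthogonal to g_g
```

With `lam = 1` the update keeps whatever progress on the few-shot loss is compatible with the zero-shot direction, which is how this kind of rule limits overfitting without discarding the downstream signal.
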

3.1.2 Visual prompt-based fine-tuning adaptation

All of the above methods fine-tune only the textual side of CLIP, whereas CLIP, as a multi-modal model, places equal importance on the visual and textual sides. Fine-tuning only the textual prompts cannot improve the feature extraction ability of the visual encoder, so the extracted visual features may not match the target features of downstream tasks. Therefore, inspired by textual prompt fine-tuning adaptation, a series of visual prompt fine-tuning adaptation methods have emerged. Existing methods of this kind mainly operate at either the token level or the pixel level. Visual Prompt Tuning (VPT) (Jia et al. 2022) introduces learnable visual prompts in the form of tokens. Class-Aware Visual Prompt Tuning (CAVPT) (Xing et al. 2022) further adds a cross-attention module so that the visual prompts focus more on the objectives of downstream tasks. In contrast to these token-based methods, Bahng et al. (2022) proposed adding pixel-level visual prompts directly around the image in a padding format. Wu et al. (2022) further proposed Enhanced Visual Prompting (EVP), which scales down the original image and pads the prompt around it instead of padding directly around the original image.
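
A pixel-level padding prompt can be sketched as a learnable tensor that is added to the image but masked so that only a frame of `pad` pixels around the border takes effect. The additive formulation, array shapes, and function names below are assumptions made for illustration; in practice the prompt values would be optimized by backpropagating the classification loss through the frozen image encoder.

```python
import numpy as np

def border_mask(h, w, pad):
    """1 on a frame `pad` pixels wide around the image, 0 in the interior."""
    mask = np.ones((h, w), dtype=np.float32)
    mask[pad:h - pad, pad:w - pad] = 0.0
    return mask

def apply_padding_prompt(images, prompt, pad):
    """Add a learnable pixel-space prompt that is non-zero only on the image border.

    images: (N, C, H, W) array; prompt: (C, H, W) learnable array shared by all images.
    Prompt values under the zeroed interior never affect the output, so only the frame is learned.
    """
    _, _, h, w = images.shape
    mask = border_mask(h, w, pad)[None]  # (1, H, W), broadcast over channels
    return images + mask * prompt        # broadcast over the batch dimension

imgs = np.zeros((2, 3, 8, 8), dtype=np.float32)
prompt = np.ones((3, 8, 8), dtype=np.float32)
out = apply_padding_prompt(imgs, prompt, pad=2)
print(out[0, 0].astype(int))  # ones on the 2-pixel border, zeros inside
```

Following the description above, EVP would differ by first scaling the image down and then placing the prompt frame around the smaller image, so no original pixels are covered by the prompt.
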

3.1.3 Multi-modal prompt-based fine-tuning adaptation

In addition to learning textual and visual prompts separately, it is also possible to learn multi-modal prompts jointly to better align textual and visual features. Because textual and visual features differ inherently, Multi-modal Prompt Learning (MAPLE) (Khattak et al. 2022) strengthens the connection between them by using coupling functions to map textual prompts into visual prompts. Unified Prompt Tuning (UPT) (Zang et al. 2022), by contrast, first learns a universal prompt and then decomposes it into textual and visual prompts. Multi-task Visual Language Prompt Tuning (MVLPT) (Shen et al. 2022) introduces multi-task learning, fine-tuning textual and visual prompts with cross-task knowledge.

3.2 Adapter-based fine-tuning adaptation methods

In this section, we divide adapter-based fine-tuning adaptation methods into two categories according to the number of modalities they involve: single-modal and multi-modal methods. We introduce representative methods of each.

3.2.1 Single-modal adapter-based fine-tuning adaptation

In the field of Natural Language Processing (NLP), adapters were first introduced by a Google team in 2019 for fine-tuning large language models (Houlsby et al. 2019). During training on downstream tasks, this method freezes the parameters of the original language model and updates only a small number of parameters in the added adapter modules. Owing to its parameter efficiency, design flexibility, and high robustness, this approach has received extensive research attention in NLP in recent years (Ding et al. 2022). More recently, adapter-based approaches have also been applied to Vision Transformers (ViTs) in computer vision. Jie and Deng (2022) addressed the lack of inductive bias in adapter structures for ViTs by introducing Convolutional Bypasses (Convpass). They further proposed Factor-Tuning (FacT; Jie and Deng 2023) to improve the efficiency of parameter-efficient transfer learning and meet the storage constraints of practical applications.
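
The bottleneck adapter idea (project down, apply a nonlinearity, project back up, add a residual connection) can be sketched in a few lines of NumPy. The ReLU, the zero initialization of the up-projection, and the dimensions are illustrative assumptions; the point is that only these small matrices would be trained while the surrounding pre-trained layer stays frozen.

```python
import numpy as np

class BottleneckAdapter:
    """Bottleneck adapter: x + up(relu(down(x))).

    Zero-initializing the up-projection makes the adapter start as an identity map,
    so inserting it does not change the frozen model's outputs before training.
    """

    def __init__(self, dim, bottleneck, rng):
        self.w_down = rng.normal(scale=dim ** -0.5, size=(dim, bottleneck))
        self.b_down = np.zeros(bottleneck)
        self.w_up = np.zeros((bottleneck, dim))
        self.b_up = np.zeros(dim)

    def __call__(self, x):
        h = np.maximum(x @ self.w_down + self.b_down, 0.0)  # down-projection + ReLU
        return x + h @ self.w_up + self.b_up                # up-projection + residual

    def num_params(self):
        return sum(a.size for a in (self.w_down, self.b_down, self.w_up, self.b_up))

rng = np.random.default_rng(0)
adapter = BottleneckAdapter(dim=768, bottleneck=64, rng=rng)
x = rng.normal(size=(4, 768))
print(np.allclose(adapter(x), x), adapter.num_params())
```

For a 768-dimensional hidden state with a 64-dimensional bottleneck, the adapter adds 99,136 trainable parameters, which is small compared with the frozen layer it is attached to.
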

3.2.2 Multi-modal adapter-based fine-tuning adaptation

The adapter-based methods above all target single-modal foundation models in natural language processing or computer vision. In recent years, adapter-based methods have also been extended to multi-modal foundation models to enhance their downstream generalization ability. Gao et al. (2021) introduced CLIP-Adapter, which keeps the backbone network frozen and appends an adapter of fully connected layers to learn additional knowledge. It then merges this newly learned knowledge with CLIP's original zero-shot knowledge through a residual connection, as illustrated in Fig. 2.
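
A minimal sketch of residual blending in the spirit of CLIP-Adapter, assuming a two-layer bottleneck MLP on the frozen image features and a residual ratio `alpha` that mixes adapted and original features before CLIP-style classification. The hidden size, the ReLU placement, the logit scale of 100, and the random weights are illustrative assumptions.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def clip_adapter_logits(img_feats, text_feats, w1, w2, alpha, logit_scale=100.0):
    """Blend adapted and frozen features, then classify against the text features.

    img_feats: (N, D) frozen image features; text_feats: (C, D) frozen class text features.
    w1: (D, H) and w2: (H, D) are the only trainable weights (a bottleneck MLP adapter).
    alpha is the residual ratio: alpha = 0 reproduces zero-shot CLIP exactly.
    """
    adapted = np.maximum(img_feats @ w1, 0.0) @ w2         # adapter output
    blended = alpha * adapted + (1.0 - alpha) * img_feats  # residual connection
    return logit_scale * l2_normalize(blended) @ l2_normalize(text_feats).T

rng = np.random.default_rng(0)
N, C, D, H = 5, 3, 16, 4
img = rng.normal(size=(N, D))
txt = rng.normal(size=(C, D))
w1 = 0.1 * rng.normal(size=(D, H))
w2 = 0.1 * rng.normal(size=(H, D))

zero_shot = 100.0 * l2_normalize(img) @ l2_normalize(txt).T
print(np.allclose(clip_adapter_logits(img, txt, w1, w2, alpha=0.0), zero_shot))
```

The residual ratio acts as a dial between preserving the pre-trained knowledge (small `alpha`) and fitting the few-shot data (large `alpha`).
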

Building on these developments, Zhang et al. (2021) introduced Tip-Adapter, which constructs a classifier from the downstream few-shot training data and linearly combines its predictions with those of the original zero-shot classifier to improve prediction performance. SVL-Adapter (Pantazis et al. 2022) fuses a pre-trained self-supervised visual encoder before the adapter to extract more robust visual features. However, these methods use only a cross-modal contrastive loss and do not consider a vision-specific contrastive loss for few-shot datasets. To address this, Peng et al. (2022) proposed Semantic-guided Visual Adapting (SgVA-CLIP), which guides the parameter updates of the visual adapter through implicit knowledge distillation to keep the image-text relationship consistent. To enhance the cross-modal interaction capabilities of adapters, CALIP (Guo et al. 2023) uses attention maps to fuse text and image features and inserts two fine-tunable linear layers before and after the fusion. In addition, Cross-Modal Adapter (CMA) (Jiang et al. 2022) and Multimodal Video Adapter (MV-Adapter) (Zhang et al. 2023) achieve cross-modal interaction by sharing adapter weights between the two modalities. These methods consider both single-modal and multi-modal scenarios but do not fully integrate the advantages of each modality. To address this, Lu et al. (2023) proposed UniAdapter, which unifies single-modal and multi-modal adapters.
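
The few-shot classifier combined with zero-shot predictions in Tip-Adapter can be sketched as a cache model, following our understanding of its formulation: normalized few-shot training features act as keys, their one-hot labels as values, and an exponential of the cosine affinity turns similarities into cache logits that are added to the zero-shot logits with weight `alpha`. The values of `alpha` and `beta` and the logit scale of 100 are placeholders, not tuned settings.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def tip_adapter_logits(test_feats, train_feats, train_labels, text_feats,
                       num_classes, alpha=1.0, beta=1.0):
    """Zero-shot CLIP logits plus alpha times cache-model logits (no training needed)."""
    f = l2_normalize(test_feats)
    keys = l2_normalize(train_feats)                       # cache keys: few-shot features
    values = np.eye(num_classes)[train_labels]             # cache values: one-hot labels
    affinity = f @ keys.T                                  # cosine similarity to cached samples
    cache_logits = np.exp(-beta * (1.0 - affinity)) @ values
    clip_logits = 100.0 * f @ l2_normalize(text_feats).T   # zero-shot classifier
    return clip_logits + alpha * cache_logits

# Toy check: identical text features make the zero-shot part uninformative,
# so the prediction is decided by the cached few-shot samples.
train = np.eye(3)
labels = np.array([0, 1, 2])
text = np.ones((3, 3))
query = np.array([[0.0, 1.0, 0.1]])
print(tip_adapter_logits(query, train, labels, text, num_classes=3).argmax(axis=1))
```

Because the cache is built directly from the training samples, this variant needs no gradient updates; the linear weighting by `alpha` controls how much the few-shot evidence overrides the zero-shot prior.
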

3.3 External knowledge-based adaptation methods

According to how external knowledge is applied, we divide external knowledge-based adaptation methods into two categories: pre-training methods and downstream adaptation methods. As before, we introduce representative works in each.

3.3.1 External knowledge-based pre-training methods

Pre-trained foundation models can learn general representations by mining relevant information from vast amounts of internet data. However, in such data-driven models, knowledge is often implicit and not explicitly linked to human understanding of the world or to common-sense knowledge. In recent years, data- and knowledge-driven pre-training methods have been emerging, and researchers have started to incorporate more comprehensive external knowledge, such as knowledge graphs, into foundation models to make them more robust, reliable, and interpretable. ERNIE (Zhang et al. 2019) incorporates a knowledge encoder for entity knowledge extraction and heterogeneous information fusion. K-BERT (Liu et al. 2020) retrieves external knowledge relevant to the model input and constructs sentence trees with rich contextual knowledge as the model input. More recently, some efforts have also begun to inject knowledge into the pre-training of multi-modal foundation models. For example, ERNIE-ViL (Yu et al. 2021) integrates knowledge from scene graphs, KM-BART (Xing et al. 2021) models general visual knowledge by creating additional pre-training tasks, and K-LITE (Shen et al. 2022) incorporates various external knowledge sources, including WordNet and Wikipedia definitions.

3.3.2 External knowledge-based downstream adaptation methods

The methods above introduce external knowledge during the pre-training phase. However, in downstream few-shot adaptation scenarios with limited data, external knowledge is also needed to compensate for the scarce samples and ensure the model's performance. One of the most common approaches is to generate richer textual descriptions for each category by querying a large language model, as illustrated in Fig. 3. Customized Prompts via Language Models (CuPL) (Pratt et al. 2022) was the first method to integrate external knowledge into the downstream generalization process of multi-modal foundation models. CuPL queries GPT-3 to generate multiple descriptive sentences for each category, enriching the semantics of the categories and thereby improving zero-shot classification performance. However, the sentences that CuPL generates with GPT-3 may be insufficiently descriptive or unreliable. To address these issues, Menon and Vondrick (2023) further refined the GPT-3-based knowledge enhancement process by prompting GPT-3 to generate semantic attribute descriptions in the form of phrases, which makes the model more interpretable. To balance interpretability and performance, Language Guided Bottlenecks (LaBo) (Yang et al. 2022) uses GPT-3 to generate a large space of candidate feature descriptors and, considering both the discriminability of each feature with respect to other classes and its coverage of the current class, selects the optimal subset of descriptors for classification decisions, thereby exposing the model's decision rationale. ELEVATER (Li et al. 2022) also incorporates definitions from sources such as GPT-3, WordNet, and Wiktionary. Experimental results indicate that external knowledge can enhance the downstream generalization performance of multi-modal foundation models. However, different knowledge sources have different emphases and properties. For instance, WordNet offers relatively rich and accurate knowledge but lower coverage, whereas GPT-3 has broader coverage but may be less reliable. Additionally, unlike the methods above, which use external knowledge to enrich textual semantics, SuS-X (Udandarao et al. 2022) focuses on enriching the visual samples available to multi-modal models. It augments the few-shot training sets of downstream tasks by retrieving images from the LAION-5B dataset (Schuhmann et al. 2022) or by generating images with Stable Diffusion (Rombach et al. 2022), aiming to model the true distribution of downstream data more accurately and reliably.
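
Turning several generated descriptions per class into a single classifier weight, as in the CuPL-style approach described above, can be sketched by averaging normalized text embeddings. The text encoder and the language-model queries are outside this sketch: the toy vectors below are hypothetical stand-ins for the embeddings of generated sentences.

```python
import numpy as np

def build_class_weights(description_embeddings):
    """Combine several description embeddings per class into one weight per class.

    description_embeddings: list of (K_c, D) arrays, one per class, e.g. text embeddings of
    sentences generated by a language model for that class (hypothetical inputs here).
    Each class weight is the renormalized mean of the normalized description embeddings.
    """
    weights = []
    for emb in description_embeddings:
        emb = emb / np.linalg.norm(emb, axis=1, keepdims=True)  # normalize each description
        mean = emb.mean(axis=0)                                   # average over descriptions
        weights.append(mean / np.linalg.norm(mean))               # renormalize the class weight
    return np.stack(weights)                                      # (C, D)

descs = [np.array([[1.0, 0.0, 0.0], [1.0, 0.2, 0.0]]),  # toy embeddings for class 0
         np.array([[0.0, 1.0, 0.0], [0.0, 1.0, 0.1]])]  # toy embeddings for class 1
W = build_class_weights(descs)
image_feat = np.array([0.9, 0.1, 0.0])
print((W @ image_feat).argmax())  # index of the best-matching class
```

Averaging in embedding space keeps the zero-shot pipeline unchanged: the ensembled weights simply replace the single-prompt text features used as classifier weights.
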

3.4 Other methods

In addition to the three categories of methods above, some approaches fine-tune multi-modal foundation models from the perspectives of weight parameter fusion, model reconstruction, and cross-attention. Specifically, WiSE-FT (Wortsman et al. 2022) fuses the parameters of the original and fine-tuned models by linear interpolation, which enables the model to acquire specific knowledge from downstream data while retaining as much generic knowledge as possible. MaskCLIP (Zhou et al. 2022) directly modifies the structure of the CLIP image encoder by removing the query and key embedding layers and reformulating the value embedding layer and the last linear layer as 1\(\times\)1 convolutional layers, which allows the model to extract denser image features. VT-CLIP (Zhang et al. 2021) introduces a visual-guided attention mechanism that enhances the semantic correlation between the textual features and the image data of downstream tasks, thus effectively improving the generalization performance of multi-modal foundation models.
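
The weight-space interpolation used by WiSE-FT can be sketched on dictionaries of parameter arrays, assuming both models share the same architecture (same parameter names and shapes). The parameter names and values below are hypothetical toy data.

```python
import numpy as np

def wise_ft(zero_shot_params, fine_tuned_params, alpha):
    """theta = (1 - alpha) * theta_zero_shot + alpha * theta_fine_tuned, entry by entry.

    alpha = 0 recovers the zero-shot model; alpha = 1 recovers the fine-tuned model.
    """
    return {name: (1.0 - alpha) * zero_shot_params[name] + alpha * fine_tuned_params[name]
            for name in zero_shot_params}

theta_zs = {"proj": np.array([[1.0, 0.0], [0.0, 1.0]]), "bias": np.array([0.0, 0.0])}
theta_ft = {"proj": np.array([[3.0, 1.0], [1.0, 3.0]]), "bias": np.array([1.0, -1.0])}
for alpha in (0.0, 0.5, 1.0):
    print(alpha, wise_ft(theta_zs, theta_ft, alpha)["bias"])
```

Since the mixing happens once, in weight space, the interpolated model has the same inference cost as either endpoint; `alpha` is a hyperparameter that trades downstream accuracy against retained generic knowledge.
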

4 Datasets and comparison of experimental results

There are 11 commonly used datasets for evaluating the downstream generalization performance of multi-modal foundation models: 2 general object recognition datasets [ImageNet (Deng et al. 2009) and Caltech101 (Fei-Fei et al. 2007)], 5 fine-grained classification datasets [OxfordPets (Parkhi et al. 2012), StanfordCars (Krause et al. 2013), Flowers102 (Nilsback and Zisserman 2008), Food101 (Bossard et al. 2014), and FGVCAircraft (Maji et al. 2013)], 1 scene recognition dataset [SUN397 (Xiao et al. 2010)], 1 action recognition dataset [UCF101 (Soomro et al. 2012)], 1 texture dataset [DTD (Cimpoi et al. 2014)], and 1 satellite image dataset [EuroSAT (Helber et al. 2019)]. These datasets cover a range of different visual tasks and collectively form a more comprehensive benchmark for evaluating multi-modal foundation model performance in various scenarios. They focus on evaluating the model's classification ability in different domains. For example, fine-grained datasets require the model to extract detailed features, while satellite image datasets, with their unique acquisition perspectives, place higher demands on the model's generalization ability.

Performance is measured by classification accuracy, defined as the ratio of correctly classified test samples to all test samples. To evaluate the generalization performance of multi-modal foundation models under few-shot conditions, four experimental settings are commonly used:

Few-shot learning: Each of the 11 datasets above is partitioned into training and test sets. For each class, 1, 2, 4, 8, or 16 samples are drawn from the training set for training, and the model's performance is then evaluated on the test set. The primary aim of this experiment is to assess how a limited number of samples affects generalization performance.

Base-to-new generalization: To evaluate how well adaptation methods for multi-modal foundation models handle previously unseen classes, the classes of each of the 11 datasets are divided evenly into two groups, called base classes and new classes. The multi-modal foundation model is trained only on data from the base classes and then evaluated separately on base-class and new-class data. Performance on the base classes reflects the discriminability of the features learned by the model, while performance on the new classes reflects its generalization ability. The harmonic mean of the base-class and new-class accuracies is reported to balance discriminability and generalization ability.

Domain generalization: To validate the generalization of adaptation methods for multi-modal foundation models under domain shift, i.e., on out-of-distribution (OOD) data, ImageNet is selected as the source dataset and four other datasets [ImageNetV2 (Recht et al. 2019), ImageNet-Sketch (Wang et al. 2019), ImageNet-A (Hendrycks et al. 2021a), and ImageNet-R (Hendrycks et al. 2021b)] are selected as target datasets. The target datasets share the category information of the source dataset but have different data distributions. The model is trained solely on the source dataset and then evaluated on the target datasets.

Cross-dataset transfer: To validate generalization across datasets, ImageNet is chosen as the source dataset and the remaining 10 datasets as target datasets. The model is trained on ImageNet and then tested on the target datasets. Because the source and target datasets have almost no class overlap, this setting tests the model's ability to generalize to datasets with different classes.
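
Two pieces of the protocols above can be sketched directly: drawing a K-shot training split per class for the few-shot learning setting, and the harmonic mean used in base-to-new generalization, \(H = 2BN/(B+N)\), where \(B\) and \(N\) are the base-class and new-class accuracies. The function names, the fixed seed, and the example accuracies are hypothetical and do not come from any method in this survey.

```python
import random
from collections import defaultdict

def sample_k_shot(labels, k, seed=1):
    """Pick k training indices per class (all of them if a class has fewer than k).

    labels: sequence of class ids for the full training set.
    The fixed seed makes the split reproducible; its default value is arbitrary.
    """
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    chosen = []
    for y in sorted(by_class):
        chosen.extend(rng.sample(by_class[y], min(k, len(by_class[y]))))
    return chosen

def harmonic_mean(base_acc, new_acc):
    """H = 2 * B * N / (B + N); returns 0 when both accuracies are 0."""
    if base_acc + new_acc == 0:
        return 0.0
    return 2.0 * base_acc * new_acc / (base_acc + new_acc)

labels = [0] * 20 + [1] * 20 + [2] * 3  # toy training set; class 2 has only 3 samples
for k in (1, 2, 4, 8, 16):
    print(k, len(sample_k_shot(labels, k)))

# Hypothetical accuracies (in %): an imbalanced pair scores below its arithmetic mean of 70.
print(round(harmonic_mean(80.0, 60.0), 2))
print(harmonic_mean(70.0, 70.0))  # a balanced pair equals its arithmetic mean
```

The harmonic mean is used instead of the arithmetic mean because it penalizes methods that gain base-class accuracy at the expense of new-class accuracy.
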

We collect few-shot learning results of selected methods on the 11 datasets from various sources, as shown in Table 2. The backbone networks used are mainly the CNN-based ResNet50 and the Transformer-based ViT-B and ViT-L. All methods are trained with only 16 samples per class and then tested for image classification accuracy on the test set. "Baseline" refers to the classification results of Linear-probe CLIP.

Based on Table 2, the following conclusions can be drawn: (1) All three types of few-shot adaptation methods for multi-modal foundation models effectively improve the adaptability of foundation models to downstream tasks under few-shot conditions. On the ImageNet dataset, the prompt-based CoOp, the adapter-based SgVA-CLIP, and the external knowledge-based CuPL improve performance by 1.7\(\%\) (ViT-B/16), 3.1\(\%\) (ViT-B/16), and 0.1\(\%\) (ViT-L/14), respectively. (2) Among prompt-based fine-tuning methods, some unsupervised training methods achieve results similar to those of supervised ones. The accuracy of the unsupervised method UPL is only 0.4\(\%\) higher than that of CoOp trained with 2 samples, while the accuracy of TPT (69.0\(\%\)) is close to that of CoOp trained with 16 samples (71.9\(\%\)). A likely reason is that unsupervised methods can exploit unlabeled data effectively and avoid the overfitting to which supervised training with only a few samples is prone.

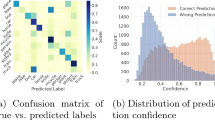

As illustrated in Fig. 4, we also collect base-to-new generalization results for existing methods from various sources. All methods in Fig. 4 use a ViT-B/16 backbone. From the figure, we draw the following conclusions: (1) Methods that perform well on the base classes often sacrifice generalization performance on the new classes. This may be because the model overfits to the base classes; in addition, disrupting or forgetting the general knowledge acquired during pre-training degrades performance when the model generalizes to unseen classes. (2) LASP-V (Bulat and Tzimiropoulos 2023) and CPL perform well in terms of both base-class discriminability and new-class generalization. Averaged over the 11 datasets, LASP-V outperforms CLIP by 13.76\(\%\), 1.89\(\%\), and 7.78\(\%\) on the base-class, new-class, and harmonic-mean metrics, respectively. On the ImageNet dataset, CPL outperforms CLIP by 6.38\(\%\), 5.03\(\%\), and 5.67\(\%\) on these metrics, respectively. Notably, both methods outperform CLIP on unseen classes, even though CLIP itself has strong zero-shot capabilities. CLIP-CITE (Liu et al. 2024) uses knowledge distillation to retain the original knowledge and achieves the best average results across the three metrics (base class, new class, and harmonic mean) on the 11 datasets.

The domain generalization results collected for existing methods from various sources are shown in Table 3, from which we observe the following: (1) TPT can be effectively combined with CoOp and CoCoOp to achieve state-of-the-art (SOTA) performance. With ViT-B/16 as the backbone, the combination of TPT and CoOp outperforms zero-shot CLIP by 6.88\(\%\), 6\(\%\), 3.14\(\%\), 10.18\(\%\), and 3.31\(\%\) on ImageNet, ImageNet-V2, ImageNet-Sketch, ImageNet-A, and ImageNet-R, respectively. (2) Compared with both textual-only prompt-based fine-tuning adaptation methods (e.g., CoOp, CoCoOp) and visual-only prompt-based fine-tuning adaptation methods (e.g., VPT), multi-modal prompt-based fine-tuning adaptation methods such as UPT and MAPLE achieve larger improvements. This suggests that tuning both modalities simultaneously is beneficial for performance.
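TPT adapts the prompt at test time without labels. As we understand the method, it creates many augmented views of a single test image, keeps the fraction ρ of views with the lowest prediction entropy, and minimizes the entropy of their averaged prediction. The sketch below computes only that objective with NumPy; the prompt update itself would require backpropagating through CLIP's text encoder and is omitted. The function names and the default ρ = 0.1 are our assumptions.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def entropy(p: np.ndarray) -> np.ndarray:
    return -(p * np.log(p + 1e-12)).sum(axis=-1)


def tpt_objective(view_logits: np.ndarray, rho: float = 0.1) -> float:
    """Entropy of the averaged prediction over the most confident views.

    view_logits: (num_views, num_classes) logits for augmented views of one
    test image. rho: fraction of lowest-entropy views to keep.
    """
    probs = softmax(view_logits)
    n_keep = max(1, int(round(rho * len(probs))))
    keep = np.argsort(entropy(probs))[:n_keep]  # confidence selection
    avg = probs[keep].mean(axis=0)              # marginal prediction
    return float(entropy(avg))                  # loss to minimize w.r.t. the prompt


agree = np.array([[4.0, 0.0]] * 4)                          # views agree
disagree = np.array([[4.0, 0.0], [0.0, 4.0]] * 2)           # views conflict
print(tpt_objective(agree, rho=1.0), tpt_objective(disagree, rho=1.0))
```

Minimizing this quantity pushes the prompt toward predictions that are both confident and consistent across augmentations.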

The collected results of cross-dataset adaptation experiments for existing methods from various sources are shown in Table 4. The purpose of this experiment is to validate the generalization performance of adaptation methods across different datasets. Using the ImageNet dataset with 1000 classes as the source dataset, the methods are initially trained with 16 samples from each class of the source dataset. Subsequently, these methods are tested on 10 different target datasets.
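The source-side training set in this protocol is a K-shot split (K = 16, i.e., 16,000 ImageNet images for 1,000 classes). A minimal sketch of drawing such a split, assuming labels are given as a list of class ids; the helper name `sample_k_shot` and the fixed seed are our choices, and published benchmarks typically fix their splits with specific seeds.

```python
import random
from collections import defaultdict


def sample_k_shot(labels, k=16, seed=0):
    """Return indices of k randomly chosen training samples per class."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    support = []
    for y in sorted(by_class):
        pool = by_class[y]
        if len(pool) < k:
            raise ValueError(f"class {y} has only {len(pool)} samples (< {k})")
        support.extend(rng.sample(pool, k))  # sampling without replacement
    return support


labels = [0] * 20 + [1] * 20 + [2] * 20
support = sample_k_shot(labels, k=16)
print(len(support))
```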

From the data in the table, the following observations can be made: (1) SubPT and TPT with a ResNet50 backbone, as well as the other methods with ViT-based backbones, achieve similar results on the source dataset but vary considerably across target datasets. For example, they achieve higher accuracy on datasets such as Caltech101 and Pets, whose categories are similar to those of ImageNet. However, their performance is much lower on datasets such as Aircraft and DTD, which contain fine-grained aircraft and texture categories. Because these categories differ substantially from those of ImageNet, the accuracies on these datasets are well below 50\(\%\), indicating that transferring specific knowledge learned from ImageNet to downstream tasks with very different categories is challenging. (2) For both prompt-based and adapter-based fine-tuning methods, tuning both modalities simultaneously tends to yield better results than tuning only one modality. For instance, the multi-modal prompt learning method MAPLE achieves higher accuracies on the 10 target datasets than the textual-only prompt learning method CoCoOp (ViT-B/16), and UPT achieves higher accuracies than the visual-only prompt learning method VPT (ViT-L/14). This suggests that for multi-modal foundation models like CLIP, adapting both the textual and visual branches is important for improved generalization.

5 Analysis

Current research on few-shot adaptation for multi-modal foundation models falls primarily into prompt-based fine-tuning adaptation methods, adapter-based fine-tuning adaptation methods, and external knowledge-based adaptation methods. Based on the current state of this research, we summarize the following issues and challenges:

(1) Ineffective Adaptation of Upstream and Downstream Domain Distributions: Existing few-shot adaptation methods for multi-modal foundation models mostly focus on the category information in downstream task data while neglecting domain distribution information. Additionally, in domain adaptation scenarios for multi-modal foundation models, the scarcity of target-domain samples makes modeling challenging, while the abundance of source-domain samples makes modeling costly. Furthermore, current domain adaptation methods are tailored to a single modality and ignore cross-modal interaction, so they do not meet the requirements of adapting multi-modal foundation models.

(2) Lack of Adaptability in Model Selection: There is a wide variety of adapter structures with different fine-tuning characteristics, learning capabilities, and model capacities. Different downstream tasks often require different optimal adapters or combinations of multiple adapters, and the feature extraction processes vary significantly across modalities. Currently, adapter selection typically relies on heuristics or exhaustive search, which is costly and offers no guarantee of performance.

(3) Insufficient Utilization of Data and Knowledge: Existing data augmentation strategies can enrich the downstream training set and reduce the risk of overfitting to some extent. However, such empirically and manually designed augmentation is costly and does not ensure that the selected augmentations suit the specific downstream task. Similarly, although new textual descriptions can be generated by introducing external knowledge, there is no guarantee that the textual knowledge describing category attributes collaborates effectively with the image data.

To address the above issues and challenges, we summarize and refine the existing theories related to cross-domain adaptation for multi-modal foundation models, which makes the study of few-shot adaptation more systematic and provides guidance for few-shot cross-domain adaptation.

Maurer (2004) studied the relationship between the mean (empirical) error and the expected error in the target domain:

where \(\Delta _{h_{N}}\left( T,{\mathbb {T}} \right) =\left| \epsilon _T\left( h_N\right) -\epsilon _{\mathbb {T}}\left( h_N\right) \right|\), \(\epsilon _T\left( h_N\right)\) denotes the mean error in the target domain, \(\epsilon _{\mathbb {T}}\left( h_N\right)\) represents the expected error in the target domain, T stands for the data in the target domain dataset, \({\mathbb {T}}\) represents all the possible data that may exist in the target domain, while \(h_0\) represents the prior model, \(h_N\) represents the adapted model, and N represents the number of samples used from the target domain dataset.
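To see numerically how model capacity and sample size enter such bounds, the sketch below evaluates a commonly cited form of Maurer's PAC-Bayesian bound, \(\mathrm{kl}(\epsilon _T(h_N)\,\|\,\epsilon _{\mathbb {T}}(h_N)) \le (KL(h_N\ ||\ h_0)+\ln (2\sqrt{N}/\delta ))/N\) with probability at least \(1-\delta\), relaxed through Pinsker's inequality \(\mathrm{kl}(p\|q)\ge 2(p-q)^2\) into a bound on \(\Delta _{h_{N}}\left( T,{\mathbb {T}} \right)\). We state this form as an assumption; its constants may differ from the expression used above. The function name is hypothetical.

```python
import math


def maurer_gap_bound(kl: float, n: int, delta: float = 0.05) -> float:
    """Bound on |empirical - expected error| holding w.p. >= 1 - delta.

    kl: KL(h_N || h_0), divergence between adapted and prior model.
    n:  number of target-domain samples N.
    Uses sqrt((kl + ln(2 sqrt(n) / delta)) / (2 n)), i.e. Maurer's bound
    relaxed with Pinsker's inequality.
    """
    if n <= 0 or kl < 0 or not 0 < delta < 1:
        raise ValueError("need n > 0, kl >= 0 and 0 < delta < 1")
    return math.sqrt((kl + math.log(2 * math.sqrt(n) / delta)) / (2 * n))


# The gap shrinks with more samples and grows with a larger KL term.
for n in (16, 160, 1600):
    print(n, round(maurer_gap_bound(kl=5.0, n=n), 3))
```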

Sicilia et al. (2022) derived an empirical estimate of the domain gap from source- and target-domain data:

where \(\widetilde{\lambda _{S,T}}\) denotes the minimum, over the fine-tuning space, of the sum of the model's errors on the source and target domains and indicates the adaptability of the upstream and downstream tasks, and E[d(S, T)] denotes the domain gap, measured as the H-divergence between the two domains.
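The domain gap is not directly observable. A common surrogate for the H-divergence is the proxy A-distance, \(2(1-2\,\mathrm{err})\), where err is the held-out error of a classifier trained to separate source from target samples. The sketch below uses a nearest-centroid (linear) domain classifier for simplicity; this estimator and the function name are our assumptions and not necessarily the empirical estimate used by Sicilia et al.

```python
import numpy as np


def proxy_a_distance(source: np.ndarray, target: np.ndarray, seed: int = 0) -> float:
    """Proxy A-distance 2 * (1 - 2 * err) from a nearest-centroid domain classifier.

    source, target: (n, d) feature matrices, e.g. image embeddings.
    Values near 2 mean the domains are easy to separate; values near 0
    (possibly slightly negative) mean they are indistinguishable.
    """
    rng = np.random.default_rng(seed)
    x = np.vstack([source, target])
    y = np.concatenate([np.zeros(len(source)), np.ones(len(target))])
    perm = rng.permutation(len(x))
    tr, te = perm[: len(x) // 2], perm[len(x) // 2:]  # train / held-out split
    mu_s = x[tr][y[tr] == 0].mean(axis=0)             # source centroid
    mu_t = x[tr][y[tr] == 1].mean(axis=0)             # target centroid
    d_s = ((x[te] - mu_s) ** 2).sum(axis=1)
    d_t = ((x[te] - mu_t) ** 2).sum(axis=1)
    pred = (d_t < d_s).astype(float)                  # 1 = predicted target
    err = float((pred != y[te]).mean())
    return 2.0 * (1.0 - 2.0 * err)


rng = np.random.default_rng(1)
src = rng.normal(0.0, 1.0, size=(200, 8))
tgt_same = rng.normal(0.0, 1.0, size=(200, 8))
tgt_shift = rng.normal(3.0, 1.0, size=(200, 8))
print(proxy_a_distance(src, tgt_same), proxy_a_distance(src, tgt_shift))
```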

Crammer et al. (2006) proposed the triangle inequality for errors:

Based on these lemmas, Sicilia et al. derived a PAC-Bayesian domain adaptation bound for multi-class classifiers:

However, the term \(\epsilon _S(h_N)\) in this bound is the empirical error of the fine-tuned model on the source-domain data, which is independent of adaptation to the downstream target domain. Conversely, the empirical error \(\epsilon _T(h_N)\) on the target domain, which does affect adaptation, is not captured. To make the bound better reflect the impact of target-domain data on adaptation, we propose the following theorem.

Theorem 1

Let \(\epsilon _{\mathbb {T}}\left( h_{N}\right)\) denote the expected error of the adapted (fine-tuned) model in the target domain and \(\epsilon _{T}\left( h_{N}\right)\) its empirical error in the target domain. Let \(\widetilde{\lambda _{S,T}}\) denote the adaptability of the upstream and downstream tasks, E[d(S, T)] the gap between the source and target domains, \(KL(h_N\ ||\ h_0)\) the difference between the original model and the fine-tuned model, and N the number of target-domain samples. Then the expected error \(\epsilon _{\mathbb {T}}\left( h_{N}\right)\) of the fine-tuned model in the target domain is bounded in terms of its empirical error \(\epsilon _{T}\left( h_{N}\right)\), the domain gap E[d(S, T)] between the source and target domains, the model capacity \(KL(h_N\ ||\ h_0)\), the sample size N, and the adaptability of the upstream and downstream tasks \(\widetilde{\lambda _{S,T}}\):

Proof

We replace \(\epsilon _S(h_N)\) in (4) with \(\epsilon _S\left( h_N\right) -\epsilon _T\left( h_N\right) +\epsilon _T\left( h_N\right)\) and rearrange to obtain (6):

Since \(\epsilon _S\left( h_N\right) -\epsilon _T\left( h_N\right) \le |\epsilon _S\left( h_N\right) -\epsilon _T\left( h_N\right) |\) always holds, we can derive:

By the definition \(\Delta _{h_{N}}\left( S,T \right) =|\epsilon _S\left( h_N\right) -\epsilon _T\left( h_N\right) |\), we obtain:

Substituting (2) into (8) and rearranging gives:

\(\square\)
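For readability, the elementary step underlying (6) to (8), which trades the source-domain empirical error for the target-domain one, can be written out explicitly:

```latex
\begin{aligned}
\epsilon_S(h_N) &= \epsilon_T(h_N) + \left[\epsilon_S(h_N) - \epsilon_T(h_N)\right] \\
                &\le \epsilon_T(h_N) + \left|\epsilon_S(h_N) - \epsilon_T(h_N)\right| \\
                &= \epsilon_T(h_N) + \Delta_{h_N}(S,T).
\end{aligned}
```

Any upper bound on \(\epsilon _{\mathbb {T}}(h_N)\) that contains \(\epsilon _S(h_N)\) therefore remains valid when \(\epsilon _S(h_N)\) is replaced by \(\epsilon _T(h_N)+\Delta _{h_N}(S,T)\), which is how the target-domain empirical error enters Theorem 1.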

The adaptability of the upstream and downstream tasks in the above theorem is determined by the nature of the tasks themselves; once the tasks are fixed, this factor remains constant. By contrast, the domain discrepancy, model capacity, and sample size can be adjusted to enhance the model's generalization performance and reduce its errors. Therefore, the domain discrepancy between the source and target domains, model capacity, and sample size are three fundamental factors that influence the adaptation of multi-modal foundation models.

6 Discussion

Guided by the generalization error bound for few-shot cross-domain adaptation, we approach the problem from three aspects, namely adaptive domain generalization, adaptive model selection, and adaptive knowledge utilization, as shown in Fig. 5, to study few-shot adaptation methods for multi-modal foundation models and propose corresponding solutions to the aforementioned problems:

(1) Adaptive domain generalization: To address the high modeling cost of domain adaptation, one possible approach is to consider source-free domain adaptation methods for multi-modal foundation models. The reconstruction error of a pre-trained autoencoder-based model can serve as a measure of domain distribution discrepancy, and it can be combined with the advantages of prompt-based fine-tuning adaptation methods to obtain more convenient and efficient adaptation methods. Additionally, to avoid introducing a modality gap and losing cross-modal semantic relevance during domain alignment, a multi-modal autoencoder can be introduced into the prompt reconstruction process to constrain the cross-modal joint distribution of the data. This would help maintain the semantic consistency of textual and visual features during domain adaptation.

(2) Adaptive model selection: Neural Architecture Search (NAS) (Kang et al. 2023) is a promising approach to the adaptive selection of adapter structures in multi-modal foundation models. It automatically explores different network architectures in a search space to find the best-performing structure for a given task. However, because multi-modal foundation models have complex structures, NAS-based methods must search over many adapter types simultaneously, resulting in a large search space and high computational cost. In such cases, a coarse-to-fine search strategy can be adopted to design more efficient NAS-based search methods.

(3) Adaptive knowledge utilization: Traditional image augmentation techniques can generate a large number of augmented samples but may not adapt effectively to specific downstream tasks. In contrast, the continuous, differentiable image augmentation paradigm can solve for optimal augmentation parameters via differentiation and backpropagation. It is therefore worth exploring differentiable image augmentation to achieve augmentation adapted to each downstream task. Additionally, introducing external knowledge can provide richer textual descriptions for multi-modal foundation models, but the introduced knowledge must be highly relevant to visual semantics. One approach could be to use a large language model to generate reliable visual descriptions as references and then train visual filters through adversarial learning so that the filtered textual descriptions contain valid visual semantics.
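Item (1) proposes using the reconstruction error of a pre-trained autoencoder as a measure of domain discrepancy. A minimal sketch of that idea, assuming a linear autoencoder (equivalent to PCA) fitted on source-domain features as a stand-in for a pre-trained, possibly multi-modal, autoencoder: target features that are poorly reconstructed indicate a larger distribution shift. The class name and the PCA substitution are our illustrative choices, not a method from the surveyed literature.

```python
import numpy as np


class LinearAutoencoder:
    """PCA-based linear autoencoder, a stand-in for a pre-trained one."""

    def __init__(self, n_components: int):
        self.k = n_components

    def fit(self, x: np.ndarray) -> "LinearAutoencoder":
        self.mean = x.mean(axis=0)
        # Principal directions of the centered source features (rows of vt).
        _, _, vt = np.linalg.svd(x - self.mean, full_matrices=False)
        self.components = vt[: self.k]
        return self

    def reconstruction_error(self, x: np.ndarray) -> float:
        z = (x - self.mean) @ self.components.T     # encode
        x_hat = z @ self.components + self.mean     # decode
        return float(((x - x_hat) ** 2).sum(axis=1).mean())


rng = np.random.default_rng(0)
basis = rng.normal(size=(2, 10))  # source features lie near a 2-D subspace
src = rng.normal(size=(500, 2)) @ basis + 0.01 * rng.normal(size=(500, 10))
ae = LinearAutoencoder(2).fit(src)
src_new = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 10))
tgt = rng.normal(size=(200, 10))  # shifted domain, off the source subspace
err_src = ae.reconstruction_error(src_new)
err_tgt = ae.reconstruction_error(tgt)
print(err_src, err_tgt)
```

Because the autoencoder is trained only once on the source domain, the score can be computed for new target data without revisiting source samples, in the spirit of the source-free setting described in item (1).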

Because samples are rare in many settings, numerous real-world applications call for few-shot adaptation methods. For example, for robots to behave more like humans, they should be able to generalize from a few demonstrations. In acoustic signal processing, few-shot adaptation methods can help generate personalized voice navigation in map applications (Wang and Yao 2019). Moreover, patients with rare diseases are scarce, yet treating rare diseases is very important, so few-shot adaptation methods are useful for studying treatment options for them. Real-world downstream tasks are diverse: they vary in domain distribution, task attributes, available sample quantities, and so on. Current adaptation methods for foundation models cannot adapt to these factors effectively, which limits model performance. This limitation has become a bottleneck hindering the further adoption of foundation models across industries. Therefore, endowing multi-modal foundation models with adaptability in downstream few-shot settings is crucial. This can be achieved through adaptive domain generalization, adaptive model selection, and adaptive knowledge utilization, which have the potential to significantly improve model performance and may represent important future research directions in this field.

7 Conclusion

We have comprehensively summarized few-shot adaptation methods for multi-modal foundation models, including prompt-based fine-tuning adaptation, adapter-based fine-tuning adaptation, and external knowledge-based fine-tuning adaptation. Prompt-based fine-tuning adaptation methods avoid the tedium of manually designing textual prompts and require fine-tuning only a small number of parameters, thus effectively mitigating overfitting. Adapter-based fine-tuning adaptation methods likewise update only a small number of parameters and offer high efficiency, design flexibility, and robustness. External knowledge-based fine-tuning adaptation methods can, to a certain extent, alleviate the problems of insufficient prior knowledge and scarce training samples in downstream few-shot scenarios. However, these methods still have limitations, such as ineffective adaptation of upstream and downstream domain distributions, lack of adaptability in model selection, and insufficient utilization of data and knowledge. We therefore believe that future work should pursue three directions, namely adaptive domain generalization, adaptive model selection, and adaptive knowledge utilization, to improve performance in multi-modal few-shot adaptation. In addition, we review 11 commonly used datasets for evaluating the downstream generalization performance of multi-modal foundation models and adopt four experimental setups to test their generalization performance under few-shot conditions. We hope that the summary and analysis in this survey provide insights and guidance for future research on few-shot adaptation of multi-modal foundation models, and that few-shot adaptation methods will demonstrate their effectiveness and potential in practical scenarios such as medical diagnosis, financial analysis, and personalized recommendation.

References

Baevski A, Hsu W, Xu Q, Babu A, Gu J, Auli M (2022) data2vec: A general framework for self-supervised learning in speech, vision and language. In: Chaudhuri, K., Jegelka, S., Song, L., Szepesvári, C., Niu, G., Sabato, S. (eds.) International Conference on Machine Learning, ICML 2022, 17-23 July 2022, Baltimore, Maryland, USA. Proceedings of Machine Learning Research, vol. 162, pp. 1298–1312. PMLR. https://proceedings.mlr.press/v162/baevski22a.html

Bahng H, Jahanian A, Sankaranarayanan S, Isola P (2022) Exploring Visual Prompts for Adapting Large-Scale Models

Bao H, Dong L, Piao S, Wei F (2022) Beit: BERT pre-training of image transformers. In: The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022. OpenReview.net. https://openreview.net/forum?id=p-BhZSz59o4

Bossard L, Guillaumin M, Gool LV (2014) Food-101 - mining discriminative components with random forests. In: Fleet, D.J., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) Computer Vision - ECCV 2014 - 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part VI. Lecture Notes in Computer Science, vol. 8694, pp. 446–461. Springer. https://doi.org/10.1007/978-3-319-10599-4_29

Bulat A, Tzimiropoulos G (2023) LASP: text-to-text optimization for language-aware soft prompting of vision & language models. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, June 17-24, 2023, pp. 23232–23241. IEEE. https://doi.org/10.1109/CVPR52729.2023.02225

Cai H, Gan C, Wang T, Zhang Z, Han S (2020) Once-for-all: Train one network and specialize it for efficient deployment. In: 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net. https://openreview.net/forum?id=HylxE1HKwS

Chen T, Kornblith S, Norouzi M, Hinton GE (2020) A simple framework for contrastive learning of visual representations. In: Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13-18 July 2020, Virtual Event. Proceedings of Machine Learning Research, vol. 119, pp. 1597–1607. PMLR. http://proceedings.mlr.press/v119/chen20j.html

Chen D, Wu Z, Liu F, Yang Z, Huang Y, Bao Y, Zhou E (2022) Prototypical contrastive language image pretraining. CoRR arXiv:2206.10996. https://doi.org/10.48550/arXiv.2206.10996

Chen G, Yao W, Song X, Li X, Rao Y, Zhang K (2023) PLOT: prompt learning with optimal transport for vision-language models. In: The Eleventh International Conference on Learning Representations, ICLR 2023, Kigali, Rwanda, May 1-5, 2023. OpenReview.net. https://openreview.net/pdf?id=zqwryBoXYnh

Chowdhery A, Narang S, Devlin J, Bosma M, Mishra G, Roberts A, Barham P, Chung HW, Sutton C, Gehrmann S, Schuh P, Shi K, Tsvyashchenko S, Maynez J, Rao A, Barnes P, Tay Y, Shazeer N, Prabhakaran V, Reif E, Du N, Hutchinson B, Pope R, Bradbury J, Austin J, Isard M, Gur-Ari G, Yin P, Duke T, Levskaya A, Ghemawat S, Dev S, Michalewski H, Garcia X, Misra V, Robinson K, Fedus L, Zhou D, Ippolito D, Luan D, Lim H, Zoph B, Spiridonov A, Sepassi R, Dohan D, Agrawal S, Omernick M, Dai AM, Pillai TS, Pellat M, Lewkowycz A, Moreira E, Child R, Polozov O, Lee K, Zhou Z, Wang X, Saeta B, Diaz M, Firat O, Catasta M, Wei J, Meier-Hellstern K, Eck D, Dean J, Petrov S, Fiedel N (2022) Palm: Scaling language modeling with pathways. CoRR arXiv:2204.02311. https://doi.org/10.48550/arXiv.2204.02311

Cimpoi M, Maji S, Kokkinos I, Mohamed S, Vedaldi A (2014) Describing textures in the wild. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, June 23-28, 2014, pp. 3606–3613. IEEE Computer Society. https://doi.org/10.1109/CVPR.2014.461

Crammer K, Kearns MJ, Wortman J (2006) Learning from multiple sources. In: Schölkopf, B., Platt, J.C., Hofmann, T. (eds.) Advances in Neural Information Processing Systems 19, Proceedings of the Twentieth Annual Conference on Neural Information Processing Systems, Vancouver, British Columbia, Canada, December 4-7, 2006, pp. 321–328. MIT Press. https://proceedings.neurips.cc/paper/2006/hash/0f21f0349462cacdc5796990d37760ae-Abstract.html

Deng J, Dong W, Socher R, Li L, Li K, Fei-Fei L (2009) Imagenet: A large-scale hierarchical image database. In: 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), 20-25 June 2009, Miami, Florida, USA, pp. 248–255. IEEE Computer Society. https://doi.org/10.1109/CVPR.2009.5206848

Devlin J, Chang M-W, Lee K, Toutanova K (2019) BERT: Pre-training of deep bidirectional transformers for language understanding. In: Burstein, J., Doran, C., Solorio, T. (eds.) Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics, Minneapolis, Minnesota. https://doi.org/10.18653/v1/N19-1423. https://aclanthology.org/N19-1423

Ding N, Qin Y, Yang G, Wei F, Yang Z, Su Y, Hu S, Chen Y, Chan C, Chen W, Yi J, Zhao W, Wang X, Liu Z, Zheng H, Chen J, Liu Y, Tang J, Li J, Sun M (2022) Delta tuning: A comprehensive study of parameter efficient methods for pre-trained language models. CoRR arXiv:2203.06904. https://doi.org/10.48550/arXiv.2203.06904

Ding K, Wang Y, Liu P, Yu Q, Zhang H, Xiang S, Pan C (2022) Prompt tuning with soft context sharing for vision-language models. CoRR arXiv:2208.13474. https://doi.org/10.48550/arXiv.2208.13474

Fei-Fei L, Fergus R, Perona P (2007) Learning generative visual models from few training examples: An incremental bayesian approach tested on 101 object categories. Comput Vis Image Underst 106(1):59–70. https://doi.org/10.1016/j.cviu.2005.09.012

Fürst A, Rumetshofer E, Lehner J, Tran VT, Tang F, Ramsauer H, Kreil DP, Kopp M, Klambauer G, Bitto A, Hochreiter S (2022) CLOOB: modern hopfield networks with infoloob outperform CLIP. In: NeurIPS. http://papers.nips.cc/paper_files/paper/2022/hash/8078e76f913e31b8467e85b4c0f0d22b-Abstract-Conference.html

Gao P, Geng S, Zhang R, Ma T, Fang R, Zhang Y, Li H, Qiao Y (2021) Clip-adapter: Better vision-language models with feature adapters. CoRR arXiv:2110.04544

Gao Y, Liu J, Xu Z, Zhang J, Li K, Ji R, Shen C (2022) Pyramidclip: Hierarchical feature alignment for vision-language model pretraining. In: NeurIPS. http://papers.nips.cc/paper_files/paper/2022/hash/e9882f7f7c44a10acc01132302bac9d8-Abstract-Conference.html

Guo Z, Zhang R, Qiu L, Ma X, Miao X, He X, Cui B (2023) CALIP: zero-shot enhancement of CLIP with parameter-free attention. In: Williams, B., Chen, Y., Neville, J. (eds.) Thirty-Seventh AAAI Conference on Artificial Intelligence, AAAI 2023, Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence, IAAI 2023, Thirteenth Symposium on Educational Advances in Artificial Intelligence, EAAI 2023, Washington, DC, USA, February 7-14, 2023, pp. 746–754. AAAI Press. https://ojs.aaai.org/index.php/AAAI/article/view/25152

He K, Chen X, Xie S, Li Y, Dollár P, Girshick RB (2022) Masked autoencoders are scalable vision learners. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022, pp. 15979–15988. IEEE. https://doi.org/10.1109/CVPR52688.2022.01553

He K, Fan H, Wu Y, Xie S, Girshick RB (2020) Momentum contrast for unsupervised visual representation learning. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, June 13-19, 2020, pp. 9726–9735. Computer Vision Foundation / IEEE. https://doi.org/10.1109/CVPR42600.2020.00975

Helber P, Bischke B, Dengel A, Borth D (2019) Eurosat: A novel dataset and deep learning benchmark for land use and land cover classification. IEEE J Sel Top Appl Earth Obs Remote Sens 12(7):2217–2226. https://doi.org/10.1109/JSTARS.2019.2918242

Hendrycks D, Basart S, Mu N, Kadavath S, Wang F, Dorundo E, Desai R, Zhu T, Parajuli S, Guo M, Song D, Steinhardt J, Gilmer J (2021) The many faces of robustness: A critical analysis of out-of-distribution generalization. In: 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, October 10-17, 2021, pp. 8320–8329. IEEE. https://doi.org/10.1109/ICCV48922.2021.00823

Hendrycks D, Zhao K, Basart S, Steinhardt J, Song D (2021) Natural adversarial examples. In: 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 15257–15266. https://doi.org/10.1109/CVPR46437.2021.01501

Houlsby N, Giurgiu A, Jastrzebski S, Morrone B, Laroussilhe Q, Gesmundo A, Attariyan M, Gelly S (2019) Parameter-efficient transfer learning for NLP. In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA. Proceedings of Machine Learning Research, vol. 97, pp. 2790–2799. PMLR. http://proceedings.mlr.press/v97/houlsby19a.html

Huang T, Chu J, Wei F (2022) Unsupervised prompt learning for vision-language models. CoRR arXiv:2204.03649. https://doi.org/10.48550/arXiv.2204.03649

Huo Y, Zhang M, Liu G, Lu H, Gao Y, Yang G, Wen J, Zhang H, Xu B, Zheng W, Xi Z, Yang Y, Hu A, Zhao J, Li R, Zhao Y, Zhang L, Song Y, Hong X, Cui W, Hou DY, Li Y, Li J, Liu P, Gong Z, Jin C, Sun Y, Chen S, Lu Z, Dou Z, Jin Q, Lan Y, Zhao WX, Song R, Wen J (2021) Wenlan: Bridging vision and language by large-scale multi-modal pre-training. CoRR arXiv:2103.06561

Jiang H, Zhang J, Huang R, Ge C, Ni Z, Lu J, Zhou J, Song S, Huang G (2022) Cross-modal adapter for text-video retrieval. CoRR arXiv:2211.09623. https://doi.org/10.48550/arXiv.2211.09623

Jia M, Tang L, Chen B, Cardie C, Belongie SJ, Hariharan B, Lim S (2022) Visual prompt tuning. In: Avidan, S., Brostow, G.J., Cissé, M., Farinella, G.M., Hassner, T. (eds.) Computer Vision - ECCV 2022 - 17th European Conference, Tel Aviv, Israel, October 23-27, 2022, Proceedings, Part XXXIII. Lecture Notes in Computer Science, vol. 13693, pp. 709–727. Springer. https://doi.org/10.1007/978-3-031-19827-4_41

Jia C, Yang Y, Xia Y, Chen Y, Parekh Z, Pham H, Le QV, Sung Y, Li Z, Duerig T (2021) Scaling up visual and vision-language representation learning with noisy text supervision. In: Meila, M., Zhang, T. (eds.) Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18-24 July 2021, Virtual Event. Proceedings of Machine Learning Research, vol. 139, pp. 4904–4916. PMLR. http://proceedings.mlr.press/v139/jia21b.html

Jie S, Deng Z (2022) Convolutional bypasses are better vision transformer adapters. CoRR arXiv:2207.07039. https://doi.org/10.48550/arXiv.2207.07039

Jie S, Deng Z (2023) Fact: Factor-tuning for lightweight adaptation on vision transformer. In: Williams, B., Chen, Y., Neville, J. (eds.) Thirty-Seventh AAAI Conference on Artificial Intelligence, AAAI 2023, Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence, IAAI 2023, Thirteenth Symposium on Educational Advances in Artificial Intelligence, EAAI 2023, Washington, DC, USA, February 7-14, 2023, pp. 1060–1068. AAAI Press. https://ojs.aaai.org/index.php/AAAI/article/view/25187

Kang J, Kang JK, Kim J, Jeon K, Chung H, Park B (2023) Neural architecture search survey: A computer vision perspective. Sensors 23(3):1713. https://doi.org/10.3390/s23031713

Khattak MU, Rasheed HA, Maaz M, Khan S, Khan FS (2022) Maple: Multi-modal prompt learning. CoRR arXiv:2210.03117. https://doi.org/10.48550/arXiv.2210.03117

Krause J, Stark M, Deng J, Fei-Fei L (2013) 3d object representations for fine-grained categorization. In: 2013 IEEE International Conference on Computer Vision Workshops, ICCV Workshops 2013, Sydney, Australia, December 1-8, 2013, pp. 554–561. IEEE Computer Society. https://doi.org/10.1109/ICCVW.2013.77

Lester B, Al-Rfou R, Constant N (2021) The power of scale for parameter-efficient prompt tuning. In: Moens, M., Huang, X., Specia, L., Yih, S.W. (eds.) Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, EMNLP 2021, Virtual Event / Punta Cana, Dominican Republic, 7-11 November, 2021, pp. 3045–3059. Association for Computational Linguistics. https://doi.org/10.18653/v1/2021.emnlp-main.243

Li XL, Liang P (2021) Prefix-tuning: Optimizing continuous prompts for generation. In: Zong, C., Xia, F., Li, W., Navigli, R. (eds.) Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 1: Long Papers), Virtual Event, August 1-6, 2021, pp. 4582–4597. Association for Computational Linguistics. https://doi.org/10.18653/v1/2021.acl-long.353

Li J, Gao M, Wei L, Tang S, Zhang W, Li M, Ji W, Tian Q, Chua T, Zhuang Y (2023) Gradient-regulated meta-prompt learning for generalizable vision-language models. CoRR arXiv:2303.06571. https://doi.org/10.48550/arXiv.2303.06571

Li Y, Liang F, Zhao L, Cui Y, Ouyang W, Shao J, Yu F, Yan J (2022) Supervision exists everywhere: A data efficient contrastive language-image pre-training paradigm. In: The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022. OpenReview.net. https://openreview.net/forum?id=zq1iJkNk3uN

Li C, Liu H, Li LH, Zhang P, Aneja J, Yang J, Jin P, Hu H, Liu Z, Lee YJ, Gao J (2022) ELEVATER: A benchmark and toolkit for evaluating language-augmented visual models. In: NeurIPS. http://papers.nips.cc/paper_files/paper/2022/hash/3c4688b6a76f25f2311daa0d75a58f1a-Abstract-Datasets_and_Benchmarks.html

Li J, Li D, Xiong C, Hoi SCH (2022) BLIP: bootstrapping language-image pre-training for unified vision-language understanding and generation. In: Chaudhuri, K., Jegelka, S., Song, L., Szepesvári, C., Niu, G., Sabato, S. (eds.) International Conference on Machine Learning, ICML 2022, 17-23 July 2022, Baltimore, Maryland, USA. Proceedings of Machine Learning Research, vol. 162, pp. 12888–12900. PMLR. https://proceedings.mlr.press/v162/li22n.html

Lin Z, Yu S, Kuang Z, Pathak D, Ramanan D (2023) Multimodality helps unimodality: Cross-modal few-shot learning with multimodal models. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, June 17-24, 2023, pp. 19325–19337. IEEE. https://doi.org/10.1109/CVPR52729.2023.01852

Liu M, Li B, Yu Y (2024) Fully Fine-tuned CLIP Models are Efficient Few-Shot Learners. CoRR arXiv:2407.04003. https://arxiv.org/abs/2407.04003

Liu X, Wang D, Li M, Duan Z, Xu Y, Chen B, Zhou M (2023) Patch-token aligned bayesian prompt learning for vision-language models. CoRR arXiv:2303.09100. https://doi.org/10.48550/arXiv.2303.09100

Liu X, Zheng Y, Du Z, Ding M, Qian Y, Yang Z, Tang J (2021) GPT understands, too. CoRR arXiv:2103.10385

Liu W, Zhou P, Zhao Z, Wang Z, Ju Q, Deng H, Wang P (2020) K-BERT: enabling language representation with knowledge graph. In: The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020, pp. 2901–2908. AAAI Press. https://ojs.aaai.org/index.php/AAAI/article/view/5681

Lu J, Batra D, Parikh D, Lee S (2019) Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In: Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R. (eds.) Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, pp. 13–23. https://proceedings.neurips.cc/paper/2019/hash/c74d97b01eae257e44aa9d5bade97baf-Abstract.html

Lu H, Ding M, Huo Y, Yang G, Lu Z, Tomizuka M, Zhan W (2023) Uniadapter: Unified parameter-efficient transfer learning for cross-modal modeling. CoRR arXiv:2302.06605. https://doi.org/10.48550/arXiv.2302.06605

Lu Y, Liu J, Zhang Y, Liu Y, Tian X (2022) Prompt distribution learning. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022, pp. 5196–5205. IEEE. https://doi.org/10.1109/CVPR52688.2022.00514

Ma C, Liu Y, Deng J, Xie L, Dong W, Xu C (2023) Understanding and mitigating overfitting in prompt tuning for vision-language models. IEEE Trans Circuits Syst Video Technol 33(9):4616–4629. https://doi.org/10.1109/TCSVT.2023.3245584

Maji S, Rahtu E, Kannala J, Blaschko MB, Vedaldi A (2013) Fine-grained visual classification of aircraft. CoRR arXiv:1306.5151

Maurer A (2004) A note on the PAC-Bayesian theorem. CoRR cs.LG/0411099

Menon S, Vondrick C (2023) Visual classification via description from large language models. In: The Eleventh International Conference on Learning Representations, ICLR 2023, Kigali, Rwanda, May 1-5, 2023. OpenReview.net. https://openreview.net/pdf?id=jlAjNL8z5cs

Mu N, Kirillov A, Wagner DA, Xie S (2022) SLIP: Self-supervision meets language-image pre-training. In: Avidan, S., Brostow, G.J., Cissé, M., Farinella, G.M., Hassner, T. (eds.) Computer Vision - ECCV 2022 - 17th European Conference, Tel Aviv, Israel, October 23-27, 2022, Proceedings, Part XXVI. Lecture Notes in Computer Science, vol. 13686, pp. 529–544. Springer. https://doi.org/10.1007/978-3-031-19809-0_30

Nilsback M, Zisserman A (2008) Automated flower classification over a large number of classes. In: Sixth Indian Conference on Computer Vision, Graphics & Image Processing, ICVGIP 2008, Bhubaneswar, India, 16-19 December 2008, pp. 722–729. IEEE Computer Society. https://doi.org/10.1109/ICVGIP.2008.47

OpenAI (2023) GPT-4 technical report. CoRR arXiv:2303.08774. https://doi.org/10.48550/arXiv.2303.08774

Pantazis O, Brostow GJ, Jones KE, Mac Aodha O (2022) SVL-Adapter: Self-supervised adapter for vision-language pretrained models. In: 33rd British Machine Vision Conference 2022, BMVC 2022, London, UK, November 21-24, 2022, p. 580. BMVA Press. https://bmvc2022.mpi-inf.mpg.de/580/

Parkhi OM, Vedaldi A, Zisserman A, Jawahar CV (2012) Cats and dogs. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, June 16-21, 2012, pp. 3498–3505. IEEE Computer Society. https://doi.org/10.1109/CVPR.2012.6248092

Peng F, Yang X, Xu C (2023) SgVA-CLIP: Semantic-guided visual adapting of vision-language models for few-shot image classification. CoRR arXiv:2211.16191. https://doi.org/10.48550/arXiv.2211.16191

Pratt SM, Liu R, Farhadi A (2022) What does a platypus look like? Generating customized prompts for zero-shot image classification. CoRR arXiv:2209.03320. https://doi.org/10.48550/arXiv.2209.03320

Radford A, Kim JW, Hallacy C, Ramesh A, Goh G, Agarwal S, Sastry G, Askell A, Mishkin P, Clark J, Krueger G, Sutskever I (2021) Learning transferable visual models from natural language supervision. In: Meila, M., Zhang, T. (eds.) Proceedings of the 38th International Conference on Machine Learning, ICML 2021, 18-24 July 2021, Virtual Event. Proceedings of Machine Learning Research, vol. 139, pp. 8748–8763. PMLR. http://proceedings.mlr.press/v139/radford21a.html

Radford A, Narasimhan K (2018) Improving language understanding by generative pre-training. https://api.semanticscholar.org/CorpusID:49313245

Recht B, Roelofs R, Schmidt L, Shankar V (2019) Do ImageNet classifiers generalize to ImageNet? In: Chaudhuri, K., Salakhutdinov, R. (eds.) Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA. Proceedings of Machine Learning Research, vol. 97, pp. 5389–5400. PMLR. http://proceedings.mlr.press/v97/recht19a.html

Reynolds L, McDonell K (2021) Prompt programming for large language models: Beyond the few-shot paradigm. In: Kitamura, Y., Quigley, A., Isbister, K., Igarashi, T. (eds.) CHI ’21: CHI Conference on Human Factors in Computing Systems, Virtual Event / Yokohama Japan, May 8-13, 2021, Extended Abstracts, pp. 314:1–314:7. ACM. https://doi.org/10.1145/3411763.3451760

Rombach R, Blattmann A, Lorenz D, Esser P, Ommer B (2022) High-resolution image synthesis with latent diffusion models. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022, pp. 10674–10685. IEEE. https://doi.org/10.1109/CVPR52688.2022.01042

Schuhmann C, Beaumont R, Vencu R, Gordon C, Wightman R, Cherti M, Coombes T, Katta A, Mullis C, Wortsman M, Schramowski P, Kundurthy S, Crowson K, Schmidt L, Kaczmarczyk R, Jitsev J (2022) LAION-5B: an open large-scale dataset for training next generation image-text models. In: NeurIPS. http://papers.nips.cc/paper_files/paper/2022/hash/a1859debfb3b59d094f3504d5ebb6c25-Abstract-Datasets_and_Benchmarks.html

Shan B, Yin W, Sun Y, Tian H, Wu H, Wang H (2022) ERNIE-ViL 2.0: Multi-view contrastive learning for image-text pre-training. CoRR arXiv:2209.15270. https://doi.org/10.48550/arXiv.2209.15270

Shen S, Li C, Hu X, Xie Y, Yang J, Zhang P, Gan Z, Wang L, Yuan L, Liu C, Keutzer K, Darrell T, Rohrbach A, Gao J (2022) K-LITE: learning transferable visual models with external knowledge. In: NeurIPS. http://papers.nips.cc/paper_files/paper/2022/hash/63fef0802863f47775c3563e18cbba17-Abstract-Conference.html

Shen S, Yang S, Zhang T, Zhai B, Gonzalez JE, Keutzer K, Darrell T (2022) Multitask vision-language prompt tuning. CoRR arXiv:2211.11720. https://doi.org/10.48550/arXiv.2211.11720

Shin T, Razeghi Y, Logan IV RL, Wallace E, Singh S (2020) AutoPrompt: Eliciting knowledge from language models with automatically generated prompts. In: Webber, B., Cohn, T., He, Y., Liu, Y. (eds.) Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, EMNLP 2020, Online, November 16-20, 2020, pp. 4222–4235. Association for Computational Linguistics. https://doi.org/10.18653/v1/2020.emnlp-main.346

Shu M, Nie W, Huang D, Yu Z, Goldstein T, Anandkumar A, Xiao C (2022) Test-time prompt tuning for zero-shot generalization in vision-language models. In: NeurIPS. http://papers.nips.cc/paper/2022/hash/5bf2b802e24106064dc547ae9283bb0c-Abstract-Conference.html

Sicilia A, Atwell K, Alikhani M, Hwang SJ (2022) PAC-Bayesian domain adaptation bounds for multiclass learners. In: Cussens, J., Zhang, K. (eds.) Uncertainty in Artificial Intelligence, Proceedings of the Thirty-Eighth Conference on Uncertainty in Artificial Intelligence, UAI 2022, 1-5 August 2022, Eindhoven, The Netherlands. Proceedings of Machine Learning Research, vol. 180, pp. 1824–1834. PMLR. https://proceedings.mlr.press/v180/sicilia22a.html

Soomro K, Zamir AR, Shah M (2012) UCF101: A dataset of 101 human actions classes from videos in the wild. CoRR arXiv:1212.0402

Sun Q, Fang Y, Wu L, Wang X, Cao Y (2023) EVA-CLIP: Improved training techniques for CLIP at scale. CoRR arXiv:2303.15389. https://doi.org/10.48550/arXiv.2303.15389

Tejankar A, Sanjabi M, Wu B, Xie S, Khabsa M, Pirsiavash H, Firooz H (2021) A fistful of words: Learning transferable visual models from bag-of-words supervision. CoRR arXiv:2112.13884

Udandarao V, Gupta A, Albanie S (2022) SuS-X: Training-free name-only transfer of vision-language models. CoRR arXiv:2211.16198. https://doi.org/10.48550/arXiv.2211.16198

Vasu PKA, Pouransari H, Faghri F, Vemulapalli R, Tuzel O (2024) MobileCLIP: Fast image-text models through multi-modal reinforced training. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 15963–15974

Wang W, Bao H, Dong L, Bjorck J, Peng Z, Liu Q, Aggarwal K, Mohammed OK, Singhal S, Som S, Wei F (2022) Image as a foreign language: BEiT pretraining for all vision and vision-language tasks. CoRR arXiv:2208.10442. https://doi.org/10.48550/arXiv.2208.10442

Wang H, Ge S, Lipton ZC, Xing EP (2019) Learning robust global representations by penalizing local predictive power. In: Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R. (eds.) Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, pp. 10506–10518. https://proceedings.neurips.cc/paper/2019/hash/3eefceb8087e964f89c2d59e8a249915-Abstract.html

Wang J, Wang H, Deng J, Wu W, Zhang D (2021) EfficientCLIP: Efficient cross-modal pre-training by ensemble confident learning and language modeling. CoRR arXiv:2109.04699

Wang Y, Yao Q (2019) Few-shot learning: A survey. CoRR arXiv:1904.05046

Wang J, Zhang Y, Zhang L, Yang P, Gao X, Wu Z, Dong X, He J, Zhuo J, Yang Q, Huang Y, Li X, Wu Y, Lu J, Zhu X, Chen W, Han T, Pan K, Wang R, Wang H, Wu X, Zeng Z, Chen C, Gan R, Zhang J (2022) Fengshenbang 1.0: Being the foundation of Chinese cognitive intelligence. CoRR arXiv:2209.02970

Wortsman M, Ilharco G, Kim JW, Li M, Kornblith S, Roelofs R, Lopes RG, Hajishirzi H, Farhadi A, Namkoong H, Schmidt L (2022) Robust fine-tuning of zero-shot models. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, June 18-24, 2022, pp. 7949–7961. IEEE. https://doi.org/10.1109/CVPR52688.2022.00780

Wu J, Li X, Wei C, Wang H, Yuille AL, Zhou Y, Xie C (2022) Unleashing the power of visual prompting at the pixel level. CoRR arXiv:2212.10556. https://doi.org/10.48550/arXiv.2212.10556

Xiao J, Hays J, Ehinger KA, Oliva A, Torralba A (2010) SUN database: Large-scale scene recognition from abbey to zoo. In: The Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2010, San Francisco, CA, USA, 13-18 June 2010, pp. 3485–3492. IEEE Computer Society. https://doi.org/10.1109/CVPR.2010.5539970

Xie C-W, Sun S, Xiong X, Zheng Y, Zhao D, Zhou J (2023) RA-CLIP: Retrieval augmented contrastive language-image pre-training. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 19265–19274

Xing Y, Shi Z, Meng Z, Lakemeyer G, Ma Y, Wattenhofer R (2021) KM-BART: Knowledge enhanced multimodal BART for visual commonsense generation. In: Zong, C., Xia, F., Li, W., Navigli, R. (eds.) Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, ACL/IJCNLP 2021, (Volume 1: Long Papers), Virtual Event, August 1-6, 2021, pp. 525–535. Association for Computational Linguistics. https://doi.org/10.18653/v1/2021.acl-long.44

Xing Y, Wu Q, Cheng D, Zhang S, Liang G, Zhang Y (2022) Class-aware visual prompt tuning for vision-language pre-trained model. CoRR arXiv:2208.08340. https://doi.org/10.48550/arXiv.2208.08340

Yang Y, Panagopoulou A, Zhou S, Jin D, Callison-Burch C, Yatskar M (2022) Language in a bottle: Language model guided concept bottlenecks for interpretable image classification. CoRR arXiv:2211.11158. https://doi.org/10.48550/arXiv.2211.11158

Yang A, Pan J, Lin J, Men R, Zhang Y, Zhou J, Zhou C (2022) Chinese CLIP: Contrastive vision-language pretraining in Chinese. CoRR arXiv:2211.01335. https://doi.org/10.48550/arXiv.2211.01335

Yao L, Huang R, Hou L, Lu G, Niu M, Xu H, Liang X, Li Z, Jiang X, Xu C (2022) FILIP: Fine-grained interactive language-image pre-training. In: The Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, April 25-29, 2022. OpenReview.net. https://openreview.net/forum?id=cpDhcsEDC2

Yao H, Zhang R, Xu C (2023) Visual-language prompt tuning with knowledge-guided context optimization. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2023, Vancouver, BC, Canada, June 17-24, 2023, pp. 6757–6767. IEEE. https://doi.org/10.1109/CVPR52729.2023.00653

Yu F, Tang J, Yin W, Sun Y, Tian H, Wu H, Wang H (2021) ERNIE-ViL: Knowledge enhanced vision-language representations through scene graphs. In: Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, The Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, February 2-9, 2021, pp. 3208–3216. AAAI Press. https://ojs.aaai.org/index.php/AAAI/article/view/16431

Zang Y, Li W, Zhou K, Huang C, Loy CC (2022) Unified vision and language prompt learning. CoRR arXiv:2210.07225. https://doi.org/10.48550/arXiv.2210.07225

Zeng W, Ren X, Su T, Wang H, Liao Y, Wang Z, Jiang X, Yang Z, Wang K, Zhang X, Li C, Gong Z, Yao Y, Huang X, Wang J, Yu J, Guo Q, Yu Y, Zhang Y, Wang J, Tao H, Yan D, Yi Z, Peng F, Jiang F, Zhang H, Deng L, Zhang Y, Lin Z, Zhang C, Zhang S, Guo M, Gu S, Fan G, Wang Y, Jin X, Liu Q, Tian Y (2021) PanGu-α: Large-scale autoregressive pretrained Chinese language models with auto-parallel computation. CoRR arXiv:2104.12369

Zhang R, Fang R, Zhang W, Gao P, Li K, Dai J, Qiao Y, Li H (2021) Tip-Adapter: Training-free CLIP-Adapter for better vision-language modeling. CoRR arXiv:2111.03930

Zhang Z, Han X, Liu Z, Jiang X, Sun M, Liu Q (2019) ERNIE: Enhanced language representation with informative entities. In: Korhonen, A., Traum, D.R., Màrquez, L. (eds.) Proceedings of the 57th Conference of the Association for Computational Linguistics, ACL 2019, Florence, Italy, July 28- August 2, 2019, Volume 1: Long Papers, pp. 1441–1451. Association for Computational Linguistics. https://doi.org/10.18653/v1/p19-1139

Zhang J, Huang J, Jin S, Lu S (2023) Vision-language models for vision tasks: A survey. CoRR arXiv:2304.00685. https://doi.org/10.48550/arXiv.2304.00685

Zhang B, Jin X, Gong W, Xu K, Zhang Z, Wang P, Shen X, Feng J (2023) Multimodal video adapter for parameter efficient video text retrieval. CoRR arXiv:2301.07868. https://doi.org/10.48550/arXiv.2301.07868

Zhang R, Qiu L, Zhang W, Zeng Z (2021) VT-CLIP: enhancing vision-language models with visual-guided texts. CoRR arXiv:2112.02399