Abstract

In the inherently hazardous construction industry, where injuries are frequent, the unsafe operation of heavy construction machinery significantly contributes to the injury and accident rates. To reduce these risks, this study introduces a novel framework for detecting and classifying these unsafe operations for five types of construction machinery. Utilizing a cascade learning architecture, the approach employs a Super-Resolution Generative Adversarial Network (SRGAN), Real-Time Detection Transformers (RT-DETR), self-DIstillation with NO labels (DINOv2), and Dilated Neighborhood Attention Transformer (DiNAT) models. The study focuses on enhancing the detection and classification of unsafe operations in construction machinery through upscaling low-resolution surveillance footage and creating detailed high-resolution inputs for the RT-DETR model. This enhancement, by leveraging temporal information, significantly improves object detection and classification accuracy. The performance of the cascaded pipeline yielded an average detection and first-level classification precision of 96%, a second-level classification accuracy of 98.83%, and a third-level classification accuracy of 98.25%, among other metrics. The cascaded integration of these models presents a well-rounded solution for near-real-time surveillance in dynamic construction environments, advancing surveillance technologies and significantly contributing to safety management within the industry.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The construction sector is one of the largest industries and contributes to the economies of developed and emerging countries. Despite its critical role in the economic framework (Khallaf and Khallaf 2021), in construction environments, employees face hazardous conditions that include intense physical labor and significant risks to their safety and health. In this dynamic sector, a significant risk factor is the proximity hazards arising from the interaction of workers with heavy construction equipment and various on-site activities. These factors contribute to poor safety records and increased incidents of both fatal and nonfatal accidents on construction sites (Chen et al. 2023; Rasouli et al. 2023). This is further aggravated by the fact that construction workers constantly face potential dangers, making it one of the most hazardous as well as challenging professions (Seo et al. 2015).

According to the Bureau of Labor Statistics (BLS 2021), construction has had the second most fatal occupational injuries in the USA, a statistic that has remained unchanged from 2017 to 2021. In 2021, out of 1,102 fatalities among construction personnel in America, 170 fatalities resulted from struck-by incidents; 59 were from being caught in, compressed by, or crushed by equipment or objects, as defined by OSHA (Brown et al. 2021, 2020). Furthermore, there were 79,660 nonfatal injuries, with 32.8% resulting from contact with objects or equipment (e.g., loaders, excavators, graders), 31.1% from falling, slipping, or tripping, 25.2% from overexertion or other bodily reactions, and 4.7% from transportation incidents. Struck-by incidents involving heavy construction machinery have been reported as a primary source of injuries in the construction sector since 1992. Considering that a significant 91.8% of construction personnel work around heavy equipment or vehicles, there is a high potential for struck-by or caught-in incidents (Betit et al. 2022; CPWR 2018). The nonfatal injury rate also shows an 8% increase since 2011 (Brown et al. 2020). Choi et al. (2019) conducted an analysis comparing fatal work-related injuries across the USA, South Korea, and China. Their findings revealed that South Korea experiences the highest death rate in the construction sector at 17.9%, with the USA at 9.4% and China at 5.3%. This alarming situation calls for a reevaluation of current safety measures and an exploration of more effective strategies.

The rise of computer and vision-based technologies in construction safety management has the potential to enhance safety monitoring and hazard detection on construction sites (Seo et al. 2015; Alsakka et al. 2023; Lee et al. 2020). Methods based on advanced computer vision (CV) and machine learning (ML) are progressively being utilized to detect unsafe behaviors, track construction vehicles, and monitor activities in real time, offering more precise and proactive safety measures (Guo et al. 2020; Zeng et al. 2021; Martinez et al. 2019; Paneru and Jeelani 2021; Xu et al. 2021).

The need for such advanced detection and classification systems is further emphasized by the evolving nature of construction projects. As these projects become larger and more complex, the use of heavy machinery increases, amplifying the potential risks (Guo et al. 2020; Jung et al. 2022). Additionally, the nature of accidents related to heavy construction machinery tends to be more severe compared to other types of construction-related incidents. Thus, reducing accidents involving heavy machinery is crucial for improving overall safety in the construction industry (Lee et al. 2020; Zeng et al. 2021; Kassemi Langroodi et al. 2021).

Despite the current advancements in these technologies, their applications in CV-based health and safety monitoring in the construction sector are in a nascent stage. There are significant research gaps and technical challenges to be addressed. Issues such as reliability, accuracy, and applicability in diverse construction environments remain to be resolved (Seo et al. 2015; Lee et al. 2020; Guo et al. 2020; Zeng et al. 2021). Furthermore, the construction industry's labor-intensive nature and reliance on manual judgments present additional challenges in integrating these technologies into existing safety management practices (Duan et al. 2022).

Even though the construction industry has made strides in improving safety, adopting smart technologies successfully used in other sectors, such as healthcare, has received only little consideration (Xu et al. 2022). Thus, there is a clear need for more innovative approaches to address the risks associated with heavy machinery (Kassemi Langroodi et al. 2021). The goal of this paper is to explore the potential of advanced detection and classification systems for enhancing safety in the construction industry, with a particular focus on unsafe heavy machinery operations.

The structure of the rest of this document is arranged as follows: Sect. 2 is a summary of the background and related work relevant to the technological advancements related to our methodology. Section 3 offers a comprehensive description of our methodology. Section 4 discusses the computational complexity and cost–benefit analysis of the proposed framework. Section 5 focuses on the performance evaluation of the proposed approach. Finally, Sect. 6 is the concluding section that summarizes the findings of this research.

2 Background and related work

In this section, an overview of the background and related work on CV and ML-based construction safety management is provided, including methods for safety and compliance monitoring, object detection and tracking, and activity recognition and analysis.

2.1 Construction safety management through computer vision and machine learning

There has been notable progress in the field of CV over the last few years, marked by its ability to extract important information from various visual mediums, including videos, digital images, and closed-circuit television (CCTV) systems (IBM 2022). This growth is anticipated to persist going forward, expanding the scope and capabilities of visual data analysis (Martinez et al. 2019; Paneru and Jeelani 2021; Xu et al. 2021; Fang et al. 2019). CV performs a range of visual tasks, for instance, object detection (Guo et al. 2020), image classification (Kim et al. 2017), object and motion tracking (Li et al. 2023), action recognition (Lin et al. 2021), and human pose estimation (Xiong and Tang 2021).

Parallel to these advancements, the integration of CV technology and ML has revolutionized construction safety management, evolving from simple image processing to advanced, automated systems. By simulating human visual perception, CV assists in a variety of construction site operations (Huang 1996). They enhance safety and decision-making by detecting, monitoring, and predicting potential hazards (Martinez et al. 2019; Xu et al. 2021). The early phase of CV for enhancing safety in construction project management focused on the automated monitoring of construction sites through image and video data analysis. These initial efforts employed basic yet crucial methods such as object detection, motion tracking, and activity recognition, all aimed at identifying potential safety hazards. This approach represented a significant improvement compared to traditional, manual safety inspections, offering enhanced accuracy and efficiency. This early stage laid the foundation for a more sophisticated, data-driven methodology for monitoring and ensuring safety in the dynamic, often unpredictable conditions of construction sites (Seo et al. 2015). The continuously advancing integration of CV and ML contributed to construction safety and compliance monitoring, object detection and tracking, as well as activity recognition and analysis in the construction sector.

2.1.1 Safety and compliance monitoring

The integration of ML into construction safety has emerged as a transformative approach to enhancing site safety. It became evident that ML technologies offer robust solutions for hazard detection and risk management. These applications range from predictive modeling of potential safety breaches to the monitoring of construction activities. The integration of ML and deep learning (DL) algorithms with safety management allows for more precise, reliable, and context-sensitive safety interventions (Khallaf and Khallaf 2021; You et al. 2023).

Recent studies in this domain have predominantly focused on leveraging techniques involving ML as well as DL for safety and compliance monitoring. For instance, Lee et al. (2020) developed an event detection system using audio as a basis with the K-Nearest Neighbor (KNN) algorithm and integrating historical accident data along with project schedules for real-time hazard detection in construction sites. The proper detection and monitoring of personal protective equipment (PPE) is also a critical part of construction safety. A significant advancement involved the implementation of MobileNet and shallow Convolutional Neural Network (CNN) classifiers in a pose-guided anchoring scheme for the detection of PPE compliance, which demonstrated high accuracy for the detection of hardhats and safety vests (Xiong and Tang 2021). The exploration of You Only Look Once (YOLO) architecture-based DL models for real-time PPE detection demonstrated substantial accuracy in identifying essential safety gear (Nath et al. 2020). Another study utilized the Faster Region-based Convolutional Neural Network (Faster R-CNN) and Residual Network 50 with Feature Pyramid Networks (ResNet50 + FPN) models for human-object interaction recognition that effectively classifies worker-tool interactions using a specialized dataset to improve safety compliance checks in construction sites (Tang et al. 2020). A novel approach to hazard identification related to improper PPE utilization, combining OpenPose and YOLOv3, was presented, showcasing the effectiveness of DL-based models (Chen and Demachi 2021). Additionally, a framework for real-time hazard detection and worker localization, employing technologies like Mask R-CNN and the Robot Operating System (ROS), was also proposed to enhance safety in dynamic construction environments (Jeelani et al. 2021). The development of a PPE detection system for embedded devices integrated YOLOv5 and YOLOv3-tiny in a Dynamic Neural Network (DNN) architecture with fuzzy logic, optimizing operation in near-real-time with limited training data (Iannizzotto et al. 2021). Lastly, the introduction of the YOLO-EfficientNet model, a two-step approach for safety helmet detection, represented a significant stride in real-time safety management (Lee et al. 2023). While ML applications have been crucial for construction safety and compliance, they have also played a significant role in advancing object detection and tracking in construction.

2.1.2 Object detection and tracking

In the field of construction safety monitoring, object detection and tracking have emerged as critical components for enhancing safety and operational efficiency. An innovative approach was introduced by Guo et al. (2020), utilizing an Orientation-Aware Feature Fusion Single-Stage Detection (OAFF-SSD) network and Unmanned Aerial Vehicle (UAV) imagery. This DL network was able to accurately detect multiple construction vehicles in densely populated areas. The method, which employs techniques for extracting features at multiple levels and integrates them through an innovative fusion process, represents a significant improvement over traditional methods. Furthermore, Zeng et al. (2021) enhanced the detection and localization of machinery in macroscale construction sites through an improved YOLOv3 model coupled with a Grey Wolf Optimizer Improved Extreme Learning Machine (GWO-ELM). This approach has been particularly effective in diverse environmental conditions. For example, the model effectively identifies and localizes construction equipment from far-field videos. Similarly, a DL-based method was developed for multi-object tracking, which significantly improved the tracking performance through deep feature fusion and association analysis, employing techniques like R-CNN and Faster R-CNN for object detection (Li et al. 2023). Son and Kim (2021) integrated YOLOv4 and a Siamese network for real-time personnel detection and tracking, significantly reducing false alarms and enhancing safety in construction machinery operations.

In current years, real-time object detection frameworks often use lightweight models. Chen et al. (2023) introduced a lightweight face-assisted model (WHU-YOLO) for detecting welding masks, integrating a Ghost module with a Bi-directional Feature Pyramid Network (Bi-FPN) for object detection. The model efficiently reduced false positives and enhanced the accuracy of safety management. You Only Look Once and None Left (YOLO-NL) and an Augmented Weighted Bidirectional Feature Pyramid Network (AWBiFPN) were also presented, each offering groundbreaking advancements in object detection, including marine object detection. YOLO-NL employs a global strategy for dynamic label distribution and upgrades the Cross Stage Partial Network (CSPNet) and Path Aggregation Network (PANet) with gradient strategies and self-attention mechanism for enhanced object detection (Zhou 2023; Gao et al. 2023). Finally, Kim et al. (2023) developed a small object detection (SOD) system using YOLOv5, optimized for challenging detection environments, while Angah and Chen (2020) enhanced construction worker tracking through innovative use of Mask R-CNN and gradient-based methods.

These studies collectively shift toward more precise, efficient, and context-specific object detection methods in complex environments. However, implementing CV for safety management in off-site construction also needs more exploration and development. Current literature indicates that there is a distinct gap in the utilization of this technology for safety applications in off-site settings, especially regarding the risks from moving objects such as forklifts, cranes, and construction vehicles (Alsakka et al. 2023). The developments in object detection and tracking showcase the evolving complexity of construction safety monitoring. However, this progress naturally extends to other domains, including activity recognition and analysis.

2.1.3 Activity recognition and analysis

There has been a notable shift toward leveraging ML and DL to enhance activity recognition and analysis on construction sites. This advancement is evident across several studies. Various studies have focused on ML and semi-supervised learning implementations. Kassemi Langroodi et al. (2021) introduced a method named Fractional Random Forest (FRF) for construction equipment action recognition. This method proved to enhance the traditional Random Forest (RF) classifier through fractional calculus and demonstrated efficacy in smaller datasets. A study explored a semi-supervised learning approach that integrated teacher-student networks with data augmentation, significantly reducing the need for extensive labeled datasets in construction site monitoring (Xiao et al. 2021). Additionally, Sanhudo et al. (2020) utilized ML approaches, particularly KNNs and gradient boosting, in conjunction with wearable accelerometers to classify complex worker activities.

Advanced Neural Network (NN) models are often used for activity recognition. There was an image analytics framework proposed that combined CNNs and Long Short-Term Memory (LSTM) networks for recognizing actions and analyzing operations. The study utilized Faster R-CNN and Simple Online and Realtime Tracking (SORT) networks for object detection and object tracking, respectively (Lin et al. 2021). Jung et al. (2021) developed a 3D CNN-based framework for action detection within videos of heavy equipment, using YOLOv3 for object detection. Similarly, Jeong et al. (2024) presented a contextual multimodal approach, integrating audio-visual, spatial, and cyclical temporal data, to recognize concurrent activities of equipment in tunnel construction.

Other ML-based, innovative detection and estimation techniques were also developed. Ghelmani and Hammad (2022) employed a self-supervised contrastive learning method, Contrastive Video Representation Learning on Limited Dataset (CVRLoLD), for recognizing construction equipment activities on limited datasets. Furthermore, a technique for 3D pose estimation of construction machinery was introduced using single-camera images integrated with virtual models to enhance pose estimation accuracy (Kim et al. 2023).

2.1.4 Limitations of current studies

The field of CV and ML-based construction safety management has witnessed significant advancements in recent years. However, despite the progress made, there are still several limitations and challenges that need to be addressed to enhance the reliability, accuracy, and applicability of these technologies in diverse construction environments (Seo et al. 2015; Lee et al. 2020; Guo et al. 2020; Zeng et al. 2021).

One of the primary challenges is the lack of comprehensive, extensive, and well-annotated datasets specific to the construction domain (Seo et al. 2015; Paneru and Jeelani 2021). This issue adversely impacts the precision and reliability of predictive models, as the absence of construction-specific datasets hinders model training and accuracy in construction settings (Xiong and Tang 2021). Similar dataset limitations have been observed in various applications, such as real-time worker localization and hazard detection (Jeelani et al. 2021), PPE detection (Iannizzotto et al. 2021), and sound recognition accuracy (Lee et al. 2020). The creation of diverse and extensive construction machine image datasets is challenging due to limited online resources and the intensive nature of data collection (Xiao et al. 2021). Moreover, data quality and diversity issues further complicate this research gap, as evidenced by the impact of complex construction environments and reliance on historical data on ML model effectiveness (Lee et al. 2020).

Recent efforts to address these dataset challenges include the development of the Small Object Detection dAtaset (SODA) benchmark (Duan et al. 2022), self-supervised learning methods for equipment activity recognition (Ghelmani and Hammad 2022), and the generation of synthetic datasets (Barrera-Animas and Davila Delgado 2023). However, these approaches still face limitations in scope, annotation depth, and generalizability, and they require further validation in diverse scenarios (Duan et al. 2022; Ghelmani and Hammad 2022; Barrera-Animas and Davila Delgado 2023). Additionally, the Moving Object in Construction Sites (MOCS) dataset, introduced as a new benchmark for object detection in construction sites, is limited by its focus on a limited number of object categories, lack of detailed annotations, and limited diversity (An et al. 2021).

Environmental and site-specific challenges also pose significant obstacles to the deployment of CV and ML technologies in construction safety management. The detection of small objects in surveillance footage is often complicated by their relative size and positioning (Zeng et al. 2021), while varying environmental and lighting conditions directly affect object detection accuracy (Alsakka et al. 2023). Limitations in camera view ranges and the complexity of object detection in diverse settings further emphasize the need for adaptable ML models that can function effectively in different construction environments (Paneru and Jeelani 2021). Real-time processing demands, especially with high-resolution imagery, add operational constraints, necessitating model optimization for different site conditions (Jeong et al. 2024). The complexity of deploying DL models for real-time detection in construction environments, particularly on UAVs, is also acknowledged as a significant limitation (Guo et al. 2020).

Technical and operational challenges, primarily linked to model complexity and integration, also hinder the effective implementation of construction safety monitoring systems. Pose estimation models face accuracy issues when trained on non-specific datasets (Xiong and Tang 2021), while automated detection systems for workers and equipment struggle with object scale and varying environmental conditions (Fang et al. 2018). Lightweight object detection models encounter limitations in generalization and robustness (Chen et al. 2023), and real-time detection models grapple with dataset diversity and environmental constraints (Zhou 2023). Multi-object tracking models require integrated detection and tracking frameworks to enhance accuracy (Li et al. 2023), and models tracking multiple workers on construction sites are hindered by occlusions, environmental factors, and hardware limitations (Angah and Chen 2020). Small object detection systems face challenges in real-time processing and accuracy across different construction sites (Kim et al. 2023), and integrated detection and tracking systems still face limitations in dataset diversity and real-world applicability (Zhu et al. 2017).

Object recognition and tracking in construction safety monitoring are characterized by various technical challenges. Specific datasets are found to limit the effectiveness of identifying irregular construction activities, with performance in diverse environments remaining uncertain (Lin et al. 2021). Automatic hazard identification models face challenges in real-time implementation, scalability, and data diversity (Chen and Demachi 2021), while 3D pose estimation methods using single-camera images are hindered by dataset diversity and sensitivity to equipment color and shape (Kim et al. 2023). Contextual multimodal approaches for equipment activity recognition in tunnel construction are constrained by computational demands and noise in data (Jeong et al. 2024), and challenges are faced in detecting and classifying small objects in crowded images for helmet detection (Lee et al. 2023). Activity analysis methods struggle with environmental variability and real-time processing efficacy (Roberts and Golparvar-Fard 2019), and real-time detection of PPE through DL models faces hurdles in generalizing to diverse construction environments and adapting to varying conditions (Nath et al. 2020).

Data analysis limitations also challenge object recognition and tracking in construction safety monitoring. Temporal analysis reveals variations in accident frequencies, but small sample sizes and outdated datasets limit the applicability of these findings (Jung et al. 2022). Human-object interaction models, while effective, are constrained by dataset variety and real-time application challenges (Tang et al. 2020), and FRF methods for activity recognition are limited by sensor quality and dataset representativeness (Kassemi Langroodi et al. 2021). PPE detection systems for embedded devices face accuracy issues in crowded images and small object detection (Iannizzotto et al. 2021), and sound detection systems are impeded by environmental complexities and reliance on historical data (Lee et al. 2020). Additionally, there are several challenges in classifying construction activities due to data acquisition biases and the complexity of the tasks (Sanhudo et al. 2020).

To address the aforementioned challenges and limitations in the current state of CV and ML applications for construction safety management, this study proposes a novel approach that integrates advanced techniques in a cascaded manner. The proposed framework aims to overcome several limitations of existing studies by utilizing an extensive dataset, incorporating super-resolution techniques, employing temporal and spatial object detection, and implementing a hierarchical classification system.

The paper presents several key contributions to the field of automated safety management within the construction industry. First, the study utilized a diverse range of YouTube videos to create a dataset for detecting unsafe operations of heavy construction machinery. The dataset contains actions from various scenarios and in diverse environments. The meticulous data preparation process ensures that the dataset is standardized and the segments are accurately annotated, thereby enhancing the reliability and applicability of the ML models trained on this dataset.

Second, the use of the Super-Resolution Generative Adversarial Network (SRGAN) marks a significant advancement in the processing of low-resolution surveillance footage, essential for accurate object detection in varying environmental conditions. This approach allows the effective detection of small objects and provides enhanced visual quality of the input data for precise action detection.

Third, temporal and spatial object detection using the RT-DETR model was developed for enhanced object detection in dynamic surveillance environments. This enables the near-real-time processing of the surveillance footage, in addition to the computational efficiency and accuracy of object detection.

Fourth, a cascade learning approach, integrating SRGAN and RT-DETR, was developed for achieving high-resolution object detection in challenging surveillance footage. This methodology enhances detection precision and minimizes false positives, thereby improving surveillance efficacy and resilience in construction environments.

Finally, a detailed hierarchical classification was implemented for effective safety assessment and action-type classification. This system allows for near-real-time monitoring and response, effectively detecting and classifying objects and actions for proactive safety management on construction sites.

3 Methodology

In the methodology section, we primarily elaborate on the methods used for data collection and preparation, focusing on collecting diverse videos from Youtube to create a specialized dataset for unsafe action detection of heavy construction equipment. The section then explains the system architecture of the approach, comprising the SRGAN, RT-DETR, self-DIstillation with NO labels (DINOv2), and Dilated Neighborhood Attention Transformer (DiNAT) models, highlighting their roles in enhancing the image resolution and facilitating temporal and spatial object detection in dynamic surveillance environments.

3.1 Data

3.1.1 Data collection

Our research utilizes a dataset curated from a variety of YouTube videos. This diverse collection of videos showcases a variety of actions in different scenarios performed by five types of heavy construction equipment: backhoe, bulldozer, dump truck, excavator, and wheel loader. These equipment types were deliberately chosen due to their widespread use across various construction sites and their involvement in a wide range of construction activities. Our unsafe action detection dataset aims to encompass a balanced ratio of both safe and unsafe actions, mirroring real-world scenarios where machinery might be utilized correctly or might pose potential safety hazards. The chosen safe and unsafe action categories for each type of construction equipment, including backhoes, bulldozers, dump trucks, excavators, and wheel loaders, are depicted in Table 1. This classification serves as an integral component of the dataset, where actions are distinctly segmented into actions with safe safety status, which describes standard operational procedures such as loading, moving, and grading, and unsafe safety status, which highlights high-risk operations like operating near utilities, operating near power lines, or operating on unsafe terrain. This classification played a crucial role in the annotation process of the study, providing a structured basis for the subsequent training of the cascade learning model aimed at improving on-site safety measures.

In the process of dataset development that could substantiate the effectiveness of our proposed ML model, we adopted a methodical approach to video selection. Each video was carefully examined to ensure it depicted distinct actions, such as grading, hauling, or instances of operation without proper personal protective equipment. Given the niche nature of our research material, our selection process was purely random and without bias, driven by the relevance of the content to our research objectives.

Figure 1 displays a series of actions performed by a backhoe, a piece of construction equipment integral to various operations on a job site. This figure is a compilation of image frames extracted from video clips in our dataset, showcasing the backhoe engaged in diverse tasks such as material handling, digging, and grading. These images help illustrate the backhoe's various functions and give insight into the categorized safety statuses of the maneuvers that have been systematically classified into safe and unsafe action categories within our study.

Figure 2 presents the visual assortment that illustrates the bulldozer in action. As depicted in Fig. 2, the bulldozer is involved in performing a diverse range of tasks on construction sites. Similar to Fig. 1, the images shown in the figure are carefully selected video frames, capturing the bulldozer as it engages in various operations classified under safe actions like land clearing, grading, and moving, in contrast to unsafe scenarios where the equipment is shown amidst potential hazards such as tipping over or operating in the proximity of falling debris.

Figure 3 depicts a collection of the operational spectrum of the dump truck. Through this figure, we exhibit a wide range of activities ranging from the routine, such as transporting materials, to moments that carry an inherent risk, like overheight collisions or unsafe dumping events.

Excavators, often central to construction and demolition phases, serve a crucial role in versatile construction operations, as presented in Fig. 4. The image frames in Fig. 4 showcase the nuanced dynamics of excavators at work, ranging from digging to demolition. The imagery also shows the unpredictability of emergency response scenarios such as unsafe land clearing, unsafe demolition, or striking underground utilities.

The dynamic range of operations carried out by wheel loaders is encapsulated in Fig. 5, as this equipment is critical in the construction and material handling domains. The figure depicts the wheel loader's actions, divided into safe and unsafe operational procedures such as material handling, loading, grading, and dumping material, as well as inherently risky instances, including vehicle fire, struck-by accidents, and unsafe operation of the equipment.

The illustrative content in Figs. 1, 2, 3, 4 and 5 of the manuscript offers a visual reference for the various action categories attributed to each type of construction equipment. These figures are sourced from the actual video clips within our dataset and are intended to provide tangible examples of the actions identified and categorized through our annotation process. They suggest the potential real-world applicability of our dataset for operational recognition and classification in the context of construction equipment usage.

The extraction of video frames was executed with precision, employing manual techniques for cutting videos to isolate the desired segments. We supplemented this manual effort with a Python script specifically designed to automate the frame extraction process at a consistent frame rate. Under standard conditions, frames were extracted at 1 frame per second (fps). However, in instances where the total length of the videos was insufficient to provide a representative sample ratio of a given action category, we adjusted the frame rate to 2 or 3 fps. This ensured that each category was equitably represented in the dataset, thereby facilitating the unbiased training of our model.

3.1.2 Dataset preparation

Once the relevant video clips were isolated, the subsequent stage involved a meticulous data preparation process. The primary goal was to establish uniformity across the dataset, thereby simplifying the model training and validation phases. We standardized the resolution and frame rate of all video segments to create a consistent baseline for input data. This standardization was critical to eliminating any variances that could arise from the diverse origins and formats of the source videos.

The annotation of video segments was executed with the aid of the Computer Vision Annotation Tool (CVAT), a sophisticated tool designed for the task of video annotation. The process began with the delineation of objects within each frame using bounding boxes, followed by the categorization of the safety status of the depicted action, designating it as either safe or unsafe. Subsequently, the specific type of action was labeled, with terms corresponding to the taxonomy of construction equipment operations such as backfilling or material handling. This taxonomy ensures consistency and relevance across the dataset.

Our finalized dataset, after meticulous curation and annotation, comprised approximately 11,064 images reflecting a diverse range of actions and scenarios, which increased to 44,295 images after applying data augmentation techniques. Additionally, the original video dataset included 1,528 videos. To facilitate the development and validation of our ML model, we partitioned the data, creating training, validation, and testing datasets, adhering to the conventional 80–10-10 split ratio. This division was strategically implemented to optimize the model's performance and ensure its robustness.

3.1.3 Data processing

Prior to deploying the chosen ML models, namely SRGAN, RT-DETR, DINOv2, and DiNAT, data processing steps were implemented to ensure optimal input quality and format. The data processing workflow encapsulated several steps, including the preparation of distinct datasets optimized for specific tasks: the SRGAN dataset for image resolution enhancement and the object detection and classification dataset tailored for detecting construction equipment and classifying their safety statuses and specific actions.

The SRGAN dataset, assembled separately from the detection and classification dataset and specifically created from high-resolution construction images of construction machinery, underwent extensive data augmentation. A broad spectrum of augmentation techniques was applied, including horizontal and vertical flipping, rotation, brightness adjustment, contrast enhancement, saturation adjustment, hue modification, affine transformations, grayscale conversion, Gaussian blurring, sharpness adjustment, autocontrast, equalization, and perspective transformations. These augmentations, applied using a Python script leveraging the PIL library and PyTorch's torchvision transforms, significantly expanded the variety of visual conditions the model could learn from, thereby improving its generalizability.

For the object detection and classification tasks, we prepared two separate versions of the dataset, each aligned with its specific use case—object detection or hierarchical classification. The images in the object detection version of the dataset were resized to a uniform dimension of 640 × 640 pixels, and pixel values were normalized to a range of 0 to 1 to minimize input variation and accelerate model training. Auto-orientation adjustments ensured that images were correctly aligned for consistent model input. To further improve the dataset quality and avoid overfitting by enhancing the robustness of the model, image augmentation techniques such as flips, rotations, grayscale conversion, hue adjustment, saturation adjustment, brightness adjustment, and exposure adjustment were applied. These augmentations were designed to produce five augmented outputs per training example, effectively increasing the dataset size and diversity while addressing class imbalance through selective augmentation of underrepresented classes. For the classification version of the dataset, the images were resized to a standard resolution of 224 × 224 pixels, and normalization was applied using predefined mean and standard deviation values to adjust the images' color channels to a consistent scale. For this hierarchical classification dataset version, geometric transformations like random rotations and flips were implemented to mimic the diverse conditions of construction regions while preserving the dataset's hierarchical structure to maintain contextual accuracy for safety statuses and activity types. This augmentation process significantly impacted the model's training and validation phases, leading to reduced misclassification rates and heightened accuracy in distinguishing complex subclass relationships.

Post-augmentation, the annotations, which include bounding boxes and action categories, were converted into a machine-readable vector format. This vectorization is crucial for the RT-DETR model to accurately interpret the location and class of each action within the images. The processed images were then batched into subsets suitable for the model's input requirements. Batching is a crucial step that balances computational efficiency with the model's learning capability, ensuring that each iteration of the training process receives a representative sample of the dataset. Following the batch preparation, the dataset was systematically split to create training, validation, and testing sets. This separation is pivotal for unbiased model evaluation and generalization. The final processed images, now in batches, were fed into the SRGAN and RT-DETR models. SRGAN processed the images first, enhancing the resolution, followed by the RT-DETR model, which performed the near-real-time detection and classification of the actions.

These data processing steps were carefully chosen to align with the objective of our study, ensuring that both of the models used received high-fidelity inputs for effective training and subsequent action detection and classification tasks. The creation of separate versions of the dataset for object detection and classification, along with targeted preprocessing and augmentation techniques, enhances the models' performance and generalizability.

3.2 System Architecture

The cascade learning approach simplifies object detection in low-quality CCTV footage by smartly using four steps involving SRGAN, RT-DETR, DINOv2, and DiNAT models. First, SRGAN helps turn low-resolution images into clearer, high-resolution versions by adding missing details. These clearer images are then passed to the RT-DETR model, which is good at spotting objects in a sequence of frames by understanding their motion and changes over time. Following, the DINOv2 model classifies the action depicted in the image by safety status, and DiNAT further classifies it into specific action categories. By putting these models together in a four-step process, this method improves object detection and hierarchical classification in CCTV footage without using too much computer power. So, the approach aims to deliver better detection and classification accuracy in near-real-time surveillance by cleverly combining the strengths of four specialized DL models, maintaining a practical and straightforward application in various surveillance situations.

3.2.1 SRGAN for resolution enhancement

The SRGAN model (Ledig et al. 2016) is employed in the proposed framework to enhance low-resolution images captured by construction site surveillance systems. SRGAN leverages a DL approach to transform low-resolution images into their high-resolution equivalents while preserving essential textural information and image integrity. The system is divided into two key components: a generator, built upon a ResNet architecture, and a discriminator, structured as an advanced CNN.

The generator's structure is initiated with a 9 × 9 convolutional layer, serving as the entry point for the input image. This is followed by a series of 3 × 3 convolutional layers, augmented with batch normalization and Parametric Rectified Linear Unit (PReLU) activation, forming the core of the generator's upscaling function. Integral to this process are the residual blocks, designed to fine-tune the image's details and incrementally upscale its resolution. The use of PixelShuffle operations, crucial for achieving a 4 × upscaling factor through sub-pixel convolution, ensures the image is upscaled effectively while maintaining its original content integrity. The architecture concludes with a final convolutional layer that outputs the super-resolved image at a resolution of 256 × 256 pixels. The discriminator's function is to distinguish between the generator-produced super-resolved images and genuine high-resolution images. It begins with a 3 × 3 kernel size convolutional layer, progressively increasing in depth and complexity through subsequent layers, each featuring batch normalization. The architecture advances to a fully connected layer and ends with a single neuron that evaluates the authenticity of the input image. Integrating the Visual Geometry Group-19 (VGG-19) model, equipped with pre-trained weights, improves the discriminator's feature extraction capabilities, aligning the training process with perceptual quality standards.

For training our SRGAN model, we chose a two-stage training strategy, spanning 200 epochs, to enhance its capability to upscale images from construction surveillance footage. The first phase involved 100 epochs of training on a generalized, augmented dataset to establish broad feature recognition capabilities. This was followed by a second, more focused phase of another 100 epochs, which concentrated on datasets specifically composed of construction site and equipment imagery, to refine the model's performance for our target application. The batch size was chosen as 32 to balance the computational efficiency and the accuracy of gradient updates. Additionally, our training protocol included saving checkpoints at every epoch, facilitating the preservation of model states for potential resumption of training, and ensuring stability against data loss. The learning rate for both the generator and discriminator was initially set at 0.0001, allowing for substantial model adjustments during the early training phases. As training progressed, particularly during the fine-tuning phase, we decreased the learning rate to 0.00001 to enable more precise weight adjustments. We also introduced a learning rate scheduler that systematically reduced the learning rate by a factor of 0.1 every 30 epochs, enhancing the model’s ability to capture detailed and high-fidelity textures essential for high-quality image output. The Adam optimizer was selected for its adept handling of sparse gradients and effectiveness in large-scale applications, ensuring efficient and effective optimization throughout the training process. The model's loss function was a composite of Binary Cross-Entropy (BCE) for adversarial accuracy, Mean Squared Error (MSE) for content integrity, and a VGG-inspired perceptual loss to ensure the upscaling process retained the original image's texture and quality nuances. To validate SRGAN performance, our construction equipment dataset was employed, assessing the model's ability to enhance low-resolution images to a higher resolution. Quantitative evaluations were conducted using Peak Signal-to-Noise Ratio (PSNR) alongside qualitative visual comparisons to gauge the model's effectiveness in improving image clarity and detail.

The SRGAN model is integrated into the analytical framework to improve the quality of images from construction site CCTV footage, which contributes to the overall performance of the action detection algorithms. The architecture of the SRGAN model is illustrated in Fig. 6.

The SRGAN algorithm (Algorithm 1) presents an approach to enhance low-resolution images into high-resolution counterparts, which is crucial for improving construction site imagery. By employing the generator network to upscale images and the discriminator network to refine the output toward indistinguishability from high-resolution originals, the algorithm achieves remarkable fidelity and detail. In the SRGAN framework, the process begins with the initialization of the two networks: the generator (G) and the discriminator (D), each with their respective weights, θG and θD. Initially, the generator undergoes pre-training, where it learns to upscale low-resolution images (LR) to super-resolution images (SR) by minimizing the content loss between the generated SR images and their high-resolution (HR) counterparts. This phase ensures that the generator can produce visually plausible images. Subsequently, the model enters adversarial training, a phase where the generator and the discriminator iteratively improve through competition: the G network aims to produce increasingly realistic images (minimizing both content loss and adversarial losses), while the D network tries to discern the generated images and the real HR images. This adversarial process is vital for enhancing the perceptual quality of the super-resolved images, making SRGAN an effective tool for our objective.

Algorithm 1

3.2.2 RT-DETR for equipment detection

The Real-Time Detection Transformer (Lv et al. 2023) innovatively advances object detection capabilities, especially for immediate analysis crucial in dynamic settings. This evolution of the DEtection Transformer (DETR) (Carion et al. 2020) framework introduces essential enhancements such as an efficient hybrid encoder, leveraging Attention-based Intra-scale Feature Interaction (AIFI) and CNN-based Cross-scale Feature-Fusion (CCFF) module to efficiently manage multi-scale features. The RT-DETR Extra-Large (RT-DETR-X) version, tailored for swift detection and classification of construction equipment, integrates seamlessly with the SRGAN to significantly improve image resolution and detection accuracy.

RT-DETR-X maintains a sophisticated architecture, beginning with an initial stem (HGStem) that escalates channel depth while ensuring minimal information loss. Hierarchical grouped blocks (HGBlock) further enrich feature maps, optimizing the model for detailed spatial resolution. Incorporating IoU-aware query selection and temporal embedding from preceding frames enhances the model's responsiveness to object motion and temporal changes, crucial for dynamic scene analysis.

The training of RT-DETR-X was conducted over 100 epochs using our carefully annotated equipment detection dataset of 44,295 images to cover a broad range of construction equipment. This phase utilized advanced computational resources, including an NVIDIA GeForce RTX 3090, optimizing the process with the AdamW optimizer for stability and efficiency. Loss functions such as Generalized-IoU (GIoU), classification loss (Cross-Entropy Loss), and L1 loss were employed to refine the model's precision in detection, classification, and localization.

The RT-DETR model's development benefits from the integration with SRGAN, as the upscaled high-definition images provided by SRGAN complement RT-DETR-X's detection capabilities. This synergy allowed RT-DETR-X to process enhanced imagery, thereby improving its accuracy and efficiency in object detection and classification within construction environments. The validation process, involving 1,097 images, demonstrated significant improvements in detection accuracy, as indicated by metrics like mAP50 and mAP50-95.

The RT-DETR-X (Algorithm 2) represents a state-of-the-art approach for real-time object detection within video streams, specifically tailored for dynamic and complex environments. At its core, the algorithm initializes with a model (M), equipped with weights (ω), to sequentially process video frames (F) through temporal feature extraction. This initial step captures the temporal context of each frame, enhancing the accuracy of object detection by considering the continuity inherent in video data. Afterward, the algorithm employs these temporally-rich features to identify and annotate objects across a predefined set of classes (C), leveraging the abilities of the model to accurately detect and annotate objects in real time. Furthermore, the model incorporates a real-time optimization mechanism that allows for the adaptation of its weights in response to new incoming frames. This feature makes sure that the model maintains high detection performance in different environments where conditions may rapidly change.

Algorithm 2

3.2.3 DINOv2 for enhanced safety classification

The DINO (Oquab et al. 2023) model was particularly designed for self-supervised CV applications. The DINO framework itself focuses on leveraging self-distillation, where a model learns to predict its own output under slightly different views of the same image, to enhance the learning of robust and versatile visual features without relying on labeled data. Our DINOv2 implementation was tailored for the nuanced classification of construction actions into safe or unsafe categories.

The architecture of DINOv2 is built on a linear classifier wrapper around the DINO Vision Transformer (ViT) backbone, optimizing self-supervised feature extraction. Its patch embedding layer partitions the input image into a grid of non-overlapping patches, projecting each into a high-dimensional vector space. This facilitates a concentrated analysis of local features within patches, while the self-attention mechanism integrates these details on a global scale. The architecture also features 12 transformer blocks, each with layer normalization and memory-effective attention mechanisms, enhancing the model’s focus and contextual understanding of image patches.

DINOv2's training employs a self-supervised methodology, initiated by resizing images to a uniform dimension (224 × 224) and normalizing them. The safe-unsafe dataset, pivotal for training and validation, ensures the model's capability to generalize from its training data. Customization of the model to match the dataset's unique characteristics involves adjusting the output features of the classification layer to align with the distinct classes present in the dataset. For the dataset division of training, validation, and testing sets, we chose a commonly used 70–20-10 ratio.

The training loop, powered by BCE loss and the Adam optimizer, iteratively refines the model's parameters across 100 epochs. For validation, the validation dataset was employed to monitor the performance and make potential training refinements. This phase provided insights into how accurately our model performs on unseen data.

In the proposed cascaded approach, SRGAN is utilized to upscale low-resolution surveillance imagery, which is then processed by subsequent models in the framework, including RT-DETR and DINOv2, to cooperatively elevate safety classification efficacy. RT-DETR then uses the image to identify and localize construction equipment within dynamic site environments. The localized objects are subsequently passed to DINOv2, which classifies the equipment actions based on operational safety. This integration facilitates a streamlined workflow where each model's output directly influences the subsequent model's input, ensuring precise, near-real-time safety monitoring.

3.2.4 DiNAT for action classification

The DiNAT (Hassani and Shi 2022) model represents an advanced adaptation of the transformer architecture and was made for detailed spatial understanding. The basis of the DiNAT is a Neighborhood Attention module that enables the model to focus on local neighborhood features, thereby capturing finer spatial relationships than traditional global self-attention mechanisms allow.

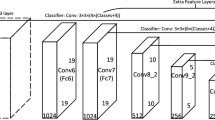

The model architecture begins with an embeddings layer, which employs a sequence of convolutional operations to downsample the input image and create a set of initial feature representations. These features are then normalized using layer normalization and passed through the dropout layer to promote robustness against overfitting. The core of DiNAT is the encoder, which consists of multiple so-called stages, each containing a series of DiNAT layers. Within each of these layers, a layer norm normalizes the input features, preparing them for the attention mechanism. The Neighborhood Attention (NA) module then computes attention within local regions, allowing the model to hone in on specific spatial patterns and details. The intermediate layer within each DiNAT layer performs a feed-forward operation, expanding the feature dimensionality before applying a Gaussian Error Linear Unit (GELU) activation function for non-linearity. Following the attention and feed-forward operations, the features are once again normalized by another layer normalization and passed through the output layer. This layer projects the features back to their original dimensionality and applies dropout to regulate the flow of information. A unique aspect of DiNAT is the drop-path mechanism, a form of structured dropout that randomly drops entire paths in the computational graph to enhance generalization and prevent overfitting. As the information flows through the stages of the encoder, it is progressively downsampled by the downsampler, which uses convolution operations to reduce the spatial resolution while increasing the channel dimension. This hierarchical processing allows DiNAT to operate efficiently on high-resolution inputs by reducing computational complexity while preserving essential information. The model ends with a global layer normalization and a 1D adaptive average pooling layer, which aggregates the features across the spatial dimensions, producing a compact and informative representation suitable for downstream tasks. Both the DiNAT and DINOv2 model architectures are depicted in Fig. 7.

Training the DiNAT model involves preprocessing and normalizing the dataset, followed by the division into batches. The training loop utilizes cross-entropy loss and the Adam optimizer for weight updates over several epochs, with hyperparameters like learning rate and batch size carefully chosen to balance learning speed and stability. Performance metrics are monitored during training to assess the capability of the model to accurately segment and label different regions within the images. During validation, DiNAT's performance is evaluated on unseen data to evaluate its generalization capabilities. The validation phase helps identify overfitting and guides hyperparameter tuning for optimal model performance. The validation metrics provide insights into the model's predictive power, with the primary goal being a balance between low validation loss and high accuracy, indicative of a well-generalized model.

3.2.5 Cascaded SRTransformer framework

The proposed approach, Cascaded SRTRansformer, is built on a four-stage cascade learning model. The cascade learning approach provides high-resolution object detection and classification amidst noisy and often suboptimal CCTV footage. The framework innovatively combines the capabilities of SRGAN, RT-DETR-X, DINOv2, and DiNAT models to address the multifaceted challenge of enhancing image resolution, detecting and classifying construction equipment, and assessing operational safety from CCTV footage of construction sites. This sequential framework is designed to exploit the strengths of each model, thereby providing a detailed analysis of construction equipment actions and their safety implications.

The first step in our framework is SRGAN preprocessing. The framework initiates with SRGAN, tasked with preprocessing low-resolution CCTV images to improve their resolution, thus preparing them for detailed analysis. By upscaling images to a uniform size of 256 × 256 pixels and applying Gaussian blur for noise reduction, SRGAN ensures the retention of crucial textural details, laying a solid foundation for accurate subsequent object detection.

In the second stage, the enhanced images from SRGAN are then processed by RT-DETR-X, which utilizes its real-time detection capabilities to identify and classify construction equipment within the images. The model's ability to detect finer details, thanks to the improved resolution, allows for precise classification of equipment into predefined categories. RT-DETR-X's training on a specialized equipment detection dataset guarantees its effectiveness in recognizing and categorizing equipment accurately. Following the identification and classification of equipment, as the third stage, DINOv2 assesses the operational safety of each detected action of the construction machinery. This model analyzes the equipment's context, usage, and surrounding environment to determine if its action is safe or unsafe. The integration of DINOv2 adds a critical layer of safety analysis, leveraging its training on a dataset specifically labeled for safety features. The cascaded framework ends with DiNAT, which further analyzes the actions performed by the classified equipment. DiNAT's role is to dissect the scene into more granular action categories, informed by inputs pre-classified by DINOv2. This final step utilizes a dataset rich in construction activities to enable DiNAT to distinguish specific actions, enhancing the depth of the framework's analytical capabilities.

The integration of these models into a cascaded system presented challenges such as ensuring data compatibility and maintaining near-real-time processing efficiency. To address these, the framework was optimized through careful parameter tuning and the implementation of lightweight model versions where feasible. The emphasis was placed on reducing latency and enhancing accuracy by selecting efficient architectures and fine-tuning domain-specific datasets. Error management was also a critical component, with robust error-checking and fallback mechanisms implemented at each stage to preserve the integrity of the overall system.

The Cascaded SRTransformer framework (Fig. 8) offers a potential contribution to automated safety management within the construction industry. By systematically enhancing image resolution, detecting and classifying equipment, assessing safety, and identifying specific actions, the framework provides a detailed view of potential hazards on construction sites. As a result, the framework can potentially contribute to the development of more effective safety protocols.

We introduce a multi-stage framework (Algorithm 3) for enhancing construction site safety and efficiency through advanced video analytics. Initially, the algorithm applies SRGAN to each frame from CCTV footage, improving the image quality and detail, thereby enabling more accurate object detection. Following this, the object detection model, initialized with the trained weights, is deployed for precise detection of various types of construction equipment within these super-resolved frames. Subsequently, the algorithm employs a hierarchical classification approach: initially determining the safety status (safe or unsafe) of detected objects using the DINOv2 model, followed by a more granular analysis of unsafe actions through the DiNAT model, categorizing them into specific types of actions. This classification enables a deeper understanding of safety violations and equipment misuse on construction sites, facilitating immediate and targeted interventions.

Algorithm 3

3.2.6 Experimental setup

The experimental setup employs a combination of state-of-the-art hardware and software to facilitate the intensive data processing and model training tasks. The research was conducted using a high-performance computational environment, as detailed in Table 2.

The experimental framework was powered by an AMD Ryzen Threadripper 3990X CPU with 64 cores, optimized for multi-tasking and efficiency. It featured 256 GB of DDR4 Reg-ECC RAM for robust data handling and a 1 TB Samsung SSD that ensured rapid data access. Visual and ML computations were supported by an RTX 3090 24GB VGA, while the ASUS ROG ZENITH II EXTREME ALPHA motherboard provided necessary high-speed connectivity. The entire system was powered by a reliable FSP HYDRO 1200W 80 + Platinum power supply, ensuring stable performance under high loads.

4 Discussions

In the discussion section, we explore the computational complexity and cost–benefit analysis of the Cascaded SRTransformer, evaluating its operational efficiency and economic impact on enhancing construction site safety through improved object detection and action classification.

4.1 Computational complexity

In the computational complexity analysis of the proposed framework, we assessed each component individually as well as their integration into the entire system. This analysis considers the unique set of computational and memory demands of each component, which influence the overall efficiency of the cascade learning architecture employed in the detection and classification of unsafe operations in construction environments. In the assessment of the proposed framework, several metrics were selected to provide a thorough analysis of the system's performance and resource utilization. These metrics—inference time, resident set size (RSS), virtual memory size (VMS), and giga floating point operations (GFLOPs)—are important for assessing different aspects of computational complexity and operational load within the processing environment.

Inference time measures the duration required to process a single instance through the model. This is fundamental for applications where rapid data processing is critical and provides insight into the responsiveness and speed of the computational framework. Memory utilization of the processes was measured through the RSS and VMS. RSS reflects the amount of memory a process has occupied in the main memory (RAM), excluding the memory that is swapped out. VMS, on the other hand, includes the total amount of virtual memory allocated by the process, which includes both used physical memory and swapped-out space. Both of these metrics help in evaluating the scalability and memory efficiency of the models for deployment in systems with limited memory resources. The GFLOPs quantifies the computational complexity in terms of the number of billion floating-point operations performed per second, which allows for a direct assessment of the processing power required by the framework, providing a basis for comparing the computational demands of different components and configurations within the system.

The evaluation of the framework's computational complexity is summarized in Table 3, which outlines the performance metrics for each component within the system. This table aims to provide a clear overview of the computational and memory demands essential for assessing the efficiency of the framework in practical deployment scenarios.

The data presented in Table 3 reflect the individual and collective computational profiles of the components. The SRGAN, while handling the resolution enhancement, demonstrates significant computational intensity, with an average inference time of 0.80639 s and a GFLOP count indicative of high computational demand. In contrast, the RT-DETR, despite its advanced multi-scale processing capabilities, has a much shorter inference time but uses significantly more memory, both in terms of RSS and VMS. Furthermore, the computational architectures of DINOv2 and DiNAT exhibit significantly reduced resource utilization, as indicated by their minimal RSS and VMS, as well as lower GFLOPs. These attributes highlight the models' capacity to operate efficiently under constraints of reduced computational power while still achieving high levels of processing speed. The overall framework combines these individual components, resulting in a cumulative inference time of 1.00539 s and substantial GFLOPs. The memory requirements, as shown by the RSS and VMS, suggest a heavy reliance on system resources. This is justified by the advanced capabilities and high performance of the integrated framework in operational environments.

Optimizing memory-intensive models like RT-DETR and SRGAN involves implementing memory management strategies that can reduce both RSS and VMS without compromising computational efficacy. Techniques such as memory-efficient programming, optimized caching mechanisms, and advanced data structure management can significantly mitigate high memory demands. Additionally, applying model compression techniques like pruning and quantization can decrease the memory footprint, making the model more amenable to environments with strict resource limitations. The RT-DETR model's extensive resource utilization could be optimized through the refinement of its self-attention mechanisms, potentially reducing computational redundancy. Empirical studies, such as those by Hu et al. (2021), have demonstrated that model pruning, quantization, and the application of knowledge distillation strategies can significantly reduce computational demands without compromising performance. Moreover, enhancing the SRGAN component with a lighter architecture could maintain high-quality image upscaling while reducing GFLOPs.

The system-wide enhancement should also be addressed, focusing on optimizing data throughput to reduce input and output operations that can cause bottlenecks, and the inter-component data flow within the framework can help minimize latency. Employing in-memory computing solutions can accelerate data access speeds, and optimizing the inter-component data flow can substantially reduce processing delays, making it ideal for deployment in real-time settings (Rotem Ben-Hur et al. 2020).

4.2 Cost–benefit analysis

To perform a cost analysis based on the computational complexity of the framework, we must consider several key factors for the estimation. Based on the GFLOPs and memory usage, the cloud computing costs can be estimated. These represent the costs of running this framework on cloud platforms like Amazon Web Services (AWS), Azure, or Google Cloud. Besides the cloud computing costs, operational costs and hardware costs also need to be considered when approximating the total deployment costs. The operational costs include the cost of electricity if run on-premises and the required hardware maintenance. As the deployment of this computational framework necessitates new hardware, the initial acquisition costs must be carefully considered as well.

Cloud services provision computational resources on a pay-as-you-go basis, pricing these services according to the type of compute instance (i.e., GPU or CPU), memory allocation, and storage capacity. The choice of instance is directly influenced by the required computational power, as measured in GFLOPs, and the memory demands of the application. For high-throughput tasks such as those encountered in advanced construction safety monitoring systems, GPU instances are often indispensable. Notable instances include the p3 and p4 series of AWS, equipped with Tesla V100 GPUs, A2 VMs of Google Cloud, and NCas T4 v3 VMs of Azure (AWS 2023a, 2023b). For example, the AWS p3.2xlarge instance, offering a Tesla V100 GPU, is currently priced at $3.06 per hour (AWS 2023a, 2023b), highlighting the financial impact of using state-of-the-art computational resources. In addition, operational expenditures for running these instances are not limited to rental costs alone but also encompass the energy consumption associated with their use. For instance, a Tesla V100 GPU, consuming about 250 watts per hour (NVIDIA 2021), translates to a daily energy usage of 0.25 kWh if operated continuously for an hour. According to the U.S. Energy Information Administration (2024), the average electricity price in February 2024 was approximately $0.128 per kWh. Consequently, the daily operational cost amounts to merely $0.03, which is a minor fraction of the total operational cost. Deploying a system locally necessitates initial capital outlays for purchasing the requisite hardware. High-performance GPUs such as the NVIDIA Tesla V100 or A100 are pivotal for real-time processing tasks involved in monitoring construction sites. The cost of a Tesla V100 is approximately $10,000, whereas the more advanced NVIDIA A100 may exceed $12,000 (NVIDIA 2024), representing a significant investment in computational infrastructure. Considering continuous operation, the monthly cloud computing cost for an instance like the AWS p3.2xlarge can accumulate to approximately $2,197.60, leading to an annual expenditure of around $26,371.20.

However, several risks could affect the projected cost–benefit ratio. Technological risks include the potential obsolescence of hardware and dependency on specific cloud platforms, which could necessitate unforeseen investments and adaptations. Operational risks involve fluctuations in energy prices and potential hardware maintenance issues, especially if the system scales across multiple sites. Economic risks such as inflation and changes in labor costs could alter the cost structure significantly. Additionally, regulatory compliance with data protection laws could introduce further costs. To mitigate these risks, it is recommended to regularly update hardware and software, negotiate flexible contracts with cloud providers, develop comprehensive maintenance schedules, conduct extensive pilot testing, and ensure all operations comply with current data protection and privacy laws.

In spite of these risks, the deployment of such a framework can be justified by the substantial benefits. These benefits include a reduction in accident-related costs, decreased downtime, and insurance premium reductions. Research indicates that the average cost of a workplace accident in the construction industry can exceed $50,000, including medical expenses, legal fees, penalties, and insurance costs (Waehrer et al. 2007; Haupt and Pillay 2016). If we assume that a large construction firm experiences an average of 10 accidents per year, by reducing the accident frequency by 20% through better monitoring and early detection of unsafe operations, the framework could potentially save the firm $100,000 per year. Furthermore, unplanned downtime due to accidents can cost upwards of $1000 per hour when considering halted operations (Gangane and Patil 2018; Deshmukh and Bharat 2021). With real-time monitoring, we can assume a reduction of 50 h of downtime annually. As a result, the framework can save up to $50,000 annually just by reducing downtime. Insurance companies often adjust premiums based on the perceived risk associated with specific operational environments. Implementing advanced safety monitoring systems can lead to lower insurance premiums due to the reduced risk. Based on reports from Allianz Global Corporate & Specialty (2019), large construction projects are typically insured at an annual premium of approximately $200,000. The adoption of the framework could lead to a conservative estimate of a 10% reduction in insurance costs. This adjustment translates into annual savings of $20,000 on insurance premiums alone, thereby reducing operational costs and enhancing the project's overall financial sustainability. By aggregating the savings accrued from reduced accident occurrences, minimized operational downtime, and decreased insurance premiums, the total annual benefits are estimated to be $170,000 each year.

To provide a nuanced perspective, we have introduced the Net Present Value (NPV) analysis to evaluate the long-term financial viability of deploying our computational framework. The NPV calculation takes into account the time value of money, discounting future cash flows to their present value. For this analysis, we assume a discount rate of 10%, which represents the minimum rate of return expected by investors (Gallo 2014). The NPV is calculated over a five-year period, considering the initial hardware investment of $12,000 for an NVIDIA A100 GPU and the annual net savings of $143,628. The resulting NPV is approximately $445,785, indicating that the deployment of our framework is a financially viable investment in the long run. Moreover, we have calculated the Internal Rate of Return (IRR) for further assessment. The IRR represents the discount rate at which the NPV of all cash flows equals zero. In other words, it is the expected compound annual rate of return that will be earned on the investment. Using the same cash flow assumptions as in the NPV analysis, the IRR for deploying our computational framework is estimated to be 198%. This high IRR suggests that the investment in our framework is highly profitable and attractive from a financial standpoint.

We have conducted a sensitivity analysis to explore how changes in key assumptions impact the cost–benefit outcomes. The analysis focuses on three critical variables: the cost of technology (NVIDIA A100 GPU), the annual accident reduction rate, and the insurance premium reduction percentage. We varied each parameter by ± 10% while keeping the others constant to observe the impact on the NPV and IRR. The results show that the NPV remains positive and the IRR stays above 150% in all scenarios, demonstrating the robustness of the financial model. Even in the worst-case scenario, where the cost of technology increases by 10%, the accident reduction rate decreases by 10%, and the insurance premium reduction percentage decreases by 10%, the NPV is still $336,206 and the IRR is 159%.

The annual operating costs for deploying this framework, which include computational expenses and other associated overheads, were previously estimated at approximately $26,371. By comparing these costs against the annual benefits, the net annual savings can reach up to $143,629. This substantial net saving signifies a good return on investment (ROI) from the deployment of such safety monitoring computational frameworks. The significant decrease in both direct and indirect costs related to construction site accidents and inefficiencies, along with the reduced insurance premiums, presents a compelling economic argument for the broad adoption of advanced monitoring technologies. Based on the cost–benefit analysis, financial modeling, and sensitivity analysis, the deployment not only enhances operational safety but also establishes a financially viable solution with quantifiable benefits for the construction industry.

5 Experimental results

In the results section, we present a detailed performance evaluation of the Cascaded SRTransformer, showcasing its effectiveness in enhancing construction site safety through improved object detection and action classification.

5.1 Performance evaluation

5.1.1 Performance evaluation metrics

To estimate the accuracy and precision of the object detection model, several key metrics were used, including the Intersection over Union (IoU), Average Precision (AP), mean Average Precision (mAP), Precision, Recall, and F1-score.

The IoU is a metric that evaluates the overlap between the predicted and actual bounding boxes and varies significantly with different threshold settings. An IoU value of 1 signifies the model's enhanced accuracy in localizing objects. The IoU is calculated as Eq. (1).

Here, the area of overlap represents the intersecting area of the forecasted and actual bounding boxes, and the area of union encompasses the total area covered by both bounding boxes combined.

AP measures the precision of object detection at various threshold levels. This metric is important for assessing the model's performance across various object categories in construction environments. The AP is calculated for each class in Eq. (2).

where t is the threshold, Precision(t) refers to the precision of the model at threshold t, and Recall(t) denotes the recall rate at threshold t. The summation is done over all thresholds.

The mAP calculates the average of the AP values for all categories over various IoU thresholds, as formulated in Eq. (3).

where N represents the number of classes or IoU thresholds, \({AP}_{i}\) is the average precision for the ith class or IoU threshold. This metric provides a holistic view of the performance among multiple object categories.

A variety of metrics are integral to the assessment of the DL models' classification performance. Traditional metrics employed in this study include Precision, Recall, F1-score, and Accuracy, derived from the confusion matrix (Hossin and Sulaiman 2015).

5.2 Performance of the Cascaded SRTransformer

5.2.1 Resolution enhancement

While CCTV footage is a critical tool for safety monitoring on construction sites, its efficacy is often compromised by its low resolution. The Cascaded SRTransformer framework, designed for safety monitoring in construction sites, integrates various advanced techniques, including the SRGAN model, to address the challenges posed by low-resolution CCTV footage and enhance the overall performance of the system. By enhancing the resolution of such images, the SRGAN model improves the granularity and quality of the visual data, facilitating more accurate detection and classification of activities in the subsequent stages of the framework.