Abstract

Prognostics and health management (PHM) is critical for enhancing equipment reliability and reducing maintenance costs, and research on intelligent PHM has made significant progress driven by big data and deep learning techniques in recent years. However, complex working conditions and high-cost data collection inherent in real-world scenarios pose small-data challenges for the application of these methods. Given the urgent need for data-efficient PHM techniques in academia and industry, this paper aims to explore the fundamental concepts, ongoing research, and future trajectories of small data challenges in the PHM domain. This survey first elucidates the definition, causes, and impacts of small data on PHM tasks, and then analyzes the current mainstream approaches to solving small data problems, including data augmentation, transfer learning, and few-shot learning techniques, each of which has its advantages and disadvantages. In addition, this survey summarizes benchmark datasets and experimental paradigms to facilitate fair evaluations of diverse methodologies under small data conditions. Finally, some promising directions are pointed out to inspire future research.


1 Introduction

Prognostics and health management (PHM), an increasingly important framework for realizing condition awareness and intelligent maintenance of mechanical equipment by analyzing collected monitoring data, is being applied in a growing spectrum of industries, such as aerospace (Randall 2021), transportation (Li et al. 2023a), and wind turbines (Han et al. 2023). According to a survey conducted by the National Science Foundation (NSF) (Gray et al. 2012), PHM technologies have created economic benefits of $855 million over the past decade. Because of this application potential, PHM continues to attract sustained attention and research from different academic communities, including but not limited to reliability analysis, mechanical engineering, and computer science.

Functionally, PHM covers the entire monitoring lifecycle of equipment, fulfilling roles across four key dimensions: anomaly detection (AD), fault diagnosis (FD), remaining useful life (RUL) prediction, and maintenance execution (ME) (Zio 2022). First, AD aims to discern rare events that deviate significantly from standard patterns, and the crux lies in accurately differentiating a handful of anomalous data from an extensive volume of normal data (Li et al. 2022a). Second, FD focuses on classifying diverse faults, and the difficulty is to extract effective fault features under complex working conditions. Third, RUL prediction estimates the time remaining before a component or system fails, and its main challenge is to construct comprehensive health indicators capable of characterizing trends in health degradation. Finally, ME optimizes maintenance decisions based on diagnostic and prognostic results (Lee and Mitici 2023).

Methodologically, the techniques employed to execute the PHM tasks of AD, FD, and RUL prediction can be classified into physics model-based, data-driven, and hybrid methods (Lei et al. 2018). Physics model-based methods utilize mathematical models to describe failure mechanisms and signal relationships; representative techniques include state observers (Choi et al. 2020), parameter estimation (Schmid et al. 2020), and some signal processing approaches (Gangsar and Tiwari 2020). In contrast, data-driven methods involve manual or adaptive extraction of features from sensor signals, including statistical methods (Wang et al. 2022), machine learning (ML) (Huang et al. 2021), and deep learning (DL) (Fink et al. 2020). Hybrid approaches (Zhou et al. 2023a) combine elements from both physics model-based and data-driven techniques. Among these methods, DL-based techniques have gained widespread interest in PHM tasks, spanning from AD to ME, which is attributed to their pronounced advantages over conventional techniques in automatic feature extraction and pattern recognition.

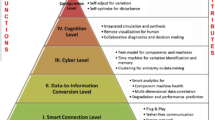

Figure 1 depicts the intelligent PHM cycle based on DL models (Omri et al. 2020), whose steps include data collection and processing, model construction, feature extraction, task execution, and model deployment. Monitoring data forms the foundation of this cycle, and its volume and quality have a decisive influence on the eventual performance of DL models in industrial contexts. However, gathering substantial datasets consisting of diverse anomaly and fault patterns with precise labels under different working conditions is time-consuming, dangerous, and costly, leading to small data problems that challenge models’ performance in PHM tasks. A recent investigation conducted by Dimensional Research underscores this quandary, revealing that 96% of companies have encountered small data issues in implementing industrial ML and DL projects (D. Research 2019).

The intelligent PHM cycle based on DL models (Omri et al. 2020)

To address the small data issues in intelligent PHM, organizations have started to shift their focus from big data to small data to enhance the efficiency and robustness of Artificial Intelligence (AI) models, which is strongly evidenced by the rapid growth of academic publications over recent years. To provide a comprehensive overview, we applied the preferred reporting items for systematic reviews and meta-analyses (PRISMA) (Huang et al. 2024; Kumar et al. 2023) method for paper investigation and selection. As shown in Fig. 2, the PRISMA technique includes three steps: defining the scope, databases, and keywords; screening search results; and identifying articles for analysis. First, the search scope was limited to articles published in the IEEE Xplore, Elsevier, and Web of Science databases from 2018 to 2023, and the keywords consisted of topic terms such as “small/limited/imbalanced/incomplete data”, technical terms such as “data augmentation”, “deep learning”, “transfer learning”, “few-shot learning”, and “meta-learning”, and application-specific terms such as “intelligent PHM”, “anomaly detection”, “fault diagnosis”, and “RUL prediction”. Second, the databases were searched for articles whose title, abstract, and keywords contain the predefined keywords, resulting in 139, 1232, and 281 papers from IEEE Xplore, Elsevier, and Web of Science, respectively. To eliminate duplicates and select the literature most relevant to small data problems in PHM, the first 100 non-duplicate studies from each database (300 papers in total) were screened according to the inclusion and exclusion criteria listed in Table 1. Finally, we further refined the obtained results through thorough review and evaluation, and a total of 201 representative papers were chosen for the analysis presented in this survey.

Despite the growing number of studies, the statistics highlight that there are few review articles on the topic of small data challenges. The first related review is the report entitled “Small data’s big AI potential”, which was released by the Center for Security and Emerging Technology (CSET) at Georgetown University in September 2021 (Chahal et al. 2021); it emphasized the benefits of small data and introduced some typical approaches. Then, Adadi (2021) reviewed and discussed four categories of data-efficient algorithms for tackling data-hungry problems in ML. More recently, a study (Cao et al. 2023) theoretically analyzed learning on small data, following an agnostic active sampling theory, and reviewed the aspects of generalization, optimization, and challenges. Since 2021, scholars in the PHM community have been focusing on the small data problem in intelligent FD and have conducted some review studies. Pan et al. (2022) reviewed the applications of generative adversarial network (GAN)-based methods, Zhang et al. (2022a) outlined solutions from the perspective of data processing, feature extraction, and fault classification, and Li et al. (2022b) organized a comprehensive survey on transfer learning (TL) covering theoretical foundations, practical applications, and prevailing challenges.

Existing studies provide valuable guidance, but they have yet to delve into the foundational concepts of small data and exhibit certain limitations in the analysis. For instance, some reviews studied small data problems from a macro perspective without considering the application characteristics of PHM tasks (Chahal et al. 2021; Adadi 2021; Cao et al. 2023). Others concentrated solely on particular methodologies used to address small data challenges in FD tasks (Pan et al. 2022; Zhang et al. 2022a; Li et al. 2022b) and lack systematic research on solutions for AD and RUL prediction tasks, which seriously limits the development and industrial application of intelligent PHM. Therefore, an in-depth exploration of the small data challenges in the PHM domain is necessary to provide guidance for the successful application of intelligent models in industry.

This review is a direct response to the contemporary demand for addressing the small data challenges in PHM, and it aims to clarify the following three key questions: (1) What is small data in PHM? (2) Why solve the small data challenges? and (3) How to address small data challenges effectively? These fundamental issues distinguish our work from existing surveys and demonstrate the major contributions:

1. Small data challenges for intelligent PHM are studied for the first time, and the definition, causes, and impacts are analyzed in detail.

2. An overview of various state-of-the-art methods for solving small data problems is presented, and the specific issues and remaining challenges for each PHM task are discussed.

3. The commonly used benchmark datasets and experimental settings are summarized to provide a reference for developing and evaluating data-efficient models in PHM.

4. Finally, promising directions are indicated to facilitate future research on small data.

Consequently, this paper is organized according to the hierarchical architecture shown in Fig. 3. Section 2 discusses the definition of small data in the PHM domain and analyzes the corresponding causes and impacts. Section 3 provides a comprehensive overview of representative approaches—including data augmentation (DA) methods (Sect. 3.1), transfer learning (TL) methods (Sect. 3.2), and few-shot learning (FSL) methods (Sect. 3.3). The problems in PHM applications are discussed in Sect. 4. Section 5 summarizes the datasets and experimental settings for model evaluation. Finally, potential research directions are given in Sect. 6 and the conclusions are drawn in Sect. 7. In addition, the abbreviations of notations used in this paper are summarized in Table 2.

2 Analysis of small data challenges in PHM

The excellent performance of DL models in executing PHM tasks is intricately tied to the premise of abundant and high-quality labeled data. However, this assumption is unlikely to be satisfied in industry, as small data is often the real situation, which exhibits distinct data distributions and may lead to difficulties in model learning. Therefore, this section first analyzes the definition, causes, and impacts of small data in PHM.

2.1 What is “small data”?

Before answering the question of what small data is, let us first review the related term, “big data”, which has garnered distinct interpretations among scholars since its birth in 2012. Ward and Barker (2013) regarded big data as a phrase that “describes the storage and analysis of large or complex datasets using a series of techniques”. Another perspective, as presented in Suthaharan (2014), focused on the data’s cardinality, continuity, and complexity. Among the various definitions, the one that has been widely accepted is characterized by the “5 V” attributes: volume, variety, value, velocity, and veracity (Jin et al. 2015).

After long-term research, some experts have recognized that big data is not ubiquitous, and the paradigm of small data has emerged as a novel area worthy of thorough investigation in the field of AI (Vapnik 2013; Berman 2013; Baeza-Yates 2024; Kavis 2015). Vapnik (2013) stands among the pioneers in this pursuit, having defined small data as a scenario where “the ratio of the number of training samples to the Vapnik–Chervonenkis (VC) dimensions of a learning machine is less than 20.” Berman (2013) considered small data as being used to solve discrete questions based on limited and structured data that come from one institution. Another study defines small data as “data in a volume and format that makes it accessible, informative and actionable” (Baeza-Yates 2024). In an industrial context, Kavis (2015) described small data as “The small set of specific attributes produced by the Internet of Things, these are typically a small set of sensor data such as temperature, wind speed, vibration and status”.

Considering the distinctive attributes of equipment signals within industries, a new definition for small data in PHM is given here: Small data refers to datasets consisting of equipment or system status information collected from sensors that are characterized by a limited quantity or quality of samples. Taking the FD task as an example, the corresponding mathematical expression is: Given a dataset \(D = \left\{ {F_{I} (x_{i}^{I} ,y_{i}^{I} )_{i = 1}^{{n_{I} }} } \right\}_{I = 1}^{N}\), \(\left( {x_{i}^{I} ,y_{i}^{I} } \right)\) are the samples and labels (if any) of the Ith fault \(F_{I}\). \(N\) represents the number of fault classes in \(D,\) and each fault set has a sample size of \(n_{I}\). Notably, the term “small” carries two connotations: (i) On a quantitative scale, “small” signifies a limited dataset volume, a limited sample size \(n_{I}\), or a minimal total number of fault types \(N\); and (ii) From a qualitative perspective, “small” indicates a scarcity of valuable information within \(D\) due to a substantial proportion of anomalous, missing, unlabeled, or noisy-labeled data in \(\left( {x_{i}^{I} ,y_{i}^{I} } \right)\). There is no fixed threshold to define “small” concerning both quantity and quality, which is an open question depending on the specific PHM task to be performed, the equipment analyzed, the chosen methodology, and the desired performance. To further understand the meaning of small data, a comprehensive comparison is conducted with big data in Table 3.

2.2 Causes of small data problems in PHM

Rapid advances in sensors and industrial Internet technology have simplified the process of collecting monitoring data from equipment. However, only large companies currently have the ability to acquire data on a large scale. Moreover, since most of the collected data are normal samples with limited abnormal or faulty data, they cannot provide enough information for model training. As illustrated in Fig. 4, four main causes of small data challenges in PHM are analyzed.

2.2.1 Heavy investment

When deploying an intelligent PHM system, Return on Investment (ROI) is the top concern of companies. The substantial investment comes from two main aspects, as shown in the first quadrant of Fig. 4: (i) Factories need to digitally upgrade existing old equipment to collect monitoring data. (ii) Data labeling and processing require manual operation and domain expertise. Although the costs of sensors and labeling outsourcing are relatively low today, installing sensors across numerous machines and processing terabytes of data is still beyond the reach of most manufacturers.

2.2.2 Data accessibility restrictions

As illustrated in the second quadrant of Fig. 4, this factor has two aspects: (i) The sensitivity, security, or privacy of the data often leads to strict access controls; an example is data collected from military equipment. (ii) For data transfers and data sharing, individuals, corporations, and nations need to comply with laws and supervisory ordinances, especially after the release of the General Data Protection Regulation (Zarsky 2016).

2.2.3 Complex working conditions

The contents depicted in the third quadrant of Fig. 4 include: (i) Data distribution within PHM inherently displays significant variability across diverse production tasks, machines, and operating conditions (Zhang et al. 2023), making it impossible to collect data under all potential conditions. (ii) Acquiring data within specialized service environments, such as high radiation, carries inherent risks. (iii) The progression of equipment from a healthy state to eventual failure is a long process, so run-to-failure data accumulate slowly.

2.2.4 Multi-factor coupling

As equipment becomes more intricately integrated, correlation and coupling effects have steadily intensified. As shown in the fourth quadrant of Fig. 4, couplings exist between (i) multiple components, (ii) multiple systems, and (iii) diverse processes. Such interactions are commonly characterized by nonlinearity, temporal variability, and uncertainty, further increasing the complexity of data acquisition.

2.3 Impacts of small data on PHM tasks

The availability of labeled and high-quality data remains limited, which affects the execution of PHM tasks at both the data level and the model level (Wang et al. 2020a). As shown on the left side of Fig. 5, the effects at the data level primarily include incomplete data and unbalanced distribution, which subsequently lead to poor generalization at the model level. This section analyzes the impacts with corresponding evaluation metrics, taking the FD task as an example.

2.3.1 Incomplete data

Data integrity refers to the “breadth, depth, and scope of information contained in the data” (Chen et al. 2023a). However, the obtained small dataset often exhibits a low density of supervised information owing to restricted fault categories or sample size. Further, missing values and labels, or outliers in the incomplete data, exacerbate the scarcity of valuable information. Data incompleteness in PHM can be measured by the following metrics:

\[ I_{D} = \frac{n_{D}}{N_{D}} \tag{1} \]

\[ I_{C_{i}} = \frac{n_{C_{i}}}{N_{C_{i}}} \tag{2} \]

where \(I_{D}\) represents the incompleteness of the dataset \(D\), and \(n_{D}\) and \(N_{D}\) are the number of incomplete samples and the total number of samples in \(D\), respectively. Similarly, this metric can also assess the incompleteness \(I_{C_{i}}\) of samples within a certain class \(C_{i}\) in line with Eq. (2), where \(n_{C_{i}}\) and \(N_{C_{i}}\) are the corresponding counts within \(C_{i}\). When either \(I_{D}\) or \(I_{C_{i}}\) approaches 0, it indicates a relatively complete dataset or class. Conversely, a higher value represents a severe degree of data incompleteness, leading to a substantial loss of information within the data.
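As a minimal sketch of how such a ratio could be computed in practice, the following Python snippet takes the incompleteness to be the fraction of incomplete samples, as the definitions above suggest. The criterion for "incomplete" (any missing feature value or a missing label), the `MISSING_LABEL` marker, and the function names are illustrative assumptions; outlier detection is not covered.

```python
import numpy as np

MISSING_LABEL = -1  # hypothetical marker for an unlabeled sample

def dataset_incompleteness(X, y):
    """Fraction of samples with a missing feature value or a missing label."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    incomplete = np.isnan(X).any(axis=1) | (y == MISSING_LABEL)
    return float(incomplete.mean())

def class_incompleteness(X, y, cls):
    """Same ratio restricted to the labeled samples of one class."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    in_class = y == cls
    return float(np.isnan(X[in_class]).any(axis=1).mean())

# Toy data: four samples, two features; one NaN feature, one missing label.
X = np.array([[0.1, 0.2], [np.nan, 0.4], [0.5, 0.6], [0.7, 0.8]])
y = np.array([0, 0, 1, MISSING_LABEL])

I_D = dataset_incompleteness(X, y)     # 2 of 4 samples are incomplete
I_C0 = class_incompleteness(X, y, 0)   # 1 of 2 samples in class 0
I_C1 = class_incompleteness(X, y, 1)   # 0 of 1 samples in class 1
```

Which defects count as "incomplete" is a design choice that depends on the PHM task; the ratio itself stays the same.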

2.3.2 Imbalanced data distribution

The second impact is the imbalanced data distribution. The fault classes containing higher or lower numbers of samples are called the majority and minority classes, respectively. Depending on whether the imbalance exists between different classes or within a single class, the phenomenon is called inter-class imbalance or intra-class imbalance. Considering a dataset with two distinct fault types, each comprising two subclasses, the degrees of inter-class imbalance \(IR\) and intra-class imbalance \(IR_{C_{i}}\) can be quantified as (Ren et al. 2023):

\[ IR = \frac{N_{\text{maj}}}{N_{\min}} \tag{3} \]

\[ IR_{C_{i}} = \frac{n_{\text{maj}}}{n_{\min}} \tag{4} \]

where \(N_{\text{maj}}\) and \(N_{\min}\) represent the sample counts of the majority and minority classes within the dataset, and \(n_{\text{maj}}\) and \(n_{\min}\) signify the respective sample sizes of the two subclasses within class \(C_{i}\). The above values span the interval [1, ∞) to describe the extent of the imbalance. A value of 1 for \(IR\) or \(IR_{C_{i}}\) indicates a balanced inter-class or intra-class case, whereas a value of 50 is typically regarded by domain experts as a highly imbalanced task (Triguero et al. 2015).
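The imbalance ratio described above can be illustrated with a short Python sketch that divides the largest group size by the smallest. The function name and the toy label lists are assumptions made for illustration only.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Largest group size divided by smallest group size (always >= 1)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())

# Inter-class case: 100 samples of fault F1 against 2 samples of fault F2.
fault_labels = ["F1"] * 100 + ["F2"] * 2
IR = imbalance_ratio(fault_labels)

# Intra-class case: two subclasses inside fault F1.
subclass_labels = ["F1-a"] * 80 + ["F1-b"] * 20
IR_C1 = imbalance_ratio(subclass_labels)
```

Here the inter-class ratio evaluates to 50, the level cited above as highly imbalanced, while the intra-class ratio is 4.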

2.3.3 Poor model generalization

Technically, the principle of supervised DL is to build a model \(f\), which learns the underlying patterns from a training set \(D_{train}\) and tries to predict the labels of previously unseen test data \(D_{test}\). The empirical error \(E_{emp}\) on the training set and the expected error \(E_{exp}\) on the test set can be derived by calculating the discrepancy between the true labels \(Y\) and the predicted labels \(\hat{Y}\) on the respective set. The difference between these two errors, i.e., the generalization error \(G(f,D_{train} ,D_{test} )\), is commonly used to measure the generalizability of the trained model on a test set. The generalization error is governed by the model’s complexity \(h\) and the training data size \(P\) as follows (LeCun et al. 1998):

\[ G(f,D_{train} ,D_{test} ) = E_{exp} - E_{emp} = k\left( \frac{h}{P} \right)^{\alpha } \tag{5} \]

where \(k\) is a constant and \(\alpha\) is a coefficient with a value range of [0.5, 1.0]. The equation above shows that, for a given model, the parameter \(P\) determines the model’s generalization. When \(P\) is large enough, \(G(f,D_{train} ,D_{test} )\) for a model with a certain \(h\) will converge toward 0. However, small, incomplete, or unbalanced data often result in a larger \(G(f,D_{train} ,D_{test} )\) and poor generalization.
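To make the dependence on \(P\) concrete, the sketch below evaluates the relation from LeCun et al. (1998) in the form \(k(h/P)^{\alpha}\). The values chosen for \(k\), \(h\), and \(\alpha\) are arbitrary assumptions for illustration and do not describe any particular model.

```python
def generalization_gap(h, P, k=1.0, alpha=0.5):
    """Gap between test and training error, modeled as k * (h / P) ** alpha."""
    return k * (h / P) ** alpha

h = 100  # hypothetical model complexity
gap_small = generalization_gap(h, P=100)      # as many samples as capacity
gap_large = generalization_gap(h, P=10_000)   # one hundred times more data
```

With \(\alpha = 0.5\), a hundredfold increase in training data shrinks the gap only tenfold, which is one way to see why small training sets are costly for high-capacity DL models.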

3 Overview of approaches to small data challenges in PHM

This section provides a structured overview of the latest advancements in tackling small data challenges in representative PHM tasks such as AD, FD, and RUL prediction. As depicted on the right-hand side of Fig. 5, three main strategies have been extracted from the current literature: DA, TL, and FSL. In the upcoming subsections, we delve into the relevant theories and proposed methodologies for each category, followed by a brief summary.

3.1 Data augmentation methods

DA methods provide data-level solutions to address small data issues, and their efficacy has been verified in many studies. The basic principle is to improve the quantity or quality of the training dataset by creating copies or new synthetic samples of existing data (Gay et al. 2023). Depending on how the auxiliary data are generated, transform-based, sampling-based, and deep generative models-based DA methods are analyzed.

3.1.1 Transform-based DA

Transform-based methods are one of the earliest classes of DA; they increase the size of small datasets by applying label-preserving transformations to existing samples. These transformations are diverse and flexible, and include random cropping, vertical and horizontal flipping, and noise injection. However, most of them were initially designed for two-dimensional (2-D) images and cannot be directly applied to one-dimensional (1-D) signals of equipment (Iglesias et al. 2023).

Considering the sequential nature of monitoring data, scholars have devised transformation methods suited to augmenting 1-D data (Meng et al. 2019; Li et al. 2020a; Zhao et al. 2020a; Fu et al. 2020; Sadoughi et al. 2019; Gay et al. 2022). For example, Meng et al. (2019) proposed a DA approach for FD of rotating machinery, which equally divided the original sample and then randomly reorganized the two segments to form a new fault sample. In Li et al. (2020a) and Zhao et al. (2020a), various transformation techniques, such as Gaussian noise, random scaling, time stretching, and signal translation, were applied simultaneously, as illustrated in Fig. 6. It is worth noting that all the aforementioned techniques are global transformations imposed on the entire signal, potentially overlooking local fault properties. Consequently, some studies have combined local and global transforms (Zhang et al. 2020a; Yu et al. 2020, 2021a) to change both segments and the entirety of the original signal to obtain more authentic samples. For instance, Yu et al. (2020) simultaneously used strategies of local and global signal amplification, noise addition, and data exchange to improve the diversity of fault samples.

Illustration of the transformations applied in Li et al. (2020a) and Zhao et al. (2020a). Gaussian noise was randomly added to the raw samples, random scaling was achieved by multiplying the raw signal with a random factor, time stretching was implemented by horizontally stretching the signals along the time axis, and signal translation was done by shifting the signal forward or backwards
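The four global transformations named in the caption can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the implementation of the cited studies: the parameter defaults, the circular handling of shifted samples, and the crop or zero-pad step after stretching are all choices made here.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

def add_gaussian_noise(x, rel_sigma=0.05):
    """Add zero-mean Gaussian noise scaled to the signal's standard deviation."""
    return x + rng.normal(0.0, rel_sigma * np.std(x), size=x.shape)

def random_scale(x, low=0.8, high=1.2):
    """Multiply the whole signal by a single random factor."""
    return x * rng.uniform(low, high)

def time_stretch(x, factor=1.2):
    """Stretch (factor > 1) or compress (factor < 1) along the time axis,
    then crop or zero-pad back to the original length."""
    n = len(x)
    m = max(2, int(round(n * factor)))
    stretched = np.interp(np.linspace(0, n - 1, m), np.arange(n), x)
    return stretched[:n] if m >= n else np.pad(stretched, (0, n - m))

def translate(x, shift):
    """Shift forward (shift > 0) or backward (shift < 0); samples wrap around."""
    return np.roll(x, shift)

t = np.arange(1024) / 1024.0
x = np.sin(2 * np.pi * 5 * t)  # toy stand-in for a vibration segment
augmented = [add_gaussian_noise(x), random_scale(x),
             time_stretch(x), translate(x, 100)]
```

Each function returns a signal of the original length with the original label implied, so the outputs can be appended directly to the training set.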

3.1.2 Sampling-based DA

Sampling-based DA methods are usually applied to solve data imbalance problems under small data conditions. Among them, under-sampling techniques solve data imbalance by reducing the sample size of the majority class, while over-sampling methods achieve DA by expanding samples of the minority class. Over-sampling can be further classified into random over-sampling and the synthetic minority over-sampling technique (SMOTE) (Chawla et al. 2002), depending on whether or not new samples are synthesized. As shown in Fig. 7, random over-sampling copies the data of a minority class n times to increase data size, whereas SMOTE creates synthetic samples by interpolating between a minority-class sample and one of its k nearest minority-class neighbors, thus enhancing both the quantity and the diversity of samples.
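The difference between the two over-sampling strategies can be sketched as follows. This is a minimal NumPy illustration of the basic SMOTE idea (interpolating between a minority sample and a randomly chosen nearest neighbor), not the enhanced variants discussed in the literature; the function names and parameter defaults are assumptions, and at least two minority samples are required.

```python
import numpy as np

def random_oversample(X_min, n_new, rng):
    """Draw n_new exact copies of minority samples, with replacement."""
    idx = rng.integers(0, len(X_min), size=n_new)
    return X_min[idx]

def smote(X_min, n_new, k=5, rng=None):
    """Create n_new synthetic points on segments between a minority sample
    and one of its k nearest minority-class neighbors."""
    rng = np.random.default_rng() if rng is None else rng
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)
    # Pairwise Euclidean distances; a sample is never its own neighbor.
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)
    neighbors = np.argsort(dist, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)
    chosen = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))  # interpolation weight in [0, 1)
    return X_min[base] + gap * (X_min[chosen] - X_min[base])

rng = np.random.default_rng(0)
X_minority = rng.normal(size=(10, 4))   # toy minority-class features
X_copy = random_oversample(X_minority, 20, rng)
X_synth = smote(X_minority, 20, k=3, rng=rng)
```

Random over-sampling only repeats existing points, whereas the SMOTE sketch produces new points that stay inside the region spanned by the minority class.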

To address data imbalance arising from abundant healthy samples and fewer faulty samples in monitoring data, some studies (Yang et al. 2020a; Hu et al. 2020) have introduced enhanced random over-sampling methods for the augmentation of small data. For example, Yang et al. (2020a) enhanced the random over-sampling method by introducing a variable-scale sampling strategy for unbalanced and incomplete data in the FD task, and Hu et al. (2020) used a resampling method to simulate data under different working conditions to decrease domain bias. In comparison, the SMOTE technique has gained widespread utilization in PHM tasks because it synthesizes new samples rather than duplicating existing ones (Hao and Liu 2020; Mahmoodian et al. 2021). Hao and Liu (2020) combined SMOTE with Euclidean distance to achieve better over-sampling of minority class samples. To address the difficulties of selecting appropriate nearest neighbors for synthetic samples, Zhu et al. (2022) calculated the Euclidean and Mahalanobis distances of the nearest neighbors, and Wang et al. (2023) used the characteristics of neighborhood distribution to equilibrate samples. Moreover, several studies (Liu and Zhu 2020; Fan et al. 2020; Dou et al. 2023) further improved the adaptability of SMOTE by employing weighted distributions to shift the importance of classification boundaries toward the challenging minority classes, demonstrating effectiveness in resolving data imbalance issues.

3.1.3 Deep generative models-based DA

In addition, deep generative models have emerged as highly promising solutions to small data since 2017, with the autoencoder (AE) and the generative adversarial network (GAN) as two prominent representatives (Moreno-Barea et al. 2020). An AE is a special type of neural network that reconstructs its input at the output in an unsupervised manner (Hinton and Zemel 1994), where the optimization goal is to learn an effective representation of the input data. The fundamental architecture of an AE, illustrated in Fig. 8a, comprises two symmetric parts encompassing a total of five shallow layers. The first half, known as the encoder, transforms input data into a latent space, while the second half, the decoder, decodes this latent representation to reconstruct the data. Likewise, a GAN is composed of two fundamental components, as shown in Fig. 8b. The first is the generator, responsible for creating fake samples from input random noise, and the second is the discriminator, which judges the authenticity of the generated samples. These two components engage in an adversarial training process, progressively moving towards a state of Nash equilibrium.
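For reference, the two training objectives described above are commonly written as follows. The notation is assumed here rather than taken from the source: \(f_{\theta}\) and \(g_{\phi}\) denote the encoder and decoder, \(G\) and \(D\) the generator and discriminator, \(p_{data}\) the distribution of real samples, and \(p_{z}\) the noise prior.

\[ \min_{\theta ,\phi } \; \frac{1}{n}\sum\limits_{i = 1}^{n} {\left\| {x_{i} - g_{\phi } \left( {f_{\theta } (x_{i} )} \right)} \right\|_{2}^{2} } \]

\[ \min_{G} \max_{D} \; {\mathbb{E}}_{{x \sim p_{data} }} \left[ {\log D(x)} \right] + {\mathbb{E}}_{{z \sim p_{z} }} \left[ {\log \left( {1 - D(G(z))} \right)} \right] \]

The first expression is the mean squared reconstruction error of a plain AE. The second is the minimax objective of the original GAN formulation, whose saddle point corresponds to the equilibrium mentioned above. Variants such as the Wasserstein GAN replace the second objective with a different distance between the real and generated distributions.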

The unique advantages of GANs in generating diverse samples make them superior to traditional over-sampling DA methods, especially in tackling data imbalance problems for PHM tasks (Behera et al. 2023). Various innovative models have emerged, including the variational auto-encoder (VAE) (Qi et al. 2023), deep convolutional GAN (DCGAN) (Zheng et al. 2019), and Wasserstein GAN (Yu et al. 2019). These methods can be classified into two groups based on their input types. The first commonly generates data from 1-D inputs like raw signals (Zheng et al. 2019; Yu et al. 2019; Dixit and Verma 2020; Ma et al. 2021; Zhao et al. 2021a, 2020b; Liu et al. 2022; Guo et al. 2020; Wan et al. 2021; Huang et al. 2020, 2022; Zhang et al. 2020b; Behera and Misra 2021; Wenbai et al. 2021; Jiang et al. 2023) and frequency features (Ding et al. 2019; Miao et al. 2021; Mao et al. 2019), which can capture the inherent temporal information in signals without complex pre-processing. For instance, Dixit and Verma (2020) proposed an improved conditional VAE to generate synthetic samples using raw vibration signals, yielding remarkable FD performance despite limited data availability. The work in Mao et al. (2019) applied the Fast Fourier Transform (FFT) to convert original signals into the frequency domain as inputs for a GAN and obtained higher-quality generated samples. On the other hand, some studies (Du et al. 2019; Yan et al. 2022; Liang et al. 2020; Zhao and Yuan 2021; Zhao et al. 2022; Sun et al. 2021; Zhang et al. 2022b; Bai et al. 2023) exploited the strengths of AEs and GANs in the image domain and aimed to generate images from 2-D time–frequency representations. For instance, Bai et al. (2023) employed an intertemporal return plot to transform time-series data into 2-D images as inputs for a Wasserstein GAN, and this method reduced data imbalance and improved the diagnostic accuracy of bearing faults.
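The frequency-domain pre-processing mentioned above, in which a raw 1-D signal is converted with the FFT before being fed to a generative model, can be sketched as below. This is a generic single-sided amplitude spectrum, not the pipeline of any cited study; the sampling rate, test tone, and function name are assumptions for illustration.

```python
import numpy as np

def amplitude_spectrum(signal, fs):
    """Single-sided amplitude spectrum of a real 1-D signal sampled at fs Hz."""
    n = len(signal)
    spectrum = np.fft.rfft(signal)
    amplitude = 2.0 * np.abs(spectrum) / n     # scale so a unit sine peaks near 1
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, amplitude

fs = 1000                                   # hypothetical sampling rate in Hz
t = np.arange(fs) / fs                      # one second of data
vibration = np.sin(2 * np.pi * 50 * t)      # toy signal with a 50 Hz component
freqs, amplitude = amplitude_spectrum(vibration, fs)
peak_hz = freqs[np.argmax(amplitude)]
```

The resulting amplitude vector, rather than the raw waveform, would then serve as the training sample for the generator and discriminator.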

3.1.4 Epilog

Table 4 summarizes the diverse DA-based solutions for addressing small data problems in PHM, covering the specific issues tackled by each technique as well as its merits and drawbacks. DA approaches focus on mitigating small data challenges at the data level, including insufficient labeled training data, class imbalance, incomplete data, and samples contaminated with noise. To tackle these, transform-based methods primarily increase the size of the training dataset by imposing transformations onto signals, but their effectiveness depends on the quality of the raw signals. Sampling-based approaches excel at dealing with imbalance problems in PHM tasks, and SMOTE methods demonstrate proficiency in both augmenting minority class samples and diversifying their composition, but refining nearest neighbor selection and bolstering adaptability to high levels of class imbalance remain open research areas. Deep generative models-based DA provides a flexible and promising tool capable of generating samples for various equipment under different working conditions, but more in-depth research is needed on integrating the characteristics of PHM tasks, assessing the quality of the generated data, and training generative models efficiently.

3.2 Transfer learning methods

Traditional DL models assume that training and test data originate from an identical domain; however, changes in operating conditions inevitably cause divergences in data distributions. TL removes the requirement for identical data distributions by transferring and reusing data or knowledge from related domains, ultimately solving small data problems in the target domain. TL is defined in terms of domains and tasks: each domain \(D\) consists of a feature space and a corresponding marginal distribution, and the task \(T\) associated with each domain contains a label space and a learning function (Yao et al. 2023). Within the PHM context, TL can be concisely defined as follows: given a source domain \(D_{S}\) with a task \(T_{S}\) and a target domain \(D_{T}\) with a task \(T_{T}\), the goal of TL is to exploit the knowledge of certain equipment that is learned from \(D_{S}\) and \(T_{S}\) to enhance the learning process for \(T_{T}\) within \(D_{T}\) under the setting of \(D_{S} \ne D_{T}\) or \(T_{S} \ne T_{T}\), where the data volume of the source domain is considered much larger than that of the target domain. There is a range of categorization criteria for TL methods in the existing literature. From the perspective of “what to transfer” during the implementation phase, TL can be categorized into three types: instance-based TL, feature-based TL, and parameter-based TL. Among these categories, the former two operate at the data level, while the last belongs to the realm of model-level approaches. These classifications are visually represented in Fig. 9.

3.2.1 Instance-based TL

The premise of applying TL is that the source domain contains sufficient labeled data, whereas the target domain either lacks sufficient labeled data or predominantly consists of unlabeled data. A straightforward way is to train a model for the target domain using samples from the source domain, but this proves impractical due to the inherent distribution disparities between the two domains. Therefore, the key is to find and apply labeled instances in the source domain whose data distribution is similar to that of the target domain. For this purpose, various methods have been proposed to minimize the distribution divergence, of which weighting strategies are the most widely used.

Dynamic weight adjustment (DWA) is a popular strategy, and its novelty lies in reweighting the source and target domain samples based on their contributions to the learning of the target model. The well-known TrAdaBoost algorithm (Dai et al. 2007), for example, increases the weights of samples that are similar to the target domain and reduces the weights of irrelevant source instances. The effectiveness of TrAdaBoost has been validated in FD for wind turbines (Chen et al. 2021), bearings (Miao et al. 2020), and induction motors (Xiao et al. 2019). Building on this foundational research, scholars have also introduced multi-objective optimization (Lee et al. 2021) and DL theories (Jamil et al. 2022; Zhang et al. 2020c) into TrAdaBoost to improve model training efficiency. However, DWA requires labeled target samples; otherwise, weight adjustment methods based on kernel mapping techniques are needed to estimate the key weight parameters, such as matching the means of source and target domain samples in the reproducing kernel Hilbert space (RKHS) (Tang et al. 2023a). For example, Chen et al. (2020) designed a white cosine similarity criterion based on kernel principal component analysis to determine the weight parameters for data in the source and target domains, boosting the diagnostic performance for gears under limited data and varying working conditions. More research can be found in Liu and Ren (2020), Xing et al. (2021), Ruan et al. (2022).
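To make the reweighting idea concrete, the sketch below implements a single weight update in the style of TrAdaBoost as described by Dai et al. (2007): source samples that the current learner misclassifies are down-weighted, and misclassified target samples are up-weighted. The function name, argument layout, and the clipping of the error rate are illustrative assumptions; training the base learner itself is omitted.

```python
import numpy as np

def tradaboost_reweight(w_src, w_tgt, err_src, err_tgt, n_rounds):
    """One TrAdaBoost-style weight update.

    err_src / err_tgt hold |h(x) - y| in [0, 1] for each source / target
    sample, where h is the learner trained in the current boosting round.
    """
    w_src = np.asarray(w_src, dtype=float)
    w_tgt = np.asarray(w_tgt, dtype=float)
    err_src = np.asarray(err_src, dtype=float)
    err_tgt = np.asarray(err_tgt, dtype=float)
    n = len(w_src)
    # Weighted error of the current learner, measured on target samples only.
    eps = np.sum(w_tgt * err_tgt) / np.sum(w_tgt)
    eps = np.clip(eps, 1e-12, 0.499)  # assumption: keep eps in (0, 0.5)
    beta_t = eps / (1.0 - eps)
    beta = 1.0 / (1.0 + np.sqrt(2.0 * np.log(n) / n_rounds))
    new_src = w_src * beta ** err_src        # misclassified source: weight shrinks
    new_tgt = w_tgt * beta_t ** (-err_tgt)   # misclassified target: weight grows
    return new_src, new_tgt
```

Correctly classified samples keep their weights, so over successive rounds the source instances that disagree with the target task contribute less and less to the learner.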

3.2.2 Feature-based TL

Unlike instance-based TL, which finds similarities between different domains in the space of raw samples, feature-based methods perform knowledge transfer within a shared feature space between source and target domains. As demonstrated in Fig. 10, feature-based TL is widely applied in domain adaptation and domain generalization scenarios: domain adaptation focuses on how to migrate knowledge from the source domain to the target domain, whereas domain generalization aims to develop a model that is robust across multiple source domains so that it can generalize to any new domain. The key to feature-based TL is to reduce the disparities between the marginal and conditional distributions of different domains through operations such as discrepancy-based methods and feature reduction methods, which eventually enable the model to achieve excellent adaptation and generalization on target tasks (Qin et al. 2023).

The main challenge for discrepancy-based methods is to accurately quantify the distributional similarity between domains, which relies on specific distance metrics. Table 5 lists the popular metrics (Borgwardt et al. 2006; Kullback and Leibler 1951; Gretton et al. 2012; Sun and Saenko 2016; Arjovsky et al. 2017) and the algorithms applied to PHM tasks (Yang et al. 2018, 2019a; Cheng et al. 2020; Zhao et al. 2020c; Xia et al. 2021; Zhu et al. 2023a; Li et al. 2020b, c, 2021a; He et al. 2021). Maximum Mean Discrepancy (MMD) is based on the distance between instance means in the RKHS, and Wasserstein distance assesses the similarity of probability distributions by considering geometric properties; both are widely used. For example, Yang et al. (2018) devised a convolutional adaptation network with multi-kernel MMD to minimize the discrepancy between the feature distributions derived from laboratory and real-machine failure data. The integration of Wasserstein distance in Cheng et al. (2020) greatly enhanced the domain adaptation capability of the proposed model. Moreover, Fan et al. (2023a) proposed a domain-based discrepancy metric for domain generalization fault diagnosis under unseen conditions, which helps the model balance the intra- and inter-domain distances for multiple source domains. On the other hand, feature reduction approaches aim to automatically capture general representations across different domains, mainly using unsupervised methods such as clustering (Michau and Fink 2021; He et al. 2020a; Mao et al. 2021) and AE models (Tian et al. 2020; Lu and Yin 2021; Hu et al. 2021a; Mao et al. 2020). For instance, Mao et al. (2021) integrated time series clustering into TL, and used the meta-degradation information obtained from each cluster for temporal domain adaptation in bearing RUL prediction. To improve model performance for imbalanced and transferable FD, Lu and Yin (2021) designed a weakly supervised convolutional AE (CAE) model to learn representations from multi-domain data. Liao et al. (2020) presented a deep semi-supervised domain generalization network, which showed excellent generalization performance in rotary machinery fault diagnosis under unseen speeds.
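As an illustration of how a discrepancy metric is computed in practice, the following sketch evaluates the (biased) squared MMD between source and target feature batches with a single Gaussian RBF kernel. The function names and the bandwidth parameter `gamma` are assumptions for illustration; the surveyed methods typically embed such a term in a network's training loss rather than computing it on fixed features.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """Gaussian RBF kernel matrix k(a, b) = exp(-gamma * ||a - b||^2)."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def mmd2(Xs, Xt, gamma=1.0):
    """Biased estimate of squared MMD between source and target features."""
    Xs = np.asarray(Xs, dtype=float)
    Xt = np.asarray(Xt, dtype=float)
    k_ss = rbf_kernel(Xs, Xs, gamma).mean()
    k_tt = rbf_kernel(Xt, Xt, gamma).mean()
    k_st = rbf_kernel(Xs, Xt, gamma).mean()
    return k_ss + k_tt - 2.0 * k_st

# Usage: features drawn from a shifted distribution give a larger discrepancy.
rng = np.random.default_rng(0)
source = rng.normal(0.0, 1.0, size=(200, 3))
target_same = rng.normal(0.0, 1.0, size=(200, 3))
target_shifted = rng.normal(2.0, 1.0, size=(200, 3))
print(mmd2(source, target_same), mmd2(source, target_shifted))
```

A multi-kernel variant can be obtained by averaging `mmd2` over several values of `gamma`, which reduces sensitivity to the choice of a single bandwidth.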

3.2.3 Parameter-based TL

The third category of TL is parameter-based TL, which supposes that the source and target tasks share certain knowledge at the model level, and the knowledge is encoded in the architecture and parameters of the model pre-trained on the source domain. It is motivated by the fact that retraining a model from scratch requires substantial data and time, while it is more efficient to directly transfer pre-trained parameters and fine-tune them in the target domain. In this way, there are basically two main implementations depending on the utilization of the transferred parameters in target model training: full fine-tuning (or freezing) and partial fine-tuning (or freezing), as shown in Fig. 11.

Full fine-tuning (or freezing) means that all parameters transferred from the source domain are either fine-tuned with limited labeled data from the target domain or frozen without updating during the training of the target model. Conversely, partial fine-tuning (or freezing) selectively fine-tunes only specific upper layers or parameters, keeping the lower-layer parameters consistent with the pre-trained model. In both cases, the classifier or predictor of the target model needs to be retrained with randomly initialized parameters to align with the number of classes or data distribution of the target task. The full fine-tuning (or freezing) approach is particularly applicable when the source and target domain samples exhibit a high degree of similarity, so that general features can be extracted from the target domain by using the pre-trained parameters (Cho et al. 2020; He et al. 2019, 2020b; Zhiyi et al. 2020; Wu and Zhao 2020; Peng et al. 2021; Zhang et al. 2018; Che et al. 2020; Cao et al. 2018; Wen et al. 2020, 2019). From the perspective of the size of the pre-trained model and the fine-tuning time, the full fine-tuning and full freezing strategies are suitable for small and large models, respectively. For example, He et al. (Zhiyi et al. 2020) proposed to achieve knowledge transfer between bearings mounted on different machines by fully fine-tuning the pre-trained parameters with few target training samples. In Wen et al. (2020, 2019), researchers applied deep convolutional neural networks (CNNs), namely ResNet-50 (a 50-layer CNN) and VGG-19 (a 19-layer CNN) pre-trained on ImageNet, as feature extractors, and trained target FD models using full freezing methods. In contrast, partial fine-tuning (or freezing) strategies are more suitable for handling cases with significant domain differences (Wu et al. 2020; Zhang et al. 2020d; Yang et al. 2021; Brusa et al. 2021; Li et al. 2021b), such as transfer between complex working conditions (Wu et al. 2020) and multimodal data sources (Brusa et al. 2021). In addition, Kim and Youn (2019) introduced an innovative approach known as selective parameter freezing (SPF), where only a portion of parameters within each layer is frozen, which enables explicit selection of output-sensitive parameters from the source model, reducing the risk of overfitting the target model under limited data conditions.
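The difference between the freezing and fine-tuning strategies can be sketched without any DL framework. In the hypothetical example below, a model is a dictionary of weight arrays; the layer names (`conv1`, `conv2`, `head`), the helper functions, and the plain gradient step are all assumptions for illustration rather than any surveyed implementation.

```python
import numpy as np

def init_target_model(pretrained, n_classes, frozen_layers, seed=0):
    """Copy pre-trained feature layers and attach a randomly initialized head."""
    rng = np.random.default_rng(seed)
    params = {name: w.copy() for name, w in pretrained.items() if name != "head"}
    feat_dim = pretrained["head"].shape[0]
    # The classifier is re-initialized to match the target label space.
    params["head"] = 0.01 * rng.standard_normal((feat_dim, n_classes))
    trainable = {name: name not in frozen_layers for name in params}
    return params, trainable

def sgd_step(params, grads, trainable, lr=0.1):
    """Update only the layers marked as trainable; frozen layers stay unchanged."""
    return {name: (w - lr * grads[name]) if trainable[name] else w
            for name, w in params.items()}

# Usage: partial freezing keeps conv1 fixed and fine-tunes conv2 and the head.
pretrained = {"conv1": np.ones((3, 3)), "conv2": np.ones((3, 3)), "head": np.ones((3, 10))}
params, trainable = init_target_model(pretrained, n_classes=4, frozen_layers={"conv1"})
grads = {name: np.ones_like(w) for name, w in params.items()}  # placeholder gradients
updated = sgd_step(params, grads, trainable)
```

Under these assumptions, full freezing corresponds to `frozen_layers={"conv1", "conv2"}` and full fine-tuning to an empty set, so the strategies differ only in which layers receive gradient updates.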

3.2.4 Epilog

The TL framework breaks the assumption in traditional DL that training and test data are identically distributed, and compensates for the lack of labeled data in the target domain by acquiring and transferring knowledge from a large amount of easily collected data. As summarized in Table 6, instance-based TL can be regarded as a borrowed augmentation, wherein other datasets with similar distributions are utilized to enrich the samples in the target domain. Among the techniques, DWA strategies demonstrate superiority in handling insufficient labeled target data and imbalanced data, whereas their drawbacks of high computational cost and high dependence on similar distributions need further optimization. By comparison, feature-based TL performs knowledge transfer by learning general fault representations and can handle domain adaptation and domain generalization tasks with large distribution differences, such as transfers between distinct working conditions (He et al. 2020a), transfers between diverse components (Yang et al. 2019a), or even transfers from simulated to physical processes (Li et al. 2020b). Weakly supervised feature reduction techniques are capable of adaptively discovering better feature representations, and show great potential in open domain generalization problems. Finally, parameter-based TL saves the target model from being trained from scratch, but the effectiveness of the transferred parameters hinges on the size and quality of the source samples, and model pre-training on multi-source domain data can be considered (Li et al. 2023b; Tang et al. 2021).

3.3 Few-shot learning methods

DA and TL methods both require that the training dataset has a certain number (ranging from dozens to hundreds) of labeled samples. However, in some industrial cases, samples of specific classes (such as incipient failures or compound faults) may be exceptionally rare and inaccessible, with only a handful of samples (e.g., 5–10) per category for DL model training, resulting in poor model performance on such “few-shot” problems (Song et al. 2022). Inspired by the human ability to learn and reuse prior knowledge from previous tasks, which Jürgen Schmidhuber initially named meta-learning (Schmidhuber 1987), FSL methods have been proposed to learn a model that can be trained and quickly adapted to tasks with only a few examples. As shown in Fig. 12, there are some differences between traditional DL, TL, and FSL methods: (1) traditional DL and TL are trained and tested on data points from a single task, while FSL methods often learn at the task level; (2) traditional DL requires large amounts of labeled training and test data, and TL requires large amounts of labeled training data in the source domain, while FSL methods perform meta-training and meta-test with limited data. The organization of FSL tasks follows the “N-way K-shot Q-query” protocol (Thrun and Pratt 2012), where N categories are randomly selected, and K support samples and Q query samples are randomly drawn from each category for each task. The objective of FSL is to combine previously acquired knowledge from multiple tasks during meta-training with a few support samples to predict the class of query samples during meta-test. Based on the way prior knowledge is learned, metric-, optimization-, and attribute-based FSL methods are primarily discussed.
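The “N-way K-shot Q-query” protocol can be sketched as a simple episode sampler. The function below is a minimal illustration that assumes an array of integer class labels; the name `sample_episode` and its return layout are hypothetical.

```python
import numpy as np

def sample_episode(labels, n_way, k_shot, q_query, seed=None):
    """Return (support_idx, query_idx, classes) for one N-way K-shot Q-query task."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    # Randomly select N categories for this task.
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    support, query = [], []
    for c in classes:
        idx = rng.permutation(np.flatnonzero(labels == c))
        if len(idx) < k_shot + q_query:
            raise ValueError(f"class {c} has too few samples")
        support.extend(idx[:k_shot])                    # K support samples
        query.extend(idx[k_shot:k_shot + q_query])      # Q disjoint query samples
    return np.array(support), np.array(query), classes

# Usage: a 3-way 5-shot 4-query task drawn from 6 classes with 20 samples each.
labels = np.repeat(np.arange(6), 20)
support_idx, query_idx, classes = sample_episode(labels, n_way=3, k_shot=5, q_query=4, seed=0)
```

Meta-training repeats this sampling many times so that the model is optimized over a distribution of tasks rather than over a single fixed dataset.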

3.3.1 Metric-based FSL

Metric-based FSL learns prior knowledge by measuring sample similarities, and consists of two components: a feature embedding module responsible for mapping samples to feature vectors, and a metric module that computes similarity (Li et al. 2021). Siamese Neural Networks, one of the pioneering approaches, were initially proposed by Koch et al. (2015) for one-shot image recognition, using two parallel CNNs and L1 distance to determine whether paired inputs are identical. Subsequently, Vinyals et al. (2016) introduced Matching Networks, which use long short-term memory (LSTM) with attention mechanisms for effective assessment of multi-class similarity, Snell et al. (2017) developed Prototypical Networks to calculate the distance between prototype representations, and Relation Networks (Sung et al. 2018) utilized adaptive neural networks instead of traditional metric functions. Table 7 lists the differences between these representative approaches in terms of embedding modules and metric functions.
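The metric module of a prototype-based classifier is short enough to sketch directly. The code below assumes that embeddings have already been produced by some feature embedding module (not shown) and classifies each query sample by its nearest class prototype under Euclidean distance; the function names are illustrative.

```python
import numpy as np

def prototypes(support_emb, support_labels):
    """Compute one prototype per class as the mean of its support embeddings."""
    support_emb = np.asarray(support_emb, dtype=float)
    support_labels = np.asarray(support_labels)
    classes = np.unique(support_labels)
    protos = np.stack([support_emb[support_labels == c].mean(axis=0) for c in classes])
    return classes, protos

def classify(query_emb, classes, protos):
    """Assign each query embedding to the class of its nearest prototype."""
    query_emb = np.asarray(query_emb, dtype=float)
    dist = np.linalg.norm(query_emb[:, None, :] - protos[None, :, :], axis=-1)
    return classes[np.argmin(dist, axis=1)]

# Usage with toy 2-D embeddings: two classes, two support samples each.
cls, protos = prototypes([[0, 0], [0, 1], [5, 5], [5, 6]], [0, 0, 1, 1])
pred = classify([[0.2, 0.4], [4.8, 5.9]], cls, protos)  # -> array([0, 1])
```

Replacing the fixed distance in `classify` with a small trainable network would give a learnable metric in the spirit of Relation Networks.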

According to current studies, two forms of metric-based FSL methods are applied in PHM tasks. The first utilizes fixed metrics (e.g., cosine distance) for measuring similarity, while the second leverages learnable metrics, such as the neural network of Relation Networks. For example, Zhang et al. (2019) first introduced a wide-kernel deep CNN-based Siamese Network for the FD of rolling bearings, which achieved excellent performance with limited data under different working conditions. Since then, various FSL algorithms based on Siamese networks (Li et al. 2022c; Zhao et al. 2023; Wang and Xu 2021), matching networks (Xu et al. 2020; Wu et al. 2023; Zhang et al. 2020e) and prototypical networks (Lao et al. 2023; Jiang et al. 2022; Long et al. 2023; Zhang et al. 2022c) have been developed for PHM tasks. Zhang et al. (2020e) designed an iterative matching network combined with a selective signal reuse strategy for the few-shot FD of wind turbines. Jiang et al. (2022) developed a two-branch prototype network (TBPN) model, which integrated both time and frequency domain signals to enhance fault classification accuracy. Relation Networks have shown superiority over fixed metric-based FSL methods when measuring samples from different domains, and they are therefore widely applied for cross-domain few-shot tasks (Lu et al. 2021; Wang et al. 2020b; Luo et al. 2022; Yang et al. 2023a; Tang et al. 2023b). To illustrate, Lu et al. (2021) considered the FD of rotating machinery with limited data as a similarity metric learning problem, and they introduced Relation Networks into the TL framework as a solution. Luo et al. (2022) proposed a Triplet Relation Network method for performing cross-component few-shot FD tasks, and Tang et al. (2023b) designed a novel lightweight relation network for performing cross-domain few-shot FD tasks with high efficiency. Furthermore, to address domain shift issues resulting from diverse working conditions, Feng et al. (2021) integrated a similarity-based meta-learning network with domain-adversarial learning for cross-domain fault identification.

3.3.2 Optimization-based FSL

Optimization-based FSL methods adhere to the “learning to optimize” principle to solve overfitting problems arising from small samples. Specifically, these techniques learn good global initialization parameters across various tasks, allowing the model to quickly adapt to new few-shot tasks during the meta-test (Parnami and Lee 2022). Taking the best-known model-agnostic meta-learning (MAML) (Finn et al. 2017) algorithm as an example, optimization-based FSL typically follows a two-loop learning process: the inner loop learns a task-specific model (base learner) for a given task, and the outer loop learns a meta-learner over a distribution of tasks, where meta-knowledge is embedded in the model parameters and then used as the initialization parameters of the model for meta-test tasks. MAML is compatible with diverse models that are trained using gradient descent, allowing models to generalize well to new few-shot tasks without overfitting.
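The two-loop process can be illustrated on a deliberately tiny problem. The sketch below applies MAML-style meta-training to a scalar linear model \(y = wx\) over toy regression tasks that differ only in their slope; for this model the gradient through the inner step has a closed form, so no automatic differentiation is needed. The task family, learning rates `alpha` and `beta`, and all function names are assumptions chosen for illustration, not a PHM implementation.

```python
import numpy as np

def loss(w, x, y):
    """Mean squared error of the scalar linear model y_hat = w * x."""
    return np.mean((w * x - y) ** 2)

def grad(w, x, y):
    """Derivative of the MSE loss with respect to w."""
    return 2.0 * np.mean(x * (w * x - y))

def maml_train(slopes, w0=0.0, alpha=0.1, beta=0.05, steps=200, seed=0):
    """Meta-learn an initialization w over tasks y = a * x, one task per slope a."""
    rng = np.random.default_rng(seed)
    w = w0
    for _ in range(steps):
        meta_grad = 0.0
        for a in slopes:
            xs, xq = rng.uniform(-1, 1, 5), rng.uniform(-1, 1, 5)
            ys, yq = a * xs, a * xq
            # Inner loop: one gradient step on the support set (base learner).
            w_task = w - alpha * grad(w, xs, ys)
            # Outer loop: query-loss gradient w.r.t. the initialization w.
            # Chain rule: d(w_task)/dw = 1 - alpha * (Hessian of support loss).
            hess_s = 2.0 * np.mean(xs ** 2)
            meta_grad += grad(w_task, xq, yq) * (1.0 - alpha * hess_s)
        w -= beta * meta_grad / len(slopes)  # meta-update of the initialization
    return w

# Usage: meta-train on two tasks, then adapt to a new task with one inner step.
w_meta = maml_train([1.0, 3.0])
x_new = np.linspace(-1, 1, 5)
y_new = 2.5 * x_new
w_adapted = w_meta - 0.1 * grad(w_meta, x_new, y_new)
```

The learned initialization settles between the task slopes, from which a single inner-loop step moves the model toward any individual task; deep models follow the same pattern with the chain-rule term computed by automatic differentiation.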

Recent literature highlights the potential of MAML in PHM, mainly focusing on meta-classification and meta-regression. For meta-classification methods, the aim is to learn an optimized classification model based on multiple meta-training tasks that can accurately classify novel classes in meta-test with a few samples as support, typically used for AD (Chen et al. 2022) and FD tasks (Li et al. 2021c, 2023c; Hu et al. 2021b; Lin et al. 2023; Yu et al. 2021b; Chen et al. 2023b; Zhang et al. 2021; Ren et al. 2024). For example, Li et al. (2021c) proposed a MAML-based meta-learning FD technique for bearings under new conditions by exploiting the prior knowledge of known working conditions. To further improve meta-learning capabilities, advanced models such as task-sequencing MAML (Hu et al. 2021b) and meta-transfer MAML (Li et al. 2023c) have been designed for few-shot FD tasks, and a meta-learning based domain generalization framework was proposed to alleviate both low-resource and domain shift problems (Ren et al. 2024). On the other hand, meta-regression methods target prediction tasks in PHM, with the goal of predicting continuous variables using limited input samples based on meta-optimized models derived from analogous regression tasks (Li et al. 2019, 2022d; Ding et al. 2021, 2022a; Mo et al. 2022; Ding and Jia 2021). Li et al. (2019) first explored the application of MAML to RUL prediction with small data, designing a fully connected neural network (FCNN)-based meta-regression model for predicting tool wear under varying cutting conditions. In addition, MAML has also been integrated into reinforcement learning for fault control under degraded conditions, and more insights can be found in Dai et al. (2022), Yu et al. (2023).

3.3.3 Attribute-based FSL

There is also a unique paradigm of FSL known as “zero-shot learning” (Yang et al. 2022), where models are used to predict classes for which no samples were seen during meta-training. In this setup, auxiliary information is necessary to bridge the information gap of unseen classes caused by the absence of training data. The supplementary information must be valid, unique, and representative so that it can effectively differentiate various classes, such as attribute information for images in computer vision. As shown in Fig. 13, the classes of unseen animals are inferred by transferring between-class attributes, such as semantic descriptions of animals’ shape, voice, or habitats, whose effectiveness has been validated in many zero-shot tasks (Zhou et al. 2023b).

The idea of the attribute-based FSL approach offers potential solutions to the zero-sample problem in PHM tasks. However, visual attributes cannot be used directly because they do not match the physical meaning of sensor signals; for this reason, scholars have worked on defining effective fault attributes. Given that fault-related semantic descriptions can be easily obtained from maintenance records and can be defined for specific faults in practice, semantic attributes are widely used in current research (Zhuo and Ge 2021; Feng and Zhao 2020; Xu et al. 2022; Chen et al. 2023c; Xing et al. 2022). For example, Feng and Zhao (2020) pioneered the implementation of zero-shot FD based on the transfer of fault description attributes, which included failure position, fault causes and consequences, providing auxiliary knowledge for the target faults. Xu et al. (2022) devised a zero-shot learning framework for compound FD, and the semantic descriptor of the framework can define distinct fault semantics for singular and compound faults. Fan et al. (2023b) proposed an attribute fusion transfer method for zero-shot FD with new fault modes. Despite the strides made in description-driven semantic attributes, certain limitations exist, including reliance on expert insights and inaccurate information sources. More recently, attributes without semantic information (termed non-semantic attributes) have also been explored in Lu et al. (2022), Lv et al. (2020). Lu et al. (2022) developed a zero-shot intelligent FD system by employing statistical attributes extracted from the time and frequency domains of the signal.
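The attribute-transfer idea can be sketched as a two-stage inference: an attribute predictor trained on seen faults maps a signal to attribute scores, and an unseen class is then chosen by comparing those scores with each class's attribute description. The code below covers only the second stage; the class names, the three hypothetical attributes (inner-race location, outer-race location, rolling-element location), and the use of cosine similarity are assumptions for illustration.

```python
import numpy as np

def zero_shot_predict(pred_attrs, class_attrs):
    """Assign each sample to the class whose attribute vector is most similar.

    pred_attrs: (n_samples, n_attrs) attribute scores predicted from signals.
    class_attrs: dict mapping class name -> attribute vector (e.g., binary).
    """
    names = list(class_attrs)
    A = np.array([class_attrs[n] for n in names], dtype=float)
    P = np.asarray(pred_attrs, dtype=float)
    # Cosine similarity between predicted and class-level attribute vectors.
    A_n = A / (np.linalg.norm(A, axis=1, keepdims=True) + 1e-12)
    P_n = P / (np.linalg.norm(P, axis=1, keepdims=True) + 1e-12)
    return [names[i] for i in np.argmax(P_n @ A_n.T, axis=1)]

# Usage: the compound fault has no training samples, only an attribute description.
class_attrs = {
    "inner_race_fault": [1, 0, 0],
    "outer_race_fault": [0, 1, 0],
    "compound_fault": [1, 1, 0],   # unseen class defined purely by attributes
}
scores = [[0.9, 0.1, 0.0], [0.8, 0.7, 0.1]]  # hypothetical predictor outputs
print(zero_shot_predict(scores, class_attrs))
```

The quality of the result depends entirely on how well the attribute descriptions separate the classes, which is why reliance on expert-defined attributes is noted as a limitation.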

3.3.4 Epilog

FSL methods are advantageous in solving small data problems with extremely limited samples, such as only five, one, or even zero samples per class in each task. As listed in Table 8, metric-based FSL methods are concise in their principles and computation, and they shift the focus from sample quantity to intrinsic similarity, but the reliance on labeled data during the training of feature embeddings constrains their applicability to supervised settings. Optimization-based FSL, particularly methods underpinned by MAML, boasts broader applications including fault classification, RUL prediction, and fault control, but these techniques need substantial computational resources for the gradient optimization of deep networks, and balancing the optimization parameters against model training speed is the key (Hu et al. 2023). Attribute-based FSL is an emerging but promising research topic with huge potential to significantly reduce the cost of data collection in industry, and zero-shot learning enables models to generalize to new failure modes or conditions without retraining, achieving intelligent prognostics for complex systems even with “zero” abnormal or fault samples. In industry, few-shot problems are often accompanied by domain shift caused by varying speed and load conditions. This is a more difficult problem that makes it hard for traditional FSL methods to learn sufficiently representative fault features that can be adapted and generalized to unseen data distributions, and research in this area has only recently begun (Liu et al. 2023).

4 Discussion of problems in PHM applications

Different PHM tasks have distinct goals and characteristics, thus producing various forms of small data problems that need corresponding solutions. Therefore, based on the methods discussed in Sect. 3, this section further explores the specific issues and remaining challenges from the perspective of PHM applications. The distribution of specific issues and corresponding methods for each task is shown in Fig. 14.

4.1 Small data problems in AD tasks

4.1.1 Main problems and corresponding solutions

In industrial applications, the amount of abnormal data is much less than normal data, which seriously hinders the development of accurate anomaly detectors. According to the statistics in Fig. 14, current research on small data in AD tasks focuses on three core issues: class imbalance, incomplete data, and poor model generalization, which have different impacts on AD tasks. Specifically, class imbalance may cause the model to be biased towards the normal class, which reduces the sensitivity of detecting rare anomalies; incomplete data can make it difficult for the model to distinguish between normal variations and true anomalies when key features are missing; and the problem of poor model generalization may lead to false-positives or false-negatives, which reduces the overall reliability of the anomaly detection system.

To address the above class imbalance problems, existing studies demonstrate that directly increasing the samples of minority classes through DA techniques yields positive results. In our survey of the literature, two papers (Fan et al. 2020; Rajagopalan et al. 2023) introduced optimized SMOTE algorithms, and one study applied GAN-based DA methods to AD tasks of wind turbines. These methods facilitated the generation of additional anomalous samples and enhanced model accuracy while minimizing false positive rates. Another prevalent challenge in AD tasks is incomplete data, stemming from faulty sensors, inaccurate measurements, or different sampling rates. Deep generative models, with their superior learning capabilities, have been widely used to improve the information density of incomplete data (Guo et al. 2020; Yan et al. 2022). To address inadequate model generalization when confronted with limited labeled training samples, Michau and Fink (2021) proposed an unsupervised TL framework. Notably, the majority of AD methods advanced in current research are rooted in unsupervised learning models, such as AE, with wide applications involving electric motors (Rajagopalan et al. 2023), process equipment (Guo et al. 2020), and wind turbines (Liu et al. 2019).

4.1.2 Remaining challenges

AD is an integral and fundamental task in equipment health monitoring, where the difficulty lies in dealing with a complex set of data and various anomalies (Pang et al. 2021). Though existing research has provided valuable insights into addressing small data challenges, certain unresolved issues warrant further exploration.

4.1.2.1 Adaptability of detection models

The majority of AD algorithms are domain-dependent and designed for specific anomalies and conditions. However, industrial production constitutes a dynamic and nonlinear process, where changes in variables such as environment, speed, or load may lead to data drift and novel anomalies. For small datasets, even minor changes in the underlying patterns can have a pronounced impact on the dataset’s characteristics, thus degrading anomaly detection performance of models. To address these issues, it is imperative to improve the adaptability of detection models by using adaptive optimizers and learners, such as the online adaptive Recurrent Neural Network proposed in Fekri et al. (2021), which had the capability to learn from newly arriving data and adapt to novel patterns.

4.1.2.2 Real-time anomaly detection

Real-time capability is always a desirable property of detection models: it ensures that anomalies are detected and reported to the operator in a timely manner so that corresponding decisions can be made quickly, which is especially important for complex equipment such as UAVs (Yang et al. 2023b). The deployment of lightweight network architectures and edge computing technologies holds promise for realizing real-time detection capabilities.

4.2 Small data problems in FD tasks

4.2.1 Main problems and corresponding solutions

Accuracy is one of the most important metrics for evaluating models’ performance in classifying different types of faults, but it is strongly influenced by the size of the fault data. As shown in Fig. 14, the small data challenge in FD has received the most extensive research attention compared to AD and RUL prediction tasks. The small data problem has also manifested itself in a richer variety of ways, including limited labeled training data, class imbalance, incomplete data, low data quality, and poor generalization. Specifically, limited labeled training data increases the risk of model overfitting and poses a challenge in capturing variations in fault conditions; class imbalance leads to lower sensitivity to rare and unseen faults; incomplete data leads to incomplete extraction of fault features; low-quality data misleads the diagnostic model into generating false positives or false negatives; and poor generalization capability limits the applicability of the model to different operating conditions and equipment.

To address the scarcity of labeled training data, two practical solutions emerge: using samples within the existing datasets, and borrowing from external data sources. The former involves employing already acquired signals to generate samples adhering to the same data distribution. Following this idea, five and eight of the surveyed papers utilized transform-based and deep generative model-based DA methods, respectively, with 1-D vibration signals as input. The latter involves three main techniques: reusing samples from other domains through instance-based TL, obtaining available features via feature-based TL, and utilizing attribute representations through attribute-based FSL. According to the statistics of the surveyed papers, feature-based approaches were employed 15 times for cross-domain scenarios, and attribute-based methods were chosen 7 times for predicting novel classes with zero training samples. Data imbalance is another common problem in FD, with 16 articles retrieved on this topic, most of which apply deep generative models to address inter-class imbalance problems. In addition to the issues discussed above, data quality problems such as incomplete data and noisy labels have also gained attention, with two and three papers based on deep generative models being presented, respectively.

As for the issues caused by limited data at the model level, such as overfitting, diminished accuracy, and weakened generalization, researchers have also proposed various solutions. These include 12 papers using parameter-based TL methods, 14 papers applying metric-based FSL methods, and 8 papers using MAML-based FSL approaches. Among these, parameter-based TL methods leverage knowledge within the structure and parameters of models to decrease training time, while metric-based FSL alleviates the requirement for sample size by learning category similarities, and MAML-based FSL achieves fast adaptation to novel FD tasks by using meta-learned knowledge. These successful applications also demonstrate the potential for integrating TL and FSL paradigms to improve model accuracy and generalizability.

4.2.2 Remaining challenges

The data-level and model-level approaches proposed above have made significant progress in solving the small data problems in FD tasks. However, there are still some challenges that need to be addressed urgently.

4.2.2.1 Quality of small data

In our survey of 107 studies on FD tasks, most focused on solving sample size problems, and only five papers investigated data quality issues in small data challenges. It is important for researchers to realize that a voluminous collection of irrelevant samples is far inferior to a small yet high-quality dataset for FD tasks. The poor quality of small data stems from both samples and labels, including but not limited to missing data, noise and outliers in signal measurement, and errors during labeling. Consequently, there is a large research gap in factor analysis, data quality assessment and data enhancement.

4.2.2.2 System-level FD with limited data

The majority of current algorithms for handling small data problems focus on component-level FD, as evidenced by their applications to bearings (Zhang et al. 2020a; Yu et al. 2020, 2021a) and gears (Zhao et al. 2020b). However, these methods cannot meet the diagnostic demands of intricate industrial systems composed of multiple components. Thus, developing intelligent models to perform system-level FD with limited data requires more exploration.

4.3 Small data problems in RUL prediction tasks

4.3.1 Main problems and corresponding solutions

The paradox inherent in RUL prediction lies in its aim to estimate the degradation trends of equipment based on historical monitoring data, whereas run-to-failure data are difficult to obtain. This paradox has motivated scholars to recognize the significance of small data issues within prognostic tasks. Among the 27 reviewed papers, the problems of limited labeled training data, class imbalance, incomplete data, and poor model generalization are mainly studied. While these issues are similar to those in the FD task, the RUL prediction task has different implications due to its continuous label space (Ding et al. 2023a). Specifically, limited labeled training data makes it difficult to learn sufficiently robust representations of health indicators, class imbalance may lead to more frequent prediction of non-failure events and produce conservative estimates, missing information in incomplete data further increases prediction uncertainty, and poorer generalization capability reduces the compatibility of the model with different operating conditions or devices.

RUL prediction is a typical regression task, wherein the quantity of training data profoundly influences the feature learning and nonlinear fitting abilities of DL models. To address the challenges of limited labeled training data, solutions include transform-based (Fu et al. 2020; Sadoughi et al. 2019; Gay et al. 2022) and generative model-based (Zhang et al. 2020b) DA methods, alongside instance-based (Zhang et al. 2020c; Ruan et al. 2022) and feature-based (Xia et al. 2021; Mao et al. 2021, 2020) TL methods. Among the reviewed papers, three sampling-based DA methods and one deep generative model-based DA approach have been reported to alleviate class imbalance problems. For instance, the Adaptive Synthetic over-sampling strategy was proposed in Liu and Zhu (2020) for tool wear prediction with imbalanced truncation data. Another major challenge of RUL prediction is incomplete time-series data, which is treated as an imputation problem; the GAN-based methods proposed in Huang et al. (2022), Wenbai et al. (2021) filled in missing data by automatically learning the correlations within time series. Model-level solutions based on TL and FSL have also been employed to enhance the generalization of predictive models across domains when faced with limited time series samples. Notably, MAML-based few-shot prognostics (Li et al. 2019, 2022d; Ding et al. 2021, 2022a; Mo et al. 2022; Ding and Jia 2021) have recently demonstrated substantial advancements within the PHM field. In addition, LSTM has become a popular benchmark model for RUL prediction tasks owing to its proficiency in capturing long-term dependencies, and the combination of LSTM with CNN has extended the capability of learning degradation patterns (Wenbai et al. 2021; Xia et al. 2021).

4.3.2 Remaining challenges

Significant strides have been made in addressing the challenges of limited data in RUL predictions. However, it is noteworthy that many of the proposed methods rely more or less on certain assumptions that might not hold in real-world conditions. In order to achieve more reliable forecasts, a number of major challenges must be addressed.

4.3.2.1 Interpretability of prognostic models

Although numerous prognostic models have shown impressive predictive performance, many are difficult to interpret. The inherent “black box” nature of DL models limits interpretability, transparency, and causal insight into both the models and their outcomes. Consequently, within RUL prediction, interpretability is much needed to reveal the underlying degradation mechanisms hidden in the monitoring data, thus increasing the level of “trust” in intelligent models.

4.3.2.2 Uncertainty quantification in small data conditions

Uncertainty quantification (UQ) is an important dimension of the PHM framework that can improve the quality of RUL prediction through risk assessment and management. Uncertainty involved in RUL predictions can be categorized into aleatory uncertainty and epistemic uncertainty (Kiureghian and Ditlevsen 2009). The first type often results from the noise inherent in data, such as the noise in signal measurements, while the second is attributed to deficient knowledge of the model, including its architecture and parameters. As discussed above, the impacts of the small data challenge at the data level (incomplete data and unbalanced distribution) and the model level (poor generalization) both further increase the uncertainty of predictive results, and few studies have examined UQ under small data conditions. The existing research on the UQ of intelligent RUL predictions mainly applies Gaussian process regression and Bayesian neural networks. For example, Ding et al. (2023b) designed a Bayesian approximation enhanced probabilistic meta-learning method to reduce parameter uncertainty in few-shot prognostics. A recent study (Nemani et al. 2023) demonstrates that physics-informed ML is promising for the UQ of RUL predictions in small data conditions by combining physics-based and data-driven modeling.
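To make the distinction between aleatory and epistemic uncertainty concrete, the following minimal sketch splits the predictive variance of a set of probabilistic RUL predictors using the law of total variance. It is an illustration rather than the procedure of any cited work: the function name `decompose_uncertainty`, the assumption that each predictor outputs a mean and a noise variance, and the numbers are all hypothetical. Each row could, for instance, be one ensemble member or one posterior draw from a Bayesian neural network.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split predictive variance into aleatory and epistemic parts.

    means, variances: arrays of shape (n_members, n_samples), where each
    row holds one predictor's RUL mean and predicted noise variance.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    aleatory = variances.mean(axis=0)   # average predicted data noise
    epistemic = means.var(axis=0)       # disagreement between predictors
    return means.mean(axis=0), aleatory, epistemic, aleatory + epistemic

# Hypothetical outputs of three predictors on two test points.
member_means = [[100.0, 40.0], [104.0, 40.0], [96.0, 40.0]]
member_vars = [[4.0, 1.0], [4.0, 1.0], [4.0, 1.0]]
rul, aleatory, epistemic, total = decompose_uncertainty(member_means, member_vars)
print(rul, aleatory, epistemic, total)
```

In this toy case the predictors disagree only on the first test point, so its epistemic term is non-zero while the second point carries aleatory variance alone. With little training data, the epistemic term would typically be expected to dominate.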

5 Datasets and experimental settings

There is a growing number of proposed methods for small data problems in the PHM domain, but there is a lack of corresponding unified criteria for fair and valid evaluation of the proposed methods, one of the major reasons being the complexity and variability of the equipment and working conditions under study. To this end, we analyze and distill two key elements of model evaluation in current studies—datasets and small data settings, which are summarized in this section to provide guidance for effective evaluation of existing models.

5.1 Datasets

In the past decade, the PHM community has released many diagnostic and prognostic benchmarks that cover different mechanical objects, such as bearings (Smith and Randall 2015; Lessmeier et al. 2016; Qiu et al. 2006; Nectoux et al. 2012; Wang et al. 2018a; Bechhoefer 2013), gearboxes (Shao et al. 2018; Xie et al. 2016), turbofan engines (Saxena et al. 2008), and cutting tools (Agogino and Goebel 2007). Table 9 lists several datasets that have been widely used in existing research to study small data problems, and outlines their signal types, failure modes, counts of operational conditions, applicable PHM tasks, and features.

Different datasets exhibit distinct characteristics and are therefore suitable for studying various problems. Depending on how the fault data is generated, these datasets can be broadly categorized as simulated fault datasets, real fault datasets, and hybrid datasets. Among them, the simulated fault datasets (Smith and Randall 2015; Qiu et al. 2006; Wang et al. 2018a; Shao et al. 2018; Xie et al. 2016; Saxena et al. 2008; Bronz et al. 2020; Downs and Vogel 1993) obtain fault samples by using artificially induced faults or simulation software. Because the experimental process involves limited and human-controlled variables, the fault characteristics and degradation modes in the data are relatively simple, and DL models often achieve excellent performance. A typical example is the Case Western Reserve University (CWRU) dataset (Smith and Randall 2015), which is a well-known simulation benchmark widely used for small data problems in AD, FD, and RUL prediction tasks. The CWRU dataset has the characteristics of multiple failure modes, unbalanced classes, different bearings, and various operating conditions, which provide opportunities for studying limited labeled training data (Ding et al. 2019), class imbalance (Mao et al. 2019), incomplete data (Yang et al. 2020a), and equipment degradation under various conditions (Kim and Youn 2019; Li et al. 2021c).

In contrast, real fault datasets (Nectoux et al. 2012; Agogino and Goebel 2007) collect failure samples from equipment during natural degradation, which is often accompanied by many uncontrollable factors from the equipment itself and the external environment, resulting in more complex data distributions. These datasets are generally used to validate the robustness of small-data solutions in practical conditions (Sadoughi et al. 2019). Moreover, hybrid datasets (Lessmeier et al. 2016; Bechhoefer 2013) contain both artificially induced and real fault data, and they are used to validate transfer across objects, across working conditions, and from laboratory to real environments (Wang et al. 2020b).

Further, in terms of the types of signals contained in the datasets, vibration signals, sound signals, electric currents, and temperatures are the most common. These various signals open up avenues for developing multi-source data fusion techniques (Yan et al. 2023). In addition, some datasets include not only single faults but also composite faults, which facilitates the study of diagnosis and prognosis of compound faults. Moreover, as shown in Table 9, most of the datasets collect signals from individual components, whereas samples from subsystems (Shao et al. 2018) or entire systems (Downs and Vogel 1993) are also required for system-level diagnostics and prediction.

5.2 Experimental setups

During the execution of intelligent PHM tasks, the general process of conducting experiments for DL models is to first divide the dataset into training, validation, and test sets according to a certain ratio. However, in order to simulate limited data scenarios, designing “small data” is a conundrum. There are two popular strategies used in the current studies, as shown in Table 10.

5.2.1 Setting a small sample size

The most direct and commonly employed setup in studying small data problems involves reducing the number of training or test samples to a few or dozens, which is achieved by selecting a tiny subset of the entire dataset. For example, 2.5% of the dataset was used for training in Xing et al. (2022), meaning only five fault samples of each class were provided to the model, which is far fewer than the hundreds or thousands of samples required by traditional DL methods. Due to the ease of implementation and understanding, this strategy has been widely used in AD, FD, and RUL prediction tasks with limited data, and it was notably observed in most experiments using DA and TL methods. However, what counts as a “small sample” is relative to the total size of the dataset and lacks a unified standard; the sample size should therefore be kept consistent when comparing various methods.
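This setup can be sketched in a few lines. The example below draws a fixed number of training samples per class and leaves the rest for testing. It is a minimal sketch under stated assumptions: the helper `sample_k_per_class`, the four-class label array, and the choice of 200 samples per class (so that five per class equals 2.5%) are hypothetical and not taken from any cited study.

```python
import numpy as np

def sample_k_per_class(labels, k, seed=0):
    """Return sorted indices of k randomly chosen samples from each class."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    picked = []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        if len(idx) < k:
            raise ValueError(f"class {cls} has only {len(idx)} samples")
        picked.append(rng.choice(idx, size=k, replace=False))
    return np.sort(np.concatenate(picked))

# Hypothetical dataset: 4 health states with 200 samples each.
labels = np.repeat(np.arange(4), 200)
train_idx = sample_k_per_class(labels, k=5)           # 5 per class = 2.5%
test_idx = np.setdiff1d(np.arange(len(labels)), train_idx)
print(len(train_idx), len(test_idx))
```

Fixing both `k` and the random seed is one simple way to keep the training subset identical across the methods being compared.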

5.2.2 Following the N-way K-shot protocol

Another strategy is to treat PHM tasks under limited data conditions as a few-shot classification or regression problem. This strategy draws on the organization of the FSL method, which extends the input samples from the data point level to the task space. As described in Sect. 3.3, each N-way K-shot task consists of N (N ≤ 20) classes, with each class containing K (K ≤ 10) support samples. Multiple N-way K-shot subtasks can be created for the training and testing of FSL models. In the case of the CWRU dataset, for example, 10-way 1-shot or 5-shot FD tasks are frequently designed. This setting aligns better with the principles of the FSL framework and proves to be beneficial in detecting novel faults under unseen conditions. However, tasks need to be sampled from a sufficiently large number of categories; otherwise the tasks will be homogeneous and degrade model performance.
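The construction of a single N-way K-shot task can be illustrated as follows. This is a minimal sketch, not the sampler of any particular FSL library or cited paper: the function `sample_episode`, the query-set size `n_query`, and the ten-class label array are hypothetical choices for illustration.

```python
import numpy as np

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot task: support and query indices per class."""
    labels = np.asarray(labels)
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    support, query = [], []
    for cls in classes:
        idx = rng.permutation(np.flatnonzero(labels == cls))
        if len(idx) < k_shot + n_query:
            raise ValueError(f"class {cls} has too few samples")
        support.extend(idx[:k_shot])                   # K labeled examples
        query.extend(idx[k_shot:k_shot + n_query])     # held out for evaluation
    return classes, np.array(support), np.array(query)

# Hypothetical dataset: 10 fault classes with 50 samples each.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(10), 50)
classes, support_idx, query_idx = sample_episode(
    labels, n_way=10, k_shot=5, n_query=15, rng=rng
)
print(len(support_idx), len(query_idx))
```

Calling `sample_episode` repeatedly yields the pool of subtasks used for training and testing. When `n_way` equals the total number of classes, as in this example, every task contains the same classes, which illustrates why a larger category pool is needed for task diversity.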

6 Future research directions

Currently, most of the data sizes involved in intelligent PHM tasks are still in the small data stage and will be for a long time. Various methods proposed in existing research have made significant progress, but there is still a long way to go to realize data-efficient PHM. For this reason, we propose some directions for further research on small data challenges.

6.1 Data governance

Existing research on the limited data challenge focuses on the quantity of monitoring data, with relatively little attention paid to the quality of the samples. In fact, monitoring data serves as the “raw material” for implementing PHM tasks, and its quality seriously affects the performance of intelligent models as well as the accuracy of maintenance decisions. As a result, it is imperative to research the theories and methodologies concerning the governance of industrial data, which involves the quantification, assessment, and enhancement of data quality (Karkošková 2023). An in-depth exploration of these ensures that the collected monitoring data meets the data quality requirements set out in the ISO/IEC 25012 standard (Gualo et al. 2021), thereby minimizing the adverse effects of factors such as sensor drift, measurement errors, environmental noise, and label inaccuracies. Data governance is a key component to steer the trajectory of intelligent PHM from the prevalent model-centric paradigm towards a data-centric fashion (Zha et al. 2023).

6.2 Multimodal learning

Multimodal learning is a novel paradigm to train models with multiple different data modalities, which provides a potential means to solve small data problems in PHM. Specifically, rich forms of monitoring data exist in industry, including but not limited to surveillance videos, equipment images, and maintenance records. These data contain a wealth of intermodal and cross-modal information, which can be fused by multimodal learning techniques (Xu et al. 2023) to compensate for the low information density of limited unimodal data. Meanwhile, multimodal data from different systems and equipment can help to perceive their health status more comprehensively, thus improving the intelligent diagnosis and forecasting capability for the entire fleet of equipment (Jose et al. 2023).

6.3 Physics-informed data-driven approaches

Existing studies have demonstrated that data-driven approaches, especially those based on DL, excel at capturing underlying patterns from multivariable data, but they are sensitive to small dataset sizes. Physics model-based methods, by contrast, incorporate mechanisms or expert knowledge during the modeling process but have limited data processing capabilities. Considering the respective strengths and weaknesses of these two paradigms, an emerging trend is to develop hybrid frameworks that integrate domain knowledge with implicit knowledge extracted from data (Ma et al. 2023), which has two obvious advantages in solving small data problems. On the one hand, the introduction of physical knowledge reduces the black-box characteristics of the DL model to a certain extent and enhances the interpretability of PHM decision-making under small samples (Weikun et al. 2023). On the other hand, physical modeling takes known physical laws and principles as prior knowledge, which can reduce the uncertainty and domain bias brought by small-sample data under complex working conditions. For example, Shi et al. (2022) validated the effectiveness of introducing multibody dynamic simulation into data augmentation for robustness enhancement.
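One common way to combine the two paradigms is to add a physics-based penalty to the data-fitting loss. The sketch below is purely illustrative and does not reproduce the method of any cited work: it assumes, as a stand-in for real domain knowledge, that the predicted RUL of an unmaintained unit should not increase over time, and the function `hybrid_loss`, its `weight` parameter, and the numbers are hypothetical.

```python
import numpy as np

def hybrid_loss(pred_labeled, target, pred_sequence, weight=1.0):
    """Data-fitting loss plus a penalty for physically implausible output.

    pred_labeled, target: predictions and labels for the few labeled samples.
    pred_sequence: predictions along one unit's time-ordered (possibly
    unlabeled) monitoring sequence.
    """
    pred_labeled = np.asarray(pred_labeled, dtype=float)
    target = np.asarray(target, dtype=float)
    pred_sequence = np.asarray(pred_sequence, dtype=float)
    data_term = np.mean((pred_labeled - target) ** 2)
    # Assumed prior: RUL should not rise between consecutive time steps.
    increases = np.maximum(np.diff(pred_sequence), 0.0)
    physics_term = np.mean(increases ** 2)
    return data_term + weight * physics_term, data_term, physics_term

# Hypothetical predictions: two labeled points and one unlabeled sequence.
total, data_term, physics_term = hybrid_loss(
    pred_labeled=[90.0, 50.0],
    target=[100.0, 50.0],
    pred_sequence=[100.0, 95.0, 97.0, 80.0],
    weight=0.5,
)
print(total, data_term, physics_term)
```

Because the penalty needs no labels, it can be evaluated on unlabeled monitoring sequences, which is one reason such constraints are attractive when labeled run-to-failure data are scarce.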

6.4 Weak-supervised learning