Abstract

Existing machine reading comprehension methods chunk long texts with a fixed stride, which loses contextual information at chunk boundaries and prevents information from being exchanged between chunks. This paper proposes the DC-Graph model, which addresses these issues by reconstructing and supplementing the information in long texts. Knowledge graphs contain extensive knowledge, and the semantic relationships between their entities have strong logical characteristics, which can assist a model in semantic understanding and reasoning. By categorizing the questions, this paper filters the content of long texts by category and reconstructs the content that matches the question category, compressing the long text so that as few document chunks as possible are produced when it is input into BERT. In addition, the unstructured text is transformed into a structured knowledge graph, from which features are extracted using graph convolutional networks. These features are then added to each chunk as global information to aid answer prediction. Experimental results on the CoQA, QuAC, and TriviaQA datasets show that our method outperforms both BERT and the Recurrent Chunking Mechanism (RCM), which follows the same line of improvement, in terms of F1 and EM scores. The code is available at (https://github.com/guohaozhang/DC-Graph.git).


1 Introduction

In recent years, with the widespread application of attention mechanisms and the transformer (Vaswani et al. 2017) encoder-decoder framework in deep learning, a series of pre-trained language models have emerged, the most representative of which are BERT (Kenton and Toutanova 2019) and GPT (Radford et al. 2018). These pre-trained language models have achieved promising results in various downstream natural language processing tasks.

Compared with large-scale, general-purpose models such as ChatGPT (Ouyang et al. 2022), models such as BERT have much smaller computational requirements. Moreover, when trained on high-quality domain-specific datasets, BERT-based models may outperform ChatGPT in that domain. However, the basic BERT model limits the input length: the question and the document together cannot exceed 512 tokens. For documents that exceed this limit, a common approach is a sliding window, in which a fixed-size window is moved across the document with a fixed stride and each window is fed to BERT as a separate input. Although this approach makes it possible to process documents longer than the input limit, it has several drawbacks. First, the sliding window operates purely on tokens and ignores sentence boundaries, so sentence-level information may be lost (Clark and Gardner 2018). Second, not all of the information in a document is useful, and using the whole document without filtering may introduce noise into subsequent tasks. Finally, information exchange between chunks is limited, because each chunk can only use the information it contains.
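To make the boundary problem concrete, the following minimal Python sketch chunks a whitespace-tokenized document with a fixed window size and stride. It is an illustration, not BERT's actual preprocessing: it ignores WordPiece tokenization and the question and special tokens that also occupy the 512-token budget, and the function name `sliding_window_chunks`, the toy text, and the window sizes are hypothetical.

```python
from typing import List


def sliding_window_chunks(tokens: List[str], max_len: int, stride: int) -> List[List[str]]:
    """Split a token sequence into windows of at most max_len tokens,
    moving the window start forward by `stride` tokens each time."""
    if max_len <= 0 or stride <= 0:
        raise ValueError("max_len and stride must be positive")
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):  # the last window reached the end
            break
        start += stride
    return chunks


# Toy document of two sentences, 14 whitespace tokens in total.
tokens = "Kid Ory was a trombonist . He led a band in New Orleans .".split()
for chunk in sliding_window_chunks(tokens, max_len=6, stride=4):
    print(chunk)
```

With a window of 6 tokens and a stride of 4, the second sentence ("He led a band in New Orleans .") is split between the second and third windows, so no single chunk sees it whole.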

Gong et al. (2020) introduced a recurrent chunking mechanism that guides the model in deciding the position and size of the next chunk. Groeneveld et al. (2020) used BERT to predict sentence-level relevance and filter the document accordingly. Their work shows that, even without modifying the model structure, optimizing the documents fed into BERT can both reduce training time and improve prediction accuracy. However, their document optimization operates at the chunk level and the sentence level, respectively, so it does not reach the word level and still retains some unnecessary information. In addition, their models process every document in the same way, without differentiating between documents to emphasize the parts that matter most in each one.

In this study of long-text machine reading comprehension, the texts in the dataset have an average length of over 2000 words. Without any modification, the BERT model must split such texts into chunks, which truncates information and forces each chunk to rely only on its own content for answer prediction. However, our analysis of these long texts shows that the key information tends to be concentrated around a particular theme. If the themes can be classified and used to filter the information in the document, text segmentation can be minimized, so that the document information is used as comprehensively as possible for answer prediction. Even after category-based filtering, texts longer than the sliding window still have to be split into chunks. Moreover, because of the inherent subjectivity and variability of language, each chunk cannot see how its own information connects to text on the same theme in subsequent chunks. We were inspired by the widespread application of graphs in natural language processing in recent years. Graphs (Wu et al. 2020; Xia et al. 2021) have a stronger logical structure, and when unstructured text is transformed into a structured representation according to certain rules, the resulting graph can serve as an additional source of information with global context and logical connections, enhancing downstream tasks.

To address incomplete information at chunk boundaries, irrelevant information within chunks, and the lack of communication between chunks, which affect both the BERT model itself and previous work, this paper proposes DC-Graph, a chunk optimization model based on document classification and graph learning. DC-Graph consists of four modules: category judgment, document reconstruction, graph construction, and answer prediction. In the category judgment module, a category classifier determines the category of each question. The document reconstruction module labels the question with its category and filters the document, retaining only the information that belongs to the same category as the question. As a result, fewer chunks are generated when the document is passed through BERT, and each chunk can use as much relevant information as possible for answer prediction. The graph construction module uses dependency parsing, named entity recognition, and relation extraction to extract triples from the question and the reconstructed document and assembles them into a graph, from which graph learning techniques extract relevant features. Finally, the answer prediction module integrates the outputs of the other modules and feeds them into a neural network to predict the answer. Because each of the four modules serves a distinct purpose and its intermediate output shows how the model operates, DC-Graph provides an interpretable framework for machine reading comprehension that integrates document classification and graph learning.

In our experiments, we evaluated DC-Graph on the dialogue-based machine reading comprehension datasets CoQA and QuAC and on the long-document machine reading comprehension dataset TriviaQA. The results show that our approach not only outperforms the baseline BERT-base model but also reduces the required training time by approximately 9.3%. Gong et al. (2020) proposed the Recurrent Chunking Mechanism (RCM), which uses reinforcement learning to learn chunking decisions and a recurrent mechanism to pass information from previous chunks to subsequent ones to aid answer prediction. Because their method addresses the same problem as DC-Graph, we also compare our model with RCM, and our approach outperforms it.

The main contributions of DC-Graph model are as follows:

1. Document Classification and Reconstruction: We introduce a document classification module that categorizes questions and reconstructs the document by labeling each question with its category. This allows us to filter the document content and retain only the information relevant to the question, reducing the need for excessive chunking.

2. Graph Construction and Learning: We develop a graph construction module that uses a rule-based approach, built on dependency parsing and named entity recognition results, to construct triples covering all entities in the question and the reconstructed document, and applies graph learning techniques to extract features from the resulting graph. This provides additional contextual and logical connections within the document that benefit downstream tasks.

3. Experimental Validation: We validate DC-Graph on three datasets. The results show that our model outperforms the baseline BERT model while reducing training time, and a comparison with the RCM of Gong et al. (2020) further demonstrates its effectiveness.

Overall, our work advances long-text machine reading comprehension by introducing a chunk optimization model that combines document classification and graph learning, improving both performance and information utilization.

2 Related works

The machine reading comprehension (MRC) task is currently a research focus in natural language processing and is defined as "the process of extracting and constructing the semantic meaning of written text through interaction." Depending on the application scenario, MRC tasks can be divided into four types: extractive, generative, single-choice, and multiple-choice. In all of them, the goal is for the model to obtain the correct answer to a given question from the corresponding context paragraph.

2.1 Enhanced reasoning for MRC models

Before the emergence of pre-trained language models, researchers used attention mechanisms to let questions and context paragraphs attend to each other. For example, Hermann et al. (2015) proposed the neural "Attentive Reader" and "Impatient Reader" models, and Seo et al. (2016) proposed the BiDAF model. Although these models improved significantly on earlier deep learning models, they still fell short of human-level performance.

The emergence of fully attention-based pre-training language models has significantly improved the effectiveness of machine reading comprehension. Machine reading comprehension models based on large-scale pre-training language models such as BERT have achieved state-of-the-art performance on multiple relevant datasets. This shift has also changed the research focus in machine reading comprehension. Currently, most of the related work in machine reading comprehension is centered around enhancing the model’s reasoning abilities and establishing feedback and adjustment mechanisms.

Most of the current research on enhancing model reasoning abilities is conducted during the pre-training phase of language models. These approaches involve designing new pre-training tasks to further train the language models and equip them with additional reasoning capabilities. For instance, Jiao et al. (2021) enhanced the logical reasoning ability of the BERT model by introducing two pre-training tasks: frequency-based word masking and sentence ordering. Li et al. (2020) performed entity relation prediction pre-training on BERT before applying it to medical question-answering, thereby deepening the model’s understanding of the medical domain. Zheng et al. (2023) modeled the fine-tuning framework as a causal graph and found that catastrophic forgetting is caused by missing causal effects in the pre-training data. They proposed a unified fine-tuning objective based on a causal perspective to restore causal relationships. Diao et al. (2023) introduced MixDA, a method that decouples the feed-forward networks in the Transformer architecture and dynamically integrates knowledge from different domain adapters using a mixed adapter gating mechanism. Zhu et al. (2023) proposed the CI4MRC method, which effectively alleviates the name bias by adjusting the neuron-wise and token-wise confounding factors.

These approaches significantly improve model reasoning and are widely applicable to downstream tasks. However, they have little effect when training data is scarce and therefore require large amounts of high-quality labeled data. In addition, they still rely on fixed-length chunking for long texts and have no mechanism designed specifically for long texts.

2.2 MRC models for long texts

Currently, there are two approaches to handling long texts in machine reading comprehension: increasing the input length of the model to accommodate more text, or reducing the long text to fit within the model's input length limit. Notable work on increasing the input length includes Longformer (Beltagy et al. 2020) and Big Bird (Zaheer et al. 2020). Both extend the maximum input length from 512 to 4096 tokens, which is sufficient for most texts. However, texts longer than 4096 tokens still require fixed-stride chunking, so the chunking problem remains.

More research has been devoted to reducing long texts. Before the emergence of pre-trained language models, there were numerous improved variants of Recurrent Neural Networks (RNN) (Rumelhart et al. 1986) and Long Short-Term Memory (LSTM) (Hochreiter and Schmidhuber 1997). LSTM-Jump, proposed by Yu et al. (2017), trains an LSTM with reinforcement learning to decide how many time steps to skip at each state, with the maximum number of tokens skipped in a single jump controlled by a hyperparameter. Seo et al. (2018) introduced Skim-RNN, which dynamically decides at each time step whether to update the full hidden state with a large RNN or only a small part of it with a small RNN. Hansen et al. (2018) proposed the Structural-Jump-LSTM model, which decides at each time step whether the next input should start from the next token or from the next punctuation mark, allowing the model to process the text according to its structural properties. Nishida et al. (2019) developed a query-focused extractor that uses RNN states to identify relevant sentences, capturing dependencies between sentences to perform sentence selection. By focusing on the most relevant sentences, the model can effectively reduce long texts and improve comprehension.

After the emergence of pre-trained language models, Zhao et al. (2021) introduced the RoR (Read-over-Read) model, which consists of two components: a chunk reader and a document reader. The chunk reader predicts a set of regional answers for each chunk of the text and then compresses the predicted answers into a condensed encoding. The document reader uses this encoding to predict the final answers over the complete document. Guan et al. (2022b) proposed the Block-Skim approach, which identifies the context information needed to answer a question, retaining the parts the model deems useful and skipping those deemed unimportant. Feng et al. (2023) proposed the KALM method, which uses knowledge graphs and language models (LMs) to build multiple knowledge-aware context representations of long documents and employs a "context fusion" layer to exchange knowledge between the different levels and derive an overall document representation. Du et al. (2023) constructed a Structure-Discourse Hierarchical Graph (SDHG). This approach first builds sentence-level discourse graphs for each section and encodes discourse relationships with graph attention. It then constructs a part-level structure graph based on the natural document structure and models the interaction between the question and the context. Finally, the representations from the different levels are integrated for joint answering and conditional decoding.

This paper takes a different approach: rather than restructuring long texts according to the question category after they have been input into a pre-trained language model, it restructures them beforehand. Only the text relevant to the question category is retained, which keeps the final answer consistent with the question category without adding extra computational burden.

2.3 MRC with knowledge graph

Because knowledge graphs contain a vast amount of knowledge and strong logical connections between entities, they have the potential to assist natural language processing models. Many studies in machine reading comprehension use knowledge graphs as external knowledge; widely used examples include Freebase (Bollacker et al. 2008) and ConceptNet (Speer et al. 2017). Subsequent research in graph learning has mostly focused on building more efficient models for extracting graph features (Schlichtkrull et al. 2018; Lin et al. 2019; Wang et al. 2020; Feng et al. 2020). In machine reading comprehension, Zheng and Kordjamshidi (2022) address the problem of missing edges between entities in subgraphs extracted from external knowledge graphs: their Dynamic Relational Graph Network recovers the missing edges and establishes direct connections between entities to facilitate multi-hop reasoning. Guan et al. (2022a) tackle the problem that the context representation of the QA text and the relationships between entities in the knowledge graph (KG) are modeled separately: their Collaborative Reasoning Network connects the two modules with a transformer-like structure so that they reason jointly. Zhao et al. (2023) proposed the Verify-and-Edit framework, which post-edits reasoning chains based on external knowledge to improve the factual accuracy of predictions. Zhang et al. (2023) introduced FC-KBQA, which extracts relevant fine-grained knowledge components from a knowledge base and reorganizes them into medium-grained knowledge pairs used to generate the final logical expression. Li et al. (2023) retrieve the most relevant triples from a knowledge graph, reorder them, merge them with the question, and input the result into a language model so that more entities are involved in answer prediction.

These works mainly apply knowledge graphs to commonsense question answering, and the graphs are constructed manually in advance. In this paper, the knowledge graph is generated on the fly from the long text itself and carries category information, which makes it more useful for answer prediction.

3 Problem definition

The goal of this paper can be described as follows: given the question sequence q, the document word sequence d, the document sentence sequence s, and the external category knowledge base c, reconstruct the document word sequence \({\tilde{d}}\) and its corresponding document sentence sequence \({\tilde{s}}\). The question sequence q is labeled with its category, forming \({\bar{q}}\). The question sequence q is also transformed into its declarative form \({\tilde{q}}\), and the question graph \(G_{{\tilde{q}}}\) and document graph \(G_{{\tilde{d}}}\) are then constructed from the declarative question sequence \({\tilde{q}}\) and the reconstructed document word sequence \({\tilde{d}}\). Finally, the labeled question sequence \({\bar{q}}\), the reconstructed document word sequence \({\tilde{d}}\), the question graph \(G_{{\tilde{q}}}\), and the document graph \(G_{{\tilde{d}}}\) are combined to predict the starting position sequence \(p^s\) and the ending position sequence \(p^e\) of the answer. The answer sequence is denoted \(a=\{a_1, a_2, \ldots , a_i \}\). The notation is summarized in Table 1.

3.1 Category judgment

The input to the category judgment module is the question sequence q and the external category knowledge base c. The output is the predicted category sequence \({\tilde{c}}=\{{\tilde{c}}_1, {\tilde{c}}_2, \ldots , {\tilde{c}}_j \}\), where \({\tilde{c}}_j\) represents the probability that the question belongs to the j-th category. The goal of this module is to build a question classification model \(f_1(\cdot )\) that determines the category of the input question. This can be represented as follows:

\[{\tilde{c}} = f_1(q, c)\]

3.2 Document reconstruction

The input to the document reconstruction module is the question sequence q, the document word sequence d, and the output of the category judgment module \(f_1(q,c)\). The output includes the reconstructed document word sequence \({\tilde{d}}=\{{\tilde{d}}_1, {\tilde{d}}_2, \ldots ,{\tilde{d}}_{{\tilde{m}}} \}\), the reconstructed document sentence sequence \({\tilde{s}}=\{{\tilde{s}}_1, {\tilde{s}}_2, \ldots , {\tilde{s}}_{{\tilde{n}}} \}\), and the labeled question sequence \({\bar{q}}=\{{\bar{q}}_1, {\bar{q}}_2, \ldots , {\bar{q}}_{{\bar{l}}} \}\). The goal of this module is to extract the information in the original document d that belongs to the same category as the question q and concatenate it into a new document. All sentences in the reconstructed document belong to that category and contain no unrelated information from other categories. Because the model predicts answers from these sentences, the answers come from the specified category. In addition, the reconstructed document contains fewer sentences than the original document, so fewer chunks are needed. This can be represented as follows:

3.3 Graph construction

The input to the graph construction module is the question sequence q and the reconstructed document word sequence \({\tilde{d}}\), and the output consists of the question graph \(G({\tilde{q}}\_u,{\tilde{q}}\_v)\) and the document graph \(G({\tilde{d}}\_u,{\tilde{d}}\_v)\). Both graphs are directed: in the question graph, \({\tilde{q}}\_u\) denotes the head nodes and \({\tilde{q}}\_v\) the tail nodes; in the document graph, \({\tilde{d}}\_u\) and \({\tilde{d}}\_v\) denote the head and tail nodes, respectively. The goal of this module is to construct structured graph information from unstructured text and to incorporate the complete entity relationships of the text into each BERT chunk in graph form, to assist in predicting the starting and ending positions of the answer. This can be represented as follows:

3.4 Answer prediction

The input to the answer prediction module is the labeled question sequence \({\bar{q}}\), the reconstructed document sentence sequence \({\tilde{s}}\), the question graph \(G({\tilde{q}}\_u,{\tilde{q}}\_v)\), the document graph \(G({\tilde{d}}\_u,{\tilde{d}}\_v)\), and the output of the category judgment module \({\tilde{c}}\). The output is the probability of each starting position \(p^s\) and ending position \(p^e\) in the reconstructed document \({\tilde{d}}\) for the span that answers the labeled question \({\bar{q}}\). The objective of this module is to combine the textual features of each BERT chunk with the graph features, which carry the complete entity relationships of the text, so that every chunk has access to global information when predicting the starting and ending positions of the answer. This can be represented as follows:

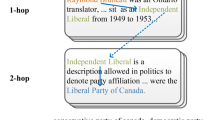

3.5 Concrete example

As shown in the example in Fig. 1, for the question "What was the first name of the jazz trombonist Kid Ory?", the category judgment module determines that the question belongs to the "Person" category. We therefore add the label "Class Person" at the beginning of the question to inform the model that the answer should be a person's name. Using the external knowledge base, we determine that the second and fourth sentences of the document contain no person names, so these two sentences are excluded from the reconstructed document.

The question is transformed into a declarative sentence form by replacing the interrogative word "What" with "[MASK]". Dependency parsing, entity recognition, and relation extraction are then performed on both the declarative question and the reconstructed document to construct the knowledge graph. Two entities are identified in the question and five entities are identified in the document. The verb phrases or prepositional phrases between two entities are considered as their relations.
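The question-to-declarative step in this example can be sketched as a token-level replacement. The set of interrogative words below is an assumption (the example only mentions "What"), the function name is hypothetical, and word order is left unchanged, so a question such as "Where did he go ?" becomes "[MASK] did he go ?".

```python
from typing import List

# Assumed interrogative vocabulary; the paper does not enumerate it.
INTERROGATIVES = {"what", "who", "whom", "whose", "which", "where", "when", "why", "how"}


def to_declarative(question_tokens: List[str]) -> List[str]:
    """Replace the first interrogative word with [MASK]; keep all other tokens.

    A copy is returned so the caller's token list is not modified.
    """
    tokens = list(question_tokens)
    for i, tok in enumerate(tokens):
        if tok.lower() in INTERROGATIVES:
            tokens[i] = "[MASK]"
            break
    return tokens


q = "What was the first name of the jazz trombonist Kid Ory ?".split()
print(" ".join(to_declarative(q)))
# [MASK] was the first name of the jazz trombonist Kid Ory ?
```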

The graph node embeddings are initialized and passed through Graph Convolutional Neural Networks or Graph Attention Networks to obtain their graph features. Meanwhile, the question and the reconstructed document are passed through BERT to obtain their textual features. Finally, the graph features and textual features are combined to predict the starting and ending positions of the answer.
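The feature pipeline described above can be sketched in numpy under several assumptions that this section does not settle: the graph encoder is a stack of standard symmetric-normalized GCN layers (a GAT would replace the aggregation step), directed edges are symmetrized, node features are mean-pooled into one graph vector, and that vector is concatenated to every token representation of a chunk before hypothetical linear start/end scorers. Random arrays stand in for the initialized node embeddings and for BERT's textual features, and all function names are illustrative.

```python
import numpy as np


def normalized_adjacency(num_nodes, edges):
    """Return D^{-1/2} (A + I) D^{-1/2} for a list of (head, tail) index pairs."""
    A = np.zeros((num_nodes, num_nodes))
    for u, v in edges:
        A[u, v] = A[v, u] = 1.0  # assumption: directed edges are symmetrized
    A_hat = A + np.eye(num_nodes)  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(A_norm, H, W):
    """One graph convolution: aggregate neighbours, project, apply ReLU."""
    return np.maximum(0.0, A_norm @ H @ W)


def graph_vector(A_norm, H, weights):
    """Stack GCN layers and mean-pool node features into one graph-level vector."""
    for W in weights:
        H = gcn_layer(A_norm, H, W)
    return H.mean(axis=0)


def softmax(x):
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()


def span_probabilities(T, g, w_start, w_end):
    """Copy graph vector g onto every token of chunk features T, then score starts/ends."""
    fused = np.concatenate([T, np.tile(g, (T.shape[0], 1))], axis=1)
    return softmax(fused @ w_start), softmax(fused @ w_end)


def best_span(p_start, p_end, max_answer_len=30):
    """Return (i, j) maximizing p_start[i] * p_end[j] with i <= j < i + max_answer_len."""
    best, best_score = (0, 0), -1.0
    for i in range(len(p_start)):
        for j in range(i, min(i + max_answer_len, len(p_end))):
            if p_start[i] * p_end[j] > best_score:
                best, best_score = (i, j), p_start[i] * p_end[j]
    return best


# Toy run: 3-node chain graph, 8-d node embeddings, one chunk of 6 tokens with 4-d features.
rng = np.random.default_rng(0)
A_norm = normalized_adjacency(3, [(0, 1), (1, 2)])
g = graph_vector(A_norm, rng.normal(size=(3, 8)),
                 [rng.normal(size=(8, 16)), rng.normal(size=(16, 16))])
T = rng.normal(size=(6, 4))  # stand-in for BERT token features of one chunk
p_s, p_e = span_probabilities(T, g, rng.normal(size=20), rng.normal(size=20))
print(g.shape, best_span(p_s, p_e))
```

Restricting the span so that the end does not precede the start is a common decoding convention in extractive QA rather than something specified in this section.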

4 Model

The DC-Graph model is built on the pre-trained language model BERT, which also serves as the baseline for comparison in this paper. As illustrated in Fig. 2, DC-Graph consists of four main modules: category judgment, document reconstruction, graph construction, and answer prediction.

4.1 Category judgement module

The purpose of this module is to determine the category of the document. For DC-Graph, we constructed our own knowledge bases for multiple categories through data collection and natural language processing methods. Each category's knowledge base includes a vocabulary of words that may appear in that category and a library of syntactic forms of sentences that may appear in that category. Because answers are available only for the training data, this module handles training data and test data differently.

The training data include the answer sequence \(a=\{a_1, a_2, \ldots , a_i \}\), and the category \({\tilde{c}}=\{{\tilde{c}}_1, {\tilde{c}}_2, \ldots , {\tilde{c}}_j \}\) of the document containing the answer is determined using the word vocabularies of the external category knowledge bases \(c=\{c_1, c_2, \ldots , c_j \}\). Here, \({\tilde{c}}_k\) indicates whether the answer a belongs to the k-th category. Specifically, this can be represented as follows:

In (5), \(k=1,2,\ldots ,j\). Note that exactly one category identifier \({\tilde{c}}_k\) in \({\tilde{c}}\) has the value 1; if several categories match, the one with the lowest index is set to 1.
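The labeling rule for training data can be sketched as a vocabulary lookup with lowest-index tie-breaking. The category vocabularies below are tiny hypothetical stand-ins for the authors' knowledge bases, and the function name is illustrative. This section does not say what happens when no vocabulary matches, so the sketch simply returns an all-zero vector in that case.

```python
from typing import List, Set


def answer_category(answer: List[str], vocabularies: List[Set[str]]) -> List[int]:
    """One-hot category vector for a training answer.

    c_k = 1 for the lowest index k whose vocabulary shares a word with the
    answer; all other entries stay 0 (all zeros if nothing matches).
    """
    onehot = [0] * len(vocabularies)
    answer_words = {w.lower() for w in answer}
    for k, vocab in enumerate(vocabularies):
        if answer_words & vocab:  # set intersection: any shared word
            onehot[k] = 1
            break  # the lower index takes priority
    return onehot


# Hypothetical vocabularies: index 0 = Person, index 1 = Location.
VOCABS = [{"edward", "ory", "armstrong"}, {"orleans", "louisiana", "place"}]
print(answer_category(["Edward"], VOCABS))                  # [1, 0]
print(answer_category(["New", "Orleans"], VOCABS))          # [0, 1]
print(answer_category(["Armstrong", "Louisiana"], VOCABS))  # [1, 0]
```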

The test data do not include the answer sequence a, so the category cannot be determined from the word vocabularies in the external knowledge base. Instead, we analyze the syntax of the question sequence q to determine the function of each word in the sentence and compare the result with the syntactic form library in the external knowledge base c to determine the category \({\tilde{c}}\) of the document corresponding to q. This can be represented as follows:

As with the training data, exactly one category identifier \({\tilde{c}}_k\) in \({\tilde{c}}\) has the value 1, and categories with smaller indices take priority.
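For test questions, the category is obtained by comparing a syntactic analysis of q with a library of syntactic forms. The sketch below is a deliberately simplified stand-in: it matches hypothetical regular-expression patterns against the lowercased question instead of using a dependency parse, but it keeps the same one-hot output and the same rule that the category with the smallest index wins. The pattern library and function name are illustrative only.

```python
import re
from typing import List

# Hypothetical "syntactic form library": regex patterns per category,
# in category-index order (0 = Person, 1 = Location, 2 = Date).
PATTERN_LIBRARY: List[List[re.Pattern]] = [
    [re.compile(r"^(who|whom|whose)\b"), re.compile(r"\bname\b")],
    [re.compile(r"^where\b"), re.compile(r"\bwhich (city|country|state)\b")],
    [re.compile(r"^when\b"), re.compile(r"\bwhat year\b")],
]


def question_category(question: str, library: List[List[re.Pattern]] = PATTERN_LIBRARY) -> List[int]:
    """One-hot category vector for a test question; the smallest matching index wins."""
    q = question.lower().strip()
    onehot = [0] * len(library)
    for k, patterns in enumerate(library):
        if any(p.search(q) for p in patterns):
            onehot[k] = 1
            break
    return onehot


print(question_category("What was the first name of the jazz trombonist Kid Ory?"))  # [1, 0, 0]
print(question_category("Where did Kid Ory move his band?"))                          # [0, 1, 0]
```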

4.2 Document reconstruction module

The purpose of this module is content filtering. Not all of the content in the original document contributes to answer prediction, and we want the document passed to BERT for answer prediction to contain as little unrelated information as possible. From the category judgment module, we obtain the category \({\tilde{c}}\) based on the question q (test data) or the answer a (training data). We prepend the category \({\tilde{c}}\) to the question q as a label, obtaining the labeled question representation \({\bar{q}}\).

In (6), we define the symbol "; " as the horizontal concatenation of two sequences.
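Following the "Class Person" example in Fig. 1, the concatenation in (6) can be sketched as prepending a category label to the question tokens. The category names and the two-token label format are assumptions based on that example, and the function name is hypothetical.

```python
from typing import List

# Hypothetical category inventory, in the same index order as the one-hot vector.
CATEGORY_NAMES = ["Person", "Location", "Date"]


def label_question(question_tokens: List[str], onehot: List[int]) -> List[str]:
    """Build the labeled question q_bar = [category label ; q]."""
    k = onehot.index(1)  # raises ValueError if no category was predicted
    return ["Class", CATEGORY_NAMES[k]] + list(question_tokens)


q = "What was the first name of the jazz trombonist Kid Ory ?".split()
print(" ".join(label_question(q, [1, 0, 0])))
# Class Person What was the first name of the jazz trombonist Kid Ory ?
```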

BERT-based machine reading comprehension models typically treat the document as a sequence of words, denoted as \(d=\{d_1, d_2, \ldots , d_m \}\). Therefore, when a fixed-length sliding window is used for chunking, a sentence may be split across different chunks, and contextual information at the chunk edges is lost. In DC-Graph, the answer prediction module treats the document as a sequence of sentences, denoted as \(s=\{s_1, s_2, \ldots , s_n \}\), where n is the total number of sentences in the document. \(s_k\) is the k-th sentence in the document, and \(s_k = \{d_x, \ldots , d_y \}\), so \(s_k\) contains \(y-x+1\) words. Based on \(s_k\), we obtain \(h_{s_k}\):

In (7), \(k=1,2,\ldots ,n\). The value of \(h_{s_k}\) indicates whether the sentence \(s_k\) contains words belonging to the category \({\tilde{c}}\). If \(h_{s_k}=1\), the sentence contains words from that category and is therefore retained when the document is concatenated. This step filters the sentence sequence of the document and yields \({\tilde{s}}\):

In (8), we define the symbol "\(\circ\)" as the filtering operation. Through this operation, only the sentences relevant to the category \({\tilde{c}}\) are retained in \({\tilde{s}}\). The reconstructed word sequence \({\tilde{d}}\) is obtained from the sentence sequence \({\tilde{s}}\).
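Equations (7) and (8) amount to a per-sentence indicator followed by a filter. A minimal sketch, assuming sentences are already tokenized and the category vocabulary is a hypothetical set of lowercased words; the toy document mirrors the Fig. 1 situation in which the second and fourth sentences are dropped, but its text is invented for illustration.

```python
from typing import List, Set, Tuple


def sentence_indicators(sentences: List[List[str]], vocab: Set[str]) -> List[int]:
    """h_{s_k} = 1 if sentence s_k contains at least one word of the category vocabulary."""
    return [int(any(w.lower() in vocab for w in s)) for s in sentences]


def reconstruct(sentences: List[List[str]], vocab: Set[str]) -> Tuple[List[List[str]], List[str]]:
    """Apply the filter s~ = s o h, then flatten s~ into the word sequence d~."""
    h = sentence_indicators(sentences, vocab)
    s_tilde = [s for s, keep in zip(sentences, h) if keep]
    d_tilde = [w for s in s_tilde for w in s]
    return s_tilde, d_tilde


doc = [
    "Edward Ory was a jazz trombonist .".split(),
    "The band played in the evenings .".split(),
    "Ory later moved to New Orleans .".split(),
    "That was in 1919 .".split(),
]
person_vocab = {"edward", "ory"}  # hypothetical Person vocabulary
s_tilde, d_tilde = reconstruct(doc, person_vocab)
print(sentence_indicators(doc, person_vocab))  # [1, 0, 1, 0]
print(len(d_tilde))                            # 14
```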

4.3 Graph construction module

The purpose of this module is to construct a structured graph from unstructured text. This paper focuses on machine reading comprehension for long texts, which contain many words, so the document must be chunked when it is passed into BERT. Even after the document reconstruction module, some documents still need to be divided into multiple chunks. Answer spans are then predicted separately within each chunk without access to the full context, which can bias the final prediction. In this module, we introduce a knowledge graph to enable information exchange between chunks. A knowledge graph is a structured representation of knowledge that organizes entities, relationships, and attributes into a graph to describe the relationships between things in the real world. It can contain a large amount of factual and semantic information and has a clear logical structure. When integrated into each chunk as additional context, the knowledge graph gives the chunk access to the document-wide context and to the logical relationships between entities. In DC-Graph, the knowledge graph is constructed using dependency parsing, named entity recognition, and relation extraction.

The question sequence q contains an interrogative word but not the answer, so performing entity recognition and relation extraction directly on it may miss necessary entities. To address this, we first convert it into a declarative sentence: we locate the interrogative word in the question sequence q and replace it with "[MASK]", obtaining the declarative form \({\tilde{q}}\). The declarative form \({\tilde{q}}\) and the reconstructed word sequence \({\tilde{d}}\) then undergo dependency parsing (DEP), named entity recognition (NER), and relation extraction (RE), represented as

In this module, \({\tilde{q}}\_u = \{{\tilde{q}}\_u_1, {\tilde{q}}\_u_2, \ldots , {\tilde{q}}\_u_x \}\) denotes the head nodes of the question graph and \({\tilde{q}}\_v = \{{\tilde{q}}\_v_1, {\tilde{q}}\_v_2, \ldots , {\tilde{q}}\_v_x \}\) its tail nodes. Head and tail nodes correspond one-to-one by index, and each pair represents a relation from the head node to the tail node. Likewise, the reconstructed document \({\tilde{d}}\) has head nodes \({\tilde{d}}\_u = \{{\tilde{d}}\_u_1, {\tilde{d}}\_u_2, \ldots , {\tilde{d}}\_u_y \}\) and tail nodes \({\tilde{d}}\_v = \{{\tilde{d}}\_v_1, {\tilde{d}}\_v_2, \ldots , {\tilde{d}}\_v_y \}\), where x and y are the maximum numbers of triples for the question and the document, respectively. Because questions and documents are processed identically, we describe the procedure for documents. For the document's relation extraction result \({\tilde{d}}\_RE\), the head and tail nodes of every triple are added to \({\tilde{d}}\_u\) and \({\tilde{d}}\_v\), respectively. Each entity in the entity recognition result \({\tilde{d}}\_NER\) is then checked against \({\tilde{d}}\_u\) and \({\tilde{d}}\_v\). If it appears in neither, a new triple containing that entity is constructed from the dependency parsing result \({\tilde{d}}\_DEP\) and added to \({\tilde{d}}\_u\) and \({\tilde{d}}\_v\). Finally, the document knowledge graph \(G({\tilde{d}}\_u, {\tilde{d}}\_v)\) is constructed from \({\tilde{d}}\_u\) and \({\tilde{d}}\_v\).
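A minimal sketch of this supplementation procedure follows, under stated assumptions: relation-extraction triples and recognized entities are given as plain strings, and the dependency parse is reduced to a hypothetical mapping from each word to its (dependency label, head word). The text does not specify how the new triple is formed from \({\tilde{d}}\_DEP\); here we assume it links the entity to its syntactic head. The function name `build_graph`, the toy sentence, and its tool outputs are all invented for illustration and are not the contents of Tables 2 and 3.

```python
from typing import Dict, List, Sequence, Tuple

Triple = Tuple[str, str, str]  # (head, relation, tail)

def build_graph(re_triples: Sequence[Triple], entities: Sequence[str],
                dep_heads: Dict[str, Tuple[str, str]]) -> Tuple[List[str], List[str], List[str]]:
    """Return index-aligned head nodes u, tail nodes v and relation labels.

    re_triples: relation extraction output (the *_RE result).
    entities:   named entity recognition output (the *_NER result).
    dep_heads:  hypothetical stand-in for the *_DEP result, mapping a word or
                entity to (dependency label, syntactic head word).
    """
    u: List[str] = []
    v: List[str] = []
    rel: List[str] = []
    # Step 1: every extracted triple contributes one head -> tail edge.
    for head, relation, tail in re_triples:
        u.append(head)
        v.append(tail)
        rel.append(relation)
    # Step 2: each entity absent from both u and v gets a new triple.
    # Assumption: the new triple links the entity to its syntactic head.
    for ent in entities:
        if ent in u or ent in v or ent not in dep_heads:  # covered, or no parse info
            continue
        label, governor = dep_heads[ent]
        u.append(governor)
        v.append(ent)
        rel.append(label)
    return u, v, rel

# Invented sentence "Alice moved from Paris to Berlin" with made-up tool outputs.
u, v, rel = build_graph(
    re_triples=[("Alice", "moved from", "Paris")],
    entities=["Alice", "Paris", "Berlin"],
    dep_heads={"Berlin": ("prep_to", "moved")},
)
print(list(zip(u, rel, v)))  # [('Alice', 'moved from', 'Paris'), ('moved', 'prep_to', 'Berlin')]
```

Keeping u and v as index-aligned lists mirrors the one-to-one head/tail correspondence described in the text, so the edge list of \(G({\tilde{d}}\_u, {\tilde{d}}\_v)\) is simply `zip(u, v)`.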

Consider the sentence "He kept La Place, Louisiana, as his base of operations due to family obligations until his twenty-first birthday, when he moved his band to New Orleans, Louisiana". Existing tools extract only two triples from it, as shown in Table 2. These triples do not cover all of the entities in the sentence, and a knowledge graph built from them alone would contribute much less global information to each chunk. The extracted triples therefore need to be supplemented so that every entity appears in the knowledge graph.

First, named entity recognition on this sentence identifies La Place, New Orleans, and Louisiana as entities. Comparing these with Table 2 shows that New Orleans and Louisiana do not appear in the directly extracted triples, so new triples must be constructed for them. Applying the graph construction algorithm proposed in this paper yields the five triples shown in Table 3, which now include every entity in the sentence.

4.4 Answer prediction module

BERT-based machine reading comprehension models usually treat the document as a word sequence, \({\tilde{d}}=\{{\tilde{d}}_1, {\tilde{d}}_2, \ldots ,{\tilde{d}}_{{\tilde{m}}} \}\). When a fixed-size sliding window is used for chunking, a sentence may be split across chunks, losing contextual information at the boundaries. The answer prediction module of DC-Graph instead treats the document as a sequence of sentences, \({\tilde{s}}=\{{\tilde{s}}_1, {\tilde{s}}_2, \ldots , {\tilde{s}}_{{\tilde{n}}} \}\). We pass the question representation \({\bar{q}}\) and the document representation \({\tilde{s}}\) into BERT to obtain the textual vector representation \(h_{{\bar{q}},{\tilde{s}}}\).
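This section does not spell out how sentences are packed into chunks. One plausible reading, sketched below under that assumption, is greedy packing: whole sentences are added to the current chunk until the next one would exceed the token budget left after the question and BERT's three special tokens ([CLS] and two [SEP]). The function name and the sentence lengths are hypothetical.

```python
from typing import List

def chunk_by_sentences(sent_lengths: List[int], budget: int) -> List[List[int]]:
    """Greedily pack whole sentences (by index) into chunks of at most `budget` tokens.

    sent_lengths[i] is the token count of sentence i. A sentence longer than the
    budget still gets a chunk of its own; a real system would have to split it.
    """
    chunks: List[List[int]] = []
    current: List[int] = []
    used = 0
    for i, n in enumerate(sent_lengths):
        if current and used + n > budget:  # next sentence would overflow: close chunk
            chunks.append(current)
            current, used = [], 0
        current.append(i)
        used += n
    if current:
        chunks.append(current)
    return chunks

question_tokens = 29                      # hypothetical question length
budget = 512 - question_tokens - 3        # minus [CLS] and two [SEP] tokens
print(budget, chunk_by_sentences([200, 250, 100, 300], budget))  # 480 [[0, 1], [2, 3]]
```

Because chunk boundaries only ever fall between sentences, no sentence is cut in half, which is the property the paragraph above contrasts with fixed-size windows.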

To let each chunk draw on the complete document context during answer prediction, we use graph learning models to convert the question graph \(G({\tilde{q}}\_u,{\tilde{q}}\_v)\) and the document graph \(G({\tilde{d}}\_u,{\tilde{d}}\_v)\) produced by the graph construction module into vector representations \(h_{G\_{{\tilde{q}}}}\) and \(h_{G\_{{\tilde{d}}}}\):

\(h_{G\_{{\tilde{q}}}} = GCN(G({\tilde{q}}\_u,{\tilde{q}}\_v))\)   (13)

\(h_{G\_{{\tilde{d}}}} = GCN(G({\tilde{d}}\_u,{\tilde{d}}\_v))\)   (14)

In the experiments, we use two graph learning models: Graph Convolutional Networks (Kipf and Welling 2016) and Graph Attention Networks (Veličković et al. 2018). GCN in (13) and (14) denotes the graph convolutional network. The question-graph and document-graph vectors are then embedded into the textual vector representation \(h_{{\bar{q}},{\tilde{s}}}\) to give the final vector representation \({\tilde{h}}\) used for answer prediction.
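A minimal pure-Python sketch of how a graph such as \(G({\tilde{d}}\_u,{\tilde{d}}\_v)\) might be encoded with one graph convolution in the sense of Kipf and Welling, \(H' = \mathrm{ReLU}(\hat{A} H W)\) with \(\hat{A} = D^{-1/2}(A+I)D^{-1/2}\). Assumptions: nodes have already been mapped to integer indices with initial feature rows; head-to-tail edges are treated as undirected; the weights are given rather than learned; and mean pooling to a single graph vector is our choice, since this section does not say how the graph vector is pooled or exactly how it is combined with \(h_{{\bar{q}},{\tilde{s}}}\).

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x k) and a (k x m) list-of-lists matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def normalized_adjacency(n: int, edges: Sequence[Tuple[int, int]]) -> Matrix:
    """D^{-1/2} (A + I) D^{-1/2}; each head->tail edge is treated as undirected."""
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # self-loops
    for head, tail in edges:
        a[head][tail] = a[tail][head] = 1.0
    deg = [sum(row) for row in a]  # every degree >= 1 thanks to the self-loop
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def gcn_layer(a_hat: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution: ReLU(A_hat @ H @ W)."""
    return [[max(0.0, x) for x in row] for row in matmul(a_hat, matmul(h, w))]

def graph_vector(h: Matrix) -> List[float]:
    """Mean-pool node vectors into one graph-level vector (pooling is an assumption)."""
    return [sum(row[j] for row in h) / len(h) for j in range(len(h[0]))]

# Toy graph: two nodes joined by one edge, identity features, hypothetical weights.
a_hat = normalized_adjacency(2, [(0, 1)])
nodes = gcn_layer(a_hat, [[1.0, 0.0], [0.0, 1.0]], [[1.0], [1.0]])
print(graph_vector(nodes))  # [1.0]
```

In practice the node strings in \({\tilde{d}}\_u\) and \({\tilde{d}}\_v\) would first be mapped to indices (for example with a dictionary), and the initial feature rows would come from an embedding of each node.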

The vector \({\tilde{h}}\) is passed through a linear layer followed by a softmax to obtain the start-position probability \(p^s\) of the answer:

\(p^s = \mathrm{softmax}(w_1^T {\tilde{h}})\)

where \(w_1^T\) is the weight of the linear layer that maps \({\tilde{h}}\) to start-position scores. The end-position probability is obtained in the same way, \(p^e = \mathrm{softmax}(w_2^T {\tilde{h}})\), where \(w_2^T\) is the weight of the linear layer for the end position.
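A minimal sketch of the start/end prediction step: each token's final vector is scored with \(w_1\) and \(w_2\), each score list is normalized with a softmax, and the answer is taken as the span maximizing \(p^s_i\,p^e_j\) with \(i \le j\). The span-decoding rule and the `max_len` cap are assumptions borrowed from common BERT question-answering practice; the section only defines \(p^s\) and \(p^e\), and how spans are compared across chunks is not covered here.

```python
import math
from typing import List, Sequence, Tuple

def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(w, x))

def predict_span(h: Sequence[Sequence[float]], w1: Sequence[float],
                 w2: Sequence[float], max_len: int = 30) -> Tuple[int, int]:
    """Return (start, end) token indices maximising p_s[start] * p_e[end].

    h[i] is the final vector for token i (a row of h-tilde); w1 and w2 are the
    start and end weight vectors. Only spans with start <= end and at most
    max_len tokens are considered.
    """
    p_s = softmax([dot(w1, hi) for hi in h])  # p^s = softmax(w_1^T h)
    p_e = softmax([dot(w2, hi) for hi in h])  # p^e = softmax(w_2^T h)
    best, best_score = (0, 0), -1.0
    for i in range(len(h)):
        for j in range(i, min(len(h), i + max_len)):
            if p_s[i] * p_e[j] > best_score:
                best, best_score = (i, j), p_s[i] * p_e[j]
    return best

tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
print(predict_span(tokens, w1=[5.0, 0.0], w2=[0.0, 5.0]))  # (0, 1)
```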

5 Experiment

5.1 Datasets

The machine reading comprehension datasets used in our experiments are CoQA (Reddy et al. 2019), QuAC (Choi et al. 2018), and TriviaQA (Joshi et al. 2017). CoQA and QuAC are conversational datasets in which each sample consists of multiple question-answer turns about a given context document. Because their context documents are relatively short, the experimental analysis in this paper focuses primarily on TriviaQA. TriviaQA is a large-scale machine reading comprehension dataset of 95,000 question-answer pairs sourced from Wikipedia and the web. Compared with other datasets, it shows greater variability between questions and answers and more often requires cross-sentence reasoning. We use the Wikipedia portion of TriviaQA, whose context documents contain around 2,600 words on average. Details of the three datasets are given in Table 4.

5.2 Baseline models

We compare DC-Graph with two baseline models built on pre-trained language models: BERT-base and Recurrent Chunking Mechanisms.

1. BERT-base: this model is the foundation of many subsequent works and performs well on machine reading comprehension tasks. Because of its input size limit, documents longer than the input length are handled with a sliding window that moves by a fixed stride, covering the content sequentially. The model treats all content equally and applies simple chunking without regard to the structure or importance of different parts of the document.

2. Recurrent Chunking Mechanisms (BERT-RCM) (Gong et al. 2020): this model improves on BERT's sliding window by adding a recurrent chunking mechanism that determines the position and size of the next window. The mechanism passes information from one chunk to the next, so the content of earlier chunks can contribute to predictions on later ones. Because its improvement approach is similar to ours, we apply the recurrent chunking mechanism to BERT-base for comparison with DC-Graph.
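For contrast with DC-Graph's sentence-based input, the fixed-stride sliding window used by the BERT-base baseline can be sketched as follows. Here the stride is the step between window starts, consistent with the "fixed step length" description and with `doc_stride` in the original BERT SQuAD script; note that some libraries instead use "stride" for the overlap between windows. The window size (the token budget left after the question and special tokens) and the function name are illustrative assumptions.

```python
from typing import List, Tuple

def sliding_windows(num_tokens: int, window: int, stride: int) -> List[Tuple[int, int]]:
    """Half-open token ranges [start, end) that cover a document of num_tokens tokens.

    Each window starts `stride` tokens after the previous one, so consecutive
    windows overlap by `window - stride` tokens when stride < window.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    spans = []
    start = 0
    while True:
        end = min(start + window, num_tokens)
        spans.append((start, end))
        if end == num_tokens:
            break
        start += stride
    return spans

# 600 document tokens, a hypothetical 480-token window, stride 128 as in the experiments.
print(sliding_windows(600, 480, 128))  # [(0, 480), (128, 600)]
```

The windows ignore sentence boundaries: a sentence occupying tokens 470 to 490 is cut at the end of the first window and appears whole only in the second.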

5.3 Evaluation metrics

We evaluate with the macro-averaged word-level F1 score and the Exact Match (EM) score. Each predicted answer is compared with the ground-truth answer to its question to compute the precision and recall of that answer.

Specifically, precision is the number of words shared by the predicted and ground-truth answers divided by the number of words in the predicted answer, and recall is the same overlap divided by the number of words in the ground-truth answer. The F1 score is the harmonic mean of precision and recall.

When a question has multiple ground-truth answers, its F1 score is the highest F1 over those answers. The EM score of a question is 1 if the predicted answer exactly matches any of its ground-truth answers, and 0 otherwise. Both scores are then averaged over all questions.
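The scoring described above can be sketched as follows. The tokenization is an assumption: lower-casing and whitespace splitting only, whereas official evaluation scripts for datasets like these typically also strip punctuation and articles. Shared words are counted with multiplicity via `Counter` intersection.

```python
from collections import Counter
from typing import List, Sequence, Tuple

def tokens(text: str) -> List[str]:
    # Assumed normalisation: lower-case and split on whitespace only.
    return text.lower().split()

def f1(pred: str, gold: str) -> float:
    p, g = tokens(pred), tokens(gold)
    overlap = sum((Counter(p) & Counter(g)).values())  # shared words, with multiplicity
    if overlap == 0:
        return 0.0
    precision = overlap / len(p)
    recall = overlap / len(g)
    return 2 * precision * recall / (precision + recall)

def exact_match(pred: str, gold: str) -> float:
    return float(tokens(pred) == tokens(gold))

def score(pred: str, golds: Sequence[str]) -> Tuple[float, float]:
    """Per-question (F1, EM): the best value over all ground-truth answers."""
    return max(f1(pred, g) for g in golds), max(exact_match(pred, g) for g in golds)

def macro_average(pairs: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Average per-question (F1, EM) pairs over the evaluation set."""
    n = len(pairs)
    return sum(f for f, _ in pairs) / n, sum(e for _, e in pairs) / n

print(score("New Orleans", ["new orleans", "NOLA"]))  # (1.0, 1.0)
```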

5.4 Model comparison

In all experiments, the maximum input length of every model is 512, and the stride for both BERT-base and DC-Graph is 128. For BERT-RCM, the action space is set to the optimal values reported by its authors, [−64, 128, 256, 512, 1024]. We removed samples whose answer cannot be found in the context document and samples without a ground-truth answer, retaining only questions usable for result analysis. F1 and EM scores for the two baseline models and the proposed model are computed from the predicted answers to these questions; the results are presented in Table 5.

Table 5 shows that DC-Graph outperforms both baseline models on all three datasets. Because BERT-RCM's improvements specifically target long documents, it does not perform well on CoQA and QuAC, whose documents are relatively short: with a maximum input length of 512, these datasets do not require the original document to be split into multiple chunks, so BERT-RCM's reinforcement-learning-based chunking has little to act on. DC-Graph's chunk optimization, in contrast, is based on document classification: the reconstructed documents retain only sentences relevant to the question, which benefits answer prediction. As a result, DC-Graph improves F1 over BERT-base by 0.6 on CoQA and by 1.3 on QuAC.

On TriviaQA, DC-Graph improves over BERT-base by 1.05 in EM and 0.93 in F1. BERT-RCM, whose improvement approach is similar to ours, improves over BERT-base by 0.57 in EM and 0.41 in F1. DC-Graph therefore still improves on BERT-RCM, by 0.48 in EM and 0.52 in F1.

We also compared the number of chunks on TriviaQA. BERT-base, which uses fixed-length chunking, passes 112,440 chunks to BERT. After our document reconstruction, this falls to 101,892, a reduction of 10,548 chunks, or about 9.4% of the original number.
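As a quick check of the relative reduction, using only the two chunk counts reported above:

```latex
\frac{112{,}440 - 101{,}892}{112{,}440} = \frac{10{,}548}{112{,}440} \approx 0.0938
```

That is, approximately 9.38% fewer chunks reach BERT on TriviaQA after document reconstruction.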

5.5 Ablation experiment

To evaluate how adding each module individually affects answer prediction scores on the long-text dataset TriviaQA, we start from the BERT-base baseline and separately add the category judgement module, the document reconstruction module, and the graph construction module, then compare the results with BERT-base and the full DC-Graph. For the graph construction module, we also compare a graph convolutional network with graph attention networks using 1, 3, or 8 attention heads.
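The graph attention variant can be sketched with a single multi-head layer in the style of Veličković et al.: each head scores every neighbor with \(e_{ij} = \mathrm{LeakyReLU}(a^T[W h_i \,\|\, W h_j])\), normalizes the scores with a softmax over the neighborhood (including the node itself), and the head outputs are concatenated. Assumptions: weights are given rather than learned, neighbors are supplied as adjacency lists, and the output nonlinearity of the original GAT is omitted for brevity. The number of `(W, a)` pairs passed in corresponds to the 1, 3, or 8 heads compared above.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Head = Tuple[List[Vector], Vector]  # (weight matrix W, attention vector a)

def matvec(w: Sequence[Sequence[float]], x: Sequence[float]) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x

def gat_head(h: List[Vector], neighbors: Dict[int, List[int]],
             w: List[Vector], a: Vector) -> List[Vector]:
    """One attention head: h'_i = sum_j alpha_ij * W h_j over i and its neighbours."""
    wh = [matvec(w, hi) for hi in h]
    out = []
    for i in range(len(h)):
        nbrs = [i] + [j for j in neighbors.get(i, []) if j != i]  # include self
        # Unnormalised score e_ij = LeakyReLU(a^T [W h_i || W h_j])
        e = [leaky_relu(sum(ak * xk for ak, xk in zip(a, wh[i] + wh[j]))) for j in nbrs]
        m = max(e)
        exps = [math.exp(x - m) for x in e]
        alpha = [x / sum(exps) for x in exps]  # softmax over the neighbourhood
        out.append([sum(alpha[k] * wh[j][d] for k, j in enumerate(nbrs))
                    for d in range(len(wh[i]))])
    return out

def gat_layer(h: List[Vector], neighbors: Dict[int, List[int]],
              heads: Sequence[Head]) -> List[Vector]:
    """Multi-head layer: run each head and concatenate per-node outputs."""
    per_head = [gat_head(h, neighbors, w, a) for w, a in heads]
    return [sum((out[i] for out in per_head), []) for i in range(len(h))]

# Two connected nodes and two heads with zero attention vectors, which makes
# the attention uniform; weights are hypothetical.
heads = [([[1.0]], [0.0, 0.0]), ([[2.0]], [0.0, 0.0])]
print(gat_layer([[1.0], [3.0]], {0: [1], 1: [0]}, heads))  # [[2.0, 4.0], [2.0, 4.0]]
```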

The detailed results are shown in Table 6. Adding the category judgement module improves EM and F1 over BERT-base, bringing performance close to that of BERT-RCM. Among the single-module additions, however, the category judgement module is slightly less effective than the document reconstruction module and the graph construction module. Adding either of the latter two yields similar gains, and both remain slightly below the complete model. The choice of graph learning network within the graph construction module makes little difference.

To evaluate the effect of removing each module, we also run ablation experiments that start from the complete model and remove the category judgement, document reconstruction, and graph construction modules one at a time, comparing the results with BERT-base and the full DC-Graph.

The results are shown in Table 7. Removing the category judgement module leaves EM and F1 very close to those of the full DC-Graph, suggesting that the category prompts added by this module contribute least to DC-Graph's improvements. Removing the document reconstruction module causes a noticeable drop in EM and F1, and removing the graph construction module causes a drop of comparable size.

DC-Graph improves on BERT-base in two main ways. First, it classifies and reconstructs the input documents, which reduces the number of chunks and filters out irrelevant information. Second, it builds a graph from the documents and adds it to each chunk as global information to assist answer prediction. The ablation results are consistent with these two contributions.

5.6 Results analysis

In DC-Graph, document optimization is based on question classification. We therefore further divided the dataset into categories, starting from BERT-base and adding one question category at a time, to observe how the number of categories affects DC-Graph. The results are shown in Figs. 3, 4, and 5.

In Fig. 3, the x-axis is the number of categories, starting from two; with only one category, all questions are assumed to belong to the "Other" category. The y-axis is the number of additional questions that DC-Graph answers correctly compared with the two baseline models, BERT-base and BERT-RCM.

Fig. 3 shows that, relative to the two baselines, DC-Graph has little effect on questions in the "Other" category: as the number of categories grows, the number of correct answers for "Other" questions stays unchanged. DC-Graph does, however, perform well on questions in specific categories, and the number of correctly answered questions in these categories grows with the number of categories. This gradually widens the gap between DC-Graph and the two baselines, indicating that this part accounts for most of DC-Graph's gains in F1 and EM.

In Fig. 4, the x-axis is the number of categories in the model. "BERT-base" denotes the setting with only the "Other" category, so the accuracy on specific categories is 0 at that point. The y-axis is answer accuracy. The two curves show the accuracy on the "Other" category and on all categories except "Other".

Fig. 4 shows that as the number of categories increases, accuracy on questions in specific categories keeps rising, levels off at three and four categories, and stays well above accuracy on the "Other" category. Although accuracy on "Other" decreases, the number of "Other" questions also decreases as more specific categories are added, so the drop in "Other" accuracy weighs less than the gain in the specific categories, and overall accuracy still improves.
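The argument above can be made explicit with a weighted average. Writing \(n_o\) and \(a_o\) for the number of "Other" questions and their accuracy, and \(n_c\) and \(a_c\) for the same quantities over the category-specific questions (notation introduced here for illustration only), the overall accuracy is

```latex
\mathrm{Acc} = \frac{n_o\,a_o + n_c\,a_c}{n_o + n_c}
             = a_o + \frac{n_c}{n_o + n_c}\,\bigl(a_c - a_o\bigr)
```

Adding categories moves questions out of "Other", increasing \(n_c/(n_o + n_c)\) while the total stays fixed. When \(a_c\) exceeds \(a_o\) by a wide enough margin, the growth of the second term can outweigh a decrease in \(a_o\), which is the situation Fig. 4 describes.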

In Fig. 5, the x-axis lists the specific categories and the y-axis gives the accuracy for each. For this analysis, the dataset is divided into four categories. Every category except the second (which has the fewest questions and therefore the smallest effect on the results) has higher accuracy than the "Other" category. This suggests that subdividing questions by category benefits answer prediction.

We also analyzed incorrectly answered samples; an example is shown in Fig. 6. The question is "When people talk about LGBT rights, what does the ’T’ represent?". Under the current categorization strategy, the question is assigned to the "Number" category because it contains "When", even though it also contains "what". For the Number category, only sentences containing Number-related words or phrases are kept to form the reconstructed document. However, the question actually asks what the ’T’ in ’LGBT’ stands for, namely "transgender", not for a number. During reconstruction, the first sentence containing "transgender" had no Number-related words or phrases and was therefore discarded. The document used for answer prediction thus did not contain the correct answer, making an incorrect prediction inevitable: the model predicted a year, which fits the Number category but is not the correct answer.
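This failure mode can be reproduced with a minimal rule-based sketch. The rule table, its ordering, the Number cue pattern, and the example sentences are all hypothetical; the section only states that "When" maps to the Number category even when "what" is present, and that reconstruction for Number keeps only sentences with Number-related words or phrases.

```python
import re
from typing import List

# Hypothetical rules, checked in order: the first category whose cue appears wins.
CATEGORY_RULES = [
    ("Number", ["when", "how many", "how much", "what year"]),
    ("Person", ["who", "whom"]),
    ("Location", ["where"]),
]
# Hypothetical cues for Number-related sentences: any digit or a few number words.
NUMBER_CUES = re.compile(
    r"\d|\b(?:year|years|decade|century|hundred|thousand|million)\b", re.IGNORECASE)

def judge_category(question: str) -> str:
    q = question.lower()
    for name, cues in CATEGORY_RULES:
        if any(re.search(r"\b" + re.escape(cue) + r"\b", q) for cue in cues):
            return name
    return "Other"

def reconstruct(sentences: List[str], category: str) -> List[str]:
    """Keep only sentences that carry cues for the question's category."""
    if category == "Number":
        return [s for s in sentences if NUMBER_CUES.search(s)]
    return list(sentences)  # other categories are left unfiltered in this sketch

question = "When people talk about LGBT rights, what does the T represent?"
document = [  # invented sentences, not the document from Fig. 6
    "LGBT is an initialism whose last letter stands for transgender.",
    "This invented sentence mentions the year 1990.",
]
category = judge_category(question)  # "Number", triggered by "when"
print(category, reconstruct(document, category))
```

The printed reconstruction keeps only the sentence with a year, so the sentence that holds the answer never reaches the answer prediction module.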

6 Conclusion

This paper proposes a machine reading comprehension model based on document classification and graph learning to address the limitations of BERT in handling long texts. The category judgement and document reconstruction modules highlight question-relevant information and minimize text chunking. The graph construction module captures global logical connections across the document and extracts features from them. Finally, the answer prediction module integrates these components to improve reading comprehension accuracy. Experimental results on the TriviaQA dataset show that DC-Graph outperforms the BERT-base baseline and BERT-RCM, which follows a similar improvement approach.

There is still room for improvement in future research. For example, additional natural language processing methods could be used to extract the graph, and the graph construction method could be optimized. Deeper analysis and interpretation of each module would also improve the interpretability of the model.

Data availability

The data that support the findings of this study are available from the official CoQA website (https://stanfordnlp.github.io/coqa/), the official QuAC website (https://quac.ai/), and the official TriviaQA website (https://nlp.cs.washington.edu/triviaqa/).

References

Beltagy I, Peters ME, Cohan A (2020) Longformer: the long-document transformer. In: Proceedings of the conference on empirical methods in natural language processing (EMNLP), pp 4123–4133

Bollacker K, Evans C, Paritosh P et al (2008) Freebase: a collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD international conference on Management of data, pp 1247–1250

Choi E, He H, Iyyer M et al (2018) QuAC: question answering in context. In: Proceedings of the 2018 conference on empirical methods in natural language processing, pp 2174–2184

Clark C, Gardner M (2018) Simple and effective multi-paragraph reading comprehension. In: Proceedings of the 56th annual meeting of the association for computational linguistics (volume 1: long papers), pp 845–855

Diao S, Xu T, Xu R et al (2023) Mixture-of-domain-adapters: decoupling and injecting domain knowledge to pre-trained language models’ memories. In: Proceedings of the 61st annual meeting of the association for computational linguistics (volume 1: long papers), pp 5113–5129

Du H, Feng Y, Li C et al (2023) Structure-discourse hierarchical graph for conditional question answering on long documents. Findings of the Association for Computational Linguistics: ACL 2023:6282–6293

Feng Y, Chen X, Lin BY et al (2020) Scalable multi-hop relational reasoning for knowledge-aware question answering. In: Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), pp 1295–1309

Feng S, Tan Z, Zhang W et al (2023) KALM: knowledge-aware integration of local, document, and global contexts for long document understanding. In: Proceedings of the 61st annual meeting of the association for computational linguistics (volume 1: long papers), pp 2116–2138

Gong H, Shen Y, Yu D et al (2020) Recurrent chunking mechanisms for long-text machine reading comprehension. In: Proceedings of the 58th annual meeting of the association for computational linguistics, pp 6751–6761

Groeneveld D, Khot T, Sabharwal A et al (2020) A simple yet strong pipeline for HotpotQA. In: Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), pp 8839–8845

Guan X, Cao B, Gao Q et al (2022a) Corn: co-reasoning network for commonsense question answering. In: Proceedings of the 29th international conference on computational linguistics, pp 1677–1686

Guan Y, Li Z, Lin Z et al (2022b) Block-skim: efficient question answering for transformer. In: Proceedings of the AAAI conference on artificial intelligence, pp 10710–10719

Hansen C, Hansen C, Alstrup S et al (2018) Neural speed reading with Structural-Jump-LSTM. In: International conference on learning representations

Hermann KM, Kocisky T, Grefenstette E et al (2015) Teaching machines to read and comprehend. Advances in neural information processing systems 28

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Jiao F, Guo Y, Niu Y et al (2021) REPT: bridging language models and machine reading comprehension via retrieval-based pre-training. Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021:150–163

Joshi M, Choi E, Weld DS et al (2017) TriviaQA: a large scale distantly supervised challenge dataset for reading comprehension. In: Proceedings of the 55th annual meeting of the association for computational linguistics (volume 1: long papers), pp 1601–1611

Kenton JDMWC, Toutanova LK (2019) BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of NAACL-HLT, p 2

Kipf TN, Welling M (2016) Semi-supervised classification with graph convolutional networks. In: International conference on learning representations

Li S, Gao Y, Jiang H et al (2023) Graph reasoning for question answering with triplet retrieval. Findings of the Association for Computational Linguistics: ACL 2023:3366–3375

Li D, Hu B, Chen Q et al (2020) Towards medical machine reading comprehension with structural knowledge and plain text. In: Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP), pp 1427–1438

Lin BY, Chen X, Chen J et al (2019) KagNet: knowledge-aware graph networks for commonsense reasoning. In: Proceedings of the 2019 conference on empirical methods in natural language processing and the 9th international joint conference on natural language processing (EMNLP-IJCNLP), pp 2829–2839

Nishida K, Nishida K, Nagata M et al (2019) Answering while summarizing: multi-task learning for multi-hop qa with evidence extraction. In: Proceedings of the 57th annual meeting of the association for computational linguistics, pp 2335–2345

Ouyang L, Wu J, Jiang X et al (2022) Training language models to follow instructions with human feedback. Adv Neural Inf Process Syst 35:27730–27744

Radford A, Narasimhan K, Salimans T et al (2018) Improving language understanding by generative pre-training

Reddy S, Chen D, Manning CD (2019) CoQA: a conversational question answering challenge. Trans Assoc Comput Linguist 7:249–266

Rumelhart DE, Hinton GE, Williams RJ (1986) Learning representations by back-propagating errors. Nature 323(6088):533–536

Schlichtkrull M, Kipf TN, Bloem P et al (2018) Modeling relational data with graph convolutional networks. In: The semantic web: 15th international conference, ESWC 2018, Heraklion, Crete, Greece, June 3–7, 2018, proceedings 15, Springer, pp 593–607

Seo M, Kembhavi A, Farhadi A et al (2016) Bidirectional attention flow for machine comprehension. In: International conference on learning representations

Seo M, Min S, Farhadi A et al (2018) Neural speed reading via Skim-RNN. In: International conference on learning representations

Speer R, Chin J, Havasi C (2017) ConceptNet 5.5: an open multilingual graph of general knowledge. In: Proceedings of the AAAI conference on artificial intelligence

Vaswani A, Shazeer N, Parmar N et al (2017) Attention is all you need. In: Guyon I, Luxburg UV, Bengio S et al (eds) Advances in neural information processing systems, pp 5998–6008. https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

Veličković P, Cucurull G, Casanova A et al (2018) Graph attention networks. In: International conference on learning representations

Wang P, Peng N, Ilievski F et al (2020) Connecting the dots: a knowledgeable path generator for commonsense question answering. Findings of the Association for Computational Linguistics: EMNLP 2020:4129–4140

Wu Z, Pan S, Chen F et al (2020) A comprehensive survey on graph neural networks. IEEE Trans Neural Netw Learn Syst 32(1):4–24

Xia F, Sun K, Yu S et al (2021) Graph learning: a survey. IEEE Trans Artif Intell 2(2):109–127

Yu AW, Lee H, Le Q (2017) Learning to skim text. In: Proceedings of the 55th annual meeting of the association for computational linguistics (volume 1: long papers), pp 1880–1890

Zaheer M, Guruganesh G, Dubey KA et al (2020) Big Bird: transformers for longer sequences. Adv Neural Inf Process Syst 33:17283–17297

Zhang L, Zhang J, Wang Y et al (2023) FC-KBQA: a fine-to-coarse composition framework for knowledge base question answering. In: Proceedings of the 61st annual meeting of the association for computational linguistics (volume 1: long papers), pp 1002–1017

Zhao J, Bao J, Wang Y et al (2021) RoR: read-over-read for long document machine reading comprehension. Findings of the Association for Computational Linguistics: EMNLP 2021:1862–1872

Zhao R, Li X, Joty S et al (2023) Verify-and-edit: a knowledge-enhanced chain-of-thought framework. In: Proceedings of the 61st annual meeting of the association for computational linguistics (volume 1: long papers), pp 5823–5840

Zheng C, Kordjamshidi P (2022) Dynamic relevance graph network for knowledge-aware question answering. In: Proceedings of the 29th international conference on computational linguistics, pp 1357–1366

Zheng J, Ma Q, Qiu S et al (2023) Preserving commonsense knowledge from pre-trained language models via causal inference. In: Proceedings of the 61st annual meeting of the association for computational linguistics (volume 1: long papers), pp 9155–9173

Zhu J, Wu S, Zhang X et al (2023) Causal intervention for mitigating name bias in machine reading comprehension. Findings of the Association for Computational Linguistics: ACL 2023:12837–12852

Funding

This work was funded by the Researchers Supporting Project Number (RSPD2024R681), King Saud University, Riyadh, Saudi Arabia.

Author information

Contributions

J.Z. gave the main idea. G.Z. designed the model frame, and completed the experiment. O.A. analyzed the experimental results. A.T. designed the research. X.L. and H.Z. helped with the details.

Ethics declarations

Competing interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, J., Zhang, G., Alfarraj, O. et al. DC-Graph: a chunk optimization model based on document classification and graph learning. Artif Intell Rev 57, 143 (2024). https://doi.org/10.1007/s10462-024-10771-w
