Abstract

Selecting facility locations requires significant investment to anticipate and prepare for disruptive events like earthquakes, floods, or labor strikes. In practice, location choices account for facility capacities, which often cannot change during disruptions. When a facility fails, demand transfers to others only if spare capacity exists. Thus, capacitated reliable facility location problems (CRFLP) under uncertainty are more complex than uncapacitated versions. To manage uncertainty and decide effectively, stochastic programming (SP) methods are often employed. Two commonly used SP methods are approximation methods, i.e., Sample Average Approximation (SAA), and decomposition methods, i.e., Progressive Hedging Algorithm (PHA). SAA needs large sample sizes for performance guarantee and turn into computationally intractable. On the other hand, PHA, as an exact method for convex problems, suffers from the need to iteratively solve numerous sub-problems which are computationally costly. In this paper, we developed two novel algorithms integrating SAA and PHA for solving the CRFLP under uncertainty. The developed methods are innovative in that they blend the complementary aspects of PHA and SAA in terms of exactness and computational efficiency, respectively. Further, the developed methods are practical in that they allow the specialist to adjust the tradeoff between the exactness and speed of attaining a solution. We present the effectiveness of the developed integrated approaches, Sampling Based Progressive Hedging Algorithm (SBPHA) and Discarding SBPHA (d-SBPHA), over the pure strategies (i.e. SAA). The validation of the methods is demonstrated through two-stage stochastic CRFLP. Promising results are attained for CRFLP, and the method has great potential to be generalized for SP problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Most real world modeling and optimization problems are subject to uncertainties in problem parameters. Stochastic Programming (SP) methodologies are often resorted for solving such problems either exactly or with a statistical bound on the optimality gap. Beginning with Dantzig (1955) introduction of a recourse model, the SP models and methodologies has become an important tool for optimization under uncertainty. Operations research community’s contributions to SP has been steadily increasing in terms of both modeling and solution approaches (Lulli and Sen 2006; Topaloglou et al. 2008; Bomze et al. 2010; Peng et al. 2011; Toso and Alem 2014; Shishebori and Babadi 2015; Crainic et al. 2016; Celik et al. 2016; Aydin 2016; Cheung and Simchi-Levi 2019; Zhao et al. 2019; Juan et al. 2021; Jamali et al. 2021; Sener and Feyzioglu 2022). A common assumption in applying most of SP methodologies is that the probability distributions of the random events are known or can be estimated with an acceptable accuracy. For majority of the SP problems, the objective is to identify a feasible solution that minimizes or maximizes the expected value of a function over all possible realizations of the random events (Solak 2007).

In stochastic problems, decomposition methods usually studied into two groups: “stage-based methods and scenario-based methods” (Guo et al. 2015). For stage-based decomposition, L-shaped method, or Benders’ decomposition, can be counted. Benders’ decomposition can be used if the first stage decision variables are integer and the second stage variables are real variables (Sen 2005; Kim and Zavala 2018). Nevertheless, since the Benders’ decomposition depend on strong duality, it cannot be applied in the circumstances of general mixed-integer stochastic programs (Fakhri et al. 2017; Li and Grossmann 2018). On the other hand, as a scenario-based decomposition method, PHA is motivated by the augmented Lagrangean method, and Gade et al. (2016) showed that a lower bound can be acquired by PHA, which is like solving the Lagrangean subproblems. Though the PHA is only guaranteed to converge for problems that have continuous and convex (Rockafellar and Wets 1991) second stage, Watson and Woodruff (2011) proposed various heuristics to hasten the convergence of PHA for stochastic mixed-integer programs. So, in PHA none of the subproblems need to be solved to optimality and PHA can discover high-quality solutions within a reasonable amount of time (Guo et al. 2015). As stated in Guo et al. (2015) one of the advantages of scenario-based decomposition methods (as in PHA) over the stage-based ones, i.e., L-shaped or Benders’ decomposition, is their easing of the computational complexity. For the reasons given, we are motivated to, in this study, marry PHA and SAA to get promising results within a reasonable amount of computational time.

In this study, we propose a novel approach, called Sampling Based Progressive Hedging Algorithm (SBPHA) and an improved version of SBPHA (discarding-SBPHA) to solve a class of two-stage SP problems. A standard formulation of the two-stage stochastic program is (Kall and Wallace 1994; Birge and Louveaux 1997; Ahmed and Shapiro 2002):

where,

is the optimal value and \(\xi := \left( f,T,W,h \right)\) denotes the vector of parameters of the second stage problem. It is assumed that some or all of the components of \(\xi\) are random. The expectation term in (1) is then taken with respect to a known probability distribution of \(\xi\). The problem (1) solves for the first stage variables, \(x \in R^{n_{1}},\) which must be decided prior to any realization of \(\xi ,\) and problem (2) solves for the second stage variables, \(y \in R^{n_{2}}\) , given the first stage decisions as well as the realization of \(\xi\).

The proposed SBPHA approach hybridizes the Progressive Hedging Algorithm (PHA), and the external sampling based approximation algorithm, Sample Average Approximation (SAA), to efficiently solve a class of two-stage SP problems. While the standard SAA procedure is effective with sufficiently large samples, the required sample size can be quite large for the desired confidence level. Furthermore, for combinatorial problems where the computational complexity increases faster than linearly in the sample size, SAA is often executed with a smaller sample size by generating and solving several SAA problems with i.i.d. samples. For complexity and modeling under uncertainty, readers are encouraged to follow the work conducted by Weber et al. (2011). These additional SAA replications with the same sample size are likely to provide a better solution than the best solution found so far. However, by selecting the best performing sample solution, the SAA procedure effectively discards the remaining sample solutions which contain valuable information about the problem’s uncertainty. The main idea of the proposed hybrid method SBPHA is to re-use all the information embedded in sample solutions by iteratively solving the samples with adding augmented Lagrangian penalty term (as in PHA) to find a common solution that all samples agree on.

As one of the strategic decision, facility locations require substantial investments to anticipate for uncertain future events (Owen and Daskin 1998; Melo et al. 2009), i.e., disruption of facilities that are critical to satisfy customer demand (Schütz et al. 2009). For a detailed review of uncertainty considerations in facility location problems, readers are referred to Snyder (2006) and Snyder and Daskin (2005). Approximation algorithms that are generated for UFLP cannot be practical to the general class of facility location problems such as Capacitated Reliable Facility Location Problem (CRFLP). In practice, capacity decisions are considered jointly with the location decisions. Further, the capacities of facilities often cannot be changed (or at a reasonable cost) in the event of a disruption. Following a facility failure, customers can be assigned to other facilities only if these facilities have sufficient available capacity. Thus, CRFLPs are more complex than their uncapacitated counterparts (Shen et al. 2011). Thus, in this study, we introduce promising algorithms for CRFLP, which can be applied to other types of facility location problems and even ex- tended for other two-stage SP problems.

Specifically, the main contributions of the study are as follows: The proposed SBPHA provides a configurable solution procedure, which increases the sampling-based methods’ accuracy and PHA’s efficacy, for a class of two-stage SP problems. Further, the augmented SBPHA which is called Discarding-SBPHA (d-SBPHA), is developed for SPs with binary first stage decision variables. It is analytically proved that SBPHA guarantee optimal solution to the mentioned problems when the number of discarding iterations approaches to infinity.

The rest of the paper is organized as follows. In Sect. 2, related literature is summarized. In Sect. 3, we briefly summarize the SAA and PHA methods, and then describe the Sampling Based Progressive Hedging Algorithm (SBPHA) and d-SBPHA in detail. In Sect. 4, we present scenario based capacitated reliable facility location problem and its mathematical formulation, and then report the computational experiments comparing the solution quality and CPU time efficiency of the SAA algorithm and the proposed hybrid algorithms (SBPHA and d- SBPHA). We conclude with discussions, limitations and future research directions in Sect. 5.

2 Related literature

SP plays a substantial role in the analysis, design, and operation of systems that includes uncertainties. Further, algorithms developed for SP problems provide a tool for coping with inherent system uncertainty (Aydin 2022). The main reason for using SP is that, in general, the SP can successfully accommodate decision making practices under uncertainty. On the other hand, SP with recourse can take curative decisions once the uncertainty is uncovered, and among the SP approaches with recourse, the most commonly used one is the two-stage SP (Ning and You 2019).

Accordingly, this study contributes to the solution methodologies for two-stage capacitated reliable facility location problem (CRFLP) in SPs. In the two-stage SP problems, the decision variables are partitioned into two main sets where the first stage variables correspond to decisions prior to the realization of uncertain parameters. Once the random events realized, design or operative strategy improvements (i.e., second stage recourse decisions) can be made at a certain cost. The objective is to optimize the sum of first stage costs and the expected value of the random second stage or recourse costs (Ahmed and Shapiro 2002). An extensive number of solution methods have been proposed for solving two-stage SP problems. These solution methods can be classified as either exact or approximation methods (Schneider and Kirkpatrick 2007). Exact solution methods include both analytical solution methods as well as computational algorithms. Because of complexity of SP, several approximation algorithms in the form of sampling-based methods (Ahmed and Shapiro 2002) or approximation methods (Higle and Sen 1991) are proposed.

When the random variable set is finite with a relatively small number of joint realizations (i.e., scenarios), a SP can be formulated as a deterministic equivalent program and solved “exactly" via an optimization algorithm (Rockafellar and Wets 1991). The special structure of this deterministic equivalent program also allows for the application of large-scale optimization techniques, e.g., decomposition methods. Such decomposition methods can be categorized into two types. The first type decomposes the problem by stages e.g., L-shaped method (Slyke and Wets 1969; Birge and Louveaux 1997; Elçi and Hooker 2022) while the second type decomposes the problem by scenarios. The latter category’s methods are primarily based on Lagrangian relaxation of the non-anticipativity constraints where each scenario in the scenario tree corresponds to a single deterministic mathematical program e.g., Progressive Hedging Algorithm (Rockafellar and Wets 1991; Lokketangen and Woodruff 1996; Rockafellar 2019). A subproblem obtained by stage or scenario composition may include multiple stages in “by-stage" decomposition method and multiple scenarios in “by-scenario" decomposition methods, respectively (Chiralaksanakul 2003). Monte Carlo sampling based algorithms, a popular approximation method, are commonly used in solving large scale SP problems (Morton and Popova 2001). Monte Carlo sampling method can be deployed within either ‘interior’ or ‘exterior’ of the optimization algorithm. In the ‘interior’ sampling based methods the computationally expensive or difficult exact computations are replaced with the Monte Carlo estimates during the algorithm execution (Verweij et al. 2003). In the ‘exterior’ sampling based methods, the stochastic process is approximated by a finite scenario tree obtained through the Monte Carlo sampling. The solution to the problem with the constructed scenario tree is an approximation of the optimal objective function value. The ‘exterior’ sampling-based method is also referred to as the “sample average approximation" method in the SP literature (Shapiro 2002).

The SAA method has become a popular technique in solving large-scale SP problems over the past decade due to its application ease and scope. It has been shown that the solutions obtained by the SAA converge to the optimal solution as the sample size is sufficiently large (Ahmed and Shapiro 2002). However, these sample sizes could be quite large and the actual rate of convergence de- pends on the problem conditioning. Several studies reported successful appli- cations of SAA to various stochastic programs (Verweij et al. 2003; Kleywegt et al. 2002; Shapiro and Homem-de-Mello, 2001; Fliege and Xu 2011; Wang et al. 2011; Long et al. 2012; Hu et al. 2012; Wang et al. 2012; Aydin and Murat 2013; Ayvaz et al. 2015; Bidhandi and Patrick 2017; Lam and Zhou 2017; Bertsimas et al. 2018; Cheng et al. 2019; Cheung and Simchi-Levi 2019). Most SP problems have key discrete decision variables in one or more of the 5 stages, e.g., binary decisions to open a plant (Watson and Woodruff 2011). Rockafellar and Wets (1991) proposed a scenario decomposition-based method (PHA) to solve challenging large linear or mixed-integer SP problems. PHA is particularly suitable for SPs where there exists effective techniques for solving individual scenario subproblems. While the PHA possesses theoretical con- vergence properties for SP problems with continuous decisions, it is used as a heuristic method when some or all decision variables are discrete (Lokketangen and Woodruff 1996; Fan and Liu 2010; Watson and Woodruff 2011). There are many application studies of the progressive hedging approach, i.e., lot sizing (Haugen et al. 2001), portfolio optimization (Barro and Canestrelli 2005), resource allocation in network flow (Watson and Woodruff 2011), operation planning (Gonçalves et al. 2012), forest planning (Veliz et al. 2015), facility location (Gade et al. 2014), server location and unit commitment (Guo et al. 2015), hydro-thermal scheduling (Helseth 2016), and server location (Atakan and Sen 2018), and resilience-oriented design (Ma et al. 2018).

The main reason and motivation to hybridize SAA and PHA originates from the final step of the SAA, where after selecting the best performing solution, the rest of the sample solutions are abandoned. Discarding of all solutions, except the reported one results in the loss of valuable sample information as well as the effort spent in solving each sample. The proposed SBPHA offers a solution to these losses by considering each sample SAA problem as though it is a scenario subproblem in the PHA. Accordingly, in the proposed SBPHA approach, we modify the SAA method by iteratively re-solving the sample SAA problems while, at the end of each iteration, penalizing deviations from the probability weighted solution of the samples and the best performing solution to the original problem (i.e., as in the PHA). Hence, a single iteration of the SBPHA corresponds to the classical implementation of SAA method. Considering this harmony and fit between SAA and PHA, in this study we hybridized these two methods.

3 Solution methodology

In this section we first summarize PHA and SAA methods. Next, we describe proposed algorithms (SBPHA and d-SBPHA) in detail.

3.1 Progressive hedging algorithm

PHA is proposed by Rockafellar and Wets (1991) as a scenario decomposition-based method to solve challenging large linear or mixed-integer SP problems. A standard approach for solving two-stage SP (1)-(2) is by constructing scenario tree by generating a finite number of joint realizations \(\xi _{s}\) , \(\forall {s} \in {S},\) called scenarios, and allocating to each \(\xi _{s}\), a positive weight \(p_{s}\) , such that \(\sum _{{s} \in {S}}^{}p_{s}=1\) (Shapiro 2008). The generated set, \(\{ \xi _{1}, \ldots , \xi _{|S|} \}\) , of scenarios, with the corresponding probabilities \(p_{s}, \ldots ,p_{|S|},\) is considered a representation of the underlying joint probability distribution of the random parameters. Using this representation, the expected value of the second stage problem’s objective can be calculated as \(E \left[ \varphi \left( x, \xi \right) \right] = \sum _{{s} \in {S}}^{}p_{s} \varphi \left( x, \xi _{s} \right)\) . By duplicating the second stage decisions, \(y_{s}\) , for every scenario \(\xi _{s}\) , i.e. \(y_{s}=y \left( \xi _{s} \right) , \forall {s} \in {S}\) , the two-stage problem (1)-(2) can be equivalently formulated as follows:

where \(\xi _{s} \left( f_{s},T_{s},W_{s},h_{s} \right) ,\forall {s} \in {S}\), are the corresponding scenarios and \(\textit{G} \left( x,{{\xi }}_{\text{s}}\right)\) represents the feasible region for scenario s defined by constraints in problem (2) (Shapiro 2008). To simplify the notation hereafter, we re-express the above extensive form formulation as follows:

where x, first stage decision vector is a common decision vector independent of scenarios, i.e., \({ x_{s}=x, \forall s \in S}\). The \({ y_{s}}\) represent second stage decision variables which are determined given a first stage decision and a specific \({ \xi _{s}}\).

Next, we present the pseudo-code of PHA to show how the PHA converges to a common solution taking all scenarios, which belong to the original problem, into account. Let \(\rho\) be a penalty factor \({( \rho >0)}\), \({\epsilon }\) be a convergence threshold over the first stage decisions and k be the iteration number. The basic PHA Algorithm is stated as follows as presented in Watson and Woodruff (2011):

PHA Algorithm:

-

(1)

\({k}{:=}{0}\)

-

(2)

For all \({s}{\in }{S},{\ x}^{k}_{s}{:=} \text { argmin} _{{x},{{y}}_{{s}}}\left( {\text{cx}}{+}{{\text{f}}}_{{\text{s}}}{{y}}_{{s}}\right) {:(}{x},{{y}}_{{s}}{)}{\in }{\varphi }\left( {\text{x}},{{{\xi }}}_{{\text{s}}}\right)\)

-

(3)

\({\overline{ {x}}}^{ {k}} {:=}\sum _{ {s} {\in } {S}}{{ {p}}_{ {s}}{ {x}}^{ {k}}_{ {s}}}\)

-

(4)

For all \({s} {\in } {S} {,\ }{ {w}}^{ {k}}_{ {s}} {:=} {\rho }\left( { {x}}^{ {k}}_{ {s}} {-}{\overline{ {x}}}^{ {k}}\right)\)

-

(5)

\({k} {:=} {k} {+} {1}\)

-

(6)

For all \({s} {\in } {S},\)

\({ {x}}^{ {k}}_{ {s}} {:=} \text { argmin}_{ {x},{ {y}}_{ {s}}}\left( {\text{cx}} {+}{{ {\omega }}^{ {k} {-} {1}}_{ {s}} {x} {+}\frac{ {\rho }}{ {2}}{\left\| {x} {-}{\overline{ {x}}}^{ {k} {-} {1}}\right\| }^{ {2}} {+} {\text{f}}}_{ {\text{s}}}{ {y}}_{ {s}}\right) {:(} {x},{ {y}}_{ {s}} {)} {\in } {\varphi }\left( {\text{x}},{ {{\xi }}}_{ {\text{s}}}\right)\)

-

(7)

\({\overline{ {x}}}^{ {k}} {:=}\sum _{ {s} {\in } {S}}{{ {p}}_{ {s}}{ {x}}^{ {k}}_{ {s}}}\)

-

(8)

For all \({s} {\in } {S} {,\ }{ {\omega }}^{ {k}}_{ {s}} {:=}{ {\omega }}^{ {k} {-} {1}}_{ {s}} {+} {\rho }\left( { {x}}^{ {k}}_{ {s}} {-}{\overline{ {x}}}^{ {k}}\right)\)

-

(9)

\({ {\pi }}^{ {k}} {:=}\sum _{ {s} {\in } {S}}{{ {p}}_{ {s}}\left\| { {x}}^{ {k}}_{ {s}} {-}{\overline{ {x}}}^{ {k}}\right\| }\)

-

(10)

If \({ {\pi }}^{ {k}} {>} {\epsilon } {,\ }\)then go to Step 5, else terminate.

When x is continuous, the PHA’s convergence to a common solution \({\bar{x}}\) on which all scenarios agree is linear. However problem becomes much more difficult to solve when x vector is integer due to the non-convexity (Watson and Woodruff 2011). Detailed information on the behavior of the PHA methodology can be found in Wallace and Helgason (1991), Mulvey and Vladimirou (1991), Lokketangen and Woodruff (1996), Crainic et al. (2011), Watson and Woodruff (2011), Gade et al. (2016), Barnett et al. (2017), Atakan and Sen (2018), Rockafellar (2018), Sun et al. (2019), and Rockafellar and Sun (2019).

3.2 Sample average approximation

Over the past decade SAA method has become a very popular technique in solving large-scale SP problems. The key idea of SAA approach in solving SP can be described as follows. A sample \(\xi _{1}, \ldots , \xi _{N}\) of N realizations of the random vector \(\xi\) is randomly generated, and subsequently the expected value function \(E \left[ \varphi \left( x, \xi \right) \right]\) is approximated by the sample average function \(N^{-1} \sum _{n=1}^{N} \varphi \left( x, \xi \right)\). In order to reduce variance within SAA, Latin Hypercube Sampling (LHS) may be used instead of uniform sampling. Performance comparisons of LHS and uniform sampling, within SAA scheme, are analyzed in Ahmed and Shapiro (2002). The resulting SAA problem \(Min_{x \in X} \{ \hat{g}_{N} \left( x \right) :=cx+ N^{-1} \sum _{n=1}^{N} \varphi \left( x, \xi _{n} \right) \}\) is then solved by deterministic optimization algorithms. As N increases, the SAA algorithm’s converges to the optimal solution of the SP in (1) as shown in Ahmed and Shapiro (2002) and in Kleywegt et al. (2002). Since solving SAA becomes a challenge with large N, the practical implementation of SAA often features multiple replications of the sampling, solving each of the sample SAA problems, and selecting the best found solution upon evaluating the solution quality using either the original scenario set or a reference scenario sample set. We now provide a description of the SAA procedure as below.

SAA Procedure:

Initialize: Generate M independent random samples \({m=1,2, \ldots ,{M}}\) with scenario sets \({N_{m}}\) where \(\vert {N_{m}} \vert ={N}\). Each sample m consists of N realizations of independently and identically distributed (i.i.d.) random scenarios. We also select a reference sample \({N^{'}}\) which is sufficiently large, e.g., \(\vert {N^{'} \vert \gg N}\).

Step 1: For each sample m , solve the following two-stage SP problem and record the sample optimal objective function value \({v^{m}}\) and the sample optimal solution \({x^{m}}\).

Step 2: Calculate the average \({\bar{v}^{M}}\) of the sample optimal objective function values obtained in Step 1 as follows:

Step 3: Estimate the true objective function value \({\hat{v}^{m}}\) of the original problem for each sample’s optimal solution. Solve the following problem for each sample using the optimal first stage decisions \({x^{m}}\) from step 1.

Step 4: Select the solution \({x^{m}}\) with the best \({\hat{v}^{m}}\) as \({x^{SAA}}\) as the solution and \({v^{SAA}= {\hat{v}^{m}}}\) as the objective function value of SAA.

Let \({v^{*}}\) denote the optimal objective function value of the original problem (1-2). The \({\bar{v}^{M}}\) is an unbiased estimator of the expected optimal objective function value of sample problems. Hence \({\bar{v}^{M}}\) provides a statistical lower bound on the \({v^{*}}\) (Ahmed and Shapiro 2002).When the first and the second stage decision variables in (1) and (2) are continuous, it has been proved that an optimal solution of the SAA problem provides optimal solution of the true problem with probability approaching to one at an exponential rate as N increases (Shapiro and Homem-de-Mello 2001; Ahmed and Shapiro 2002; Meng and Xu 2006; Liu and Zhang 2012; Xu and Zhang 2013; Shapiro and Dentcheva 2014). Determining the optimal or the minimum required sample size, N , is an important task for SAA applicants, therefore a numerous reserachers contributed on determining the sample size (Kleywegt et al. 2002; Ahmed and Shapiro 2002; Shapiro 2002; Ruszczynski and Shapiro 2003a).

3.3 Sampling based progressive hedging algorithm (SBPHA)

We now describe the proposed SBPHA algorithm which is a hybridization of the SAA and PHA. The motivation for this hybridization originates from the final stage of the SAA method (Step 4, in SAA) where, after selecting the best performing solution, the rest of the sample solutions are discarded. This discarding of the \(\left( M-1 \right)\) sample solutions results in losses in terms of both valuable sample information (increasing with M) as well as the effort spent in solving for each sample’s solution (increasing with N). The proposed SBPHA offers a solution to these losses by considering each sample SAA problem as though it is a scenario subproblem in the PHA. Accordingly, in the proposed SBPHA approach, we modify the SAA method by iteratively re-solving the sample SAA problems while, at the end of each iteration, penalizing deviations from the probability weighted solution of the samples and the best performing solution to the original problem (i.e., as in the PHA). Hence, a single iteration of the SBPHA corresponds to the classical implementation of SAA method.

By integrating elements of both SAA and PHA, SBPHA inherits the PHA’s ability to handle problems with Mixed-Integer Programming (MIP) second-stage subproblems. This hybridization allows us to leverage the computational advantages of SAA while addressing the challenges posed by MIP subproblems, resulting in a versatile and efficient algorithm for solving complex stochastic programming problems. An important distinction of the SBPHA from classical PHA is the sampling concept and the size of the subproblems solved. The classical PHA solves many subproblems each corresponding to a single scenario in the entire scenario set one by one at every iteration, and evaluates the probability weighted solution using the individual scenario probabilities. In comparison, the SBPHA solves only a few subproblems each corresponding to the samples (with multiple scenarios) and determines the probability weighted solution in a different way than PHA (explained in detail below). Note that while solving individual sample problems in SBPHA is more difficult than solving a single scenario subproblem in PHA, SBPHA solves much fewer subproblems. Clearly, SBPHA makes a trade-off between the number of sample subproblems to solve and the size of each sample subproblem.

We first present the proposed SBPHA algorithm and then describe its steps in detail. For clarity, we give the notation used precedes the algorithmic steps. Note that for brevity, we only define those notation that are new or different than those used in the preceding sections:

Notation:

-

\({k},{ {k}}_{ {max}}\) : iteration index and maximum number of iterations

-

\({ {P}}_{ {m}},{\widehat{ {P}}}_{ {m}}\) : probability and normalized probability of sample m realization, i.e., \(\sum _{{m}}{{\widehat{ {P}}}_{ {m}} =1}\)

-

\({ {x}}^{ {m}, {k}} {\ }\) : solution vector for sample m at iteration k

-

\({\overline{ {x}}}^{ {k}}\) : probability weighted solution vector at iteration k

-

\({\overline{\overline{ {x}}}}^{ {k}}\) : balanced solution vector at iteration k

-

\({ {x}}_{ {best}}\) : best incumbent solution

-

\({\widehat{ {v}}}_{ {best}}\) : objective function value of the best incumbent solution with respect to \({N}'\)

-

\({\widehat{ {v}}}^{ {k}}_{ {best}}\) : objective function value of the best solution at iteration k with respect to \({N}'\)

-

\({ {\omega }}^{ {k}}_{ {m}}\) : dual variable vector for sample m at iteration k

-

\({ {\rho }}^{ {k}} {\ }\) : penalty factor at iteration k

-

\({\beta }\) : update parameter for the penalty factor, \({1} {<\ } {\beta } {<} {2}\)

-

\({ {\alpha }}^{ {k}}\) : weight for the best incumbent solution at iteration \({k}, {0} {\le } {\ } {\alpha } {\le } {1}\)

-

\({ {\Delta }}_{ {\alpha }}\) : update factor for the weight of the best incumbent solution, \({0} {\le } {\ }{ {\Delta }}_{ {\alpha }} {\le } {0}. {05}\)

-

\({ {\epsilon }}_{ {\alpha }} {\ }\) : Euclidean norm distance between sample solutions \({ {\text{x}}}^{ {m}, {k}}\) and \({\overline{\overline{ {x}}}}^{ {k}}\) at iteration k

-

\({\epsilon }\) : convergence threshold for solution spread

-

\({ {x}}^{ {SBPHA}}\) : best solution found by SBPHA

-

\({ {v}}^{ {SBPHA}}\) : objective function value of the best solution found by SBPHA

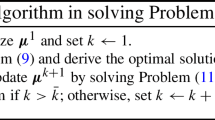

The pseudo-code for the Sampling Based Progressive Hedging Algorithm is as follows:

The first step in SBPHA’s initialization is to execute the standard SAA procedure (Step 5-6). In the initialization step of the SBPHA, unlike SAA, we also calculate sample m’s probability and normalized probabilities, e.g., \({\text{P}}_{\text{m}}\) and \({\widehat{\text{P}}}_{\text{m}}\), which are used to calculate sample m’s probability weighted average solution \({\overline{\text{x}}}^{\text{k}}\) at iteration \(\text{k}\) (Step 12). Next, in Step 13, we calculate the samples’ balanced solution (\({\overline{\overline{\text{x}}}}^{\text{k}}\)) as a weighted average of the average solution (\({\overline{\text{x}}}^{\text{k}}\)) and the incumbent best solution (\({\text{x}}_{\text{best}}\)). The \({\text{x}}_{\text{best}}\) is initially obtained as the solution to the SAA problem (Step 6) and later on, updated based on the evaluation of the improved sample solutions and the most recent incumbent best (Step 26). In calculating the balanced solution (\({\overline{\overline{\text{x}}}}^{\text{k}}\)), the SBPHA uses a weight factor \({{\alpha }}^{\text{k}}{\in }\rm {(0,1)}\) to tune the bias between the sample’s current iteration average solution and the best incumbent solution. High values of \({{\alpha }}^{\text{k}}\) tend the balanced solution (hence the sample solutions in the next iteration) to the samples’ average solution, whereas low values tend \({\overline{\overline{\text{x}}}}^{\text{k}}\) to the incumbent best solution. There are two alternative implementations of SBPHA concerning this bias tuning, where the \({{\alpha }}^{\text{k}}\) can be static by setting \({{\Delta }}_{{\alpha }}\rm {=0}\) or dynamically changing over the iterations by setting \({{\Delta }}_{{\alpha }}\rm {>0}\) (see Step 14). The advantage of dynamic \({{\alpha }}^{\text{k}}\) is that, beginning with a large \({{\alpha }}^{\text{k}}\rm {,\ }\)we first prioritize the sample average solution until the incumbent best solution quality improves. This approach allows guiding the sample solutions to a consensus sample average initially and then directing the consensus sample average in the direction of evolving best solution.

In Step 15, we update the penalty factor \({{\rho }}^{\text{k}}\) depending whether the distance (\({{\epsilon }}_{\text{k}}\)) of the sample solutions from the most recent balanced solution has sufficiently improved. We choose the improvement threshold as half of the distance in the previous iteration (e.g., \({{\epsilon }}_{\rm {k-1}}\)) . Similarly, in Step 16, we update the dual variable (\({{\omega }}^{\text{k}}_{\text{m}}\)) using the standard subgradient method. Note that the\(\ {{\omega }}^{\text{k}}_{\text{m}}\) are the lagrange multipliers associated with the equivalence of each sample’s solution to the balanced solution.

In Step 18, we solve each sample problem with additional objective function terms representing the dual variables and calculate the deviation of the sample solutions from the balanced solution (i.e.,\({\ }{ {{\epsilon }}}_{ {\text{k}}}\)). Step 22 estimates the objective function value of each sample solution in the original problem using the reference set\({\ } {\text{N}} {\rm {'}}\). Steps 25 and 26 identify the sample solution \({ {\text{x}}}^{ {\text{m}}, {\text{k}}}\) with the best \({\widehat{ {\text{v}}}}^{ {\text{m}}, {\text{k}}}\) in iteration \({\text{k}}\) and updates the incumbent best \({\widehat{ {\text{v}}}}_{ {\text{best}}}\) if there is any improvement. Note that \({\widehat{ {\text{v}}}}_{ {\text{best}}}\) is monotonicaly nonincreasing with the SBPHA iterations. The Steps 22, and 25-26 correspond to the integration of SAA method’s selection of the best performing sample solution. Rather than terminating with the best sample solution, the proposed SBPHA conveys this information in the next iteration through the balanced solution. The SBPHA algorithm terminates when either of the two stopping conditions are met. If the iteration limit is reached \({\text{k}} {{\ge }}{ {\text{k}}}_{ {\text{max}}}\) or when the all sample solutions converged to the balanced solution within a tolerance then the SBPHA terminates with the best found solution. The worst-case solution of the SBPHA is equivalent to the SAA solution. This can be observed by noting that the best incumbent solution is initialized with the SAA’s solution and \({\widehat{ {\text{v}}}}_{ {\text{best}}}\) is monotonicaly nonincreasing with the SBPHA iterations. Hence, the SBPHA ensures that there is always a feasible solution which has same performance or better than that of SAA’s. Flow chart of SBPHA algorithm is provided in Fig. 4.

3.4 Discarding-SBPHA (d-SBPHA) for binary-first stage SP problems

The Discarding-SBPHA extends the SBPHA by finding an improved, and ideally the optimal solution, to the original problem. The main idea of the d-SBPHA is to re-solve SBPHA, by adding constraint(s) to the sample subproblems in (8) that prohibits finding the same best incumbent solution(s) found in earlier d-SBPHA iterations. This prohibition is achieved through constraints that are violated if \({ {\text{x}}}^{ {\text{m}}, {\text{k}}}\) overlaps with any of the best incumbent solutions (\({ {x}}_{ {best}} {)}\) found thus far in the d-SBPHA iterations. This modification of SBPHA can be considered as globalization of the SBPHA in that by repeating the discarding steps, the d-SBPHA is guaranteed to find the optimum solution, albeit, with infinite number of discarding steps. The d-SBPHA initializes with the \({ {x}}_{ {best}}\) of the SBPHA solution and the SBPHA iteration’s parameter values (\(\omega\),\(\alpha\),\(\rho\) \({\dots }\)) where this solution is first encountered in the SBPHA iteration history. We now provide the additional notation and algorithmic steps of the d-SBPHA and then describe in detail.

Additional notations for d-SBPHA

-

\({\text{o}}\) : iteration number where the SBPHA or d- SBPHA finds the solution, where it is found the very first, which is converged at the end,

-

\({ {\text{d}} {\rm {,\ }} {\text{d}}}_{ {\text{max}}}\) : number discarding iterations and maximum number of discarding iteration,

-

\({\text{D}}\) : set of discarded solutions,

-

\({ {\text{n}}}_{{ {\text{D}}}_{ {\text{t}}}}\) : number of binary decision variables that are equal to 1 in discarded solution \({ {\text{D}}}_{ {\text{t}}} {{\in }} {D},\)

-

\({ {\text{D}}}^{ {\text{1}}}_{ {\text{t}}}\) : set of decision variables, that are equal to 1 in discarded solution t,

-

\({ {\text{D}}}^{ {\text{0}}}_{ {\text{t}}}\) : set of decision variables, that are equal to 0 in discarded solution t.

Initialization step of the d-SBPHA is the implementation of the original SBPHA with the only difference is to set up the starting values of parameters as the values of the SBPHA algorithm where the current best solution is found. Also in Step 1, the set of the solutions that will be discarded is updated to prevent the algorithm to re-converge to the same solution. In step 2-7, the parameters are updated. Step 8 updates the set of discarded solution by including the most recent \({{\text{x}}}_{{\text{best}}}\). Step 9 executes SBPHA steps to update the parameters of sample problems. Note that Step 11 has the same objective function as in step 13 of SBPHA with the additional discarding constraint(s) that prevent finding the first-stage solutions that are already found in preceding d-SBPHA iterations. Step 18 executes steps of the SBPHA, which test solutions’ quality and performs the updating of the best solution according to the SAA approach. The only difference, in Step 15, between d-SBPHA and SBPHA is that d-SBPHA checks whether the maximum number of discards is reached. If the discarding iteration limit is reached, then the algorithm reports the solution with the best performance (in Steps 16 and 17) else continues discarding. Note that with the discarding strategy, d-SBPHA terminates with a better or the same solution as SBPHA and is guaranteed to terminate with optimal solution with infinite number of discarding iterations. Lastly, we note that, with increasing discarding iterations, the discarding constraints. Flow chart of d-SBPHA algorithm is provided in Fig. 5.

3.4.1 Lower bounds on SBPHA and d-SBPHA

The majority of the computational effort of SBPHA (and d-SBPHA) is spent in solving the sample subproblems as well as evaluating the first stage decisions in the larger reference set. Especially, for combinatorial problems with discrete first-stage decisions, the former effort is much significant than the latter. To improve the computational performance, we propose using a sample specific lower bound used while solving the sample subproblems. The theoretical justification for the proposed lower bound for sample problems is that if the balanced solution does not change, then the solution of the sample problems is non-decreasing. Hence, one can use the previously found solution as the lower bound (due to the Lagrangean duality property). However, if the balanced solution changes, then the lower bound property of the previous solution are not guaranteed and hence the lower bound is removed or a conservative estimate of the lower bound is utilized.

Let \({\text{l}}{ {\text{b}}}^{ {\text{m}} {\rm {,\ }} {\text{k}}}\) be the lower bound for sample m at iterationk in SBPHA (or d-SBPHA). In Step 18 in SBPHA (Step 16 in d-SBPHA) a valid cut should be added: \({ {\text{v}}}^{ {\text{m}}, {\text{k}}} {{\ge }}\) \({\text{l}}{ {\text{b}}}^{ {\text{m}} {\rm {,\ }} {\text{k}}}\), \({{\forall }} {\text{m}}, {\text{m}} {\rm {=}} {\text{1}} {\rm {,\dots }} {\text{M}}\), where \({\text{l}}{ {\text{b}}}^{ {\text{m}} {\rm {,\ }} {\text{k}}} {\rm {=}}{ {\text{c}}}_{ {\text{lb}}} {\text{l}}{ {\text{b}}}^{ {\text{m}} {\rm {,\ }} {\text{k}} {\rm {-}} {\text{1}}}\), and \({\text{0}} {{\le }}{ {\text{c}}}_{ {\text{lb}}} {{\le }} {\text{1}}\), where \({ {\text{c}}}_{ {\text{lb}}}\) is a tuning parameter to adjust the tightness of the lower bound. However\({{\ }}{ {\text{c}}}_{ {\text{lb}}}\), should not be close to 1 because it might cause infeasible solutions. There is a trade of on value of\({{\ }}{ {\text{c}}}_{ {\text{lb}}}\). Higher values might cause either infeasible or sub- optimal solutions, lower values does not provide consistent/tight constraints that should help improving solution time. In this study, we tested multiple values for\({{\ }}{ {\text{c}}}_{ {\text{lb}}}\), we suggest applicants to choose\({{\ }} {\text{0}}. {\text{4}} {{\le }}{ {\text{c}}}_{ {\text{lb}}} {{\le }} {\text{0}}. {\text{6}}\) range. Providing lower bound to optimization problem saved approximately 10%-15% of the solution time.

3.4.2 Theoretical implications of SBPHA and d-SBPHA

In the view of theoretical aspect, the most important merit of the SBPHA from classical PHA is the sampling concept and the size of the subproblems optimized. The classical PHA optimizes numerous subproblems each relating to an individual scenario in the entire scenario set one by one at each iteration. Differently, the SBPHA optimizes only a small number of subproblems each corresponding to the samples (including multiple scenarios) and determines the probability weighted solution in a different way than PHA. Note that optimizing a particular sample problem is more challenging than optimizing a single scenario. On the other hand, the Discarding-SBPHA extends the SBPHA by finding an improved, and ideal optimal solution, to the original problem. The main theoretical contribution of d-SBPHA is to re-solve SBPHA, by adding constraint(s) to the sample subproblems), which prohibits finding the same best incumbent solution(s) found in earlier d-SBPHA iterations. The theoretical characteristics of the SBHA and d-SBHA can be highlighted as follows:

Proposition 1

(Equivalence): SBPHA is equivalent to SAA if algorithm is executed only one time. Further, SBPHA (with parameter settings \({{{\alpha }}}^{{\text{k}}}=1\), \({{\Delta }}_{{\alpha }}\rm {=0}\), and \({{\rho }}^{\text{k}}\) is constant for all k), is equivalent to PHA if the samples are mutually exclusive and their union is the entire scenario set.

Proof

We show this in two parts.

For SAA: If SBPHA terminated at Step 1, then \({ {\text{x}}}^{ {\text{SBPHA}}} {\rm {=}}{ {\text{x}}}^{ {\text{SAA}}}\), and \({ {\text{v}}}^{ {\text{SBPHA}}} {\rm {=}} { {\text{v}}}^{ {\text{SAA}}}\). It can be concluded that SBPHA is equivalent to SAA.

For PHA: Under specified assumptions and for \({\text{M}} {\rm {=}} {\text{S}}\) and\({{\ }}{ {\text{N}}}_{ {\text{m}} {\rm {=}} {\text{1}} {\rm {,\dots ,}} {\text{M}}} {\rm {=}} {\text{1}}\), SBPHA=PHA.

Let’s consider a two-stage SP problem with finite number of scenarios \({ {{\ }} {{\xi }}}_{ {\text{s}}}\), \({\text{s}} {\rm {=}} {\text{1}} {\rm {,\dots ,}} {\text{S}}\), and each scenario occurs with a probability \({ {\text{p}}}_{ {\text{s}}}\), where \(\sum ^{ {\text{S}}}_{ {\text{s}} {\rm {=}} {\text{1}}}{{ {\text{p}}}_{ {\text{s}}} {\rm {=}} {\text{1}}}\). Consider SBPHA with samples as the individual scenarios, e.g., \({\text{M}} {\rm {=}} {\text{S}}\) and \({{\ }}{ {\text{N}}}_{ {\text{m}} {\rm {=}} {\text{1}} {\rm {,\dots ,}} {\text{M}} {\rm {,\ }}} {\rm {=}} {\text{1}} {\rm {,\ }}\)where\({{\ \ \ }} {\text{m}} {{\ne }} {\text{m}} {\rm {'}}\). It can be concluded that\({ {{\ \ }} {\text{P}}}_{ {\text{m}}} {\rm {=}}{ {\text{p}}}_{ {\text{s}}}\). If weight for the best incumbent solution and update factor for the weight of the best incumbent solution are equal to 1 and 0, consecutively, at every iteration \({\rm {(}}{ {{\alpha }}}^{ {\text{k}}} {{:=}} {\text{1}},{ {{\Delta }}}_{ {{\alpha }}} {\rm {=}} {\text{0}} {\rm {)}}\), then \({\overline{\overline{ {\text{x}}}}}^{ {\text{k}}} {\rm {:=}}{\overline{ {\text{x}}}}^{ {\text{k}}} {{:=}}\sum _{ {\text{s}} {{\in }} {\text{S}}}{{ {\text{p}}}_{ {\text{s}}}{ {\text{x}}}^{ {\text{k}}}_{ {\text{s}}}}\), and \({ {\text{x}}}^{ {\text{SBPHA}}} {\rm {=}}{ {\text{x}}}^{ {\text{PHA}}} {{\ }}\)and \({{\ }}{ {\text{v}}}^{ {\text{SBPH}} {\text{A}}} {\rm {=}}{ {\text{v}}}^{ {\text{PHA}}}\). \(\square\)

Proposition 2

(Convergence): SBPHA algorithm converges and terminates with the best solution encountered.

Proof

We prove this by contradiction. Assume that SBPHA finds, at iteration k , the best solution as \(x_{best}=x^{*}.\) Let’s assume that SBPHA algorithm converges to a solution \(x^{'} \ne x_{best}\) which has a worse objective value than \(x^{*}\) (with respect to the reference scenario set). Note that convergence implies \(x^{k}=x^{k-1}=x^{'}\) (assuming \(k_{max}=\infty\) and \(\varepsilon =0\) ). Further in the last update, we must have \(x^{k}=x^{'}= \alpha ^{k}x^{'} + \left( 1- \alpha ^{k} \right) x_{best}\) . Since \(\alpha ^{k}<1\) , this equality is satisfied if and only if \(x^{'}=x_{best}\) which is a contradiction. \(\square\)

Proposition 3

SBPHA and d-SBPHA algorithms have the same convergence properties as SAA with respect to the sample size.

Proof

It is showed in Ahmed and Shapiro (2002) and Ruszczynski and Shapiro (2003b) that SAA converges with probability one (w.p.1) to optimal solution of the original problem as sample size, \(\left( N \rightarrow \infty \right)\), increases to infinity. Since Step 1 in SBPHA is the implementation of SAA and that SBPHA does converge to the best solution found (Proposition 2), we can simply argue that SBPHA and d-SBPHA converges to optimal solution of the original problem as SAA does with increasing sample size. Furthermore, since SBPHA and d-SBPHA guarantee a better or same solution quality as SAA provides, we can conjecture that SBPHA and d-SBPHA have more chance to reach the optimality than SAA with a given number of samples and sample size. \(\square\)

Proposition 4

The d-SBPHA algorithm converges to the optimum solution as \(d\rightarrow \infty\).

Proof

Given that d-SBPHA is not allowed finding the same solution, in the worst case, the d-SBPHA iterates as many times as the number of feasible solutions (infinite in the continuous and finite in the discrete case) for the first stage decisions before it finds the optimum solution. Note that the proposed algorithm is effective for the problems where the first stage decision variables are binary.

Clearly, as the number of discarding constraints added increases linearly with the number discarding iterations, the resulting problems become more difficult to solve. However, in our experiments for a particular problem type, we observed that, in majority of the experiments, d-SBPHA finds the optimal solution in less than 10 discarding iterations. \(\square\)

3.4.3 Practical aspects of the study

The application areas of SP are vast because of its characteristic in terms of representing real world problems better by considering the uncertainty nature of the environment. Among the SP approaches the most commonly used one is the two-stage SP. The proposed algorithms provide effectiveness in terms of solution quality and efficiency in terms of solution time to two-stage SP problems especially for those that have binary first stage decision variables. Disaster management is one of the fields that includes high level of uncertainty (Rath et al. 2016; Caunhye et al. 2016). For such problems the proposed SBPHA and d-SBPHA algorithms are very effective tools to apply. Besides, several more applications areas can be counted to apply the proposed algorithms, such as supply chain (Tolooie et al. 2020), end-of-life vehicles (Karagoz et al. 2022), sharing economy (Aydin et al. 2022), workforce capacity planning (Kafali et al. 2022), unit commitment (Håberg 2019), production planning (Islam et al. 2021), power dispatching (Mohseni-Bonab and Rabiee 2017), energy (Talari et al. 2018), debris management (Deliktaş et al. 2023), etc.. Since d-SBPHA provides optimal or near optimal solutions, thanks to its powerful convergence property. Accordingly, d-SBPHA can be applied when decision makers need accurate solutions, and choose to use SBPHA when solution time is more valued than the accuracy.

4 Experimental study

We now describe the experimental study performed to investigate the computational and solution quality performance of the proposed SBPHA and d-SBPHA for solving two-stage SP problems. We benchmark the results of SBPHA and d- SBPHA with those of SAA. All algorithms are implemented in Matlab R2010b and integer programs are solved with CPLEX 12.1. The experiments are conducted on a PC with Intel(R) Core 2 CPU, 2.13 GHz processor and 2.0 GB RAM running on Windows 7 OS. Next, we describe the test problem, CRFLP, in Section 3.1 and experimental design in Section 3.2. In Section 3.3, we report on the sensitivity analysis results of SBPHA and d-SBPHA’s performance with respect to algorithm’s parameters. In Section 3.4, we present and discuss the benchmarking results.

4.1 Capacitated reliable facility location problem (CRFLP)

Facility location is a strategic supply chain decision and require significant investments to anticipate and plan for uncertain future events (Owen and Daskin 1998; Melo et al. 2009). An example of such uncertain supply chain events is the disruption of facilities that are critical for the ability to efficiently satisfy the customer demand (Schütz et al. 2009). These disruptions can be either natural disasters, i.e., earthquake, floods, or man-made, i.e., terrorist attacks (Murali et al. 2012), labor strikes. For a detailed review of uncertainty considerations in facility location problems, readers are referred to Arya et al. (2004), Pandit (2004), Snyder and Daskin (2005), Snyder (2006), Resende and Werneck (2006), Shen et al. (2011), Li et al. (2013), An et al. (2014), Yun et al. (2014), Albareda-Sambola et al. (2015). In practice, capacity decisions are considered jointly with the location decisions. Further, the capacities of facilities often cannot be changed (or at a reasonable cost) in the event of a disruption. Following a facility failure, customers can be assigned to other facilities only if these facilities have sufficient available capacity. Thus capacitated reliable facility location problems are more complex than their uncapacitated counterparts (Shen et al. 2011). Gade (2007) applied the SAA in combination with a dual decomposition method to solve CRFLP. Later, Aydin and Murat (2013) proposed a swarm intelligence based SAA algorithm to solve CRFLP

We now introduce the notation used for the formulation of CRFLP. Let \(F_R\), and \(F_U\) denote the set of possible reliable and unreliable facility sites, accordingly, \(F=F_R\bigcup {F_U}\), denote the set of all possible facility sites, including the emergency facility, and \(\mathcal {D}\) denote the set of customers. Let \(f_i\) be the fixed cost for locating facility\(\ i\in F\), which is incurred if the facility is opened, and \(d_j\) be the demand for customer\(\ j\in \mathcal {D}\). The\(\ c_{ij}\) denotes the cost of satisfying each unit demand of customer j from facility i and includes such variable cost drivers as transportation, production, and so forth. There are failure scenarios where the unreliable facilities can fail and become incapacitated to serve any customer demand. In such cases, demand from customers need to be allocated between the surviving facilities and emergency facility (\({\text{f}}_{\text{e}}\)) subject to capacity availability. Each unit of demand that is satisfied by the emergency facility cause a large penalty\(\ (h_j)\) cost. This penalty can be incurred due to finding an alternative source or due to the lost sale. Lastly, the facility i has limited capacity and can serve at most \(b_i\)units of demand.

We formulate the CRFLP as a two-stage SP problem. In the first stage, the location decisions are made before the random failures of the located facilities. In the second stage, following the facility failures, the customer-facility assignment decisions are made for every customer given the facilities that have not failed. The goal is to identify the set of facilities to be opened while minimizing the total cost of open facilities and the expected cost of meeting demand of customers from the surviving facilities and the emergency facility. In the scenario based formulation of CRFLP, let s denote a failure scenario and the set of all failure scenarios is\(\ S\), where\(\ s\in S\). Let \(p_s\) be the occurrence probability of scenario s and \(\sum _{s\in S}{p_s=1}\). Further let\(\ k^s_i\) denote whether the facility i survives (i.e., \(k^s_i=1\)) and \(k^s_i=0\) otherwise. For instance, in the case of independent facility failures, we have \({\left| S\right| =2}^{\left| F_U\right| }\) possible failure scenarios.

The binary decision variable \(x_{i}\) specifies whether facility i is opened, and the variable \(y_{ij}^{s}\) specifies the rate of demand of customer j is satisfied by facility i in scenario s . The scenario based formulation of the CRFLP as a two-stage SP is as follows:

Subject to

4.2 Experimental setting

We used the test data sets provided in Zhan (2007) which are also used in Shen et al. (2011) for URFLP. In these data sets, the coordinates of site locations (facilities, customers) are i.i.d and sampled from \(\text{U}\left[ \text{0,1}\right] {\times U}\left[ \text{0,1}\right]\). The sets of customer and facility sites are identical. The customer demand is also i.i.d., sampled from\({\ U}\left[ \text{0,1000}\right]\), and rounded to the nearest integer. The fixed cost of opening an unreliable facility is i.i.d. and sampled from\({\ U}\left[ \text{500,1500}\right]\), and rounded to the nearest integer. For the reliable facilities, we set the fixed cost to \(\text{2,000}\) for all facilities. The variable costs\({\ }{\text{c}}_{\text{ij}}\) for customer \(\text{j}\) and facility i (excluding the emergency facility) are chosen as the Euclidean distance between sites. We assign a large penalty cost, (\(\text{20}\)), \(h_j\) for serving customer \(\text{j}\) from emergency facility. Zhan (2007) and Shen et al. (2011) consider URFLP and thus their data sets do not have facility capacities. In all our experiments, we selected identical capacity levels for all facilities, i.e., \({\text{b}}_{\rm {i=1,..,|F|}}\rm {=2,000}\). Datasets used in our study is given in Appendix B Table 10.

In generating the failure scenarios, we assume that the facility failures are independently and identically distributed according to the Bernoulli distribution with probability\({{\ q}}_{\text{i}}\), i.e., the failure probability of facility\(\ \text{i}\). We experimented with two sets of failure probabilities; first set of experiments consider uniform failure rates, i.e., \({\text{q}}_{\text{i}{\in }{\text{F}}_{\text{U}}}\rm {=q}\) where\(\ \rm {q=}{\{}\rm {0.1,\ 0.2,\ 0.3}{\}}\), and the second set of experiments consider bounded non-uniform failure rates i.e.\({{\ q}}_{\text{i}}\), where \({{\ q}}_{\text{i}}{\le }\rm {0.3}\). We restricted the failure probabilities with \(\rm {0.3}\) since larger failure rates are not realistic. The reliable facilities and emergency facility are perfectly reliable, i.e.,\({\ }{\text{q}}_{\text{i}{\in }\rm {(}{\text{F}}_{\text{R}}{\cup }{\text{f}}_{\text{e}}\rm {)}}\rm {=1}\). Note that in the case, where\(\ {\text{q}}_{\text{i}{\in }{\text{F}}_{\text{U}}}\rm {=0}\), corresponds to the deterministic fixed-charge facility location problem. The failure scenarios \(\text{s}{\in }\text{S}\) are generated as follows. Let \({\text{F}}^{\text{s}}_{\text{f}}{\subset }{\text{F}}_{\text{U}}\) denote the facilities that are failed, and\({\ }{\text{F}}^{\text{s}}_{\text{r}{{\in }\text{F}}_{\text{U}}}{\equiv }{\text{F}}_{\text{U}}\backslash {\text{F}}^{\text{s}}_{\text{f}}\) be the set of surviving facilities in scenario\({\ s}\). The facility indicator parameter in scenario \(\text{s}\) become \({\text{k}}^{\text{s}}_{\text{i}}\)=0 if \(\text{i}{\in }{\text{F}}^{\text{s}}_{\text{f}}\), and \({\text{k}}^{\text{s}}_{\text{i}}\)=1 otherwise, e.g., if \(\text{i}{\in }{\text{F}}^{\text{s}}_{\text{r}}{\cup }{\text{F}}_{\text{R}}{\cup }{\{}{\text{f}}_{\text{e}}\}\). The probability of scenario \(\text{s}\) is then calculated as \({\ }{\text{p}}_{\text{s}}\rm {=}{\text{q}}^{\left| {\text{F}}^{\text{s}}_{\text{f}}\right| }{\rm {(1-q)}}^{\left| {\text{F}}^{\text{s}}_{\text{r}}\right| }\).

In all experiments, we used \(\left| \mathcal {D}\right| \rm {=}{\text{F}}_{\text{U}}\bigcup {{\text{F}}_{\text{R}}}\rm {=12+8=20}\) sites which is a large-sized CRFLP problem and is more difficult to solve than the uncapacitated version (URFLP). The size of the failure scenario set is\(\ \left| \text{S}\right| \rm {=4,096}\). The deterministic equivalent formulation has \(\text{20}\) binary \({\text{x}}_{\text{i}}\) \(\rm {1,720,320=}\left| \text{F}\right| {\times }\left| \text{D}\right| {\times }\left| \text{S} \right|\) \(=21\times 20\times 4,096\) continuous \({\text{y}}^{\text{s}}_{\text{ij}}\) variables. Further, there are \(\rm {1,888,256=}\) \(\rm {81,920+1,720,320+86,016=}\left| \text{D}\right| {\times }\left| \text{S}\right| \rm {+}\left| \text{F}\right| {\times }\left| \text{D}\right| {\times }\left| \text{S}\right| \rm {+\ }\left| \text{F}\right| {\times }\left| \text{S}\right|\) constraints corresponding to constraints (12)-(14) in the CRFLP formulation. Hence, the size of the constraint matrix of the deterministic equivalent MIP formulation is \({1,720,320\times 1,888,256}\) which cannot be tackled with exact solution procedures (e.g., branch-and-cut or column generation methods). Note that while solving LPs with this size is computationally feasible, the presence of the binary variables makes the solution a daunting task. We generated sample sets for SAA and the SBPHA (and d-SBPHA) by randomly sampling from \(\text{U}\left[ \text{0,1}\right]\) as follows. Given the scenario probabilities, \({\text{p}}_{\text{s}},\) we calculate the scenario cumulative probability vector \(\{{\text{p}}_{\text{1}},\left( {\text{p}}_{\text{1}}\rm {+}{\text{p}}_{\text{2}}\right) \rm {,\dots ,(\ }{\text{p}}_{\text{1}}\rm {+}{\text{p}}_{\text{2}}\rm {+\dots +}{\text{p}}_{\left| \text{S}\right| \rm {-1}}\rm {),\ 1}{\}}\) which has \(\rm {|S|}\) intervals. We first generate the random number and then select the scenario corresponding to the interval containing the random number. We tested the SAA, SBPHA, and d-SBPHA algorithms with varying number of samples\({\ (M)}\), and sample sizes\({\ (N)}\). Whenever possible, we use the same sample sets for all three methods. We select the reference set (\({\text{N}}^{\rm {'}}\)) as the entire scenario set, i.e., \({\text{N}}^{\rm {'}}\rm {=S}\) which is used to evaluate the second stage performance of a solution. We note that this is computationally tractable due to relatively small number of scenarios and that the second stage problem is an LP. In case of large scenario set or integer second stage problem, one should select\(\ {\text{N}}^{\rm {'}}{\ll }\text{S}\).

4.3 Parameter sensitivity

In this subsection, we analyze the sensitivity of SBPHA with respect to the weight for the best incumbent solution parameter\(\rm {(}{\alpha }\rm {)}\), penalty factor\(\rm {(}{\rho }\)), and update parameter for the penalty factor\({\ (}{\beta }\rm {)}\). Recall that\({\ }{\alpha }\) determines the bias of the best incumbent solution in obtaining the samples’ balanced solution, which is obtained as a weighted average of the best incumbent solution and the samples’ probability weighted solution. The parameter \({\rho }\) penalizes the Euclidean distance of a solution from the samples’ balanced solution and \({\beta }\) is the multiplicative update parameter for \({\rho }\) between two iterations. In all these tests, we set\(\ (\rm {M,\ N)=(5,\ 10)}\), and\({\ q=0.3}\) unless otherwise is stated. We experimented with two \({\alpha }\) strategies, static and dynamic\(\ {\alpha }\). We solved in total 480\({(=10\ replications\ \times 48\ parameter\ settings)}\) problem instances.

The summary results of solving CRFLP using 10 independent sample sets (replications) with static strategy \({\alpha }\)=0.6,\(\ {\beta }\rm {=}{\{}\rm {1.1,1.2,1.3,1.4,1.5,1.8}{\}}\), and \({\rho }\rm {=}{\{}\text{1,20,40,80,200}{\}}\) are presented in Table 1. The detailed results of the 10 replications of Table 1 together with the detailed replication results with static strategy for \({\alpha }\)=\({\{}\)0.6,0.7,0.8\({\}}\) and dynamic strategy \({\Delta }{\alpha }\rm {=}{\{}\rm {0.02,0.03,0.05}{\}}\) are presented in Appendix A, Table 5.

The first column in Table 1 shows the \({\alpha }\) strategy and its parameter value. Note that in the dynamic strategy, we select the initial value as\({\ }{{\alpha }}^{\rm {k=0}}\rm {=1}\) in Appendix A, Table 5. The second and third columns show penalty factor\({\ (}{\rho }\)) and update parameter for the penalty factor\({\ (}{\beta }\rm {)}\), consecutively. The objective function values for the \(\text{10}\) replications (each replication consists of \({M=5}\) samples) are reported in columns\({\ 4-13}\) (shown only for replications\({\ 1}\),\({\ 2}\) and \(\text{10}\) in Table 1 and detailed results are shown in Appendix A, Table 5). The first column under the “Objective” heading presents the average objective function value across \(\text{10}\) replications, and the second column under the “Objective” heading presents the optimality gap (i.e., \(\text{ga}{\text{p}}_{\text{1}}\)) between the average replication solution and the best objective function value found, which is\({\ 8995.08}\)Footnote 1, while the third and fourth columns under the “Objective” heading present the minimum and maximum objective values across \(\text{10}\) replications. Average objective function value and \(\text{ga}{\text{p}}_{\text{1}}\) are calculated as follows:

where \(\text{Rep}\) is the number of replications, e.g., \(\rm {Rep=10}\) in this section’s experiments.

In the last column, we report on the computational (CPU) time in seconds for tests. The complete results on CPU times are provided in Appendix A, Table 6. First observation from Table 1 is that the SBPHA is relatively insensitive to the \(\alpha\) strategy employed and the parameter settings selected. Secondly, we observe that the performance of SBPHA with different parameter settings depends highly on the sample (see Table 5). As seen in Table 5 replication 7, most of the configurations show good performance as they all obtain the optimal solution. Further, as the \(\Delta _{ \alpha }\) increases, the best incumbent solution becomes increasingly more important leading to decreased computational time. While some parameter settings exhibit good performance in solution quality, their computational times are also higher, and vice versa.

In selecting the parameter settings for SBPHA, we are interested in a parameter setting that offers a balanced trade-off between the solution quality and the solution time. In order to determine such parameter settings, we developed a simple, yet effective, parameter configuration selection index. The selection criterion is the product of average \(gap_{1}\) and CPU time. Clearly, smaller the index value, the better is the performance of the corresponding parameter configuration. To illustrate, using the results of the first row in Table 1, the index of static \(\alpha =0.6\) , with starting penalty factor \(\left( \rho \right)\) \(=1\) and penalty factor update \(\beta =1.8\) , is calculated as \(19.00 \left( =4.039\% \times 470.4 \right)\) . Parameter selection indexes corresponding to all 480 experiments are shown in Table 2. According to the aggregate results in Table 2 (the ‘Total’ row at the bottom), the static \(\alpha =0.7\) setting is the best, the static \(\alpha =0.6\) is the second best, and dynamic \(\alpha\) with \(\Delta _{ \alpha }=0.03\) provides the third best performance. Hence, we use only these \(\alpha\) parameter configurations in our experiments. In terms of penalty parameter configuration, we select the best setting, i.e., starting penalty factor \(\left( \rho \right) =200\) and penalty factor update \(\beta =1.1\) for all \(\alpha\) parameter configurations. Note that by selecting a larger starting penalty factor, the SBPHA would converge faster but the quality of the solution converged would be lower. Therefore, we restricted our experiments in terms of penalty factor to be at most 200 (\(\rho\) ).

Further, among all the experiments, the best parameter configuration in terms of index is with static \(\alpha =0.6\) , when starting penalty factor \(\rho =80\) and update parameter \(\left( \beta \right) =1.2\) . In Tables 1 and 5, this configuration of parameter selection provides the best average gap performance and a good CPU time performance. Hence we also included this parameter configuration in our computational performance experiments.

In the remainder of the computational experiments we used sample size and number as \(\left( M. N \right) = \left( 5. 10 \right)\) . which enables the SBPHA to search the solution space while maintaining computational time efficiency.

4.4 Computational performance of SBPHA and d-SBPHA

In this sub-section, we first show the performance of the d-SBPHA on improving the solution quality of SBPHA and then compare the performances of the SAA and the proposed d -SBPHA algorithm. In the remainder of the experiments, with an abuse of the optimality definition, we refer to the best solution as the “exact solution" . This solution is obtained by selecting the best amongst all SBPHA, d -SBPHA, and SAA solutions and the time-restricted solution of the CPLEX.

4.4.1 Analysis of d-SBPHA and SBPHA

Figure 1 shows effect of discarding strategy on the solution quality for different facility failure probabilities. In all figures, results are based on the average of 10 replications. Optimality gap (shown as ‘Gap’) is calculated as in (18) but substituted \(v_{r}^{SBPHA}\) with \(v_{best.r}^{d-SBPHA}\) in (17) to calculate \(v_{Rep}^{Average}\). First observation is that the d-SBPHA not only improves solution quality but also finds the optimal solution in most facility failure probabilities cases. When failure probability is \(q=0.1\), d-SBPHA converges to optimal solution in less than 5 discarding iterations with all parameter configurations (Fig. 1a). When \(q=0.2\), d-SBPHA converges to optimal solution in all static \(\alpha\) strategies in less than 5 discarding strategies, and less than \(0.2\%\) optimality gap with dynamic \(\alpha\) strategy (Fig. 1b).

Further, when failure probability is 0.3, d-SBPHA is not able converge to optimal solution; however it converges to solutions that are less than \(1\%\) away from the optimal on average (Fig. 1c). Note that these results are based on the average of 10 replications, and at least 5 out of 10 replications are converged to optimal solution in all parameter configurations. Detailed results are provided in the next section.

Lastly, when failure probability is randomized, d-SBPHA converges to optimal solution in 10 or less discarding iterations, with three out of the four selected parameter configurations and less than \(0.5\%\) gap (Fig. 1d). Hence, we conclude that discarding improves the solution performance and the improvement rates depend on the parameter configuration and the problem parameters. Reader is referred to Table 8 in Appendix A for detailed results.

Next, we present the CPU time performance of d-SBPHA for 10 discarding iterations (Fig. 2). Note that time plotted is cumulative over discarding iterations. Time of each discarding iteration is based on average of 10 replications and is reported in seconds.

First observation is that the CPU time is linearly increasing or increasing at a decreasing rate. Further, the solution time is similar for all facility failure probabilities (Fig. 2a–d) with all parameter configurations.

All results are provided in the Appendix A in Table 9. One main reason for linearly increasing CPU time is that the d-SBPHA makes use of the previously encountered solution information. In particular, dSBPHA does not test the reference sample ( \(N'\) ) performance of any first stage solution that is encountered and already tested before. This is achieved by maintaining a library of first stage solutions and corresponding reference sample ( \(N'\) ) performance in a dynamic table.

4.4.2 SAA, SBPHA and d-SBPHA comparison

In this sub-section, we compare the performances of the SBPHA, d-SBPHA, and SAA. First, we analyze the performance of the proposed SBPHA and d-SBPHA with respect to that of the exact method and the SAA method with different sample sizes (N) and number of samples (M). Here, for the sake of simplicity and to explain clearer, we randomly selected a parameter configuration, in which \(\alpha =0.7. \rho =200\) , and \(\beta =1.1\) , which is not the worst or the best parameter configuration.

Tables 3 and 4 illustrate these benchmark results for \(q= \{ 0.1, 0.2, 0.3, \text {and} \ \text {random} \}\) for one of the replications and, then, the average results across all replications are shown in Fig. 3. The second column for SAA shows number of samples and sample size. i.e., ( M, N ). For dSBPHA, it shows number of replications \(r, \left( M,N \right)\) , and number of discarding iterations ( d ). Note that when the number of discarding iterations \(d=0\), dSBPHA becomes SBPHA. Third column, \(F^{*},\) indicates the solution converged by each method, e.g., facilities opened. For instance with \(q=0.1 \ \text {and} \ \left( M, N \right) = \left( 5, 10 \right)\), the SAA’s solution is to open facilities \(F^{*}= \{ 1, 2, 8, 10, 12 \}\) whereas the SBPHA opens facilities \(F^{*}= \{ 1, 2, 4, 10, 12 \}\). 2-SBPHA and exact (optimal) solution opens facilities \(F^{*}= \{ 1, 2, 10, 11, 12 \}\).

Fourth column presents the objective function value for SAA, SBPHA, d-SBPHA and exact method, \(v^{SAA}, v^{SBPHA}, v_{best}^{d-SBPHA}\) and \(v^{*}\) . Fifth column presents CPU time and the sixth column shows the optimality gap \(\left( Gap_{2} \right)\) measures. Reported time for d-SBPHA is the average time of converged solution that is found during the discarding iterations. Gap2 for SAA, SBPHA and d -SBPHA uses the optimal solution value \(v^{*},\) and it is defined as.

Tables 3 and 4 show that with larger sample sizes the objective function on the SAA’s objective function is not always monotonously decreasing while the CPU time increases exponentially. The observation about the time is in accordance with those in Fig. 3. SAA finds optimal solution only when \(N=75\) for \(q=0.1\) and cannot find optimal solution for \(q=0.2\) with any of M-N configurations. SAA also finds optimal solution for \(q=0.3\) only when M =20 and N =75 in more than 13, 000 seconds and shows relatively good performance for random q when M= 20. d-SBPHA finds optimal solution in all facility failure probabilities.

Results for dSBPHA ( \(d=10\) ) in Fig. 3 are for all four parameter settings; first setting is for static \(\alpha =0.6, \ \rho =200\) and \(\beta =1.1\), second is static \(\alpha =0.7, \ \rho =200\) and \(\beta =1.1,\) third is static \(\alpha =0.6, \ \rho =80\) and \(\beta =1.2\) and fourth one is dynamic \(\Delta _{ \alpha }=0.03, \ \rho =200\) and \(\beta =1.1\) .

In Fig. 3, we present the CPU time and solution quality performances of the SAA for N= \(\{ 10, 25, 40, 50, 75 \}\) sample sizes and compare with that of the proposed d-SBPHA, in which \(d=10\) and N=10 in solving CRFLP with failure probabilities \(q= \{ 0.1, 0.2, 0.3, random \}\) . We use 5 (M) samples in both SAA and four different parameter configurations in the proposed method. Different number of samples would increase the solution time of SAA and SBPHA. However, as seen from results, SBPHA already captures the optimal solution in majority of the experiments with only with five samples in less time than the SAA. Therefore, increasing number of samples would not make any difference on the comparison of the SAA and SBPHA in terms of performance. The results indicate that the sample size effect the SAA’s CPU time. For instance, the CPU time of the SAA is growing exponentially. Furthermore, in none of the failure probability cases, the solution quality performance of SAA has converged to that of d-SBPHA. The solution quality of all four configurations of the proposed d-SBPHA are either optimal or near optimal. The gap in Fig. 3 is calculated as in Fig. 1 and the CPU time of d-SBPHA shows the average CPU time when the converged solution is found during the discarding iterations.

5 Conclusion

In this study we have developed hybrid algorithms for two-stage SP problems, specifically for CRFLP. The proposed algorithms are hybridization of two existing methods. The first one is the Monte Carlo sampling based algorithm, which is called SAA. SAA method provides an attractive approximation for SP problems when the number of uncertain parameters increases. The second algorithm is PHA, which is an exact solution methodology for SP problems. The research presented in this study mainly addresses two issues that arise when using SAA and PHA methods individually; lack of effectiveness in solution quality of SAA and lack of efficiency in computational time of PHA.

The first proposed algorithm is called SBPHA, which is the integration of SAA and PHA. This integration considers each sample as a small deterministic problem and employs the SAA algorithm iteratively. In each iteration, the non-anticipativity constraints is injected into the solution process by introducing penalty terms in the objective that guides the solution of each sample to the samples’ balanced solution and to ensure that non-anticipativity constraints are satisfied. The two key parameters of SBPHA are the weight of the best incumbent solution and the penalty factor.

The weight for the best incumbent solution adjusts the importance given to samples’ best found solution and to the most recent average sample solution in calculating the balanced solution. The penalty factor modulates the rate at which the sample solutions converge to the samples’ balanced solution. Given that the best found solution improves over time, we propose two strategies for the weight of the best incumbent solution: static versus dynamic strategy.

We first conducted experiments for sensitivity analysis of the algorithm with respect to the parameters. The results show that the SBPHA’s solution quality performance is relatively insensitive to the choice of strategy for the weight of the best incumbent solution. i.e., both the static and dynamic strategies are able to converge to the optimum solution. SBPHA is able to converge to the optimal solution even with small number of samples and small sample sizes.

In addition to the sensitivity experiments, we compared the performances of SBPHA and d-SBPHA with SAA’s. These results show that the SBPHA and d-SBPHA are able to improve the solution quality noticeably with reasonable computational effort compared to SAA. Further, increasing SAA’s sample size to match the solution quality performance of SBPHA or dSBPHA requires significant computational effort which is not affordable in many practical instances.

The contributions of this research are as follows:

Contribution 1: Developed SBPHA, which provides a configurable solution method that improves the sampling based methods’ accuracy and PHA’s efficiency for stochastic two-stage CRFLP.

Contribution 2: Enhanced SBPHA for SPs with binary first stage decision variables. The improved algorithm is called Discarding-SBPHA (d-SBPHA). It is analytically proved that SBPHA guarantee optimal solution to the mentioned problems when the number of discarding iterations approaches to infinity.

In addition to all the advantages introduced by the proposed algorithms, there are some limitations to discuss. First, the proposed algorithms provide very accurate solutions to the problems that include binary first stage decision variables. However, the cases with general integer or continuous first stage decision variables are not tested and the performances of the proposed algorithms need to be examined. Second, the experimental study conducted on a problem that has continuous second stage decision variables. Even though the SBPHA does not require continuous second stage decision variables, this circumstance still need to be inspected. Lastly, the hyper-tuning parameters of the algorithms need to be re-optimized when the application area or the dynamics of the problem changes.

Besides and parallel to the limitations mentioned, there are several possible avenues for future research on the algorithms. First opportunity is to investigate the integration of alternative solution methodologies to improve the convergence rate and solution quality, i.e., Stochastic Decomposition (SD), Stochastic Dual Dynamic Programming (SDDP), L-Shaped decomposition, Benders Decomposition (BD) etc. Second extension is the research on the application of the d-SBPHA to the SPs that have linear first stage decision variables. Third extension is the investigations on the development of a general strategy for the SBPHA and d-SBPHA specific parameters to reduce the computational effort spent on the parameter sensitivity steps. Fourth extension is the adaptation of the ideas of d-SBPHA to multistage SPs. Lastly, using proposed algorithms a black box type optimization tool may be developed for the two-stage SP problems, i.e., finance (Uğur et al. 2017; Savku and Weber 2018).

Notes

The best solution is obtained by selecting the best amongst all SBPHA solutions (e.g., out of 480 solutions) and the time-restricted solution of the CPLEX. The latter solution is obtained by solving the deterministic equivalent using CPLEX method with %0.05 optimality gap tolerance and 10 hours (36,000 seconds) of time limit until either the CPU time-limit is exceeded or the CPLEX terminates due to insufficient memory.

References

Ahmed S, Shapiro A (2002) The sample average approximation method for stochastic programs with integer recourse. Georgia Institute of TechnologyGeorgia Institute of Technology, Atlanta

Albareda-Sambola M, Hinojosa Y, Puerto J (2015) The reliable p-median problem with at-facility service. Eur J Oper Res 245(3):656–666

An Y, Zeng B, Zhang Y, Zhao L (2014) Reliable p-median facility location problem: two-stage robust models and algorithms. Transp Res Part B 64:54–72

Arya V, Garg N, Khandekar R, Meyerson A, Munagala K, Pandit V (2004) Local search heuristics for k-median and facility location problems. SIAM J Comput 33(3):544–562

Atakan S, Sen S (2018) A Progressive Hedging based branch-and-bound algorithm for mixed-integer stochastic programs. Comput Manag Sci 15(3–4):501–540

Aydin N (2016) A stochastic mathematical model to locate field hospitals under disruption uncertainty for large-scale disaster preparedness. Int J Optim Control 6(2):85–102

Aydin N (2022) Decision-dependent multiobjective multiperiod stochastic model for parking location analysis in sustainable cities: evidence from a real case. J Urban Plan Dev 148(1):05021052

Aydin N, Murat A (2013) A swarm intelligence based sample average approximation algorithm for the capacitated reliable facility location problem. Int J Prod Econ 145(1):173–183

Ayvaz B, Bolat B, Ayd\(\iota\)n N (2015) Stochastic reverse logistics network design for waste of electrical and electronic equipment. Resour Conserv Recycl 104:391–404

Aydin N, Şeker Ş, Deveci M (2022) Multi-objective optimization of car sharing points under uncertainty for sustainable transportation. IEEE Transactions on Engineering Management

Barnett J, Watson JP, Woodruff DL (2017) BBPH: using progressive hedging within branch and bound to solve multi-stage stochastic mixed integer programs. Oper Res Lett 45(1):34–39

Barro D, Canestrelli E (2005) Dynamic portfolio optimization: time decomposition using the maximum principle with a scenario approach. Eur J Oper Res 163(1):217–229