Abstract

In the current landscape of ever-increasing levels of digitalization, we are facing major challenges pertaining to data volume. Recommender systems have become irreplaceable both for helping users navigate the increasing amounts of data and, conversely, aiding providers in marketing products to interested users. Data-driven models are susceptible to data bias, materializing in the bias influencing the models’ decision-making. For recommender systems, such issues are well exemplified by occupation recommendation, where biases in historical data may lead to recommender systems relating one gender to lower wages or to the propagation of stereotypes. In particular, consumer-side fairness, which focuses on mitigating discrimination experienced by users of recommender systems, has seen a vast number of diverse approaches. The approaches are further diversified through differing ideas on what constitutes fair and, conversely, discriminatory recommendations. This survey serves as a systematic overview and discussion of the current research on consumer-side fairness in recommender systems. To that end, a novel taxonomy based on high-level fairness definitions is proposed and used to categorize the research and the proposed fairness evaluation metrics. Finally, we highlight some suggestions for the future direction of the field.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recommender systems have become integral parts of modern digital society. An exponential increase in data poses significant challenges to users and consumers, who cannot feasibly sift through everything to find what they are looking for. Recommender systems help mitigate these challenges by capturing their users’ preferences and presenting them with prioritized options. Thus, recommender systems have seen widespread application in e-commerce, multimedia platforms, and social networks. Their tactical relevance in the industry has led to a high degree of cooperation between the industry and academia in further developing the field (Jannach et al. 2010; Ricci et al. 2022a).

In recent years, the notion of fairness in machine learning has steadily gained attention. High-profile cases have succeeded in bringing the topic to the general public’s attention, like the analysis performed by ProPublica suggesting the presence of racial bias in the COMPAS system used for predicting the likelihood of recidivism of inmates (ProPublica 2016). Subsequently, fairness challenges have also been identified for recommender systems, and the works of Burke et al. (2018) formalized the presence of multi-stakeholder fairness dynamics mirroring the multi-stakeholder nature of recommender systems. Provider stakeholders may take issue if their products are disproportionally less exposed than similar popular products. Career seekers may feel discriminated against if they are predominantly recommended careers that are stereotypically and historically associated with their gender. An increased focus on fairness in recommender systems is not only ethically beneficial for society as a whole but also helps the actors applying them in satisfying an increasingly fairness-aware user base and retaining good relations and cooperation with providers (Ekstrand et al. 2022).

While provider-side fairness research has a dominant subgroup in research pursuing popularity bias, which is the notion of disproportional amounts of attention given to popular items, consumer-side fairness research has a greater focus on group-based fairness relating to demographic information of the users, i.e., making sure that users are not discriminated against based on aspects such as race, gender, or age. Despite the focus on a specific high-level fairness setting, consumer-side fairness in recommender systems displays a high degree of variation in approaches. The approaches for introducing fairness awareness take place in all parts of the recommender system pipeline, span most established and upcoming model architectures, and are designed to satisfy various fairness definitions. Some models opt for adjusting recommendations post hoc, others modify the input data directly, while others still explicitly model the fairness-awareness. Fairness has been incorporated through penalizing discrimination during optimization, altering user representation to be more neutral, probabilistically modelling the influence of sensitive attributes, or re-ranking unaltered recommendation, all while adhering to different definitions of what discrimination and fairness entails. There is also variation in the application setting of these approaches; most adhere to the regular asymmetric setting where users and items make up fundamentally different concepts, while others consider reciprocal settings where users are recommended to other users like matchmaking. Yet another dynamic is considered in two-sided settings that seek to achieve both consumer- and provider-side fairness concurrently. Despite the great variety, the breadth of consumer-side fairness approaches has yet to be covered in detail by any existing surveys. We further argue for this claim in Sect. 3.3, where we discuss relevant surveys.

In this survey, we have systematically surveyed the existing literature that proposes and evaluates approaches considering consumer-side fairness. Critical aspects of the qualified literature are discussed, compared, and categorized, leading to the proposal of a taxonomy that highlights high-level fairness definitions and how it has been incorporated into the approaches. Further, we provide a comprehensive overview of metrics used to evaluate the fairness of the approaches and some thoughts on the field’s future directions. Our key contributions are:

-

1.

Propose a taxonomy for categorizing consumer-side fairness approaches in recommender systems based on high-level conceptual fairness definitions and how the fairness is incorporated.

-

2.

Provide a comprehensive overview, categorization, and comparison of available consumer-side fairness approaches in recommender systems and their proposed fairness evaluation.

The remaining sections of this survey include a background section on fairness definitions, terminology, related concepts, and related works; methodology covering the literature selection process and the proposed taxonomy; a detailed discussion and comparison of the identified literature; analysis of applied fairness metrics and datasets; and a final discussion of our thoughts on the future directions of the topic.

2 Background

As a primer to this survey’s core content and discussion, we introduce key established fairness concepts and terms that appear frequently or are subject to ambiguity. The background also covers a discussion of recommender systems concepts related to consumer-side fairness and a look into existing surveys on fairness in recommender systems and how this survey differs.

2.1 Recommender system definition

Recommender systems comprise many varied approaches designed for varied settings and present no single concise definition. This Section introduces recommender systems at a high level and provides a definition designed to aid the discussion of considered methods and evaluation. Readers are encouraged to consult the introduction chapter of the Recommender System Handbook (Ricci et al. 2022b) for general recommender system overviews, motivations and definitions. This survey focuses on personalized recommender systems, i.e., those that seek to accommodate different individuals with customized recommendations based on their individual preferences. The recommender systems discussed will be categorized as either rating-based or ranking-based recommender systems. When applying the notation presented in Table 1, both variations attempt to capture how a set of entities \({\mathcal {U}}\) will value another set of entities \({\mathcal {V}}\) on an individual level. \({\mathcal {U}}\) are typically exemplified as users and \({\mathcal {V}}\) as items, and the overall goal is to recommend novel items to the users. For rating-based recommender systems, the level objective is to predict individual ratings given by a user u to an item v, \(r_{uv}\), i.e., \({\hat{r}}_{uv} = r_{uv}\). Ranking-based recommender systems instead take the approach of capturing the general preferences of the users and using this to present the same users with selections of items predicted to be of the users’ liking. This selection is ranked, or ordered, according to predicted relevancy, i.e., the topmost item is the one predicted to be the most liked. The resulting objective is analogous to the rating-based objective, \({\hat{y}}_{uv} = y_{uv}\), but does present slightly different challenges owing to the non-continuous nature of ranking. Both Rating-based and Ranking-based recommender systems may adopt rating data, but Ranking-based recommender systems can more easily adopt data of a more implicit nature, e.g., interaction events.

Recommender systems are implemented using a plethora of different models and methods like Neighbourhood-based Collaborative Filtering (Nikolakopoulos et al. 2022), Matrix Factorization (Koren et al. 2022), various types of Deep Neural Networks (Zhang et al. 2022), Autoencoders (Li and She 2017), Reinforcement Learning (Afsar et al. 2022), Graph-based models (Wang et al. 2021), and various Probabilistic models. Detailed background theories of various models have been left out to avoid significant inflation of the survey’s length. However, as this is a comprehensive survey focusing on tangible model proposals, some technical details will be discussed. Readers are encouraged to consult auxiliary sources, like the provided references, when needed.

2.2 Terminology

The following definitions have been added to mitigate confusion stemming from mixing similar terms or different interpretations of specific terms. A low degree of consensus, especially within fairness-focused research, has led to multiple different terms being used for the same concept and other words like preference are contextually ambiguous.

Rating In rating-based recommender systems, we are interested in the rating given by a specific user to a specific item and is contrasted with ranking-based recommender systems. Ratings can be discrete and continuous and typically have a set range, e.g., between 1 and 5. Input and target ratings are ratings that are provided by the user and used for training and evaluating the model, while predicted ratings are produced by the model.

Ranking Ordering of items or entities where items near the top are considered more relevant than those below. A ranking outputted by a recommender system is ordered by the modelled preference of the user. Rankings are occasionally explicitly provided by users as inputs or targets but are more frequently a concept applied to evaluate whether the model can identify the most relevant target items, i.e., how high the target items ranked in the predicted ranking.

IR Ranking The field of Information Retrieval comprise an array of different approaches for retrieving information from data storage. We will consider intent the key factor separating IR Ranking and recommender systems: recommender systems seek to suggest novel, but relevant, information to their users while IR Ranking seeks to retrieve the most relevant information. Furthermore, IR Ranking approaches often involve a query and are rarely personalized.

Top-k Top k ranked item, where k is an integer indicating the number of items that are of interest. k is usually quite small, often in the range of 5-20, as user attention is a limiting factor.

Preference (score) Continuous measure of user preference used to produce rankings. Score/value/measure may be omitted in the text if the context allows it.

Ranking-based recommender systems Recommender systems that learn to rank in order to present the user with the top list of suggested items. Typically optimized for predicting if users will prefer an item over another item, or just for predicting if users will like an item.

Rating-based recommender systems Recommender systems that attempt to match ratings given to items by users, and predict new ratings given by users to unrated items. Typically optimized for quantifying how much a user will like an item.

Sensitive attribute Unifying term used to describe demographic attributes that are used to segment users into different groups for which fairness considerations are applied. Other terms such as demographic and private have been used when the groups are given equal attention, while terms like protected, minority and marginalized are used when one or more groups are of particular concern. Sensitive attribute is found to be sufficient for explaining most approaches, but more thorough explanations are provided in cases where asymmetry or special dynamics of a sensitive attribute take a more nuanced role.

Sensitive group A group of users that share a specific instance of a sensitive attribute, e.g., all male users in a setting where gender is considered a sensitive attribute.

Consumer-side fairness Fairness considerations centred on users of recommender systems (Burke et al. 2018). Consumer-side fairness definitions are concerned with fair treatment of users on individual or group level. Group-level definitions often group users by sensitive attributes, i.e., Sensitive groups.

Representation Many algorithms commonly used for recommender systems represent users and items as (high dimensional) vectors, e.g., latent factors in factorization models, embeddings in deep models and latent state in autoencoders. In general discussion, we will use representation to refer to these concepts.

Prediction performance measure A measure of Prediction Performance evaluates how close predicted recommendations are to target recommendations. Where target recommendations are assumed to reflect user preference, e.g., items rated/liked or interacted with by a user but not known during model training. Most metrics used to evaluate recommender system performance fall under this definition of Prediction Performance measures, e.g., MAE, RMSE, AUC, NDCG, MRR etc. These measures are contrasted by other utility measures discussed in this survey, e.g., statistical parity only considers predictions and not targets. The notion of Prediction Performance measures will play a key role in differentiating between fundamentally different fairness goals.

2.3 Formal fairness definitions

Several formal fairness definitions have been proposed for classification settings, especially those that influence decisions. In these settings, the models are typically tasked with outputting the most likely label or handful of labels given some input. One example could be a bank that utilizes a model to predict whether a potential customer will default should they be given a mortgage. Fairness can be highly relevant in this example, e.g., if the applied model is shown to discriminate through outputting higher probabilities that customers of a specific race or gender will default.

While some fairness definitions targeting such scenarios can be trivially adapted to the recommendation setting, others are more challenging. One such challenge relates to adaptations of definitions that rely on notions like True and False Positives/Negatives, as the interpretations and implications of these statistics may differ between general label prediction and recommender systems. True and False Positives/Negatives are not trivially derived from ratings and are not typically considered in rating-based recommender systems. Conversely, these statistics are heavily influenced by the fixed number of recommendations and the number of correct recommendations in ranking-based recommender systems. Furthermore, the implications of some definitions may be enhanced in scenarios where a positive label is deemed a positive outcome for a stakeholder, even if it was a False Positive. For instance, in our mortgage application example, the applicant will be happy if the application is accepted regardless of whether it was the correct verdict according to bank policies. In consumer-side recommendation settings, this is rarely the case. A False Positive in a top-k recommendation setting will simply be the presence of an item that the user does not care for among the top recommendations.

A selection of fairness definitions is covered here, along with accompanying descriptions of recommender system-specific adaptions. The reader is encouraged to consult Gajane (2017), Caton and Haas (2023), and Li et al. (2023) for a more in-depth discussion of formal fairness definitions in both machine learning and recommender systems. There is also ongoing research into how users perceive different fairness definitions when applied to different scenarios. Harrison et al. (2020) performs a large-scale user study targeting the perceived fairness of machine learning models, while Sonboli et al. (2021) presents a smaller user study specifically targeting recommender systems.

2.3.1 Fairness through unawareness

Fairness Through Unawareness considers any model fair as long as no explicit sensitive attributes are part of the input. This definition is widely disregarded as it fails to consider implicit information on sensitive attributes present in other parts of the input (Gajane 2017).

2.3.2 Statistical parity

Statistical parity for decision problems requires that each group has an equal probability of being assigned a positive outcome, e.g., being granted a mortgage.

Where \(\text {P}(\cdot )\) represents probability, \({\hat{y}}\) is the predicted label, and s is a sensitive attribute.

The recommendation of specific items is rarely considered inherently positive outcomes in recommender systems. Recommender systems also output ratings or rankings instead of predicted labels, which further complicates the adoption of Statistical Parity to evaluate recommender system fairness. A fairness definition for recommender systems inspired by Statistical Parity instead requires that the predicted ratings of items, or the probability of recommending them, is the same for all sensitive groups. This is further discussed in Sect. 3.2.1.

2.3.3 Equal opportunity

Equal opportunity in decision problems requires that the true positive rate of different sensitive groups is equal.

2.3.4 Equalized Odds

The Equalized Odds definition is stricter than Equal Opportunity in also requiring that the false positive rates of the different sensitive groups are equal.

As previously mentioned, false positives may benefit some stakeholders in certain scenarios. However, false positives may also be the most detrimental type of error in other scenarios. Thus, the decision to pursue Equalized Odds instead of Equal Opportunity may be motivated by a wish to balance either a boon or a bane.

2.4 Research areas related to consumer-side fairness

Many research areas within recommender systems have arisen to cover different needs as they appeared or were made known. It is not uncommon that the concepts considered in different research areas partly overlap, share underlying issues, or share similar mitigation strategies. A number of the most relevant recommender system research areas, when compared with consumer-side fairness, are listed in this section, focusing on similarities and dissimilarities. The intention of this section is two-fold: The first is to highlight related topics that may be of interest to readers and that may help put consumer-side fairness into a broader context. The second motive is to highlight dissimilarities that disqualify certain research from being covered by the scope of this survey, despite occasionally adopting fairness terminology.

2.4.1 Provider-side fairness

As the name entails, provider-side fairness takes a polar opposite view to consumer-side fairness. A significant part of the research focuses on mitigating popularity bias, which occurs when popular items are given disproportional amounts of exposure by the recommender system. However, the broadness of the definition also covers research that is more similar to many consumer-side fairness approaches in considering fairness for groupings based on sensitive information from a provider perspective.

2.4.2 Cold-start, long-tail and diversity

Cold-start, long-tail, and diversity in recommender systems all make out similar concepts with partly overlapping causes and mitigation approaches: Cold-start specifically focuses on the scenario of providing good recommendations for new users or new items, through facing the challenge of comparatively little data for the new entity. There are also recommender systems that more generally focus on increasing the recommendation of items in the Long-tail, i.e., the items that receive little attention compared to the, typically, smaller amount of popular items. Analogous user-centric recommender systems focus on improving the recommendations for users with few interactions or those receiving sub-par recommendations. Approaches that optimize for Diversity attempt to diversify the recommendations given to individual users and the complete user base, motivated by aspects such as popularity bias issues and user experience enhancement. While the definitions differ in generality and perspective, they are sometimes used interchangeably in the literature, especially cold-start and long-tail.

Approaches proposed for addressing issues in these three research areas share many similarities and can directly overlap with approaches proposed to address provider-side fairness issues. A method proposed for mitigating long-tail issues can often also be framed as a method for mitigating popularity bias and ensuring provider-side fairness Item-centric methods for addressing issues related to cold-start, long-tail or diversity share similarities and occasionally overlap, with popularity bias mitigation approaches proposed to improve provider-side fairness. Here, item-centric means the approaches focus on new items, items with few interactions or items that are rarely recommended. Similarly, user-centric approaches that seek to balance the performance of all individual users may overlap with consumer-side fairness approaches and are included in this survey, given that they satisfy all acceptance criteria. The last point typically boils down to whether a fairness perspective is applied, i.e., posed as individual fairness, along with fairness evaluation. However, as a general strategy, methods that seek to balance the prediction performance for user groups, where users are grouped based on how many interactions they have, have been excluded. Such approaches fit perfectly within the mature fields of cold-start or long-tail recommender systems and are better represented when compared with such methods.

2.4.3 IR Ranking fairness

IR Ranking is typically not personalized, i.e., the produced rankings are not affected by user-specific interaction with the system. Subsequently, IR Ranking fairness objectives usually have a provider-side point of view, e.g., balancing the exposure achieved by similar items or the representation of different item groups given non-personalized queries. The work of Pitoura et al. (2022) provides an overview of fairness in the IR Ranking setting.

2.4.4 Group recommendation

Group recommendation approaches seek to recommend a set of items to a collection of users, e.g., recommending a travel destination for a group of friends that consider all of their preferences (Masthoff and Delić 2022). Most group recommender systems use aggregated results from individual user models to provide group-level recommendations. How the group-level recommendation respects the individual preferences of the users is central to both the design and evaluation, e.g., one strategy could average the prediction performance while another minimizes the prediction error of the worst-off group member. This research area frequently applies the term fairness when explaining their motive of balancing the consideration of the various users in the groups. While this could be accepted as a local fairness definition, i.e., users in each group should be equally satisfied with their recommendation, group recommender systems have been excluded from the survey on the grounds that this is the central mechanic of the research area and setting. Group recommender systems have received significant attention both before and after the advent of fairness research and are better discussed within their research area.

2.4.5 Privacy

Privacy in recommender systems covers many approaches that seek to protect privacy in different stages of the recommender system pipeline. For instance, federated learning can be applied to mitigate the issues of having a centralized model that may be breached and mined for sensitive information (Li et al. 2020). Differential privacy has been applied to provide protection guarantees for the sensitive information of individual users (Friedman and Schuster 2010). Some privacy approaches seek to completely remove the information of specific attributes within user representations or data, which overlaps with a class of fairness approaches that do the same with the intention of not having the attributes influence the recommendation.

3 Methodology

The methodology of this survey covers the systematic selection process applied for identifying and screening relevant studies, followed by the definition and justification of applied taxonomies, as well as descriptions of how the taxonomies are used to categorize and structure further discussion.

3.1 Selection process

The selection process comprised the definition of concise acceptance criteria, identification of relevant publication indexes, query definition, two rounds of article screenings, and final in-depth reading of the remaining candidate articles. This section presents the acceptance criteria, details the queries and how they were defined, and presents a full overview of the number of studies involved in each step of the selection process.

3.1.1 Acceptance criteria

Five acceptance criteria have been defined in line with our goals of examining the existing literature of tangible models considering consumer-side fairness in recommender systems:

-

1.

The study considers recommender systems, see Sect. 2.1.

-

2.

The study considers consumer-side fairness, either explicitly or through a multi-stakeholder focus.

Note, Group recommendation and Long tail/Cold-start recommender systems are excluded, see Sect. 2.4.

-

3.

The study is published in a peer-reviewed conference or journal.

-

4.

The study proposes a novel model or method.

-

5.

The study evaluates the fairness of the proposed model or method.

3.1.2 Query definition and overview

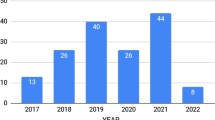

The keywords were kept general to avoid filtering out potential relevant research. The search queries were chronologically bound by 2016-01-01 and 2022-10-01, where the lower bound was set based on prior knowledge of the topic and preliminary querying for validation purposes. The topic started gaining noticeable traction in 2017, but the early adopters had three publications before this, (Kamishima et al. 2012, 2013, 2016). The first two articles do not appear to have inspired other researchers, but since 2016, there has been a gradual increase in the number of articles each year.

The chronological bound was combined with the keyword combination “recommend*” and “fairness”, and both keywords had to be matched in the title, the abstract, or both. “Recommend” was given a wildcard suffix matcher to match both “recommender” and “recommendation”. A similar wildcard, “fair*”, was used instead of “fairness” in the DBLP index to compensate for not being able to match within the abstracts. Observations in known research and research found through preliminary querying confirmed that all articles that matched “fair” in the title also matched “fairness” when considering the abstract. The wildcard was only used in title-only queries since it significantly increased the number of false positives when matching in both title and abstract. Fairness is becoming a well-established concept within the ML community, and most, if not all, research uses the full term at least once before potentially switching over to the shorthand “fair”.

The full selection process is detailed in a PRISMA flow diagram (Page et al. 2021) in Fig. 1.

3.2 Taxonomy

While there have been previous attempts at proposing novel taxonomies for categorizing fairness approaches in recommender systems based on fairness definitions, we argue that there are alternative taxonomies that offer additional insight and value. The most recent taxonomy is proposed by Wang et al. (2022), who first proposes splitting between process and outcome focus, then two alternatives for splitting outcome-focused fairness on target and concept. One challenge when applying this to consumer-side fairness research is that many of the named concept-based fairness categories do not occur that often, and the vast majority of identified research would be classified as either optimizing and evaluating for Process Fairness or Consistency Fairness. We also argue that there may be value in further separating different high-level fairness definitions, e.g., Consistency Fairness may consider distance notions that only compare the distribution of predictions given to different groups, but it can also consider distance notions that measure differences in how the predictions of the same groups match the targets. In other words, the former disregards user preference and only focuses on the predicted ratings, while the latter explicitly evaluates prediction performance by matching predicted ratings with target ratings.

We propose a new taxonomy centred on which high-level fairness definition is considered when optimizing and evaluating models. Besides resulting in a balanced segmentation of the identified research, the taxonomy separates key different classes of fairness definitions, some of which fundamentally conflict with each other. For instance, the view that fairness is achieved when the same prediction performance is achieved for all sensitive groups is fundamentally different from the view that each group should be presented with the same recommendations regardless of prediction performance. To further structure and analyze the research, we propose applying two other, more established, concepts which detail how/at which point the fairness consideration is incorporated and which type of recommender algorithm is applied, respectively.

3.2.1 Fairness Interpretation taxonomy

While several fairness definitions from the fields of law and psychology have been formally defined for machine learning, see Sect. 2.3, they cannot trivially be applied for categorizing the studies considered in this survey. The formal definitions are occasionally implemented as metrics, but since they mostly consider the model’s outcome, it is challenging to define how they should be adhered to during optimization. Another challenge is that some of these definitions are conceptually similar and only differ in minute details. We instead propose categorizing fairness definitions on a higher and more conceptual level, while remaining compatible with the more low-level formal definitions. For instance, Equality of Opportunity and Equalized Odds share a high-level objective of balancing prediction performance measures evaluated for different sensitive groups. We refer to the high-level fairness definitions as Fairness Interpretations to stress that we are discussing high-level conceptual definitions that cannot be expressed in a single metric but rather express a general idea shared by similar definitions/metrics. Two identified Fairness Interpretations have further been assigned sub-categories for finer distinctions between similar concepts. The taxonomy is illustrated in Fig. 2, and the different Fairness Interpretations are further described in the following sections and illustrated in Fig. 3.

Diagram that illustrates the high-level differences between three non-Custom Fairness Interpretations in a scenario where the sensitive group \(s_1\) tend to like the items while \(s_2\) dislikes them. In the recommendation and preference plots, frequency is plotted against preference/rating, while representations are projected into two-dimensional scatterplots. In this case, higher prediction performance is achieved for \(s_1\) than for \(s_2\) when the base recommender system is used, e.g., because this group dominates the dataset. Recommendation Parity is satisfied when the recommendation distributions overlap, while Prediction Performance fairness is achieved when the respective recommendation distributions match and mismatch the “true" distributions equally. In the case of representations, the Neutral Representation Fairness Interpretation would seek to move from the case where representations of different groups can be separated into distinct clusters to the case where the clusters overlap or are indistinguishable. Note that an example with two sensitive groups was chosen for simplicity. All Fairness Interpretations are well-defined for any number of groups/individuals of two or greater

Recommendation parity

Recommendation Parity considers an approach fair when the probability of recommending an item or the predicted item rating is the same for different sensitive groups. For instance, if age is considered a sensitive attribute, Recommendation Parity requires that senior and young users be presented with the same recommendations. Observed user preference or satisfaction is not considered by this Fairness Interpretation, which contrasts it with Prediction Performance and various Custom Fairness Interpretations.

Recommendation Parity for consumer side fairness can be further split based on at which level the parity is optimized or measured. Some optimize and measure parity at a global level, i.e., the distribution of all ratings, while others consider parity in the rating of individual items or item groups. The former is less constricting since the system can predict that different groups will rate the same item differently as long as the rating differences cancel out globally. For instance, if an item’s predicted rating is higher for one group than another, the parity will be regained if there exists another item for which the predicted ratings are mirrored. Local-level Parity demands that the predicted rating or preference of each item/item group is the same for all sensitive groups.

Neutral representation

Neutral Representation considers an approach fair when representations in the model are neutral with respect to sensitive attributes. The key idea is that the sensitive attributes should not influence the recommendation. The research that adopts this Fairness Interpretation varies in having different ideas of what entails influence of sensitive attributes and how neutrality is evaluated, which in turn affects the optimization. For instance, some require no correlation between representation and sensitive attributes, others require representations to be orthogonal to sensitive dimensions in the representation space. Others still define causal models that allow correlation between representation and sensitive attributes but require conditional independence given other variables. The discussion of the methods that are evaluated and optimized for Representation Neutrality is structured by these different ideas.

Unlike the other Fairness Interpretations, the evaluation of Neutral Representation does not consider the outputted recommendation and focuses solely on the model. Achieving perfectly neutral representations will often lead to recommendations akin to those produced by models optimized for Recommendation Parity since the sensitive information no longer affects the predictions. Whether this is the case or not depends on the applied definition of neutral. Regardless, given the unique perspective of focusing on representations rather than the recommendations, characteristic optimizations, representation-centric evaluation, and high prevalence of approaches, a dedicated Fairness Interpretation for neutral model representations is still deemed warranted.

Prediction performance fairness

Prediction Performance Fairness considers an approach fair when equal prediction performance is achieved for all individual users or sensitive groups. Unlike Recommendation Parity, this Fairness Interpretation explicitly considers the users’ opinion on the recommendations they are provided. Evaluation must be based on user feedback in online settings or target ratings/recommendations in offline settings. Despite this requirement, there is a vast amount of varied fairness definitions and metrics that fall under Prediction Performance Fairness. This is because there are endless ways of measuring how well a prediction matches a target and equally many ways to aggregate or compare these measures between users or sensitive groups.

Custom

The Custom Fairness Interpretation covers fairness definitions that do not fall under the three other Fairness Interpretations. Such fairness definitions have been centred on parity with respect to derived attributes or the balancing of custom utility measures that do not consider prediction performance.

3.2.2 Fairness Incorporation taxonomy

When adapting existing recommender systems to new objectives, e.g., adding fairness objectives, one can classify the adaptations by which part of the recommendation process they target. One such categorization distinguishes between adaptations targeting model input, the model itself and the output. This categorization is well established within the field of recommender system fairness and machine learning as a whole, and the classes are typically named pre-, in- and post-processing, respectively (Caton and Haas 2023; Mehrabi et al. 2021; Deldjoo et al. 2023). To structure the identified research, we propose a taxonomy based on these classes. Each class is extended with an additional level to represent better the observed variety of approaches applied to incorporate fairness awareness. The second level contains a single sub-category each for pre- and post-processing approaches, but we propose four sub-categories to cover the diversity of in-processing methods. The full taxonomy is illustrated in Fig. 4, and each proposed sub-category has been given a brief description in this section.

Data Augmentation

The only sub-category of pre-processing methods is Data Augmentation. Data Augmentation covers all methods that increase fairness by augmenting the model’s input data. E.g., modifying the list of movies enjoyed by a male user to look more like the list of a female user when considering Recommendation Parity Fairness.

Loss Enhancement

Loss Enhancement methods improve fairness through additional terms in the loss used for optimizing the model. E.g., adding a loss term that is the mathematical definition of the considered fairness definition. Positive aspects of Loss Enhancement methods are that they can be applied to many recommendation algorithms, are flexible in definition, and can significantly change predictions through minimal changes to an approach. However, extra loss terms do not inherently improve the modelling capacity of a model and may introduce more complex dynamics that would benefit from more modelling capacity or changes to the model architecture.

Probabilistic

The probabilistic fairness approaches apply probabilistic concepts to encourage independence of recommendation and sensitive features, apply soft constraints, or filter out sensitive information. Unlike the other in-processing sub-categories, probabilistic fairness approaches are not easily achieved through a smaller extension to an arbitrary model. This variation of Fairness Incorporation usually requires that the applied model is probabilistic in nature itself, at least partially.

Algorithmic

Algorithmic approaches incorporate fairness by changing smaller aspects of an existing algorithm or through one-time processing, e.g., through selective sampling or removal of sensitive projections in representation space.

Adversarial

Adversarial approaches train adversarial models to classify sensitive information encoded in model representations. The adversarial models are then used to inform how the model should be updated to filter out sensitive information better.

Re-ranking

Re-ranking approaches re-rank the recommendation of one or more base recommender systems according to new or changed objectives, e.g., introducing fairness objectives that are optimized along with regular recommendation performance.

3.2.3 Recommendation algorithm

A third categorization is used to classify approaches according to the applied recommender system algorithm. The algorithm can affect how fairness awareness can be incorporated and influence the general recommendation task. Comparing approaches based on the recommendation algorithm is also enhanced by sharing similar implementation details and premises. The Recommendation Algorithm classes have been created by grouping together related recommendation algorithms and are listed by an acronym and a description in the following list.

-

CF Neighbourhood-based Collaborative filtering. (Nikolakopoulos et al. 2022)

-

MF Matrix-Factorization. (Koren et al. 2022)

-

NCF Neural Collaborative Filtering, taken to mean neural network-based collaborative filtering methods that more specialized model groups do not cover. (He et al. 2017b; Zhang et al. 2022)

-

Graph Various Graph-based models and methods. Graph Neural Networks, Graph Convolutional Networks, Graph Embeddings etc. (Wang et al. 2021)

-

AE (Variational) Auto Encoders. (Kingma and Welling 2022; Li and She 2017)

-

Probabilistic Various Probabilistic models and methods. Probabilistic Soft Logic (Kouki et al. 2015), latent models (Govaert and Nadif 2008; Langseth and Nielsen 2012) etc.

-

Classification Various Classification methods. Logistic Regression, Random Forest (Breiman 2001), Gradient Boosting (Natekin and Knoll 2013) etc.

-

Bandit Contextual Bandit. (Li et al. 2016)

3.2.4 Structuring of main discussion

The three different categorizations will all be used when discussing and comparing the identified approaches. Three sections are reserved for pre-, in- and post-processing Fairness Incorporation approaches, and their content is structured by the corresponding Fairness Incorporation sub-categories and both Fairness Interpretation and recommendation algorithms. The Fairness Interpretation taxonomy is used in an overview and for a focused comparison of fairness optimization. In contrast, recommendation algorithms are used to structure a more general technical discussion to highlight comparable implementational choices.

3.3 Related work and taxonomies

There has been a recent surge in proposed surveys of fairness in recommender systems. Pitoura et al. (2022) surveys both fairness in IR ranking and recommender systems, while Deldjoo et al. (2023), Wang et al. (2022), Li et al. (2023) focus on recommender systems. Pitoura et al. (2022) seeks to serve as an overview of fairness in both IR ranking and recommender systems, which makes it the broadest and most high-level survey among the ones considered relevant. They propose using multiple established concepts as a basis for their categorization, e.g., individual/group fairness and provider/consumer side fairness, as well as novel categorizations of fairness incorporation methods. Because of the broad scope and since it is the oldest survey considered relevant, only two of the studies covered in this survey were covered in Pitoura et al. (2022).

Deldjoo et al. (2023), Wang et al. (2022), Li et al. (2023) were all first made publicly available within a few months of each other in 2022, and all consider a broad scope comprising all types of fairness in recommender systems. This scope is wider than the one applied in this survey by covering provider-side fairness and group recommendation. Additionally, all three surveys also cover research that is theoretical in nature or performs analysis using established approaches and datasets, i.e., not necessarily proposing new models or methods. Deldjoo et al. (2023) investigate the current state of fairness in recommender systems, and focus on charting how the available research is distributed with respect to different high-level concepts. They additionally propose a single-level categorization of the fairness metrics considered in the research they cover. Li et al. (2023) has a more conceptual take and provides a thorough introduction to fairness theory and fairness in other machine learning fields before addressing their identified research through multiple different taxonomies based on binary concepts. Some of the fairness concepts they use to categorize the research are well established, like group/individual and consumer/provider, while others have not previously been focused on, like short-term/long-term and black box/explainable. Wang et al. (2022) propose a hierarchical taxonomy of fairness definitions that are since used to categorize the optimization and fairness metrics applied in their identified studies.

Our work differs from previous surveys by specializing in tangible solutions proposed for consumer-side fairness in recommender systems. The specialization allows for a complete overview of the available literature and a higher focus on technical aspects to enhance comparisons. We also categorize our identified research using a new taxonomy centred on high-level fairness definitions and incorporation methods, which has a purposely high-level and general definition to be applicable and extendable to new definitions and methods. The completeness of our survey is exemplified by Table 2, which indicates that when adjusting for time, the largest coverage overlap with the broader surveys only comprises 18 out of the 43 articles we identified in the same time interval.

3.4 Full model overview

This section presents a preliminary analysis and overview of all identified research by recommendation algorithms and the Fairness Incorporation methods. The motive is to put the topic into the broader context of general recommender systems and to provide an overview of all covered research. A full overview is found in Table 3. Note that the same article may fall under multiple types of Fairness Incorporation and recommendation algorithms, since the proposed approach may apply multiple types of Fairness Incorporation strategies and be applied on multiple base models. Also note that even if an incorporation method theoretically can be applied to a recommendation algorithm, only observed combinations are covered. The fact that a method is adaptable for other recommendation algorithms does not guarantee that un-documented combinations will achieve similar results or improvements. Furthermore, the current trends of the field are better reflected when keeping to the combinations that have been actively researched.

3.4.1 Model analysis

Some clear trends can be observed in the full table. The field has experienced rapid growth, with most research taking place in the most recent years. The early adopters focused heavily on pure Loss Enhancement approaches, usually paired with matrix factorization models, but more recent research rarely applies Loss Enhancement as the sole Fairness Incorporation method. Re-ranking methods saw a similar burst of attention in 2020 and 2021 but did not dominate the field, since multiple other directions were researched in the same period. Adversarial approaches were slow to appear but are now actively researched and paired with a varied selection of recommendation algorithms. Bayesian and algorithmic approaches are the smallest in-processing groups but are characterized by being pretty evenly distributed across time and being applied with specific recommendation algorithms. There also appears to be a recent research trend of applying multiple Fairness Incorporation strategies instead of relying solely on a single strategy.

4 Pre-processing methods

While numerous studies consider the effect of data augmentation, we only found three papers that pass all acceptance criteria. In particular, several candidates were rejected for not proposing formalized approaches or not presenting an evaluation of the achieved fairness. Pre-processing methods comprise the smallest Fairness Incorporation main category (Table 4).

4.1 Fairness optimization

4.1.1 Prediction performance fairness

Rastegarpanah et al. (2019), Fang et al. (2022) propose exploiting collaborative filtering dynamics by training new synthetic users that will influence the recommendation of the real users. When training synthetic users, Rastegarpanah et al. (2019) enhances the loss by adding terms for penalizing both the group-level and the individual-level variance of rating errors. In contrast, Fang et al. (2022) utilize similar loss terms based on the metrics proposed by Yao and Huang (2017), see Sect. 7.3.1, and also global Recommendation Parity.

4.1.2 Custom

The fairness optimization proposed by Slokom et al. (2021) shares similarities with in-processing approaches optimizing for Neutral Representations but alters the input data to remove correlation between the user profiles and the sensitive attributes of the users, instead of altering the intermediate user representations. The approach achieves this by adding items that are more popular among users of other sensitive groups, identified using auxiliary models, to the user profiles. The authors also explore removing items at random or based on how popular they are in the user’s own sensitive group.

4.2 Architecture and method

The three selected papers all propose pre-processing methods that can be applied to a wide variety of recommendation algorithms, and all have used matrix factorization as one of their base recommender models. Rastegarpanah et al. (2019) propose a method for learning supplementary data that can influence polarization and improve individual and group fairness. The key insight is that introducing additional users will affect the recommendation of the original users. This insight is exploited by adding a few synthetic users represented with their own ratings. These ratings are considered parameters and are trained using loss terms designed to influence polarization and fairness. Further, they propose two computationally cheap heuristics. The synthetic data is optimized for prediction performance achieved for both individual users and sensitive groups. Fang et al. (2022) apply the same base approach but focus on optimizing multiple fairness objectives more efficiently and smoothly by projecting the gradients of different objectives onto each other if they conflict. The fairness objectives fall under both Prediction Performance Fairness and Recommendation Parity.

Slokom et al. (2021) modify the data of existing users through an extension of the approach proposed by Weinsberg et al. (2012) instead of training new ones. An auxiliary logistic regression model is trained to tell how indicative items are of the gender of the users that like them. This information is used to select items to be added or removed from user data to make the data less indicative of gender. The addition process specifically intersects lists of indicative items with recommendations from a user-based collaborative filtering model to motivate the addition of relevant items.

5 In-processing methods

In-processing methods are the most represented among the main categories, and their dominance has been constant since the advent of the field. They are characterized by being the most specialized approaches, as the base models themselves are adapted and changed.

5.1 Fairness optimization

5.1.1 Recommendation Parity

Optimization of Recommendation Parity fairness is mainly found among the in-processing methods. Sensitive groups often differ heavily in preferences, so the optimization for a Fairness Interpretation that requires that they are given the same recommendation may benefit from additional influence at a model level and model flexibility. Research that targets Recommendation Parity saw a lot of focus in the field’s early years but has since been overtaken by alternatives.

Global Recommendation Parity

Kamishima et al. (2013); Kamishima and Akaho (2017) propose adding loss terms for matching mean rating and preference of different sensitive groups, while Dickens et al. (2020) devise a probabilistic soft logic rule of similar design for the same goal.

More comprehensive approaches for matching global recommendation distributions beyond the first momentum are proposed by Kamishima et al. (2012, 2016, 2018). Kamishima et al. (2012, 2018) introduce different loss terms for minimizing the mutual information of the ratings and the sensitive groups in matrix factorization. In a slightly different approach, Kamishima et al. (2016) apply a latent factor model where the rating variable is considered independent of the sensitive group variable and optimizes their model using the Expectation Maximization algorithm.

Local Recommendation Parity

In the case of local Recommendation Parity, all relevant research we have found only considers the first moment when matching the recommendations of different sensitive groups. Kamishima et al. (2013) propose adding a loss term that penalizes the squared difference of item ratings between different sensitive groups as an alternative to the already mentioned global version. Similarly, Islam et al. (2021) apply the same idea but opt for an absolute difference instead of a squared difference. The probabilistic soft logic approach proposed in Farnadi et al. (2018) defines rules for encouraging both item-group and item-level parity (Table 5).

5.1.2 Neutral Representation

The objective of Neutral Representation fairness cannot be obtained without altering the model, thus, it is only pursued by in-processing approaches. Optimization of this Fairness Interpretation can be achieved by applying different strategies for filtering out intrinsic sensitive information in representations within the model. The following paragraphs are structured by the technique applied to achieve neutral representations, see also Fig. 4.

Adversarial

The approaches proposed by Resheff et al. (2019), Wu et al. (2021a), Xu et al. (2021), Borges and Stefanidis (2022), Rus et al. (2022) all apply adversarial models directly on model representations. Resheff et al. (2019) pass the latent user factors of their matrix factorization approach to their adversarial, while Wu et al. (2021a) do the same with one of the multiple user representations they train in a composite NCF model. Xu et al. (2021) feed their adversarial model a linear combination of the user representation in a base recommender model and a representation they base on an auxiliary knowledge graph for modelling sensitive user attributes. Rus et al. (2022) propose a neural classification model and applies an adversarial model on a hidden layer in the said model. Finally, Borges and Stefanidis (2022) apply an adversarial model to discriminate the latent representation in their variational autoencoder-based model.

A slightly more intricate scheme is proposed by Wei and He (2022), who concatenate the observed ratings to the representations that are fed to the adversarial model, which they argue will improve the neutrality of the representation and also make the representations independent with respect to the sensitive attribute conditioned on the observed ratings. They further add a second adversarial model, which is fed predicted ratings along with corresponding observed values and item embeddings.

Bose and Hamilton (2019); Li et al. (2021b) argue for letting users dynamically decide which sensitive attributes they are comfortable with the model using. To support this, both propose training optional filters for filtering out different types or combinations of sensitive information from user representations in graph- and matrix-factorization models. The filters are trained using adversarial models. A similar approach is proposed by Wu et al. (2022b), who train adaptors (Houlsby et al. 2019) within the transformers (Vaswani et al. 2017) that make out their model. The adaptors dynamically filter out different combinations of sensitive attributes based on user- and task-based settings in a sequential recommendation setting.

Wu et al. (2021b), Liu et al. (2022a, 2022b, 2022c) all consider graph neural network methods and the construction of higher-order graph representations by accumulating neighbouring representations in the recommendation graph. The approaches apply adversarial models to discourage the encoding of sensitive attributes in the user- and item-level representations, which also mitigate the accumulation of sensitive information in the higher-order neighbourhood representations. Liu et al. (2022c) further supplements the adversarial discrimination loss with a loss term on the covariance of the predicted attribute and the actual sensitive attribute. Liu et al. (2022a) instead designs and utilizes self-supervising loss terms to enhance the representations and mitigate imbalance issues caused by imbalanced sensitive attributes.

Orthogonality

Orthogonality-based approaches apply additional loss terms or explicit removal of sensitive projections to make representations orthogonal to explicit or implicit sensitive dimensions in the representation space. Wu et al. (2021a) model two separate user representations: one for inferring sensitive information and one for providing neutral representations. They devise a loss term that encourages the two representations to be orthogonal and further encourages the neutrality of the second representation through an adversarial approach.

A more explicit approach is pursued by Islam et al. (2019, 2021), where a post hoc step infers sensitive dimensions in the representation space by taking the difference of the mean representation of each sensitive group. The projections of the sensitive dimension onto each representation are then explicitly subtracted. In the case of Islam et al. (2021), the orthogonality processing supplements the Recommendation Parity loss term (see Sect. 5.1.1).

Sampling based representation training

Rahman et al. (2019); Li et al. (2022b) both adjust the sampling strategy used when training representations. Rahman et al. (2019) proposes to balance the sampling of the next user according to sensitive groups when training graph representations using random walks. In contrast, Li et al. (2022b) adjust the probability of sampling the triplets needed for training knowledge graph representations in a manner that balances out the correlation of sensitive groups and items across all users.

Probabilistic approaches

The models by Buyl and Bie (2020); Li et al. (2022a) are fitted using a prior that is informed of sensitive attributes to allow the rest of the model to focus on other aspects. When the model is used, the sensitive prior is replaced by one oblivious to the sensitive attributes. The intention is to produce fair recommendations along with neutral representations.

Frisch et al. (2021) explicitly model a variable for representing the contribution of the sensitive attributes instead of using a sensitive prior. Ideally, this sensitive variable can soak up all sensitive information, leaving the rest of the model neutral. When recommending, the model drops the parts of the model that are dependent on the sensitive attribute.

5.1.3 Prediction Performance Fairness

When optimizing for Prediction Performance Fairness, models attempt to balance prediction performance measures on a group or individual level. While only group-level optimizations are found among the in-processing methods, there is still significant variation in the considered approaches.

Yao and Huang (2017) proposes four Prediction Performance Fairness metrics for recommender systems, then adapts and applies each metric as loss terms in a matrix factorization approach. One of the metrics is similarly adapted by Dickens et al. (2020) as a probabilistic soft logic rule.

Numerous variations of straight-forward loss terms based on Group Prediction Performance Fairness Interpretations are proposed for different models: the contextual bandit approach proposed by Huang et al. (2021) penalizes differences in cumulative mean rewards of different sensitive groups. Liu et al. (2022c) and Borges and Stefanidis (2022) both supplement adversarial approaches with Prediction Performance Fairness Loss Enhancement. Liu et al. (2022c) penalize the absolute differences in pairwise recommendation loss of different sensitive groups, while Borges and Stefanidis (2022) penalize differences in reconstruction loss of a protected group and that of the average user in their variational autoencoder model. Finally, Yao and Huang (2021) train personalized regularization weights based on the loss of a specific sensitive group to force the matrix factorization model to focus more on the prediction performance achieved for said group.

Wan et al. (2020) consider a unique recommender system setting where users and items are segmented into market segments based on sensitive groups and item groups and argue that the prediction performance achieved for the market segments should be similar. The proposed model applies loss terms that penalize error variation between user groups, item groups, and market segments. The authors also explore a market segment-level parity alternative by penalizing the variances of predicted ratings instead of errors.

Li et al. (2021a) propose a less direct way of encouraging the model to value the prediction performance for non-mainstream user groups more by adding decoder components to their representations and corresponding loss terms for reconstructing the inputs. The intention is to provide the model with a stronger incentive for properly encoding all users and items, which in turn may mitigate issues with favouring the mainstream user groups at the expense of everyone else. A similar goal is pursued by Liu et al. (2022a), who devise a set of auxiliary goals for encouraging their model to produce richer representations of all users.

For the reciprocal setting, Zheng et al. (2018) proposes to consider both the prediction performance for the user that receives the recommendation and the prediction performance for the recommended users themselves. On a global level, this scheme balances the prediction performance for two user groups based on the user’s role in individual recommendations, i.e., reference users and users being recommended to reference users.

5.1.4 Custom

Bobadilla et al. (2021) utilize empiric trends in the input data to design a set of indexes that represent users’ and items’ intrinsic sensitive value, e.g., an item is considered fairly young if it is mostly liked by young users, and a user is considered highly senior if they exclusively like item popular with senior users. They further design a loss term to penalize recommending items to users if the index values differ significantly. Loss Enhancement is also applied in the neighbourhood-based collaborative filtering model proposed by Burke et al. (2018) to balance the contribution of peers of different sensitive groups when recommending. Specifically, the added loss term penalizes the absolute difference of the model-specific user-to-user weights of different sensitive groups.

5.2 Architecture and method

5.2.1 Loss Enhancement

Matrix factorization

The early works of Kamishima et al. ; Kamishima et al. are the earliest identified research that satisfies all acceptance criteria in this survey. The four publications (Kamishima et al. 2012, 2013, 2018; Kamishima and Akaho 2017) all propose matrix factorization models where the fairness aspects are modelled through different, but related loss terms. They all share the overall goal and fairness objective of ensuring statistical parity of recommendations. Additionally, all train different sets of parameters for the different sensitive groups they consider. In the first iteration, Kamishima et al. (2012) propose a loss term that is an approximation of the mutual information of the rating and the sensitive attributes. Next, Kamishima et al. (2013) introduces an efficiency improvement with alternative loss terms that penalize differing ratings per sensitive group averaged over all items or individually. The paper by Kamishima and Akaho (2017) considers similar loss terms, but through an implicit-feedback recommender system using ranking-based evaluation. It is noted that the approach has little effect on the ranking order of each user. Finally, Kamishima et al. (2018) returns to rating-based recommender systems, introducing two new methods matching the first and second moment of the distributions to ensure statistical parity. Both methods approximate rating distributions given the sensitive attribute with normal distributions, and then penalize Bhattacharyya distance (Bhattacharyya 1943) and mutual information, respectively.

Another early contribution was by Yao and Huang (2017), who argue for fairness definitions based on balancing prediction performance rather than Recommendation Parity. They propose four new Prediction Performance Fairness metrics that measure imbalances in how well the system recommends for different sensitive groups. Further, they devise loss terms based on these metrics and an additional parity-based metric to compare how well models trained with the different loss terms fare when evaluated using all metrics.

Zheng et al. (2018) is concerned with recommending matches after speed-dating, which is a reciprocal recommendation setting in the sense that consumers are recommended to other consumers. The model predicts user impression of speed-dates with different partners and considers a Custom utility metric based on the similarity of user’s expectation of a partner and their impression of the speed-date partner. The utility metric is also used in the added loss term, which is designed to maximize the prediction performance for both users in each potential match, i.e., the user being recommended another user and the recommended user themselves. Considering the opinions of both involved users may also improve the overall success of this specific application, as mutual interest is ideal in a matchmaking scenario.

The approach proposed by Wan et al. (2020) is designed to address retail marketing bias by better balancing the achieved prediction performance for different market segments. In particular, they define market segments based on sensitive user groups and attributes of models used in marketing different items, e.g., one segment may make out male users and items only marketed using female models. The proposed approach optimizes for balanced prediction performance by penalizing error variance between the different segments and other groupings. An alternative configuration is also considered where the model instead penalizes predicted rating variance, i.e., a configuration that optimizes for Recommendation Parity Fairness rather than Prediction Performance Fairness.

The last identified Loss Enhancement-based matrix factorization approach was proposed by Yao and Huang (2021). The key idea of the model is to improve the prediction performance for disadvantaged users through personalized regularization. This is achieved through a multi-step process that alternates between training for the general recommendation task while keeping the personalized regularization weights static and updating the same parameters based on the recommendation loss of the disadvantages.

Neighbourhood-based collaborative filtering

Burke et al. (2018) propose enhancing the loss of a user-based collaborative filtering approach to encourage users’ neighborhood of peers to be better balanced with respect the considered sensitive attributes. To this end, they devise a loss term that penalizes if the coefficients used for weighting influence of peers are skewed towards a specific group, i.e., the sum of male peer coefficients is greater than that of female peers.

Neural collaborative filtering

Bobadilla et al. (2021) are unorthodox in terms of fairness definition and approach. They give each item a value based on the distribution of, e.g., the gender of users who like it, thus representing how gendered the item is. The items are then used reversely to measure how gendered each user is based on the items they like. The authors go on to penalize recommending items with a gendered value far from the user’s.

Li et al. (2021a) aims to improve the prediction performance achieved for non-mainstream users using collaborative filtering models. Their approach involves a factorization step that involves user and item representations, where the representations double up as the latent representations in two autoencoders. The autoencoders are added to encourage the model to encode all input information in the latent representations properly, and not neglect information only relevant to a subset of the users.

Bandit

The only identified bandit approach was proposed by Huang et al. (2021), and is a contextual bandit method that penalizes differences in cumulative mean rewards of different sensitive groups. The authors construct a synthetic dataset for video recommendations and define a reward function that, for instance, rewards cases where the gender of the user and the video speaker is the same.

5.2.2 Probabilistic

Graph

Buyl and Bie (2020) consider link prediction in social graphs and applies a probabilistic model for training graph representations based on the work by Kang et al. (2019). They encode prior knowledge of the relations in the network using a prior term, which frees up the representations from encoding the same knowledge. Buyl and Bie (2020) leverage this by designing priors that contain sensitive information to be used during training but replaced in the final recommendations. Li et al. (2022a) further adapt the approach for peer recommendation in online learning and introduce changes to the negative sampling used during training.

Probabilistic model

Farnadi et al. (2018) and Dickens et al. (2020) both apply models based on Probabilistic Soft-Logic (PSL) (Bach et al. 2017) for fairness-aware recommendation. PSL allows probabilistic models using human-interpretable first-order logic. Both models apply a base set of logical rules for the general recommendation task, e.g.,

Farnadi et al. (2018) extend the model with fairness rules based on parity, e.g., one sensitive group’s rating of an item implies the rating of a different sensitive group and vice versa. Dickens et al. (2020) consider courser parity-based rules and add others to better balance the prediction performance achieved for different sensitive groups. Further, they allow modellers to adjust the importance of different fairness terms. They also discuss using the model together with an arbitrary black-box model to inject fairness and interpretability, which can be thought of as a form of re-ranking.

In the work by Kamishima et al. (2016), two different graphical models are proposed for modelling ratings independent of a sensitive group membership. The models are realized as latent class models and optimized through the Expectation-Maximization algorithm.

Frisch et al. (2021) propose using a latent block model for clustering users and items, then model a new rating-mean based on the associated cluster mean, individual variables for the item- and user-specific influences, and finally an item-specific variable that is controlled by the user’s sensitive attribute. This final variable models the sensitive information, and is only used during training, similar to how informed priors are used in Buyl and Bie (2020). The model is optimized using variational inference.

5.2.3 Algorithmic

Neural collaborative filtering

Islam et al. (2019) explicitly subtract sensitive projections in user representations in a neural collaborative filtering model. They consider both scenarios where there is a single or multiple binary sensitive attribute(s), e.g., male/female and young/senior. Some of the same authors (Islam et al. 2021) propose a more complex approach where they utilize transfer learning to pre-train user representations and neural network weights in a non-sensitive recommendation setting. The user representations are then processed similarly as by Islam et al. (2019) to be used in a sensitive recommendation setting. The non-sensitive settings considered are film recommendation and social media action recommendation, while the sensitive settings are occupation and college major recommendation, respectively. A parity-based loss term is applied in addition to the user representation processing to incorporate fairness in sensitive settings.

Li et al. (2022b) propose a fairness-aware sequential recommender system in which an integral part is to train item representations for representing the contextual information of the items and their relations. The authors use fairness-aware sampling when training said representations. Specifically, the sampling probability is set to adjust for any empirical skewness in how an item is preferred by different sensitive groups.

Graph

The approach by Rahman et al. (2019) is designed to be used in reciprocal settings and tested on recommending peers in social networks while considering sensitive groups based on gender and race. The base representation algorithm performs random walks over the graph by sampling the next user among the current user’s peers, i.e., the users the current user has a relation to in the observed data. Their fairness view is introduced by first sampling the peer’s sensitive attribute uniformly, then sampling as usual from the qualified peers only.

Xu et al. (2021) work with knowledge graph-based recommender systems. They propose training user representations of an auxiliary graph for representing sensitive attributes and their hidden relationships through a multi-layered neural network. This user representation is combined with that of the original recommender system in a linear transformation and then factorized with the item representation from the original recommender system. Additionally, an adversarial network is trained to classify sensitive attributes from the compound user representations and used to filter out said information. The purpose of the auxiliary graph representation is stated to be to improve the modelling of multiple sensitive attributes and their interactions.

5.2.4 Adversarial

Matrix factorization

Resheff et al. (2019) apply an adversarial gradient-based model to remove information like gender and age in the latent user factors. The authors list both privacy and fairness aspects as motivations for adversarial filtering.

Li et al. (2021b) adopt the approach proposed by Bose and Hamilton (2019) using multiple different recommender system specific models, as opposed to the more general setting of link-prediction considered by Bose and Hamilton (2019). The approach is applied using four different models, covering matrix factorization and neural collaborative filtering. They further extend the approach by proposing a secondary option for training single filters for combinations of sensitive attributes, which is compared to the main approach of training one filter for each attribute and taking the mean of filtered representations to apply combinations.

Graph

Bose and Hamilton (2019) proposes to filter combinations of sensitive attributes from graph representations dynamically and considers both link-prediction and recommender system applications using different setups. They train one filter per sensitive attribute and combine filters by aggregating the representations processed by the filters. Each sensitive attribute is further assigned an adversarial for removing the traces of said sensitive attribute. A binary mask is sampled during training to simulate different users who want to restrict the use of different combinations of sensitive attributes. The mask then determines which filters are applied.

Wu et al. (2021b) assume a graph-based perspective and that pre-trained user and item representations are provided. They suggest training filter functions for filtering out sensitive information from both representations and using these to build higher-order neighbourhood representations iteratively. For instance, the first-order neighbourhood representation of a user is based on the filtered representations of the items the user has liked or interacted with, the second-order neighbourhood contains the first-order neighbourhood representation of the same items, and so on. A multi-layered neural network is used to simultaneously process the first- and second-order neighbourhood representations into the final network level representation, with the motivation to capture higher-order sensitive information and how the different levels relate. Adversarial models are applied to both the filtered initial user representations and the final network-level user representations.