Abstract

Due to the high cost of labelling data, a lot of partially hybrid data are existed in many practical applications. Uncertainty measure (UM) can supply new viewpoints for analyzing data. They can help us in disclosing the substantive characteristics of data. Although there are some UMs to evaluate the uncertainty of hybrid data, they cannot be trivially transplanted into partially hybrid data. The existing studies often replace missing labels with pseudo-labels, but pseudo-labels are not real labels. When encountering high label error rates, work will be difficult to sustain. In view of the above situation, this paper studies four UMs for partially hybrid data and proposed semi-supervised attribute reduction algorithms. A decision information system with partially labeled hybrid data (p-HIS) is first divided into two decision information systems: one is the decision information system with labeled hybrid data (l-HIS) and the other is the decision information system with unlabeled hybrid data (u-HIS). Then, four degrees of importance on a attribute subset in a p-HIS are defined based on indistinguishable relation, distinguishable relation, dependence function, information entropy and information amount. We discuss the difference and contact among these UMs. They are the weighted sum of l-HIS and u-HIS determined by the missing rate and can be considered as UMs of a p-HIS. Next, numerical experiments and statistical tests on 12 datasets verify the effectiveness of these UMs. Moreover, an adaptive semi-supervised attribute reduction algorithm of a p-HIS is proposed based on the selected important degrees, which can automatically adapt to various missing rates. Finally, the results of experiments and statistical tests on 12 datasets show the proposed algorithm is statistically better than some stat-of-the-art algorithms according to classification accuracy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Research background

With the development of science and technology, the amount of information increases in geometric progression. The increase of information brings many uncertainties to information processing. To deal with the uncertain information, many scholars have proposed many effective methods. such as rough set theory (R-theory), fuzzy set theory and uncertainty measurement (UM). These methods have been successfully used in the following fields: pattern recognition (Cament et al. 2014; Swiniarski and Skowron 2003), image processing (Navarrete et al. 2016), medical diagnosis (Hempelmann et al. 2016; Wang et al. 2019), data mining (Dai et al. 2012) and expert systems (Pawlak 1991).

An information system (IS) based on R-theory was defined by Pawlak (1982). It is the main application field of R-theory. From the above, many scholars have researched UM for an IS. For instance, Wierman (1999) put forward information granulation in an IS; Dai and Tian (2013) researched granularity measurement in a set-valued IS; Liang and Qian (2008) studied information granules in an IS; Qian et al. (2011) proposed fuzzy information granularity by constructing fuzzy information granules; Dai et al. (2013) investigated UM for an incomplete decision IS.

In the view of the rapid growth of data, data generates a large number of attributes. However, some features have little effect on describing data. Therefore, attribute reduction or feature selection becomes very important in information processing. It is particularly important to form a new attribute set by selecting features with important influence, which can keep the data information unchanged. From this, the dimensions of the data are reduced. Hu et al. (2008) studied attribute reduction by using neighborhood rough set model.Singh et al. (2020) obtained a novel attribute reduction method in a set-valued IS. Wang et al. (2019) researched attribute reduction based on local conditional entropy. Dai et al. (2016) discussed attribute reduction in an interval-valued IS. Wang et al. (2020) investigated attribute reduction of self-information based on neighborhood. Hu et al. (2022) presented fast and robust attribute reduction in a fuzzy decision IS based on the separability. Yuan et al. (2022) explored interactive attribute reduction via fuzzy complementary entropy for unlabeled mixed data. Houssein et al. (2022) studied centroid mutation-based search, and rescued optimization algorithm for feature selection and classification. Ershadi and Seifi (2022) gave applications of dynamic feature selection and clustering methods to medical diagnosis. Pashaei and Pashaei (2022) proposed an efficient binary chimp optimization algorithm for feature selection in biomedical data classification. Tiwari and Chaturvedi (2022) introduced a hybrid feature selection approach based on information theory and dynamic butterfly optimization algorithm for data classification.

Sang et al. (2021) considered incremental feature selection using a conditional entropy based on fuzzy dominance neighborhood rough sets. Yuan et al. (2021) put forward fuzzy complementary entropy using hybrid-kernel function and its unsupervised attribute reduction.

The deficiency of the existing work mentioned above is that there is less attention paid to attribute reduction for partially hybrid data.

1.2 Motivation and contributions

There are many high-dimensional complex data in many fields. Because of hardware failures, human sabotage, operational errors, virus infections and software failures, the labels of some samples in the data are lost. If only labeled data is used for attribute reduction, the reduction results can’t effectively reflect the distribution of data, and the classification performance could be weak. Finding new labeling methods has become extremely important. Semi-supervised attribute reduction aims to effectively utilize unlabeled data to enhance the effectiveness of attribute reduction, in order to improve the classification performance of learning model. In recent years, semi-supervised attribute reduction has attracted the attention of many scholars. For instance, Dai et al. (2017) constructed a heuristic semi-supervised attribute reduction algorithm on the basis of distinguishing pairs. Wang et al. (2018) designed a semi-supervised attribute reduction algorithm based on information entropy. Zhang et al. (2016) combined R-theory with ensemble learning framework, and constructed an ensemble base classifier. Xu et al. (2010) proposed a semi-supervised attribute reduction algorithm based on manifold regularization, which measures the importance of features by maximizing the spacing between different categories. Han et al. (2015) presented a semi-supervised attribute reduction algorithm, which effectively uses the information in a large number of unmarked video data to distinguish target categories by combining semi-supervised scatter points. Wu et al. (2021) used minimal redundancy to research semi-supervised feature selection. Wan et al. (2021) proposed a semi-supervised attribute reduction method based on neighborhood rough set.

The comparative of this paper with the research results of UM or attribute reduction about some above literatures is shown in Table 1.

However, the existing studies face new challenges in attribute reduction. The discretization of continuous attributes can result in the loss of structural information in the data. Additionally, when the amount of data increases, the quality of reduction becomes low. Attribute reduction based on an identification matrix and identification pair significantly increases the operation cost. While the existing studies can evaluate the uncertainty of hybrid data, they lack an effective mechanism for converting partially hybrid data into hybrid data. Furthermore, the existing studies often replace missing labels with pseudo-labels, which are not real labels. This approach becomes difficult to sustain when encountering high label error rates.

Based on the above research motivation, this paper cleverly divides a p-HIS into the l-HIS and u-HIS, and views the sum of their importance degree as the importance degree of a p-HIS where the incomplete rate of labels is used as the weight. The major contributions are summarized as follows.

-

(1)

The facts that a discernibility pair set for hybrid data is actually a distinguishable relation and a p-HIS can induce the l-HIS and u-HIS are showed.

-

(2)

Four kinds of important degrees on each attribute subset in a p-HIS are defined. They use the weighted importance degree sum of the induced l-HIS and u-HIS to deal with UM for each subsystem. The l-HIS and u-HIS can more deeply reflect the importance or classification ability of an attribute subset.

-

(3)

The performance of four kinds of important degrees in a p-HIS is tested. Numerical experiments and statistical tests verify the effectiveness of these important degrees.

-

(4)

Based on the selected important degrees, heuristic algorithms of semi-supervised attribute reduction in a p-HIS are constructed. The experiment results show the constructed algorithm is statistically better than some stat-of-the-art algorithms according to classification accuracy.

1.3 Organization

In Sect. 2, the indiscernibility relation and discernibility relation in a p-HIS are defined. In Sect. 3, four degrees of importance in a p-HIS are introduced. In Sect. 4, UM for a p-HIS is investigated. A new algorithm is proposed which is related to semi-supervised attribute reduction in a p-HIS. In Sect. 5, the experiments on algorithm performance and effectiveness analysis of the proposed degrees of importance are conducted. In Sect. 6, the statistical test of algorithm performance is conducted. In Sect. 7, this paper is summarized. The logical structure of this paper is shown in Fig. 1.

2 Preliminaries

In this paper, \(U=\{u_1,u_2,\cdots ,u_n\}\), \(2^U\) is the set which contains all subsets of U and |X| represents the cardinality of X. Let

If \(R=\delta\), R is called universal relation; if \(R=\triangle\), R is called identity relation.

Given that R is an equivalence relation and any \(u\in U\), \([u]_R\) is an equivalence class of u which is denoted as: \([u]_R=\{v\in U: uRv\}.\) \(U/R=\{[u]_R: u\in U\}\) denotes all equivalence classes of R.

Definition 2.1

[(Pawlak 1982)] Let U be a universe and A a finite attribute set. (U, A) is called an information system (IS). \(a:U\rightarrow V_a\) is called an information function for any \(a\in A\), where \(V_a=\{a(e):e\in U\}\).

Let (U, A) be an IS, for any \(B\subseteq A\), ind(B) can be defined as

ind(B) is called the indiscernibility relation of B.

Definition 2.2

[(Dai et al. 2017)] (U, A) is an IS, for any \(B\subseteq A\). \(dis_d(B)\) is called the discernibility relation of B if

Let (U, A) be an IS and B a subset of A. B is called a coordinate subset of A if \(ind(B)=ind(A)\). All coordinate subsets of A is denoted by co(A). B is called a reducts of A if \(B\in co(A)\) and for each \(a\in B\), \(B-\{a\}\not \in co(A)\). All reducts of A is denoted by red(A).

Proposition 2.3

(U, A) is an IS and B a subset of A. Then \(B\in co(A)~\Leftrightarrow ~dis(B)=dis(A)\).

Corollary 2.4

(U, A) is an IS with \(B\subseteq A\). Then

(U, C, d) is called a DIS, if \((U,C\cup \{d\})\) is an IS and d is a decision attribute.

Let (U, C, d) be a DIS with \(B\subseteq C\) and \(X\in 2^U\). Define

Definition 2.5

[(Dai et al. 2017)] Let (U, C, d) be a DIS with \(B\subseteq C\). Define

Then \(dis_d(B)\) is called the discernibility relation of B with d.

Let (U, C, d) be a DIS with \(B\subseteq C\). Define \(POS_B(d)=\bigcup \limits _{D\in U/R_d}\underline{R_B}(D)\) and \(\Gamma _B(d)=\frac{|POS_B(d)|}{|U|}.\) B is called a coordinate subset of C if \(R_B=R_C\), All coordinate subsets of C with d is denoted by \(co_d(C)\). B is called a reduct of C with d if \(B\in co_d(C)\) and for each \(a\in B\), \(B-\{a\}\not \in co_d(C)\). All reducts of C with d is denoted by \(red_d(C)\).

From Proposition 2.3 and Definition 2.5, we can get the following results.

Proposition 2.6

Let (U, C, d) be a DIS with \(B\subseteq C\). The following conditions are equivalent:

-

(1)

\(B\in co_d(C)\);

-

(2)

\(POS_B(d)=POS_C(d);\)

-

(3)

\(\Gamma _B(d)=\Gamma _C(d)\);

-

(4)

\(dis_d(B)=dis_d(C)\).

Corollary 2.7

(U, C, d) is a DIS with \(B\subseteq C\). The following conditions are equivalent:

-

(1)

\(B\in red_d(C)\);

-

(2)

\(POS_B(d)=POS_C(d)\), \(POS_{B-\{a\}}(d)\ne POS_C(d)\) for any \(a\in B;\)

-

(3)

\(\Gamma _B(d)=\Gamma _C(d)\), \(\Gamma _{B-\{a\}}(d)\ne \Gamma _C(d)\) for any \(a\in B\);

-

(4)

\(dis_d(B)=dis_d(C)\), \(dis_d(B-\{a\})\ne dis_d(C)\) for any \(a\in B\).

3 An information system for partially labeled hybrid data

The information system for partially labeled hybrid data plays an important role in real life. In order to better study it, we first define the following definitions.

3.1 The definition of a p-HIS

\(\diamond\) represents an unknown information value, and \(*\) stands for an unknown label.

Suppose that (U, C, d) is a DIS with \(a\in C\). Put

From the above, \(V_a^\diamond\) and \(V^*_d\) are the sets which contain all known information values of a and all known labels of d respectively.

Definition 3.1

Let (U, C, d) be a DIS and \(C=C^{c}\cup C^{r}\), where \(C^{c}\) and \(C^{r}\) are categorical and real-valued attributes, respectively. Put

Then \(U^l\cup U^u=U,~~U^l\cap U^u=\emptyset\).

-

(1)

(U, C, d) is called a DIS with l-HIS, if \(\exists\) \(a\in C\) and \(u\in U\), \(a(u)=\diamond\) and \(U^l=U\).

-

(2)

(U, C, d) is called a DIS with p-HIS, if \(\exists\) \(a\in C\) and \(u\in U\), \(a(u)=\diamond\), \(U^l\ne \emptyset\) and \(U^u\ne \emptyset\).

-

(3)

(U, C, d) is called a DIS with u-HIS, if \(\exists\) \(a\in C\) and \(u\in U\), \(a(u)=\diamond\) and \(U^u=U\).

Obviously,

Since there are no labeled objects in u-HIS (U, C, d), we abbreviated (U, C, d) as (U, C).

Definition 3.2

Let (U, C, d) be a p-HIS. Here, \((U^l,C,d)\) and \((U^u,C,d)\) are called the l-HIS and u-HIS induced by (U, C, d), respectively.

Example 3.3

Table 2 is a p-HIS (U, C, d), where \(U=\{u_1,u_2,\cdots ,u_9\}\), \(C=C^{c}\cup C^{r}\), \(C^{c}=\{a_1,a_2\}\) and \(C^{r}=\{a_3\}\).

Example 3.4

(Continue with Example 3.3)

Definition 3.5

(U, C, d) is a p-HIS. The incomplete rate \(\lambda\) of labels is defined as

3.2 A novel distance function in a p-HIS

In order to effectively distinguish the difference between objects in a p-HIS, we will give a new concept.

Definition 3.6

[(Zhang et al. 2022)] (U, C, d) is a p-HIS and \(|V^*_d|=s\). Given \(a \in C^c\) and \(u\in U^l\) with \(a(u)\ne \diamond\).

Denote

Define

By Definition 3.6, we have

Definition 3.7

[(Zhang et al. 2022)] Let (U, C, d) be a p-HIS with \(|V^*_d|=s\). Given \(a\in C^c\), \(e,t\in U^l\) with \(a(e)\ne \diamond\) and \(a(t)\ne \diamond\). Define the distance as follows

Obviously,

Definition 3.8

[(Zhang et al. 2022)] Let (U, C, d) be a p-HIS. Suppose \(a\in C^r\) and \(e,t\in U^l\) with \(a(e)\ne \diamond\), \(a(t)\ne \diamond\). Define the distance as follows

where \(\hat{a}=max\{a(e):e\in U^l\}-min\{a(e):e\in U^l\}\).

If \(\hat{a}=0\), let \(\rho ^l_r(a(e),a(t))=0\). Obviously,

Definition 3.9

Let (U, C, d) be a p-HIS. Given \(a \in C\) and \(e,t \in U^l\). Define the distance as follows

\(\rho (a(e),a(t))=\)

3.3 Some concepts of a p-HIS and related results

Definition 3.10

Let P be a subset of C and (U, C, d) a p-HIS. \((U^l,C,d)\) is the l-HIS induced by (U, C, d). Denote

In the above definition, \(\theta\) is a parameter to control the distance between a(e) and a(t).

Definition 3.11

Let P be a subset of C and (U, C, d) a p-HIS. \((U^l,C,d)\) is the l-HIS induced by (U, C, d). Denote

\(dis^{l,\theta }_d(P)\) is called the relative discernibility relation of P to d on \(U^l\).

Definition 3.12

Let P be a subset of C and (U, C, d) a p-HIS. \((U^u,C,d)\) is the u-HIS induced by \((U^u,C,d)\). Then \((U^u,C,d)\) can be seen as \((U^u,C)\), and

is called the discernibility relation of P on \(U^u\).

P is a subset of C and (U, C, d) is a p-HIS, According to Kryszkiewicz’s ideal Kryszkiewicz (1999), \(\partial ^{l,\theta }_P:U^l\rightarrow 2^{V^*_d}\) is defined as follows:

Then \(\partial ^{l,\theta }_P(u)\) is called generalized decision of u in \((U^l,P,d)\), and \(\partial ^{l,\theta }_P=\{\partial ^{l,\theta }_P(u):u\in U^l\}\).

Definition 3.13

(U, C, d) is a p-HIS. \(\forall\) \(u\in U^l\), \(|\partial ^{l,\theta }_C(u)|=1\), (U, C, d) is a \(\theta\)-consistent; otherwise, (U, C, d) is called \(\theta\)-inconsistent.

Proposition 3.14

(U, C, d) is a p-HIS and P is a subset of C. \(\forall\) \(u\in U^l\).

Proof

\(``\Rightarrow "\). Let \([u]^{l,\theta }_P\subseteq [u]^{l,\theta }_d\). Suppose \(w\in \partial ^{l,\theta }_P(u)\). Then \(\exists\) \(v\in [u]^{l,\theta }_P\), \(w=d(v)\). \(v\in [u]^{l,\theta }_P\) implies that \(v\in [u]^{l,\theta }_d\). So \(w=d(v)=d(u\)). Thus \(|\partial ^{l,\theta }_P(u)|=1.\)

\(``\Leftarrow "\). Let \(|\partial ^{l,\theta }_P(u)|=1\). \(\forall\) \(v\in [u]^{l,\theta }_P\). Then \(d(v)\in \partial ^{l,\theta }_P(u)\). Since \(d(u)\in \partial ^{l,\theta }_P(u)\) and \(|\partial ^{l,\theta }_P(u)|=1\), therefore \(d(u)=d(v)\). Then \(v\in [u]^{l,\theta }_d\). Thus \([u]^{l,\theta }_P\subseteq [u]^{l,\theta }_d\). \(\square\)

Corollary 3.15

(U, C, d) is a p-HIS and P is a subset of A.

Proof

Obviously. \(\square\)

Proposition 3.16

Let (U, C, d) be a p-HIS. (U, C, d) is \(\theta\)-consistent \(\Leftrightarrow\) \(R^{l,\theta }_C\subseteq R^{l,\theta }_d.\)

Proof

It can be proved by Corollary 3.15. \(\square\)

4 Uncertainty measurement for a p-HIS

In this part, UM for a p-HIS is investigated by using four kinds of important degrees on the given attribute subset.

4.1 The type 1 importance of a subsystem in a p-HIS

Definition 4.1

Let (U, C, d) be a p-HIS. P is a subset of C and \(|U^l|=n_l\). Put

Then \(\Gamma ^{l,\theta }_P(d)\) is called the dependence of P to d in \(U^l\).

Proposition 4.2

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Denote

(1) \(\Gamma ^{l,\theta }_P(d)=\frac{\sum \limits _{i=1}^r|\underline{R^{l,\theta }_P}(D_i)|}{n_l}.\)

(2) \(0\le \Gamma ^{l,\theta }_P(d)\le 1\).

(3) If \(P\subseteq Q\subseteq C\), then

Proof

(1) Obviously, \(\forall ~i\), \(\underline{R^{l,\theta }_P}(D_i)\subseteq D_i\).

\(\{D_1,D_2\cdots ,D_r\}\) is a partition of \(U^l\). Then

Thus

(2) This holds by (1).

(3) Suppose \(P\subseteq Q\subseteq C\). Then \(\forall ~u\in U^l\), \([u]^{l,\theta }_Q\subseteq [u]^{l,\theta }_P\). So

It implies that

By (1),

\(\square\)

Definition 4.3

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\) and \(P\subseteq C\). Then the type 1 importance of the subsystem (U, P, d) is defined as

In the above definition, \(\frac{\Gamma ^{l,\theta }_P(d)}{\Gamma ^{l,\theta }_C(d)}\) and \(\frac{|ind^u(C)|}{|ind^u(P)|}\) can be viewed as the importance of \((U^l,P,d)\) and \((U^u,P,d)\), respectively. \(\lambda\) means the missing rate of labels, which is processed as a weight. We define the sum of the importance of \((U^l,P,d)\) and \((U^u,P,d)\) with the missing rate of labels as the type 1 importance of (U, P, d).

Example 4.4

(Continue with Example 3.3) Given \(\theta =0.5\) and \(\lambda =\frac{2}{9}\approx 0.2222\). Then

Thus

\(R^{l,\theta }_{\{a_1\}}=\{(u_1,u_1),(u_1,u_2),(u_1,u_4),(u_1,u_7), (u_1,u_8),(u_2,u_2),(u_2,u_4),(u_2,u_7), (u_2,u_8),(u_4,u_4),(u_4,u_7),(u_4,u_8),(u_5,u_5),(u_5,u_6),(u_6,u_6),(u_7,u_7),(u_7,u_8),(u_8,u_8)\}\).

where \(D_1=\{u_1,u_4\}\), \(D_2=\{u_2,u_7,u_8\}\), \(D_3=\{u_5,u_6\}\).

Similarly, \({\Gamma ^{l,\theta }_{\{a_2\}}}(d)=0,~\Gamma ^{l,\theta }_{\{a_3\}}(d)\approx 0.2857,~\Gamma ^{l,\theta }_{C}(d)\approx 0.4286\).

Next,

Then

Thus \(|ind^u_\theta (\{a_1\})|=4\).

Similarly, \(|ind^u_\theta (\{a_2\})|=4\), \(|ind^u_\theta (\{a_3\})|=4,~|ind^u(C)|=4\).

Finally, the \(imp^{(1)}_{\lambda ,\theta }(P)\) of each attribute is calculated as follows.

Proposition 4.5

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). Then the following properties hold.

(1) \(0\le imp^{(1)}_{\lambda ,\theta }(P)\le 1\);

(2) \(imp^{(1)}_{\lambda ,\theta }(C)=1\);

(3) \(P\subseteq Q\subseteq C\) implies to \(imp^{(1)}_{\lambda ,\theta }(P)\le imp^{(1)}_{\lambda ,\theta }(Q)\);

(4) \(imp^{(1)}_{\lambda ,\theta }(P)=1\) \(\Leftrightarrow\) \(\Gamma ^{l,\theta }_P(d)=\Gamma ^{l,\theta }_C(d)\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

Proof

“(1) and (2)" are obvious.

(3) Since \(P\subseteq Q\subseteq C\), we have

Then

Thus

Hence \(imp^{(1)}_{\lambda ,\theta }(P)\le imp^{(1)}_{\lambda ,\theta }(Q)\).

(4) \(``\Leftarrow "\) is clear. Below, we prove \(``\Rightarrow "\).

Suppose \(imp^{(1)}_{\lambda ,\theta }(P)=1\). Then

This implies that

Note that \(1-\frac{\Gamma ^{l,\theta }_P(d)}{\Gamma ^{l,\theta }_C(d)}=\frac{\Gamma ^{l,\theta }_C(d)-\Gamma ^{l,\theta }_P(d)}{\Gamma ^{l,\theta }_C(d)}\ge 0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=\frac{|ind^u_\theta (P)|-|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\ge 0\), Then \(1-\frac{\Gamma ^{l,\theta }_P(d)}{\Gamma ^{l,\theta }_C(d)}=0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=0\). Thus

\(\square\)

4.2 The type 2 importance of a subsystem in a p-HIS

In the following definition, \(\frac{|dis^{l,\theta }_d(P)|}{|dis^{l,\theta }_d(C)|}\) and \(\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\) can be viewed as the importance of \((U^l,P,d)\) and \((U^u,P,d)\), respectively. \(\lambda\) means the missing rate of labels, which is processed as a weight. We define the sum of the importance of \((U^l,P,d)\) and \((U^u,P,d)\) with the missing rate of labels as the type 2 importance of (U, P, d).

Definition 4.6

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\) and \(P\subseteq C\). Then the type 2 importance of the subsystem (U, P, d) is defined as

Example 4.7

(Continue with Example 4.4) Obviously,

\({dis^{l,\theta }_d}(\{a_1\}) =\{(u_1,u_5),(u_1,u_6),(u_2,u_5),(u_2,u_6),(u_4,u_5),(u_4,u_6), (u_5,u_7),(u_5,u_8),(u_6,u_7),(u_6,u_8)\}\).

Since \({dis^{l,\theta }_d}(\{a_1\})\) is symmetric, we have \(|{dis^{l,\theta }_d}(\{a_1\})|=20\).

Similarly, \(|{dis^{l,\theta }_d}(\{a_2\})|=0\), \(|{dis^{l,\theta }_d}(\{a_3\})|=22\), \(|{dis^{l,\theta }_d}(C)|=26\).

This u-HIS is \(\theta\)-consistent with the result in Example 4.4. Then

Proposition 4.8

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). Then the following properties hold.

(1) \(0\le imp^{(2)}_{\lambda ,\theta }(P)\le 1\);

(2) \(imp^{(2)}_{\lambda ,\theta }(C)=1\);

(3) \(P\subseteq Q\subseteq C\) implies to \(imp^{(2)}_{\lambda ,\theta }(P)\le imp^{(2)}_{\lambda ,\theta }(Q)\);

(4) \(imp^{(2)}_{\lambda ,\theta }(P)=1\) \(\Leftrightarrow\) \(|dis^{l,\theta }_d(P)|=|dis^{l,\theta }_d(C)|\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

Proof

“(1) and (2)" are obvious.

(3) Since \(P\subseteq Q\subseteq C\), we have

Then

Hence \(imp^{(1)}_{\lambda ,\theta }(P)\le imp^{(1)}_{\lambda ,\theta }(Q)\).

(4) \(``\Leftarrow "\) is clear. Below, we prove \(``\Rightarrow "\).

Suppose \(imp^{(2)}_{\lambda ,\theta }(P)=1\). Then

This implies that

Note that \(1-\frac{|dis^{l,\theta }_d(P)|}{|dis^{l,\theta }_d(C)|}=\frac{|dis^{l,\theta }_d(C)|-|dis^{l,\theta }_d(P)|}{|dis^{l,\theta }_d(C)|}\ge 0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=\frac{|ind^u_\theta (P)|-|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\ge 0\), Then \(1-\frac{|dis^{l,\theta }_d(P)|}{|dis^{l,\theta }_d(C)|}=0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=0\). Thus

\(\square\)

4.3 The type 3 importance of a subsystem in a p-HIS

Stipulate \(0\log _2 0=0\).

Definition 4.9

(U, C, d) is a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). \(H^l_\theta (P)\) is called information entropy of P, if

Proposition 4.10

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Given \(P\subseteq C\). Then

Moreover, if \(R^{l,\theta }_P=\triangle\), then \(H^l_\theta (P)=\log _2 n_l\); if \(R^{l,\theta }_P=\delta\), then H has a minimum.

Proof

\(\forall ~i\), \(1\le |[u_i]^{l,\theta }_P|\le n_l\), we have

Then

By Definition 4.19,

If \(R^{l,\theta }_P=\triangle\), then \(\forall ~i\), \(|[u_i]^{l,\theta }_P|=1\). So \(H^l_\theta (P)=\log _2 n_l\).

If \(R^{l,\theta }_P=\delta\), then \(\forall ~i\), \(|[u_i]^{l,\theta }_P|=n_l\). So \(H^l_\theta (P)=0\).

\(\square\)

Definition 4.11

Let (U, C, d) be a p-HIS with \(P\subseteq C\) and \(|U^l|=n_l\). \(H^l_\theta (P|d)\) is called conditional information entropy of P, if

Proposition 4.12

(U, C, d) is a p-HIS with \(|U^l|=n_l\). If \(P\subseteq Q\subseteq C\), then

Proof

Denote

Then

Obviously, \(\forall ~i\), \(R^{l,\theta }_Q(u_i)\subseteq [u_i]^{l,\theta }_P.\)

Then

Put \(f(x,y)=-x\log _2\frac{x}{x+y}(x>0,y\ge 0)\). Then f(x, y) increases with respect to x and increases with respect to y, respectively.

Since \(q_{ij}^{(1)}\le p_{ij}^{(1)}, q_{ij}^{(2)}\le p_{ij}^{(2)},\) we have

Thus

\(\square\)

Definition 4.13

(U, C, d) is a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Denote \(U^l/R^{l,\theta }_d=\{D_1,D_2,\cdots ,D_r\}\). \(H^l_\theta (P\cup d)\) is called joint information entropy of P with d, if

Proposition 4.14

(U, C, d) is a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Then

Proof

Then \(\{D_1,D_2\cdots ,D_r\}\) is a partition of \(U^l\). \(\forall ~i\),

\(\square\)

Proposition 4.15

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Then \(H^l_\theta (P|d)\ge 0\).

Proof

We have

\(\{D_1,D_2\cdots ,D_r\}\) is a partition of \(U^l\). \(\forall ~i\),

Then

By Definition 4.13,

\(\forall ~i,j\),

Then

By Proposition 4.14,

Hence \(H^l_\theta (P|d)\ge 0.\)

\(\square\)

Definition 4.16

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\) and \(P\subseteq C\). Then the type 3 importance of the subsystem (U, P, d) is defined as

In the above definition, \(\frac{H^l_\theta (C|d)}{H^l_\theta (P|d)}\) and \(\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\) can be viewed as the importance of \((U^l,P,d)\) and \((U^u,P,d)\), respectively. \(\lambda\) means the missing rate of labels, which is processed as a weight. We define the sum of the importance of \((U^l,P,d)\) and \((U^u,P,d)\) with the missing rate of labels as the type 3 importance of (U, P, d).

Example 4.17

(Continue with Example 4.4) We have

\(H^l_\theta (\{a_1\}|d)=-(\frac{2}{7}*\log _2 \frac{2}{5}+\frac{2}{7}*\log _2 \frac{2}{5}+\frac{2}{7}*\log _2 \frac{2}{5}+\frac{0}{7}*\log _2 \frac{0}{2}+\frac{0}{7}*\log _2 \frac{0}{2} +\frac{2}{7}*\log _2 \frac{2}{5}+\frac{2}{7}*\log _2 \frac{2}{5}+\frac{3}{7}*\log _2 \frac{3}{5}+\frac{3}{7}*\log _2 \frac{3}{5}+\frac{3}{7}*\log _2 \frac{3}{5}+\frac{0}{7}*\log _2 \frac{0}{2}+\frac{0}{7}*\log _2 \frac{0}{2} +\frac{3}{7}*\log _2 \frac{3}{5}+\frac{3}{7}*\log _2 \frac{3}{5}+\frac{0}{7}*\log _2 \frac{0}{5}+\frac{0}{7}*\log _2 \frac{0}{5}+\frac{0}{7}*\log _2 \frac{0}{5}+\frac{2}{7}*\log _2 \frac{2}{2}+\frac{2}{7}*\log _2 \frac{2}{2} +\frac{0}{7}*\log _2 \frac{0}{5}+\frac{0}{7}*\log _2 \frac{0}{5})\approx 3.4677\).

Similarly, \(H^l_\theta (\{a_2\}|d)\approx 10.8966\), \(H^l_\theta (\{a_3\}|d)\approx 3.7664\), \(H^l_\theta (C|d)\approx 1.93\).

Then

Proposition 4.18

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Then the following properties hold.

(1) \(0\le imp^{(3)}_{\lambda ,\theta }(P)\le 1\);

(2) \(imp^{(3)}_{\lambda ,\theta }(C)=1\);

(3) \(P\subseteq Q\subseteq C\) implies to \(imp^{(3)}_{\lambda ,\theta }(Q)\le imp^{(3)}_{\lambda ,\theta }(P)\);

(4) \(imp^{(3)}_{\lambda ,\theta }(P)=1\) \(\Leftrightarrow\) \(H^l_\theta (P|d)=H^l_\theta (C|d)\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

Proof

“(1) and (2)" are obvious.

(3) Since \(P\subseteq Q\subseteq C\), we have

Then

Thus

Hence \(imp^{(1)}_{\lambda ,\theta }(Q)\le imp^{(1)}_{\lambda ,\theta }(P)\).

(4) \(``\Leftarrow "\) is clear. Below, we prove \(``\Rightarrow "\).

Suppose \(imp^{(3)}_{\lambda ,\theta }(P)=1\). Then

This implies that

Note that \(1-\frac{H^l_\theta (C|d)}{H^l_\theta (P|d)}=\frac{H^l_\theta (P|d)-H^l_\theta (C|d)}{H^l_\theta (P|d)}\ge 0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=\frac{|ind^u_\theta (P)|-|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\ge 0\). Then \(1-\frac{H^l_\theta (C|d)}{H^l_\theta (P|d)}=0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=0\). Thus

\(\square\)

4.4 The type 4 importance of a subsystem in a p-HIS

Definition 4.19

(U, C, d) is a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Then information amount of P is defined as

Obviously, \(E^l_\theta (P) =\sum \limits _{i=1}^{n_l} \frac{|[u_i]^{l,\theta }_P|}{n_l} (1-\frac{|[u_i]^{l,\theta }_P|}{n_l}).\)

Proposition 4.20

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Given \(P\subseteq C\). Then

Moreover, if \(R^{l,\theta }_P=\triangle\), then \(E^l_\theta\) achieves the maximum value \(1-\frac{1}{n_l}\); if \(R^{l,\theta }_P=\delta\), then \(E^l_\theta\) achieves the minimum value 0.

Proof

Since \(\forall ~i\), \(1\le |[u_i]^{l,\theta }_P|\le n_l\), we have

Thus

If \(R^{l,\theta }_P=\triangle\), then \(\forall ~i\), \(|[u_i]^{l,\theta }_P|=1\). So \(E^l_\theta (P)=1-\frac{1}{n_l}\).

If \(R^{l,\theta }_P=\delta\), then \(\forall ~i\), \(|[u_i]^{l,\theta }_P|=n_l\). So \(E^l_\theta (P)=0\).

\(\square\)

Definition 4.21

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Given \(P\subseteq C\). Denote

Put

Then \(E^l_\theta (P|d)\) are called conditional information amount of P to d in \(U^l\).

Proposition 4.22

(U, C, d) is a p-HIS with \(|U^l|=n_l\). If \(P\subseteq Q\subseteq C\), then

Proof

Denote

Suppose \(P\subseteq Q\subseteq C\). Then \(\forall ~i\), \(R^{l,\theta }_Q(u_i)\subseteq [u_i]^{l,\theta }_P\). So

This implies that

Thus

\(\square\)

Definition 4.23

(U, C, d) is a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Denote

Then joint information amount of P and d is defined as

Proposition 4.24

(U, C, d) is a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Then

Proof

\(\{D_1,D_2\cdots ,D_r\}\) is a partition of \(U^l\). \(\forall ~i\),

\(\square\)

Proposition 4.25

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Given \(P\subseteq C\). Then \(E^l_\theta (P|d)\ge 0\).

Proof

By Definition 4.19, we have

\(\{D_1,D_2\cdots ,D_r\}\) is a partition of \(U^l\). \(\forall ~i\),

Then

By Definition 4.23, we have

\(\forall ~i,j\),

Then

By Proposition 4.24,

Hence \(E^l_\theta (P|d)\ge 0.\)

\(\square\)

In the following definition, \(\frac{E^l_\theta (C|d)}{E^l_\theta (P|d)}\) and \(\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\) can be viewed as the importance of \((U^l,P,d)\) and \((U^u,P,d)\), respectively. \(\lambda\) means the missing rate of labels, which is processed as a weight. We define the sum of the importance of \((U^l,P,d)\) and \((U^u,P,d)\) with the missing rate of labels as the type 4 importance of (U, P, d).

Definition 4.26

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). \(\forall\) \(P\subseteq C\). Then the type 4 importance of the subsystem (U, P, d) is defined as

Example 4.27

(Continue with Example 4.4) We have

\(E^l_\theta (\{a_1\}|d)=\frac{2}{7}*\log _2 \frac{3}{7}+\frac{2}{7}*\log _2 \frac{3}{7}+\frac{2}{7}*\log _2 \frac{3}{7}+\frac{0}{7}*\log _2 \frac{2}{7}+\frac{0}{7}*\log _2 \frac{2}{7} +\frac{2}{7}*\log _2 \frac{3}{7}+\frac{2}{7}*\log _2 \frac{3}{7}+ \frac{3}{7}*\log _2 \frac{2}{7}+\frac{3}{7}*\log _2 \frac{2}{7}+\frac{3}{7}*\log _2 \frac{2}{7}+\frac{0}{7}*\log _2 \frac{2}{7}+\frac{0}{7}*\log _2 \frac{2}{7} +\frac{3}{7}*\log _2 \frac{2}{7}+\frac{3}{7}*\log _2 \frac{2}{7}+ \frac{0}{7}*\log _2 \frac{5}{7}+\frac{0}{7}*\log _2 \frac{5}{7}+\frac{0}{7}*\log _2 \frac{5}{7}+\frac{2}{7}*\log _2 \frac{0}{7}+\frac{2}{7}*\log _2 \frac{0}{7} +\frac{0}{7}*\log _2 \frac{5}{7}+\frac{0}{7}*\log _2 \frac{5}{7}\approx 1.2245\).

Similarly, \(E^l_\theta (\{a_2\}|d)\approx 4.5714\), \(E^l_\theta (\{a_3\}|d)\approx 0.9796\), \(E^l_\theta (C|d)\approx 0.4898\).

Then

Proposition 4.28

Let (U, C, d) be a p-HIS with \(|U^l|=n_l\). Then the following properties hold.

(1) \(0\le imp^{(4)}_{\lambda ,\theta }(P)\le 1\);

(2) \(imp^{(4)}_{\lambda ,\theta }(C)=1\);

(3) \(P\subseteq Q\subseteq C\) implies to \(imp^{(4)}_{\lambda ,\theta }(Q)\le imp^{(4)}_{\lambda ,\theta }(P)\);

(4) \(imp^{(4)}_{\lambda ,\theta }(P)=1\) \(\Leftrightarrow\) \(E^l_\theta (P|d)=E^l_\theta (C|d)\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

Proof

“(1) and (2)" are obvious.

(3) Since \(P\subseteq Q\subseteq C\), we have

Then

Thus

Hence \(imp^{(1)}_{\lambda ,\theta }(Q)\le imp^{(1)}_{\lambda ,\theta }(P)\).

(4) \(``\Leftarrow "\) is clear. Below, we prove \(``\Rightarrow "\).

Suppose \(imp^{(4)}_{\lambda ,\theta }(P)=1\). Then

This implies that

Note that \(1-\frac{E^l_\theta (C|d)}{E^l_\theta (P|d)}=\frac{E^l_\theta (P|d)-E^l_\theta (C|d)}{E^l_\theta (P|d)}\ge 0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=\frac{|ind^u_\theta (P)|-|ind^u_\theta (C)|}{|ind^u_\theta (P)|}\ge 0\). Then \(1-\frac{E^l_\theta (C|d)}{E^l_\theta (P|d)}=0\), \(1-\frac{|ind^u_\theta (C)|}{|ind^u_\theta (P)|}=0\). Thus

\(\square\)

5 Statistical analysis

In this section, we make statistical analysis on the four UMs. Experimental analysis is carried out to test the effect of measurements.

5.1 Measurement analysis

Twelve hybrid datasets (see Table 3) are selected from UCI (machine learning repository) database for experiments. Since the labels of these datasets are not missing, for the convenience of the experiment, we make the labels randomly missing by 50%. As a result, the following experiments were conducted with \(\lambda = 0.5\). The experimental setup utilized a Lenovo computer equipped with an Intel(R) Core(TM) i7-9700 CPU @ 3.00GHz and 16GB of memory. The code is programmed with MATLAB 2019 software.

In order to test the performance of these four measures, all datasets need to be preprocessed. For any dataset, let \({P_i} = \{ {p_1}, \cdots ,{p_i}\} (i = 1, \cdots , n), n=|C|\), four measure sets are as follows:

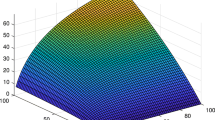

This formula means that we need to observe the change of these measurements as the number of subsets increases. Using this formula, the measurement change curves for 12 data sets are plotted as shown in Fig. 2. The x-axis indicates the cardinality of subset, and the y-axis represents the value of \(X_j(dataset)\). It can be seen that when the number of attributes gradually increases, the four UMs gradually rise to 1. It can be concluded that the certainty of A p-HIS increase with the growth of the attribute subset. We also find that the area enclosed by the blue curve with the x-axis on datasets Ann, Ban and Ion is slightly smaller than that of other curves. However, in other datasets, the blue line is above other color curves. This indicates that \(imp^{(3)}_{\lambda ,\theta }(P)\) sometimes have the better effect. Consequently, four UMs all can be used to measure the uncertainty of partially labeled hybrid data.

5.2 Dispersion analysis

Many literatures employ the standard deviation and discrete coefficient along with the mean to report statistical analysis results, and discrete analysis of hybrid data can use them. Next, we analyze the discretization of 12 datasets by using four UMs.

Suppose that \(X_j(dataset)=\{x_1,x_2 \cdots x_n\}\) is a dataset, let \({\bar{x}}, \sigma (X), CV(X)\) be the arithmetic average value, standard deviation and coefficient of variation for X respectively. Their formulas are as follows: \({\bar{x}}=\frac{1}{n} \sum \limits _{i=1}^{n} x_i\), \(\sigma (X)=\sqrt{\frac{1}{n} \sum \limits _{i=1}^{n} (x_i-{\bar{x}})}\), \(CV(X)=\frac{\sigma (X)}{{\bar{x}}}\). For the sake of simplicity, the coefficient of variation is called CV-value.

The smaller the coefficient of variation is, the more reliable the information system for measuring uncertainty is and the smaller the risk is. The CV-value of each dataset is calculated and displayed in Table 4. Table 4 reports four UMS measure the discretization of different datasets. As we can see that the minimum average value is 0.3517, and the maximum value is 0.4608. In order to more intuitively compare the advantages and disadvantages of these four UMS, we rank them according to the results in Table 4. Since the smaller the CV-value, the smaller the risk, the data in Table 4 are sorted from small to large, and the results are shown in Table 4. It is easy to find that the lowest and highest average ranking are \(imp^{(3)}_{\lambda ,\theta }(P)\) (1.6667) and \(imp^{(1)}_{\lambda ,\theta }(P)\) (3.0833) respectively. Consequently, it can be concluded that \(imp^{(3)}_{\lambda ,\theta }(P)\) is the most stable and the other UMS take more risk.

5.3 Statistical analysis of four UMs

Next, we conduct a statistical analysis on these four UMs. The Friedman test is a nonparametric test used to determine if there are significant differences among the four UMs by ranking them. The data in Table 5 are input into the software SPSS, and the calculated results are shown in Table 6. If the significance level is taken as \(\alpha = 0.05\), then \(p=0.0307 < \alpha\) in Table 6, indicating that there are significant differences among the four UMs. Then, multiple comparisons are implemented by Nemenyi test. The critical range of the difference between the average ordinal values calculated is \(CD=q_\alpha \sqrt{\frac{k(k+1)}{6N}}\).

It is known that \(k=4\) represents four UMs and \(N=12\) represents 12 datasets. Let \(\alpha =0.1\), then look up table \(q_\alpha =2.291\) and calculate \(CD=1.2075\). The test results are plotted in Fig. 3 for visual comparison. It can be seen from Fig. 3 that:

-

(1)

The blue line is closer to the y-axis than the other lines;

-

(2)

In the horizontal direction, the blue line does not overlap the green and red lines;

-

(3)

In the horizontal direction, the blue line partially overlaps the magenta line.

It can be concluded that:

-

(a)

\(imp^{(3)}_{\lambda ,\theta }(P)\) is statistically better than \(imp^{(1)}_{\lambda ,\theta }(P)\) and \(imp^{(4)}_{\lambda ,\theta }(P)\);

-

(b)

There is no significant difference between \(imp^{(3)}_{\lambda ,\theta }(P)\), \(imp^{(2)}_{\lambda ,\theta }(P)\);

-

(c)

There is no significant difference between \(imp^{(1)}_{\lambda ,\theta }(P)\), \(imp^{(2)}_{\lambda ,\theta }(P)\) and \(imp^{(4)}_{\lambda ,\theta }(P)\).

Therefore, \(imp^{(3)}_{\lambda ,\theta }(P)\) performs better than the other three UMs and next we will focus on it.

6 Semi-supervised attribute reduction for hybrid data

In this section, we study Semi-supervised attribute reduction for hybrid data.

6.1 Semi-supervised attribute reduction in a p-HIS

Definition 6.1

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\) and \(P\subseteq C\). Then P is called a coordinate subset of C with respect to d in a p-HIS (U, C, d), if \(POS^{l,\theta }_P(d)=POS^{l,\theta }_C(d)\), \(ind^u_\theta (P)=ind^u_\theta (C)\).

All coordinate subsets of C with respect to d is denoted by \(co^p_{\lambda ,\theta }(C)\).

Definition 6.2

Let (U, C, d) be a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\) and \(P\subseteq C\). Then P is called a reduct of C with d, if \(P\in co^p_{\lambda ,\theta }(C)\) and for each \(a\in P\), \(P-\{a\}\not \in co^p_{\lambda ,\theta }(C)\).

All reducts of C with d is denoted by \(red^p_{\lambda ,\theta }(C)\).

Theorem 6.3

(U, C, d) is a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). The following conditions are equivalent:

(1) \(P\in co^p_{\lambda ,\theta }(C)\);

(2) \(imp^{(1)}_{\lambda ,\theta }(P)=1\);

(3) \(imp^{(2)}_{\lambda ,\theta }(P)=1\);

Proof

(1) \(\Rightarrow\) (2). \(P\in co^p_{\lambda ,\theta }(C)\). Then \(POS^{l,\theta }_P(d)=POS^{l,\theta }_C(d)\), \(ind^u_\theta (P)=ind^u_\theta (C)\). Thus \(\Gamma ^{l,\theta }_P(d)=\Gamma ^{l,\theta }_C(d)\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

By Proposition 4.5, \(imp^{(1)}_{\lambda ,\theta }(P)=1\).

(2) \(\Rightarrow\) (3). Suppose \(imp^{(1)}_{\lambda ,\theta }(P)=1\). Then by Proposition 4.5,

By Proposition 2.6, \(dis^{l,\theta }_d(P)=dis^{l,\theta }_d(C)\). Then \(|dis^{l,\theta }_d(P)|=|dis^{l,\theta }_d(C)|\).

By Proposition 4.8, \(imp^{(2)}_{\lambda ,\theta }(P)=1\).

(3) \(\Rightarrow\) (1). Suppose \(imp^{(2)}_{\lambda ,\theta }(P)=1\). Then by Proposition 4.8,

Note that \(dis^{l,\theta }_d(P)\subseteq dis^{l,\theta }_d(C)\) and \(ind^u_\theta (P)\supseteq ind^u_\theta (C)\). Then \(dis^{l,\theta }_d(P)=dis^{l,\theta }_d(C)\) and \(ind^u_\theta (P)= ind^u_\theta (C)\).

By Proposition 2.6, \(POS^{l,\theta }_P(d)=POS^{l,\theta }_C(d)\).

Thus \(P\in co^p_{\lambda ,\theta }(C)\).

\(\square\)

Corollary 6.4

(U, C, d) is a p-HIS with \(\lambda = \frac{|U^u|}{|U|}\) and \(P\subseteq C\). The following conditions are equivalent:

(1) \(P\in red^p_{\lambda ,\theta }(C)\);

(2) \(imp^{(1)}_{\lambda ,\theta }(P)=1\) and \(\forall ~a\in P\), \(imp^{(1)}_{\lambda ,\theta }(P-\{a\})<1\);

(3) \(imp^{(2)}_{\lambda ,\theta }(P)=1\) and \(\forall ~a\in P\), \(imp^{(2)}_{\lambda ,\theta }(P-\{a\})<1\);

Proof

It follows from Theorem 6.3. \(\square\)

Lemma 6.5

(U, C, d) is a p-HIS and P is a subset of C. If \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\), then \(\forall\) \(u\in U^l\) and j,

Proof

(1) \(u\in D_j\), \(D_j=[u]^{l,\theta }_d\). Since \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\), therefore \([u]^{l,\theta }_P\subseteq [u]^{l,\theta }_d\). Thus \([u]^{l,\theta }_P\cap D_j=[u]^{l,\theta }_P\).

(2) \(u\not \in D_j\), then \([u]^{l,\theta }_d\cap D_j=\emptyset\). Since \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\), therefore \([u]^{l,\theta }_P\subseteq [u]^{l,\theta }_d\). Thus \([u]^{l,\theta }_P\cap D_j=\emptyset\). \(\square\)

Lemma 6.6

(U, C, d) is a p-HIS. \(\forall\) \(P\subseteq C\). If \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\), \(\forall\) \(u\in U^l\),

Proof

Since \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\), by Lemma 6.5, we have

Thus

\(\square\)

Proposition 6.7

(U, C, d) is a p-HIS and P is a subset of C. the following conditions are equivalent:

(1) \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\);

(2) \(H^l_\theta (P\cup d)=H^l_\theta (P);\)

(3) \(H^l_\theta (P|d)=0\).

Proof

“\((1)\Rightarrow (2)\)" is proved by Lemma 6.6.

“\((2)\Rightarrow (3)\)" follows from Proposition 4.14.

\((3)\Rightarrow (1)\). Suppose \(H^l_\theta (P|d)=0.\) Then

Suppose \(R^{l,\theta }_P\not \subseteq R^{l,\theta }_d.\) Then \(\exists ~i^*\in \{1,\cdots ,n\}\), \([u_{i^*}]^{l,\theta }_P\not \subseteq [u_{i^*}]^{l,\theta }_d.\) Denote

We have

This follows that

Note that

Then

So

This is a contradiction.

Thus \(R^{l,\theta }_P\subseteq R^{l,\theta }_d.\)

\(\square\)

Proposition 6.8

(U, C, d) is a p-HIS. Given \(P\subseteq C\). If \(R^{l,\theta }_P\subseteq R^{l,\theta }_d\), then \(E^l_\theta (P|d)=0\).

Proof

It is directly proved by Proposition 4.24 and Lemma 6.6.

\(\square\)

Corollary 6.9

(U, C, d) is a p-HIS and \(\theta\)-consistent. Then \(H^l_\theta (C|d)=E^l_\theta (C|d)=0\).

Proof

It follows from and Propositions 3.16, 6.7 and 6.8. \(\square\)

Theorem 6.10

(U, C, d) is a \(\theta\)-consistent p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). The following conditions are equivalent:

(1) \(P\in co^p_{\lambda ,\theta }(C)\);

(2) \(imp^{(3)}_{\lambda ,\theta }(P)=1\).

Proof

(1) \(\Rightarrow\) (2). \(P\in co^p_{\lambda ,\theta }(C)\), we have \(POS^{l,\theta }_P(d)=POS^{l,\theta }_C(d)\), \(ind^u_\theta (P)=ind^u_\theta (C)\). Thus \(\Gamma ^{l,\theta }_P(d)=\Gamma ^{l,\theta }_C(d)\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

By Proposition 4.2, we have

Obviously, \(\forall ~j\), \(\underline{R_C^{l,\theta }}(D_j)\supseteq \underline{R_P^{l,\theta }}(D_j),\) which implies

Thus,

Therefore \(\forall ~j\),

This means that

(U, C, d) is \(\theta\)-consistent, from Proposition 3.16, we have \(R^{l,\theta }_C\subseteq R^{l,\theta }_d.\)

Then \(\forall ~u\in U^l\),

Let \([u]^{l,\theta }_d=D^u\). \(\forall ~u\in U^l\), \([u]^{l,\theta }_P\subseteq D^u=[u]^{l,\theta }_d,\) which implies \(R^{l,\theta }_P\subseteq R^{l,\theta }_d.\)

By Proposition 6.7, \(H^l_\theta (P|d)=0.\)

(U, C, d) is \(\theta\)-consistent, from Corollary 6.9, we have \(H^l_\theta (C|d)=0.\) Then \(H^l_\theta (P|d)=H^l_\theta (C|d).\)

By Proposition 4.18, \(imp^{(3)}_{\lambda ,\theta }(P)=1\).

(2) \(\Rightarrow\) (1). Suppose \(imp^{(3)}_{\lambda ,\theta }(P)=1\). Then by Proposition 4.18, \(H^l_\theta (P|d)=H^l_\theta (C|d),\) \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

Since \(ind^u_\theta (P)\supseteq ind^u_\theta (C)\), we have \(ind^u_\theta (P)=ind^u_\theta (C)\).

(U, C, d) is \(\theta\)-consistent, from Corollary 6.9, we have \(H^l_\theta (C|d)=0\). Then \(H^l_\theta (P|d)=0.\) From Proposition 6.7, \(R^{l,\theta }_P\subseteq R^{l,\theta }_d.\)

Suppose that \(\exists ~j^*\),

Then \(\underline{R_C^{l,\theta }}(D_{j^*})-\underline{R_P^{l,\theta }}(D_{j^*})\ne \emptyset .\)

Thus

\(u^*\in \underline{R_C^{l,\theta }}(D_{j^*})\) implies that \(u^*\in R_C^{l,\theta }(u^*)\subseteq D_{j^*}\). Therefore \(D_{j^*}=[u^*]^l_d\). \(u^*\not \in \underline{R_P^{l,\theta }}(D_{j^*})\) implies that \([u^*]^l_P\not \subseteq D_{j^*}\). Thus \([u^*]^l_P\not \subseteq [u^*]^l_d\). So \(R_P^{l,\theta }\not \subseteq R^{l,\theta }_d\). This is a contradiction.

Hence \(\forall ~j\),

Obviously, \(\forall ~j\), \(\underline{R_C^{l,\theta }}(D_j)\supseteq \underline{R_P^{l,\theta }}(D_j).\)

Then \(\underline{R_C^{l,\theta }}(D_j)=\underline{R_P^{l,\theta }}(D_j).\) Thus

Hence \(P\in co^p_{\lambda ,\theta }(C)\).

\(\square\)

Corollary 6.11

(U, C, d) is a \(\theta\)-consistent p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). The following conditions are equivalent:

(1) \(P\in red^p_{\lambda ,\theta }(C)\);

(2) \(imp^{(3)}_{\lambda ,\theta }(P)=1\) and \(\forall ~a\in P\), \(imp^{(3)}_{\lambda ,\theta }(P-\{a\})<1\).

Proof

It is straightly proved by Theorem 6.10. \(\square\)

Theorem 6.12

(U, C, d) is a \(\theta\)-consistent p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). If \(P\in co^p_{\lambda ,\theta }(C)\), then \(imp^{(4)}_{\lambda ,\theta }(P)=1\).

Proof

Suppose \(P\in co^p_{\lambda ,\theta }(C)\). Then \(POS^{l,\theta }_P(d)=POS^{l,\theta }_C(d)\), \(ind^u_\theta (P)=ind^u_\theta (C)\). Thus \(\Gamma ^{l,\theta }_P(d)=\Gamma ^{l,\theta }_C(d)\), \(|ind^u_\theta (P)|=|ind^u_\theta (C)|\).

It is easily proved that \(R^{l,\theta }_P\subseteq R^{l,\theta }_d.\) \(E^l_\theta (P|d)=0\) by Proposition 6.7.

(U, C, d) is \(\theta\)-consistent, from Corollary 6.9, we have \(E^l_\theta (C|d)=0.\) Therefore \(E^l_\theta (P|d)=E^l_\theta (C|d).\)

By Proposition 4.28, \(imp^{(4)}_{\lambda ,\theta }(P)=1\).

\(\square\)

Corollary 6.13

(U, C, d) is a \(\theta\)-consistent p-HIS with \(\lambda = \frac{|U^u|}{|U|}\). If \(P\in red^p_{\lambda ,\theta }(C)\), then \(imp^{(4)}_{\lambda ,\theta }(P)=1\) and \(\forall ~a\in P\), \(imp^{(4)}_{\lambda ,\theta }(P-\{a\})<1\).

Proof

It follows from Theorem 6.12. \(\square\)

6.2 Semi-supervised attribute reduction algorithms in a p-HIS

The coefficient of variation for the four UMS are discussed in the above chapters. As we know that \(imp^{(3)}_{\lambda ,\theta }(P)\) work best, so it is selected to compile the attribute reduction algorithm.

Algorithm 1: Attribute reduction algorithm for a p-HIS based on the type 3 importance (T3I).

The key of T3I is to calculate the importance of the attribute subsets. By traversing the importance of all attributes, the attributes with the greatest importance are found, and then they are put into the reduction set in turn until the stopping condition is satisfied. The specific process of this algorithm is shown in Fig. 4.

We consider the algorithm in terms of time and space complexity. The complexity of step 1 is \(O(|C||U|^2)\), and the complexity of steps 3-9 is \(O(|C|{|U|^2} + (|C| - 1){|U|^2} + \cdots ,+ |P|{|U|^2})\). So the total complexity of the algorithm is \(O((\frac{{{{|C|}^2}}}{2} + \frac{{|C|}}{2} - \frac{{{{|P|}^2}}}{2} + \frac{{|P|}}{2})|U|^2)\). When \(P = \emptyset\), it means that the algorithm has not completed the reduction. At this time, the maximum complexity of the algorithm is \(O({|C|^2}{|U|^2})\). The spatial complexity of the algorithm is \(O({|C|}{|U|^2})\).

7 Experimental analysis

7.1 Numerical experiments

There are two important parameters \(\lambda\) and \(\theta\) in T3I algorithm. To facilitate the experiments, let \(\lambda = 0.5\), which means that 50% of the labels are randomly missing. \(\theta\) is a parameter that controls the distance between information values. In order to analyze the influence of \(\theta\) on the reduction algorithm, two classifiers are used to analyze the accuracy of the reduced attribute subset. Two classification algorithms were used: one is gradient Boosting Decision Tree (BDT), the other is K-NearestNeighbor(KNN, K=3). An average performance measure was computed based on a 10-fold cross validation result repeated 10 times. We have plotted the relationship between \(\theta\) and classification accuracy, as shown in Fig. 5. The experiments show that the \(\theta\) can affect the classification accuracy of the model. Moreover, when the maximum classification accuracy is obtained, different datasets correspond to different parameters \(\theta\). This can provide a basis for us to find out the maximum classification accuracy.

In order to further study the performance of the algorithm, four algorithms are selected from other literatures for comparison to illustrate the effectiveness of the algorithm. They are MEHAR (Hu et al. 2021), SHIVAM (Shreevastava et al. 2019), SFSE (Wan et al. 2021), and RnR-SSFSM (Solorio-Fernndez et al. 2020). Algorithm SHIVAM has no abbreviation, so it is replaced by the author’s name. MEHAR, SHIVAM and SFSE are algorithms for partially labeled hybrid data. RnR-SSFSM is for supervised hybrid data. The five algorithms are restored by programming, and the same 12 datasets are used for numerical experiments.

Table 7 shows the attribute subset of each algorithm after reduction. Black bold type indicates optimal value, and the last line is the average number of reduction. It can be seen that MEHAR performs best, which is 8.42. The proposed algorithm T3I takes the second place, which is 9.08. This shows that T3I reduction efficiency is above the average level.

Then, we analyze the classification accuracy of the reduction set. The comparison of classification accuracy calculated is shown in Tables 8 and 9. It can be seen that TI3 has reached its optimal value in many datasets, with a total of 17 tests ranking first in Tables 8 and 9. This is significantly more than the 2 first-place rankings achieved by MEHAR, the 2 first-place rankings achieved by SHIVAM, the 2 first-place rankings achieved by SFSE and the 1 first-place rankings achieved by RnR-SSFSM. In addition, T3I also get the best average classification accuracy in these two tables, with 0.7516 and 0.7306, respectively. So it can be concluded that T3I performs well in most cases.

To analyze these algorithms, it is not sufficient to compare classification accuracy. We also need to calculate the True Position Rate (TPR)and False Positive Rate (FPR) to evaluate the effect of data prediction. According to the real and predicted values it can be divided into: (1) True Positive (TP); (2) False Positive (FP); (3) True Negative (TN); (4) False Negative (FN). Then True Position Rate (TPR) and False Positive Rate (FPR) can be calculated respectively as follows: \(TPR=TP/TP+FN\) and \(FPR=FP/FP+TN\). The precision (P) and the recall (R) which is also call sensitivity can be expressed as \(P=TP/(TP+FP)\) and \(R=TP/(TP+FN)\). Geometric mean (G-mean) can be showed as \(GM=\sqrt{(P*R)}\).

Therefore, we plotted the Receiver Operating Characteristic Curve (ROC) Narkhede (2018) and calculated the Area Under Curve(AUC) area. See Figs. 6 and 7 for details. The x-axis is FPR, and the y-axis is TPR. The blue line in the figures represents the T3I algorithm. From the Fig. 6, the blue line is not drawn on the outermost edge of the subgraphs Ann, SPF, lon and EES. It can be also seen from Fig. 7 that the blue line is not located on the outermost layer of subgraphs Ban, SPF, Ion and EES. This indicates that T3I does not have any advantages in these datasets, but it performs very well in the remaining datasets. From these two figures, we find that all curves of subgraph Aba are concave, and the area enclosed by the x-axis is less than 0.5, which indicates that the classification characteristics of dataset AD are not good, and key attributes are missing during data collection. Tables 10 and 11 record the area enclosed by each curve in Figs. 6 and 7. The larger the area is, the better the effect of the algorithm classification is. As can be seen from the Tables, the average AUC of T3I is the largest, 0.8688 and 0.8402 respectively. Thus, the attribute subset of T3I after reduction has a nice classification effect.

Furthermore, the G-mean is employed as an additional indicator to evaluate the effectiveness of classification. Prior to that, precision and recall metrics are calculated for the reduction set of these algorithms using two classifiers(See Tables 12 and 13). From Tables 12 and 13, it is apparent that while the computed P and R values from the reduction set of T3I may not always be optimal for each dataset individually, the average performance is the most favorable. However, these tables alone do not provide sufficient insight into the overall quality of these algorithms. For this reason, G-mean is calculated as shown in Tables 14 and 15 according to the data in Tables 12 and 13. Then, we can easily determine which algorithm performs better. It is evident that T3I has achieved a total of 18 top rankings in Tables 14 and 15, surpassing MEHAR’s 2 first places, SHIVAM’s 1 first place, SFSE’s 2 first places, and RnR-SSFSM’s 1 first place by a significant margin. It is not difficult to intuitively analyze from the above results that T3I performs better. However, further statistical analysis is needed to determine whether our judgment is accurate.

7.2 Friedman and Nemenyi test of algorithms

Next, we analyze the differences between these five algorithms. Friedman test was used for significance analysis. The data in Tables 8 and 9 and Tables 14 and 15 are sorted in descending order, and the results are shown in Tables 16, 17, 18 and 19. Once the data is input into SPSS software for statistical analysis, then the calculated results are shown in Tables 20 and 21. It can be seen that the values of p in Tables 20 and 21 are 0.0041, 0.0251, 0.0103 and 0.0116, respectively, all of which are less than the significance \(\alpha =0.05\). Consequently, it can be concluded that the five algorithms have significant differences.

Afterwards, the Nemenyi test was conducted as a post-hoc test with the purpose of further distinguishing the advantages and disadvantages of each algorithm. The calculation formula of the critical range CD is consistent with the description in section 5.3. It is known that \(k=5\) represents 5 algorithms, and \(N=12\) represents 12 datasets. Let \(\alpha =0.1\), \(q_\alpha =2.459\) was obtained by referencing the table, and \(CD=1.5873\) was calculated. Figure 8 was plotted based on the calculated CD value. By Fig. 8a, we have:

-

(a)

The classification accuracy of T3I is significantly better than RnR-SSFSM, and SFSE;

-

(b)

MEHAR, SHIVAM, RnR-SSFSM and SFSE have no significant statistical difference in classification accuracy.

-

(c)

In terms of classification accuracy, there is no obvious difference among T3I, MEHAR and SHIVAM.

By Fig. 8b, we have:

-

(a)

The classification accuracy of T3I is significantly better than MEHAR, SFSE and RnR-SSFSM;

-

(b)

SHIVAM, MEHAR, SFSE and RnR-SSFSM have no significant statistical differences in classification accuracy;

-

(c)

In terms of classification accuracy, there is no obvious difference between T3I and SHIVAM.

By Fig. 9a, we have:

-

(a)

The G-mean of T3I is significantly better than MEHAR, SHIVAM and SFSE;

-

(b)

T3I and RnR-SSFSM have no significant statistical difference in G-mean.

By Fig. 9b, we have:

-

(a)

The G-mean of T3I is significantly better than SFSE, RnR-SSFSM and SHIVAM;

-

(b)

In terms of G-mean, there is no obvious difference between T3I and MEHAR.

It can be seen that the comprehensive ranking of T3I is higher than other algorithms, as shown in Figs. 8 and 9. In summary, the results confirm that the suggested T3I outperforms the other algorithms.

7.3 Experimental analysis of the parameter \(\lambda\)

Let’s next discuss another parameter \(\lambda\) of T3I. \(\lambda\) control the missing rate of labels. MEHAR, SHIVAM, SFSE and T3I are all algorithms dealing with semi-supervised information systems. When a takes different values, in order to compare the changes of the four algorithms, Fig. 10 is drawn for observation. The x-axis is parameter \(\lambda\), ranging from 0.1 to 0.9, indicating that the label missing rate ranges from 10% to 90%, and the y-axis is the classification accuracy. Each algorithm in the figure has two curves, and the solid line and dotted line respectively correspond to the accuracy under BDT and KNN classifiers. The blue line represents T3I, and other color lines are shown in the legend.

The classification accuracy curves of datasets Ann and SPF are relatively stable, indicating that they are not affected by missing labels. Other subgraphs shows that \(\lambda\) has a great impact on the classification accuracy, and the curve fluctuates obviously. We find that no matter what the value of \(\lambda\) is, the blue lines in the subgraphs Aba, Arr, QSA and Thy are closer to the line \(y=1\) than those in other colors. This confirms that the Aba, Arr, QSA and Thy datasets of T3I algorithm experiment have higher classification accuracy. MEHAR performs better in dataset Ann, while SHIVAM performs better in dataset EES. The classification accuracy of other datasets does not show an obvious rule under different algorithms and \(\lambda\).

Therefore, we come to the conclusion that T3I has obvious advantages in attribute reduction of most hybrid datasets. However, with the different values of parameters \(\theta\) and \(\lambda\), the benefits are not clear, resulting in a small range of oscillations.

8 Conclusions

Based on the defined degrees of importance, we propose the semi-supervised attribute reduction algorithm. The algorithm can flexibly adapt to various missing rates of label. Experimental results and statistical tests on 12 datasets have shown that the degrees of importance are effective, the proposed algorithm is not prone to over-fitting and under-fitting, and can deal with various missing rates more effectively by the comparison with other state-of-the-art algorithms. The findings can enable us to effectively cope with all kinds of data with different missing rates. In the future, we will consider applying this idea to gene data.

References

Cament LA, Castillo LE, Perez JP, Galdames FJ, Perez CA (2014) Fusion of local normalization and Gabor entropy weighted features for face identification. Pattern Recognit 47(2):568–577

Dai JH, Hu H, Zheng GJ, Hu QH, Han HF, Shi H (2016) Attribute reduction in interval-valued information systems based on information entropies. Front Inform Technol Electron Eng 17(9):919–928

Dai JH, Tian HW (2013) Entropy measures and granularity measures for set-valued information systems. Inform Sci 240:72–82

Dai JH, Wang WT, Xu Q (2013) An uncertainty measure for incomplete decision tables and its applications. IEEE Trans Cybern 43(4):1277–1289

Dai JH, Hu QH, Zhang JH, Hu H, Zheng NG (2017) Attribute selection for partially labeled categorical data by rough set approach. IEEE Trans Cybern 47(9):2460–2471

Dai JH, Xu Q, Wang WT, Tian HW (2012) Conditional entropy for incomplete decision systems and its application in data mining. Int J General Syst 41(7):713–728

Ershadi MM, Seifi A (2022) Applications of dynamic feature selection and clustering methods to medical diagnosis. Appl Soft Comput 126:109293

Hu SD, Miao DQ, Yao YY (2021) Three-way label propagation based semi-supervised attribute reduction. Chin J Comput 44(11):2332–2343

Houssein EH, Saber E, Ali AA, Wazery YM (2022) Centroid mutation-based search and rescue optimization algorithm for feature selection and classification. Expert Syst Appl 191:116235

Hempelmann CF, Sakoglu U, Gurupur VP, Jampana S (2016) An entropy-based evaluation method for knowledge bases of medical information systems. Expert Syst Appl 46:262–273

Hu M, Tsang ECC, Guo YT, Xu WH (2022) Fast and robust attribute reduction based on the separability in fuzzy decision systems. IEEE Trans Cybern 52(6):5559–5572

Hu QH, Yu DR, Liu J, Wu C (2008) Neighborhood rough set based heterogeneous feature subset selection. Inform Sci 178(18):3577–3594

Han YH, Yang Y, Yan Y, Ma ZG, Zhou XF (2015) Semisupervised feature selection via spline regression for video semantic recognition. IEEE Trans Neural Netw Learn Syst 26(2):252–264

Kryszkiewicz M (1999) Rules in incomplete information systems. Inform Sci 113:271–292

Liang JY, Qian YH (2008) Information granules and entropy theory in information systems. Sci China (Ser F) 51:1427–1444

Narkhede S (2018) Understanding auc-roc curve. Towards Data Sci 26(1):220–227

Navarrete J, Viejo D, Cazorla M (2016) Color smoothing for RGB-D data using entropy information. Appl Soft Comput 46:361–380

Pawlak Z (1982) Rough sets. Int J Comput Inform Sci 11:341–356

Pawlak Z (1991) Rough sets: theoretical aspects of reasoning about data. Kluwer Academic Publishers, Dordrecht

Pashaei E, Pashaei E (2022) An efficient binary chimp optimization algorithm for feature selection in biomedical data classification. Neural Computi Appl 34:6427–6451

Qian YH, Liang JY, Wu WZ, Dang CY (2011) Information granularity in fuzzy binary GrC model. IEEE Trans Fuzzy Syst 19:253–264

Sang BB, Chen HM, Yang L, Li TR, Xu WH (2021) Incremental feature selection using a conditional entropy based on fuzzy dominance neighborhood rough sets. IEEE Trans Fuzzy Syst 30:1683–1697

Shreevastava S, Tiwari A, Som T(2019) Feature subset selection of semi-supervised data: an intuitionistic fuzzy-rough set-based concept. Proceedings of International Ethical Hacking Conference 2018. Springer, Singapore, 2019: 303–315

Solorio-Fernndez S, Martnez-Trinidad JF, Carrasco-Ochoa JA (2020) A supervised filter feature selection method for mixed data based on spectral feature selection and information-theory redundancy analysis. Pattern Recogn Lett 138:321–328

Swiniarski RW, Skowron A (2003) Rough set methods in feature selection and recognition. Pattern Recogn Lett 24:833–849

Singh S, Shreevastava S, Som T, Somani G (2020) A fuzzy similarity-based rough set approach for attribute selection in set-valued information systems. Soft Comput 24:4675–4691

Tiwari A, Chaturvedi A (2022) A hybrid feature selection approach based on information theory and dynamic butterfly optimization algorithm for data classification. Expert Syst Appl 196:116621

UCI Machine Learning Repository, http://archive.ics.uci.edu/ml/datasets.html

Wu XP, Chen HM, Li TR, Wan JH (2021) Semi-supervised feature selection with minimal redundancy based on local adaptive. Appl Intell 51:8542–8563

Wan JH, Chen HM, Yuan Z, Li TR, Yang XL, Sang BB (2021) A novel hybrid feature selection method considering feature interaction in neighborhood rough set. Knowl-Based Syst 227:107–167

Wierman MJ (1999) Measuring uncertainty in rough set theory. Int J General Syst 28:283–297

Wang CZ, Huang Y, Shao MW, Hu QH, Chen DG (2020) Feature selection based on neighborhood self-information. IEEE Trans Cybern 50(9):4031–4042

Wang F, Liu JC, Wei W (2018) Semi-supervised feature selection algorithm based on information entropy. Comput Sci 45(S2):427–430

Wan L, Xia SJ, Zhu Y, Lyu ZH (2021) An improved semi-supervised feature selection algorithm based on information entropy. Stat Decis 17:66–70

Wang YB, Chen XJ, Dong K (2019) Attribute reduction via local conditional entropy. Int J Mach Learn Cybernet 10(12):3619–3634

Wang P, Zhang PF, Li ZW (2019) A three-way decision method based on Gaussian kernel in a hybrid information system with images: an application in medical diagnosis. Appl Soft Comput 77:734–749

Xu ZL, King I, Michael RTL, Jin R (2010) Discriminative semi-supervised feature selection via manifold regularization. IEEE Trans Neural Netw 21(7):1033–1047

Yuan Z, Chen HM, Li TR (2022) Exploring interactive attribute reduction via fuzzy complementary entropy for unlabeled mixed data. Pattern Recognit 127:108651

Yuan Z, Chen HM, Yang XL, Li TR, Liu KY (2021) Fuzzy complementary entropy using hybrid-kernel function and its unsupervised attribute reduction. Knowl-Based Syst 231:107398

Zhang W, Miao DQ, Gao C, Li F (2016) Semi-supervised attribute reduction based on rough-subspace ensemble learning. J Chin Comput Syst 37(12):2727–2732

Zhang QL, Qu LD, Li ZW (2022) Attribute reduction based on D-S evidence theory in a hybrid information system. Int J Approx Reason 148:202–234

Acknowledgements

The authors would like to thank the editors and the anonymous reviewers for their valuable comments and suggestions, which have helped immensely in improving the quality of the paper. This work is supported by National Natural Science Foundation of China (12261096), Natural Science Foundation of Guangxi Province (2020GXNSFAA159155) and Natural Science Foundation of Yulin (202125001).

Author information

Authors and Affiliations

Contributions

ZL: Investigation, Methodology; JH: Data curation, Investigation, Writing-original draft. PW: Investigation, Software, Validation; CFW: Writing-review & editing.

Corresponding authors

Ethics declarations

Competing interest

The authors declare no competing interests

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Z., He, J., Wang, P. et al. Semi-supervised attribute reduction for hybrid data. Artif Intell Rev 57, 46 (2024). https://doi.org/10.1007/s10462-023-10642-w

Published:

DOI: https://doi.org/10.1007/s10462-023-10642-w