Abstract

In biomedical image analysis, the applicability of deep learning methods is directly impacted by the quantity of image data available. This is due to deep learning models requiring large image datasets to provide high-level performance. Generative Adversarial Networks (GANs) have been widely utilized to address data limitations through the generation of synthetic biomedical images. GANs consist of two models. The generator, a model that learns how to produce synthetic images based on the feedback it receives. The discriminator, a model that classifies an image as synthetic or real and provides feedback to the generator. Throughout the training process, a GAN can experience several technical challenges that impede the generation of suitable synthetic imagery. First, the mode collapse problem whereby the generator either produces an identical image or produces a uniform image from distinct input features. Second, the non-convergence problem whereby the gradient descent optimizer fails to reach a Nash equilibrium. Thirdly, the vanishing gradient problem whereby unstable training behavior occurs due to the discriminator achieving optimal classification performance resulting in no meaningful feedback being provided to the generator. These problems result in the production of synthetic imagery that is blurry, unrealistic, and less diverse. To date, there has been no survey article outlining the impact of these technical challenges in the context of the biomedical imagery domain. This work presents a review and taxonomy based on solutions to the training problems of GANs in the biomedical imaging domain. This survey highlights important challenges and outlines future research directions about the training of GANs in the domain of biomedical imagery.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Generative adversarial networks (GANs) refer to the class of generative models that generate synthetic data by learning through probability distributions of real data (Goodfellow et al. 2014). GANs are designed with generator and discriminator models. The generator produces realistic-looking synthetic data while taking random vectors as inputs. The discriminator’s task is to classify real data from generated (synthetic) data. GANs use an objective function as a joint loss function with minimax optimization. The generator aims to produce realistic data and misguides the discriminator to classify it as real. Contrarily, the discriminator aims to classify synthetic data as fake and real data as real. The discriminator backpropagates its gradient feedback to the generator. The generator updates its learning to generate realistic synthetic data based on the discriminator’s gradient feedback. Ideally, the training of the GANs should be continued until it achieves the Nash equilibrium so that the actions of the generator and discriminator models do not affect each other’s performance. At this stage, the generator becomes well-trained so that it uses random vectors to generate synthetic data that closely resemble the real data.

In healthcare technology, GANs have been widely utilized for several tasks such as pattern analysis of biomedical imagery (Bhattacharya et al. 2020; Qin et al. 2020; Shi et al. 2020), electronic health records (Lee et al. 2020), as well as drug discovery (Zhao et al. 2020a). Recently, GANs have also been contributing in the context of Coronavirus disease (COVID-19), i.e., disease detection from chest radiography (Waheed et al. 2020). In the domain of biomedical imagery, the availability of data is an obstacle to the application of deep learning. Deep learning models are composed of deep neural networks, that require large training datasets for better predictive analytics (Bhattacharya et al. 2020). Thus, enhancing the size of biomedical datasets is a challenging problem. Another dilemma in the biomedical imaging domain is class-imbalanced datasets. It refers to the datasets with skewed classes when dealing with multiple disease classes. With class-imbalanced datasets, deep neural networks train better on the classes with a large number of images rather than the class with a limited number of images (Saini and Susan 2020). Data augmentation is one of the potential solutions to address the class imbalance, as well as data limitation problems (Qasim et al. 2020).

The utility of GANs in biomedical image analysis has been extensively investigated to perform image recognition (Mao et al. 2020), image synthesis (Zhou et al. 2020), image reconstruction (Li et al. 2021a), and image segmentation (Liu et al. 2019). GANs have demonstrated a capacity to support deep learning models through the generation of synthetic images and thus enlarging the size of biomedical datasets (Tegang et al. 2020; Han et al. 2019; Pollastri et al. 2020). GANs suffer from training challenges such as mode collapse, non-convergence, and instability problems. With these limitations, GANs can generate unrealistic, blurry, and less diverse images. The mode collapse problem occurs when the generator produces similar output images while taking different input features. In the domain of biomedical imaging, the mode collapse problem of GANs has been addressed by using minibatch discrimination (Xue et al. 2019), skip connections (Segato et al. 2020), VAEGAN (Kwon et al. 2019), varying layers of generator and discriminator (Qin et al. 2020), spectral normalization (Xu et al. 2020), perceptual image hashing (Neff et al. 2017), Gaussian mixture model as generator (Wu et al. 2018b), discriminator with conditional information vector (Modanwal et al. 2021), self-attention mechanism (Saad et al. 2023; Abdelhalim et al. 2021), and adaptive input-image normalization (Saad et al. 2022). The non-convergence problem occurs due to the lack of GAN’s ability to reach Nash equilibrium. This problem has been addressed by using modified training updates of generator and discriminator (Biswas et al. 2019), Whale optimization algorithm (Goel et al. 2021), and two time-scale update rules (Abdelhalim et al. 2021). The instability problem of GANs occurs due to the vanishing gradient problem. The Wasserstein loss (Xue et al. 2019; Segato et al. 2020; Kwon et al. 2019; Deepak and Ameer 2020), residual connections (Wei et al. 2020), multi-scale generator (Wu et al. 2018a), and Relativistic hinge loss (Saad et al. 2023) techniques are identified to address the instability problem in the biomedical imagery.

Several survey articles have identified technical solutions to address the problems of mode collapse, non-convergence, and instability (Wiatrak et al. 2019; Jabbar et al. 2021; Sampath et al. 2021; Saxena and Cao 2021). In the general imaging domain, few survey articles discuss each problem with solutions based on objective functions and modified architectures of GANs while missing the definition, identification, and quantification methodologies. The quantification methods are discussed as evaluation metrics in two survey articles (Pan et al. 2019; Gui et al. 2021) while covering almost all aspects of each problem. The existing literature discussed these training challenges of GANs in general and did not cover the significant solutions to address these challenges in the domain of biomedical imaging. There are only four survey articles (Kazeminia et al. 2020; Singh and Raza 2021; You et al. 2022; AlAmir and AlGhamdi 2022) that only cover these challenges with their definitions and identifications in the biomedical imaging domain. These survey articles outline application-based problems of GANs and have no information about quantification, and solutions to the training challenges of GANs in the biomedical imaging domain. In this survey article, we define each training problem of GANs with their definition, identification, quantification, and existing solutions. A detailed comparison of this work with the existing survey articles is indicated in Table 1.

1.1 Contributions of this paper

The main contributions of this survey article are listed as follows:

-

In this article, we discuss training challenges of GANs like mode collapse, non-convergence, and instability in detail.

-

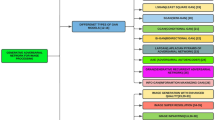

We classify each of these training challenges into four different categories i.e., Definition, Identification, Quantification, and available solutions as shown in Fig. 1.

-

We also review the existing approaches in terms of different biomedical imaging modalities and classify them into applications-based taxonomies for each problem.

-

This survey identifies research gaps and provides future research directions for GANs in the domain of biomedical imagery.

1.2 Organization of the paper

The rest of the article is organized as follows; Sect. 2 presents the detailed working of GANs including background, basic architecture, and popular variants. Section 3 highlights the applications of GANs in biomedical imagery. Section 4 discusses the benchmark evaluation metrics used for quantifying the training challenges of GANs. Section 5 discusses the mode collapse problem definition, identification, quantification, and existing solutions. Section 6 elaborates on the non-convergence problem in the training of GANs, its identification methods, and how to quantify the problem and its possible existing solutions. Section 7 explains the instability problem in the training of GANs while providing a literature review of existing identification and quantification methods, and possible solutions in biomedical imagery. Section 8 provides a comparative analysis of existing GANs architectures for the biomedical imaging domain. Section 9 discusses the important challenges and future research directions. Finally, Sect. 10 concludes the paper.

2 Generative adversarial networks

GANs are advanced machine learning models that are introduced to generate synthetic images by learning the probability distributions of real images. GANs work as learning agents that try to produce realistic images using probability distributions. To gain an understanding of GANs; the architecture, training, objective function, and GANs variants are elaborated as follows.

2.1 Architecture of GANs

GANs are composed of two models; the generator and the discriminator. The generator’s primary task is to create synthetic data that resembles the real data distribution such as images, sounds, or texts (Wolterink et al. 2021). For image data, it takes random vector z with probability distribution \(p_{z}\) (usually drawn from a normal distribution) as input and generates synthetic image samples G(z) with probability distribution \(p_{g}\). The generator is designed with a series of learnable layers, typically consisting of fully connected (dense) or transposed convolutional (deconvolutional) layers. These layers help the generator to upsample the random noise vector z and generate synthetic images in the desired format. The discriminator consists of learnable layers, such as fully connected or convolutional layers for downsampling the images. The discriminator distinguishes the synthetic image samples from real samples. It aims to output high values (close to 1) for real image data and low values (close to 0) for synthetic image data. Goodfellow et al. (2014) proposed the idea of vanilla GANs (baseline GAN) as shown in Fig. 2. The vanilla GAN’s generator and discriminator models are composed of fully connected layers using multi-layer perceptron (MLP) neural networks.

Architecture of vanilla GANs. The generator G and the discriminator D are trained in an adversarial manner so that G can generate plausible fake samples while D can classify them from real samples. G uses random vector input z for generating fake samples. G loss is described as log\((1-D(G(z)))\) while D loss is log(D(x)). The figure is redesigned from Goodfellow et al. (2014)

2.2 Training of GANs

In GANs, adversarial training is the fundamental training technique that involves training two neural networks, the generator, and the discriminator, in a competitive manner, where they learn from each other through an adversarial process. A GAN’s training initializes with random weights of the generator and the discriminator. The generator takes the random noise vector z as input and produces synthetic images. The synthetically generated images are fed into the discriminator, along with real images from the actual dataset. The discriminator’s task is to distinguish between real and synthetic images and assigns probabilities to each image sample being real or fake. The generator aims to generate images that are realistic enough to misguide the discriminator into classifying it as real. It tries to minimize the discriminator’s ability to differentiate between real and synthetic image samples. The discriminator tries to correctly classify real images as real (assigning high probabilities) and synthetic images as fake (assigning low probabilities) (Wiatrak et al. 2019; Jabbar et al. 2021; Goodfellow 2016).

The training process of GANs continues iteratively, with the generator and discriminator playing a minimax game against each other. The generator aims to generate images that look increasingly realistic, while the discriminator strives to become better at distinguishing real from synthetic images. The training converges to Nash equilibrium when the generator generates images that are indistinguishable from real images, and the discriminator can no longer differentiate between the real and synthetic images. The key idea behind adversarial training in GANs is that the generator gets better at producing realistic images by trying to outsmart the discriminator, and the discriminator becomes more adept at distinguishing real from fake images by learning from the generator’s synthetic image samples. This competition and feedback loop between the generator and discriminator lead to the emergence of a well-trained GAN capable of generating high-quality synthetic images (Salimans et al. 2018; Saxena and Cao 2021; Wang et al. 2021).

2.3 Objective function of GANs

The objective function of a GAN is defined by the distance between the probability distribution of the generated samples (\(p_{g}\)) and the probability distribution of real samples (\(p_{r}\)). The binary cross-entropy loss is used to evaluate the objective function. The binary cross-entropy V(D, G) is a joint loss function of the discriminator and the generator. It minimizes the Jensen–Shannon divergence (JSD) between the distribution of generated data as well as real data distribution. The JSD is defined as Eq. (1) (Goodfellow et al. 2014).

In Eq. (1), KL is defined as the Kullback–Leibler divergence, \({\mathbb {P}}_{r}\) and \({\mathbb {P}}_{g}\) represent the real and generated data distributions. \({\mathbb {P}}_{A}\) denotes the average distribution between real and generated distributions. The objective function becomes minimax V(D, G) of G and D as presented in Eq. (2) reproduced from Gui et al. (2021).

In Eq. (2), minimax is considered as a game in the context of GANs. Generally, the minimax is an optimization problem that aims to optimize the objective function using the given constraints of G loss and D loss. The use of the gradient descent method for an optimization of the objective function is discouraged as it may converge the function to a saddle point. At the saddle point, the objective function gives a minimal value for one model’s weight parameters while the maximal value for the other model’s weight parameters. Hence, the objective function is optimized using the minimax game to find a Nash equilibrium.

2.4 Variant of GANs

In this section, we discuss the most commonly practiced variants of GANs that are proposed with some advancement in architecture and loss functions to the vanilla GAN to address the underlying training challenges.

2.4.1 Deep convolutional GAN (DCGAN)

One of the popular variants of GANs is deep convolutional GAN (DCGAN) (Radford et al. 2015). The DCGAN adopted convolutional neural networks instead of fully connected networks as in vanilla GAN for the generator and the discriminator. Besides, batch normalization is used in most of the layers. The ADAM optimizer (Kingma and Ba 2014) is adopted instead of SGD. DCGAN provides a meaningful solution in terms of a stable architecture as compared to a vanilla GAN. However, DCGAN lacks in generating diverse, realistic, and free of artifacts images which are fundamental challenges that need more advanced solutions.

2.4.2 Conditional GAN (CGAN)

In vanilla GAN, the generator produces synthetic images only based on latent input z which is considered to be limited information for high-performance image synthesis. Authors Mirza and Osindero (2014) proposed an idea of conditional GAN that utilizes additional information y together with the random vector input z as well as input to the discriminator. The y can be a class label or any other conditional information that acts as an additional information feed to the generator as well as the discriminator. The CGAN architecture is presented in Fig. 3. The modified objective function is shown in Eq. (3) that is reproduced from Gui et al. (2021). The idea of CGAN has proven to be advantageous in terms of image synthesis as it can generate realistic and diverse images. CGAN shows a more stable training behavior as compared to vanilla GAN and DCGAN.

Architecture of CGANs. The generator G and the discriminator D are trained in an adversarial manner so that G can generate plausible fake samples while D can classify them from real samples. y is a class label or any additional information conditioned with input samples for G and D. G loss is described as log\((1-D(G(z)))\) while D loss is log(D(x)). The figure is redesigned from Mirza and Osindero (2014)

2.4.3 Wasserstein GAN (WGAN)

To address the instability problem in vanilla GANs caused by the use of Jensen–Shannon divergence, authors in Arjovsky et al. (2017) proposed the idea of measuring the distance between two data distributions instead of minimizing the divergence. So, an Earth-mover (EM) or Wasserstein-1 distance is introduced in the Wasserstein-GAN (WGAN). The Wasserstein-1 distance is described as a metric instead of cross-entropy to measure the loss for optimizing the objective function. The objective function of the WGAN is shown in Eq. (4) that is reproduced from Wang et al. (2021).

In Eq. (4), \(\Pi \left( p_{r}, p_{g}\right) \) denotes all the joint distributions and \(\gamma ({\textbf{x}}, {\textbf{y}})\) based on the marginals of \(p_{r}\) and \(p_{g}\). During the training of GAN, when there is no overlap between \(p_{r}\) and \(p_{g}\), the Jensen–Shannon divergence returns no values. However, the EM distance can reflect the distance measured continuously. Thus, WGAN can propagate meaningful gradient feedback to train the generator and avoid vanishing gradient problems. The main contribution of the WGAN is the use of a discriminator as a regressor instead of a binary classifier.

2.4.4 StyleGAN

StyleGAN is a state-of-the-art GAN variant that was proposed with several key features to generate diversified and high-quality synthetic images (Karras et al. 2019). The architecture of StyleGAN is designed with a style generator, adaptive instance normalization (AdaIN), and a progressive growing training technique as depicted in Fig. 4. Unlike traditional GANs where the generator directly maps noise to images, StyleGAN separates the learned “style” (high-level features) from the learned “structure” (low-level features) of the image using a mapping network f. This separation allows for more control over the generation process and results in more realistic and appealing images. AdaIN is used to combine the learned style and structure information in StyleGAN. It aligns the statistics (mean and variance) of the intermediate feature maps to match the desired style (Wang et al. 2021). The progressive growing training of StyleGAN starts with a low resolution and gradually increases the resolution of generated images during training. This approach helps stabilize the training process and allows the generator to focus on generating coarse details first before adding finer details, resulting in more coherent and realistic images.

Generator architecture of StyleGAN. This figure is redesigned from Karras et al. (2019)

StyleGAN is known for its ability to generate diverse and unique images from the same latent code. By controlling the style and structure separately, it allows for the manipulation of individual aspects of the generated image, such as changing the pose, color, and facial expressions while keeping the underlying structure consistent (Saxena and Cao 2021).

2.4.5 CycleGAN

The variants of GANs, such as vanilla GAN, DCGAN, CGAN, and WGAN, are limited to the generation of a single image domain using latent input z. However, architectures of these GANs variants were designed to synthesize training images to similar domains and synthetic images have the same mapping as real training images.

The idea of generating images of different mappings and different modalities as compared to the real training images is known as image-to-image translation (Zhu et al. 2017). For this purpose, CycleGAN architecture is proposed. The CycleGAN learns a mapping using the generators G: A \(\xrightarrow {}\) B such as image distributions of A from G(A) must be indistinguishable from the image distributions of B using an adversarial loss (Singh and Raza 2021). To this end, two generators and two discriminators with a cycle consistency loss are proposed in CycleGAN architecture as depicted in Fig. 5. In CycleGAN, the generator \(G_{AB}\) and discriminator \(D_{B}\) work for a single pair using an adversarial loss \(L_{\text{GAN}}\left( G_{A B}, D_B\right) \) as defined in Eq. 5. However, the adversarial loss for reverse mapping pair \(G_{BA}\) and \(D_{A}\) is denoted as \(L_{\text{GAN}}\left( G_{B A}, D_A\right) \). So, a cycle-consistency loss is proposed to minimize the reconstruction error from image translation of one domain to another domain. The cycle-consistency loss is defined in Eq. 6. The final loss of the CycleGAN is formulated as Eq. 7.

The CycleGAN is trained using the objective function defined in Eq. 8. The Eqs. 5, 6, 7, and 8 are reported from Singh and Raza (2021).

Architecture of CycleGAN. The generators \(G_{AB}\) and \(G_{BA}\) are trained in an adversarial manner by taking real samples from one domain as input and generating plausible fake image samples for another domain as output. \(x_{a}\) and \(x_{b}\) are two different unaligned image domains. The discriminators \(D_{A}\) and \(D_{B}\) distinguish the generated fake samples from real samples and provide feedback to the generators to update their learning accordingly. This figure is redesigned from Singh and Raza (2021)

2.4.6 DiscoGAN

DiscoGAN is another unsupervised GAN variant used for image-to-image translation tasks, but it focuses on discovering cross-domain relations between two distinct domains (Kim et al. 2017). The main goal of DiscoGAN is to learn the cross-domain relationships between two unpaired datasets, without using any paired data during the training process. DiscoGAN uses reconstruction losses to discover relations among different domains as depicted in Fig. 6. It aims to learn the shared structure between two domains, allowing for translation between the two domains in both directions.

Architecture of DiscoGAN. The generator and discriminator models are designed with reconstruction losses (\(L_{const}\)) to discover the cross-domain relationship between two unpaired, unlabeled datasets (Kim et al. 2017)

2.4.7 U-Net

The U-Net is a popular model that is widely used for image segmentation tasks in the domain of biomedical image analysis (Punn and Agarwal 2022). In GANs, U-Net is integrated into GAN architectures to perform segmentation tasks efficiently for biomedical images (Mubashar et al. 2022).

U-Net is a U-shaped network that combines low-level and high-level information to extract the complex features of segmented regions. The U-Net is proposed by Ronneberger et al. (2015). The architecture of U-Net is depicted in Fig. 7. U-Net is designed with a symmetrical ordering of encoder-decoder blocks to distinguish every pixel by extracting multi-scale feature maps using encoding the input and decoding it to output using the same resolution (Punn and Agarwal 2022). The U-Net is operated to segregate the overlapping regions using background pixels with an individual loss of each pixel. This process is defined through an energy function E as represented in Eq. 9.

In Eq. 9 reported from Punn and Agarwal (2022), softmax is defined as Eq. 10.

In 9 and 10, The \(w_c\) indicates a weight map while \(d_1\) and \(d_2\) denote the distances to the boundary pixels at the first and second nearest positions respectively. \(w_0\) and \(\Omega \) are the constants. The \(a_k(x)\) denotes an activation for channel k with pixel \(x \in \Omega \) and \(\Omega \in {\mathbb {Z}}^2\).

Architecture of U-Net (Ronneberger et al. 2015)

3 Applications of GANs in biomedical image analysis

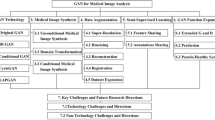

In the domain of biomedical imaging, GANs have been utilized in several applications such as image synthesis (Kazeminia et al. 2020), image segmentation (Román et al. 2020), image reconstruction (Yedder et al. 2021), image detection (Yi et al. 2019), image denoising (Tian et al. 2020), image super-resolution (Li et al. 2021b), and image registration (Haskins et al. 2020). The performance of these applications is affected by the training challenges of GANs. This section presents a high-level discussion on the impact of training challenges of GANs for the applications such as image synthesis, image segmentation, image reconstruction, image detection, image denoising, image super-resolution, and image registration in biomedical image analysis. How these training challenges affect applications is also discussed. A few state-of-the-art survey articles are identified to get insights into these applications for readers that are shown in Fig. 8.

3.1 Image synthesis

GANs are used to generate synthetic images of training images. Conventionally, GANs are introduced as unsupervised models and can be leveraged with unannotated image datasets. Therefore, synthesizing training images using GANs is known as image synthesis. Training challenges of GANs can affect the synthetic images during the image synthesis process. For example, the generation of similar synthetic images for distinct input images, blurry images, and low-quality images indicates the training challenges of GANs. GANs have been used for two types of image synthesis; unconditional image synthesis and conditional image synthesis (Singh and Raza 2021; Kazeminia et al. 2020; Yi et al. 2019). Each type of image synthesis is discussed as follows.

3.1.1 Unconditional image synthesis

In unconditional image synthesis, GANs rely only on random noisy inputs in the latent space without any prior conditions to generate new synthetic image samples. The unconditional image synthesis of biomedical images is affected most by the training challenges of GANs such as mode collapse and training instability. For example, direct generation of magnetic resonance images, computed tomography images, cell images, and dermoscopic images encounter these training challenges. Being an unsupervised framework, this approach has been widely utilized for biomedical image analysis to address data limitation and class imbalance issues. A detailed discussion and technical papers can be found in Kazeminia et al. (2020), Yi et al. (2019).

3.1.2 Conditional image synthesis

In conditional image synthesis, GANs consider some prior conditional information together with z to generate new synthetic images. This type of image synthesis faces training challenges of GANs during the image-to-image translation tasks. When a GAN generates a biomedical image from the same modality input or cross-modality input images, it can miss salient features of input images during the training to translate into new images. Due to instability problems, the quality of synthetic images can be affected during the generation of biomedical images. There are two types of applications in conditional image synthesis. Generation of new images from real images with some prior conditions in the same modalities such as CT to CT, MRI to MRI, and PET to PET. Generation of new images from different modalities like MRI to CT, MRI to PET, etc. The survey article Singh and Raza (2021) discussed these applications in detail and can be studied.

3.2 Image segmentation

GANs provide a significant contribution to the domain of biomedical imagery for image segmentation tasks. It has been utilized for the segmentation of tumors, pathology, and lesions from different body parts like the brain or liver, etc. GANs use segmented masks with input images to generate synthetic images with the segmentation of the target masks. Sometimes, during the training of GANs, the segmented masks are difficult to learn and GANs generate poorly segmented synthetic images or low-quality images. The literature Yi et al. (2019), Nalepa et al. (2019), Román et al. (2020), Iqbal et al. (2022) can be explored for more discussion on biomedical image segmentation.

3.3 Image reconstruction

GANs have been utilized to improve the quality of reconstructed images like estimating full-dose CT images from low-dose CT images with reduced aliasing artifacts. Usually, GANs do not reduce these aliasing artifacts effectively due to the training instability problem. GANs face difficulty in generating plausible images reconstructed from training images due to poor image quality. The mode collapse occurs during the training of GANs while learning the distribution of low-quality images. The reader can be referred to the survey article Yedder et al. (2021) for a detailed insight on biomedical image reconstruction using GANs.

3.4 Image detection

GANs have been used for unsupervised anomaly detection in biomedical imagery. The discriminator model can be used to detect anomalies like lesions or tumors. This contribution helps to work with unannotated data and address the problem of anomaly detection. The survey articles Kazeminia et al. (2020), Yi et al. (2019) are identified for more detail on the underlying GANs application.

3.5 Image denoising

Image denoising techniques are required to remove the noise and recover the original latent information from the noisy images. GANs can be used as an excellent tool to produce sharp, plausible, and noise-free images. A powerful GAN model is required to denoise biomedical images because it is usually incorporated with the training challenges of GANs. These challenges can affect the denoising of biomedical images as GANs are unable to learn low-quality or noisy images effectively and can reflect a poor generation of output images. A more detailed overview of biomedical image denoising techniques with the utility of GANs can be studied in the literature Kazeminia et al. (2020), Tian et al. (2020).

3.6 Image super resolution

GANs can be utilized to produce super-resolution images from low-resolution images. The training instability problem should be addressed completely to achieve better high-resolution biomedical images as the optimality of the GANs is difficult to achieve. The mode collapse and non-convergence problems can also degrade the quality of synthetic images. GANs have performed various super-resolution tasks in biomedical image analysis and the reader can find a detailed review of those tasks in the review paper Li et al. (2021b).

3.7 Image registration

Conventional registration techniques suffer from parameter dependency problems and high optimization loads. GANs have good capabilities of image transformations that can serve as excellent candidates for the extraction of a more optimal registration mapping. GANs have limitations of training challenges as they can miss the location of an object or feature in the biomedical image during the image registration process. Usually, 3D volumes of biomedical images face these challenges as the generator can not learn 3D volumes effectively to generate diverse, un-blurred, and high-quality synthetic images. More details can be found in the survey article of Haskins et al. (2020).

4 Evaluation metrics

Several evaluation metrics have been proposed to assess the technical training challenges of GANs, such as mode collapse, non-convergence, and unstable training. These metrics include Inception Score (IS), Maximum Mean Discrepancy (MMD), Multi-scale Structural Similarity Index Measure (MS-SSIM), Fréchet Inception Distance (FID), Peak signal-to-noise ratio (PSNR), Dice Score (DS), and classification performance metrics (Precision and Recall). Each metric is discussed in detail as follows.

4.1 Inception score (IS)

Inception score is a metric used for the evaluation of GANs (Salimans et al. 2016). It provides an assessment of generated images for high-quality and diverse characteristics. IS utilizes a pre-trained Inception-Net (Szegedy et al. 2016) and measures the KL divergence between class conditional probability distribution \(p(y \mid {\textbf{x}})\) of generated sample and the marginal probability distribution p(y) obtained from a set of generated images.

In Eq. (11) that is reproduced from Borji (2019), \(p(y \mid {\textbf{x}})\) shows the class conditional probability distribution with image x, p(y) is a marginal probability distribution, and H(x) denotes the entropy of variable x (Borji 2019). IS measures the lowest score as 1 while the highest score depends on the number of classes of the dataset. The higher IS score shows that the model can generate high-quality as well as diverse images.

4.2 Maximum mean discrepancy (MMD)

The maximum mean discrepancy is used to measure the dissimilarity between real image distribution \(p_{r}\) and generated image distribution \(p_{g}\) (Gretton et al. 2012). The higher value of MMD indicates that the generator is collapsing and doesn’t generate realistic and diverse images.

Mathematically, it uses Hilbert’s space of functions. In Hilbert space functions, two functions are supposed to be point-wise closed if they are closed in the norm (Segato et al. 2020). So, MMD can be calculated by measuring the squared distance between the embeddings of \(p_{r}\) and \(p_{g}\) as shown in Eq. (12) that is reproduced from Borji (2019).

4.3 Multi-scale structural similarity index measure (MS-SSIM)

MS-SSIM is a metric that is used to assess the diversity of synthetic images in GANs. MS-SSIM is introduced to measure the similarity score using human perception similarity analysis. It computes the similarity between two images with the help of pixels and structures (Odena et al. 2017). MS-SSIM considers luminance (realizing the brightness of a color) and contrast estimations for a metric score. Luminance (l), contrast (c), and structure (s) can be computed using Eq. (13) as reproduced from Borji (2019).

In Eq. (13), x and y are two images. \(\mu _{x}\) and \(\mu _{y}\) represent the mean, whereas \(\sigma _{x}\) and \(\sigma _{y}\) denote the variance (standard deviation) of pixel intensities. The correlation between corresponding pixels is represented by \(\sigma _{xy}\). For the numerical stability of the fractions, constant C is added in all three quantities. The single-scale similarity index is then computed by Eq. (14) [reproduced from Borji (2019)] by considering the fixed distance perspective, as well as sampling density of images (Wang et al. 2004).

The multi-scale SSIM is a variant of the single-scale SSIM metric. It considers all scales of iteratively downsampled images for computing contrast and structural scores. The luminance quantity is measured at the last iteration known as the coarsest scale (M). Conversely, it gives weightage to the contrast and structure at each scale. The MS-SSIM is computed by Eq. (15) as reproduced from Borji (2019).

The range of MS-SSIM scores lies between 0.0 and 1.0. An important point to note is that a higher MS-SSIM score shows lower diversity between images of the same class. This metric is useful for evaluating GANs to compute the diversity between generated images of a single class.

4.4 Fréchet inception distance (FID)

FID is an evaluation metric used to assess the quality of synthetic images. It is proposed by Heusel et al. (2017). FID computes the mean and covariance of synthetic and real images as shown in Eq. (16) that is reproduced from Borji (2019). It visualizes an embedded layer that contains a set of synthetic images in the Inception-Net and uses it as the continuous multivariate Gaussian.

In Eq.(16), r and s shows real and synthetic images while \(\left( \mu _{r}, \Sigma _{r}\right) \) and \(\left( \mu _{s}, \Sigma _{s}\right) \) denote mean and covariances of real and synthetic images. FID score measures the distance between real and synthetic images in GANs. A higher FID score shows a larger distance between synthetic and real data distributions (Borji 2019).

4.5 Peak signal-to-noise ratio (PSNR)

In GANs, PSNR is used to check the quality of synthetic images to the corresponding real images. PSNR is applied to monochrome images. It is measured in decibels (dB). The higher value of PSNR represents a better quality of synthetic images. PSNR is computed as shown in Eq. (17) reproduced from Borji (2019).

By simplifying,

Whereas

The Eqs. (17), (18), and (19) are reported in Borji (2019). I and K represent two monochrome images. In Eq. (18), MAXI denotes the highest possible pixel value of an image such as 255 in the case of 8-bit representation.

4.6 Dice score (DS)

Dice score is a popular metric that is used to evaluate the targeted segmented images as compared to their real ground truth images (Bertels et al. 2019). In GANs, DS is also utilized to assess the quality of synthetic segmented images. DS compares the area of segmented regions of the generated synthetic images and real ground truth images with the total area of both regions (Ghaffari et al. 2019). The formula for DS is calculated using Eq. 20:

In Eq. 20 reported in Ghaffari et al. (2019), \(Y_{pred}\) indicates the ground truth, \(Y_{pred}\) indicates the predicting label and \(\varepsilon \) is a small number used for avoiding division by zero. Perfect segmentation is indicated by the DS of 1.0.

4.7 Classification performance metrics (precision and recall)

In GANs, classification metrics such as recall and precision are also used to evaluate the quality and diversity of synthetic images (Borji 2019). In literature, studies have been proposed to measure the recall and precision to quantify the mode collapse and instability problem (Lucic et al. 2018; Sajjadi et al. 2018). In Lucic et al. (2018), authors argued that these classification metrics can evaluate the quality and diversity of synthetic images. Sajjadi et al. (2018) argued that high precision and low recall scores indicate low quality and diversity of synthetic images while higher quality and diversity of synthetic images are indicated by low precision and high recall scores.

5 The mode collapse problem

5.1 Definition

The basic purpose of the GANs is to produce realistic and a variety of synthetic output images. The synthetic images should be of different styles (modes of distribution) for each random input. In practice, the generator learns to produce synthetic images just to misguide the discriminator for being classified as real. Once the generator finds the best way to fool the discriminator by producing particular plausible images, it focuses on the generation of similar images repetitively. The discriminator gets fooled each time and classifies the synthetic images as real. Eventually, the discriminator gets stuck in this trap and is unable to get out of this trap. Consequently, the generator starts producing a similar style of images. The underlying problem is known as mode collapse (Goodfellow 2016).

5.2 Identification

The mode collapse problem is identified during the training of GANs by looking at the nature of generated images. The mode collapse refers to the generation of less diversified synthetic images where salient features of input (real) images are overlooked by the generator during the training of GANs (Saad et al. 2022). Therefore, GANs with mode collapse generate synthetic images with similar distribution modes repetitively rather than having input images with diverse distribution modes as indicated in Fig. 9. The mode collapse problem can be divided into two categories based on the number of classes within the datasets (Alotaibi 2020). Firstly, when the generator produces a similar style of output images for multi-class input images then it will affect the inter-class diversity, and the problem is known as inter-class mode collapse. Secondly, when the generator produces a similar style of output images for single-class input images then the problem is termed as intra-class mode collapse and affects the intra-class diversity. The mode collapse problem can also be identified using the loss curves of the generator during the training of GANs. Figure 10 illustrates the mode collapse during the training of GANs using a non-converging generator loss (G) for X-ray image synthesis. Consequently, a converging generator loss (G) in Fig. 11 shows the balanced training of GANs indicating no mode collapse for X-ray image synthesis.

5.3 Quantification

The diversity and similarity of generated synthetic images can be computed by several evaluation metrics. The occurrence of mode collapse and diversity of synthetic images is quantified by MS-SSIM (Wang et al. 2003; Odena et al. 2017) using image similarity features while IS (Salimans et al. 2016), MMD (Gretton et al. 2012), and FID (Heusel et al. 2017) using distance measures as discussed in Sect. 4. However, PSNR, SSIM, and classification metrics such as recall and precision are also used to quantify the diversity of synthetic images.

5.4 Solutions to the problem

5.4.1 Regularization

In deep learning models, we aim to find minimum loss that is difficult to achieve when using large weight sizes. This will lead the model to overfit the data and provide poor prediction results. To alleviate this problem, a regularization term is used to reduce the weight size of the network or limit the model capacity (Goodfellow et al. 2016). In GANs, neural networks are used in the generator as well as in the discriminator. So, when the discriminator produces ambiguous gradients as feedback to the generator continuously, the generator learns to generate similar images again and again to fool the discriminator which leads to the mode collapse problem. Here, regularization is used as weight normalization.

5.4.1.1 Weight normalization (WN)

In GANs, weight normalization (WN) uses specialized training algorithms to update the weight matrices regularly while training the GANs. WN does not use additional loss. It backpropagates the gradients by computing them according to the normalized weights during the training of GANs (Lee and Seok 2020). Several normalization techniques such as spectral normalization (Miyato et al. 2018), batch normalization (Radford et al. 2015), and self-normalization (Klambauer et al. 2017) have been proposed to use as a weight normalization in GANs.

Xu et al. (2020) alleviated the mode collapse problem in a GAN using spectral normalization for a super-resolution of low-dose X-ray images. Spectral Normalization is a type of weight normalization that employs the spectral norm of weight matrices as shown in Fig. 12 while training GANs. The spectral norm is equivalent to the L2 norm and corresponds to the largest singular vector. The largest singular vector can be approached to the Lipschitz constant. The spectral normalization is used to normalize the weight matrices in the discriminator of the proposed Spectral Normalization Super Resolution GAN (SNSRGAN) which controls the Lipschitz constant to 1. The authors utilized IS and MS-SSIM scores to evaluate the diversity of super-resolution synthetic images generated by the SNSRGAN. Results demonstrate that SNSRGAN achieved improved scores of IS with 6.56 and MS-SSIM with 0.986 as compared to the baseline SRGAN (Ledig et al. 2017).

Spectral normalization utilizes the largest singular values of \(W_i\) as their spectral norms (\(\sigma \) \(W_i\)) to divide the actual gradient weights of the discriminator. The figure is redesigned from Miyato et al. (2018)

5.4.1.2 Input normalization (IN)

Input normalization refers to the normalization of input image features so that a GAN can better train on those normalized images and alleviate the mode collapse problem for biomedical image synthesis.

A similar idea of input image normalization is proposed by Saad et al. (2022) to the DCGAN for generating diversified chest X-ray images. The authors alleviated the mode collapse in the DCGAN using a preprocessing technique namely an adaptive input-image normalization (AIIN). The AIIN normalizes the input X-ray images using a contrast-based histogram equalization to highlight the diverse features of X-ray images as depicted in Fig. 13. A DCGAN learns X-ray image features more accurately with these normalized images having highlighted features and can generate improved diversified X-ray images. Several experiments with varying batch sizes, window sizes, and contrast thresholds have been conducted. They used MS-SSIM and FID evaluation metrics to evaluate the mode collapse problem in DCGAN and the diversity of synthetic images.

The block diagram of an adaptive input-image normalization. The figure is redesigned from Saad et al. (2022)

The authors demonstrated improved results of AIIN-DCGAN over DCGAN with high diversity scores using the MS-SSIM and FID evaluation metrics. Moreover, synthetic images with the best MS-SSIM and FID scores are used to augment the imbalanced dataset. A baseline CNN classifier is trained on the standard and augmented datasets to compare the classification score including accuracy, recall, specificity, etc. The improved accuracy of 91.50% and specificity of 0.79 are achieved with the augmented dataset having AIIN-DCGAN synthetic images as compared to the alternate datasets.

5.4.2 Modified architecture

In GANs, if a new architecture is defined with an alternative generator or discriminator or both as compared to the vanilla GAN then we describe it as modified architecture.

5.4.2.1 Generator

An alternative generator introduced in the proposed architecture of GAN is described as the modified generator. To avoid the mode collapse problem, a widely adopted approach is to use multiple generators instead of a single as in vanilla GAN which has proved effective to alleviate the problem (Hoang et al. 2018). However, optimizing multiple generators is complicated and costs extensively large computations.

To address this limitation, Wu et al. (2018b) proposed the idea to use multiple distributions instead of using multiple generators to synthesize human cell images. A Gaussian Mixture Model (GMM) based generator is used to cover each data distribution in the latent space as indicated in Fig. 14. It helps the proposed MDGAN to generate diverse image samples using a mixture of data distributions. Moreover, the authors argued that more distributions can aid in generating more diverse synthetic image samples but can lead to huge computational costs. The generated human cell images are then used to augment the dataset for classification tasks. To evaluate generated images, no quantitative analysis is reported in the paper. While authors discussed that the generated synthetic images aid in data augmentation and improve the classification performance of CNN by 4.6% precision value.

The generator architecture of MDGAN. The figure is redesigned from Wu et al. (2018b)

The hierarchy of layers of the generator and discriminator models. To interpret this idea, Qin et al. (2020) proposed an extension to the StyleGAN as skin-lesion StyleGAN (SL-StyleGAN) for synthesizing skin lesion images. In Qin et al. (2020), the authors discussed that changing the number of fully-connected layers in a mapping network of the generator can control the generation of different modes of images. In baseline Style-GAN (Karras et al. 2019), a generator consists of a non-linear mapping network that maps latent input z to an intermediate latent space W using MLP network and then passes the W information to the original generator model. Furthermore, the authors attempted 2, 4, and 6 fully-connected layers and evaluated the generated images with a recall score. They investigated that the generator with 2 fully-connected layers can generate relatively more diverse images than 6 but results in scattered defects like artifacts, etc. The generator model with 4 fully-connected layers can generate relatively good diverse images with no artifacts. The final SL-StyleGAN architecture with a generator of 4 fully-connected layers achieved a 0.263 recall score which is higher than alternate fully-connected layer combinations. The authors concluded that the final synthetic images are not fully diverse as indicated by the lower recall score which needs more work in the future to address this problem.

5.4.2.2 Discriminator

An alternative discriminator introduced in the proposed architecture of GAN is known as the modified discriminator. In GANs, when the generator collapses to a single mode and produces identical image samples then the discriminator backpropagates identical gradients for several generator updates. There is no coordination between the discriminator and its gradients because it deals with each training sample independently. So, no mechanism guides the generator to produce diverse image samples. To address this problem in MR to MR image translation of breast slices, Modanwal et al. (2021) use a small field of view 34 \(\times \) 34 instead of 70 \(\times \) 70 in standard Patch discriminator as depicted in Fig. 15 in the CycleGAN. The small field of view encourages the transformation learned by the generator to maintain the sharp and high-frequency details. This modification of the CycleGAN preserves the structural information of breast and dense tissues during the training of GAN to perform image translation tasks.

Patch discriminator of CycleGAN. The figure is redesigned from Modanwal et al. (2021)

The generated images are evaluated by dice coefficient and compared with the standard CycleGAN. The standard CycleGAN has a mean value of 0.8913 and a standard deviation of 0.0941 for GE to SE translation while the mean value of 0.9089 and a standard deviation of 0.0471 for SE to GE translation. GE Healthcare and Siemens are the two source scanners for image acquisition. Authors have achieved an improved mean value of 0.9801 and a standard deviation of 0.0061 for GE to SE translation while a mean value of 0.9813 and a standard deviation of 0.0049 for SE to GE translation on the test data.

Cervical histopathology images contain fine-grained information that is difficult to learn by GANs and can cause the mode collapse problem. To address the mode collapse in synthesizing cervical histopathology images, authors in Xue et al. (2019) utilize mini-batch discrimination in the discriminator of CGAN to generate realistic diverse samples. The Minibatch discrimination enables the coordination between gradients of discriminator and training samples using mini-batches for training image samples as depicted in Fig. 16. In this way, the generator is penalized if it collapses to a single mode and is regulated to produce diverse images (Salimans et al. 2016). However, synthetic images are not evaluated by any metric to check the diversity or similarity measures with real images. The generated synthetic images are then used to augment the dataset for classification tasks.

The workflow of minibatch discrimination. The figure is redesigned from Salimans et al. (2016)

A similar problem of generating diverse synthetic image samples occurs in CGAN when dealing with distinct CT scans of different body parts for a super-resolution task. To address this problem, a conditional information vector w based modified discriminator is proposed in Kudo et al. (2019). The discriminator is composed of a 3-dimensional fully convolutional neural network as shown in Fig. 17. The conditional vector w contains information about input image data such as leg, head, abdomen, or chest. This information is used by the discriminator to evaluate the generated slices of CT data and encourages the generator to produce diverse image samples. The generated super-resolution images are evaluated through SSIM and PSNR scores. The highest score of SSIM (0.933) and PSNR (35.73) are achieved respectively as compared to the CGAN without conditional vector w. The SSIM score shows a similarity measure and realistic nature of generated images towards ground truth images.

5.4.2.3 Generator-discriminator combined

In this section, we describe the architecture of GANs where the generator and the discriminator are updated or modified. The generation of diversified synthetic 3-dimensional (3D) Magnetic Resonance images is a challenging task. This is due to the complexity of the structure of 3D image data. To address this limitation, authors in Kwon et al. (2019) adopted an \(\alpha \)-GAN with few modifications in the activation functions, batch normalization, and loss function. The \(\alpha \)-GAN is composed of a Variational Auto-encoder (VAE) and a code discriminator network. The VAE is a generative model that explicitly learns the likelihood distributions of training data rather than the other model’s feedback as in GANs to generate synthetic image samples (Kingma and Welling 2014). A GAN combined with VAE can learn the likelihood distributions of images which results in the generation of diversified synthetic images as shown in Fig. 18. In contrast, VAE generates blurry images. \(\alpha \)-GAN utilizes the advantage of VAE in alleviating the mode collapse problem in 3D MR image generation. The authors of Kwon et al. (2019) proposed an Auto-encoding GAN and generated 3D MR images with different latent input z sizes like 100, 1000, and 2048. With a latent vector input of 1000, the proposed Auto-encoding GAN can generate diverse image samples while it fails to escape mode collapse with too small (100) or too large (2048) latent vector input sizes.

The architecture of VAEGAN. The figure is redesigned from Kwon et al. (2019)

To evaluate the diversity of synthetic images, authors Kwon et al. (2019) calculated average MMD \(\times \) \(10^{-4}\) and MS-SSIM scores. The results show that the proposed GAN can perform better with a latent input value of 1000 with an average MMD \(\times \) \(10^{-4}\) score of 0.072 and MS-SSIM of 0.829. The MS-SSIM of real data is 0.846. MS-SSIM score of synthetic 3D MR images shows a good similarity measure with the real data and can be a good candidate for generating diverse images. However, there is a gap in generating more robust and diverse images with smooth and artifact-free images.

To bridge this gap, authors in Segato et al. (2020) extend this work (Kwon et al. 2019) by applying a refiner network based on ResNet blocks (Targ et al. 2016) to generate realistic 3D MR images. The ResNet uses skip connections with deep convolutions as shown in Fig. 19 which controls the skipping of some training layers to smooth the shapes of generated images and make them more realistic. However, this work delivers a low diversity score evident from the MS-SSIM score of 0.9991 between generated images which indicates the lowest diversity of synthetic images as compared to the real images. The proposed deep convolutional refiner GAN (Segato et al. 2020) achieved a good score of MMD as (0.2240 ± 0.0008) \(\times \) \(10^{4}\) as compared to the previous score of MMD as (0.5932 ± 0.0004) \(\times \) \(10^{4}\) which shows the realistic nature of generated images.

The deep convolutional refiner architecture of DCR-AEGAN. The figure is redesigned from Segato et al. (2020)

The mode collapse can occur in a GAN when biomedical images contain complex information of salient features which are difficult to learn and model a relationship between them. A similar type of limitation is addressed for Dermoscopic skin lesion images in a progressive growing GAN (PGGAN) using a self-attention mechanism by authors in Abdelhalim et al. (2021). They discussed that most image synthesis tasks in biomedical imagery utilize PGGAN built with convolutional layers. While in convolutional layers, the convolutional filters are dependent on local neighborhood information to process the convolution operations. It is computationally inefficient for convolutional filters to capture the long-range dependencies in images by relying only on convolutional layers. So, a self-attention mechanism is adapted that enables the discriminator to preserve image features with relevant activations to a particular task. It utilizes feature attention maps that help the generator to produce synthetic images in which coordination should be observed between fine details at every location and fine details in distant portions of the images as shown in Fig. 20. Besides, the discriminator can judge the consistency of highly detailed features in distant portions of the image. In this way, the generator becomes capable of generating diverse image samples using a self-attention mechanism in PGGAN (SPGGAN).

Self-attention mechanism. The figure is redesigned from Zhang et al. (2019)

Different feature level maps are used for evaluating the performance of the self-attention mechanism in image synthesis of resolution 128 \(\times \) 128 pixels. The (N − 1)-to-(N) stage in SPGGAN and PGGAN is monitored which represents the \(2^{N-1}\)-to-\(2^{N}\) level feature maps where \(N = 7\). As a result, SPGGAN performs better with 70.1% as compared to PGGAN with 67.7% for the training set at \(N = 6\). Similarly, SPGGAN performs better with 62.2% as compared to PGGAN with 60.8% for the test set at \(N = 6\). However, the real dataset has feature maps of 78.2%. It shows that the proposed SPGGAN attains better diversity and realistic image synthesis performance than PGGAN yet is distant from real images.

Saad et al. (2023) also utilized a self-attention mechanism in the multi-scale gradient GAN (MSG-GAN) to generate diversified X-ray images. They integrated a self-attention layer into each layer of the generator and discriminator models. The self-attention utilizes attention feature maps to help the MSG-GAN to learn and focus on the diverse features of X-ray images as shown in Fig. 20. The authors demonstrated an improvement in the diversity of generated synthetic images using an improved FID score of 139.6.

5.4.3 Adversarial training

This section discusses the alterations made during the training of GANs such as making buffer storage (Lau et al. 2018) or using perceptual image hash (Neff et al. 2017) to identify and address the mode collapse problem.

5.4.3.1 Buffer storage scheme

Generation or simulation of diverse scar tissues in the myocardium of the left ventricle from a segmented healthy Late-gadolinium enhancement (LGE) imaging scan using GANs is always a challenging task. Scar tissue is a fibrosis tissue that appears when healthy tissue gets destroyed by some disease. Lau et al. (2018) proposed a variant of GAN namely ScarGAN that is composed of a convolutional U-Net-based architecture (Ronneberger et al. 2015) both in the generator as well as in the discriminator. In ScarGAN, an experience replay buffer scheme (Shrivastava et al. 2017) is used to prevent the generator from producing similar shapes of scar tissue. In this scheme, half of the generated masks are stored in a buffer for an experience replay. From this buffer, the discriminator uses half of the training batches randomly to check previously generated scar tissue samples and prevent the generator from producing similar shapes of scar tissue.

The generated images from ScarGAN (Lau et al. 2018) are evaluated by experienced physicians. These physicians are provided with 15 generated and 15 real images in a mixed dataset. They classify them with an accuracy of 53% which reflects a good score for the realism of generated images. However, the authors concluded that ScarGAN still generates less diverse shapes of scar tissues i.e. similar shapes that require to be researched in the future.

5.4.3.2 Perceptual image hashing

Generating new segmentation masks and ground-truth images separately from GANs is a time-consuming task. To generate new chest X-ray images and segmentation masks, Neff et al. (2017) proposed a variant of DCGAN that forces the generator to produce a segmentation mask together with ground truth images. During the adversarial training, the generator starts producing identical image-segmentation pairs with few artifacts that lead to a mode collapse problem. To address this problem, the authors use the perceptual image hash function to remove the identical generated image-segmentation pair. Perceptual image hash functions calculate hash values of real and generated images based on specific image features as shown in Fig. 21. These hash values are compared further to evaluate the difference between generated and real images.

A flow methodology of image hash generation. The figure is redesigned from Du et al. (2020)

The generated image-segmentation pair is evaluated in data augmentation for the segmentation task. The U-Net is trained on 30 real and 120 generated images. The lowest Hausdorff distance of 7.2885 has been observed as compared to the results when U-Net trained on only real images or only generated images. However, the authors concluded that a mild form of mode collapse occurred which resulted in less diverse images.

5.4.4 Summary

In this section, technical papers are reviewed to address the mode collapse problem in the biomedical imagery domain. The mode collapse problem can be alleviated by using different methods such as regularization, modified architectures, and adversarial training. These methods are reviewed as solutions to the underlying problem in the domain of biomedical imagery. A taxonomy is created based on these solutions as shown in Fig. 22. In Fig. 22, each sub-category is further divided into different methods like regularization has weight normalization, modified architectures are divided into the generator, discriminator, and generator-discriminator combined. Similarly, adversarial training is further divided into possible solutions like buffer schemes and perceptual image hash. The application-based taxonomy is also created as shown in Fig. 23. This taxonomy 23 helps to analyze the effect of mode collapse for the specific type of biomedical images.

From the technical literature, it is reviewed that all of the papers have utilized technical approaches that partially alleviate the problem of mode collapse in biomedical imagery. The Auto-encoding GAN (Kwon et al. 2019) provides relatively more diverse synthetic images while addressing the problem in biomedical imagery. Table 2 provides a comparative analysis of contributing papers to address the underlying training challenges in GANs for biomedical imagery.

Moreover, a detailed overview of each solution is also listed in Table 3 where each solution is based on three categories such as preprocessing, modified GAN architectures, and loss functions. Table 3 summarises how each solution addressed the mode collapse problem in GANs for the biomedical imagery domain.

6 The non-convergence problem

6.1 Definition

In GANs, it is important that the training of the generator and the discriminator should converge at a global point (Nash equilibrium). The training of GANs is performed as a minimax game to reach this Nash equilibrium. The discriminator and the generator should be trained with the best training strategies to achieve better training. As the generator’s performance improves, it becomes increasingly difficult for the discriminator to distinguish synthetic images from real images. When the generator is producing the best plausible (realistic-looking) images, the discriminator will have a classification accuracy of 50%. Consequently, the discriminator has no meaningful feedback to update the weights of the generator. This will affect the synthetic images produced by the generator. As a result, the training of GANs leads to a non-convergence problem (Arjovsky and Bottou 2017).

6.2 Identification

The non-convergence problem has a direct effect on the generation of synthetic images. The underlying problem is identified by analyzing the nature of synthetic images. The non-convergence problem leads the generator to produce plane color images such as black or white in the case of gray-scale images as indicated in Fig. 24.

Identification of the non-convergence problem in GANs for X-ray image synthesis. The plane black color synthetic images with no information show the imbalanced training of the GANs for X-ray image synthesis. It shows a failure of the generator and discriminator models during the training of GANs for generating X-ray images

6.3 Quantification

To evaluate the problem of non-convergence in GANs, evaluation metrics are proposed to judge the quality of generated images. So, several evaluation metrics are proposed such as peak signal-to-noise ratio (PSNR) (Borji 2019) and FID (Heusel et al. 2017) to quantify the quality of generated images as discussed in Sect. 4.

6.4 Solutions to the problem

6.4.1 Nash equilibrium

This section discusses the possible solutions in terms of using optimization algorithms and controlling the training iteration (k) to find a Nash equilibrium.

In vanilla GAN (Goodfellow et al. 2014), Goodfellow demonstrated that an equilibrium can be achieved with an optimal discriminator during the training of GAN. However, this is an ideal case, and in practice, GAN does not meet the condition. So, the author Goodfellow et al. (2014) proposed an algorithm to update the discriminator multiple times (k) per generator’s training update to get the discriminator close to an ideal. In vanilla GAN, the discriminator is updated only once \((k = 1)\) per generator’s training update which was suitable for that specific experiment. Similarly, WGAN (Arjovsky et al. 2017) uses \((k = 5)\) for discriminator updates per generator’s training update for attaining an equilibrium state.

6.4.1.1 Updating algorithm

It is a very critical and sensitive approach to control the training updates of the generator and discriminator models to reach a balanced state of training. Biswas et al. (2019) proposed a uGAN with separate parameters (k) for the discriminator and (r) for the generator to control the updates of the training iteration of both of these models. The authors investigated that the similar number of updates for both models yields balanced training and the generation of high-quality retinal synthetic images. It is also analyzed that k with large values can generate high-quality realistic images by keeping \(r = 1\). In contrast, noisy images are generated using larger values of r with \(k = 1\).

The synthetic images are evaluated with an SSIM metric. The mean, maximum, and mean-maximum values of SSIM are measured between synthetic and real images to check the quality and similarity between images. A higher score of SSIM shows higher similarity and high-quality measures. The mean SSIM score of 0.61, maximum SSIM score of 0.73, and mean-maximum SSIM score of 0.81 are achieved.

6.4.1.2 Learning rate

The idea of using learning rates to stabilize and balance the training of GANs is proposed by Heusel et al. (2017). The authors introduced a novel algorithm namely the Two Time-scale Update Rule (TTUR) to achieve a local Nash equilibrium using distinct learning rates of the discriminator and the generator instead of using multiple update algorithms. TTUR uses stochastic gradient learning \({\varvec{g}}(\varvec{\theta }, {\varvec{w}})\) of the discriminator’s loss and \({\varvec{h}}(\varvec{\theta }, {\varvec{w}})\) of the generator’s loss. It defines the true gradients of \({\varvec{g}}(\varvec{\theta }, {\varvec{w}})=\nabla _w {\mathcal {L}}_D\) and \({\varvec{h}}(\varvec{\theta }, {\varvec{w}})=\nabla _\theta {\mathcal {L}}_G\) with random variables \({\varvec{M}}^{(w)}\) and \({\varvec{M}}^{(\theta )}\) as shown in Eq. 21 reported from Heusel et al. (2017). So, it uses stochastic learning b(n) and a(n) for updating the discriminator and generator steps respectively as defined in Eq. 22 reported from Heusel et al. (2017). However, the choice of appropriate learning rates depends on the GAN architecture, type of experiments, and nature of the datasets.

Abdelhalim et al. (2021) investigated the use of both TTUR (Heusel et al. 2017) and discriminator updates in SPGGAN for skin lesion image synthesis. The authors updated the discriminator five times for every single update of the generator’s training. The update algorithm slows down the training process while TTUR tries to balance it to generate noise-free images.

SPGGAN-TTUR (Abdelhalim et al. 2021) shows visually appealing results of generated images as compared to SPGGAN. The results are evaluated through a paired t-test with \(95\%\) confidence (p-value < 0.05). Paired t-test gives the mean difference between two sample observations. The p-value of the t-test (PVT) is calculated to check the performance of SPGGAN-TTUR for generating synthetic train and test sets images. The PVT of \(68.1 \pm 0.8\%\) for the training set while \(60.8 \pm 1.5\) for test sets are achieved which outperformed the SPGGAN. However, SPGGAN-TTUR (Abdelhalim et al. 2021) suffers from artifacts in the generated image that need to be researched.

6.4.1.3 Hyperparameter optimization

In GANs, the choice of appropriate hyperparameters to control the discriminator and the generator is a challenging task. To address this problem, optimization techniques can be used to obtain adaptive losses for updating the weights of the generator.

Goel et al. (2021) proposed an optimized GAN to generate synthetic chest CT images of COVID-19 disease. The optimized GAN utilizes a CGAN with Whale Optimization Algorithm (WOA) (Mirjalili and Lewis 2016) to optimize its hyperparameters. A flow of the Whale optimization algorithm is shown in Fig. 25. In this algorithm, the hunting trick of humpback whales is adapted to optimize the prey’s location. This hunting trick determines the generator’s best search agents with the given discriminator. To update the position of search agents, the optimization of hyperparameters follows three rules; first, the leader whale finds the prey’s position and encircles it. Similarly, the generator’s search agents calculate the fitness function at each iteration to achieve the best position and then update their positions. Second, the distance between the prey and the location of the generator’s search agents is measured and then the generator’s search agents update their position based on these measures. Third, it is the same as the first rule but it updates the position of search agents based on the random search instead of the best search as in the first rule. The Optimized GAN (Goel et al. 2021) improves the performance of the discriminator and can generate adaptive losses to update weights of the generator to produce good quality diverse images.

The flow diagram of the Whale optimization algorithm. The figure is redesigned from Goel et al. (2021)

The performance of optimized GAN (Goel et al. 2021) is compared with the baseline CGAN. The generated images are used with training images for classification tasks. So, the F1-score and accuracy of 98.79% and 98.78% respectively are achieved with Optimized GAN while 91.60% accuracy and 90.99% F1-score are achieved with the baseline CGAN. It shows that Optimized GAN can perform better with accuracy and F1-score measures, as well as in optimizing hyperparameters for a balanced GAN.

6.4.2 Summary

In this section, technical papers of GANs are reviewed to address the non-convergence problem in the domain of biomedical imagery. Achieving a Nash equilibrium during the training of GANs is a remedy to this non-convergence problem (Goodfellow 2016). Training GANs at an equilibrium state is not an easy task. By keeping this concept in mind, the reviewed papers are classified into three different categories as shown in Fig. 26. First, updating algorithms (Biswas et al. 2019), second, learning rate (Abdelhalim et al. 2021), and third hyperparameter optimization (Goel et al. 2021). Another taxonomy is also proposed for application-based biomedical imagery as shown in Fig. 27. This is further classified into image modality types such as dermoscopic (Abdelhalim et al. 2021), CT (Goel et al. 2021), and retinal images (Biswas et al. 2019).

The updating algorithm is reviewed for vanilla GAN (Goodfellow et al. 2014), WGAN (Arjovsky et al. 2017), and then state-of-the-art uGAN (Biswas et al. 2019). The updating algorithms in vanilla GAN (Goodfellow et al. 2014) and WGAN (Arjovsky et al. 2017) are proposed for the general imagery domain while updating algorithm in uGAN (Biswas et al. 2019) is proposed for the biomedical imagery domain. All of these propose strategies to update discriminator time-steps per generator time-steps during the training of GANs. They show that their proposed solutions work better in attaining an equilibrium state while training the GANs.

Another idea of achieving equilibrium in training the GANs is proposed by Heusel et al. (2017). It also helps to achieve an equilibrium using adaptive learning rates for the discriminator and the generator. This technique is used by Abdelhalim et al. (2021) to address the non-convergence problem in the biomedical domain. The Hyperparameter optimization approach is also helpful in reaching the Nash equilibrium. For this, Goel et al. (2021) investigated the use of optimization algorithms such as the Whale optimization algorithm (WOA) (Mirjalili and Lewis 2016) for biomedical imagery.

To summarize this section, Table 2 shows a comparison of proposed techniques adapted by the contributing papers based on the underlying problem. It is observed that all of the technical papers belong to the image synthesis of CT, dermoscopic, and retinal image modalities. Among all of the contributed solutions, the TTUR (Heusel et al. 2017) scheme provides relatively good performance to address the non-convergence problem in the biomedical imaging domain. High-quality realistic images can be achieved using this approach in biomedical imagery.

Moreover, a detailed overview of existing solutions to address the non-convergence problem in GANs is also reported in Table 4 where the methodology of each solution to the non-convergence problem in GANs is summarized for the domain of biomedical imagery.

7 The instability problem

7.1 Definition

The training of the GANs can get unstable due to the vanishing gradient problem. The vanishing gradient problem occurs when the discriminator becomes an optimal classifier and produces smaller values of gradients (approaching zero) for back-propagation. These gradients are unable to update the weights of the generator due to which the generator stops producing new images and the overall training of the GANs becomes unstable (Goodfellow 2016).

7.2 Identification

The instability during the training of GANs is identified by the generation of blurry or low-quality synthetic images as indicated in Fig. 28. Moreover, the underlying problem takes a longer time to train GANs with unstopping behavior which results in generating poor-quality images. Another drawback of the instability problem is that it will lead the generator to produce synthetic images with artifacts. These artifacts include noise or additional objects that are not meant for generated.

Identification of the instability problem in GANs for X-ray image synthesis. The noisy synthetic X-ray images show unstable training of the GANs for X-ray images. The blurriness in the images is generated due to the vanishing gradient problem which is a basic reason for the unstable training of GANs

7.3 Quantification

The instability problem of training GANs can be evaluated by the same metrics that are used for mode collapse and non-convergence problems such as MS-SSIM (Odena et al. 2017), FID (Heusel et al. 2017), and PSNR (Borji 2019). The quality of generated images can be evaluated in terms of similarity measures as discussed in Sect. 4. Furthermore, classification metrics such as recall and precision are also used to quantify the quality of synthetic images.

7.4 Solutions to the problem

In synthetic image generation using GANs, the stability of GANs is an important aspect to consider. If the training of GANs becomes unstable, the network cannot generate high-resolution realistic images. To alleviate this problem, the following possible solutions are proposed for the domain of biomedical imagery.

7.4.1 Modified architecture

The architecture of GANs plays a key role to avoid the vanishing gradient problem. The selection of the generator and the discriminator have a great impact on the training performance of GANs. To synthesize PET images from multi-sequence MR images, a Refined CF-SAGAN is proposed (Wei et al. 2020). In the proposed architecture (Wei et al. 2020), the problem of vanishing gradient occurs when the long-skip connections are used in the generator to recover the lost spatial information during the down-sampling operations. Then, short skip connections are used to handle this problem. This process is known as the residual connections (He et al. 2016). The residual connection helps to mitigate the problem of vanishing gradient by allowing an alternative shortcut track for the gradient to flow through as shown in Fig. 29 the training of GANs. It also enhanced the feature exchanges across layers. The generated synthetic PET images are evaluated with the PSNR for image quality. The proposed Refined CF-SAGAN outperformed by \(9.07\%\) in PSNR (p < 0.05).

A flow of residual learning in CF-SAGAN. The figure is redesigned from He et al. (2016)