Abstract

Economic vulnerability, such as homelessness and unemployment, contributes to HIV risk among U.S. racial minorities. Yet, few economic-strengthening interventions have been adapted for HIV prevention in this population. This study assessed the feasibility of conducting a randomized clinical trial of a 20-week microenterprise intervention for economically-vulnerable African-American young adults. Engaging MicroenterprisE for Resource Generation and Health Empowerment (EMERGE) aimed to reduce sexual risk behaviors and increase employment and uptake of HIV preventive behaviors. The experimental group received text messages on job openings plus educational sessions, mentoring, a start-up grant, and business and HIV prevention text messages. The comparison group received text messages on job openings only. Primary feasibility objectives assessed recruitment, randomization, participation, and retention. Secondary objectives examined employment, sexual risk behaviors, and HIV preventive behaviors. Outcome assessments used an in-person pre- and post-intervention interview and a weekly text message survey. Several progression criteria for a definitive trial were met. Thirty-eight participants were randomized to experimental (n = 19) or comparison group (n = 19) of which 95% were retained. The comparison intervention enhanced willingness to be randomized and reduced non-participation. Mean age of participants was 21.0 years; 35% were male; 81% were unemployed. Fifty-eight percent (58%) of experimental participants completed ≥ 70% of intervention activities, and 74% completed ≥ 50% of intervention activities. Participation in intervention activities and outcome assessments was highest in the first half (~ 10 weeks) of the study. Seventy-one percent (71%) of weekly text message surveys received a response through week 14, but responsiveness declined to 37% of participants responding to ≥ 70% of weekly text message surveys at the end of the study. 
The experimental group reported higher employment (from 32% at baseline to 83% at week 26) and lower unprotected sex (79% to 58%) over time compared to reported changes in employment (37% to 47%) and unprotected sex (63% to 53%) over time in the comparison group. Conducting this feasibility trial was a critical step in the process of designing and testing a behavioral intervention. Development of a fully-powered effectiveness trial should take into account lessons learned regarding intervention duration, screening, and measurement.

Trial Registration ClinicalTrials.gov. NCT03766165. Registered 04 December 2018. https://clinicaltrials.gov/ct2/show/NCT03766165


Introduction

Economic vulnerability, such as homelessness and unemployment, contributes to HIV risk among racial minorities in the United States (U.S.) who are disproportionately infected. According to UNAIDS, the U.S. has a concentrated HIV epidemic that has greatly affected impoverished urban areas [1, 2] where HIV prevalence is alarmingly high at 2.1%, over seven times the national HIV prevalence (0.3%) [1, 3, 4]. HIV prevalence is 2.1 times higher among individuals with income at or below the U.S. poverty threshold as compared to those above [1, 2], and 2.6 times higher among unemployed individuals as compared to employed individuals [1, 2]. Homelessness in the past 1 year is also associated with 1.8 times higher HIV prevalence [1, 2].

Racial minorities, particularly African-Americans, are disproportionately affected by the HIV epidemic. Although representing 12% of the U.S. population [3], African-Americans make up 42% of all U.S. HIV infections [5]. The rate of new HIV infections is 8.3 times higher in African-Americans compared to non-Hispanic whites [5]. In Baltimore, Maryland (MD), the setting for this study, 82% of adult and adolescent HIV diagnoses were in non-Hispanic Blacks (African-American) [4]. In addition, young adults in Baltimore, MD, aged 20–29, made up the largest proportion of HIV diagnoses (29%) compared to any other age group [4] as well as an increasing proportion of the urban homeless and unemployed.

Economic vulnerability is associated with behaviors that contribute to HIV risk, such as condomless sex and sex exchange [6,7,8]. Low economic resources may also lead to a loss of hope and agency that diminishes motivations to avoid exposure to future HIV infection [6,7,8]. Yet, despite persistent racial and economic disparities in HIV infection, few economic-strengthening interventions have been adapted for HIV prevention in economically-vulnerable African-American young adults [9]. The absence of published studies conducted within communities of color has hindered efforts to reduce economic drivers of HIV risk in this population.

Microenterprise (or very small-scale businesses) has been shown to improve sexual attitudes [10,11,12], sexual risk behaviors [13,14,15,16,17,18,19,20], and HIV communication and testing [13, 15]. Prior microenterprise initiatives have combined HIV and microbusiness training, mentoring, and small grants. However, with a few exceptions, most microenterprise interventions have been conducted outside of the U.S. in low-income countries and using face-to-face classes [21]. They have also focused primarily on female sex workers and older adults (aged 25 to 49) and have required all participants to engage in an identical income-generating activity [21]. Less is known about how to effectively design choice-based microenterprise interventions that are tailored to young adults’ interests and abilities. This is particularly true for disconnected youth who are out of school and unemployed. Our prior research found that young adults experiencing homelessness had access to cell phones and had interests in expanding their current entrepreneurial activities [22, 23].

The objective of this study was to assess the feasibility of conducting a trial of a microenterprise intervention, entitled Engaging MicroenterprisE for Resource Generation and Health Empowerment (EMERGE). We designed EMERGE for economically-vulnerable African-American young men and women in a U.S. urban setting. EMERGE included face-to-face sessions that were augmented by text messaging, grants, and mentoring. While text messages have been used in HIV risk reduction programs [24,25,26,27], they have not previously been combined with a microenterprise intervention. The primary feasibility objectives were to assess participant recruitment, randomization, participation, and retention, in addition to intervention acceptability. Secondary exploratory objectives were to examine the level of employment, sexual risk behaviors, and HIV preventive practices in study groups over time. This feasibility study will inform whether and how to conduct a larger effectiveness trial for HIV risk reduction in this population [28]. Findings will help to address uncertainties that would arise when planning a larger trial, such as participant willingness to be randomized, time needed to collect data, intervention acceptance, and responsiveness to outcome assessments [29, 30]. This article presents results of feasibility and behavioral data. Qualitative acceptability data have been published elsewhere [31].

Methods

Design

This feasibility trial was a two-group parallel design with a 1:1 allocation ratio to experimental or comparison group.

Study Registration

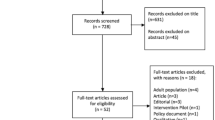

The trial was registered at ClinicalTrials.gov (NCT03766165). It was entitled the EMERGE Project, Engaging MicroenterprisE for Resource Generation and Health Empowerment (K01MH107310). This manuscript has been prepared according to the Consolidated Standards of Reporting Trials Statement for Social and Psychological Interventions (CONSORT-SPI) [32] and the extension for randomized pilot and feasibility trials [33]. A standard CONSORT diagram is included (Fig. 1). The study methods are based on the EMERGE trial protocol (Version 5; 23 January 2019), approved by the Johns Hopkins University School of Public Health Institutional Review Board (#00008833) and the Indiana University Institutional Review Board (IRB) (#2003950305).

Setting

The feasibility trial took place in Baltimore, MD in collaboration with two community-based organizations (CBOs), AIRS and YO!Baltimore, which provide transitional and emergency housing for young adults who are experiencing homelessness. Participants were recruited from and received the interventions at the CBOs. Data were also collected at the CBOs. The CBOs were chosen based on years working in Baltimore (> 2 years), support to African-American youth experiencing homelessness (> 20 youth per year), and willingness to participate in the study.

Participants

Details of the study’s participant recruitment process have been published previously in a protocol manuscript [34]. Study eligibility was determined using a screening tool during an in-person enrollment process. The eligibility criteria for participants were: African-American, aged 18–24, living in Baltimore, having experienced at least one episode of homelessness in the last 12 months (defined as reporting any episode in which a person lacked a regular or adequate nighttime residence, such as living in a hotel/motel, vehicle, shelter, or friend’s home and living primarily on their own, apart from a parent or guardian), unemployed or employed fewer than 10 h per week, not enrolled in school, owning a cell phone with text messaging functionality, and reporting at least one episode of unprotected or unsafe sex in the prior 12 months.

Potential participants were recruited on-site at the two participating CBOs. A one-page recruitment flyer was posted in the main building of each CBO. Designated CBO staff informed potential participants of the study team’s scheduled visit days. On these visit days, the PI and/or a trained research assistant introduced the study to a group of potential participants. On the same day, the PI or a trained research assistant then accompanied each potential participant to a private room to complete the screening tool, administer written informed consent, register the participant’s cell phone to the study’s online text messaging service (TextIt.in), and conduct the pre-intervention assessment.

Timeline

Data were collected for 26 weeks. Participants underwent an in-person, pre-intervention assessment at the time of enrollment and were randomized in week 4 if they successfully completed a three-week run-in period (week 1 to week 3). Both groups received the assigned interventions concurrently for 20 weeks (week 4 to week 23). An in-person post-intervention assessment was conducted in week 26. Participants additionally completed a weekly text message survey (week 1 to week 26) on Fridays for a sub-set of outcomes. Recruitment and pre-intervention assessments began in December 2018 and were completed in February 2019. The run-in period, weekly text message survey, and interventions were delivered from February to July 2019. The post-intervention assessment was conducted from July to August 2019.

Run-In Period

Following the recruitment period, participants began a three-week run-in period prior to randomization. The run-in period was used to minimize dropouts after randomization by identifying participants who were likely to take up the intervention and to complete outcome assessments [35,36,37,38,39,40]. The two run-in requirements were: (1) to respond to one or more of the first three weekly text message surveys and (2) to describe the type of microenterprise one would like to start (e.g., during the enrollment interview or by email/hand-written document). A prior third run-in requirement of attending a group session was omitted to reduce research burden and minimize the delay between enrollment and the start of the intervention. Participants who did not successfully complete the run-in requirements could choose either to withdraw from the study or to remain in the study and complete only the study’s assessments.

Interventions

Participants assigned to the experimental intervention received the following: (1) one text message each week on job openings in Baltimore, appropriate for young adults at or slightly above or below high school diploma or equivalent training. Text messages on job announcements were sent every Monday at 6PM; (2) one 2-h educational session each week on business start-up and HIV prevention on Wednesdays at 10:30AM or 3:00PM in a classroom at the CBO resource center with a PowerPoint presentation, handouts, group discussions, guest speakers, small-group activities, and completion of the session and participant checklists. The sessions were led by three female facilitators. A text message reminder to attend the educational session was sent 2 h prior to the session; (3) one mentor during the intervention period, who was aged 25 and older, lived in the Baltimore greater metropolitan area, owned a small business in Baltimore, and spoke English. Mentors included men and women who were matched according to the microbusiness interests of the participants; (4) one microbusiness start-up grant (repayment not required) in the amount of $1100.00 paid by check in a series of small payments over the study period and used for purchasing microbusiness supplies, marketing, communication, and travel. Participants were required to attend educational sessions, complete weekly text message surveys, and provide evidence of prior microbusiness purchases (e.g., receipts, goods, photos, etc.) to receive the next small payment; and (5) three text messages each week on microenterprise and HIV prevention, delivered every Tuesday, Wednesday, and Thursday at 6PM to reiterate key messages from the educational sessions. In a larger effectiveness trial, the experimental intervention was hypothesized to work to reduce sexual risk behaviors and increase employment and uptake of HIV preventive behaviors by building skills and providing motivational messaging and financial support.

The proposed experimental intervention was delivered as planned with some minor modifications. All text messages on job openings (20 texts) and microenterprise and HIV prevention (57 texts) were delivered as planned. However, no microenterprise and HIV prevention text messages were delivered during the 1st week of the intervention so as to allow participants to focus on orientation. All 20 educational sessions were delivered as planned. However, the mean time of the weekly sessions was modified to 2 h rather than 3 h based on feedback from the CBO staff and participants after the first two sessions were completed. Microbusiness grants in the amount of $1100 were available for all participants as planned. The final number and pace of payments was determined while the study was underway and included three payments: $500, $300, and $300 in sessions 7, 14, and 17, respectively. Participants were offered a mentor as planned, although the majority of mentors had limited capacity to provide a paid apprenticeship. A total of eight microbusiness individual mentors were recruited in addition to three microbusiness group mentors (e.g., guest speakers during the educational sessions) and two HIV prevention care specialists (e.g., also guest speakers). Mentor matches were made after session 4, as planned, once participants finalized a microbusiness endeavor. There was approximately a two- to three-week lag from the time of the mentor match to the time of initial contact.

Participants assigned to the comparison intervention received one text message each week on job openings, identical in content and timing (every Monday) to the job openings sent to participants assigned to the experimental intervention. The comparison intervention was implemented as planned with no modifications. Figure 2 highlights components of the experimental intervention. Additional details regarding both interventions, including rationale, structure, and processes are published in the aforementioned protocol manuscript [34].

Outcomes

To measure feasibility we examined rates of recruitment, randomization, and retention. We also assessed participation in intervention activities and outcome assessments. Specifically, the two primary outcomes of feasibility were: (1) proportion of participants in both groups who responded to 70% or more of the weekly text message surveys measured at week 26; and (2) proportion of experimental intervention participants who completed 70% or more of intervention activities measured at week 23. Core intervention activities included four measures: session attendance, receipt of one or more informational text messages, receipt of one or more mentor contacts, and spending of one or more grant payments.

The secondary feasibility outcomes were: (3) proportion of all participants who received one or more informational text messages measured weekly at weeks 4 to 23; (4) proportion of all participants who responded to the text message survey measured weekly at weeks 1 to 26; (5) proportion of experimental intervention participants who attended an educational session measured weekly at weeks 4 to 23. Participants who missed a session but completed a missed session review and make-up materials during the following session received credit for the session; (6) proportion of experimental intervention participants who received one or more mentor contacts measured weekly at weeks 4 to 23 for any discussion with a mentor or guest speaker in-person or by email, text, online, or phone call; (7) proportion of experimental intervention participants who spent one or more grant payments measured weekly at weeks 4 to 23.

We also explored the completion and level of sexual risk behaviors, HIV preventive behaviors, and employment. Specifically, the secondary behavioral outcomes were: (8) proportion of participants in each group who reported one or more unprotected sex acts in the last week measured weekly at weeks 1 to 26; (9) proportion of participants in each group who reported one or more unprotected sex acts in the last month measured at week 1 and 26; (10) proportion of participants in each group who reported one or more safer sex acts in the last week measured weekly at weeks 1 to 26; (11) proportion of participants in each group who reported one or more safer sex acts in the last month measured at week 1 and 26; (12) proportion of participants in each group who reported one or more HIV preventive care-seeking or information-seeking acts in the last week measured weekly at weeks 1 to 26; (13) proportion of participants in each group who reported one or more HIV preventive care-seeking or information-seeking acts in the last month measured at week 1 and 26; (14) proportion of participants in each group who reported one or more paid hours of work in the last week measured weekly at weeks 1 to 26; (15) proportion of participants in each group who reported one or more paid hours of work in the last month measured at week 1 and 26. The list of eligible behaviors for each behavioral outcome is included in the footnotes of Tables 4 and 5.

Outcome data were collected using responses from the online text messaging service (#1, 3, 4), weekly participant checklists completed at the end of each educational session (#2, 5, 6, 7), weekly text message surveys (#8, 10, 12, 14), and in-person pre-intervention and post-intervention assessments (#9, 11, 13, 15). The pre-intervention assessment was also used to obtain participant demographic data relating to age, gender, sexual orientation, employment status, parental status, prior night’s residence, income insecurity (e.g., having enough money to buy food, housing, and/or transportation in the last 30 days), access to a banking account, and cell phone behaviors. No changes to the feasibility trial’s assessments or measurements were made after the feasibility trial commenced.

Progression Criteria to Definitive Trial

The decision of whether and how to proceed to a full-scale trial is based on the feasibility data and overall study experience. The progression criteria considered for a definitive trial were: (i) recruitment of ≥ 80% of target sample size; (ii) response by ≥ 70% of participants in experimental and comparison groups to ≥ 70% of weekly text message surveys; (iii) completion by ≥ 70% of experimental participants of ≥ 70% of experimental intervention activities, such as text message receipt, session attendance, grant spending, and mentor contact; and (iv) acceptability of the comparison and experimental interventions. Acceptability is analyzed and reported in a second manuscript of qualitative findings [31]. Progression criteria also considered retention and the practicality of potential modifications required for an effectiveness trial.
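Criterion (ii) nests two thresholds: a per-participant response rate and a share of participants. The following Python sketch shows one way to evaluate it. The function names and the toy data are hypothetical and are not taken from the trial's analysis code. The two counts in the usage note (30 of 38 through week 7, 14 of 38 at week 26) are those reported in the Results.

```python
from typing import Dict, List


def count_meeting_threshold(responses: Dict[str, List[bool]],
                            threshold_pct: int = 70) -> int:
    """Count participants who answered at least threshold_pct of their surveys.

    `responses` maps a participant ID to one boolean per weekly survey
    (True = answered). Integer arithmetic avoids floating-point edge cases
    at exactly 70%.
    """
    meeting = 0
    for answered in responses.values():
        if not answered:
            continue  # no surveys sent: treated as not meeting the threshold
        if sum(answered) * 100 >= threshold_pct * len(answered):
            meeting += 1
    return meeting


def criterion_met(n_meeting: int, n_total: int, required_pct: int = 70) -> bool:
    """True when at least required_pct of participants met the threshold."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return n_meeting * 100 >= required_pct * n_total


# Hypothetical toy data: three participants, 26 weekly surveys each.
toy = {
    "p1": [True] * 26,
    "p2": [True] * 19 + [False] * 7,
    "p3": [True] * 10 + [False] * 16,
}
n_toy = count_meeting_threshold(toy)
```

Applied to the reported counts, `criterion_met(30, 38)` holds through week 7, whereas `criterion_met(14, 38)` does not hold at week 26.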

Sample Size

A power calculation to estimate sample size was not appropriate for a feasibility trial because the aim of the trial was not to establish effectiveness [41]. Instead we determined that a minimum sample of 30 participants (15 in each group) would generate sufficient data to assess trial feasibility and to assess the acceptability of the assigned interventions. This was determined with reference to recommendations for feasibility studies, which recommended sample sizes of between 24 and 50 [41,42,43] and as used in other published feasibility randomized clinical trials [44,45,46,47]. The minimum target sample size of 30 was inflated to 40 to allow for drop-out during the run-in period (15%) or drop-out following randomization (10%), which was estimated to be a total of 25% based on similar studies [35,36,37, 48].
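The inflation from 30 to 40 can be reproduced with a short calculation. The sketch below assumes the minimum sample was divided by the expected retained fraction (1 minus total attrition). The text does not state the exact formula used, so this is one plausible reading, and the function name is hypothetical.

```python
def inflate_for_attrition(minimum_n: int, attrition_pct: int) -> int:
    """Smallest enrollment target that still leaves minimum_n participants
    after losing attrition_pct percent (assumed formula, integer ceiling)."""
    if not 0 <= attrition_pct < 100:
        raise ValueError("attrition_pct must be in [0, 100)")
    retained_pct = 100 - attrition_pct
    # Ceiling division with integers only, so no float rounding is involved.
    return (minimum_n * 100 + retained_pct - 1) // retained_pct


RUN_IN_DROPOUT_PCT = 15              # stated in the text
POST_RANDOMIZATION_DROPOUT_PCT = 10  # stated in the text

target_n = inflate_for_attrition(
    30, RUN_IN_DROPOUT_PCT + POST_RANDOMIZATION_DROPOUT_PCT
)
```

Under this assumption, 30 / 0.75 gives the enrollment target of 40 stated above.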

Allocation

The PI and a trained research assistant enrolled participants prior to the run-in period and prior to the assignment to comparison or experimental intervention. A biostatistician who was not involved in recruitment, intervention implementation, or outcome assessment used a computer to generate the random allocation sequence and assigned all participants, at the same time, to comparison or experimental intervention. Participants were randomized in a 1:1 ratio, stratified by CBO to allow equal numbers of CBO participants in each study group.
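A stratified 1:1 allocation of this kind can be sketched in Python as follows. This is an illustration under stated assumptions, not the biostatistician's actual procedure, which is not described here. The site labels, participant IDs, seed, and function name are all hypothetical.

```python
import random
from typing import Dict, List

ARMS = ("comparison", "experimental")


def allocate_stratified(participants_by_site: Dict[str, List[str]],
                        seed: int) -> Dict[str, str]:
    """Assign participants 1:1 to the two arms separately within each site."""
    rng = random.Random(seed)  # fixed seed makes the sequence reproducible
    allocation: Dict[str, str] = {}
    for site in sorted(participants_by_site):
        ids = list(participants_by_site[site])
        half = len(ids) // 2
        arms = [ARMS[0]] * half + [ARMS[1]] * half
        if len(ids) % 2:
            # Odd stratum: the extra slot goes to a randomly chosen arm.
            arms.append(rng.choice(ARMS))
        rng.shuffle(arms)
        for participant_id, arm in zip(ids, arms):
            allocation[participant_id] = arm
    return allocation


# Hypothetical strata; the real per-site counts are not given in the text.
sites = {
    "site_a": [f"a{i:02d}" for i in range(10)],
    "site_b": [f"b{i:02d}" for i in range(8)],
}
result = allocate_stratified(sites, seed=2019)
```

Shuffling a balanced list of arm labels within each stratum guarantees equal group sizes per site whenever the stratum size is even, which matches the stated aim of equal numbers of CBO participants in each study group.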

Masking

A fully masked design was not possible because participants knew to which intervention group they had been assigned, and the study team members administering the microenterprise intervention knew participants’ assignments. However, as both interventions were economic-strengthening activities, the similarities of the interventions were intended to reduce possible biases in the expectations of benefits by participants. Both interventions were described as novel activities aiming to improve employment for young adults in Baltimore.

In addition, the PI provided a masked dataset to two research analysts so that statistical analyses could be performed without knowing group assignments.

Statistical Analysis

Descriptive statistics were reported for the primary and secondary feasibility outcomes. Frequencies and proportions were used to summarize categorical data for the specified time points by study group and total. Study enrollment, run-in eligibility, randomization, and retention are described using a standard CONSORT diagram (Fig. 1). To improve the interpretation of trial results, ancillary analyses were used to examine trends in responsiveness to the text message survey and in levels of intervention participation. We partitioned primary outcome #1 into three time periods: up to the first quarter of the study period (weeks 1 to 7), up to the second quarter (weeks 1 to 14), and up to the third quarter (weeks 1 to 21). For primary outcome #2, we also calculated the mean percent of intervention activities engaged in and the proportion of experimental participants who completed ≥ 70% and ≥ 50% of all intervention activities, which included the four core activities plus one other microbusiness activity (e.g., developing a business plan, obtaining licensing, sharing products, or preparing a business social media page) and one other intervention text-messaging activity (e.g., sharing texts with peers or business advertising and/or reporting using texts). Inclusion of additional intervention activities enabled the study team to account for participant efforts not previously reflected in the primary outcome. Participants who completed their in-person post-intervention assessment between weeks 26 to 30 were treated as non-missing. Participants who responded to the weekly text message survey by Monday (approximately 72 h after the survey was sent) were treated as non-missing.
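The response window and the cumulative time periods can be expressed compactly. The sketch below assumes a strict 72-hour cutoff (the text says "approximately 72 h") and a hypothetical Friday send time. The names and timestamps are illustrative only.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional

RESPONSE_WINDOW = timedelta(hours=72)  # assumed exact cutoff
CUMULATIVE_PERIODS = {"weeks 1 to 7": 7, "weeks 1 to 14": 14, "weeks 1 to 21": 21}


def is_non_missing(sent_at: datetime, responded_at: Optional[datetime]) -> bool:
    """A survey counts as answered if the reply arrives within the window."""
    if responded_at is None:
        return False
    return timedelta(0) <= responded_at - sent_at <= RESPONSE_WINDOW


def cumulative_response_pct(answered_by_week: Dict[int, bool],
                            through_week: int) -> float:
    """Percent of weekly surveys answered from week 1 up to through_week."""
    answered = sum(
        1 for week in range(1, through_week + 1)
        if answered_by_week.get(week, False)
    )
    return 100 * answered / through_week


# Hypothetical example: survey sent on a Friday at 18:00.
sent = datetime(2019, 2, 1, 18, 0)
monday_reply = datetime(2019, 2, 4, 17, 0)   # 71 h later
tuesday_reply = datetime(2019, 2, 5, 9, 0)   # 87 h later

# Hypothetical participant who answered only the first seven surveys.
first_seven_only = {week: week <= 7 for week in range(1, 27)}
```

Because each period starts at week 1, a participant who stops responding after week 7 still scores 100% for the first period but only 50% for weeks 1 to 14.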

Descriptive statistics were also reported for secondary behavioral outcomes, using frequencies and proportions by study group for the specified time points. Given the lower response rates (< 50%) to the weekly text message surveys during the second half of the study period (weeks 15 to 26), prespecified statistical analyses across exposure periods using a random effects generalized linear model were not possible. Therefore, as prespecified if weekly text message survey data were insufficient over time, we compared pre- and post-intervention secondary behavioral outcomes using two tests of proportions with an interaction term of group and time. The coefficient of the interaction term equaled the change over time in the experimental group minus the change over time in the comparison group. As this was a feasibility trial testing the methods to be used on a larger scale, comparative analyses were performed for the purpose of reporting the level and change difference in behavioral outcomes and not for the purpose of significance testing [28, 41]. We completed analyses for the intention-to-treat sample (defined as every participant randomized) and the per protocol sample (defined as only participants completing 70% or more of core intervention activities). Missing data in the in-person post-intervention assessment for two participants were handled by using the last measure carried forward from the weekly text message survey. Other methods that would account for uncertainty due to missingness (e.g., multiple imputation) were not used because the sample size was small.

Results

Feasibility Outcomes

Recruitment

The study recruited 100% of the target sample size. Over the 6 weeks of recruitment (December 2018 to February 2019), we recruited 44 participants from two CBOs, approximately 22 participants per CBO. We were unable to complete enrollment for one participant because he used a cell phone number previously registered by another participant. Therefore, enrollment was completed for 43 participants. We introduced the intervention to approximately 61 potential participants at the CBO center in an open group format, of whom 17 (28%) self-identified as ineligible after hearing the study’s eligibility criteria. These non-participants did not undergo screening. Self-reported ineligibility was anecdotally due to having employment > 10 h per week, being aged 25 or older, intending to move out of town in the coming weeks, or not having a cell phone. No screened participants declined participation.

Baseline Data

Pre-intervention assessments were conducted for 43 enrolled participants (100%). The mean age was 21.0 years (Table 1). Thirty-five percent (35%) of participants were male. Most participants (70%) had a high school diploma or equivalent as their highest level of education. Twenty-eight percent (28%) had only completed up to grades 8 to 11. Two percent (2%) of participants had completed up to 2 years of college. Unemployment and income insecurity were high (81% and 84%, respectively). Housing status varied with 19% of participants having spent the previous night in an emergency shelter compared to 2% with a stranger; 42% in transitional housing; and 33% at the home of a friend, relative, or intimate partner. Five percent (5%) had their own apartment. All participants had access to a cell phone as required for study enrollment. Eighty-four percent (84%) had a password-enabled cell phone. Forty percent (40%) stated that others could access their cell phone.

Randomization

Figure 1 provides a participant flow diagram. Thirty-eight enrolled participants (88%) completed the run-in requirements and were randomized 1:1 to comparison (n = 19, 50%) or experimental intervention (n = 19, 50%). Five enrolled participants (12%) were ineligible for randomization because they did not respond to one or more of the first three text message surveys as specified by the run-in requirements.

Weekly Text Message Survey Participation

Text message survey participation was relatively high in the first half of the study, but declined thereafter (Table 2). Seventy-nine percent (79%, n = 30) of participants in both groups responded to ≥ 70% of weekly text message surveys through week 7, compared to 55% (n = 21) and 45% (n = 17) through weeks 14 and 21, respectively. Through weeks 7 and 14, the proportion of all text message surveys that received a response was 81% and 71%, respectively, among all randomized participants compared to 63% and 59% through weeks 21 and 26, respectively. At the end of the 26-week period, 37% (n = 14) of participants in both groups responded to ≥ 70% of weekly text message surveys. The experimental group had higher response rates in the early weeks of the study. The comparison group had higher response rates in the middle and ending weeks of the study (Table 2).

Intervention Participation

Intervention participation was also high in the first half of the study, but declined thereafter. The majority of participants (> 50%) engaged in text-messaging, educational sessions, grant spending, and mentor contact up to weeks 8 to 14 of the intervention (Table 3). Most experimental participants (65% to 71%) attended educational sessions during the first half of the intervention (weeks 1 to 9). During the second half (weeks 10 to 20), session attendance was lower and varied from 47 to 18%. Mentor contacts among experimental participants commenced in week 6 at 58% and varied from 58 to 11% over time. Grant spending followed similar trends with 58% of participants spending grant monies up to week 13, and declining to 26% in the remaining weeks. Among comparison and experimental participants, 100% received one or more informational text messages during the intervention period, such as job announcements only or job announcements and HIV and microbusiness tips.

In summary, 58% (n = 11) of experimental participants completed ≥ 70% of the core intervention activities (Table 2). This subset defined the per protocol experimental sample. Seventy-four percent (74%, n = 14) of experimental participants completed ≥ 50% of all intervention activities. The mean percent of all activities completed was 69% and 91% in the intention-to-treat and per protocol samples, respectively. Intervention activities with the greatest participation were text messaging (100%), microbusiness activity (74%), and session attendance (74%).

Twenty-six percent (26%, n = 5) of experimental participants never attended an educational session. Reported reasons for session non-attendance included new or changing employment, other personal obligations, competing activities at the CBO, lack of transportation, or “not feeling like it”. In addition, two experimental participants (11%) were later barred from visiting the CBO due to disputes with other CBO staff and youth and were therefore unable to attend the educational sessions, although they continued to receive the intervention’s text messages and assessments. Grant spending was limited by eligibility (e.g., sending in receipts and few educational session absences). Mentor contact was limited by delays in the identification of mentors with comparable occupational interests and scheduling conflicts between mentors and mentees. Reported reasons for decreases in overall intervention participation in the second half of the study were feeling overwhelmed with other personal obligations, feeling this was not a good time to start a microbusiness, and being ineligible to receive future grant payments. At the end of the study, 82% (n = 14) of experimental participants and 74% (n = 14) of all comparison participants reported one or more positive experiences with EMERGE. Additional data on acceptability have been reported elsewhere [31].

Retention

Post-intervention assessments were completed by 93% (n = 40) of enrolled participants (Fig. 1). The remaining three participants (7%) were lost to follow-up. Retention among randomized participants was 95% (n = 36) compared to 80% (n = 4) among non-randomized participants who did not meet the run-in requirements. Retention within randomized participants was 89% (n = 17) of experimental participants and 100% (n = 19) of comparison participants. Total loss to follow-up was 19% (n = 8), accounting for 12% (n = 5) during the run-in period and 7% (n = 3) post-randomization. The reasons for post-randomization loss to follow-up were as follows. One participant in the non-randomized group did not respond to any of the study’s assessment invitations. A second participant, in the experimental group, was reported to have moved out of town, had no email address, and had a disconnected cell phone. A third participant, also in the experimental group, was no longer allowed on the premises of the CBO and did not attend two scheduled off-site assessments. No participants reported an intervention-related adverse event or a severe adverse event. No participants were withdrawn from the study.
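The retention percentages above can be reproduced from the reported counts. The short Python sketch below is illustrative only and is not part of the study's analysis. The denominators (43 enrolled, 38 randomized, 5 non-randomized, 19 per arm) are inferred from the counts and percentages reported in this section, and the helper name `pct` is hypothetical.

```python
def pct(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to a whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total)


# Denominators inferred from the reported counts (an assumption, not stated verbatim).
ENROLLED = 43                           # 40 assessed + 3 lost to follow-up
RANDOMIZED = 38                         # 19 experimental + 19 comparison
NON_RANDOMIZED = ENROLLED - RANDOMIZED  # 5 who did not meet the run-in requirements

# Participants completing the post-intervention assessment, by group.
retention = {
    "all enrolled": pct(40, ENROLLED),
    "randomized": pct(36, RANDOMIZED),
    "non-randomized": pct(4, NON_RANDOMIZED),
    "experimental": pct(17, 19),
    "comparison": pct(19, 19),
}

for group, value in retention.items():
    print(f"{group}: {value}%")
```

Rounding to whole percentages matches the precision used in the text; Python's `round` rounds exact halves to the nearest even integer, which does not affect any of the values here.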

Behavioral Outcomes

Using the intention-to-treat sample, the experimental group reported higher employment over time in pre- versus post-intervention assessments (32% to 83%) compared to reported changes in employment over time in the comparison group (37% to 47%) (Table 4). The experimental group also reported a larger decrease in unprotected sex over time (79% to 58%) compared to reported changes in unprotected sex over time in the comparison group (63% to 53%). HIV preventive behaviors were high in both groups at pre-intervention and remained high at post-intervention in the experimental group (95% to 100%) while declining in the comparison group (95% to 74%). Both groups also reported decreases over time in safer sex from 79 to 68% in the comparison group and 84% to 53% in the experimental group. However, the experimental group reported higher sexual abstinence over time (16% to 47%) compared to reported changes in sexual abstinence over time in the comparison group (21% to 32%). Levels of secondary behavioral outcomes were comparable in the per-protocol sample (Table 5).
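The pre- to post-intervention changes described above can be summarized as percentage-point changes per group and as the difference between those changes. The Python sketch below is a purely descriptive illustration using the intention-to-treat percentages reported in this paragraph. It is not the authors' analysis, it involves no statistical testing, and the function names are hypothetical.

```python
# (pre %, post %) as reported for the intention-to-treat sample.
outcomes = {
    "employment": {"experimental": (32, 83), "comparison": (37, 47)},
    "unprotected sex": {"experimental": (79, 58), "comparison": (63, 53)},
    "HIV preventive behaviors": {"experimental": (95, 100), "comparison": (95, 74)},
    "safer sex": {"experimental": (84, 53), "comparison": (79, 68)},
    "sexual abstinence": {"experimental": (16, 47), "comparison": (21, 32)},
}


def change(pre_post: tuple[int, int]) -> int:
    """Percentage-point change from pre- to post-intervention."""
    pre, post = pre_post
    return post - pre


def difference_in_changes(outcome: str) -> int:
    """Experimental change minus comparison change, in percentage points."""
    arms = outcomes[outcome]
    return change(arms["experimental"]) - change(arms["comparison"])


for name, arms in outcomes.items():
    print(
        f"{name}: experimental {change(arms['experimental']):+d}, "
        f"comparison {change(arms['comparison']):+d}, "
        f"difference {difference_in_changes(name):+d} points"
    )
```

With 19 participants per group, these differences are exploratory and should not be read as effect estimates.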

Discussion

Principal Findings

This study helped to identify a number of unknown parameters required for the design of a future definitive study. First, we found that the recruitment pace and time needed to collect data were sufficient. We were also able to deliver the full 20-week intervention of educational sessions, grants, mentors, and text messages. Second, we found that participants were willing to be randomized, and the comparison intervention was successful in reducing non-participation. This may have resulted because both interventions were described as novel activities aiming to improve employment for young adults in Baltimore. We also found that some participants were disappointed by assignment to the comparison intervention, which they perceived to have less value. However, they also valued the potential perceived benefits of receiving the comparison group’s job announcements. In fact, the comparison group had higher retention and survey participation rates at the end of the study than the experimental group. This may have occurred because while experimental participants received financial incentives for multiple activities, comparison participants only received incentives for the study’s weekly survey. It is possible that having fewer intervention activities to do over time resulted in greater focus on one activity by the comparison group.

We also found that experimental participation rates were relatively high until weeks 8 to 14 of the intervention, after which they declined. Therefore, a shorter intervention may be preferable for this population. Participants experienced changing cell phone numbers and changing employment and housing situations. Limited time management skills, competing personal obligations, and ongoing interpersonal disputes with CBO peers and cohabiting partners may also have been overwhelming in combination with intervention activities and limited participation over time. Having an equal number of sessions in a shorter time period could enable participants to take advantage of the intervention prior to the onset of further uncertainties or instability. Response rates to the weekly text message surveys were also moderate to high until about 12 to 14 weeks after the start of the interventions. This suggests that shortening the duration of weekly text message assessments in a future trial could complement a shorter intervention period. Having fewer text message questions may also improve long-term responsiveness. Other efforts to maintain intervention participation could include providing payment for travel to sessions, having online sessions, including time management skills training, and assessing readiness to start a microbusiness. Having mentors attend all sessions, rather than a select few, may also enhance participation.

The trial also provided important information concerning retention. The study achieved high retention, which may have resulted from the weekly contact with all participants by text message, marketing flyers and emails, and the provision of snacks and payment for the final study assessment. In addition, loss to follow-up among participants who met the run-in requirements was low and less than anticipated. This finding suggests that the two run-in requirements were successful in screening out individuals unlikely to be retained in the study. In contrast, the two run-in requirements had mixed results in excluding individuals who were likely to have low participation, as five participants did not attend any educational sessions. Low participation in a future trial could be addressed by assessing readiness or adding attendance at one or more sessions to the run-in requirements. Should the latter be implemented, our findings suggest that sample size calculations would need to be adjusted for higher drop-out prior to randomization, such as 25%. This may be important given that individuals with high engagement early on were more likely to have high engagement at the end of the study.
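The adjustment for pre-randomization drop-out mentioned above amounts to inflating the enrollment target so that the required number of participants still reaches randomization. The Python sketch below shows that arithmetic under stated assumptions: the function name is hypothetical, and the target of 38 randomized participants is used only because it is the number randomized in this trial, not as a recommended sample size for a definitive study.

```python
import math


def enrollment_needed(target_randomized: int, pre_randomization_dropout: float) -> int:
    """Number to enroll so that target_randomized remain after run-in drop-out.

    pre_randomization_dropout is the expected proportion (0 <= p < 1) of
    enrolled participants who will not reach randomization.
    """
    if target_randomized <= 0:
        raise ValueError("target_randomized must be positive")
    if not 0 <= pre_randomization_dropout < 1:
        raise ValueError("pre_randomization_dropout must be in [0, 1)")
    # Round up: enrolling a fraction of a participant is not possible.
    return math.ceil(target_randomized / (1 - pre_randomization_dropout))


# Illustrative only: 38 randomized participants and the 25% drop-out suggested in the text.
print(enrollment_needed(38, 0.25))
```

For comparison, the trial itself appears to have enrolled 43 participants to randomize 38, a pre-randomization drop-out of about 12%.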

Finally, while the trial was not powered to examine effectiveness, the exploratory changes in behavioral outcomes are worth noting. Both the comparison and experimental groups reported increased employment and decreased unprotected sex over time. However, a fully-powered definitive trial is needed to assess the statistical significance of the larger relative changes observed in the experimental group. The weekly text surveys with questions on sexual behaviors may have been an unintended active ingredient of the intervention (e.g., cues, reminders) and contributed to potential changes in the comparison group, along with the weekly job announcements. It is possible also that participants assigned to the comparison group exhibited an increase in performance in order to compete with the experimental group, a phenomenon that has been recorded in trials as “performance bias” [49, 50]. In contrast, the addition of educational sessions, motivational texts, grants, and mentoring may be important factors in assessing effectiveness in a future trial among experimental participants. More research is also needed regarding participants’ decisions to reduce sexual encounters versus use of safer sexual practices within existing relationships. We found that decreases in reported safer sex in the experimental group were coupled with an equal increase in reports of sexual abstinence. In addition, the more positive changes in the per protocol participants suggest that efforts to sustain active participation in a definitive trial may have important effects. There is also a need to investigate potential causal pathways by which economic-strengthening interventions affect HIV risk, such as by increasing negotiating power within sexual relationships, by increasing access to safe housing free of domestic and sexual violence, by decreasing reliance on transactional sex, or by increasing financial access to HIV prevention knowledge and services [51].

Progression to a Definitive Study

Several of the study’s pre-determined progression criteria for a definitive trial were met. The study was implemented as planned and reached its recruitment target. The study also had minimal loss to follow-up and high acceptability of both the experimental and comparison interventions. However, only 58% of experimental participants had high participation at the end of the study. This was less than the 70% target. In contrast, moderate to high participation was achieved by most participants (74%) in the first half of the study (up to week 14). The study achieved its participation target for text message surveys (70% responding to 70% of text message surveys) up to week 8, but not at the end of the study.
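The two participation criteria with explicit numeric targets can be checked mechanically against the end-of-study values. The Python sketch below is a minimal illustration, not the study's progression framework: the `Criterion` class is hypothetical, the 58% figure comes from this section, and the 37% end-of-study text survey figure comes from the abstract. Targets for the other criteria are not stated in this section and are therefore omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """A progression criterion with an observed value and a minimum target (both in %)."""
    name: str
    observed_pct: float
    target_pct: float

    def met(self) -> bool:
        return self.observed_pct >= self.target_pct


criteria = [
    Criterion("Experimental participants completing >= 70% of activities", 58, 70),
    Criterion("Participants answering >= 70% of weekly text surveys (end of study)", 37, 70),
]

for c in criteria:
    status = "met" if c.met() else "not met"
    print(f"{c.name}: {c.observed_pct}% vs target {c.target_pct}% -> {status}")
```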

Taken together, these findings support progression to a larger trial with some modifications. One modification is to shorten the duration of the intervention and text message assessment activities to approximately 10 weeks. This is considered practical and consistent with the most active period observed in the trial. Ten weeks is also consistent with participant preferences for more frequent sessions, such as two or more a week, over a shorter time period [31]. Given that the most active participants completed 91% of intervention activities, a second modification may be to improve screening to identify the most active participants. Modifications to screening could include requiring attendance at one or more orientation sessions prior to randomization, assessing intervention readiness, or requiring submission of a reference letter. Including more frequent rewards and mentor support may also be beneficial.

Limitations

The study’s limitations should be considered. All measures were self-reported and may be subject to reporting biases. Some participants reported technological difficulties in receiving the weekly text message survey due to frozen screens or stalled prompts. These issues may have contributed to lower response rates. A future trial may benefit from giving participants an alternate mode of weekly assessment such as email, phone call, or web should a problem arise with text messages. Another limitation was the relatively small sample size, which was appropriate for a feasibility study, but which did not allow for hypothesis testing [28, 41]. A larger trial with adequate statistical power is needed to examine effectiveness. Having all female session leaders may also have limited the study’s engagement of male participants. To counter this, male mentors and guest speakers were included. Finally, some measurements related to preventive and safer sex behaviors had baseline prevalence of > 80% which may have led to a ceiling effect given the number of eligible behaviors defined by the study’s protocol [34]. Future assessments may benefit from examining effectiveness using disaggregated outcomes. The study’s strengths were the multiple lessons learned for a future trial relating to recruitment, participation, and retention. Both interventions were conducted in partnership with CBOs, tailored to the needs of participants, and well received. The use of mixed methods of assessment additionally enhanced our evaluation.

Conclusions

Conducting this feasibility trial was a critical step in the process of designing and testing a behavioral intervention. The trial demonstrated feasibility of the experimental and comparison interventions with promising changes in employment and HIV-related outcomes. Design of an effectiveness trial should take into account the study’s lessons learned with regard to intervention duration, screening, and measurement as these are likely to be important to the success of a larger study. Given that economic vulnerability is associated with HIV among African-American young adults, more research is needed on the role of integrated microenterprise interventions.

Data Availability

The de-identified dataset used to analyze results for this study and the final study protocol are available in an online repository.

Abbreviations

- AIRS: AIDS Interfaith Residential Services
- EMERGE: Engaging MicroenterprisE for Resource Generation and Health Empowerment
- CBO: Community Based Organization
- HIV: Human Immunodeficiency Virus
- IRB: Institutional Review Board
- ITT: Intent-to-treat
- MD: Maryland
- PP: Per protocol
- USD: United States Dollar
- YO!B: Youth opportunities! Baltimore

References

Denning P, DiNenno E. UNAIDS and Centers for Disease Control and Prevention. Communities in crisis: is there a generalized HIV epidemic in impoverished urban areas of the United States? (2019). Accessed https://www.cdc.gov/hiv/group/poverty.html

CDC. HIV prevalence estimates—United States. MMWR. 2008;57:1073–6.

Maryland HIV Progress Report (2017). Accessed https://phpa.health.maryland.gov/OIDEOR/CHSE/SiteAssets/Pages/statistics/Maryland-Progress-Report-2016.pdf

Baltimore City Annual HIV Epidemiological Profile 2017. Center for HIV Surveillance, Epidemiology and Evaluation, Maryland Department of Health. Baltimore. (2018). Accessed https://health.baltimorecity.gov/hivstd-data-resources

Centers for Disease Control and Prevention. Estimated HIV incidence and prevalence in the United States, 2010–2015. HIV Surveill Suppl Rep 2018; 23(1).

Barnett T. HIV/AIDS prevention: hope for a new paradigm? Development. 2007;50(2):70–5.

Dinkelman T, Lam D, Leibbrandt M. Household and community income, economic shocks and risky sexual behavior of young adults: evidence from the Cape Area Panel Study 2002 and 2005. Aids. 2007;21(Suppl 7):S49–56.

Campbell C. Letting them die: why HIV/AIDS intervention programmes fail. Bloomington: Indiana University Press; 2003.

Jennings L. Do men need empowering too? A systematic review of entrepreneurial education and microenterprise development on health disparities among inner-city black male youth. J Urban Health. 2014;91(5):836–50.

Ssewamala FM, Han CK, Neilands TB, Ismayilova L, Sperber E. Effect of economic assets on sexual risk-taking intentions among orphaned adolescents in Uganda. Am J Public Health. 2010;100(3):483–8.

Ssewamala FM, Ismayilova L, McKay M, Sperber E, Bannon W Jr, Alicea S. Gender and the effects of an economic empowerment program on attitudes toward sexual risk-taking among AIDS-orphaned adolescent youth in Uganda. J Adolesc Health. 2010;46(4):372–8. https://doi.org/10.1016/j.jadohealth.2009.08.010.

Jennings L, Ssewamala F, Nabunya P. Effect of savings-led economic empowerment on HIV preventive practices among AIDS-orphaned adolescents in rural Uganda: results from the Suubi-Maka randomized experiment. AIDS Care. 2016;28(3):273–82.

Kim J, Ferrari G, Abramsky T, et al. Assessing the incremental effects of combining economic and health interventions: the IMAGE study in South Africa. Bull World Health Org. 2009;87(11):824–32.

Odek WO, Busza J, Morris CN, Cleland J, Ngugi EN, Ferguson AG. Effects of micro-enterprise services on HIV risk behaviour among female sex workers in Kenya's urban slums. AIDS Behav. 2009;13(3):449–61.

Pronyk PM, Kim JC, Abramsky T, et al. A combined microfinance and training intervention can reduce HIV risk behaviour in young female participants. Aids. 2008;22(13):1659–65.

Rosenberg MS, Seavey BK, Jules R, Kershaw TS. The role of a microfinance program on HIV risk behavior among Haitian women. AIDS Behav. 2011;15(5):911–8.

Sherman SG, Srikrishnan AK, Rivett KA, Liu SH, Solomon S, Celentano DD. Acceptability of a microenterprise intervention among female sex workers in Chennai, India. AIDS Behav. 2010;14(3):649–57.

Sherman SG, German D, Cheng Y, Marks M, Bailey-Kloche M. The evaluation of the JEWEL project: an innovative economic enhancement and HIV prevention intervention study targeting drug using women involved in prostitution. AIDS Care. 2006;18(1):1–11.

Raj A, Dasgupta A, Goldson I, Lafontant D, Freeman E, Silverman JG. Pilot evaluation of the Making Employment Needs [MEN] count intervention: addressing behavioral and structural HIV risks in heterosexual black men. AIDS Care. 2014;26(2):152–9.

Dunbar MS, Kang Dufour MS, Lambdin B, Mudekunye-Mahaka I, Nhamo D, Padian NS. The SHAZ! project: results from a pilot randomized trial of a structural intervention to prevent HIV among adolescent women in Zimbabwe. PLoS ONE. 2014;9(11):e113621.

Cui RR, Lee R, Thirumurthy H, Muessig KE, Tucker JD. Microenterprise development interventions for sexual risk reduction: a systematic review. AIDS Behav. 2013;17(9):2864–77.

Jennings L, Shore D, Strohminger N, Burgundi A. Entrepreneurial development for US minority homeless youth: a qualitative inquiry on value, barriers, and impact on health. Child Youth Serv Rev. 2015;49:39–47.

Jennings L, Lee N, Shore D, Strohminger N, Burgundi A, Conserve DF, Cheskin LJ. US minority homeless youth’s access to and use of mobile phones: implications for mHealth intervention design. J Health Commun. 2016;21(7):725–33.

Reback CJ, Fletcher JB, Shoptaw S, Mansergh G. Exposure to theory-driven text messages is associated with HIV risk reduction among methamphetamine-using men who have sex with men. AIDS Behav. 2015;19(Suppl 2):130–41.

Pérez-Sánchez IN, Candela Iglesias M, Rodriguez-Estrada E, Reyes-Terán G, Caballero-Suárez NP. Design, validation and testing of short text messages for an HIV mobile-health intervention to improve antiretroviral treatment adherence in Mexico. AIDS Care. 2018;30(sup1):37–433.

Lester RT, Ritvo P, Mills EJ, Kariri A, Karanja S, Chung MH, Jack W, Habyarimana J, Sadatsafavi M, Najafzadeh M, Marra CA, Estambale B, Ngugi E, Ball TB, Thabane L, Gelmon LJ, Kimani J, Ackers M, Plummer FA. Effects of a mobile phone short message service on antiretroviral treatment adherence in Kenya (WelTel Kenya1): a randomised trial. Lancet. 2010;376(9755):1838–45.

Garofalo R, Kuhns LM, Hotton A, Johnson A, Muldoon A, Rice D. A Randomized controlled trial of personalized text message reminders to promote medication adherence among hiv-positive adolescents and young adults. AIDS Behav. 2016;20(5):1049–59.

Leon AC, Davis LL, Kraemer HC. The role and interpretation of pilot studies in clinical research. J Psychiatr Res. 2011;45(5):626–9. https://doi.org/10.1016/j.jpsychires.2010.10.008.

Eldridge SM, Lancaster GA, Campbell MJ, Thabane L, Hopewell S, Coleman CL, Bond CM. Defining feasibility and pilot studies in preparation for randomised controlled trials: development of a conceptual framework. PLoS ONE. 2016;11(3):e0150205.

Bowen DJ, Kreuter M, Spring B, Cofta-Woerpel L, Linnan L, Weiner D, Bakken S, Kaplan CP, Squiers L, Fabrizio C, Fernandez M. How we design feasibility studies. Am J Prev Med. 2009;36(5):452–7.

Jennings Mayo-Wilson L, Coleman J, Timbo F, Brown ERT, Butler AI, Conserve DF, Glass NE. Acceptability of a feasibility randomized clinical trial of a microenterprise intervention to reduce sexual risk behaviors and increase employment and HIV preventive practices (EMERGE) in young adults: a mixed methods assessment. Manuscript Under Review.

Grant S, Mayo-Wilson E, Montgomery P, Macdonald G, Michie S, Hopewell S, Moher D, On behalf of the CONSORT-SPI group. CONSORT-SPI 2018 explanation and elaboration: guidance for reporting social and psychological intervention trials. Trials. 2018;19(1):406.

Eldridge SM, Chan CL, Campbell MJ, Bond CM, Hopewell S, Thabane L, Lancaster GA, PAFS consensus group. CONSORT 2010 statement: extension to randomised pilot and feasibility trials. BMJ. 2016;355:5239.

Jennings Mayo-Wilson L, Glass NE, Ssewamala FM, Linnemayr S, Coleman J, Timbo F, Johnson MW, Davoust M, Labrique A, Yenokyan G, Dodge B, Latkin C. Microenterprise intervention to reduce sexual risk behaviors and increase employment and HIV preventive practices in economically-vulnerable African-American young adults (EMERGE): protocol for a feasibility randomized clinical trial. Trials. 2019;20(1):439.

Desai AS, Solomon S, Claggett B, McMurray JJ, Rouleau J, Swedberg K, Zile M, Lefkowitz M, Shi V, Packer M. Factors associated with noncompletion during the run-in period before randomization and influence on the estimated benefit of LCZ696 in the PARADIGM-HF Trial. Circ Heart Fail. 2016;9(6):e002735.

Bowry R, Parker S, Rajan SS, Yamal JM, Wu TC, Richardson L, Noser E, Persse D, Jackson K, Grotta JC. Benefits of stroke treatment using a mobile stroke unit compared with standard management: the BEST-MSU study run-in phase. Stroke. 2015;46(12):3370–4.

Suissa S. Run-in bias in randomised trials: the case of COPD medications. Eur Respir J. 2017;49(6):1700361.

Fukuoka Y, Gay C, Haskell W, Arai S, Vittinghoff E. Identifying factors associated with dropout during pre-randomization run-in period from an mHealth physical activity education study: the mPED Trial. JMIR Mhealth Uhealth. 2015;3(2):e34.

Schechtman KB. Run-in periods in randomized clinical trials. J Card Fail. 2017;23(9):700–1.

Rees JR, Mott LA, Barry EL, Baron JA, Figueiredo JC, Robertson DJ, Bresalier RS, Peacock JL. Randomized controlled trials: who fails run-in? Trials. 2016;29(17):374.

Arain M, Campbell MJ, Cooper CL, Lancaster GA. What is a pilot or feasibility study? A review of current practice and editorial policy. BMC Med Res Methodol. 2010;10:67.

Julious SA. Sample size of 12 per group rule of thumb for a pilot study. Pharm Stat. 2005;4:287–91.

Lancaster GA, Dodd S, Williamson PR. Design and analysis of pilot studies: recommendations for good practice. J Eval Clin Pract. 2004;10:307–12.

Pile V, Smith P, Leamy M, Blackwell SE, Meiser-Stedman R, Stringer D, Ryan EG, Dunn BD, Holmes EA, Lau JYF. A brief early intervention for adolescent depression that targets emotional mental images and memories: protocol for a feasibility randomised controlled trial (IMAGINE trial). Pilot Feasibility Stud. 2018;4(4):97.

O'Gara B, Marcantonio ER, Pascual-Leone A, Shaefi S, Mueller A, Banner-Goodspeed V, Talmor D, Subramaniam B. Prevention of Early Postoperative Decline (PEaPoD): protocol for a randomized, controlled feasibility trial. Trials. 2018;19(1):676.

Barrett E, Gillespie P, Newell J, Casey D. Feasibility of a physical activity programme embedded into the daily lives of older adults living in nursing homes: protocol for a randomised controlled pilot feasibility study. Trials. 2018;19(1):461.

Steffens D, Young J, Beckenkamp PR, Ratcliffe J, Rubie F, Ansari N, Pillinger N, Solomon M. Feasibility and acceptability of PrE-operative Physical Activity to improve patient outcomes After major cancer surgery: study protocol for a pilot randomised controlled trial (PEPA Trial). Trials. 2018;19(1):112.

Fukuoka Y, Gay C, Haskell W, Arai S, Vittinghoff E. Identifying factors associated with dropout during prerandomization run-in period from an mHealth physical activity education study: the mPED Trial. JMIR Mhealth Uhealth. 2015;3(2):e34.

Norris M, Poltawski L, Calitri R, Shepherd AI, Dean SG, ReTrain Team. Hope and despair: a qualitative exploration of the experiences and impact of trial processes in a rehabilitation trial. Trials. 2019;20(1):525. https://doi.org/10.1186/s13063-019-3633-8.

McCambridge J, Sorhaindo A, Quirk A, Nanchahal K. Patient preferences and performance bias in a weight loss trial with a usual care arm. Patient Educ Couns. 2014;95:243–7.

Swann M. Economic strengthening for HIV prevention and risk reduction: a review of the evidence. AIDS Care. 2018;30(sup3):37–84. https://doi.org/10.1080/09540121.2018.1479029.

Acknowledgements

The authors wish to thank the EMERGE study participants and the CBO managers at YO!Baltimore (Elizabeth Torres Brown) and AIRS (Anthony Butler, Christopher Maith, Michelle Smalls, and Nadiyah Williams) who made this work possible. The authors also thank the Johns Hopkins University research assistants (Melissa Davoust and Jordan Besch) who supported data collection and entry. A special thanks to all of the mentors and guest speakers who participated in the EMERGE study (Jordan Matthews, Eric Randall, Allison Brown, Taj Thomas, Kristian Henderson, Shavon Edmonson, Shawn Rauson, Evonna McDonald, Holly Gray, Tia Hamilton, Jenn Williams, Tonya Williamson, and Valeria Pasquale).

Funding

This research was funded by the National Institute of Mental Health (NIMH) (Grant: K01MH107310, PI: Larissa Jennings Mayo-Wilson) and supported by services received from the Johns Hopkins Institute for Clinical and Translational Research (ICTR) (Grant: UL1TR003098). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIMH. The funder had no role in the design of this study and did not have any role during its execution, analyses, interpretation, or submission of results.

Author information

Contributions

LJMW was the principal investigator of the study. LJMW, JC, and FT implemented the trial and managed the data. LJMW and GY developed the analysis plan. BK and GTY analyzed the data. LJMW prepared the first draft of the manuscript with input from JC and FT. All authors (LJMW, JC, FT, FMS, SL, GTY, BK, MWJ, GY, BD, NEG) contributed to editing the manuscript. All authors have read and approved the final version.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Ethical Approval

This study received ethics approval from the Johns Hopkins Bloomberg School of Public Health Institutional Review Board (IRB#00008833) and the Indiana University Institutional Review Board (IRB#2003950305).

Informed Consent

Written consent to participate was obtained from all participants prior to the start of data collection.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jennings Mayo-Wilson, L., Coleman, J., Timbo, F. et al. Microenterprise Intervention to Reduce Sexual Risk Behaviors and Increase Employment and HIV Preventive Practices Among Economically-Vulnerable African-American Young Adults (EMERGE): A Feasibility Randomized Clinical Trial. AIDS Behav 24, 3545–3561 (2020). https://doi.org/10.1007/s10461-020-02931-0

DOI: https://doi.org/10.1007/s10461-020-02931-0