Abstract

Even though past research suggests that visual learning may benefit from conceptual knowledge, current interventions for medical image evaluation often focus on procedural knowledge, mainly by teaching classification algorithms. We compared the efficacy of pure procedural knowledge (three-point checklist for evaluating skin lesions) versus combined procedural plus conceptual knowledge (histological explanations for each of the three points). All students then trained their classification skills with a visual learning resource that included images of two types of pigmented skin lesions: benign nevi and malignant melanomas. Both treatments produced significant and long-lasting effects on diagnostic accuracy in transfer tasks. However, only students in the combined procedural plus conceptual knowledge condition significantly improved their diagnostic performance in classifying lesions they had seen before in the pre- and post-tests. Findings suggest that the provision of additional conceptual knowledge supported error correction mechanisms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Learning to interpret visual information is crucial in many medical fields, for example, dermatology, radiology, and histology. Medical students are often instructed to use algorithms and subsequently provided opportunities to train their visual skills using images (e.g. in online learning tools). When taught about algorithms, students traditionally gain procedural knowledge (e.g. Jensen & Elewski, 2015; Tsao et al., 2015; Zalaudek et al., 2006). However, findings from learning sciences have demonstrated that conceptual knowledge is often beneficial for transfer (Sawyer, 2008). Only a few studies have addressed how procedural and conceptual knowledge influence visual skills development in medicine (Baghdady et al., 2009, 2013). The present study fills this research gap by comparing the effects of combined procedural and conceptual versus pure procedural knowledge on visual learning in diagnosing melanoma, also known as pigmented skin cancer.

Teaching medical image interpretation

Medical educators who aim to teach their students how to interpret visual information often design initial learning treatments focusing on algorithms. These learning materials consist either of procedural information, such as the different steps of the algorithm, or of conceptual information, such as explanations of why each step is part of the algorithm. Procedural and conceptual information is generally assumed to lead to procedural and conceptual knowledge, respectively, although acquiring one type of knowledge is not limited to the corresponding learning opportunity (Rittle-Johnson et al., 2015; Schneider & Stern, 2010; Ziegler et al., 2018). Procedural knowledge means knowledge of procedures, such as a series of steps or actions, done to accomplish a goal (Rittle-Johnson & Star, 2009; Rittle-Johnson et al., 2015), whereas conceptual knowledge means knowledge of concepts, which are abstract and general principles (Rittle-Johnson et al., 2015).

After learning about algorithms, students need to transfer their (procedural and conceptual) knowledge to visual learning resources consisting of images and the corresponding diagnoses (for example, Aldridge et al., 2011; Drake et al., 2013; Girardi et al., 2006; Kellman, 2013; Kellman & Krasne, 2018; Lacy et al., 2018; Xu et al., 2016). The so-acquired knowledge and skills are finally supposed to be applied to target transfer problems, which means assessing medical images or patients in clinical practice.

Current educational materials for medical image interpretation algorithms often focus on procedural knowledge acquisition. However, research in the learning sciences has shown that conceptual understanding is crucial for long-term retention and transfer in domains such as mathematics and science (Kapur, 2014; Sinha & Kapur, 2020), and the research we discuss below indicates that this might also be the case in visual domains.

Conceptual knowledge for visual learning

Numerous studies involving human participants (for example, Carvalho & Goldstone, 2014, 2015; Kellman & Krasne, 2018) and animals (Altschul et al., 2017; Levenson et al., 2015) demonstrated that conceptual knowledge is not necessary to learn a visual discrimination task. However, research suggests that it could be beneficial. In their famous chess experiments, Chase and Simon (1973)—based on earlier findings of de Groot (1965)—demonstrated that expert players showed superior performance compared to novices when they were asked to reconstruct positions of pieces, but only for meaningful configurations. More recently, it has been shown that associating knowledge with rare objects with largely unknown functions increased participants’ ability to detect them (Abdel Rahman & Sommer, 2008; Weller et al., 2019). These findings indicate that perceiving is more than sensing; it also includes attributing meaning to what is seen. Hence, it might be beneficial if educational materials for visual learning targeted conceptual knowledge in addition to procedural knowledge. The following studies have investigated this assumption.

Previous research in medicine

Baghdady et al. (2009) compared three learning groups in oral radiology, who all received sets of images complemented by different audio recordings. The study material of the feature list group contained only the radiographic features of four potentially confusable diseases. The structured algorithm group additionally received an algorithm to analyse the images, and the basic science group additionally received information on the pathophysiological basis of abnormalities. Hence, the feature list and the structured algorithm group received only procedural information, whereas the basic science group additionally received conceptual information. The diagnostic tests revealed a superiority of the conceptual information group versus both procedural information groups immediately and one week after the learning phase. As an explanation for this effect, the authors suggest conceptual coherence theory (Woods et al., 2005), which proposes that causal explanations allow the participants to provide diagnoses that make sense rather than simply focusing on features or algorithms.

Furthermore, Baghdady et al. (2013) investigated students' diagnostic accuracy when taught biomedical knowledge integrated with or segregated from clinical features. Integrated biomedical knowledge, presented as a causal mechanism for radiological features, produced higher diagnostic accuracy than segregated knowledge. Although these findings indicate that conceptual knowledge—especially when taught integrated with procedural knowledge—improves diagnostic accuracy, a direct comparison is still missing. We aimed to fill this research gap by comparing the effects of combined procedural and conceptual versus pure procedural knowledge acquisition on visual learning in melanoma detection.

Application case: learning to detect melanoma

We investigated visual learning using the application case of diagnosing pigmented skin cancer because it is an essential and challenging visual task, for which an evidence-based algorithm and conceptual knowledge exist, as shown below.

First, melanoma detection is vital for health professionals: Melanoma is currently the fifth most common cancer diagnosis in the US (Saginala et al., 2021) and represents the most lethal form of skin cancer, accounting for more than 80% of skin cancer deaths. While the five-year relative survival rate is 99.5% when the melanoma is diagnosed while the cancer is still at a localised stage, it declines to 70.6% when cancer spreads to regional other parts of the body and to 31.9% when it spreads to distant other parts of the body (National Cancer Institute, 2022). Hence, early detection of melanoma is crucial. Second, diagnosing pigmented skin cancer is known to be challenging, as highly prevalent harmless melanocytic lesions, such as nevi, share many common features with malignant melanomas (Xu et al., 2016). Third, the three-point checklist for evaluating pigmented skin lesions by dermoscopy provides an algorithm empirically shown to enhance diagnostic accuracy (Soyer et al., 2004; Zalaudek et al., 2006). Fourth, histopathology offers a way to include conceptual explanations for each point of the checklist, as research has shown that knowing about the correlation of histopathology with dermoscopic findings increases the understanding of dermoscopy (Ferrara et al., 2002; Soyer et al., 2000). This might allow physicians to interpret dermoscopic patterns and clues, even when a specific diagnosis cannot be reached (Kittler et al., 2016).

Research questions and hypotheses

We investigated the following four research questions (RQs): What are the effects of combined procedural and conceptual versus pure procedural knowledge on….

-

RQ 1: …the performance outcomes?

Hypothesis 1

Compared to pure procedural knowledge (P), we expected combined procedural and conceptual knowledge (P + C) to lead to higher performance, as assessed through diagnostic accuracy in retention and transfer tasks, from a short-term from and a long-term perspective, as well as in three difficulties (easy, medium and difficult tasks).

-

RQ 2: …the performance development?

Hypothesis 2

We generally expected that both groups would significantly improve their diagnostic accuracy during the experiment. Furthermore, we supposed that conceptual knowledge might support transfer in two ways: (1) It might facilitate the transfer of students’ prior knowledge about dermatology to the newly acquired knowledge about the three-point-checklist, and (2) it might facilitate the transfer of the knowledge about the three-point checklist to the visual learning resource. Consequently, we also expected that the final transfer from the visual learning resource to the target visual test tasks would be improved. We were interested in developing diagnostic accuracy in the same and new images.

-

RQ 3: …the perceived helpfulness of the checklist?

Hypothesis 3

Due to limited research in this field and the explorative nature of this research question, we did not have a directional hypothesis. On the one hand, students who acquired conceptual knowledge might find the checklist more helpful because they understand why each point is included. On the other hand, they may also perceive it as less useful because their additional knowledge makes them more aware of the checklist’s limits.

-

RQ 4: …the metacognitive calibration?

Hypothesis 4

We hypothesised the P + C students to be more self-critical (i.e., less overconfident or more underconfident than the P students) because they know about the reasons behind the three-point checklist, which presumably makes them more aware of the fact that the criteria for evaluating the three points are not clear-cut.

Materials and methods

Participants

We invited all fourth-semester Bachelor students of human medicine at ETH Zurich, Switzerland, to participate in the study during a dermatology block course. The study comprised two iterations; the first in the spring semester of 2021 and the second in the spring semester of 2022. In 2021, the students participated online from home due to restrictions because of the COVID-19 pandemic. In 2022, the students were at the campus, simultaneously participating in a large lecture hall. We invited 203 students, of which 125 completed the learning intervention and the immediate post-test. Subsequently, 81 (64.8%) students also completed the delayed post-test. The study was performed in accordance with the Declaration of Helsinki (WMA, 2022) and the Swiss Federal act on research involving human beings and the ordinance on human research with the exception of clinical trials. Furthermore, the study was approved by ETH Zurich’s ethics committee (EK 2020-N-160) and all participants provided informed consent. The students did not receive compensation but could voluntarily participate in a competition for three digital gift cards from a chocolate shop. We awarded these to the three students with the best post-test performances.

Independent samples t-tests did not show any significant differences between the 2021 and the 2022 cohort regarding the incoming characteristics, the time spent for learning and diagnostic accuracy in the four tests (p > 0.05, see “Appendix A”); hence, we pooled the participants from both iterations for further analyses. Furthermore, we removed ten outliers based on their learning time ("Appendix B"), resulting in a final sample of 115 students.

Study design and procedures

We investigated the effects on visual learning of combined procedural and conceptual knowledge (P + C) versus pure procedural knowledge (P; Fig. 1).

Materials

We conducted the study using online surveys designed with the Qualtrics XM platform, which allowed us to capture the participants’ decisions and their response times.

Images

We used images from the ISIC archive to design skin lesion classification tasks for the learning activities and the tests (International Skin Imaging Collaboration: Melanoma Project, n.d.). Each image corresponded to one classification task. Details are provided in “Appendix C”. All images fulfilled specific inclusion criteria that we already used in previous research, which guaranteed that images of nevi (benign = harmless) and melanomas (malignant = suspicious) shared common characteristics and that their distinction was challenging to learn (Beeler et al., 2023). Furthermore, the results from an earlier study allowed us to distinguish between images that are easy, medium and difficult to classify for novices (Beeler et al., Under revision).

Learning activities

The study included two learning activities: Initial knowledge acquisition, in which the learning materials differed between the two study groups, and subsequent visual learning, which was the same for both groups.

Knowledge acquisition

The knowledge acquisition phase started with a short introduction to the general principles of dermoscopy (Kittler et al., 2002, 2016; Marghoob et al., 2012; Wang et al., 2012), followed by the three-point checklist for evaluating pigmented skin lesions (Argenziano, 2012). This checklist is relatively easy to learn and has a high sensitivity for melanoma (Soyer et al., 2004; Zalaudek et al., 2006). All students received a definition and some illustrative examples for each point of the checklist (procedural knowledge). The P + C group then received a histological explanation for each point of the checklist (Braun et al., 2012), which provided reasons why each point of the checklist is a marker for suspicious lesions (conceptual knowledge). The P group had to summarise what they had just learned to equalise the learning time. We developed the learning materialsFootnote 1 with experienced dermatology lecturers to ensure they were appropriate for the students' prior knowledge.

Visual learning

The common visual learning resource consisted of randomly displayed active and passive skin lesion classification tasks. In the active tasks, the participants had to diagnose a lesion and received immediate corrective feedback. In the passive tasks, the correct diagnosis was directly displayed upon the display of each lesion. Please refer to previously published work for details (Beeler et al., 2023).

Diagnostic tests (RQ 1 and RQ 2)

Each participant completed a pre-test, an intermediate test, an immediate post-test and a delayed post-test (Fig. 1), which all consisted of an equal number of randomly displayed images of nevi and melanomas. The participants had to indicate whether they thought the displayed lesion was harmless or suspicious without receiving feedback.

To measure the performance outcomes after the learning intervention (RQ 1), both post-tests included images that the participants had already seen during learning (including the correct diagnosis) to assess retention and new images that the participants had never seen before to assess transfer. Furthermore, to analyse the performance development throughout the study (RQ 2), the pre-test and the intermediate test also included track tasks that consisted of the same images in each of the four tests but did not appear in any learning activity (which means that the students never saw the correct diagnoses for these lesions). Finally, each test also contained new tasks. These corresponded to the transfer tasks in the post-tests, but because transfer can only be assessed after learning, we call these tasks new tasks when analysing the performance development during the intervention.

Table 1 provides an overview of the different image types.

Perceived helpfulness (RQ 3)

We used the following open-ended question to assess the perceived helpfulness: “In the field below, please describe how your knowledge about the three-point checklist was helpful (or not helpful) to learn classifying lesions”. The students had to provide an answer after the active and the passive skin lesion classification tasks in the visual learning resource.

Metacognitive calibration (RQ 4)

To assess metacognitive calibration (RQ 4), we asked the participants to indicate not only the lesion diagnosis but also how confident they are about their decision on a four-point Likert scale (not confident at all, slightly confident, moderately confident, or very confident) in selected images with medium difficulty in each of the four tests (“Appendix C”).

Analyses

Performance outcomes (RQ 1)

To analyse the performance differences between the two groups after the learning intervention, we assessed the participant's accuracy in the immediate and the delayed post-test for easy, medium and difficult retention and transfer tasks, respectively. Furthermore, we also calculated their overall accuracy in the pre-test. To assess the short-term performance differences, we subsequently performed ANCOVAs with the dependent variable accuracy in the immediate post-test, the fixed factor group and the covariates accuracy in the pre-test and duration of learning. To assess the long-term performance differences, we conducted ANCOVAs with the dependent variable accuracy in the delayed post-test, the fixed factor group and the covariates accuracy in the pre-test and duration of learning.

Performance development (RQ 2)

To analyse how the diagnostic accuracy of the two study groups developed throughout the experiment, we first calculated the participant’s accuracy in each of the four tests for easy, medium and difficult track tasks and transfer tasks, respectively. Subsequently, we conducted repeated measures ANOVAs, including the within-subjects variables accuracy in the pre-test, intermediate, immediate, and delayed post-test and the between-subject factor group.

Perceived helpfulness (RQ 3)

We used an inductive, data-driven approach to analyse the explorative qualitative data on how helpful the participants found the checklist. First, we screened all answers and developed the final version of the coding scheme in an iterative process (“Appendix D”). Second, two independent raters coded forty-nine randomly chosen answers, and we calculated their interrater reliability using Krippendorff's Alpha (Hayes & Krippendorff, 2007; Krippendorff, 1970). We deemed the observed alpha-values between 0.624 and 0.761 sufficient; hence, in a third step, only one of the two raters subsequently coded the remaining answers. Fourth, we compared the proportions of the quantified answers between the two groups using Pearson Chi-Square-tests.

Metacognitive calibration (RQ 4)

As proposed by Sinha and Kapur (2021), we calculated metacognitive calibration by subtracting the observed average performance from the expected average performance based on the reported confidence level. Due to the binary answer options, the expected performance per task lies at 0.5 for the lowest confidence value and at 1.0 for the highest confidence value. Because we assumed a linear relationship between the level of confidence and the expected average performance, we calculated the expected average performance using the formula (confidence/2 + 0.5). For the so-computed metacognitive calibration, positive values (> 0 to 0.5) indicate overconfidence and negative values (< 0 to − 0.5) indicate underconfidence. Finally, we applied one-sample t-tests to check if the metacognitive calibration differed significantly from zero.

Results

Incoming characteristics, performance in pre-test, duration of learning and gender

We found no significant differences between the two study groups regarding the incoming characteristics, performance in the pre-test, time spent on learning and gender distribution (see “Appendix E” for details).

Performance outcomes (RQ 1)

Short-term performance outcomes

Considering the short-term outcomes, the ANCOVA including the covariates accuracy in the pre-test and duration of learningFootnote 2 revealed a significant effect of the study group on the overall retention performance, F(1, 111) = 4.172, p = 0.043, ηp2 = 0.036. Concretely, the P + C group outperformed the P group in the retention tasks in the immediate post-test (covariate-adjusted M = 0.748, SE = 0.019 vs covariate-adjusted M = 0.694, SE = 0.019; Fig. 2Footnote 3). However, running ANCOVAS for easy, medium and difficult retention tasks separately did not significantly affect the study group's retention performance (Fig. 2 and “Appendix F”). For the transfer performance, neither the ANCOVA for all tasks nor the ANCOVAs for the three task difficulties separately revealed significant effects of the study group (Fig. 3 and “Appendix G”).

Long-term performance outcomes

To analyse the long-term performance outcomes, we only considered data from participants who completed the delayed post-testFootnote 4 (N after removing outliers = 75). The ANCOVAs revealed no significant effects of the study group on the long-term performance outcomes, neither in the retention nor in the transfer tasks (Figs. 4, and 5; “Appendices I and J”).

Performance development (RQ 2)

Performance development in track tasks

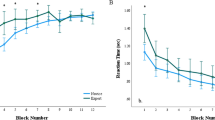

Figure 6 displays the development of the participants' accuracy in the track tasks throughout the study. Considering track tasks of all difficulty levels, the repeated measures ANOVA including pre-test, intermediate test, and immediate and delayed post-test showed a significant effect of the test on participants’ performance, F(3, 219) = 5.243, p = 0.002, ηp2 = 0.067. Similarly, the separate RM ANOVAs for easy and for difficult track tasks also revealed significant effects (F(3, 219) = 4.463, p = 0.005, ηp2 = 0.058 and F(3, 219) = 3.941, p = 0.009, ηp2 = 0.051, respectively). However, we found no significant effect of the test on performance in medium track tasks (F(2.7, 195.4) = 1.355, p = 0.259, ηp2 = 0.018). Furthermore, we found neither significant effects for the factor group nor significant interaction effects between the factors test and group when considering track tasks of all difficulty levels and also when analysing the three difficulty levels separately (“Appendix K”). Pairwise comparisons of the performances in track tasks in each test did not reveal significant differencesFootnote 5 between the two groups. However, we found that the P + C group (but not the P group) significantly improved their performance in all track tasks from the pre-test to the immediate post-test (p = 0.028) and from the pre-test to the delayed post-test (p = 0.032).

Performance development in new tasks

A plot of the performance development in new tasks is provided in Fig. 7. Considering new tasks, the RM ANOVAs revealed significant effects of the test on performance in tasks of all difficulty levels (F(2.6, 189.4) = 42.935, p < 0.001, ηp2 = 0.370) as well as in easy (F(2.4, 174.4) = 21.074, p < 0.001, ηp2 = 0.224), medium (F(2.5, 182.7) = 26.371, p < 0.001, ηp2 = 0.265) and difficult (F(3, 219) = 11.599, p < 0.001, ηp2 = 0.137) tasks separately. Furthermore, we found a significant effect of group, but only for new tasks with medium difficulty (F(1, 73) = 5.222, p = 0.025, ηp2 = 0.067) and not for new tasks of the other difficulty levels. There were no significant interaction effects for the test*group in all difficulty levels. Group comparisons in each test showed that the P + C group significantly outperformed the P group in medium tasks in the pre-test (M = 0.486, SD = 0.323 vs M = 0.329, SD = 0.314, p = 0.035). The group comparisons for the other tests and difficulty levels revealed non-significant results. Finally, posthoc comparisons showed that participants in both groups significantly improved their performance in new tasks from pre-test to intermediate test and from pre-test to immediate and delayed post-test. However, we also observed a significant decrease in performance in both groups from the intermediate test to the immediate post-test and from the immediate to the delayed post-test. Please refer to “Appendix L” for details.

Perceived helpfulness (RQ 3)

In the first step, we were interested in the extent of the perceived helpfulness of the three-point checklist (Fig. 8). The Chi-Square test did not reveal significant differences between the two groups regarding the proportions of participants who wrote that the checklist was helpful, sometimes helpful or not helpful, neither in active visual learning tasks (X2 (3, 115) = 0.842, p = 0.839) nor in passive visual learning tasks (X2 (3, 115) = 1.736, p = 0.629). In the second step, we examined the reasons for the perceived helpfulness. The Chi-Square test for comparing the proportions of participants who provided the investigated reasons in both groups all resulted in p-values > 0.05 (“Appendix M”). An exception is that the P group more often reported having specific criteria as a reason than the P + C group in passive visual learning tasks (16.9% versus 1.8%, X2 (1, 115) = 7.637, p = 0.006).

Metacognitive calibration (RQ 4)

We analysed the participants’ metacognitive calibration in track tasks, new tasks,Footnote 6 and retention tasks separately.Footnote 7 In the track tasks, one-sample t-tests indicated a significant overconfidence of participants in the P + C group in the pre-test and the intermediate test, but neither over- nor underconfidence in the immediate and the delayed post-test (Fig. 9). However, we found significant overconfidence in all four tests in the P group. In the new tasks, participants in both groups were significantly overconfident in the pre-test and the delayed post-test (Fig. 10). In the intermediate test and the immediate post-test, we found neither significant over- nor underconfidence in new tasks in both groups. In the retention tasks, participants in both groups were on average metacognitively well calibrated in the immediate post-test but significantly underconfident in the delayed post-test (Fig. 11). See “Appendix N” for detailed results.

Discussion

Summary and discussion of results

This study aimed to investigate the effects of procedural and conceptual knowledge on visual learning in melanoma detection. To this end, we investigated four research questions (RQ).

Performance outcomes (RQ 1)

RQ 1 addressed the outcomes of two learning interventions, and we expected higher diagnostic accuracy in the P + C group compared to the P group. However, our data supported this hypothesis only for the short-term performance in retention tasks. We found similar outcomes for both experimental groups for the short-term performance in transfer tasks and the long-term performance in retention and transfer tasks. The insignificant difference in long-term retention is in line with the findings of a recent study, which also showed no significant effect of histopathological explanations for dermoscopic criteria on retention in skin cancer training (Kvorning Ternov et al., 2023). However, one interpretation of the pattern of our findings is that the differences between the groups became only visible in the least challenging tasks, as retention and short-term conservation of knowledge are less challenging than transfer and long-term conservation. This is inconsistent with existing literature (Kvorning Ternov et al., 2023), as conceptual understanding has previously been observed to be especially beneficial in challenging tasks, such as in transfer and long-term performance measurements (Kapur, 2014; Loibl et al., 2017). Accordingly, observing group differences mainly in difficult tasks would also have been plausible. However, analysing easy, medium and difficult tasks separately revealed no significant group differences. One reason for this might be the relatively large standard errors observed in the separate analyses for the three difficulty levels, which can be explained by the small number of tasks per task type (retention/transfer) and difficulty level. To investigate this further, future studies should include more tasks per difficulty level, but at the same time, consider the total number of tasks carefully, as we observed in a previous study that participants might experience fatigue when they have to solve more than approximately 50 tasks (Beeler et al., Under revision).

Performance development (RQ 2)

To explore potential explanations for the observed performance outcomes, we looked at the development during the learning intervention in RQ 2. Our expectation that the students would improve their diagnostic accuracy from the pre-test to the immediate post-test can be confirmed for both groups in the transfer tasks but only for the P + C group in the track tasks.

In the transfer tasks, we found that both groups developed comparably: They significantly improved their diagnostic accuracy from the pre-test to the post-tests. However, closer inspection shows that this improvement took place while learning the three-point checklist. From the interaction with the visual learning resource, we unexpectedly observed a significant decrease in performance in both groups. This contradicts our hypothesis that conceptual knowledge allows students to profit more from the visual learning resource, as it turns out that it was detrimental for both groups. One potential explanation for this finding is that the training with the images might have left the participants feeling that the classification tasks are too difficult to solve, leading to less effort in trying. However, our analyses regarding the participants' metacognitive calibration (see RQ 4) revealed neither significant over- nor underconfidence in the transfer tasks in the post-tests. Alternatively, a ceiling effect may have occurred after learning with the three-point checklist. To test this, future research could change the order of the learning phases, which means starting with a visual learning resource, followed by a knowledge acquisition treatment.

In the track tasks, both groups showed minor, non-significant performance improvements from the three-point checklist and the visual learning resource. These small increases resulted in a significant improvement from the pre-test to the two post-tests, but—as stated before—only in the P + C group.

Our results show that the visual learning resource was necessary to reach significant performance improvements in track tasks (at least for the P + C group), but it was detrimental in transfer tasks. We argue that the track tasks measure error correction, as they consisted of the exact same images in each test. Thus, if we observe a performance increase in these tasks, it must be due to fewer errors in diagnosing the same images (as the participants had to provide an answer for each task). Contrastingly, the transfer tasks measure the application of the knowledge and skills to new cases, as they consisted of images that the students had never seen before. Hence, our results indicate that raining with a visual learning resource is necessary and that conceptual knowledge is beneficial only for error correction but not for transfer in visual diagnostic tasks. Alternatively, this finding could also be explained by a testing effect in the track tasks (Richland et al., 2009) or by different characteristics of the skin lesions in the transfer tasks (Beeler et al., 2023).

Perceived helpfulness (RQ 3)

In RQ 3, we explored how receiving procedural and conceptual versus solely procedural information about the three-point checklist affects how helpful students find it for subsequent visual learning. Overall, we observed that most participants found the checklist helpful or at least sometimes helpful, with no significant differences between the two groups. Furthermore, the checklist's specific criteria and simplicity were the most popular reasons for the perceived helpfulness. These results demonstrate that participants perceive algorithms as equally helpful when receiving conceptual and procedural rather than pure procedural information. Based on our results stemming from qualitative data, future research should explore this topic further—for example, by using (additional) quantitative measures.

Metacognitive calibration (RQ 4)

Regarding RQ 4, in which we investigated metacognitive calibration, we assumed that participants in the P + C group are less overconfident or more underconfident than participants in the P group. This hypothesis could only be confirmed for the track tasks in the immediate and the delayed post-test. We observed significant overconfidence in the P group but neither over- nor underconfidence in the P + C group. In all other tasks, participants from both groups were either similarly overconfident, underconfident or, on average, metacognitively well calibrated.

These findings indicate that receiving conceptual information only affected metacognitive calibration in track tasks, where it prevented students from being overconfident. This is in line with findings from RQ 1, in which we also observed that the P + C students exclusively outperformed the P students in the track tasks. Furthermore, it is consistent with the observation that people who make more accurate judgements often also have more accurate self-monitoring skills (Chi, 2006, p. 22).

Limitations and directions for future research

Certain limitations of this study could be addressed in future research. First, we did not conduct a priori power analyses to determine the sample size because the intervention targeted all medical students participating in the dermatology block course. Second, it is possible that the participants in both groups could have thought of reasons for the points in the three-point checklist. Hence, future studies should aim at recruiting participants with less prior (conceptual) knowledge of dermatology. Furthermore, including tests to assess procedural and conceptual knowledge would be helpful. This would allow a manipulation check, which should confirm that students in the two experimental groups acquired different types of knowledge. Moreover, we investigated learning mechanisms in a rather explorative way. Future studies should investigate these mechanisms using validated questionnaires.

Conclusion

This study investigated the effects of combined procedural and conceptual versus pure procedural knowledge on visual learning. Our findings provide another empirical demonstration that procedural knowledge about the three-point checklist improves novices’ diagnostic accuracy in melanoma detection (Soyer et al., 2004; Zalaudek et al., 2006). Furthermore, they imply that additional conceptual knowledge about algorithms for medical image interpretation might support error correction mechanisms in visual classification tasks. Future research should aim to replicate this finding, and investigate the involved learning mechanisms in error correction and transfer using validated instruments.

Availability of Data and Materials

The data underlying this article is available in the Open Science Framework repository, https://osf.io/4qp67/?view_only=b9442b13c82f4e76aeadd409432a7449.

Notes

Unfortunately, the learning materials cannot be published for copyright reasons, but they are available from the first author upon request.

For descriptive values on covariates, please see “Appendix E”, Table E.1.

Descriptive statistics for this subsample regarding the covariates' accuracy in the pre-test and duration of learning are provided in "Appendix H".

We applied Bonferroni-corrections to adjust the p-values for multiple comparisons.

In the post-tests, new tasks correspond to transfer tasks.

Remember that metacognitive calibration was only assessed in tasks with medium difficulty.

References

Abdel Rahman, R., & Sommer, W. (2008). Seeing what we know and understand: How knowledge shapes perception. Psychonomic Bulletin & Review, 15(6), 1055–1063. https://doi.org/10.3758/PBR.15.6.1055

Aldridge, R. B., Glodzik, D., Ballerini, L., Fisher, R. B., & Rees, J. L. (2011). Utility of non-rule-based visual matching as a strategy to allow novices to achieve skin lesion diagnosis. Acta Dermato-Venereologica, 91(3), 279–283. https://doi.org/10.2340/00015555-1049

Altschul, D., Jensen, G., & Terrace, H. (2017). Perceptual category learning of photographic and painterly stimuli in rhesus macaques (Macaca mulatta) and humans. PLoS ONE, 12(9), e0185576. https://doi.org/10.1371/journal.pone.0185576

Argenziano, G. (2012). Chapter 6g—Three-point checklist. In Atlas of Dermoscopy (pp. 144–147). CRC Press. https://doi.org/10.3109/9781841847627-16

Baghdady, M. T., Carnahan, H., Lam, E. W. N., & Woods, N. N. (2013). Integration of basic sciences and clinical sciences in oral radiology education for dental students. Journal of Dental Education, 77(6), 757–763.

Baghdady, M. T., Pharoah, M. J., Regehr, G., Lam, E. W. N., & Woods, N. N. (2009). The role of basic sciences in diagnostic oral radiology. Journal of Dental Education, 73(10), 1187–1193.

Beeler, N., Ziegler, E., Navarini, A. A., & Kapur, M. (Under revision). Factors related to the performance of laypersons diagnosing pigmented skin cancer: An explorative study. Scientific Reports.

Beeler, N., Ziegler, E., Navarini, A. A., & Kapur, M. (2023). Active before passive tasks improve long-term visual learning in difficult-to-classify skin lesions. Learning and Instruction, 88, 101821. https://doi.org/10.1016/j.learninstruc.2023.101821

Braun, R. P., Scope, A., Marghoob, A. A., Kerl, K., Rabinovitz, H. S., & Malvehy, J. (2012). Chapter 3—Histopathologic tissue correlations of dermoscopic structures. In Atlas of Dermoscopy (pp. 20–42). CRC Press. https://doi.org/10.3109/9781841847627-3

Carvalho, P. F., & Goldstone, R. L. (2014). Putting category learning in order: Category structure and temporal arrangement affect the benefit of interleaved over blocked study. Memory & Cognition, 42(3), 481–495. https://doi.org/10.3758/s13421-013-0371-0

Carvalho, P. F., & Goldstone, R. L. (2015). The benefits of interleaved and blocked study: Different tasks benefit from different schedules of study. Psychonomic Bulletin & Review, 22(1), 281–288. https://doi.org/10.3758/s13423-014-0676-4

Chase, W. G., & Simon, H. A. (1973). Perception in chess. Cognitive Psychology, 4(1), 55–81. https://doi.org/10.1016/0010-0285(73)90004-2

Chi, M. T. H. (2006). Two approaches to the study of experts’ characteristics. In K. A. Ericsson, N. Charness, P. J. Feltovich, & R. R. Hoffman (Eds.), The Cambridge Handbook of Expertise and Expert Performance (pp. 21–30). Cambridge University Press. https://doi.org/10.1017/CBO9780511816796.002

de Groot, A. D. (1965). Thought and choice in chess. In Thought and Choice in Chess. De Gruyter Mouton. https://doi.org/10.1515/9783110800647

Drake, T., Krasne, S., Hillman, J., & Kellman, P. (2013). Applying perceptual and adaptive learning techniques for teaching introductory histopathology. Journal of Pathology Informatics, 4(1), 34. https://doi.org/10.4103/2153-3539.123991

Ferrara, G., Argenziano, G., Soyer, H. P., Corona, R., Sera, F., Brunetti, B., Cerroni, L., Chimenti, S., El Shabrawi-Caelen, L., Ferrari, A., Hofmann-Wellenhof, R., Kaddu, S., Piccolo, D., Scalvenzi, M., Staibano, S., Wolf, I. H., & De Rosa, G. (2002). Dermoscopic and histopathologic diagnosis of equivocal melanocytic skin lesions: An interdisciplinary study on 107 cases. Cancer, 95(5), 1094–1100. https://doi.org/10.1002/cncr.10768

Girardi, S., Gaudy, C., Gouvernet, J., Teston, J., Richard, M. A., & Grob, J.-J. (2006). Superiority of a cognitive education with photographs over ABCD criteria in the education of the general population to the early detection of melanoma: A randomized study. International Journal of Cancer, 118(9), 2276–2280. https://doi.org/10.1002/ijc.21351

Hayes, A. F., & Krippendorff, K. (2007). Answering the call for a standard reliability measure for coding data. Communication Methods and Measures, 1(1), 77–89. https://doi.org/10.1080/19312450709336664

International Skin Imaging Collaboration: Melanoma Project. (n.d.). ISIC archive. Gallery. Retrieved 6 November 2020, from https://www.isic-archive.com

Jensen, D. J., & Elewski, B. E. (2015). The ABCDEF Rule: Combining the “ABCDE rule” and the “Ugly duckling sign” in an effort to improve patient self-screening examinations. The Journal of Clinical and Aesthetic Dermatology, 8(2), 15.

Kapur, M. (2014). Productive failure in learning math. Cognitive Science, 38(5), 1008–1022. https://doi.org/10.1111/cogs.12107

Kellman, P. J. (2013). Adaptive and perceptual learning technologies in medical education and training. Military Medicine, 178(suppl_10), 98–106.

Kellman, P. J., & Krasne, S. (2018). Accelerating expertise: Perceptual and adaptive learning technology in medical learning. Medical Teacher, 40(8), 797–802. https://doi.org/10.1080/0142159X.2018.1484897

Kittler, H., Rosendahl, C., Cameron, A., & Tschandl, P. (2016). Dermatoscopy: Pattern analysis of pigmented and non-pigmented lesions (2nd ed.). Facultas.

Kittler, H., Pehamberger, H., Wolff, K., & Binder, M. (2002). Diagnostic accuracy of dermoscopy. The Lancet Oncology, 3(3), 159–165. https://doi.org/10.1016/S1470-2045(02)00679-4

Krippendorff, K. (1970). Estimating the reliability, systematic error and random error of interval data. Educational and Psychological Measurement, 30(1), 61–70. https://doi.org/10.1177/001316447003000105

KvorningTernov, N., Tolsgaard, M., Konge, L., Christensen, A. N., Kristensen, S., Hölmich, L., Stretch, J., Scolyer, R., Vestergaard, T., Guitera, P., & Chakera, A. (2023). Effect of histopathological explanations for dermoscopic criteria on learning curves in skin cancer training: a randomized controlled trial. Dermatology Practical & Conceptual. https://doi.org/10.5826/dpc.1302a105

Lacy, F. A., Coman, G. C., Holliday, A. C., & Kolodney, M. S. (2018). Assessment of smartphone ypplication for teaching intuitive visual diagnosis of melanoma. JAMA Dermatology, 154(6), 730–731. https://doi.org/10.1001/jamadermatol.2018.1525

Levenson, R. M., Krupinski, E. A., Navarro, V. M., & Wasserman, E. A. (2015). Pigeons (Columba livia) as trainable observers of pathology and radiology breast cancer images. PLoS ONE, 10(11), e0141357. https://doi.org/10.1371/journal.pone.0141357

Loibl, K., Roll, I., & Rummel, N. (2017). Towards a theory of when and how problem solving followed by instruction supports learning. Educational Psychology Review, 29(4), 693–715. https://doi.org/10.1007/s10648-016-9379-x

Marghoob, A. A., Braun, R. P., & Malvehy, J. (2012). Introduction. In Atlas of Dermoscopy (pp. 11–12). CRC Press. https://doi.org/10.3109/9781841847627-1

National Cancer Institute. (2022). Melanoma of the skin—Cancer stat facts. Surveillance, Epidemiology, and End Results Program (SEER). https://seer.cancer.gov/statfacts/html/melan.html

Richland, L. E., Kornell, N., & Kao, L. S. (2009). The pretesting effect: Do unsuccessful retrieval attempts enhance learning? Journal of Experimental Psychology: Applied, 15(3), 243–257. https://doi.org/10.1037/a0016496

Rittle-Johnson, B., Schneider, M., & Star, J. R. (2015). Not a one-way street: Bidirectional relations between procedural and conceptual knowledge of mathematics. Educational Psychology Review, 27(4), 587–597. https://doi.org/10.1007/s10648-015-9302-x

Rittle-Johnson, B., & Star, J. R. (2009). Compared to what? The effects of different comparisons on conceptual knowledge and procedural flexibility for equation solving. Journal of Educational Psychology. https://doi.org/10.1037/a0014224

Saginala, K., Barsouk, A., Aluru, J. S., Rawla, P., & Barsouk, A. (2021). Epidemiology of Melanoma. Medical Sciences, 9(4), 63. https://doi.org/10.3390/medsci9040063

Sawyer, R. K. (2008). Optimising learning implications of learning sciences research. In Innovating to Learn, Learning to Innovate (pp. 45–65). OECD Publishing. https://doi.org/10.1787/9789264047983-4-en.

Schneider, M., & Stern, E. (2010). The developmental relations between conceptual and procedural knowledge: A multimethod approach. Developmental Psychology, 46(1), 178–192. https://doi.org/10.1037/a0016701

Sinha, T., & Kapur, M. (2020). When problem-solving followed by instruction works: Evidence for productive failure. Psychological Bulletin (Under Review).

Sinha, T., & Kapur, M. (2021). Robust effects of the efficacy of explicit failure-driven scaffolding in problem-solving prior to instruction: A replication and extension. Learning and Instruction, 75, 101488. https://doi.org/10.1016/j.learninstruc.2021.101488

Soyer, H. P., Argenziano, G., Zalaudek, I., Corona, R., Sera, F., Talamini, R., Barbato, F., Baroni, A., Cicale, L., Stefani, A. D., Farro, P., Rossiello, L., Ruocco, E., & Chimenti, S. (2004). Three-point checklist of dermoscopy. Dermatology, 208(1), 27–31. https://doi.org/10.1159/000075042

Soyer, H. P., Kenet, R. O., Wolf, I. H., Kenet, B. J., & Cerroni, L. (2000). Clinicopathological correlation of pigmented skin lesions using dermoscopy. European Journal of Dermatology: EJD, 10(1), 22–28.

Tsao, H., Olazagasti, J. M., Cordoro, K. M., Brewer, J. D., Taylor, S. C., Bordeaux, J. S., Chren, M.-M., Sober, A. J., Tegeler, C., Bhushan, R., & Begolka, W. S. (2015). Early detection of melanoma: Reviewing the ABCDEs. Journal of the American Academy of Dermatology, 72(4), 717–723. https://doi.org/10.1016/j.jaad.2015.01.025

Wang, S. Q., Marghoob, A. A., & Scope, A. (2012). Chapter 2—Principles of dermoscopy and dermoscopic equipment. In Atlas of Dermoscopy (pp. 13–19). CRC Press. https://doi.org/10.3109/9781841847627-2

Weller, P. D., Rabovsky, M., & Abdel Rahman, R. (2019). Semantic knowledge enhances conscious awareness of visual objects. Journal of Cognitive Neuroscience, 31(8), 1216–1226. https://doi.org/10.1162/jocn_a_01404

WMA. (2022, September 6). Declaration of Helsinki—Ethical principles for medical research involving human subjects. World Medical Association. https://www.wma.net/policies-post/wma-declaration-of-helsinki-ethical-principles-for-medical-research-involving-human-subjects/

Woods, N. N., Brooks, L. R., & Norman, G. R. (2005). The value of basic science in clinical diagnosis: Creating coherence among signs and symptoms. Medical Education, 39(1), 107–112. https://doi.org/10.1111/j.1365-2929.2004.02036.x

Xu, B., Rourke, L., Robinson, J. K., & Tanaka, J. W. (2016). Training melanoma detection in photographs using the perceptual expertise training approach. Applied Cognitive Psychology, 30(5), 750–756. https://doi.org/10.1002/acp.3250

Zalaudek, I., Argenziano, G., Soyer, H. P., Corona, R., Sera, F., Blum, A., Braun, R. P., Cabo, H., Ferrara, G., Kopf, A. W., Langford, D., Menzies, S. W., Pellacani, G., Peris, K., & Seidenari, S. (2006). Three-point checklist of dermoscopy: An open internet study. British Journal of Dermatology, 154(3), 431–437. https://doi.org/10.1111/j.1365-2133.2005.06983.x

Ziegler, E., Edelsbrunner, P. A., & Stern, E. (2018). The relative merits of explicit and implicit learning of contrasted algebra principles. Educational Psychology Review, 30(2), 531–558. https://doi.org/10.1007/s10648-017-9424-4

Acknowledgements

We thank Joris Stemmle and Stefan Wehrli for support in implementing the surveys in Qualtrics; Corinne Meier for coding the open-ended questions; the members of the Professorship for Learning Sciences and Higher Education for their valuable feedback; and all medical students who participated in the study.

Funding

Open access funding provided by Swiss Federal Institute of Technology Zurich. This work was supported by the Innovedum Fund of ETH Zurich (Grant Number 2035).

Author information

Authors and Affiliations

Contributions

NB: Conceptualisation, Methodology, Formal analysis, Investigation, Resources, Project administration, Writing—Original Draft, Writing—Review & Editing; EZ: Conceptualisation, Funding acquisition, Supervision; AV: Resources; AAN: Supervision; MK: Conceptualisation, Funding acquisition, Supervision.

Corresponding author

Ethics declarations

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material (Appendices).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Beeler, N., Ziegler, E., Volz, A. et al. The effects of procedural and conceptual knowledge on visual learning. Adv in Health Sci Educ (2023). https://doi.org/10.1007/s10459-023-10304-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10459-023-10304-0