Abstract

Constraining the actions of AI systems is one promising way to ensure that these systems behave in a way that is morally acceptable to humans. But constraints alone come with drawbacks: in many AI systems, they are not flexible. If these constraints are too rigid, they can preclude actions that are actually acceptable in particular contexts. Humans, on the other hand, can often decide when a simple and seemingly inflexible rule should actually be overridden based on the context. In this paper, we empirically investigate the way humans make these contextual moral judgements, with the goal of building AI systems that understand when to follow and when to override constraints. We propose a novel and general preference-based graphical model that captures a modification of standard dual process theories of moral judgment. We then detail the design, implementation, and results of a study of human participants who judge whether it is acceptable to break a well-established rule: no cutting in line. Finally, we develop an instance of our model and compare its performance to that of standard machine learning approaches on the task of predicting the behavior of human participants in the study, showing that our preference-based approach more accurately captures the judgments of human decision-makers. Our approach also provides a flexible method to model the relationship between variables for moral decision-making tasks that can be generalized to other settings.


1 Introduction

Concerns about the ways AI systems behave when deployed in the real world are growing in the research community and beyond [1,2,3]. A central worry is that AI systems may achieve their objective functions in ways that are morally unacceptable to those impacted by the decisions, for example, revealing “specification gaming” behaviors [4, 5]. Thus, there is a growing need to understand how to constrain the actions of AI systems by providing boundaries within which the system must operate.

However, imposing formal constraints within the models used by AI systems presents its own problems. Sometimes, constraints preclude actions that are perfectly acceptable or even desirable. On the other hand, sometimes constraints will permit actions that should be prohibited. These properties are true of laws and rules generally speaking, not just for those constraining AI systems. Consider a rule prohibiting vehicles from entering a public park (“no vehicles in the park”) [6] in order to preserve the safe and serene environment that the park provides. A plain reading of this rule might presume that ambulances and wheelchairs are barred from entering, though they should clearly be allowed, but might fail to preclude drones, which are not vehicles per se but are potentially as dangerous and disruptive. Constraints on AI systems can fall victim to both kinds of problems: sometimes they are too lenient, letting a system find “loopholes” around constraints while optimizing an objective function [5, 7], while at other times they are unnecessarily stringent (for a non-exhaustive list see, e.g., [8]).

But few humans would be confused about the rule banning vehicles from the park. No wheelchair user would wonder if they could enter, and ambulances would not meet their arrival on the scene of an accident with protest. In short, humans are experts at figuring out when constraints can and should be broken. How do humans do this and can we enable AI to do the same?

In recent years, there has been an ever-increasing number of proposals on how and why to constrain the actions of AI systems to ensure their alignment with human values, i.e., to make them “ethically aligned” [1], culminating with both the ACM Statement on Algorithmic Transparency [9] and the National Institute of Standards and Technology AI Risk Management Framework [10]. Within the AI research literature, many techniques for constraint engineering are based on bottom-up, top-down, or hybrid approaches [11,12,13]. A bottom-up approach involves teaching a machine what is right and wrong by providing examples of “correct” decisions—for instance, providing an autonomous vehicle with demonstrations about how to handle certain traffic patterns [13,14,15]. In top-down approaches, behavioral guidelines are specified by explicit rules or constraints on the decisions space—for instance, by making a rule that an autonomous vehicle must never strike a human [16, 17]. However, both of these kinds of models will struggle to determine when constraints should be overridden. Top-down approaches will err when the rules or constraints on the system are too general to deal with a particular edge case or unusual circumstance. Bottom-up approaches will err when a case that should be an exception to a rule differs dramatically from anything in the training set.

Our goal is to formalize and understand how to build AI agents that can act in ways that are morally acceptable to humans [4, 18] by taking inspiration from how humans decide what is morally acceptable. In this work, we start from the observation that decisions are linked to preferences: we humans choose one among all possible decisions because we prefer the resulting state-of-affairs over the ones generated by the other decisions. Thus, we can model how an individual makes a decision in a given scenario by modeling her preferences over decisions in that scenario [19].

In this paper, we extend the existing preference framework of CP-nets [20] to capture complex preferences over both the context of a decision and the resulting judgments about actions. Traditional CP-nets allow for the representation of conditional preferences over options. Our novel extension, Scenario-Evaluation-Preference Nets (SEP-nets), allows for a more complete model of how humans decide when it is permissible to break a moral rule. The aim of using this formalism to describe human moral decision-making is to be able to ultimately embed the human-like process of flexibly overriding constraints into a machine or to build machines that can better collaborate with humans, knowing how humans would behave.

While there are many formalisms to choose from when modeling preferences, we focus on CP-nets because of their many strengths. In particular, they provide a compact representation of possibly complex domains, e.g., in cloud service selection [21], where they are employed to represent the preferences of users. Moreover, they have been extended in multiple ways to be easily translated into formal languages [22] or to embed uncertainty [23]. The wide range of applications of CP-nets and their popularity in the academic research literature make this framework and its extensions of particular interest, as they provide feasible ways of representing conditional preferences and at the same time leverage a plethora of tools for the development of such frameworks.

Our extension of CP-nets, SEP-nets, captures a modification to standard “dual process” theories of moral judgment. Human decision-making processes (in many domains, not just morality) are sometimes characterized by dual process models of cognition, which posit that humans have both a fast and automatic way of making decisions (“System 1”) and a slower, more deliberate method (“System 2”) [24, 25]. Dual process models of moral cognition follow this mold: these theories tend to argue that we make moral judgments using a combination of heuristic-like rules (System 1) and more deliberative utility calculations (System 2) [26, 27]. We argue for a modification of this standard account, proposing that System 1 processes support rule-based moral judgment (as is widely agreed upon in the literature) and that System 2 processes can enable moral rule flexibility. Specifically, humans use System 2 thinking to figure out when a rule should be overridden.

To assess our proposal, we run a study in which participants make judgments about breaking a simple and well-known socio-moral rule, specifically, “no cutting in line” (Footnote 1). Despite first appearances, figuring out how to wait in line cannot be governed by a simple rule; on the contrary, we can intuitively evaluate a huge range of exceptions to the rule about line waiting. We perform an extensive analysis of the data to uncover possible relationships among the variables used to describe the scenario. We then use the collected data to build an SEP-net, and finally, we compare this SEP-net approach with well-known machine learning models including XGBoost [29] and Support Vector Machines [30] on a prediction task and find that our model outperforms the competitors.

1.1 Contribution

We propose a novel extension of CP-nets we call Scenario-Evaluation-Preference Nets (SEP-nets). This framework is able to represent conditional preferences and take into account a complex deliberation process to compute an outcome. We focus on scenarios when a socio-moral rule should be broken, specifically, when it is morally acceptable to cut in line. To support our model, we collect data from human participants, asking them to make judgments about when it is permissible to cut in line. We perform an extensive data analysis and show how our framework can be used to model the moral judgments made by individuals. Our SEP-nets method performs better than most standard machine learning classification algorithms. We suspect that many social and moral rules are supported by generative psychological mechanisms that allow people to make judgments in novel scenarios. We, therefore, believe that the formalism we develop that captures the psychological process that enables people to navigate exceptions to line-waiting rules will be generalizable to many other rules guiding human social life.

1.2 Organization

The rest of the paper is structured as follows. Section 2 details related work in moral psychology as well as in the computational decision-making literature. In Sect. 3 we provide a comprehensive background and formal notation for CP-nets. Section 4 formalizes SEP-nets. Section 5 gives a detailed overview of our experimental setting, data collection and analysis, as well as the empirical evaluation of SEP-nets. Section 6 provides a discussion about the results and Sect. 7 offers conclusions and directions for future work.

2 Related work

The following section provides an overview of the existing research and developments in the field, highlighting key contributions and insights relevant to our study.

2.1 Computational ethics

The vast majority of work characterizing human moral decision-making, both in psychology [31, 32] and experimental philosophy [33, 34], has focused on identifying factors that are relevant to moral judgment (e.g., affect, rules, utility calculations). In this work we focus on an emerging body of work, called “computational ethics” [35], that goes beyond simply identifying these factors and also seeks to characterize the mechanisms underlying moral judgments in order to embed them in artificial agents [36,37,38,39,40,41,42]. This is a critical step in building AI that can produce and interpret human moral judgment [37, 43, 44] in a way that is explainable [45].

One prominent example of agents that try to find a trade-off between maximizing an objective function while respecting ethical constraints is the case of autonomous cars. A range of interdisciplinary research groups have asked how autonomous cars should handle cases where harm to passengers or pedestrians is inevitable [28, 46], how to aggregate societal preferences to make these decisions [47,48,49], and how to measure distances between these preferences [18, 50]. Similar tensions around ethical constraints arise for recommender systems. A parent or guardian may want the online agent to not recommend certain types of movies to children, even if this recommendation could lead to a high reward [14]. Not being able to specify the appropriate ethical constraints may lead to undesired and unexpected behavior. This is sometimes referred to as “specification gaming” because agents “game” the given specification by behaving in unexpected (and undesired) ways [5].

2.2 Preferences in artificial intelligence

As we noted above, the concepts of decisions and actions are linked to the concept of preferences, and the issue of modeling and reasoning with preferences in an artificial agent has been the subject of research within AI for many years [51]. Several frameworks have been defined, and their properties studied, for many situations including expressivity [19], computational complexity, and ease of preference elicitation [52]. The centrality of preferences is true also in the case of moral judgement: we consider a decision more morally acceptable than another one if its impact on others is preferred according to our moral values [53,54,55]. Therefore, finding a way to model these values, and the corresponding preferences they create, is central to building artificial agents that behave in a way that is aligned with human values [4, 18] (Footnote 2).

For instance, Freedman et al. [56] introduce a comprehensive methodology involving human participants to estimate weights for individual profiles in kidney exchanges, ultimately prioritizing patients and donors during organ allocation. This highlights the significant impact of human value judgments on patient prioritization outcomes. WeBuildAI [57] is another illustration, presenting a participatory framework where stakeholders collaboratively build computational models to guide voting-based algorithmic policy decisions. This was demonstrated through a case study involving an on-demand food donation transportation service, resulting in improved fairness, distribution outcomes, algorithmic awareness, and identification of decision-making inconsistencies. In a similar vein, [40] explore the permissibility of actions that save lives while causing harm to others, proposing a computational model using subjective utilities to capture moral judgments across diverse scenarios, extending beyond typical life-and-death dilemmas and suggesting integration with causal theory.

In this work, we focus our attention on Conditional Preference networks (CP-net), a graphical model for compactly representing conditional and qualitative preferences [20]. CP-nets are comprised of sets of ceteris paribus preference statements (cp-statements). For instance, the cp-statement, “I prefer red wine to white wine if meat is served,” asserts that given two meals that differ only in the kind of wine served and both containing meat, the meal with red wine is preferable to the meal with white wine. Given a CP-net, one can address optimality questions, that look for the most preferred decision, or also dominance questions, that ask for comparing the preference of two decisions. Many algorithms to respond to such questions, for several versions of CP-nets, have been defined, and their computational complexity has been thoroughly studied.

CP-nets have been extensively used in preference reasoning, preference learning, and social choice literature as a formalism for modeling and reasoning with qualitative preferences [51, 58, 59]. They have been used to compose web services [60] and to support other decision aid systems [61]. CP-nets are a particularly attractive formalism as they provide an explicit model of the dependencies between features of a decision: for instance, in the example of the two meals, the wine feature depends on the main dish feature. This is important for AI decision-making as, for instance, the Engineering and Physical Science Research Council (EPSRC) Principles of Robotics dictates the implementation of transparency in robotic systems specifically by providing a mechanism to “expose the decision-making of the robot [45].” Hence, having a formal, explicit decision model such as a CP-net can provide this transparency.

2.3 Value alignment and dual process models

The idea of teaching machines right from wrong has become an important research topic in both AI [62] and related fields [11]. This challenge has been pursued using a range of computer science approaches, including taking sequences of actions in a reactive environment [63] and teaching agents how to respond in specific environments [64]. Many of these projects address what is called the value-alignment problem [65], that is, the problem of building machines that behave according to values aligned with human ones [12, 66,67,68,69]. This aligns with the challenge of instilling morality into AI systems addressed by [70], who introduce Delphi, an experimental framework based on deep neural networks trained to reason about ethical judgments. This reveals the potential and limitations of machine ethics, emphasizing the necessity of explicit moral instruction and exploring alignment with ethical theories. We continue in this stream of research by proposing a novel and extensible formalism for modeling and reasoning with preferences over complex judgments.

Many human decision-making processes can be characterized by dual process models of cognition, often known as the “thinking fast and slow” approach [24]. Dual process theories describe the mind as composed of two broad reasoning approaches: (1) thinking fast, or System 1, which relies on predefined heuristics or rules, and (2) thinking slow, or System 2, which is more deliberate. Each of these systems has its merits. System 1 thinking is efficient, requiring only limited computational resources and contextual information. When System 1 heuristics are deployed in the environments they were intended for, they often produce good-enough decisions; though they sometimes can lead to sub-optimal choices when deployed in edge cases. System 2 decision-processes, on the other hand, are cognitively intensive and allow the decision-maker to flexibly integrate information from disparate sources, leading to decisions that can be carefully tailored to the case at hand. These decision-processes can be used in combination in a way that optimizes payoffs given the limitations of the computational resources available [71,72,73].

Dual process models have also been used to understand human moral judgment [26, 27, 74, 75]. These models draw their inspiration from two of the major branches of moral philosophy. The rule-based System 1 is inspired by deontological theories of moral philosophy, which focus on constraints on actions. The deliberative System 2 is typically associated with consequentialist theories, which focus on maximizing the utility of outcomes. System 1 applies a hard and fast rule and does not consider the subtleties or complexities of the current scenario. In contrast, System 2 acts more like a utilitarian reasoner: it attempts to quantify utility losses and gains, which enables it to make a judgment. For example, when Bob refrains from stealing something he cannot afford from a store even though he really wants it, he may be following a System 1 moral rule: no stealing. When Susan decides to donate $5 to buy mosquito nets rather than chocolate bars for children in crisis, she may be using System 2 reasoning and comparing the values of the outcomes of the candidate actions.

2.4 Contractualism and universalization

Theories of moral psychology have successfully drawn on ideas from two major frameworks in moral philosophy—deontology and consequentialism—to contribute to our understanding of the moral mind. Curiously, the central idea of another family of philosophical views—contractualism—has been virtually absent from theories of moral psychology. Contractualist views ask us to consider what agreements could be adopted that would lead to mutual benefit [76,77,78,79]. Recent work has begun to show that, despite being neglected for so long, contractualist mechanisms play a critical role in the moral mind [36, 80,81,82,83].

The specific agreement-based process we focus on in this paper is universalization—a psychological mechanism akin to Kant’s Categorical Imperative [84]—which is a way of making a moral decision by asking “what if everyone felt at liberty to do that?” [36]. When people universalize, they imagine a hypothetical world where everyone is allowed to act in a certain way. If things go well in that hypothetical world, the action in question should be allowed. If things go badly, the action should be prohibited. This boils down to asking if a person is taking a special privilege for themselves that they couldn’t grant to everyone. If everyone could feel free to do the action, then presumably mutual agreement could be reached that the action is permitted. Universalization is likely to be a System 2 process because running the universalization computation is resource-intensive and requires a lot of information about the particular decision-making context [36].

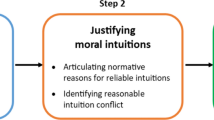

2.5 Extending the dual process model of morality

We propose an extension of standard dual-process models of morality [85]. Not only do humans have two systems that they can use to make moral judgments, but the two systems flexibly interact. Humans sometimes use outcome-based and agreement-based (System 2) processes to figure out when a (System 1) rule can be overridden [85].

This proposal is intuitively plausible. After all, sometimes people decide that it is morally acceptable to break previously established rules because doing so would bring about a better outcome than following the rule [26, 86]. For example, in general, there is a rule against non-consensual harmful contact, i.e., no hitting. But if pushing someone out of the way of a speeding train could save their life, then it is morally permissible to do so [87]. Yet in other cases, people may decide that it is morally acceptable to break a rule because the person whom the rule protects would agree to have the rule violated [80, 88]. There is a rule against taking things that don’t belong to you (or: no stealing), but it may be morally acceptable to take coffee grounds from your co-worker’s desk if you know they would consent to it, given that they are likely to be caught in a similar situation in the future and want to take some coffee from you. Following this pattern, we further hypothesize that universalization is another System 2 process that will help people determine when it is OK to break a rule.

In this work, we focus on the rule about waiting in line: “no cutting”. Scenarios involving waiting in line provide an interesting test-case to study how people make moral judgments (Footnote 3). Lines are seemingly adjudicated by a simple rule that everyone can articulate. However, people’s judgments about when it is permissible to cut in line are (at least somewhat) consistent and replicable, even in completely novel contexts that participants have never seen before [91, 92]. This suggests that participants are not simply “memorizing” the exceptions to the rule, but instead using a generative psychological mechanism to make judgments in novel cases [85, 91, 92]. This generative mechanism is what our computational formalism is designed to describe and be aligned with.

3 Formal background: CP-nets

Conditional Preference networks (CP-nets) are a graphical model for compactly representing conditional and qualitative preferences. CP-nets are comprised of sets of ceteris paribus preference statements (cp-statements). For instance, the cp-statement, “I prefer red wine to white wine if meat is served,” asserts that, given two meals that differ only in the kind of wine served and both containing meat, the meal with red wine is preferable to the meal with white wine.

CP-nets have been extensively used in preference reasoning, preference learning, and social choice literature as a formalism for working with qualitative preferences [51, 58, 59]. CP-nets have even been used to compose web services [60] and other decision aid systems [61]. While there are many formalisms to choose from when modeling preferences, we focus on CP-nets as they are graphical and intuitive.

We borrow from [20] the definition of a CP-net:

Definition 1

A CP-net over variables \(V = \{X_1, \ldots , X_n\}\) is a directed graph G over \(X_1, \ldots , X_n\) whose nodes are associated with conditional preference tables \(CPT(X_i)\) for each \(X_i \in V\). Each conditional preference table \(CPT(X_i)\) associates a total order \(\succ _u^i\) with each instantiation u of \(X_i\)’s parents \(Pa(X_i) = U\) in the directed graph.

A CP-net has a set of features (also called variables) \(V = \{X_1,\ldots ,X_n\}\), each with a finite domain \(D(X_1),\ldots , D(X_n)\). For each feature \(X_i\), we are given a set of parent features \(Pa(X_i)\) that can affect the preferences over the values of \(X_i\). This defines a directed dependency graph G in which each node \(X_i\) has \(Pa(X_i)\) as its immediate predecessors. An acyclic CP-net is one in which the dependency graph is acyclic.

Given this structural dependency information among the CP-net’s variables, one needs to specify the preference over the values of each variable \(X_i\) for each complete assignment to the parent variables \(Pa(X_i)\). This preference takes the form of a total or partial order over \(D(X_i)\). This is formally done via the notion of a cp-statement. Given a variable \(X_i\) with domain \(D(X_i) = \{a_1, \ldots , a_m\}\) and parent variables \(Pa(X_i) = \{Y_1, \ldots , Y_k\}\), a cp-statement for \(X_i\) has the form: \(Y_1=v_1, Y_2=v_2, \ldots ,Y_k=v_k: X_i = a_1 \succ \ldots \succ X_i = a_m\), where, for each \(Y_j \in Pa(X_i)\), \(v_j \in D(Y_j)\). The set of cp-statements for a variable \(X_i\) is called the cp-table (CPT) for \(X_i\). A full example is given as Example 1.

Among the several generalizations of CP-nets, in this work, we focus on one that models cases where individuals have indifference over the values of some features or where they do not specify some preference information [93]. A cp-statement \(z: a_i \approx a_j\) means that the person is indifferent between \(a_i\) and \(a_j\) given the assignment z to the parents variables, i.e., \(a_i \succeq a_j\) and \(a_j \succeq a_i\). A lack of information over one of the values of a variable is modeled with empty cp-statements for that value.

Example 1

This example provides a CP-net for a scenario that is related to the data we have collected (discussed later in the paper), that has to do with people expressing a moral judgment over the action of cutting the line.

We consider a person, say John, who is the first in line at the airport security. A person approaches him and asks to cut the line because her flight is going to leave very soon. Because of security considerations, John always prefers not to let anyone cut the line at the airport. However, he has different preferences when he is not at the airport: he lets a person cut the line if he is on time, while he prefers not to let someone cut the line if he is late.

Fig. 1 The CP-net representing John’s preferences described in Example 1. Variable S denotes the scenario and has the values a for “at the airport” and \(\overline{a}\) for “not at the airport”. Values in the domain of this variable are incomparable because no cp-statements appear in the CP-table of this variable, i.e., John states no preference between these values. Variable T represents time, with values o for “on time” and \(\overline{o}\) for “late”. Variable P represents the preference over letting people cut the line, with values c for “ok to cut the line” and \(\overline{c}\) for “not ok to cut the line”.

The CP-net in Fig. 1, with features S, T, and P, represents John’s preferences as described in Example 1: variable S denotes the scenario and has values a for “at the airport” and \(\overline{a}\) for “not at the airport”, variable T represents time, with values o for “on time” and \(\overline{o}\) for “late”, and variable P models his preference over the request and has values for yes (c) and no (\(\overline{c}\)). In this CP-net, S and T are parent variables to P. The first cp-statement for P states that, when he is at the airport (\(S=a\)) and he is on time (\(T=o\)), he prefers to not let people cut the line (\(\overline{c} \succ c\)). A similar interpretation can be made for the other three cp-statements for P. For variable T, we assume that John prefers to be on time rather than late, so we include the cp-statement \(o \succ \overline{o}\). On the other hand, for the scenario variable S, we do not assume any preference: no cp-statements appear in the CP-table of this variable, so the values in its domain are incomparable.
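One way to make Example 1 concrete is to write the cp-tables as plain dictionaries. The sketch below is only an illustration under our own assumptions: the names `DOMAINS`, `PARENTS`, `CPT`, and `preferred_order`, and the value labels such as `"not_a"`, are hypothetical and are not part of the CP-net formalism or of any library.

```python
# Hypothetical encoding of the CP-net from Example 1 (all names are illustrative).
# Values: "a"/"not_a" for S, "o"/"not_o" for T, "c"/"not_c" for P.
DOMAINS = {"S": ["a", "not_a"], "T": ["o", "not_o"], "P": ["c", "not_c"]}
PARENTS = {"S": (), "T": (), "P": ("S", "T")}

# Each cp-table maps a parent assignment (a tuple in PARENTS order) to a list
# of values ordered from most to least preferred. A missing entry means that
# no cp-statement was given, so the values are incomparable in that context.
CPT = {
    "S": {},                                # no preference over the scenario
    "T": {(): ["o", "not_o"]},              # on time is preferred to late
    "P": {
        ("a", "o"): ["not_c", "c"],         # airport, on time: do not allow cutting
        ("a", "not_o"): ["not_c", "c"],     # airport, late: do not allow cutting
        ("not_a", "o"): ["c", "not_c"],     # elsewhere, on time: allow cutting
        ("not_a", "not_o"): ["not_c", "c"], # elsewhere, late: do not allow cutting
    },
}


def preferred_order(var, outcome):
    """Return the order over `var` given the parent values in `outcome`, or None."""
    key = tuple(outcome[parent] for parent in PARENTS[var])
    return CPT[var].get(key)


print(preferred_order("P", {"S": "not_a", "T": "o"}))  # ['c', 'not_c']
```

Returning `None` for S is a design choice of this sketch: it keeps "no cp-statement" distinct from an explicit statement of indifference.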

Fig. 2 The preorder induced by the CP-net in Fig. 1. The two components are due to the incomparability on the domain of the variable S.

The semantics of a CP-net depends on the notion of a worsening flip, which is a change in the value of a variable to a less preferred value according to the cp-statement for that variable. One outcome (that is, an assignment of values to all the variables) \(\alpha\) is preferred to, or dominates, another outcome \(\beta\) (written \(\alpha \succ \beta\)) if and only if there is a chain of worsening flips from \(\alpha\) to \(\beta\). This definition of dominance induces a preorder (i.e., a binary relation which is reflexive and transitive) over the outcomes. Indifference induces a loop between pairs of outcomes, while the lack of information induces incomparability in the preorder (i.e., given two outcomes \(\alpha\), \(\beta\), if neither \(\alpha \succeq \beta\) nor \(\beta \succeq \alpha\) holds, then we say that \(\alpha\) and \(\beta\) are incomparable, denoted \(\alpha \bowtie \beta\)). This incomparability makes the preference graph disconnected. In particular, in the induced preference graph there is a connected component for each combination of values of the variables with missing cp-statements. For instance, Fig. 2 gives the full induced preference order for the CP-net shown in Fig. 1. The component on the left side describes preferences over the airport scenario (\(S=a\)), while the component on the right side describes John’s preferences when not at the airport (\(S=\overline{a}\)). Clearly, outcomes in different components cannot be compared because they describe preferences over different scenarios. In this way, incomparability is a useful tool that allows us to model different scenarios.
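The worsening-flip semantics can be sketched as a graph search over outcomes. The code below is a minimal illustration, assuming the same hypothetical dictionary encoding of John's CP-net (the names `VARS`, `PARENTS`, `CPT`, `worsening_flips`, and `dominates` are ours). It explores the induced graph explicitly, which is only practical for tiny examples such as this one.

```python
from collections import deque

# Hypothetical encoding of John's CP-net from Example 1 (illustrative names).
VARS = ("S", "T", "P")
PARENTS = {"S": (), "T": (), "P": ("S", "T")}
CPT = {
    "S": {},  # no cp-statement: scenario values are incomparable
    "T": {(): ["o", "not_o"]},
    "P": {
        ("a", "o"): ["not_c", "c"],
        ("a", "not_o"): ["not_c", "c"],
        ("not_a", "o"): ["c", "not_c"],
        ("not_a", "not_o"): ["not_c", "c"],
    },
}


def worsening_flips(outcome):
    """Yield every outcome reachable from `outcome` by one worsening flip."""
    for var in VARS:
        key = tuple(outcome[parent] for parent in PARENTS[var])
        order = CPT[var].get(key)
        if order is None:
            continue  # no cp-statement, so this variable is never flipped
        rank = order.index(outcome[var])
        for worse in order[rank + 1:]:
            yield {**outcome, var: worse}


def dominates(alpha, beta):
    """Return True if a chain of worsening flips leads from alpha to beta."""
    start = tuple(alpha[v] for v in VARS)
    goal = tuple(beta[v] for v in VARS)
    seen, queue = {start}, deque([start])
    while queue:
        current = dict(zip(VARS, queue.popleft()))
        for nxt in worsening_flips(current):
            state = tuple(nxt[v] for v in VARS)
            if state == goal:
                return True
            if state not in seen:
                seen.add(state)
                queue.append(state)
    return False


best_airport = {"S": "a", "T": "o", "P": "not_c"}
worst_airport = {"S": "a", "T": "not_o", "P": "c"}
best_elsewhere = {"S": "not_a", "T": "o", "P": "c"}

print(dominates(best_airport, worst_airport))   # True
print(dominates(best_airport, best_elsewhere))  # False
print(dominates(best_elsewhere, best_airport))  # False
```

Because S is never flipped, no chain of flips crosses from one scenario to the other, so outcomes from the two components come out incomparable in both directions.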

While CP-nets are usually a compact way to express preferences, their induced order (e.g., Fig. 2) can be exponentially larger than the CP-net itself (Fig. 1). This is why it is important, for computational reasons, to be able to reason on CP-nets and not on their induced graphs. Two important questions about CP-nets are related to optimality and dominance [58]. Finding the optimal outcome of a CP-net is NP-hard [20] in general, but for acyclic CP-nets it can be found in polynomial time by assigning the most preferred value (according to the CP-tables) to each variable in the order given by the dependencies. Indeed, acyclic CP-nets induce a lattice over the outcomes as depicted in Fig. 2. On the other hand, checking the dominance between two outcomes is a computationally difficult problem [94].
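The polynomial-time procedure for acyclic CP-nets can be sketched as a single pass over the variables in dependency order. This is a minimal illustration on the hypothetical encoding of John's CP-net, not a reference implementation. Because S has no cp-statement, the sketch assumes the scenario is supplied as `evidence`; the function name `optimal_outcome` is ours.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical encoding of John's CP-net from Example 1 (illustrative names).
PARENTS = {"S": (), "T": (), "P": ("S", "T")}
CPT = {
    "S": {},  # no cp-statement: the scenario must be supplied as evidence
    "T": {(): ["o", "not_o"]},
    "P": {
        ("a", "o"): ["not_c", "c"],
        ("a", "not_o"): ["not_c", "c"],
        ("not_a", "o"): ["c", "not_c"],
        ("not_a", "not_o"): ["not_c", "c"],
    },
}


def optimal_outcome(evidence):
    """Assign each free variable its most preferred value, parents first."""
    outcome = dict(evidence)
    # PARENTS maps each node to its predecessors, which is the input
    # format TopologicalSorter expects.
    for var in TopologicalSorter(PARENTS).static_order():
        if var in outcome:
            continue  # value fixed by the evidence
        key = tuple(outcome[parent] for parent in PARENTS[var])
        order = CPT[var].get(key)
        if order is None:
            raise ValueError(f"no cp-statement for {var}; fix it in the evidence")
        outcome[var] = order[0]
    return outcome


print(optimal_outcome({"S": "a"}))
print(optimal_outcome({"S": "not_a"}))
```

Each variable is visited once and needs a single table lookup, which is why the sweep avoids building the induced preference graph.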

4 SEP-nets: scenarios, evaluation, and preferences for modeling morality driven preferences

In a standard CP-net, there is only one kind of variable: those needed to express preferences. There is no ability to describe the context in which a preference-based decision-making process takes place, nor to model other auxiliary evaluation variables that may be needed, or useful, to declare our own preferences. In some sense, a CP-net is a useful tool only when it is clear what the context is, and if no reasoning on the context is needed in order to state the preferences, or when such reasoning takes place outside the CP-net formalism.

Given this limitation, there have been calls to extend this model into reasoning and preference systems in computer science in a principled and exact way [52]. We therefore propose an extension to the CP-net formalism to be able to capture the psychological mechanisms at play in human moral judgments. Following our discussion of human moral decision-making in Sect. 2, we hypothesize that humans employ a combination of System 1 (rule-based) and System 2 (consequentialist and contractualist) thinking. This insight leads us to model a two-modality reasoning process in an extension of the CP-net formalism so that we can embed it within a machine, to allow the machine to reason both fast and slow about ethical principles [18, 95]. Our motivation is to extend the semantics of CP-nets in order to model both the snap judgments that do not take into account the particularities of the scenario (System 1 thinking) and the ability to reason about these details when necessary (System 2 thinking) [15].

We call our proposed generalization of the CP-net formalism SEP-nets (Scenarios, Evaluation, and Preferences networks). Informally, it extends CP-nets to handle variables associated with the context. The extended formalism consists of:

-

a set S of scenario variables (which we call SVs in the text) that define a decision-making context over which there is no preference to be stated;

-

a set E of evaluation variables (which we call EVs in the text) that model the evaluation process that takes place in the subjects’ minds while reasoning over the given context;

-

a set P of preference variables (which we call PVs in the text), already modeled in CP-nets, over which the subjects decide their preference.

SEP-nets give us a compact framework to express and reason about knowledge on moral preferences. Notice that when SEP-nets include only preference features, they collapse to standard CP-nets. SEP-nets are built on a generalization of CP-nets proposed by [93] that allows for incomparability in the set Eval(X), which is not captured in the CP-tables of traditional CP-nets. For SEP-nets, we use incomparability to model components in the preference graph whose nodes cannot be compared. For instance, outcomes that depend on specific features of the scenario variables, i.e., the context of the decision, cannot be compared as these are not under the control of the decision maker. This change, together with the addition of the evaluation functions ef for real-valued judgements, means that the SEP-net model is a strict generalization of the classic CP-net model.

Definition 2

An SEP-net consists of

-

A set of features (or variables) \(V=S \cup E \cup P\). Given a variable X, the domain of X is a finite set if \(X \in S\) or \(X \in P\). If instead \(X \in E\), then the domain of X is \(\langle EF, Eval(X) \rangle\), where EF is a set of evaluation functions and Eval(X) is a set of evaluation values. Each variable can be in only one of the three sets, that is, \(S \cap E= \emptyset\), \(S \cap P= \emptyset\), and \(P \cap E= \emptyset\).

-

As in a standard CP-net, each variable X has a set of parent variables Pa(X) on which it depends. However, \(Pa(X) = \emptyset\) if \(X \in S\); \(Pa(X) \subseteq S \cup E\) if \(X \in E\), and \(Pa(X) \subseteq S \cup E \cup P\) if \(X \in P\). This models a three-level acyclic structure, where scenario variables are independent, evaluation variables can depend only on scenario variables or other evaluation variables, and preference variables can depend on any variable.

-

If \(X \in E\), then given an assignment u to Pa(X) and a value \(j \in Eval(X)\) an evaluation function \(ef_{u}(j) \in EF\) identifies a single value in [0, 1]. If \(X \in P\), a standard CP-table states the preferences over the domain of X: for each combination of values in Pa(X), the table provides a total order of Dom(X). No CP-table or evaluation function is associated with scenario variables.
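The structural constraints of Definition 2 can be checked mechanically. The following is a minimal sketch, not the authors' implementation: the `SEPNet` class and its field names are hypothetical, and the example uses only an illustrative subset of the study's variables. It covers only the partition and parent constraints plus acyclicity, and leaves out CP-tables and evaluation functions.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class SEPNet:
    scenario: set      # S: scenario variables
    evaluation: set    # E: evaluation variables
    preference: set    # P: preference variables
    parents: dict = field(default_factory=dict)  # variable -> set of parents

    def validate(self):
        S, E, P = self.scenario, self.evaluation, self.preference
        # S, E and P must be pairwise disjoint.
        if S & E or S & P or E & P:
            raise ValueError("S, E and P must be pairwise disjoint")
        for var in S | E | P:
            pa = set(self.parents.get(var, ()))
            if var in S and pa:
                raise ValueError(f"scenario variable {var!r} must have no parents")
            if var in E and not pa <= S | E:
                raise ValueError(f"evaluation variable {var!r} may depend only on S or E")
            if var in P and not pa <= S | E | P:
                raise ValueError(f"preference variable {var!r} has unknown parents")
        # prepare() raises graphlib.CycleError (a ValueError) if there is a cycle.
        graph = {v: set(self.parents.get(v, ())) for v in S | E | P}
        TopologicalSorter(graph).prepare()
        return True


# Illustrative subset of variables; the dependencies shown are assumptions.
net = SEPNet(
    scenario={"Location", "Reason"},
    evaluation={"Likelihood", "Universality"},
    preference={"Judgment"},
    parents={
        "Likelihood": {"Location", "Reason"},
        "Universality": {"Reason"},
        "Judgment": {"Location", "Likelihood", "Universality"},
    },
)
is_valid = net.validate()
```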

Preference variables may depend directly on either all or a subset of the scenario variables. Moreover, they may depend on certain values of a scenario variable, but not others. This direct dependency between preferences and scenarios models a sort of System 1 approach, where people make a moral judgment (or any other preference decision) by just looking at the situation at hand and without performing any sophisticated reasoning. On the other hand, when preference variables depend on evaluation variables, which in turn depend on scenario variables, we model a sort of System 2 approach, where people consider a scenario and a preference question, and make an estimate of the consequences of the various options before finalizing their decision or judgment.

When there are neither scenario nor evaluation variables, but only preference variables, an SEP-net is the same as a standard CP-net, so its semantics is defined as usual for CP-nets [20]. When instead we have a full SEP-net, its semantics is an order over all the SEP-outcomes, where a SEP-outcome includes assignments not only to the PVs but also to all the SVs and EVs. For the sake of readability, in the following, we denote with lowercase letters an assignment to a variable (i.e., \(X_i=x_i\) or \(X_i=x'_i\)). Given two outcomes \(o_v = [s_1,\ldots ,s_n,e_1,\ldots ,e_m,p_1,\ldots ,p_k]\) and \(o'_v = [s'_1,\ldots ,s'_n,e'_1,\ldots ,e'_m,p'_1,\ldots ,p'_k]\), we have \(o'_v \succ o_v\) if

-

\([s_1,\ldots ,s_n] = [s'_1,\ldots ,s'_n]\). This means that we are considering the same scenario.

-

\([e_1,\ldots ,e_m] = [e'_1,\ldots ,e'_m]\). That is, evaluation variables are set to their estimates as given by their evaluation functions, based on a vector of evaluation values \(v =[v_1,\ldots ,v_m]\), with \(v_i \in Eval(E_i)\).

-

\([p'_1,\ldots ,p'_k] \succ _p [p_1,\ldots ,p_k]\) in the order \(\succ _p\) induced by the CP-net which is obtained by just considering the preference variables (in P) and the dependencies among them.

So, outcomes that differ in the scenario are not connected in the order induced by a SEP-net. Moreover, any outcome in which the evaluation variables are set to values different from those given by their evaluation functions is not connected to any other outcome. If the dependencies among preference variables define an acyclic graph, the result is a set of partial orders, one for each scenario. Each such partial order has the same shape as the induced order of the CP-net obtained from the given SEP-net by setting the scenario variables to any value in their domain and the evaluation variables to their estimated values. Given an SEP-net and its induced set of partial orders, the optimal outcomes are the top elements of the induced partial orders, one for each scenario, and can be efficiently computed by (1) choosing any scenario, (2) setting the evaluation variables to their estimated values, given the chosen scenario, and (3) taking the most preferred values for the preference variables.
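The three-step computation of an optimal SEP-outcome can be sketched as follows. This is a simplified illustration under stated assumptions: evaluation variables are assumed to depend only on the scenario, each is represented by a hypothetical estimator function returning a discrete label, and the variable names and table entries are invented for the example.

```python
def optimal_sep_outcome(scenario, estimators, pref_parents, cp_tables):
    """Compute the top outcome of the partial order for one scenario.

    scenario:     dict of scenario-variable assignments (step 1, chosen by caller)
    estimators:   dict EV name -> function(scenario) returning its estimated value
    pref_parents: dict PV name -> tuple of parent names, listed in dependency order
    cp_tables:    dict PV name -> {tuple of parent values: values, best first}
    """
    outcome = dict(scenario)                   # step 1: fix the scenario
    for ev, estimate in estimators.items():    # step 2: set EVs to their estimates
        outcome[ev] = estimate(scenario)
    for pv, pa in pref_parents.items():        # step 3: most preferred PV values
        context = tuple(outcome[p] for p in pa)
        outcome[pv] = cp_tables[pv][context][0]
    return outcome


# Hypothetical example: one scenario variable, one EV, one PV.
estimators = {"Delay": lambda s: "low" if s["Location"] == "Deli" else "high"}
pref_parents = {"Judgment": ("Location", "Delay")}
cp_tables = {
    "Judgment": {
        ("Deli", "low"): ["ok", "not ok"],
        ("Airport", "high"): ["not ok", "ok"],
    }
}
deli_best = optimal_sep_outcome({"Location": "Deli"}, estimators, pref_parents, cp_tables)
airport_best = optimal_sep_outcome({"Location": "Airport"}, estimators, pref_parents, cp_tables)
```

Running the function once per scenario yields one optimal outcome per partial order, mirroring the fact that outcomes from different scenarios are never compared.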

5 Empirical evaluation

To test our model, we ran an experiment on Amazon MTurk. Informed consent was given by all participants and this study was approved by the Massachusetts Institute of Technology Institutional Review Board (Footnote 4). In total, 407 subjects participated in the study in 2020. Following attention checks, the data from 301 subjects were retained for analysis. No demographic data were collected from participants, but the average demographic information for MTurk participants is as follows [96]: gender, 55% female; age, 20% born after 1990, 60% born after 1980, and 80% born after 1970; median household income, $47K/year.

Subjects were randomly assigned to one of three story contexts, in which they were asked to imagine that they were standing in line at a deli (12 scenarios), for a single-occupancy bathroom (7 scenarios), or at an airport security screening (6 scenarios). (Refer to Table 1 for details.) These three contexts were selected because they represent mundane, everyday situations that call for moral judgment, a kind of “mundane realism” [97]. Judgments in these cases, therefore, are likely to represent our participants’ commonly deployed moral reasoning capacity. We expect patterns of judgments in these cases to generalize to other cases of waiting in line and, ideally, to other cases of rule-breaking.

These contexts present the opportunity for someone to want to cut in line for a diverse range of reasons. We developed cases that manipulated (1) the amount of time by which the person cutting would delay the line, (2) the benefit that the person cutting would accrue by cutting, (3) the benefit that the people waiting would accrue by this person cutting, and (4) the likelihood that this particular scenario would happen at all. We hypothesized that if subjects were using agreement-based or utility-based reasoning to make their judgments, then these manipulations would have systematic impacts on moral acceptability judgments.

After reading each vignette, subjects were asked about the acceptability of cutting in line in that scenario, for example: “Is it OK for that person to ask the cashier for a new spoon without waiting in line?” Subjects could answer “yes” or “no”. Complete descriptions of the scenarios are given in Table 3, and Fig. 3 gives a screenshot of the experimental set-up.

As a way of evaluating the role of System 2 reasoning in producing these moral judgments, we asked subjects to evaluate each scenario on a series of utility-based and agreement-based measures derived from our experimental manipulations. To evaluate the role of System 2 outcome-based thinking, subjects were asked to estimate the utility consequences of the person cutting in line in each scenario, e.g., how long the cutter would delay the line, the benefit to the cutter, and the detriment to the line. To evaluate the role of System 2 agreement-based thinking, we asked subjects what would happen, overall, if this type of line-cutting were always allowed, a proxy for whether everyone would agree to allow this person to cut [36]. Subjects responded on a scale from -50 (a lot worse off) through 0 (not affected) to +50 (a lot better off); likelihood was judged between 0 (not likely) and 100 (very likely). For a full list of these “evaluation questions” see Table 2.

Half the subjects were shown the evaluation questions for all the scenarios followed by the permissibility questions for all the scenarios; the other half received the blocks of questions in the reverse order. This design allowed us to test for, and eliminate if necessary, any effect of answering the evaluation questions before the judgment versus the judgment before the evaluation questions.

Finally, we coded each vignette for whether or not the person attempting to cut had the goal of accessing the main service the line was providing. This allowed us to check whether a slight addendum to the rule about waiting in line could explain our findings. That is, rather than the rule simply being “no cutting in line”, perhaps the rule participants use is “no cutting if you are interested in accessing the main service.” The main service for the deli line was the sale of an item, for the airport scenario it was security screening, and for the bathroom it was the use of the toilet. Note that defining what counts as the “main service” being provided to the line is open to interpretation. We think that variation in this interpretation likely impacts subjects’ judgments about the acceptability of cutting in line. For instance, one might view the main service of the deli line as receiving anything from the cashier, rather than purchasing something. Our characterizations of the main function of the line are rough approximations meant to describe one view that seems commonly held. This coding can be found in Table 3, see column titled “Main Func.”. We also coded each scenario for whether or not the person attempting to cut had already waited.

At the end of the survey, participants were given an attention check as follows: “Finally, we are interested in learning some facts about you to see how our survey respondents answer questions differently from each other. This is an attention check. If you are reading this, please do not answer this question (do not check any of the boxes). Instead, in the box below labeled ‘Other’ please write ‘I am paying attention’. Thanks very much!” This was followed by a list of levels of education and a free-response box labeled “Other.” Participants who checked any box or failed to write “I am paying attention” in the appropriate place were screened out of the study.

Fig. 4 Moral judgments about cutting in line in each of the scenarios. Color indicates if the person cutting in line is requesting the main service or not (blue for Yes, red for No). Error bars are \(95\%\) confidence intervals. As we can see, a simple rule, such as “it is ok to cut if you are not requesting the main service”, is not sufficient to explain the variation.

5.1 Data analysis

We perform an extensive analysis of the collected data to uncover possible relationships among the variables. We will then leverage these insights in testing the applicability of the SEP-net model in the next section.

Figure 4 shows overall moral permissibility data, i.e., average OK or not-OK judgments, for each of the scenarios. The most notable feature of this data is that moral permissibility is graded; cutting the line is endorsed probabilistically by our subjects rather than in an all-or-none fashion. This is the first hint that our subjects are not using a simple rule to figure out when it is permissible to cut in line. A rule like “don’t cut” for instance, would produce unanimously low permissibility for all cases. A slightly more sophisticated version of this rule-based approach would be that subjects use the rule “don’t cut” but realize that the rule is not operative in certain scenarios. This would yield a slightly more complex rule such as “cutting is allowed only when you are not requesting the main service.” This would yield a binary all-or-none pattern of results, with some instances of cutting being permissible (i.e. the red bars in Fig. 4) and some not (i.e. the blue bars). Instead, it seems that a more sophisticated understanding of the computations behind subjects’ moral judgments is required. The aim of our model is to be able to predict our participants’ graded permissibility judgments.

We checked if the order of question presentation (whether the subjects were asked the evaluation questions or moral judgments first) impacted moral judgments. To test this, we ran a Wilcoxon signed-rank test (Footnote 5) against the null hypothesis that changing the order of evaluation and judgment does not influence the judgment value. We ran this for all 25 scenarios described in Table 3, and the full set of p-values is available in the supplemental material. The test did not reject the null hypothesis for any of the 25 scenarios. Hence, under the conditions of this study, asking individuals to think closely about the evaluation questions did not cause them to change their judgments. We conjecture that this happens because people already went through the internal evaluation process when they made a decision. Thus asking for it before or after does not influence the decision they made. We therefore pooled subjects in both order conditions for the remaining analyses.

Figure 5 depicts the distribution of responses by all participants for the evaluation questions. We conjectured that the different evaluation variables might draw out that individuals found various elements of the scenarios more or less important, allowing for the emergence of a better predictor by using multiple variables. For instance, the well-being of the first person could matter most because she has the most to lose if someone cuts in line, i.e., her change in wait time is proportionally greatest. On the other hand, it might emerge that the last person is most important because waiting additional time might actually make it no longer worthwhile to wait at all. Furthermore, Global Welfare or Middle Person Welfare might be the best because they provide an aggregate estimate or average estimate. As it turns out, people do not judge these metrics differently (see Fig. 5). Additionally, there is a fair amount of negative skew for the question Line Cutter Welfare, indicating that some participants felt that even if cutting the line was allowed, they were not receiving much benefit.

Finally, we wanted to see if there were any strong correlations between the various evaluation questions and the moral judgment. Figure 6 shows the cross-correlation between all responses from the subjects. As one might expect, the questions about the individuals in line are highly correlated, further indicating that most subjects respond to these questions with similar evaluations. In addition, as predicted, all evaluation variables are negatively correlated with the moral judgment variable, indicating that as there are more negative impacts of the action, the less likely it is judged permissible to cut in line. Universality has the strongest negative correlation with the moral judgment variable as well, indicating that participants seem to consider the question “what if everyone did this” (the System 2 contractualist method of reasoning) when deciding if it was OK to cut.

5.2 Building an SEP-net from data

Our model blends the notion of preferences with that of Scenario and Evaluation Variables. While individuals cannot set or have preferences over the Scenario Variables, they will possess their own subjective evaluations over the Evaluation Variables given a setting to the Scenario Variables. Given both the Scenario Variables and the Evaluation Variables, the agent can then decide on a preference over the single Preference Variable.

Leveraging the insights of our initial data analysis, we detail how we map the responses into an SEP-net that we will then use for predicting human responses to moral judgments in the next section. To start, a simple, fully connected version of an SEP-net, without accounting for the data, is depicted in Fig. 7. Working from our questionnaires we have the following variables:

1. Scenario Variables (SVs). A set of variables that describe the context, such as location, whether or not the agent had already waited in line, whether or not the agent was using the main function of the line, and the size of the line. In addition, we need a variable to specify the main reason or motivation for cutting the line. We observe that the agent does not have the ability to set values for these variables, nor does the agent have preferences over their values, as these values are set by the environment or context within which the decision is taking place. These variables do not depend on any other variable, i.e., there is no incoming dependency arrow, meaning that they are part of the input to a decision-making AI system.

2. Evaluation Variables (EVs). A set of variables that a person (or an AI system) considers (and estimates the value of) to reason about the given scenario. These are, for example, the well-being of the first in line, the well-being of the cutter, and others as discussed in the experimental details section. In our experiments, we have 8 such variables. These variables have a real-valued range as a domain and the user selects one point in the range, which represents her estimate for that variable’s value. However, no preferences for the values of these variables are required. All the evaluation variables depend on the scenario variables. This follows our conjecture that people need to examine the specific scenario in order to start an evaluation phase in which they identify the evaluation variables and estimate a value for them.

3. Preference Variables (PVs). These are already included in a standard CP-net. In the setting under study, the agent expresses a preference over a single variable, which models whether or not, given the values of both the SVs and the EVs, it is acceptable to cut the line, i.e., the moral judgment. The single preference variable depends on the evaluation variables. This again follows from the conjecture that a person needs to first perform a level of consequentialist or contractualist estimation in order to decide whether the rule stating that a line cannot be cut can be violated.

CP-nets and their variants, e.g., probabilistic CP-nets [23, 99], allow only for preference variables, and there is no option for creating a dependency between preference variables and scenario or context variables. We instead envision a three-layer generalization where, as shown in Fig. 7, the single preference variable depends on the evaluation variables, which in turn depend on the scenario variables. However, a finer-grained analysis may show that there are evaluation variables that do not depend on the scenario variables. For example, the evaluation variable that has to do with the likelihood of the event happening does not have any relationship with whether or not the cutter is concerned with the main function of the line. Hence, we turn to look closer at our data in order to refine the fully connected SEP-net depicted in Fig. 7.

Fig. 8 The SEP-net corresponding to the data collected in our study. SVs influence the way individuals evaluate each scenario and make a decision. For the sake of readability, we group evaluation variables based on whether they depend on a particular SV in order to reduce the number of arrows. Given the SVs and the EVs, the agent can then decide on a preference over the single PV. Note that Line Cutter Welfare does not have any effect on the PV, while the Already Waited variable is completely missing.

In order to build an SEP-net from our collected data, we must first identify dependencies between variables in our collected data. The variables of our SEP-net will be the same as those detailed above and the final result of our analysis can be seen in Fig. 8. The SVs describe all features of a scenario, and therefore refer to Reason and Location. Reason has a domain that includes all 25 reasons for cutting the line as listed in Table 3 and Location has domain \(\{Airport, Bathroom, Deli\}\). The 7 EVs in Fig. 8 correspond to the evaluation questions asked to the subjects. All have the domain \([-50,50]\), except Likelihood, which has the domain [0, 100]. There is only one PV, corresponding to the moral judgment question. It has a binary domain (i.e., 0 for “no cut”, 1 otherwise).

To start, we investigate which SVs influence the way individuals respond to EVs. If we can find a relationship between these variables, then we can say that a subset of EVs depends on a subset of SVs, and use these relationships to build our model. We also check whether SVs influence the PV. To test for dependency, we run a set of Wilcoxon signed-rank tests to see whether the following four null hypotheses can be rejected:

1. NH1: Location does not affect the EVs;

2. NH2: Reason does not affect the EVs;

3. NH3: Location does not affect the PV;

4. NH4: Evaluation variables do not affect the PV.

5.2.1 NH1: Location does not affect all evaluation variables equally

Table 4 gives the p-values of our Wilcoxon signed-rank tests for each pair of locations and each evaluation variable. NH1 is rejected only in a few cases, specifically between Deli and Bathroom when evaluating the effect on the last person, cutter, and likelihood, as well as between Deli and Airport when evaluating the effect on the cutter and likelihood. Hence we can say that NH1 is only partially rejected, and that some Evaluation Variables are affected by the Location, specifically: last person, cutter, and likelihood. We have depicted these results in Fig. 8, where we build an SEP-net from our experimental data. It is interesting to notice that there is a set of variables that seem to be independent of location, e.g., Global Welfare is always important, but likelihood is dependent on location.

5.2.2 NH2: Reason does not affect the evaluation variables

For each pair of scenarios, we ran the Wilcoxon signed-rank test for each of the EVs; we omit the full \(7 \times \binom{25}{2} = 2100\) comparisons for readability (they are available in the appendix). For NH2 we can reject the null hypothesis only in some cases; however, some evaluation variables are significantly affected for each assignment to the Reason variable. Hence, we can say that individuals evaluate the scenario differently based on why someone wants to cut the line, and that the reason can and does influence all evaluation variables, with the exception of the Line Cutter Welfare variable, as shown in Fig. 8.


5.2.3 NH3: Location does not affect the preference variable

We investigated whether or not the location had an effect on the PV. In order to test this, we selected four reasons for each location, since there were different numbers per location, aggregated these, and compared the mean responses to the moral judgment (PV). The complete results are depicted in Table 5. From this, we can reject NH3 for all pairs except Deli and Bathroom. This indicates that in some cases location may be sufficient to evaluate the vignette and make a decision. This is represented by the arc between Location and PV in Fig. 8. It is interesting to note that participants seemed to evaluate the airport as being a significantly different location than the deli or the bathroom.

5.2.4 NH4: Evaluation variables do not affect the preference variable

In order to assess the influence of the EVs over the PV we need to place them all in a comparable range. To do this, we compute the quartiles of each evaluation variable to bucket its values, and then compare the effect of each group on the PV using the Wilcoxon signed-rank test. For each combination of SV and EV, we pair groups in order to perform the test. The full results are available in the appendix. The results suggest that the EVs have some influence on the preference variable, except for the cutter variable, indicating that in general participants were not thinking of the benefit to the cutter, but only of the cost to others. This is depicted in Fig. 8, where we have moved the cutter variable out of the box containing the rest of the EVs, since it is perceived differently.
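The quartile bucketing step can be illustrated with the standard library alone. This is a sketch under assumptions: the cut points are computed with Python's default (exclusive) quantile method, which may differ from the intervals used in the study, and the sample responses are invented.

```python
from statistics import quantiles


def quartile_buckets(values):
    """Assign each value to one of four groups (0-3) delimited by the quartiles."""
    q1, q2, q3 = quantiles(values, n=4)  # three cut points splitting the data in four

    def bucket(v):
        if v <= q1:
            return 0
        if v <= q2:
            return 1
        if v <= q3:
            return 2
        return 3

    return [bucket(v) for v in values], (q1, q2, q3)


# Invented responses on the [-50, 50] scale used for most evaluation questions.
responses = [-50, -30, -10, 0, 10, 20, 40, 50]
buckets, cuts = quartile_buckets(responses)
```

Once every evaluation variable is mapped to the same four groups, variables measured on different scales (such as Likelihood on [0, 100]) can be compared on a common footing.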

Based on these findings, we can construct a partial graph of the dependencies between variables; the resulting SEP-net, corresponding to the data of our study, is shown in Fig. 8. In the next section, we will put our SEP-net to the test as a prediction model for human moral judgments.

5.3 Prediction analysis

The analysis conducted in the previous section can be leveraged to automatically construct an SEP-net, provided suitable data. In this work, we use the SEP-net from data as a proof-of-concept system and test it using a prediction task, comparing its performance with that of several popular machine learning models. The aim of the task is to model a social behaviour in a specific scenario, i.e., given a location and a reason, and predict whether an individual would allow another individual to cut in line given her evaluations for each EV.

All models are trained and evaluated using 5-fold cross-validation: the dataset is split into 5 non-overlapping subsets, one subset is used for testing, and the remaining four are used for training. The process is repeated 5 times, changing the subset used for testing at each iteration. Since this is a binary prediction task, we evaluate models according to their True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN) predictions. We employ the following standard metrics to evaluate the models:

-

Accuracy is the proportion of correct predictions out of all possible predictions, computed as: \(\frac{TP + TN}{TP + TN + FP + FN}\).

-

Precision is the fraction of true positives among all positive predictions, computed as: \(\frac{TP}{TP+FP}\).

-

Recall is the fraction of true positive predictions among all actual positives, computed as: \(\frac{TP}{TP+FN}\).

-

F1 Score is the harmonic mean of precision and recall, computed as: \(\frac{2\,TP}{2\,TP + FP + FN}\).
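The evaluation protocol and the four metrics above can be sketched in plain Python. The function names, the shuffling seed, and the toy labels are assumptions made for illustration; the study's actual splits and predictions are not reproduced here.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k non-overlapping folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall and F1 from 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against empty denominators when a class is never predicted.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0,
    }


splits = list(k_fold_indices(10, k=5))

# Toy labels: 1 = "OK to cut", 0 = "not OK".
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
scores = binary_metrics(y_true, y_pred)
```

With these toy labels there are 3 true positives, 3 true negatives, 1 false positive, and 1 false negative, so all four metrics equal 0.75. The F1 value also matches the harmonic mean \(2PR/(P+R)\) of precision P and recall R, which is algebraically the same as the TP-based formula.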

Following the relationships discovered in the data analysis reported in Sect. 5.1, we modeled two SEP-nets. The two SEP-nets have the same structure, as reported in Fig. 8, and differ in the set of evaluation functions and evaluation values. In the first SEP-net, which we refer to as SEP-SVM, each evaluation function is modeled by a Support Vector Machine (SVM) [30]. SVMs are among the most robust prediction methods for binary classification and are commonly used for such tasks. In our model, given an evaluation variable, a location, and a reason, the corresponding evaluation function is trained on the subset of individuals’ responses in the training set for that variable to predict the preference value. In SEP-SVM, the set of evaluation values coincides with the set of original values in the MTurk survey (i.e., values are in \([-50, 50]\) for all variables, except for Likelihood, whose values are in [0, 100]).

In the other SEP-net, which we call SEP-Table, evaluation functions are modeled using the empirical distribution of the preference values in the training data, grouped by quartiles of evaluation values. This means that, given a variable, a location, and a reason, the evaluation values in the training set are split into 4 groups; Table 6 gives the intervals for each evaluation variable. For each group, the function returns the preference value that is preferred by the highest number of subjects in the training set. In both SEP-nets, the CP-table of the preference variable is built by adopting a simple approach: each CP-statement reports the value that appears most often in the training set, given the assignment to the parent variables in the SEP-net. Notice that the assignment of the parents is given by the output of the selected evaluation functions, except “line cutter welfare”, and the value of the location variable. At training time we compute the number of individuals grouped by location and preference value. In building the CP-table, we use this value to break possible ties in the assignments of evaluation variables, i.e., in the case of ties, we choose the preference value that is preferred by the highest number of individuals for a given location. This models a simple majority rule on the evaluation variables with a tie-break rule.
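One way to read the SEP-Table construction is as a two-level majority vote. The sketch below is a simplified interpretation, not the authors' code: the row layout, the function names `fit_sep_table` and `predict`, and the toy training rows are all hypothetical, and evaluation values are assumed to be already mapped to quartile groups 0 to 3.

```python
from collections import Counter, defaultdict


def fit_sep_table(rows, ev_names):
    """Count judgments per (EV, location, reason, quartile group) and per location."""
    votes = defaultdict(Counter)        # evaluation-function tables
    by_location = defaultdict(Counter)  # used only to break ties
    for row in rows:
        by_location[row["location"]][row["judgment"]] += 1
        for ev in ev_names:
            key = (ev, row["location"], row["reason"], row[ev])
            votes[key][row["judgment"]] += 1
    return votes, by_location


def predict(model, row, ev_names):
    """Majority rule over the evaluation functions, with a location tie-break."""
    votes, by_location = model
    ballots = Counter()
    for ev in ev_names:
        counts = votes.get((ev, row["location"], row["reason"], row[ev]))
        if counts:
            # Each evaluation function outputs the most frequent judgment
            # in its group (within-group ties resolve arbitrarily here).
            ballots[counts.most_common(1)[0][0]] += 1
    if ballots[1] != ballots[0]:
        return 1 if ballots[1] > ballots[0] else 0
    loc = by_location[row["location"]]
    return 1 if loc[1] > loc[0] else 0


EVS = ["Likelihood", "Universality"]


def make_row(likelihood, universality, judgment=None):
    return {"location": "Deli", "reason": "spoon", "Likelihood": likelihood,
            "Universality": universality, "judgment": judgment}


# Invented training rows: (Likelihood group, Universality group, judgment).
train = [make_row(*r) for r in
         [(0, 0, 1), (0, 3, 0), (3, 3, 0), (3, 0, 1), (0, 0, 1), (3, 3, 0), (0, 0, 1)]]
model = fit_sep_table(train, EVS)
agree = predict(model, make_row(0, 0), EVS)
disagree = predict(model, make_row(3, 3), EVS)
tie = predict(model, make_row(0, 3), EVS)
```

In the last query the two evaluation functions disagree, so the prediction falls back to the judgment given most often at that location in the training rows.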

Given a location, a reason, and a set of evaluation values of an individual, a SEP-net returns a prediction of whether the individual would allow someone to cut in line, consistent with the preferences in our collected data. We put SEP-nets to the test against a set of baselines leveraging popular machine learning models typically used for binary classification and prediction tasks. As baselines, we trained four models: XGBoost [100, 101], an ensemble of 100 neural networks using VORACE [102], a Random Forest [103], and a single SVM. We did not tune any hyperparameters but rather used their default values. The experiments were run on a MacBook Pro (13-inch, 2017), CPU 3.5 GHz Intel Core i7, RAM 8 GB 2133 MHz LPDDR3. The simulations were developed in Python 3.7. We used RandomForestClassifier and SVM from Scikit-learn, and XGBClassifier from XGBoost.

The average performance and standard deviation across all five folds of our cross-validation, along with the average training time, are reported in Table 7. We observe that the two SEP-nets perform very well overall, and slightly better than XGBoost, which is considered state-of-the-art in many domains. In the case of SEP-Table, the training time is much smaller than the others since the model is mostly fit from the empirical data. It is interesting to notice that the single SVM performs much worse across all metrics than SEP-SVM. This result suggests that the specialization adopted inside the proposed model is beneficial for modeling highly contextual decisions. The proposed SEP-nets have high accuracy with low standard deviation, showing that they are able to fit our data very well. From this model, one may conjecture that the preference value is the output of a deliberation process that chooses a moral judgment based on the majority of simpler decisions.

6 Discussion

The analysis reported in Sect. 5.1 and the results reported in Sect. 5.3 achieve the two central goals of this paper. First, they provide evidence for our hypothesis about moral psychology, namely, that System 2 (outcome-based and agreement-based) reasoning is at play for our participants when deciding when to override rules. Second, they demonstrate that our novel formalism (SEP-nets) describes this process in a way that could be useful for enabling AI systems to understand the bounds of constraints.

There is a consensus in the moral psychology literature that rules matter for moral judgment [26, 27, 87, 104, 105]. However, what is less clear is how we readily figure out when rules can be overridden. In this paper, we proposed that a System 1 mechanism is often at play when we follow rules (as others have suggested before us, see especially [26]) and a (partially) contractualist System 2 mechanism is at play to figure out when to override rules (our original contribution). The data from our study support this hypothesis. If our participants were using an entirely rule-based approach to make moral judgments about the scenarios we gave them, then they would have responded by (1) saying it was never permissible to cut in line (a strict rule-based approach), (2) saying it was only permissible to cut in line if you weren’t requesting the main service (a slightly more nuanced version of the rule about standing in line), or (3) being responsive only to the location variable and not the evaluation variables. It is clear that participants were sometimes rule-based. This was revealed by the fact that the location variable sometimes has a direct influence on the preference variable (see Fig. 8). However, subjects also clearly used System 2 reasoning, which was apparent from the fact that the evaluation variables (including both utility-based and agreement-based concerns) impacted the preference variable.

Constraints on AI systems can be useful to ensure that AI systems are abiding by the social and moral rules that guide the human world. However, constraints have their drawbacks. Humans navigate constraints quite flexibly, understanding when a seemingly fixed constraint should actually be overridden. SEP-nets present a formalism that is inspired by the way that human participants sometimes decide to override socio-moral rules. To generalize this formalism to other socio-moral rules, evaluation variables for those rules would have to be determined. In the simulations, we used different evaluation functions without tuning their hyper-parameters, but many others exist that can be adopted. Moreover, the whole process could be automated on the basis of collected data in order to provide a SEP-net that better describes a social behaviour.

Moreover, our model goes even further and provides a demonstration of how the current methods of implementing morality in AI systems would need to be modified to capture the computational mechanisms we describe.

7 Conclusions and future work

We have taken a first step to model and understand the question of when humans think it is morally acceptable to break rules. We showed that existing structures in the preference reasoning literature are insufficient for modeling this data, and we defined a generalization of CP-nets, called SEP-nets, which allow the linkage of preferences with scenarios and context evaluation. We constructed and studied a suite of hypothetical scenarios relating to this question, and collected human judgments about these scenarios.

Through our empirical study, we showed that humans seem to employ a complex set of preferences when determining if it is morally acceptable to break a previously established rule. Subjects seem to take into account the particular elements of a given scenario, including location and reasons to break the rule. This provides some evidence that a System 2 process is operative in rule-breaking decisions, implying that moral judgments were influenced by calculations of the impact of rule violations on the well-being of others (consequentialist reasoning) as well as notions of what everyone would agree to (contractualist reasoning). At other times, System 1 reasoning seemed to be operative: outcomes and agreement had no relationship to moral permissibility, and rules were instead considered inviolable. Together, this pattern of data begins to suggest that moral rigidity and moral flexibility may be driven by fast and slow thinking, respectively.

There have been various attempts by philosophers to unite the three major threads of moral philosophy (outcomes, rules, and agreement) into a single unified view [86, 106]. Parfit called his unified view a “Triple Theory”. To date, no psychological theory has attempted to explain how rules, outcomes, and agreement are all integrated in the moral mind. Our work is one step on the way to creating a Psychological Triple Theory.

We do not claim that our proposal is the sole solution to the problem of how AI systems should reason about exceptions to constraints. We simply aim to describe one potential mechanism that humans use to reason about exceptions to one kind of rule (socio-moral rules) that could help AI systems do the same. Of course, we hope that the mechanism we describe here generalizes beyond the specific scenarios we use and perhaps even past the specific rule categories we use (e.g. to organizational norms, religious norms, social conventions, and so on). However, more extensive experimental and computational work will be needed to determine how general this process is. Indeed, the SEP-net formalism could take into account strict constraints in the form of scenario variables that engage in certain contexts, i.e., variables that are not learned from data but rather set by experts or situational constraints. However, for sensitive applications such as health care, these would require careful understanding and modeling. Finally, we do not explicitly encode high-level moral values such as altruism or fairness in our model. While we could add a layer to the SEP-net, defining and quantifying these variables is beyond the scope of this paper and an interesting direction for future work. Among several possible extensions, we consider the following the most interesting.

7.1 Comparing preferences, measuring value deviation, and uncertainty

We defined generalized CP-nets (called SEP-nets) in a way that is consistent with classical CP-nets and probabilistic CP-nets. The aim is to understand how to use a (generalized) preference structure to effectively learn and reason with morality-driven preferences, and to embed them into an AI system. Another fruitful direction for future work is to attempt to adapt our results to other preference reasoning formalisms, e.g., soft constraints or weighted logical representations [52]. Reasoning about preferences and decision-making also requires being able to compare preferences. For instance, in a multi-agent system, it is important to understand whether an agent is deviating from a societal priority or norm. To do that, recent studies proposed metric spaces over preferences [69], which can be used to implement value alignment procedures. Such metric spaces may be extended to be applicable to SEP-nets. They would allow a system designer to intervene in cases where individuals (or artificial agents) behave differently than expected or are operating outside the norms or rules of a society. Finally, in our work so far we use deterministic formalisms for SEP-nets. However, moral situations are often complicated by uncertainty in the scenario or in the decisions made. A key future direction will be generalizing our work, e.g., through the use of probabilistic CP-nets (PCP-nets) [23] or other graphical utility models such as generalized random utility models [107], to explicitly model this uncertainty.
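To make the idea of measuring deviation from a norm concrete, the following minimal sketch compares binary acceptability judgments over a shared set of scenarios using a normalized Hamming distance and flags agents whose distance from a reference profile exceeds a threshold. This is a deliberately simple stand-in and not the metric over CP-nets proposed in [69]; the function names, scenario labels, agent names, and threshold are all hypothetical.

```python
def judgment_distance(a, b):
    """Normalized Hamming distance between two judgment profiles.

    Each profile maps a scenario label to a binary judgment
    (1 = acceptable to break the rule, 0 = not acceptable).
    Both profiles must cover the same scenarios.
    """
    if a.keys() != b.keys():
        raise ValueError("profiles must cover the same scenarios")
    if not a:
        raise ValueError("profiles must not be empty")
    return sum(a[s] != b[s] for s in a) / len(a)


def deviating_agents(norm, agents, threshold):
    """Return the sorted names of agents farther than `threshold` from `norm`."""
    return sorted(
        name
        for name, profile in agents.items()
        if judgment_distance(norm, profile) > threshold
    )


# Hypothetical societal norm and agent profiles over four scenarios.
norm = {"s1": 0, "s2": 0, "s3": 1, "s4": 1}
agents = {
    "agent_1": {"s1": 0, "s2": 1, "s3": 1, "s4": 1},  # differs on 1 of 4
    "agent_2": {"s1": 1, "s2": 1, "s3": 0, "s4": 1},  # differs on 3 of 4
}
print(deviating_agents(norm, agents, threshold=0.5))
```

A distance defined directly on SEP-net structures would compare the conditional preference statements rather than only the final judgments, which this sketch does not attempt.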

7.2 Limitations of our approach

While our work marks a significant step towards modeling and understanding the conditions under which humans find it morally acceptable to break the rules, it is important to acknowledge limitations in both our experiment and our model. In the scenario proposed in this work, the SEP-net focuses on a single target variable. However, real-world applications may require the consideration of multiple variables in the final decision-making stage. In such cases, the model would need to be equipped with additional preference variables to capture the complexity of these scenarios. This expansion could significantly increase the model’s complexity, particularly with adding more variables per layer. Managing this complexity and ensuring the model remains computationally feasible is a critical challenge that needs further exploration.

Moreover, while our SEP-net framework provides a structured method to link preferences with scenarios and context evaluations, it does not fully address the complexity and uncertainty inherent in moral reasoning. The SEP-net formalism we define is extensible in that we can add additional layers, for example to represent high-level moral values, without significantly affecting the semantics of the current formalism. However, given that identifying and quantifying these values, especially in the context of moral decision making, is an active area of research in moral theory, significant work is needed to model them.

Finally, in some settings we may wish to incorporate expert knowledge or constraints into the model. While the SEP-net framework as defined should be able to handle these issues, the semantics are not straightforward. Likewise, the current SEP-net framework could be extended to incorporate probabilistic elements, handle multiple target variables, and manage the increased complexity from additional variables to enhance the robustness and applicability of our model.

7.3 Prescriptive plans based on moral preferences

The AI research community has been active not only in understanding how to make single decisions based on preferences but also in creating plans, consisting of sequences of actions, that respect or follow certain preferences [108]. This work can be exploited to extend the use of the moral preferences discussed in this paper into more prescriptive AI techniques such as automated planning [109]. Although prior efforts from the planning perspective all investigate the generation of plans that take into account pre-specified utilitarian preferences, the question of where those utilities and preferences come from has not yet been adequately addressed. We are currently investigating methods that use the data collected in this work to automatically generate preferences in the notation used by planning formalisms [110]. The generation of such preferences will in turn enable us to generate prescriptive plans for agents or systems that conform to the moral standards of that agent or system. Specifically, we will transform the problem from a classification-based setting into a generative model, and then present plan (action) alternatives that agents can choose from [111]. To situate this in the context of the current question of study: this extension would enable us to move from determining whether it was acceptable to break a rule, to generating ways to do so that are most in accordance with some preference and cost function that takes moral obligations into account.

Data availability

All the data collected are available on https://github.com/aloreggia/SEP-net/data.

Notes

We depart from the typical work in this area which has often focused on understanding judgments in high-stakes, uncommon scenarios, e.g., a runaway trolley headed towards people [28]. Instead, we probe people’s moral intuitions about commonplace scenarios to ensure that we are modeling the processes people are using in their day-to-day moral decisions.

Note that despite using human preferences as a core concept, preference-based models are not limited to capturing consequentialist or utilitarian models of moral cognition. As we illustrate below (§2.4 and 4), our formalism captures utilitarian as well as non-utilitarian elements of morality, such as agreement-based factors.

In this paper, we sometimes refer to rules that guide these cases as “socio-moral” and the judgments people render of them as “socio-moral judgments.” This is because there is no widely agreed-upon definition of what counts as a moral rule as opposed to a socially conventional one [89]. In fact, recent research shows that people from different cultures have different understandings of what counts as a moral issue to begin with [90]. Moreover, there is no consensus as to whether there are separate psychological processes for judging moral rules versus social ones [89]. For our purposes, it is not critical whether the rule about standing in line is moral per se but rather that it exhibits certain interesting characteristics.

The Committee on the Use of Humans as Experimental Subjects. The review process ensures compliance with university-mandated ethical guidelines for research conducted with human subjects. See couhes.mit.edu for details of the review process.

The Wilcoxon signed-rank test [98] is a non-parametric test for comparing paired data samples from the evaluations of individuals. A non-parametric test means we do not make assumptions about an underlying distribution of the data, e.g., we do not assume our data follow a normal distribution. The Wilcoxon signed-rank test assumes that there is information in the magnitudes and signs of the differences between paired observations. It is considered the non-parametric equivalent of the paired Student’s t-test. In particular, the signed-rank test can be used as an alternative to the t-test when the population data do not follow a normal distribution. It is used to test the null hypothesis that two related paired samples come from the same distribution.
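As a rough illustration of how the magnitudes and signs of the paired differences enter the test, the following minimal sketch computes the signed-rank sums W+ and W- in plain Python. Dropping zero differences and assigning average ranks to ties are common conventions assumed here; the function name and the ratings are hypothetical, and the sketch does not compute a p-value.

```python
def signed_rank_sums(x, y):
    """Return (W_plus, W_minus) for paired samples x and y.

    Zero differences are dropped and tied absolute differences
    receive the average of the ranks they span.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Find the end of the run of equal absolute differences.
        while j < len(ordered) and abs(ordered[j]) == abs(ordered[i]):
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of ranks i+1, ..., j
        for k in range(i, j):
            ranks[k] = avg_rank
        i = j
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical paired ratings from five participants under two conditions.
condition_a = [5, 3, 4, 2, 4]
condition_b = [3, 4, 4, 5, 1]
print(signed_rank_sums(condition_a, condition_b))
```

The test statistic is usually taken to be the smaller of the two sums, which is then compared against its null distribution; in practice a statistics library would handle that step.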

References

Russell, S., Hauert, S., Altman, R., & Veloso, M. (2015). Ethics of artificial intelligence. Nature, 521(7553), 415–416.

O’Neil, C. (2017). Weapons of math destruction: How big data increases inequality and threatens democracy. Crown.

Kearns, M., & Roth, A. (2019). The ethical algorithm: The science of socially aware algorithm design. Oxford University Press.

Rossi, F., & Mattei, N. (2019). Building ethically bounded AI. In: Proc. of the 33rd AAAI (Blue Sky Track).

Amodei, D., Olah, C., Steinhardt, J., Christiano, P.F., Schulman, J., & Mané, D. (2016). Concrete problems in AI safety. arXiv:1606.06565

Hart, H. (1958). Positivism and the separation of law and morals. Harvard Law Review, 71, 593–607.

Clark, J., & Amodei, D. (2016). Faulty reward functions in the wild. Retrieved 1 Aug 2023 from https://blog.openai.com/faulty-reward-functions