Abstract

We study the rational preferences of agents participating in a mechanism whose outcome is a ranking (i.e., a weak order) among participants. We propose a set of self-interest axioms corresponding to different ways for participants to compare rankings. These axioms vary from minimal conditions that most participants can be expected to agree on, to more demanding requirements that apply to specific scenarios. Then, we analyze the theories that can be obtained by combining the previous axioms and characterize their mutual relationships, revealing a rich hierarchical structure. After this broad investigation on preferences over rankings, we consider the case where the mechanism can distribute a fixed monetary reward to the participants in a fair way (that is, depending only on the anonymized output ranking). We show that such mechanisms can induce specific classes of preferences by suitably choosing the assigned rewards, even in the absence of tie breaking.

1 Introduction

We consider a group of agents participating in a contest, whose outcome is a ranking among them. Concrete scenarios of this form include tournaments, recruiting competitions, and some types of auctions and elections [1,2,3,4,5,6]. In these scenarios, agents exhibit certain preferences on the output ranking: teams participating in a tournament aim at prevailing over the opponents, whereas in multi-winner elections a candidate may settle for being in the top-k list. If the output ranking is a linear order, there is virtually no question about the rational preference of each agent: they will prefer (at least weakly) to stand as high as possible in the ranking (see Footnote 1). However, when the output ranking contains ties [7,8,9], the situation is not so clear-cut. Depending on externalities, agents may be sensitive to ties and prefer to share their rank with as few peers as possible, or instead they could prefer to be as close as possible to the top level, regardless of ties, or they could hold one of many other plausible preferences. That is why tie-breaking rules are frequently used as a workaround to turn a ranking into a linear order. However, when no domain-specific criterion is applicable, tie-breaking might be based either on randomization, as in [10], or on a fixed order between competing entities, as in [11]. When applied to agents, these generic tie-breaking techniques may appear arbitrary or, even worse, prejudicial.

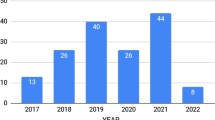

The specific order in which tie-breaking rules are applied can also raise questions. The group stage of the UEFA Champions League, for instance, employs 11 tie-breaking rules when two teams finish with the same score. In order of importance, these criteria encompass factors like head-to-head match results, goal difference in all group matches, and disciplinary points accrued through red/yellow cards. Notably, FIFA regulations introduce a variation by altering the precedence order of the first two criteria mentioned above. This alteration has been shown to potentially reduce the occurrences of both uncompetitive matches [12] and heteronomous relative rankings [13] (see Footnote 2). As mentioned in [14], these undesirable effects manifested in a case of suspected collusion during the 2017 UEFA European Under-21 Championship, prompting a formal complaint from the Slovakian Prime Minister to the UEFA General Secretary. Finally, ties can be inherent to some domains, e.g. when rankings reflect social categories like honorary titles, corporate hierarchies, or donor status [15].

In this paper, we assume that no tie-breaking rule is used and take an axiomatic approach to study the landscape of preference relations of agents involved in a mechanism producing a weak ordering among participants. We assume agents’ rationality, self-interest, and a commitment to align with the direction of rankings. As customary in the axiomatic formulation of Decision Theory, we leave the mechanism, as well as the agents’ declarations, completely unspecified [16,17,18]. Instead, we focus entirely on the aftermath of the competition, and analyze the possible preferences that agents can hold over the competition outcomes. This perspective is also justified by the fact that we embrace a large class of ranking procedures, which include voting systems, examination boards, pairwise comparisons (e.g., matches in a sport competition), that generate rankings according to a highly heterogeneous toolbox of grading/scoring rules [19,20,21]. Furthermore, in some cases the candidates themselves may only have a vague idea of the underlying mechanism. Nonetheless, they can be perfectly able to judge and compare counterfactual outcomes independently from how they have been produced.

By selecting different self-interest axioms, we obtain a rich taxonomy of preference types, or theories, which exhibit different degrees of refinement, in turn related to the agents’ competitiveness. As an extreme case, an agent that is entirely indifferent to its own position within the output ranking will be unlikely to put effort in the competition. Conversely, an agent that subscribes to more self-interest axioms will exhibit a fine-grained preference among outcomes, and therefore will actively participate in the competition to try and exert control over its outcome. Such considerations are of primary interest for the competition designer, who will generally try to encourage active participation and competitiveness among the participants [22].

For example, consider a scenario where the top k agents are awarded equal prizes, while the remaining participants receive no rewards. In this case, self-interested participants will exhibit a preference (later denoted by \(\preceq ^{\top }_k\)) which adheres to only the two weakest axioms that we propose. Consider, instead, an alternative situation where all agents are granted prizes that decrease in value as their ranking descends. Under these conditions, the resulting preference (later denoted by \(\preceq ^{\top }\)) is more discerning and indeed belongs to a stronger theory within our taxonomy.

Further, under specific circumstances, the designer can proactively enhance competitiveness through the strategic introduction of suitable economic incentives. Along these lines, we consider the possibility of assigning quantitative rewards to the participating agents according to the output ranking. Under mild fairness conditions, quantitative rewards simplify the preference landscape, in the sense that some theories that were distinct in the aforementioned taxonomy collapse into a single one. Finally, by analogy to the classical representation theorem in Decision Theory, we identify a family of reward strategies, called strictly level averaged, which characterizes the strongest, i.e. the most competitive, of our preference theories. This characterization carries significance on both theoretical and practical fronts. On a theoretical level, it underscores the suitability of the set of proposed axioms. From a practical standpoint, it demonstrates that it is feasible to enhance agents’ competitiveness by offering appropriate rewards, even without the need to break ties.

Related Work

To the best of our knowledge, the specific problem addressed in this paper was not previously considered in the literature. However, it touches several subfields within game theory and social choice theory, including contest theory, tournament design, and preference aggregation.

As far as tournament design is concerned, the classical problem of interest is to identify the best way to arrange a sequence of pairwise comparisons and extract a global ranking from their outcomes. The primary objective is to obtain a ranking that is faithful to the agents’ true strength. In turn, such faithfulness may be measured in various ways: the likelihood that the strongest agent wins the competition [23], or the expected distance between the outcome and the true strength-based ranking [24]. Secondary design objectives, especially when such designs are applied to actual sports, include fairness, maximizing attendance, or minimizing rest mismatches [25]. Whenever tournament design concerns itself with the strategic behavior of the participating agents, it needs to assume some preferences over the output rankings, as in [1]. The current paper attempts a thorough investigation of such preferences, encompassing various facets of self-interest.

Similarly to preference aggregation, and to social welfare rules in particular, we assume that agents hold a (hyper-)preference over the outcomes of a choice mechanism. However, in our study, these outcomes consist of rankings among the participating agents themselves. Consequently, we make a justified assumption that agents do not harbor arbitrary preferences regarding these outcomes. Instead, their preferences tend to align with the direction of the output ranking, with each agent naturally favoring their own top placement. The first part of this paper (up to Section 4) is precisely an exploration of the different ways in which an agent can be expected to compare two rankings in this context. Importantly, our analysis transcends the specific contest that leads to the ranking’s creation. In contrast, preference aggregation is concerned with characterizing effective ranking-producing mechanisms, using evaluation criteria that range from the classic Arrovian axioms [26] to more recent proposals such as hyper-stability [27] or popularity [28].

Our investigation of quantitative rewards (Section 5), instead, is connected to the contest theory and prize allocation literature. Contest theory examines the impact of rank-order reward allocations on individual efforts [29]. For example, in a seminal paper, Lazear and Rosen [30] identify the reward scheme that maximizes the participants’ utility at equilibrium. Subsequent investigations have considered different optimization criteria, such as maximizing the total effort [31] or maximizing participation [32]. Although our approach and contest theory have some common objectives, they differ in some respects. First of all, contest theory typically assumes linear rankings, whereas ties are an essential feature of our analysis. Secondly, contest theory is grounded on an explicit representation of abilities, efforts, and the associated cost functions (which are determined a priori or come from a known distribution) and it assumes that agents, who know how the mechanism transposes the individual efforts into an output ranking, act strategically in a Nash equilibrium. Although these assumptions fit some concrete scenarios such as auctions, competition-based crowdsourcing, or labor compensations, they appear somewhat unrealistic in many other cases. For example, in recruiting competitions or sport tournaments, how can we specifically quantify the agents’ efforts or abilities, and how can we predict the final ranking as a function of effort? In this respect, our approach provides some qualitative results to promote active participation in the competition, whenever a detailed quantitative description of the contest and its participants is not available. Closer to our approach is a recent paper [33], which proceeds axiomatically from a set of desirable properties for reward functions, and characterizes families of rewards that satisfy certain sets of axioms. As customary, the reward functions considered in that paper apply to linear rankings only.

Structure of the Paper

The paper is structured as follows. Section 2 provides preliminary notions about rankings and introduces various preferences among them, drawing inspiration from real-world scenarios. In Section 3, we introduce the foundational axioms that underlie our theories and explore their relative implications. Section 4 investigates the taxonomy resulting from all possible theories that can be derived from the axioms introduced in the previous section. Section 5 explores how a mechanism designer can enhance competitiveness by dividing a jackpot among the participants according to the resulting ranking. Specifically, we define level-averaged rewards and demonstrate their alignment with the strongest theory in our taxonomy. The paper concludes with a discussion of our findings.

2 Preliminaries

2.1 Classes of Orders

Recall the following definitions: a pre-order is a reflexive and transitive binary relation, a weak order is a total pre-order, and a linear order is an antisymmetric weak order.

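Given a pre-order \(\sqsubseteq \), we denote its asymmetric part by \(\sqsubset \), its symmetric part by \(\equiv \), and its symmetric complement (i.e., incomparability) by \(\not \bowtie \). Formally, we have:

\[ a \sqsubset b \iff a \sqsubseteq b \text{ and } b \not\sqsubseteq a, \qquad a \equiv b \iff a \sqsubseteq b \text{ and } b \sqsubseteq a, \qquad a \not \bowtie b \iff a \not\sqsubseteq b \text{ and } b \not\sqsubseteq a. \]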

We say that a pre-order \(\sqsubseteq _2\) perfects another pre-order \(\sqsubseteq _1\) if \(a \sqsubset _1 b\) implies \(a \sqsubset _2 b\), and \(a \not \bowtie _2 b\) implies \(a \not \bowtie _1 b\). Intuitively, \(\sqsubseteq _2\) exhibits at least the same strong preferences as \(\sqsubseteq _1\), but it may distinguish items that are equivalent for \(\sqsubseteq _1\), as well as distinguish or equate items that are incomparable for \(\sqsubseteq _1\). Perfectioning is itself a pre-order, whose top elements are the linear orders. The bottom element of perfectioning is the identity relation (see Footnote 3) in the general space of pre-orders, whereas if we consider weak orders only, the bottom element is the degenerate weak order that equates all items, denoted by \(\preceq ^{\equiv }\). To the best of our knowledge, the perfectioning relation has been overlooked in the literature, and no standard name has been established for it. Figure 1 depicts four pre-orders over three items, connected in a chain by the perfectioning relation.

Let us contrast perfectioning with the containment between pre-orders, when represented as sets of pairs. First, note that \(\sqsubseteq _2\) contains \(\sqsubseteq _1\) (i.e., \(\sqsubseteq _2 \,\supseteq \, \sqsubseteq _1\)) iff \(a \equiv _1 b\) implies \(a \equiv _2 b\), and \(a \not \bowtie _2 b\) implies \(a \not \bowtie _1 b\). Containment is also a pre-order and it is incomparable with the perfectioning relation. In particular, containment has a single top element that is the degenerate weak order \(\preceq ^{\equiv }\). The bottom element is, as for perfectioning, the identity relation in the general space of pre-orders, whereas any linear order is a bottom element when we restrict consideration to weak orders. In Fig. 1, dashed arrows represent the containment relation, and the four orders in the figure are connected in a containment chain that starts from the identity relation and ends in the weak order \(\preceq ^{\equiv }\).
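The contrast between perfectioning and containment can be checked on small examples. The following is a minimal sketch, assuming a pre-order is encoded as a Python set of pairs `(a, b)` meaning \(a \sqsubseteq b\); the function names `perfects` and `contains` and the three sample relations are illustrative choices, not notation from the paper.

```python
def strict(R, a, b):
    """a is strictly below b in the pre-order R."""
    return (a, b) in R and (b, a) not in R

def incomparable(R, a, b):
    """a and b are unrelated in R (symmetric complement)."""
    return (a, b) not in R and (b, a) not in R

def perfects(R2, R1, items):
    """R2 keeps every strict preference of R1 and adds no incomparability."""
    return all(
        (not strict(R1, a, b) or strict(R2, a, b))
        and (not incomparable(R2, a, b) or incomparable(R1, a, b))
        for a in items for b in items
    )

def contains(R2, R1):
    """Containment of pre-orders seen as sets of pairs."""
    return R1 <= R2

items = ["x", "y", "z"]
identity = {(i, i) for i in items}                    # relates each item only to itself
degenerate = {(i, j) for i in items for j in items}   # equates all items
linear = identity | {("x", "y"), ("y", "z"), ("x", "z")}

print(perfects(linear, degenerate, items), contains(linear, degenerate))
print(perfects(degenerate, linear, items), contains(degenerate, linear))
```

On this encoding, the linear order perfects the degenerate weak order without containing it, while the degenerate weak order contains the linear order without perfecting it, which illustrates that the two relations are incomparable.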

2.2 Rankings

We assume that an undescribed process, such as a competition or an election, produces a ranking on a finite set of agents A. A ranking is a function \({ rk}: A \rightarrow \mathbb {N} \) with no “holes”, meaning that the range of \({ rk} \) is an interval \(\{ 1,\ldots , K \}\) with \(K\le \vert A\vert \). A ranking represents a descending order of credit among agents, where ranks 1 and K correspond to the top and the bottom of the ranking, respectively.

By \(\mathcal {R}(A)\) we denote the set of all rankings over A. Note that \(\mathcal {R}(A)\) is isomorphic to the set of weak orders over A: a ranking \({ rk} \) naturally induces a weak order \(\sqsubseteq _{ rk} \) such that \(a\sqsubseteq _{ rk} b\) iff \({ rk} (a)\ge { rk} (b)\). Conversely, a weak order \(\sqsubseteq \) over A corresponds to a ranking \({ rk} _\sqsubseteq \) that is recursively defined as follows:

If \(K= \vert A\vert \), then \({ rk} \) corresponds to a linear order over agents. Conversely, \(K< \vert A\vert \) implies that \({ rk} \) contains some ties of the type \({ rk} (a)={ rk} (b)\), for two distinct agents a and b.
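The correspondence between rankings and weak orders can be made concrete as follows. This is a minimal sketch, assuming a ranking is a Python dict from agents to positive integers and a weak order is a set of pairs `(a, b)` meaning \(a \sqsubseteq b\). The level-peeling loop in `weak_order_to_ranking` is one possible way to compute the ranking and is not claimed to be the paper's recursive definition; all function names are hypothetical.

```python
def is_ranking(rk):
    """A ranking has no holes: its range is exactly {1, ..., K}."""
    levels = set(rk.values())
    return levels == set(range(1, len(levels) + 1))

def has_ties(rk):
    """K < |A| means at least two agents share a level."""
    return len(set(rk.values())) < len(rk)

def ranking_to_weak_order(rk):
    """a is weakly below b iff rk(a) >= rk(b), since rank 1 is the top."""
    return {(a, b) for a in rk for b in rk if rk[a] >= rk[b]}

def weak_order_to_ranking(leq, agents):
    """Peel off the maximal agents level by level (assumes leq is a weak order)."""
    remaining, rk, level = set(agents), {}, 1
    while remaining:
        top = {a for a in remaining if all((b, a) in leq for b in remaining)}
        if not top:
            raise ValueError("input is not a weak order")
        for a in top:
            rk[a] = level
        remaining -= top
        level += 1
    return rk

rk = {"a": 1, "b": 2, "c": 2}
round_trip = weak_order_to_ranking(ranking_to_weak_order(rk), rk.keys())
print(round_trip == rk, has_ties(rk))
```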

A preference relation for an agent \(a\in A\) (in short, an a-preference) is a weak order \(\preceq _a\) on \(\mathcal {R}(A)\), and we write \({ rk} _1\preceq _a { rk} _2\) to mean that agent a (weakly) prefers the ranking \({ rk} _2\) to \({ rk} _1\) (see Footnote 4). We aim at analyzing, first from an axiomatic point of view and then from a representational one, all plausible preferences of a self-interested agent who aligns with the ranking’s direction. In most cases, the designer of the contest that produces the rankings wishes for agents to be competitive with each other, rather than cooperative or indifferent [25]. Thus, it is useful to seek out a measure of preference competitiveness, or at least a (partial) order over preferences capturing the fact that some preferences are more discriminating than others. Without further information on the underlying mechanism, the perfectioning relation defined above is such a partial order. When applied to two preferences \(\preceq _1\) and \(\preceq _2\), perfectioning states that \(\preceq _2\) is more discriminating than \(\preceq _1\), in the sense that \(\preceq _2\) is obtained from \(\preceq _1\) by splitting some levels of the weak order \(\preceq _1\) into multiple levels.

For example, mechanism designers are likely to avoid the degenerate preference \(\preceq ^{\equiv }\) that equates all rankings. Indeed, \(\preceq ^{\equiv }\) is the unique bottom element of the perfectioning relation on preferences. At the other extreme we find preferences that are linear orders: they are maximal elements w.r.t. perfectioning. In the following section, we present a selection of preferences and relate them via perfectioning.

Finally, given a ranking \({ rk} \) and an agent a, we define the sets \({ bl}_a({ rk})=\{b\in A\mid { rk} (b)> { rk} (a)\}\), \({ eq}_a({ rk})=\{b\in A\mid { rk} (b)= { rk} (a)\}\), and \({ ab}_a({ rk})=\{b\in A\mid { rk} (b)< { rk} (a)\}\). They partition A into the agents that are strictly below, equivalent to, and strictly above a, respectively. Note that a itself belongs to \({ eq}_a({ rk})\).
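As a small illustration of this three-way partition, the sketch below computes the three tiers for a ranking stored as a dict; the function name `tiers` and the sample ranking are hypothetical.

```python
def tiers(rk, a):
    """Return (below, equal, above) for agent a; rank 1 is the top."""
    below = {b for b in rk if rk[b] > rk[a]}
    equal = {b for b in rk if rk[b] == rk[a]}   # always contains a itself
    above = {b for b in rk if rk[b] < rk[a]}
    return below, equal, above

sample = {"a": 2, "b": 1, "c": 2, "d": 3}
below, equal, above = tiers(sample, "a")
print(below, equal, above)
```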

2.3 Relations among Rankings

In this section, we introduce some basic binary relations on rankings. In Section 3.1, we then use these relations to define a set of self-interest axioms.

Let \({ rk} _1, { rk} _2 \in \mathcal {R}(A)\) be two rankings and \(a \in A\) be an agent. We define the following binary relations:

- Same-context relation \(\textsf{SCRel}_a({ rk} _1, { rk} _2)\). The restrictions of \({ rk} _1\) and \({ rk} _2\) to \((A \setminus \{a\})^2\) coincide.

- Improvement relation \(\textsf{ImprRel}_a({ rk} _1, { rk} _2)\). Agent a strictly improves its ranking with respect to at least one agent: there exists \(b \in A\) such that either \({ rk} _1(a)={ rk} _1(b)\) and \({ rk} _2(b)> { rk} _2(a)\), or \({ rk} _1(a)>{ rk} _1(b) \) and \({ rk} _2(a)\le { rk} _2(b)\).

- Swap relation \(\textsf{SwapRel}_a({ rk} _1, { rk} _2)\). \({ rk} _2\) is obtained from \({ rk} _1\) by switching a with some agent above it: there exists \(b \in { ab}_a({ rk} _1)\) such that (i) \({ rk} _2(a)={ rk} _1(b)\), (ii) \({ rk} _2(b)={ rk} _1(a)\), and (iii) for all \(c\in A\setminus \{a,b\}\) it holds that \({ rk} _2(c)={ rk} _1(c)\).

- Dominance relation \(\textsf{DomRel}_a({ rk} _1, { rk} _2)\). All agents (strictly) dominated by a in \({ rk} _1\) remain (strictly) dominated in \({ rk} _2\): for all \(b \in A\), (i) if \({ rk} _1(b)>{ rk} _1(a)\) then \({ rk} _2(b)>{ rk} _2(a)\), and (ii) if \({ rk} _1(b)={ rk} _1(a)\) then \({ rk} _2(b)\ge { rk} _2(a)\).
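The four relations above translate directly into executable predicates. The following is a minimal sketch, assuming rankings are dicts from agents to levels (1 is the top). The same-context check reads "the restrictions coincide" as "the induced weak orders agree on every pair of agents other than a", which is an interpretation. The function names and the three sample rankings are hypothetical.

```python
def sc_rel(a, rk1, rk2):
    """Same context: the relative order of all other agents is unchanged."""
    others = [b for b in rk1 if b != a]
    return all((rk1[b] >= rk1[c]) == (rk2[b] >= rk2[c])
               for b in others for c in others)

def impr_rel(a, rk1, rk2):
    """Improvement: a strictly gains with respect to at least one agent b."""
    return any((rk1[a] == rk1[b] and rk2[b] > rk2[a])
               or (rk1[a] > rk1[b] and rk2[a] <= rk2[b]) for b in rk1)

def swap_rel(a, rk1, rk2):
    """Swap: a trades places with some agent b above it, nobody else moves."""
    return any(rk1[b] < rk1[a] and rk2[a] == rk1[b] and rk2[b] == rk1[a]
               and all(rk2[c] == rk1[c] for c in rk1 if c not in (a, b))
               for b in rk1)

def dom_rel(a, rk1, rk2):
    """Dominance: agents below a stay below, agents tied with a do not pass a."""
    return all((rk1[b] <= rk1[a] or rk2[b] > rk2[a])
               and (rk1[b] != rk1[a] or rk2[b] >= rk2[a]) for b in rk1)

start = {"a": 2, "b": 1, "c": 2}      # b on top, a tied with c
swapped = {"a": 1, "b": 2, "c": 2}    # a trades places with b
moved_up = {"a": 1, "b": 1, "c": 2}   # a joins b, others stay put

print(swap_rel("a", start, swapped), sc_rel("a", start, swapped))
print(sc_rel("a", start, moved_up), impr_rel("a", start, moved_up))
```

In this example the swap changes the relative order of b and c, so it is not a same-context change, whereas moving a up to join b leaves the other agents untouched.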

Example 1

Consider the 6 rankings among 6 agents shown in Fig. 2. In each ranking, agents on the same line share the same level in the ranking. The graph shows the relations between the central ranking \({ rk} _1\) and the other 5 rankings, from the point of view of agent a. All rankings \({ rk} _i\), with \(i = 2,\ldots ,6\) represent some sort of improvement for agent a over \({ rk} _1\).

Notice that, by definition, \(\textsf{DomRel}_a({ rk} _1, { rk} _2)\) is equivalent to the conjunction of the following conditions:

and also equivalent to the conjunction of the following:

Then, assuming that a ranking \({ rk} _2\) dominates \({ rk} _1\) for agent a, the following lemma establishes other equivalences depending on whether \({ rk} _2\) also improves \({ rk} _1\).

Lemma 1

Let \({ rk} _1, { rk} _2 \in \mathcal {R}(A)\) be two rankings such that \(\textsf{DomRel}_a({ rk} _1, { rk} _2)\) holds. The following are equivalent:

1. Not \(\textsf{ImprRel}_a( { rk} _1, { rk} _2)\);

2. \(\textsf{DomRel}_a({ rk} _2, { rk} _1)\);

3. \({ rk} _1\) and \({ rk} _2\) are 3-tier equivalent for a, i.e., \({ bl}_a({ rk} _1) = { bl}_a({ rk} _2)\), \({ eq}_a({ rk} _1) = { eq}_a({ rk} _2)\), and \({ ab}_a({ rk} _1) = { ab}_a({ rk} _2)\).

Moreover, the following are equivalent:

4. \(\textsf{ImprRel}_a( { rk} _1, { rk} _2)\);

5. not \(\textsf{DomRel}_a( { rk} _2, { rk} _1)\);

6. one of the two inclusions in (I) or (II) (resp., (III) or (IV)) is strict.

Proof

Regarding the first triple of equivalences, the following chain of implications holds. First, assume that property (1) holds, so that \(\textsf{ImprRel}_a( { rk} _1, { rk} _2)\) does not hold. Then, we have that

This, together with (III) and (IV), clearly implies that \({ rk} _1\) and \({ rk} _2\) are 3-tier equivalent for a, which proves that (1) implies (3).

Then, assume \({ rk} _1\) and \({ rk} _2\) are 3-tier equivalent for a. By conditions (III) and (IV), it immediately follows that \(\textsf{DomRel}_a({ rk} _2, { rk} _1)\) also holds, which proves that (3) implies (2).

Finally, assume that \(\textsf{DomRel}_a({ rk} _2, { rk} _1)\) holds in addition to \(\textsf{DomRel}_a({ rk} _1, { rk} _2)\). In particular, we have that \({ rk} _1(b) > { rk} _1(a)\) is equivalent to \({ rk} _2(b) > { rk} _2(a)\) and \({ rk} _1(b) = { rk} _1(a)\) is equivalent to \({ rk} _2(b) = { rk} _2(a)\). Consequently, neither of the two cases in the definition of Improvement is satisfied, which proves that (2) implies (1).

The second triple of equivalences is a direct consequence of the first one and conditions (I) or (II) (resp., (III) or (IV)). \(\square \)
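As a sanity check, the first triple of equivalences in Lemma 1 can be verified exhaustively on a small agent set. The sketch below assumes rankings are dicts and enumerates all rankings over three agents. It checks items (1) to (3) only, since items (4) and (5) are their negations and item (6) depends on conditions (I) to (IV), which are not encoded here. All function names are hypothetical.

```python
from itertools import product

def all_rankings(agents):
    """Yield every map agents -> {1..K} whose range has no holes."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))

def tiers(rk, a):
    """(below, equal, above) sets for agent a."""
    return ({b for b in rk if rk[b] > rk[a]},
            {b for b in rk if rk[b] == rk[a]},
            {b for b in rk if rk[b] < rk[a]})

def impr_rel(a, rk1, rk2):
    return any((rk1[a] == rk1[b] and rk2[b] > rk2[a])
               or (rk1[a] > rk1[b] and rk2[a] <= rk2[b]) for b in rk1)

def dom_rel(a, rk1, rk2):
    return all((rk1[b] <= rk1[a] or rk2[b] > rk2[a])
               and (rk1[b] != rk1[a] or rk2[b] >= rk2[a]) for b in rk1)

def lemma1_holds(agents):
    """Check that (1), (2), (3) agree whenever DomRel(rk1, rk2) holds."""
    rankings = list(all_rankings(agents))
    for a in agents:
        for rk1, rk2 in product(rankings, repeat=2):
            if not dom_rel(a, rk1, rk2):
                continue
            no_improvement = not impr_rel(a, rk1, rk2)   # item (1)
            reverse_dominance = dom_rel(a, rk2, rk1)     # item (2)
            same_tiers = tiers(rk1, a) == tiers(rk2, a)  # item (3)
            if not (no_improvement == reverse_dominance == same_tiers):
                return False
    return True

print(lemma1_holds(["a", "b", "c"]))
```

A brute-force check of this kind does not replace the proof, but it is a cheap way to catch transcription errors in the definitions.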

2.4 A Catalog of Preferences

In this section we show a short catalog of possible ways to compare rankings from the point of view of a participant. To identify a preference, we use a uniform naming system based on the following abbreviations:

| Abbreviation | Quantity | Meaning |
|---|---|---|
| \(\top \) | \({ rk} (a)\) | rank of a (1 is top) |
| \(\bot \) | \({ rk} (a) - \max _b { rk} (b)\) | negative distance between a and the worst agent |
| \(\textrm{ab}\) | \(\vert { ab}_a({ rk})\vert \) | number of agents above a |
| \(\textrm{eq}\) | \(\vert { eq}_a({ rk})\vert \) | number of agents tied with a |
| \(\textrm{bl}\) | \(\vert { bl}_a({ rk})\vert \) | number of agents below a |

We use the above abbreviations as a superscript to indicate that a preference tries to minimize the corresponding quantity. For example, \(\preceq ^\textrm{ab}_a\) is the a-preference that minimizes \(|{ ab}_a({ rk})|\), i.e., the number of agents strictly above a in the ranking. Formally, \({ rk} _1\preceq _a^\textrm{ab}{ rk} _2\) holds iff \(|{ ab}_a({ rk} _1)| \ge |{ ab}_a({ rk} _2)|\). We also write \(\preceq ^{x,y}_a\) for the preference that tries to minimize first quantity x and then quantity y, lexicographically. Notice that, regardless of the quantities x and y, the preference \(\preceq ^{x,y}_a\) perfects the preference \(\preceq ^{x}_a\). Finally, we allow simple arithmetic expressions, like \(\preceq ^{\textrm{ab}+\textrm{eq}}_a\) for the preference that minimizes the sum of \(|{ ab}_a({ rk})|\) and \(|{ eq}_a({ rk})|\).

We present a selection of preferences based on the above measures.

- Level-based. \(\preceq ^{\top }_a\) prefers to minimize the level of a in the ranking, i.e., \({ rk} (a)\). In particular, it is insensitive to ties. This preference may reflect a conscientious participant when a group of students is being partitioned into different levels of ability.

- Level-based top-k. For a positive integer k, \(\preceq ^{\top _k}_a\) prefers a to be within the first k levels in the ranking. This preference only distinguishes two classes of rankings: those where a sits in one of the top k levels, and all other rankings. Any ranking in the first class is (strictly) preferred to any ranking in the second class. This preference applies to the preliminary phases of some sport tournaments, such as the UEFA Champions League, or to multi-winner voting systems where different scoring rules (e.g., plurality score or Borda score) can be used to select the participants who are in the top-k placements [5].

- Relative. \(\preceq ^{\textrm{ab},\textrm{eq}}_a\) prefers having fewer agents above a; that being equal, it prefers to have fewer agents tied with a. It is equivalent to minimizing the vector \(\big ( |{ ab}_a({ rk})|, |{ eq}_a({ rk})| \big )\), lexicographically. The authors of [1] use the preference \(\preceq ^{\textrm{ab},\textrm{eq}}_a\) to model the fact that a player participating in a tournament generally aims at prevailing over the opponents.

- Point-based. \(\preceq _a^{\textrm{ab}-\textrm{bl}}\) judges a ranking by assigning 0 points to the agents that are placed the same as a, 1 point to each agent below a, and \(-1\) points to each agent that is better placed. It is equivalent to minimizing \(|{ ab}_a({ rk})|- |{ bl}_a({ rk})|\). As shown in Fig. 3, the preference \(\preceq _a^{\textrm{ab}-\textrm{bl}}\) can be seen as a less discerning alternative to \(\preceq ^{\textrm{ab},\textrm{eq}}_a\).

- Best linearization. \(\preceq ^{\textrm{bst}}_a\) judges a ranking the same as its linear extension where a has the best position. The canonical name for this preference is \(\preceq ^\textrm{ab}_a\), because it is equivalent to minimizing \(|{ ab}_a({ rk})|\).

- Worst linearization. \(\preceq ^{\textrm{wst}}_a\) is the dual of \(\preceq ^{\textrm{bst}}_a\). The canonical name for this preference is \(\preceq ^{\textrm{ab}+\textrm{eq}}_a\), because it is equivalent to minimizing \(|{ ab}_a({ rk})| + |{ eq}_a({ rk})|\). Preferences \(\preceq ^{\textrm{bst}}_a\) and \(\preceq ^{\textrm{wst}}_a\) can be adopted whenever an unknown rule is used to resolve ties. In particular, they reflect an optimistic or pessimistic attitude, respectively.

Figure 3 shows the relationships between the above preferences according to the perfectioning relation. Specifically, all preferences perfect the degenerate preference \(\preceq ^{\equiv }\) that equates all rankings. Then, \(\preceq ^{\textrm{ab},\textrm{eq}}_a\) perfects \(\preceq ^\textrm{ab}_a\) because it distinguishes rankings having the same number of agents above a based on the number of agents at the same level as a. Furthermore, to see that \(\preceq ^{\top }_a\) perfects \(\preceq ^{\top _k}_a\), assume that \({ rk} _1\) and \({ rk} _2\) are two rankings such that \({ rk} _1 \prec ^{\top _k}_a { rk} _2\). Then, we have that \({ rk} _1(a) > k \ge { rk} _2(a)\), and hence it also holds \({ rk} _1 \prec ^{\top }_a { rk} _2\). On the other hand, the preference \(\preceq ^{\top }_a\) is strictly more discriminating than \(\preceq ^{\top _k}_a\) in all cases where agent a occupies two different levels in the two rankings, both above or both below the threshold k.
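The perfectioning claims discussed above can be tested by brute force. The sketch below assumes each preference is represented by a key function to be minimized, so that \({ rk} _1 \preceq { rk} _2\) holds iff the key of \({ rk} _1\) is at least the key of \({ rk} _2\). Since preferences are total, perfectioning reduces to preserving strict preferences. The names `key_top`, `key_ab_eq`, and so on are hypothetical.

```python
from itertools import product

def all_rankings(agents):
    """Yield every map agents -> {1..K} whose range has no holes."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))

def counts(rk, a):
    """(agents above a, agents tied with a including a, agents below a)."""
    return (sum(rk[b] < rk[a] for b in rk),
            sum(rk[b] == rk[a] for b in rk),
            sum(rk[b] > rk[a] for b in rk))

def key_degenerate(rk, a): return 0                   # equates all rankings
def key_top(rk, a): return rk[a]                      # level-based
def key_top_k(k): return lambda rk, a: 0 if rk[a] <= k else 1
def key_ab(rk, a): return counts(rk, a)[0]            # best linearization
def key_ab_eq(rk, a): return counts(rk, a)[:2]        # relative (lexicographic tuple)
def key_ab_plus_eq(rk, a): return sum(counts(rk, a)[:2])      # worst linearization
def key_ab_minus_bl(rk, a): return counts(rk, a)[0] - counts(rk, a)[2]

def perfects(key2, key1, agents, a):
    """key2 perfects key1: every strict preference of key1 is kept by key2."""
    rankings = list(all_rankings(agents))
    return all(key2(r1, a) > key2(r2, a)
               for r1, r2 in product(rankings, repeat=2)
               if key1(r1, a) > key1(r2, a))

AGENTS = ["a", "b", "c"]
print(perfects(key_ab_eq, key_ab, AGENTS, "a"))
print(perfects(key_top, key_top_k(1), AGENTS, "a"))
print(perfects(key_top_k(1), key_top, AGENTS, "a"))
```

Python compares tuples lexicographically, which is why `key_ab_eq` can simply return the pair of counts.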

The previous examples show how, depending on the specific context, different ways to compare rankings can be adopted. At the same time, it is evident that not all possible weak orders on \(\mathcal {R}(A)\) make sense. For instance, a preference where coming in last position is better than being first is hardly tenable in any context that we consider. In the next section we investigate which preferences are plausible from the point of view of a rational and self-interested agent.

3 Preference Axioms

In this section we formalize some preference axioms and investigate their mutual relationships.

3.1 Basic Axioms

The axioms introduced in this section are inspired by four ways in which two rankings can be interpreted as a change for the better for agent a. They are based on the relations between rankings introduced in Section 2.3:

- a trades places with an agent that is higher up in the order; this case is captured by the \(\textsf{SwapRel}\) relation;

- a moves up in the order, and all other agents stay put; this scenario is captured by the intersection of the relations \(\textsf{SCRel}\) and \(\textsf{ImprRel}\) (Same-context and Improvement);

- no agent moves up w.r.t. a, as captured by the \(\textsf{DomRel}\) relation (Dominance);

- at least one agent moves down w.r.t. a, and no agent moves up w.r.t. a; this is captured by the intersection of the relations \(\textsf{DomRel}\) and \(\textsf{ImprRel}\) (Dominance and Improvement).

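To simplify the notation, hereafter we refer by default to the preference of agent a, and omit the corresponding subscript. From the above four scenarios, we obtain the following four axioms, by requiring that the agent weakly prefers the more favorable ranking:

- Sw: if \(\textsf{SwapRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \preceq { rk} _2\);

- SCI: if \(\textsf{SCRel}({ rk} _1, { rk} _2)\) and \(\textsf{ImprRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \preceq { rk} _2\);

- Dom: if \(\textsf{DomRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \preceq { rk} _2\);

- DomI: if \(\textsf{DomRel}({ rk} _1, { rk} _2)\) and \(\textsf{ImprRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \preceq { rk} _2\).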

Axiom Sw states that trading place with another agent higher up in the ranking is a net positive. Axiom SCI states that, other things being equal (same context), moving up in the ranking is a net positive. These two axioms find natural justification in virtually all practical scenarios involving self-interested agents who align with the ranking’s direction. For instance, consider a sports athlete who deliberately underperforms, resulting in a potentially lower ranking position, thus violating SCI. Such behavior would likely raise suspicions of fraudulent activity or, if the athlete were sincere in their intentions, one might question their decision to engage in a context that evidently contradicts their spirit of competitiveness. Similarly, if a student were to swap their test with another student known to be significantly less prepared (thus violating Sw), it would be an anomaly demanding compelling justifications that surpass the influence of the ranking system.

The axiom DomI, instead, represents a stronger flavor of self-interest, wherein an agent a places a positive value on the fact that no other agent improves their relative ranking compared to a, whereas at least one agent strictly worsens their relative ranking. Accordingly, we can interpret DomI as the desire to overcome all other participants. Returning to the previous examples, such a behavior can be expected from a professional athlete, whereas it is not necessarily warranted among students in the same class. Finally, axiom Dom weakens the premise of axiom DomI by only requiring that no other agent improves their relative ranking compared to a. Note that, among these four axioms, axiom Dom is the only one that forces some rankings to be equivalent. Specifically, all pairs of 3-tier equivalent rankings \({ rk} _1,{ rk} _2\) satisfy both \(\textsf{DomRel}({ rk} _1,{ rk} _2)\) and \(\textsf{DomRel}({ rk} _2,{ rk} _1)\) (see Lemma 1). Consequently, those rankings appear equivalent to any preference that satisfies axiom Dom.

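Next, we also include the strong versions of the previous axioms, by strengthening their conclusions to prescribe strict preference instead of weak preference. Such a strengthening does not make sense for the axiom Dom, because its premise \(\textsf{DomRel}(\cdot ,\cdot )\) is a reflexive relation, so the corresponding strong axiom would be unsatisfiable. The strong axioms are:

- SSw: if \(\textsf{SwapRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \prec { rk} _2\);

- SSCI: if \(\textsf{SCRel}({ rk} _1, { rk} _2)\) and \(\textsf{ImprRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \prec { rk} _2\);

- SDomI: if \(\textsf{DomRel}({ rk} _1, { rk} _2)\) and \(\textsf{ImprRel}({ rk} _1, { rk} _2)\) then \({ rk} _1 \prec { rk} _2\).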

We denote by \(\mathcal {A}\mathcal {X}\) the set of the above seven axioms, i.e. \(\mathcal {A}\mathcal {X} = \{Sw, SSw, SCI, SSCI, Dom, DomI, SDomI\}\).

A preference \(\preceq \) satisfies one of the above axioms if the axiom holds for all rankings \({ rk} _1,{ rk} _2\). Given an axiom \(\alpha \), we denote by \({ Pref}_A(\alpha )\) the set of preferences satisfying \(\alpha \). As customary, we say that the axiom \(\alpha \) implies another axiom \(\beta \), denoted by \(\alpha \Rightarrow \beta \), if for all sets of agents A and all preferences \(\preceq \) on \(\mathcal {R}(A)\), if \(\preceq \) satisfies \(\alpha \) then it also satisfies \(\beta \) (i.e., \({ Pref}_A(\alpha ) \subseteq { Pref}_A(\beta )\)).
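Whether a given preference satisfies an axiom can be tested exhaustively for a small set of agents. The sketch below assumes that each axiom has the form "premise implies weak (or, for the strong versions, strict) preference for the second ranking", as suggested by the surrounding text, and that a preference is given by a key function to be minimized. The same-context check compares the induced weak orders on the other agents, which is an interpretation. All identifiers are hypothetical.

```python
from itertools import product

def all_rankings(agents):
    """Yield every map agents -> {1..K} whose range has no holes."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))

def sc_rel(a, rk1, rk2):
    others = [b for b in rk1 if b != a]
    return all((rk1[b] >= rk1[c]) == (rk2[b] >= rk2[c])
               for b in others for c in others)

def impr_rel(a, rk1, rk2):
    return any((rk1[a] == rk1[b] and rk2[b] > rk2[a])
               or (rk1[a] > rk1[b] and rk2[a] <= rk2[b]) for b in rk1)

def swap_rel(a, rk1, rk2):
    return any(rk1[b] < rk1[a] and rk2[a] == rk1[b] and rk2[b] == rk1[a]
               and all(rk2[c] == rk1[c] for c in rk1 if c not in (a, b))
               for b in rk1)

def dom_rel(a, rk1, rk2):
    return all((rk1[b] <= rk1[a] or rk2[b] > rk2[a])
               and (rk1[b] != rk1[a] or rk2[b] >= rk2[a]) for b in rk1)

def sc_impr(a, rk1, rk2):
    return sc_rel(a, rk1, rk2) and impr_rel(a, rk1, rk2)

def dom_impr(a, rk1, rk2):
    return dom_rel(a, rk1, rk2) and impr_rel(a, rk1, rk2)

# axiom name -> (premise, whether the conclusion is strict)
AXIOMS = {"Sw": (swap_rel, False), "SSw": (swap_rel, True),
          "SCI": (sc_impr, False), "SSCI": (sc_impr, True),
          "Dom": (dom_rel, False),
          "DomI": (dom_impr, False), "SDomI": (dom_impr, True)}

def satisfies(key, axiom, agents, a):
    """Smaller key = better ranking; check the axiom on all pairs of rankings."""
    premise, strict_conclusion = AXIOMS[axiom]
    rankings = list(all_rankings(agents))
    for rk1, rk2 in product(rankings, repeat=2):
        if premise(a, rk1, rk2):
            k1, k2 = key(rk1, a), key(rk2, a)
            violated = (k1 <= k2) if strict_conclusion else (k1 < k2)
            if violated:
                return False
    return True

def key_top(rk, a):
    return rk[a]

def key_ab_eq(rk, a):
    return (sum(rk[b] < rk[a] for b in rk), sum(rk[b] == rk[a] for b in rk))

AGENTS = ["a", "b", "c"]
for name in AXIOMS:
    print(name, satisfies(key_top, name, AGENTS, "a"),
          satisfies(key_ab_eq, name, AGENTS, "a"))
```

Such a check only covers one fixed agent set, whereas the definition of implication quantifies over all sets of agents, so it can refute a claimed property but not prove it in general.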

The following theorem characterizes the implication relationships holding between the preference axioms.

Theorem 2

The only implications holding among the axioms in \(\mathcal {A}\mathcal {X}\) are displayed in Fig. 4 (including the transitive closure of the arrows).

Proof

The implications \(SSw \Rightarrow \,Sw\), \(Dom \Rightarrow \,DomI\), \(SDomI \Rightarrow DomI\), and \(SSCI \Rightarrow \,SCI\) are obvious by definition. The implications between the other axioms derive from the following two implications between their premises.

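Implication 1: \(\textsf{SwapRel}({ rk} _1, { rk} _2) \Rightarrow \textsf{DomRel}({ rk} _1, { rk} _2) \wedge \textsf{ImprRel}({ rk} _1, { rk} _2)\).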

Assume \(\textsf{SwapRel}( { rk} _1, { rk} _2)\) and let b be the agent that trades place with a. Notice that b is strictly above a in \({ rk} _1\). To prove that Dominance holds, let c be an agent in \({ bl}_a({ rk} _1)\). Since a moves up in the order, we have that c is also in \({ bl}_a({ rk} _2)\). If instead we consider \(c\in { eq}_a({ rk} _1)\), for the same reason we have that \(c \in { bl}_a({ rk} _2)\). Then conditions (I) and (II) are satisfied, which means that \(\textsf{DomRel}( { rk} _1, { rk} _2)\) holds. To prove that \(\textsf{ImprRel}( { rk} _1, { rk} _2)\) holds, simply use b as witness. Implication 1 proves that \(DomI \Rightarrow \,Sw\) and \(SDomI \Rightarrow \,SSw\).

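Implication 2: \(\textsf{SCRel}({ rk} _1, { rk} _2) \wedge \textsf{ImprRel}({ rk} _1, { rk} _2) \Rightarrow \textsf{DomRel}({ rk} _1, { rk} _2)\).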

Let \({ rk} _1,{ rk} _2\) be two rankings satisfying \(\textsf{SCRel}\) and \(\textsf{ImprRel}\), and let b be the witness agent for \(\textsf{ImprRel}\). To prove dominance, let \(c\in { bl}_a({ rk} _1)\) (if there is no such agent, this part of the claim is vacuously true). By definition of \(\textsf{ImprRel}( { rk} _1, { rk} _2)\), \(b\in { ab}_a({ rk} _1) \cup { eq}_a({ rk} _1)\), therefore \(b \ne c\). It follows that \(c \in { bl}_b({ rk} _1)\) and, by \(\textsf{SCRel}( { rk} _1, { rk} _2)\), \(c \in { bl}_b({ rk} _2)\), i.e., \({ rk} _2(c)>{ rk} _2(b)\). Since \({ rk} _2(b) \ge { rk} _2(a)\), by transitivity we obtain \({ rk} _2(c)>{ rk} _2(a)\), as required by dominance.

Next, let \(c\in { eq}_a({ rk} _1)\), i.e., \({ rk} _1(c)={ rk} _1(a)\). If \(b=c\) then \({ rk} _2(c)\ge { rk} _2(a)\), as required by dominance. Otherwise, we distinguish two further cases, according to the disjunction in the definition of improvement. If \(b\in { eq}_a({ rk} _1)\) (that is, \({ rk} _1(b)={ rk} _1(a)\)) then \({ rk} _1(c)={ rk} _1(b)\) and, by \(\textsf{SCRel}( { rk} _1, { rk} _2)\), \({ rk} _2(c)={ rk} _2(b)\). By definition of improvement, \({ rk} _2(b)>{ rk} _2(a)\) and therefore \({ rk} _2(c)>{ rk} _2(a)\), as required by dominance. Finally, if \(b\in { ab}_a({ rk} _1)\) (that is, \({ rk} _1(a)> { rk} _1(b)\)) then \({ rk} _1(c)> { rk} _1(b)\) and, by the same-context condition, \({ rk} _2(c)> { rk} _2(b)\). Since \({ rk} _2(b)\ge { rk} _2(a)\), by transitivity we obtain \({ rk} _2(c)> { rk} _2(a)\). Implication 2 proves that \(DomI \Rightarrow \,SCI\) and that \(SDomI \Rightarrow \,SSCI\).

Each non-implication can be proved by a suitable counterexample; details can be found in Appendix A.2. \(\square \)
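The two premise implications can also be checked exhaustively on small instances. The following Python sketch enumerates all weak orders over three agents and tests both implications. The predicate encodings (`dom_rel`, `impr_rel`, `sc_rel`, `swap_rel`) are assumptions reconstructed from the way the proof above uses the relations, with level 1 denoting the top; they are not a transcription of the formal definitions given earlier in the paper.

```python
from itertools import product

AGENTS = ("a", "b", "c")
REF = "a"  # reference agent


def all_rankings(agents):
    """Yield every weak order as a map agent -> level, using levels 1..k."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))


def dom_rel(rk1, rk2, a=REF):
    # Agents below a stay below; agents tied with a do not move above a.
    return all(
        (rk1[c] <= rk1[a] or rk2[c] > rk2[a])
        and (rk1[c] != rk1[a] or rk2[c] >= rk2[a])
        for c in rk1 if c != a
    )


def impr_rel(rk1, rk2, a=REF):
    # Some b was above a and no longer is, or was tied with a and is now below.
    return any(
        (rk1[b] < rk1[a] and rk2[b] >= rk2[a])
        or (rk1[b] == rk1[a] and rk2[b] > rk2[a])
        for b in rk1 if b != a
    )


def sign(x):
    return (x > 0) - (x < 0)


def sc_rel(rk1, rk2, a=REF):
    # The relative order among the agents other than a is unchanged.
    others = [x for x in rk1 if x != a]
    return all(
        sign(rk1[b] - rk1[c]) == sign(rk2[b] - rk2[c])
        for b in others for c in others
    )


def swap_rel(rk1, rk2, a=REF):
    # rk2 is rk1 with a and some strictly better agent b exchanged.
    for b in rk1:
        if b != a and rk1[a] > rk1[b]:
            swapped = dict(rk1)
            swapped[a], swapped[b] = rk1[b], rk1[a]
            if swapped == rk2:
                return True
    return False


def check_implications(agents=AGENTS):
    rankings = list(all_rankings(agents))
    for rk1, rk2 in product(rankings, repeat=2):
        dom, impr = dom_rel(rk1, rk2), impr_rel(rk1, rk2)
        if swap_rel(rk1, rk2) and not (dom and impr):
            return False  # Implication 1 violated
        if sc_rel(rk1, rk2) and impr and not dom:
            return False  # Implication 2 violated
    return True
```

Such a check is no substitute for the proof, since it covers only a fixed number of agents, but it is a cheap way to catch an encoding mistake in the premises.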

Example 2

We present a natural class of preferences that satisfy the strongest of our axioms, namely Dom and SDomI. Let \(U^{ ab}\), \(U^{ eq}\), and \(U^{ bl}\) be three functions from agents to real values, subject to the constraint that \(U^{ ab}(b)<U^{ eq}(b)<U^{ bl}(b)\), for each agent b. Assuming as usual the point of view of agent a, \(U^{ ab}(b)\) is the utility associated with b having a better placement than a. In normal circumstances \(U^{ ab}(b)\) is likely to be negative. Similarly, \(U^{ eq}(b)\) is the utility of having b ranked the same as a and finally \(U^{ bl}(b)\) is used in case b is ranked worse than a; normally it is a positive value. A linear utility over rankings is then defined as follows: \(U({ rk}) = \sum _{b \in { ab}_a({ rk})} U^{ ab}(b) + \sum _{b \in { eq}_a({ rk}) \setminus \{a\}} U^{ eq}(b) + \sum _{b \in { bl}_a({ rk})} U^{ bl}(b)\).

We say that the linear utility U induces the preference relation \(\preceq _U\) defined by \({{ rk} _1} \preceq _U {{ rk} _2}\) iff \(U({ rk} _1) \le U({ rk} _2)\).

Consider a contest between agents a, b, and c where a has more rivalry with c than with b. More precisely, the preference of agent a is represented by a linear utility U with \(U^{ bl}(b)=1\), \(U^{ bl}(c)=2\), \(U^{ ab}(b)=-1\), \(U^{ ab}(c)=-2\), and \(U^{ eq}\) equals to the constant function 0. Then, U induces five equivalence classes of rankings, shown in the following table:

| \(\mathcal {C} ^a_1\) | \(\mathcal {C} ^a_1\) | \(\mathcal {C} ^a_2\) | \(\mathcal {C} ^a_3\) | \(\mathcal {C} ^a_4\) | \(\mathcal {C} ^a_5\) | \(\mathcal {C} ^a_5\) |
|---|---|---|---|---|---|---|
| c | b | c | a, b, c | b | a | a |
| b | c | a | | a | b | c |
| a | a | b | | c | c | b |

where the utility values of \(\mathcal {C} ^a_1\) – \(\mathcal {C} ^a_5\) are respectively \(-3\), \(-1\), 0, \(+1\), and \(+3\).
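The utility values in the table can be recomputed mechanically. The sketch below assumes that a linear utility is the sum, over the agents other than a, of the component matching that agent's placement relative to a (above, tied, or below), and that level 1 is the top of the ranking. The dictionary `TABLE` and the function name `linear_utility` are illustrative choices.

```python
# Component utilities of agent a from Example 2.
U_AB = {"b": -1, "c": -2}  # the other agent is placed above a
U_EQ = {"b": 0, "c": 0}    # the other agent is tied with a
U_BL = {"b": 1, "c": 2}    # the other agent is placed below a


def linear_utility(rk, a="a"):
    """Sum the component utilities of all agents other than a (assumed form)."""
    total = 0
    for other, level in rk.items():
        if other == a:
            continue
        if level < rk[a]:
            total += U_AB[other]
        elif level == rk[a]:
            total += U_EQ[other]
        else:
            total += U_BL[other]
    return total


# The seven rankings of the table, as maps agent -> level (1 = top).
TABLE = {
    "c > b > a": {"c": 1, "b": 2, "a": 3},
    "b > c > a": {"b": 1, "c": 2, "a": 3},
    "c > a > b": {"c": 1, "a": 2, "b": 3},
    "a = b = c": {"a": 1, "b": 1, "c": 1},
    "b > a > c": {"b": 1, "a": 2, "c": 3},
    "a > b > c": {"a": 1, "b": 2, "c": 3},
    "a > c > b": {"a": 1, "c": 2, "b": 3},
}

UTILITIES = {name: linear_utility(rk) for name, rk in TABLE.items()}
```

Under these assumptions the two rankings with a at the bottom share one value, as do the two rankings with a at the top, which matches the grouping of columns into classes.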

Another example of a preference induced by a linear utility is the standard preference \(\preceq ^{\textrm{ab},\textrm{eq}}_a\). Indeed, letting \(n = |A|\) be the number of agents, it is straightforward to see that \(\preceq ^{\textrm{ab},\textrm{eq}}_a\) is induced by the utility function whose component functions \(U^{ ab}\), \(U^{ eq}\), and \(U^{ bl}\) are constant functions yielding respectively \(-n\), \(-1\), and 0.
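The claim can be tested by brute force on small agent sets. The sketch below assumes that \(\preceq ^{\textrm{ab},\textrm{eq}}_a\) compares rankings lexicographically, first preferring fewer agents above a and then fewer agents tied with a; this reading of the standard preference comes from its name, since its definition lies outside this section. The weight \(-n\) works because at most \(n-1\) agents can be tied with a, so one extra agent above a always outweighs any change in the number of ties.

```python
from itertools import product


def all_rankings(agents):
    """Yield every weak order as a map agent -> level, using levels 1..k."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))


def counts(rk, a="a"):
    """Number of agents strictly above a and number of agents tied with a."""
    above = sum(1 for x in rk if x != a and rk[x] < rk[a])
    tied = sum(1 for x in rk if x != a and rk[x] == rk[a])
    return above, tied


def constant_utility(rk, a="a"):
    """Linear utility with constant components -n, -1 and 0."""
    n = len(rk)
    above, tied = counts(rk, a)
    below = n - 1 - above - tied
    return -n * above - 1 * tied + 0 * below


def agrees_with_lexicographic(agents):
    rankings = list(all_rankings(agents))
    for rk1, rk2 in product(rankings, repeat=2):
        by_utility = constant_utility(rk1) <= constant_utility(rk2)
        # rk2 is weakly preferred when its (above, tied) pair is not larger.
        by_lex = counts(rk1) >= counts(rk2)
        if by_utility != by_lex:
            return False
    return True
```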

Theorem 8 in [1] shows that all preferences induced by a linear utility satisfy axioms Dom and SDomI, which in turn imply all the other axioms in \(\mathcal {A}\mathcal {X}\), as shown in Fig. 4.

3.2 Indifference to the Others

All the preferences in Section 2.4 satisfy a notable property: agent a takes into account only its own placement, considering all the other agents in the same way. More formally, we introduce the condition \(\textsf{IndRel}({ rk} _1, { rk} _2)\), meaning that \({ rk} _2\) is obtained from \({ rk} _1\) by permuting the agents in \(A \setminus \{a\}\). Notice that \(\textsf{IndRel}(\cdot , \cdot )\) is an equivalence relation. Then, we say that a is indifferent to the others if \(\textsf{IndRel}({ rk} _1, { rk} _2)\) implies that \({ rk} _1\) and \({ rk} _2\) are equivalent for a: \(\textsf{IndRel}({ rk} _1, { rk} _2) \Rightarrow { rk} _1 \sim { rk} _2\) (Ind).

The following theorem states that Ind is independent of the other axioms in the sense that Ind does not imply any other axiom and it is not implied by any of them.

Theorem 3

Axiom Ind is independent of the axioms in \(\mathcal {A}\mathcal {X}\).

Proof

According to the taxonomy proved in Theorem 2, it suffices to prove that Ind does not imply the weakest axioms from \(\mathcal {A}\mathcal {X}\), and that the strongest axioms from \(\mathcal {A}\mathcal {X}\) do not imply Ind. This is achieved by the following non-implications.

\(Ind\not \!\!\Longrightarrow \,SCI\) and \(Ind \not \!\!\Longrightarrow Sw\). Consider the preference \(\preceq ^{-\top }\) where agent a prefers to maximize its level. It is straightforward to see that \(\preceq ^{-\top }\) satisfies Ind and yet it satisfies neither SCI nor Sw.

\(SDomI\not \!\!\Longrightarrow \,Ind\) and \(Dom\not \!\!\Longrightarrow Ind\). Pick an agent \(b \ne a\) and consider the preference relation denoted by \({\trianglelefteq }=\, \preceq ^{\textrm{ab}, \textrm{eq}, a\ge b}\), that perfects \(\preceq ^{\textrm{ab}, \textrm{eq}}\) in the following way: when two rankings are equivalent for \(\preceq ^{\textrm{ab}, \textrm{eq}}\), \({\trianglelefteq }\) strongly prefers the ranking where agent a comes before b.

We first show that \({\trianglelefteq }\) satisfies both SDomI and Dom. Assume that \(\textsf{DomRel}( { rk} _1, { rk} _2)\) holds. By (III), we know that \({ ab}({ rk} _2) \subseteq { ab}({ rk} _1)\); if the containment is strict we obtain by definition that \({\trianglelefteq }\) strictly prefers \({ rk} _2\). Otherwise, if \({ ab}({ rk} _2) = { ab}({ rk} _1)\), condition (IV) implies that \({ eq}({ rk} _2) \subseteq { eq}({ rk} _1)\). Again, if \({ eq}({ rk} _2) \subset { eq}({ rk} _1)\), then \({\trianglelefteq }\) strictly prefers \({ rk} _2\). Otherwise, assume that \({ eq}({ rk} _1) = { eq}({ rk} _2)\). In this case \({ rk} _1\) and \({ rk} _2\) are 3-tier equivalent and hence \({ rk} _1(a)\ge { rk} _1(b)\) iff \({ rk} _2(a)\ge { rk} _2(b)\); consequently \({ rk} _1\) and \({ rk} _2\) are equivalent for a. This concludes the proof that \({\trianglelefteq }\) satisfies Dom. Assume now that also \(\textsf{ImprRel}( { rk} _1, { rk} _2)\) holds. As before, if \({ ab}({ rk} _2) \subset { ab}({ rk} _1)\), then \({\trianglelefteq }\) strictly prefers \({ rk} _2\). When \({ ab}({ rk} _2) = { ab}({ rk} _1)\), instead, Lemma 1 ensures that \({ eq}({ rk} _2) \subset { eq}({ rk} _1)\) and hence also in this case \({\trianglelefteq }\) strictly prefers \({ rk} _2\).

It remains to show that \({\trianglelefteq }\) violates Ind. To this aim, consider the linear orders \({ rk} _1(c)>{ rk} _1(a)>{ rk} _1(b)\) and \({ rk} _2(b)>{ rk} _2(a)>{ rk} _2(c)\). Clearly, \(\textsf{IndRel}( { rk} _1, { rk} _2)\) holds, since \({ rk} _2\) is obtained from \({ rk} _1\) by permuting agents b and c. However, \({\trianglelefteq }\) strictly prefers \({ rk} _2\), where a leaves b behind. \(\square \)
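The counterexample can be replayed concretely. The sketch below encodes \({\trianglelefteq }\) as a lexicographic sort key (fewer agents above a, then fewer agents tied with a, then a strictly ahead of b), which is an assumed reading of the description above, and encodes \(\textsf{IndRel}\) as "a keeps its level and the other agents occupy the same multiset of levels". Level 1 is the top.

```python
def preference_key(rk, a="a", b="b"):
    """Larger keys are better for a under the assumed reading of the preference."""
    above = sum(1 for x in rk if x != a and rk[x] < rk[a])
    tied = sum(1 for x in rk if x != a and rk[x] == rk[a])
    a_before_b = 1 if rk[a] < rk[b] else 0  # tie-breaker: a is ahead of b
    return (-above, -tied, a_before_b)


def ind_rel(rk1, rk2, a="a"):
    """rk2 is rk1 with the agents other than a permuted."""
    others1 = sorted(level for x, level in rk1.items() if x != a)
    others2 = sorted(level for x, level in rk2.items() if x != a)
    return rk1[a] == rk2[a] and others1 == others2


# rk1: b on top, then a, then c.  rk2: c on top, then a, then b.
rk1 = {"b": 1, "a": 2, "c": 3}
rk2 = {"c": 1, "a": 2, "b": 3}

related = ind_rel(rk1, rk2)
strictly_prefers_rk2 = preference_key(rk1) < preference_key(rk2)
```

Both rankings have one agent above a and none tied with it, so only the tie-breaker separates them, and Ind would instead require them to be equivalent.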

3.3 Perfectioning

The following lemma states that the strong axioms are preserved by perfectioning. Its proof follows immediately from the definition of perfectioning and the fact that those axioms prescribe strong preference.

Lemma 4

If a preference \(\preceq \) satisfies one of the axioms in \(\{\)SSCI, SSw, SDomI\(\}\) and \(\preceq '\) is a preference that perfects \(\preceq \) then \(\preceq '\) satisfies the same axiom.

On the contrary, the weak axiom Dom puts an upper bound on perfectioning, because it requires certain rankings to be considered equivalent. Specifically, as noted in Lemma 1, all pairs of rankings that are 3-tier equivalent are equivalent for a preference satisfying Dom. As a consequence, perfecting such preferences beyond 3-tier equivalence breaks Dom.

3.4 Linear Rankings

A ranking is linear if it contains no ties, i.e., if it assigns to the agents in A all integers between 1 and |A|. Linear rankings support a canonical preference relation \(\preceq _{\textrm{Lin}}\), where agents simply prefer to be as high as possible in the ranking. Taking agent a as the reference agent, it holds \({ rk} _1 \preceq _{\textrm{Lin}}{ rk} _2\) iff \({ rk} _1(a) \ge { rk} _2(a)\) (recall that lower values denote higher placements). It then makes sense to ask which preference relations \(\preceq \) on general rankings extend such a canonical preference. This is captured by the following SLin axiom: for all linear rankings \({ rk} _1, { rk} _2\), \({ rk} _1 \preceq { rk} _2\) iff \({ rk} _1 \preceq _{\textrm{Lin}}{ rk} _2\).

We will also consider a weaker axiom about linear rankings, that simply forbids a preference from inverting the strong preferences of \(\preceq _{\textrm{Lin}}\). However, according to this axiom a preference is allowed to discriminate between two rankings where agent a holds the same position, and also to equate two rankings where agent a holds different positions. This is captured as follows (axiom Lin): for all linear rankings \({ rk} _1, { rk} _2\), if \({ rk} _1(a) > { rk} _2(a)\) then \({ rk} _1 \preceq { rk} _2\).

Clearly, axiom SLin implies axiom Lin. On the other hand, it turns out that the above two axioms are independent of all the basic axioms.

Theorem 5

Axioms SLin and Lin are independent of the axioms in \(\mathcal {A}\mathcal {X}\).

Proof

To prove that the axioms in Fig. 4 do not imply either SLin or Lin, it is sufficient to prove that the strongest axioms in the first group (i.e., Dom and SDomI) do not imply the weakest axiom of the second group (i.e., Lin). This can be proved with the assistance of a SAT-solver, as described in Appendix A.1.

For the other direction, we prove that the strongest of the two linear axioms (i.e., SLin) does not imply any of the weakest axioms from Fig. 4 (i.e., SCI and Sw). This is accomplished by the following counterexamples. Let \(\preceq ^2\) be the preference relation in Fig. 7c. One can check by inspection that it satisfies SLin, whereas it violates Sw due to the two rankings emphasized in boldface in Fig. 7c.

Next, consider the preference \(\preceq ^{\top , -\textrm{eq}}\), which aims at minimizing the level of a from the top, and then at having as many other agents as possible at the same level as a. Axiom SLin is satisfied by the \(\top \) criterion and preserved by the lexicographic addition of the \(-\textrm{eq}\) criterion. To show that SCI is not satisfied, consider three agents, \(A=\{a,b,c\}\), and the rankings \({ rk} _1(a)={ rk} _1(c)=2, { rk} _1(b)=1 \) and \({ rk} _2(c)=3, { rk} _2(a)=2,{ rk} _2(b)=1\). In both rankings agent a is at the second level, so \({ rk} _1\) and \({ rk} _2\) are equivalent w.r.t. the criterion \(\top \). However, according to the criterion \(-\textrm{eq}\), \({ rk} _1\) is better than \({ rk} _2\). As a consequence, SCI does not hold. \(\square \)

4 Preference Theories

As demonstrated in Section 3, preference axioms do not adhere to a single chain of implications, which means that some of them are mutually independent. It then seems natural to combine multiple axioms to further circumscribe the class of preferences an agent may adopt. In this section we investigate how axioms can be combined to form preference theories. We initially focus on the axioms in Fig. 4, treating axiom Ind separately.

As usual, given a subset \(\mathcal {T} \subseteq \mathcal {A}\mathcal {X}\), \({ Pref}_A(\mathcal {T})\) is the set of preferences satisfying all the axioms in \(\mathcal {T} \). As for single axioms, we say that a theory \(\mathcal {T} \) implies \(\mathcal {T} '\), denoted by \(\mathcal {T} \Rightarrow \mathcal {T} '\), if for all sets of agents A it holds \({ Pref}_A(\mathcal {T})\subseteq { Pref}_A(\mathcal {T} ')\). As usual, two theories are said to be equivalent if they imply each other.

We claim that axioms SCI and Sw are primitive and should be satisfied by any preference. This claim is supported by the following observations: (i) axioms SCI and Sw are mutually independent and are not subsumed by any other axiom (see Fig. 4), and (ii) all “plausible” preferences that we have been able to define satisfy those two axioms. Consequently, we will consider only those theories that imply \(\{SCI, Sw\}\). Hereafter, \(\mathcal {C}\) denotes the set of all such theories. The following preliminary lemma shows that DomI (Dominance and Improvement) and SSCI (Strong Same Context and Improvement) together imply SSw (Strong Swap).

Lemma 6

If a preference relation satisfies DomI and SSCI, then it satisfies SSw.

Proof

Let \(\preceq \) be a preference satisfying DomI and SSCI, and let \({ rk} _1\) and \({ rk} _2\) be two rankings such that \(\textsf{SwapRel}({ rk} _1, { rk} _2)\) holds. By definition, there is an agent b different from a such that \({ rk} _1(a)> { rk} _1(b)\) and \({ rk} _2\) is obtained from \({ rk} _1\) by switching a and b. Let \({ rk} _3\) be the ranking that coincides with \({ rk} _1\), except for the position of a, which rises to the level of b, so that \({ rk} _3(a)= { rk} _3(b)\). Notice that it holds \(\textsf{SCRel}({ rk} _1, { rk} _3)\) and \(\textsf{ImprRel}({ rk} _1, { rk} _3)\), the latter thanks to agent b, who is strictly better than a in \({ rk} _1\), and equivalent to a in \({ rk} _3\). Therefore, by SSCI we have that \(\preceq \) strictly prefers \({ rk} _3\) to \({ rk} _1\). Now, the only difference between \({ rk} _3\) and \({ rk} _2\) consists in b moving down to the level that a occupies in \({ rk} _1\). As a consequence, it holds \(\textsf{DomRel}({ rk} _3, { rk} _2)\) and \(\textsf{ImprRel}({ rk} _3, { rk} _2)\). By axiom DomI, \(\preceq \) weakly prefers \({ rk} _2\) over \({ rk} _3\). By transitivity, \(\preceq \) strongly prefers \({ rk} _2\) over \({ rk} _1\). Then, \(\preceq \) satisfies SSw. \(\square \)

Our next result states that the theories in Fig. 5 cover all possible combinations of axioms in \(\mathcal {A}\mathcal {X}\) that imply at least axioms SCI and Sw. We call such theories canonical.

Theorem 7

Each theory \(\mathcal {T} \in \mathcal {C}\) is equivalent to one of the canonical theories in Fig. 5.

Proof

Let \(\mathcal {T} \subseteq \mathcal {A}\mathcal {X}\). Obtain \(\mathcal {T} ' \subseteq \mathcal {T} \) by removing from \(\mathcal {T} \) all axioms that are redundant due to the implications in Fig. 4. Clearly, the preference theories of \(\mathcal {T} \) and \(\mathcal {T} '\) coincide.

If \(SDomI \in \mathcal {T} '\), then either \(\mathcal {T} ' = \{Dom, SDomI\}\) or \(\mathcal {T} ' = \{SDomI\}\). In both cases, the thesis holds because \(\mathcal {T} '\) is one of the theories in Fig. 5. In the rest of the proof we can assume that \(SDomI \not \in \mathcal {T} '\).

Next, assume that \(Dom \in \mathcal {T} '\). Then, \(\mathcal {T} '\) is one of the following theories: (i) \(\{Dom\}\), (ii) \(\{Dom, SSw\}\), (iii) \(\{Dom, SSCI\}\), or (iv) \(\{Dom, SSw, SSCI\}\). The first three theories are canonical whereas, from Lemma 6 and the fact that Dom implies DomI, the theory \(\{Dom, SSw, SSCI\}\) is equivalent to \(\{Dom, SSCI\}\). In the rest of the proof we can assume that \(Dom \not \in \mathcal {T} '\).

By a similar argument as for Dom, it can be proved that the only canonical theories that include DomI are \(\{DomI\}\), \(\{DomI, SSw\}\), and \(\{DomI, SSCI\}\), which are all present in Fig. 5. Thereafter, we assume that \(DomI \not \in \mathcal {T} '\).

The remaining theories which include either SSw or SSCI are \(\{SSw, SSCI\}\), \(\{Sw, SSCI\}\), and \(\{SSw, SCI\}\). All of them are canonical. Finally, \(\{Sw, SCI\}\) is canonical. \(\square \)
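The case analysis of this proof can be replayed mechanically. The sketch below enumerates every subset of \(\mathcal {A}\mathcal {X}\) that implies SCI and Sw, removes the axioms made redundant by the single-axiom implications stated in the proof of Theorem 2, and then applies Lemma 6. The dictionary `IMPLIES` encodes only those stated implications, and the function names are illustrative.

```python
from itertools import combinations

AXIOMS = ("SCI", "SSCI", "Sw", "SSw", "DomI", "SDomI", "Dom")

# Direct implications between single axioms, as stated in the proof of Theorem 2.
IMPLIES = {
    "SCI": set(),
    "Sw": set(),
    "SSCI": {"SCI"},
    "SSw": {"Sw"},
    "DomI": {"Sw", "SCI"},
    "Dom": {"DomI"},
    "SDomI": {"DomI", "SSw", "SSCI"},
}


def closure(theory):
    """All axioms implied by the theory via chains of direct implications."""
    closed = set(theory)
    frontier = list(theory)
    while frontier:
        axiom = frontier.pop()
        for implied in IMPLIES[axiom]:
            if implied not in closed:
                closed.add(implied)
                frontier.append(implied)
    return closed


def reduce_theory(theory):
    """Drop redundant axioms, then apply Lemma 6 (DomI and SSCI imply SSw)."""
    theory = set(theory)
    reduced = {
        axiom for axiom in theory
        if not any(axiom in closure({other}) for other in theory if other != axiom)
    }
    if {"DomI", "SSCI"} <= closure(theory):
        reduced.discard("SSw")
    return frozenset(reduced)


def canonical_theories():
    found = set()
    for size in range(1, len(AXIOMS) + 1):
        for theory in combinations(AXIOMS, size):
            if {"SCI", "Sw"} <= closure(theory):
                found.add(reduce_theory(theory))
    return found
```

With this encoding the enumeration yields twelve reduced theories, the same ones listed case by case in the proof.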

As the following theorem shows, the hierarchy in Fig. 5 among all canonical theories is correct and complete.

Theorem 8

Let \(\mathcal {T} _1, \mathcal {T} _2\) be two of the theories in Fig. 5. Then, \(\mathcal {T} _1 \Rightarrow \mathcal {T} _2\) if and only if there is a path from \(\mathcal {T} _1\) to \(\mathcal {T} _2\) in Fig. 5.

Proof

It is easy to verify that most implications in Fig. 5 directly derive from Theorem 2. For instance, since SDomI implies both DomI and SSCI, then we clearly have that \(\{SDomI\}\) implies \(\{DomI, SSCI\}\). The only two implications that do not directly derive from Theorem 2, namely the fact that \(\{DomI, SSCI\}\) implies both \(\{DomI, SSw\}\) and \(\{SSCI, SSw\}\), follow from Lemma 6 instead.

The counterexamples proving each non-implication can be found in Appendix A.3. \(\square \)

Fig. 5 Hasse diagram for containment between self-interest theories. Lower theories are contained in higher ones. The preferences listed next to a theory belong to that theory and do not belong to any stronger theory in the diagram. The inclusions and lack thereof are proved in Thm. 8, while Thm. 7 proves that this taxonomy is complete, in the sense that every combination of axioms from \(\mathcal {A}\mathcal {X}\) is equivalent to one of the theories in this figure. Moreover, Thm. 11 states that the same taxonomy holds if the axiom Ind is added to all theories.

Note that the hierarchy established by Theorem 8 has a complex relationship with the perfectioning order between preferences, mostly due to the nature of the axiom Dom. For instance, consider the three preferences \(\preceq ^\textrm{ab}\), \(\preceq ^{\textrm{ab},\textrm{eq}}\), and \(\preceq ^{\textrm{ab},\textrm{eq},\top }\). By definition, each of them is perfected by the next one in the list. Fig. 5 shows that the middle one (i.e., \(\preceq ^{\textrm{ab},\textrm{eq}}\)) belongs to the theory \(\{Dom, SDomI\}\), which is stronger than those that contain the other two preferences. Thus, the hierarchy in Fig. 5 and perfectioning agree on the relationship between \(\preceq ^\textrm{ab}\) and \(\preceq ^{\textrm{ab},\textrm{eq}}\), whereas they differ on \(\preceq ^{\textrm{ab},\textrm{eq}}\) and \(\preceq ^{\textrm{ab},\textrm{eq},\top }\). Indeed, \(\preceq ^{\textrm{ab},\textrm{eq},\top }\) (and all standard preferences involving level-based measures \(\top \) and \(\bot \)) violates Dom by distinguishing some 3-tier-equivalent rankings, and therefore belongs to the weaker theory \(\{SDomI\}\), even though it perfects \(\preceq ^{\textrm{ab},\textrm{eq}}\).

Another notable example is the degenerate preference \(\preceq ^{\equiv }\), which is the bottom element for perfectioning (among preferences) but does not belong to the weakest theory in our hierarchy because it satisfies Dom.

Finally, among the theories in Fig. 5, those that only contain strong axioms (namely, \(\{SDomI\}\) and \(\{SSCI, SSw\}\)) are closed under perfectioning, as a consequence of Lemma 4.

Hereafter, let \(\preceq ^{{ dom}}\) be the pre-order on \(\mathcal {R}(A)\) representing the dominance relation among rankings, i.e., \(\preceq ^{{ dom}}({ rk} _1, { rk} _2)\) iff \(\textsf{DomRel}({ rk} _1, { rk} _2)\). Notice that \(\preceq ^{{ dom}}\) is not a preference because it is not a total order. The next theorem characterizes the strongest preference theory \({ Pref}_A(Dom, SDomI)\) in terms of \(\preceq ^{{ dom}}\).

Theorem 9

A preference belongs to \({ Pref}_A\)(Dom, SDomI) iff it contains and perfects \(\preceq ^{{ dom}}\).

Proof

Let \(\preceq \,\in \! { Pref}_A(Dom, SDomI)\). First, we show that \(\preceq \) perfects \(\preceq ^{{ dom}}\). Let \({ rk} _1, { rk} _2 \in \mathcal {R}(A)\) be such that \(\preceq ^{{ dom}}\) strictly prefers \({ rk} _2\) to \({ rk} _1\), that is, \({ rk} _1 \prec ^{{ dom}}{ rk} _2\). By definition, we have that inclusions (III) and (IV) hold, and at least one of them is strict. The two inclusions together imply Dominance. If (III) is strict, there exists \(b \in { ab}_a({ rk} _1) \setminus { ab}_a({ rk} _2)\). Equivalently, \({ rk} _1(a)> { rk} _1(b)\) and \(rk_2(b) \ge { rk} _2(a)\), which implies Improvement. Since Dominance and Improvement hold, by axiom SDomI we have that \({ rk} _1\prec { rk} _2\).

If instead (III) is an equality and (IV) is strict, it means that there exists \(b \in { eq}_a({ rk} _1) \setminus { eq}_a({ rk} _2)\). As b cannot belong to \({ ab}_a({ rk} _2)\), it must belong to \({ bl}_a({ rk} _2)\). Equivalently, \({ rk} _1(a)={ rk} _1(b) \) and \({ rk} _2(b)>{ rk} _2(a)\), which again implies Improvement. As before, we have that \({ rk} _1 \prec { rk} _2 \). Because \(\preceq \) is a total order, no rankings are incomparable for \(\preceq \). This proves that \(\preceq \) perfects \(\preceq ^{{ dom}}\). Finally, notice that \(\preceq \) clearly contains \(\preceq ^{{ dom}}\) because it satisfies axiom Dom.

For the other implication, let \(\preceq \) be a preference that contains and perfects \(\preceq ^{{ dom}}\). Due to containment, \(\preceq \) satisfies axiom Dom. To prove that it also satisfies axiom SDomI, it suffices to notice that whenever Dominance and Improvement hold, by Lemma 1, \(\textsf{DomRel}({ rk} _2, { rk} _1)\) does not hold. Equivalently, \(\preceq ^{{ dom}}\) strictly prefers \({ rk} _2\) to \({ rk} _1\). Since \(\preceq \) perfects \(\preceq ^{{ dom}}\), we have \({ rk} _1\prec { rk} _2\) and the thesis follows. \(\square \)

To appreciate the relevance of the previous theorem, notice that Lemma 1 tells us that all preferences satisfying the axiom Dom are 3-tier (that is, they do not distinguish between 3-tier equivalent rankings). However, if Dominance establishes a strong preference between two rankings, a preference satisfying Dom may instead equate those orders. For an extreme example, the degenerate preference \(\preceq ^{\equiv }{}\) equates all rankings, but it still satisfies Dom. Theorem 9 states that adding SDomI to the picture forces preferences to uphold those strong preferences as well. Indeed, since preferences are total orders, a preference refining and perfecting \(\preceq ^{{ dom}}\) can only (and must) establish a preference among rankings that are incomparable for \(\preceq ^{{ dom}}\).

4.1 Theories with Linear Ranking Axioms

In this section we consider theories that include one of the two axioms pertaining to linear rankings: Lin and SLin. Recall that SLin implies Lin, so the combination \(\{\,Lin, SLin\}\) is equivalent to simply including SLin.

The main result of this section states that the taxonomy described in Fig. 5 does not change when either of those two axioms is added uniformly to all theories. That is, the addition of either axiom does not cause any collapse in the taxonomy. This is formally proved by the following theorem.

Theorem 10

Let \(\mathcal {T} _1,\mathcal {T} _2\in \mathcal {C}\). The following are equivalent:

1. \(\mathcal {T} _1\cup \{Lin\}\) implies \(\mathcal {T} _2\cup \{Lin\}\);

2. \(\mathcal {T} _1\cup \{SLin\}\) implies \(\mathcal {T} _2\cup \{SLin\}\);

3. \(\mathcal {T} _1\) implies \(\mathcal {T} _2\).

Proof

We prove that (3) is equivalent to (1) and equivalent to (2). The fact that (3) implies both (1) and (2) is trivial. To prove that both (1) and (2), separately, imply (3), assume by contraposition that (3) is false, i.e., \(\mathcal {T} _1\) does not imply \(\mathcal {T} _2\). By Theorem 7, we can assume that \(\mathcal {T} _1\) and \(\mathcal {T} _2\) are canonical. For most pairs of canonical theories, the preference used in Theorem 8 to show that \(\mathcal {T} _1\) does not imply \(\mathcal {T} _2\) satisfies SLin, and hence Lin as well. In those cases, the same preference also shows that \(\mathcal {T} _1\cup \{SLin\}\) does not imply \(\mathcal {T} _2 \cup \{SLin\}\) (so, (2) is false), and that \(\mathcal {T} _1\cup \{Lin\}\) does not imply \(\mathcal {T} _2 \cup \{Lin\}\) (so, (1) is false). Two pairs of theories require new counterexamples, that are reported in the Appendix.

- \(\{Dom, SLin\} \not \!\!\Longrightarrow \{SSw\}\). The fact that Dom does not imply SSw is easily proved by the degenerate preference \(\preceq ^{\equiv }\), which satisfies Lin but not SLin. We therefore describe in Fig. 7b a custom preference \(\preceq ^4\) on three agents that satisfies Dom and SLin but not SSw.

- \(\{Dom, SSCI, SLin \} \not \!\!\Longrightarrow \{SDomI\}\). This non-implication is witnessed by the 3-agent preference \(\preceq ^5\) depicted in Fig. 7e.

\(\square \)

4.2 Theories with the Indifference Axiom

In this section we study the interplay of axiom Ind with the other axioms. First of all, the hierarchy depicted in Fig. 5 does not change in case we assume indifference to the others.

Theorem 11

Let \(\mathcal {T} _1,\mathcal {T} _2 \in \mathcal {C}\). Then, \(\mathcal {T} _1\cup \{Ind\}\) implies \(\mathcal {T} _2\cup \{Ind\}\) if and only if \(\mathcal {T} _1\) implies \(\mathcal {T} _2\).

Proof

The if direction being trivial, for the only if direction, assume by contraposition that \(\mathcal {T} _1\) does not imply \(\mathcal {T} _2\).

Now, all the non-implications in Theorem 8 have been proved using as witnesses preferences that satisfy Ind (see Appendix A.3). Consequently, there exists a preference P that satisfies \(\mathcal {T} _1\cup \{Ind\}\) and does not satisfy \(\mathcal {T} _2\). \(\square \)

For what concerns the interplay with the linear ranking axioms, adding the indifference axiom to one of the canonical theories forces axiom Lin, or in some cases SLin, to hold, as shown in the following two results. The first result focuses on the two swap axioms, and states that, when paired with indifference, the weak (resp., strong) swap axiom implies the weak (resp., strong) linear axiom.

Theorem 12

Axioms Sw and Ind together imply Lin whereas axioms SSw and Ind together imply SLin.

Proof

Let \({ rk} _1\) and \({ rk} _2\) be two linear rankings and assume that the preference \(\preceq \) of agent a satisfies Sw and Ind. First, if \({ rk} _1(a)={ rk} _2(a)\), then \({ rk} _2\) is obtained from \({ rk} _1\) by permuting the agents in \(A\setminus \{a\}\). Consequently, by Ind, we immediately have that (i) \({ rk} _1\sim { rk} _2\).

Now let \({ rk} _1(a)>{ rk} _2(a)\) and let b be the agent such that \({ rk} _2(b)={ rk} _1(a)\). Let \({ rk} _1'\) be a ranking such that \({ rk} _1'(a)={ rk} _1(a)\), \({ rk} _1'(b)={ rk} _2(a)\), and for all other agents c distinct from a and b, \({ rk} _1'(c)={ rk} _2(c)\). By construction, \({ rk} _1'\) is obtained from \({ rk} _1\) by permuting the agents in \(A\setminus \{a\}\), hence \({ rk} _1'\sim { rk} _1\) by Ind. Moreover, \({ rk} _2\) is obtained from \({ rk} _1'\) by swapping the positions of a and b, hence \(\textsf{SwapRel}_a({ rk} _1', { rk} _2)\) holds. Consequently: (ii) if \(\preceq \) satisfies Sw, we have that \({ rk} _1 \sim { rk} '_1 \preceq { rk} _2\) – which means that \(\preceq \) satisfies Lin; (iii) if \(\preceq \) satisfies SSw, \({ rk} _1 \sim { rk} '_1 \prec { rk} _2\) – which, together with (i), means that \(\preceq \) satisfies SLin. \(\square \)
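The intermediate ranking \({ rk} _1'\) used in this proof is easy to build explicitly. The sketch below represents linear rankings as dictionaries from agents to positions (1 is the top); the four-agent instance and the function names are illustrative assumptions.

```python
def build_intermediate(rk1, rk2, a="a"):
    """Return rk1' and the agent b of the proof, for linear rk1, rk2 with rk1[a] > rk2[a]."""
    if rk1[a] <= rk2[a]:
        raise ValueError("the construction requires rk1[a] > rk2[a]")
    # b is the agent that occupies in rk2 the position a holds in rk1.
    partner = next(x for x in rk2 if rk2[x] == rk1[a])
    rk1_prime = dict(rk2)          # all agents other than a and b keep their rk2 position
    rk1_prime[a] = rk1[a]          # a stays where it is in rk1
    rk1_prime[partner] = rk2[a]    # b takes the position a holds in rk2
    return rk1_prime, partner


def swap(rk, x, y):
    """Exchange the positions of agents x and y."""
    out = dict(rk)
    out[x], out[y] = rk[y], rk[x]
    return out


rk1 = {"b": 1, "c": 2, "d": 3, "a": 4}
rk2 = {"c": 1, "a": 2, "d": 3, "b": 4}
rk1_prime, partner = build_intermediate(rk1, rk2)
```

Here `rk1_prime` keeps a in position 4, so it differs from `rk1` only by a permutation of the other agents, and swapping a with `partner` turns it into `rk2`.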

The next result focuses on the two same-context axioms, and again shows that, when paired with indifference, the weak (resp., strong) same-context axiom implies the weak (resp., strong) linear axiom.

Theorem 13

Axioms SCI and Ind together imply Lin whereas axioms SSCI and Ind together imply SLin.

Proof

Let \({ rk} _1\) and \({ rk} _2\) be two linear rankings and assume that the preference \(\preceq \) of agent a satisfies Ind. If \({ rk} _1(a)={ rk} _2(a)\), it holds (i) \({ rk} _1\sim { rk} _2\) (i.e., \({ rk} _1 \preceq { rk} _2\) and \({ rk} _2 \preceq { rk} _1\)).

Then, assume \({ rk} _1(a)>{ rk} _2(a)\) and let \(A_1=\{b\in A\mid { rk} _2(b)< { rk} _2(a)\}\), \(A_2=\{b\in A\mid { rk} _2(a)<{ rk} _2(b)\le { rk} _1(a)\}\), and \(A_3=\{b\in A\mid { rk} _1(a)< { rk} _2(b)\}\). Let \({ rk} _1'\) be a ranking such that \({ rk} _1'(a)={ rk} _1(a)\), \({ rk} _1'(b)={ rk} _2(b)\) for all \(b\in A_1\cup A_3\), and \({ rk} _1'(b)={ rk} _2(b)-1\) for all \(b\in A_2\). By construction, \({ rk} _1'\) is obtained from \({ rk} _1\) by permuting the agents in \(A\setminus \{a\}\), hence \({ rk} _1'\sim { rk} _1\) by Ind. Moreover, it is easy to see that w.r.t. \({ rk} _1'\), \({ rk} _2\) satisfies same-context and improvement, i.e., \(\textsf{SCRel}_a({ rk} '_1, { rk} _2)\) and \(\textsf{ImprRel}_a({ rk} '_1, { rk} _2)\) hold. Consequently, we have that: (ii) if \(\preceq \) satisfies SCI, \({ rk} _1\preceq { rk} _2\) – and hence \(\preceq \) satisfies Lin; (iii) if \(\preceq \) satisfies SSCI, \({ rk} _1\prec { rk} _2\) – which, together with (i), means that \(\preceq \) satisfies SLin. \(\square \)
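As for Theorem 12, the ranking \({ rk} _1'\) can be constructed and checked on a concrete instance. In the sketch below, linear rankings are dictionaries from agents to positions (1 is the top), and the two checkers encode same-context and improvement as they are used in the proofs of this paper; these encodings and the four-agent instance are assumptions made for illustration.

```python
def build_same_context(rk1, rk2, a="a"):
    """Return rk1' for linear rankings rk1, rk2 with rk1[a] > rk2[a]."""
    top, bottom = rk2[a], rk1[a]
    if bottom <= top:
        raise ValueError("the construction requires rk1[a] > rk2[a]")
    rk1_prime = {a: bottom}
    for x, pos in rk2.items():
        if x == a:
            continue
        if top < pos <= bottom:
            rk1_prime[x] = pos - 1   # A2: shifted one place up
        else:
            rk1_prime[x] = pos       # A1 and A3: unchanged
    return rk1_prime


def same_context(rk1, rk2, a="a"):
    """The relative order among the agents other than a is unchanged."""
    others = [x for x in rk1 if x != a]
    return all(
        (rk1[b] < rk1[c]) == (rk2[b] < rk2[c])
        and (rk1[b] == rk1[c]) == (rk2[b] == rk2[c])
        for b in others for c in others
    )


def improvement(rk1, rk2, a="a"):
    """Some agent was above a and no longer is, or was tied with a and is now below."""
    return any(
        (rk1[b] < rk1[a] and rk2[b] >= rk2[a])
        or (rk1[b] == rk1[a] and rk2[b] > rk2[a])
        for b in rk1 if b != a
    )


rk1 = {"b": 1, "c": 2, "d": 3, "a": 4}
rk2 = {"c": 1, "a": 2, "d": 3, "b": 4}
rk1_prime = build_same_context(rk1, rk2)
```

In this instance agents d and b form the set \(A_2\): each moves one place up in `rk1_prime`, which keeps their mutual order and leaves position 4 free for a.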

In conclusion, the following theorem shows that the “indifferent” versions of the theories in Fig. 5 satisfy Lin. Moreover, those that imply either SSCI or SSw satisfy SLin too.

Theorem 14

For all \(\mathcal {T} \in \mathcal {C}\), the following implications hold:

1. \(\mathcal {T} \cup \{Ind\}\) implies Lin;

2. \(\mathcal {T} \cup \{Ind\}\) implies SLin iff \(\mathcal {T} \) implies SSCI or SSw.

Proof

The first statement as well as the right-to-left direction of the second statement directly derive from Theorems 12 and 13. For the other direction, assume that \(\mathcal {T} \) implies neither SSCI nor SSw. This means that \(\mathcal {T} \subseteq \{SCI, Sw, Dom, DomI\}\). Then, the assertion derives from the fact that Dom implies the other axioms SCI, Sw, and DomI, but it does not imply SLin (consider \(\preceq ^{\equiv }{}\) as witness). \(\square \)

5 Quantitative Rewards as Preference-Inducing Mechanisms

The previous section showed that agents may exhibit many different preferences when confronted with an outcome that is a (weak) ranking. The designer of a competition may then try to induce a specific type of preference by enriching the outcome with a numerical (e.g., monetary) reward for each participant.

A reward policy (or simply reward) is a function \(\rho : \mathcal {R}(A) \rightarrow (A \rightarrow \mathbb {R})\) that assigns a real-valued reward to each agent in A, for each given ranking in \(\mathcal {R}(A)\). We only consider rewards that preserve strict order in a weak sense: formally, for all \(a, b \in A\), if \({ rk} (a)>{ rk} (b)\) then \(\rho ({ rk})(a) \le \rho ({ rk})(b)\). We allow rewards to be more or less discriminating than the ranking. The only requirement is that they do not invert the order of the agents. A faithful reward preserves order: \({ rk} (a)\ge { rk} (b)\) iff \(\rho ({ rk})(a) \le \rho ({ rk})(b)\). Intuitively, a faithful reward only establishes the distance between different layers from the ranking, whereas a general reward can also perform tie-breaking (dividing a single layer into multiple ones) or aggregate adjacent layers.

Additionally, a reward \(\rho \) is anonymous if it is invariant under permutations of agents. Formally, for all rankings \({ rk} _1,{ rk} _2\) such that \({ rk} _2\) is obtained from \({ rk} _1\) by applying the permutation \(\pi : A \rightarrow A\) of the agents, it holds \(\rho ({ rk} _1)(a) = \rho ({ rk} _2)(\pi (a))\) for all \(a \in A\). Intuitively, anonymity is a fairness condition ensuring that the designer does not favour any agent in particular and the rewards are assigned only on the basis of the mutual placements in the ranking.Footnote 5

A straightforward application of the definition proves that anonymous rewards assign the same amount to equivalent agents. In other words, anonymous rewards cannot break ties.

Lemma 15

If \(\rho \) is an anonymous reward and \({ rk} \) is a ranking such that \({ rk} (a)={ rk} (b)\), then \(\rho ({ rk})(a) = \rho ({ rk})(b)\).
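For small sets of agents, anonymity can be tested by brute force. The following sketch assumes the dictionary encoding of rankings (agent to level, level 1 on top) and enumerates all weak orders and all permutations; the helpers `all_rankings` and `is_anonymous` and the two sample rewards `by_level` and `alphabetical` are hypothetical names chosen for this illustration.

```python
from itertools import permutations, product


def all_rankings(agents):
    """Yield every weak order on agents as a dict agent -> level (levels 1..l, all used)."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))


def is_anonymous(reward, agents):
    """Check rho(rk1)(a) == rho(rk2)(pi(a)) for all rankings rk1 and permutations pi."""
    for rk1 in all_rankings(agents):
        for image in permutations(agents):
            pi = dict(zip(agents, image))
            rk2 = {pi[a]: rk1[a] for a in agents}  # rk1 with agents renamed by pi
            p1, p2 = reward(rk1), reward(rk2)
            if any(p1[a] != p2[pi[a]] for a in agents):
                return False
    return True


def by_level(rk):
    """Reward depending only on the level of the agent."""
    return {a: -rk[a] for a in rk}


def alphabetical(rk):
    """Admissible reward that breaks ties by agent name (a fixed order)."""
    order = sorted(rk, key=lambda a: (rk[a], a))
    return {a: len(rk) - pos for pos, a in enumerate(order)}


agents = ["a", "b", "c"]
print(is_anonymous(by_level, agents))      # True
print(is_anonymous(alphabetical, agents))  # False
```

The second reward fails the test precisely because it separates tied agents, in line with Lemma 15.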

Note that anonymous rewards are not necessarily faithful, a counterexample being the degenerate policy that, independently of the ranking, assigns the same constant reward to all agents.

A reward \(\rho \) naturally induces a preference \(\preceq _a^\rho \) for each agent \(a \in A\), where \({ rk} _1 \preceq _a^\rho { rk} _2\) iff \(\rho ({ rk} _1)(a) \le \rho ({ rk} _2)(a)\). Moreover, we say that a preference is compatible with a reward \(\rho \) if it perfects the preference induced by \(\rho \). We say that a reward \(\rho \) satisfies one of the preference axioms from Section 3 iff for all agents a, the preference \(\preceq _a^\rho \) induced by \(\rho \) on a satisfies that axiom. We now investigate the properties of the preferences induced by different types of rewards.
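The induced preference can be sketched in a few lines. The example below assumes rankings encoded as dictionaries from agents to levels (level 1 on top) and uses a hypothetical reward, `top_level_split`, that divides a unit prize equally among the agents on the top level; `weakly_prefers` is likewise a name introduced only for this illustration.

```python
from fractions import Fraction


def top_level_split(rk):
    """Hypothetical reward: the agents on level 1 share a unit prize equally."""
    winners = [a for a in rk if rk[a] == 1]
    share = Fraction(1, len(winners))
    return {a: (share if rk[a] == 1 else Fraction(0)) for a in rk}


def weakly_prefers(reward, agent, rk1, rk2):
    """True iff rk1 precedes rk2 in the preference induced by reward on agent,
    i.e. the agent earns at least as much in rk2 as in rk1."""
    return reward(rk1)[agent] <= reward(rk2)[agent]


alone = {"a": 1, "b": 2, "c": 3}   # a alone on top
tied = {"a": 1, "b": 1, "c": 2}    # a shares the top level with b
second = {"a": 2, "b": 1, "c": 3}  # a on level 2
last = {"a": 3, "b": 1, "c": 2}    # a on level 3

print(weakly_prefers(top_level_split, "a", tied, alone))   # True
print(weakly_prefers(top_level_split, "a", alone, tied))   # False
print(weakly_prefers(top_level_split, "a", second, last),
      weakly_prefers(top_level_split, "a", last, second))  # True True
```

Under this reward, agent a strictly prefers winning alone to sharing the top level, and is indifferent between the second and the third level.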

Lemma 16

All anonymous rewards satisfy axiom Ind.

Proof

The statement follows directly from the fact that if \(\textsf{IndRel}({ rk} _1, { rk} _2)\) holds, then \({ rk} _2\) is obtained from \({ rk} _1\) by applying a permutation \(\pi \) where agent a is a fixed point (i.e., \(\pi (a)=a\)). \(\square \)

The next result draws a close connection between anonymity of a reward and the two axioms based on the swap operation: axioms Sw and SSw.

Lemma 17

If a reward is anonymous then it satisfies Sw. Moreover, an anonymous reward is faithful iff it satisfies SSw.

Proof

Assume that \(\rho \) is an anonymous reward, and let \({ rk} _1,{ rk} _2\) be two rankings such that \(\textsf{SwapRel}_a({ rk} _1,{ rk} _2)\) holds. By definition, there exists an agent b such that \({ rk} _1(a)>{ rk} _1(b)\) and \({ rk} _2\) is obtained by switching the positions of a and b. Since \(\rho \) is anonymous, it holds \(\rho ({ rk} _1)(a) = \rho ({ rk} _2)(b)\) and \(\rho ({ rk} _1)(b) = \rho ({ rk} _2)(a)\). By definition of reward, it also holds that \(\rho ({ rk} _1)(a) \le \rho ({ rk} _1)(b)\). As a consequence, we have that \(\rho ({ rk} _1)(a) \le \rho ({ rk} _2)(a)\), satisfying axiom Sw.

It is straightforward to verify that if additionally \(\rho \) is faithful, then \(\rho ({ rk} _1)(a) < \rho ({ rk} _2)(a)\), satisfying axiom SSw.

Conversely, assume that \(\rho \) is anonymous and satisfies SSw, and let \({ rk} \) be a ranking. For any pair of agents a, b, we can assume w.l.o.g. that either \({ rk} (a)={ rk} (b)\) or \({ rk} (a)>{ rk} (b)\). By Lemma 15, \({ rk} (a)={ rk} (b)\) implies \(\rho ({ rk})(a) = \rho ({ rk})(b)\). Moreover, if \({ rk} (a)>{ rk} (b)\), let \({ rk} '\) be obtained from \({ rk} \) by switching the positions of a and b: since \(\rho \) satisfies SSw, we have \(\rho ({ rk})(a) < \rho ({ rk} ')(a)\), and by anonymity \(\rho ({ rk} ')(a) = \rho ({ rk})(b)\), hence \(\rho ({ rk})(a) < \rho ({ rk})(b)\). Together, these two implications prove that \(\rho \) is faithful. \(\square \)

As far as linear rankings are concerned, being anonymous is sufficient for a reward to satisfy axiom Lin, and additionally faithfulness implies the stronger axiom SLin.

Theorem 18

All anonymous rewards satisfy axiom Lin and all anonymous and faithful rewards satisfy axiom SLin.

Proof

From Lemmas 16 and 17, we know that an anonymous reward \(\rho \) satisfies axioms Ind and Sw and, if in addition it is also faithful, it satisfies SSw. Then, the conclusion follows immediately from Theorem 12. \(\square \)

A common requirement for a reward policy is that it should split a fixed amount of money (the jackpot) among the participants. In that case, we say that the reward is cake-cutting and we conventionally set the jackpot to 1. Formally, for all subsets of agents \(A'\subseteq A\), denote by \(\rho ({ rk})(A')\) the sum of the rewards \(\rho ({ rk})(a)\) assigned to all the agents a in \(A'\). A reward is cake-cutting if for all rankings \({ rk} \) it holds \(\rho ({ rk})(A)=1\).

5.1 Level-Averaged Rewards

Next, we introduce a class of anonymous and cake-cutting rewards, called level-averaged, that will be shown to play a special role in the taxonomy of rewards. Intuitively, level-averaged rewards generalize the so-called fractional ranking (see [35, 36]), in that a group of tied agents receive the arithmetic mean of what they would receive if the tie were broken in an arbitrary way. The formal definition follows.

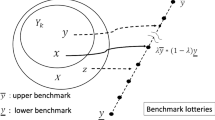

Let \({ rk} \) be a ranking with \(\ell \) levels, and \(n_i\) be the number of agents at level \(i=1,\ldots , \ell \) (1 is the top level). Clearly, the sum n of all the \(n_i\)’s is equal to the total number of agents. A reward \(\rho \) is level-averaged iff there exists a sequence of real coefficients \(\lambda _1\ge \cdots \ge \lambda _n\) summing up to 1 such that if a is an agent at level i, it receives the reward

$$\rho ({ rk})(a) = \frac{1}{n_i} \sum _{j=N_i+1}^{N_i+n_i} \lambda _j,$$

where \(N_i\) is the sum of all \(n_h\) with \(h<i\). Notice that \(N_i = | { ab}_a({ rk}) |\) and \(n_i = | { eq}_a({ rk}) |\). A reward is strictly level-averaged if it is level-averaged and its coefficients are strictly monotonic, that is, if \(\lambda _1> \cdots > \lambda _n\).

Example 3

Consider a set of three agents a, b, c and the strictly level-averaged reward \(\rho \) with coefficients \((\lambda _1,\lambda _2,\lambda _3)=(\frac{1}{2},\frac{1}{3},\frac{1}{6})\). For any linear ranking, \(\rho \) attributes reward \(\lambda _i\) to the agent on level i. Next, consider the ranking \({ rk} \) that puts c alone on the top and a and b together at level 2 (i.e., \({ rk} (c) = 1\) and \({ rk} (a)={ rk} (b)=2\)). When applied to \({ rk} \), \(\rho \) assigns reward \(\frac{1}{2}\) to c, because it is the only agent on level 1; agents a and b receive \(\frac{1}{2}(\frac{1}{3}+\frac{1}{6}) = \frac{1}{4}\) each.
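The computation in Example 3 can be reproduced with a short script. This is a minimal sketch, assuming that rankings are encoded as dictionaries from agents to levels (level 1 on top) and that the coefficients are stored in a 0-indexed list, so that \(\lambda _j\) corresponds to `coeffs[j - 1]`; the name `level_averaged` is hypothetical.

```python
from fractions import Fraction


def level_averaged(coeffs):
    """Build the level-averaged reward with coefficients lambda_1 >= ... >= lambda_n."""
    def reward(rk):
        payoff = {}
        for agent, level in rk.items():
            above = sum(1 for other in rk if rk[other] < level)   # N_i
            tied = sum(1 for other in rk if rk[other] == level)   # n_i (includes agent)
            window = coeffs[above:above + tied]  # lambda_{N_i+1}, ..., lambda_{N_i+n_i}
            payoff[agent] = sum(window, Fraction(0)) / tied
        return payoff
    return reward


rho = level_averaged([Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)])

linear = rho({"a": 1, "b": 2, "c": 3})    # each agent receives lambda_i
example3 = rho({"a": 2, "b": 2, "c": 1})  # c on top, a and b tied on level 2

print(linear)    # a: 1/2, b: 1/3, c: 1/6
print(example3)  # a: 1/4, b: 1/4, c: 1/2
```

Exact rational arithmetic (`fractions.Fraction`) is used so that the cake-cutting condition can be checked without rounding issues.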

The first result on level-averaged rewards shows which axioms they satisfy. In particular, strictly level-averaged rewards induce preferences that satisfy all the axioms introduced in Section 3.

Lemma 19

Level-averaged rewards are anonymous, cake-cutting, and satisfy the axioms Dom and Lin. Strictly level-averaged rewards additionally are faithful and satisfy axioms SDomI and SLin.

Proof

Anonymity and cake-cutting are obvious consequences of the definition of level-averaged reward, whereas Lin is a direct consequence of anonymity and Theorem 18.

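As for Dom, let \({ rk} _1\) and \({ rk} _2\) be two rankings s.t. \(\textsf{DomRel}_a({ rk} _1,{ rk} _2)\) holds, for some agent a. Following the definition of level-averaged reward, let \(n_1,m_1,n_2,m_2\) be the integers such that:

$$\rho ({ rk} _1)(a) = \frac{1}{n_1} \sum _{j=m_1}^{m_1+n_1-1} \lambda _j \quad \text {and} \quad \rho ({ rk} _2)(a) = \frac{1}{n_2} \sum _{j=m_2}^{m_2+n_2-1} \lambda _j.$$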

In words, \(\rho ({ rk} _1)(a)\) is the arithmetic mean of the sequence of coefficients \(\lambda _{m_1}, \ldots , \lambda _{m_1+n_1-1}\), and \(\rho ({ rk} _2)(a)\) is the arithmetic mean of \(\lambda _{m_2}, \ldots , \lambda _{m_2+n_2-1}\). Recall that the sequence of \(\lambda _j\)’s is monotonically non-increasing.

Now, inclusion (III) states that the set of agents above a in \({ rk} _2\) is a subset of the set of agents above a in \({ rk} _1\). This implies that \(m_2 \le m_1\). Additionally, inclusion (IV) states that the set of agents above or equivalent to a in \({ rk} _2\) is also a subset of the corresponding set in \({ rk} _1\). This implies that \(m_2 + n_2 \le m_1 + n_1\). Summarizing, the sequence of \(\lambda _j\)’s whose mean is \(\rho ({ rk} _2)(a)\) starts earlier (index-wise) and ends earlier than the corresponding sequence for \(\rho ({ rk} _1)(a)\). It follows that \(\rho ({ rk} _2)(a) \ge \rho ({ rk} _1)(a)\) and any a-preference compatible with \(\rho \) must weakly prefer \({ rk} _2\) over \({ rk} _1\).

We now prove that if \(\rho \) is strictly level-averaged, it additionally satisfies SDomI. Let \({ rk} _1\) and \({ rk} _2\) be two rankings s.t. \(\textsf{DomRel}_a({ rk} _1,{ rk} _2)\) and \(\textsf{ImprRel}_a({ rk} _1,{ rk} _2)\) hold, for some agent a. From the previous argument, we know that \(\rho ({ rk} _2)(a) \ge \rho ({ rk} _1)(a)\). We show that \(\textsf{ImprRel}_a\) forces such inequality to be strict. By Lemma 1, one of the two inequalities \(m_2 \le m_1\) and \(m_2 + n_2 \le m_1 + n_1\) is strict. Thus, either the sequence of \(\lambda _j\)’s whose mean is \(\rho ({ rk} _2)(a)\) starts strictly earlier than the corresponding sequence for \(\rho ({ rk} _1)(a)\), or the former ends strictly earlier than the latter. In either case, since the sequence of \(\lambda _j\)’s is strictly decreasing, we obtain that \(\rho ({ rk} _2)(a) > \rho ({ rk} _1)(a)\) and hence \(\rho \) satisfies SDomI.

Finally, since SDomI implies SSw, then by Lemma 17 and Theorem 18 strictly level-averaged rewards are faithful and satisfy SLin. \(\square \)
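As an illustrative instance of the window argument used for Dom (a worked example of ours, reusing the coefficients of Example 3), take three agents a, b, c and \((\lambda _1,\lambda _2,\lambda _3)=(\frac{1}{2},\frac{1}{3},\frac{1}{6})\). Let \({ rk} _1\) place c alone on top with a and b tied on level 2, and let \({ rk} _2\) place a and b tied on top with c below. The two inclusions recalled in the proof hold: the set of agents above a in \({ rk} _2\) is empty, hence contained in \(\{c\}\), and the set of agents above or equivalent to a in \({ rk} _2\) is \(\{a,b\}\), contained in \(\{a,b,c\}\). Here \(m_1=2\), \(n_1=2\), \(m_2=1\), \(n_2=2\), so that \(m_2\le m_1\) and \(m_2+n_2\le m_1+n_1\), and indeed

$$\rho ({ rk} _2)(a) = \frac{1}{2}\left( \lambda _1+\lambda _2\right) = \frac{5}{12} \;>\; \frac{1}{4} = \frac{1}{2}\left( \lambda _2+\lambda _3\right) = \rho ({ rk} _1)(a).$$

The window of coefficients averaged in \({ rk} _2\) starts and ends one position earlier than the one averaged in \({ rk} _1\), and the strict inequality reflects the fact that the coefficients are strictly decreasing.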

As a marginal note to the previous lemma, the degenerate level-averaged reward where all \(\lambda _i\) are equal to \(\frac{1}{n}\) is obviously not faithful.
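For a small number of agents, the claims about faithfulness can be verified exhaustively. The sketch below assumes rankings encoded as dictionaries from agents to levels (level 1 on top); the helpers `all_rankings`, `level_averaged` and `is_faithful` are hypothetical names, and the check covers three agents only, so it is a sanity test rather than a proof.

```python
from fractions import Fraction
from itertools import product


def all_rankings(agents):
    """Yield every weak order on agents as a dict agent -> level (levels 1..l, all used)."""
    n = len(agents)
    for levels in product(range(1, n + 1), repeat=n):
        if set(levels) == set(range(1, max(levels) + 1)):
            yield dict(zip(agents, levels))


def level_averaged(coeffs):
    """Level-averaged reward with coefficients lambda_1 >= ... >= lambda_n (0-indexed list)."""
    def reward(rk):
        payoff = {}
        for agent, level in rk.items():
            above = sum(1 for other in rk if rk[other] < level)  # N_i
            tied = sum(1 for other in rk if rk[other] == level)  # n_i
            payoff[agent] = sum(coeffs[above:above + tied], Fraction(0)) / tied
        return payoff
    return reward


def is_faithful(reward, agents):
    """True iff on every ranking rk(a) >= rk(b) holds exactly when rho(a) <= rho(b)."""
    for rk in all_rankings(agents):
        p = reward(rk)
        if any((rk[a] >= rk[b]) != (p[a] <= p[b]) for a in agents for b in agents):
            return False
    return True


agents = ["a", "b", "c"]
strict = level_averaged([Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)])
uniform = level_averaged([Fraction(1, 3)] * 3)

cake_cutting = all(sum(strict(rk).values()) == 1 for rk in all_rankings(agents))
print(cake_cutting, is_faithful(strict, agents), is_faithful(uniform, agents))
# True True False
```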

We can actually prove that being a level-averaged reward is equivalent to being anonymous, cake-cutting, and satisfying Dom.

Theorem 20

A reward is level-averaged if and only if it is anonymous, cake-cutting, and satisfies Dom.

Proof

The “only if” direction is a consequence of Lemma 19. The “if” direction is proved by induction on the number of agents n. The base case \(n=1\) trivially follows from the cake-cutting condition.

Assume by induction that any anonymous, cake-cutting, and Dom-satisfying reward on n agents is level-averaged. Let \(\rho \) be a reward on \(n+1\) agents \(A \cup \{ a\}\), where \(a \not \in A\), which is anonymous, cake-cutting, and Dom-satisfying.

We define \(\rho '\) as the projection of \(\rho \) on A obtained by fixing the position of a as the top element in the order. Formally, for all rankings \({ rk} _A\) on A and agents \(b \in A\), we set

$$\rho '({ rk} _A)(b) = \frac{\rho ({ rk} _A^a)(b)}{\rho ({ rk} _A^a)(A)},$$

where \({ rk} _A^a\) is the ranking on \(A\cup \{a\}\) obtained from \({ rk} _A\) by placing a above all other agents.

The reward \(\rho '\) inherits anonymity from \(\rho \) and is cake-cutting by construction. We show that it also satisfies Dom. Let \({ rk} _1\) and \({ rk} _2\) be two rankings on A such that \(\textsf{DomRel}_b({ rk} _1, { rk} _2)\) holds for an agent \(b\in A\). Notice that adding a on top of two rankings preserves dominance among them from the point of view of all the other agents. Then, since \(\rho \) satisfies Dom, it holds (i) \(\rho ({ rk} _1^a)(b)\le \rho ({ rk} _2^a)(b)\). Moreover, since in both \({ rk} _1^a\) and \({ rk} _2^a\) the agent a is the only agent on level one, we have that \(\textsf{DomRel}_a({ rk} ^a_1, { rk} ^a_2)\) and \(\textsf{DomRel}_a({ rk} ^a_2, { rk} ^a_1)\), which implies (ii) \(\rho ({ rk} ^a_1)(a)=\rho ({ rk} ^a_2)(a)\). In turn, conditions (i) and (ii) imply that

$$\rho '({ rk} _1)(b) \le \rho '({ rk} _2)(b),$$

and hence \(\rho '\) satisfies Dom. Summarizing, we have that \(\rho '\) is anonymous, cake-cutting, and satisfies Dom. Therefore, by the inductive hypothesis \(\rho '\) is level-averaged with coefficients, say, \(\{\lambda _1, \ldots , \lambda _n \}\). It remains to prove that \(\rho \) is also level-averaged, with suitable coefficients.

Let \({ rk} _A\) be an arbitrary ranking over A and \({ rk} _A^a\) be the extension where a is placed above all other agents. We set \(\lambda _0\) to \(\rho ({ rk} _A^a)(a)\) and \(\lambda '_i\) to \(\lambda _i\cdot \rho ({ rk} _A^a)(A)\). Notice that by condition (ii) above \(\lambda _0\) does not depend on the choice of \({ rk} _A\). Clearly, it holds by construction that \(\lambda '_1\ge \ldots \ge \lambda '_n\) and \(\lambda _0+\lambda '_1+\cdots + \lambda '_n=1\). Moreover, we show that \(\lambda _0 \ge \lambda '_1\). Let \({ rk} \) be a linear order on \(A\cup \{a\}\) with a the only agent on level 1. Since A is not empty, there exists at least one agent b on level 2 in \({ rk} \). Consider the linear order \({ rk} '\) obtained from \({ rk} \) by swapping a and b. We have that

$$\rho ({ rk})(a) \ge \rho ({ rk} ')(a) = \rho ({ rk})(b),$$

where the equality holds by anonymity and the inequality holds because rewards never invert the order of the agents.

Since by construction \({ rk} \) is equal to \({ rk} _A^a\) for some linear order \({ rk} _A\), we know that \(\rho ({ rk})(a)=\lambda _0\) and \(\rho ({ rk})(b)=\lambda '_1\). Therefore, \(\lambda _0 \ge \lambda '_1\) and \(\lambda _0,\lambda '_1,\ldots , \lambda '_n\) have all the properties required to form a level-averaged reward. Indeed, we now prove that \(\rho \) is level-averaged with coefficients \(\lambda _0,\lambda '_1,\ldots , \lambda '_n\).

Let \({ rk} \) be a ranking on \(A\cup \{a\}\). We can assume w.l.o.g. that agent a is at level one, since otherwise we can consider by anonymity a \(\rho \)-equivalent ranking obtained by swapping a with some agent on the top. Let \({ rk} ^+\) be obtained from \({ rk} \) by moving a above all other agents.

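First, consider the case where a is the only agent on the top level in \({ rk} \). Then \({ rk} ^+={ rk} \) and \({ rk} \) is equal to \({ rk} _A^a\) for some ranking \({ rk} _A\) on A. As shown previously, this means that \(\rho ({ rk})(a)=\lambda _0\). Moreover, given an agent \(b\in A\) located on level \(i>1\), it holds

$$\rho ({ rk})(b) = \rho ({ rk} _A^a)(A) \cdot \rho '({ rk} _A)(b) = \frac{1}{n_i} \sum _{j=N_i}^{N_i+n_i-1} \lambda '_j,$$

where \(n_i\) and \(N_i\) are computed on \({ rk} \), so that b has \(N_i-1\) agents above it in \({ rk} _A\).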

Consequently, \(\rho \) is level-averaged on \({ rk} \) with coefficients \(\lambda _0,\lambda '_1,\ldots , \lambda '_n\).