Abstract

It is essential for agents to work together with others to accomplish common objectives without pre-programmed coordination rules or previous knowledge of their current teammates, a challenge known as ad-hoc teamwork. In these systems, an agent estimates the algorithms of the others in an on-line manner in order to decide its own actions for effective teamwork. A common approach is to assume a set of possible types and parameters for teammates, reducing the problem to estimating parameters and calculating distributions over types. Meanwhile, agents often must coordinate in a decentralised fashion to complete tasks that are distributed across an environment (e.g., in foraging, de-mining, rescue or fire control), where each member autonomously chooses which task to perform. By exploiting this structure, better estimation techniques can be developed. Hence, we present On-line Estimators for Ad-hoc Task Execution (OEATE), a novel algorithm for estimating teammates’ types and parameters in decentralised task execution. We show theoretically that, under some assumptions, our algorithm can converge to perfect estimations as the number of tasks increases. Additionally, we run experiments for a diverse set of configurations in the level-based foraging domain, under full and partial observability, and in a “capture the prey” game. We obtain a lower error in parameter and type estimation than previous approaches and, in some cases, a higher number of completed tasks. We also evaluate a variety of scenarios with increasing numbers of agents, scenario sizes, numbers of items and numbers of types, showing that our estimation outperforms previous works in most cases and is robust to an increasing number of types and even to an erroneous set of potential types.


1 Introduction

Autonomous agents are usually designed to pursue a specific strategy and accomplish a single task or a set of tasks. To improve their performance, these agents often follow specified coordination and communication protocols that enable them to collect valuable information from the environment or even from other reliable agents. However, employing these methods is challenging due to environmental and technological constraints. There are circumstances where communication channels are unreliable, and agents cannot fully trust them to send or receive information. Moreover, some situations require agents (e.g., robots or autonomous systems) designed by various parties to solve a problem urgently, and constructing and testing communication and coordination protocols for all the different agents can be infeasible given the time constraints. For example, consider a natural disaster or a hazardous situation where institutions may urgently ship robots from different parts of the world to handle the problem. In these scenarios, avoiding delays and unnecessary spending would save lives and mitigate the damage caused.

One possible solution is to offer a centralised mechanism that efficiently allocates tasks to each agent in the environment. However, we may face scenarios where no centralised mechanism is available to manage the agents’ actions. In large-scale problems, it is even easier to imagine situations where environmental or time constraints also derail this solution. Hence, agents need to decide autonomously which task to pursue [11], defining what we call a decentralised execution scenario. Decentralised execution is quite natural in ad-hoc teamwork, as we cannot assume that other agents would be programmed to follow a centralised controller. Therefore, allowing agents to reason about the surrounding environment and form partnerships with other agents can support the accomplishment of missions that are hard to deal with individually, reducing the time needed to complete all tasks and minimising the costs of the process.

For many relevant domains, these decentralised execution problems can be modelled around the set of tasks that need to be accomplished in a distributed fashion (e.g., victims to be rescued from a hazard, letters to be quickly delivered to different locations, etc.). Note that this kind of design gives a task-based perspective on the problem, where agents must reason about their teammates’ targets to improve coordination and, hence, the team’s performance. To deliver this improvement, the agents must approximate their teammates’ behaviours (or their main features) while solving the problem.

As our first goal, this paper addresses the problem where agents must complete several tasks cooperatively in an environment with no prior information, reliable communication channel or standard coordination protocol to support them. We call this ad-hoc team situation a Task-based Ad-hoc Teamwork problem: a decentralised distributed system where agents decide their tasks autonomously, without previous knowledge of each other, in an environment full of uncertainties.

Instead of developing algorithms that are able to learn any possible policy from scratch, a common approach in the ad-hoc teamwork literature is to consider a set of possible agent types and parameters, thereby reducing the problem to estimating them [2, 3, 10]. This approach is more widely applicable, as it does not require a large number of observations and thus allows learning and acting to happen simultaneously in an on-line fashion, i.e., in a single execution. Types could be built based on previous experiences [7, 8] or derived from the domain [1]. Moreover, introducing parameters for each type allows more fine-grained models [2]. However, previous works that learn types and parameters in ad-hoc teamwork are not specifically designed for decentralised task execution, missing an opportunity to obtain better performance in this relevant scenario for multi-agent collaboration.

Other lines of work focus on neural network-based models and learn the policies of other agents after thousands (even millions) of observations [22, 33]. These approaches, however, would be costly, especially as domains get larger and more complex. Similarly, I-POMDP-based models [12, 17, 19, 23] could be applied to reason about the models of other agents from scratch, but their application to larger problems is non-trivial.

On the other hand, some approaches in the literature have also tested task-based designs, inferring which tasks agents are pursuing in order to predict their behaviour [13]. Although our work shares some similarities with theirs, they have not handled learning the types and parameters of agents in ad-hoc teamwork systems where multiple agents may need to cooperate to complete common tasks.

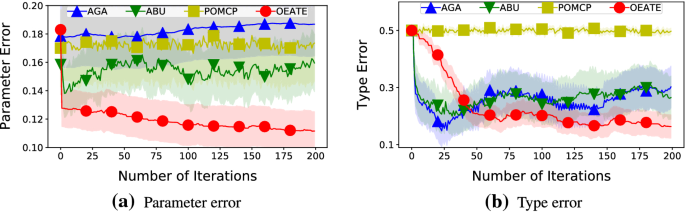

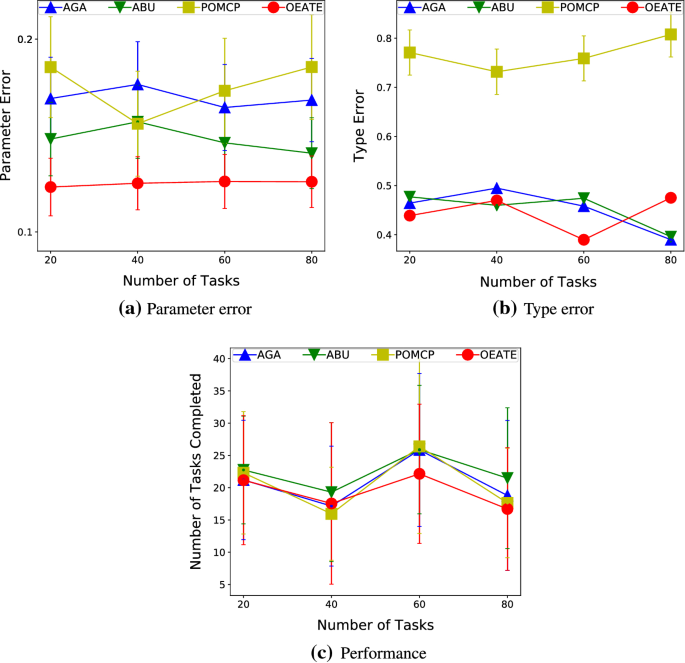

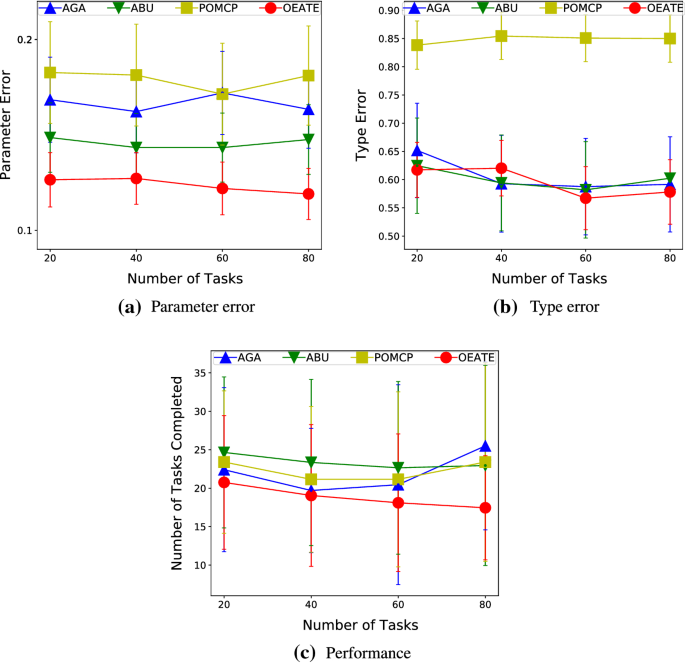

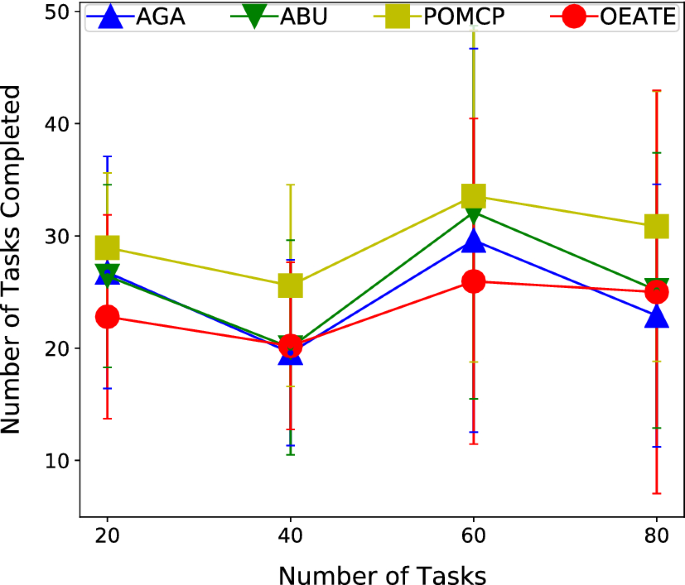

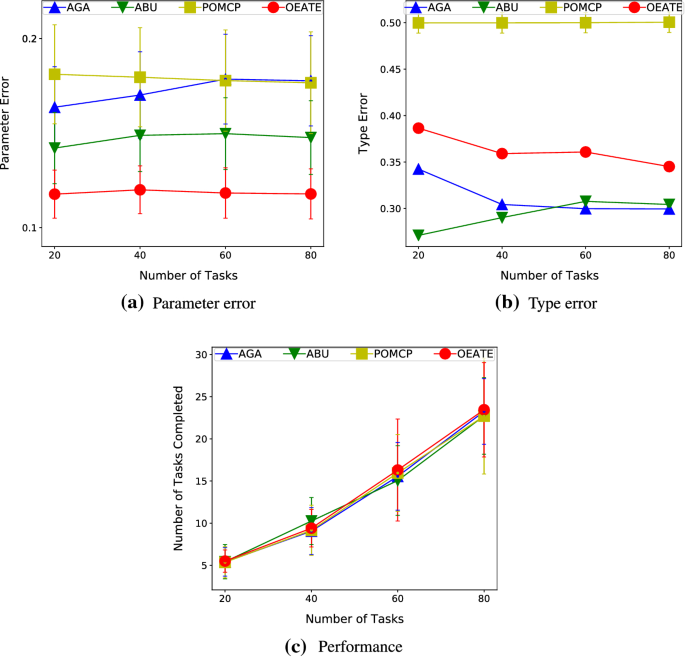

Therefore, as our main contribution, we present in this paper On-line Estimators for Ad-hoc Task Execution (OEATE), a novel algorithm for estimating teammates’ types and parameters in decentralised task execution. Our algorithm is lightweight, running its estimations from scratch at every single run instead of employing pre-trained models or carrying knowledge between executions. Under some assumptions, we show theoretically that our algorithm converges to a perfect estimation as the number of tasks to be performed grows. Additionally, we run experiments in two collaborative domains: (i) a level-based foraging domain, where agents collaborate to collect “heavy” boxes together; and (ii) a capture the prey domain, where agents must collaborate to surround prey and capture them. We also test the performance of our method in fully and partially observable scenarios. We show that we obtain a lower error in parameter and type estimations than the state-of-the-art, leading to significantly better performance in task execution in some of the studied cases. We also run a range of scenarios in which the number of agents, the scenario size and the number of items grow. Furthermore, we evaluate the impact of increasing the number of possible types. Finally, we run experiments where our ad-hoc agent does not have the true type of the other agents in its pool of possible agent types. In such challenging situations, our parameter estimation outperforms the competitors, and our type estimation and performance are similar to or better than the state-of-the-art in several cases, considering the results’ confidence intervals.

2 Background

Ad-hoc Teamwork Model Ad-hoc teamwork describes domains where agents intend to cooperate with their teammates and coordinate their actions to reach common goals. Moreover, agents in these domains have no prior communication or coordination protocols to enable the exchange of information between them, so learning and reasoning about the current context are essential to improve the team’s performance as a unit. However, if agents are aware of some potential pre-existing standards for coordination and communication, they can try to learn about their teammates with limited information [8]. Through such intelligent coordination, the members of an ad-hoc team can improve their decision-making process and, hence, accomplish shared goals more efficiently.

This fundamental model can be extended to fit many problems and scenarios. In this work, we extend it to a task-based model, as in previous state-of-the-art works [5, 6, 41], which better represents our setting.

Task-based Ad-hoc Teamwork Model As an extension of the ad-hoc teamwork model, the task-based ad-hoc teamwork model represents a problem where one learning agent \(\phi\) acts in the same environment as a set of non-learning agents \(\omega \in \varvec{\Omega }\), \(\phi \notin \varvec{\Omega }\). In the ad-hoc team \(\phi \cup \varvec{\Omega }\), the objective of \(\phi\) (as the learning agent) is to maximise the team’s performance (e.g., maximising the number of tasks accomplished or minimising the time needed to finish them all). However, all non-learning agents’ models are unknown to \(\phi\), and there is no communication channel available. Hence, \(\phi\) must estimate their models as time progresses by observing the scenario. In other words, the learning agent must improve its decision-making process by approximating its teammates’ behaviour in an on-line manner, despite the lack of information.

In addition, there is a set of tasks \(\mathbf {T}\) that all agents in the team endeavour to accomplish autonomously. A task \(\tau \in \mathbf {T}\) may require multiple agents to perform it successfully and multiple time steps to be completed. For instance, in a foraging problem, a heavy item may require two or more robots to be collected, and the robots would need to move towards the task location to accomplish it, taking multiple time steps to move from their initial positions.
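To make the idea of a task that needs several agents concrete, the sketch below assumes a simplified completion rule: a task is completed in a time step once the number of agents working on it in that step reaches a task-specific requirement. The `Task` class, its `required_agents` field and the `completed_tasks` helper are hypothetical illustrations, not the paper’s implementation; the actual completion rule depends on the domain.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical task record: an identifier and how many agents it needs."""
    task_id: str
    required_agents: int


def completed_tasks(tasks, working_on):
    """Return the ids of the tasks completed in this time step.

    `working_on` maps each agent name to the id of the task it is working
    on in this step, or None if it is still moving towards a task.
    """
    # Count how many agents are simultaneously working on each task.
    counts = Counter(t for t in working_on.values() if t is not None)
    return {task.task_id for task in tasks
            if counts[task.task_id] >= task.required_agents}


tasks = [Task("light_box", 1), Task("heavy_box", 2)]
# phi and one teammate load the heavy box; another teammate is still moving.
step = {"phi": "heavy_box", "omega_1": "heavy_box", "omega_2": None}
print(completed_tasks(tasks, step))  # {'heavy_box'}
```

A single agent at the heavy box would leave it unfinished, which is why, in such domains, \(\phi\) benefits from predicting which task each teammate is heading for.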

The learning agent \(\phi\) must minimise the time to accomplish all tasks. Hence, playing this role requires a method that integrates estimation and decision-making, so that planning improves as the estimates improve.

Model of Non-Learning Agents All non-learning agents aim to finish the tasks in the environment autonomously. However, which task \(\tau\) an agent \(\omega\) chooses, and whether it completes it, depends on its internal algorithm and its capabilities. We consider that \(\omega\)’s algorithm is one of the potential algorithms defined in the system, which might be learned from previous interactions with other agents [7].

Therefore, following the model of Non-Learning agents defined in previous works [2, 41], there is a set of potential algorithms in the system, which compose a set of possible types \(\varvec{\Theta }\) for all \(\omega \in \varvec{\Omega }\). We assume that all these algorithms take some inputs, which we call parameters. Hence, all types are parameterised, and their parameters affect the agents’ behaviour and actions. Considering these type parameters allows \(\phi\) to use more fine-grained models when handling new unknown agents.

According to these assumptions, each \(\omega \in \varvec{\Omega }\) is represented by a tuple (\(\theta\), \(\mathbf {p}\)), where \(\theta \in \varvec{\Theta }\) is \(\omega\)’s type and \(\mathbf {p}\) represents its parameters, a vector \(\mathbf {p} = <p_1,p_2,\ldots ,p_n>\). Each element \(p_i\) of the vector \(\mathbf {p}\) is defined in a fixed range [\(p_i^{min}\), \(p_i^{max}\)] [2], so the whole parameter space can be represented as \(\mathbf {P} \subset \mathbb {R}^{n}\). These parameters can be the abilities and skills of an agent. For instance, robots can differ considerably depending on their hardware, so a robot’s parameters could be its vision radius, its maximum battery level or its maximum velocity. The parameters could also be hyper-parameters of the algorithm itself. Consequently, each \(\omega \in \varvec{\Omega }\) chooses a target task based on its type \(\theta\) and parameters \(\mathbf {p}\). The process of choosing a new task can happen at any time and in any state of the state space, depending on the agent’s parameters and type. We call these decision states Choose Target States \(\mathfrak {s} \in S\).
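A minimal Python sketch of this representation, assuming a box-shaped parameter space: `ParameterSpace` stores the bounds \([p_i^{min}, p_i^{max}]\) and checks membership in \(\mathbf {P}\), while `AgentModel` holds one tuple (\(\theta\), \(\mathbf {p}\)). Both class names, the type label `theta_1` and the numeric bounds for the three example parameters (vision radius, maximum battery level, maximum velocity) are hypothetical illustrations, not part of OEATE.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParameterSpace:
    """Box-shaped space P = [p_1^min, p_1^max] x ... x [p_n^min, p_n^max]."""
    lows: tuple
    highs: tuple

    def contains(self, p):
        """True if p has the right dimension and every p_i lies in its range."""
        return len(p) == len(self.lows) and all(
            lo <= x <= hi for x, lo, hi in zip(p, self.lows, self.highs))


@dataclass(frozen=True)
class AgentModel:
    """A non-learning agent omega described by the tuple (theta, p)."""
    agent_type: str   # theta, one of the possible types in Theta
    params: tuple     # p = <p_1, ..., p_n>


# Hypothetical bounds: vision radius, maximum battery level, maximum velocity.
space = ParameterSpace(lows=(1.0, 0.0, 0.1), highs=(10.0, 100.0, 2.0))
omega = AgentModel(agent_type="theta_1", params=(5.0, 80.0, 1.2))
print(space.contains(omega.params))  # True
```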

In the Task-based Ad-hoc Teamwork context, a precise estimation of tasks also depends on estimating the Choose Target State. Our method addresses this problem from an information-based perspective: instead of directly estimating the Choose Target State, it assigns different weights to the information derived from the observations made by agent \(\phi\). More details are presented in Sect. 6.

Stochastic Bayesian Game Model A Stochastic Bayesian Game (SBG) is well suited to representing ad-hoc teamwork problems: it combines Bayesian games with the concept of stochastic games and provides a descriptive model of this context [4, 29]. In this section, we define an SBG-based model for our specific setting and refer the reader to [29] for a more generic formulation.

Our model consists of a discrete state space S, a set of players (\(\phi \cup \varvec{\Omega }\)), a state transition function \(\mathcal {T}\) and a type distribution \(\Delta\). Each agent \(\omega \in \varvec{\Omega }\) has a type \(\theta _i \in \varvec{\Theta }\) and a parameter space \(\mathbf {P}\). Each parameter setting is a vector \(\mathbf {p} = <p_1,p_2,\ldots ,p_n>\) with \(p_i \in [p_i^{min},p_i^{max}]\) for all agents. The set \([p_1^{min},p_1^{max}] \times \dots \times [p_n^{min},p_n^{max}] = \mathbf {P} \subset \mathbb {R}^n\) is the parameter space for each agent. Each type could have a different parameter space, but we define a single parameter space here for simplicity of notation. Furthermore, we assume that the agents’ types are fixed throughout the process (a pure and static type distribution). Moreover, each player is associated with a set of actions, an individual payoff function and a strategy. Since, at each time step, the agents \(\omega _i \in \varvec{\Omega }\) are fixed tuples \((\theta _i, \mathbf {p}_i)\), where \(\theta _i \in \varvec{\Theta }\) and \(\mathbf {p}_i \in \mathbf {P}\), we extend the SBG model to describe the following problem:

Problem Consider a set of players \(\phi \cup \varvec{\Omega }\) that share the same environment. Each player acts according to its type \(\theta _i\), set of parameters \(\mathbf {p}_i\) and own strategy \(\pi _i\). The players do not know the others’ types or parameters. At each time step t, given the state \(s^t\) and a joint action \(a^t = (a^t_\phi ,a^t_1,a^t_2,\ldots ,a^t_{|\varvec{\Omega }|})\), the game transitions according to the transition probability \(\mathcal {T}\) and each player receives an individual payoff \(r_i\), until the game reaches a terminal state.

Therefore, by using the SBG model, we can represent our problem and its necessary components. However, in this work we consider a fully cooperative problem from the point of view of agent \(\phi\). Hence, within the task-based ad-hoc teamwork context, we model the problem with a single-player abstraction from \(\phi\)’s point of view. Using a Markov Decision Process (MDP) model, we can abstract all the environment components as part of the state (including the teammates in \(\varvec{\Omega }\)). This approach enables the aggregation of the individual rewards of the SBG model into a single global reward and allows us to use single-player Monte Carlo Tree Search techniques, as previous works did [5, 32, 41].

Markov Decision Process Model The Markov Decision Process (MDP) is a mathematical framework for modelling sequential decision-making in stochastic environments in discrete time. As mentioned, although there are multiple agents and perspectives in the team, we define the model from the point of view of agent \(\phi\) and apply a single-agent MDP model, as in previous works [5, 32, 41] that represent other agents as part of the environment.

Therefore, we consider a set of states \(s \in \mathcal {S}\), a set of actions \(a \in \mathcal {A}_\phi\), a reward function \(\mathcal {R}(s,a)\), and a transition function \(\mathcal {T}\), where the actions in the model are only \(\phi\)’s actions. In other words, \(\phi\) can only decide its own actions and has no control over other environment components (e.g., the actions of agents in the set \(\varvec{\Omega }\)). All \(\omega\) in \(\varvec{\Omega }\) are modelled as part of the environment, as their actions indirectly affect the next state and the obtained reward; therefore, they are abstracted in the transition function. That is, in the actual problem, the next state depends on the actions of all agents, but \(\phi\) is unsure about the non-learning agents’ next actions. For this reason, we consider that, given a state s, an agent \(\omega \in \varvec{\Omega }\) has an (unknown) probability distribution (pdf) over a set of actions \(\mathcal {A}_{\omega }\), which is given by \(\omega\)’s internal algorithm (\(\theta\), \(\mathbf {p}\)). This pdf affects the probability of the next state. Therefore, the uncertainty in the MDP model comes from the randomness of the \(\omega\) agents’ actions, besides any additional stochasticity of the environment.
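A minimal sketch of this abstraction, assuming a toy grid world: the `transition` function treats each teammate’s action as a draw from its (estimated) distribution over actions, so the only action \(\phi\) chooses is its own. The action names, the `MOVES` table and both function names are hypothetical; here the next state is random only because of the sampled teammate actions, whereas a real environment may add its own noise.

```python
import random

# Hypothetical action set shared by all agents in a toy grid world.
MOVES = {"N": (0, 1), "S": (0, -1), "E": (1, 0), "W": (-1, 0), "stay": (0, 0)}


def sample_action(pdf, rng):
    """Draw one action from a distribution given as {action: probability}."""
    actions = list(pdf)
    return rng.choices(actions, weights=[pdf[a] for a in actions], k=1)[0]


def transition(state, phi_action, teammate_pdfs, rng):
    """Sample s' given s and phi's action; teammates act according to their pdfs.

    `state` maps agent names to (x, y) positions; `teammate_pdfs` maps each
    teammate name to its estimated action distribution in this state.
    """
    joint = {"phi": phi_action}
    for name, pdf in teammate_pdfs.items():
        joint[name] = sample_action(pdf, rng)
    next_state = {}
    for name, (x, y) in state.items():
        dx, dy = MOVES[joint[name]]
        next_state[name] = (x + dx, y + dy)
    return next_state


rng = random.Random(0)
s = {"phi": (0, 0), "omega_1": (3, 3)}
pdfs = {"omega_1": {"N": 0.7, "E": 0.3}}
print(transition(s, "E", pdfs, rng))
```

Calling `transition` repeatedly from the same state yields different successors for `omega_1`, which is exactly the uncertainty the MDP attributes to the teammates.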

This model allows us to employ single-agent on-line planning techniques, such as UCT Monte Carlo Tree Search [26]. In the tree search process, the pdf of each agent defines the transition function. At each node transition, \(\phi\) samples the \(\omega\) agents’ actions from their (estimated) pdfs, which determines the next state \(s'\) for the next node. However, in traditional UCT Monte Carlo Tree Search, the search tree grows exponentially with the number of agents. Hence, we use a history-based version of UCT Monte Carlo Tree Search called UCT-H, which employs a more compact representation than the original algorithm and makes searching the tree simpler and faster in larger teams [41].
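For readers unfamiliar with UCT, the action-selection step at each tree node follows the UCB1 rule: pick the action maximising its mean simulated value plus \(c\sqrt{\ln N / n}\), where \(N\) is the node’s visit count and \(n\) the action’s visit count. This sketch covers only that standard rule; it does not reproduce the history-based node representation of UCT-H [41]. The function name, the use of the children’s summed visits as \(N\) and the default \(c = \sqrt{2}\) are assumptions made for illustration.

```python
import math


def ucb1_select(children, c=math.sqrt(2)):
    """Return the index of the child action to explore, using the UCB1 rule.

    `children` is a list of (visits, total_value) pairs, one per action.
    Unvisited actions are tried first; otherwise the score is
    mean value + c * sqrt(ln(parent visits) / child visits).
    """
    for i, (visits, _) in enumerate(children):
        if visits == 0:
            return i
    # Parent visit count approximated by the sum of the children's visits.
    parent_visits = sum(visits for visits, _ in children)

    def score(child):
        visits, total = child
        return total / visits + c * math.sqrt(math.log(parent_visits) / visits)

    return max(range(len(children)), key=lambda i: score(children[i]))


# Equal visit counts: the action with the higher mean value wins.
print(ucb1_select([(10, 9.0), (10, 1.0)]))  # 0
```

A rarely visited action receives a large exploration bonus, so it can be selected even when its current mean value is lower.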

As mentioned earlier, in this task-based ad-hoc team, \(\phi\) attempts to help the team obtain the highest possible reward. For this reason, \(\phi\) seeks the optimal value function, i.e., the maximum expected sum of discounted rewards \(E[\sum _{j=0}^{\infty }\gamma ^jr_{t+j}]\), where t is the current time, \(r_{t+j}\) is the reward \(\phi\) receives j steps in the future, and \(\gamma \in (0, 1]\) is a discount factor. We obtain rewards by solving the tasks \(\tau \in \mathbf {T}\). That is, we define \(\phi\)’s reward as \(\sum r(\tau )\), where \(r(\tau )\) is the reward obtained upon completion of task \(\tau\). Note that this sum covers not only the tasks completed by \(\phi\), but all tasks completed by any set of agents in a given state. Furthermore, some tasks in the system may not be completable without cooperation between the agents, so the number of agents required to finish a task \(\tau\) depends on each specific task and on the set of agents jointly trying to complete it.
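The return above can be illustrated with a short computation over a finite horizon, as any simulation must use. Each step’s reward is the sum of \(r(\tau)\) over the tasks completed in that step by any agents, and the discounted return weights the reward \(j\) steps ahead by \(\gamma^j\). The task names and the unit rewards are hypothetical.

```python
def step_reward(completed, task_reward):
    """Team reward for one step: sum of r(tau) over tasks completed by any agents."""
    return sum(task_reward[t] for t in completed)


def discounted_return(rewards, gamma):
    """Sum over j of gamma**j * r_{t+j}, for a finite list of future rewards."""
    total = 0.0
    for j, r in enumerate(rewards):
        total += (gamma ** j) * r
    return total


task_reward = {"box_a": 1.0, "box_b": 1.0}
# Steps t, t+1, t+2, t+3: box_a is finished at t+1 and box_b at t+3.
rewards = [step_reward(done, task_reward)
           for done in ([], ["box_a"], [], ["box_b"])]
print(discounted_return(rewards, gamma=0.9))
```

With \(\gamma = 0.9\) this gives \(0.9 + 0.9^3 \approx 1.629\); finishing `box_b` one step earlier would raise the return, which is why \(\gamma < 1\) rewards completing tasks sooner.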

Note that the agents’ types and parameters are not observable, and our MDP model does not represent them directly. Instead, estimated types and parameters are used during on-line planning, creating an estimated transition function. The actual decisions made by the non-learning agents are observable in the real-world transitions, but these provide no direct information about types and parameters. More details are given in the following sections.

3 Related works

The literature introduces ad-hoc teamwork as a remarkable approach to handle multi-agent systems [5, 38]. This approach offers the opportunity to achieve the objectives of multiple agents collaboratively without designing a communication channel for exchanging information between the agents, building an application for prior coordination, or collecting previous data to train agents to improve their decision-making within the environment. Furthermore, these models enable the creation of algorithms capable of acting in an on-line fashion, dynamically adapting their behaviour according to the environment and the current teammates.

In this section, we carry out a comprehensive discussion of the state-of-the-art contributions and how these different approaches have inspired our work. To facilitate understanding and readability, we organise the section into topics, grouping related contributions. Each subsection describes the main idea of a group and summarises its main strategies.

3.1 Type-based parameter estimation

Considering type-based reasoning and parameter learning, we can solve the problem using fine-grained models, which evaluate the observations and estimate each agent’s type and parameters in an on-line manner [1, 3, 7, 8, 10]. These lines of work approximate agents’ behaviour by a set of potential types to improve the ad-hoc agent’s decision-making capabilities, allowing a quick on-line estimation of agents’ algorithms without requiring an expensive training process to learn their policies from scratch. However, if the set of potential types and the parameter space cannot be defined through domain knowledge, then they would have to be learned from previous interactions [8].

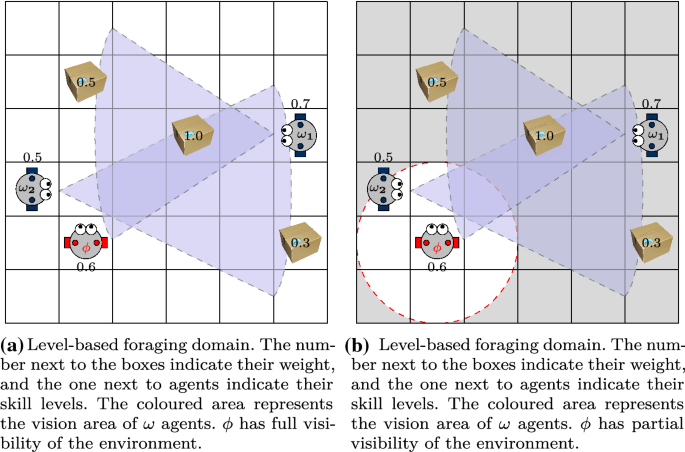

Albrecht and Stone [2], in particular, introduced the AGA and ABU algorithms for type-based reasoning about teammates’ parameters in an on-line manner, which are the main inspirations for this work. Both methods sample sets of parameters (from a defined parameter space) to perform estimations via gradient ascent and Bayesian updates, respectively. However, by focusing on decentralised task execution in ad-hoc teams, our novel method surpasses their parameter and type estimations when the number of teammates grows or more tasks are accomplished, consequently leading to better team performance. We also extend their work by adding partial observability for all team members.
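The Bayesian side of this family of methods can be illustrated with a generic posterior update over a finite set of candidate models, for example (type, sampled parameter) pairs. After a teammate’s action is observed, each candidate’s probability is multiplied by the likelihood that candidate assigns to the action, and the result is renormalised. This is a textbook application of Bayes’ rule, not Albrecht and Stone’s ABU algorithm, which adds further machinery for continuous parameters [2]. The candidate labels and likelihood values are hypothetical, and keeping the prior when no candidate explains the observation is our own design choice.

```python
def bayes_update(prior, likelihood):
    """Return the normalised posterior P(m | a), proportional to P(a | m) P(m).

    `prior` maps each candidate model m to P(m); `likelihood` maps each
    candidate to P(a | m), the probability it gives the observed action a.
    """
    unnormalised = {m: prior[m] * likelihood[m] for m in prior}
    z = sum(unnormalised.values())
    if z == 0:
        # No candidate explains the observation; keep the prior unchanged.
        return dict(prior)
    return {m: v / z for m, v in unnormalised.items()}


# Hypothetical candidates: (type, sampled parameter) pairs with a uniform prior.
prior = {("theta_1", 0.3): 0.5, ("theta_2", 0.7): 0.5}
likelihood = {("theta_1", 0.3): 0.8, ("theta_2", 0.7): 0.2}
print(bayes_update(prior, likelihood))
```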

On the other hand, Hayashi et al. [22] propose an enhanced particle reinvigoration process that leverages prior experiences encoded in a recurrent neural network (RNN), acting in a partially observable scenario in their ad-hoc team. However, they need thousands of previous experiences to train the RNN, while still requiring knowledge of the potential types. Our approach can start from scratch at every single run, with no pre-training.

Concerning problems with partial observability, POMCP is usually employed for on-line planning [37]. However, it was originally designed for discrete state spaces, which makes it harder to apply POMCP to (continuous) parameter estimation. We therefore apply POMCP in combination with our algorithm OEATE, which enables decision-making in partially observable scenarios and improves the POMCP search space through OEATE’s estimation of the agents’ parameters. We also experimentally evaluate the performance of POMCP on our problem without any embedded parameter estimation algorithm.
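POMCP represents the agent’s belief as a set of sampled states (particles) [37]. One simple way to update such a particle belief after a real action and observation is rejection sampling with a generative model: draw a particle, simulate one step, and keep the successor state only if its simulated observation matches the real one. The sketch below shows this step in isolation and leaves out the Monte Carlo search itself; `rejection_update`, `toy_model` and the one-dimensional world are hypothetical.

```python
import random


def rejection_update(particles, action, observation, generative_model,
                     n_particles, rng, max_tries=10_000):
    """Approximate the next belief by rejection sampling.

    Repeatedly draws a state from the current particle set, simulates one
    step with the generative model, and keeps the successor state only if
    the simulated observation equals the real observation.
    """
    new_particles = []
    for _ in range(max_tries):
        if len(new_particles) == n_particles:
            break
        s = rng.choice(particles)
        s_next, o = generative_model(s, action, rng)
        if o == observation:
            new_particles.append(s_next)
    return new_particles


def toy_model(s, action, rng):
    """Hypothetical deterministic 1-D world: move by `action`, observe s' >= 5."""
    s_next = s + action
    return s_next, s_next >= 5


rng = random.Random(0)
belief = rejection_update([1, 2, 3, 4, 5], action=2, observation=True,
                          generative_model=toy_model, n_particles=20, rng=rng)
print(sorted(set(belief)))
```

Only particles consistent with the observation survive (here, successors 5, 6 or 7); with continuous teammate parameters such exact matches become rare, which is one reason parameter estimation is hard to fold directly into POMCP.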

3.2 Complex models

Guez et al. [20] proposed a Bayesian MCTS that tries to directly learn a transition function by sampling different potential MDP models and evaluating them while planning under uncertainty. Our planning approach (inspired by [2, 7]) is similar, as we sample different agent models from our estimations. However, instead of working directly in the complex space of transition functions, we learn agents’ types and parameters, which then translate into a certain transition probability for the current state or belief state.

Rabinowitz et al. [33] introduce a “Machine Theory of Mind” (ToM) approach, where neural networks are trained on general populations to learn agent types, and the current agent’s behaviour is then estimated in an on-line manner. As with learning policies from scratch, however, their general models require thousands (even millions) of observations to be trained. Besides, they used a small \(11 \times 11\) grid in their experiments, while we scale up to \(45 \times 45\) to estimate the behaviour of several unknown and distinct teammates. On the other hand, if a set of potential types is not given by domain knowledge, their work serves as another example of how types could be learned.

A different approach that enables learning teammates’ models and reasoning about their behaviour during planning is given by I-POMDP-based models [12, 17, 19, 23]. However, they are computationally expensive, as they assume that all agents learn about the others recursively and consider agents that receive individual rewards (processing estimations individually).

Eck et al. [18] addressed this problem and recently proposed a scalable approach using the I-POMDP-Lite framework to consider large open agent systems. In their approach, an agent considers a large population by modelling a representative set of neighbours. They focus on estimating how many agents perform a particular action; hence, their approach is not applicable to the task-based problems that we consider in this work. Additionally, although their approach scales in terms of team size, they still consider only small \(3 \times 3\) scenarios. In this work, we show scalability regarding the team size, the dimensions of the map and the number of simultaneous tasks in the scenario.

Rahman et al. [34] also handle open agent problems and propose the application of a Graph Neural Network (GNN) for estimating agents’ behaviours. Similarly to other neural network-based models, it needs a large amount of training, and their results are limited to a \(10 \times 10\) grid world with 5 agents. Their agent parametrisation is also more limited, with only 3 possible levels in the level-based foraging domain, which are directly given as input for each agent (instead of being learned).

Therefore, we propose lighter MDP/POMDP models focused on decentralised task execution, with a single team reward, which allow us to tackle problems with more agents and tasks in bigger scenarios, including partially observable ones. Also, we build a model for every single member of the team. On the other hand, open agent systems are outside the scope of our work, and we consider fixed team sizes.

3.3 Task-oriented and task-allocation approaches

As mentioned, our key idea is to focus on decentralised task execution problems in ad-hoc teamwork. Chen et al. [13] present a related approach, where they focus on estimating teammates’ tasks instead of learning their models. While related, their work focuses on model-free task inference, assumes that each task must be performed by a single agent, and turns the ad-hoc agent’s goal into identifying tasks that are not yet allocated. Our work, on the other hand, combines task-based inference with model-based approaches and allows tasks to require an arbitrary number of agents. Additionally, their experiments are on small \(10 \times 10\) grids, with fewer agents than ours.

There are also other works that attempt to identify the task being executed by a team from a set of potential tasks [29], or an agent’s strategy for solving a repetitive task, enabling the learner to perform collaborative actions [39]. Our work, however, is fundamentally different, since we focus on a set of (known) tasks that must be completed by the team.

Another approach suggested in the literature for optimising task-based problems is the Multi-Agent Markov Decision Problem (MMDP) model [14, 15]. These models allow agents to decide their target task autonomously and focus on estimating teammates’ policies directly at specific times during the problem execution. Given knowledge of the MMDP model, those approaches compute the best-response policy (at the current time) for the other agents and use those models while planning. However, they do not consider learning a probability distribution over potential types and estimating agents’ parameters as in our approach. OEATE is capable of using a set of potential types and a parameter space to learn, in an on-line fashion, the probability of each type-parameter setup for each teammate.

Multi-Robot Task Allocation (MRTA) models also represent an alternative approach to solving problems in the ad-hoc teamwork context [27, 40]. Intending to maximise the collective completion of tasks, these models employ decentralised task execution strategies that work in an on-line manner without a central learning agent. Each agent develops its own strategy based on the observations it receives. Similarly to our proposal, MRTA models take a task-based perspective, in which agents know and seek tasks distributed in an environment while reasoning. However, MRTA models assume knowledge about the teammates’ types and the tasks that they are pursuing. This assumption holds because they consider that this information is available in the environment, where agents can obtain it through observation (e.g., agents choosing tasks of different colours) or through reliable communication channels for exchanging information between the agents. As we mentioned earlier, there are circumstances where communication channels are unreliable, and agents cannot fully trust them to send or receive information. OEATE predicts its teammates’ targets while learning their types and parameters, and handles problems where these assumptions do not hold.

Concerning task allocation, MDP-based models are commonly applied [30, 31] in the ad-hoc teamwork context. For instance, it can be framed as a multi-agent team decision problem [35], where a global planner calculates local policies for each agent. Auction-based approaches are also common, assigning tasks based on bids received from each agent [28]. These approaches, however, require pre-programmed coordination strategies, while we employ on-line learning and planning for ad-hoc teamwork in decentralised task execution, enabling agents to choose their tasks without relying on previous knowledge of the other team members, and without requiring an allocation by centralised planners/controllers.

3.4 Genetic algorithms

OEATE is inspired by Genetic Algorithms (GA) [24], since our main idea is to keep a set of estimators, generating new ones either randomly or using information from previously selected estimators. However, GAs evaluate all individuals simultaneously at each generation, and individuals are usually selected to stay in the new population or for elimination according to their fitness function. Our estimators, on the other hand, are evaluated per agent at every task completion, and survive according to their success rate. The proportion of surviving estimators is then used for type estimation, and new estimators are generated using an approach similar to the usual GA mutation/crossover. We chose to apply GA concepts in this work based on both empirical and theoretical results. Empirically, the GA approach showed better results than the gradient ascent and Bayesian update approaches (considering the performance of AGA and ABU against OEATE). Theoretically, our solution does not depend on finite-dimensional representations of parameter-action relationships and can provide a more robust way to explore the whole parameter space, through the use of multiple estimators, which mutate to form even better estimators.
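To illustrate the GA-inspired loop described above, the sketch below keeps a population of (type, parameter) estimators for one teammate, scores all of them whenever that teammate completes a task, discards those whose success rate falls below a threshold, estimates type probabilities as the proportion of surviving estimators of each type, and refills the population with crossover/mutation children or random estimators. It is a simplified illustration under our own assumptions, not the OEATE algorithm as specified in the paper: the 0.5 threshold, the 50/50 regeneration split, the Gaussian mutation scale and the hypothetical `predict` function (standing in for whatever mechanism maps a type and parameters to a predicted task) are all placeholders.

```python
import random
from dataclasses import dataclass


@dataclass
class Estimator:
    """One hypothesis (type, parameter vector) about a single teammate."""
    agent_type: str
    params: list
    successes: int = 0
    trials: int = 0

    def success_rate(self):
        return self.successes / self.trials if self.trials else 0.0


def evaluate_and_filter(estimators, predict_task, actual_task, threshold):
    """Score each estimator when the teammate completes a task; keep the good ones.

    `predict_task(est)` returns the task the estimator expected the teammate
    to complete; a match with `actual_task` counts as a success.
    """
    for est in estimators:
        est.trials += 1
        if predict_task(est) == actual_task:
            est.successes += 1
    return [est for est in estimators if est.success_rate() >= threshold]


def type_probabilities(survivors, types):
    """P(type) as the proportion of surviving estimators that hold that type."""
    if not survivors:
        return {t: 1.0 / len(types) for t in types}
    return {t: sum(e.agent_type == t for e in survivors) / len(survivors)
            for t in types}


def regenerate(survivors, types, lows, highs, size, rng, mutation=0.1):
    """Refill the population up to `size` estimators.

    With probability 0.5 (when survivors exist) a new estimator is a uniform
    crossover of two same-type survivors plus clipped Gaussian mutation;
    otherwise it is drawn uniformly at random.
    """
    population = list(survivors)
    while len(population) < size:
        if survivors and rng.random() < 0.5:
            a = rng.choice(survivors)
            b = rng.choice([e for e in survivors if e.agent_type == a.agent_type])
            child = [rng.choice(pair) for pair in zip(a.params, b.params)]
            child = [min(hi, max(lo, x + rng.gauss(0.0, mutation * (hi - lo))))
                     for x, lo, hi in zip(child, lows, highs)]
            population.append(Estimator(a.agent_type, child))
        else:
            params = [rng.uniform(lo, hi) for lo, hi in zip(lows, highs)]
            population.append(Estimator(rng.choice(types), params))
    return population


def predict(est):
    """Hypothetical predictor: theta_1 models expect box_a, others box_b."""
    return "box_a" if est.agent_type == "theta_1" else "box_b"


rng = random.Random(0)
types, lows, highs = ["theta_1", "theta_2"], [0.0, 0.0], [1.0, 1.0]
population = regenerate([], types, lows, highs, size=20, rng=rng)
survivors = evaluate_and_filter(population, predict, "box_a", threshold=0.5)
print(type_probabilities(survivors, types))
population = regenerate(survivors, types, lows, highs, size=20, rng=rng)
```

Unlike a standard GA, nothing here is evaluated on a fixed generation schedule: the population changes only when the observed teammate finishes a task.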

3.5 Prior contributions

As one of our major prior contributions, we recently proposed an on-line learning and planning approach for an agent to make decisions in environments containing previously unknown swarms (Pelcner et al. [32]). In that work, defined in a “capture the flag” domain, an agent must perform its learning procedure from scratch at every run to approximate a single model for a whole defensive swarm, while trying to invade the swarm’s formation to capture the flag. Unlike Pelcner et al. [32], in this work we aim to learn a model for each agent in the environment by estimating its type and parameters.

Another work closely related to the current contribution is the UCT-H proposal in Shafipour et al. [41]. Previous works that employ Monte Carlo Tree Search are limited to small search trees, since the cost of the search increases exponentially with the number of agents and the size of the scenario. To broaden its applicability, we proposed a history-based version of UCT Monte Carlo Tree Search (UCT-H), which uses a more compact representation than the original algorithm, and performed several experiments with a varying number of agents in the level-based foraging domain. As OEATE is a Monte Carlo-based model, the study of Monte Carlo Tree Search approaches and their capabilities was essential to the development of our novel algorithm. In the current work, to ensure a fair comparison, we use the UCT-H version of Monte Carlo Tree Search to run every baseline.

4 Estimation problem

Considering the problem described by the MDP model in Sect. 2, in this section, we describe the general workflow of an estimation process and discuss how we integrated planning and estimation in this work.

Estimation process Initially, since agent \(\phi\) has no information about each agent \(\omega\)’s true type \(\theta ^*\) and true parameters \(\mathbf {p}^*\), it does not know how they may behave at each state and, hence, must reason about all possible types and parameters from distribution \(\Delta\). So, for each \(\omega \in \varvec{\Omega }\), \(\phi\) initialises the probability of each type \(\theta \in \varvec{\Theta }\) with a uniform distribution, and randomly initialises each parameter in the parameter vector \(\mathbf {{p}}\) within its value range. However, given some domain knowledge, types and parameters could be sampled from a different distribution.

After each estimation iteration, we expect agent \(\phi\) to have a better estimate of the type \(\theta\) and parameters \(\mathbf {p}\) of each non-learning agent, in order to improve its decision-making and the team’s performance. Hence, \(\phi\) must learn a probability for each type and, for each type, a corresponding estimated parameter vector.

In subsequent steps, as agent \(\phi\) observes the behaviour of all \(\omega \in \varvec{\Omega }\) and notices their actions and the tasks they accomplish, it keeps updating all the estimated parameter vectors \(\mathbf {p}\) and the probability of each type \(\mathsf {P}(\theta )_\omega\), based on the current state. How these estimates are updated depends on which on-line learning algorithm is employed.

This process aims to improve the quality of \(\phi\)’s decision-making through the quality of the estimates delivered by the estimation method. We therefore perform experiments using three type and parameter estimation methods from the literature: Approximate Gradient Ascent (AGA), Approximate Bayesian Update (ABU) [2] and POMCP [37], which are explained in more detail in Sect. 5. These methods are our baselines for comparison against our novel algorithm, On-line Estimators for Ad-hoc Task Execution (OEATE), for parameter and type estimation in decentralised task execution, which is described in detail in Sect. 6.

Planning and estimation The current estimated models of the non-learning agents are used for on-line planning, allowing agent \(\phi\) to choose its best actions. In this work, we employ UCT-H for agent \(\phi\)’s decision-making. UCT-H is similar to UCT but uses a history-based compact representation, a modification that was shown to perform better in ad-hoc teamwork problems [41]. As in previous works [2, 41], each time the root node is re-visited during the tree search, we sample a type \(\theta \in \varvec{\Theta }\) for each non-learning agent from the estimated type probabilities. We use the current estimated parameters \(\mathbf {p}\) for the corresponding agent and sampled type, which affect the estimated transition function, as described in our MDP model. Consequently, the higher the quality of the type and parameter estimates, the better the result of the tree search. Based on this search, agent \(\phi\) decides which action to take.

Note that the actual \(\omega\) agents may be using different algorithms than the ones available in our set of types \(\varvec{\Theta }\). Nonetheless, agent \(\phi\) would still be able to estimate the best type \(\theta\) and parameters \(\mathbf {p}\) to approximate agent \(\omega\)’s behaviour. Additionally, \(\omega\) agents may or may not run algorithms that explicitly model the problem as decentralised task execution or over a task-based perspective. However, using the single-agent MDP, we only need agent \(\phi\) to be able to model the problem as such.

5 Previous estimation methods and baselines

In this work, we compare our novel method against state-of-the-art methods, using three algorithms from the literature as baselines: AGA, ABU and POMCP. We review these methods in this section.

AGA and ABU overview The Approximate Gradient Ascent (AGA) and Approximate Bayesian Update (ABU) estimation methods were introduced by Albrecht and Stone [2]. In that work, the probability of agent \(\omega\) taking action \(a^t_\omega\) at time step t is defined as \(\mathsf {P}(a^t_\omega |H_\omega ^t, \theta _i, \mathbf {p})\), where \(H_\omega ^t = (s^0_i , \ldots , s^t_i )\) is agent \(\omega\)’s history of observations at time step t, \(\theta _i\) is a type in \(\Theta\), and \(\mathbf {p}\) is the parameter vector estimated for type \(\theta _i\). The estimation methods define a function \(f(\mathbf {p}) = \mathsf {P}(a^{t-1}_\omega |H_\omega ^{t-1} , \theta _i , \mathbf {p})\), which represents the probability of the agent’s previous action \(a^{t-1}_\omega\) given agent \(\omega\)’s history of observations at the previous time step, \(H_\omega ^{t-1}\), type \(\theta _i\), and its corresponding parameter vector \(\mathbf {p}\). After estimating the parameter vector \(\mathbf {p}\) of agent \(\omega\) for type \(\theta _i\), the probability of type \(\theta _i\) is updated as follows:

\[ \mathsf {P}(\theta _i |H_\omega ^t) = \eta \, \mathsf {P}(a^{t-1}_\omega |H_\omega ^{t-1}, \theta _i, \mathbf {p}) \, \mathsf {P}(\theta _i |H_\omega ^{t-1}), \]

where \(\eta\) is a normalisation constant.

By iterating these updates, Albrecht and Stone showed that both methods can approximate the type and parameters and improve performance in the ad-hoc teamwork context.
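As a minimal sketch of the type update shared by AGA and ABU, assuming types are plain dictionary keys and that \(f(\mathbf {p})\) has already been computed for each type under its current parameter estimate, the prior type belief is multiplied by the likelihood of the observed action and renormalised. The fallback that keeps the prior when every likelihood is zero is an assumption of this sketch, not part of the original method description.

```python
from typing import Dict, Hashable


def update_type_posterior(
    prior: Dict[Hashable, float], likelihood: Dict[Hashable, float]
) -> Dict[Hashable, float]:
    """Bayesian type update: P(theta_i | H^t) is proportional to
    f(p) * P(theta_i | H^{t-1}), with f(p) = P(a^{t-1} | H^{t-1}, theta_i, p).

    prior:      type -> P(theta_i | H^{t-1})
    likelihood: type -> f(p) under that type's current parameter estimate
    """
    unnormalised = {theta: likelihood[theta] * prior[theta] for theta in prior}
    total = sum(unnormalised.values())
    if total == 0.0:
        # Assumption of this sketch: if no type explains the action, keep the prior.
        return dict(prior)
    # Dividing by the total plays the role of the normalisation constant eta.
    return {theta: value / total for theta, value in unnormalised.items()}


posterior = update_type_posterior({"theta1": 0.5, "theta2": 0.5},
                                  {"theta1": 0.8, "theta2": 0.2})
print(posterior)
```

Repeating this call after every observed action yields the iterative type estimation described above.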

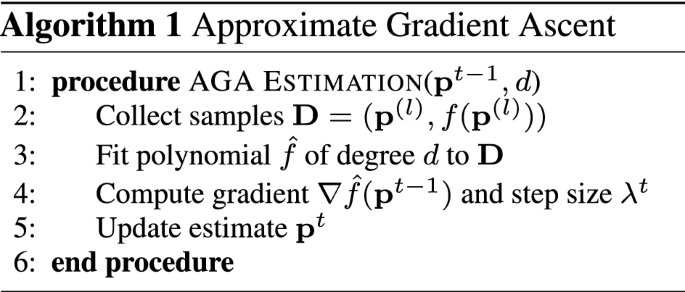

AGA The main idea of this method is to update the estimated parameters of an agent \(\omega\) by following the gradient of a type’s action probabilities with respect to its parameter values. Algorithm 1 summarises this method.

First, the method collects samples \((\mathbf {p}^{(l)}, f(\mathbf {p} ^ {(l)}))\) and stores them in a set \(\mathbf {D}\) (Line 2). The samples could be collected, for example, on a uniform grid over the parameter space that includes the boundary points. The algorithm then fits a polynomial \(\hat{f}\) of some specified degree d to the collected samples (Line 3). Line 4 computes the gradient \(\nabla \hat{f}\), to be applied with some suitably chosen step size \(\lambda ^t\). Finally, in Line 5, the estimated parameter is updated as presented in Equation 2, i.e., \(\mathbf {p}^t = \mathbf {p}^{t-1} + \lambda ^t \nabla \hat{f}(\mathbf {p}^{t-1})\).

These steps define the AGA algorithm to estimate the agent’s parameters and type iteratively. For further details, we recommend reading Albrecht and Stone [2].
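The AGA steps can be sketched for a single scalar parameter with NumPy polynomial fitting. This is a minimal illustration under stated assumptions, not Albrecht and Stone’s implementation: the parameter is one-dimensional, the step size is constant, the result is clipped to the parameter range, and the likelihood function below is hypothetical and kept fixed (in the real method, f changes with every new observation).

```python
import numpy as np


def aga_step(f, p_prev, low, high, degree=3, n_samples=10, step_size=0.05):
    """One Approximate Gradient Ascent update for a scalar parameter in [low, high]."""
    # Line 2: collect samples (p^(l), f(p^(l))) on a uniform grid that includes the bounds.
    grid = np.linspace(low, high, n_samples)
    values = np.array([f(p) for p in grid])
    # Line 3: fit a polynomial f_hat of degree d (coefficients, highest power first).
    f_hat = np.polyfit(grid, values, degree)
    # Line 4: gradient of f_hat evaluated at the previous estimate.
    gradient = np.polyval(np.polyder(f_hat), p_prev)
    # Line 5: p^t = p^{t-1} + lambda^t * grad f_hat(p^{t-1}), clipped to the valid range.
    return float(np.clip(p_prev + step_size * gradient, low, high))


# Hypothetical action likelihood that peaks at p = 0.7.
def likelihood(p):
    return 1.0 - (p - 0.7) ** 2


p = 0.3
for _ in range(200):
    p = aga_step(likelihood, p, 0.0, 1.0)
print(round(p, 3))
```

Starting from 0.3, repeated steps move the estimate towards the maximum of the fitted likelihood.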

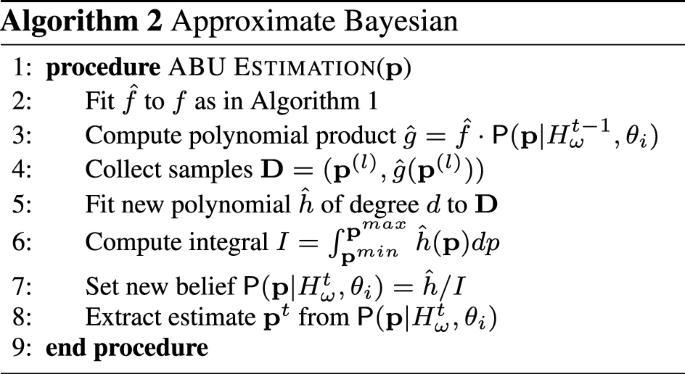

ABU In this method, rather than using \(\hat{f}\) to perform gradient-based updates, Albrecht and Stone use \(\hat{f}\) to perform Bayesian updates that retain information from past updates. Hence, in addition to the belief \(\mathsf {P}(\theta _i |H^t_\omega )\), agent \(\phi\) now also has a belief \(\mathsf {P}(\mathbf {p}|H^t_\omega , \theta _i )\) to quantify the relative likelihood of parameter values \(\mathbf {p}\), for agent \(\omega\), when considering type \(\theta _i\). This new belief is represented as a polynomial of the same degree d as \(\hat{f}\). Algorithm 2 provides a summary of the Approximate Bayesian Update method.

After fitting \(\hat{f}\) (Line 2), the polynomial convolution of \(\mathsf {P}(\mathbf {p}|H_\omega ^{t-1} , \theta _i )\) and \(\hat{f}\) yields a polynomial \(\hat{g}\) of degree greater than d (Line 3). Then, in Line 4, a set of sample points is collected from \(\hat{g}\) in the same way as in Approximate Gradient Ascent, and a new polynomial \(\hat{h}\) of degree d is fitted to this set (Line 5). Finally, \(\hat{h}\) is integrated over the parameter space and divided by the value of that integral to obtain the new belief \(\mathsf {P} (\mathbf {p}|H_\omega ^t, \theta _i )\). This new belief can then be used to obtain a parameter estimate, e.g., by finding the maximum of the polynomial or by sampling from it. For further details, we recommend reading Albrecht and Stone’s work [2].
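A minimal sketch of one ABU update, assuming a scalar parameter and representing every polynomial by its NumPy coefficient array (highest power first). Multiplying two polynomials is the convolution of their coefficient vectors, which `np.polymul` computes. The grid size, the degree, and the maximum-based estimate are illustrative choices, and the likelihood below is hypothetical.

```python
import numpy as np


def abu_step(f, belief, low, high, degree=3, n_samples=20):
    """One Approximate Bayesian Update for a scalar parameter in [low, high].

    belief: coefficients (highest power first) of P(p | H^{t-1}, theta_i).
    Returns the coefficients of the normalised new belief P(p | H^t, theta_i).
    """
    grid = np.linspace(low, high, n_samples)
    # Fit f_hat of degree d to samples of f.
    f_hat = np.polyfit(grid, [f(p) for p in grid], degree)
    # Polynomial product (convolution of coefficient vectors): degree greater than d.
    g_hat = np.polymul(belief, f_hat)
    # Sample g_hat on the same grid and refit a degree-d polynomial h_hat.
    h_hat = np.polyfit(grid, np.polyval(g_hat, grid), degree)
    # Normalise h_hat by its integral over the parameter range.
    antiderivative = np.polyint(h_hat)
    area = np.polyval(antiderivative, high) - np.polyval(antiderivative, low)
    return h_hat / area


def map_estimate(belief, low, high, resolution=1001):
    """Parameter estimate taken as the maximum of the belief polynomial on a fine grid."""
    xs = np.linspace(low, high, resolution)
    return float(xs[np.argmax(np.polyval(belief, xs))])


uniform_belief = np.array([1.0])  # constant density 1 on [0, 1]
belief = abu_step(lambda p: 1.0 - (p - 0.7) ** 2, uniform_belief, 0.0, 1.0)
print(map_estimate(belief, 0.0, 1.0))
```

Unlike the AGA sketch, the returned belief carries information from all previous updates, since it is fed back in as the prior of the next call.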

POMCP Although agent \(\phi\) has full observation of the environment in the MDP model, it cannot observe the types and parameters of its teammates. We can therefore employ POMCP [37], a state-of-the-art on-line planning algorithm for POMDPs (Partially Observable Markov Decision Processes) [25]. POMCP stores a particle filter at each node of a Monte Carlo search tree. Since the environment is fully observable apart from the types and parameters of the other agents, the particles are defined as different combinations of types and parameters for all agents in \(\varvec{\Omega }\), e.g., [(\(\theta _4, \mathbf {p}_1\)), (\(\theta _2, \mathbf {p}_2\)), ..., (\(\theta _1, \mathbf {p}_n\))], where each (\(\theta , \mathbf {p}\)) corresponds to one non-learning agent.

At the very first root, when the particles are created, we randomly assign types and parameters to each agent in each particle. Then, at every iteration, we sample a particle from the root’s particle filter, which sets the estimated types and parameters of the agents for that simulation. As in the POMCP algorithm, the root is updated once a real action is taken and a real observation is received. To obtain a type probability \(\mathsf {P}(\theta )_\omega\) for a certain agent \(\omega\), we calculate the frequency with which type \(\theta\) is assigned to \(\omega\) in the current root’s particle filter. For the parameter estimate, we take the average across the particle filter (for each type and agent combination). For further explanations of the POMCP algorithm, we recommend reading Silver and Veness [37].
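The frequency-based type probability and the averaged parameters described above can be sketched as follows, assuming each particle is a list with one (type, parameter vector) pair per non-learning agent. The particle values in the usage example are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple

# One particle assigns a (type, parameter vector) pair to every non-learning agent.
Particle = Sequence[Tuple[Hashable, Tuple[float, ...]]]


def estimates_from_particles(particles: List[Particle], agent: int):
    """Type frequencies and per-type mean parameters for one agent at the root."""
    params_by_type = defaultdict(list)
    for particle in particles:
        theta, params = particle[agent]
        params_by_type[theta].append(params)
    n = len(particles)
    # P(theta)_omega: how often theta is assigned to this agent in the particle filter.
    type_probs = {theta: len(ps) / n for theta, ps in params_by_type.items()}
    # Parameter estimate: element-wise average over the particles with that type.
    mean_params = {
        theta: tuple(sum(column) / len(ps) for column in zip(*ps))
        for theta, ps in params_by_type.items()
    }
    return type_probs, mean_params


root_particles = [
    [("theta1", (0.2, 0.4)), ("theta2", (0.5, 0.5))],
    [("theta1", (0.4, 0.6)), ("theta1", (0.1, 0.1))],
    [("theta2", (0.9, 0.9)), ("theta2", (0.3, 0.3))],
]
probs, means = estimates_from_particles(root_particles, agent=0)
print(probs, means)
```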

6 On-line estimators for ad-hoc task execution

In this section, we introduce our novel algorithm, On-line Estimators for Ad-hoc Task Execution (OEATE), which helps the ad-hoc agent \(\phi\) to learn the parameters and types of non-learning teammates autonomously. The main idea of the algorithm is to observe each non-learning agent (\(\omega \in \varvec{\Omega }\)) and record all tasks (\(\tau \in \mathbf {T}\)) that any one of the agents accomplishes, in order to compare them with the predictions of sets of estimators. In OEATE, there are some fundamental concepts applied during the process of estimating parameters and types. Therefore, we introduce the concepts first and, then, explain the algorithm in detail.

6.1 OEATE fundamentals

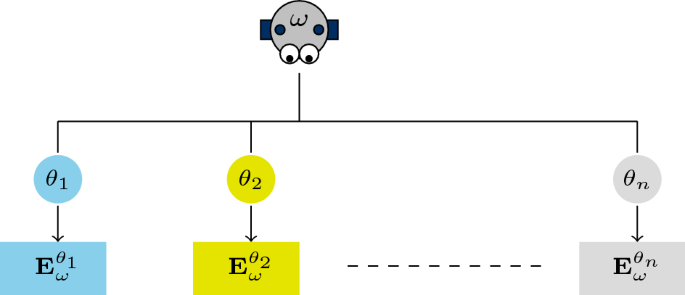

Sets of Estimators In OEATE, there is a set of estimators \(\mathbf {E}^{\theta }_{\omega }\) for each type \(\theta\) and each agent \(\omega\) that agent \(\phi\) reasons about (Fig. 1). Each set \(\mathbf {E}^{\theta }_{\omega }\) has a fixed number N of estimators \(e \in \mathbf {E}^{\theta }_{\omega }\). Therefore, the total number of estimator sets for all agents is \(|\varvec{\Omega }| \times |\varvec{\Theta }|\). Figure 1 presents this idea, relating agents, types and estimators.

An estimator e of \(\mathbf {E}^{\theta }_{\omega }\) is a tuple: \(\{\mathbf {p}_e, {c}_e, {f}_e, {\tau }_e\}\), where:

- \(\mathbf {p}_e\) is the vector of estimated parameters for \(\omega\); each element of the parameter vector lies within its corresponding range.
- \({c}_e\) holds the success score of estimator e in predicting tasks.
- \({f}_e\) holds the failure score of estimator e in predicting tasks.
- \(\varvec{\tau }_e\) is the task that \(\omega\) would try to complete, assuming type \(\theta\) and parameters \(\mathbf {p}_e\). Given the estimated parameters \(\mathbf {p}_e\) and type \(\theta\), we assume it is easy to predict \(\omega\)’s target task at any state.
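The estimator tuple can be sketched as a small Python data class. The field names are hypothetical, and treating a not-yet-evaluated estimator as having success rate 1.0 (so it is never removed before its first evaluation) is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Hashable, Optional, Tuple


@dataclass
class Estimator:
    """One OEATE estimator e in a set E^theta_omega."""

    params: Tuple[float, ...]        # p_e, each element within its parameter range
    task: Optional[Hashable] = None  # tau_e; None while no valid task can be predicted
    successes: float = 0.0           # c_e, accumulated success score
    failures: float = 0.0            # f_e, accumulated failure score

    def success_rate(self) -> float:
        """c_e / (c_e + f_e); an unevaluated estimator counts as 1.0 (assumption)."""
        total = self.successes + self.failures
        return self.successes / total if total > 0 else 1.0


e = Estimator(params=(0.2, 0.5), task="tau1")
e.successes += 4
print(e.success_rate())
```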

The success and failure scores (\(c_e\) and \(f_e\), respectively) are explained further in the Evaluation step of OEATE.

All estimators are initialised at the beginning of the process and evaluated whenever a task is completed (by the \(\omega\) agent alone or cooperatively). Estimators that fail to make good predictions after some trials are removed and replaced by estimators created either from successful ones or purely at random, in a fashion inspired by GA [24].

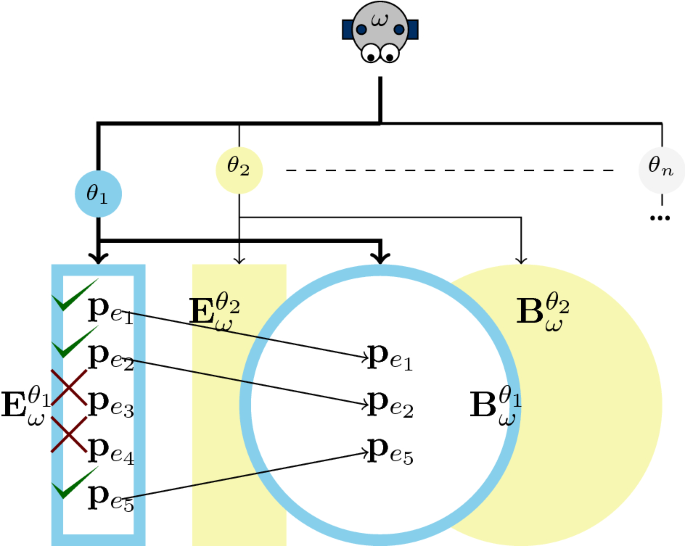

Bags of successful parameters Given the parameter vector \(\mathbf {p}_e = <p_1, p_2, \ldots , p_n>\), if an estimator e succeeds in task prediction, we store each element of \(\mathbf {p}_e\) in a bag of successful parameters, to be used later when creating new parameter vectors. Accordingly, there is a bag of parameters \(\mathbf {B}_\omega ^{\theta }\) for each type \(\theta \in \varvec{\Theta }\), just as there is an estimator set \(\mathbf {E}^{\theta }_\omega\) for each type. These bags are not erased between iterations, so their size may increase at each iteration; there is no size limit. We provide more details in Sect. 6.2. Figure 2 presents this idea, relating agents, types and estimators to the addition of estimators to the bags.

For each \(\omega\) agent and each possible type \(\theta \in \Theta\), there is a bag of successful estimators. Successful estimators are copied to the bag of their respective type, in order to later generate new combinations of their elements. The check mark indicates success in predicting the task and the cross mark indicates failure.

Choose Target State In the presented task-based ad-hoc teamwork context, besides estimating the type and parameters of each non-learning agent (\(\omega \in \varvec{\Omega }\)), the learning agent \(\phi\) must be able to estimate the Choose Target State (\(\mathfrak {s}_e\)) of each \(\omega\). The Choose Target State of an \(\omega\) agent can be any \(s \in S\); in other words, a non-learning agent \(\omega\) can choose a new task \(\tau \in T\) to pursue at any time t or state s. This can happen in many situations. For example, when agent \(\omega\) notices that its target no longer exists (because it was completed by other agents), it chooses a new target, so the Choose Target State is not the state in which agent \(\omega\) completed its last task. Hence, a task-based estimation algorithm must be able to identify the moments where a task decision may have happened, in order to predict the target correctly.

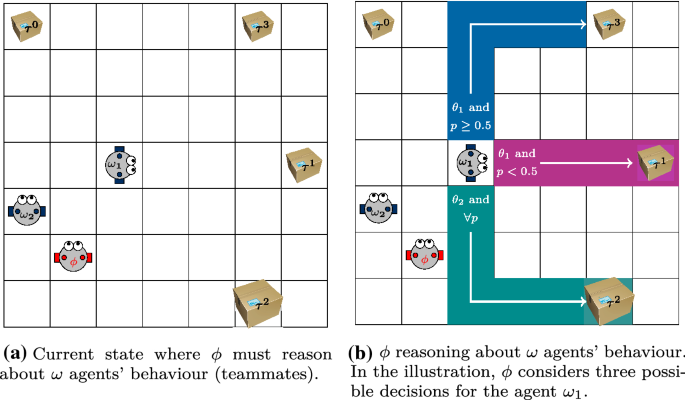

Example For a better understanding of our method’s fundamentals, we will present a simple example. Let us consider a foraging domain [2, 41], in which there is a set of agents in a grid-world environment as well as some items. Agents in this domain are supposed to collect items located in the environment.

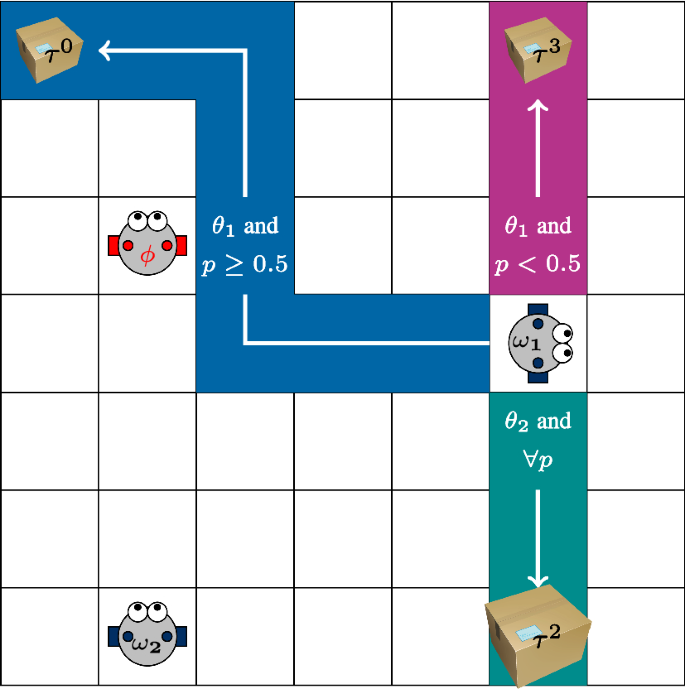

We show a simple scenario in Fig. 3, with two non-learning agents \(\omega _1\), \(\omega _2\), one learning agent \(\phi\), and four items of two sizes. As is usual in foraging problems, each task is defined as collecting a particular item, so this scenario has four tasks \(\tau ^i\). In addition, we have two types \(\theta _1\) and \(\theta _2\), and two parameters (\(p_1, p_2\)), where \(p_1, p_2 \in [0, 1]\).

To keep the example simple, we consider that only \(p_1\) affects \(\omega _1\)’s decision-making at each state, and its behaviour follows the rules:

- If the type is \(\theta _1\) and \(p_1 \ge 0.5\), then \(\omega _1\) goes towards the furthest small item (\(\tau ^3\)).
- If the type is \(\theta _1\) and \(p_1 < 0.5\), then \(\omega _1\) goes towards the closest small item (\(\tau ^1\)).
- If the type is \(\theta _2\), then for all \(p_1 \in [0, 1]\), \(\omega _1\) goes towards the closest big item (\(\tau ^2\)).
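The three toy rules can be written as a small function, which makes explicit that only \(p_1\) matters here. Item distances are not given in the text, so the distances below are hypothetical values chosen to be consistent with the labels \(\tau ^1\) (closest small), \(\tau ^3\) (furthest small) and \(\tau ^2\) (closest big).

```python
from typing import List, Optional, Tuple

# (task name, item size, distance from omega_1); the distances are hypothetical.
Item = Tuple[str, str, float]


def omega1_target(theta: str, p1: float, items: List[Item]) -> Optional[str]:
    """Target task of omega_1 under the toy rules; only p1 influences the choice."""
    if theta == "theta1":
        small = [item for item in items if item[1] == "small"]
        if not small:
            return None
        pick = max if p1 >= 0.5 else min  # furthest vs closest small item
        return pick(small, key=lambda item: item[2])[0]
    if theta == "theta2":
        big = [item for item in items if item[1] == "big"]
        return min(big, key=lambda item: item[2])[0] if big else None
    raise ValueError(f"unknown type {theta!r}")


items = [("tau1", "small", 2.0), ("tau2", "big", 3.0),
         ("tau3", "small", 6.0), ("tau4", "big", 5.0)]
print(omega1_target("theta1", 0.2, items))  # true type and p1 of omega_1
```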

Therefore, in the example scenario, there are four sets of estimators, two for each \(\omega\) agent: \(\mathbf {E}^{\theta _1} _{\omega _1}\), \(\mathbf {E}^{\theta _2}_{\omega _1}\), \(\mathbf {E}^{\theta _1} _{\omega _2}\), \(\mathbf {E}^{\theta _2}_{\omega _2}\). We assume that each set contains 5 estimators (\(N=5\)). Furthermore, we maintain four bags of successful parameters: \(\mathbf {B}_{\omega _1}^{\theta _1}\), \(\mathbf {B}^{\theta _2}_{\omega _1}\), \(\mathbf {B}^{\theta _1} _{\omega _2}\), \(\mathbf {B}^{\theta _2}_{\omega _2}\).

We assume that the true type of agent \(\omega _1\) is \(\theta _1\), and the true parameter vector is (0.2, 0.5). At this point, we will focus on the set of estimators for agent \(\omega _1\). Moreover, we will continue to use this example to explain further details of OEATE implementation.

6.2 Process of estimation

Having presented the fundamental elements of OEATE, we now explain the process of estimating the parameters and type of each non-learning agent. At the same time, we demonstrate how OEATE evolves over its steps using the example above. The algorithm is divided into five steps, which are executed for all agents in \(\varvec{\Omega }\) at every iteration:

- (i) Initialisation: initialises the estimator sets and the bags of successful parameters for each agent \(\omega \in \varvec{\Omega }\).
- (ii) Evaluation: increases the success or failure score of each estimator in every initialised estimator set, depending on whether it correctly predicted \(\omega\)’s target task. If an estimator predicts the task correctly, its parameters are added to the respective bag; otherwise, it becomes a candidate for elimination.
- (iii) Generation: replaces the estimators removed in the Evaluation step with new ones.
- (iv) Estimation: calculates the type probabilities and the expected parameter values for each existing estimator set, based on the success scores of the estimators in each set.
- (v) Update: checks the consistency of each estimator e and its chosen target \(\tau _e\) given the current world state. If it finds an inconsistency, a new prediction is made from \(\omega\)’s perspective.

These steps are explained in detail below:

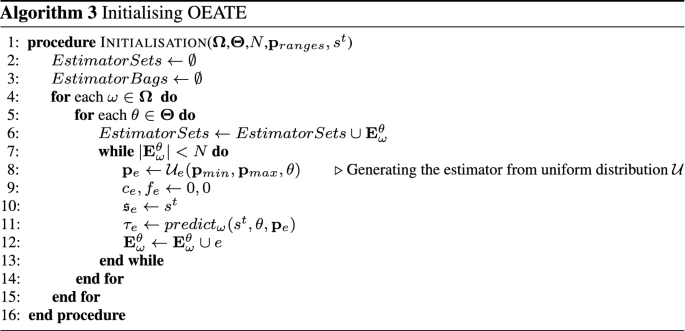

Initialisation At the very first step, for each identified teammate in the environment, we initialise its estimator set and bag for each possible type. Therefore, agent \(\phi\) needs to create N estimators for each type \(\theta \in \varvec{\Theta }\) and each \(\omega \in \varvec{\Omega }\). In the absence of prior information, the parameter vector \(\mathbf {p}_e\) of each estimator can be initialised with values drawn from the uniform distribution \(\mathcal {U}\) over each parameter’s range. Since each estimator has a specific type \(\theta\) and parameter vector \(\mathbf {p}_e\), it allows agent \(\phi\) to simulate agent \(\omega\)’s task selection process. A task is predicted and assigned to \(\tau _e\) when, in a given state \(s \in S\) at time t, the prediction returns a valid task. If there is no valid task at state s and time t, \(\tau _e\) receives “None” and is updated in later iterations (by the Update step). Finally, both \({c}_e\) and \({f}_e\) are initialised to zero.

Algorithm 3 presents the initialisation process.
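A minimal sketch of the Initialisation step, assuming parameters are drawn uniformly from their ranges and that a hypothetical callback `predict_task(omega, theta, params, state)` simulates \(\omega\)’s task choice and returns `None` when no valid task exists. The toy predictor mirrors the running example’s rules for \(\omega _1\) and is purely illustrative; this is not the authors’ implementation of Algorithm 3.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, List, Optional, Sequence, Tuple


@dataclass
class Estimator:
    params: Tuple[float, ...]       # p_e
    task: Optional[Hashable]        # tau_e (None if no valid task yet)
    choose_target_state: Hashable   # s_e, state in which tau_e was predicted
    successes: float = 0.0          # c_e
    failures: float = 0.0           # f_e


# Hypothetical simulator hook: (omega, theta, params, state) -> task or None.
Predictor = Callable[[Hashable, Hashable, Tuple[float, ...], Hashable], Optional[Hashable]]


def initialise(agents: Sequence[Hashable], types: Sequence[Hashable],
               ranges: Sequence[Tuple[float, float]], n: int, state: Hashable,
               predict_task: Predictor, rng: random.Random):
    """Create N uniformly random estimators and an empty bag per (omega, theta)."""
    estimators: Dict[Tuple[Hashable, Hashable], List[Estimator]] = {}
    bags: Dict[Tuple[Hashable, Hashable], List[Tuple[float, ...]]] = {}
    for omega in agents:
        for theta in types:
            estimator_set = []
            for _ in range(n):
                params = tuple(rng.uniform(lo, hi) for lo, hi in ranges)
                task = predict_task(omega, theta, params, state)
                estimator_set.append(Estimator(params, task, state))
            estimators[(omega, theta)] = estimator_set
            bags[(omega, theta)] = []
    return estimators, bags


def toy_predict(omega, theta, params, state):
    """Toy predictor in the spirit of the running example (hypothetical)."""
    if theta == "theta2":
        return "tau2"
    return "tau3" if params[0] >= 0.5 else "tau1"


sets, bags = initialise(["omega1", "omega2"], ["theta1", "theta2"],
                        [(0.0, 1.0), (0.0, 1.0)], n=5, state=(3, 4),
                        predict_task=toy_predict, rng=random.Random(0))
print(len(sets), [len(s) for s in sets.values()])
```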

Initialisation Example Returning to our example, in the Initialisation step we start by creating random estimators, as shown in Table 1. To keep the example simple, we define the state as only the position of agent \(\omega _1\). Therefore, we set each \(\mathfrak {s}_e\) (Choose Target State) to the initial position of \(\omega _1\), which is (3, 4), and create the parameter vectors \(\mathbf {p}_e\) by sampling from the uniform distribution, separately for \(p_1\) and \(p_2\). Agent \(\phi\) simulates \(\omega _1\)’s task decision-making process for each estimator in the sets \(\mathbf {E}^{\theta _1}_{\omega _1}\) and \(\mathbf {E}^{\theta _2}_{\omega _1}\), and obtains the corresponding target task \(\tau _e\) from the type and parameters of each estimator. In addition, all \(f_e\) and \(c_e\) are initialised to zero. All initial estimators for both sets are shown in Table 1.

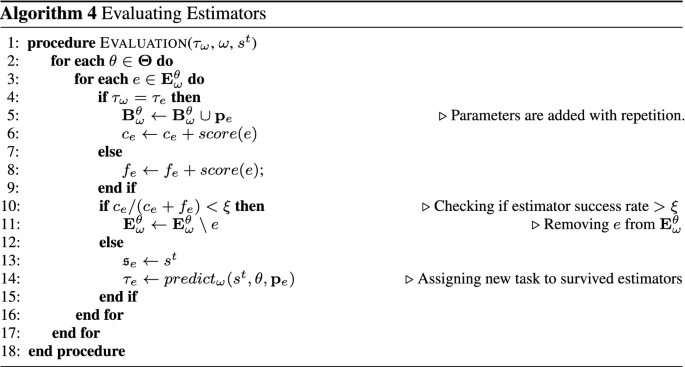

Evaluation The evaluation of all estimator sets \(\mathbf {E}^{\theta }_\omega\) for a certain agent \(\omega\) starts when that agent completes a task \(\tau _\omega\). The objective of this step is to find the estimators that correctly predicted \(\omega\)’s just-completed task \(\tau _\omega\). Algorithm 4 summarises the evaluation process.

As there is an estimator set for each type \(\theta \in {\varvec{\Theta }}\), for every e in \(\mathbf {E}^{\theta }_\omega\) we check whether \(\tau _{e}\) (the task estimated by assuming \(\mathbf {p}_e\) as \(\omega\)’s parameters with type \(\theta\)) equals \(\tau _{\omega }\) (the task actually completed). If they are equal, we consider \(\mathbf {p}_e\) a successful parameter vector and save it in the respective bag \(\mathbf {B}_\omega ^{\theta }\) (Line 5). The union of bag and parameter vector in this line is a multiset union: new parameters are added to the bag with repetition, so a parameter vector that succeeds many times appears in the bag as many times as it has succeeded, which increases its chance of being selected.

If the estimated task \(\tau _e\) equals the real task \(\tau _{\omega }\), we increase \(c_e\) following \(c_e \leftarrow c_e + score(e)\). The value score(e) denotes the information-level score of the prediction made by estimator e, which gives certain task completions more weight than others. For example, if a task prediction occurs many steps before the task completion, it is more likely to have been made by a correct estimator than by chance. This function can also be tuned in a domain-specific way.

If the estimated task \(\tau _e\) differs from the real task \(\tau _{\omega }\), we increase the failure score following \(f_e \leftarrow f_e + score(e)\). Note from the algorithm that we only remove an estimator e if its success rate, \(\frac{c_e}{c_e+f_e}\), is lower than \(\xi\) (Line 10). The success threshold \(\xi\) improves our estimator set by removing the estimators that do not make good predictions and keeping those that do (more details in the Generation explanation).

Note that, with this approach, any generated estimator e may be eliminated at its first evaluation. Hence, some estimators that could approximate the actual parameters well may be removed after a single wrong prediction, for any \(\xi \in (0,1]\). However, even if such estimators fail at the beginning of the estimation, other estimators are also likely to fail in subsequent iterations of OEATE, which allows the removed, potentially correct estimator to be regenerated through the bags or by sampling it again from the uniform distribution. As we show in Section 6.3, under some assumptions, OEATE converges to the correct parameters for all agents as the number of completed tasks grows.

Finally, the Choose Target State (\(\mathfrak {s}_e\)) of each successful estimator is updated, and a new task (\(\tau _{e}\)) is predicted using the estimator’s type and parameters. The evaluation process then ends, and the removed estimators are replaced by new ones in the Generation step.
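A minimal sketch of the Evaluation step for one estimator set, assuming `score(e)` and `predict_task(params, state)` are supplied by the caller (both hypothetical hooks). Following the text, only successful estimators receive a new Choose Target State and prediction. In the usage example, the two successful vectors (0.4, 0.6) and (0.2, 0.5), the score of 4 and \(\xi = 0.5\) come from the running example; the three failing vectors are hypothetical, and the new prediction "tau3" follows from the toy rules, since \(\tau ^3\) is the only small item left.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, List, Optional, Tuple


@dataclass
class Estimator:
    params: Tuple[float, ...]      # p_e
    task: Optional[Hashable]       # tau_e
    choose_target_state: Hashable  # s_e
    successes: float = 0.0         # c_e
    failures: float = 0.0          # f_e


def evaluate(estimator_set: List[Estimator], bag: List[Tuple[float, ...]],
             completed_task: Hashable, state: Hashable, xi: float,
             score: Callable[[Estimator], float],
             predict_task: Callable[[Tuple[float, ...], Hashable], Optional[Hashable]]
             ) -> List[Estimator]:
    """Score every estimator of one set, fill the bag, and return the survivors."""
    survivors = []
    for e in estimator_set:
        if e.task == completed_task:
            e.successes += score(e)
            bag.append(e.params)  # multiset union: repeated successes add repeated copies
            # Successful estimators get a new Choose Target State and a new prediction.
            e.choose_target_state = state
            e.task = predict_task(e.params, state)
        else:
            e.failures += score(e)
        total = e.successes + e.failures
        rate = e.successes / total if total > 0 else 1.0
        if rate >= xi:
            survivors.append(e)  # estimators with success rate below xi are dropped
    return survivors


theta1_set = [Estimator((0.4, 0.6), "tau1", (3, 4)),
              Estimator((0.2, 0.5), "tau1", (3, 4)),
              Estimator((0.7, 0.1), "tau3", (3, 4)),
              Estimator((0.9, 0.3), "tau3", (3, 4)),
              Estimator((0.6, 0.8), "tau3", (3, 4))]
bag: List[Tuple[float, ...]] = []
survivors = evaluate(theta1_set, bag, "tau1", (6, 4), 0.5,
                     score=lambda e: 4.0, predict_task=lambda p, s: "tau3")
print([s.params for s in survivors], bag)
```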

Evaluation Example From the previous example, after the initialisation, the agents move towards their respective targets. Based on the true type and parameters of the agent \(\omega _1\), after some iterations, the agent (\(\omega _1\)) gets the item that corresponds to the task \(\tau ^1\). For this example, and throughout our experimentation, we will use the number of steps required between predicting the task and completing the next task as the score (information-level) for the estimator for that prediction. Let us assume that the number of steps required by the agent \(\omega _1\) is 4 (3 for moving and 1 for completing). From Fig. 4, the agent \(\omega _1\)’s new position will be (6,4). We will use this value as the score for the estimators. Note that here, since all estimators chose the task at the same time, they will get the same score.

Whenever an agent completes a task, the evaluation process starts. We now carry out the next step of our process: in Evaluation, all estimators of the two sets \(\mathbf {E}^{\theta _1} _{\omega _1}\), \(\mathbf {E}^{\theta _2}_{\omega _1}\) are evaluated. If the task \(\tau _e\) of an estimator e equals \(\tau ^1\), its success score \(c_e\) increases by score(e) and its failure score is unchanged; otherwise, its failure score \(f_e\) increases by score(e). The updated values of the estimators are shown in Table 2.

If we set the removal threshold to 0.5 (\(\xi = 0.5\)), two estimators survive (\(\frac{c_e}{c_e+f_e} \ge \xi\)) in \(\mathbf {E}^{\theta _1}_{\omega _1}\) and none in \(\mathbf {E}^{\theta _2}_{\omega _1}\). Hence, the bag for \(\theta _1\) is \(\mathbf {B}_{\omega _1}^{ {\theta _1}} = \{(0.4,0.6) , (0.2,0.5)\}\) and the bag for \(\theta _2\) is empty. The new Choose Target State is (6,4), from which we find the new task (\(\tau _e\)) of each surviving estimator. The new estimator sets are presented in Table 3, and the new Choose Target State is illustrated in Fig. 4.

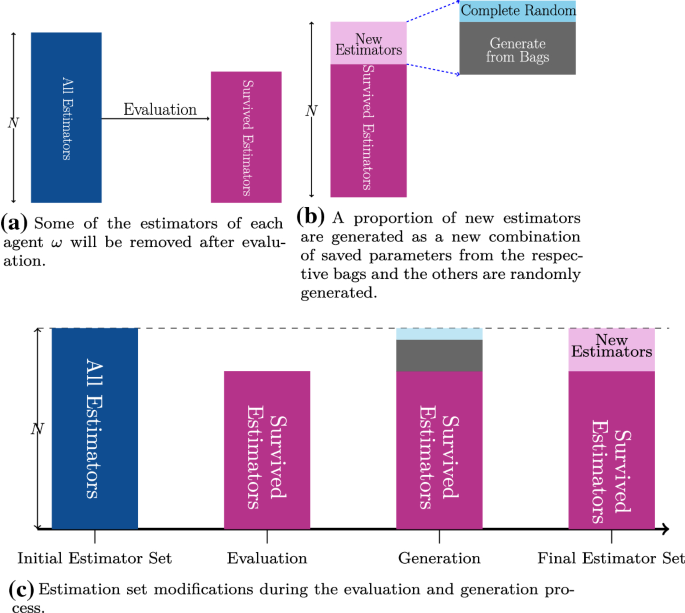

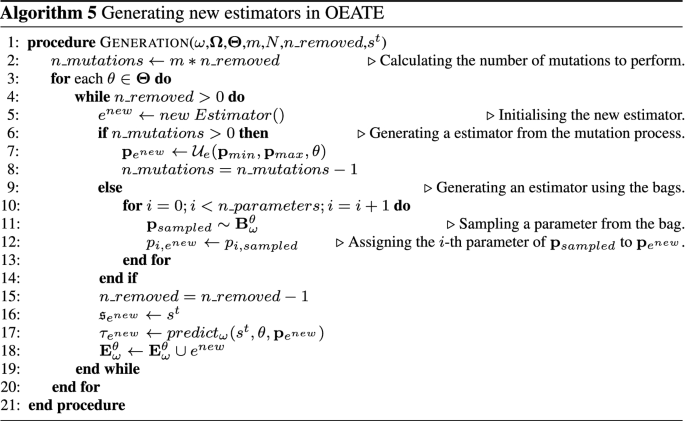

Generation The generation of new estimators occurs after every evaluation and only replaces the removed estimators. The objective of this step is to keep the size of each set \(\mathbf {E}^{\theta }_\omega\) equal to N.

Unlike the Initialisation step, we do not create only random parameters for new estimators: a proportion of them is generated from previously successful parameters in the bags \(\mathbf {B}_\omega ^{\theta }\). This allows us to use new combinations of parameters from estimators that made at least one successful prediction in previous steps. Moreover, since the number of copies of a parameter vector \(\mathbf {p}\) in the bag \(\mathbf {B}_\omega ^{\theta }\) equals its number of past successes, the probability of sampling a parameter vector grows with how often it has succeeded.

Using successful estimators to generate part of the new estimators follows Genetic Algorithm (GA) principles. So far, the described process shares several similarities with GAs, such as generating a sample population for further evaluation and feature improvement. Since we aim to boost our estimation process (based on estimator sampling and evaluation), we need a reasonable way to generate new estimators that can improve estimation quality. Therefore, inspired by the GA mutation and crossover operators, we implement a GA-inspired procedure for generating estimators.

After eliminating the estimators whose success rate is lower than the threshold \(\xi\), we generate new estimators with mutation rate m: part of the new population is generated randomly from a uniform distribution \(\mathcal {U}\), and the rest by a crossover-inspired process that uses our bags of successful parameters. With domain knowledge, different distributions could be used. Figure 5 illustrates how the estimator set changes during this process and indicates the portion of estimators generated from the bags or randomly. Algorithm 5 summarises this generation procedure.

The generation process using the bags is shown in Algorithm 5, Lines 10-13. There, a new estimator is created by sampling n parameter vectors (with replacement) from the target bag and taking the i-th parameter from the i-th sampled vector. In other words, if the parameter vector of a new estimator (\(e^{new}\)) is \(\mathbf {p}_{e^{new}} = <p_1, p_2, \ldots , p_n>\), each \(p_i\) is chosen by sampling \(\mathbf {p}_{sampled} \sim \mathbf {B}_{\omega }^{\theta }\) and taking its i-th parameter (\(p_{i,{sampled}}\)).

After performing all generations from the bag, we fill the rest of the estimator set with uniformly generated parameters. Once the estimator set is full (i.e., \(|\mathbf {E}_{\omega }^{\theta }| = N\)), the current state is assigned as the Choose Target State (\(\mathfrak {s}_{e^{new}}\)) of every new estimator. Finally, a task (\(\tau _{e^{new}}\)) is predicted for each new estimator and the generation process finishes.
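A minimal sketch of the Generation step for one estimator set, assuming uniform sampling for the mutation-like part and a hypothetical `predict_task(params, state)` hook. Rounding \((1 - m)\) times the number of missing estimators to the nearest integer is an assumption of this sketch. The usage example reproduces the counts of the Generation Example below (two estimators from the bag, one uniform), although the sampled values themselves depend on the random seed.

```python
import random
from dataclasses import dataclass
from typing import Callable, Hashable, List, Optional, Sequence, Tuple


@dataclass
class Estimator:
    params: Tuple[float, ...]      # p_e
    task: Optional[Hashable]       # tau_e
    choose_target_state: Hashable  # s_e
    successes: float = 0.0         # c_e
    failures: float = 0.0          # f_e


def generate(survivors: List[Estimator], bag: Sequence[Tuple[float, ...]], n: int,
             m: float, ranges: Sequence[Tuple[float, float]], state: Hashable,
             predict_task: Callable[[Tuple[float, ...], Hashable], Optional[Hashable]],
             rng: random.Random) -> List[Estimator]:
    """Refill one set to size N: (1 - m) of the missing ones from the bag, the rest uniform."""
    missing = n - len(survivors)
    from_bag = round((1 - m) * missing) if bag else 0  # rounding rule is an assumption
    new_params = []
    for _ in range(from_bag):
        # Crossover-like step: the i-th element comes from an independently sampled bag entry.
        new_params.append(tuple(rng.choice(bag)[i] for i in range(len(ranges))))
    while len(new_params) < missing:
        # Mutation-like step: a fully random vector from the uniform distribution.
        new_params.append(tuple(rng.uniform(lo, hi) for lo, hi in ranges))
    # New estimators start at the current state with a freshly predicted task.
    new = [Estimator(p, predict_task(p, state), state) for p in new_params]
    return survivors + new


survivors = [Estimator((0.4, 0.6), "tau3", (6, 4), successes=4.0),
             Estimator((0.2, 0.5), "tau3", (6, 4), successes=4.0)]
bag = [(0.4, 0.6), (0.2, 0.5)]
full_set = generate(survivors, bag, n=5, m=1 / 3, ranges=[(0.0, 1.0), (0.0, 1.0)],
                    state=(6, 4), predict_task=lambda p, s: "tau3", rng=random.Random(1))
print([e.params for e in full_set])
```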

Generation Example Supposing a mutation rate \(m = \frac{1}{3}\), \((1 - \frac{1}{3}) \times (5-2) = 2\) new estimators are generated by sampling from the bags, while \(\frac{1}{3} \times (5-2) = 1\) estimator is generated from the uniform distribution. Therefore, we may create new estimators with the following parameters: (0.4, 0.5); (0.2, 0.6); (0.8, 0.7), where the last vector is fully random. For \(\mathbf {E}^{\theta _2}_{\omega _1}\), since all estimators were removed and the corresponding bag is empty, the whole set \(\mathbf {E}^{\theta _2}_{\omega _1}\) is generated from the uniform distribution, as in the initialisation process. After this, the current state (6,4) is assigned as the Choose Target State of each new estimator and a task is predicted. All new estimators and updated values are shown in Table 4.

Estimation At each iteration, after evaluation and generation, a parameter and type must be estimated for each \(\omega \in \varvec{\Omega }\) to improve decision-making. First, based on the current estimator sets, we calculate the probability distribution over the possible types. To calculate the probability of agent \(\omega\) having type \(\theta\), \(\mathsf {P}(\theta )_\omega\), we use the success scores \(c_e\) of all estimators of type \(\theta\). For each \(\omega \in \varvec{\Omega }\) and each type \(\theta\), we add up the success scores \(c_e\) of all estimators in \(\mathbf {E}^\theta _\omega\), that is:

\[ k^\theta _\omega = \sum _{e \in \mathbf {E}^\theta _\omega } c_e \]

In other words, we want to determine which set of estimators is most successful at correctly predicting the tasks that the corresponding non-learning agent completed. Next, we normalise the calculated \(k^\theta _\omega\) to convert it into a probability estimate:

\[\mathsf {P}(\theta )_\omega = \frac{k^\theta _\omega }{\sum _{\theta '} k^{\theta '}_\omega }\]
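The two steps above (summing success scores per type, then normalising) can be sketched as follows. The dictionary layout, the function name `type_probabilities`, and the uniform fallback when every \(k^\theta_\omega = 0\) are our assumptions; the scores in the usage line are made up and are not the values of Table 4.

```python
from typing import Dict, List


def type_probabilities(scores: Dict[str, List[float]]) -> Dict[str, float]:
    """k^theta = sum of c_e over E^theta; P(theta) = k^theta / sum of all k."""
    k = {theta: sum(c_e) for theta, c_e in scores.items()}
    total = sum(k.values())
    if total == 0:
        # Assumption: with no successes yet, fall back to a uniform distribution.
        return {theta: 1 / len(k) for theta in k}
    return {theta: k_theta / total for theta, k_theta in k.items()}


# Made-up success scores c_e for two types of one agent.
probs = type_probabilities({"theta1": [2, 1, 0, 0, 1], "theta2": [0, 0, 1, 0, 0]})
print(probs)  # {'theta1': 0.8, 'theta2': 0.2}
```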

During the simulations, OEATE samples estimations from the current estimator sets. In detail, for each agent \(\omega\), we sample a type \(\theta\) based on \(\mathsf {P}(\theta )_\omega\) and then sample an estimator from \(\omega\)’s estimator set of that type (\(\mathbf {E}_\omega ^\theta\)), weighting each estimator by its \(c_e\). Hence, once a type \(\theta\) is selected, the probability of selecting each estimator \(e \in \mathbf {E}_\omega ^{\theta }\) is equal to \(c_e/k_\omega ^\theta\). If \(k_\omega ^{\theta } = 0\), we sample the estimator uniformly from \(\mathbf {E}_{\omega }^{\theta }\); otherwise, we perform the weighted sampling.
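The two-stage sampling (a type from \(\mathsf{P}(\theta)_\omega\), then an estimator with probability \(c_e/k^\theta_\omega\), or uniformly when \(k^\theta_\omega = 0\)) can be sketched with Python's `random.choices`. Representing an estimator as a `(parameters, c_e)` tuple and the name `sample_estimation` are illustrative assumptions.

```python
import random
from typing import Dict, List, Tuple

Estimator = Tuple[List[float], float]  # (parameter vector p_e, success score c_e)


def sample_estimation(type_probs: Dict[str, float],
                      estimators: Dict[str, List[Estimator]],
                      rng: random.Random) -> Tuple[str, List[float]]:
    """Two-stage sampling: theta ~ P(theta), then e ~ c_e / k^theta."""
    types = list(type_probs)
    theta = rng.choices(types, weights=[type_probs[t] for t in types], k=1)[0]
    params = [p for p, _ in estimators[theta]]
    scores = [c for _, c in estimators[theta]]
    if sum(scores) == 0:  # k^theta = 0: sample the estimator uniformly
        return theta, rng.choice(params)
    return theta, rng.choices(params, weights=scores, k=1)[0]


estimators = {
    "theta1": [([0.2, 0.5], 0), ([0.4, 0.6], 3), ([0.8, 0.7], 0)],
    "theta2": [([0.1, 0.9], 0), ([0.5, 0.5], 0)],
}
print(sample_estimation({"theta1": 0.75, "theta2": 0.25}, estimators, random.Random(0)))
```

Estimators with \(c_e = 0\) are never drawn unless the whole set has \(k^\theta_\omega = 0\), which matches the uniform fallback described above.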

Using this strategy, OEATE can improve the reasoning horizon and diversify the simulations. Unlike AGA and ABU, which present only a single estimation per iteration, we provide a set of the current best estimators for planning and decision-making.

Estimation Example We now perform the Estimation step in our example to obtain a probability distribution over types, and one parameter vector per type, for \(\omega _1\). To find the probability of \(\omega _1\) being of type \(\theta _1\) or \(\theta _2\), we apply Equation 6.2. Considering the \(c_e\) of all estimators, we have that:

Hence, to calculate the probability of each type, we use Equation 6.2. Accordingly, the probabilities are:

which means that \(\theta _1\) is the more likely type.

For the sampling process, we sample a type using the previously calculated distribution. Suppose that we sample \(\theta _1\). From this type, we then sample an estimator, using the ratio \(c_e/k^{\theta _1}_{\omega _1}\) as the probability of each estimator in \(\mathbf {E}_{\omega _1}^{\theta _1}\). Concretely, we get:

while the other estimators have probability 0. We use these probabilities to sample an estimator, say (0.4, 0.6). Therefore, type \(\theta _1\) and the parameters (0.4, 0.6) are our estimated type and parameters for the current estimation step.

During the planning phase, at the root of the MCTS (from the perspective of the learning agent \(\phi\)), OEATE samples the simulated type and parameters according to the probabilities calculated above. Moreover, to calculate the estimation error of our method, we use the mean squared error (MSE) between the true parameter and the expected parameter of the true type (\(\theta ^*\)). The expected parameter of a type \(\theta\) for agent \(\omega\) is calculated as:

\[\mathbb {E}[\mathbf {p}]^\theta _\omega = \frac{1}{k^\theta _\omega } \sum _{e \in \mathbf {E}^\theta _\omega } c_e \, \mathbf {p}_e\]
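The error computation can be sketched as follows, assuming the expected parameter is taken under the same \(c_e/k^\theta_\omega\) weights used for sampling, with a plain mean when \(k^\theta_\omega = 0\) (both assumptions on our part). The function names `expected_parameter` and `mse` are hypothetical, and the estimator values are made up.

```python
from typing import List, Sequence, Tuple


def expected_parameter(estimators: Sequence[Tuple[Sequence[float], float]]) -> List[float]:
    """Assumed form: E[p] = sum_e (c_e / k) * p_e over the estimators of one type.

    Falls back to a plain mean of the parameter vectors when k = 0.
    """
    k = sum(c for _, c in estimators)
    if k == 0:
        weights = [1 / len(estimators)] * len(estimators)
    else:
        weights = [c / k for _, c in estimators]
    n = len(estimators[0][0])
    return [sum(w * p[i] for (p, _), w in zip(estimators, weights)) for i in range(n)]


def mse(true_p: Sequence[float], est_p: Sequence[float]) -> float:
    """Mean squared error between the true and the expected parameter vectors."""
    return sum((a - b) ** 2 for a, b in zip(true_p, est_p)) / len(true_p)


e_p = expected_parameter([([0.4, 0.6], 3), ([0.2, 0.5], 1)])
print(e_p, mse([0.4, 0.6], e_p))
```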

Update As mentioned earlier, two issues may arise in our estimation process. They occur:

- (i) when a certain task \(\tau\) is accomplished by any of the team members (including agent \(\phi\)) while some other non-learning agent was targeting it; or
- (ii) when a certain non-learning agent is not able to choose a task to target (e.g., it cannot see or find any available, or valid, task within its vision area, given possible parameter limitations such as vision radius and angle).

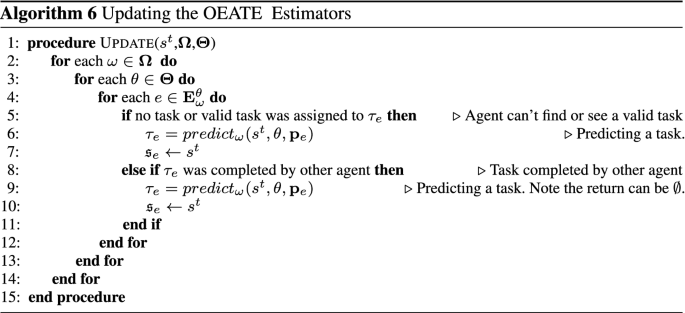

If some non-learning agent \(\omega\) faces one of these problems, it will keep trying to find a task to pursue. Hence, from the perspective of the learning agent \(\phi\), OEATE must handle this by updating its teammates’ predicted targets. Otherwise, it might incorrectly evaluate the available estimators based on outdated predictions.

Therefore, OEATE’s Update process exists to guarantee the integrity of the estimator set for future evaluations. At each iteration, the update step analyses the consistency of each estimator e and its chosen target \(\tau _e\) given the current world state. If it finds an inconsistency, it simulates the estimator’s task selection for the next states from \(\omega\)’s perspective. This is repeated in each successive state until a new valid target is returned for the indecisive estimator. Algorithm 6 presents this update routine.
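A rough Python sketch of this update idea follows. It is not a transcription of Algorithm 6: the `Estimator` dataclass, the callbacks `is_valid` and `choose_target`, and the choice to record the state where a new target is found as the Choose Target State are all hypothetical stand-ins for domain-specific logic.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Sequence


@dataclass
class Estimator:
    params: Sequence[float]
    target: Optional[int]        # predicted task id, None if no task chosen
    choose_state: object = None  # Choose Target State s_e (assumed to be updated)


def update_estimator(est: Estimator, current_state: object,
                     next_states: Iterable[object],
                     is_valid: Callable[[int, object], bool],
                     choose_target: Callable[[Sequence[float], object], Optional[int]]) -> Estimator:
    """Re-run task selection until the estimator has a valid target again."""
    if est.target is not None and is_valid(est.target, current_state):
        return est  # prediction still consistent: nothing to do
    for state in [current_state, *next_states]:
        target = choose_target(est.params, state)  # simulate omega's task choice
        if target is not None and is_valid(target, state):
            est.target, est.choose_state = target, state
            return est
    est.target = None  # still indecisive within the simulated states
    return est


completed = {3}  # task 3 was already finished by some team member


def is_valid(task: int, state: object) -> bool:
    return task not in completed


def choose(params: Sequence[float], state: object) -> Optional[int]:
    return None if state == 0 else 7  # nothing visible at state 0


est = update_estimator(Estimator([0.4, 0.6], target=3), 0, [1, 2], is_valid, choose)
print(est.target, est.choose_state)  # 7 1
```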

Update Example In the update step, we look at our estimators from Table 4 and check whether the update conditions (from Algorithm 6) are met. In our case, every estimator has a valid task assigned to it; therefore, nothing happens in the update step.

6.3 Analysis

We show that, as the number of tasks goes to infinity, under full observability, OEATE perfectly identifies the type and parameters of all agents \(\omega\), given some assumptions. Since each of our updates is tied to task completion, this analysis assumes that the agents are able to finish the tasks. First, we consider that parameters have a finite number of decimal places. This is a mild assumption, as any real number x can be closely approximated by a number \(x'\) with finite precision, without much impact on a real application (e.g., any computer has finite precision). Hence, as each element \(p_i\) in the parameter vector lies in a fixed range, there is a finite number of possible values for it. To simplify the exposition, we consider \(\psi\) possible values per element (in general, each element can have a different number of possible values). Let n be the dimension of the parameter space.

Additionally, let \(\mathbf {p^*}\) be the correct parameter and \(\theta ^*\) the correct type of a certain agent \(\omega\). We use \(\theta ^{-} \not = \theta ^*\) and \(\mathbf {p}^{-} \not = \mathbf {p^*}\) to denote wrong types and parameters, respectively. We also use tuples \((\mathbf {p}, \theta )\) to represent a pair of parameter and type.

Assumption 1 Any \((\mathbf {p}, \theta ^{-})\) and any \((\mathbf {p}^{-}, \theta ^*)\) has a lower probability of making a correct task prediction than \((\mathbf {p^*}, \theta ^*)\). Moreover, we assume that the correct parameter-type pair \((\mathbf {p}^*,\theta ^*)\) is also able to obtain the correct Choose Target State (\(\mathfrak {s}_e\)).

This is a mild assumption: if a certain pair \((\mathbf {p}, \theta ^{-})\) or \((\mathbf {p}^{-}, \theta ^*)\) had a higher probability of making correct task predictions, then it should indeed be the one used for planning, and could be considered the correct parameter-type pair.

Assumption 2 Any \((\mathbf {p}, \theta ^{-})\) and any \((\mathbf {p}^{-}, \theta ^*)\) will not succeed infinitely often. That is, as \(|\mathbf {T}| \rightarrow \infty\), there may be cases where such a pair successfully predicts the task, but the number of these cases is bounded by a finite constant c.

Assumption 3 This assumption is needed to distinguish our method from a random search. It has two parts: (i) a correct value \(p_i^*\) in any position i may still predict the task wrongly (since other vector positions may be wrong), but it will predict at least one task correctly within at most t trials, where t is a constant; (ii) a wrong value \(p_i^{-}\) in any position i may still predict the task correctly (since other vector positions may be correct), but this happens at most \(\mathfrak {b}\) times for each bag, across all wrong values. We further assume that \(\mathfrak {b} \ll \psi\).

That is, if one of the vector positions i is correct, \(\mathbf {p}\) will not fail indefinitely, even though other elements may be incorrect. This holds in many applications, as in some cases a single element is enough to make a correct prediction. For example, if a task is nearby, it would be predicted as the next one for almost any vision radius, provided the vision angle is correct. On the other hand, wrong values will not always succeed. This is also true in many applications: as argued above, wrong values may make correct predictions, but only in a limited number of cases. Eventually, all nearby tasks will be completed, and a correct vision radius estimate becomes more important for making correct predictions. Usually, \(\psi\) would be large (e.g., when parameters approximate real numbers), so we would have \(\mathfrak {b} \ll \psi\). Additionally, we consider the case without prior knowledge, so parameters and types are initially sampled from the uniform distribution. As before, we denote by \(\mathsf {P}(\theta )\) the estimated probability of a certain agent having type \(\theta\), but we drop the subscript \(\omega\) for clarity.

Theorem 1

OEATE estimates the correct parameter for all agents as \(|\mathbf {T}| \rightarrow \infty\). Hence, \(\mathsf {P}(\theta ^{*}) \rightarrow 1\).

Proof

Since wrong parameter-type pairs will not succeed infinitely often, we will keep generating new estimators with a random \(\mathbf {p}_e\). As we sample from the uniform distribution, \(\mathbf {p}^*\) is sampled with probability \(1/\psi ^n > 0\). Hence, it will eventually be generated as \(|\mathbf {T}| \rightarrow \infty\). Since each uniform generation is a Bernoulli trial with success probability \(1/\psi ^n\), the number of trials until \(\mathbf {p}^*\) is first generated follows a geometric distribution, so we expect \(\psi ^n\) trials.
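The geometric-distribution argument can be checked numerically with a small Monte Carlo sketch: draw parameter vectors uniformly from a discretised space with \(\psi\) values per element and count the draws until a fixed target (standing in for \(\mathbf{p}^*\)) appears. The function names and the tiny sizes \(\psi = 4\), \(n = 2\) are illustrative choices only.

```python
import random
from typing import Tuple


def trials_until_correct(psi: int, n: int, target: Tuple[int, ...],
                         rng: random.Random) -> int:
    """Count uniform draws over {0, ..., psi-1}^n until the target vector appears."""
    trials = 0
    while True:
        trials += 1
        if tuple(rng.randrange(psi) for _ in range(n)) == target:
            return trials


def mean_trials(psi: int, n: int, runs: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the expected number of trials (theory: psi**n)."""
    rng = random.Random(seed)
    target = tuple(rng.randrange(psi) for _ in range(n))  # stands in for p*
    return sum(trials_until_correct(psi, n, target, rng) for _ in range(runs)) / runs


print(mean_trials(psi=4, n=2, runs=5000), 4 ** 2)
```

Even at these toy sizes the empirical mean sits close to \(\psi^n = 16\); the point of Proposition 1 is that this exponential dependence on n is what the bags avoid.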

Therefore, eventually, there will be an estimator with the correct parameter vector \(\mathbf {p^*}\). Furthermore, since \((\mathbf {p^*}, \theta ^*)\) has the highest probability of making correct predictions (Assumption 1), it has the lowest probability of reaching the failure threshold \(\xi\). Hence, as \(|\mathbf {T}| \rightarrow \infty\), there will be more estimators \((\mathbf {p^*}, \theta ^*)\) than of any other pair. Further, any \((\mathbf {p^{-}}, \theta ^*)\) will eventually reach the failure threshold and be discarded, since it succeeds at most c times (Assumption 2). Therefore, given our method of sampling an estimator from the estimator sets, we will correctly estimate \(\mathbf {p^*}\) when assuming type \(\theta ^*\). Hence, as \(|\mathbf {T}| \rightarrow \infty\), the estimator sampled from \(\mathbf {E}^{\theta ^*}_\omega\) will be \(\mathbf {p^*}\).

Further, by Assumption 2, the probability of the correct type \(\mathsf {P}(\theta ^*) \rightarrow 1\). That is, \(c_e \rightarrow \infty\) for the estimators in \(\mathbf {E}^{\theta ^*}_\omega\). Hence, \(k^{\theta ^*}_\omega \rightarrow \infty\), while \(c_e \le c\) for every estimator of a wrong type \(\theta ^{-}\) (by assumption), so each \(k^{\theta ^{-}}_\omega\) remains bounded. Therefore:

\[\mathsf {P}(\theta ^*) = \frac{k^{\theta ^*}_\omega }{\sum _{\theta } k^{\theta }_\omega } \rightarrow 1,\]

while \(\mathsf {P}(\theta ^{-}) \rightarrow 0\), as \(|\mathbf {T}| \rightarrow \infty\). \(\square\)

This ensures that, as \(|\mathbf {T}| \rightarrow \infty\), the sampled type is \(\theta ^*\).

We saw in Theorem 1 that a random search via the mutation proportion takes \(\psi ^n\) trials in expectation. OEATE, however, finds \(\mathbf {p}^*\) much faster than that, since a proportion of estimators are sampled from the corresponding bags \(\mathbf {B}_\omega ^{\theta , i}\). In the following proposition, we show that OEATE will indeed find \(\mathbf {p}^*\); under Assumption 1, \(\mathbf {p}^*\) then has the highest probability of not being removed from the estimator set and will keep adding its own parameters back to the bag, further increasing the probability of sampling those parameters at each mutation.

Proposition 1

OEATE finds \(\mathbf {p}^*\) in \(O(n \times \psi \times (\mathfrak {b}+1)^n)\).

Proof

Consider Assumption 3 and any position i. Sampling the correct value \(p_i^*\) for element \(p_i\) from the uniform distribution takes \(\psi\) trials in expectation. Once a correct value is sampled, it is added to \(\mathbf {B}_{\omega }^{\theta ^*}\) if it makes at least one correct task prediction. It may still make incorrect predictions because of wrong values in other elements, and it would be removed from the estimator set if it reaches the failure threshold \(\xi\). However, within a constant number of trials \(t \times \psi\), it would be added to \(\mathbf {B}_{\omega }^{\theta ^*}\). Similarly, sampling the correct value for all n dimensions at least once takes \(n \times \psi\) trials in expectation, and within at most \(t \times n \times \psi\) trials, \(\mathbf {B}_{\omega }^{\theta ^*}\) would contain, for each position i, at least one estimator with the correct value in that position. The bags store repeated values, but in the worst case there is only one correct example per position in \(\mathbf {B}_{\omega }^{\theta ^{*}}\), alongside at most \(\mathfrak {b}\) wrong ones, leading to a probability of at least \(1/(\mathfrak {b}+1)\) of sampling the correct value from the bag. Hence, given the bag sampling operation, we would find \(\mathbf {p}^*\) within at most \(t \times n \times \psi \times (\mathfrak {b}+1)^n\) trials in expectation.

Hence, the complexity is close to \(O(\psi )\), instead of \(O(\psi ^n)\) as in random search (since \(\mathfrak {b} \ll \psi\)).
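To make the contrast concrete, the two trial counts can be evaluated side by side. This sketch simply codes the expressions from Theorem 1 and Proposition 1; setting \(t = 1\) is an arbitrary illustrative choice, as are the values of \(\psi\), n and \(\mathfrak{b}\).

```python
def random_search_trials(psi: int, n: int) -> int:
    """Expected trials for pure uniform search (Theorem 1): psi^n."""
    return psi ** n


def oeate_trial_bound(psi: int, n: int, b: int, t: int = 1) -> int:
    """Bound from Proposition 1: t * n * psi * (b + 1)^n."""
    return t * n * psi * (b + 1) ** n


for psi in (100, 1_000, 10_000):
    print(psi, random_search_trials(psi, n=3), oeate_trial_bound(psi, n=3, b=10))
```

For n = 3 and \(\mathfrak{b} = 10\), the bound grows from 399,300 to 39,930,000 as \(\psi\) goes from 100 to 10,000 (linearly in \(\psi\)), while \(\psi^n\) grows from \(10^6\) to \(10^{12}\).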

Considerations In Assumption 1, the Choose Target State \((\mathfrak {s}_e)\) of an estimator depends only on the previously predicted tasks and the main agent’s observations. Therefore, in a fully observable case, the true parameters have the highest probability of having the correct Choose Target State. We leave the proof for partially observable cases as future work.

Time Complexity It is worth noting that the actual time taken by the algorithm depends on \((\mathfrak {b}+1)^n\). For example, if \(\mathfrak {b}=10 \ll \psi =100\) and \(n=3\), then \((\mathfrak {b}+1)^n = 1331 \gg \psi = 100\). However, when we write the time complexity, we focus on how the algorithm scales with a larger search space (i.e., higher \(\psi\)). Further, since \(\psi\) is determined by the precision of the parameters, it is likely to be large. For instance, if each element \(p_i\) of a 3-dimensional parameter vector \(\mathbf {p}\) lies in [0,1] and we want an accuracy of only three decimal places, then \(\psi \approx 10^3\).
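The size of \(\psi\) for a given precision, and the constant factor \((\mathfrak{b}+1)^n\), can be computed directly. Counting both endpoints of the range is our choice here, which is why three decimal places on [0,1] give 1001 values rather than exactly \(10^3\); `values_per_element` is a hypothetical helper name.

```python
def values_per_element(lo: float, hi: float, decimals: int) -> int:
    """Number of grid points in [lo, hi] at the given decimal precision (psi)."""
    return round((hi - lo) * 10 ** decimals) + 1


psi = values_per_element(0.0, 1.0, decimals=3)
b, n = 10, 3
print(psi, (b + 1) ** n)  # 1001 1331
```

With one more decimal place, \(\psi = 10001\) already exceeds \((\mathfrak{b}+1)^n = 1331\), which illustrates why the analysis focuses on the dependence on \(\psi\).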

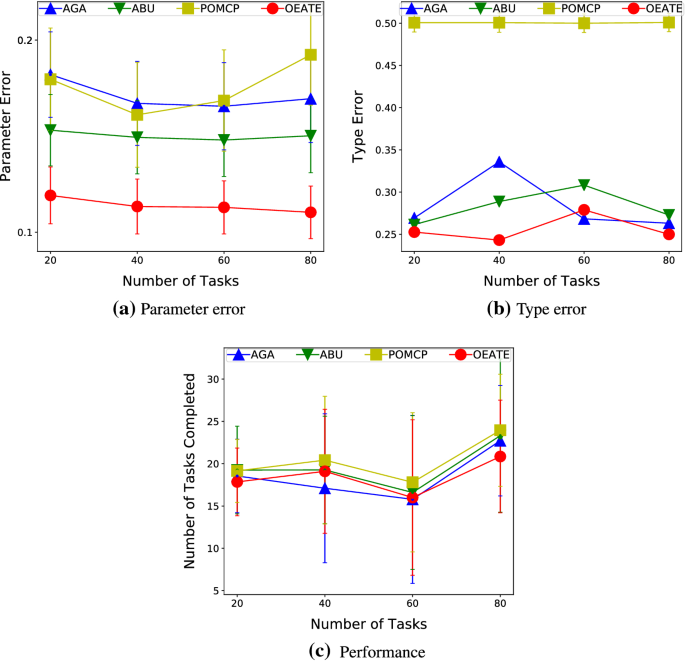

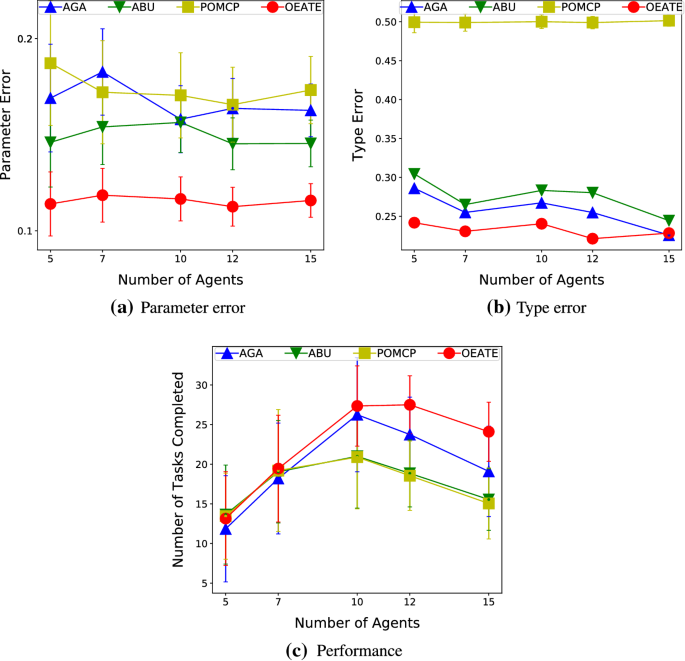

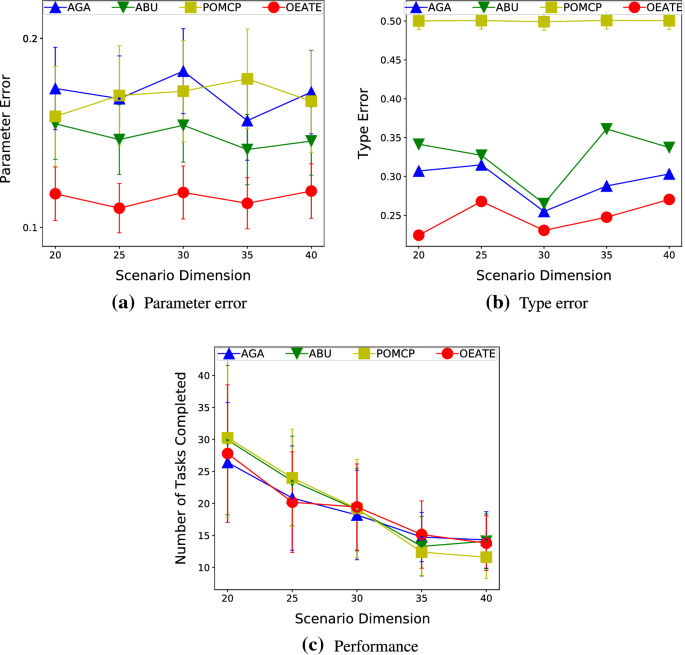

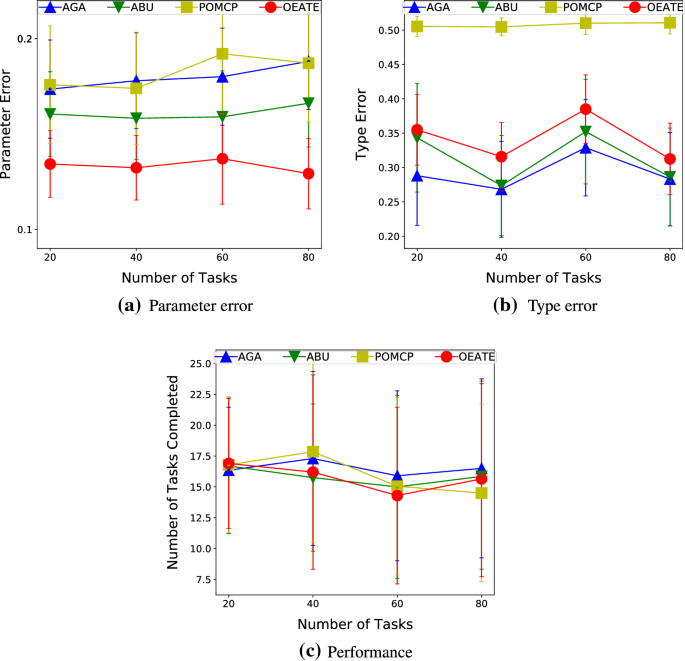

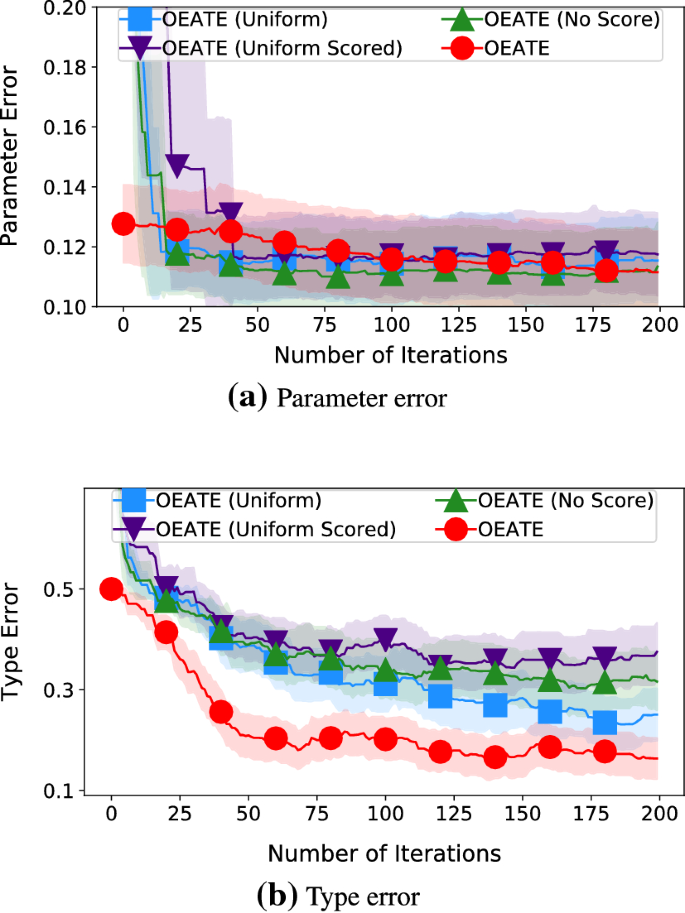

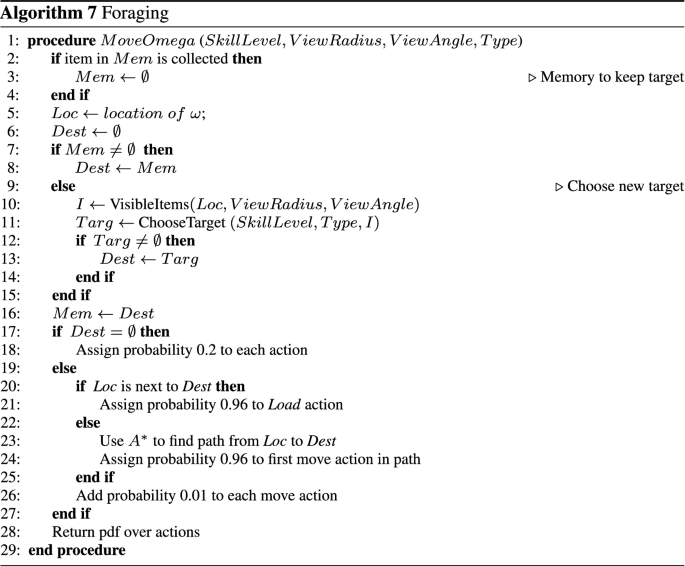

6.4 OEATE with partial observability