Abstract

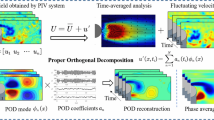

The proper orthogonal decomposition (POD) is a powerful classical tool in fluid mechanics used, for instance, for model reduction and extraction of coherent flow features. However, its applicability to high-resolution data, as produced by three-dimensional direct numerical simulations, is limited owing to its computational complexity. Here, we propose a wavelet-based adaptive version of the POD (the wPOD) in order to overcome this limitation. The amount of data to be analyzed is reduced by compressing them using biorthogonal wavelets, yielding a sparse representation while conveniently providing control of the compression error. Numerical analysis shows how the distinct error contributions of wavelet compression and POD truncation can be balanced under certain assumptions, allowing us to efficiently process high-resolution data from three-dimensional simulations of flow problems. Using a synthetic academic test case, we compare our algorithm with the randomized singular value decomposition. Furthermore, we demonstrate the ability of our method to analyze data of a two-dimensional wake flow and a three-dimensional flow generated by a flapping insect computed with direct numerical simulation.

References

Ali, M., Steih, K., Urban, K.: Reduced basis methods with adaptive snapshot computations. Adv. Comput. Math. 43(2), 257–294 (2017)

Ali, M., Urban, K.: Reduced Basis Exact Error Estimates with Wavelets. In: Numerical Mathematics and Advanced Applications ENUMATH 2015, pp. 359–367. Springer (2016)

Alla, A., Kutz, J.N.: Randomized model order reduction. Adv. Comput. Math. 45(3), 1251–1271 (2019)

Alnæs, M., Blechta, J., Hake, J., Johansson, A., Kehlet, B., Logg, A., Richardson, C., Ring, J., Rognes, M.E., Wells, G.N.: The fenics project. https://fenicsproject.org/, Visited, 12, May (2020)

Alnæs, M., Blechta, J., Hake, J., Johansson, A., Kehlet, B., Logg, A., Richardson, C., Ring, J., Rognes, M.E., Wells, G.N.: The fenics project version 1.5 Archive of Numerical Software 3(100) (2015)

Anderson, E., Bai, Z., Dongarra, J., Greenbaum, A., McKenney, A., Du Croz, J., Hammarling, S., Demmel, J., Bischof, C., Sorensen, D.: LAPACK: A portable linear algebra library for high-performance computers. In: Proceedings of the 1990 ACM/IEEE Conference on Supercomputing, Supercomputing ’90, pp 2–11. IEEE Computer Society Press, Los Alamitos, CA, USA (1990)

Benner, P., Cohen, A., Ohlberger, M., Willcox, K.: Model reduction and approximation: theory and algorithms, vol. 15 SIAM (2017)

Benner, P., Ohlberger, M., Patera, A., Rozza, G., Urban, K.: Model reduction of parametrized systems Springer (2017)

Castrillon-Candas, J.E., Amaratunga, K.: Fast estimation of continuous karhunen-loeve eigenfunctions using wavelets. IEEE Trans. Signal Process. 50(1), 78–86 (2002)

Cohen, A., Daubechies, I., Feauveau, J.C.: Biorthogonal bases of compactly supported wavelets. Comm. Pure and Appl. Math. 45, 485–560 (1992)

Deslauriers, G., Dubuc, S.: Interpolation dyadique École polytechnique de Montréal (1987)

Deslauriers, G., Dubuc, S.: Symmetric Iterative Interpolation Processes. In: DeVore, R.A., Saff, E.B. (eds.) Constructive Approximation: Special Issue: Fractal Approximation, pp 49–68. Springer US, Boston, MA (1989)

DeVore, R.A.: Nonlinear approximation. Acta Numerica 7, 51–150 (1998)

Domingues, M., Gomes, S., Díaz, L.: Adaptive wavelet representation and differentiation on block-structured grids. Appl. Numer. Math. 47 (3), 421–437 (2003)

Donoho, D.L.: Interpolating wavelet transforms. Department of Statistics, Stanford University (1992)

Eckart, C., Young, G.: The approximation of one matrix by another of lower rank. Psychometrika 1(3), 211–218 (1936)

Engels, T., Krah, P.: Python tools for the wavelet adaptive block-based solver for interactions in turbulence. https://github.com/adaptive-cfd/python-tools, Visited 9 (2020)

Engels, T., Krah, P.: Scripts used for this publication. https://github.com/adaptive-cfd/WABBIT-convergence-test.git. Visited 9 (2020)

Engels, T., Schneider, K., Reiss, J., Farge, M.: A wavelet-adaptive method for multiscale simulation of turbulent flows in flying insects. Commun. Comput. Phys. 30(4), 1118–1149 (2021)

Fang, F., Pain, C., Navon, I., Piggott, M., Gorman, G., Allison, P., Goddard, A.: Reduced-order modeling of an adaptive mesh ocean model. International journal for numerical methods in fluids 59(8), 827–851 (2009)

Farge, M., Pellegrino, G., Schneider, K.: Coherent vortex extraction in 3D turbulent flows using orthogonal wavelets. Phys. Rev. Lett. 87(054), 501 (2001)

Farge, M., Schneider, K., Kevlahan, N.: Non-Gaussianity and coherent vortex simulation for two-dimensional turbulence using an adaptive orthogonal wavelet basis. Phys. Fluids 11(8), 2187–2201 (1999)

Futatani, S., Bos, W., del Castillo-Negrete, D., Schneider, K., Benkadda, S., Farge, M.: Coherent vorticity extraction in resistive drift-wave turbulence: Comparison of orthogonal wavelets versus proper orthogonal decomposition. Comptes Rendus Physique 12(2), 123–131 (2011)

Gräßle, C., Hinze, M.: POD Reduced-order modeling for evolution equations utilizing arbitrary finite element discretizations. Adv. Comput. Math. 44(6), 1941–1978 (2018)

Gräßle, C., Hinze, M., Lang, J., Ullmann, S.: POD Model order reduction with space-adapted snapshots for incompressible flows. Adv. Comput. Math. 45(5-6), 2401–2428 (2019)

Greif, C., Urban, K.: Decay of the Kolmogorov n-width for wave problems. Appl. Math. Lett. 96, 216–222 (2019)

Halko, N., Martinsson, P.G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011)

Harten, A.: Discrete multi-resolution analysis and generalized wavelets. Applied Numerical Mathematics 12(1), 153–192 (1993)

Harten, A.: Multiresolution representation of data: a general framework. SIAM J. Numer. Anal. 33(3), 1205–1256 (1996)

Harten, A.: Multiresolution Representation and Numerical Algorithms: a Brief Review, pp. 289–322. Springer, Netherlands, Dordrecht (1997)

Holmes, P., Lumley, J.L., Berkooz, G., Rowley, C.W.: Turbulence, coherent structures, dynamical systems and symmetry. Cambridge University Press (2012)

Karatzas, E.N., Ballarin, F., Rozza, G.: Projection-based reduced order models for a cut finite element method in parametrized domains. Computers & Mathematics with Applications 79(3), 833–851 (2020)

Läuchli, P.: Jordan-Elimination und Ausgleichung nach kleinsten Quadraten. Numer. Math. 3(1), 226–240 (1961)

Mahoney, M.W.: Randomized algorithms for matrices and data. Found. Trends Mach. Learn. 3(2), 123–224 (2011)

Mallat, S.: A Wavelet Tour of Signal Processing, 3rd edn. Academic Press, Boston (2009)

Mallat, S.G.: Multiresolution approximations and wavelet orthonormal bases of \({L}^{2}(\mathbb {R})\). Transactions of the American Mathematical Society 315(1), 69–87 (1989)

Massing, A., Larson, M.G., Logg, A.: Efficient implementation of finite element methods on nonmatching and overlapping meshes in three dimensions. SIAM J. Sci. Comput. 35(1), C23–C47 (2013)

Mendez, M., Balabane, M., Buchlin, J.M.: Multi-scale proper orthogonal decomposition of complex fluid flows. arXiv:1804.09646 (2018)

Nguyen van yen, R.: Wavelet-based study of dissipation in plasma and fluid flows. Ph.D. thesis Université Paris-Sud XI (2010)

Ohlberger, M., Rave, S.: Reduced basis methods: Success, limitations and future challenges. Proceedings of the Conference Algoritmy, pp 1–12 (2016)

Reiss, J., Schulze, P., Sesterhenn, J., Mehrmann, V.: The shifted proper orthogonal decomposition: a mode decomposition for multiple transport phenomena. SIAM J. Sci. Comput. 40(3), A1322–A1344 (2018)

Roussel, O., Schneider, K.: Coherent vortex simulation of weakly compressible turbulent mixing layers using adaptive multiresolution methods. J. Comput. Phys. 229(6), 2267–2286 (2010)

Sakurai, T., Yoshimatsu, K., Schneider, K., Farge, M., Morishita, K., Ishihara, T.: Coherent structure extraction in turbulent channel flow using boundary adapted wavelets. J. Turbul. 18(4), 352–372 (2017)

Schmid, P.J.: Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 656, 5–28 (2010)

Schneider, K., Vasilyev, O.V.: Wavelet methods in computational fluid dynamics. Annu. Rev. Fluid Mech. 42, 473–503 (2010)

Sieber, M., Paschereit, C.O., Oberleithner, K.: Spectral proper orthogonal decomposition. J. Fluid Mech. 792, 798–828 (2016)

Sirovich, L.: Turbulence and the dynamics of coherent structures. part i-iii. Q. Appl. Math. 45(3), 561–571 (1987)

Sroka, M., Engels, T., Krah, P., Mutzel, S., Schneider, K., Reiss, J.: An Open and Parallel Multiresolution Framework Using Block-Based Adaptive Grids. In: Active Flow and Combustion Control 2018, pp. 305–319. Springer (2019)

Sroka, M., Engels, T., Mutzel, S., Krah, P., Reiss, J.: Wavelet adaptive block-based solver for interactions in turbulence. https://github.com/adaptive-cfd/WABBIT. Visited 9 (2020)

Sweldens, W., Schröder, P.: Building your own wavelets at home Wavelets in Computer Graphics (1997)

Ullmann, S., Rotkvic, M., Lang, J.: POD-Galerkin reduced-order modeling with adaptive finite element snapshots. J. Comput. Phys. 325, 244–258 (2016)

Unser, M.: Approximation power of biorthogonal wavelet expansions. IEEE Trans. Signal Process. 44(3), 519–527 (1996)

Uytterhoeven, G., Roose, D.: Experiments with a wavelet-based approximate proper orthogonal decomposition. Katholieke Universiteit Leuven Departement Computerwetenschappen (1997)

Volkwein, S.: Optimal control of a phase-field model using proper orthogonal decomposition. ZAMM-Journal of Applied Mathematics and Mechanics/Zeitschrift für Angewandte Mathematik und Mechanik: 81(2), 83–97 (2001)

Volkwein, S.: Model reduction using proper orthogonal decomposition. Lecture Notes, Institute of Mathematics and Scientific Computing. University of Graz. see http://www.uni-graz.at/imawww/volkwein/POD.pdf, p 1025 (2011)

Yu, D., Chakravorty, S.: A Randomized Proper Orthogonal Decomposition Technique. In: 2015 American Control Conference (ACC), pp. 1137–1142 (2015)

Zumbusch, G.: Parallel multilevel methods: adaptive mesh refinement and loadbalancing Springer Science & Business Media (2012)

Acknowledgements

The authors gratefully acknowledge the support of the Deutsche Forschungsgemeinschaft (DFG) as part of GRK2433 DAEDALUS. The authors were granted access to the HPC resources of IDRIS under the Allocation No. 2018-91664 attributed by GENCI (Grand Équipement National de Calcul Intensif). Centre de Calcul Intensif d’Aix-Marseille Université is acknowledged for granting access to its high performance computing resources.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Appendices

Appendix A. Block-based wavelet adaptation

A.1 Refinement and coarsening

For the wavelet adaptation scheme, here illustrated for the two-dimensional case, we assume real-valued and continuous L2-functions u(x,y), such as the pressure or a velocity component of a flow field. The function is sampled on a block-based multiresolution grid as shown in Fig. 1, with maximum tree level \({J}_{\max \limits }\), block size B1 × B2 and a ghost node layer of size g, needed for synchronization. The sampled values on a block \({{{\mathscr{B}}}_{p}^{j}}\) are denoted by:

For a block refinement j → j + 1 the lattice spacings are halved and dyadic points are added to the block, as shown in Fig. 17.

Dyadic grid refinement and coarsening of a single block. Refinement: First the block \({{{\mathscr{B}}}_{p}^{j}}\) is refined by midpoint insertion and then split into four new blocks \(({{\mathscr{B}}}_{p0}^{j},{{\mathscr{B}}}_{p1}^{j},{{\mathscr{B}}}_{p2}^{j},{{\mathscr{B}}}_{p3}^{j})\). Coarsening: After a low pass filter is applied all midpoints are removed and merged into one block

The values at the refined blocks can be obtained by the refinement relation:

where hk denotes the weights of the one-dimensional interpolation scheme:

In the following, we will refer to Eq. (30) as the prediction operation \(P_{j}^{j+1}:\underline {\mathsf {u}}^{j}\mapsto \widehat {\underline {\mathsf {u}}}^{j+1}\) as it was introduced for point value multiresolution in [28]. Using Eq. (5) one can show that Eq. (4) is equivalent to the continuous refinement relation:

is the two-dimensional tensor product of one-dimensional interpolating scaling functions φ(x).

When a block is coarsened, the tree level is decimated by one: j + 1 → j and every second grid point is removed. The values at the coarser level are obtained by the coarsening relation:

In the notation of Harten [28] coarsening is called decimation and Eq. (34) is denoted by \(D_{j}^{j-1}\colon \underline {\mathsf {u}}^{j}\to \underline {\mathsf {u}}^{j-1}\) in the following. After decimation the block will be merged with its neighboring blocks, as shown in Fig. 17. Similar to the continuous refinement relation Eq. (32) there is a continuous counterpart for coarsening: the dual scaling function \(\tilde {\varphi }\), which satisfies

Instead of using Deslauriers-Dubuc (DD) wavelets, as in the framework of Harten [28], we use lifted Deslauriers-Dubuc wavelets, i.e., biorthogonal Cohen-Daubechies-Feauveau wavelets of fourth order (CDF 4,4) with filter coefficients hk and dual filter coefficients \(\tilde {h}_{k}\) listed in Table 1. The lifted DD wavelets allow a better scale separation and can be easily implemented by replacing the loose downsampling filter with a low pass filter before coarsening the grid.
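The prediction and decimation operations described above can be illustrated with a minimal one-dimensional sketch. It is not the WABBIT implementation: it assumes a periodic grid instead of blocks with ghost nodes, uses the standard four-point Deslauriers-Dubuc interpolation weights (−1/16, 9/16, 9/16, −1/16), and coarsens by loose downsampling, i.e., without the low pass filter of the lifted CDF 4,4 wavelets. The function names `refine_periodic` and `coarsen_loose` are hypothetical.

```python
import numpy as np

# Four-point Deslauriers-Dubuc weights; they reproduce cubic polynomials.
DD4 = np.array([-1.0, 9.0, 9.0, -1.0]) / 16.0

def refine_periodic(u):
    """Prediction j -> j+1 on a periodic 1D grid by midpoint insertion."""
    fine = np.empty(2 * u.size)
    fine[0::2] = u  # existing points are kept (interpolating scheme)
    # Midpoint between u[k] and u[k+1] from the stencil u[k-1], ..., u[k+2].
    fine[1::2] = (DD4[0] * np.roll(u, 1) + DD4[1] * u
                  + DD4[2] * np.roll(u, -1) + DD4[3] * np.roll(u, -2))
    return fine

def coarsen_loose(u_fine):
    """Decimation j+1 -> j by loose downsampling (no low pass filter)."""
    return u_fine[0::2].copy()

# Sample a smooth periodic function, refine it once and measure the error.
x = np.linspace(0.0, 1.0, 32, endpoint=False)
u = np.sin(2.0 * np.pi * x)
u_fine = refine_periodic(u)
x_fine = np.linspace(0.0, 1.0, 64, endpoint=False)
err = np.max(np.abs(u_fine - np.sin(2.0 * np.pi * x_fine)))
```

In this simplified setting, decimation after prediction returns the original samples exactly, and the prediction error for the smooth test function is small because the stencil is fourth-order accurate.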

A.2 Computing wavelet coefficients and adaptation criterion

With the definitions in Eqs. (30) and (34) we have introduced a biorthogonal multiresolution basis \(\{\tilde {\varphi }^{j}_{p,k_{1},k_{2}},\varphi ^{j}_{p,k_{1},k_{2}}\}\), which can approximate any continuous function \(u\in L^{2}(\mathbb {D})\) arbitrarily closely. This aspect is the main property of a multiresolution analysis and can be used to relate and compare samples at different resolutions, i.e., different scales.

The difference between two consecutive approximations can be represented by a wavelet with its corresponding coefficients

known as wavelet details.

As shown in one space dimension by Unser in [52] for biorthogonal wavelets the difference dj between two consecutive approximations is bounded for sufficiently smooth L2 functions u. The bound depends on the local regularity of u and the order N (here N = 4) of the scaling function:

where \({{\varDelta }} x^{j}\sim 2^{-j}\) is the step size and \(C_{\varphi ,\tilde {\varphi }}\) is a constant independent of u. This result can be understood as an interpolation error, since the CDF4,4 filter can be viewed as an interpolation of the averaged data \(D_{j}^{j-1}\underline {\mathsf {u}}^{j}\). From Eq. (37) we can thus conclude: since the approximation error of the interpolation scheme depends on the smoothness of the sampled function and the lattice spacing on the block, we can increase the local lattice spacing for blocks where the function is smooth and keep the fine scales only for blocks where u is not smooth. This is achieved by coarsening the block, as decreasing the tree level j increases the lattice spacing. For an intended approximation error we therefore define the wavelet threshold 𝜖 together with the coarsening indicator \(i_{\epsilon }({{{\mathscr{B}}}_{p}^{j}})\):

which marks the block for coarsening. For vector-valued quantities \(\mathbf{u} =(u_{1},u_{2},\dots ,u_{K})\), a block will be coarsened only if all components indicate coarsening. The pseudocode in Algorithm 1 outlines the wavelet adaptation algorithm for vector-valued quantities. For the sake of completeness we will put this algorithm in relation to the underlying wavelet representation.
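The coarsening decision for vector-valued quantities can be sketched as follows. This is an illustration under simplifying assumptions and not Algorithm 1 itself: blocks are one-dimensional, details are taken as the difference between the odd samples and their four-point Deslauriers-Dubuc prediction from the even samples (no lifting step), only interior details are evaluated so that no ghost nodes are needed, and no normalization of the details is applied. The names `details_1d` and `coarsen_block` are hypothetical.

```python
import numpy as np

def details_1d(u):
    """Interior details: odd samples minus their DD4 prediction from even samples."""
    e = u[0::2]
    odd = u[1::2]
    # pred[i] predicts the midpoint between e[i+1] and e[i+2], which is odd[i+1].
    pred = (-e[:-3] + 9.0 * e[1:-2] + 9.0 * e[2:-1] - e[3:]) / 16.0
    return odd[1:1 + pred.size] - pred

def coarsen_block(components, eps):
    """Mark a block for coarsening only if all components have details below eps."""
    return all(np.max(np.abs(details_1d(c))) < eps for c in components)

x = np.linspace(0.0, 1.0, 17)
smooth = x ** 3                  # cubic: predicted exactly, details vanish
step = (x > 0.5).astype(float)   # discontinuity: large detail near the jump

flag_smooth = coarsen_block([smooth, 2.0 * smooth], eps=1e-10)
flag_mixed = coarsen_block([smooth, step], eps=1e-3)
```

The second call shows the "all components" rule: a single non-smooth component is enough to keep the block at its current level.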

A.3 Wavelet representation in the continuous setting

For completeness, we give a concise description of the underlying wavelet representation. We refer the interested reader to Ref. [50] for a succinct introduction to biorthogonal wavelets and to Refs. [11, 12, 15, 35] for a more detailed description.

Assuming that we have sampled a continuous function \(u\in L^{2}(\mathbb {D})\) inside a domain \(\mathbb {D}\subset \mathbb {R}^{2}\) on an equidistant grid corresponding to refinement level \({J}_{\max \limits }\), we can block-decompose it in terms of Eq. (29). By choosing \(j={J}_{\max \limits }\) in Eq. (32) and summing over all blocks p, we can thus represent u in L2 using a basis of dilated and translated scaling functions \(\{\varphi _{p,k_{1},k_{2}}^{j}\}\):

Here we have introduced a multi-index \(\lambda =(p,k_{1},k_{2})\in \overline {\Lambda }^{j}:={\Lambda }^{j}\times \{0,\dots ,\text {B}_{1}{-1}\}\times \{0,\dots ,\text {B}_{2}{-1}\}\) for ease of notation. With this notation we can rewrite Eq. (39) in a wavelet series

where the interpolating scaling basis \(\{\varphi _{\lambda }^{{J}_{\min \limits }}\}_{\lambda \in \overline {\Lambda }^{{J}_{\min \limits }}}\) approximates u at the coarsest scale \({J}_{\min \limits }\) and the wavelet basis \(\{\psi _{\mu ,\lambda }^{j}\}_{\mu =1,2,3,\lambda \in \overline {\Lambda }^{j\ge {J}_{\min \limits }}}\) contains all the additional information necessary to construct u.

In the CDF4,4 setting we have biorthogonal scaling functions:

and the associated three biorthogonal wavelets (in the d-dimensional case we have \(2^{d}-1\) wavelets, see [39, p. 42])

for the horizontal (μ = 1), vertical (μ = 2) and diagonal (μ = 3) direction. The wavelet and its dual are defined by the same scaling relations as φ and \(\tilde \varphi \):

The filter coefficients are given by \(g_{k}=(-1)^{1-n}\tilde {h}_{k}\) and \(\tilde g_{k}=(-1)^{1-n}{h}_{k}\) with \(h_{k},\tilde {h}_{k}\) listed in Table 1. The components of the scaling and wavelet coefficients (\(c_{\lambda }^{j}\) and \(d_{\lambda }^{j}\)) are determined via component-wise projection of Eq. (40) onto the dual basis \(\{\tilde \varphi ^{j}_{\lambda }, \tilde \psi ^{j}_{\mu \lambda }\}\):

assuming the biorthogonality relations \(\langle {\varphi ^{j}_{\lambda _{1}}},{\tilde \psi ^{j}_{\lambda _{2}}}\rangle =\langle {\psi ^{j}_{\lambda _{1}}},{\tilde \varphi ^{j}_{\lambda _{2}}}\rangle =0 \). Note that \(\langle {a},{b}\rangle ={\int \limits }_{\mathbb {D}} a(\mathbf{x})b(\mathbf{x})\mathrm{d} {\mathbf{x}}\) denotes the L2-inner product.

In most wavelet adaptation schemes, one truncates Eq. (40) such that only detail coefficients which carry significant information are kept. According to [45] “this can be expressed as a nonlinear filter”, which acts as a cutoff for wavelet coefficients with small magnitude. The cutoff is given by the threshold parameter 𝜖 > 0. In contrast to these schemes, our block-based adaptation groups the detail coefficients in blocks, i.e., all details on a block are kept if at least one detail carries important information. This seems inefficient at first sight, because unnecessary information is kept, but grouping details in blocks is reasonable, since the block-based adaptation is computationally efficient on MPI distributed architectures. Moreover, significant details often appear in groups of nearest neighbors, rather than as a single significant detail in a block. Therefore, we define the set of blocks

with significant details in the predefined tree level range \({J}_{\min \limits }\le j\le {J}_{\max \limits }\). In the spirit of our previous notation we thus define: \(\overline {I}_{\epsilon }^{j}:= I_{\epsilon }^{j}\times \{0,\dots ,\text {B}_{1}{-1}\}\times \) \(\{0,\dots ,\text {B}_{2}{-1}\}\) for the set of all significant detail indices. The filtered block-based wavelet field in Eq. (40) can now be written as follows:

In the following we will denote all fields with an upper index 𝜖, which have been filtered with Algorithm 1 and can be thus expressed as Eq. (44).

For illustration we have computed the vorticity \(\omega ^{\epsilon }=\partial _{x} v_{y}^{\epsilon }-\partial _{y} v_{x}^{\epsilon }\) of a thresholded vector field \(\mathbf {u}^{\epsilon }=(v_{x}^{\epsilon },v_{y}^{\epsilon },p^{\epsilon })\) in Fig. 3 for various 𝜖 (more details can be found in Section 4.2.1). Here, 𝜖 > 0 and 𝜖 = 0 correspond to a filtered and an unfiltered field, respectively. For increasing 𝜖, fewer detail coefficients will be above the threshold and therefore the number of blocks decreases.

Taking the difference between the thresholded Eq. (44) and the original field Eq. (10) only details below the threshold are left. Hence the total error can be estimated and yields:

Because \({\|\psi _{\mu ,\lambda }^{j}\|}_{\infty }=1\) we finally get \({\| u-u^{\epsilon }\|}_{\infty }\le C\epsilon \) for the total error in the \(L^{\infty }\)-norm. Similarly one can normalize \(\psi _{\mu ,\lambda }^{j}\) in the L2-norm, which corresponds to re-weighting the thresholding criterion \(\left |{d^{j}_{\mu ,\lambda }}\right |<\epsilon \) with a level (j) and dimension (d) dependent threshold: \(\left |{d^{j}_{\mu ,\lambda }}\right |<\epsilon _{0} 2^{-d(j-{J}_{\max \limits })/2}\epsilon \) [42]. The additional constant 𝜖0 = 1 for d = 3 and 𝜖0 = 0.1 for d = 2 tunes the offset of the compression error. It is chosen such that the relative compression error \(\mathcal {E}_{\text {wavelet}}\) is close to, but still below 𝜖, i.e., it fulfills Eq. (6) in the L2-norm.
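As a small worked example, the level- and dimension-dependent threshold quoted above can be evaluated directly. The sketch below only transcribes the expression \(\epsilon _{0} 2^{-d(j-{J}_{\max \limits })/2}\epsilon \) with the stated values 𝜖0 = 1 for d = 3 and 𝜖0 = 0.1 for d = 2; the function name `level_threshold` and its signature are hypothetical.

```python
import math

def level_threshold(eps, j, j_max, d, eps0=None):
    """Threshold eps0 * 2**(-d * (j - j_max) / 2) * eps for tree level j in d dimensions."""
    if eps0 is None:
        # Offsets stated in the text: 0.1 for d = 2 and 1.0 for d = 3.
        eps0 = {2: 0.1, 3: 1.0}[d]
    return eps0 * 2.0 ** (-d * (j - j_max) / 2.0) * eps

# On the finest level the exponent vanishes and only the offset eps0 remains.
t_fine_3d = level_threshold(1e-2, j=5, j_max=5, d=3)
# Two levels below the finest level in 2D: 0.1 * 2**2 * eps = 0.4 * eps.
t_coarse_2d = level_threshold(1.0, j=3, j_max=5, d=2)
```

With this weighting, the threshold applied to details on coarser levels (j below the maximum level) is larger than on the finest level.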

A.4 L2 inner products expressed in the wavelet basis

The L2 inner product is computed as a weighted sum of two fields u and v. For this we first refine both fields onto a unified grid with identical tree codes Λj, as explained in Section 3.2. Then, we are able to compute Eq. (14) as a weighted sum over all blocks:

Note that this quadrature rule is exact for 𝜖 = 0. We denote by IK ⊗ W ⊗ W the Kronecker product between the weight matrix W and the identity matrix \(\boldsymbol {I}_{K}\in \mathbb {R}^{K,K}\). The weight matrix is pre-computed by Eq. (47) and its non-vanishing values (W)ik = wi−k are shown in Table 2. The listed matrix elements are the discrete values of the autocorrelation function between two compactly supported scaling functions φ, see Fig. 18. Therefore, W is sparse, symmetric and circulant. Since W is also strictly diagonally dominant and all diagonal entries are positive, W and the Kronecker products of such matrices are also positive definite.
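The structure of this weighted sum on a single block can be sketched as follows. The weights used here are placeholders and not the values of Table 2; they are only chosen to be symmetric, circulant and strictly diagonally dominant. The sketch assumes periodic offsets within the block and uses the identity that applying W ⊗ W to a row-major flattened block equals the matrix product W v Wᵀ, so the Kronecker product never has to be formed. The helper names `circulant` and `weighted_inner` are hypothetical.

```python
import numpy as np

def circulant(n, w):
    """Symmetric circulant matrix with entries W[i, k] = w[|i - k|] (periodic offsets)."""
    W = np.zeros((n, n))
    for i in range(n):
        W[i, i] = w[0]
        for m in range(1, len(w)):
            W[i, (i + m) % n] += w[m]
            W[i, (i - m) % n] += w[m]
    return W

def weighted_inner(u, v, W1, W2):
    """Sum over components k of vec(u_k)^T (W1 kron W2) vec(v_k), without forming the product."""
    return float(sum(np.sum(uk * (W1 @ vk @ W2.T)) for uk, vk in zip(u, v)))

# Placeholder weights (NOT Table 2): diagonal 0.6 exceeds the off-diagonal row sum 0.4.
w = [0.6, 0.15, 0.05]
K, B1, B2 = 2, 8, 6
W1, W2 = circulant(B1, w), circulant(B2, w)

rng = np.random.default_rng(1)
u = rng.standard_normal((K, B1, B2))
v = rng.standard_normal((K, B1, B2))

fast = weighted_inner(u, v, W1, W2)
# Reference: explicit Kronecker product I_K kron W1 kron W2 acting on flattened fields.
full = np.kron(np.eye(K), np.kron(W1, W2))
reference = float(u.ravel() @ full @ v.ravel())
min_eig = float(np.linalg.eigvalsh(full).min())
```

Both evaluations agree up to rounding, and the smallest eigenvalue of the explicit Kronecker matrix is positive, in line with the positive definiteness argument above.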

Appendix B. Derivation of the error estimation given in Eq. (23)

Using Eq. (18) the total error in Eq. (16) becomes

Furthermore, we can simplify the first term with Eq. (19) by inserting \(\|{\mathbf {u}_{i} - \mathbf {u}_{i}^{\epsilon }}\|\le \epsilon \|{\mathbf {u}_{i}}\|\) into the numerator:

The second term in Eq. (48) can be expressed with the help of the eigenvalues of the correlation matrix. We use the identities: \({\sum }_{i=1}^{\text {N}_{\mathrm {s}}}\|{\mathbf {u}_{i}^{\epsilon } - \tilde {\mathbf {u}}_{i}^{\epsilon }}\|^{2}={\sum }_{k=r+1}^{\text {N}_{\mathrm {s}}} \lambda _{k}^{\epsilon }\) for perturbed eigenvalues \(\lambda _{k}^{\epsilon }=\lambda _{k}+l_{k}\epsilon \) and \({\sum }_{i=1}^{\text {N}_{\mathrm {s}}}\|{\mathbf {u}_{i}}\|^{2}={\sum }_{k=1}^{\text {N}_{\mathrm {s}}} \lambda _{k}\), yielding

Here \({\mathscr{M}}_{r}={{\sum }_{k=r+1}^{\text {N}_{\mathrm {s}}}l_{k}}/{{\sum }_{k=1}^{\text {N}_{\mathrm {s}}}\lambda _{k}}\) is the perturbation coefficient of the total error. Note that the perturbations lk are caused by the non-vanishing mixed terms \(\langle {\varphi _{\lambda }^{j}},{\psi _{\mu ,\lambda }^{j}}\rangle \) (see also [9]), when computing the correlation matrix from thresholded snapshots \(u_{i}^{\epsilon }\). Note that for orthogonal wavelets the first-order perturbations would vanish. For slowly decaying eigenvalues λk the perturbation coefficient \({\mathscr{M}}_{r}\) is typically very small, since the sum of perturbations lk is small compared to the total energy. In this case it is reasonable to neglect the second term in Eq. (49):

However, in general \({\mathscr{M}}_{r}\) does not vanish and we only have linear convergence in 𝜖:

Note that \({\mathscr{M}}_{r}\) does not depend on 𝜖, as all epsilon dependence has been removed. So it is asymptotically a first-order scheme in 𝜖 only. For a certain range we can observe second order, if \({\mathscr{M}}_{r}\) is sufficiently small. Eventually for 𝜖 sufficiently small the first-order term will dominate.
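The two eigenvalue identities used in this derivation can be checked numerically. The sketch below is an illustration with random data and the Euclidean inner product instead of the weighted L2 inner product of Appendix A.4; no wavelet thresholding is applied, so it corresponds to the unperturbed case. All variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_points, n_snapshots, r = 200, 12, 4

# Columns of X are the snapshots u_i.
X = rng.standard_normal((n_points, n_snapshots))

# Eigenvalues of the snapshot correlation matrix, sorted in descending order.
C = X.T @ X
lam = np.linalg.eigvalsh(C)[::-1]

# Rank-r POD approximation of all snapshots via the truncated SVD.
U, s, Vt = np.linalg.svd(X, full_matrices=False)
X_r = (U[:, :r] * s[:r]) @ Vt[:r]

trunc_err = np.sum((X - X_r) ** 2)   # sum_i ||u_i - u~_i||^2
total_energy = np.sum(X ** 2)        # sum_i ||u_i||^2
rel_err = trunc_err / total_energy   # relative truncation error
```

Here `trunc_err` matches the sum of the discarded eigenvalues and `total_energy` matches the sum of all eigenvalues, so `rel_err` is the ratio of the two sums.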

Appendix C. Technical details and supplementary material

Example of the light data structure in WABBIT, to be compared with Fig. 2. For each tree, lgt_active stores a light-ID list of all active blocks. With the block's light-ID (lgt_id), all parameters in the forest (tree code, tree ID, tree level, refinement status) can be accessed from the corresponding row in lgt_block. Note that the order of the light-IDs depends on the process rank

CPU time required for the individual steps of the wPOD algorithm described in Section 3.3. The CPU time is shown for three different cases: (1) the synthetic test case from Section 4.1 computed with \({J}_{\max \limits }=5\) and \(\epsilon =1.3\times 10^{-4}\) on an Intel Core i5-7200U CPU (blue), (2) the flow past a cylinder data of Section 4.2.1 computed with \(\epsilon =1.3\times 10^{-4}\) on Intel Xeon E5645 CPUs (orange) and (3) the bumblebee data of Section 4.2.4 with \(\epsilon =1.3\times 10^{-4}\) using Intel Xeon Gold 6142 CPUs (green). The individual cases should be compared with care, since they have been computed on different hardware and the data structure (number of snapshots, blocks, block size, spatial dimension, etc.) is different. Therefore, the computational costs of MPI communication overhead and block administration may vary. However, the overall proportions between the individual steps of the algorithm are comparable among the test cases

First three sparse modes \(\mathbf {\boldsymbol {{\varPsi }}}^{\epsilon }_{k}\) (k = 1 upper left, k = 2 upper right, k = 3 bottom left) with their corresponding amplitudes \(a_{k}^{\epsilon }(t_{i})\) computed with \(\epsilon =1.3\times 10^{-2}\) and one dense (𝜖 = 0) mode \(\boldsymbol {{\varPsi }}^{\epsilon }_{3}\) for comparison (bottom right). Each figure shows the mode's “vorticity” (labeled by ∇×v), computed from the two velocity components of the mode, and the pressure component (labeled by p). Note that the first mode represents the base flow, which is non-oscillating, whereas the other modes always have an oscillating structure, with a frequency increasing with the mode number. When comparing the dense mode \(\boldsymbol {{\varPsi }}^{\epsilon }_{3}\) of the non-adaptive case in the lower right of Fig. 21 with the adapted mode on the lower left, no qualitative differences can be observed, except the local changes in the resolution

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Krah, P., Engels, T., Schneider, K. et al. Wavelet adaptive proper orthogonal decomposition for large-scale flow data. Adv Comput Math 48, 10 (2022). https://doi.org/10.1007/s10444-021-09922-2

DOI: https://doi.org/10.1007/s10444-021-09922-2