Abstract

The image of a polynomial map is a constructible set. While computing its closure is standard in computer algebra systems, a procedure for computing the constructible set itself is not. We provide a new algorithm, based on algebro-geometric techniques, addressing this problem. We also apply these methods to answer a question of W. Hackbusch on the non-closedness of site-independent cyclic matrix product states for infinitely many parameters.


References

Buczyńska, W., Wiśniewski, J.: On the geometry of binary symmetric models of phylogenetic trees. J. Europ. Math. Soc. 9(3), 609–635 (2007)

Buhrman, H., Christandl, M., Zuiddam, J.: Nondeterministic quantum communication complexity: The cyclic equality game and iterated matrix multiplication, arXiv:1603.03757

Chen, C., Lemaire, F., Li, L., Maza, M., Pan, W., Xie, Y.: The ConstructibleSetTools and ParametricSystemTools modules of the RegularChains library in Maple. In: International Conference on Computational Sciences and Its Applications, 2008, ICCSA’08, pp. 342–352. IEEE (2008)

Chor, B., Hendy, M., Holland, B., Penny, D.: Multiple maxima of likelihood in phylogenetic trees: an analytic approach. Mol. Biol. Evol. 17(10), 1529–1541 (2000)

Cox, D., Little, J., O’Shea, D.: Ideals, Varieties, and Algorithms. Springer (1992)

Drton, M., Sullivant, S.: Algebraic statistical models. Stat. Sin., 1273–1297 (2007)

De Silva, V., Lim, L.: Tensor rank and the ill-posedness of the best low-rank approximation problem. SIAM J. Matrix Anal. Appl. 30(3), 1084–1127 (2008)

Grayson, D.R., Stillman, M.E.: Macaulay2, a software system for research in algebraic geometry. Available at http://www.math.uiuc.edu/Macaulay2/

Ganter, B.: Algorithmen zur formalen Begriffsanalyse, Beiträge zur Begriffsanalyse (Darmstadt, 1986), pp. 242–254. Bibliographisches Inst., Mannheim (1987)

Geiger, D., Meek, C., Sturmfels, B.: On the toric algebra of graphical models. Ann. Stat. 34(3), 1463–1492 (2006)

Hackbusch, W.: Tensor Spaces and Numerical Tensor Calculus. Springer Science & Business Media (2012)

Hauenstein, J., Ikenmeyer, C., Landsberg, J.M.: Equations for lower bounds on border rank. Exp. Math. 22(4), 372–383 (2013)

Hampe, S., Joswig, M., Schröter, B.: Algorithms for Tight Spans and Tropical Linear Spaces, arXiv:1612.03592

Hopcroft, J., Kerr, L.: On minimizing the number of multiplications necessary for matrix multiplication. SIAM J. Appl. Math. 20(1), 30–36 (1971)

Landsberg, J.M.: The border rank of the multiplication of 2x2 matrices is seven. J. Am. Math. Soc. 19(2), 447–459 (2006)

Landsberg, J.M.: Tensors: Geometry and Applications. American Mathematical Society (2012)

Landsberg, J.M., Michałek, M.: On the geometry of border rank decompositions for matrix multiplication and other tensors with symmetry. SIAM J. Appl. Algebra Geom. 1(1), 2–19 (2017)

Landsberg, J.M., Qi, Y., Ye, K.: On the geometry of tensor network states. Quant. Inf. Comput. 12(3-4), 346–354 (2012)

Monagan, M., Geddes, K., Heal, K., Labahn, G., Vorkoetter, S., McCarron, J., DeMarco, P.: Maple 10 Programming Guide. Maplesoft, Waterloo (2005)

Michałek, M.: Geometry of phylogenetic group-based models. J. Algebra 339(1), 339–356 (2011)

Michałek, M.: Toric varieties in phylogenetics. Dissertationes mathematicae 2015(511), 1–86 (2015)

Oseledets, I.V.: Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

Perez-Garcia, D., Verstraete, F., Wolf, M., Cirac, J.: Matrix product state representations. Quant. Inf. Comput. 7(5), 401–430 (2007)

Qi, Y., Michałek, M., Lim, L.-H.: Complex tensors almost always have best low-rank approximations, to appear in Applied and Computational Harmonic Analysis. https://doi.org/10.1016/j.acha.2018.12.003

Shafarevich, I.: Basic Algebraic Geometry, 1. Springer (2013)

Sturmfels, B., Sullivant, S.: Toric ideals of phylogenetic invariants. J. Comput. Biol. 12(2), 204–228 (2005)

Szalay, S., Pfeffer, M., Murg, V., Barcza, G., Verstraete, F., Schneider, R., Legeza, Ö.: Tensor product methods and entanglement optimization for ab initio quantum chemistry. Int. J. Quantum Chem. 115(19), 1342–1391 (2015)

Khrulkov, V., Hrinchuk, O., Oseledets, I.: Generalized tensor models for recurrent neural networks, arXiv:1901.10801

Khrulkov, V., Novikov, A., Oseledets, I.: Expressive power of recurrent neural networks, arXiv:1711.00811

Winograd, S.: On multiplication of 2 × 2 matrices. Linear Algebra Appl. 4(4), 381–388 (1971)

Wu, W.-T.: A zero structure theorem for polynomial equations solving, MM Research Preprints, 1(2) (1987)

Zhao, Q., Zhou, G., Xie, S., Zhang, L., Cichocki, A.: Tensor ring decomposition, arXiv:1606.05535

Acknowledgments

Open access funding provided by Max Planck Society. We thank Wolfgang Hackbusch for posing the question which motivated this work and for the stimulating discussions. We are grateful to Bernd Sturmfels and Michael Joswig for many suggestions and encouraging remarks.

Funding

MM was supported by Polish National Science Center project 2013/08/A/ST1/00804 affiliated at the University of Warsaw.


Additional information

Communicated by: Ivan Oseledets

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Matrix product states


We recall here two representations of tensors that are inspired by physics [23]. The corresponding notions have found applications in deep learning, where they are called tensor train or tensor ring decompositions [22, 28, 29, 32].

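The symbol \(\mathbb {K}\) denotes the field \(\mathbb {C}\) or \(\mathbb {R}\). For any \(a \in \mathbb {Z}_{>0}\), the vector space \(\mathbb {K}^{a}\) comes with the standard basis \(e_{1},\dots ,e_{a}\). Therefore, a tensor \(T\in \mathbb {K}^{a_{1}}\otimes \dots \otimes \mathbb {K}^{a_{q}}\) may be represented as

\[T=\sum _{i_{1}=1}^{a_{1}}\dots \sum _{i_{q}=1}^{a_{q}} \lambda _{i_{1},\dots ,i_{q}}\, e_{i_{1}}\otimes \dots \otimes e_{i_{q}}, \qquad \lambda _{i_{1},\dots ,i_{q}}\in \mathbb {K},\]

which is also written

\[T[i_{1},\dots ,i_{q}]=\lambda _{i_{1},\dots ,i_{q}}.\]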

Definition A.1 (Site-independent (cyclic) matrix product state)

Fix integers r > 0, k > 0, q > 1, and matrices \(M_{i}\in \mathbb {K}^{r\times r}\) for \(i=1,\dots ,k\). Let \(T\in (\mathbb {K}^{k})^{\otimes q}\) be a tensor given by

\[T[i_{1},\dots ,i_{q}]=\text {tr}(M_{i_{1}}M_{i_{2}}\cdots M_{i_{q}}).\]

The set of all tensors that allow such a representation will be denoted by \(\text {IMPS}(r,k,q)\subset (\mathbb {K}^{k})^{\otimes q}\).
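As a minimal sketch of Definition A.1, the following computes all entries \(\text {tr}(M_{i_{1}}\cdots M_{i_{q}})\) for a small tuple of matrices. The function names, the dictionary storage, the 0-based indices and the sample matrices are illustrative assumptions and are not taken from the paper.

```python
from itertools import product


def mat_mul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) for j in range(n)]
            for i in range(n)]


def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))


def imps_tensor(matrices, q):
    """Map each index tuple (i_1, ..., i_q) to tr(M_{i_1} ... M_{i_q}).

    Indices are 0-based here, unlike the 1-based indices in the text.
    """
    k = len(matrices)
    tensor = {}
    for idx in product(range(k), repeat=q):
        prod = matrices[idx[0]]
        for i in idx[1:]:
            prod = mat_mul(prod, matrices[i])
        tensor[idx] = trace(prod)
    return tensor


# Hypothetical example data: k = 2 matrices of size r = 2, and q = 3 factors.
M1 = [[1, 2], [0, 1]]
M2 = [[0, 1], [1, 1]]
T = imps_tensor([M1, M2], q=3)
print(T[(0, 0, 1)], T[(0, 1, 0)], T[(1, 0, 0)])
```

The three printed entries agree because their index tuples differ only by a cyclic shift. The tensor has \(k^{q}\) entries, so this brute-force enumeration is only practical for small parameters.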

Example A.2

Let us consider the case of matrices (q = 2). Here elements of IMPS(r, k, 2) can be viewed as matrices M such that \(M[i_{1},i_{2}]=\text {tr}(M_{i_{1}}M_{i_{2}})\). This is equivalent to a factorization \(M = A\cdot A^{t}\) for some matrix \(A\in \text {Hom}(\mathbb {K}^{k},\mathbb {K}^{r^{2}})\), interpreted as a \(k\times r^{2}\) matrix. In particular, M ∈ IMPS(r, k, 2) if and only if M is symmetric and has rank at most \(r^{2}\). It follows that IMPS(r, k, 2) is closed.
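The rank bound in the case q = 2 can be checked on a small instance. The sketch below builds the matrix with entries \(\text {tr}(M_{i}M_{j})\) for five 2 × 2 matrices and computes its rank exactly over the rationals. The helper names and the sample matrices are illustrative assumptions. The bound that is tested is \(r^{2}\), the dimension of the space of r × r matrices.

```python
from fractions import Fraction


def mat_mul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) for j in range(n)]
            for i in range(n)]


def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))


def rank(rows):
    """Rank over the rationals via exact Gaussian elimination."""
    A = [[Fraction(x) for x in row] for row in rows]
    rk = 0
    for col in range(len(A[0])):
        pivot = next((i for i in range(rk, len(A)) if A[i][col] != 0), None)
        if pivot is None:
            continue
        A[rk], A[pivot] = A[pivot], A[rk]
        for i in range(rk + 1, len(A)):
            factor = A[i][col] / A[rk][col]
            A[i] = [a - factor * b for a, b in zip(A[i], A[rk])]
        rk += 1
    return rk


# Hypothetical data: r = 2 and k = 5, so that r**2 = 4 < k.
r = 2
E11 = [[1, 0], [0, 0]]
E12 = [[0, 1], [0, 0]]
E21 = [[0, 0], [1, 0]]
E22 = [[0, 0], [0, 1]]
I2 = [[1, 0], [0, 1]]
Ms = [E11, E12, E21, E22, I2]

# M[i][j] = tr(M_i M_j), the q = 2 case of the definition (0-based indices).
M = [[trace(mat_mul(X, Y)) for Y in Ms] for X in Ms]

is_symmetric = all(M[i][j] == M[j][i] for i in range(5) for j in range(5))
print(is_symmetric, rank(M), r ** 2)
```

Here the fifth matrix is the sum of the first and fourth, so the last row of M is the sum of rows one and four, and the rank cannot exceed 4.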

When q = 2, the tensor T corresponds to a symmetric matrix. However, for q > 2, the tensor T will not be a symmetric tensor in general, though the identity \(T[i_{1},\dots ,i_{q}]=T[i_{q},i_{1},\dots ,i_{q-1}]\) continues to hold. In other words, the tensor has cyclic symmetries with respect to the order of the product of the matrices.
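The difference between cyclic symmetry and full symmetry already shows up for q = 3 with three elementary 2 × 2 matrices. The following sketch (helper names and sample matrices are illustrative assumptions) checks the cyclic identity on every entry and exhibits one transposition of indices that changes the value.

```python
from itertools import product


def mat_mul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) for j in range(n)]
            for i in range(n)]


def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))


def imps_tensor(matrices, q):
    """Map each 0-based index tuple (i_1, ..., i_q) to tr(M_{i_1} ... M_{i_q})."""
    tensor = {}
    for idx in product(range(len(matrices)), repeat=q):
        prod = matrices[idx[0]]
        for i in idx[1:]:
            prod = mat_mul(prod, matrices[i])
        tensor[idx] = trace(prod)
    return tensor


# Hypothetical data: three elementary 2 x 2 matrices.
A = [[0, 1], [0, 0]]  # e_{1,2}
B = [[0, 0], [1, 0]]  # e_{2,1}
C = [[1, 0], [0, 0]]  # e_{1,1}
T = imps_tensor([A, B, C], q=3)

# Cyclic identity T[i_1, ..., i_q] = T[i_q, i_1, ..., i_{q-1}].
cyclic = all(T[idx] == T[(idx[-1],) + idx[:-1]] for idx in T)

# Swapping the first two indices is not a cyclic shift when q = 3.
symmetric = all(T[(i, j, l)] == T[(j, i, l)] for (i, j, l) in T)

print(cyclic, symmetric, T[(0, 1, 2)], T[(1, 0, 2)])
```

The entries T[(0, 1, 2)] = tr(ABC) and T[(1, 0, 2)] = tr(BAC) differ because AB and BA are different idempotents, which is why the tensor is not symmetric in general.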

Definition A.1 can be regarded as a symmetrization of the following definition of a cyclic matrix product state, where the underlying graph for the tensor network is a cycle.

Fix an integer q > 1 and tuples of positive integers \(\mathfrak {a} = (a_{1},\dots ,a_{q})\), \(\mathfrak {b}=(b_{1},\dots ,b_{q})\). We set \(a_{q+1} = a_{1}\). Then the locus \(\text {MPS}(\mathfrak {a},\mathfrak {b},q) \subset \mathbb {K}^{b_{1}}\otimes {\dots } \otimes \mathbb {K}^{b_{q}}\) is given by the following definition.

Definition A.3 (Cyclic matrix product state)

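A tensor \(T \in \mathbb {K}^{b_{1}}\otimes {\dots } \otimes \mathbb {K}^{b_{q}}\) is in \(\text {MPS}(\mathfrak {a},\mathfrak {b},q)\) if there exist matrices

\[M_{i,j}\in \mathbb {K}^{a_{i}\times a_{i+1}},\qquad i=1,\dots ,q,\quad j=1,\dots ,b_{i},\]

such that

\[T[j_{1},\dots ,j_{q}]=\text {tr}(M_{1,j_{1}}M_{2,j_{2}}\cdots M_{q,j_{q}}).\]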

Example A.4

The situation for q = 2 is analogous to Example A.2. In this case, we have \(M\in \text {MPS}((a_{1},a_{2}),(b_{1},b_{2}),2)\) if and only if M = AB where \(A\in \text {Hom}(\mathbb {K}^{b_{1}},\mathbb {K}^{a_{1}a_{2}})\) and \(B\in \text {Hom}(\mathbb {K}^{a_{1}a_{2}},\mathbb {K}^{b_{2}})\). This can happen if and only if the rank of the matrix M is at most \(a_{1}a_{2}\). Therefore, \(\text {MPS}(\mathfrak {a},\mathfrak {b},2)\) is always closed.
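A small sketch of the cyclic matrix product state and of the rank bound for q = 2 follows. It assumes the trace formula \(T[j_{1},\dots ,j_{q}]=\text {tr}(M_{1,j_{1}}\cdots M_{q,j_{q}})\) with \(M_{i,j}\) of size \(a_{i}\times a_{i+1}\). The function names, the nested-list layout and the sample data with \(\mathfrak {a}=(1,2)\), \(\mathfrak {b}=(3,3)\) are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product


def mat_mul(A, B):
    """Multiply an m x n matrix by an n x p matrix (lists of rows)."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))


def mps_tensor(families):
    """families[i][j] plays the role of M_{i+1, j+1}, of shape a_{i+1} x a_{i+2}.

    Because a_{q+1} = a_1, the full product is square and its trace is defined.
    """
    tensor = {}
    for idx in product(*(range(len(fam)) for fam in families)):
        prod = families[0][idx[0]]
        for site, j in enumerate(idx[1:], start=1):
            prod = mat_mul(prod, families[site][j])
        tensor[idx] = trace(prod)
    return tensor


def rank(rows):
    """Rank over the rationals via exact Gaussian elimination."""
    A = [[Fraction(x) for x in row] for row in rows]
    rk = 0
    for col in range(len(A[0])):
        pivot = next((i for i in range(rk, len(A)) if A[i][col] != 0), None)
        if pivot is None:
            continue
        A[rk], A[pivot] = A[pivot], A[rk]
        for i in range(rk + 1, len(A)):
            factor = A[i][col] / A[rk][col]
            A[i] = [a - factor * b for a, b in zip(A[i], A[rk])]
        rk += 1
    return rk


# Hypothetical data with a = (1, 2) and b = (3, 3).
a1, a2 = 1, 2
site1 = [[[1, 0]], [[0, 1]], [[1, 1]]]        # three 1 x 2 matrices
site2 = [[[1], [0]], [[0], [1]], [[1], [1]]]  # three 2 x 1 matrices
T = mps_tensor([site1, site2])
M = [[T[(i, j)] for j in range(3)] for i in range(3)]
print(M, rank(M), a1 * a2)
```

In this instance the 3 × 3 matrix M has rank 2, which equals the bound \(a_{1}a_{2}\).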

Proposition A.5

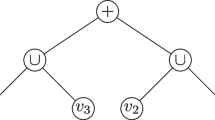

The sets IMPS and MPS may be represented as

1. \(\text {IMPS}(r,k,q)=\{f^{\otimes q}(\mathfrak {M}_{r,\dots ,r}) \mid f\in \text {Hom}(\mathbb {K}^{r\times r},\mathbb {K}^{k})\}\).

2. \(\text {MPS}(\mathfrak {a},\mathfrak {b},q)=\{(f_{1}\otimes {\dots } \otimes f_{q})(\mathfrak {M}_{a_{1},\dots ,a_{q}}) \mid f_{i} \in \text {Hom}(\mathbb {K}^{a_{i} \times a_{i+1}},\mathbb {K}^{b_{i}})\}\).
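The first statement can be checked numerically on a small instance. The sketch below rests on an assumption that this appendix does not spell out: \(\mathfrak {M}_{r,\dots ,r}\) is taken to be the iterated matrix multiplication tensor \(\sum e_{p_{1},p_{2}}\otimes e_{p_{2},p_{3}}\otimes \dots \otimes e_{p_{q},p_{1}}\), with all \(p_{j}\) running from 1 to r. The map f sends \(e_{p,s}\) to the vector whose ith coordinate is the (p, s) entry of \(M_{i}\). All function names and sample matrices are illustrative.

```python
from itertools import product


def mat_mul(A, B):
    """Multiply two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) for j in range(n)]
            for i in range(n)]


def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    return sum(A[i][i] for i in range(len(A)))


def trace_tensor(matrices, q):
    """Entries tr(M_{i_1} ... M_{i_q}), indexed by 0-based tuples."""
    tensor = {}
    for idx in product(range(len(matrices)), repeat=q):
        prod = matrices[idx[0]]
        for i in idx[1:]:
            prod = mat_mul(prod, matrices[i])
        tensor[idx] = trace(prod)
    return tensor


def image_of_mult_tensor(matrices, q):
    """Coefficients of f^{(tensor) q} applied to the assumed tensor.

    Each basis term e_{p_1,p_2} (x) ... (x) e_{p_q,p_1} contributes the
    product of the entries M_{i_j}[p_j][p_{j+1}] to the coefficient of
    e_{i_1} (x) ... (x) e_{i_q}, with p_{q+1} = p_1.
    """
    k, r = len(matrices), len(matrices[0])
    tensor = {}
    for idx in product(range(k), repeat=q):
        total = 0
        for p in product(range(r), repeat=q):
            term = 1
            for j in range(q):
                term *= matrices[idx[j]][p[j]][p[(j + 1) % q]]
            total += term
        tensor[idx] = total
    return tensor


# Hypothetical example data: r = 2, k = 2, q = 3.
M1 = [[1, 2], [0, 1]]
M2 = [[0, 1], [1, 1]]
lhs = image_of_mult_tensor([M1, M2], q=3)
rhs = trace_tensor([M1, M2], q=3)
print(lhs == rhs)
```

The agreement is an instance of expanding the trace of a product entry by entry. It illustrates the statement on one example and is not a substitute for the proof.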

Proof

The proofs of both statements are similar. We prove the first one, as it is more important for this paper. We interpret elements of \(\text {Hom}(\mathbb {K}^{r\times r},\mathbb {K}^{k})\) as \(r^{2}\times k\) matrices. First, we note that there is a natural bijection φ between k-tuples of r × r matrices \(\mathcal {M}:=(M_{1},\dots ,M_{k})\) and matrices \(\varphi (\mathcal {M})\in \text {Hom}(\mathbb {K}^{r\times r},\mathbb {K}^{k})\). For 1 ≤ i ≤ k, the ith column of \(\varphi (\mathcal {M})\) is the representation of \(M_{i}\) as a vector of length \(r^{2}\).

Write \(M_{i}={\sum }_{p,q=1}^{r} a_{i,p,q} e_{p,q}\), where \(e_{p,q}\) is the matrix with 1 in its (p, q)th entry and zeros everywhere else. Note that \(\varphi (\mathcal {M})(e_{p,q})={\sum }_{i=1}^{k} a_{i,p,q} e_{i}\).

We prove the claim by showing that the tensor T ∈ IMPS(r, k, q) associated to \(\mathcal {M}\) equals \(\varphi (\mathcal {M})^{\otimes q}(\mathfrak {M}_{r,\dots ,r})\). Indeed, we have

where in all sums 1 ≤ j ≤ q. We can simplify further:

□
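The computation behind this proof can be sketched as follows. This is a reconstruction under an assumption, not the authors' displayed formulas: \(\mathfrak {M}_{r,\dots ,r}\) is taken to be the iterated matrix multiplication tensor written in the first line, and \(a_{i,p,s}\) denotes the (p, s) entry of \(M_{i}\). In all sums 1 ≤ j ≤ q, with \(1\le p_{j}\le r\) and \(1\le i_{j}\le k\).

```latex
% Assumption: \mathfrak{M}_{r,\dots,r} is the iterated matrix multiplication tensor.
\begin{align*}
\mathfrak{M}_{r,\dots,r}
  &= \sum_{p_j} e_{p_1,p_2}\otimes e_{p_2,p_3}\otimes\dots\otimes e_{p_q,p_1},\\
\varphi(\mathcal{M})^{\otimes q}(\mathfrak{M}_{r,\dots,r})
  &= \sum_{p_j} \varphi(\mathcal{M})(e_{p_1,p_2})\otimes\dots\otimes
     \varphi(\mathcal{M})(e_{p_q,p_1})\\
  &= \sum_{i_j}\Bigl(\sum_{p_j} a_{i_1,p_1,p_2}\,a_{i_2,p_2,p_3}\cdots
     a_{i_q,p_q,p_1}\Bigr)\, e_{i_1}\otimes\dots\otimes e_{i_q}\\
  &= \sum_{i_j} \operatorname{tr}(M_{i_1}M_{i_2}\cdots M_{i_q})\,
     e_{i_1}\otimes\dots\otimes e_{i_q}.
\end{align*}
```

The last step uses that the inner sum is the entrywise expansion of the trace of the product \(M_{i_{1}}\cdots M_{i_{q}}\), so the coefficient of \(e_{i_{1}}\otimes \dots \otimes e_{i_{q}}\) is exactly \(T[i_{1},\dots ,i_{q}]\).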

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Harris, C., Michałek, M. & Sertöz, E.C. Computing images of polynomial maps. Adv Comput Math 45, 2845–2865 (2019). https://doi.org/10.1007/s10444-019-09715-8


DOI: https://doi.org/10.1007/s10444-019-09715-8