Abstract

We study two closely related problems in the online selection of an increasing subsequence. In the first problem, introduced by Samuels and Steele (Ann. Probab. 9(6):937–947, 1981), the objective is to maximise the expected length of a subsequence selected by a nonanticipating strategy from a random sample of given size \(n\). In the dual problem, recently studied by Arlotto et al. (Random Struct. Algorithms 49:235–252, 2016), the objective is to minimise the expected time needed to choose an increasing subsequence of given length \(k\) from a sequence of infinite length. Developing a method based on the monotonicity of the dynamic programming equation, we derive two-term asymptotic expansions for the optimal values, with an \(O(1)\) remainder in the first problem and an \(O(k)\) remainder in the second. Settling a conjecture of Arlotto et al. (Random Struct. Algorithms 52:41–53, 2018), we also design selection strategies that achieve optimality within these bounds, which are, in a sense, best possible.

1 Introduction

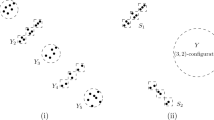

The online increasing subsequence problems are stochastic optimisation problems in which a non-anticipating policy aims to select an increasing subsequence from a sequence of random items \(X_{1}, X_{2},\ldots \) with known continuous distribution \(F\), which, without loss of generality, will be assumed uniform on \([0,1]\). The online constraint requires that \(X_{i}\) be accepted or rejected at time \(i\), when the item is observed, with the decision becoming immediately terminal. Formally, an online policy for selecting an increasing subsequence is a collection of stopping times \(\boldsymbol{\tau } = (\tau _{1}, \tau _{2}, \ldots )\) adapted to the sequence of sigma-fields \(\mathcal{F}_{i} = \sigma \{X_{1}, X_{2}, \ldots , X_{i}\}\), \(1\leq i < \infty \), and satisfying

- (i) \(\tau _{1} < \tau _{2} < \cdots \),
- (ii) \(X_{\tau _{1}}< X_{\tau _{2}}< \cdots \).

We denote the space of all online policies by \({\mathcal{T}}\).

In the first problem, introduced by Samuels and Steele [20], the objective is to maximise the expected length of an increasing subsequence selected from the first \(n\) items. The performance of an online policy is measured by \(\mathbb{E}L_{n}(\boldsymbol{\tau })\), where

\[ L_{n}(\boldsymbol{\tau }) := \max \{j: \tau _{j} \leq n\} \]

is the length of the selected increasing subsequence.

Samuels and Steele proved that the maximal expected length, \(v_{n} = \sup _{\boldsymbol{\tau }\in {\mathcal{T}}} \mathbb{E}L_{n}( \boldsymbol{\tau })\), satisfies

\[ v_{n} \sim \sqrt{2n}, \quad n \to \infty . \tag{1} \]

It was later observed that the Samuels-Steele problem is equivalent to a special case of the online bin-packing problem [11, 18], with uniformly distributed weights being packed into a unit-sized bin. This connection was useful in showing that \(\sqrt{2n}\) is, in fact, an upper bound [9] (see also [13] for an alternative approach).

For comparison, a clairvoyant decision-maker with a complete overview of the data could choose the longest increasing subsequence. The study of the statistical properties of its length \(l(n)\) is known as the Ulam-Hammersley problem. After years of exciting development Baik et al. [5] showed that

with \(\widehat{c} = -1.758\ldots \), and proved that the distribution of \((l(n) - 2\sqrt{n}) / n^{1/6}\) converges to the Tracy-Widom distribution from random matrix theory. See Romik [19] for an excellent account.

To prove the existence of the limit of \(v_{n}/\sqrt{n}\), Samuels and Steele also introduced an analogous problem with arrivals by a Poisson process, where the objective is to complete the selection within a given time horizon \([0,n]\). The connection with the poissonised version has been useful for obtaining asymptotic results in the fixed-\(n\) setting. Arlotto et al. [2] proved a central limit theorem analogous to the Bruss-Delbaen [8] result in the poissonised setting:

where \(\boldsymbol{\tau ^{*}}\) is the optimal selection policy.

The tightest known bounds on \(v_{n}\) are

The lower bound was shown recently in [4]. By assessing a suboptimal selection policy with an acceptance window which depends on both the size of the last selection and the number of items yet to be observed, Arlotto et al. suggested that the optimality gap in (2) can be further tightened. They also obtained numerical evidence that the performance of the employed policy is within \(O(1)\) from the optimum.

In a dual problem, studied recently by Arlotto et al. [3], the objective is to minimise \(\mathbb{E}\tau _{k}\), the expected time to select an increasing subsequence of fixed length \(k\) from an infinitely long series of observations. For the optimal expected time

\[ \beta _{k} := \inf _{\boldsymbol{\tau }\in {\mathcal{T}}} \mathbb{E}\tau _{k}, \]

Arlotto et al. proved the bounds

The bounds were obtained by an analytical investigation of the optimality recursion.

The quickest selection problem of Arlotto et al. [3] is equivalent to a special case of the sum constraint problem of Chen et al. [10] (see Example 2 on p. 541 for the \(k^{2}/2\) asymptotics and Mallows et al. [15] for a multidimensional extension). So the principal asymptotics \(k^{2}/2\) can be read off from this earlier work. Coffman et al. [11] in Sect. 6 showed that the same asymptotics \(k^{2}/2\) also occur in the offline quickest selection problem.

In the present paper we adapt an asymptotic comparison method, used before in the poissonised setting [6, 14], to approximate solutions to the optimality equations. The method can also be used to estimate the performance of a certain class of suboptimal policies. We refine the cited results as follows. For the longest increasing subsequence problem, we prove that

\[ v_{n} = \sqrt{2n} - \frac{1}{12}\log n + O(1), \quad n \to \infty . \tag{4} \]

A similar expansion with the second term \((\log {n})/6\) was obtained in the related problem of online selection from a random permutation of \(n\) integers by Peng and Steele [17]. The difference in logarithmic terms can be interpreted as an advantage of a better-informed decision-maker, who knows the values of order statistics of \(\{X_{1},\dots ,X_{n}\}\) but not the succession in which the items are revealed in the course of observation.

Furthermore, we settle the conjecture from [4] by showing that the performance of the policy used there is indeed within \(O(1)\) from the maximum \(v_{n}\).

For the quickest selection problem, we prove that

\[ \beta _{k} = \frac{k^{2}}{2} + \frac{1}{6}\,k\log k + O(k), \quad k \to \infty . \tag{5} \]

Given the natural duality of the two problems, one may suspect that the functions \(n \mapsto v_{n}\) and \(k \mapsto \beta _{k}\) are asymptotic inverses of one another. For the principal asymptotics, this is evident from (1) and (3). The refined expansions (4) and (5) reveal a closer connection between the problems: inverting the expansion of \(v_{n}\) gives the two-term expansion of \(\beta _{k}\).
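The inversion can be checked numerically. The sketch below is illustrative only: it truncates the expansion of \(v_{n}\) to its two leading terms, \(\sqrt{2n} - (\log n)/12\), inverts this function by bisection, and compares the result with the candidate form \(k^{2}/2 + (k\log k)/6\). The function names are hypothetical, and dropping the \(O(1)\) remainder is an assumption of the sketch, so agreement can only be expected up to terms of order \(k\).

```python
import math


def v_two_term(n):
    """Two leading terms of the expansion of v_n (the O(1) remainder is dropped)."""
    return math.sqrt(2.0 * n) - math.log(n) / 12.0


def invert_v(k, tol=1e-12):
    """Solve v_two_term(n) = k for n >= 1 by bisection.

    v_two_term is increasing on [1, infinity), so the root is unique there.
    """
    lo, hi = 1.0, 2.0
    while v_two_term(hi) < k:        # grow the bracket until it contains the root
        hi *= 2.0
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if v_two_term(mid) < k:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def beta_two_term(k):
    """Candidate two-term form k^2/2 + (k log k)/6 for the dual problem."""
    return 0.5 * k * k + k * math.log(k) / 6.0


if __name__ == "__main__":
    for k in (10, 100, 1000, 10000):
        n = invert_v(k)
        # normalised discrepancy; it should stay bounded as k grows
        print(k, (n - beta_two_term(k)) / k)
```

Under these assumptions the normalised discrepancy stays bounded as \(k\) grows, which is what an \(O(k)\) remainder would predict; the sketch says nothing about the true constant in that remainder.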

For the quickest selection problem, we also introduce selection policies that are asymptotically optimal. The first is a variation of the constant window policy, resembling the one from [20] to achieve the principal asymptotics \(k^{2}/2\). The second is a more complex policy, with optimality gap \(O(k)\), which is in fact the best one can do asymptotically, without concern about small \(k\).

2 The Longest Subsequence Problem

In the Samuels-Steele problem, \(n\) is the model parameter representing the total size of the random sample. After the first item is observed, the problem reduces to a selection from \(n-1\) random items. Furthermore, if the first item, of size \(z \in [0,1]\), was selected, future observations that fall below \(z\) are automatically discarded. We denote by \(v_{m}(z)\), \(m=1, \ldots , n\), the maximal expected length of an increasing subsequence when the remaining sample size is \(m\) and the last selected item is of size \(z\). The functions \(v_{m}:[0,1] \to \mathbb{R}^{+}\) are called the optimal value functions in the longest subsequence selection.

In addition, we define a subclass of online selection policies that have a variable acceptance window. That is, at each step of selection, there is a threshold function \(h_{m}:[0,1] \to [0,1]\), \(0\leq h_{m}(z) \leq 1-z\), that shapes the decision of whether to accept or reject current observation: the corresponding policy accepts the observation of size \(x\) if and only if it falls into the acceptance window \([z, z+h_{m}(z)]\). Setting \(\tau _{0} = 0\) and \(X_{\tau _{0}}=0\), this policy corresponds to a sequence of stopping times \(\boldsymbol{\tau } = (\tau _{1}, \tau _{2}, \ldots , \tau _{j})\), where

with the convention that \(\min \emptyset = \infty \).

2.1 Optimality Equation for the Value Function

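The optimality equation is a well-known recursion [2, 4, 20]

\[ v_{n+1}(z) = z\,v_{n}(z) + \int _{z}^{1} \max \{v_{n}(x)+1,\, v_{n}(z)\}\,\mathrm{d}x, \tag{6} \]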

with \(v_{0}(z) = 0\). Note that \(v_{n}(z)+c\) also satisfies (6) for any constant \(c\). The intuition behind the optimality equation (6) is as follows. Assume we are at a selection stage with \(n+1\) observations still to inspect and the last selection of size \(z\); this corresponds to the value function \(v_{n+1}(z)\) on the left-hand side. With probability \(z\) the next observation falls below \(z\), leaving us with the expected length \(v_{n}(z)\), which explains the first term on the right-hand side. Should the next observation \(x\) be admissible, the dynamic programming principle prescribes selecting \(x\) if and only if \(v_{n}(x)+1 \geq v_{n}(z)\). Hence, the optimal decision yields \(\max \{v_{n}(x)+1, v_{n}(z)\}\). Averaging over the uniformly distributed \(x\) gives (6).
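The recursion just described can be iterated numerically. The following is a minimal sketch, not the method of the paper: it assumes a uniform grid in \(z\), the trapezoid rule for the integral over admissible \(x\), and the start \(v_{0}\equiv 0\). The function name and the grid size are hypothetical choices.

```python
import numpy as np


def online_lis_values(n, grid=400):
    """Iterate the optimality recursion on a uniform grid of z-values.

    Returns (z, v) with v[i] approximating v_n(z[i]).
    """
    z = np.linspace(0.0, 1.0, grid + 1)
    dz = 1.0 / grid
    v = np.zeros(grid + 1)                    # v_0(z) = 0
    rows = np.arange(grid)
    for _ in range(n):
        # integrand[i, j] = max{v(x_j) + 1, v(z_i)}: accept x_j or skip it
        integrand = np.maximum(v[None, :] + 1.0, v[:, None])
        # trapezoid areas of the grid cells [x_j, x_{j+1}]
        cells = 0.5 * (integrand[:, 1:] + integrand[:, :-1]) * dz
        # tail[i, j] = integral of row i over [x_j, 1]
        tail = np.cumsum(cells[:, ::-1], axis=1)[:, ::-1]
        # integral over [z_i, 1]; it is zero at z = 1
        integral = np.append(tail[rows, rows], 0.0)
        # observation below z (probability z) leaves the state unchanged
        v = z * v + integral
    return z, v


if __name__ == "__main__":
    for n in (1, 2, 10, 50):
        _, v = online_lis_values(n)
        print(n, v[0], np.sqrt(2.0 * n))
```

The first two iterates can be checked by hand: \(v_{1}(z)=1-z\) and \(v_{2}(0)=\int _{0}^{1}(2-x)\,\mathrm{d}x=3/2\). For larger \(n\) the grid introduces a discretisation error, so the output should be read as an approximation.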

Observe that \(v_{n}(z)\) is the maximum expected length of an increasing subsequence chosen from \(N\) items, with \(N\overset{d}{=}\mathrm{Bin}(n, 1-z)\) (see [20], p. 945, and [13], p. 1083).

Let \(h_{n}(z):[0,1] \to [0,1]\) be the solution to

\[ v_{n}(z+h_{n}(z)) + 1 = v_{n}(z) \tag{7} \]

if \(v_{n}(z)>1\), and \(h_{n}(z)=1-z\) otherwise. Then, the monotonicity of \(v_{n}(z)\) in \(z\) implies that the integrand in (6) is equal to \(v_{n}(x)+1\) on the interval \([z, z+h_{n}(z)]\). On the remaining interval \([z+h_{n}(z),1]\) the integrand assumes value \(v_{n}(z)\).

This provides the form of the optimal selection policy: accept the observation \(x\) if it falls into the acceptance window \([z, z+ h_{n}(z)]\). From (7) it can be seen that the acceptance window is updated dynamically with every observation. Thus, the optimal policy indeed belongs to the class of policies with a variable acceptance window, and we call the functions \(h_{n}(z)\) the optimal threshold functions. Note that equation (7) has a solution only when \(v_{n}(z)>1\). This has a logical interpretation: when \(v_{n}(z)\leq 1\), the decision-maker should select every successive record, as this provides the largest expected payoff.

In the sequel we work directly with the optimality equation to refine the asymptotics of the value functions. The comparison method we employ hinges on certain monotonicity properties of (6). Let an operator \(G_{n}\) acting on continuous bounded functions \(f:[0,1]\to \mathbb{R}_{+}\) possess the following properties

- (i) Shift-invariance: \(G_{n+1}(f + c)(z) = G_{n+1}(f)(z) + c\) for any constant \(c\),
- (ii) Monotonicity: if \(\widehat{f}(z) \geq f(z)\), then \(G_{n+1}(\widehat{f})(z) \geq G_{n+1}(f)(z)\).

To determine the range of limit regimes for \((n,z)\), we introduce a size function \(g_{n}(z) := n(1-z)\). We say that sequence \((f_{n})\) is locally bounded from above if

locally bounded from below if \((-f_{n})\) is locally bounded from above, and locally bounded if \((|f_{n}|)\) is locally bounded from above. With this in mind, we present the following key lemma.

Lemma 1 (Asymptotic comparison)

Let sequence \((f_{n})\) solve \(f_{n+1} = G_{n+1}(f_{n})(z)\).

If \((f_{n})\) is locally bounded from above, while a sequence \((\widehat{f}_{n})\) is locally bounded from below and \(\widehat{f}_{n+1} \geq G_{n+1}(\widehat{f}_{n})(z)\) when \(g_{n}(z)\) is sufficiently large, then the difference \(f_{n}(z) - \widehat{f}_{n}(z)\) is bounded from above uniformly for all \(n\) and \(z\).

Similarly, if \((f_{n})\) is locally bounded from below, while \((\widehat{f}_{n})\) is locally bounded from above and \(\widehat{f}_{n+1} \leq G_{n+1}(\widehat{f}_{n})(z)\) when \(g_{n}(z)\) is sufficiently large, then \(f_{n}(z) -\widehat{f}_{n}(z)\) is bounded from below uniformly for all \(n\) and \(z\).

Proof

Adding a constant if necessary and using the shift-invariance property (i) of the operator \(G_{n}\), we can reduce to the case \(\widehat{f}_{n}(z)>0\).

If the first claim is not true, then for every constant \(c>0\) we can find \(n_{0}\), \(z_{0}\) such that \(f_{n_{0}+1}(z_{0}) - \widehat{f}_{n_{0}+1}(z_{0}) > c\). Choose the minimal such \(n_{0} = n_{0}(c)\), then

Observe that

Therefore, from the local boundedness of \((f_{n})\), we have \(n_{0}(c) \to \infty \) as \(c\to \infty \).

Moreover, since \((f_{n})\) is locally bounded from above, we have \(g_{n_{0}+1}(z) \geq f_{n_{0}+1}(z_{0}) >c\), so we can choose \(c\) large enough to achieve \(G_{n_{0}+1}(\widehat{f}_{n_{0}})(z_{0}) < \widehat{f}_{n_{0}+1}(z_{0})\). Therefore, appealing to the shift-invariance (i), we obtain

However, by the choice of \(n_{0}\), we have \(f_{n_{0}}(z_{0}) < \widehat{f}_{n_{0}}(z_{0}) + c\). Thus, from the monotonicity property (ii), it follows that

which is a contradiction.

Now, to prove the second part of the lemma, assume to the contrary that the difference \(f_{n}(z) - \widehat{f}_{n}(z)\) is unbounded from below. Then, for every constant \(c>0\) one can find \(n_{1}\), \(z_{1}\) such that \(f_{n_{1}+1}(z_{1}) - \widehat{f}_{n_{1}+1}(z_{1}) < -c\). Choosing the minimal such \(n_{1} = n_{1}(c)\), we have

From \(g_{n_{1}+1}(z_{1}) \geq \widehat{f}_{n_{1}+1}(z_{1})> f_{n_{1}+1}(z_{1}) + c > c \), it follows that \(n_{1}(c) \to \infty \) as \(c\to \infty \). Moreover, choosing \(c\) large enough, we can achieve

However, this contradicts

which follows from (8). This concludes our proof. □

2.2 Asymptotic Expansion of \(v_{n}\)

We utilise Lemma 1 to compare \(v_{n}(z)\) with a sequence of carefully chosen test functions. With each iteration of the method, we obtain a finer asymptotic expansion of \(v_{n}(z)\). Since we do not employ the initial condition \(v_{0}(z)=0\), the accuracy of the comparison method is limited to an \(O(1)\) term. Having obtained the desired expansion of \(v_{n}(z)\), we specialise it to the case \(z=0\), thus deriving (4). The whole procedure is reminiscent of the familiar method of successive approximations for solutions of differential equations (see, for example, [12], Sect. 9.1).

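Introduce operators \(\Delta \) and \(\varPsi \) acting as

\[ \Delta f_{n}(z) := f_{n+1}(z) - f_{n}(z), \qquad \varPsi f_{n}(z) := \int _{z}^{1} \bigl(f_{n}(x) + 1 - f_{n}(z)\bigr)_{+}\,\mathrm{d}x, \]

where \((a)_{+} := \max \{a, 0\}\). With this notation, the optimality equation (6) assumes the form

\[ \Delta v_{n}(z) = \varPsi v_{n}(z). \]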

Applying Lemma 1 with \(G_{n+1}(f_{n})(z) = f_{n}(z) + \varPsi f_{n}(z)\) yields the following result.

Corollary 1

If, for \(n(1-z)\) large enough, \(\Delta \widehat{v}_{n}(z) > \varPsi \widehat{v}_{n}(z)\), then the difference \(v_{n}(z) - \widehat{v}_{n}(z)\) is bounded from above uniformly in \(n\) and \(z\); likewise, if \(\Delta \widehat{v}_{n}(z) < \varPsi \widehat{v}_{n}(z)\), then \(v_{n}(z) - \widehat{v}_{n}(z)\) is bounded from below uniformly in \(n\) and \(z\).

To obtain the principal asymptotics consider the test function

\[ v^{(0)}_{n}(z) := \gamma _{0}\sqrt{n(1-z)}, \]

where \(\gamma _{0}>0\) is a parameter. Introducing for convenience \(\widehat{n}:=n(1-z)\) and expanding for large \(\widehat{n}\) we obtain

Furthermore, using the change of variable \(y:={(x-z)}/{(1-z)}\), we can write the integral as

where \(h_{n}^{(0)}(z)\) is the solution to

We have

Using Taylor expansion of the integrand in \(y\) around 0 yields

hence, integrating and using (10) yields

The match between (9) and (11) occurs for \(\gamma _{0} = \sqrt{2}\). Therefore, we have, for \(n(1-z)\) large enough,

Applying Lemma 1 we see that \(\underset{n(1-z)\to \infty }{\limsup }(v_{n}(z) - \gamma _{0} \sqrt{n(1-z)})< \infty \). In light of this, \(\underset{n(1-z)\to \infty }{\limsup }(v_{n}(z)/\sqrt{n(1-z)}) \leq \gamma _{0}\), for \(\gamma _{0}>\sqrt{2}\). Consequently,

A parallel argument with \(\gamma _{0} < \sqrt{2}\) yields

Combining (12) with (13), we obtain

For a better approximation we consider the test functions

\[ v^{(1)}_{n}(z) := \sqrt{2n(1-z)} + \gamma _{1}\log {(n(1-z)+1)}, \]

with \(\gamma _{1}\in {\mathbb{R}}\). We choose \(\log {(n(1-z)+1)}\) over \(\log {(n(1-z))}\) to avoid the singularity at 0. The forward difference becomes

Using Taylor expansion with a remainder yields

On the other hand, using substitution \(y ={(x-z)}/{(1-z)}\) it can be deduced that

where \(h_{n}^{(1)}(z)\) solves

For \(\widehat{n}\to \infty \),

Actually, we only need the first term of (17) to obtain the expansion of \(\varPsi v^{(1)}_{n}(z)\) up to the desired order. This is because the \(O(\widehat{n}^{-1})\) term in (17) contributes only \(O(\widehat{n}^{-3/2})\) to \(\varPsi v^{(1)}_{n}(z)\). Indeed, keeping \(\widehat{n}\) as a parameter, let us view the integral on the third line of (15) as a function of its upper limit

In view of (16), \(h_{1}:=h_{n}^{(1)}(z)\) is a stationary point of the integrand. Expanding at \(h_{1}\) with a remainder we get, for some \(\zeta \in [0,1]\)

Now letting \(\widehat{n} \to \infty \) and \(\varepsilon = O(\widehat{n}^{-1})\) we obtain

as claimed.

In light of this, integrating and expanding we obtain

Expansions (14) and (18) match at \(\gamma _{1}=-\frac{1}{12}\). Thus, another application of Lemma 1 gives us

We need one more iteration to bound the remainder. Consider the test functions

For \(\widehat{n}\to \infty \), we obtain the expansion

uniformly in \(z\in [0,1)\), and with some more effort for the integral

Since \(z\in [0,1)\), we have

Appealing to (19), (20) and the first inequality in (21), we conclude that, for large \(n(1-z)\),

hence, by Lemma 1, \(v_{n}(z) - v^{(2)}_{n}(z)\) for such \(\gamma _{2}\) is bounded from above. On the other hand, exploiting the second inequality in (21), we derive that for large \(n(1-z)\)

thus, by Lemma 1, \(v_{n}(z) - v^{(2)}_{n}(z)\) for such \(\gamma _{2}\) is bounded from below. Since the last term of \(v^{(2)}_{n}(z)\) is itself bounded, it follows readily that

\[ v_{n}(z) = \sqrt{2n(1-z)} - \frac{1}{12}\log {(n(1-z)+1)} + O(1). \tag{22} \]

Our main result is the special case of (22) with \(z=0\).

Theorem 1

The maximum expected length \(v_{n}\) of an increasing subsequence selected in an online regime satisfies

\[ v_{n} = \sqrt{2n} - \frac{1}{12}\log n + O(1), \quad n \to \infty . \tag{23} \]

2.3 Asymptotically Optimal Policies

Recall our definition of a policy with a variable acceptance window. For \(m\in {\mathbb{N}}\), \(m\leq n\), let \(\widetilde{h}_{m}:[0,1]\to [0,1]\) be threshold functions which define a policy via the acceptance window

where \(m\) is the number of remaining observations. The threshold functions \(\widetilde{h}_{m}(z)\) depend on both the size of the last selected item and the number of remaining observations. As was shown in the previous section, when \(v_{m}(z) > 1\), the optimal policy has threshold functions \(h_{m}(z)\) solving

\[ v_{m}(z+h_{m}(z)) + 1 = v_{m}(z). \]

However, there are good policies that can be defined more simply. For example, the stationary policy of Samuels and Steele [20] has constant threshold functions independent of the remaining sample size. It accepts every observation that exceeds the last selection by no more than \(\sqrt{2/n}\). Setting threshold functions \(\widehat{h}_{m}(z) :=\min \{ \sqrt{2/n}, 1-z\}\) for all \(m=1, 2, \ldots , n\) describes this strategy completely. Remarkably, this uncomplicated policy achieves asymptotic optimality up to the leading order term of the expected performance. The intuition behind the choice of this threshold function lies in the derivation of the familiar mean-constraint bound on \(v_{n}\) (see, for example, [9]).

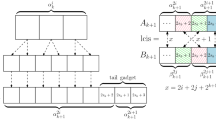

A more sophisticated policy was introduced by Arlotto et al. [4]. In contrast to the stationary policy of Samuels and Steele, the acceptance window here is variable. The acceptance criterion for this policy is

where

Observe that normalising (24) leads to the acceptance condition

i.e. the threshold functions are similar to Samuels and Steele’s with one exception: Arlotto et al. used the expected number of remaining admissible observations in the calculation of the threshold function.
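The two policies can be compared by simulation. The sketch below is illustrative and rests on stated assumptions: the stationary window is \(\sqrt{2/n}\) as described above, while the adaptive window is taken to be \(\sqrt{2(1-z)/m}\), which is how we read the description of replacing \(n\) by the expected number of remaining admissible observations; the exact form used in [4] may differ. All function names, the sample size, and the number of trials are hypothetical choices.

```python
import math
import random


def run_policy(n, window, rng):
    """Select online from n uniform items; window(z, m) is the acceptance width."""
    z, count = 0.0, 0
    for i in range(n):
        m = n - i                            # observations left, current one included
        x = rng.random()
        # accept x if it lies in [z, z + width], with the width capped at 1 - z
        if z <= x <= z + min(window(z, m), 1.0 - z):
            z, count = x, count + 1
    return count


def mean_length(n, window, trials=200, seed=0):
    """Monte Carlo estimate of the expected length of the selected subsequence."""
    rng = random.Random(seed)
    return sum(run_policy(n, window, rng) for _ in range(trials)) / trials


n = 1000


def stationary(z, m):
    """Constant window of the stationary policy."""
    return math.sqrt(2.0 / n)


def adaptive(z, m):
    """Assumed adaptive window: uses m(1 - z) in place of n."""
    return math.sqrt(2.0 * (1.0 - z) / m)


if __name__ == "__main__":
    print("sqrt(2n)   ", math.sqrt(2.0 * n))
    print("stationary ", mean_length(n, stationary))
    print("adaptive   ", mean_length(n, adaptive))
```

Both estimates are subject to Monte Carlo error, so a single run only indicates how each policy's mean length compares with \(\sqrt{2n}\); it does not verify the \(O(1)\) optimality gap.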

The value functions corresponding to Arlotto et al.’s policy satisfy the recursion

Equation (25) can be subjected to the comparison method too. Applying Lemma 1 with the functional

one can obtain an analogous result. Choosing the approximating function of the form

and successively working out the values of \(\alpha _{0}\), \(\alpha _{1}\), \(\alpha _{2}\) leads to the same asymptotic expansion as in (22)

Taken together with (22) this settles the conjecture in [4].

Theorem 2

The policy with threshold functions (24) has the expected performance \(\widetilde{v}_{n}=\widetilde{v}_{n}(0)\) satisfying

\[ \widetilde{v}_{n} = \sqrt{2n} - \frac{1}{12}\log n + O(1), \quad n \to \infty . \]

3 The Quickest Selection Problem

We now turn to the quickest selection problem introduced in [3]. In contrast to the original problem, the question here is: what is the minimum expected time \(\beta _{k}\) needed to choose an increasing subsequence of length \(k\) from an infinite sequence of random variables in an online fashion? Recall that, formally,

\[ \beta _{k} := \inf _{\boldsymbol{\tau }\in {\mathcal{T}}} \mathbb{E}\tau _{k}. \]

3.1 Optimality Recursion in the Quickest Selection Problem

The quickest selection problem is a decision problem with infinite horizon. Still, a version of the comparison method turns out to be useful here too.

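The value function in the quickest selection problem, \(\beta _{k}(z)\), depends on the running maximum \(z\) and the number \(k\) of selections yet to be made. The first step decomposition yields the dynamic programming equation

\[ \beta _{k+1}(z) = 1 + z\,\beta _{k+1}(z) + \int _{z}^{1} \min \{\beta _{k}(x),\, \beta _{k+1}(z)\}\,\mathrm{d}x. \]

The marks above \(z\) occur with \({\mathrm{Geom}}(1-z)\) interarrival times, hence

\[ \beta _{k}(z) = \frac{\beta _{k}}{1-z}, \]

where \({\beta _{k}}:={\beta _{k}}(0)\). Substituting this into the dynamic programming equation and changing the variable \(x\to (1-z) \,x + z\) we arrive at

\[ \beta _{k+1} = 1 + \int _{0}^{1} \min \left\{ \frac{\beta _{k}}{1-x},\, \beta _{k+1}\right\} \mathrm{d}x. \tag{26} \]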

Lemma 2

The optimal value function \(\beta _{k}\) satisfies the implicit recursion

\[ \beta _{k+1} = 1 + \beta _{k} + \beta _{k}\log \frac{\beta _{k+1}}{\beta _{k}}, \tag{27} \]

initialised with \(\beta _{1} = 1\).

Proof

We have \(\beta _{k}/(1-x) \leq \beta _{k+1}\) if and only if \(x \leq 1 - \beta _{k}/\beta _{k+1} =: h_{k}\). Thus, it is optimal to choose the observation \(X\) only when \(X\leq h_{k}\). From this we can rewrite (26) as

Integrating and rearranging yields (27). □

The optimal strategy amounts to the following rule. If at stage \(j\) some \(k\) items are yet to be chosen, the last selection was \(z\), and the observed item is \(X_{j}=x\) then the item should be selected if and only if

For arbitrary \(\widetilde{h}_{k}, \, 0\leq \widetilde{h}_{k} \leq 1-z\), we call a strategy defined in that way self-similar.

Arlotto et al. derived (3) by analysing the optimality recursion in the following form ([3], Lemma 3)

The recursion (27) is, in fact, equivalent to (28). Indeed, we find that the minimising value of \(t = t^{*}\) satisfies

Substituting (29) into (28) yields the optimal solution \(t^{*} = 1 - \beta _{k} / \beta _{k+1}\). Plugging the optimal solution into (28) and rearranging gives (27).

Arlotto et al. also proved that the function \(k \to \beta _{k}\) is convex and showed the optimal policy to be self-similar with the optimal threshold functions satisfying

3.2 Preparation for the Asymptotic Analysis

Recursion (27) possesses several useful analytical properties. To begin with, define a function \(G:\mathbb{R}^{2}_{+} \to \mathbb{R}\) as

\[ G(x,y) := y - x - x\log \frac{y}{x}. \]

In terms of \(G\), (27) becomes

\[ G(\beta _{k}, \beta _{k+1}) = 1. \tag{31} \]

Recursion (31) taken together with the condition

defines the sequence \(\beta _{k}\) uniquely, as seen from the next lemma.

For \(x\geq 0\) define \(g(x)\) as a solution to

\[ G(x, g(x)) = 1. \]

This function \(g(x)\) has two branches, and we are interested in the upper branch.

Lemma 3

The function \(g\) has a branch that lies entirely in the domain \(\mathcal{D}=\{(x,y):x+1 < y, \, x>0\}\).

Proof

Calculating the partial derivatives

we see that, if \(G(x_{0}, y_{0}) = 1\), then, by the Implicit Function Theorem, in the vicinity of \((x_{0}, y_{0})\) there is a uniquely defined function \(g(x)\) with

provided \(x_{0} \neq y_{0}\). If, furthermore, \(0< x_{0}< y_{0}\), then this function has derivative \(g'(x)>1\), since

where \(z = x/y\). Thus, if there is one such point \((x_{0}, y_{0}) \in \mathcal{D}\), then there is a branch \(g(x): \mathbb{R}_{+} \to \mathbb{R}_{+}\) with \((x, g(x)) \in \mathcal{D}\). □

In particular, we can pick \((x_{0}, y_{0}) = (1, y_{0})\), where \(y_{0} =3.146\cdots \) solves

\[ y_{0} - \log y_{0} = 2. \]

Note that \(g(0+) = 1\), but \(g'(0+)=\infty \).

From now on we only consider the branch of \(g(x)\) defined in Lemma 3. In these terms

\[ \beta _{k+1} = g(\beta _{k}). \]

That is, the sequence of optimal values \(\beta _{k}\) is obtained as iterations of \(g\), starting with \(\beta _{1} = 1\). So \(\beta _{2} = g(1)\), \(\beta _{3} = g(g(1))\), etc. We wish to find now the asymptotic behaviour of \(g\) for large \(x\).
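The iteration is easy to carry out numerically. The sketch below assumes that \(G(x,y) = y - x - x\log (y/x)\), which is the form obtained by integrating the first-step equation with the threshold \(h_{k} = 1-\beta _{k}/\beta _{k+1}\), and solves \(G(x,y)=1\) on the upper branch by bisection. The function names are hypothetical, and bisection is our choice of root-finder, not a method taken from the paper.

```python
import math


def G(x, y):
    """Assumed form of G: y - x - x*log(y/x), for 0 < x < y."""
    return y - x - x * math.log(y / x)


def g(x):
    """Upper-branch root y > x + 1 of G(x, y) = 1, found by bisection."""
    lo = x + 1.0                              # G(x, x + 1) < 1 for x > 0
    hi = x + math.sqrt(2.0 * x) + 2.0
    while G(x, hi) < 1.0:                     # enlarge the bracket if needed
        hi += 1.0
    for _ in range(200):                      # G(x, .) is increasing for y > x
        mid = 0.5 * (lo + hi)
        if G(x, mid) < 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def optimal_values(kmax):
    """Return a list beta with beta[k] for 1 <= k <= kmax (beta[0] is unused)."""
    beta = [None, 1.0]                        # beta_1 = 1
    for _ in range(kmax - 1):
        beta.append(g(beta[-1]))
    return beta


def thresholds(beta):
    """h[k] = 1 - beta[k] / beta[k + 1] for 1 <= k < len(beta) - 1."""
    return [None] + [1.0 - beta[k] / beta[k + 1] for k in range(1, len(beta) - 1)]


if __name__ == "__main__":
    beta = optimal_values(50)
    h = thresholds(beta)
    for k in (1, 2, 5, 10, 49):
        print(k, beta[k], 0.5 * k * k, k * h[k])
```

The second value, \(\beta _{2}=g(1)\), agrees with the constant \(3.146\cdots \) quoted above, which gives a quick consistency check on the assumed form of \(G\).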

Lemma 4

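The function \(g(x)\) possesses the following asymptotic expansion

\[ g(x) = x + \sqrt{2x} + \frac{2}{3} + \frac{\sqrt{2}}{18}\,x^{-1/2} + O(x^{-1}), \quad x \to \infty . \tag{32} \]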

Proof

Dividing both sides of \(G(x,y)=1\) by \(x\) yields

Note that taking limits on both sides gives

Performing a change of variables

we arrive at

Because \(\limsup y/x<\infty \) and \(a=1\) is the unique solution to \(a=1+\log a\), we may conclude that

In light of this, we may investigate (33) in the vicinity of \(z=w=0\). The function \(w(z)\) is analytic in the unit disc. Thus, expanding the logarithm yields a series representation of \(w(z)\)

Since \(w'(0)=0\), the inverse function has an algebraic branch point at 0 of order 1 (see [16] for definition). The inverse \(z(w)\) is representable as Puiseux series in powers of \(w^{1/2}\), with coefficients that can be calculated recursively. From the first two terms of series (34) we obtain

Plugging \(z(w) = \sqrt{2}w^{1/2} + a_{0} w + o(w)\), where \(a_{0}\) is a constant coefficient, into (34) yields

which provides us with a refinement

Another iteration of the method with \(z(w) = \sqrt{2} w^{1/2} + \frac{2}{3}w + a_{1} w^{3/2}+o(w^{3/2})\), \(a_{1}\) constant, results in the expansion

Translating this back in terms of variables \(x\), \(y\), we obtain the desired asymptotic expansion

□
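The expansion of \(g\) can be compared with a direct numerical solution. The sketch below makes two assumptions that should be read as ours: that \(G(x,y)=y-x-x\log (y/x)\), and that the inverse series \(z(w) = \sqrt{2}w^{1/2} + \frac{2}{3}w + a_{1}w^{3/2}+\cdots \) has \(a_{1}=\sqrt{2}/18\), a value we obtained by matching coefficients in \(z-\log (1+z)=w\) with \(z=y/x-1\), \(w=1/x\). The function names are hypothetical.

```python
import math


def G(x, y):
    """Assumed form of G: y - x - x*log(y/x), for 0 < x < y."""
    return y - x - x * math.log(y / x)


def g(x):
    """Upper-branch root of G(x, y) = 1, found by bisection."""
    lo = x + 1.0                              # G(x, x + 1) < 1 for x > 0
    hi = x + math.sqrt(2.0 * x) + 2.0
    while G(x, hi) < 1.0:                     # enlarge the bracket if needed
        hi += 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if G(x, mid) < 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def g_expansion(x):
    """Four-term approximation x + sqrt(2x) + 2/3 + sqrt(2)/(18 sqrt(x))."""
    return x + math.sqrt(2.0 * x) + 2.0 / 3.0 + math.sqrt(2.0) / (18.0 * math.sqrt(x))


if __name__ == "__main__":
    for x in (10.0, 100.0, 1.0e3, 1.0e4):
        err = g(x) - g_expansion(x)
        # x * err should remain bounded if the remainder is O(1/x)
        print(x, g(x), err, x * err)
```

If the assumed coefficients are right, the printed products `x * err` remain bounded as \(x\) grows, in line with an \(O(x^{-1})\) remainder.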

Suppose now that \((x_{k})\) is a sequence of iterations

with some \(x_{1}>0\). Since \(x_{k+1}>x_{k}+1\), we have \(x_{k+1}>x_{1} +k\), and so \(x_{k} \to \infty \), as \(k \to \infty \). Thus, by Lemma 4,

To derive the leading asymptotic term from (35) we only need

The idea is to compare \(x_{k}\) with a solution of the analogous differential equation

which satisfies

Equation (37), in turn, yields

An application of the mean value theorem leads to

where \(x_{k}<\widetilde{x}_{k} < x_{k+1}\). Hence,

Recalling that \(\lim _{k \to \infty } x_{k+1}/x_{k} =1\) and the asymptotics (36), we obtain

and, therefore,

The recursion \(x_{k+1}=g(x_{k})\) is homogeneous, meaning that each term \(x_{k+1}\) of the sequence is a function of \(x_{k}\) only and does not otherwise depend on \(k\). We are therefore interested in how a shift in the initial condition affects the sequence for large \(k\).

Lemma 5

For any sequence \((x_{k})\) solving the recursion \(G(x_{k}, x_{k+1})=1\), it holds that

Proof

If \(x_{1}=\beta _{1}\) the sequences are identical and the assertion trivial. We shall first examine the case \(x_{1} > \beta _{1}\).

Given the monotonicity of \(g(x)\), we have that \(x_{k} > \beta _{k}\), for all \(k\in \mathbb{N}\). Thus, it suffices to prove that there exists a positive constant \(c\) such that \(x_{k}-\beta _{k} \leq ck\), for all \(k\).

Since sequence \((\beta _{k})\) is unbounded and increasing, we can find a finite \(k_{0}\) such that \(\beta _{k_{0}}> x_{1}\). Having identified the point \(k_{0}\) of the inequality direction change, we know that, by monotonicity of \(g(x)\), the elements \(\beta _{k_{0}+1}, \beta _{k_{0}+2}, \ldots \) dominate \(x_{2}, x_{3}, \ldots \) respectively. Observe that

Hence, comparing \(x_{k}\) to the shifted sequence yields

Whence the upper bound

The asymptotic expansion (35) together with (38) implies \(\Delta \beta _{k} = O(k)\), which allows us to choose \(c:=k_{0} M\), where \(M\) is a constant such that \(\Delta \beta _{k} \leq M k\) for all \(k\in \mathbb{N}\).

Now we turn to the case when \(x_{1} < \beta _{1}\). From the monotonicity of \(g(x)\), it is enough to show that there exists a positive constant \(c_{1}\) satisfying \(x_{k} - \beta _{k} \geq -c_{1}k\) for all \(k\).

The sequence \((x_{k})\) is unbounded and increasing; therefore, one can find a finite \(k_{1}\) such that \(x_{k_{1}}>\beta _{1}\). Choosing the smallest such \(k_{1}\), we have that \(x_{k_{1}+1} > \beta _{2}\), \(x_{k_{1}+2} > \beta _{3}\), …. Appealing to (39), we have

Whence,

Choosing \(c_{1}:=k_{1} M\) concludes the proof. □

Before stating an analogue of Lemma 1, we need to highlight the following monotonicity property of \(G\).

Lemma 6

Let \(0< u< v\). If \(G(u,v)>1\) and \(u>x\), then \(v>g(x)\). Analogously, if \(G(u,v)<1\) and \(u< x\), then \(v< g(x)\).

Proof

From

we have \(g(u)>g(x)\). Then, \(G(u,g(u))=1\) and \(G(u,v)>1\) imply \(v>g(u)\) by monotonicity of \(G\) that follows from

Hence \(v>g(x)\).

Analogously, \(u< x\) implies \(g(u)< g(x)\). By \(G(u,v)<1\) and the monotonicity of \(G\), we have \(v< g(u)< g(x)\), which completes the proof. □

Now, we state and prove the analogue of Lemma 1 in the quickest selection problem.

Lemma 7

Let \((x_{k})\) be an increasing sequence such that \(G(x_{k},x_{k+1})>1\) (or, equivalently, \(x_{k+1}>g(x_{k})\)) for all sufficiently large \(k\). Then for some constant \(c>0\)

Similarly, if \(G(x_{k},x_{k+1})<1\) (or, equivalently, \(x_{k+1}< g(x_{k})\)) for all sufficiently large \(k\), then for some \(c>0\)

Proof

Assume to the contrary that for arbitrarily large \(c_{0}\in \mathbb{R}^{+}\) there exists \(k_{0} \in \mathbb{N}\) such that

Choosing \(c_{0}\) large ensures \(x_{k}>g(x_{k})\), \(k \geq k_{0}\).

Now, it is easy to see that \(\beta _{k_{0}}< x_{k_{0}}\) leads to a contradiction with the second inequality in (40); thus, we only consider the case \(\beta _{k_{0}}>x_{k_{0}}\). Introducing a sequence \((y_{k})\) that satisfies \(G(y_{k}, y_{k+1}) =1 \) and \(y_{k_{0}} = x_{k_{0}}\), we have

Moreover, by Lemma 5, there exists a positive constant \(c_{1}\) such that

Let \(c_{2} := c_{0} \vee c_{1}\). Then we can find a \(k_{1} \geq k_{0}\), \(k_{1} \in \mathbb{N}\) such that

and

Recalling (41) yields

which contradicts (42).

For the second part of the lemma, assume \(G(x_{k}, x_{k+1}) < 1\) for large \(k\), but for an arbitrarily large constant \(\widetilde{c}_{0}\), one can find \(k_{2}\) such that

When \(\beta _{k_{2}} > x_{k_{2}}\), this leads to a contradiction with (43) immediately. Hence, we only consider the case \(\beta _{k_{2}} < x_{k_{2}}\). For a sequence \((z_{k})\) satisfying \(G(z_{k}, z_{k+1}) = 1\) and \(z_{k_{2}} = x_{k_{2}}\), we have

By virtue of Lemma 5, we can find a positive constant \(\widetilde{c}_{1}\) such that

With \(\widetilde{c}_{2}:=\widetilde{c}_{0} \vee \widetilde{c}_{1}\), we can find \(k_{3} \geq k_{2}\), \(k_{3} \in \mathbb{N}\) to have

Since \(\widetilde{c}_{2} \geq \widetilde{c}_{1}\), one has \(\beta _{k} - z_{k} > -\widetilde{c}_{2} k\), for all \(k\). Hence, by (44),

However, this contradicts (45), which finalises the proof of the lemma. □

With this result in our toolbox, we are fully equipped to refine the asymptotic expansion of \(\beta _{k}\).

3.3 Asymptotic Expansion of \(\beta _{k}\)

The order of the next term of expansion of \(\beta _{k}\) is readily suggested by the upper bound in (3). However, to strengthen the hypothesis, we provide a heuristic argument based on the natural duality between this problem and the original problem of selecting the longest increasing subsequence.

We are taking a step further in exploring the connection between the two problems. Obtaining the asymptotic inverse of (23) suggests that

Thus, heuristics hint at the second term of order \(O(k \log {k})\). In view of this, we choose the first approximating sequence \((x^{(0)}_{k})\)

where \(\omega _{0}\) is a parameter. Recalling the expansion (32), we obtain, as \(k\to \infty \),

Therefore,

Straightforwardly it follows that, for \(k\) large enough,

Combining the inequalities (46) with Lemma 7 produces the following result

Corollary 2

As \(k \to \infty \),

To bound the remainder, we need yet another successive approximation. Choose a test function of the form

where \(\omega _{1}\) is a constant. On the one hand, we have, as \(k \to \infty \),

On the other hand, taking all four terms of expansion (32),

Hence,

Recall that a shift in the initial condition of the optimality recursion (27) changes the solution by a term of order \(O(k)\); since the comparison with the approximating sequence \(x^{(1)}_{k}\) provides a refinement of smaller order, we may bound the remainder in the expansion (47).

Theorem 3

The minimum expected time required to select an increasing sequence of length \(k\) satisfies the following asymptotic expansion

Corollary 3

The optimal threshold \(h_{k}\) satisfies the following refined asymptotic expansion

Proof

Unfortunately, the direct computation of \(h_{k} = 1 - \beta _{k}/\beta _{k+1}\) from (48) does not yield a meaningful result because of the \(O(k)\) remainder. However, Lemma 7 in [3] provides an asymptotic approximation to the optimal threshold functions in terms of the optimal value functions

Using the one-term asymptotic expansion \(\beta _{k} \sim k^{2}/2\), Arlotto et al. computed that \(h_{k} = 2/k + O(k^{-2} \log {k})\), \(k\to \infty \). Plugging in the refined asymptotics (48) instead leads to the desired result. □
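The obstruction mentioned at the start of the proof can be made explicit. The following sketch writes the remainder in (48) as \(r_{k} = O(k)\) (the symbol \(r_{k}\) is introduced here only for illustration) and assumes that the second term of the expansion is a constant multiple of \(k\log k\), so that its increment is \(O(\log k)\).

```latex
% r_k denotes the O(k) remainder in (48); the name is illustrative.
\begin{align*}
h_k &= 1 - \frac{\beta_k}{\beta_{k+1}}
     = \frac{\beta_{k+1} - \beta_k}{\beta_{k+1}}, \\
\beta_{k+1} - \beta_k
    &= \Bigl(k + \tfrac12\Bigr) + O(\log k) + (r_{k+1} - r_k)
     = k + O(k), \\
h_k &= \frac{k + O(k)}{k^{2}/2 + O(k\log k)} = O\bigl(k^{-1}\bigr).
\end{align*}
```

Since \(r_{k+1}-r_{k}\) is only known to be a difference of two \(O(k)\) quantities, it may be as large as the leading increment \(k\), so not even the constant in \(h_{k} \sim 2/k\) can be recovered this way.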

3.4 A Quasi-Stationary Policy

In this section, we construct a simple quasi-stationary policy that, as \(k\) grows large, has the expected time of selection matching \(\beta _{k}\) up to the leading term of the expansion. We call it quasi-stationary because it has a second, more conservative selection mode with a narrower acceptance window. Our quasi-stationary policy has threshold functions independent of the remaining number of elements to be chosen, analogously to the stationary policy of Samuels and Steele [20].

We define our policy by choosing the threshold functions \(\widehat{h}_{i}(z),\, i=1, \ldots , k\)

where

and the function \(a(k):\mathbb{R}_{+} \to [0,1]\) is decreasing in \(k\); we fix \(a(k)\) in the sequel.

The policy acts in two regimes. Initially, we accept every consecutive observation within \(\eta \) above the last selected item. Once the size of the last selected item exceeds \(1-a(k)-\eta \), we abandon the initial rule and accept all admissible elements within an acceptance window of size \(a(k)/k\).
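The two regimes can be illustrated by a small simulation. This is a minimal sketch under stated assumptions rather than the construction of the paper: the names `window_sizes`, `geometric` and `simulate` are hypothetical, and the parameter choices \(a(k) = k^{-1/2+\varepsilon }\) and \(\eta = 2(1-2a(k))/k\) are illustrative guesses for a window slightly below \(2/k\). Waiting times between selections are sampled as geometric variables instead of drawing every observation.

```python
import math
import random


def window_sizes(k, eps=0.25):
    """Return (eta, a) for the two-regime policy.

    Assumptions (not taken from the paper): a(k) = k**(-1/2 + eps) and
    eta = 2 * (1 - 2 * a(k)) / k, a window slightly below 2/k.
    """
    a = k ** (-0.5 + eps)
    eta = 2.0 * (1.0 - 2.0 * a) / k
    if not 0.0 < eta < 1.0:
        raise ValueError("k is too small for these illustrative parameters")
    return eta, a


def geometric(p, rng):
    """Sample the number of trials up to and including the first success."""
    return int(math.log(1.0 - rng.random()) / math.log(1.0 - p)) + 1


def simulate(k, rng, eps=0.25):
    """Run the policy once; return (time, last selected value, barrier hit?)."""
    eta, a = window_sizes(k, eps)
    barrier = 1.0 - a - eta
    z, time, hit = 0.0, 0, False
    for _ in range(k):
        if z > barrier:
            hit = True                   # switch to the conservative regime
        width = a / k if hit else eta
        time += geometric(width, rng)    # wait for an item in the window above z
        z += width * rng.random()        # the accepted item is uniform in the window
    return time, z, hit
```

With these assumed parameters and \(k = 100\) the barrier lies many standard deviations above the typical final selection, so the average of `simulate(100, random.Random(0))[0]` over repeated runs should be close to \(k/\eta \).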

The choice of \(\eta \) is inspired by the asymptotics of the optimal threshold (30). However, choosing \(2/k\) exactly leads to a problem: with high probability the selection process will cross the \(1-a(k)-\eta \) barrier while there are still \(O(k^{1/2})\) elements to be chosen. Loosely speaking, as \(k\) gets large, the selection process with a constant window is governed by the central limit theorem. Although the expectation of the sum of \(k\) random variables distributed uniformly on \([0, 2/k] \) is 1, it has a standard deviation of order \(k^{-1/2}\). A way to overcome this issue is to decrease the threshold size so that the probability of reaching the barrier is low, but keep it large enough so that the expected time of selection remains unchanged up to terms of lower order. The task reduces to choosing a suitable \(a(k)\).

Throughout the rest of this section, \(\widehat{\beta }_{k}\) denotes the expected selection time under the quasi-stationary policy.
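The claim about the standard deviation follows from an elementary variance computation, written out here for independent \(U_{1},\ldots ,U_{k}\) uniform on \([0,2/k]\) (the notation \(U_{i}\) is introduced only for this illustration).

```latex
\begin{align*}
\mathbb{E}\,U_i &= \frac{1}{k}, &
\operatorname{Var} U_i &= \frac{(2/k)^{2}}{12} = \frac{1}{3k^{2}}, \\
\mathbb{E}\sum_{i=1}^{k} U_i &= 1, &
\sqrt{\operatorname{Var}\sum_{i=1}^{k} U_i} &= \frac{1}{\sqrt{3k}} = O\bigl(k^{-1/2}\bigr).
\end{align*}
```

This suggests that the margin \(a(k)\) should be of larger order than \(k^{-1/2}\) if the barrier is to be reached only with small probability.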

Theorem 4

The quasi-stationary policy with threshold functions \(\widehat{h}_{i}(z)\) is asymptotically optimal, i.e.

Proof

Let \((Z_{j})_{j\in \mathbb{N}}\) denote the last selection process of the quasi-stationary policy. Introduce a hitting time \(\xi \) of the barrier \(1-a(k)-\eta \)

where we follow the convention \(\inf \emptyset = \infty \). Moreover, let the stopping time \(\rho \) be defined as

In this notation, we can write \(\widehat{\beta }_{k}\) out as follows

Before the barrier is hit, the inter-selection times are independent and distributed identically as \(\mathrm{Geom}(\eta )\), hence

moreover, by Wald’s identity

Consequently,

The second expectation in (49) is bounded by the expected time of selection in case the barrier is hit. Thus,

A rough upper bound on \(\mathbb{E}(\tau _{k} | \rho < k)\) suffices for our purposes

it follows from computing an expected time to select all \(k\) elements with a constant window \(a(k)/k\). To get a grip on \(\mathbb{P}(\rho < k)\) we first notice that

Introduce a renewal sequence \((S_{j})\) with inter-arrival times distributed uniformly on \([0,\eta ]\). For \(j<\rho \), this sequence is equivalent in distribution to the gaps between consecutive selections \(Z_{j+1}-Z_{j}|\rho < j\). In light of this, we can write

Since we have

we can write the probability on the right-hand side of (52) in terms of \(\mu \) as

where \(\epsilon = a(k) - 2/k\). The probability in focus can be estimated from above by applying the Chernoff-Hoeffding inequality (see, for example, [7] for details)

Thus, choosing \(a(k):=k^{-1/2+\varepsilon }\) with \(0<\varepsilon <1/2\), we ensure that the probability in (53) has an exponentially decreasing upper bound

With \(a(k)\) finally fixed, from the upper bound (50) we have
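For orientation, the standard Hoeffding bound for a sum \(S_{k}\) of \(k\) independent variables with values in \([0,\eta ]\) already shows where the decay comes from. The sketch below assumes \(\eta \leq 2/k\) and a deviation \(t\) of the same order as \(a(k)\); the exact constants of the paper's displays are not reproduced here.

```latex
% Hoeffding: P(S - ES >= t) <= exp(-2 t^2 / sum_i (b_i - a_i)^2), here b_i - a_i = \eta.
\begin{align*}
\mathbb{P}\bigl(S_k - \mathbb{E}S_k \geq t\bigr)
  &\leq \exp\Bigl(-\frac{2t^{2}}{k\eta^{2}}\Bigr)
   \leq \exp\Bigl(-\frac{k t^{2}}{2}\Bigr)
  && (\eta \leq 2/k), \\
t \asymp a(k) = k^{-1/2+\varepsilon}
  &\;\Longrightarrow\; k t^{2} \asymp k^{2\varepsilon},
  && \text{so the bound is } \exp\bigl(-c\,k^{2\varepsilon}\bigr) \text{ for some } c>0.
\end{align*}
```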

Taking together (51), (54) and (55) yields

A sufficient lower bound on \(\widehat{\beta }_{k}\) follows from the inequality

Plugging in the expression for \(a(k)\) yields

At last, combining (56) with (57) leads to

and the result of Theorem 4 follows immediately. □

3.5 A Self-Similar Policy

Next we construct a self-similar policy that approaches optimality more closely. Recall that a selection policy is self-similar if it chooses the observation of size \(x\) if and only if

Let \(\widetilde{\beta }_{k}\) be the value function of such a strategy; then, decomposing by the first arrival yields

Computing the integral and rearranging

This is an inhomogeneous linear recursion, which can be solved explicitly in terms of \(\widetilde{h}_{k}\)’s by the method of variation of constants.
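As a numerical illustration, a recursion of this type can be iterated directly. The sketch below assumes that the first-arrival decomposition gives \(\widetilde{\beta }_{k} = 1/\widetilde{h}_{k} + \widetilde{\beta }_{k-1}\,(-\log (1-\widetilde{h}_{k}))/\widetilde{h}_{k}\) with \(\widetilde{\beta }_{1}=1\), using the scaling that selection from \([x,1]\) takes \(1/(1-x)\) times as long as from \([0,1]\). The indexing convention, the function name `self_similar_values` and the stand-in thresholds \(2/(k+1)\) are all assumptions made for illustration and need not coincide with the equations of the paper.

```python
import math


def self_similar_values(k_max, threshold=lambda k: 2.0 / (k + 1)):
    """Iterate beta_k = 1/h_k + ratio_k * beta_{k-1}, ratio_k = -log(1 - h_k) / h_k.

    Both the exact form of the recursion and the default thresholds are
    illustrative assumptions, not the paper's equations.
    Returns a list with values[k] = beta_k for 1 <= k <= k_max (values[0] = 0).
    """
    values = [0.0, 1.0]  # beta_0 = 0 (nothing to select), beta_1 = 1
    for k in range(2, k_max + 1):
        h = threshold(k)
        if not 0.0 < h < 1.0:
            raise ValueError("thresholds must lie strictly between 0 and 1")
        ratio = -math.log1p(-h) / h  # average of 1/(1 - x) over x uniform on [0, h]
        values.append(1.0 / h + ratio * values[-1])
    return values


if __name__ == "__main__":
    beta = self_similar_values(1000)
    for k in (10, 100, 1000):
        # Ratio of the computed value to the leading term k^2 / 2.
        print(k, beta[k] / (k * k / 2))
```

Passing a different `threshold` function allows other self-similar rules to be compared on the same footing.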

Introduce a self-similar suboptimal selection policy with thresholds

Note that \(\widetilde{h}_{k}<1\) for \(k>1\), thus \(\widetilde{\beta }_{k}<\infty \) for all \(k\). The recursion defining the value functions \(\widetilde{\beta }_{k}\) becomes

where

The homogeneous equation (59) has the general solution of the form

Taking two terms in the expansion of the logarithm we get

which readily implies

for some \(c>0\). We see that \(y_{k}\) is about linear in the initial value \(y_{1}\). Likewise, because the general solution is the sum of a particular solution and the general solution to the homogeneous equation, if we replace the initial value \(\widetilde{\beta }_{1}=1\) in the inhomogeneous equation by \(\widetilde{\beta }_{1}+\theta \), the corresponding solution will change by about \(\theta c k\).
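The linear growth of the homogeneous solution can be seen from a short computation. It assumes that \(a_{j} = -\log (1-\widetilde{h}_{j})/\widetilde{h}_{j}\) and \(\widetilde{h}_{j} = 2/j + O(j^{-2}\log j)\), possibly up to a shift of index; both are assumptions made here for illustration, since the corresponding displays are not reproduced in this section. The starting index \(j_{0}\) is any fixed index from which \(a_{j}\) is finite.

```latex
\begin{align*}
a_j &= 1 + \frac{\widetilde{h}_j}{2} + O\bigl(\widetilde{h}_j^{2}\bigr)
     = 1 + \frac{1}{j} + O\bigl(j^{-2}\log j\bigr), \\
\log \prod_{j=j_0}^{k-1} a_j
    &= \sum_{j=j_0}^{k-1} \Bigl(\frac{1}{j} + O\bigl(j^{-2}\log j\bigr)\Bigr)
     = \log k + c_0 + o(1), \\
y_k &= y_{j_0} \prod_{j=j_0}^{k-1} a_j \sim c\,k\,y_{j_0}, \qquad c = e^{c_0} > 0 .
\end{align*}
```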

On the other hand, equation (59) has the monotonicity properties needed to apply the asymptotic comparison method (since \(a_{k}>0\)). Checking that a test function satisfies the appropriate inequality for \(k>k_{0}\), we adjust the initial value (resulting in the \(O(k)\) deflection) for this \(k_{0}\) to apply comparison in the already familiar way.

The comparison lemma adapted for recursion (59) states that if a sequence \((y_{k})\) is such that \(y_{k+1} > a_{k} y_{k} + b_{k}\), then \(\widetilde{\beta }_{k}-y_{k}< ck\), and vice versa.

Following the usual procedure, we choose test functions of the form

The computation consists of three successive refinements, but we drop the explicit calculation. Matching coefficients and observing that the last term of \(y_{k}\) is of order \(o(k)\), we obtain

This result, together with the expansion (48), allows us to obtain the following theorem, which is the concluding result of this paper.

Theorem 5

The self-similar strategy with threshold functions (58) has the value function \(\widetilde{\beta }_{k}\) satisfying

4 Concluding Remarks

In this paper, we studied two classical sequential selection problems initiated by Samuels and Steele [20] and Arlotto et al. [3]. To refine the asymptotic expansions of the respective value functions, we developed a method of approximating solutions to difference equations satisfying certain monotonicity criteria. This ‘asymptotic comparison’ method, as we called it, allowed us to methodically obtain finer asymptotics of the solution to the optimality equation by bounding it from above and below with suitable test functions. In fact, we believe this method to be applicable to a wider class of value function recursions. In particular, the method could be adapted to improve the value function asymptotics in the closely related online bin-packing problem [11], where only the principal term is currently known. Theorem 1 holds for the special case of the bin-packing problem with uniform weights and a unit-sized bin. This suggests a logarithmic term in the expansion for the general case too, which ties in nicely with the logarithmic regret bound derived by Arlotto and Xie [1].

Although this paper achieves high precision in estimating the mean length \(v_{n}\) in the longest increasing subsequence problem, there are several possibilities to improve the result. For example, one may prove the convergence of the \(O(1)\) term in the expansion (4) to a constant. With this settled, it may be possible to derive a refined expansion of the variance \({\mathrm{Var}}\, L_{n}(\boldsymbol{\tau ^{*}})\), as was demonstrated by Gnedin and Seksenbayev [14] for the poissonised variant of the problem. Moreover, building a direct bridge connecting the results in the classical and the poissonised problems remains an open problem.

References

[1] Arlotto, A., Xie, X.: Logarithmic regret in the dynamic and stochastic knapsack problem. Stoch. Syst. 10(2), 170–191 (2020)

[2] Arlotto, A., Nguyen, V.V., Steele, J.M.: Optimal online selection of a monotone subsequence: a central limit theorem. Stoch. Process. Appl. 125, 3596–3622 (2015)

[3] Arlotto, A., Mossel, E., Steele, J.M.: Quickest online selection of an increasing subsequence of specified size. Random Struct. Algorithms 49, 235–252 (2016)

[4] Arlotto, A., Wei, Y., Xie, X.: A O(log n)-optimal policy for the online selection of a monotone subsequence from a random sample. Random Struct. Algorithms 52, 41–53 (2018)

[5] Baik, J., Deift, P., Johansson, K.: On the distribution of the length of the longest increasing subsequence of random permutations. J. Am. Math. Soc. 12, 1119–1178 (1999)

[6] Baryshnikov, Y., Gnedin, A.: Sequential selection of an increasing sequence from a multidimensional random sample. Ann. Appl. Probab. 10, 258–267 (2000)

[7] Bentkus, V.: On Hoeffding’s inequalities. Ann. Probab. 32, 1650–1673 (2004)

[8] Bruss, F.T., Delbaen, F.: A central limit theorem for the optimal selection process for monotone subsequences of maximum expected length. Stoch. Process. Appl. 114, 287–311 (2004)

[9] Bruss, F.T., Robertson, J.B.: Wald’s lemma for sums of order statistics of i.i.d. random variables. Adv. Appl. Probab. 23(3), 612–623 (1991)

[10] Chen, R., Nair, V., Odlyzko, A., Shepp, L., Vardi, Y.: Optimal sequential selection of n random variables under a constraint. J. Appl. Probab. 21(3), 537–547 (1984)

[11] Coffman, E.G., Flatto, L., Weber, R.R.: Optimal selection of stochastic intervals under a sum constraint. Adv. Appl. Probab. 19, 454–473 (1987)

[12] De Bruijn, N.G.: Asymptotic Methods in Analysis. Dover, New York (1981)

[13] Gnedin, A.: Sequential selection of an increasing subsequence from a sample of random size. J. Appl. Probab. 36(4), 1074–1085 (1999)

[14] Gnedin, A., Seksenbayev, A.: Asymptotics and renewal approximation in the online selection of increasing subsequence (2019). ArXiv e-print, arXiv:1904.11213

[15] Mallows, C.L., Nair, V.N., Shepp, L.A., Vardi, Y.: Optimal sequential selection of optical fibers and secretaries. Math. Oper. Res. 10, 709–715 (1985)

[16] Markushevich, A.I.: Theory of Functions of a Complex Variable: Volume III. Am. Math. Soc., Providence (2005)

[17] Peng, P., Steele, M.: Sequential selection of a monotone sequence from a random permutation. Proc. Am. Math. Soc. 144, 4973–4982 (2016)

[18] Rhee, W., Talagrand, M.: A note on the selection of random variables under a sum constraint. J. Appl. Probab. 29(4), 919–923 (1991)

[19] Romik, D.: The Surprising Mathematics of Longest Increasing Subsequences. Cambridge University Press, Cambridge (2015)

[20] Samuels, S.M., Steele, J.M.: Optimal sequential selection of a monotone sequence from a random sample. Ann. Probab. 9(6), 937–947 (1981)

Acknowledgements

The author thanks the anonymous referees for their valuable feedback.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Seksenbayev, A. Asymptotic Expansions and Strategies in the Online Increasing Subsequence Problem. Acta Appl Math 174, 6 (2021). https://doi.org/10.1007/s10440-021-00424-3
