Abstract

Under a two-factor stochastic volatility jump (2FSVJ) model we obtain an exact decomposition formula for a plain vanilla option price and a second-order approximation of this formula, using Itô calculus techniques. The 2FSVJ model is a generalization of several models described in the literature such as Heston (Rev Financ Stud 6(2):327–343, 1993); Bates (Rev Financ Stud 9(1):69–107, 1996); Kou (Manag Sci 48(8):1086–1101, 2002); Christoffersen et al. (Manag Sci 55(12):1914–1932, 2009) models. Thus, the aim of this study is to extend some approximate pricing formulas described in the literature, like formulas in Alòs (Finance Stoch 16(3):403–422, 2012); Merino et al. (Int J Theor Appl Finance 21(08):1850052, 2018); Gulisashvili et al. (J Comput Finance 24(1), 2020), to pricing under the more general 2FSVJ model. Moreover, we provide numerical illustrations of our pricing method and its accuracy and computational advantage under double exponential and log-normal jumps. Numerically, our pricing method performs very well compared to the Fourier integral method. The performance is ideal for out-of-the-money options as well as for short maturities.

1 Introduction

In the quest to enhance option pricing models so that they reproduce the volatility smile or smirk observed in derivative markets, researchers such as Heston (1993) introduced stochastic volatility models to account for this stylized fact. Recall that in a stochastic volatility model, the price process under a risk-neutral measure is assumed to depend not on a constant volatility as in the Black–Scholes model, but on a stochastic volatility described by a second stochastic differential equation driven by a Brownian motion correlated with the Brownian motion that drives the price process. Later, to improve these models, jumps following a compound Poisson process were added to the price process, as in Bates (1996a, b). Currently, the Heston and Bates models (see Heston (1993) and Bates (1996a), respectively) are standard models regularly used in the financial industry. The Bates model is the Heston model with the addition of jumps in the price process described by a compound Poisson process with normal amplitudes. In Bates (2000), in order to overcome some inconsistencies of the Heston and Bates models in generating volatility surfaces similar to those observed in derivative markets, a second factor was added to the volatility equation, modeling separately the long-term and the short-term volatility evolution. This idea was later developed by several authors; see for example Christoffersen et al. (2009) and Andersen and Benzoni (2010).

Most of the previous models have the advantage of admitting exact semi-closed pricing formulas. However, these formulas involve numerical integration, which is computationally expensive, especially when calibrating models. See the recent papers Orzechowski (2020), Deng (2020), and Orzechowski (2021) for discussions of the efficiency of different methods to compute these formulas approximately. The last two papers cover the 2FSVJ model and, in fact, Deng (2020) extends the 2FSVJ model by including jumps in the volatility equations.

In general, the need for fast option pricing has driven, in recent years, the search for closed approximate formulas. A different line in this direction is the one started by Alòs (2012), who derived an exact decomposition of an option price in terms of volatility and correlation in the case of the Heston model, which can be well approximated by an easy-to-manage closed approximate formula. In this approach, the problem is not how to do fast numerical integration in the closed price formula but to obtain another type of approximate formula based on a Taylor-type decomposition. This point of view is interesting not only from the computational finance point of view, but also from an intrinsic point of view, since it shows the impact of correlation and volatility of volatility on option pricing.

The ideas in Alòs (2012) were exploited in Alòs et al. (2015) to develop an alternative method for fast calibration of the Heston model on the basis of a market price surface. This approximate formula for the Heston model was improved in terms of accuracy in Gulisashvili et al. (2020). Moreover, the same ideas were extended beyond the Heston model in several papers. In Merino and Vives (2015) the decomposition formula was extended to general stochastic volatility models without jumps, in Merino and Vives (2017) stochastic local volatility and spot-dependent models were considered, and in Merino et al. (2018) the case of the Bates model was treated. Recently, in Merino et al. (2021), similar results for rough Volterra stochastic volatility models have been obtained.

It is also important to comment on how this line of research compares with alternative methodologies in terms of accuracy and computational efficiency in pricing derivatives. In Alòs (2012), results are compared with another approximate formula developed by E. Benhamou, E. Gobet and M. Miri based on Malliavin calculus techniques; see Benhamou et al. (2010) and the references therein. In Alòs et al. (2015), accuracy and computational efficiency are compared with results in Forde et al. (2011) based on an alternative closed-form approximate formula. In Merino et al. (2018), one of the main references for the present paper, the accuracy and computational efficiency of the obtained approximate formula for the Bates model are compared with transform pricing methods based on a semi-closed pricing formula. Concretely, the new formula is compared with the Fourier transform based pricing formula used in Baustian et al. (2017), resulting in a three times faster method with similar accuracy. In summary, approximate formulas based on the mentioned decomposition formula, beyond their advantages in terms of computational efficiency, make it possible to understand the key terms contributing to the option fair value and to infer parametric approximations to the implied volatility surface.

In the present paper, in line with the previous papers mentioned above, the goal is to obtain a decomposition formula and a closed approximate option pricing formula for a two-factor Heston–Kou 2FSVJ model, as described in Bates (2000) and Christoffersen et al. (2009). Our study adds to the existing literature on three fronts. Firstly, we consider a two-factor model which, to the best of our knowledge, has not been studied in the context of the mentioned decomposition formula. Secondly, we obtain a second-order formula as in Gulisashvili et al. (2020), while most research in this line obtains first-order formulae only. Lastly, in addition to log-normal jumps, double exponential jumps as in Kou (2002) and Gulisashvili and Vives (2012) are considered, and in this sense this is a generalization of Merino et al. (2018). Compared with the Fourier integral method, our formulas are faster to evaluate.

The rest of the paper is divided as follows: in Sect. 2 we introduce the model and outline some key concepts and assumptions. In Sect. 3 the generic decomposition formula is obtained. In Sect. 4 we derive the first and second-order approximate formulae. Section 5 describes the numerical experiments and results while Sect. 6 outlines the conclusions of our research.

2 The model

Assume we have an asset \(S:=\{S_{t}, t\in [0,T]\}\) described by the SDE

under a risk-neutral probability measure, where \((B_{i,t})_{t\in [0,T]}\) and \((W_{i,t})_{t\in [0,T]}\) are mutually independent Wiener processes for \(i=1,2\). The i.i.d. jumps \((Z_{i})_{i\in \mathbb {N}}\) have a known distribution and are independent of the Poisson process \(N_{t}\) and the Wiener processes.
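For orientation, the dynamics can be sketched in log-price form as follows. This is a hedged reconstruction rather than a quotation of the model equations: it assumes square-root (Heston-type) variance factors \(Y_{i,t}\) with mean-reversion speed \(\kappa _i\), long-run level \(\theta _i\), vol-of-vol \(\nu _i\) and correlation \(\rho _i\), a Poisson process with constant intensity \(\lambda\), and the symbol \({\bar{k}}\) for the jump compensator, which is introduced here only for illustration.

$$\begin{aligned} dX_{t}&=\left (r-\lambda {\bar{k}}-\frac{1}{2}\left (Y_{1,t}+Y_{2,t}\right )\right )dt+\sum _{i=1}^{2}\sqrt{Y_{i,t}}\left (\rho _{i}dW_{i,t}+\sqrt{1-\rho _{i}^{2}}\,dB_{i,t}\right )+d\left (\sum _{j=1}^{N_{t}}Z_{j}\right ),\\ dY_{i,t}&=\kappa _{i}\left (\theta _{i}-Y_{i,t}\right )dt+\nu _{i}\sqrt{Y_{i,t}}\,dW_{i,t},\quad i=1,2,\qquad {\bar{k}}:=\mathbb {E}\left [e^{Z_{1}}\right ]-1. \end{aligned}$$

Under these assumptions, \(X_t=\ln (S_t)\), each variance factor is correlated with the price only through its own \(W_{i}\), and the term \(-\lambda {\bar{k}}\) keeps the discounted price a martingale.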

In order to compute the decomposition formula we need a version of the variance processes suitable for our computations. We use an alternative adapted specification that is suitable for Itô calculus, that is, the expected future average variance defined as

where \(\mathbb {E}_{t}\) denotes the conditional expectation with respect to the complete natural filtration generated by the five processes involved in the model.
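As an illustration of the expected future average variance, the following minimal sketch assumes that each factor is a square-root (CIR) process, so that \(\mathbb {E}_{t}[Y_{i,s}]=\theta _i+(Y_{i,t}-\theta _i)e^{-\kappa _i(s-t)}\), and that \(V_{i,t}\) is the time average of this conditional mean over [t, T]. The function names are hypothetical and the parameter values in the usage note are illustrative only.

```python
import math


def psi(t, T, kappa):
    """Assumed form psi(t) = (1 - exp(-kappa (T - t))) / kappa."""
    return (1.0 - math.exp(-kappa * (T - t))) / kappa


def expected_variance(s, t, y_t, kappa, theta):
    """Conditional mean E_t[Y_s] of a CIR factor started at y_t at time t."""
    return theta + (y_t - theta) * math.exp(-kappa * (s - t))


def avg_future_variance(t, T, y_t, kappa, theta):
    """Closed form of (1 / (T - t)) * int_t^T E_t[Y_s] ds under the CIR assumption."""
    return theta + (y_t - theta) * psi(t, T, kappa) / (T - t)


def avg_future_variance_quadrature(t, T, y_t, kappa, theta, n=2000):
    """Same quantity via the composite trapezoidal rule, as a cross-check."""
    h = (T - t) / n
    total = 0.5 * (expected_variance(t, t, y_t, kappa, theta)
                   + expected_variance(T, t, y_t, kappa, theta))
    for j in range(1, n):
        total += expected_variance(t + j * h, t, y_t, kappa, theta)
    return total * h / (T - t)
```

For example, `avg_future_variance(0.0, 1.0, 0.03, 2.0, 0.04)` lies between the spot variance 0.03 and the long-run level 0.04. In the two-factor setting, the same computation would be done once per factor and the two results added.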

The following lemma will be useful in the remainder of the paper.

Lemma 1

The process \(V_{i,t}\) satisfies the differential form

where

is a martingale. In particular,

where

Proof

Integrating (2) and (3) on [t, s] and taking conditional expectations yields:

and

Transforming the second expression via an integrating factor we get the following differential equation:

Integrating and multiplying by \(e^{-\kappa _{i}s}\) reveals that

Integrating the above on [t, T] yields

Now, from the definition of \(V_{i,t}\)

where

Then, the differential form of \(V_{i,t}\) follows.

In relation with the expression of \(dM_{i,t}\), note that using (5) we have

and

Substituting the expression of \(dY_{i,t}\), the differential form of \(M_{i,t},\) (4), follows. \(\square\)

Remark 1

Recall that in the two-factor Black–Scholes model, we transform the diffusion term as follows:

where

and

Thus, taking the above remark into account and letting \(X_{t}=\ln (S_{t})\) we have

where

and

The process \({{\overline{Y}}}_{t}\) has an expected future average variance whose differential form

can easily be derived since it is a linear combination of independent processes. Here,

and

3 Decomposition formula

Having defined the terms and processes related to the volatility, we recall some notation related to the Black–Scholes formula. Let B(t, x, y) be the Black–Scholes function that gives the plain vanilla Black–Scholes option price with variance y, log price x, and maturity T:

where N is the standard normal cumulative distribution function and

Recall that \(\mathcal {L}_{y}B(t,x,y)=0\) where \({{\mathcal {L}}}_y\) is the Black–Scholes operator
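The Black–Scholes function can be sketched in code as follows. The sketch assumes a European call with strike `K` and constant rate `r` (both passed explicitly here), takes y as a variance, and assumes that the operator has the usual log-price form \(\mathcal {L}_{y}=\partial _t+\frac{1}{2}y\partial ^2_{xx}+(r-\frac{1}{2}y)\partial _x-r\). The function names are hypothetical.

```python
import math


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bs_call(t, x, y, K, r, T):
    """Black-Scholes call price with log price x and variance y (y = sigma^2)."""
    tau = T - t
    if tau <= 0.0:
        # At maturity the price is the payoff.
        return max(math.exp(x) - K, 0.0)
    sd = math.sqrt(y * tau)
    d_plus = (x - math.log(K) + (r + 0.5 * y) * tau) / sd
    d_minus = d_plus - sd
    return math.exp(x) * norm_cdf(d_plus) - K * math.exp(-r * tau) * norm_cdf(d_minus)
```

With spot 100, strike 100, rate 0.05, variance 0.04 and one year to maturity, the function returns approximately 10.4506, the familiar textbook value. A finite-difference evaluation of the operator above on `bs_call` gives a residual close to zero, which is one way to check the sign conventions.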

We begin by obtaining a generic decomposition formula which is instrumental throughout our discussion. It will be particularly useful in deriving the approximate versions of the decomposition formula as discussed in the “Appendix”.

Lemma 2

Let

be the continuous part of \(X_{t}\), and let the function

satisfy

Suppose that \(G_{t}\) is a continuous semi-martingale adapted to the complete natural filtration generated by \(W_{1,t}\) and \(W_{2,t}.\) Then, the following generic decomposition formula holds:

where \(\Lambda =\partial _x\), \(\Gamma :=\partial ^2_{xx}-\partial _x.\)

Proof

Refer to Theorem 3.1 in Merino et al. (2018). \(\square\)

Remark 2

Note that in Lemma 2 the function A is a generic function. Moreover, condition (7), which is satisfied by the Black–Scholes function, is used only to simplify terms in the decomposition. The proof is based on the Itô formula. Therefore, the methodology used in this paper is completely general. Properties of the Black–Scholes function and of any concrete stochastic volatility model can be useful to obtain some simplifications, but the ideas behind the decomposition formula are general and can be developed for any stochastic volatility model and any function.

Corollary 1

Assuming that \(A(t,x,y)=B(t,x,y)\) and \(G\equiv 1\) in Lemma 2, we have

Remark 3

Though this formula can be written similarly to the one derived by Merino et al. (2018), it is different due to the two driving stochastic volatility terms

Hence, our decomposition formula can be resolved into five terms instead of three terms.

In Merton (1976) and Merino et al. (2018) the treatment of a jump model problem is reduced to a treatment of a continuous case problem by conditioning on the number of jumps. Assuming that we observe k jumps in the time period [t, T] we have

where \(L_k=\sum _{i=1}^{k} Z_{i}.\)

From now on we will write for simplicity \(D_s:=X_t+\widehat{X}_{s}-\widehat{X}_{t}\) for any \(s\ge t.\) Note that \(D_t=X_t.\) Define moreover

Thus, it follows that we can set

where in general, for any positive \(\eta ,\)

and then,

is the probability of observing k jumps in [t, T].
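For reference, if the Poisson process \(N_t\) has constant intensity \(\lambda\) (an assumption; \(\lambda\) is the symbol used for the jump intensity in Sect. 5), the weights take the standard Poisson form

$$\begin{aligned} p_{k}(\eta )=e^{-\eta }\frac{\eta ^{k}}{k!},\quad k=0,1,2,\ldots ,\qquad \text {evaluated at}\quad \eta =\lambda (T-t). \end{aligned}$$

These weights sum to one over k, so conditioning on the number of jumps expresses the price as a Poisson-weighted series of continuous-case prices.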

This enables us to deal with our problem in a continuous setting. Following that, we obtain the decomposition of the 2FSVJ model.

Applying Lemma 2 recursively to \(A=H_k\) and \(G\equiv 1\) we obtain the following corollary:

Corollary 2

The price of the plain vanilla European call option is given as

4 Approximate formulae

In the study of decomposition formulas, it has been found that formulas like (8) are not easy to compute in their present form. But they allow building closed-form approximation formulas that are computationally tractable.

The idea is to freeze the integrands in formula (8), to compute the difference between the original and the frozen approximate formulas, and decompose this error formula in a series of decreasing terms. Adding to the approximate formula terms of the error formula up to a certain order allows us to obtain good approximations; see Gulisashvili et al. (2020).

Freezing the integrands of the formula in Corollary 2 gives

where \(\epsilon (T-t)\) denotes an error term that has to be estimated.

From now on we will denote

and

Using this notation, the first naive version of the approximate formula is given by

Before giving precise approximate formulas, we recall two lemmas:

Lemma 3

(Alòs 2012) For any \(n\ge 0\) and \(0\le t\le T,\) there exists a constant C(n) such that

Lemma 4

(Alòs et al. 2015) The following relations hold:

1. $$\begin{aligned} \psi _{i}(t)\le \frac{1}{\kappa _i}. \end{aligned}$$

2. $$\begin{aligned} \int _{t}^{T}{ \mathbb {E}}_{t}\left[ {Y}_{i,s}\right] ds\ge Y_{i,t}\psi _{i}(t). \end{aligned}$$

3. $$\begin{aligned} \int _{t}^{T}{ \mathbb {E}}_{t}\left[ {Y}_{i,s}\right] ds\ge \frac{\theta _{i}\kappa _{i}}{2}\psi ^{2}_i(t). \end{aligned}$$

4. $$\begin{aligned} R_{i,t}=\frac{\nu _{i}^{2}}{8}\int _{t}^{T}\mathbb {E}_t [Y_{i,u}]\psi _{i}^{2}(u)du. \end{aligned}$$

5. $$\begin{aligned} U_{i,t}=\frac{\rho _{i}\nu _{i}}{2}\int _{t}^{T}\psi _{i}(u)\mathbb {E}_t \left[ Y_{i,u}\right] du. \end{aligned}$$

6. $$\begin{aligned} Q_{i,t}=\frac{\rho _{i}^2\nu _{i}^2}{2}\int _{t}^{T}\mathbb {E}_t \left[ Y_{i,u}\right] \left (\int _{u}^{T}e^{-\kappa _{i}(z-u)}\psi _{i}(z)dz\right )du. \end{aligned}$$

7. $$\begin{aligned} dR_{i,t}=\frac{\nu _{i}^{3}}{8}\left (\int _{t}^{T}e^{-\kappa _{i}(z-t)}\psi _{i}(z)^2dz\right ) \sqrt{Y_{i,t}}dW_{i,t}-\frac{\nu _{i}^2}{8}\psi _{i}^{2}(t)Y_{i,t}dt. \end{aligned}$$

8. $$\begin{aligned} dU_{i,t}=\frac{\rho _i\nu _{i}^{2}}{2}\left (\int _{t}^{T}e^{-\kappa _{i}(z-t)}\psi _{i}(z)dz\right ) \sqrt{Y_{i,t}}dW_{i,t}-\frac{\rho _i\nu _{i}}{2}\psi _{i}(t)Y_{i,t}dt. \end{aligned}$$

9. $$\begin{aligned} dQ_{i,t}={}&\frac{\rho _i^2\nu _{i}^{3}}{2}\int _{t}^{T}\left [e^{-\kappa _{i}(u-t)}\left (\int _{u}^{T}e^{-\kappa _{i}(z-u)}\psi _{i}(z)dz\right )du\right ] \sqrt{Y_{i,t}}dW_{i,t}\\ &-\frac{\rho _{i}^2\nu _{i}^2}{2}\left (\int _{t}^{T}e^{-\kappa _{i}(z-t)}\psi _{i}(z)dz\right )Y_{i,t}dt. \end{aligned}$$
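The first five relations of Lemma 4 can be explored numerically. The sketch below assumes \(\psi _{i}(t)=(1-e^{-\kappa _{i}(T-t)})/\kappa _{i}\) and a square-root factor with conditional mean \(\mathbb {E}_{t}[Y_{i,u}]=\theta _i+(Y_{i,t}-\theta _i)e^{-\kappa _i(u-t)}\); both are assumptions made here for illustration, as are the function names and the parameter values in the usage note. Integrals are evaluated with a plain trapezoidal rule.

```python
import math


def psi(u, T, kappa):
    """Assumed form psi(u) = (1 - exp(-kappa (T - u))) / kappa."""
    return (1.0 - math.exp(-kappa * (T - u))) / kappa


def expected_variance(u, t, y_t, kappa, theta):
    """Assumed CIR conditional mean E_t[Y_u]."""
    return theta + (y_t - theta) * math.exp(-kappa * (u - t))


def trapezoid(f, a, b, n=4000):
    """Composite trapezoidal rule for int_a^b f(u) du."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for j in range(1, n):
        total += f(a + j * h)
    return total * h


def integrated_variance(t, T, y_t, kappa, theta):
    """int_t^T E_t[Y_s] ds, the left-hand side of relations 2 and 3."""
    return trapezoid(lambda u: expected_variance(u, t, y_t, kappa, theta), t, T)


def R_term(t, T, y_t, kappa, theta, nu):
    """Relation 4: R = nu^2 / 8 * int_t^T E_t[Y_u] psi(u)^2 du."""
    g = lambda u: expected_variance(u, t, y_t, kappa, theta) * psi(u, T, kappa) ** 2
    return nu ** 2 / 8.0 * trapezoid(g, t, T)


def U_term(t, T, y_t, kappa, theta, nu, rho):
    """Relation 5: U = rho nu / 2 * int_t^T psi(u) E_t[Y_u] du."""
    g = lambda u: psi(u, T, kappa) * expected_variance(u, t, y_t, kappa, theta)
    return rho * nu / 2.0 * trapezoid(g, t, T)
```

With, say, `kappa=2.0`, `theta=0.04`, `y_t=0.03`, `nu=0.3` and `rho=-0.7` over one year, `R_term` is positive while `U_term` takes the sign of the correlation, which matches the roles of the two terms as vol-of-vol and correlation corrections.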

Following Gulisashvili et al. (2020) we derive higher order approximations by applying the generic decomposition formula in Lemma 2 for appropriate choices of \(A(t,X_t,V_t)\) and \(G_t\) as follows. Under this approach, it is necessary to evaluate the respective error bounds.

Proposition 1

We have the following approximate formula:

where

where \(C(\theta _1,\theta _2,\kappa _1,\kappa _2)\) is a constant that depends only on parameters \(\theta _i\) and \(\kappa _i\) and \(\nu =\max \{\nu _1,\nu _2\}.\)

Proof

See the “Appendix”. \(\square\)

Remark 4

Note that this approximate option price is the Black–Scholes price plus appropriate correction terms. It is worth mentioning that this formula provides significant generality within the framework of the 2FSVJ model. Furthermore, it encompasses and extends the formulas presented in the references cited, namely Heston (1993), Bates (1996a), Christoffersen et al. (2009), Merino et al. (2018), as well as some of the results obtained in Gulisashvili et al. (2020), which can be considered specific instances of our more comprehensive formula.

While the above approximate formula is a second-order one, we can obtain the first-order version given in the following corollary.

Corollary 3

We have the following approximate formula:

where

where \(C(\theta _1, \theta _2, \kappa _1, \kappa _2)\) is a constant that depends only on \(\theta _1, \theta _2, \kappa _1, \kappa _2.\)

Proof

See the “Appendix”. \(\square\)

Remark 5

1. Expanding the scope of the approximate options pricing formula to include other types of options, such as barrier or American options, presents great potential. However, it is important to note that the decomposition results are derived from the Black–Scholes formula, which is specifically applicable to European options. Therefore, extending these decomposition formulae to include additional option types requires extensive investigation and comprehensive studies to establish a robust framework. Such explorations have the potential to open up new avenues for research and provide valuable insights into the pricing and analysis of a broader range of option types.

2. Incorporating real-data examples would not only enhance the credibility of the research but also offer valuable contributions to the field. Nevertheless, there are numerous challenges that contribute to the difficulty in obtaining real market data examples for the application of option pricing formulas, such as our decomposition formula. The challenges include limited availability, market complexity, and potential deviations from model assumptions, such as risk-neutral assumptions. It is worth noting that the lack of real-data examples presents an opportunity for new directions of future research to explore and provide valuable insights into the practical application and performance of the formula using real market data.

5 Numerical computations

Though our focus is on a class of Heston–Kou-like models with two factors, this model is general enough to cover other jump structures studied in the literature. Thus, henceforth we shall assume that jumps are defined by the compound Poisson process

where \(Z_i\) is a double exponential random variable whose distribution is given by

where \(\eta _1>1\), \(\eta _2>0\), \(p,q\in (0,1)\) such that \(p+q=1\). Assuming that k jumps are recorded then the convolution of the law of k jumps is

where

for all \(1 \le j \le k-1\), and

for all \(1 \le j \le k-1\) with \(P_{k, k} = p^k\) and \(Q_{k, k} = q^k\). See Kou (2002) and Gulisashvili and Vives (2012).
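To make the single-jump law concrete, the sketch below assumes the standard double exponential density of Kou (2002), \(f(u)=p\eta _1e^{-\eta _1u}\) for \(u\ge 0\) and \(f(u)=q\eta _2e^{\eta _2u}\) for \(u<0\), and checks two of its properties numerically: that it integrates to one, and that \(\mathbb {E}[e^{Z}]=p\eta _1/(\eta _1-1)+q\eta _2/(\eta _2+1)\), which is finite only when \(\eta _1>1\). Function names and the parameter values in the usage note are illustrative assumptions; the integration range \(\pm 30.5\) simply mirrors the truncation level used for the integral (9).

```python
import math


def kou_density(u, p, eta1, eta2):
    """Double exponential density: upward jumps with prob. p, downward with q = 1 - p."""
    if u >= 0.0:
        return p * eta1 * math.exp(-eta1 * u)
    return (1.0 - p) * eta2 * math.exp(eta2 * u)


def mean_exp_jump(p, eta1, eta2):
    """Closed form of E[exp(Z)]; requires eta1 > 1 for the upward tail to be integrable."""
    if eta1 <= 1.0:
        raise ValueError("eta1 must exceed 1 for E[exp(Z)] to be finite")
    return p * eta1 / (eta1 - 1.0) + (1.0 - p) * eta2 / (eta2 + 1.0)


def midpoint(f, a, b, n=20000):
    """Composite midpoint rule for int_a^b f(u) du."""
    h = (b - a) / n
    return h * sum(f(a + (j + 0.5) * h) for j in range(n))


def integrate_truncated(f, cutoff=30.5):
    """Integrate over [-cutoff, cutoff], split at 0 where the density has a kink."""
    return midpoint(f, -cutoff, 0.0) + midpoint(f, 0.0, cutoff)


def total_mass(p, eta1, eta2):
    """Numerical integral of the density over the truncated range."""
    return integrate_truncated(lambda u: kou_density(u, p, eta1, eta2))


def numeric_mean_exp_jump(p, eta1, eta2):
    """Numerical counterpart of mean_exp_jump over the truncated range."""
    return integrate_truncated(lambda u: math.exp(u) * kou_density(u, p, eta1, eta2))
```

For instance, with `p=0.4`, `eta1=10.0`, `eta2=5.0`, `total_mass` is close to one and `numeric_mean_exp_jump` agrees with `mean_exp_jump` up to the quadrature error.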

Consequently,

Then, we want to compute

And this is equal to

where

with

and

To compute the integral (9) we truncate it at \(\pm 30.5.\) Additionally, we consider at most 150 jumps in total. The truncated sum approximates the full series well since its terms converge to zero very fast.

Besides the double-exponential jumps, we also consider the case where \((Z_i)\) are i.i.d. normal random variables with mean \(\mu _J\) and standard deviation \(\sigma _J\). In this case, see Merino et al. (2018),

where the modified risk-free rate \(r^* = r-\lambda (e^{\mu _J+\frac{\sigma _J^2}{2}}-1)+k\frac{\mu _J+\frac{\sigma _J^2}{2}}{(T-t)}\) is used.
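The role of the modified rate \(r^*\) can be illustrated with a simplified Merton-type series. The sketch below is not the paper's approximation formula: it assumes a constant (frozen) variance `y` in place of the two stochastic factors, conditions on k log-normal jumps, uses \(r^*\) to build the conditional forward price, adds \(k\sigma _J^2\) to the total variance, discounts at the original rate r, and weights each term by the Poisson probability of k jumps. The function names, the truncation at 60 terms and all parameter values are illustrative assumptions.

```python
import math


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def merton_call_frozen_variance(S, K, r, tau, y, lam, mu_j, sigma_j, n_terms=60):
    """Poisson-weighted sum of conditional call prices with log-normal jumps."""
    m = mu_j + 0.5 * sigma_j ** 2          # log of E[exp(Z)]
    kbar = math.exp(m) - 1.0               # jump compensator
    price = 0.0
    weight = math.exp(-lam * tau)          # Poisson probability of k = 0 jumps
    for k in range(n_terms):
        r_star = r - lam * kbar + k * m / tau      # modified rate given k jumps
        total_var = y * tau + k * sigma_j ** 2     # diffusion plus jump variance
        forward = S * math.exp(r_star * tau)       # conditional forward price
        sd = math.sqrt(total_var)
        d_plus = (math.log(forward / K) + 0.5 * total_var) / sd
        d_minus = d_plus - sd
        conditional = math.exp(-r * tau) * (forward * norm_cdf(d_plus) - K * norm_cdf(d_minus))
        price += weight * conditional
        weight *= lam * tau / (k + 1)      # recursion for the next Poisson weight
    return price
```

Setting `lam=0.0` recovers the plain Black–Scholes price, and letting the strike tend to zero returns the spot price, which is a convenient check that the compensator in \(r^*\) keeps the discounted price a martingale.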

The parameters used in our computations are obtained from Pacati et al. (2018) who consider a similar model with log-normal jumps. Unless otherwise stated, the parameters used are given in Table 1.

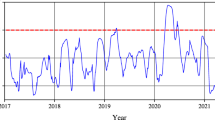

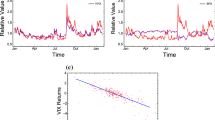

We compare the first-order and the second-order decomposition methods with the Fourier integral method based on Gil-Pelaez (1951) and find that the decomposition methods perform very well in relation to the Fourier integral method under both the log-normal and double exponential jumps. See Figs. 1, 2, 3 and 4. Note that the error is so small that the three option price plots for the Fourier integral (green), the first-order decomposition (blue), and the second-order decomposition (orange) cannot be distinguished by the naked eye. The first-order approximation indicates that the method performs well under out-of-the-money conditions. Moreover, we analyze the impact of time to maturity on the method performance in Figs. 5 and 6. Finally, in Figs. 7 and 8 we show the impact of the vol-of-vol on the pricing error for different strike prices and different jump regimes. Generally, our method behaves well for short-dated options. In addition, we find that the method is faster and more accurate for log-normal jumps than for double exponential jumps.

Additionally, to investigate the computational performance of our method we computed option prices for five different strikes and measured the average time taken. This experiment was repeated 1000 times and the results in Table 2 show that the decomposition is at least 20% faster than the Fourier integral method under log-normal jumps.

6 Conclusion

This paper investigates the valuation of European options under an enhanced model for the underlying asset prices. We consider a two-factor stochastic volatility jump (2FSVJ) model that includes stochastic volatility and jumps. A decomposition formula for the option price and first-order and second-order approximate formulae via Itô calculus techniques are obtained. Moreover, several numerical computations and illustrations are carried out, and they suggest that our method under double exponential and log-normal jumps offers computational gains. The results of this paper generalize the existing work in the literature in relation to the decomposition formula and its applications. As in the other cases cited in the introduction, the given approximate pricing formula is fast to compute and accurate enough.

References

Alòs E (2012) A decomposition formula for option prices in the Heston model and applications to option pricing approximation. Finance Stoch 16(3):403–422

Alòs E, De Santiago R, Vives J (2015) Calibration of stochastic volatility models via second-order approximation: the Heston case. Int J Theor Appl Finance 18(06):1550036

Andersen TG, Benzoni L (2010) Stochastic volatility. CREATES research paper

Bates DS (1996a) Jumps and stochastic volatility: exchange rate processes implicit in Deutsche mark options. Rev Financ Stud 9(1):69–107

Bates DS (1996b) Testing option pricing models. Handb Stat 14:567–611

Bates DS (2000) Post-’87 crash fears in the S&P 500 futures option market. J Econom 94(1–2):181–238

Baustian F, Mrázek M, Pospíšil J, Sobotka T (2017) Unifying pricing formula for several stochastic volatility models with jumps. Appl Stoch Models Bus Ind 33(4):422–442

Benhamou E, Gobet E, Miri M (2010) Time dependent Heston model. SIAM J Financ Math 1:289–325

Christoffersen P, Heston S, Jacobs K (2009) The shape and term structure of the index option smirk: why multifactor stochastic volatility models work so well. Manag Sci 55(12):1914–1932

Deng G (2020) Option pricing under two-factor stochastic volatility jump-diffusion model. Complexity 2020(Article ID 1960121)

Forde M, Jacquier A, Lee R (2011) The small-time and term structure of implied volatility under the Heston model. SIAM J Financ Math 3(1):690–708

Gil-Pelaez J (1951) Note on the inversion theorem. Biometrika 38(3–4):481–482

Gulisashvili A, Lagunas M, Merino R, Vives J (2020) High-order approximations to call option prices in the Heston model. J Comput Finance 24(1)

Gulisashvili A, Vives J (2012) Two sided estimates for distribution densities in models with jumps. In: Stochastic differential equations and processes, Springer proceedings in mathematics, vol 7, pp 239–254

Heston SL (1993) A closed-form solution for options with stochastic volatility with applications to bond and currency options. Rev Financ Stud 6(2):327–343

Kou SG (2002) A jump-diffusion model for option pricing. Manag Sci 48(8):1086–1101

Merino R, Pospíšil J, Sobotka T, Vives J (2018) Decomposition formula for jump diffusion models. Int J Theor Appl Finance 21(08):1850052

Merino R, Pospíšil J, Sobotka T, Sottinen T, Vives J (2021) Decomposition formula for rough Volterra stochastic volatility models. Int J Theor Appl Finance 24(02):2150008

Merino R, Vives J (2015) A generic decomposition formula for pricing vanilla options under stochastic volatility models. Int J Stoch Anal 2015

Merino R, Vives J (2017) Option price decomposition in spot-dependent volatility models and some applications. Int J Stoch Anal 2017

Merton RC (1976) Option pricing when underlying stock returns are discontinuous. J Financ Econ 3(1–2):125–144

Orzechowski A (2020) Pricing European options in selected stochastic volatility models. Quant Methods Econ XXI(3):145–156

Orzechowski A (2021) Pricing European options in the Heston and the double Heston models. Quant Methods Econ XXII(1):39–50

Pacati C, Pompa G, Renò R (2018) Smiling twice: the Heston++ model. J Bank Finance 96:185–206

Acknowledgements

We would like to thank the two anonymous reviewers for their valuable contributions through constructive comments which have improved the paper. Nevertheless, the usual disclaimer applies. The research of Josep Vives is partially financed by Spanish Grant PID2020-118339GB-100 (2021-2024).

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Contributions

All authors have contributed equally to all aspects of the submitted paper. The list of authors is in alphabetical order.

Appendices

Appendix 1: First order approximation

We consider the formula in Corollary 2:

and apply the generic formula in Lemma 2 for appropriate choices of A and G.

1.1 Term I.i

Consider

Let \(A=\Gamma ^{2}H_{k}\) and \(G_t=R_{i,t}.\) Then we have

1.2 Term II.i

Consider

Let \(A=\Lambda \Gamma H_{k}\) and \(G_t=U_{i,t}.\) Then we have

We look now at the error of approximating each term I.i and II.i.

1.3 Error of Term I.i

Let \(a_{it} = \sqrt{V_{it}(T-t)}\) for \(i=1,2\) and \(\overline{a}_{t} = \sqrt{\overline{V}_{t}(T-t)}.\) It is clear that

This fact will come in handy for the calculations below.

We have

and

Hence, we have

and

which simplifies to

Applying Lemma 4 again we find that

and using the fact that

it follows that

where \(C(\theta _1,\theta _2,\kappa _1,\kappa _2)\) is a constant that depends only on \(\theta _1,\theta _2,\kappa _1,\kappa _2.\)

1.4 Error of Term II.i

We have

and

Then,

and

and hence we have

and

Lastly,

Appendix 2: Second order approximation

The terms

and

are of order 2 and need to be expanded further to obtain a higher order of precision. Proceeding as before, we find that:

The fact that \(Q_{i,t}\) has a term \(\nu _i^2\), \(dM_{j,t}\) a term \(\nu _j\) and \(dQ_{i,t}\) a term \(\nu _i^3\) guarantees that

is of order \(\nu ^3\) where \(\nu :=\max \{\nu _1,\nu _2\}.\)

On the other hand,

Note that from the independence of \(W_1\) and \(W_2\), \(d[M_l,U_i U_j]_{s}\) is equal to \(U_{2,s} d[M_1,U_1]_{s}\) if \(l=1\) and equal to \(U_{1,s} d[M_2,U_2]_{s}\) if \(l=2\) and similarly for \(d[{W}_l,U_i U_j]_{s}.\)

As before, here \(U_i\) has a coefficient \(\nu _i\), \(dU_i\) coefficient \(\nu _i^2,\) and \(dM_i\) a coefficient \(\nu _i.\) Therefore, all terms are of order \(\nu ^3\) where \(\nu :=\max \{\nu _1,\nu _2\}.\)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

El-Khatib, Y., Makumbe, Z.S. & Vives, J. Approximate option pricing under a two-factor Heston–Kou stochastic volatility model. Comput Manag Sci 21, 3 (2024). https://doi.org/10.1007/s10287-023-00486-8

DOI: https://doi.org/10.1007/s10287-023-00486-8