Abstract

Robust model predictive control approaches and other applications lead to nonlinear optimization problems defined on (scenario) trees. We present structure-preserving Quasi-Newton update formulas as well as structured inertia correction techniques that allow these problems to be solved by interior-point methods with specialized KKT solvers for tree-structured optimization problems. The same type of KKT solvers could be used in active-set based SQP methods. The viability of our approach is demonstrated by two robust control problems.


1 Introduction

This paper addresses nonlinear optimization problems (NLPs) with an underlying tree topology. The prototypical examples are multistage stochastic optimization problems. These are computationally expensive because they involve some random process whose discretization yields a scenario tree that grows exponentially with the length of the planning horizon. Primal and dual decomposition methods are specially tailored to linear and mildly nonlinear convex multistage stochastic optimization problems. Moreover, they extend well to the mixed-integer case. See Birge and Louveaux (2011), Kall and Wallace (1994) for an overview of algorithmic approaches and of stochastic optimization in general. To tackle highly nonlinear convex and nonconvex NLPs, we consider interior-point and SQP methods. Interior-point methods (IPMs) are also efficient on linear or quadratic problems, while SQP methods extend well to mixed-integer problems. In both cases the key to efficiency is the algebraic structure of the arising KKT systems rather than the stochastic structure. Therefore, we allow arbitrary trees instead of only scenario trees. All methods mentioned above are amenable to parallelization due to the rich structure of the underlying tree.

In Steinbach (2002) and the references therein, the third author has developed suitable formulations and a sequential interior-point approach for convex tree-structured NLPs with polyhedral constraints, although with parallelization and with the fully nonlinear and nonconvex case in mind. Other early interior-point approaches for stochastic optimization include Berger et al. (1995), Carpenter et al. (1993), Czyzyk et al. (1995), Jessup et al. (1994), Schweitzer (1998). For more recent structure-exploiting parallel algorithms see, e.g., Blomvall (2003), Blomvall and Lindberg (2002), Gondzio and Grothey (2006, 2007, 2009), Lubin et al. (2012). Based on the dissertation (Hübner 2016), a massively distributed implementation of our algorithm has been presented in Hübner et al. (2017). Here we address the extension to fully nonlinear and nonconvex problems. In particular, we present proper NLP formulations, structure-preserving Quasi-Newton updates, and tailored inertia correction techniques. Although all presented techniques possess a straightforward distributed implementation, we will not address this in detail.

The paper is structured as follows. In Sect. 2 we briefly introduce our approach and extend the problem formulations and KKT systems of Steinbach (2002) to the general NLP case. Section 3 presents (partially) separable Quasi-Newton update formulas that preserve the problem-specific block structure. The proper specialization of standard inertia correction techniques to this structure is discussed in Sect. 4. In Sect. 5, finally, two case studies from robust process control that lead to multistage stochastic NLPs with ODE dynamics serve as proof of concept for our algorithmic techniques.

2 Tree-sparse optimization

In this paper, we consider the integrated modeling and solution framework for tree-structured convex NLPs from Steinbach (2002) that we refer to as “tree-sparse”. It consists of natural model formulations that have favorable regularity properties and block-level sparsity admitting \(O({|V|})\) KKT solution algorithms, where \({|V|}\) is the number of tree nodes. There are three standard forms that cover, respectively, the general case with no further structure and two more specialized control formulations with different stochastic interpretations. The NLPs that we study below have linearizations whose polyhedral constraints match precisely the structure considered in Steinbach (2002).

For the following, let \(V = \{0, \dots , N\}\) denote the node set of a tree rooted in 0. Given a node \(j \in V\), we denote the set of successors by S(j), the predecessor by \(i=\pi (j)\) (if \(j \ne 0\)), and the path to the root by \({\varPi }(j) = (j, \pi (j), \dotsc , 0)\). The level of j is the path length, i.e., \(t(j) = {|{\varPi }(j)|} - 1\). Finally, \(L_t\) stands for the set of level t nodes and L for the set of leaves. In a scenario tree we have \(L = L_T\) where T is the tree’s depth, and every node j has a probability \(p_j\) such that \(\sum _{j \in L_t} p_j = 1\) for every t.
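The tree notation can be made concrete with a small sketch. It assumes, purely for illustration, that the tree is stored as a predecessor array `pred` with `pred[0] = None` for the root; the function names are hypothetical and not taken from any tree-sparse library.

```python
from typing import Dict, List, Optional


def successors(pred: List[Optional[int]]) -> Dict[int, List[int]]:
    """Return S(j) for every node j, given the predecessor map pi."""
    succ: Dict[int, List[int]] = {j: [] for j in range(len(pred))}
    for j, i in enumerate(pred):
        if i is not None:
            succ[i].append(j)
    return succ


def path_to_root(pred: List[Optional[int]], j: int) -> List[int]:
    """Return Pi(j) = (j, pi(j), ..., 0)."""
    path = [j]
    while pred[path[-1]] is not None:
        path.append(pred[path[-1]])
    return path


def level(pred: List[Optional[int]], j: int) -> int:
    """Return t(j) = |Pi(j)| - 1."""
    return len(path_to_root(pred, j)) - 1


# Example: root 0 with successors 1 and 2; node 1 has successors 3 and 4.
pred = [None, 0, 0, 1, 1]
succ = successors(pred)
leaves = [j for j in succ if not succ[j]]  # the leaf set L
```

In this example the leaves sit on different levels, so it is an arbitrary tree rather than a scenario tree with \(L = L_T\).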

2.1 Tree-sparse NLPs

Consider a general NLP with equality constraints, range inequality constraints, and simple bounds in the form

We call the NLP tree-sparse if it satisfies certain separability properties. Given a tree with vertex set V and variable vector \(y = (y_j)_{j \in V}\), the objective \(\phi \) and range constraints \(c_{\mathcal {R}}\) have to be separable with respect to the node variables \(y_j\), and the equality constraints \(c_{\mathcal {E}}\) split into dynamic constraints with a Markov structure (\(y_j\) depends only on its predecessor \(y_i\)) and separable global constraints. We distinguish three variants of tree-sparse NLPs depending on the type of dynamic constraints: implicit tree-sparse NLPs have implicit dynamics while outgoing and incoming tree-sparse NLPs with \(y_j = (x_j, u_j)\) and \(y_j = (u_j, x_j)\), respectively, have explicit dynamics in control form where the current state \(x_j\) depends on \((x_i, u_i)\) or \((x_i, u_j)\). In the following, we state these three NLP variants without further explanation. All details can be found in Steinbach (2002).

The implicit tree-sparse NLP reads

the outgoing tree-sparse NLP is given by

and the incoming tree-sparse NLP reads

At the root, \(j = 0\), the preceding variable \(y_i\) is empty and the dynamic constraints reduce to respective initial conditions \(g_0(y_0) = h_0\), \(x_0 = h_0\), and \(x_0 = h_0(u_0)\).

In addition to global equality constraints one might also consider local equality constraints as in Steinbach (2002). In the above NLPs these would take the respective forms

To avoid unnecessary technical complexity we assume that local constraints are modeled as global constraints. Specific discussions will be added where the distinction is relevant. Again for simplicity we consider range constraints in the form \(c_{\mathcal {R}}(y) \ge 0\) rather than lower and upper constraints as in Steinbach (2002), \(c_{\mathcal {R}}(y) \in [r^-, r^+]\).

2.2 Tree-sparse KKT systems

We solve the NLP (1) by an interior-point method, handle bounds directly, and convert range constraints to \(c_{\mathcal {R}}(y) - s = 0\) using slacks \(s \ge 0\). Then, every iteration leads to a KKT system of the following form, where slack increments \({\varDelta }s\) have already been eliminated:

Here \(G, F, F^r\) correspond to dynamic, global, and range constraints, respectively, and \({\varPhi }\ge 0, {\varPsi }> 0\) are diagonal barrier matrices. Elimination of \({\varDelta }v\) from the last equation, \(F^r {\varDelta }y + {\varPsi }^{-1} {\varDelta }v = -\psi \), then yields a system of the form

where \({\bar{H}} = H + {\varPhi }+ (F^r)^{T}{\varPsi }(F^r)\). If we solve the NLP by an active-set based SQP method, every active-set sub-iteration also produces a KKT system of the form (7), except that the barrier matrices vanish (yielding \({\bar{H}} = H\)) and that F may contain additional rows from active inequality constraints. It is an essential feature of all three tree-sparse NLP forms that the additional terms in \({\bar{H}}\) (IPM) or in F (SQP) preserve the original block structure.

Next we consider the separable or partially separable Lagrangians of the three tree-sparse NLP forms and the resulting specializations of the KKT system (6), which are all straightforward extensions of the material in Steinbach (2002). The specific forms of the Lagrangians are needed for the Quasi-Newton updates in Sect. 3 whereas the KKT systems are needed for the inertia correction in Sect. 4.

2.2.1 Implicit tree-sparse KKT system

The Lagrangian of the implicit tree-sparse NLP (with \(\eta _j = (\eta _j^-, \eta _j^+)\) and \(h_0\) constant) reads

where

With barrier parameter \(\beta \) and \(e = (1, \dotsc , 1)^{T}\) in suitable dimension, the resulting barrier matrices and vectors are

Let \(\zeta \) generically denote the dual variables appearing in the Lagrangian \(\mathcal {L}\) or its components \(\mathcal {L}_j\). Then the relevant partial derivatives of the Lagrangian read

and we further abbreviate

This yields the following KKT system (6) in node-wise representation, where we omit \({\varDelta }\)’s on all increments and write \(y_j, \lambda _j, v_j, \mu \) for simplicity:

The construction of \(H, G, F, F^r\) and \({\varPhi }, {\varPsi }\) from the node contributions proceeds exactly as in Steinbach (2002), also for the following explicit tree-sparse NLP variants.

2.2.2 Outgoing tree-sparse KKT system

The Lagrangian of the outgoing tree-sparse NLP (with \(\xi _j = (\xi _j^-, \xi _j^+)\) and \(h_0\) again constant) reads

with

Here, the barrier matrices and vectors are

and the partial derivatives of the Lagrangian read

Thus, with

we obtain the KKT system (6) in node-wise representation, again with \({\varDelta }\)’s omitted:

2.2.3 Incoming tree-sparse KKT system

For the incoming tree-sparse NLP the Lagrangian finally reads

with

and

Here, the barrier matrices and vectors are

and the partial derivatives of the Lagrangian read

Again, with

we obtain the KKT system (6) in node-wise representation with \({\varDelta }\)’s omitted:

3 Tree-sparse Quasi-Newton updates

Standard interior-point methods are second-order methods, i.e., they use local information given by the Hessian of the Lagrangian \(\mathcal {L}\). In many applications, the evaluation of second-order derivatives is prohibitively expensive and one is interested in using Quasi-Newton updates that approximate the Hessian.

For the following let an initial point \(y^{(0)}\) and an initial approximation \(B^{(0)}\) of the Hessian \(\nabla ^2_{yy} \mathcal {L}(y,\zeta )\) be given, where \(\zeta \) again denotes all relevant dual variables. Then, omitting the current iteration index k and denoting iteration \(k+1\) by superscript “\(+\)”, three standard approaches of updating the Hessian approximations are the symmetric rank-two BFGS update rule

the symmetric rank-one (SR1) update formula

and the somewhat less popular PSB rule

where the iteration data is given by

An overview of Quasi-Newton methods for unconstrained and constrained optimization can be found, e.g., in Dennis and Moré (1977), Dennis and Schnabel (1996), Fletcher (2013), Nocedal and Wright (2006) and the references therein. General-purpose implementations of algorithms using Quasi-Newton approaches for constrained nonlinear optimization include, e.g., KNITRO (Byrd et al. 2006, 2000) and SNOPT (Gill et al. 2002).
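For orientation, the three update rules can be sketched in their common textbook form. The sketch assumes that `s` is the difference of consecutive iterates, `g` the corresponding difference of Lagrangian gradients, and `r = g - B s`; safeguards such as skipping an update when a denominator is close to zero are omitted, and the function names are illustrative only.

```python
import numpy as np


def bfgs_update(B, s, g):
    """Symmetric rank-two BFGS update (textbook form, B symmetric)."""
    Bs = B @ s
    return B - np.outer(Bs, Bs) / (s @ Bs) + np.outer(g, g) / (g @ s)


def sr1_update(B, s, g):
    """Symmetric rank-one (SR1) update."""
    r = g - B @ s
    return B + np.outer(r, r) / (r @ s)


def psb_update(B, s, g):
    """Powell-symmetric-Broyden (PSB) update."""
    r = g - B @ s
    ss = s @ s
    return (B + (np.outer(r, s) + np.outer(s, r)) / ss
            - (r @ s) / ss**2 * np.outer(s, s))


# Small check: every update is symmetric and satisfies the secant equation.
B0 = np.eye(3)
s0 = np.array([1.0, 0.5, -0.2])
g0 = np.array([0.8, 0.7, 0.1])
for update in (bfgs_update, sr1_update, psb_update):
    B1 = update(B0, s0, g0)
    assert np.allclose(B1 @ s0, g0)
```

All three rules return a dense matrix in general, which is the starting point for the structured variants discussed next.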

3.1 Quasi-Newton methods for tree-sparse problems

Standard update formulas like (16)–(18) produce dense Hessian approximations that destroy the specific block structure of the tree-sparse NLPs. Quasi-Newton methods in large-scale optimization should generally not alter the sparsity pattern too much, i.e., the Hessian update strategy should keep additional fill-in at a minimum level. Such sparse Quasi-Newton approaches for unconstrained and constrained optimization are considered repeatedly in the literature; see, e.g., Fletcher (1995), Gill et al. (1984), Liu and Nocedal (1989), Lucia (1983), Powell and Toint (1981), Toint (1981).

In fact, the tree-sparse NLPs are designed such that their block structure is entirely preserved by suitable block-sparse Hessian approximations. The key idea is to apply the update formulas node-wise rather than globally, which goes back to multiple shooting SQP methods for deterministic optimal control problems (Bock and Plitt 1985). As an additional benefit the updates have much higher rank.

Before we explicitly discuss tree-sparse Quasi-Newton updates for specific control forms we briefly review the concept of (partially) separable functions. A function \(\varphi :\mathbb {R}^n \rightarrow \mathbb {R}\) is called partially separable if it can be written as

\[ \varphi (x) = \sum _{i=1}^{M} \varphi _i\bigl (x_{I(i)}\bigr ), \]

where each function \(\varphi _i\) depends only on a subset of the variables that is indexed by \(I(i) \subseteq \{1, \dots , n\}\) for \(i = 1, \dots , M\). We call the function \(\varphi \) (completely) separable if, in addition, \(I(i) \cap I(j) = \emptyset \) for all \(i \ne j\).
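As a small illustration (an example chosen here, not taken from the source), consider \(n = 3\) and \(M = 2\) with index sets \(I(1) = \{1, 2\}\) and \(I(2) = \{2, 3\}\). The function is partially but not completely separable because the index sets share the variable \(x_2\), and its Hessian is the sum of two overlapping blocks:

```latex
\varphi(x) = \varphi_1(x_1, x_2) + \varphi_2(x_2, x_3),
\qquad
\nabla^2 \varphi(x) =
\begin{pmatrix}
  \partial_{11}\varphi_1 & \partial_{12}\varphi_1 & 0 \\
  \partial_{21}\varphi_1 & \partial_{22}\varphi_1 + \partial_{22}\varphi_2 & \partial_{23}\varphi_2 \\
  0 & \partial_{32}\varphi_2 & \partial_{33}\varphi_2
\end{pmatrix}.
```

Approximating each element Hessian separately and summing the results preserves the zero entries in positions \((1,3)\) and \((3,1)\), which a dense update of the full matrix would not.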

Quasi-Newton methods for arbitrary partially separable functions were first developed in Griewank and Toint (1982a, b).

3.2 Tree-sparse Hessian updates for outgoing control problems

For tree-sparse NLPs in outgoing control form the Lagrangian (10),

is completely separable with respect to the primal node variables, \(y_j = (x_j, u_j)\), \(j \in V\). Thus, its Hessian is block-diagonal, and each block \(\nabla _{y_j y_j}^2 \mathcal {L}_j(y_j, \zeta )\) can be approximated individually by some \(B_j\) obtained with the same update formula based on

Here, \(B_j\) approximates the diagonal block (without barrier terms)

where the subblocks \(H_j\), \(J_j\), and \(K_j\) are given in (11). The overall Hessian approximation (again without barrier terms) is then given by

Finally note that, since the Lagrangian (8) of the implicit NLP form is also completely separable, it admits analogous individual updates of the diagonal blocks \(\nabla _{y_jy_j}^2 \mathcal {L}_j(y_j, \zeta ) = H_j\). No communication is needed in the distributed code.
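The node-wise strategy for a completely separable Lagrangian can be sketched as follows. The sketch assumes an SR1 rule with a simple skipping safeguard and generic per-node data `s_nodes`, `g_nodes`; these names, the tolerance, and the dense assembly are illustrative choices, not the implementation used in the paper.

```python
import numpy as np


def sr1_update(B, s, g, tol=1e-8):
    """SR1 update of one node block; skipped if the denominator is tiny."""
    r = g - B @ s
    denom = r @ s
    if abs(denom) <= tol * np.linalg.norm(r) * np.linalg.norm(s):
        return B
    return B + np.outer(r, r) / denom


def update_nodewise(blocks, s_nodes, g_nodes):
    """Apply the same update rule independently to every node block B_j."""
    return [sr1_update(B, s, g) for B, s, g in zip(blocks, s_nodes, g_nodes)]


def assemble_block_diagonal(blocks):
    """Dense block-diagonal matrix, for illustration only."""
    n = sum(B.shape[0] for B in blocks)
    H = np.zeros((n, n))
    k = 0
    for B in blocks:
        m = B.shape[0]
        H[k:k + m, k:k + m] = B
        k += m
    return H


# Three nodes with two variables each (made-up data).
blocks = [np.eye(2) for _ in range(3)]
s_nodes = [np.array([1.0, 0.0]), np.array([0.0, 1.0]), np.array([1.0, 1.0])]
g_nodes = [np.array([2.0, 1.0]), np.array([1.0, 3.0]), np.array([3.0, 0.0])]
H = assemble_block_diagonal(update_nodewise(blocks, s_nodes, g_nodes))
```

Each node contributes its own rank-one correction, so the overall update in this example has rank three while the block-diagonal sparsity pattern is untouched.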

3.3 Tree-sparse Hessian updates for incoming control problems

The Lagrangian of tree-sparse NLPs in incoming control form,

is composed of two types of node functions, \(\mathcal {L}_{ij}\) and \(\mathcal {L}_j\) as given in (13). In contrast to the outgoing control case, this Lagrangian is only partially separable with respect to the primal node variables \(y_j = (u_j, x_j)\). Thus, by setting \(y_{ij} = (x_i, u_j)\), the overall Hessian \(\nabla _{yy}^2 \mathcal {L}\) will be approximated by the sum of updates \(B_{ij} \approx \nabla _{y_{ij}y_{ij}}^2 \mathcal {L}_{ij}\) and \(B_j \approx \nabla _{x_jx_j}^2 \mathcal {L}_j\), \(j \in V\), whose subblocks overlap in part as detailed below.

We obtain the node-wise approximations

where \(H_{ij}\), \(J_j\), and \(K_j\) are defined in (14). In a distributed code, \(B_{ij}\) has to be sent to the process that holds \(B_i\). The approximations of the Hessians of \(\mathcal {L}_j\) are given by

The approximations \(B_{ij}\) and \(B_j\) are updated by applying, for instance, one of the update rules (16)–(18) where the respective differences of the iterates are

the corresponding differences of the gradients of the Lagrangian are

and, finally, \(r_{ij} = g_{ij} - B_{ij} s_{ij}\) and \(r_j = g_j - B_j s_j\).

To illustrate the resulting block structure, we consider a simple tree with root 0 and successors \(S(0) = \{1,2\}\). In this case, the Hessian of the Lagrangian (13) has the form

with variables \((u_0, x_0, u_1, x_1, u_2, x_2)\) and

Hence, the subblocks \(K_j\) and \(\tilde{H}_j\) are placed on the diagonal corresponding to the respective variables \(u_j\) and \(x_j\). Moreover, each subblock \(H_{ij}\) is added to \(\tilde{H}_i\), and \(J_j\) and \(J_j^{T}\) are placed in the off-diagonal positions corresponding to the variable pair \((x_i, u_j)\).
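The overlap pattern can be reproduced with a short assembly sketch. It assumes that every node has the same dimensions `n_u` and `n_x`, that `B_edge[j]` approximates the Hessian of \(\mathcal {L}_{ij}\) in the variable order \((x_i, u_j)\) (only \(u_0\) at the root), and that `B_node[j]` approximates the Hessian of \(\mathcal {L}_j\) with respect to \(x_j\). All names and the dense storage are hypothetical.

```python
import numpy as np


def assemble_incoming(pred, n_u, n_x, B_edge, B_node):
    """Sum overlapping node blocks into a dense Hessian approximation.

    Global variable order: (u_0, x_0, u_1, x_1, ...).
    """
    n_y = n_u + n_x
    N = len(pred)
    H = np.zeros((N * n_y, N * n_y))
    for j, i in enumerate(pred):
        u_j = np.arange(j * n_y, j * n_y + n_u)
        x_j = np.arange(j * n_y + n_u, (j + 1) * n_y)
        # Diagonal contribution of L_j in the x_j position.
        H[np.ix_(x_j, x_j)] += B_node[j]
        if i is None:
            idx = u_j  # root: L_{i0} depends on u_0 only
        else:
            x_i = np.arange(i * n_y + n_u, (i + 1) * n_y)
            idx = np.concatenate([x_i, u_j])
        # Contribution of L_{ij}: adds to the x_i block, couples x_i with u_j.
        H[np.ix_(idx, idx)] += B_edge[j]
    return H


# Root 0 with successors 1 and 2, scalar states and controls (made-up data).
pred = [None, 0, 0]
B_node = [np.array([[10.0 + j]]) for j in range(3)]
B_edge = [np.array([[1.0]]),
          np.array([[2.0, 0.5], [0.5, 3.0]]),
          np.array([[4.0, 0.25], [0.25, 5.0]])]
H = assemble_incoming(pred, 1, 1, B_edge, B_node)
```

In this example the entry for \(x_0\) collects contributions from its own node block and from both successors, which is the overlap that makes communication with the predecessor necessary in a distributed code.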

4 Tree-sparse inertia correction

Recall that the search direction in an interior-point method for NLP (1) is computed from system (7). Hence the reduced KKT matrix has to be invertible. In addition, to guarantee a descent direction, the Hessian \({\bar{H}}\) has to be positive definite on the null-space of the constraints matrix. These conditions are satisfied if and only if the reduced KKT matrix

\[ {\varOmega } = \begin{pmatrix} {\bar{H}} & C^{T} \\ C & 0 \end{pmatrix}, \qquad C = \begin{pmatrix} G \\ F \end{pmatrix}, \]

has n positive and m negative eigenvalues (Nocedal and Wright 2006), i.e., \({\text {inertia}}({\varOmega }) = (n, m, 0)\). Therefore, when necessary, the inertia condition is enforced by replacing \({\varOmega }\) with

\[ {\varOmega }(\delta _{c}, \delta _{r}) = \begin{pmatrix} {\bar{H}} + \delta _{c}I & C^{T} \\ C & -\delta _{r}I \end{pmatrix} \tag{27} \]

as follows. If C (and hence \({\varOmega }\)) is rank-deficient, the associated zero eigenvalues are shifted into the negative region by choosing a fixed small value \(\delta _{r}> 0\) (regularization). Then, if \({\varOmega }(0, \delta _{r})\) has fewer than n positive eigenvalues, \(\delta _{c}\) is increased repeatedly until \({\varOmega }(\delta _{c}, \delta _{r})\) has the desired inertia (convexification). For details see Schmidt (2013), Vanderbei and Shanno (1997), Wächter and Biegler (2006).
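The generic (unstructured) heuristic can be sketched on a small dense example. This is an illustration under simplifying assumptions: the inertia is read off from eigenvalues rather than from a factorization, the rank test uses `numpy.linalg.matrix_rank`, and the initial value and growth factor of \(\delta _{c}\) are arbitrary choices, not those of any particular solver.

```python
import numpy as np


def inertia(M, tol=1e-10):
    """Numbers of positive, negative, and (numerically) zero eigenvalues."""
    ev = np.linalg.eigvalsh(M)
    return (int((ev > tol).sum()),
            int((ev < -tol).sum()),
            int((np.abs(ev) <= tol).sum()))


def corrected_kkt(H, C, delta_c, delta_r):
    """Omega(delta_c, delta_r) = [[H + delta_c I, C^T], [C, -delta_r I]]."""
    n, m = H.shape[0], C.shape[0]
    return np.block([[H + delta_c * np.eye(n), C.T],
                     [C, -delta_r * np.eye(m)]])


def inertia_correction(H, C, delta_r=1e-8, delta_c0=1e-4, growth=10.0,
                       max_tries=30):
    """Return (delta_c, delta_r) such that the inertia is (n, m, 0)."""
    n, m = H.shape[0], C.shape[0]
    # Regularization: only needed if C is rank-deficient.
    d_r = 0.0 if np.linalg.matrix_rank(C) == m else delta_r
    # Convexification: increase delta_c until the inertia is correct.
    d_c = 0.0
    for _ in range(max_tries):
        if inertia(corrected_kkt(H, C, d_c, d_r)) == (n, m, 0):
            return d_c, d_r
        d_c = delta_c0 if d_c == 0.0 else growth * d_c
    raise RuntimeError("inertia correction failed")


# Indefinite Hessian on the null space of C: a shift delta_c > 1 is required.
H_example = np.diag([1.0, -1.0])
C_example = np.array([[1.0, 0.0]])
shift_c, shift_r = inertia_correction(H_example, C_example)
```

A practical code would obtain the inertia as a by-product of the symmetric indefinite factorization; the eigenvalue computation here only serves to keep the example self-contained.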

For the inertia correction of the tree-sparse NLPs, additional structural properties of the associated factorizations of \({\varOmega }\) come into play. First, in the implicit tree-sparse KKT system the general regularization \(-\delta _{r}I\) in (27) splits into independent regularizations for each dynamics equation (9b) and for the global constraints (9d), which are applied to certain symmetric Schur complement blocks \(Y_j \ge 0\) and \(X_\emptyset \ge 0\) (see Steinbach 2001) by factorizing \(Y_j + \delta _{r}I> 0\) (or \(X_\emptyset + \delta _{r}I> 0\)) unless \(Y_j > 0\) (or \(X_\emptyset > 0\)). Thus, any rank deficiencies in C are handled locally, and the local refactorizations do not require any communication in the distributed code.

On the other hand, the general regularization \(-\delta _{r}I\) would destroy the block structure of the tree-sparse KKT systems in outgoing or incoming control form since in both cases the factorization makes use of the fact that the matrix blocks to the right of G and below \(G^{T}\) are zero. These factorizations can only handle a regularization of the global constraints, not the dynamics,

While at first thought this might appear as a drawback, it is actually an advantage: with explicit dynamics (3b) or (4b), the term \(-x_j\) creates identity blocks \(-I\) along the diagonal of G. Hence G always has full rank by construction, and a regularization need only be considered for F. A closer look at the tree-sparse KKT solution algorithms reveals that full rank of F is equivalent to \(X_\emptyset > 0\) (where \(X_\emptyset \) can be shown to be the Schur complement of the projection of F on N(G), with \(X_\emptyset \ge 0\)). This is checked in the very last block operation: the Cholesky factorization of \(X_\emptyset \), cf. step 18 of Table 1 for the incoming control case. Thus, if a regularization is required, only \(X_\emptyset + \delta _{r}I> 0\) needs to be refactorized while the by far more expensive initial part of the factorization can be retained. Again there is no extra communication.

Second, to preserve sparsity, each tree-sparse factorization needs a stricter condition than the inertia condition above: while \({{\,\mathrm{rank}\,}}(C) = m\) is required as before, \({\bar{H}}\) must be positive definite on the null space N(G) rather than on \(N(C) = N(G) \cap N(F)\). This is because all three factorizations form the Schur complement \(X_\emptyset \) of the projection of F on N(G). The stricter condition can easily be incorporated into the convexification heuristic since positive definiteness of \({\bar{H}} + \delta _{c}I\) on N(G) is checked during the factorization. Of course, in problems without global constraints we have \(F \in \mathbb {R}^{0 \times n}\) and there is no difference.

The structural details of the tree-sparse convexification are more involved than for the regularization. Positive definiteness of \({\bar{H}} + \delta _{c}I\) on N(G) is equivalent to positive definiteness of properly modified blocks \(K_j\) in every node, see steps 3 and 7 in Table 1. Thus, if this does not hold, the Cholesky factorization of some modified block \(K_j\) will fail (step 8), and \({\varOmega }^*(\delta _{c}, \delta _{r})\) has to be refactorized from scratch with an increased value of \(\delta _{c}\).

In order to avoid refactorizations of the entire matrix, different convexification parameters \(\delta _{cj}\) can be used in each node j to modify the local Hessian blocks,

This way, only local refactorizations are needed. However, in contrast to the uniform shift of all eigenvalues of \({\bar{H}}\) by \(\delta _{c}\), individual local shifts \(\delta _{cj}\) may produce a highly inhomogeneous change of the subproblem’s geometry. As a remedy, one may also consider combined global and local convexifications, as suggested in Hübner (2016). This allows for a wide range of possible heuristics that we do not want to explore here.
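The idea of local shifts can be illustrated with a deliberately simplified sketch. It treats the node blocks as independent matrices and shifts each one until its Cholesky factorization succeeds; in the actual tree-sparse factorization the modified blocks \(K_j\) depend on data from other nodes, which this sketch ignores. The function names and the shift schedule are assumptions made for illustration.

```python
import numpy as np


def is_positive_definite(K):
    """Check positive definiteness by attempting a Cholesky factorization."""
    try:
        np.linalg.cholesky(K)
        return True
    except np.linalg.LinAlgError:
        return False


def local_convexification(K_blocks, delta0=1e-4, growth=10.0, max_tries=30):
    """Return one shift delta_cj per block so that K_j + delta_cj I > 0."""
    shifts = []
    for K in K_blocks:
        delta = 0.0
        for _ in range(max_tries):
            if is_positive_definite(K + delta * np.eye(K.shape[0])):
                break
            delta = delta0 if delta == 0.0 else growth * delta
        else:
            raise RuntimeError("local convexification failed")
        shifts.append(delta)
    return shifts


# One block is already positive definite, the other one needs a shift.
K_blocks = [np.eye(2), np.diag([1.0, -0.5])]
shifts = local_convexification(K_blocks)
```

The example also shows the inhomogeneity mentioned above: the first block is left unchanged while the second receives a shift of order one.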

Let us finally discuss the inertia correction with local equality constraints. In all tree-sparse factorizations, these constraints are eliminated by local projections at the very beginning of the algorithm, under the assumption that the Jacobians of \(e_{ij}^l, e_j^l\) in (5) have full rank. This produces a projected KKT system without local constraints which has precisely the form (9), (12), or (15). If any of the local constraints may require a regularization, this procedure has to be modified as follows. All potentially rank-deficient local constraints are modeled as global constraints. However, it turns out that each of them augments the Schur complement \(X_\emptyset \ge 0\) mentioned above with an independent diagonal block \(X_j^l \ge 0\). Thus, similar to the implicit case, the general regularization splits into independent local regularizations that are applied immediately when reaching the respective block by factorizing \(X_j^l + \delta _{r}I> 0\) (or \(X_\emptyset + \delta _{r}I> 0\)) unless \(X_j^l > 0\) (or \(X_\emptyset > 0\)). Local regularizations are again automatically independent, require no communication, and in contrast to the convexifications a refactorization of \({\varOmega }^*(\delta _{c}, \delta _{r})\) from scratch is never needed.

5 Case studies

The main goal of this section is to show that the tree-sparse Hessian updates and the tree-sparse inertia correction work efficiently: they provide natural extensions of the existing tree-sparse algorithms to deal with nonconvexity and with unavailable or expensive second-order derivatives.

In Sects. 5.1 and 5.2 we consider robust moving horizon control problems for a double integrator and a bioreactor, respectively. These examples are both nonconvex. The second problem does not provide explicit evaluations of the Hessian of the Lagrangian, so we use a Quasi-Newton approach based on the tree-sparse Hessian updates of Sect. 3. All optimization problems are solved using the interior-point code Clean::IPM (Schmidt 2013) with a tree-sparse KKT solver. The KKT solver incorporates the proposed inertia correction heuristic of Sect. 4 to address nonconvexity.

Moving horizon controllers (MHC) compensate random disturbances of a process by measuring or estimating the current disturbance in regular intervals and solving a dynamic optimization problem over a certain prediction horizon T to determine the current corrective action. Robust MHC incorporate an explicit stochastic model of future disturbances up to some stochastic horizon \(T_s \le T\) whereas standard MHC simply ignore future disturbances (\(T_s = 0\)).

5.1 Nonlinear double integrator

In this case study, we consider a moving horizon controller (MHC) for stabilizing a perturbed nonlinear double integrator. The dynamics is given by the discrete time model proposed in Lazar et al. (2008),

Herein, the state \((x_1, x_2)\) is driven by the control \(u \in [-2, 2]\), and the first state \(x_1\) is perturbed by some dynamic disturbance d with nominal value \(d^{\text {nom}} = 0\). The task is to keep the system close to its reference point, \((x_1^*, x_2^*) = (0,0)\). In the absence of disturbances, the reference state \((x_1^*, x_2^*)\) is a fixed point of the dynamics (30).

As in Lucia and Engell (2012), the disturbance may take one of three values, \(d(t) \in \{-0.05, 0, 0.05\}\), with respective probabilities 0.2, 0.4, and 0.4. Again as in Lucia and Engell (2012), the objective is

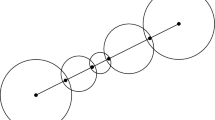

with \(Q = I\) and \(R = 0.15\), a standard quadratic tracking functional with control costs. At each sampling time, the double integrator receives a control signal u(t) from the MHC and the random disturbance d(t) is observed. The resulting new state \((x_1(t+1), x_2(t+1))\) is then sent back to the controller; see Fig. 1.

5.1.1 Tree-sparse formulation

The moving horizon optimization problem that is solved at each sampling time to determine the control signal u includes the dynamic model (30) and the cost (31). We formulate it as a tree-sparse NLP in outgoing control form with probability-weighted node objectives

dynamics

and simple control bounds

The initial state \(\hat{x}_0\) is given as \(h_0 = \hat{x}_0\). A possible scenario tree is shown in Fig. 1.
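A scenario tree of this kind can be generated with a few lines of code. The sketch assumes that every node up to the stochastic horizon \(T_s\) branches into the three disturbance values, and that beyond \(T_s\) every node has a single successor with the nominal disturbance; this continuation rule and all names are assumptions for illustration and are not taken from the source.

```python
def build_scenario_tree(T, T_s, values, probs, nominal=0.0):
    """Return predecessor, probability, disturbance, and level per node."""
    pred, prob, dist, lvl = [None], [1.0], [None], [0]
    frontier = [0]
    for t in range(1, T + 1):
        if t <= T_s:
            branches = list(zip(values, probs))  # stochastic stage
        else:
            branches = [(nominal, 1.0)]          # deterministic continuation
        new_frontier = []
        for i in frontier:
            for d, p in branches:
                pred.append(i)
                prob.append(prob[i] * p)  # node probability p_j
                dist.append(d)            # disturbance on the edge (i, j)
                lvl.append(t)
                new_frontier.append(len(pred) - 1)
        frontier = new_frontier
    return pred, prob, dist, lvl


# Disturbance values and probabilities as stated in the text.
pred, prob, dist, lvl = build_scenario_tree(
    T=3, T_s=2, values=[-0.05, 0.0, 0.05], probs=[0.2, 0.4, 0.4])
```

Under these assumptions the number of nodes on level t is \(3^{\min (t, T_s)}\), so the tree size grows exponentially in \(T_s\) but only linearly in \(T - T_s\).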

5.1.2 Control towards reference point

In a first test, we evaluate the performance of the deterministic controller (\(T_s = 0\)) for bringing the system from three given initial states close to the reference point \((x_1^*, x_2^*)\). The MHC uses the prediction horizon \(T = 3\) and analytic second-order derivatives. We generate random disturbances at \(t = 1, \dots , 10\) and apply the MHC for 10 time steps. For each initial value, this is repeated 50 times with different series of random disturbances. Figure 2 shows the mean values of the states over the 10 time steps. For each of the three initial states, the mean values come very close to \((x_1^*, x_2^*)\) within the first 5 time steps, which confirms the proper operation of the controller.

5.1.3 Hold the reference point with minimal costs

In the second test series, the performance of the robust controller is tested for keeping the perturbed system close to the initial state \((x_1^*, x_2^*)\) over 20 time steps. Here we consider prediction horizons \(T = 3\) and \(T = 10\) with respective stochastic horizons \(T_s \in \{0, 1, 2, 3\}\) and \(T_s \in \{0, 1, 2, 3, 5, 7\}\). Again we use analytic second-order derivatives, and each test is run with the same set of 50 series of random disturbances at \(t = 1, \dots , 20\). Figure 3 illustrates the resulting averages of accumulated costs, states, and control over 20 steps. We observe that the 10 different controllers determined by \((T, T_s)\) show visible differences in the mean values of u and \(x_2\) only during the first five time steps (with an average control of zero at \(t = 5\)). Moreover, the length of the prediction horizon has no visible influence: the plots for \(T = 3\) and \(T = 10\) combined with \(T_s \in \{0, 1, 2, 3\}\) look exactly identical. A close examination of the data reveals that differences after \(t = 5\) and between \(T = 3\) and \(T = 10\) are indeed very small with values in the order of \(10^{-4}\) to \(10^{-3}\).

The average accumulated costs (top of Fig. 3) demonstrate that the performance of the controller improves when uncertainties are included. Adding uncertainties in the first time step, i.e., increasing \(T_s\) from 0 to 1, reduces the resulting costs at the final step \(t = 20\) by approximately 9%. Increasing the stochastic horizon to \(T_s = 2\) leads to a further cost reduction of approximately 3%. Larger stochastic horizons (\(T_s > 2\)) have no significant effect on the costs. The cost reductions are obtained by balancing the disturbances in the first 5 time steps of the process. The control signal is adjusted to reduce the deviation of \(x_1\) from the reference state \(x_1^* = 0\). This reduces the costs due to deviations of \(x_1\) and increases the costs due to deviations of \(x_2\) and due to nonzero control signals. In other words, within the critical first 5 time steps, the incurred costs are “moved” from state \(x_1\) to state \(x_2\) and to the control u. This strategy pays off in the further process where the costs due to u and \(x_2\) are identical for all considered values of \(T_s\) while the costs due to \(x_1\) depend on its value at time step 5.

5.1.4 Exact Hessians versus approximations

Here we solve the robust control problem (32)–(34) with a fixed prediction horizon \(T = 12\) and increasing stochastic horizons \(T_s \in \{1, \dots , 12\}\). We measure the total time for IPM solution and for evaluating the NLP data with either analytic Hessians or SR1 updates or PSB updates. Each test is run 50 times for different initial states, and the averages of IPM solution time, NLP evaluation time, and iteration counts are measured, see Fig. 4. The problem sizes are given in Table 2 together with total runtimes of the IPM with analytic Hessian evaluations. All computations are carried out on a single core of a workstation with 48 GiB of RAM and 12 X5675 cores running at 3.07 GHz.

Let us first consider the case of analytic Hessian evaluations. The average number of iterations is almost independent of \(T_s\), which indicates a certain “scalability” of the stochastic model. The NLP evaluation time is lower than with both Quasi-Newton updates, thus analytic Hessians are cheaper than approximations in this problem. Finally we note that the constraints matrices cannot become rank-deficient and thus the regularization remains inactive for all 12 values of \(T_s\) and all 50 initial values.

With SR1 updates we observe the lowest iteration counts of all three Hessian versions on small problems (\(T_s < 8\)) but the highest iteration counts on large problems (\(T_s > 9\)). The convexification is again inactive in all runs, which indicates that the tree-sparse SR1 updates provide good Hessian approximations.

With PSB updates, the iteration count varies with \(T_s\) but remains significantly smaller than with analytic Hessians. This yields the smallest total runtimes on large problems (\(T_s > 9\)) although the NLP evaluation time per iteration is significantly larger than for analytic Hessians. Surprisingly, most runs require a convexification to solve the optimization problems.

5.2 Nonlinear bioreactor

This case study is concerned with a robust nonlinear moving horizon controller that keeps a bioreactor in a steady state of production. The problem is proposed as a benchmark in Ungar (1990) and has been studied, e.g., in Lucia and Engell (2012), Lucia et al. (2012). The plant consists of a continuous flow stirred tank reactor containing a mixture of water and cells. The latter consume nutrients and produce (desired and undesired) products as well as more cells. The volume of the mixture is constant and its composition is adjusted by a water stream that feeds nutrients into the tank at the inlet and that contains nutrients and cells at the outlet. The dynamic model of the bioreactor is given by the ODE system

where \(x_1\) and \(x_2\) are dimensionless amounts of cell mass and nutrient, respectively, and v is the flow rate of the water stream. The respective physical bounds are

The ODE system (35) describes the rates of change in the amounts of cells \(x_1\) and nutrients \(x_2\), respectively, that result from the respective amounts \(-x_1 v\) and \(-x_2 v\) leaving the tank and from the metabolism of the cells. The cell growth is represented by \(x_1 (1 - x_2) e^{x_2/\gamma }\), where \(\gamma \) is the uncertain nutrient consumption parameter with nominal value \(\gamma ^{\text {nom}} = 0.48\). The rate of cell growth \(\beta \) depends very mildly on the composition of the mixture in the tank. We regard it as constant, \(\beta ^{\text {nom}} = 0.02\).

When feeding nutrients to the bioreactor with a constant flow rate v, the system has a Hopf bifurcation at a certain flow rate \(v^{\text {H}}\) that depends on the values of \(\gamma \) and \(\beta \). For \(v < v^{\text {H}}\), System (35) stabilizes at a unique fixed point \((x_1^*, x_2^*)\) whereas it becomes unstable for \(v \ge v^{\text {H}}\). For the nominal parameter values, the Hopf bifurcation occurs at the flow rate \(v^{\text {H}} = 0.829\). This value decreases with an increasing value of \(\gamma \) or a decreasing value of \(\beta \) as shown in Fig. 5.

The desired steady state of production, \((x_1^*, x_2^*) \approx (0.1236477, 0.8760318)\), is close to the Hopf bifurcation and is obtained for the constant flow rate \(v^* = 0.769\) with nominal parameter values. The parameter \(\gamma \) is assumed to be normally distributed with small variance, \(\gamma \sim N(\gamma ^{\text {nom}}, 0.005)\). As in Lucia and Engell (2012), standard quadratic costs are applied that penalize deviations from the reference state \(x_1^*\) with the factor 200 and changes of the flow rate with the factor 75.
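The quoted steady state can be checked numerically. The following is a minimal sketch of the right-hand side of system (35), assuming the standard form of Ungar's benchmark: the terms \(-x_1 v\), \(-x_2 v\), and \(x_1 (1 - x_2) e^{x_2/\gamma }\) are taken from the description above, whereas the factor \((1+\beta )/(1+\beta -x_2)\) in the nutrient equation and the function name `bioreactor_rhs` are assumptions made for illustration.

```python
import math

GAMMA_NOM = 0.48  # nominal value of gamma (from the text)
BETA_NOM = 0.02   # nominal value of beta (from the text)


def bioreactor_rhs(x1, x2, v, gamma=GAMMA_NOM, beta=BETA_NOM):
    """Assumed right-hand side (dx1/dt, dx2/dt) of the bioreactor ODE."""
    # Cell growth term as given in the text.
    growth = x1 * (1.0 - x2) * math.exp(x2 / gamma)
    dx1 = -x1 * v + growth
    # ASSUMPTION: the beta-dependent factor follows Ungar's benchmark;
    # it is not stated in the surrounding text.
    dx2 = -x2 * v + growth * (1.0 + beta) / (1.0 + beta - x2)
    return dx1, dx2


# Reference state and flow rate as quoted in the text.
X1_REF, X2_REF, V_REF = 0.1236477, 0.8760318, 0.769

residual = bioreactor_rhs(X1_REF, X2_REF, V_REF)
print(residual)  # both components should be close to zero
```

Under these assumptions both components of the residual nearly vanish at \((x_1^*, x_2^*)\) with \(v^* = 0.769\), which is consistent with the values stated above.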

5.2.1 Tree-sparse formulation

The optimization problem for the bioreactor is formulated as a tree-sparse NLP with incoming control (4). State variables \(x_j \in \mathbb {R}^3\) consist of the two states \(x_1, x_2\), and the flow rate v of the ODE system (35). The control \(u_j \in \mathbb {R}\) models the change of the piecewise constant flow rate v at time t(i), yielding dynamics \(x_j = g_j(x_i, u_j) = (w_j(x_i, u_j), x_{i,3} + u_j)\) where

Here the flow rate is modeled as a state variable since its difference \(u_j = x_{j,3} - x_{i,3}\) enters into the objective,

with \(R = 75\), \(Q = {{\,\mathrm{Diag}\,}}(200,0,0)\), and \(\bar{x}^* = (x_1^*, x_2^*, 0)\). Finally, physical bounds (36) are incorporated as simple state bounds, \(x_j^- = (0,0,0)\) and \(x_j^+ = (1,1,2)\).
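The node dynamics \(g_j\) and the quadratic cost terms can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in the paper: the ODE right-hand side uses the form of Ungar's benchmark, the sampling interval `H = 0.1` is assumed to be expressed in the time units of the ODE, a classical Runge-Kutta scheme stands in for the semi-implicit extrapolation method, and the cost omits any scaling such as a factor 1/2 or scenario probabilities. All function names are hypothetical.

```python
import math

H = 0.1  # ASSUMPTION: one sampling interval in the time units of the ODE


def rhs(x1, x2, v, gamma, beta=0.02):
    """Assumed bioreactor right-hand side (form of Ungar's benchmark)."""
    growth = x1 * (1.0 - x2) * math.exp(x2 / gamma)
    return (-x1 * v + growth,
            -x2 * v + growth * (1.0 + beta) / (1.0 + beta - x2))


def g(x_i, u_j, gamma, substeps=20):
    """Sketch of x_j = g_j(x_i, u_j) = (w_j(x_i, u_j), x_{i,3} + u_j)."""
    v = x_i[2] + u_j  # flow rate after the change u_j, constant on the interval
    x1, x2 = x_i[0], x_i[1]
    dt = H / substeps
    for _ in range(substeps):  # classical RK4 as a stand-in integrator
        k1 = rhs(x1, x2, v, gamma)
        k2 = rhs(x1 + 0.5 * dt * k1[0], x2 + 0.5 * dt * k1[1], v, gamma)
        k3 = rhs(x1 + 0.5 * dt * k2[0], x2 + 0.5 * dt * k2[1], v, gamma)
        k4 = rhs(x1 + dt * k3[0], x2 + dt * k3[1], v, gamma)
        x1 += dt * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]) / 6.0
        x2 += dt * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]) / 6.0
    return (x1, x2, v)


def stage_cost(x_j, u_j, x_ref=(0.1236477, 0.8760318, 0.0)):
    """(x_j - x_ref)^T Q (x_j - x_ref) + R u_j^2 with Q = Diag(200, 0, 0)."""
    q_diag = (200.0, 0.0, 0.0)
    r_weight = 75.0
    deviation = sum(q * (x - r) ** 2 for q, x, r in zip(q_diag, x_j, x_ref))
    return deviation + r_weight * u_j ** 2


# One step from the reference state with unchanged flow rate.
x_next = g((0.1236477, 0.8760318, 0.769), 0.0, gamma=0.48)
print(x_next, stage_cost(x_next, 0.0))
```

Since only the first diagonal entry of \(Q\) is nonzero, the third component of \(\bar{x}^*\) has no influence on the cost; the flow rate enters only through its change \(u_j\).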

It turns out that the robust control problem becomes extremely hard to solve as the stochastic horizon \(T_s\) increases, owing to the highly nonlinear dynamics. Therefore we use a simple fan (\(T_s = 1\)) with \(n_s\) scenarios as the scenario tree, see Fig. 6.
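To make the size of such a fan concrete, the following sketch builds a predecessor list. It assumes that the root branches once into \(n_s\) scenarios and that each scenario continues as a chain until the prediction horizon \(T\) is reached; this layout and the name `fan_tree` are illustrative assumptions, since Fig. 6 is not reproduced here.

```python
def fan_tree(T, n_s):
    """Predecessor list of a fan: the root branches into n_s chains of length T."""
    pred = [-1]  # node 0 is the root and has no predecessor
    for _ in range(n_s):
        parent = 0  # every scenario starts at the root
        for _ in range(T):
            pred.append(parent)
            parent = len(pred) - 1  # the new node continues the chain
    return pred


# Node counts for three (T, n_s) pairs compared later in this section.
for T, n_s in [(100, 1), (5, 51), (10, 3)]:
    print(T, n_s, len(fan_tree(T, n_s)))
```

Under this assumption the tree has \(1 + n_s T\) nodes, so the number of nodes grows linearly in both \(T\) and \(n_s\).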

5.2.2 Keep a steady state of production

In the following, we consider 13 instances of the benchmark problem differing in the prediction horizon T or in the number of scenarios \(n_s\). The reactor is started in the reference state and run for 40 s with a sampling interval of 100 ms. At each sampling time, the plant receives a new control signal from the MHC and the random value of \(\gamma \) is sampled, see Fig. 6. Thus, each test run requires solving 400 optimization problems. Each of those tests is run 50 times with different series of random disturbances. We compute variances of the cell mass \(x_1\) and averages of accumulated costs, of \(x_1\), and of the flow rate v.

As in Sect. 5.1, the tree-sparse NLPs are solved using Clean::IPM where solutions of the IVP (37) are computed using a semi-implicit extrapolation method (Bader and Deuflhard 1983). The Hessians are approximated by tree-sparse SR1 updates, which turned out to be the most reliable choice here. All computations in this section have been executed on a single core of a workstation with 16 GiB of RAM and an Intel(R) Core i7-3770 quad-core processor running at 3.40 GHz.
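For orientation, the standard SR1 formula applied block by block can be sketched as follows. This is not the tree-sparse update of the paper, whose block structure is defined in an earlier section; the sketch assumes one dense symmetric Hessian block per node, with node-local steps \(s\) and gradient differences \(y\), and uses the usual skipping rule for a small denominator. The names `sr1_update`, `tree_sr1_update`, and `r_tol` are hypothetical.

```python
def sr1_update(B, s, y, r_tol=1e-8):
    """Return the SR1 update B + r r^T / (r^T s) with r = y - B s."""
    n = len(s)
    Bs = [sum(B[i][k] * s[k] for k in range(n)) for i in range(n)]
    r = [y[i] - Bs[i] for i in range(n)]  # secant residual
    rs = sum(r[i] * s[i] for i in range(n))
    norm_r = sum(ri * ri for ri in r) ** 0.5
    norm_s = sum(si * si for si in s) ** 0.5
    # Standard safeguard: skip the update if the denominator is too small.
    if abs(rs) <= r_tol * norm_r * norm_s:
        return [row[:] for row in B]
    return [[B[i][j] + r[i] * r[j] / rs for j in range(n)] for i in range(n)]


def tree_sr1_update(blocks, steps, grad_diffs):
    """Update every node block independently (assumed block layout)."""
    return [sr1_update(B, s, y) for B, s, y in zip(blocks, steps, grad_diffs)]


B_new = sr1_update([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0], [2.0, 1.0])
print(B_new)  # the updated block satisfies the secant condition B_new s = y
```

Unlike BFGS, the SR1 update does not enforce positive definiteness, so the resulting blocks can represent indefinite curvature; in an interior-point method this is handled by the inertia correction.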

In some test runs, the IPM does not converge for all 400 optimization problems, which demonstrates the difficulty of these problems. To keep the results comparable, we exclude every disturbance series for which the test run fails in at least one of the 13 benchmark instances. In the end we are left with solutions for 28 of the 50 disturbance series.

The results are given in Figs. 7 and 8. The plots show averages of accumulated costs (upper left), of the cell mass \(x_1\) (upper right), the variance of \(x_1\) (lower left), and average flow rates (lower right) vs. time. Two situations are shown in the figures. First, in Fig. 7, the bioreactor is regulated using deterministic optimization problems (\(n_s = 1\)) and an increasing prediction horizon T. Second, in Fig. 8, the MHC uses stochastic optimization problems with fixed prediction horizon (\(T = 5\)) and a varying number of scenarios \(n_s\). In both situations, increasing the free tree parameter (T or \(n_s\)) improves the performance of the controller. The average accumulated costs decrease monotonically, which is achieved by damping the effect of the perturbation on the cell mass \(x_1\). After experiencing disturbances, \(x_1\) oscillates about the reference state \(x_1^*\). The amplitude of this oscillation is reduced by adjusting the flow rate v, which reduces the costs caused by the deviations of \(x_1\).

The results in Fig. 7 show that a certain minimal prediction horizon is required to produce a significant effect. Using the value \(T = 5\) reduces the costs by less than 5% in comparison to \(T = 1\) whereas the value \(T = 10\) yields a reduction of more than 60%. However, increasing T further yields no significant improvement. The value \(T = 20\), for instance, produces a reduction of only 65%.

The results in Fig. 8 show that the robust control approach with its explicit model of uncertainties improves the controller performance. The total costs are reduced by 7% with \(n_s = 3\), and by 70% with \(n_s = 81\). Of course, each additional scenario causes higher computational effort while the relative reduction of costs per additional scenario decreases. For instance, relative to the deterministic case \(n_s = 1\), we have a cost reduction of \(7\%/2 = 3.5\%\) per additional scenario for \(n_s = 3\) and of \(70\%/80 \approx 0.88\%\) per additional scenario for \(n_s = 81\).

The results of Figs. 7 and 8 show that the goal of reducing the costs is achieved in both situations. However, increasing the number of scenarios \(n_s\) is computationally more expensive than enlarging the prediction horizon T, as can be seen in Table 3. The optimization problems of case 1 (\(T = 100\) and \(n_s = 1\)) are significantly smaller and solved faster than those of case 2 (\(T = 5\) and \(n_s = 51\)), although the two cases produce almost identical costs as shown in Fig. 9. Finally, case 3 in Table 3 represents a fair compromise between the length of the prediction horizon (\(T = 10\)) and the number of scenarios (\(n_s = 3\)). In Fig. 9 we see that this compromise produces the smallest costs and, moreover, has the smallest optimization problems among the ones listed in Table 3. It is solved 3 times faster than case 1 and 9 times faster than case 2. Thus we conclude that a suitably designed robust controller can be significantly more efficient than a deterministic controller.

6 Conclusion

We have seen that the concept of tree-sparse optimization problems (Steinbach 2002) extends directly from convex problems with polyhedral constraints to the fully nonlinear and nonconvex NLP case. The required structural assumptions are satisfied whenever the nonlinear constraint functions possess mild separability properties. Moreover, interior-point methods with a tree-sparse KKT solver are directly applicable to the general case by introducing straightforward structure-specific specializations to a standard inertia correction procedure. Finally, the Lagrangian is separable or partially separable in all three tree-sparse NLP variants so that the Hessian can be approximated by structure-preserving Quasi-Newton update formulas when second-order derivatives are unavailable or too expensive. The effectiveness of these techniques has been demonstrated on two robust model predictive control problems with ODE dynamics. Altogether, our problem formulation and our solution approach exploit the specific structure of tree-sparse problems in a natural way.

Although we have not demonstrated this here, it is easily seen that the distributed implementation presented in Hübner et al. (2017) extends to the NLP case considered in this paper as well: the function and derivative evaluations, possibly with Quasi-Newton updates, and the tree-sparse inertia correction parallelize similarly to, or more easily than, the KKT solver. Our techniques are also directly applicable in an active-set based SQP framework such as Rose (2018): here we can again use the tree-sparse KKT solver, except that the implementation requires some overhead for the changing active set.

References

Bader G, Deuflhard P (1983) A semi-implicit midpoint rule for stiff systems of ordinary differential equations. Numer Math 41:373–398. https://doi.org/10.1007/BF01418331

Berger AJ, Mulvey JM, Rothberg E, Vanderbei RJ (1995) Solving multistage stochastic programs using tree dissection. Technical Report SOR-95-07. Princeton University, USA

Birge JR, Louveaux F (2011) Introduction to stochastic programming. Springer, Berlin. https://doi.org/10.1007/978-1-4614-0237-4

Blomvall J, Lindberg PO (2002) A Riccati-based primal interior point solver for multistage stochastic programming. Eur J Oper Res 143(2):452–461. https://doi.org/10.1016/S0377-2217(02)00301-6

Blomvall J (2003) A multistage stochastic programming algorithm suitable for parallel computing. Parallel Comput 29(4):431–445. https://doi.org/10.1016/S0167-8191(03)00015-2

Bock HG, Plitt KJ (1985) A multiple shooting algorithm for direct solution of optimal control problems. In: Gertler J (ed) Proceedings of the 9th IFAC world congress, Budapest, Hungary, 1984, vol IX. Pergamon Press, Oxford, UK, pp 242–247. https://doi.org/10.1016/S1474-6670(17)61205-9

Byrd RH, Gilbert J-C, Nocedal J (2000) A trust region method based on interior point techniques for nonlinear programming. Math Program 89(1):149–185. https://doi.org/10.1007/PL00011391

Byrd RH, Nocedal J, Waltz RA (2006) KNITRO: an integrated package for nonlinear optimization. In: Large scale nonlinear optimization. Springer, pp 35–59. https://doi.org/10.1007/0-387-30065-1_4

Carpenter TJ, Lustig IJ, Mulvey JM, Shanno DF (1993) Separable quadratic programming via a primal-dual interior point method and its use in a sequential procedure. ORSA J Comput 5(2):182–191. https://doi.org/10.1287/ijoc.5.2.182

Czyzyk J, Fourer R, Mehrotra S (1995) A study of the augmented system and column-splitting approaches for solving two-stage stochastic linear programs by interior-point methods. ORSA J Comput 7(4):474–490. https://doi.org/10.1287/ijoc.7.4.474

Dennis JE, Moré JJ (1977) Quasi-Newton methods, motivation and theory. SIAM Rev 19(1):46–89. https://doi.org/10.1137/1019005

Dennis JE, Schnabel RB (1996) Numerical methods for unconstrained optimization and nonlinear equations, vol 16. SIAM. https://doi.org/10.1137/1.9781611971200

Fletcher R (1995) An optimal positive definite update for sparse Hessian matrices. SIAM J Optim 5(1):192–218. https://doi.org/10.1137/0805010

Fletcher R (2013) Practical methods of optimization. Wiley, New York. https://doi.org/10.1002/9781118723203

Gill PE, Murray W, Saunders MA, Wright MH (1984) Sparse matrix methods in optimization. SIAM J Sci Stat Comput 5(3):562–589. https://doi.org/10.1137/0905041

Gill PE, Murray W, Saunders MA (2002) SNOPT: an SQP algorithm for large-scale constrained optimization. SIAM J Optim 12(4):979–1006. https://doi.org/10.1137/S1052623499350013

Gondzio J, Grothey A (2006) Direct solution of linear systems of size \(10^9\) arising in optimization with interior point methods. In: Wyrzykowski R, Dongarra J, Meyer N, Wasniewski J (eds) Parallel processing and applied mathematics, vol 3911. Lecture notes in computer science. Springer, Berlin, pp 513–525. https://doi.org/10.1007/11752578_62

Gondzio J, Grothey A (2007) Parallel interior-point solver for structured quadratic programs: application to financial planning problems. Ann Oper Res 152(1):319–339. https://doi.org/10.1007/s10479-006-0139-z

Gondzio J, Grothey A (2009) Exploiting structure in parallel implementation of interior point methods for optimization. Comput Manag Sci 6(2):135–160. https://doi.org/10.1007/s10287-008-0090-3

Griewank A, Toint PL (1982a) Partitioned variable metric updates for large structured optimization problems. Numer Math 39(1):119–137. https://doi.org/10.1007/BF01399316

Griewank A, Toint PL (1982b) On the unconstrained optimization of partially separable functions. In: Powell M (ed) Nonlinear optimization 1981. Academic Press, Cambridge, pp 301–312

Hübner J (2016) Distributed algorithms for nonlinear tree-sparse problems. Ph.D. Thesis. Gottfried Wilhelm Leibniz Universität Hannover

Hübner J, Steinbach MC, Schmidt M (2017) A distributed interior-point KKT solver for multistage stochastic optimization. INFORMS J Comput 29(4):612–630. https://doi.org/10.1287/ijoc.2017.0748

Jessup ER, Yang D, Zenios SA (1994) Parallel factorization of structured matrices arising in stochastic programming. SIAM J Optim 4(4):833–846. https://doi.org/10.1137/0804048

Kall P, Wallace SW (1994) Stochastic programming. Wiley-interscience series in systems and optimization. Wiley, New York

Lazar M, De La Peña DM, Heemels W, Alamo T (2008) On input-to-state stability of min-max nonlinear model predictive control. Syst Control Lett 57(1):39–48. https://doi.org/10.1016/j.sysconle.2007.06.013

Liu DC, Nocedal J (1989) On the limited memory BFGS method for large scale optimization. Math Program 45(3):503–528. https://doi.org/10.1007/BF01589116

Lubin M, Petra CG, Anitescu M (2012) The parallel solution of dense saddle-point linear systems arising in stochastic programming. Optim Methods Softw 27(4–5):845–864. https://doi.org/10.1080/10556788.2011.602976

Lucia A (1983) An explicit Quasi-Newton update for sparse optimization calculations. Math Comput 40(161):317–322. http://www.jstor.org/stable/2007377

Lucia S, Engell S (2012) Multi-stage and two-stage robust nonlinear model predictive control. Nonlinear Model Predict Control 45(17):181–186. https://doi.org/10.3182/20120823-5-NL-3013.00015

Lucia S, Finkler T, Basak D, Engell S (2012) A new robust NMPC scheme and its application to a semi-batch reactor example. In: Proceedings of the international symposium on advanced control of chemical processes, pp 69–74. https://doi.org/10.3182/20120710-4-SG-2026.00035

Nocedal J, Wright SJ (2006) Numerical optimization, 2nd edn. Springer, Berlin. https://doi.org/10.1007/978-0-387-40065-5

Powell M, Toint PL (1981) The Shanno–Toint procedure for updating sparse symmetric matrices. IMA J Numer Anal 1(4):403–413. https://doi.org/10.1093/imanum/1.4.403

Rose D (2018) An elastic primal active-set method for structured QPs. Ph.D. Thesis. Leibniz Universität Hannover

Schmidt M (2013) A generic interior-point framework for nonsmooth and complementarity constrained nonlinear optimization. Ph.D. Thesis. Gottfried Wilhelm Leibniz Universität Hannover

Schweitzer E (1998) An interior random vector algorithm for multistage stochastic linear programs. SIAM J Optim 8(4):956–972. https://doi.org/10.1137/S105262349528456X

Steinbach MC (2001) Hierarchical sparsity in multistage convex stochastic programs. In: Uryasev SP, Pardalos PM (eds) Stochastic optimization: algorithms and applications. Kluwer Academic Publishers, Dordrecht, pp 385–410. https://doi.org/10.1007/978-1-4757-6594-6_16

Steinbach MC (2002) Tree-sparse convex programs. Math Methods Oper Res 56(3):347–376. https://doi.org/10.1007/s001860200227

Toint PL (1981) A note about sparsity exploiting quasi-Newton updates. Math Program 21(1):172–181. https://doi.org/10.1007/BF01584238

Ungar LH (1990) A bioreactor benchmark for adaptive network-based process control. In: Miller WT III, Sutton RS, Werbos PJ (eds) Neural networks for control. MIT Press, Cambridge, pp 387–402. http://dl.acm.org/citation.cfm?id=104204.104221

Vanderbei RJ, Shanno DF (1997) An interior-point algorithm for nonconvex nonlinear programming. Comput Optim Appl 13:231–252. https://doi.org/10.1023/A:1008677427361

Wächter A, Biegler LT (2006) On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math Program 106(1):25–57. https://doi.org/10.1007/s10107-004-0559-y

Acknowledgements

Open Access funding provided by Projekt DEAL. This research has been performed as part of the Energie Campus Nürnberg and supported by funding through the “Aufbruch Bayern (Bavaria on the move)” initiative of the state of Bavaria.

Funding

Funding was provided by Bayerisches Staatsministerium für Wirtschaft und Medien, Energie und Technologie (Grant No. EnCN).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hübner, J., Schmidt, M. & Steinbach, M.C. Optimization techniques for tree-structured nonlinear problems. Comput Manag Sci 17, 409–436 (2020). https://doi.org/10.1007/s10287-020-00362-9

DOI: https://doi.org/10.1007/s10287-020-00362-9

Keywords

- Nonlinear stochastic optimization

- Interior-point methods

- Structured Quasi-Newton updates

- Structured inertia correction

- Robust model predictive control