Abstract

Life-threatening acute aortic dissection (AD) demands timely diagnosis for effective intervention. To streamline intrahospital workflows, automated detection of AD in abdominal computed tomography (CT) scans may be useful to assist humans. We aimed to create a robust convolutional neural network (CNN)-based pipeline capable of real-time screening for signs of abdominal AD in CT. In this retrospective study, abdominal CT data from patients with and without AD were collected (n = 195, AD cases 94, mean age 65.9 years, female ratio 35.8%). A CNN-based algorithm was developed with the goal of enabling robust, automated, and highly sensitive detection of abdominal AD. Two sets from internal (n = 32, AD cases 16) and external sources (n = 1189, AD cases 100) were procured for validation. The abdominal region was extracted, followed by automatic isolation of the aortic region of interest (ROI), highlighting of the dissection membrane via edge extraction, and classification of the aortic ROI as dissected or healthy. Fivefold cross-validation was employed on the internal set, and an ensemble of the 5 trained models was used to predict the internal and external validation sets. Evaluation metrics included the area under the receiver operating characteristic curve (AUC) and balanced accuracy. The AUC, balanced accuracy, and sensitivity for the internal dataset (cross-validation) were 0.932 (CI 0.891–0.963), 0.860, and 0.885, respectively. For the internal validation dataset, the AUC, balanced accuracy, and sensitivity were 0.887 (CI 0.732–0.988), 0.781, and 0.875, respectively. For the external validation dataset, the AUC, balanced accuracy, and sensitivity were 0.993 (CI 0.988–0.997), 0.933, and 1.000, respectively. The proposed automated pipeline could assist humans in expediting acute aortic dissection management when integrated into clinical workflows.



Introduction

Acute aortic syndrome (AAS) comprises the life-threatening conditions of aortic dissection (AD), intramural hematoma (IMH), and penetrating atherosclerotic ulcer (PAU) [1]. In acute AD, a tear of the aortic intima leads to uncontrolled blood inflow into the aortic wall. The incidence of AD has been reported to range from 2.6 to 7.2 cases per 100,000 person-years, with a reported in-hospital mortality of 30.1% in women and 21.0% in men, creating a substantial healthcare burden [2].

Acute AD can cause severe abdominal or back pain; however, symptoms are often nonspecific and driven by secondary complications such as visceral ischemia, so that a relatively high proportion of patients are not initially suspected of having AD and undergo abdominal imaging for other reasons [3]. Modern medical management of acute AD aims at early CT-based diagnosis and prompt therapy stratification. Whereas thoracic AD involving the ascending aorta (type A) typically requires immediate intervention, AD located more distally in the aorta (type B) may be managed conservatively or with elective intervention in the absence of complications such as rupture, malperfusion of visceral organs, spinal ischemia, or lower limb ischemia [4]. In CT imaging, acute AD can in many cases be distinguished from its chronic form [5], which carries the risk of developing an abdominal aortic aneurysm (AAA) and thus a chronic risk of rupture.

In many cases, nonspecific clinical symptoms and even radiological misdiagnosis under emergency conditions, reported at 35% for type A AD and 17% for type B AD in a British setting [6], may compromise timely suspicion, diagnosis, and treatment of this rare but time-critical condition in a substantial proportion of patients [7].

Multiple factors have been addressed to streamline AD management workflows [8]. Artificial intelligence (AI)-based techniques have recently been described as promising tools capable of detecting critical findings, prioritizing cases accordingly, and eventually reducing delays [9]. Multiple groups have developed algorithms for detecting AD, mainly in thoracic CT scans. Yi et al. [10] used a 2.5D U-Net to extract aorta masks and subsequently a 3D ResNet34 CNN [11], pre-trained on MedicalNet [12], for feature extraction; the final prediction was made by a Gaussian Naive Bayes classifier combining radiomics and CNN features. The results showed high performance on internal and external datasets (internal, 341 patients: AUC = 0.948, sensitivity = 0.862, specificity = 0.923; external, 111 patients: AUC = 0.969, sensitivity = 0.978, specificity = 0.554). Despite robust results on the internal set, specificity on the small external dataset was low. Hata et al. [13] utilized a 2D Xception architecture [14], pre-trained on ImageNet [15], to classify AD in non-contrast-enhanced CT scans (170 patients). By classifying consecutive slices of the aorta, they achieved an AUC of 0.940, a sensitivity of 0.918, and a specificity of 0.882. However, the study lacked external validation, raising concerns about the generalizability of the model. Huang et al. [16] proposed a two-step hierarchical model with an attention U-Net for initial AD detection (a case was classified as AD if dissection was detected in five or more slices), followed by a ResNeXt model [17] for Stanford type classification. Their internal results showed excellent performance (AD detection: AUC = 0.980, recall = 0.960, specificity = 1.000; Stanford type: AUC = 0.950, recall = 0.947, specificity = 0.953). Despite these high internal metrics, the approach was not tested on an external dataset, leaving its robustness uncertain. Harris et al. developed a 2D five-layer CNN as a screening algorithm, trained on a dataset of 778 patients, which yielded a sensitivity of 0.878 and a specificity of 0.960 [18], and demonstrated a reduction in turnaround time (395 s) through worklist prioritization in a teleradiology setting in the USA. Cheng et al. employed a U-Net to first segment the aorta and then analyzed its circularity for AD classification, obtaining a sensitivity of 0.900 and a specificity of 0.800 on a comparably small Chinese dataset of n = 20 patients (10 AD) [19]. Yellapragada et al. described a more broadly focused 3D deep learning model for the detection of AAS in CTA scans only, trained on 3500 cases (500 containing AAS, with an unreported number of AD cases), with overall promising results [20]. Guo et al. [21] segmented the aortic ROI manually and extracted 396 radiomic features, including texture features, gray-level co-occurrence matrix, gray-level run-length matrix, gray-level size zone matrix, form factor features, and histograms. They selected the top 20 features using max-relevance min-redundancy, constructed a radiomic signature via LASSO logistic regression, and used logistic regression for classification. Their results indicated consistent performance on internal (304 patients, AUC = 0.92, recall = 0.941, specificity = 0.753) and external (74 patients, AUC = 0.90, recall = 0.857, specificity = 0.917) datasets. Notably, their method requires manual segmentation of the aorta, which is time-consuming and diminishes the benefit of automated AD detection, since a radiologist could spend that time identifying dissection cases directly. Additionally, the absence of large external datasets in these studies underscores the need for robust algorithms that generalize well across diverse (external) datasets.
Furthermore, in the medical domain, obtaining large annotated training datasets is particularly challenging because of patient privacy concerns, the extensive time required for expert annotation, and variability in imaging protocols across institutions. Hence, a reliable model that can be trained on a small internal dataset and effectively validated on a larger external dataset without manual segmentation is vital.

The aim of this analysis was to develop an easy-to-train convolutional neural network (CNN)-based AI pipeline that can be trained on a small dataset, validated on a large external dataset, and used for real-time screening of all intrahospital abdominal CT scans for signs of AD, even at peak times and independent of the acquired contrast phase. High robustness, efficient use of computing power, and a high degree of automation are therefore key requirements.

Materials and Methods

Possible conflicts of interest have been stated elsewhere. This retrospective study was approved by the local institutional review board (2021–635). Written informed consent was not required due to the retrospective design of the study.

Data Collection

Internal Training and Validation Dataset

Patients presenting with acute AD with abdominal extent between 2010/01/01 and 2021/03/01 were identified in the Radiology Information System (RIS) and included in the study if an abdominal CT exam had been performed, intentionally regardless of contrast phase or other parameters such as image quality or the presence of metallic interference. Patients with preexisting AD and scans not covering the entire abdomen were excluded. As AD-negative cases, studies from patients with matching contrast phases and patient characteristics were collected in the same way, some of which had already been used in a previous, different study [22], representing a patient data overlap (n = 85). The data were randomly split into training (80%; 163 patients) and validation (20%; 32 patients) datasets. The validation set was used only for testing and is referred to as the internal validation dataset. Patient-identifying data were removed.

External Validation Dataset

Anonymized, external, non-synthetic CT data containing healthy aortic vessels and AD were compiled from the publicly available ImageTBAD [23], AVT [24], and AbdomenCT-1K [25] collections. To avoid data overlap, we discarded the 20 KiTS19 patients from the AVT dataset, since these are also included in the AbdomenCT-1K set.

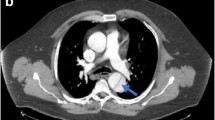

Example images are displayed in Fig. 1.

Image Annotation Strategy

Annotation was performed in Aycan Osirix (Aycan Digitalsysteme, Würzburg, Germany) by an attending radiologist (5 years of experience in cardiovascular imaging), with a separate second reading by a resident (4 years of experience). Disagreements were resolved by a senior physician with > 15 years of experience. Annotations included age, sex, CT date, contrast phase, presence of metallic interference, presence of dissection (small and subtle ADs were annotated as positive cases), exact dissection extent, occlusion or dissection of side branches (celiac trunk, superior and inferior mesenteric arteries, renal arteries, iliac arteries), and signs of visceral ischemia, intramural hematoma, or aortic thrombosis. The external dataset and its annotations were also validated.

Data Preprocessing

We extracted the abdominal region from each CT case using a heuristic implemented in [22]. The algorithm analyzes the HU distribution along the z-axis within the soft-tissue HU range to establish the upper and lower bounds of the abdomen, determines the abdomen center using high HU values, and extracts a subvolume around it. The extracted subvolumes are resampled to a size of 320 × 384 × 224 voxels with a uniform spacing of 0.9 × 0.9 × 1.5 mm³. Intensities are windowed to the [−200, 400] HU range, corresponding to the soft-tissue domain, and then rescaled to [0, 1]. Subsequently, the mask of the abdominal aorta including the iliac arteries is extracted using the TotalSegmentator algorithm [26]. To compensate for the insufficient segmentation quality of dissected vessels, aorta masks are dilated in 10 iterations via a square structuring element with a connectivity of one, ensuring complete coverage, and the CT image is then masked with the dilated mask. A dissected aorta contains a membrane separating the true and false lumen; this membrane is highlighted by extracting edges (Canny detector, σ = 2) inside the aortic ROI. Subvolume voxels that do not belong to edges are down-weighted by a factor of 0.6, while edge voxels remain unchanged. We then extract the bounding box of the weighted aortic ROI using the dilated mask with a margin of 5 voxels in each direction. Finally, the bounding box is padded or cropped to a standardized size of 224 × 224 × 224 voxels (the CNN input) to standardize the data and minimize memory usage. A subvolume of this size is sufficient to encompass the entire aortic ROI (as seen in Fig. 2), and the bounding box derived from the aorta mask centers the aortic ROI within it. The preprocessing pipeline is depicted in Fig. 2. Further data augmentation details are described in the supplemental material.
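The intensity normalization and mask dilation steps described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the aorta mask is assumed to be given (e.g., from TotalSegmentator), the "connectivity of one" is interpreted here as the 3D face-neighbourhood (centre voxel plus its 6 face neighbours), and all function names are hypothetical.

```python
import numpy as np


def window_and_rescale(ct_hu, lower=-200.0, upper=400.0):
    """Clip Hounsfield units to the soft-tissue window [lower, upper] and map it linearly to [0, 1]."""
    clipped = np.clip(np.asarray(ct_hu, dtype=np.float32), lower, upper)
    return (clipped - lower) / (upper - lower)


def dilate_connectivity_one(mask, iterations=10):
    """Binary dilation with the 3D connectivity-one structuring element
    (assumed: centre voxel plus its 6 face neighbours), repeated `iterations` times.
    Voxels outside the volume are treated as background."""
    out = np.asarray(mask, dtype=bool)
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant", constant_values=False)
        grown = out.copy()
        grown |= p[:-2, 1:-1, 1:-1] | p[2:, 1:-1, 1:-1]  # neighbours along axis 0
        grown |= p[1:-1, :-2, 1:-1] | p[1:-1, 2:, 1:-1]  # neighbours along axis 1
        grown |= p[1:-1, 1:-1, :-2] | p[1:-1, 1:-1, 2:]  # neighbours along axis 2
        out = grown
    return out


def mask_aortic_roi(ct01, aorta_mask, iterations=10):
    """Dilate the (possibly incomplete) aorta mask and zero everything outside it."""
    dilated = dilate_connectivity_one(aorta_mask, iterations)
    return np.where(dilated, ct01, 0.0), dilated
```

Where SciPy is available, `scipy.ndimage.binary_dilation(mask, structure=scipy.ndimage.generate_binary_structure(3, 1), iterations=10)` should produce the same dilated mask as the loop above.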

CT volume preprocessing pipeline. The abdominal region is automatically extracted and resized. An aorta mask is generated from this region and then dilated to mask out the aortic region of interest (ROI) from the abdominal region. To highlight the dissection membrane in AD cases, edges are extracted using the Canny detector. Voxels of the aortic ROI are weighted down by a factor of 0.6 where no edge is present. Finally, the edge-weighted aortic ROI is standardized by cropping/padding with the bounding box of the dilated aortic mask
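The edge-weighting and standardization steps can be sketched in the same way. The sketch assumes that a boolean edge map is already available: the text specifies a Canny detector with σ = 2, and because `skimage.feature.canny` operates on 2D images, applying it slice by slice would be one possible (assumed) way to obtain a 3D edge map. Centred cropping and zero padding are also assumptions, and the function names are hypothetical.

```python
import numpy as np


def edge_weight(roi, edges, non_edge_factor=0.6):
    """Keep edge voxels unchanged and scale all other voxels by `non_edge_factor`."""
    return np.where(edges, roi, roi * non_edge_factor)


def bbox_with_margin(mask, margin=5):
    """Per-axis slices of the mask's bounding box, enlarged by `margin` and clipped to the volume."""
    coords = np.argwhere(mask)
    lo = np.maximum(coords.min(axis=0) - margin, 0)
    hi = np.minimum(coords.max(axis=0) + 1 + margin, mask.shape)
    return tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))


def center_crop_or_pad(volume, target=(224, 224, 224)):
    """Centre-crop axes that are too long and zero-pad axes that are too short."""
    out = volume
    for axis, size in enumerate(target):
        current = out.shape[axis]
        if current > size:
            start = (current - size) // 2
            out = np.take(out, np.arange(start, start + size), axis=axis)
        elif current < size:
            before = (size - current) // 2
            pad = [(0, 0)] * out.ndim
            pad[axis] = (before, size - current - before)
            out = np.pad(out, pad, mode="constant")
    return out


def standardize_roi(roi, edges, dilated_mask, margin=5, target=(224, 224, 224)):
    """Edge-weight the masked ROI, cut out its bounding box and bring it to the CNN input size."""
    weighted = edge_weight(roi, edges)
    box = bbox_with_margin(dilated_mask, margin)
    return center_crop_or_pad(weighted[box], target)
```

In the described pipeline the input ROI has already been masked, so voxels outside the dilated mask are zero and the 0.6 factor effectively only affects the aortic region.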

Network Architecture

We constructed a CNN comprising 5 convolutional blocks and 1 dense block, depicted in Fig. 3. The network takes the masked, edge-weighted aortic ROI as input. Each convolutional block consists of a 3 × 3 × 3 convolution, followed by instance normalization, dropout, and ReLU activation. Dropout probabilities from the first to the last convolutional block are 0.00, 0.05, 0.10, 0.10, and 0.10, respectively. After each of the first four convolutional blocks, a 3D max-pooling operation with a stride of 2 downsamples the feature maps. The final feature vector of size 256 is produced by a 3D average-pooling operation with a kernel size of 14. Lastly, the dense block processes this vector with a dense layer followed by sigmoid activation, yielding the network output, i.e., a probability score that the case belongs to the AD class. This network was selected after testing various other networks, details of which are provided in the supplement and the Results section.
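The downsampling arithmetic implied by this description can be checked with a few lines of Python. The sketch only tracks spatial sizes; it assumes "same" padding (padding 1, stride 1) for the 3 × 3 × 3 convolutions and an average-pooling stride equal to its kernel size, neither of which is stated explicitly, and it does not model intermediate channel widths because only the final width (256) is given. In a framework such as PyTorch, such blocks would typically be assembled from `nn.Conv3d`, `nn.InstanceNorm3d`, `nn.ReLU`, `nn.MaxPool3d`, and `nn.AvgPool3d`; the function below is hypothetical.

```python
# Dropout probabilities of the five convolutional blocks, as stated in the text.
DROPOUT = (0.00, 0.05, 0.10, 0.10, 0.10)


def trace_spatial_sizes(input_size=224, n_blocks=5, pooled_blocks=4, pool_stride=2, avg_kernel=14):
    """Follow the edge length of the (cubic) feature maps through the network.

    Assumes each 3x3x3 convolution keeps the spatial size (padding 1, stride 1),
    so only the max-pooling after the first `pooled_blocks` blocks changes it.
    """
    size = input_size
    trace = []
    for block in range(n_blocks):
        trace.append((block + 1, size, DROPOUT[block]))
        if block < pooled_blocks:
            size //= pool_stride  # 3D max-pooling with stride 2
    if size < avg_kernel:
        raise ValueError("feature map is smaller than the average-pooling kernel")
    pooled = (size - avg_kernel) // avg_kernel + 1  # average pooling, stride = kernel size
    return trace, pooled


trace, pooled = trace_spatial_sizes()
for block, size, p in trace:
    print(f"block {block}: {size}^3 voxels, dropout {p:.2f}")
print(f"after average pooling: {pooled}^3 per channel")
```

Under these assumptions, four stride-2 poolings reduce 224 voxels per axis to 14, so the 14-voxel average-pooling kernel acts as global average pooling and collapses each of the 256 channels to a single value, giving the 256-element feature vector.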

Network architecture for aortic dissection classification. The input volume (edge-weighted aortic ROI) is processed by a CNN to produce a single output in the range [0, 1]. The network consists of convolutional blocks with convolutions of size three, followed by instance normalization, dropout, ReLU activation, and max-pooling of size two (except in the last convolutional block, shown in orange). The final feature vector is produced by average pooling. It is then processed by a dense layer and a sigmoid activation to produce the output

Evaluation

To select the best-performing network for further evaluation, the areas under the receiver operating characteristic curves (AUCs) of all tested networks were compared. The performance of the selected network was then assessed using sensitivity, specificity, balanced accuracy ((sensitivity + specificity)/2; suited for high class imbalance), and AUC with a 95% confidence interval. The binary classification threshold of our models (0.45) was selected on the internal cross-validation dataset to achieve high sensitivity while keeping specificity balanced. Next, we created an ensemble of the five models trained on this set to predict the internal validation and external sets. The ensemble averages the predicted probabilities of the five models for each case, and its performance was evaluated using the same threshold.
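The evaluation procedure (averaging probabilities across the five fold models, applying a fixed threshold of 0.45, and computing threshold-based metrics and the AUC) can be sketched as follows. Assumptions, none of which are specified in the text: a case is called AD-positive when its averaged probability is ≥ 0.45, the AUC is computed via its Mann–Whitney (pairwise ranking) interpretation, and the 95% CI is obtained by a percentile bootstrap. The bootstrap is an illustrative choice, not necessarily the method used here, and all function names are hypothetical.

```python
import numpy as np

THRESHOLD = 0.45  # binary threshold chosen on the internal cross-validation data


def ensemble_probability(fold_probs):
    """Average per-case probabilities over the fold models (array shape: models x cases)."""
    return np.mean(np.asarray(fold_probs, dtype=float), axis=0)


def threshold_metrics(y_true, prob, threshold=THRESHOLD):
    """Sensitivity, specificity and balanced accuracy at a fixed threshold."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(prob, dtype=float) >= threshold  # assumed: ">=" counts as AD
    tp = np.sum(y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    tn = np.sum(~y_true & ~y_pred)
    fp = np.sum(~y_true & y_pred)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": float(sensitivity),
        "specificity": float(specificity),
        "balanced_accuracy": float((sensitivity + specificity) / 2),
    }


def roc_auc(y_true, prob):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    y_true = np.asarray(y_true, dtype=bool)
    prob = np.asarray(prob, dtype=float)
    pos = prob[y_true][:, None]
    neg = prob[~y_true][None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))


def bootstrap_auc_ci(y_true, prob, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the AUC, resampling cases with replacement."""
    rng = np.random.default_rng(seed)
    y_true = np.asarray(y_true, dtype=bool)
    prob = np.asarray(prob, dtype=float)
    n = len(y_true)
    aucs = []
    while len(aucs) < n_boot:
        idx = rng.integers(0, n, size=n)
        if y_true[idx].all() or not y_true[idx].any():
            continue  # a resample needs both classes for the AUC to be defined
        aucs.append(roc_auc(y_true[idx], prob[idx]))
    lower, upper = np.quantile(aucs, [alpha / 2, 1 - alpha / 2])
    return float(lower), float(upper)
```

As a usage example, 16 AD and 16 non-AD cases with 14 true positives and 11 true negatives give a sensitivity of 0.875, a specificity of 0.6875, and a balanced accuracy of 0.78125 with `threshold_metrics`.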

Results

Patient and Dataset Characteristics

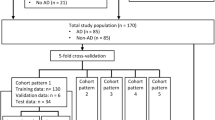

Of the total of n = 266 cases, n = 163 scans were used for training and n = 32 for validation; for the remaining cases, either images were not available or the abdominal aorta was not fully covered. AD patients were on average 65.9 ± 13.5 (29–93) years of age, and 36% were female. Among the training cases, suprarenal AD was present in 72.0% and infrarenal AD in 79.3%; 9.8% presented with intramural hematoma, and 56.1% showed partial aortic lumen thrombosis. The external public dataset used for validation comprised n = 1189 cases (100 AD cases). Details are provided in Table 1, and patient selection criteria are shown in Fig. 4.

Classification Results

Of the four tested networks, our 5-layer CNN yielded the highest AUC (0.932) in cross-validation (Table 2) and was therefore chosen for further investigation. Its performance on the internal and external validation sets is provided in Table 3. The confusion matrices and ROC curves are illustrated in Fig. S1 (supplement) and Fig. 5, respectively. The entire workflow, including preprocessing, aorta segmentation, and prediction, was tested on the internal training (cross-validation) and validation datasets and takes approximately 45 s (model prediction alone: 0.003 s).

Internal Set

The balanced accuracy for fivefold cross-validation on the internal set (test folds only) is 0.860 (Table 3). The corresponding sensitivity is 0.885 (69/78) and the specificity 0.835 (71/85), with an AUC of 0.932 (CI 0.891–0.963); the confusion matrix is shown in Fig. S1 (a) and the ROC curve in Fig. 5 (red). Of the nine missed AD cases, six had clearly visible AD and three presented with very subtle signs of AD (further described in the supplemental material and Fig. S4).

Internal Validation Set

The sensitivity and specificity for the internal validation set are 0.875 (14/16) and 0.688 (11/16), respectively, with a balanced accuracy of 0.781. The AUC is 0.887 (CI 0.732–0.988), as shown in Fig. S1 (b) and Fig. 5 (blue). Of the five FP cases, two had a very subtle form of AD (examples in supplementary Fig. S3).

External Set

For the external validation dataset, a balanced accuracy score of 0.933 was obtained with sensitivity and specificity of 1.000 (100/100) and 0.865 (942/1089), respectively (Table 3, Fig. S1 (c)). Furthermore, the AUC value is 0.993 (CI 0.988–0.997) (Fig. 5 (green)).
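For reference, the balanced accuracies reported for the three datasets follow directly from the confusion-matrix counts given above:

$$
\mathrm{BA} = \frac{1}{2}\left(\frac{TP}{TP+FN} + \frac{TN}{TN+FP}\right)
$$

$$
\begin{aligned}
\text{Internal (cross-validation):}\quad & \tfrac{1}{2}\left(\tfrac{69}{78} + \tfrac{71}{85}\right) \approx \tfrac{1}{2}(0.885 + 0.835) \approx 0.860\\
\text{Internal validation:}\quad & \tfrac{1}{2}\left(\tfrac{14}{16} + \tfrac{11}{16}\right) = \tfrac{1}{2}(0.875 + 0.6875) \approx 0.781\\
\text{External validation:}\quad & \tfrac{1}{2}\left(\tfrac{100}{100} + \tfrac{942}{1089}\right) \approx \tfrac{1}{2}(1.000 + 0.865) \approx 0.933
\end{aligned}
$$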

Discussion

This study demonstrated that rapid, automated, AI-based aortic dissection detection from CT images is feasible in an academic hospital in Germany. Across all datasets, an AUC of ≥ 0.887 and sensitivities and specificities of ≥ 87.5% and ≥ 68.8%, respectively, were consistently achieved. The strengths of this study are the comparably low training effort and the testing on heterogeneous, real-world internal and external datasets with good overall processing times, indicating promising potential for clinical implementation as an alert system in AD management.

AI-based approaches have demonstrated great potential for the detection of various pathologies in abdominal emergency imaging [9] and for supporting the management of chronic and acute vascular pathologies [27]. Both structured text-based clinical data and imaging data have been leveraged for AD detection, rupture risk assessment, segmentation, therapy planning, and mortality prediction [28,29,30]. AI-based analysis of conventional chest radiographs has been shown to achieve a precision of 90.2% in the detection of AD [31]. Early CT-based AD characterization tools used rule-based techniques and small datasets of n < 20 ADs [32]. More recently, CNN-based algorithms have been proposed, as outlined in the Introduction. In contrast to most other algorithms, this study focused on the abdominal region and on comparably heterogeneous data with respect to CT scanners, contrast phase, and morphological characteristics of AD, resulting in sensitivities from 87.5 to 100% and specificities from 68.8 to 86.5%. When the algorithm is used to prioritize AD-positive cases, high sensitivity remains paramount, and optimizing the threshold accordingly is a very important design decision, although it comes at the cost of a higher false-positive rate. An understanding of human performance is of high interest when interpreting AI performance. Nienaber et al. found a human sensitivity and specificity of 93.8% and 87.1% for the detection of acute AD in the thoracic region [33], which is further underlined by the findings of Dreisbach et al., who described substantial error rates of CT reading in acute AD under emergency conditions, depending on reader experience [6]. An important goal for further research is therefore a detailed understanding of human performance, both alone and with AI support, under realistic conditions in a prospective setup.

Clinical implementation of AI has lagged behind expectations in the past [34], owing to limited performance, lack of trust in AI systems, and poor workflow integration, among other reasons [35]. A practical way of implementing this algorithm would be to automatically prioritize acute AD cases by re-ordering radiology reading lists [30], which has been shown to reduce delays in pulmonary embolism management [36], or to alert specialized vascular care teams. Importantly, physicians would realistically expect the algorithm not to miss any case of AD, and failures in this respect can be expected to undermine trust in a clinical setting; therefore, the optimization of parameters contributing to sensitivity, as well as the quality of user training, requires close attention. Moreover, a broader AI solution also covering chronic AD, PAU, and IMH would increase clinical utility and should therefore be added with priority. To overcome the black-box problem, a graphical visualization of the dissection membrane within the aorta in each detected case could offer substantial merit.

The results of this study are limited by several factors. First, owing to the retrospective design, selection bias was inevitable. As AD remains a rare condition, the dataset inevitably contains a limited number of patient cases. This limitation is particularly evident in our internal cases and extends to the variety of CT scanners and morphological differences between cases. Even though thorough external validation on a newly compiled dataset was performed, generalizability requires confirmation, and prospective multicenter testing with more patient cases therefore represents an important next goal. Additionally, generating synthetic training data using latent diffusion models could help overcome these limitations.

Second, even though the results are promising, balancing sensitivity and specificity remains a major issue. External validation demonstrated perfect sensitivity, but owing to limited specificity, more than half of the positive predictions (147 of 247) would be false positives. On the internal training (cross-validation) and validation datasets, on the other hand, nine and two cases of AD, respectively, were not detected. While some of these cases contained very subtle ADs and may have been missed for anatomical reasons, others presented with clear ADs where, for example, the isolation of the aortic vessel did not work correctly. Both the inclusion of larger training datasets and detailed refinement and improvement of automated segmentation of the aortic lumen, independent of external components such as TotalSegmentator or the Canny detector (for AD cases), might help improve performance. Third, owing to the design decision not to create a segmentation-based algorithm, possibilities for graphical visualization remain limited; these could possibly be added in the future. Last, the focus on abdominal imaging and on AD alone limits the clinical benefit to patients who present with abdominal AD; future work should therefore include the full range of vascular pathologies and the thoracic region.

Conclusion

In conclusion, the proposed algorithm yielded a sensitivity of ≥ 87.5% for the detection of AD in heterogeneous abdominal CT scans, which was confirmed on both an internal and an external validation dataset. It therefore seems promising as a detection tool for abdominal AD, offering the potential for earlier detection of AD, especially in patients with unclear symptoms, and thus improved management. Further in-hospital testing is encouraged and necessary.

Data Availability

Data generated or analyzed during the study are available from the corresponding author upon reasonable request.

Abbreviations

- AD: Aortic dissection
- AUC: Area under the receiver operating characteristic curve
- CT: Computed tomography
- CNN: Convolutional neural network
- HU: Hounsfield units
- ROI: Region of interest
- CI: Confidence interval
- TP: True positive
- TN: True negative
- FP: False positive
- FN: False negative
- IMH: Intramural hematoma
- PAU: Penetrating atherosclerotic ulcer

References

Vilacosta I, San Román JA, di Bartolomeo R, Eagle K, Estrera AL, Ferrera C, Kaji S, Nienaber CA, Riambau V, Schäfers H-J, Serrano FJ, Song J-K, Maroto L (2021) Acute Aortic Syndrome Revisited. J Am Coll Cardiol 78:2106–2125. https://doi.org/10.1016/j.jacc.2021.09.022

Bossone E, Eagle KA (2021) Epidemiology and management of aortic disease: aortic aneurysms and acute aortic syndromes. Nat Rev Cardiol 18:331–348. https://doi.org/10.1038/s41569-020-00472-6

Wundram M, Falk V, Eulert-Grehn J-J, Herbst H, Thurau J, Leidel BA, Göncz E, Bauer W, Habazettl H, Kurz SD (2020) Incidence of acute type A aortic dissection in emergency departments. Sci Rep 10:7434. https://doi.org/10.1038/s41598-020-64299-4

Benkert AR, Gaca JG (2021) Initial Medical Management of Acute Aortic Syndromes. In: Sellke FW, Coselli JS, Sundt TM, Bavaria JE, Sodha NR (eds) Aortic Dissection and Acute Aortic Syndromes. Springer International Publishing, Cham, pp 119–129

Orabi NA, Quint LE, Watcharotone K, Nan B, Williams DM, Kim KM (2018) Distinguishing acute from chronic aortic dissections using CT imaging features. Int J Cardiovasc Imaging 34:1831–1840. https://doi.org/10.1007/s10554-018-1398-x

Dreisbach JG, Rodrigues JC, Roditi G (2021) Emergency CT misdiagnosis in acute aortic syndrome. Br J Radiol 94:20201294. https://doi.org/10.1259/bjr.20201294

Nienaber CA, Clough RE (2015) Management of acute aortic dissection. The Lancet 385:800–811. https://doi.org/10.1016/S0140-6736(14)61005-9

Harris KM, Strauss CE, Eagle KA, Hirsch AT, Isselbacher EM, Tsai TT, Shiran H, Fattori R, Evangelista A, Cooper JV, Montgomery DG, Froehlich JB, Nienaber CA (2011) Correlates of Delayed Recognition and Treatment of Acute Type A Aortic Dissection. Circulation 124:1911–1918. https://doi.org/10.1161/CIRCULATIONAHA.110.006320

Liu J, Varghese B, Taravat F, Eibschutz LS, Gholamrezanezhad A (2022) An Extra Set of Intelligent Eyes: Application of Artificial Intelligence in Imaging of Abdominopelvic Pathologies in Emergency Radiology. Diagn Basel Switz 12:1351. https://doi.org/10.3390/diagnostics12061351

Yi Y, Mao L, Wang C, Guo Y, Luo X, Jia D, Lei Y, Pan J, Li J, Li S, Li X-L, Jin Z, Wang Y (2022) Advanced Warning of Aortic Dissection on Non-Contrast CT: The Combination of Deep Learning and Morphological Characteristics. Front Cardiovasc Med 8.

He K, Zhang X, Ren S, Sun J (2015) Deep Residual Learning for Image Recognition

Chen S, Ma K, Zheng Y (2019) Med3D: Transfer Learning for 3D Medical Image Analysis

Hata A, Yanagawa M, Yamagata K, Suzuki Y, Kido S, Kawata A, Doi S, Yoshida Y, Miyata T, Tsubamoto M, Kikuchi N, Tomiyama N (2021) Deep learning algorithm for detection of aortic dissection on non-contrast-enhanced CT. Eur Radiol 31:1151–1159. https://doi.org/10.1007/s00330-020-07213-w

Chollet F (2017) Xception: Deep Learning with Depthwise Separable Convolutions

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition. pp 248–255

Huang L-T, Tsai Y-S, Liou C-F, Lee T-H, Kuo P-TP, Huang H-S, Wang C-K (2021) Automated Stanford classification of aortic dissection using a 2-step hierarchical neural network at computed tomography angiography. Eur Radiol. https://doi.org/10.1007/s00330-021-08370-2

Xie S, Girshick R, Dollár P, Tu Z, He K (2017) Aggregated Residual Transformations for Deep Neural Networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://ieeexplore.ieee.org/document/8100117. Accessed 22 May 2024

Harris RJ, Kim S, Lohr J, Towey S, Velichkovich Z, Kabachenko T, Driscoll I, Baker B (2019) Classification of Aortic Dissection and Rupture on Post-contrast CT Images Using a Convolutional Neural Network. J Digit Imaging 32:939–946. https://doi.org/10.1007/s10278-019-00281-5

Cheng J, Tian S, Yu L, Ma X, Xing Y (2020) A deep learning algorithm using contrast-enhanced computed tomography (CT) images for segmentation and rapid automatic detection of aortic dissection. Biomed Signal Process Control 62:102145. https://doi.org/10.1016/j.bspc.2020.102145

Yellapragada MS, Xie Y, Graf B, Richmond D, Krishnan A, Sitek A (2020) Deep Learning Based Detection of Acute Aortic Syndrome in Contrast CT Images. In: 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). pp 1474–1477

Guo T, Fang Z, Yang G, Zhou Y, Ding N, Peng W, Gong X, He H, Pan X, Chai X (2021) Machine Learning Models for Predicting In-Hospital Mortality in Acute Aortic Dissection Patients. Front Cardiovasc Med 8:727773. https://doi.org/10.3389/fcvm.2021.727773

Golla A-K, Tönnes C, Russ T, Bauer DF, Froelich MF, Diehl SJ, Schoenberg SO, Keese M, Schad LR, Zöllner FG, Rink JS (2021) Automated Screening for Abdominal Aortic Aneurysm in CT Scans under Clinical Conditions Using Deep Learning. Diagn Basel Switz 11:2131. https://doi.org/10.3390/diagnostics11112131

Yao Z, Xie W, Zhang J, Dong Y, Qiu H, Yuan H, Jia Q, Wang T, Shi Y, Zhuang J, Que L, Xu X, Huang M (2021) ImageTBAD: A 3D Computed Tomography Angiography Image Dataset for Automatic Segmentation of Type-B Aortic Dissection. Front Physiol 12:

Radl L, Jin Y, Pepe A, Li J, Gsaxner C, Zhao F-H, Egger J (2022) AVT: Multicenter aortic vessel tree CTA dataset collection with ground truth segmentation masks. Data Brief 40:107801. https://doi.org/10.1016/j.dib.2022.107801

Ma J, Zhang Y, Gu S, Zhu C, Ge C, Zhang Y, An X, Wang C, Wang Q, Liu X, Cao S, Zhang Q, Liu S, Wang Y, Li Y, He J, Yang X (2022) AbdomenCT-1K: Is Abdominal Organ Segmentation a Solved Problem? IEEE Trans Pattern Anal Mach Intell 44:6695–6714. https://doi.org/10.1109/TPAMI.2021.3100536

Wasserthal J, Breit H-C, Meyer MT, Pradella M, Hinck D, Sauter AW, Heye T, Boll DT, Cyriac J, Yang S, Bach M, Segeroth M (2023) TotalSegmentator: Robust Segmentation of 104 Anatomic Structures in CT Images. Radiol Artif Intell 5:e230024. https://doi.org/10.1148/ryai.230024

Javidan AP, Li A, Lee MH, Forbes TL, Naji F (2022) A Systematic Review and Bibliometric Analysis of Applications of Artificial Intelligence and Machine Learning in Vascular Surgery. Ann Vasc Surg 85:395–405. https://doi.org/10.1016/j.avsg.2022.03.019

Hahn LD, Mistelbauer G, Higashigaito K, Koci M, Willemink MJ, Sailer AM, Fischbein M, Fleischmann D (2020) CT-based True- and False-Lumen Segmentation in Type B Aortic Dissection Using Machine Learning. Radiol Cardiothorac Imaging 2:e190179. https://doi.org/10.1148/ryct.2020190179

Li B, Feridooni T, Cuen-Ojeda C, Kishibe T, de Mestral C, Mamdani M, Al-Omran M (2022) Machine learning in vascular surgery: a systematic review and critical appraisal. NPJ Digit Med 5:7. https://doi.org/10.1038/s41746-021-00552-y

Mastrodicasa D, Codari M, Bäumler K, Sandfort V, Shen J, Mistelbauer G, Hahn LD, Turner VL, Desjardins B, Willemink MJ, Fleischmann D (2022) Artificial Intelligence Applications in Aortic Dissection Imaging. Semin Roentgenol 57:357–363. https://doi.org/10.1053/j.ro.2022.07.001

Lee DK, Kim JH, Oh J, Kim TH, Yoon MS, Im DJ, Chung JH, Byun H (2022) Detection of acute thoracic aortic dissection based on plain chest radiography and a residual neural network (Resnet). Sci Rep 12:21884. https://doi.org/10.1038/s41598-022-26486-3

Chen D, Zhang X, Mei Y, Liao F, Xu H, Li Z, Xiao Q, Guo W, Zhang H, Yan T, Xiong J, Ventikos Y (2021) Multi-stage learning for segmentation of aortic dissections using a prior aortic anatomy simplification. Med Image Anal 69:101931. https://doi.org/10.1016/j.media.2020.101931

Nienaber CA, von Kodolitsch Y, Nicolas V, Siglow V, Piepho A, Brockhoff C, Koschyk DH, Spielmann RP (1993) The diagnosis of thoracic aortic dissection by noninvasive imaging procedures. N Engl J Med 328:1–9. https://doi.org/10.1056/NEJM199301073280101

Fujimori R, Liu K, Soeno S, Naraba H, Ogura K, Hara K, Sonoo T, Ogura T, Nakamura K, Goto T (2022) Acceptance, Barriers, and Facilitators to Implementing Artificial Intelligence-Based Decision Support Systems in Emergency Departments: Quantitative and Qualitative Evaluation. JMIR Form Res 6:e36501. https://doi.org/10.2196/36501

Eltawil FA, Atalla M, Boulos E, Amirabadi A, Tyrrell PN (2023) Analyzing Barriers and Enablers for the Acceptance of Artificial Intelligence Innovations into Radiology Practice: A Scoping Review. Tomogr Ann Arbor Mich 9:1443–1455. https://doi.org/10.3390/tomography9040115

Topff L, Ranschaert ER, Bartels-Rutten A, Negoita A, Menezes R, Beets-Tan RGH, Visser JJ (2023) Artificial Intelligence Tool for Detection and Worklist Prioritization Reduces Time to Diagnosis of Incidental Pulmonary Embolism at CT. Radiol Cardiothorac Imaging 5:e220163. https://doi.org/10.1148/ryct.220163

Funding

Open Access funding enabled and organized by Projekt DEAL. This research has been funded as part of the “DeepRAY” project under the funding code BW1_1523/02 by the Ministry of Economy, Employment and Tourism of the State of Baden-Württemberg, Germany.

Author information


Contributions

Guarantors of the integrity of the entire study, AR, JSR, FGZ; study concepts/study design or data acquisition or data analysis/interpretation, all authors; manuscript drafting or manuscript revision for important intellectual content, all authors; approval of the final version of the submitted manuscript, all authors; agrees to ensure any questions related to the work are appropriately resolved, all authors; literature research, JSR, AR, FGZ; clinical studies, JSR, AA, HK; experimental studies, AR, FGZ; statistical analysis, AR; and manuscript editing, JSR, AR, FGZ.


Ethics declarations

Ethics Approval

This retrospective study was approved by the local institutional review board (2021–635). Written informed consent was not required owing to the retrospective nature of the study.

Competing Interests

SOS: the Department of Radiology and Nuclear Medicine has general research agreements with Siemens Healthineers. FGZ: the Department of Computer Assisted Clinical Medicine has general research agreements with Siemens Healthineers. Others: no conflicts of interest declared.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Electronic supplementary material is available with the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Raj, A., Allababidi, A., Kayed, H. et al. Streamlining Acute Abdominal Aortic Dissection Management—An AI-based CT Imaging Workflow. J Digit Imaging. Inform. med. (2024). https://doi.org/10.1007/s10278-024-01164-0
