Abstract

Radiomics extracts hundreds of features from medical images to quantitatively characterize a region of interest (ROI). When applying radiomics, imbalanced or small dataset issues are commonly addressed using under- or over-sampling, the latter being applied directly to the extracted features. The aim of this study is to propose a novel balancing and data augmentation technique by applying perturbations (erosion, dilation, contour randomization) to the ROI in cardiac computed tomography images. From the perturbed ROIs, radiomic features are extracted, thus creating additional samples. This approach was tested on the clinical problem of distinguishing cardiac amyloidosis (CA) from aortic stenosis (AS) and hypertrophic cardiomyopathy (HCM). Twenty-one CA, thirty-two AS and twenty-one HCM patients were included in the study. From each original and perturbed ROI, 107 radiomic features were extracted. The CA-AS dataset was balanced using the perturbation-based method along with random over-sampling, adaptive synthetic (ADASYN) and the synthetic minority oversampling technique (SMOTE). The same methods were tested to perform data augmentation dealing with CA and HCM. Features were submitted to robustness, redundancy, and relevance analysis testing five feature selection methods (p-value, least absolute shrinkage and selection operator (LASSO), semi-supervised LASSO, principal component analysis (PCA), semi-supervised PCA). A support vector machine performed the classification tasks, and its performance was evaluated by means of a 10-fold cross-validation. The perturbation-based approach provided the best performance in terms of f1 score and balanced accuracy in both CA-AS (f1 score: 80%, AUC: 0.91) and CA-HCM (f1 score: 86%, AUC: 0.92) classifications. These results suggest that ROI perturbations represent a powerful approach to address both data balancing and augmentation issues.


Introduction

Radiomics is an emerging field of research focused on extracting quantitative data from medical images with the aim of delivering increasingly accurate personalized medicine. Radiomics models have been widely employed in oncology, due to their utility in classification of tumors [1, 2], prediction of treatment response [3,4,5], and prognostication [6]; recently, they have also gained increasing interest for the diagnosis and assessment of prognosis of cardiovascular disease [7,8,9,10,11,12,13,14].

When dealing with medical image datasets, common challenges are class imbalance and lack of data. These issues stem from the low prevalence of some diseases, poor medical equipment or resources, difficulties in obtaining images that meet specific requirements, or the complex and time-consuming tasks of image labeling and segmentation [15]. Imbalanced or small datasets may negatively affect machine learning model performance, leading to overfitting, biased outcomes, and inaccurate results. Therefore, it is crucial to address these issues when training machine learning models.

For imbalanced datasets, resampling procedures are usually applied as a pre-processing step: these methods can be organized into under-sampling, over-sampling, and hybrid methods [16].

Under-sampling achieves class balancing by removing some instances from the majority class. Several under-sampling methods, with different filtering principles, have been implemented: Random Under-Sampling, Near Miss, Tomek Links, and Edited Nearest Neighbors (ENN) [17, 18]. Random Under-Sampling discards random samples from the majority class; Near Miss selects majority samples close to some minority samples; Tomek Links identifies pairs of instances as boundary or noisy instances and uses them to remove samples from the majority class; ENN classifies each instance with k Nearest Neighbors (kNN) on the rest of the samples and discards it if it is incorrectly classified [16].

With over-sampling methods, instead, balancing is reached by generating new data based on samples from the minority class. Within this resampling class, the Synthetic Minority Over-Sampling Technique (SMOTE) is the most widely used method; it employs a kNN approach to produce new minority instances between the existing ones. Other over-sampling techniques have been tested, such as random over-sampling [16], adaptive synthetic (ADASYN), and borderline SMOTE [17, 18]. Random over-sampling (ROS) copies random minority class samples, while ADASYN is based on SMOTE and creates new instances according to the data distribution and density, thus generating different numbers of new samples. Borderline SMOTE performs SMOTE on borderline samples, which are instances that are often misclassified by their nearest neighbors [16].

Finally, hybrid systems combine minority class over-sampling with majority class under-sampling. These methods include SMOTE-ENN, which combines SMOTE for over-sampling and ENN for under-sampling, and SMOTE-Tomek, which uses SMOTE for over-sampling and Tomek Links for under-sampling. These two hybrid methods were employed in [17] and [18] with the purpose of balancing the training dataset and removing the noisy points on the wrong side of the decision boundary, yielding better models with good generalization ability [16].

When dealing with small datasets, data augmentation strategies are generally applied to the training data to reduce the risk of overfitting. Data augmentation is commonly used in the field of deep learning, which requires many training samples to produce an effective model. Augmentation is usually achieved in two ways: by generating different images from the original ones, such as images cropped at different locations, noise-added images, or mirrored images, or by using a generative adversarial network (GAN) [19]. To the best of our knowledge, no study dealing with medical imaging has performed feature augmentation in the machine learning field. Only in [20] did the authors propose a new data augmentation technique employing the Conditional Wasserstein GAN. This approach consists of two networks, a generator and a discriminator, which work together to generate realistic EEG features. However, it should be noted that all the resampling methods previously cited might be employed to perform data augmentation.

In this study, both class imbalance and small dataset issues were addressed in radiomics-based models aimed at differentiating cardiac amyloidosis (CA) from aortic stenosis (AS) and hypertrophic cardiomyopathy (HCM). As demonstrated in [21] and [22], radiomics represents a promising non-invasive tool to conduct a differential diagnosis of CA using medical images, such as cardiac computed tomography (CCT), acquired in the clinical routine.

CA is an infiltrative disease characterized by the extracellular deposition of misfolded amyloidogenic proteins. It is an underestimated cause of heart failure and cardiac arrhythmias, often diagnosed only at autopsy [23]. Due to its phenotypical features, such as increased biventricular wall thickness, myocardial stiffening, and restrictive physiology of the left and right ventricles, CA has often been misdiagnosed as AS or HCM [24, 25]. AS is the most common valvular heart disease, triggered by a progressive aortic valve narrowing, leading to heart failure, while HCM is a common inherited cardiovascular disease whose main macroscopic characteristics are myocardial wall thickening and stiffening, and myocyte hypertrophy. Due to the absence of specific symptoms and the need for specific diagnostic tools, which are not available in some cases, the diagnosis of CA is difficult and time-consuming. In addition, differentiating this relatively rare disease from other pathologies is extremely important because of the different therapeutic options and long-term prognosis [26, 27]. Lastly, the recent emergence of novel and effective treatments, improving the prognosis of patients with CA, has significantly enhanced the importance of early detection of this disease.

The aim of this work is to develop new balancing and augmentation methods, starting from region of interest (ROI) perturbations. In particular, ROIs were perturbed, i.e., slightly modified by applying geometrical operations, namely erosion, dilation, and contour randomization, and the features extracted from the perturbed ROIs were used to balance or augment the original data. Thus, the final aim of the study is to investigate the impact of this novel image-based method and compare it with the most commonly used data-based resampling methods, i.e., ROS, ADASYN, and SMOTE, in two classification tasks: distinguishing CA from AS and CA from HCM. In the first task, perturbations are employed to balance the dataset, while in the second they are used to perform data augmentation.

In addition, the developed method was validated by performing data augmentation on an external set, including cardiac magnetic resonance (CMR) images for analyzing epicardial adipose tissue (EAT). EAT is the fat depot existing between the heart muscle’s surface and the inner layer of the pericardium and its biological activity can potentially influence major health issues like coronary artery disease, atrial fibrillation, and heart failure. In the context of EAT, literature reports promising results in classifying and predicting atrial fibrillation using radiomic features [28,29,30]. In the current study, we addressed a different classification task involving the identification of patients experiencing a hard cardiac event (HCE).

Materials and Methods

CCT Acquisition Protocol

CCT examinations were performed using a 256-slice (Revolution CT; GE Healthcare, Milwaukee, WI) or a 320-slice wide volume coverage CT scanner (Aquilion ONE Vision™; Canon Medical Systems Corp., Tokyo, Japan).

No premedication with beta-blockers or nitrates was administered before CT acquisition. Patients received a fixed 50-ml bolus of contrast medium (400 mg of iodine per milliliter, Iomeprol; Bracco, Milan, Italy), regardless of BMI, via an antecubital vein at an infusion rate of 5 ml·s−1, followed by 50 ml of saline solution at 5 ml·s−1.

CMR Acquisition Protocol

CMR studies were performed with a 1.5-T Discovery MR450 (G.E. Healthcare, Milwaukee, Wisconsin). Breath-hold steady-state free-precession cine imaging was performed in vertical and horizontal long- and short-axis orientations.

A contrast-enhanced breath-hold segmented T1-weighted inversion-recovery gradient-echo sequence was used for the detection of late gadolinium enhancement. Steady-state free-precession cine sequences were acquired using the following parameters: echo time 1.57 ms, 15 segments, repetition time 46 ms without view sharing, slice thickness 8 mm, field of view 350 × 263 mm, and pixel size 1.4 × 2.2 mm. Late gadolinium enhancement imaging was performed 10 to 20 min after administration of an intravenous bolus of gadolinium at a flow rate of 4 mL/s followed by saline flush. The inversion time was individually adjusted to null normal myocardium (usual range 220 to 300 ms). The following parameters were used: FOV: 380 to 420 mm; TR/TE: 4.6/1.3 ms; α: 20°; matrix: 256 × 192; slice thickness: 8 mm; and no interslice gap.

Study Population and Baseline Characteristics

The study included 21 patients affected by CA, 21 patients with HCM, and 32 patients with AS. In all patients, CA was detected through bone scintigraphy using 3,3-diphosphono-1,2-propanodicarboxylic acid (DPD) and confirmed with myocardial biopsy (Congo red and immunohistochemical staining) in case of uncertain diagnosis. In AS patients, the concomitant presence of CA was excluded by bone scintigraphy and/or cardiac magnetic resonance (CMR). All patients underwent comprehensive evaluation with transthoracic echocardiography using commercially available equipment (iE33 or Epiq, Philips Medical System, or Vivid-9, GE Healthcare), measuring LV end-diastolic (LVEDV) and end-systolic (LVESV) volumes indexed for body surface area, LV ejection fraction (LVEF), and intraventricular septum thickness (IVS). Clinical characteristics are shown in Table 1.

The external validation set included 40 patients, 20 of whom experienced an HCE. These patients were referred for clinically indicated dipyridamole stress CMR for suspected or known coronary artery disease between January 2011 and December 2014 at Centro Cardiologico Monzino (Milan, Italy). Clinical characteristics are shown in Table 2.

The institutional Ethical Committee approved the studies, and all the patients signed the informed consent.

Image Processing and Radiomics Feature Extraction

CCT images were manually segmented by an expert cardiac imager with level III European Association of Cardiovascular Imaging certification to obtain the left ventricular wall. Two image preprocessing steps were performed: a 3D Gaussian filter with a 3 × 3 × 3 voxel kernel and σ = 0.5 was applied to denoise the images, and a B-spline interpolation was performed to resample the voxel size to an isotropic resolution of 2 mm (as in [30]).
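As a rough illustration of the denoising step, the following Python sketch builds a normalized 3 × 3 × 3 Gaussian kernel with σ = 0.5 and applies it to a volume. It is not the MATLAB code used in the study: the function names, the random test volume, and the edge-replication padding are assumptions made for the example.

```python
import numpy as np

def gaussian_kernel_3d(size=3, sigma=0.5):
    """Separable Gaussian kernel of shape (size, size, size) that sums to 1."""
    offsets = np.arange(size) - size // 2
    g = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    return g[:, None, None] * g[None, :, None] * g[None, None, :]

def smooth_volume(volume, kernel):
    """Filter a 3D volume with a small kernel (assumption: edges are replicated)."""
    volume = np.asarray(volume, dtype=float)
    radius = kernel.shape[0] // 2
    padded = np.pad(volume, radius, mode="edge")
    out = np.zeros_like(volume)
    nz, ny, nx = volume.shape
    # Accumulate the weighted, shifted copies of the padded volume.
    for dz in range(kernel.shape[0]):
        for dy in range(kernel.shape[1]):
            for dx in range(kernel.shape[2]):
                out += kernel[dz, dy, dx] * padded[dz:dz + nz, dy:dy + ny, dx:dx + nx]
    return out

kernel = gaussian_kernel_3d(size=3, sigma=0.5)
noisy = np.random.default_rng(0).normal(size=(8, 16, 16))  # stand-in for a CCT volume
denoised = smooth_volume(noisy, kernel)
```

With σ = 0.5 most of the weight stays on the central voxel, so the filter removes high-frequency noise while changing the image only mildly.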

EAT was manually segmented by an expert cardiac imager with level III European Association of Cardiovascular Imaging certification. Image pre-processing was performed to denoise the images using a 3D Gaussian filter with a 3 × 3 × 3 voxel kernel and σ = 0.5. Intensity non-uniformities were corrected using the N4ITK algorithm, and intensity standardization was performed with the z-score. Finally, B-spline interpolation was employed to resample the voxel size to a 2-mm isotropic resolution.

MATLAB R2017a (MathWorks, Natick, MA, USA) was used to perform all the previous steps.

From each dataset, a total of 107 radiomic features were extracted from the segmented ROIs using Pyradiomics 3.0 [31]. The features obtained belong to three categories: shape and size (14 features), first-order statistics (18 features), and texture (75 features), the latter being based on the grey level co-occurrence matrix (GLCM), grey level run length matrix (GLRLM), and grey level size zone matrix (GLSZM).

Radiomic Feature Evaluation

Radiomic features underwent a series of feature selection steps based on robustness, redundancy, and relevance analysis.

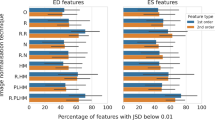

To evaluate robustness, i.e., the stability and discriminative capability of the extracted features, small and large ROI perturbations were performed following the approaches described in [32] and [33]. The underlying hypothesis is that small perturbations mimic the effects of multiple random delineations, while large transformations help to identify non-discriminant features. In particular, three small perturbations were used to assess feature stability, namely erosion, dilation, and contour randomization, while a large translation was performed to assess feature discrimination capacity (see Fig. 1). Only first-order and textural features were submitted to this evaluation.

Example of perturbations applied to the ROI. The filled structure represents the original ROI, while the dashed red line represents the modified ROI used to assess feature stability. a) ROI dilation (zoom-in with τ = 0.15). b) ROI erosion (zoom-out with τ = −0.15). c) ROI contour randomization with superpixel area equal to 25 mm². d) ROI translation of 30% in the positive x direction

The entire workflow was implemented in MATLAB 2022a (MathWorks, Natick, MA, USA) and applied to each single CCT slice.

Erosion and dilation were used to reduce and enlarge the segmentation mask, respectively. These transformations are achieved by employing a kernel, represented as a matrix of 0s and 1s, which slides across the image. For this study, a 3 × 3 kernel was created, encompassing a pixel p with coordinates (x, y), its two horizontal neighbors [(x + 1, y), (x − 1, y)], and its two vertical neighbors [(x, y + 1), (x, y − 1)]. During the erosion process, a pixel in the original image is set to 1 only if all the pixels covered by the kernel are also 1. On the other hand, in ROI dilation, a pixel in the original image (either 1 or 0) is set to 1 if at least one of the pixels covered by the kernel is 1. The final area achieved is Af = [A0 (1 + τ)], where A0 is the original area, τ > 0 is the growth factor, and τ < 0 is the shrinkage factor. In this study, the parameter |τ| is set to 0.15, resulting in a ROI that is reduced or enlarged by 15% of the original ROI area.
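One possible reading of this procedure is sketched below in Python rather than MATLAB: the cross-shaped kernel is applied repeatedly until the ROI area reaches the target Af. The stopping rule (keep whichever mask is closer to the target area) and all function names are assumptions, since the text does not specify how the target area is matched.

```python
import numpy as np

# Cross-shaped kernel: the pixel itself plus its two horizontal and two vertical neighbors.
NEIGHBOURS = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]

def _shift(mask, dy, dx):
    """Shift a 2D boolean mask by (dy, dx), filling with False (no wrap-around)."""
    out = np.zeros_like(mask)
    h, w = mask.shape
    out[max(dy, 0):h + min(dy, 0), max(dx, 0):w + min(dx, 0)] = \
        mask[max(-dy, 0):h + min(-dy, 0), max(-dx, 0):w + min(-dx, 0)]
    return out

def dilate(mask):
    """A pixel becomes 1 if at least one pixel under the kernel is 1."""
    out = np.zeros_like(mask)
    for dy, dx in NEIGHBOURS:
        out |= _shift(mask, dy, dx)
    return out

def erode(mask):
    """A pixel stays 1 only if all pixels under the kernel are 1."""
    out = np.ones_like(mask)
    for dy, dx in NEIGHBOURS:
        out &= _shift(mask, dy, dx)
    return out

def perturb_area(mask, tau):
    """Grow (tau > 0) or shrink (tau < 0) the ROI towards the area A0 * (1 + tau)."""
    current = np.asarray(mask).astype(bool)
    if tau == 0:
        return current.copy()
    target = int(round(float(current.sum()) * (1.0 + tau)))
    step = dilate if tau > 0 else erode
    while True:
        candidate = step(current)
        if candidate.sum() == current.sum():  # nothing left to change
            return current
        reached = candidate.sum() >= target if tau > 0 else candidate.sum() <= target
        if reached:
            # Assumption: return whichever of the two masks is closer to the target.
            if abs(int(candidate.sum()) - target) <= abs(int(current.sum()) - target):
                return candidate
            return current
        current = candidate

roi = np.zeros((40, 40), dtype=bool)
roi[10:30, 10:30] = True               # toy 20 x 20 pixel ROI
dilated = perturb_area(roi, 0.15)
eroded = perturb_area(roi, -0.15)
```

Because each pass adds or removes a full one-pixel layer, the achieved area only approximates Af on small ROIs; a finer scheme would be needed to hit the target exactly.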

Contour randomization is achieved using a superpixel-based segmentation strategy, where superpixels represent connected clusters of pixels with similar intensity characteristics (in this study, the area occupied by a superpixel was set to 25 mm²). The process of generating a randomized contour involves comparing these superpixels to the original segmentation mask. To accomplish this, the degree of overlap ν between each superpixel and the original ROI contour is considered. Superpixels with an overlap ν ≥ 0.90 are included in the modified ROI, while those with an overlap ν ≤ 0.20 are excluded. For superpixels with an overlap value between 0.20 and 0.90, a random selection process is applied to determine their inclusion in the new ROI.
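The selection rule can be sketched in Python as follows. Two points are assumptions for illustration only: ν is taken as the fraction of each superpixel lying inside the original ROI, and superpixels in the intermediate range are included with probability ν. A regular grid of 5 × 5 pixel blocks also stands in for the intensity-based superpixels used in the study.

```python
import numpy as np

def randomize_contour(mask, superpixels, rng, low=0.20, high=0.90):
    """Rebuild the ROI superpixel by superpixel from its overlap with the original mask."""
    mask = np.asarray(mask).astype(bool)
    new_mask = np.zeros_like(mask)
    for label in np.unique(superpixels):
        region = superpixels == label
        overlap = mask[region].mean()      # fraction of the superpixel inside the ROI
        if overlap >= high:
            keep = True
        elif overlap <= low:
            keep = False
        else:
            keep = rng.random() < overlap  # assumption: inclusion probability equals the overlap
        if keep:
            new_mask[region] = True
    return new_mask

# Toy example: 20 x 20 image split into sixteen 5 x 5 "superpixels" (hypothetical stand-in).
rows, cols = np.indices((20, 20))
superpixels = (rows // 5) * 4 + (cols // 5)
roi = np.zeros((20, 20), dtype=bool)
roi[3:17, 3:17] = True
randomized = randomize_contour(roi, superpixels, np.random.default_rng(0))
```

In this toy case the four central blocks are always kept, the four corner blocks (overlap 0.16) are always dropped, and the edge blocks are decided at random, which is what makes the contour vary between runs.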

Finally, to evaluate feature discrimination capacity, the ROIs were subjected to translation, which involves shifting them along both the x and y axes. A large translation was applied, corresponding to ± 30% of the length of the bounding box surrounding each ROI. Four translations were computed for each ROI: two in the positive direction and two in the negative direction, separately for both the x and y directions.
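A minimal Python sketch of this translation step is given below, assuming a 2D binary mask per slice. The function name, the rounding of the shift to whole pixels, and the choice to drop pixels pushed outside the image are assumptions.

```python
import numpy as np

def translate_roi(mask, fraction, axis):
    """Shift the ROI along one axis by a fraction of its bounding-box length."""
    mask = np.asarray(mask).astype(bool)
    idx = list(np.nonzero(mask))
    extent = idx[axis].max() - idx[axis].min() + 1       # bounding-box length in pixels
    shift = int(round(float(fraction) * float(extent)))  # assumption: whole-pixel shift
    idx[axis] = idx[axis] + shift
    inside = (idx[axis] >= 0) & (idx[axis] < mask.shape[axis])
    out = np.zeros_like(mask)
    out[tuple(i[inside] for i in idx)] = True             # pixels leaving the image are dropped
    return out

roi = np.zeros((40, 40), dtype=bool)
roi[10:20, 10:20] = True                                   # toy ROI, 10-pixel bounding box

# Four translations: +/- 30% along x (axis 1) and +/- 30% along y (axis 0).
translated = {
    (sign, axis): translate_roi(roi, sign * 0.30, axis)
    for sign in (+1, -1)
    for axis in (0, 1)
}
```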

Features extracted from the original and the modified ROI were compared using the intraclass correlation coefficient (ICC). The ICC was computed following the approach described in [33]. According to general guidelines [34], robustness assessment was performed using two thresholds: features having an ICC > 0.75 for small entity perturbation (erosion, dilation, and random contour) were considered stable, while features with an ICC < 0.5, for large entity translations, were considered discriminative.
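For illustration only, the thresholding logic can be sketched in Python with a one-way random-effects ICC(1,1). This ICC form is an assumption: the exact formulation follows [33] and may differ. Each row of the input is one ROI, and each column is the feature value measured on the original or on a perturbed ROI.

```python
import numpy as np

def icc_one_way(values):
    """One-way random-effects ICC(1,1) for an (n_subjects, k_measurements) array."""
    values = np.asarray(values, dtype=float)
    n, k = values.shape
    row_means = values.mean(axis=1)
    grand_mean = values.mean()
    ms_between = k * ((row_means - grand_mean) ** 2).sum() / (n - 1)
    ms_within = ((values - row_means[:, None]) ** 2).sum() / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)

def is_stable(icc_small_perturbation, threshold=0.75):
    """Stable: ICC above the threshold under small perturbations."""
    return icc_small_perturbation > threshold

def is_discriminative(icc_large_translation, threshold=0.5):
    """Discriminative: ICC below the threshold under large translations."""
    return icc_large_translation < threshold

# Toy feature values: original vs. perturbed ROI for three patients.
unchanged = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])   # perturbation has no effect
scrambled = np.array([[1.0, 3.0], [2.0, 1.0], [3.0, 2.0]])   # perturbation reshuffles values
icc_unchanged = icc_one_way(unchanged)
icc_scrambled = icc_one_way(scrambled)
```

A feature would pass the robustness step when it is stable under the small perturbations and discriminative under the large translation.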

Features selected as stable and discriminative were then submitted to redundancy analysis. The Spearman correlation coefficient ρ was calculated for each pair of features. If |ρ| exceeded a predefined threshold, only the feature of the pair with the lower mean Spearman coefficient with respect to all the other n − 2 features (n = total number of features) was kept. Following the results achieved in [22], two thresholds were considered: 0.90 and 0.95.
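The pairwise rule can be sketched in Python as follows. Several details are assumptions: the mean is taken over absolute coefficients, pairs are visited in index order, ties between the two means are resolved by keeping the first feature, and the rank computation assumes no tied values within a feature.

```python
import numpy as np

def spearman_matrix(X):
    """Spearman correlation between columns of X (assumption: no ties within a column)."""
    ranks = np.argsort(np.argsort(X, axis=0), axis=0).astype(float)
    return np.corrcoef(ranks, rowvar=False)

def drop_redundant(X, threshold=0.95):
    """Return the indices of the features kept after the pairwise redundancy check."""
    rho = np.abs(spearman_matrix(X))
    n = rho.shape[0]
    keep = np.ones(n, dtype=bool)
    for i in range(n):
        for j in range(i + 1, n):
            if keep[i] and keep[j] and rho[i, j] > threshold:
                others = np.ones(n, dtype=bool)
                others[[i, j]] = False             # the remaining n - 2 features
                mean_i = rho[i, others].mean()
                mean_j = rho[j, others].mean()
                # Keep the feature that is, on average, less correlated with the rest.
                keep[j if mean_j >= mean_i else i] = False
    return np.flatnonzero(keep)

rng = np.random.default_rng(0)
base = rng.normal(size=50)
# Columns 0 and 1 are monotonically related (rho = 1); column 2 is independent.
features = np.column_stack([base, np.exp(base), rng.normal(size=50)])
kept = drop_redundant(features, threshold=0.95)
```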

Finally, the most relevant features were selected by testing five different feature selection methods: p-value, least absolute shrinkage and selection operator (LASSO), semi-supervised LASSO (ssLASSO), principal component analysis (PCA), and semi-supervised PCA (ssPCA). The p-value and semi-supervised methods involve conducting a Mann–Whitney U test on each feature to identify significant differences between the two unpaired groups of patients; in the semi-supervised methods, LASSO or PCA is then applied.

ML Model Development

In this study, two classification tasks were considered: distinguishing CA from AS and CA from HCM.

For both classification tasks, the first step of the model development process was dividing the dataset into training and test sets by means of a 10-fold cross-validation. In each fold, the training set was used to select the final set of features and train the classifier, while the test set was employed to evaluate model performance. Since [22] reported the support vector machine (SVM) as the best classifier in distinguishing CA from AS, it was chosen to address the two tasks.
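The fold structure can be sketched in Python as below. Whether the folds in the study were shuffled or stratified is not stated, so the random shuffling and the generator name are assumptions. The point of the sketch is that feature selection, resampling, and training only ever see `train_idx`.

```python
import numpy as np

def k_fold_indices(n_samples, n_splits=10, rng=None):
    """Yield (train_idx, test_idx) pairs; every sample is tested exactly once."""
    rng = np.random.default_rng() if rng is None else rng
    order = rng.permutation(n_samples)              # assumption: shuffled, not stratified
    for test_idx in np.array_split(order, n_splits):
        train_idx = np.setdiff1d(order, test_idx)
        yield train_idx, test_idx

# Example with the CA-AS cohort size (21 CA + 32 AS = 53 patients).
folds = list(k_fold_indices(53, n_splits=10, rng=np.random.default_rng(0)))
for train_idx, test_idx in folds:
    # Feature selection, balancing or augmentation, and SVM training would use
    # train_idx only; test_idx is reserved for performance evaluation.
    pass
```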

During the training phase, data were balanced or augmented using features extracted from the perturbed ROIs. In particular, dilated, eroded, and randomized ROIs were employed. ROI dilation and erosion were performed using |τ| = 0.30, thus obtaining a ROI shrunk or enlarged by 30%. ROI contour randomization was, instead, performed using a superpixel area of 4 cm², which provided a 70% overlap between the original and the modified ROI. Compared to the robustness analysis, larger perturbations were chosen to generate new and different instances, thus introducing variability and helping to prevent overfitting.

Data Balancing

In the CA-AS classification task, since the dataset was imbalanced (32 AS vs. 21 CA), balancing was performed by two methods, summarized in Fig. 2, both applied to the training set. In the first approach, ROS, ADASYN, and SMOTE, commonly used as data balancing techniques, were employed. ROS randomly selects samples from the minority class, with replacement, and adds them to the training dataset. ADASYN uses a weighted distribution for minority class examples based on their learning difficulty and produces more synthetic data for challenging instances and less for easier-to-learn minority samples. SMOTE creates synthetic samples for the minority class by interpolating between existing instances. The algorithm selects the k-nearest neighbors for each minority instance and generates new synthetic instances along the line segments connecting the instance and its neighbors. In this work, k was set to 5.
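The SMOTE interpolation described above can be sketched in a few lines of Python. This is a simplified illustration with k = 5, not the implementation used in the study; the function name and the toy data are assumptions.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=5, rng=None):
    """Create n_new synthetic minority samples by interpolating towards a random
    one of the k nearest minority neighbors (requires more than k minority samples)."""
    rng = np.random.default_rng() if rng is None else rng
    X_min = np.asarray(X_min, dtype=float)
    # Pairwise Euclidean distances within the minority class.
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)                  # a sample is not its own neighbor
    neighbours = np.argsort(dist, axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)  # instances to start from
    picked = neighbours[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))                    # position along the line segment
    return X_min[base] + gap * (X_min[picked] - X_min[base])

rng = np.random.default_rng(0)
minority = rng.normal(size=(19, 4))                 # toy minority-class feature matrix
synthetic = smote_sketch(minority, n_new=10, k=5, rng=rng)
```

Every synthetic sample is a convex combination of two existing minority samples, so it always lies within the range already spanned by the minority class in feature space.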

Machine learning workflow dealing with data-balancing task. In the training set, the grey dots represent majority class samples, whereas the white ones represent minority class samples. X: a synthetic sample obtained by using ROS, ADASYN, or SMOTE, E: a synthetic sample obtained from an eroded ROI, D: a synthetic sample obtained from a dilated ROI, R: a synthetic sample obtained from a random contour perturbation of the ROI. ROS: random over-sampling; ADASYN: adaptive synthetic; SMOTE: synthetic minority oversampling technique

The second approach, instead, employed ROI perturbations. The number of patients required for balancing was selected from the minority class in the training set. The ROIs belonging to these patients were then perturbed, randomly choosing among erosion, dilation, and contour randomization. Finally, features were extracted from the perturbed ROIs and added to the training set.
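A minimal Python sketch of this balancing step is shown below. It assumes that the features of the eroded, dilated, and randomized ROIs have already been extracted for every training patient and are stored row-aligned with the training matrix. The dictionary layout, the function name, and the selection of patients without replacement are assumptions.

```python
import numpy as np

def balance_with_perturbations(X_train, y_train, perturbed, rng):
    """Add perturbation-derived samples of the minority class until classes are equal.

    perturbed maps a perturbation name to an array with the same shape as X_train,
    where row i holds the features extracted from the perturbed ROI of patient i.
    """
    classes, counts = np.unique(y_train, return_counts=True)
    n_needed = int(counts.max() - counts.min())
    if n_needed == 0:
        return X_train, y_train
    minority = classes[np.argmin(counts)]
    candidates = np.flatnonzero(y_train == minority)
    # Assumption: each selected patient contributes one perturbed sample.
    chosen = rng.choice(candidates, size=n_needed, replace=False)
    names = list(perturbed)
    kinds = rng.integers(0, len(names), size=n_needed)   # random perturbation per patient
    extra = np.stack([perturbed[names[k]][i] for i, k in zip(chosen, kinds)])
    X_bal = np.vstack([X_train, extra])
    y_bal = np.concatenate([y_train, np.full(n_needed, minority)])
    return X_bal, y_bal

rng = np.random.default_rng(0)
X_train = rng.normal(size=(48, 5))                       # toy training fold
y_train = np.array([0] * 29 + [1] * 19)                  # 0 = majority, 1 = minority
perturbed = {                                            # placeholder perturbed features
    "erosion": X_train + 0.10,
    "dilation": X_train - 0.10,
    "randomization": X_train + 0.05,
}
X_bal, y_bal = balance_with_perturbations(X_train, y_train, perturbed, rng)
```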

Data Augmentation

For the CA-HCM classification, since the dataset was balanced but small, data augmentation was performed using ROS, ADASYN, SMOTE, or perturbations, and results were compared to the non-augmented data. The three methods are schematized in Fig. 3.

Machine learning workflow dealing with data augmentation task. In the training set, the grey and white dots represent the two classes to be classified. X: a synthetic sample obtained by using ROS, ADASYN, or SMOTE, E: a synthetic sample obtained from an eroded ROI, D: a synthetic sample obtained from a dilated ROI, R: a synthetic sample obtained from a random contour perturbation of the ROI. ROS: random over-sampling; ADASYN: adaptive synthetic; SMOTE: synthetic minority oversampling technique

With the ROS, ADASYN, and SMOTE methods, the algorithm was applied so that the samples belonging to a single class were quadrupled; the process was carried out independently for both classes, and the newly generated samples were then integrated into the training set.

In the perturbation approach, instead, augmentation was performed by adding the features extracted from the perturbed ROIs pertaining to the training samples: in this way, for each training instance, three new samples were obtained from the dilated, eroded, and randomized ROIs. Thus, the samples belonging to each class were quadrupled, as when using the other balancing techniques.
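In code, this augmentation amounts to stacking the original training features with the three perturbed versions. The Python sketch below assumes that the perturbed feature matrices are row-aligned with the training matrix; the names and the toy data are hypothetical.

```python
import numpy as np

def augment_with_perturbations(X_train, y_train, perturbed):
    """Stack original and perturbed features: each training sample appears four times."""
    blocks = [X_train] + [perturbed[name] for name in ("dilation", "erosion", "randomization")]
    X_aug = np.vstack(blocks)
    y_aug = np.tile(y_train, len(blocks))   # perturbed samples inherit the patient's label
    return X_aug, y_aug

rng = np.random.default_rng(0)
X_train = rng.normal(size=(38, 6))          # toy training fold of a 42-patient dataset
y_train = np.array([0] * 19 + [1] * 19)
perturbed = {                               # placeholder features from perturbed ROIs
    "dilation": X_train + 0.10,
    "erosion": X_train - 0.10,
    "randomization": X_train + 0.05,
}
X_aug, y_aug = augment_with_perturbations(X_train, y_train, perturbed)
```

Only training patients are augmented in this sketch, so no perturbed copy of a test patient can reach the classifier during training.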

Validation Model

To validate the proposed data augmentation method, an external set of EAT CMR images was considered to distinguish patients who experienced an HCE from patients who did not. Features extracted from the original ROIs were submitted to the three feature selection steps described in the radiomic feature evaluation section, to obtain a set of robust, non-redundant, and relevant features. The correlation threshold was set to 0.95.

As for the CA-AS and CA-HCM datasets, a 10-fold cross-validation was used to divide the dataset into training and test sets: within each fold, the training set was employed to select the final feature set and train the SVM model, while the test set was used to evaluate the classifier performance. As the dataset was balanced but small, data augmentation was performed according to the workflow explained in the data augmentation section.

Results

Feature Selection

A total of 32 and 40 features were identified as robust, i.e., stable and discriminative, for the CA-AS and CA-HCM classification tasks, respectively (Table 3).

Performing the distinction between CA and AS, using a correlation threshold of 0.95, an average of 23 ± 1, 23 ± 1, 23 ± 1, and 24 ± 1 non-redundant features were selected at each train-test split, for the ROS, ADASYN, SMOTE, and the perturbation-based balancing methods respectively.

Dealing with CA-HCM classification, the redundancy analysis with correlation threshold of 0.95 identified 28 ± 1, 28 ± 1, 27 ± 1, 26 ± 1, and 30 ± 0 non-correlated features using the no-augmentation, ROS, ADASYN, SMOTE, and perturbation-based augmentation methods, respectively.

Focusing on the five feature selection methods, the average number of features identified as relevant by each technique is reported in Tables 4 and 5. As shown, the number of selected features ranges between 5 and 10 for the CA-AS differentiation and between 6 and 23 for the CA-HCM differentiation.

Results for the correlation threshold equal to 0.90 are shown in the Supplementary material.

Classification with Data Balancing

The performance achieved for the classification task CA vs. AS, by combining SVM with the 0.95 correlation threshold and each of the five feature selection methods, is reported in Fig. 4 in terms of sensitivity, specificity, balanced accuracy, and f1 score, averaged over the 10 test folds. The analogous figures for the correlation threshold 0.90 are shown in Supplementary Fig. 1. The highest mean f1 score and balanced accuracy, 80% and 85%, respectively, were reached using the ssPCA feature selection technique and the ROI perturbation balancing method. These results were compared with those achieved by the other balancing methods using a repeated-measures ANOVA or Kruskal–Wallis test, followed by post hoc tests. Balanced accuracy was significantly higher using the perturbation-based method than with all the other balancing techniques, while f1 score was significantly higher using the perturbation-based method than using ROS and SMOTE. Considering all the feature selection methods and all the performance metrics, the differences were not always statistically significant, but in most cases the performance metrics of the perturbation-based balancing method tended to be superior to those obtained with ROS, ADASYN, and SMOTE.
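For reference, the four metrics can be computed from the confusion counts of a single test fold as in the Python sketch below. The function name, the choice of the positive label, and the toy predictions are assumptions for illustration.

```python
import numpy as np

def binary_metrics(y_true, y_pred, positive=1):
    """Sensitivity, specificity, balanced accuracy, and f1 score for one test fold."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == positive) & (y_pred == positive)))
    tn = int(np.sum((y_true != positive) & (y_pred != positive)))
    fp = int(np.sum((y_true != positive) & (y_pred == positive)))
    fn = int(np.sum((y_true == positive) & (y_pred != positive)))
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "f1": 2 * tp / (2 * tp + fp + fn),
    }

# Toy fold: 3 positive and 4 negative patients, with one error in each class.
metrics = binary_metrics([1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 1])
```

Balanced accuracy averages the per-class recalls, so unlike plain accuracy it is not inflated when the majority class dominates a fold.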

Mean a) sensitivity, b) specificity, c) balanced accuracy, and d) f1 score, averaged on the 10 test folds for the different feature selection methods when differentiating CA from AS, using ROS (yellow bars), ADASYN (orange bars), SMOTE (red bars), or ROI perturbations (green bars). ROS: random over-sampling; ADASYN: adaptive synthetic; SMOTE: synthetic minority oversampling technique; LASSO: least absolute shrinkage and selection operator; ssLASSO: semi-supervised LASSO; PCA: principal component analysis; ssPCA: semi-supervised PCA

Figure 5(a) shows the ROC curve, highlighting an AUC of 0.92.

Classification with Data Augmentation

The performance achieved for the classification task CA vs. HCM, by combining SVM with the 0.95 correlation threshold and each of the five feature selection methods, is reported in Fig. 6 in terms of sensitivity, specificity, accuracy, and f1 score, averaged over the 10 test folds. The analogous figures for the correlation threshold 0.90 are shown in Supplementary Fig. 2. All performance metrics tend to be higher when using data augmentation than with the original dataset. Moreover, in most cases, the perturbation-based method tends to achieve a higher f1 score and accuracy compared to ROS, ADASYN, and SMOTE (no significant differences). In particular, the highest mean f1 score and accuracy, 86% and 88%, respectively, were reached using the p-value feature selection technique and the perturbation-based augmentation method. Figure 5(b) shows the ROC curve, highlighting an AUC of 0.91.

Mean a) sensitivity, b) specificity, c) balanced accuracy, d) f1 score, averaged on the 10 test folds for the different feature selection methods when differentiating CA from HCM, without augmentation (orange bars), using ROS (light yellow bars), ADASYN (light green bars), and SMOTE (dark green bars) or ROI perturbations (light blue bars). ROS: random over-sampling; ADASYN: adaptive synthetic; SMOTE: synthetic minority oversampling technique; LASSO, least absolute shrinkage and selection operator; ssLASSO, semi-supervised LASSO; PCA, principal component analysis; ssPCA, semi-supervised PCA

Validation Set

A total of 100 features were identified as robust, i.e., stable and discriminative. At each train-test split, an average of 62 ± 1, 62 ± 2, 62 ± 1, 60 ± 2, and 61 ± 1 non-redundant features were selected using the no-augmentation, ROS, ADASYN, SMOTE, and perturbation-based augmentation methods, respectively.

Focusing on the five feature selection methods, the average number of features, identified as relevant from each technique, is reported in Table 5.

The performance achieved for the classification task HCE vs. not-HCE, by combining SVM with the 0.95 correlation threshold and each of the five feature selection methods, is reported in Fig. 7 in terms of sensitivity, specificity, accuracy, and f1 score, averaged over the 10 test folds. Comparing the different augmentation techniques, in most cases the f1 score and balanced accuracy show higher values using the perturbation-based method (no significant differences), with maximum scores of 78% and 70%, respectively, reached with the p-value feature selection method.

Mean a) sensitivity, b) specificity, c) balanced accuracy, and d) f1 score, averaged on the 10 test folds for the different feature selection methods when differentiating patients experiencing an HCE from patients who did not, without augmentation (orange bars), using ROS (light yellow bars), ADASYN (light green bars) and SMOTE (dark green bars) or ROI perturbations (light blue bars). ROS: random over-sampling; ADASYN: adaptive synthetic; SMOTE: synthetic minority oversampling technique; LASSO, least absolute shrinkage and selection operator; ssLASSO, semi-supervised LASSO; PCA, principal component analysis; ssPCA, semi-supervised PCA

Discussions

The main findings of this study are the following: (i) in case of imbalanced dataset, ROI perturbations can be used to balance the two classes achieving greater performance compared to ROS, ADASYN, and SMOTE; (ii) in case of small dataset, ROI perturbations can be used to increase data size providing better results compared to a no-augmentation or a ROS, ADASYN, or SMOTE-based approach.

In clinical settings, many medical image datasets present challenges such as imbalance or lack of data, which can make it difficult to achieve high predictive performance using data-driven machine learning methods. Since radiomics focuses on medical images, these are common issues found in several studies [17, 18, 35].

When dealing with imbalanced datasets, three resampling approaches are usually chosen as a pre-processing step: over-sampling, under-sampling, and hybrid systems. Over-sampling helps to improve model performance but presents the drawbacks of overfitting and of introducing additional noise. Under-sampling, instead, works by removing instances from the majority class, with the advantage of saving computation time [18]. However, it might remove critical instances required for defining the class boundaries and might leave unnecessary instances of the majority class in the training data, generating additional noise [36]. Hybrid systems, finally, combine the two previously described resampling techniques.

In the radiomic field, several studies have applied various resampling methods. In [17], Xie et al. investigated the impact of ten re-sampling techniques on PET-based radiomics models for predicting prognosis in head and neck cancer. Overall survival and disease-free survival were predicted in three imbalanced datasets; resampling techniques had a positive impact on prediction performance, but the results of each individual balancing approach varied with the clinical problem and dataset. Park et al. [35] applied ROS and SMOTE in a radiomics analysis for predicting the grade and histological subtype of meningiomas. SMOTE achieved higher performance (AUC = 0.86) than ROS (AUC = 0.85). In [18], the authors focused on predicting lymph node metastasis in clinical stage T1 lung adenocarcinoma patients using radiomics analysis based on PET/CT images. The imbalanced dataset problem was addressed by testing ten resampling strategies (ROS, ADASYN, SMOTE, bSMOTE, RUS, NM, TL, ENN, SMOTE-TL, SMOTE-ENN), among which ENN showed prediction performance that was on average 0.04 ± 0.02 higher (AUC = 0.71). There is thus no consensus on the best resampling method, as this depends on the clinical problem and on intrinsic characteristics of the data, such as dataset size and dimensionality, imbalance ratio, overlap between classes, and borderline samples [18].

When working with a small dataset, data augmentation strategies are useful to increase the amount of training data and mitigate the potential for overfitting. However, data augmentation is mainly used in the deep learning field, where it is usually achieved by generating transformed versions of the original images or by using generative adversarial networks (GANs). The literature lacks medical image-based studies reporting methods for feature augmentation, although this task can also be achieved with several resampling methods.
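To make the idea of feature-level augmentation by resampling concrete, the sketch below generates synthetic feature vectors in the way SMOTE is commonly described: a new point is placed at a random position on the segment joining a sample and one of its k nearest neighbours of the same class. This is a simplified illustration under stated assumptions, not the SMOTE implementation used in this study; the function name `smote_like`, the choice k = 3, and the toy data are all hypothetical.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base sample (excluding itself)
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: math.dist(p, base))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Toy minority class: 21 made-up two-dimensional feature vectors.
toy_rng = random.Random(7)
minority = [[toy_rng.gauss(0, 1), toy_rng.gauss(0, 1)] for _ in range(21)]

# Generate 11 synthetic vectors, enough to reach a majority class of 32.
new_points = smote_like(minority, n_new=11)
print(len(minority) + len(new_points))
```

Because every synthetic vector is a combination of two existing feature vectors, this kind of augmentation never adds information from the image itself, which is the point of contrast with an image-based approach.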

In the current study, a new image perturbation-based technique was developed to address, for the first time, both the class balancing and data augmentation issues. Unlike existing methods, this approach generates new feature samples starting from the image itself rather than from the available features. Two correlation thresholds (0.90 and 0.95) and five feature selection methods (p-value, LASSO, ssLASSO, PCA, ssPCA) were combined and tested to evaluate the results across different models. Finally, to assess the efficacy of the proposed perturbation-based method, its performance was compared with that obtained by using SMOTE. The performance metrics of both classifications (CA vs. HCM and CA vs. AS) show that, with a correlation threshold of 0.95, our perturbation-based method outperforms SMOTE in most cases in terms of both f1 score and balanced accuracy, independently of the chosen feature selection approach. The same observation can be made when setting the correlation threshold to 0.90 (Supplementary material).
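The principle of generating additional samples from the image rather than from the features can be sketched on a toy example. The code below is an assumption-laden illustration, not the pipeline used in this study: the ROI is represented as a set of pixel coordinates, erosion and dilation use a 4-connected neighbourhood, contour randomization is omitted, and a single mean-intensity value stands in for the 107 radiomic features. All function names are hypothetical. On real images, array-based routines such as `scipy.ndimage.binary_erosion` and `scipy.ndimage.binary_dilation` would be the usual choice.

```python
def neighbours(pixel):
    """4-connected neighbours of a (row, col) pixel."""
    r, c = pixel
    return [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]


def erode(roi):
    """Keep only pixels whose four neighbours all belong to the ROI."""
    return {p for p in roi if all(q in roi for q in neighbours(p))}


def dilate(roi, shape):
    """Add every in-image pixel that touches the ROI."""
    rows, cols = shape
    grown = set(roi)
    for p in roi:
        for r, c in neighbours(p):
            if 0 <= r < rows and 0 <= c < cols:
                grown.add((r, c))
    return grown


def mean_intensity(image, roi):
    """Stand-in for a radiomic feature: mean intensity inside the ROI."""
    return sum(image[r][c] for r, c in roi) / len(roi)


# Toy 7x7 image whose intensity grows with distance from the centre pixel (3, 3).
size = 7
image = [[(r - 3) ** 2 + (c - 3) ** 2 for c in range(size)] for r in range(size)]
roi = {(r, c) for r in range(2, 5) for c in range(2, 5)}  # 3x3 square ROI

perturbed = {
    "original": roi,
    "eroded": erode(roi),
    "dilated": dilate(roi, (size, size)),
}
# One feature value per ROI version: each becomes an extra sample of the same class.
samples = {name: mean_intensity(image, mask) for name, mask in perturbed.items()}
print(samples)
```

Each perturbed ROI yields a feature value that differs from the original one because it is recomputed from different image pixels, so the extra samples are not simple copies or interpolations of existing feature vectors.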

In particular, for the CA-AS classification, the perturbation-based balancing strategy reached the highest balanced accuracy of 85% with ssPCA, while the maximum accuracy achieved by ROS, ADASYN, and SMOTE was 78% (LASSO model), 72% (PCA model), and 73% (ssPCA model), respectively. For the CA-HCM classification, the highest accuracy of 88% was obtained with the perturbation-based method using the p-value and PCA feature selection methods. ROS and ADASYN achieved their best accuracy values, 80% and 81%, with ssLASSO, while the no-augmentation and SMOTE-based approaches showed their highest accuracy, 76% and 81% respectively, with ssPCA. The promising results of the developed data augmentation method, observed in both the CA-AS and CA-HCM classification tasks, were finally validated on an external CMR dataset. In distinguishing patients who experienced an HCE from those who did not, the perturbation-based method outperformed all the other methods. In particular, it reached a maximum accuracy of 78% with the p-value and ssPCA-based feature selection methods, while no augmentation, ROS, ADASYN, and SMOTE reached a maximum accuracy of 63%, 70%, 70%, and 68%, respectively.
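For readers less familiar with the metrics compared above, the sketch below computes them from the four cells of a binary confusion matrix using their standard definitions. The counts are invented to show how plain accuracy can look high on an imbalanced test set while balanced accuracy and f1 score do not; they are not results from this study, and the function name is hypothetical. Equivalent functions (`balanced_accuracy_score`, `f1_score`) are available in scikit-learn.

```python
def classification_metrics(tp, fn, tn, fp):
    """Standard binary metrics; assumes no denominator is zero."""
    sensitivity = tp / (tp + fn)  # recall on the positive class
    specificity = tn / (tn + fp)  # recall on the negative class
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


# Invented counts: 10 positive and 90 negative cases, with most positives missed.
toy = classification_metrics(tp=2, fn=8, tn=88, fp=2)
for name, value in toy.items():
    print(f"{name}: {value:.3f}")
```

In this invented case the classifier labels 90% of cases correctly yet detects only 2 of the 10 positive cases, which balanced accuracy and f1 score expose and plain accuracy hides.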

CA patients were included in both classification tasks, as identifying this disease is a relevant and challenging task from the clinical point of view. An accurate diagnosis of CA is both crucial and difficult, as the disease is substantially underdiagnosed and mimics a wide range of cardiac and systemic conditions. Furthermore, the recent introduction of new and effective therapies has increased the importance of early recognition to improve patient prognosis. Endomyocardial biopsy is the historic gold standard for the diagnosis of CA, but it carries a small risk of serious complications and requires the technical expertise of a procedural cardiologist. Over the last decade, the great progress achieved in the cardiac imaging field has opened new frontiers in the diagnosis of CA. Bone scintigraphy has emerged as a reliable non-invasive alternative to endomyocardial biopsy [37], but the approach presents several logistical and economic limitations.

Several studies have tried to improve CA diagnosis using machine learning algorithms. In [38], Wu et al. developed a machine learning tool able to distinguish 74 patients with CA from 64 patients with HCM with an AUC of 0.86, using speckle tracking echocardiography data. The same clinical goal was pursued in [39], where the authors combined CMR-based radiomic texture features with conventional MR metrics, reaching an accuracy of 0.85 in classifying 85 patients affected by CA and 82 affected by HCM. Radiomics has also recently achieved good results in differentiating CA from AS. In [22], a CCT-based radiomic model was employed to distinguish 15 patients affected by CA from 15 patients with AS with an accuracy of 0.83. Similar results were reached in [21], with an AUC of 0.92 in 21 patients with CA and 44 with AS. These results are in line with those obtained in the present study.

Conclusions

ROI perturbations can be used to balance an imbalanced dataset as well as to augment data size in a radiomics-based classification study.

Data Availability

The raw data supporting the conclusions of this article will be made available by the authors, upon reasonable request.

References

La Greca Saint-Esteven, A., Vuong, D., Tschanz, F., van Timmeren, J.E., Dal Bello, R., Waller, V., Pruschy, M., Guckenberger, M., Tanadini-Lang, S.: Systematic Review on the Association of Radiomics with Tumor Biological Endpoints. Cancers. 13, 3015 (2021). https://doi.org/10.3390/cancers13123015.

Corino, V.D.A., Montin, E., Messina, A., Casali, P.G., Gronchi, A., Marchianò, A., Mainardi, L.T.: Radiomic analysis of soft tissues sarcomas can distinguish intermediate from high-grade lesions. Journal of Magnetic Resonance Imaging. 47, 829–840 (2018). https://doi.org/10.1002/jmri.25791.

Kothari, G.: Role of radiomics in predicting immunotherapy response. Journal of Medical Imaging and Radiation Oncology. 66, 575–591 (2022). https://doi.org/10.1111/1754-9485.13426.

Bologna, M., Calareso, G., Resteghini, C., Sdao, S., Montin, E., Corino, V., Mainardi, L., Licitra, L., Bossi, P.: Relevance of apparent diffusion coefficient features for a radiomics-based prediction of response to induction chemotherapy in sinonasal cancer. NMR in Biomedicine. 35, e4265 (2022). https://doi.org/10.1002/nbm.4265.

Zhang, B., Ouyang, F., Gu, D., Dong, Y., Zhang, L., Mo, X., Huang, W., Zhang, S.: Advanced nasopharyngeal carcinoma: pre-treatment prediction of progression based on multi-parametric MRI radiomics. Oncotarget. 8, 72457–72465 (2017). https://doi.org/10.18632/oncotarget.19799.

Raisi-Estabragh, Z., Jaggi, A., Gkontra, P., McCracken, C., Aung, N., Munroe, P.B., Neubauer, S., Harvey, N.C., Lekadir, K., Petersen, S.E.: Cardiac Magnetic Resonance Radiomics Reveal Differential Impact of Sex, Age, and Vascular Risk Factors on Cardiac Structure and Myocardial Tissue. Front. Cardiovasc. Med. 8, 763361 (2021). https://doi.org/10.3389/fcvm.2021.763361.

Lee, J.W., Park, C.H., Im, D.J., Lee, K.H., Kim, T.H., Han, K., Hur, J.: CT-based radiomics signature for differentiation between cardiac tumors and thrombi: a retrospective, multicenter study. Sci Rep. 12, 8173 (2022). https://doi.org/10.1038/s41598-022-12229-x.

Ponsiglione, A., Stanzione, A., Cuocolo, R., Ascione, R., Gambardella, M., De Giorgi, M., Nappi, C., Cuocolo, A., Imbriaco, M.: Cardiac CT and MRI radiomics: systematic review of the literature and radiomics quality score assessment. Eur Radiol. 32, 2629–2638 (2022). https://doi.org/10.1007/s00330-021-08375-x.

Shang, J., Guo, Y., Ma, Y., Hou, Y.: Cardiac computed tomography radiomics: a narrative review of current status and future directions. Quant Imaging Med Surg. 12, 3436–3453 (2022). https://doi.org/10.21037/qims-21-1022.

Hu, W., Wu, X., Dong, D., Cui, L.-B., Jiang, M., Zhang, J., Wang, Y., Wang, X., Gao, L., Tian, J., Cao, F.: Novel radiomics features from CCTA images for the functional evaluation of significant ischaemic lesions based on the coronary fractional flow reserve score. Int J Cardiovasc Imaging. 36, 2039–2050 (2020). https://doi.org/10.1007/s10554-020-01896-4.

Lin, A., Kolossváry, M., Yuvaraj, J., Cadet, S., McElhinney, P.A., Jiang, C., Nerlekar, N., Nicholls, S.J., Slomka, P.J., Maurovich-Horvat, P., Wong, D.T.L., Dey, D.: Myocardial Infarction Associates With a Distinct Pericoronary Adipose Tissue Radiomic Phenotype. JACC: Cardiovascular Imaging. 13, 2371–2383 (2020). https://doi.org/10.1016/j.jcmg.2020.06.033.

Oikonomou, E.K., Williams, M.C., Kotanidis, C.P., Desai, M.Y., Marwan, M., Antonopoulos, A.S., Thomas, K.E., Thomas, S., Akoumianakis, I., Fan, L.M., Kesavan, S., Herdman, L., Alashi, A., Centeno, E.H., Lyasheva, M., Griffin, B.P., Flamm, S.D., Shirodaria, C., Sabharwal, N., Kelion, A., Dweck, M.R., Van Beek, E.J.R., Deanfield, J., Hopewell, J.C., Neubauer, S., Channon, K.M., Achenbach, S., Newby, D.E., Antoniades, C.: A novel machine learning-derived radiotranscriptomic signature of perivascular fat improves cardiac risk prediction using coronary CT angiography. European Heart Journal. 40, 3529–3543 (2019). https://doi.org/10.1093/eurheartj/ehz592.

Shang, J., Ma, S., Guo, Y., Yang, L., Zhang, Q., Xie, F., Ma, Y., Ma, Q., Dang, Y., Zhou, K., Liu, T., Yang, J., Hou, Y.: Prediction of acute coronary syndrome within 3 years using radiomics signature of pericoronary adipose tissue based on coronary computed tomography angiography. Eur Radiol. 32, 1256–1266 (2022). https://doi.org/10.1007/s00330-021-08109-z.

Izquierdo, C., Casas, G., Martin-Isla, C., Campello, V.M., Guala, A., Gkontra, P., Rodríguez-Palomares, J.F., Lekadir, K.: Radiomics-Based Classification of Left Ventricular Non-compaction, Hypertrophic Cardiomyopathy, and Dilated Cardiomyopathy in Cardiovascular Magnetic Resonance. Front. Cardiovasc. Med. 8, 764312 (2021). https://doi.org/10.3389/fcvm.2021.764312.

Goceri, E.: Medical image data augmentation: techniques, comparisons and interpretations. Artif Intell Rev. (2023). https://doi.org/10.1007/s10462-023-10453-z.

Khushi, M., Shaukat, K., Alam, T.M., Hameed, I.A., Uddin, S., Luo, S., Yang, X., Reyes, M.C.: A Comparative Performance Analysis of Data Resampling Methods on Imbalance Medical Data. IEEE Access. 9, 109960–109975 (2021). https://doi.org/10.1109/ACCESS.2021.3102399.

Xie, C., Du, R., Ho, J.W., Pang, H.H., Chiu, K.W., Lee, E.Y., Vardhanabhuti, V.: Effect of machine learning re-sampling techniques for imbalanced datasets in 18F-FDG PET-based radiomics model on prognostication performance in cohorts of head and neck cancer patients. Eur J Nucl Med Mol Imaging. 47, 2826–2835 (2020). https://doi.org/10.1007/s00259-020-04756-4.

Lv, J., Chen, X., Liu, X., Du, D., Lv, W., Lu, L., Wu, H.: Imbalanced Data Correction Based PET/CT Radiomics Model for Predicting Lymph Node Metastasis in Clinical Stage T1 Lung Adenocarcinoma. Front Oncol. 12, 788968 (2022). https://doi.org/10.3389/fonc.2022.788968.

Yasaka, K., Akai, H., Kunimatsu, A., Kiryu, S., Abe, O.: Deep learning with convolutional neural network in radiology. Jpn J Radiol. 36, 257–272 (2018). https://doi.org/10.1007/s11604-018-0726-3.

Kalashami, M.P., Pedram, M.M., Sadr, H.: EEG Feature Extraction and Data Augmentation in Emotion Recognition. Computational Intelligence and Neuroscience. 2022, e7028517 (2022). https://doi.org/10.1155/2022/7028517.

Iacono, F.L., Maragna, R., Guglielmo, M., Chiesa, M., Fusini, L., Annoni, A., Babbaro, M., Baggiano, A., Carerj, M.L., Cilia, F., Torto, A.D., Formenti, A., Mancini, M.E., Marchetti, F., Muratori, M., Mushtaq, S., Penso, M., Pirola, S., Tassetti, L., Volpe, A., Guaricci, A.I., Fontana, M., Tamborini, G., Treibel, T., Moon, J., Corino, V.D.A., Pontone, G.: Identification of subclinical cardiac amyloidosis in aortic stenosis patients undergoing transaortic valve replacement using radiomic analysis of computed tomography myocardial texture. Journal of Cardiovascular Computed Tomography. (2023). https://doi.org/10.1016/j.jcct.2023.04.002.

Lo Iacono, F., Maragna, R., Pontone, G., Corino, V.D.A.: A robust radiomic-based machine learning approach to detect cardiac amyloidosis using cardiac computed tomography. Frontiers in Radiology. 3, (2023).

Manolis, A.S., Manolis, A.A., Manolis, T.A., Melita, H.: Cardiac amyloidosis: An underdiagnosed/underappreciated disease. Eur J Intern Med. 67, 1–13 (2019). https://doi.org/10.1016/j.ejim.2019.07.022.

Liu, H., Bai, P., Xu, H.-Y., Li, Z.-L., Xia, C.-C., Zhou, X.-Y., Gong, L.-G., Guo, Y.-K.: Distinguishing Cardiac Amyloidosis and Hypertrophic Cardiomyopathy by Thickness and Myocardial Deformation of the Right Ventricle. Cardiol Res Pract. 2022, 4364279 (2022). https://doi.org/10.1155/2022/4364279.

Aortic Stenosis and Cardiac Amyloidosis: JACC Review Topic of the Week - ScienceDirect, https://www.sciencedirect.com/science/article/pii/S0735109719379264?via%3Dihub, last accessed 2023/08/08.

Ternacle, J., Krapf, L., Mohty, D., Magne, J., Nguyen, A., Galat, A., Gallet, R., Teiger, E., Côté, N., Clavel, M.-A., Tournoux, F., Pibarot, P., Damy, T.: Aortic Stenosis and Cardiac Amyloidosis: JACC Review Topic of the Week. Journal of the American College of Cardiology. 74, 2638–2651 (2019). https://doi.org/10.1016/j.jacc.2019.09.056.

Cardiac amyloidosis and hypertrophic cardiomyopathy: “You always have time to make an accurate diagnosis!” - International Journal of Cardiology, https://www.internationaljournalofcardiology.com/article/S0167-5273(19)33615-0/fulltext, last accessed 2023/08/08.

Zhang, L., Xu, Z., Jiang, B., Zhang, Y., Wang, L., de Bock, G.H., Vliegenthart, R., Xie, X.: Machine-learning-based radiomics identifies atrial fibrillation on the epicardial fat in contrast-enhanced and non-enhanced chest CT. BJR. 95, 20211274 (2022). https://doi.org/10.1259/bjr.20211274.

Mancio, J., Azevedo, D., Saraiva, F., Azevedo, A.I., Pires-Morais, G., Leite-Moreira, A., Falcao-Pires, I., Lunet, N., Bettencourt, N.: Epicardial adipose tissue volume assessed by computed tomography and coronary artery disease: a systematic review and meta-analysis. European Heart Journal - Cardiovascular Imaging. 19, 490–497 (2018). https://doi.org/10.1093/ehjci/jex314.

Yang, M., Cao, Q., Xu, Z., Ge, Y., Li, S., Yan, F., Yang, W.: Development and Validation of a Machine Learning-Based Radiomics Model on Cardiac Computed Tomography of Epicardial Adipose Tissue in Predicting Characteristics and Recurrence of Atrial Fibrillation. Frontiers in Cardiovascular Medicine. 9, (2022).

van Griethuysen, J.J.M., Fedorov, A., Parmar, C., Hosny, A., Aucoin, N., Narayan, V., Beets-Tan, R.G.H., Fillion-Robin, J.-C., Pieper, S., Aerts, H.J.W.L.: Computational Radiomics System to Decode the Radiographic Phenotype. Cancer Res. 77, e104–e107 (2017). https://doi.org/10.1158/0008-5472.CAN-17-0339.

Zwanenburg, A., Leger, S., Agolli, L., Pilz, K., Troost, E.G.C., Richter, C., Löck, S.: Assessing robustness of radiomic features by image perturbation. Sci Rep. 9, 614 (2019). https://doi.org/10.1038/s41598-018-36938-4.

Bologna, M., Corino, V.D.A., Montin, E., Messina, A., Calareso, G., Greco, F.G., Sdao, S., Mainardi, L.T.: Assessment of Stability and Discrimination Capacity of Radiomic Features on Apparent Diffusion Coefficient Images. J Digit Imaging. 31, 879–894 (2018). https://doi.org/10.1007/s10278-018-0092-9.

Koo, T.K., Li, M.Y.: A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. Journal of Chiropractic Medicine. 15, 155–163 (2016). https://doi.org/10.1016/j.jcm.2016.02.012.

Park, Y.W., Oh, J., You, S.C., Han, K., Ahn, S.S., Choi, Y.S., Chang, J.H., Kim, S.H., Lee, S.-K.: Radiomics and machine learning may accurately predict the grade and histological subtype in meningiomas using conventional and diffusion tensor imaging. Eur Radiol. 29, 4068–4076 (2019). https://doi.org/10.1007/s00330-018-5830-3.

Sasada, T., Liu, Z., Baba, T., Hatano, K., Kimura, Y.: A Resampling Method for Imbalanced Datasets Considering Noise and Overlap. Procedia Computer Science. 176, 420–429 (2020). https://doi.org/10.1016/j.procs.2020.08.043.

Pibarot, P., Lancellotti, P., Narula, J.: Concomitant Cardiac Amyloidosis in Severe Aortic Stenosis: The Trojan Horse?∗. Journal of the American College of Cardiology. 77, 140–143 (2021). https://doi.org/10.1016/j.jacc.2020.11.007.

Wu, Z.-W., Zheng, J.-L., Kuang, L., Yan, H.: Machine learning algorithms to automate differentiating cardiac amyloidosis from hypertrophic cardiomyopathy. Int J Cardiovasc Imaging. 39, 339–348 (2023). https://doi.org/10.1007/s10554-022-02738-1.

Jiang, S., Zhang, L., Wang, J., Li, X., Hu, S., Fu, Y., Wang, X., Hao, S., Hu, C.: Differentiating between cardiac amyloidosis and hypertrophic cardiomyopathy on non-contrast cine-magnetic resonance images using machine learning-based radiomics. Frontiers in Cardiovascular Medicine. 9, (2022).

Funding

Open access funding provided by Politecnico di Milano within the CRUI-CARE Agreement.

Author information

Contributions

FL: radiomic feature extraction, prognostic model training and validation, manuscript writing; VDAC: data analysis, manuscript writing; RM: image segmentation, image data collection and cleaning, manuscript revision; GP: study design, supervision, manuscript revision. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Ethics Approval

The study conforms to the Declaration of Helsinki, and all participants gave informed consent of the study that was approved by the Institutional Ethical Committee.

Consent to Participate

Informed consent was obtained from all individual participants included in the study.

Competing Interests

Gianluca Pontone declares the following conflict of interest: Honorarium as speaker/consultant and/or research grants from GE Healthcare, Bracco, Heartflow, Boheringher. All other authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lo Iacono, F., Maragna, R., Pontone, G. et al. A Novel Data Augmentation Method for Radiomics Analysis Using Image Perturbations. J Digit Imaging. Inform. med. (2024). https://doi.org/10.1007/s10278-024-01013-0

DOI: https://doi.org/10.1007/s10278-024-01013-0