Abstract

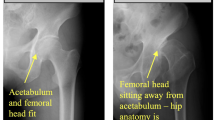

This study aimed to use a novel deep learning system to localize the hip joints and detect findings of cam-type femoroacetabular impingement (FAI). A retrospective search of hip/pelvis radiographs obtained to evaluate patients for FAI yielded 3050 total studies. Each hip was classified separately by the original interpreting radiologist as follows: 724 hips had severe cam-type FAI morphology, 962 had moderate cam-type FAI morphology, 846 had mild cam-type FAI morphology, and 518 were normal. The anteroposterior (AP) view from each study was anonymized and extracted. After a novel convolutional neural network (CNN) based on the focal loss principle localized the hip joints, a second CNN classified each hip image as either cam positive or no FAI. Accuracy was 74% for distinguishing normal from abnormal cam-type FAI morphology, with aggregate sensitivity and specificity of 0.821 and 0.669, respectively, at the chosen operating point. The aggregate AUC was 0.736. A deep learning system can be applied to detect FAI-related changes on single-view pelvic radiographs. Deep learning is useful for quickly identifying and categorizing pathology on imaging, which may aid the interpreting radiologist.

Data Availability

The data that support the findings of this study are available from the corresponding author, MH, upon reasonable request.

References

Ghaffari A, Davis I, Storey T, Moser M. Current concepts of femoroacetabular impingement. Radiol Clin North Am. 2018;56(6):965-982.

Amanatullah DF, Antkowiak T, Pillay K, et al. Femoroacetabular impingement: current concepts in diagnosis and treatment. Orthopedics. 2015;38(3):185-199.

Tannast M, Siebenrock KA, Anderson SE. Femoroacetabular impingement: radiographic diagnosis--what the radiologist should know. AJR Am J Roentgenol. 2007;188(6):1540-1552.

Mascarenhas VV, Rego P, Dantas P, et al. Imaging prevalence of femoroacetabular impingement in symptomatic patients, athletes, and asymptomatic individuals: a systematic review. Eur J Radiol. 2016;85(1):73-95.

Clohisy JC, Carlisle JC, Trousdale R, et al. Radiographic evaluation of the hip has limited reliability. Clin Orthop Relat Res. 2009;467(3):666-675.

Egger AC, Frangiamore S, Rosneck J. Femoroacetabular impingement: a review. Sports Med Arthrosc. 2016;24(4):e53-e58.

Sim Y, Chung MJ, Kotter E, et al. Deep convolutional neural network-based software improves radiologist detection of malignant lung nodules on chest radiographs. Radiology. 2020;294(1):199-209.

Park VY, Han K, Seong YK, et al. Diagnosis of thyroid nodules: performance of a deep learning convolutional neural network model vs. radiologists. Sci Rep. 2019;9(1):17843.

Truhn D, Schrading S, Haarburger C, Schneider H, Merhof D, Kuhl C. Radiomic versus convolutional neural networks analysis for classification of contrast-enhancing lesions at multiparametric breast MRI. Radiology. 2019;290(2):290-297.

Mutasa S, Chang PD, Ruzal-Shapiro C, Ayyala R. MABAL: a novel deep-learning architecture for machine-assisted bone age labeling. J Digit Imaging. 2018;31(4):513-519.

Xue Y, Zhang R, Deng Y, Chen K, Jiang T. A preliminary examination of the diagnostic value of deep learning in hip osteoarthritis. PLoS One. 2017;12(6):e0178992.

Irmakci I, Anwar SM, Torigian DA, Bagci U. Deep learning for Musculoskeletal image analysis. arXiv [eess.IV]. Published online March 1, 2020. http://arxiv.org/abs/2003.00541

Jones RM, Sharma A, Hotchkiss R, et al. Assessment of a deep-learning system for fracture detection in musculoskeletal radiographs. NPJ Digit Med. 2020;3:144.

Tomita N, Cheung YY, Hassanpour S. Deep neural networks for automatic detection of osteoporotic vertebral fractures on CT scans. Comput Biol Med. 2018;98:8-15.

Urakawa T, Tanaka Y, Goto S, Matsuzawa H, Watanabe K, Endo N. Detecting intertrochanteric hip fractures with orthopedist-level accuracy using a deep convolutional neural network. Skeletal Radiol. 2019;48(2):239-244.

Bien N, Rajpurkar P, Ball RL, et al. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: development and retrospective validation of MRNet. PLoS Med. 2018;15(11):e1002699.

Pedoia V, Norman B, Mehany SN, Bucknor MD, Link TM, Majumdar S. 3D convolutional neural networks for detection and severity staging of meniscus and PFJ cartilage morphological degenerative changes in osteoarthritis and anterior cruciate ligament subjects. J Magn Reson Imaging. 2019;49(2):400-410.

Lang N, Zhang Y, Zhang E, et al. Differentiation of spinal metastases originated from lung and other cancers using radiomics and deep learning based on DCE-MRI. Magn Reson Imaging. 2019;64:4-12.

McBee MP, Awan OA, Colucci AT, et al. Deep learning in radiology. Acad Radiol. 2018;25(11):1472-1480.

Raman SP, Chen Y, Schroeder JL, Huang P, Fishman EK. CT texture analysis of renal masses: pilot study using random forest classification for prediction of pathology. Acad Radiol. 2014;21(12):1587-1596.

Kohli M, Prevedello LM, Filice RW, Geis JR. Implementing machine learning in radiology practice and research. AJR Am J Roentgenol. 2017;208(4):754-760.

Hecht-Nielsen R. Theory of the backpropagation neural network. In: Neural Networks for Perception. Elsevier; 1992:65-93.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016:770-778.

Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015:1-9.

Lin T-Y, Goyal P, Girshick R, He K, Dollár P. Focal loss for dense object detection. In: Proceedings of the IEEE International Conference on Computer Vision. 2017:2980-2988.

Ng AY. Feature selection, L1 vs. L2 regularization, and rotational invariance. In: Proceedings of the Twenty-First International Conference on Machine Learning. 2004:78.

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014;15(1):1929-1958.

Mascarenhas VV, Caetano A, Dantas P, Rego P. Advances in FAI imaging: a focused review. Curr Rev Musculoskelet Med. 2020;13(5):622-640.

Lloyd RFW, Feeney C. Adolescent hip pain: the needle in the haystack. A case report. Physiotherapy. 2011;97(4):354-356. doi:10.1016/j.physio.2010.11.009

Zeng G, Zheng G. Deep learning-based automatic segmentation of the proximal femur from MR images. In: Zheng G, Tian W, Zhuang X, eds. Intelligent Orthopaedics: Artificial Intelligence and Smart Image-Guided Technology for Orthopaedics. Springer Singapore; 2018:73-79.

Hodgdon T, Thornhill RE, James ND, Beaulé PE, Speirs AD, Rakhra KS. CT texture analysis of acetabular subchondral bone can discriminate between normal and cam-positive hips. Eur Radiol. 2020;30(8):4695-4704.

Zech JR, Badgeley MA, Liu M, Costa AB, Titano JJ, Oermann EK. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: a cross-sectional study. PLoS Med. 2018;15(11):e1002683.

Medina G, Buckless CG, Thomasson E, Oh LS, Torriani M. Deep learning method for segmentation of rotator cuff muscles on MR images. Skeletal Radiol. 2021;50(4):683-692.

James SLJ, Ali K, Malara F, Young D, O’Donnell J, Connell DA. MRI findings of femoroacetabular impingement. AJR Am J Roentgenol. 2006;187(6):1412-1419.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by MH, SM, and RCH. The first draft of the manuscript was written by MH, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval

This is an observational study approved by the institutional review board, which waived the requirement for informed consent, in compliance with the Health Insurance Portability and Accountability Act of 1996 (HIPAA).

Consent to Participate

The authors affirm that the institutional review board waived the requirement for consent to participate.

Consent for Publication

The authors affirm that the institutional review board waived the requirement for consent to publish.

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

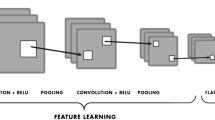

The localization network was a custom-built 40-layer neural network trained from random initialization. Its design borrowed principles from three well-established architectures: ResNet (Microsoft), Inception (Google), and RetinaNet (Facebook). Each of these architectures contributed elements to the custom network, with the aim of combining accuracy and efficiency.

The localization network was trained on full-resolution, original anteroposterior (AP) radiographs of the hip. From each input, a set of anchor boxes was generated. Anchor boxes, a concept adopted from the RetinaNet architecture, are candidate bounding boxes of predefined sizes and aspect ratios that may enclose a target object in the image, in this case the hip joint.

Three anchor sizes were selected, ranging from 122 to 128 pixels, together with two aspect ratios ranging from 0.80 to 1.10. Anchors were placed at an offset (stride) of 8 pixels so that they covered the entire unscaled input image.
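The anchor layout described above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the three sizes (122, 125 and 128 pixels) are assumed to be evenly spaced over the reported range, the two aspect ratios are taken to be the reported endpoints 0.80 and 1.10 (interpreted here as width divided by height), and `generate_anchors` is a hypothetical helper name.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

# Assumed values: three sizes evenly spaced over the reported 122-128 px range
# and the two reported aspect ratios, interpreted here as width / height.
ANCHOR_SIZES = (122.0, 125.0, 128.0)
ASPECT_RATIOS = (0.80, 1.10)
STRIDE = 8  # reported 8-pixel offset between neighbouring anchor centres


def generate_anchors(image_width: int, image_height: int,
                     sizes: Sequence[float] = ANCHOR_SIZES,
                     ratios: Sequence[float] = ASPECT_RATIOS,
                     stride: int = STRIDE) -> List[Box]:
    """Tile the unscaled image with one anchor per size/ratio at every stride step."""
    anchors: List[Box] = []
    for cy in range(stride // 2, image_height, stride):
        for cx in range(stride // 2, image_width, stride):
            for size in sizes:
                for ratio in ratios:
                    # Keep the anchor area equal to size**2 while changing its shape.
                    w = size * math.sqrt(ratio)
                    h = size / math.sqrt(ratio)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors
```

For a 64 × 64 pixel image, `generate_anchors(64, 64)` yields 8 × 8 centre positions, each with 3 sizes × 2 ratios, or 384 anchors. Anchors near the border extend past the image edge in this sketch; it does not clip them.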

Anchors were then classified as positive or negative, with a positive anchor indicating the presence of a hip joint. Classification was based on the overlap between each anchor box and the ground truth bounding box (the region that actually encloses the hip joint in the image), measured by intersection over union (IoU). Anchor boxes with an IoU greater than 0.6 were labeled positive, and those with an IoU less than 0.25 were labeled negative. Anchor boxes with IoU values between 0.25 and 0.6 were excluded from training to maintain a clean separation between the positive and negative classes.
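The labeling rule above can be written as a small function. This sketch assumes that each anchor has already been assigned its best IoU with any ground truth hip box, and the names `label_anchors` and `IGNORE` are hypothetical. Boundary values follow the stated ranges: an IoU of exactly 0.6 or exactly 0.25 falls in the excluded band.

```python
from typing import List, Optional, Sequence

POSITIVE_IOU = 0.6   # reported: IoU > 0.6 -> positive (hip joint present)
NEGATIVE_IOU = 0.25  # reported: IoU < 0.25 -> negative (background)
IGNORE = None        # hypothetical marker for anchors left out of training


def label_anchor(best_iou: float) -> Optional[int]:
    """Return 1 for a positive anchor, 0 for a negative one, IGNORE otherwise."""
    if best_iou > POSITIVE_IOU:
        return 1
    if best_iou < NEGATIVE_IOU:
        return 0
    return IGNORE  # 0.25 <= IoU <= 0.6: ambiguous overlap, excluded


def label_anchors(best_ious: Sequence[float]) -> List[Optional[int]]:
    """Label every anchor from its highest IoU with any ground truth box."""
    return [label_anchor(iou) for iou in best_ious]
```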

Intersection over Union (IoU) is a metric used to measure the accuracy of object detection models. It calculates the amount of overlap between two bounding boxes: a predicted bounding box and a ground truth bounding box. The greater the region of overlap, the greater the IoU.

To calculate the IoU between a predicted and a ground truth bounding box for the same object, first compute the area of their intersection, then divide it by the area of their union, that is, the total area covered by both boxes. IoU therefore ranges from 0 (no overlap) to 1 (identical boxes).
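The calculation just described translates directly into code. The following is a minimal Python sketch, assuming boxes are given as corner coordinates `(x1, y1, x2, y2)` with `x2 > x1` and `y2 > y1`; the function name `iou` is illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes, in [0, 1]."""
    # Corners of the intersection rectangle (empty if the boxes do not overlap).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    intersection = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    # Union = total area covered by both boxes, counting the overlap once.
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0
```

For example, two 10 × 10 boxes offset horizontally by half their width, `iou((0, 0, 10, 10), (5, 0, 15, 10))`, share an area of 50 and together cover 150, giving an IoU of 1/3. Under the 0.25 and 0.6 thresholds used for the localization network, such an anchor would be excluded from training.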

In total, more than 30 million anchor boxes were generated, of which just over 30 thousand were positive for a hip joint. To address this large class imbalance, two strategies were used when training the localization network. First, positive boxes were oversampled to make up 1% of the network inputs during training. Second, a modified focal loss function, as outlined in the RetinaNet paper, was used. This loss places larger training gradients on misclassified positive cases, so that the model concentrates on these hard examples, which is intended to improve the performance of the localization network. The localization network head, which ultimately classified the boxes, was a standard Inception-ResNet hybrid network trained from random initialization.
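Both imbalance strategies can be sketched briefly. Positives make up only about 0.1% of the anchors (roughly 30 thousand of 30 million), so sampling them at 1% of each batch raises their share roughly tenfold. The loss below is the standard binary focal loss from the RetinaNet paper with that paper's default α = 0.25 and γ = 2; the authors' modification is not described, so these values and the helper names `focal_loss` and `oversample_batch` are assumptions.

```python
import math
import random
from typing import List, Optional, Sequence


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0,
               eps: float = 1e-7) -> float:
    """Binary focal loss for one anchor: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p is the predicted probability that the anchor contains a hip joint and
    y is 1 for a positive anchor, 0 for a negative one.
    """
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t)**gamma shrinks the loss of well-classified (easy) anchors.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def oversample_batch(positives: Sequence, negatives: Sequence, batch_size: int,
                     pos_fraction: float = 0.01,
                     rng: Optional[random.Random] = None) -> List:
    """Draw a shuffled batch in which about pos_fraction of items are positive."""
    rng = rng or random.Random()
    n_pos = max(1, round(batch_size * pos_fraction))
    # Positives are drawn with replacement because there are so few of them.
    batch = rng.choices(positives, k=n_pos) + rng.sample(negatives, batch_size - n_pos)
    rng.shuffle(batch)
    return batch
```

With γ = 2, a positive anchor predicted at p = 0.9 keeps only (0.1)² = 1% of its cross-entropy, whereas one predicted at p = 0.1 keeps (0.9)² = 81%, so the hard cases dominate the gradient.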

The inputs to the classifier network were the five bounding boxes from the preceding localization network with the highest object scores, that is, the regions most likely to contain a hip joint.
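Selecting the classifier inputs amounts to a top-k query over the localization output. A minimal sketch using Python's standard `heapq.nlargest`, with k = 5 as reported; the function name `top_k_boxes` is hypothetical, and the sketch applies no suppression of overlapping boxes.

```python
import heapq
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def top_k_boxes(boxes: Sequence[Box], object_scores: Sequence[float],
                k: int = 5) -> List[Tuple[float, Box]]:
    """Return the k (object score, box) pairs with the highest scores, best first."""
    return heapq.nlargest(k, zip(object_scores, boxes), key=lambda pair: pair[0])
```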

The classifier convolutional neural network (CNN) was designed to mirror the structure of the localization network described above. However, instead of random weights, it was initialized with the trained weights of the localization network, so it started from features already learned during localization.

To improve robustness and prevent overfitting, regularization was applied during training to both the localization and classifier networks. One technique was L2 regularization, which penalizes large weights by adding to the loss function a term proportional to the sum of the squared weights. This discourages overly complex models and aids generalization. Another was dropout, which randomly deactivates a subset of neurons (units) at each training step so that the network cannot rely too heavily on any single unit, further promoting generalization.
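The two regularizers can be illustrated on plain Python lists. In this sketch the penalty strength `lam` and the dropout `rate` are hypothetical parameters, since the values used in the study are not reported. The dropout shown is the common "inverted" variant, which rescales the surviving units during training so that nothing changes at inference.

```python
import random
from typing import List, Optional, Sequence


def l2_penalty(weights: Sequence[float], lam: float) -> float:
    """Term added to the loss: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def dropout(activations: Sequence[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each unit with probability `rate` while training and
    scale the survivors by 1 / (1 - rate) so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at inference time
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

During training, the penalty is simply added to the data loss before backpropagation, while dropout is applied to layer activations on each forward pass.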

The classification network used a weighted cross-entropy loss over two to four classes to compute the error between the network's predictions and the true labels. This loss drove the weight updates during backpropagation over successive training iterations.
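For a single example, weighted cross-entropy is the negative log-probability of the true class scaled by that class's weight. The sketch below computes it directly from the raw network outputs, using the log-sum-exp trick for numerical stability. The class weights are hypothetical inputs; a common choice, assumed here rather than taken from the study, is to weight classes inversely to their frequency.

```python
import math
from typing import Sequence


def weighted_cross_entropy(logits: Sequence[float], target: int,
                           class_weights: Sequence[float]) -> float:
    """Loss for one example: -w[target] * log softmax(logits)[target].

    Works for any number of classes (two to four in the study).
    """
    m = max(logits)  # subtract the maximum before exponentiating for stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    log_prob_target = logits[target] - log_sum_exp
    return -class_weights[target] * log_prob_target
```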

The raw outputs of the classification network were two numerical values, interpreted as un-normalized log-probabilities (logits) for each class. These were converted into class probabilities with the softmax function. The final prediction for each patient was then obtained by aggregating the softmax scores after adjusting them for the object score.
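The conversion from raw outputs to a per-patient prediction might look like the sketch below. The softmax step is standard; the aggregation rule is an assumption, since the exact way the object score adjusts the softmax scores is not specified. Here each box's class probabilities are weighted by its object score and averaged, and the names `softmax` and `aggregate_patient` are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Map raw network outputs (logits) to probabilities that sum to 1."""
    m = max(logits)  # shift for numerical stability; the result is unchanged
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_patient(box_logits: Sequence[Sequence[float]],
                      object_scores: Sequence[float]) -> List[float]:
    """Object-score-weighted mean of per-box class probabilities (assumed rule)."""
    probs = [softmax(logits) for logits in box_logits]
    total_weight = sum(object_scores)
    n_classes = len(probs[0])
    return [sum(w * p[c] for w, p in zip(object_scores, probs)) / total_weight
            for c in range(n_classes)]
```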

The classifier network's hyperparameters were tuned according to its performance on the validation set. To obtain an unbiased estimate of model performance, the dataset was divided into training (70%), validation (20%), and testing (10%) subsets. The test set was sequestered (held out) throughout training and validation, ensuring an unbiased assessment of the model's final performance.
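A reproducible 70/20/10 split can be sketched as follows, using integer arithmetic so that floating-point rounding cannot shift the subset sizes. The sketch assumes the split is made over study identifiers, so that both hips from one radiograph land in the same subset; the level at which the data were split is an assumption here, and `split_dataset` is a hypothetical name.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(study_ids: Sequence, seed: int = 0,
                  train_pct: int = 70, val_pct: int = 20) -> Tuple[List, List, List]:
    """Shuffle once with a fixed seed, then cut into train / validation / test."""
    ids = list(study_ids)
    random.Random(seed).shuffle(ids)
    n_train = len(ids) * train_pct // 100
    n_val = len(ids) * val_pct // 100
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # the remainder (about 10%) is sequestered
    return train, val, test
```

With 3050 items, this gives 2135 training, 610 validation and 305 test items.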

Once the optimal hyperparameters had been identified on the validation set, the network was run once on the sequestered test set and its performance was recorded. The primary performance metric was the aggregate area under the receiver operating characteristic (ROC) curve (AUC). AUC summarizes how well the model distinguishes between the classes across all decision thresholds: a value of 0.5 corresponds to chance, and values closer to 1 indicate that the model achieves a higher true-positive rate at a lower false-positive rate.
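The AUC can be computed without tracing the curve explicitly, as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counting one half. The sketch below uses that pairwise definition, which is quadratic in the number of cases but easy to verify, alongside sensitivity and specificity at a single operating point such as the one reported in the abstract. The function names are hypothetical.

```python
from typing import Sequence, Tuple


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as P(score of a random positive > score of a random negative)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(scores: Sequence[float], labels: Sequence[int],
                            threshold: float) -> Tuple[float, float]:
    """Sensitivity and specificity when score >= threshold is called positive."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)
```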

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hoy, M.K., Desai, V., Mutasa, S. et al. Deep Learning–Assisted Identification of Femoroacetabular Impingement (FAI) on Routine Pelvic Radiographs. J Digit Imaging. Inform. med. 37, 339–346 (2024). https://doi.org/10.1007/s10278-023-00920-y


DOI: https://doi.org/10.1007/s10278-023-00920-y