Abstract

The human brain is a complex three-dimensional organ whose interior is difficult to visualize properly. Magnetic Resonance Imaging provides an accurate model of a patient's brain, but analyzing it as a set of flat slices, whether for medical or educational purposes, is not enough to fully grasp its internal structure. A virtual reality application has been developed to generate a complete three-dimensional model from MRI data, which users can explore internally through arbitrary planar cuts and color cluster isolation. An indexed vertex triangulation algorithm has been designed to efficiently display large amounts of complex three-dimensional vertex clusters on simple mobile devices. Feedback from students suggests that the resulting application satisfactorily complements theoretical lectures, as virtual reality allows them to better observe different structures within the human brain.



Introduction

The human brain is a complex three-dimensional object that resides inside our cranium. Our understanding of the brain has increased enormously with the advent of recent neuro-imaging techniques such as Magnetic Resonance Imaging (MRI) [1, 2]. The MR technique creates 3D matrices that contain signal intensity values determined by the specific magnetic properties of the tissues in particular locations of the brain. The most commonly used method for visualizing these 3D matrices relies on displaying 3D versions of the images on a 2D computer monitor [3].

This standard method of visualization is used for diagnosis and prognosis in clinical contexts, to study brain function in research contexts, and to study the underlying principles of neuro-anatomy and physiology in educational contexts. However, despite its widespread use, this method is problematic because 2D images do not preserve accurate depth information and do not permit easy interaction. Consequently, improved visualization methods may benefit this wide range of contexts.

This work presents a mobile application to expedite the teaching of brain anatomy by visualizing MR images and the different brain structures using Virtual Reality (VR). Although techniques for VR have been around for years [4], recent technological advancements in small-scale computing have made VR accessible to the masses. Specifically, modern mobile phones now possess sufficient computing power to render a fully interactive VR experience [5]. Our particular VR setup relied on a low-cost solution: a standard Android phone (LG Nexus 5X with Android 6.0 Marshmallow) combined with a VR headset [6]. We used the Unity3D platform as a local rendering engine [7].

Related Work

Visualizing the human brain using VR is not new; early approaches date back to 2001. The advantages of VR are that it preserves accurate depth information and that it potentially allows natural interaction with the visualized object. Zhang et al. [8] displayed diffusion tensor magnetic resonance images in a virtual environment consisting of an \(8\times 8\times 8\) foot cube with rear-projected front and side walls and a front-projected floor; in this setup, the user wore a pair of LCD shutter glasses that supported stereo viewing. Ten years later, Chen et al. [9] presented a virtual reality visualization method that used a two-screen immersive projection system, required passive projection and glasses for a stereoscopic 3D effect, and involved an Intersense IS-900 6-DOF tracking system with a head tracker and wand.

As technology evolved, VR systems were integrated into ever smaller devices, such as mobile phones and virtual reality glasses. Kosch et al. [10] used VR as an input stimulus to display real-time, three-dimensional measurements obtained with a brain–computer interface. Soeiro et al. [11] proposed a mobile application that used virtual and augmented reality to display the human brain and allowed the user to show or hide complete regions. Prior applications primarily convert the MR images to surface meshes and do not permit examination of the internal structure of the brain. The application presented in this paper allows the user to make arbitrary cuts that reveal the underlying brain structure directly from the MR image, and to generate voxel clusters from arbitrary seed points, with high detail and fidelity to the original data.

The educational benefits of VR systems have been studied extensively: Schloss et al. [12] included audio narration as part of guided VR neuroanatomy tours, and Stepan et al. [13] used computed tomography and highlighted the ventricular system and cerebral vasculature to create a focused interactive model. Educational VR experiences have also been built with several different technologies, which differ in the origin of the anatomical data (magnetic resonance [14], dissection [15]), the hardware that runs the application (HTC Vive [16], Dextrobeam [17]), or the software that presents the simulation (virtual presentations [18], fully interactive applications [19]). All of these works, however, agree that allowing students to study anatomical models in an interactive virtual environment greatly improves their understanding of the subject. The presented work builds upon this concept and introduces a new slicing feature that allows students to explore the human brain in greater depth.

This paper is organized as follows: “Educational Application” explains the educational goals of the presented work; “Virtual Brain in the Classroom” details the protocol used to introduce students to the developed application; “Material and Methods” describes the application and the actions a user can perform in it; “Calculation” presents the algorithms upon which the application is based; “Results and Discussion” studies the user feedback regarding the presented work; finally, “Conclusions” discusses the usefulness of the application.

Educational Application

The VRBrain application will be used in two separate courses. The first course, Biological Psychology (BP), forms part of the Master’s degree in Biomedicine at the University of La Laguna. The course consists of 3 ECTS credits (European Credit Transfer and Accumulation System) and takes place over 3 weeks in daily 2-hour sessions. It is typically taken by students who intend to pursue a doctoral degree in the PhD program in Medicine, for which a Master’s degree is required. The course focuses on how the various human cognitive and behavioral skills are implemented in the brain and how they are affected by disease.

The course is divided into two main sections: one that examines these issues using the Magnetic Resonance Imaging (MRI) technique and one that examines them using the Electro-Encephalography (EEG) technique. These two techniques provide insight into brain structure and function and allow the brain to be examined under pathological circumstances. By the completion of the course, students are required to have mastered the following skills:

- Understand basic anatomical organization of the human brain
- Understand how brain pathology can affect basic functions like memory and language
- Understand how brain pathology can affect basic functions like attention and perception

The first section of the course explains the MRI technique and details how this tool has been used to understand basic functions like memory and language. The course also examines how brain pathology that affects these functions can be elucidated using MRI. For example, it is commonly known that abnormal aging and Alzheimer’s Disease are related to memory problems and MRI has played a pivotal role in showing that such memory dysfunctions are associated with reductions in gray matter volume that start in a specific brain region called the hippocampal formation. In addition, another brain pathology called cerebral stroke sometimes leads to a specific language problem called Broca’s aphasia, and MRI has shown that such problems are associated with lesions in a part of the brain called the left inferior frontal gyrus.

To understand these issues, students should learn how the brain is divided into its main structures (the cerebral lobes, the ventricles, the meninges, etc.) and know some of the finer details of its organization (e.g., the parcellation of the cerebral cortex into its main areas, such as the frontal and temporal lobes). The BP course then focuses on the hypothesized function of these different parts of the brain and the role they may play in pathology.

In the second part of the course, the EEG technique will be used to address similar issues in the context of attention and perception. However, given the limited ability of EEG to yield images of the internal structure of the brain, the VRBrain application will be used primarily in the first section of the course, which is structured as follows:

- Basic concepts in functional Magnetic Resonance Imaging (fMRI): Physical basis, biological basis, hands-on experience (7 hours)
- Learning and Memory: Aging, Alzheimer’s Disease (4 hours)
- Language: Language disorders and the brain, language lateralization, recent evidence (4 hours)

The VRBrain application is intended to be used in the fMRI section, including its hands-on experience.

The second course in which we intend to use the VRBrain application is the Undergraduate Thesis Project (UTP), which is mandatory under the Spanish university system. The UTP is a 6 ECTS credit course that takes place in the second semester of the fourth year of the Psychology Degree at the University of La Laguna. The aim of this course is to allow students to develop their own interest in a given research topic. They work together with a professor to establish a research idea and then work autonomously to carry out the research and write the thesis.

Given the large number of final-year Psychology students who need to complete the UTP, students must choose from a number of research themes proposed by the professors of the Psychology department who teach the UTP course. Some of these research themes are:

- Educational Psychology
- Personality Psychology: Mindfulness, stress, anxiety
- Basic Psychology: Neuro-imaging; Neuro-anatomy; Language; Memory

The VRBrain application will be applied in the research theme related to neuro-imaging and neuro-anatomy. The research topics in this theme concern the investigation of the brain and its pathology, so it is useful for students to understand the basic neuro-anatomy of the brain, and this is where the VRBrain application will be particularly useful.

Virtual Brain in the Classroom

As we pointed out in “Educational Application”, the main goal of the application is to increase the understanding of neuro-anatomy among students at both the undergraduate and master’s degree levels. In the classroom, we will implement the following protocol for using the VRBrain application. The entire protocol lasts around 2 hours.

First, we will divide the students in the class into small groups of 3 to 4 people. This ensures that everyone can use the application, including those who do not have an Android device. In addition, given that the number of VR headsets is limited, working in groups also ensures that everyone gets a turn with the application.

Second, given the complexity of the application, we will first give students time to become familiar with it: they are free to start up the application and explore the various menus using the Bluetooth controller. Next, they are required to complete a short quiz of targeted questions that require finding and understanding basic neuro-anatomy of the human brain. This mainly relies on the first functionality of the application (see “Material and Methods”). For example, we include questions such as:

- Describe in anatomical terms the location of the human hippocampus in relation to the amygdala.
- Does Broca’s region lie in the frontal or temporal lobe?
- What is the main function of the occipital lobe?

Answering these questions relies on a 3D understanding of the brain, as well as having read the information that appears in the textbox when a given structure is highlighted within the VRBrain application.

In addition, we ask students to examine the internal structure of the brain using the second functionality of the application (see “Brain Slicing” in “Material and Methods”). For this functionality, we ask questions such as:

- Estimate the distance from the superior part of the brain to the lateral ventricle in centimeters.
- Find the hippocampal area by slicing the brain in coronal slices.
- Find the corpus callosum by slicing the brain in sagittal slices.

Answering these questions requires inspection of the internal structure of the brain which is implemented in the VRBrain application. We hope that by studying these questions in the classroom the students develop further insight into the 3D structure of the brain and improve their understanding of human neuro-anatomy. This will then in turn improve their understanding of the larger topics related to brain function and pathology in the respective courses.

Material and Methods

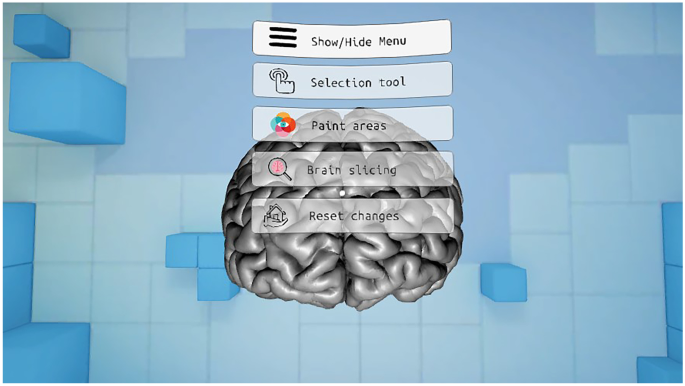

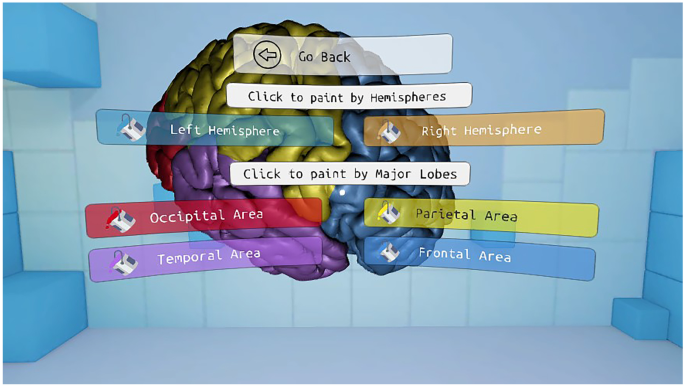

The application is divided into two main parts. First, a series of functionalities have been implemented to color and highlight different parts of the brain and show information about them. Second, a functionality has been developed with which the user can cut the brain along planes orthogonal to the point of view. In this application, the user interacts with the menu entries by looking directly at them for a short period of time, and the analog stick is used to rotate the virtual representation of the brain. Figure 1 shows the main menu of the presented application.

Brain Slicing

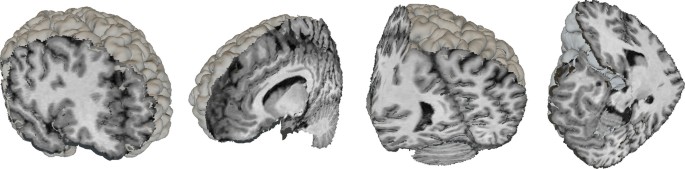

Brain Slicing is a functionality for studying the internal anatomy of the brain. The user can rotate the view around the virtual brain and cut it along a plane orthogonal to the point of view. The virtual representation of the brain is built from MRI data that must be preprocessed to generate both the internal information of the brain and the cortical surface. The following sections detail the preprocessing step and the calculations needed to cut both the cortex and the internal representation of the brain.

Preprocessing

In the first step of the process, the raw MRI data obtained from a patient scan is stored in the standard Digital Imaging and Communications in Medicine (DICOM) format. As this format generally does not permit easy manipulation, all DICOM images were converted into the Neuroimaging Informatics Technology Initiative (NIfTI) format [20]. The NIfTI file format includes the affine coordinate definitions relating voxel indices to spatial locations, as well as codes indicating the spatial and temporal order of the captured brain image slices. Although the developed application accepts files of any size, the default resolution of the examples in the presented work is \(256\times 256\times 128\) voxels.
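The affine mentioned above maps a voxel index \((i, j, k)\) to a spatial location by applying a \(4\times 4\) matrix to the homogeneous index \((i, j, k, 1)\). The minimal sketch below illustrates this with a made-up affine (1 mm in-plane spacing, 1.5 mm between slices, origin placed at the center of a \(256\times 256\times 128\) volume), not one taken from the scans used in this work; `voxel_to_world` and `EXAMPLE_AFFINE` are hypothetical names. In practice, a NIfTI reader such as nibabel exposes this matrix as the image's `affine` attribute.

```python
# Sketch: map a voxel index (i, j, k) to spatial coordinates with a
# NIfTI-style 4x4 affine. EXAMPLE_AFFINE is an illustrative, made-up matrix.
Affine = list[list[float]]

EXAMPLE_AFFINE: Affine = [
    [1.0, 0.0, 0.0, -128.0],  # 1 mm along i, origin shifted to the volume center
    [0.0, 1.0, 0.0, -128.0],  # 1 mm along j
    [0.0, 0.0, 1.5, -96.0],   # 1.5 mm between slices along k
    [0.0, 0.0, 0.0, 1.0],
]


def voxel_to_world(affine: Affine, i: int, j: int, k: int) -> tuple[float, float, float]:
    """Apply the affine to the homogeneous voxel index [i, j, k, 1]."""
    v = (i, j, k, 1)
    x, y, z = (sum(affine[r][c] * v[c] for c in range(4)) for r in range(3))
    return (x, y, z)
```

With this matrix, the central voxel (128, 128, 64) maps to the origin and voxel (0, 0, 0) maps to (-128, -128, -96) mm.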

This NIfTI file is first processed with the BrainSuite cortical surface identification tool, which produces a three-dimensional vertex mesh of the brain cortex [21]. This step is necessary because a raw rendering of the voxels of a NIfTI file usually looks dull and unrealistic. However, properly displaying any segmentation of a brain requires both the cortical and the inner data; therefore, both the cortex mesh and the voxel data matrix are loaded into the visualization program.

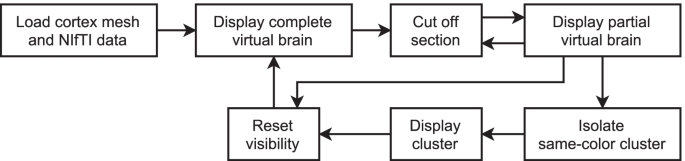

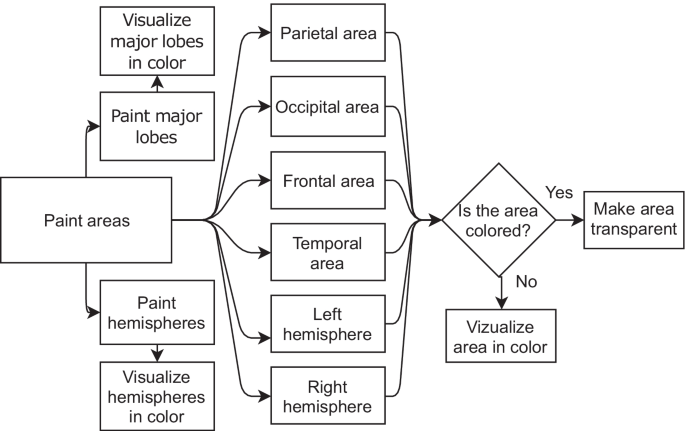

The user can then freely rotate the view around the virtual representation of the brain, and they may choose to perform three different actions: cutting off a section by defining an intersection plane, isolating a specific same-colored region of the brain, and restoring the whole brain to its original state. “Calculation” explains how these operations are executed. The flowchart of the presented application is displayed in Fig. 2.

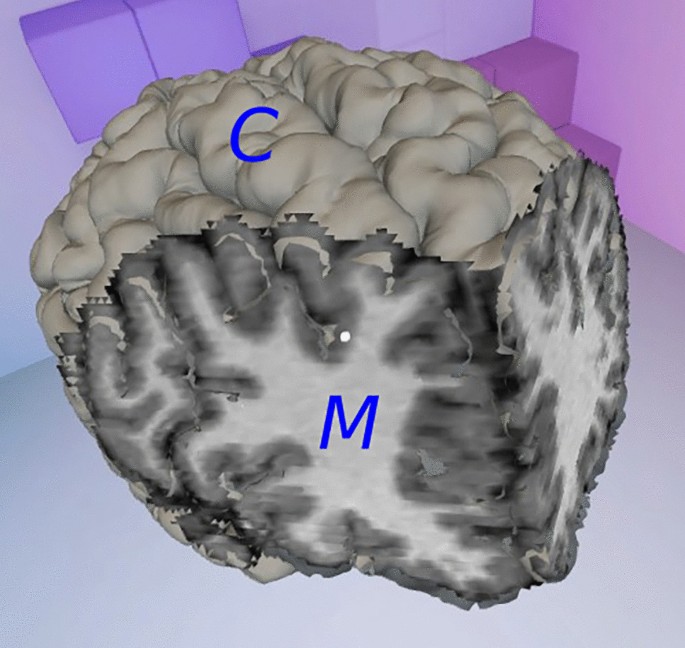

A second mesh is created when a cut or an isolation is produced, showing the faces inside the brain that become visible (Fig. 3). A three-dimensional mesh normally contains the locations of all vertices, some metadata regarding their normal vectors, colors and/or texture mapping, and a list of triangles, which describe the connections between vertices in order to form visible faces.

The vertices of the inner brain mesh are the centers of all the voxels provided by the NIfTI file, and their color is the gray level given by their MRI intensity value. To generate a clearer image, shading is not taken into account, so the normal vectors of the vertices are unnecessary and ignored. Visible faces only appear at the boundary between a visible region of the brain and a hidden one, so the triangles of the inner mesh are recalculated every time the user produces a cut or an isolation, as explained in “Calculation”.
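An indexed mesh of this kind can be held as a vertex list, a per-vertex color list and a flat triangle index list. The sketch below is an illustration rather than the application's Unity code: it assumes the volume arrives as a nested list `volume[i][j][k]`, places one vertex at each voxel center in voxel units, rescales intensities to a gray level in [0, 1], and leaves the triangle list empty until a cut or isolation is triangulated. `InnerMesh`, `index` and `build_inner_mesh` are hypothetical names; `index` flattens \((i, j, k)\) with \(i\) varying fastest.

```python
from dataclasses import dataclass, field


@dataclass
class InnerMesh:
    nx: int
    ny: int
    nz: int
    vertices: list[tuple[float, float, float]] = field(default_factory=list)
    colors: list[float] = field(default_factory=list)   # gray level in [0, 1]
    triangles: list[int] = field(default_factory=list)  # 3 vertex indices per triangle

    def index(self, i: int, j: int, k: int) -> int:
        """Flat vertex index of voxel (i, j, k), with i varying fastest."""
        return i + self.nx * (j + self.ny * k)


def build_inner_mesh(volume: list[list[list[float]]]) -> InnerMesh:
    """Create one vertex per voxel center; no triangles until a cut is made."""
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    values = [volume[i][j][k] for k in range(nz) for j in range(ny) for i in range(nx)]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a uniform volume
    mesh = InnerMesh(nx, ny, nz)
    # Loop order (k outer, i inner) matches the index() layout above.
    for k in range(nz):
        for j in range(ny):
            for i in range(nx):
                mesh.vertices.append((i + 0.5, j + 0.5, k + 0.5))
                mesh.colors.append((volume[i][j][k] - lo) / span)
    return mesh
```

Because vertices never move, later steps only need to rewrite `triangles`, which is what keeps the per-cut update cheap.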

Calculation

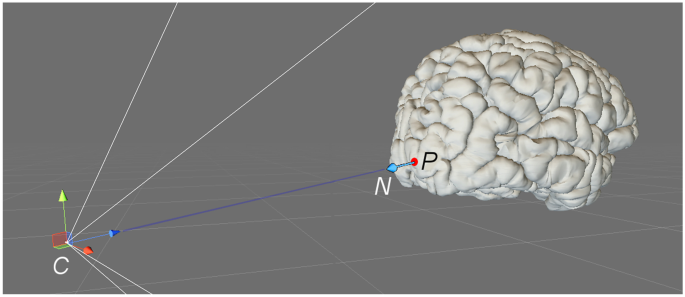

To select a plane with which to cut off a portion of the brain, a point in space P and a normal vector N are needed. Both elements are extracted from the point of view of the user, relative to the center of the brain: the orientation vector of the camera in the scene equals \(-N\), while P is located at the center of the closest active brain voxel on which the user focuses their gaze (Fig. 4). Point P can also define a seed voxel to isolate a same-colored region of the brain.
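One way to obtain P and N from the user's gaze is sketched below. The details are assumptions, not taken from the text: the camera position and forward vector are expressed in voxel coordinates, `active[i][j][k]` marks voxels that are still shown, and the closest active voxel is found by fixed-step ray marching. An exact voxel traversal (such as the Amanatides–Woo algorithm) or an engine raycast would avoid missing voxels that the ray only grazes. `cut_plane_from_gaze` is a hypothetical name.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale v to unit length."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def cut_plane_from_gaze(camera_pos: Vec3, camera_forward: Vec3, active,
                        step: float = 0.25, max_dist: float = 1000.0):
    """Return (P, N), or None if the gaze ray hits no active voxel.

    Voxel (i, j, k) is assumed to occupy [i, i+1) x [j, j+1) x [k, k+1).
    """
    f = normalize(camera_forward)
    n = (-f[0], -f[1], -f[2])  # the camera looks along -N
    nx, ny, nz = len(active), len(active[0]), len(active[0][0])
    t = 0.0
    while t <= max_dist:
        x, y, z = (camera_pos[a] + t * f[a] for a in range(3))
        i, j, k = math.floor(x), math.floor(y), math.floor(z)
        if 0 <= i < nx and 0 <= j < ny and 0 <= k < nz and active[i][j][k]:
            # P is the center of the first active voxel along the gaze.
            return (i + 0.5, j + 0.5, k + 0.5), n
        t += step
    return None
```

The same returned point can serve as the seed voxel for isolating a same-colored region.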

Once the user selects a cut plane, all voxels of the virtual brain are classified according to their position relative to it. Let Q be the center of a voxel; its signed distance to the plane, taking N as a unit vector, is calculated as the scalar product \(N\cdot \left( P-Q\right)\). If this value is negative, the voxel is located between the cut plane and the user camera and must be removed.
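The classification rule translates directly into code. In this sketch, the hypothetical `apply_cut` hides, in place, every active voxel whose center gives a negative value of \(N\cdot(P-Q)\). Voxel centers are assumed to lie at half-integer coordinates, and voxels exactly on the plane, including the one at P, are kept.

```python
Vec3 = tuple[float, float, float]


def signed_value(p: Vec3, n: Vec3, q: Vec3) -> float:
    """N . (P - Q); negative means Q lies between the plane and the camera."""
    return sum(n[a] * (p[a] - q[a]) for a in range(3))


def apply_cut(p: Vec3, n: Vec3, active) -> int:
    """Hide every active voxel on the camera side of the plane; return how many."""
    removed = 0
    for i in range(len(active)):
        for j in range(len(active[i])):
            for k in range(len(active[i][j])):
                q = (i + 0.5, j + 0.5, k + 0.5)  # voxel center in voxel units
                if active[i][j][k] and signed_value(p, n, q) < 0:
                    active[i][j][k] = False
                    removed += 1
    return removed
```

For a camera on the negative x side (N = (-1, 0, 0)) and P = (2.5, 1.5, 1.5) in a 4×4×4 grid, the two voxel layers with x < 2.5 are hidden.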

The cortex mesh is also updated every time a brain section is cut off. Removing its vertices is not necessary, since they will not be visible unless they are referenced by at least one triangle. Therefore, when the user defines a cut plane, only the list of triangles of the mesh is updated by removing every triangle which contains one or more vertices located between the plane and the user camera (Fig. 5).
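Cutting the cortex therefore only rewrites its triangle list. The sketch below assumes a flat index list with three entries per triangle, the same layout Unity uses for `Mesh.triangles`; `cut_cortex` is a hypothetical name. It returns a new list and leaves both inputs untouched, so keeping a copy of the original triangle list is enough to restore the cortex later.

```python
Vec3 = tuple[float, float, float]


def signed_value(p: Vec3, n: Vec3, q: Vec3) -> float:
    """N . (P - Q); negative means Q lies between the plane and the camera."""
    return sum(n[a] * (p[a] - q[a]) for a in range(3))


def cut_cortex(vertices: list[Vec3], triangles: list[int], p: Vec3, n: Vec3) -> list[int]:
    """Drop every triangle with at least one vertex on the camera side."""
    camera_side = [signed_value(p, n, v) < 0 for v in vertices]
    kept: list[int] = []
    for t in range(0, len(triangles), 3):
        a, b, c = triangles[t:t + 3]
        if not (camera_side[a] or camera_side[b] or camera_side[c]):
            kept.extend((a, b, c))
    return kept
```

Vertices are classified once, so the cost is linear in the number of vertices plus triangles, with no vertex data copied.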

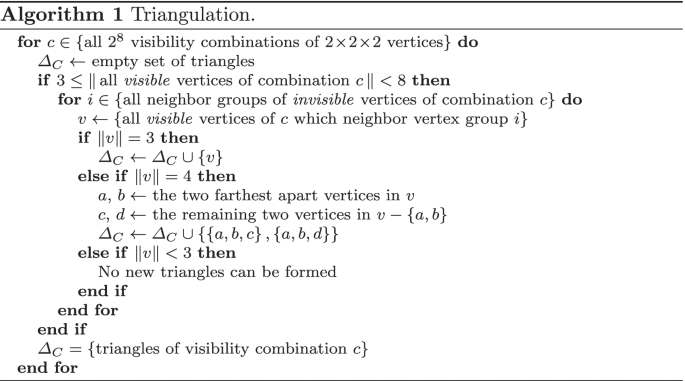

To calculate the triangles of the inner brain mesh, all \(2\!\times \!2\!\times \!2\) vertex neighborhoods of the inner brain mesh are studied individually. Since visible faces only form at the boundary between visible and invisible regions of the brain, triangles only connect visible vertices that are close to at least one hidden vertex (Fig. 6). Each neighborhood contains only 8 vertices, each of which is either visible or invisible, so there are \(2^8=256\) possible visibility configurations per neighborhood. To decrease calculation time during execution, the faces for these configurations are precalculated as shown in Algorithm 1 and reused for each vertex neighborhood of the virtual brain.
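The lookup-table idea can be sketched as follows. Algorithm 1 is only given as a figure, so the per-configuration rule in `triangles_for_mask` is a simplified placeholder chosen for illustration, not the authors' rule: bit c of an 8-bit mask marks corner c as visible, and in a neighborhood that mixes visible and hidden corners, each of its six square faces contributes one triangle if three of its corners are visible and two if all four are. The 256 entries are computed once; `triangulate` then only builds one mask per neighborhood and copies the stored triangles, translating local corner numbers into global vertex indices (i varying fastest). Winding order is ignored because shading is not used, and faces shared by adjacent neighborhoods may be emitted twice. All names are hypothetical.

```python
from itertools import product

# Corner c of a 2x2x2 neighborhood sits at offset (c & 1, (c >> 1) & 1, (c >> 2) & 1).
CORNER_OFFSETS = [(c & 1, (c >> 1) & 1, (c >> 2) & 1) for c in range(8)]

# The six square faces of a neighborhood, corners listed in cyclic order.
FACES = [
    (0, 2, 6, 4), (1, 3, 7, 5),  # x = 0, x = 1
    (0, 1, 5, 4), (2, 3, 7, 6),  # y = 0, y = 1
    (0, 1, 3, 2), (4, 5, 7, 6),  # z = 0, z = 1
]


def triangles_for_mask(mask: int) -> list[tuple[int, ...]]:
    """Placeholder rule: local-corner triangles for one visibility mask."""
    if mask == 0 or mask == 0xFF:
        return []  # no visible/hidden boundary inside this neighborhood
    tris: list[tuple[int, ...]] = []
    for face in FACES:
        vis = [c for c in face if mask >> c & 1]
        if len(vis) == 4:
            tris += [(vis[0], vis[1], vis[2]), (vis[0], vis[2], vis[3])]
        elif len(vis) == 3:
            tris.append(tuple(vis))
    return tris


# Precomputed once: one entry per visibility configuration (2**8 = 256).
TRIANGLE_TABLE = [triangles_for_mask(m) for m in range(256)]


def triangulate(visible: list[bool], nx: int, ny: int, nz: int) -> list[int]:
    """Flat triangle index list for a visibility array indexed i + nx*(j + ny*k)."""
    out: list[int] = []
    for k, j, i in product(range(nz - 1), range(ny - 1), range(nx - 1)):
        mask = 0
        for c, (dx, dy, dz) in enumerate(CORNER_OFFSETS):
            if visible[(i + dx) + nx * ((j + dy) + ny * (k + dz))]:
                mask |= 1 << c
        for tri in TRIANGLE_TABLE[mask]:
            for c in tri:
                dx, dy, dz = CORNER_OFFSETS[c]
                out.append((i + dx) + nx * ((j + dy) + ny * (k + dz)))
    return out
```

Whatever rule a real implementation uses, the runtime cost per neighborhood stays at eight lookups plus one table access, which is the point of precomputing the 256 cases.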

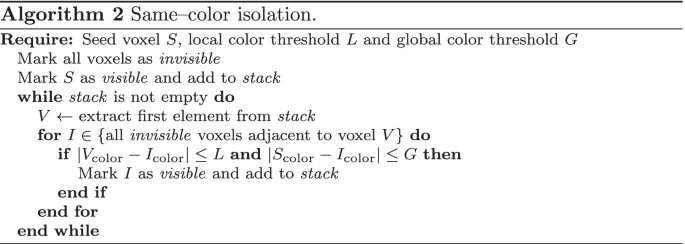

Once the inside of the brain becomes visible, the user can select an isolation seed by focusing their gaze on a specific brain voxel. When this happens, every voxel in the brain is marked as invisible; then, the visibility of the seed and every neighboring voxel with a similar enough gray level, given a threshold, is restored as shown in Algorithm 2. The result is a single cluster of voxels of similar color (Fig. 7). The cortex is completely hidden in this situation, so that the cluster can be seen clearly, and the triangulation process described in Algorithm 1 creates a visible mesh around the isolated voxels.
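Algorithm 2 is also only shown as a figure, so the breadth-first region-growing sketch below illustrates the isolation step under assumptions of its own: neighbors are the six face-adjacent voxels, and each candidate's gray level is compared with the seed's (rather than with the voxel it was reached from), which keeps the cluster from drifting gradually across intensities. `isolate_region` is a hypothetical name, and `gray[i][j][k]` is assumed to hold voxel intensities.

```python
from collections import deque

NEIGHBORS_6 = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def isolate_region(gray, seed: tuple[int, int, int], threshold: float):
    """Hide every voxel, then re-show the connected cluster similar to the seed."""
    nx, ny, nz = len(gray), len(gray[0]), len(gray[0][0])
    visible = [[[False] * nz for _ in range(ny)] for _ in range(nx)]
    si, sj, sk = seed
    reference = gray[si][sj][sk]
    visible[si][sj][sk] = True
    queue = deque([seed])
    while queue:
        i, j, k = queue.popleft()
        for di, dj, dk in NEIGHBORS_6:
            a, b, c = i + di, j + dj, k + dk
            if not (0 <= a < nx and 0 <= b < ny and 0 <= c < nz):
                continue  # outside the volume
            if visible[a][b][c] or abs(gray[a][b][c] - reference) > threshold:
                continue  # already in the cluster, or not similar enough
            visible[a][b][c] = True
            queue.append((a, b, c))
    return visible
```

Only voxels connected to the seed through similar voxels are restored, so a separate region with the same gray level stays hidden; the triangulation step can then run on the resulting visibility grid.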

Finally, the restoration action simply returns all voxels back to their original state and resets the triangles of the cortex mesh.

Paint Areas and Selection Tool

The application also includes functionalities to paint areas of the brain, to highlight both internal and external structures and to display information about them. Virtual reality is used so that the user can better appreciate the shape and spatial arrangement of these structures within the brain.

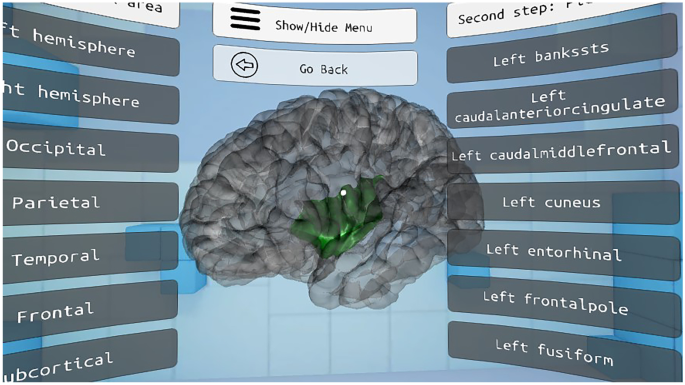

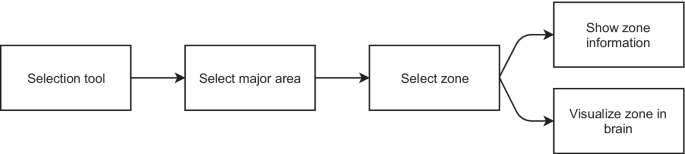

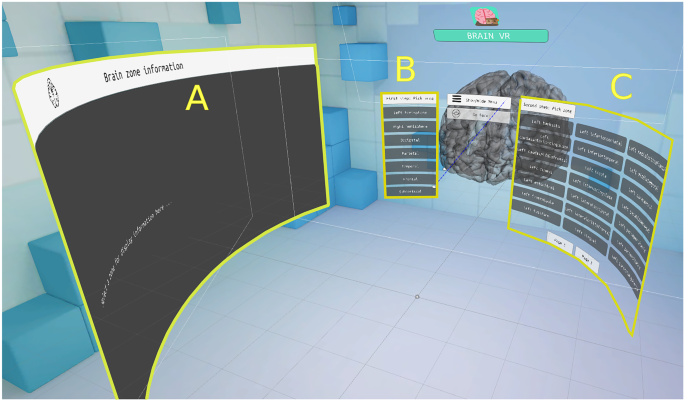

Selection Tool

The selection tool allows the user to select a part of the brain and visualize its shape and location; information about its functions is also displayed. To select a region, the user must first select the zone in which it is located, in a menu on the left side. Then, a menu is displayed to the right of the user, listing the structures which the selected zone contains. Finally, the selected area is shown in green inside a semitransparent brain, as seen in Fig. 8. Figure 9 shows a flowchart of this functionality and Fig. 10 shows the different interfaces which the user can access in the selection tool environment.

Paint Areas

The functionality to paint areas allows the user to visualize different cortical areas of the brain in color. The interface is composed of two main buttons that activate two different color schemes: one to visualize the hemispheres and the other to show the major lobes. In addition, the user has access to buttons to color each area individually. If the area is already colored, clicking the button turns it semi-transparent, so that the internal structure of the brain is exposed. Figures 11 and 12 show the flowchart and the interface of this functionality, respectively.

Results and Discussion

The developed VR application was presented to 32 students aged between 19 and 37, and their opinion on its performance and usefulness was collected through an anonymous five-point Likert-scale satisfaction questionnaire [22]. Table 1 shows the mean, standard deviation and \(95\%\) confidence interval of the questionnaire results.
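For reference, per-item statistics of this kind can be computed as below. This is a sketch, since the text does not state how the interval was obtained: it uses the sample standard deviation and, by default, a normal critical value of about 1.96. With 32 respondents, a Student t value (about 2.04 for 31 degrees of freedom) gives a slightly wider interval and can be passed through the `critical` parameter. `summarize` is a hypothetical name, and at least two scores are required.

```python
import math
from statistics import NormalDist, mean, stdev


def summarize(scores, critical=None):
    """Mean, sample SD and two-sided 95% confidence interval for the mean."""
    n = len(scores)
    m = mean(scores)
    sd = stdev(scores)  # sample standard deviation (n - 1 denominator)
    if critical is None:
        critical = NormalDist().inv_cdf(0.975)  # about 1.96, normal approximation
    half_width = critical * sd / math.sqrt(n)
    return m, sd, (m - half_width, m + half_width)
```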

These results show that students have found the application to be helpful in their learning process, as represented by their opinion on the “Paint areas” and “Selection tool” functionalities (mean above 4.44). This is in line with previous works, which found that the usage of virtual reality significantly improves test grades [17], since it helps students to better understand the three-dimensional structures of the human brain [18], also improving their satisfaction and decreasing their reluctance to learn neuroanatomy [14].

The “Brain slicing” option scored an average of only 3.33 out of 5. This tool was not originally designed as an educational device but as a way for medical experts to explore the brain of a real patient and search for anomalies; as such, students found it too complicated to use and not instructive enough. Further iterations of this work may adapt this option to increase its formative potential.

Students also mostly agreed that the presented application should be used in future courses and found it easy and intuitive to use, although the “Brain slicing” option again scored lower than the other functionalities.

Conclusions

A VR brain exploration application has been developed as a medical and educational tool. The presented system builds a three-dimensional brain model from MRI data and a basic cortex model, then allows the user to cut off slices to study its interior and to isolate vertex clusters by color. Students can also highlight and analyze different areas of the brain to complement their anatomical knowledge.

A satisfaction questionnaire showed very positive feedback from the students who tested the application. They reported that the educational side of the presented work was very useful to them, as it helped them better understand the theoretical explanations provided by the teacher.

The results obtained in the present study fit well with previous works, such as [13, 14, 16, 17], or [19], but the implemented virtual experience allowed for a greater degree of interaction than Schloss et al. [12], Lopez et al. [18] and de Faria et al. [15], due to its unique vertex triangulation algorithm and novel exploration tools which may also be used for medical and non-educational purposes. Based on student feedback, further iterations of the presented work will improve some of the presented features to increase their approachability in an academic environment.

Data Availability

All collected data is included as part of the presented work.

Code Availability

All developed code is available at github.com/jhaceituno/brain3D.

References

Huettel SA, Song AW, McCarthy G (2009) Functional Magnetic Resonance Imaging. Freeman, USA

Lauterbur P (1973) Image formation by induced local interactions: examples employing nuclear magnetic resonance. Nature 242:190–191

Rinck PA (2019) Magnetic resonance in medicine: a critical introduction. BoD–Books on Demand

Steuer J (1992) Defining virtual reality: Dimensions determining telepresence. Journal of Communication 42(4):73–93

Henrysson A, Billinghurst M, Ollila M (2005) Virtual object manipulation using a mobile phone. In: Proceedings of the 2005 international conference on Augmented tele-existence, ACM, pp 164–171

Google (2021) Google AR & VR. https://arvr.google.com/vr/

Unity Technologies (2005) Unity3D. https://unity3d.com, accessed: 2017-06-20

Zhang S, Demiralp Ç, DaSilva M, Keefe D, Laidlaw D, Greenberg B, Basser P, Pierpaoli C, Chiocca E, Deisboeck T (2001) Toward application of virtual reality to visualization of DT-MRI volumes. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2001, Springer, pp 1406–1408

Chen B, Moreland J, Zhang J (2011) Human brain functional MRI and DTI visualization with virtual reality. In: ASME 2011 World Conference on Innovative Virtual Reality, American Society of Mechanical Engineers, pp 343–349

Kosch T, Hassib M, Schmidt A (2016) The brain matters: A 3D real-time visualization to examine brain source activation leveraging neurofeedback. In: Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, ACM, pp 1570–1576

Soeiro J, Cláudio AP, Carmo MB, Ferreira HA (2016) Mobile solution for brain visualization using augmented and virtual reality. In: Information Visualisation (IV), 2016 20th International Conference, IEEE, pp 124–129

Schloss KB, Schoenlein MA, Tredinnick R, Smith S, Miller N, Racey C, Castro C, Rokers B (2021) The UW virtual brain project: An immersive approach to teaching functional neuroanatomy. Translational Issues in Psychological Science

Stepan K, Zeiger J, Hanchuk S, Del Signore A, Shrivastava R, Govindaraj S, Iloreta A (2017) Immersive virtual reality as a teaching tool for neuroanatomy. In: International Forum of Allergy & Rhinology, Wiley Online Library, vol 7, pp 1006–1013

Ekstrand C, Jamal A, Nguyen R, Kudryk A, Mann J, Mendez I (2018) Immersive and interactive virtual reality to improve learning and retention of neuroanatomy in medical students: a randomized controlled study. Canadian Medical Association Open Access Journal 6(1):E103–E109

de Faria JWV, Teixeira MJ, Júnior LdMS, Otoch JP, Figueiredo EG (2016) Virtual and stereoscopic anatomy: when virtual reality meets medical education. Journal of Neurosurgery 125(5):1105–1111

van Deursen M, Reuvers L, Duits JD, de Jong G, van den Hurk M, Henssen D (2021) Virtual reality and annotated radiological data as effective and motivating tools to help social sciences students learn neuroanatomy. Scientific Reports 11(1):1–10

Kockro RA, Amaxopoulou C, Killeen T, Wagner W, Reisch R, Schwandt E, Gutenberg A, Giese A, Stofft E, Stadie AT (2015) Stereoscopic neuroanatomy lectures using a three-dimensional virtual reality environment. Annals of Anatomy-Anatomischer Anzeiger 201:91–98

Lopez M, Arriaga JGC, Álvarez JPN, González RT, Elizondo-Leal JA, Valdez-García JE, Carrión B (2021) Virtual reality vs traditional education: Is there any advantage in human neuroanatomy teaching? Computers & Electrical Engineering 93:107282

Souza V, Maciel A, Nedel L, Kopper R, Loges K, Schlemmer E (2020) The effect of virtual reality on knowledge transfer and retention in collaborative group-based learning for neuroanatomy students. In: 2020 22nd Symposium on Virtual and Augmented Reality (SVR), IEEE, pp 92–101

Cox RW, Ashburner J, Breman H, Fissell K, Haselgrove C, Holmes CJ, Lancaster JL, Rex DE, Smith SM, Woodward JB, Strother SC (2004) A (sort of) new image data format standard: NIfTI–1. Neuroimage 22:e1440

Shattuck DW, Leahy RM (2002) BrainSuite: An automated cortical surface identification tool. Medical Image Analysis 6(2):129–142

Likert R (1932) A technique for the measurement of attitudes. Archives of Psychology

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. No funding was received for the presented work.


Ethics declarations

Ethics Approval

No experiments on humans or animals were performed.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Consent for Publication

No personal data of any participant is revealed in the study.

Conflicts of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hernández-Aceituno, J., Arnay, R., Hernández, G. et al. Teaching the Virtual Brain. J Digit Imaging 35, 1599–1610 (2022). https://doi.org/10.1007/s10278-022-00652-5


DOI: https://doi.org/10.1007/s10278-022-00652-5