Abstract

With the increasing presence of cyber-physical systems (CPSs), like autonomous vehicle systems and digital twins, the future of software engineering is predicated on the importance of designing and developing data-centric software systems that can adapt intelligently at run time. CPSs consist of complex heterogeneous software components. Model-driven engineering advocates using software models to tame such complexity, capturing the relevant design concerns of such systems at different levels of abstraction. Yet most of the existing CPSs are engineered without considering MDE practices and tools, facing fundamental challenges when working with data: monitoring the program data at run time, syncing updates between program and model, dealing with heterogeneous data sources, and representing such observed data at run time to facilitate automated analysis. In this work, we introduce the notion of view models to explicitly represent parts of the domain knowledge implicitly embedded in the source code of a software system. This notion is equipped with a scalable bidirectional syncing mechanism that extracts view model instances from program snapshots at run time. The syncing mechanism is proposed from a conceptual point of view, independently of specific implementations and supports incremental view model update and view model maintenance. We show how this syncing mechanism is flexible enough to facilitate the non-intrusive adoption of MDE technology over existing MDE-agnostic heterogeneous data-centric systems. We study the run-time cost implied by the EMF-Syncer , the tool implementing this syncing mechanism for Java applications and view models atop the eclipse modeling framework (EMF) when executing data analytic and transformation tasks over large volumes of data in the presence of data updates at run time. An empirical evaluation of the EMF-Syncer has been conducted with an industry-targeted benchmark for decision support systems, analyzing performance and scalability. The novel syncing mechanism enables new opportunities to adopt MDE technology in heterogeneous data-centric systems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

With the increasing presence of cyber-physical systems (CPSs) [1, 14] among software systems, like autonomous vehicles and digital twins, the future of software engineering is predicated on the importance of designing and developing software systems that can adapt intelligently at run time [2]. Run-time models can inform reasoning and decision-making based on the knowledge that emerges during the execution of a system, which cannot be foreseen at design time [3]. Run-time models need to be causally related to the system being modeled so that data from the underlying system can be taken into account by the runtime model and updates in the runtime model lead to updates in the underlying system.

CPSs consist of complex heterogeneous software components, including different data sources. To deal with such complexity, software models capture the relevant design concerns of such systems at different levels of abstraction. Once models are explicitly available, model views provide a unified perspective of the system along with a viewpoint that can be used for analysis purposes [10], like reasoning about interoperability consistency among the different components involved. For modeling technology to analyze such systems, models need to be materialized first, usually via a data store, fetched to memory, and then the views have to be initialized[10, 23]. While model storage may be necessary to deal with very large models, it may become a performance bottleneck when dealing with models at run time, especially when design models need to be kept consistent with run-time models.

When looking at program instrumentation, Gray and Rumpe [24] argue that domain knowledge is implicitly represented in programs using standard programming languages, like Java. Such implicit representation, although pragmatic, can pose a severe disadvantage; it is not amenable for explicit analysis at design time, as discussed above.

Hence, while database technology for dealing with large model instances may not be convenient for obtaining run-time models of systems whose data is available in memory, implicit knowledge in programs is not amenable for explicit analysis. In this work, we are proposing an MDE approach for connecting MDE-agnostic software to models at run time. Our approach consists of a bidirectional syncing mechanism that can be used to observe program data (in-memory Java objects forming program snapshots) and modify it, thus affecting the system behavior that relies on such data. Our approach is a code-first approach, enabling the adoption of modeling technology to any software. It is decoupled from specific persistence technology by extracting in-memory data from a program at run time. Models can be retrieved from data stores or services. The syncing mechanism facilitates on-demand syncing and incremental updates, leveraging scalability features of persistence layers accessible from code and incremental execution features from modeling tools, both for querying and transformation.

A synchronization mechanism involves some additional computation (and resources) to maintain copies of objects (Java objects and view objects) in sync, whereas modeling technology that interfaces with data stores directly does not need to deal with such duality. This work presents the EMF-Syncer , the tool implementing our synchronization model over Java programs. It investigates whether the synchronization mechanism imposes run-time and memory penalties that may cause unduly pragmatic concerns. The evaluation has been performed using an industry-targeted benchmark for decision support systems, TPC-DS [36], that involves very large data sets. We aim to assess the performance of the  , as a proof-of-concept of the proposed conceptual framework, in use cases that involve mapping reasonably large program snapshots to models.

, as a proof-of-concept of the proposed conceptual framework, in use cases that involve mapping reasonably large program snapshots to models.

The rest of the paper is structured as explained below. Section 2 introduces the terminology on (data) views that we will use in the remainder of the article, and a running example is presented. Section 3 formulates the problem that we are solving in the article and introduces an implementation-independent formulation of program data and views. Section 4 describes a solution to the problem by presenting a synchronization model that is independent of technical spaces and then discusses how domain-specific synchronization policies can constrain the synchronization model. Section 5 presents the implementation requirements for selecting target programming languages and modeling environments and how this synchronization model has been realized in \(\textsc {EMF-Syncer\ }\). In Sect. 6, \(\textsc {EMF-Syncer\ }\) is analyzed, in terms of performance and scalability by using the TPC-DS benchmark. The results of this empirical evaluation are then used to discuss to what extent it is feasible to work with EMF (data) views in terms of the size of data sets. In Sect. 7, related work is discussed, concluding with the lessons learned and future research directions.

This work is based on the syncing mechanism presented in [7], where the syncing mechanism is used to show how to adopt MDE technology in MDE-agnostic spaces. The following parts of the article are new:

-

The introduction of key terminology and practical use cases to motivate the example presented in Sect. 2.

-

The extension of the syncing mechanism to support more complex synchronization scenarios, both at a conceptual level in Sect. 3, and in the \(\textsc {EMF-Syncer\ }\) tool through the implementation of user-definable domain-specific policies in Sect. 4.

-

A detailed examination of the implementation requirements for porting the \(\textsc {EMF-Syncer\ }\) to other technologies, such as Python/Pycore, in Sect. 5.

-

An updated evaluation of the syncing mechanism in Sect. 6, which includes new experimentation performed on a different computational platform, Windows 10, and an analysis of memory overhead.

-

An emphasis on the role the syncing mechanism plays in linking program snapshots to their counterpart models at run time, and the potential for utilizing heterogeneous data sources. A new discussion on persistence and scalability has been included in Sect. 7.

Moreover, the classification of threats to validity in Sect. 6 has been revised to include both internal and external validity threats. Additionally, the related work section has been expanded to include additional research on bidirectional model transformation.

2 Heterogeneous data sources

Views can be extracted from explicit models using MDE technology[10, 17, 23], and the concepts are often overloaded with a different meaning in the literature. In this section, we define the fundamental notions in our work and, then, illustrate the main problem with an example.

2.1 Terminology

The notion of view is contextualized at the data level, as opposed to the model level, by stating the meaning of the following terms that will be used in the remainder of the paper:

-

(Implicit) domain knowledge We regard domain knowledge embedded in programs as implicit domain models, usually encoded as types in a programming language.

-

Program data (snapshot) In-memory objects, or excerpts of them, of the classes that are relevant from a domain knowledge perspective. Those objects represent entities in the application domain and are orthogonal to design choices specific to a given solution.

-

(Explicit) view model Explicit domain model that describes the system from a particular viewpoint characterizes the types of views that can be observed from a program. A view model is represented as a class diagram in the context of this work, using EMF. This notion has no relation to an explicit base (contributing) model, as done when working model views of a system in the literature [10]. This base model is embedded in a program as implicit domain knowledge in our work. In Sect. 7, we discuss the differences between the notion of view model, which we are developing in this work, and the notion of model view, present in the literature, in more detail.

-

A Viewpoint is the description of a combination, partitioning, and restriction of concerns from which systems can be observed. In the context of this paper, a viewpoint is not represented explicitly.

-

A (data) view is the representation of a given program snapshot as an instance of a view model, enabling the application of MDE tools and techniques to program snapshots.

-

A syncing policy defines how program snapshots are represented as views using a forward synchronization. The reverse direction is called backward synchronization. The initial forward synchronization can be performed in bulk so that all relevant objects in the program snapshots are represented as instances of classes of the view model, or on demand, so that relevant objects are synced only when they are needed in the view. However, subsequent synchronizations can be performed incrementally, propagating only updates on a program snapshot (or on a view) to objects in the synced view (or program snapshot).

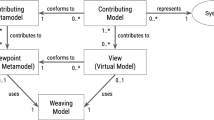

Figure 1 illustrates the process of transforming program data into a (data) view using the \(\textsc {EMF-Syncer\ }\) tool. The left side of the image shows a runtime system containing implicit domain knowledge, represented as Java classes. The right side of the image shows the view model capturing excerpts of the domain model explicitly, using EMF. \(\textsc {EMF-Syncer\ }\) provides syncing policies to map the Java program to the view model implicitly, or explicitly using user-defined domain-specific policies that help synchronize program data (represented as a graph of Java objects) and their counterpart views.

2.2 Example

A domain model may be implemented in a varying degree of formality in a software system. Software analysis and design approaches like domain-driven design [16] and the object-oriented analysis and design method [4] emphasize capturing the suitable domain model, which should be implemented in the code. On the other hand, current object-oriented frameworks, like Java EE and the Spring Framework, provide convenient abstractions like Java beans and data repositories to help design the domain model in the code, and architectural styles to separate the data layer from the business and presentation layers. However, domain classes will often be found mixed with cross-cutting concerns in the code, like logging and persistence annotations. The case study presented below is an example: a Java application with a domain model mapped to a relational database through JPA annotations.

An excerpt of a Java program containing domain knowledge is given in Listing 1. This listing declares the domain classes StoreReturns, Customer and CustomerAddress, decorating their fields with JPA annotationsFootnote 1 that link the classes to tables in a relational database, used in the experiments in Sect. 6. The corresponding class in the view model, where only the fields of interest have been captured, is represented in Fig. 2. In this example, it is worth noting that the class CustomerAddress in the listing is not represented in the view model to show how some elements of a program can be filtered away. In addition, the Customer class in the view model has an additional attribute points, which is not present in the original source code. Such an attribute illustrates that the view model can include other knowledge not present in the original program, building an extension of the original program.

The benefit of decoupling the view model from the source code is twofold; modeling practices are applied in a backward-compatible manner, since view models are synchronized with programs containing implicit domain knowledge, and any MDE extension is fully retractable without incurring any technical debt on the original application. Moreover, view models can interface with well established APIs, via programs, enabling the extraction of views from a wide range of heterogeneous data sources:

Persistence and scalability. There are stable SDKs for several database technologies, including in-memory, graph databases and document stores. Spring Data is one such example. Applications can store domain model instances using such APIs. In that situation, our synchronization model could be used to add a persistence layer for view model instances. The added advantage is that each API provides facilities like on-demand loading and pagination to deal with the persistence of very large models.

Data exchange and flexible models. There are also SDKs, like Jackson,Footnote 2 for mapping types to standard data formats like XML, JSON, and CSV. Using a synchronization policy between a domain model that uses such types of SDKs and a view model, models can be serialized in flexible formats.

Interoperability. Building on the point mentioned above, data transfer objects are usually mapped to standard formats that can be exchanged via web APIs. Such data transfer objects may or may not be regarded as part of the domain model, but they are classes in an object-oriented program that can be mapped to classes in view models using our syncing mechanism. Such an approach would help view models to be used as abstractions of distributed data.

3 Synchronization problem

As we consider view models explicitly capturing domain knowledge that is embedded, and possibly scattered, in object-oriented programs. A view model can be a faithful reflection of the domain model, a projection, or an extension. The synchronization problem that we are addressing can be decomposed into two symmetric problems that have traditionally been studied by the database community [18]:

-

View update problem once a view has been obtained from a program snapshot, any update to the view should be backward synced to the underlying program.

-

Materialized view maintenance problem once a view has been obtained from a program snapshot, any update on the program snapshot should be forward synced to the view.

Below, we expand on the use cases where a view model is a projection of part of a program and where the view model is an extension of the original program. These two use cases are illustrated with specific scenarios using the running example. For each scenario, we show the initial program snapshot and the corresponding initialized view, followed by an update and a backward propagation exhibiting how the program snapshot is updated.

The first scenario, shown in Fig. 3, is an example of projection, as the class CustomerAddress does not appear in the view model. In the forward synchronization, the Customer 1 loses its address in the view. However, once we perform an update by adding one more Customer in the view, the backward propagation retains the address of the Customer 1 in the original program. However, it does not initialize any address for Customer 2.

The second scenario, shown in Fig. 4, is an example of an extension of the domain knowledge in the program. The Customer 1 is initialized in the view without the attribute value points. After the update, the backward propagation forgets this data, which cannot be stored in the Java Customer object. However, after an update of the name on the program snapshot, the forward propagation preserves the points value and updates the name.

In the following subsections, we present an algebraic formalization of models, their instances, and updates, concluding with an informal description of the synchronization problem. This formalization lays the foundations for presenting the synchronization model in Sect. 4, independently of specific programming/modeling languages (and accompanying tool support).

3.1 Domain models and view models

In this work, we are interested in capturing updates at the granularity level of (structural) features within objects, and they are treated as independent units. Therefore, object-oriented domain models are represented as a set of (structural) feature values that are well typed, from which specific objects can be derived. We start by defining the notion of feature type and the notion of feature value.

Definition 1

(Feature type) Let t be a value type indexed by the set \( \{0\} \cup \mathbb {N}\) of natural numbers as defined by the following grammar \(t {:}{:}= {dt}| e | c | t \rightarrow t',\) where \({dt}\) denotes any primitive data type, such as String, Integer, and Boolean, available in Ecore (and their counterparts in Java)[33, pg 124]; e denotes any user-defined enumeration type; c denotes any class type, representing sets of object identifiers o; and \(t \rightarrow t'\) denotes sets of entries mapping a key of type t to a value of type \(t'\). A feature type \({f_{m}: c \rightarrow t}\) where m is a record \((l,u,{ordered},unique,cont,op)\) describing the usual properties of a feature: a lower bound l, an upper bound u, whether the feature is \({ordered}\) or unique, whether it is a containment, and the opposite reference − if any.

Note that a feature type uses value types t that are indexed by \( \{0\} \cup \mathbb {N}\), i.e., \( \{0\} \cup \mathbb {N} \rightarrow t\). However, notation is abused and we represent them by t, for the sake of simplicity, but their values \(v_i\) are represented with an explicit indexed \(i \in \mathbb {N}\).

A view model v(M) is represented by its set of feature types. These can be either attribute values, when either \(t = {dt}\) or \(t = e\), or reference values, when \(t = c\). Each feature type denotes a (possibly infinite) set of feature values representing the constituents of a model instance. A domain model M can be regarded as a partial specification of a view model, where some design decisions −, e.g., multiplicity constraints − are not captured nor documented, probably not intendedly. Therefore, the same representation can be used both for domain models, where the m component is optional, and for view models.

For example, considering the abbreviations StoreReturns as \(\texttt {SR}\), StoreReturnsId as \(\texttt {SRId}\), Customer as \(\texttt {C}\), Store as \(\texttt {S}\), DateDim as \(\texttt {D}\), the class StoreReturns of Fig. 2 is represented using the following feature types:

Inheritance can also be considered in this representation. A naïve, and verbose, strategy consists in defining feature types for the class in which it is declared and for each of its subclasses. More precisely, when a feature f is declared for a class with name c with a type t and multiplicity constraints m, a feature type \({f_{m}: c \rightarrow t}\) is in the model together with each feature type of the form \({f_{m}: c' \rightarrow t}\), where \(c'\) is the name of a subclass of the class with name c. However, such formal representation is only used at a conceptual level in our framework, and end users can keep using the corresponding notation in their technical space, Java programs, or EMF models in this work.

3.2 Program data and views

We use the notation v : t for denoting that a value v belongs to the domain of a type t, i.e., \(v \epsilon [[t]]\). For representing values, we use families of values \(v_i\), denoting sets \(\{ i \mapsto v \}\) for \(i \in \{0\} \cup \mathbb {N}\). The notation for families of values \(v_i\) is abused, and we also use it for representing a specific value v, indexed by a given natural number i. This confusion is disambiguated from the context where the expression is used. When the context mentions one feature value then \(v_i\) refers to an indexed value, and it refers to a family of values \(v_i\) otherwise. Therefore, we will use the predicate \(v_i: t\) to denote that all the values in the family \(v_i\) are typed with t.

Definition 2

(Feature value) Given a feature type \({f_{m}: c \rightarrow t}\), a feature value is defined as a mapping \({f: o \mapsto v_i}\), where o : c and \(v_i: t\).

Given a model \(\mathcal {M}\), a model instance m is represented as a set m of feature values \({f: o \mapsto v_i}\) that are typed with the feature types in \(\mathcal {M}\), and the instance of relation, at model instance level, is denoted by \(m:\mathcal {M}\). The notion of model instance may refer either to a domain model instance, or system state, or to a specific view. When the component m in a feature type \({f_{m}: c \rightarrow t}\) specifies constraints, the notion of feature value \({f: o \mapsto v_i}\) is enriched as follows:

-

cardinality constraints: the cardinality of a value family \(v_i\) is constrained as follows: \(m.l \le | v_i | \le m.u\);Footnote 3

-

order is captured by the index i of a value family \(v_i\);

-

unique: if a feature has the unique constraint, \(m.{unique}\), then, for any i, the set \(\{ i \mapsto v \}\) for a particular feature value defines an injective mapping;

-

containment integrity: if a feature \({f: o \mapsto v_i}\) in m is a reference and has the containment constraint, then for any object \(o'\) referred by f, there cannot exist another containment \(f'\) in m, which also contains the object \(o'\);

-

bidirectional associations: if a feature f in m is a reference belonging to object o points to object \(o'\) and has an opposite reference \(f'\), then the feature value \(f': o' \mapsto o_i\), for some i, also belongs to m.

3.3 View updates

In our approach, view models, which are synchronized with a domain model instance, can be materialized through a persistence API in the MDE technical space or used as a virtual view at run time. In both cases, views can be updated, and such updates are incrementally propagated to the underlying domain model instance on demand. There are two main cases of view updates: when root objects, w.r.t. the containment hierarchy, are added or deleted, and when feature values are updated. In what follows, we develop the notion of update that is used in our synchronization model and explain how an update is applied to a model instance, which can be a domain model instance or its associated view.

Definition 3

(Atomic updates) Given a model \(\mathcal {M}\) and a model instance m, such that \(m: \mathcal {M}\), an atomic update is represented as a pair \((\mu ,{f: o \mapsto v_i}) \in (\{ {upsert}, {delete}\} \times {f_{m}: c \rightarrow t})\) for a specific value v indexed by some i.

According to this definition, an atomic update can only affect one value in the family of values bound to the object o through f.

The set \(\Delta \) of updates for a particular model \(\mathcal {M}\) comes equipped with a binary operation \(\cdot : \Delta \times \Delta \rightarrow \Delta \) so that, for all given \(\delta _1, \delta _2, \delta _3 \in \Delta \):

-

\(\cdot \) is associative, i.e., \((\delta _1 \cdot \delta _2) \cdot \delta _3 = \delta _1 \cdot (\delta _2 \cdot \delta _3),\)

-

1 is the identity element, i.e., \(\delta _1 \cdot 1 = 1 \cdot \delta _1 = \delta _1\)

-

for any update \(\delta \in \Delta \), there is an inverse delta \(\delta ^{-1}\) that cancels the former, i.e., \(\delta \cdot \delta ^{-1}= 1\).

Hence, \((\Delta ,\cdot )\) represents the set of composite updates that can be defined as sequences of atomic updates \(\delta \in \Delta \), including empty and singleton sequences.

Definition 4

(Update application) Given a model \(\mathcal {M}\) and a model instance m, such that \(m: \mathcal {M}\), a delta \(\delta \in (\Delta ,\cdot )\) is applied to m, denoted by the expression \(\delta (m)\), using the operator \(\circ : (\Delta , \cdot ) \times \mathcal {M}\rightarrow \mathcal {M}\) as follows:

where  represents disjoint union of sets.

represents disjoint union of sets.

In the equational presentation above, terms − with variables − are used on the left-hand side of the equations to denote a search of the relevant feature values in a given model instance, through pattern matching. An upsert update is characterized with the equations (update), which updates the value \(v'\) at position i with the new value v in the feature f for object o, and (insert), which inserts a new value at an index j that is not used. A delete update is characterized with the equation (delete), where the expression \(\{ i \mapsto v \}\) characterizes the set of indexed values with the value v, in case there are duplicates. Hence, deletion has set semantics, and all entries containing the value v are deleted, irrespectively of their indexes. Equations (identity) and (composition) define the base case of the inductive definition of the application operator over the structure of updates, and that update application is compositional.

When considering updates, the following assumptions are taken into account: an atomic update can be applied if it refers to a valid feature type −, i.e., updates are type preserving − and to an existing object (type preservation); a feature value whose cardinality is the lower bound cannot be subject to a deletion (lower bound); a feature value \({f: o \mapsto v_i}\) with the unique constraint cannot be subject to an insert of a value that is already contained in \(v_i\); a feature value whose cardinality has met the upper bound cannot be subject to an insertion, although it may be updated (upper bound); when a reference value is updated, and this reference has an opposite, the update of the opposite reference is included in the update (bidirectional reference integrity); an update describing a move of an object from one container to another one is defined in terms of an atomic deletion of the object from the source container, followed by an upsert of the object to the target container (containment integrity); when an object is removed, all of its containments are removed recursively, and resulting dangling references are also removed − such deletions are part of the update (delete cascade semantics). These pre-conditions guarantee the correct behavior of the update application operator.

In this work, the consistency relation \(\mathcal {R}\) between domain models \(\mathcal {M}\) and their view models \(v(\mathcal {M})\) is defined by an isomorphism that maps feature types by name, taking into account the name of their class as well. A domain model instance m, \(m:\mathcal {M}\), and a view v, \(v:v(\mathcal {M})\) are consistent when \((m,v) \in \mathcal {R}\). An update \(\delta \) on m introduces an inconsistency that must be repaired by propagating \(\delta \) to the view v. Symmetrically, when an update \(\delta \) is applied to v, consistency needs to be restored by propagating it to the model instance m. With this problem in mind, we discuss a solution in the following section.

4 Synchronization of feature values

In this section, we present our solution to the view update problem for domain model instances in the form of a conceptual synchronization model that is independent from specific programming languages and modeling frameworks. The synchronization model employs a synchronization policy for specifying the consistency relation between domain models and view models, to be taken into account when their instances are synchronized.

First, we start by explaining how domain models can be related to view models at type level. A method for obtaining view models, when these are not available, from (DDD) domain models is discussed embedding MDE practices in agile software development environments. This conceptual mapping serves to explain how program snapshots are synchronized with view model instances at run time: a default synchronization policy is described, followed by a mechanism to allow users to define domain-specific synchronization policies.

4.1 Domain knowledge reification

MDE-agnostic domain models can be explicitly represented using an appropriate modeling language. This process is called reificationFootnote 4 and, in this work, is also used to concretize design decisions that may have been deferred or not taken inadvertently.

Regarding the base (source) code, reification can be regarded as the means to embed modeling practices in agile software development processes in projects where modeling is used at different levels of formality: when there is no clear domain model in the application and when there is a domain model.

In the first case, reification becomes a modeling process that may help either to capture the main concepts and structural constraints intermingled in the source code, refactor the application, abstract the existing domain model, or document the system’s design (architecture). Abstraction is supported by selecting classes and structural features that need to belong to a view model. Refactoring may involve relocating features and aggregating/splitting concepts, which will be discussed when defining synchronization policies in subsequent sections.

In the second case, reification brings advantages that stem from the level of expressiveness in the target modeling notation, refining a domain model by adding multiplicity (e.g., in structural features, uniqueness, ordering, optionality) and structural constraints (e.g., in references, bidirectional references, and containments).

That is, reification helps in refining design decisions that could not be captured earlier due to a lack of appropriate notation for capturing the domain model in the source code.

From the case study used in the benchmark in Sect. 6, we extract excerpts of domain classes that appear in listing 1. These domain classes mix domain knowledge with implementation details, like JPA annotations to map domain classes to tables in a relational schema.

The view model, shown in Fig. 5, that is related to this code has some improvements as a result of applying modeling practices; the view model is more abstract and ignores implementation details, focusing on modeling domain knowledge; attribute names are more succinct, losing the prefix; specific fields from the class CustomerAddress have been copied into the class Customer in the view model. In addition, although merely indicated with a note on the model, the currency used in the view model is in GBP, whereas the domain model uses USD. While some of these updates could be implementable in the source code, the presence of crosscutting concerns, like JPA annotations, tends to make such updates more error-prone.

In what follows, we present how program snapshots of the original program in Listing 1 can be mapped to instances of the view model automatically. Figure 5 shows a graphical representation of the domain model using class diagram notation on the left, the corresponding view model on the right, and the synchronization policy in the middle. We explain how our approach automatically infers a synchronization policy between the domain model and the view model, helping to decouple the domain model from implementation aspects. Then, we explain how to deal with representational gaps due to semantic changes using domain-specific synchronization policies.

4.2 Default synchronization policy

The (default) domain-independent policy maps model instances by relying on a one-to-one mapping between their corresponding feature types, based on the name of the class for which they are defined and on their name. This policy is useful when the original domain model is clearly defined. The view model could be extracted faithfully, either from the whole domain model or an excerpt.

The main synchronization policy is declared as a map

where \(\Omega \) is the domain of synced links between object identifiers \(\mathcal {O}\rightarrow \mathcal {O}\), which are used to map objects in the source technical space to their counterparts in the target technical space. P is an overloaded function, for updates and values, that takes a store of synced links and a value in a source technical space and obtains its counterpart in the technical target space, while tracking new synced links.

For mapping values, the definition of P proceeds by cases, depending on the type of the value:

The most interesting case is when the value is an object identifier. If it has been synchronized already and exists in the store \(v\in \omega \), the target object identifier is simply retrieved from the store with the expression \(\omega [v]\). When the object identifier is not synchronized, a fresh identifier of the target class R(c) is inserted in the store \(\omega \) of synced links with the expression \(\omega \cup (v\mapsto o')\) and returned.

The propagation P of atomic updates is defined in equation (syncing), which translates values using the expression \(P(\omega , v)\) and then updates the two components of the co-domain, the store of synced links and the view.

In equation (syncing), dot notation is used to access the components of \(P_\omega (v)\), using \(\_._\omega \) for projecting the store of synced links and \(\_._v\) for projecting the value. In addition, the expression \(o.{type}\) is used to obtain the class name of the object type. Equations (identity) and (composition) define the extension of P to a function on sequences of atomic updates. For now, the function R is defined as the identity function \(R(c)=c\) for class names c and \(R(c,f)=(R(c),f)\) for names f of features belonging to class c. The expression \(e_f(o)\) corresponds to the value of feature f in object identified by o, that is o.f. The funtion R and \(e_f\) are placeholders that serve as extension points that will cater for domain-specific customizations in the following subsection.

The operations P for updates and values have unique inverse maps \(P^{-1}\). For updates, \(P^{-1}\) is declared as

Its definition proceeds analogously, by replacing P with \(P^{-1}\), \(\omega \) with \(\omega ^{-1}\), R with \(R^{-1}\), and \(e_f\) with \(e_f^{-1}\).

The domain-independent synchronization policy can be inferred automatically between the domain model and the view model, although the specification P does not mandate any specific traversal algorithm.

4.3 User-definable domain-specific policies

User-definable domain-specific policies capture use cases where the MDE domain model forms a view model of the original domain model, which can, in addition, consider structural changes that do not incur information loss, as explained in Sect. 4.1. For example, when feature types are renamed or when hierarchies of types can be flattened to simplify the corresponding MDE task, as discussed in Sect. 4.1.

Below we present how the generic synchronization policy can be tailored to a particular domain by considering feature renamings, feature value transformations, and feature relocations.

Renamings Class names and feature names may differ in different domain models yet denote the same concept. In addition, a modeler may prefer to use a more precise name in a view model. From a pragmatic point of view, this allows using different naming conventions in view models to avoid name clashes, although this is not necessary, as explained in Sect. 4.1. Renaming of feature types and classes are defined with the R function using the identities \(R(c)=c\) for class names c and \(R(c,f)=(R(c),f)\) for names f of features belonging to class c, where the expression R(c, f).f obtains the target feature name.

In the example, renamings are used to remove the prefix of features in the domain model for clarity. These renamings are graphically depicted as straight lines between features of the domain model and the view model in Fig. 5.

Feature value transformations When the domain model and its view model differ semantically, we may need to transform feature values from the source program snapshot. For example, when the view model is a more abstract representation of the program snapshot, when it is a more refined model including new derived feature values, or when the context where the view model is used differs from its original context.

These transformations are specified redefining the expression evaluation function \(e_f: c \rightarrow t\) where t may or may not be the type of the feature o.f. This expression will be used instead of the feature value o.f in the synchronization policy. Our synchronization model does not assume any particular expression language, which will be determined by the programming language chosen when the specification is implemented. Depending on whether the feature exists on the source domain model, an inverse feature value transformation \(e_f^{-1}(o)\) may need to be provided and used within \(P^{-1}\).

In the example, we are assuming that while

StoreReturns tracks refunded cash in US dollars, the view model should track this figure in GBP. These feature value transformations can be encoded with arithmetic expressions applying the corresponding exchange rate. The mapping between the features stRefundedCash and refundedCash expresses this feature value transformation as an OCL expression.

Relocations Feature values can be moved from the original counterpart of a source object to a different object, as long as there is a unique path between them. This mechanism facilitates considering structural refactorings related to refinement (or abstraction) when reifying the domain model, e.g., by splitting the feature of a class into two subclasses (or vice versa). The user needs to provide a source path formula \(\psi : c \rightarrow c\) that uniquely obtains a target object \(\psi (o)\), which will be the owner of the feature value.

Relocations combine renamings with feature value transformations. On the one hand, the renaming indicates where the feature has been moved by indicating both the target class and the target feature. On the other hand, the feature f value can be obtained from the related source object \(\psi (o).f\). In this case, the inverse mapping \(P^{-1}\) needs to be provided explicitly by providing the target formula path for backward propagation. In the example, the view model collapses both the Customer and CustomerAddress classes into one single class Customer and the address feature values are relocated from the class CustomerAddress to the class Customer. Relocation expressions are defined in OCL for each mapping. For example, for fetching the streetNumber from customer, the associated customerAddress needs to be found with the OCL expression CustomerAddress.allInstances()-> select(c| c.cCustomerSk=cCustomerSk)-> first().

It is worth noting that this mechanism allows for feature value transformations for all instances of a source class and feature value transformations for specific class instances. Such filters can be specified as part of the feature value mapping using a guarded statement that applies the transformation only when the guard is satisfied.

5 Synchronization framework realization

The abstract feature synchronization specification from Sect. 4 is programming language independent. However, for the feature synchronization model to be applied, it needs to be realized using specific programming languages and MDE frameworks. Below, we enumerate the implementation requirements that need to be satisfied by a programming language and a (meta-)modelling framework to implement the feature synchronization specification. Then, we introduce the EMF-Syncer, which implements this specification for JVM languages using EMF as the (meta-)modeling framework.

5.1 Implementation requirements

The feature synchronization model allows the extraction of view model instances from program snapshots at run time. The object-oriented paradigm is used to unify modeling concepts in programming languages and MDE modeling frameworks so that classes, with their fields, in a program can be mapped to classes, with their features, in a view model, while respecting class inheritance semantics.

Hence, programs are expected to be represented using an object-oriented programming language equipped with reflection facilities. Reflection usually builds on two techniques: introspection, which allows a program to analyze its class constituents, and intercession, which enables the program to modify itself (its behavior or its state). Reflection is required both to extract relevant excerpts of program snapshots when obtaining the MDE counterpart, and to propagate updates to the original program snapshot that may have resulted from updates to the model instance. Some examples of programming languages that fall under this category are Java (and, by transition, any JVM programming language), C#, Python, Ruby, and C++.

For an object-oriented (meta-)modeling framework to qualify for implementing the synchronization model, it is required to provide a model access API with reflection facilities. In addition, the programming language of choice should have an SDK to access this API.

5.2 EMF-Syncer

The synchronization specification has been implemented both for domain models embedded in Java programs and for EMF view models in \(\textsc {EMF-Syncer\ }\), available at https://emf-syncer.github.io. A syncing session in \(\textsc {EMF-Syncer\ }\) has two main stages: an initial stage where feature values are synchronized on demand, and a (backward) incremental propagation stage where updates are propagated from the view to the underlying domain model instance.

In the initial stage, the domain model instance is described as a big composite update, and the result of this initial propagation is that the source non-EMF model instance and the EMF view are synchronized in the store \(\omega \) of synced links. Two synchronization strategies have been implemented, providing support for push-based initial synchronization, in which all of the feature values are mapped to target feature values, and for pull-based initial synchronization, in which feature values are only initialized when they are accessed in the target EMF application.

The following listing instantiates the \(\textsc {EMF-Syncer\ }\) two arguments, a list of qualified package names containing the classes to be mapped, and the Ecore model containing the corresponding EMF classifiers. The second line shows how to initialize the EMF model instance from a list of Java objects.

In the second stage, updates to either non-EMF or EMF views are incrementally propagated in a push-based fashion. Updates in the EMF model are observed using an aspect (in AspectJ), which is provided by the \(\textsc {EMF-Syncer\ }\), and that intercepts calls to feature values. The user needs to provide the name of the packages containing the classes to be monitored by the aspect. The push-based strategy consists in applying the synchronization policy to the view v, which is initially empty, with \((P(\omega , \delta _s).v) (v),\) where \(\omega \) is empty initially and reuses the initialized store of synced links for subsequent incremental propagations. For propagating updates \(\delta _t\) back to Java domain model instances m, the inverse synchronization policies are applied with \((P^{-1}(\omega ^{-1}, \delta _t).v) (m)\). Incremental backward propagation of updates is achieved as shown in line 4 of the listing 2.

For the initial synchronization of model instances, the tool takes advantage of the fact that there are no updates of existing feature values or deletions of objects, and the representation of model instances as deltas is circumvented, as the type of the delta, \(\mu \), is known. The computational cost of the push-based synchronization strategy is linear in the size of feature values, which can be undesirable for the initialization phase for very large model instances. Subsequent incremental propagation of updates, once the model instance has been synchronized with its view, is usually instant, as shown in the experimental results, because the size of updated feature values tends to be a fraction of the original model instance size. That is, updates can be propagated in real-time − in less than 1 ms. for updates affecting about \(10^3\) objects and in ms. for updates affecting about \(10^5\) objects − where objects may contain several feature values.

The implementation of this synchronization policy uses the fact that feature values can be indexed by the object identifier and by the feature name so that the search for the feature value to be synchronized is obtained in constant time. The pull-based synchronization strategy, only available for the initial stage at the moment, is also linear but only in the size of the feature values that are accessed in the actual computation in the EMF application, thus avoiding unnecessary propagations for executing the task at hand. We refer the reader to Sect. 6, where we justify the pragmatism of the pull-based strategy when dealing with benchmark model queries and updates. An additional example showing how \(\textsc {EMF-Syncer\ }\) can be used in practice for implementing round-trip scenarios in java web services is available at [8].

6 Empirical evaluation

In this section, we investigate whether the proposed synchronization model is a pragmatic approach to adopting MDE tooling by checking to what extent \(\textsc {EMF-Syncer\ }\) allows us to apply EMF-based tools to a representative Java program at run time. Two main research questions are considered:

RQ1: What is the overhead imposed by the synchronization algorithm, both during the initial phase and during the incremental propagation phase, to perform queries? For the analysis of this question, three dimensions are considered: the time taken for syncing feature values, the time taken to perform tasks (queries and updates) with them, and the memory used.

Regarding the first dimension, an implementation of a basic algorithm that maps Java objects to EMF objects is considered. This algorithm is domain-specific − that is, specific to a domain model, − can only be executed in batch mode and does not offer synchronization. Therefore, its raw performance sets a baseline for comparing the overhead incurred by additional functionality.

Regarding the second dimension, we are using the TPC Benchmark DS [36]. The purpose of the TPC benchmark is to provide relevant, objective performance data to industry users. The current version of the TPC-DS benchmark considers emerging technologies, such as big data systems. This benchmark allows us to generalize our findings to common functionality found in decision support systems used in industry and that may involve very large data sets.

Five tasks have been analyzed: a selection of the store returns corresponding to a given customer (Q1) together with an update that adds one store return to the customer (B1); an invariant that checks that store returns have valid identifiers (Q2); and a typical big data query, extracted from the benchmark [36, B.1], which finds customers who have returned items more than 20

These five tasks have been implemented in SQL, using the query template facilitated in the benchmark specification for Q3, in Java and EMF. The SQL implementation executes SQL native queries via the Spring Data JDBC templateFootnote 5 considering that they only return lists of strings, minimizing fetching time. The Java and the EMF implementations are exactly the same, but for the implementation of the domain classes, which are declared in a different namespace. In addition, the Java domain classes are linked to a MariaDB relational databaseFootnote 6 via JPA and the EMF domain classes only contain the feature values, thus forming a view model, that are relevant for the tasks to be computed. The correctness of the Java/EMF queries is cross-checked with the results obtained with the corresponding SQL queries for each database every time a query is executed.

RQ2: To what extent does the synchronization algorithm scale with respect to the size of the given data set in the Java program? For the analysis of this question, databases of different sizes have been generated using the Java port of the TPC-DS benchmark,Footnote 7 which allows for no sexism in the generated data setFootnote 8 and this has been the preferred option. Table 1 shows a summary of model instance sizes involved in the experiments. A size factor on a logarithmic scale (with base 2) has been used to increase the size of the factor to be able to experiment with several databases in a standard computer. The baseline object mapper traverses the graph of Java objects in memory and has been used to obtain the cardinality of the model instances involved, noting that the actual number of objects in memory is twice the size mentioned in the table, given to the Java/EMF duality that \(\textsc {EMF-Syncer\ }\) maintains. The other columns show the cardinality of the model instance that has been synchronized, after the corresponding query was executed (Q1I, Q2I and Q3I), and the cardinality of the set of feature values that are propagated incrementally after the update was performed (Q1B and Q3B).

6.1 Experiment setup

The experiments were performed on a computer with CPU i7-10810U and 32 GB RAM, using OpenJDK 11.0.11. The default G1 Garbage Collector was configured to start concurrency GC cycles at 70 These parameters were coupled with a maximum heap size limit of 24GB and an aggressive heap usage strategy, minimizing interferences with processes external to the experiment. Pauses due to garbage collection should be avoided for small databases where response time is of a few ms, or even \(\mu s\).

Figures 6, 7 shows the time taken (in ms) by the three queries in SQL, Java, and EMF. The Java application uses lazy (JPA) associations between domain classes, which are initialized when loading data from the database. Java objects are assumed to be in memory at run time because the problem of fetching model instances from disk or an external store is out of the scope of this work. Therefore, loading time is not relevant to the research questions and has been excluded from the analysis. In addition, the Java queries have been executed twice, minimizing the impact of lazy object loading (Java 1st) and only the time taken by the queries in the second iteration (Java 2nd), where objects are already loaded in memory, has been considered. In EMF, each query has been performed twice to measure the impact of deferred initialization in the synchronizer: the first time (EMF 1st) corresponds to query time mixed with feature value initialization, while the second time (EMF 2nd) is mostly query time. In the second iteration, there are still feature values whose value corresponds to null values (in the case of one-bounded references) or default values, and \(\textsc {EMF-Syncer\ }\) does not distinguish them from EMF feature values that have been initialized with default values already. Performing a check to avoid such cases was more expensive than performing the translation.

Figure 8 shows the time taken (in ms) by the baseline object mapper and by the synchronizer for each query. The time taken by the baseline mapper corresponds to the translation of the whole program snapshot into an EMF view. For each query, the time taken by the synchronizer has been monitored in two stages: initial synchronization together with deferred initialization of features of values when executing the corresponding query (Initialization), excluding the time taken by proper query execution and during backward propagation (Backward). In Q2, we have not considered updates and, consequently, there is no Backward component.

The times reported are average times (in ms) extracted from 100 iterations for experiments for size factors up to \(2^{2}\), and from 10 iterations for the rest of the cases. “The first 10% of results have been considered a warm-up exercise for the JVM and have been excluded from the analysis. The raw data, together with the tools to replicate the experiment, are available at url https://github.com/emf-syncer/emf-syncer.tpcds”.

6.2 Analysis and interpretation of results for RQ1

The initial synchronization algorithm is linear in the size of the list of objects given. The propagation algorithm (both from Java to EMF and from EMF to Java) is linear in the update’s size. From the results obtained, plotted in Fig. 6, Java queries Q1 and Q3, including queries over synchronized EMF views, are more efficient than the SQL queries run on MariaDB in general, once the excerpt of the database relevant for queries is available in memory as object instances. The reason for this is that contextual information is already available in memory for evaluating the query. In contrast, SQL queries have to traverse very large tables in the database to find that initial context. Q2 (an integrity constraint) shows that grouping in SQL is more efficient than Java’s solution. Figure 9 shows the run-time overhead (in ms) that \(\textsc {EMF-Syncer\ }\) introduces for computing queries over EMF objects when comparing the same queries over Java objects. In particular, we compare the run-time of evaluating the three queries when the synchronization is being initialized (emf_1st), and when it is already initialized (emf_2nd), concerning the query evaluation when all objects are in memory (java_2nd). The overhead obtained is linear with the size of the program snapshot. For large program snapshots (size factor \(2^0\) with view sizes of 575K objects in Q2, and a view of 375K objects in Q3), the overhead of the initial synchronization is about 1 s. For the largest size factor (with a view of 9.2 M objects in Q2, and a view of 4.8 M in Q3), the overhead is of about 16 s in Q2, and about 19 s in Q3.

Synchronization time is split into the time used in the initial phase and in deferred synchronization of feature values once they are requested. \(\textsc {EMF-Syncer\ }\) thus balances the synchronization workload and tailors it to the computation task to be performed. In practice, the client application will experience a split of this time in the initial phase and the rest when feature values are requested for the first time in a query. Deferred synchronization resolution occurs once and for all, that is, a feature value is synchronized the first time it is accessed and stays synchronized for subsequent accesses. EMF feature values corresponding to Java feature values that are not initialized are a special case as \(\textsc {EMF-Syncer\ }\) cannot distinguish them from initialized feature values.

Overhead in the second run of EMF queries (emf_2nd) should be understood as the performance of queries run on synchronized EMF views. This overhead is almost an order of magnitude lower than in the initial synchronization. It is due to the instrumentation in EMF code performed by \(\textsc {EMF-Syncer\ }\), which, for example, needs to check whether an EMF feature value has been initialized in the presence (or lack) of default values in a Java feature value.

Figure 8 compares synchronization run time with respect to the (Baseline) mapping of objects. The baseline mapping is more efficient when comparing it with the initial synchronization of large models. However, subsequent incremental synchronizations (Backward) offer much better performance (more than one order of magnitude) than re-executing the baseline mapping from scratch, especially for large model instances.

Figure 7 shows the memory consumption by the different tasks. Each experiment runs several iterations of the SQL query for a given size factor, then fetches the objects via JPA, and runs the queries over the program snapshot and the EMF view. Memory measurements (in MBs) refer to the amount of memory used by the experiment at the end of each of these tasks, without trying to compute the amount of memory specific to each particular task. We can observe that the EMF-Syncer introduces no memory overhead from the measurements. This is because on-demand synchronization only propagates the feature values required to execute the query.

Therefore, when adopting EMF tooling at run time in an EMF-agnostic JVM application, there is an initial synchronization overhead, considering both the initial phase and deferred synchronization of feature values; this overhead is balanced between initial synchronization and deferred synchronization, and is under a second for large views (0.5 M objects). \(\textsc {EMF-Syncer\ }\) tailors the synchronization effort depending on the computation task being performed, depending on the access to feature values. Notably, the \(\textsc {EMF-Syncer\ }\) does not introduce any noticeable memory overhead.

6.3 Analysis and interpretation of results for RQ2

We consider two cases, when the resulting model instance is small and when the initial data set is very large.

Table 1 shows that \(\textsc {EMF-Syncer\ }\) only syncs those feature values that are accessed depending on the task being performed. For example, while the batch object mapper traverses 9.5 M objects for a size factor of \(2^4\), \(\textsc {EMF-Syncer\ }\) only needed to sync 4.8 M objects for Q3.

Figure 8 shows that \(\textsc {EMF-Syncer\ }\) can synchronize small excerpts of a model instance (in Q1) in a few ms independently of the total size of the model instance. Moreover, initial synchronization time together with deferred synchronization time exhibits a reasonably linear growth w.r.t. the size of the model instance, which scales relatively well-compared with the baseline mapper’s performance.

Incremental propagation of updates is efficient, even for large updates. For example, updating 124K objects takes about 120 ms, in Q3_Backward, for a size factor of \(2^4\). Forward incremental propagation (from Java to EMF, once model instances are synchronized) is based on the same algorithm and the same data structures, and it, therefore, exhibits a similar performance.

For large model instances, e.g., \(2^3\), in Q3, it can be observed that initialization time (the sum of initial synchronization time and deferred synchronization time) together with query time Q3 (EMF 1st), excluding loading time, is better than the time used to compute the same query with SQL. This shows that the scalability of \(\textsc {EMF-Syncer\ }\) performance can be better than the scalability of query evaluation in industry database management systems in some scenarios. The reason is the availability of contextual information in memory that is readily exploited when evaluating navigation expressions, which need to be computed when using SQL. Domain-specific query optimization, using indexing, was not performed as we used the relational schema provided in the TPC-DS benchmark resources.

From Fig. 6, it can be observed that the execution of queries scales similarly in the Java program and in the view model, via the EMF-Syncer . Hence, while the EMF-Syncer introduces some overhead in the initial evaluation of the query, this overhead does not affect the scalability of query evaluation. This is because the run-time overhead introduced by the EMF-Syncer , as shown in Figure reffigOverhead, is sufficiently small when compared with the cost of executing the query.

6.4 Threats to validity

In this subsection, we considered threats to internal validity, in which the structure of the benchmark may have influenced the results; threats to external validity, which may affect the generalization of results of an experiment to other populations, settings, or conditions [39].

Regarding internal validity, we need to consider that the database was generated once for each size factor and used for all of the iterations. To counter the bias that a particular database may introduce in the experiment, we compared the results of the experimentation in this article, run on a Windows machine, with the results of the experimentation run on a Mac OS in [7] with a different database and no inconsistencies were found. In addition, we also have to consider that the size of a database is generated according to a size factor, but the actual data is randomly generated and the cardinality of the results obtained by the queries may not be correlated for each database. For example, the time Q3B for the size factor \(2^3\) corresponds to the update of 54K objects, and the time Q3B for the size factor \(2^2\) is for 46K objects. The actual size increase does not correspond to the logarithmic scale used for the overall database size. Object sizes and an explanation of each task help scrutinize the obtained results objectively.

Regarding external validity, we need to discuss any threats that may affect the generality of the conclusions inferred within the scope of the work. Our synchronization model captures common modelling constructs available in any kind of object-oriented model and, in the case of the \(\textsc {EMF-Syncer\ }\), in any kind of EMF model. So the solution is generic and not domain-specific. Moreover, it works at the level of feature values. This level of granularity is common in model-to-model transformation languages and facilitates comparison with other benchmarks that use EMF for processing very large models.Footnote 9 One must take into consideration, however, that performance results may vary depending on the density of feature values in objects and in how many of them are used. In Table 1, the size of a model instance has been presented in terms of the cardinality of its constituents, without decomposing the basic unit of data in the object-oriented paradigm, the object. In addition, the experimentation has been performed with the TPC-DS benchmark with representative data analytic queries, yet other use cases and benchmarks may require additional experimentation.

7 Related work

The \(\textsc {EMF-Syncer\ }\) facilitates the automatic extraction of editable (view) model instances from program snapshots. When there are edits in the (view) model instance, the propagation of changes to the original program snapshot is incremental, significantly reducing the computational cost of maintaining both in consistent state. Furthermore, the \(\textsc {EMF-Syncer\ }\) can leverage third-party libraries, such as Spring Data, to extract model instances from various data sources. Hence, in this section, we focus the discussion of research in three main areas: model synchronization at run time, approaches for supporting model views, and approaches for storing models in heterogeneous data sources.

7.1 Model synchronization at run time

The literature surveys[3, 34] provide a historical perspective on the development of the research topic models@run.time. Model transformation and reflection are important techniques that have been used for system adaptation, in particular using bidirectional transformation [12] and model synchronization [38].

The adoption of MDE tooling at run time was investigated in [28, 29, 32]. Given a system meta-model, providing the types of the runtime data, and an access model, specifying how to manipulate the data through the API of the system, the tool Sm@rt automatically generates a synchronizer. This synchronizer maintains the consistency of the model (view in our work) and the system state (model instances in our work) in the presence of concurrent updates, either to the model or to the system state. Sm@rt has been used to implement the execution model of QVT for synchronizing models@runtime in [30, 31].

Sm@rt and EMF-Syncer facilitate the adoption of MDE tooling in MDE-agnostic systems at run time. However, in Sm@rt, concurrent updates are allowed, while synchronization is controlled by the client program in EMF-Syncer . More importantly, Sm@rt assumes the existence of an access model that adapts the existing system, to inspect its state at run time, while \(\textsc {EMF-Syncer\ }\) can inspect the state of Java programs out of the box. Song et al. studied the feasibility of adopting MDE tooling with several case studies, and performance was assessed with models containing less than 1000 elements [31]. We have taken this research one step further to scrutinize, from a quantitative point of view, the extent to which it is pragmatic to use MDE tooling in representative industrial scenarios, which may involve large data sets.

Incremental model transformation with triple graph grammars (TGGs) [19, 21, 22] have been applied to adapt and analyze models@runtime [20]. TGGs in [20] rely on a runtime model framework[37] which enables the representation of the state of a managed system, a component-based system instrumented with sensors and effectors via mKernel [9]. mKernel is an autonomic computing infrastructure for component-oriented enterprise applications that follows the Enterprise Java Beans (EJB) standard, providing appropriate facilities for information discovery, via sensors, and manipulation, via effectors, of EJB components. A source model in [20] is causally connected to a running system, which is realized by an event-driven adapter that works incrementally. Therefore, events dispatched from mKernel sensors notifying about changes in the system are processed to update the source model. Likewise, if the source model is changed by the transformation engine, the model emits corresponding events that are mapped to invocations of effectors that actually adapt the system. The \(\textsc {EMF-Syncer\ }\) performs monitoring using Java reflection and is, therefore, agnostic of specific software architectures, potentially leveraging MDE tools for a broader range of software systems. The EMF-Syncer , in this context, could be regarded as an alternative to design a runtime model framework, providing support for model transformation languages and model view technology, which is the topic of the following subsection.

7.2 Model views

A number of works, see the survey by Brunelière et al. [10], use views over models, or model views, to facilitate multi-view modeling. Such approaches rely on explicit representations of (base or contributing) models. Elements from those models may form part of a view, where they may be enriched with additional data, describing a system from a particular viewpoint.

In EMFViews [11], scalable model views are designed over heterogeneous data sources by building on the persistence layer of modeling technologies. It uses the EMF API to interact with SQL databases via CDO [15] and with NoSQL databases via NeoEMF[13], and the Epsilon Model Connectivity (EMF) layer [26] to load models from various data sources. In this approach, views are read-only and consist of proxies to elements of constituent models. Viewpoints are declared using a weaving model without having to modify the original models. The definition of views uses the weaving model to create links between the different models at run time.

Queries are performed using the EMF-level query APIs provided by CDO, NeoEMF, and Epsilon. Scalability is achieved using lazy loading and by exploiting the facilities that each of the underlying modeling technologies offer. EMFViews can work with runtime models that are persisted, e.g., as logs. At the moment, views are not editable, and queries are not executed incrementally. Once a model is partially loaded to evaluate a query, a change in the data requires to re-evaluate the whole query. Our work focuses on view models instead, reifying (relevant parts of) domain knowledge implicitly represented in the source code of a program. Such view models explicitly represent the domain model of a program, and define views, since they abstract away parts that are not relevant for a chosen viewpoint. In addition, views models can also aggregate relevant knowledge from different programs. This difference is important as the traditional concept of model view cannot be directly applied in the context of our work, since the base (contributing) model is missing. The new notion of view model could enable the application of model views, by exposing excerpts (views) of program snapshots in the form of a model—hence the name view model—which can then be used as instances of a base (contributing) model for a model view. Furthermore, the living objects of a system can be expressed as constituents of a model instance at run time, without having to rely on persistence technology, which quite often requires disk access. View models in EMF-Syncer are editable in both directions, view update and view maintenance. However, changes cannot occur concurrently, and their occurrence needs to be controlled by the client code.

Views, that is, view model instances consist proxies until they need to be materialized, creating copies of objects. The empirical results show that the performance overhead is minimal, taking into account that views are editable in both directions incrementally.

On the other hand, relying on the observation that an EMF model can be realized as a Java program, our approach can also be applied to define model views.

7.3 Model persistence

In this work, we have presented that the EMF-Syncer is a mechanism to build model adapters, as in the adapter design pattern, so that they can be managed by metamodel-agnostic programs. In the use cases that we have mentioned in Sect. 2, domain models can be stored and loaded from large databases, using different persistence technology depending on the application domain.

In MDE, several approaches solve this problem by using a model API as the data persistence façade, aiming at providing interoperability and scalability. This natural option permits hiding persistence details from EMF client applications when models are explicitly represented, e.g., in EMF’s Ecore language, and program states correspond to model instances. In such approaches, interoperability with MDE-agnostic software is delegated to the datastore technology. Considering new database technology, or a new representation format, requires the extension of the corresponding SDK building the bridge between the persistency layer and the modeling API.

NeoEMF [13] encapsulates NoSQL data stores under the EMF model API to store very large models. In this approach, the corresponding metamodel determines the data schema, and the database is populated via the EMF model API. Thereby, interoperability with other applications is determined by the MDE tool, and reusing existing software projects may be more complex. In our approach, the data schema is determined by the MDE-agnostic program.

The Epsilon platform [35] uses the notion of the driver to implement such representational changes, enabling interaction with relational databases, XML documents, spreadsheets, and Simulink models, among others. Drivers in the Epsilon platform raise the level of abstraction above persistence SDKs so that client applications need not be aware of implementation aspects. The metamodel is implicitly inferred from the data schema in the corresponding data store, and models can be loaded from various data formats with minimal effort for standard use cases, such as treating table tuples as objects that can be queried using OCL via the JDBC connector. However, use cases that deviate from the supported functionality may require modifying the driver’s code. For example, if foreign keys are to be considered in OCL queries .Footnote 10 While the EMF-Syncer exposes persistence implementation details, already existing in software projects, it provides more flexibility for reusing available persistence APIs, like Spring Data, without imposing functional constraints via an object broker. The application of MDE in software projects incurs technical debt that affects their maintainability [25]. Our approach enables the application of MDE technology non-intrusive without requiring an up-front commitment. Once the MDE technology and its benefits are fully understood, agile practitioners can decide whether to adopt MDE technology up-front.

8 Conclusions and future work

A roundtrip synchronization model has been presented for extending MDE-agnostic software applications with MDE-aware functionality, while preserving their original semantics, at run time. Data synchronization occurs at the level of feature values by using views that need not be materialized. In-memory program data (snapshots) can be explicitly represented as view model instances, facilitating the application of MDE technology to program data that may be fetched from heterogeneous data sources. For example, the experimental setup for the benchmark illustrates the use case where data stored in a relational database is made available as EMF model instances, reusing JPA providers widely employed in industry, e.g., Hibernate, without having to rely on EMF persistence solutions, such as CDO, which requires knowing both EMF and JPA[27].

Implementation requirements have been enumerated to cater to different implementations of the abstract synchronization model. As a proof of concept, this synchronization model has been implemented in \(\textsc {EMF-Syncer\ }\), providing deferred initialization of feature values and incremental propagation of updates, whose performance and scalability have been analyzed from a quantitative point of view. \(\textsc {EMF-Syncer\ }\) can be used as an infrastructure tool to observe implicit domain knowledge in programs as explicit model views, fostering experimentation with MDE tooling in a non-intrusive manner. The tool, available as an IDE-independent library at https://emf-syncer.github.io, is easily reusable as a Maven dependency.

Our experiments with the \(\textsc {EMF-Syncer\ }\) show that despite maintaining the consistency between copies of objects (program snapshot and view), there is no apparent memory overhead. The initial synchronization with millions of objects shows overhead in the region of seconds but, for large cardinalities, 500K objects, the synchronization is performed in under one second, and the propagation of large updates over models instances consisting of millions of objects can be performed in ms. In our experiments, the cardinality of elements considered has been increased by one order of magnitude concerning the cardinality of the specimens considered in previous experiments, e.g., [40], thus providing a better insight into the capabilities of state-of-the-art MDE technology for scenarios involving large data sets.

At present, the application of the EMF-Syncer relies on existing view models. In future work, we would like to study approaches to synthesize such view models from existing programs (semi-)automatically. Another area of work is the application of EMF-Syncer to support MDE technology, such as incremental model transformations in YAMTL [6], or retyping mechanisms for reusing model management operations via structural subtyping [5].

Notes

The meaning of the used JPA annotations is not relevant for the presentation of the work and they have been included to show that there is non-MDE technology that is made available to MDE tools through the EMF-Syncer .

In EMF, the value \(-1\) is used to denote the UML value many, \(*\), but we simply consider that \(*\) is the infinite natural number.

In the original work in [7], we used the Spring Data JPA interface with native SQL queries. The SpringData JDBC template performs SQL queries independently of the object-relational mapping.

Version 10.2.11-MariaDB with INNODB 5.7.20.

In the official TPC-DS benchmark, only males are chosen as managers.

For example, the \(\textsc {EMF-Syncer\ }\) was used at the Transformation Tool Contest 2020 [8], where it was compared against state-of-the-art model-to-model transformation tools.

As of 16-10-2021.

References

Amrani, M., Blouin, D., Heinrich, R., Rensink, A., Vangheluwe, H., Wortmann, A.: Multi-paradigm modelling for cyber-physical systems: a descriptive framework. Softw. Syst. Model. 20(3), 611–639 (2021). https://doi.org/10.1007/s10270-021-00876-z

Baresi, L., Ghezzi, C.: The disappearing boundary between development-time and run-time. In: G. Roman, K.J. Sullivan (eds.) Proceedings of the Workshop on Future of Software Engineering Research, FoSER 2010, at the 18th ACM SIGSOFT International Symposium on Foundations of Software Engineering, 2010, Santa Fe, NM, USA, November 7–11, 2010, pp. 17–22. ACM (2010). https://doi.org/10.1145/1882362.1882367

Bencomo, N., Götz, S., Song, H.: Models@run.time: A guided tour of the state of the art and research challenges. Softw. Syst. Model. 18(5), 3049–3082 (2019). https://doi.org/10.1007/s10270-018-00712-x

Booch, G., Maksimchuk, R.A., Engle, M.W., Young, B.J., Conallen, J.: Object-oriented analysis and design with applications, 3rd edn. Addison-Wesley, Addison Wesley object technology series (2007)

Boronat, A.: Structural model subtyping with OCL constraints. In: Proceedings of the 10th ACM SIGPLAN International Conference on Software Language Engineering, SLE 2017, Vancouver, BC, Canada, October 23-24, 2017, pp. 194–205. ACM (2017)