Abstract

IT Landscape models represent the real-world IT infrastructure of a company. They include hardware assets such as physical servers and storage media, as well as virtual components like clusters, virtual machines and applications. These models are a critical source of information in numerous tasks, including planning, error detection and impact analysis. The responsible stakeholders often struggle to keep such a large and densely connected model up-to-date due to its inherent size and complexity and the lack of proper tool support. Even though modeling techniques are very suitable for this domain, existing tools do not offer the required features, scalability or flexibility. In order to solve these challenges and meet the requirements that arise from this application domain, we combine domain-driven modeling concepts with scalable graph-based repository technology and a custom language for model-level queries. We analyze in detail how we synthesized these requirements from the application domain and how they relate to the features of our repository. We discuss the architecture of our solution, which comprises the entire data management stack, including transactions, queries, versioned persistence and metamodel evolution. Finally, we evaluate our approach in a case study where our open-source repository implementation is employed in a production environment in an industrial context, as well as in a comparative benchmark with an existing state-of-the-art solution.



1 Introduction

Model-driven engineering (MDE) is a discipline that aims at improving the processes, workflows and products of existing engineering areas by applying models as an abstraction layer. The primary field of application for MDE has traditionally been software engineering [64]. However, the key innovations of MDE are not domain specific. The general concept of using a metamodel to define a structure and then instantiating it to create actual objects applies to a wide range of problems. When comparing different use cases, it becomes evident that modeling concepts tend to be employed in areas that exhibit high complexity and heterogeneity in their domain structures, such as cloud orchestration [22], self-adaptive software [5], automotive systems [24] or Enterprise Architecture Management (EAM) [43]. However, there are still many potential application areas for model-driven approaches that have barely been investigated so far. EAM focuses exclusively on the strategic aspects of IT management. Standard metamodels (such as ArchiMate [43]) have been developed for this domain, yet these metamodels focus primarily on high-level business services and capabilities. The actual assets (or Configuration Items (CIs) [7]) on the operative level are either captured in a coarse-grained way that does not allow for deeper analysis, or excluded entirely.

Configuration items typically comprise physical servers, applications, databases and network infrastructure. We refer to the collection of all assets in a company as the IT Landscape (also known as resource landscape [36]). The IT Landscapes of major, globally operating companies can grow to considerable dimensions. Due to agility requirements, they are increasingly subject to frequent evolution and technology shifts. Recent examples include the extensive usage of virtualization platforms in data centers, the advent of cloud computing and the emergence of As-A-Service solutions. Furthermore, even though commonalities do exist, every company has its own architecture and vision behind its landscape. The terminology also varies, as there is no generally accepted definition across all stakeholders for common terms like Service or Application. Responsible persons and teams often struggle in their continuous efforts to properly document these landscapes due to their inherent size and complexity. The absence of reliable and up-to-date documentation can result in slow error detection, loss of traceability of changes and misguided planning based on poor information. Ultimately, if these issues remain unaddressed, they can cause very high costs for the companies [30, 51].

The need for tool support in the area of IT Landscape documentation is evident, and model engineering is well-suited to provide the required concepts. However, the existing MDE tool infrastructure is insufficient when it comes to satisfying the requirements of this domain. Existing solutions either do not scale with the number of elements in a real-world IT Landscape documentation, do not offer the necessary analysis capabilities, or lack the flexibility needed in long-term projects. Several state-of-the-art model repositories employ relational databases, even though the object-relational gap is well known to cause additional complexity and performance overhead. Furthermore, the required commitment to a fixed schema across all entries impedes the ability to perform metamodel evolution processes without altering past revisions. In recent years, the NoSQL family of databases has expanded, and graph databases in particular are an excellent fit for storing model data [1, 4]. The central research question we focus on in this paper is how to combine domain-driven modeling concepts and technologies with the innovations from the graph database community in order to build a model repository which is suitable for IT Landscape documentation.

In this paper, we present a solution for storing, versioning and querying IT Landscape models called ChronoSphere. ChronoSphere is a novel open-source EMF model repository that addresses the needs of this domain, in particular scalable versioning, querying and persistence. It utilizes innovative database technology and is based on a modular architecture which allows individual elements to be used as standalone components outside the repository context. Even though ChronoSphere has been designed for the IT Landscape use case, the core implementation is domain independent and may also serve other use cases (see Sect. 9.4). In our interdisciplinary efforts to realize this repository, we also contributed to the state of the art in the database community, in particular in the area of versioned data storage and graph versioning. We evaluate our approach in an industrial case study in collaboration with Txture GmbH. This company employs our ChronoSphere implementation as the primary storage back-end in their commercial IT Landscape modeling tool.

The remainder of this paper is structured as follows. In Sect. 2, we first describe the IT Landscape use case in more detail. We then extract the specific requirements for our solution from this environment and discuss how they were derived from the industrial context. Section 3 provides a high-level overview of our approach. In Sects. 4 through 6, we discuss the details of our solution. In Sect. 7, we present the application of our repository in an industrial context. Section 8 evaluates the performance of ChronoSphere in comparison with other model repository solutions. This is followed by a feature-based comparison of related work in several different areas in Sect. 9. We conclude the paper with an outlook on future work in Sect. 10 and a summary in Sect. 11. Sections 4 through 6 consist of a revised, updated and extended version of the content presented in our previous work, mainly [25, 27, 28]. The remaining sections (most notably Sects. 2, 7 and 8) have never been published before.

2 Use case and requirement analysis

The overarching goal in IT Landscape documentation is to produce and maintain a model which reflects the current IT assets of a company and their relationships with each other. As these assets change over time, keeping this model up-to-date is a continuous task, rather than a one-time effort.

From a repository perspective, the use case of IT Landscape documentation is unique because it is both a database scenario (involving large datasets) and a design scenario where multiple users edit the model manually and concurrently (see Fig. 1). The amount and quality of information available in external data sources depends on the degree of automation and standardization in the company. For companies with a lower degree of automation, users will want to edit the model manually to keep it up-to-date. In companies that have a sophisticated automation chain in place, the majority of data can be imported into the repository without manual intervention. Typical data sources involved in such a scenario are listed in Table 1.

After gathering and consolidating the required information in a central repository, typical use cases are centered around analysis and reporting. A user usually starts a session with a query that finds all assets that match a list of criteria, such as “Search for all Virtual Machines which run a Linux Operating System” or “Search for the Cluster named ‘Production 2’ located in Vienna”. Finding an asset based on its name is a particularly common starting query.

From the result of this initial global query, the user will often want to analyze this particular asset or group of assets. Common use cases involve impact analysis and root cause analysis. The central question in impact analysis is “What would be the impact on my applications if a given Physical Server failed?”. It can be answered by a transitive dependency analysis that starts from the Physical Server and resolves the path to the transitively connected applications (crossing the virtualization, clustering and load balancing layers in between). Root cause analysis is the inverse question: given an Application, the task is to find all Physical Servers on which the application transitively depends. This insight reduces the search space in case of an incident (ranging from performance problems to total application outage).
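Both questions reduce to a reachability search over the dependency relation. The following Java sketch is illustrative only. It assumes a hypothetical in-memory map of dependencies instead of the repository's query facilities. An edge from A to B means “A depends on B”, for example an application running on a virtual machine. Root cause analysis follows the edges forward, and impact analysis follows them backward.

```java
import java.util.*;

/** Hypothetical dependency graph: an edge from A to B means "A depends on B". */
class DependencyGraph {
    private final Map<String, Set<String>> dependsOn = new HashMap<>();
    private final Map<String, Set<String>> requiredBy = new HashMap<>();

    void addDependency(String from, String to) {
        dependsOn.computeIfAbsent(from, k -> new HashSet<>()).add(to);
        requiredBy.computeIfAbsent(to, k -> new HashSet<>()).add(from);
    }

    /** Root cause analysis: everything the given element transitively depends on. */
    Set<String> rootCauseCandidates(String element) {
        return reachable(element, dependsOn);
    }

    /** Impact analysis: everything that transitively depends on the given element. */
    Set<String> impactedBy(String element) {
        return reachable(element, requiredBy);
    }

    // Breadth-first search; the visited set prevents endless loops on cyclic dependencies.
    private static Set<String> reachable(String start, Map<String, Set<String>> edges) {
        Set<String> visited = new LinkedHashSet<>();
        Deque<String> queue = new ArrayDeque<>(List.of(start));
        while (!queue.isEmpty()) {
            String current = queue.poll();
            for (String next : edges.getOrDefault(current, Set.of())) {
                if (visited.add(next)) {
                    queue.add(next);
                }
            }
        }
        return visited;
    }
}
```

Consider the hypothetical chain ShopApp → VM-1 → Cluster-A → {Server-1, Server-2}. Here, impactedBy("Server-1") yields Cluster-A, VM-1 and ShopApp, and rootCauseCandidates("ShopApp") yields VM-1, Cluster-A and both servers.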

Finally, analyzing the history of a single element or of the entire model is an important use case in IT Landscape management. For example, users are interested in the number of applications employed in their company over time. Version control becomes essential in such scenarios, because it allows queries over time to be formulated after the data has been inserted. On a non-versioned store, by contrast, the query for such a statistic would have to be known in advance in order to track the relevant data at insertion time. Per-element history traces are also important, as they make it possible to identify who performed a certain change, which properties of the asset have been modified, and when the modification occurred.

In the remainder of this section, we focus on the most important influences from the industrial context, how we derived requirements for our repository from them, and how these requirements are met by technical features. Figure 2 provides an overview of this process.

2.1 Deriving requirements from the context

IT architectures and their terminology (e.g., the exact definition of general terms like Service or Application) vary by company. Therefore, the structure of the resulting IT Landscape models also differs. One solution to these Heterogeneous Architectures [C1] is to unify them under a common, fixed metamodel (e.g., ArchiMate [43]). However, this can lead to poor acceptance in practice due to its rigidity and the additional complexity introduced by its generality. From our past surveys and case studies [17,18,19,20, 73], we inferred the requirement that the metamodel should be configurable by the user [R1]. The companies which utilize IT Landscape models the most are usually large companies [C2], or companies with a strong focus on IT (such as data centers). This entails that the corresponding models will grow to considerable sizes, and a repository must offer the necessary scalability [R5].

Documenting the IT Landscape of a company is a continuous effort. In the industrial context, we are therefore faced with long-running endeavors [C4] that span several years. When responsible persons change and team members leave while new ones join, the ability to comprehend and reflect upon past decisions becomes crucial. Versioning the model content [R2] meets these demands. It also enables use cases that involve auditing, as well as use cases where versioning is required for legal compliance [C6]. The underlying requirement for these use cases is not only to store the version history, but also to analyze history traces [R8]. During a long-term documentation project, the metamodel sometimes also needs to be adapted [R3], for example due to technological shifts [C3] that introduce new types of assets. Examples of technological shifts include the introduction of virtualization technologies in data centers and the advent of cloud computing. Another direct consequence of long-running projects is that the change history of individual model elements can grow to large sizes [R5], which must be considered in the technical realization of a repository.

In industrial contexts, several different stakeholders collaborate in documenting the IT Landscape. Depending on the scope of the project, stakeholders can have a wide variety of roles, ranging from IT operations experts to database managers and enterprise architects [C5]. This entails that the repository must support concurrent access [R6] for multiple users. Another requirement that follows directly from concurrent access is that the repository must ensure the structural consistency of the model contents [R6, R9] (e.g., conformance to the metamodel and referential integrity). From the analysis perspective, concurrent access also threatens the consistency and reproducibility of query results, both of which are required for reliable model analysis [R9]. Apart from analyzing the current and past states of the model, IT Landscape models are also used to plan for future transformations [C7]. The general workflow involves creating “to-be” scenarios based on the current state and comparing them against each other in order to select the best candidate. In order to cope with such use cases, the repository must support branching [R4]. Branches allow the plans to be based on the actual model and to change it independently without affecting the as-is state. Since the comparison of two model states is an important part of planning (as well as of a number of other use cases), the repository needs to be able to evaluate an analysis query on any model version and on any branch [R7] without altering the query.

2.2 Deriving features from requirements

From the set of requirements, we inferred the technical features which our model repository has to support. As we want our users to be able to provide their own metamodels, we employ the standard Eclipse Modeling Framework (EMF [70]) as our modeling language of choice [F1]. The data managed by our repository consists of a large and densely connected model which has to be put under version control. This led to the decision to employ a per-element versioning strategy [F2], as a coarse-grained whole-model versioning strategy would cause performance issues for such models.

Supporting a user-defined metamodel, element versioning and metamodel evolution at the same time is a challenging task. The combination of these requirements entails that our repository must support metamodel versioning, metamodel evolution and instance co-adaptation [F3]. From a technical perspective, it is also inadvisable to create a full copy of a model version each time a branch is created due to the potential size of the model. We therefore require a branching mechanism that is lightweight [F4] in that it reuses the data from the origin branch rather than copying it when a new branch is created. Since IT Landscape models can grow to large sizes and will potentially not fit into the main memory of the machine which runs our repository, we require a mechanism for dynamic on-demand loading and unloading of model elements [F5].
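A lightweight branch can be pictured as follows. This Java sketch is a simplified illustration under our own assumptions and is not ChronoDB's branching implementation. The class and method names are hypothetical, and deletions are not modeled. A branch stores only its own changes, together with its origin and branching timestamp. Any read that finds no local change falls back to the origin's state at the branching timestamp.

```java
import java.util.*;

/**
 * Hypothetical sketch of copy-free branching: a branch stores only its own
 * changes plus a reference to its origin and the branching timestamp.
 */
class Branch {
    private final String name;
    private final Branch origin;            // null for the master branch
    private final long branchingTimestamp;  // last origin state visible to this branch
    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

    private Branch(String name, Branch origin, long branchingTimestamp) {
        this.name = name;
        this.origin = origin;
        this.branchingTimestamp = branchingTimestamp;
    }

    static Branch master() {
        return new Branch("master", null, -1);
    }

    String name() {
        return name;
    }

    /** Creates a child branch in constant time; no model data is copied. */
    Branch fork(String childName, long timestamp) {
        return new Branch(childName, this, timestamp);
    }

    void put(long timestamp, String key, String value) {
        if (timestamp <= branchingTimestamp) {
            throw new IllegalArgumentException("a branch cannot change data before its branching timestamp");
        }
        rows.computeIfAbsent(key, k -> new TreeMap<>()).put(timestamp, value);
    }

    /** Returns the value valid at the timestamp, or null if the key has no value. */
    String get(long timestamp, String key) {
        TreeMap<Long, String> row = rows.get(key);
        Map.Entry<Long, String> local = (row == null) ? null : row.floorEntry(timestamp);
        if (local != null) {
            return local.getValue();
        }
        // No local change up to this timestamp: read the origin as it was at branching time.
        return (origin == null) ? null : origin.get(Math.min(timestamp, branchingTimestamp), key);
    }
}
```

Creating a branch therefore costs one small object, regardless of model size. Changes on the origin after the branching timestamp remain invisible to the branch.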

A technical feature which is crucial for efficient querying of the entire model is indexing [F6]. The primary index makes it possible to locate a model element by its unique ID without iterating linearly over all elements. Secondary indices can be defined by the user for a given metamodel. They can be used to efficiently find all elements in the model where a property is set to a specified value (e.g., finding all servers where the name contains “Production”). In addition, indexing has to consider the versioned nature of our repository, as we want our indices to be usable for all queries, regardless of the chosen version or branch. In other words, even a query on a model version that is one year old should be able to utilize our indices. In order for queries to be executable on any branch and timestamp, we require a framework that allows for the creation of queries that are agnostic to the chosen branch and version [F9].
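One way to make secondary indices usable at every timestamp is to attach a validity interval to each index entry. The following Java sketch assumes this interval-based design. The class, its methods and the interval representation are hypothetical and are not taken from ChronoSphere. For brevity, the sketch scans the entries linearly. A real index would keep them sorted by value to avoid the full scan.

```java
import java.util.*;
import java.util.function.Predicate;

/**
 * Hypothetical version-aware secondary index: every entry records the interval
 * [validFrom, validTo) during which an element had the indexed value.
 */
class TemporalSecondaryIndex {
    private static final class IndexEntry {
        final String elementId;
        final String value;
        final long validFrom;
        long validTo = Long.MAX_VALUE; // open-ended until the value changes

        IndexEntry(String elementId, String value, long validFrom) {
            this.elementId = elementId;
            this.value = value;
            this.validFrom = validFrom;
        }
    }

    private final List<IndexEntry> entries = new ArrayList<>();
    private final Map<String, IndexEntry> openEntries = new HashMap<>();

    /** The indexed property of an element takes a new value at the timestamp (null = removed). */
    void update(String elementId, String newValue, long timestamp) {
        IndexEntry previous = openEntries.remove(elementId);
        if (previous != null) {
            previous.validTo = timestamp; // close the previous validity interval
        }
        if (newValue != null) {
            IndexEntry entry = new IndexEntry(elementId, newValue, timestamp);
            entries.add(entry);
            openEntries.put(elementId, entry);
        }
    }

    /** IDs of all elements whose indexed value satisfied the condition at the timestamp. */
    Set<String> query(long timestamp, Predicate<String> condition) {
        Set<String> result = new TreeSet<>();
        for (IndexEntry e : entries) { // linear scan for brevity only
            boolean valid = e.validFrom <= timestamp && timestamp < e.validTo;
            if (valid && condition.test(e.value)) {
                result.add(e.elementId);
            }
        }
        return result;
    }
}
```

A query such as “all servers whose name contains ‘Production’” then becomes query(t, v -> v.contains("Production")) for any timestamp t, including timestamps far in the past.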

All queries and modifications in our repository are subject to concurrent access. We meet this requirement by providing full ACID [38] end-to-end transaction support in our repository [F8]. Finally, in order to support long histories, we implement a feature called Temporal Rollover which enables the archiving of historical entries [F7]. This feature allows for indefinite growth of element histories and will be explained in detail in later sections.

3 Solution overview

The overarching goal of our efforts is to provide a model repository that fulfills the requirements in Sect. 2. Our initial prototypes were based on standard technology, such as object-relational mappers and SQL databases. However, we soon realized that table-based representations were not an ideal fit for the structure of model data. The main issues we faced with these solutions were related to scalability and performance [R5]. The fact that most SQL databases require a fixed schema also proved to be very limiting when taking the requirement for metamodel evolution [R3] into consideration.

During our search for alternatives, we were inspired by approaches such as MORSA [54] and Neo4EMF [4]. We investigated various NoSQL storage solutions and eventually settled on graph databases. Graph databases do not require a fixed schema and offer fast execution of navigational queries. In addition, the bijective mapping between model data and graph data is both simpler and faster than object-relational mappings. However, existing graph databases on the market did not offer built-in versioning capabilities [R2]. Various authors have already shown that it is possible to use a general-purpose graph database (e.g., Neo4j or Titan) and to manage the versioning process entirely on the application side [8, 66, 67, 71]. However, such approaches greatly increase the complexity of the resulting graph structure, as well as the complexity of the queries that operate on it. This reduces the maintainability, performance and scalability [R5] of such systems.

After discovering this gap in both research and industrial solutions, we created a versioned graph database called ChronoGraph [27]. It is the first graph database with versioning support that is compliant with the Apache TinkerPop standard. ChronoGraph is listed as an official TinkerPop implementation and is available as an open-source project on GitHub. Since graph structures need to be transformed into a format that is compatible with the sequential nature of hard drives, we also required a versioned storage solution. Our exploratory experiments with different back-ends of Titan DB demonstrated that key-value stores are a very suitable fit for storing graph data. We created ChronoDB [25], a versioned key-value store, to act as the storage backend for ChronoGraph. The full source code of this project is also available on GitHub. The resulting repository solution therefore consists of three layers, allowing for a coherent architecture and a clean separation of concerns.

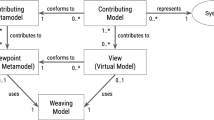

Figure 3 shows the data management concepts of ChronoSphere. At the very top, in Fig. 3 Part A, we are working with EObjects and their corresponding EClasses, EPackages and other Ecore elements. It is important that the model and the metamodel are stored together. This will become a critical factor when dealing with metamodel evolution. This combined model needs to be persisted and versioned [R1–R4]. ChronoSphere maps it to a property graph representation [58] for this purpose. This representation is conceptually very close to the model form. Our model-to-graph mapping is inspired by Neo4EMF [4]. We will discuss related work in this area in more detail in Sect. 9.

The property graph management in Fig. 3 Part B is provided by ChronoGraph. In order to achieve a serial form of the model data that can be persisted to disk, ChronoGraph disassembles the property graph into individual Star Graphs, one for each vertex (i.e., node). A star graph is a sub-graph that is centered around one particular vertex. Figure 3 Part C shows the star graph of vertex v1. Creating star graphs for each vertex is a special kind of graph partitioning. When the star graphs are linked again by replacing IDs with vertices, the original graph can be reconstructed from this partitioning. This reconstruction can occur fully or only partially, which makes this solution very suitable for lazy loading techniques [R5].
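The partitioning into star graphs and its full or partial reconstruction can be sketched in a few lines of Java. The records and method names below are hypothetical and independent of ChronoGraph's code. Vertices and edges are reduced to IDs, labels and a property map. Records require Java 16 or later.

```java
import java.util.*;

/** Hypothetical edge between two vertices, which are referenced by ID only. */
record Edge(String label, String outVertex, String inVertex) {}

/** Hypothetical star graph: one vertex, its properties and all incident edges. */
record StarGraph(String vertexId, Map<String, Object> properties,
                 List<Edge> outEdges, List<Edge> inEdges) {}

class StarGraphPartitioner {
    /** Splits a property graph into one star graph per vertex. */
    static Map<String, StarGraph> partition(Map<String, Map<String, Object>> vertices,
                                            List<Edge> edges) {
        Map<String, StarGraph> stars = new LinkedHashMap<>();
        vertices.forEach((id, properties) -> stars.put(id,
                new StarGraph(id, properties, new ArrayList<>(), new ArrayList<>())));
        for (Edge e : edges) {
            // Each edge appears in the star graphs of both of its endpoints.
            stars.get(e.outVertex()).outEdges().add(e);
            stars.get(e.inVertex()).inEdges().add(e);
        }
        return stars;
    }

    /** Rebuilds the edge set from any subset of star graphs (full or partial reconstruction). */
    static Set<Edge> reconstructEdges(Collection<StarGraph> stars) {
        Set<Edge> edges = new LinkedHashSet<>();
        for (StarGraph star : stars) {
            edges.addAll(star.outEdges());
            edges.addAll(star.inEdges());
        }
        return edges;
    }
}
```

Loading only the star graph of v1 already yields all edges incident to v1. This is what makes lazy loading possible: neighbors are resolved by ID only when they are actually visited.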

In the next step, we transform the star graph of each vertex into a binary string using the Kryo serializer and pass the result to the underlying ChronoDB, our versioned key-value store. When the transaction is committed [R6], the commit timestamp is assigned to each pair of modified key and corresponding binary value, creating time-key-value triples as shown in Fig. 3 Part D. ChronoDB then stores these triples in a Temporal Data Matrix (Fig. 3 Part E), which is implemented as a B\(^{+}\)-Tree [61]. Each row in this matrix represents the full history of a single element, each column represents a model revision, and each cell represents the data of one particular element for a given ID at a given timestamp. We will define and discuss this matrix structure in more detail in the following section.
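The mapping from time-key-value triples to a sorted structure can be illustrated with a composite key that is ordered first by key and then by timestamp. Each row of the matrix then occupies a contiguous key range, and a lookup becomes a single floor search. This Java sketch uses hypothetical names and requires Java 16+ for records. A TreeMap stands in for ChronoDB's B\(^{+}\)-Tree, and values are opaque byte arrays such as serialized star graphs. Commit timestamps come from a simple logical counter instead of a clock, which is an assumption made for simplicity.

```java
import java.util.*;

/**
 * Hypothetical sketch: time-key-value triples kept in a single sorted map whose
 * keys are ordered by (key, timestamp), so each matrix row is one contiguous range.
 */
class TemporalKeyValueStore {
    record TemporalKey(String key, long timestamp) implements Comparable<TemporalKey> {
        @Override
        public int compareTo(TemporalKey other) {
            int byKey = key.compareTo(other.key);
            return byKey != 0 ? byKey : Long.compare(timestamp, other.timestamp);
        }
    }

    // A TreeMap (red-black tree) stands in for the on-disk B+-tree.
    private final TreeMap<TemporalKey, byte[]> triples = new TreeMap<>();
    private long now = 0;

    /** Commits all changed keys with one shared timestamp; a null value marks a deletion. */
    long commit(Map<String, byte[]> changes) {
        long commitTimestamp = now + 1; // logical clock, assumed for simplicity
        changes.forEach((key, value) -> triples.put(new TemporalKey(key, commitTimestamp), value));
        now = commitTimestamp;
        return commitTimestamp;
    }

    /** Value of the key valid at the timestamp; empty if absent or deleted. */
    Optional<byte[]> get(String key, long timestamp) {
        // Greatest triple at or before (key, timestamp); it belongs to this row only if the key matches.
        Map.Entry<TemporalKey, byte[]> entry = triples.floorEntry(new TemporalKey(key, timestamp));
        if (entry == null || !entry.getKey().key().equals(key)) {
            return Optional.empty();
        }
        return Optional.ofNullable(entry.getValue());
    }
}
```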

4 Solution part I: ChronoDB

ChronoDB [25] is a versioned key-value store and the bottom layer in our architecture. Its main responsibilities are persistence, versioning, branching and indexing. As all other components in our architecture rely on this store, we formalized its data structures and operations during the design phase.

4.1 Formal foundation

Salzberg and Tsotras identified three key query types which have to be supported by a data store in order to provide the full temporal feature set [62]. For versioning purposes, this set can be reused by restricting the features to timestamps instead of time ranges. This gives rise to the following three types of possible queries:

- Pure-Timeslice Query: Given a point in time (e.g., date and time), find all keys that existed at that time.
- Range-Timeslice Query: Given a set of keys and a point in time, find the value for each key which was valid at that time.
- Pure-Key Query: Given a set of keys, for each key find the values that comprise its history.

We use these three core query types as the functional requirements for our formalization approach. For practical reasons, we furthermore require that inserted entries never have to be modified again. In this way, we can achieve a true append-only store. In order to maintain the traceability of changes over time (e.g., for auditing purposes [R8]), we also require that the history of a key must never be altered, only appended.

The key concept behind our formalism is based on the observation that temporal information always adds an additional dimension to a dataset. A key-value format has only one dimension, which is the key. By adding temporal information, the two resulting dimensions are the key, and the time at which the value was inserted. Therefore, a matrix is a very natural fit for formalizing the versioning problem, offering the additional advantage of being easy to visualize. The remainder of this section consists of definitions which provide the formal semantics of our solution, interleaved with figures and (less formal) textual explanations.

Definition 1

Temporal Data Matrix

Let T be the set of all timestamps with \(T \subseteq \mathbb {N}\). Let \(\mathcal {S}\) denote the set of all non-empty strings and K be the set of all keys with \(K \subseteq \mathcal {S}\). Let \(\mathbb {B}\) define the set of all binary strings with \(\mathbb {B} \subseteq \{0,1\}^+ \cup \{null, \epsilon \}\). Let \(\epsilon \in \mathbb {B}\) be the empty binary string with \(\epsilon \ne null\). We define the Temporal Data Matrix \(\mathcal {D} \in \mathbb {B}^{\infty \times \infty }\) as:

\[ \mathcal{D}: T \times K \rightarrow \mathbb{B} \]

We define the initial value of a given Temporal Data Matrix D as:

\[ D_{t,k} := \epsilon \quad \forall t \in T, \forall k \in K \]

In Definition 1, we define a Temporal Data Matrix, which is a key-value mapping enhanced with temporal information [R2, R3]. Note that the number of rows and columns in this matrix is infinite. In order to retrieve a value from this matrix, a key string and a timestamp are required. We refer to such a pair as a Temporal Key. The matrix can contain an array of binary values in every cell, which can be interpreted as the serialized representation of an arbitrary object. The formalism is therefore not restricted to any particular value type. The dedicated null value (which is different from all other bit-strings and also different from the \(\epsilon \) values used to initialize the matrix) will be used as a marker that indicates the deletion of an element later in Definition 3.

In order to guarantee the traceability of changes [R8], entries in the past must not be modified, and new entries may only be appended to the end of the history, not inserted at an arbitrary position. We use the notion of a dedicated now timestamp for this purpose.

Definition 2

Now Operation

Let D be a Temporal Data Matrix. We define the function \(now: \mathbb {B}^{\infty \times \infty } \rightarrow T\) as:

\[ now(D) := \max\left( \{ t \mid k \in K, D_{t,k} \ne \epsilon \} \cup \{0\} \right) \]

Definition 2 introduces the concept of the now timestamp, which is the largest (i.e., latest) timestamp at which data has been inserted into the store so far, initialized at zero for empty matrices. This particular timestamp will serve as a safeguard against temporal inconsistencies in several operations. We continue by defining the temporal counterparts of the put and get operations of a key-value store.

Definition 3

Temporal Write Operation

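Let D be a Temporal Data Matrix. We define the function \(put: \mathbb {B}^{\infty \times \infty } \times T \times K \times \mathbb {B} \rightarrow \mathbb {B}^{\infty \times \infty }\) as:

\[ put(D, t, k, v) := D' \]

with \(v \ne \epsilon \), \(t > now(D)\) and

\[ D'_{i,j} := \begin{cases} v & \text{if } t = i \wedge k = j \\ D_{i,j} & \text{otherwise} \end{cases} \]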

The write operation put replaces a single entry in a Temporal Data Matrix by specifying the exact coordinates and a new value for that entry. All other entries remain the same as before. Please note that, while v must not be \(\epsilon \) in the context of a put operation (i.e., a cell cannot be “cleared”), v can be null to indicate a deletion of the key k from the matrix. Also, we require that an entry must not be overwritten. This is given implicitly by the fact that each put advances the result of now(D), and further insertions are only allowed after that timestamp. Furthermore, write operations are not permitted to modify the past, in order to preserve consistency and traceability; this is also asserted by the condition on the now timestamp. This operation is limited in that it can modify only one key at a time. In the implementation, we generalize it to allow simultaneous insertions in several keys via transactions.
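Definitions 2 and 3 translate directly into code if the infinite matrix is stored sparsely, with one sorted map per key that holds only the non-\(\epsilon \) cells. The following Java sketch makes several assumptions. The class name is hypothetical. Values are simplified from binary strings to Java strings, with "" standing for \(\epsilon \) and null for the deletion marker. The sketch illustrates the formalism and is not ChronoDB's implementation.

```java
import java.util.*;

/**
 * Sketch of Definitions 2 and 3 (hypothetical class). Cells are stored sparsely:
 * a missing cell is epsilon, "" represents epsilon as a value, and a stored null
 * marks a deletion.
 */
class TemporalDataMatrix {
    static final String EPSILON = "";

    // key -> (timestamp -> value); only non-epsilon cells are stored
    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

    /** Definition 2: the largest timestamp with a non-epsilon cell, or 0 if the matrix is empty. */
    long now() {
        long max = 0;
        for (TreeMap<Long, String> row : rows.values()) {
            max = Math.max(max, row.lastKey());
        }
        return max;
    }

    /** Definition 3: writes exactly one cell; earlier timestamps can never be modified. */
    void put(long t, String k, String v) {
        if (EPSILON.equals(v)) {
            throw new IllegalArgumentException("a cell cannot be cleared (v must not be epsilon)");
        }
        if (t <= now()) {
            throw new IllegalArgumentException("t must be greater than now(D) = " + now());
        }
        rows.computeIfAbsent(k, key -> new TreeMap<>()).put(t, v); // v == null records a deletion
    }
}
```

Because each accepted put raises now(D) to t, any later attempt to write at or before t is rejected. This is how the append-only property is enforced.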

Definition 4

Temporal Read Operation

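Let D be a Temporal Data Matrix. We define the function \(get: \mathbb {B}^{\infty \times \infty } \times T \times K \rightarrow \mathbb {B}\) as:

\[ get(D, t, k) := \begin{cases} D_{u,k} & \text{if } u \ge 0 \wedge D_{u,k} \ne null \\ \epsilon & \text{otherwise} \end{cases} \]

with \(t \le now(D)\) and

\[ u := \max\left( \{ x \mid x \in T, x \le t, D_{x,k} \ne \epsilon \} \cup \{-1\} \right) \]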

The function get first attempts to return the value at the coordinates specified by the key and timestamp (\(u = t\)). If that position is empty, we scan for the entry in the same row with the highest timestamp and a non-empty value, considering only entries with lower timestamps than the request timestamp. In the formula, we have to add \(-~1\) to the set from which u is chosen to cover the case where there is no other entry in the row. If there is no such entry (i.e., \(u = -~1\)) or the entry is null, we return the empty binary string, otherwise we return the entry with the largest encountered timestamp.

This process is visualized in Fig. 4. In this figure, each row corresponds to a key and each column to a timestamp. The depicted get operation works on timestamp 5 and key ‘d’. As \(D_{5,d}\) is empty, we attempt to find the largest timestamp smaller than 5 where the value for the key is not empty, i.e., we move left until we find a non-empty cell. We find the result in \(D_{1, d}\) and return v1. This is an important part of the versioning concept: a value for a given key is assumed to remain unchanged until a new value is assigned to it at a later timestamp. This allows any implementation to conserve disk space, as writes only occur if the value for a key has changed (i.e., no data duplication is required between identical revisions). Also note that we do not need to update existing entries when new key-value pairs are being inserted, which allows for pure append-only storage. In Fig. 4, the value v1 is valid for the key ‘d’ for all timestamps between 1 and 5 (inclusive). For timestamp 0, the key ‘d’ has value v0. Following this line of argumentation, we can generalize and state that a row in the matrix, identified by a key \(k \in K\), contains the history of k. This is formalized in Definition 5. A column, identified by a timestamp \(t \in T\), contains the state of all keys at that timestamp, with the additional consideration that value duplicates are not stored, as they can be looked up in earlier timestamps. This is formalized in Definition 6.

Fig. 4 A get operation on a Temporal Data Matrix [25]
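This sketch uses the same kind of sparse representation, with one sorted map per key. The class name and the use of "" for \(\epsilon \) are our assumptions. With this representation, the scan for u in Definition 4 becomes a single floorEntry lookup. The following Java sketch omits the t > now(D) check of Definition 3, so that example cells can be filled in directly.

```java
import java.util.*;

/**
 * Sketch of Definition 4 (hypothetical class). Missing cells are epsilon,
 * "" is returned for epsilon, and a stored null marks a deletion.
 */
class TemporalReader {
    static final String EPSILON = "";
    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

    /** Fills a cell; the t > now(D) check of Definition 3 is omitted for brevity. */
    void put(long t, String k, String v) {
        rows.computeIfAbsent(k, key -> new TreeMap<>()).put(t, v);
    }

    /** Definition 2: largest timestamp with a non-epsilon cell, or 0. */
    long now() {
        long max = 0;
        for (TreeMap<Long, String> row : rows.values()) {
            max = Math.max(max, row.lastKey());
        }
        return max;
    }

    /** Definition 4: the value of key k that is valid at timestamp t. */
    String get(long t, String k) {
        if (t > now()) {
            throw new IllegalArgumentException("t must not be greater than now(D)");
        }
        TreeMap<Long, String> row = rows.get(k);
        // floorEntry finds u, the largest timestamp <= t with a non-epsilon cell;
        // a null result corresponds to u = -1.
        Map.Entry<Long, String> cell = (row == null) ? null : row.floorEntry(t);
        if (cell == null || cell.getValue() == null) {
            return EPSILON; // no entry up to t, or the key was deleted
        }
        return cell.getValue();
    }
}
```

Following the row of key ‘d’ in Fig. 4, the sketch stores v0 at timestamp 0 and v1 at timestamp 1. A made-up cell for another key at timestamp 5 lets now(D) reach 5. With these cells, get(5, "d") returns "v1" and get(0, "d") returns "v0".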

Definition 5

History Operation

Let D be a Temporal Data Matrix, and t be a timestamp with \(t \in T, t \le now(D)\). We define the function \(history: \mathbb {B}^{\infty \times \infty } \times T \times K \rightarrow 2^{T}\) as:

\[ history(D, t, k) := \{ x \mid x \in T, x \le t, D_{x,k} \ne \epsilon \} \]

In Definition 5, we define the history of a key k up to a given timestamp t in a Temporal Data Matrix D as the set of timestamps less than or equal to t that have a non-empty entry for key k in D. Note that the resulting set will also include deletions, as null is a legal value for \(D_{x,k}\) in the formula. The result is the set of timestamps where the value for the given key changed. Consequently, performing a get operation for these timestamps with the same key will yield different results, producing the full history of the temporal key.
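Suppose each key's row is stored as a sorted map; this representation and the class name are assumptions of the sketch. Definition 5 is then a prefix view of that map, up to and including t. The following Java sketch omits the precondition t ≤ now(D) and the now(D) check on writes for brevity.

```java
import java.util.*;

/** Sketch of Definition 5 (hypothetical class); a stored null marks a deletion. */
class TemporalHistory {
    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

    /** Fills a cell; the t > now(D) check of Definition 3 is omitted for brevity. */
    void put(long t, String k, String v) {
        rows.computeIfAbsent(k, key -> new TreeMap<>()).put(t, v);
    }

    /** Definition 5: all timestamps x <= t with a non-epsilon cell for k, deletions included. */
    NavigableSet<Long> history(long t, String k) {
        TreeMap<Long, String> row = rows.get(k);
        if (row == null) {
            return new TreeSet<>();
        }
        // headMap(t, true) is the part of the row up to and including t.
        return new TreeSet<>(row.headMap(t, true).keySet());
    }
}
```

Calling get for each returned timestamp then yields the full history of the key, which answers the Pure-Key Query.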

Definition 6

Keyset Operation

Let D be a Temporal Data Matrix, and t be a timestamp with \(t \in T, t \le now(D)\). We define the function \(keyset: \mathbb {B}^{\infty \times \infty } \times T \rightarrow 2^{K}\) as:

\[ keyset(D, t) := \{ x \mid x \in K, get(D, t, x) \ne \epsilon \} \]

As shown in Definition 6, the keyset in a Temporal Data Matrix changes over time. We can retrieve the keyset at any desired time by providing the appropriate timestamp t. Note that this works for any timestamp in the past, in particular we do not require that a write operation has taken place precisely at t in order for the corresponding key(s) to be contained in the keyset. In other words, the precise column of t may consist only of \(\epsilon \) entries, but the key set operation will also consider earlier entries which are still valid at t. The version operation introduced in Definition 7 operates in a very similar way, but returns tuples containing keys and values, rather than just keys.

Definition 7

Version Operation

Let D be a Temporal Data Matrix, and t be a timestamp with \(t \in T, t \le now(D)\). We define the function \(version: \mathbb {B}^{\infty \times \infty } \times T \rightarrow 2^{K \times \mathbb {B}}\) as:

\[ version(D, t) := \{ \langle k, get(D, t, k) \rangle \mid k \in keyset(D, t) \} \]

Figure 5 illustrates the keyset and version operations by example. In this scenario, the keyset (or version) is requested at timestamp \(t = 5\). We scan each row for its right-most non-\(\epsilon \) entry up to timestamp 5, and add the key of the row to the keyset provided that such an entry exists (i.e., the row is not empty) and is not null (i.e., the value was not removed). In this example, keyset(D, 5) returns \(\{a,c,d\}\), assuming that all non-depicted rows are empty. Keys b and f are not in the keyset because their rows are empty (up to and including timestamp 5), and e is not in the set because its value was removed at timestamp 4. If we requested the keyset at timestamp 3 instead, e would be included. The operation version(D, 5) returns \(\{ \langle a,v0\rangle , \langle c, v2\rangle , \langle d, v4\rangle \}\) in the example depicted in Fig. 5. The key e is not represented in the version because it does not appear in the keyset.

Performing a keyset or version operation on a Temporal Data Matrix [25]
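The keyset and version operations can be sketched in the same hypothetical style. Here the version is returned as a `Map`, which is a valid encoding of the set of \(\langle k, v\rangle\) tuples because each key occurs at most once, and the keyset is simply its key set. The timestamps used in the usage note are our own assumption, since the exact columns of Fig. 5 are not reproduced in the text.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Hypothetical sketch of keyset(D, t) and version(D, t); not ChronoDB code. */
class SnapshotMatrix {
    // one row per key: timestamp -> value (null = deletion)
    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

    void put(long timestamp, String key, String value) {
        rows.computeIfAbsent(key, k -> new TreeMap<>()).put(timestamp, value);
    }

    /** version(D, t): right-most non-empty cell of every row up to t, skipping deletions. */
    Map<String, String> version(long timestamp) {
        Map<String, String> result = new TreeMap<>();
        for (Map.Entry<String, TreeMap<Long, String>> row : rows.entrySet()) {
            Map.Entry<Long, String> cell = row.getValue().floorEntry(timestamp);
            // skip rows that are empty up to t and keys whose latest change is a deletion
            if (cell != null && cell.getValue() != null) {
                result.put(row.getKey(), cell.getValue());
            }
        }
        return result;
    }

    /** keyset(D, t): the keys occurring in version(D, t). */
    Set<String> keyset(long timestamp) {
        return new TreeSet<>(version(timestamp).keySet());
    }
}
```

For instance, after writing a, c, e and d at timestamps 0 to 3 and deleting e at timestamp 4, `keyset(5)` yields {a, c, d}, whereas `keyset(3)` still contains e.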

With the given set of operations, we are able to answer all three kinds of temporal queries identified by Salzberg and Tsotras [62], as indicated in Table 2. Due to the restrictions imposed on the put operation (see Definition 3), data cannot be inserted before the now timestamp (i.e., the history of an entry cannot be modified). Since the validity range of an entry is determined implicitly by the empty cells between changes, existing entries never need to be modified when new ones are added. The formalization therefore fulfills all requirements stated at the beginning of this section.

4.2 Implementation

ChronoDB is our implementation of the concepts presented in the previous section. It is a fully ACID-compliant, process-embedded, temporal key-value store written in Java. ChronoDB is intended to act as the storage backend of a graph database, which drives numerous design and optimization choices. The full source code is freely available on GitHub under an open-source license.

4.2.1 Implementing the matrix

As the formal foundation includes the concept of a matrix with infinite dimensions, a direct implementation is not feasible. However, a Temporal Data Matrix is typically very sparse. Instead of storing a rigid, infinite matrix structure, we focus exclusively on the non-empty entries and expand the underlying data structure as more entries are being added.

There are various approaches for storing versioned data on disk [15, 46, 50]. Rather than designing custom disk-level data structures for our prototype, we reuse existing, well-known and well-tested technology: the temporal store is based on a regular B\(^{+}\)-Tree [61], for which we use the implementation provided by the TUPL library. To form an index key from a Temporal Key, we concatenate the user key string with the timestamp (left-padded with zeros to achieve equal length), separated by an ‘@’ character. Under the standard lexicographic ordering of strings, this yields the ordering shown in Table 3, i.e., our B\(^{+}\)-Tree is ordered first by key and then by timestamp. The advantage of this approach is that we can quickly determine the value of a given key at a given timestamp (i.e., get is reasonably fast), whereas the keyset (see Definition 6) is more expensive to compute.

The put operation appends the timestamp to the user key and then performs a regular B\(^{+}\)-Tree insertion. The temporal get operation requires retrieving the next lower entry with the given key and timestamp.

This is similar to regular B\(^{+}\)-Tree search, except that the acceptance criterion for the search in the leaf nodes is “less than or equal to” instead of “equal to”, provided that nodes are checked in descending key order. TUPL natively supports this functionality. After finding the next lower entry, we need to apply a post-processing step in order to ensure correctness of the get operation. Using Table 3 as an example, if we requested aa@0050 (which is not contained in the data), searching for the next-lower key produces a@1000. The key string in this temporal key (a) is different from the one which was requested (aa). In this case, we can conclude that the key aa did not exist up to the requested timestamp (50), and we return null instead of the retrieved result.
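The key encoding and the post-processing step of the temporal get can be illustrated with `java.util.TreeMap` standing in for the TUPL B\(^{+}\)-Tree, since its `floorEntry` method offers the same "next lower or equal" search. This is a hypothetical sketch: it pads timestamps to 19 digits (enough for any non-negative `long`) instead of the four digits used in the example above, and it assumes that user keys never contain the ‘@’ separator.

```java
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical sketch of the "key@timestamp" encoding; a TreeMap stands in for
 * the on-disk B+-Tree. Assumes non-negative timestamps and keys without '@'.
 */
class TemporalKeyIndex {
    private final TreeMap<String, String> tree = new TreeMap<>();

    /** Builds "key@timestamp" with the timestamp left-padded with zeros to 19 digits. */
    static String temporalKey(String key, long timestamp) {
        return key + "@" + String.format("%019d", timestamp);
    }

    void put(String key, long timestamp, String value) {
        tree.put(temporalKey(key, timestamp), value);
    }

    /** Temporal get: next lower-or-equal entry, then check that it belongs to the same user key. */
    String get(String key, long timestamp) {
        Map.Entry<String, String> entry = tree.floorEntry(temporalKey(key, timestamp));
        if (entry == null) {
            return null;
        }
        String found = entry.getKey();
        String foundKey = found.substring(0, found.lastIndexOf('@'));
        // post-processing: a different user key means 'key' did not exist up to 'timestamp'
        return foundKey.equals(key) ? entry.getValue() : null;
    }
}
```

Mirroring the example above, after `put("a", 1000, ...)` a request for `get("aa", 50)` lands on the entry of key a and is therefore answered with null.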

Due to the way we set up the B\(^{+}\)-Tree, adding a new revision to a key (or adding an entirely new key) has the same runtime complexity as inserting an entry into a regular B\(^{+}\)-Tree. Temporal search likewise has the same complexity as regular B\(^{+}\)-Tree search, namely \(\mathcal {O}(\log n)\), where n is the number of entries in the tree. Because this property follows directly from the construction, our implementation scales equally well with one key and many versions, many keys with one revision each, or any distribution in between [R5]. In particular, regardless of the versions-per-key distribution, the data structure never degenerates into a list: regular B\(^{+}\)-Tree balancing maintains an access complexity of \(\mathcal {O}(\log n)\) without any additional algorithms.

4.2.2 Branching

Figure 6 shows how the branching mechanism works in ChronoDB [R4]. Based on our matrix formalization, we can create branches of our history at arbitrary timestamps. To do so, we generate a new, empty matrix that holds all changes applied to the branch it represents. Existing entries are not duplicated, which makes branches lightweight. When a get request reaches the first column of a branch matrix during its search, we redirect the request to the matrix of the parent branch at the branching timestamp and continue from there. In this way, the data from the original branch (up to the branching timestamp) remains fully accessible in the child branch.

For example, as depicted in Fig. 7, to answer a get request for key c on branch branchA at timestamp 4, we scan the row of key c to the left, starting at column 4. We find no entry, so we redirect the call to the origin branch (in this case master) at timestamp 3. There, we continue to the left and find the value \(c_{1}\) at timestamp 1. Indeed, at timestamp 4 on branch branchA, \(c_{1}\) is still valid, whereas the same query on master yields \(c_{4}\). This approach to branching can also be applied recursively, i.e., branches can in turn have sub-branches.

The primary drawback of this solution is the recursive “backstepping” to the origin branch during queries. For deeply nested branches, this process introduces a considerable performance overhead, as multiple B\(^{+}\)-Trees (one per branch) need to be opened and queried to answer a single request. Backstepping happens more often for branches that contain only few changes, as this increases the chance that the scan of a get request reaches the initial column of the matrix without encountering an occupied cell. The operation whose performance is affected most by branching is the keyset operation (and all other operations that rely on it), as it requires a scan of every row, potentially leading to many backstepping calls.
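The backstepping logic can be sketched as a recursive look-up. In this hypothetical sketch, each branch keeps only its own rows and, when its row yields no cell up to the requested timestamp, delegates to its origin branch at the branching timestamp (or at the requested timestamp, if that is earlier). The class, its constructor and the `master()` factory are our own inventions; ChronoDB itself works with one B\(^{+}\)-Tree per branch.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch of lightweight branches with backstepping; not ChronoDB code. */
class Branch {
    private final Branch origin;            // null for the master branch
    private final long branchingTimestamp;  // ignored for the master branch
    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

    Branch(Branch origin, long branchingTimestamp) {
        this.origin = origin;
        this.branchingTimestamp = branchingTimestamp;
    }

    static Branch master() {
        return new Branch(null, -1);
    }

    /** A child branch only stores changes made after its branching timestamp. */
    void put(long t, String key, String value) {
        if (origin != null && t <= branchingTimestamp) {
            throw new IllegalArgumentException("write must be after the branching timestamp");
        }
        rows.computeIfAbsent(key, k -> new TreeMap<>()).put(t, value);
    }

    String get(long t, String key) {
        TreeMap<Long, String> row = rows.get(key);
        Map.Entry<Long, String> cell = row == null ? null : row.floorEntry(t);
        if (cell != null) {
            return cell.getValue(); // null here means the key was deleted on this branch
        }
        if (origin == null) {
            return null; // master has no origin: the key did not exist up to t
        }
        // reached the first column of this branch: continue on the origin branch
        return origin.get(Math.min(t, branchingTimestamp), key);
    }
}
```

Reproducing Fig. 7, `master` receives \(c_{1}\) at 1 and \(c_{4}\) at 4, `branchA` is created at 3, and `branchA.get(4, "c")` backsteps to master at timestamp 3 and returns \(c_{1}\).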

4.2.3 Caching

A disk access is always slow compared to an in-memory operation, even on a modern solid state drive (SSD). For that reason, nearly all database systems cache recent query results in main memory for later reuse. ChronoDB is no exception, but its temporal aspects demand a different caching approach than in regular database systems, because multiple transactions can simultaneously query the state of the stored data at different timestamps. Due to the way we constructed the Temporal Data Matrix, it is very likely that a given key does not change at every timestamp, i.e., its value remains stable over periods spanning several timestamps. We can therefore serve queries at many different timestamps from the same cached information by exploiting these periods. For the caching algorithm, we adapt some of the ideas from the work of Ramaswamy [57] to in-memory processing and caching idioms.

Figure 8 displays an example of our temporal caching approach, which we call Mosaic. When the value for a temporal key is requested and a cache miss occurs, we retrieve the value together with its validity range (indicated by a gray background in the figure) from the persistent store, and add the range together with its value to the cache. Validity ranges start at the timestamp at which a key-value pair was modified (inclusive) and end at the timestamp at which the next modification of that pair occurred (exclusive). For each key, the cache manages a list of time ranges called a cache row, and each range is associated with the value of the key in this period. As these periods never overlap, we can sort them in descending order, assuming that more recent entries are requested more frequently and should therefore be found first. A cache look-up first identifies the row by the key string and then performs a linear search through the cached periods. We have a cache hit if a period containing the requested timestamp is found. When data is written to the underlying store, we need to perform a write-through in our cache, because a validity range with an open-ended upper bound may need to be shortened when a new value for its key is inserted. The write-through operation is fast: it only needs to check whether the first validity range in the cache row of the given key is open-ended, as all other entries are always closed ranges. All entries in the cache (regardless of the row they belong to) share a common least-recently-used registry, which allows for fast eviction of the least recently read entries.

In the example shown in Fig. 8, retrieving the value of key d at timestamp 0 adds the validity range [0; 1) with value v0 to the cache row. This is the worst case, as the validity range contains only a single timestamp and can consequently answer queries only at that particular timestamp. Retrieving the same key at any timestamp from 1 through 4 yields a cache entry with the validity range [1; 5) and value v1, which answers all requests on key d from timestamp 1 through 4. Finally, retrieving key d at a timestamp greater than or equal to 5 produces an open-ended validity range \([5;\infty )\) with value v2, which can answer all requests on key d with a timestamp of 5 or later, assuming that the non-depicted columns are empty. If we were to insert the key-value pair \(\langle d, v3\rangle \) at timestamp 10, the write-through operation would shorten the open-ended range to [5; 10) and add a cache entry for the range \([10;\infty )\) with value v3.
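The following hypothetical sketch shows the core of a Mosaic-style cache row: non-overlapping \([from; to)\) ranges kept in descending order, a linear look-up, and the write-through that closes the open-ended range. It simplifies under our own assumptions: `Long.MAX_VALUE` represents \(\infty\), fetching from the store after a miss is left to the caller, the shared least-recently-used eviction is omitted, and it uses a Java record (Java 16 or later).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of a Mosaic-style temporal cache (LRU eviction omitted). */
class MosaicCache {
    static final long INFINITY = Long.MAX_VALUE;

    /** A cached value together with its validity range [from, to). */
    record Range(long from, long to, String value) {
        boolean contains(long t) {
            return from <= t && t < to;
        }
    }

    // cache rows: key -> ranges sorted by descending lower bound (newest first)
    private final Map<String, List<Range>> rows = new HashMap<>();

    /** Linear search through the cache row; returns null on a cache miss. */
    Range lookup(String key, long t) {
        for (Range range : rows.getOrDefault(key, List.of())) {
            if (range.contains(t)) {
                return range;
            }
        }
        return null;
    }

    /** Adds a range fetched from the store after a miss, keeping descending order. */
    void add(String key, Range range) {
        List<Range> row = rows.computeIfAbsent(key, k -> new ArrayList<>());
        int i = 0;
        while (i < row.size() && row.get(i).from() > range.from()) {
            i++;
        }
        row.add(i, range);
    }

    /** Write-through: shorten the open-ended first range (if any) and cache the new value. */
    void writeThrough(String key, long t, String value) {
        List<Range> row = rows.computeIfAbsent(key, k -> new ArrayList<>());
        if (!row.isEmpty() && row.get(0).to() == INFINITY) {
            Range open = row.get(0);
            row.set(0, new Range(open.from(), t, open.value()));
        }
        row.add(0, new Range(t, INFINITY, value));
    }
}
```

Loading the three ranges of key d from Fig. 8 and then calling `writeThrough("d", 10, "v3")` leaves the row as [10; ∞), [5; 10), [1; 5), [0; 1), matching the example above.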

4.2.4 Incremental commits

Database vendors often provide specialized mechanisms to batch-insert large amounts of data, which achieve higher performance than regular transactions. ChronoDB provides a similar mechanism, with the additional challenge of keeping versioning considerations in mind. Even when inserting large amounts of data into ChronoDB, we want the history to remain clean, i.e., it should not contain intermediate states in which only a portion of the overall data was inserted. We therefore need a way to conserve RAM by writing incoming data to disk while maintaining a clean history. For this purpose, we introduced the concept of incremental commits in ChronoDB. This mechanism allows users to mass-insert (or mass-update) data by splitting it into smaller batches while maintaining a clean history and all ACID properties for the executing transaction.

Figure 9 shows how incremental commits work in ChronoDB. The process starts with a regular transaction inserting data into the database before calling commitIncremental(). This writes the first batch (timestamp 2 in Fig. 9) into the database and releases it from RAM. However, the now timestamp is not advanced yet, and other transactions cannot read these new entries, because there is still data left to insert. We proceed with the next batches of data, calling commitIncremental() after each one. After the last batch has been inserted, we conclude the process with a call to commit(). This merges all of our changes into one timestamp on disk; for each key, only its last change is kept. In the end, the timestamps after the initial incremental commit (exclusive) up to the final commit (inclusive) contain no changes (as shown for timestamps 3 and 4 in Fig. 9). With the final commit, we also advance the now timestamp of the matrix and allow all other transactions to access the newly inserted data. By delaying this step until the end of the operation, we retain the possibility of rolling back our changes on disk (for example, if the process fails) without violating the ACID properties for other transactions. Also, if data from an incomplete incremental commit process is present on disk at database start-up (which occurs when the database was shut down unexpectedly during such a process), these changes can be rolled back as well, which gives incremental commit processes “all or nothing” semantics.
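The visibility rules of incremental commits can be sketched as follows. This hypothetical, purely in-memory model separates three states: the transaction buffer ("RAM"), flushed batches that are persisted but not yet visible, and committed versions. It deliberately simplifies the on-disk layout of Fig. 9 by storing the merged result directly at the final commit timestamp; it only models the "last change per key wins" merge, the delayed advance of now, and the all-or-nothing rollback. All names are our own.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch of incremental commit visibility; not ChronoDB code. */
class IncrementalStore {
    private final TreeMap<Long, Map<String, String>> commits = new TreeMap<>(); // visible versions
    private final Map<String, String> flushed = new HashMap<>(); // persisted batches, not yet visible
    private final Map<String, String> buffer = new HashMap<>();  // current batch held in RAM
    private long now = 0;

    void put(String key, String value) {
        buffer.put(key, value);
    }

    /** Flushes the current batch out of RAM; later changes to a key overwrite earlier ones. */
    void commitIncremental() {
        flushed.putAll(buffer);
        buffer.clear();
    }

    /** Merges all batches into a single new timestamp and only then advances now. */
    void commit(long timestamp) {
        if (timestamp <= now) {
            throw new IllegalArgumentException("commit timestamp must be after now");
        }
        commitIncremental();
        commits.put(timestamp, new HashMap<>(flushed));
        flushed.clear();
        now = timestamp;
    }

    /** Discards all batches of an unfinished process ("all or nothing"). */
    void rollback() {
        flushed.clear();
        buffer.clear();
    }

    long now() {
        return now;
    }

    /** Readers never see flushed batches, only committed versions up to now. */
    String read(String key, long timestamp) {
        for (Map<String, String> changes
                : commits.headMap(Math.min(timestamp, now), true).descendingMap().values()) {
            if (changes.containsKey(key)) {
                return changes.get(key);
            }
        }
        return null;
    }
}
```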

A disadvantage of this solution is that there can be only one incremental commit process on any data matrix at a time. This process requires exclusive write access to the matrix, blocking all other (regular and incremental) commits until it is complete. However, since we only modify the head revisions and now does not change until the process ends, concurrent transactions can safely perform read operations while an incremental commit process is taking place. Overall, incremental commits offer a way to insert large quantities of data into a single timestamp while conserving RAM and without compromising ACID safety, at the cost of requiring exclusive write access to the database for the entire duration of the process. These properties make them well suited for data imports from external sources, or for large-scale changes that affect most of the key-value pairs stored in a matrix. This will become an important factor when we consider global model evolutions in the model repository layer [R3]. We envision incremental commits being employed for administrative tasks that do not recur regularly, or for the initial filling of an empty database.

4.2.5 Supporting long histories

In order to create a sustainable versioning mechanism, we need to ensure that our system can support a virtually unlimited number of versions [R2, R5]. Ideally, we should also not store all data in a single file, and old files should remain untouched when new data is inserted (which is important for file-based backups). For these reasons, we must not constrain our solution to a single B\(^{+}\)-Tree. Since past revisions are immutable in our approach, we decided to split the data along the time axis, resulting in a series of B\(^{+}\)-Trees. Each tree is contained in one file, which we refer to as a chunk file. An accompanying meta file specifies the time range covered by the chunk file. The usual policy of ChronoDB is to share unchanged data as much as possible. Here, however, we deliberately introduce data duplication to ensure that the initial version in each chunk is complete. This allows us to answer get queries within the boundaries of a single chunk, without having to navigate to the previous one. As each access to another chunk incurs CPU and I/O overhead, we should avoid accessing more than one chunk to answer a basic query. Without duplication, accessing a key that has not changed for a long time could require a linear search through the chunk chain, which contradicts the requirement for scalability [R5].

The temporal rollover process by example [26]

The algorithm for the “rollover” procedure outlined in Fig. 10 works as follows.

In Line 1 of Algorithm 1, we fetch the latest timestamp where a commit has occurred in our current head revision chunk. Next, we calculate the full head version of the data in Line 2. With the preparation steps complete, we set the end of the validity time range to the last commit timestamp in Line 3. This only affects the metadata, not the chunk itself. We now create a new, empty chunk in Line 4, and set the start of its validity range to the split timestamp plus one (as chunk validity ranges must not overlap). The upper bound of the new validity range is infinity. In Line 5 we copy the head version of the data into the new chunk. Finally, we update our internal look-up table in Line 6. This entire procedure only modifies the last chunk and does not touch older chunks, as indicated by the grayed-out boxes in Fig. 10.
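The six steps of Algorithm 1 can be mirrored in a compact Java sketch in which the numbered comments refer to the lines described above. The chunk layout, the field names, and the choice to store the copied head version at the new chunk's lower bound are our own assumptions for illustration; chunks are kept in memory rather than in chunk and meta files, and the `TreeMap` keyed by lower bounds plays the role of the time range look-up table.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch of chunked storage with a temporal rollover; not ChronoDB code. */
class ChunkedStore {
    static final long INFINITY = Long.MAX_VALUE;

    /** One chunk covers the inclusive validity range [from, to]. */
    static final class Chunk {
        final long from;
        long to; // only this metadata changes when the chunk is closed
        long lastCommit = -1;
        private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();

        Chunk(long from, long to) {
            this.from = from;
            this.to = to;
        }

        void put(long t, String key, String value) {
            rows.computeIfAbsent(key, k -> new TreeMap<>()).put(t, value);
            lastCommit = Math.max(lastCommit, t);
        }

        String get(long t, String key) {
            TreeMap<Long, String> row = rows.get(key);
            Map.Entry<Long, String> cell = row == null ? null : row.floorEntry(t);
            return cell == null ? null : cell.getValue();
        }

        /** Latest value of every key in this chunk, skipping keys whose last change is a deletion. */
        Map<String, String> headVersion() {
            Map<String, String> head = new HashMap<>();
            rows.forEach((key, row) -> {
                String latest = row.lastEntry().getValue();
                if (latest != null) {
                    head.put(key, latest);
                }
            });
            return head;
        }
    }

    // time range look-up: lower bound of the validity range -> chunk
    private final TreeMap<Long, Chunk> lookup = new TreeMap<>();
    private Chunk headChunk = new Chunk(0, INFINITY);

    ChunkedStore() {
        lookup.put(headChunk.from, headChunk);
    }

    void put(long t, String key, String value) {
        headChunk.put(t, key, value);
    }

    Chunk chunkFor(long t) {
        return lookup.floorEntry(t).getValue(); // greatest lower bound <= t
    }

    String get(long t, String key) {
        return chunkFor(t).get(t, key);
    }

    void rollover() {
        long split = headChunk.lastCommit;                  // Line 1: last commit in the head chunk
        if (split < headChunk.from) {
            throw new IllegalStateException("head chunk has no commits");
        }
        Map<String, String> head = headChunk.headVersion(); // Line 2: full head version
        headChunk.to = split;                               // Line 3: close the old range (metadata only)
        Chunk next = new Chunk(split + 1, INFINITY);        // Line 4: new, empty chunk
        head.forEach((key, value) -> next.put(split + 1, key, value)); // Line 5: copy head version
        lookup.put(next.from, next);                        // Line 6: update the look-up table
        headChunk = next;
    }
}
```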

The look-up table that is being updated in Algorithm 1 is a basic tree map which is created at start-up by reading the metadata files. For each encountered chunk, it contains an entry that maps its validity period to its chunk file. The periods are sorted in ascending order by their lower bounds, which is sufficient because overlaps in the validity ranges are not permitted. For example, after the rollover depicted in Fig. 10, the time range look-up would contain the entries shown in Table 4.

We specifically employ a tree map for the look-up shown in Table 4 because its purpose is to quickly identify the correct chunk for an incoming request. Incoming requests carry a timestamp, which may coincide exactly with a split or lie anywhere between split timestamps; a tree map sorted by lower bounds resolves such a request by finding the entry with the greatest lower bound that does not exceed the request timestamp. As this look-up is triggered very often in practice and the time range look-up map may grow quite large over time, we are considering implementing a cache based on the least-recently-used principle that contains the concrete resolved timestamp-to-chunk mappings, in order to cover the common case in which the same timestamp is requested several times in quick succession.

With this algorithm, we can support a virtually unlimited number of versions [R6], because each new chunk initially contains only the head revision of its predecessor, and we are always free to roll over once more should the history within a chunk become too large. Furthermore, we never write to old chunk files again, because our history is immutable. In addition, thanks to our time range look-up, access to any chunk has a complexity close to \(\mathcal {O}(\log n)\), where n is the number of chunks.

This algorithm is a trade-off between disk space and scalability: we introduce data duplication on disk in order to support large histories. The key question that remains is when the rollover should happen. We require a metric that indicates the amount of data in the current chunk that belongs to the history (as opposed to the head revision) and can thus be archived by performing a rollover. We introduce the Head–History–Ratio (HHR) as the primary metric for this task, which we define as follows:

\[HHR(e, h) := \begin{cases} \infty & \text{if } e = h \\ \frac{h}{e - h} & \text{otherwise} \end{cases}\]

Here, e is the total number of entries in the chunk, and h is the number of entries that belong to the head revision (excluding entries that represent deletions). By dividing the number of entries in the head revision by the number of entries that belong to the history (\(e - h\)), we obtain a proportional measure of how much history the chunk contains, which works for datasets of any size. It indicates how many entries will be “archived” when a rollover is executed. When new commits add new elements to the head revision, this value increases. When a commit updates or deletes existing elements of the head revision, this value decreases. We can employ a threshold as a lower bound on this value to determine when a rollover is necessary. For example, we may choose to perform a rollover when a chunk has an HHR value of 0.2 or less. This threshold works independently of the absolute size of the head revision. The only case in which the HHR threshold is never reached is when exclusively new (i.e., never seen before) keys are added, steadily increasing the size of the head revision. However, in this case we would gain nothing by performing a rollover, as we would have to duplicate all of those entries into the new chunk to produce a complete initial version. The HHR metric therefore captures this case correctly by never reaching the threshold, and thus never indicating the need for a rollover.
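As a small worked example of the HHR criterion, the following hypothetical helper computes the ratio of head entries h to history entries \(e - h\) and compares it against a threshold such as the 0.2 mentioned above. Treating \(e = h\) (a chunk without any history) as infinity reflects the statement that this case never reaches the threshold; the class and method names are our own.

```java
/** Hypothetical helper for the Head–History–Ratio rollover criterion. */
final class HeadHistoryRatio {
    private HeadHistoryRatio() {
    }

    /**
     * @param e total number of entries in the chunk
     * @param h number of entries in the head revision (deletions excluded)
     */
    static double hhr(long e, long h) {
        if (h < 0 || h > e) {
            throw new IllegalArgumentException("require 0 <= h <= e");
        }
        if (e == h) {
            return Double.POSITIVE_INFINITY; // no history at all: a rollover would gain nothing
        }
        return (double) h / (e - h);
    }

    /** A rollover is suggested once the ratio drops to the threshold or below. */
    static boolean needsRollover(long e, long h, double threshold) {
        return hhr(e, h) <= threshold;
    }
}
```

For a chunk with e = 12 entries of which h = 2 belong to the head revision, the ratio is 2/10 = 0.2, so a threshold of 0.2 triggers a rollover; with h = 6 the ratio is 1 and no rollover is suggested.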

4.2.6 Secondary indexing

There are two kinds of secondary indices in ChronoDB: indices managed by ChronoDB itself (“system indices”) and user-defined indices. As indicated in Table 3, the primary index of each matrix in ChronoDB has its keys ordered first by user key and then by version. To allow for efficient time range queries, we maintain a secondary index that is ordered first by timestamp and then by user key. Further system indices include an index for commit metadata (e.g., commit messages) that maps from timestamp to metadata, as well as auxiliary indices for branching (branch name to metadata). User-defined indices [R5] help to speed up queries that request entries based on their contents (rather than their primary key). An example of such a query is “find all persons whose first name is ‘Eva’”. Since ChronoDB stores arbitrary Java objects, we require a method to extract the property value to be indexed from each object. This is accomplished by the ChronoIndexer interface, which defines the index(Object) method that, given an input object, returns the value to put on the secondary index. Each indexer is associated with a name, which is later used in queries to refer to the index. The associated query language supports a number of string matching techniques (equals, contains, starts with, regular expression, ...), numeric matching (greater than, less than or equal to, ...) as well as Boolean operators (and, or, not). The query engine also performs optimizations such as double negation elimination. Overall, this query language is certainly less expressive than languages such as SQL. However, since ChronoDB is intended to be used as a storage engine embedded in a database frontend (e.g., a graph database), these queries are only used internally for index scans, while more sophisticated expressions are handled by the database frontend. Therefore, this minimalistic Java-embedded DSL has proven to be sufficient.

An essential drawback of this query mechanism is that the set of properties available for querying is determined by the available secondary indices. In other words, if there is no secondary index for a property, that property cannot be used for filtering. This is because ChronoDB is agnostic of the Java objects it stores: in the absence of a ChronoIndexer, it has no way of extracting the value of an arbitrary requested property from an object. Database systems commonly face the same trade-off: without a matching secondary index, a query requires a linear scan of the entire data store. When a database frontend is used, this distinction is blurred, and the difference between an index query and a non-index query is only noticeable in how long it takes to produce the result set.
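To illustrate why only indexed properties are queryable, the following sketch uses a hypothetical single-method `ValueIndexer` interface modeled on the `index(Object)` contract described above; it is not the actual ChronoIndexer API, and `Person`, `FirstNameIndexer` and `IndexedStore` are invented for this example. The store maintains one value-to-keys map per registered indexer and rejects queries on index names without an indexer. It assumes Java 16 or later (records and pattern matching for `instanceof`).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical stand-in for the indexer contract described in the text. */
interface ValueIndexer {
    /** Returns the value to put on the secondary index, or null if the object is not indexable. */
    Object index(Object object);
}

/** Example domain object stored as an opaque value. */
record Person(String firstName, String lastName) {}

/** Extracts the first name of Person objects; all other objects are skipped. */
class FirstNameIndexer implements ValueIndexer {
    @Override
    public Object index(Object object) {
        return object instanceof Person p ? p.firstName() : null;
    }
}

/** Minimal store: only properties with a registered indexer can be queried. */
class IndexedStore {
    private final Map<String, Object> data = new HashMap<>();
    private final Map<String, ValueIndexer> indexers = new HashMap<>();
    // index name -> indexed value -> primary keys
    private final Map<String, Map<Object, Set<String>>> indices = new HashMap<>();

    /** Assumes indexers are registered before any data is stored. */
    void addIndexer(String name, ValueIndexer indexer) {
        indexers.put(name, indexer);
        indices.put(name, new HashMap<>());
    }

    void put(String key, Object object) {
        Object previous = data.put(key, object);
        indexers.forEach((name, indexer) -> {
            Map<Object, Set<String>> index = indices.get(name);
            if (previous != null) { // remove the key from the bucket of its old value
                Set<String> oldBucket = index.get(indexer.index(previous));
                if (oldBucket != null) {
                    oldBucket.remove(key);
                }
            }
            Object newValue = indexer.index(object);
            if (newValue != null) {
                index.computeIfAbsent(newValue, v -> new TreeSet<>()).add(key);
            }
        });
    }

    /** Equality query on a named index; without an indexer the store cannot look inside its objects. */
    List<String> findEquals(String indexName, Object expected) {
        Map<Object, Set<String>> index = indices.get(indexName);
        if (index == null) {
            throw new IllegalArgumentException("no secondary index named " + indexName);
        }
        return new ArrayList<>(index.getOrDefault(expected, Set.of()));
    }
}
```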

In contrast to the primary index, entries in the secondary index are allowed to have non-unique keys. For example, if we index the “name” attribute, then there may be more than one entry where the name is set to “John”. We therefore require a different approach than the temporal data matrices employed for the primary index. Inspired by the work of Ramaswamy et al. [57], we make use of explicit time windows. Non-unique indices in versioned contexts are special cases of the general interval stabbing problem [31].

Table 5 shows an example of a secondary index. Since a single table holds the entries of all indices, the “index” column records the index to which each row belongs. The branch, keyspace and key columns describe the location of the entry in the primary index. The “value” column contains the value that was extracted by the ChronoIndexer, and “From” and “To” delimit the time window in which a given row is valid. Any entry newly inserted into this table initially has its “To” value set to infinity (i.e., it is valid for an unlimited amount of time). When the corresponding entry in the primary index changes, the “To” value is updated accordingly. All other columns are effectively immutable.

In the concrete example shown in Table 5, we insert three key-value pairs (with keys e1, e2 and e3) at timestamp 1234. Our indexer extracts the value for the “name” index, which is “john” for all three entries, and the “To” column is set to infinity for all of them. Querying the secondary index at that timestamp for all entries where “name” equals “john” therefore returns the set {e1, e2, e3}. At timestamp 5678, we update the value associated with key e2 such that the indexer now yields “jack”. We therefore terminate the previous entry (row #2) by setting its “To” value to 5678 (upper bounds are exclusive), and insert a new entry that starts at 5678, has the value “jack” and an initial “To” value of infinity. Finally, we delete the key e3 from our primary index at timestamp 7890. In the secondary index, this means that we limit the “To” value of row #3 to 7890. Since the deletion produces no new value, no additional entry needs to be added.
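The time-window bookkeeping of Table 5 can be sketched with a plain list of rows. In this hypothetical sketch, writing a new value for an entry terminates its open row at the write timestamp (exclusive upper bound), a `null` value models a deletion in the primary index, and a query keeps only rows whose window contains the query timestamp. The traversal of origin branches is not modeled, and the branch and keyspace names used in the usage note are invented.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;
import java.util.function.Predicate;

/** Hypothetical sketch of a time-windowed secondary index table; not ChronoDB code. */
class SecondaryIndexTable {
    static final long INFINITY = Long.MAX_VALUE;

    static final class Row {
        final String index, branch, keyspace, key, value;
        final long from;
        long to = INFINITY; // exclusive upper bound, the only mutable column

        Row(String index, String branch, String keyspace, String key, String value, long from) {
            this.index = index;
            this.branch = branch;
            this.keyspace = keyspace;
            this.key = key;
            this.value = value;
            this.from = from;
        }

        boolean locatedAt(String index, String branch, String keyspace) {
            return this.index.equals(index) && this.branch.equals(branch) && this.keyspace.equals(keyspace);
        }
    }

    private final List<Row> rows = new ArrayList<>();

    /** Records the value extracted at timestamp t; value == null models a deletion. */
    void write(String index, String branch, String keyspace, String key, String value, long t) {
        for (Row row : rows) {
            // terminate the currently open row of this entry, if there is one
            if (row.to == INFINITY && row.locatedAt(index, branch, keyspace) && row.key.equals(key)) {
                row.to = t;
            }
        }
        if (value != null) {
            rows.add(new Row(index, branch, keyspace, key, value, t));
        }
    }

    /** Keys whose indexed value satisfies the condition at timestamp t, i.e. from <= t < to. */
    Set<String> query(String index, String branch, String keyspace, Predicate<String> condition, long t) {
        Set<String> keys = new TreeSet<>();
        for (Row row : rows) {
            if (row.locatedAt(index, branch, keyspace)
                    && row.from <= t && t < row.to && condition.test(row.value)) {
                keys.add(row.key);
            }
        }
        return keys;
    }
}
```

Replaying the example with a hypothetical keyspace named "default" on branch "master", a query for "john" returns {e1, e2, e3} at 1234, {e1, e3} at 5678 and {e1} at 7890.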

This tabular structure can now be queried using well-known techniques that are also employed by SQL. In a typical query, the branch and the index are fixed, the value is specified as a search string with a condition (e.g., “starts with [jo]”), and the timestamp t at which the query should be evaluated is known. We account for the timestamp by considering only entries whose time window contains t, i.e., entries where

\[\text{From} \le t < \text{To}\]

in addition to the conditions specified for the other columns. Restricting the results to a given branch is more challenging, as we need to traverse the origin branches upwards until we arrive at the master branch, performing one subquery for each branch along the way and merging the intermediate results accordingly.

Transaction control with and without versioning [25]

4.2.7 Transaction control

Consistency and reliability are two major goals of ChronoDB. It offers full ACID transactions with the highest possible read isolation level (serializable, see [38]). Figure 11 shows two sequence diagrams with identical transaction schedules. A database server communicates with an Online Analytical Processing (OLAP) [10] client that owns a long-running transaction (indicated by gray bars). The process involves messages (arrows) that send queries with timestamps, and computation times (blocks labeled “c”) on both machines. A regular Online Transaction Processing (OLTP) client wants to change the data that is being analyzed by the OLAP client.

The left diagram shows what happens in a non-versioned scenario with pessimistic locking. The server needs to lock the relevant contents of the database for the entire duration of the OLAP transaction; otherwise, we risk inconsistencies due to the incoming OLTP update. We therefore need to delay the OLTP client until the OLAP client closes its transaction. Modern databases use optimistic locking and data duplication techniques (e.g., MVCC [6]) to mitigate this issue, but the core problem remains: the server needs to dedicate resources (e.g., locks, RAM) to client transactions over their entire lifetime.

With versioning, the OLAP client sends the query together with the request timestamp to the server. This is a self-contained request: no additional information or resources are needed on the server, and yet the OLAP client achieves full isolation over the entire duration of its transaction, because it always requests the same timestamp. While the OLAP client processes the results, the server can safely allow the modifications of the OLTP client, because any modification is guaranteed to only append a new version to the history. The data at the timestamp on which the OLAP client is working is immutable.
Client-side transactions act as containers for transient change sets and metadata, most notably the timestamp and branch name on which the transaction is working. Security considerations aside, transactions can be created (and disposed of) without involving the server. An important problem that remains is how to handle situations in which two concurrent OLTP transactions attempt to change the same key-value pair. ChronoDB lets users select from several conflict handling modes (e.g., reject, last writer wins) or provide a custom conflict resolver implementation.
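The following hypothetical sketch illustrates both points: a transaction is only a read timestamp plus a transient change set, reads are served from the snapshot at that timestamp, and a commit appends a new version instead of modifying existing ones. The two conflict modes mirror the reject and last-writer-wins options named above. The enum, the class names, and the use of a logical counter instead of real timestamps are our own assumptions, and the sketch is single-threaded (a real store would need synchronization).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical single-threaded sketch of timestamp-bound transactions; not ChronoDB code. */
class VersionedStore {
    enum ConflictMode { REJECT, LAST_WRITER_WINS }

    private final Map<String, TreeMap<Long, String>> rows = new HashMap<>();
    private long now = 0; // logical commit counter used as timestamp

    /** Client-side transaction: a read timestamp plus a transient change set. */
    final class Transaction {
        private final long timestamp;
        private final Map<String, String> changes = new HashMap<>();

        private Transaction(long timestamp) {
            this.timestamp = timestamp;
        }

        /** Reads see the transaction's own changes, otherwise the immutable snapshot at its timestamp. */
        String get(String key) {
            if (changes.containsKey(key)) {
                return changes.get(key);
            }
            TreeMap<Long, String> row = rows.get(key);
            Map.Entry<Long, String> cell = row == null ? null : row.floorEntry(timestamp);
            return cell == null ? null : cell.getValue();
        }

        void put(String key, String value) {
            changes.put(key, value);
        }

        /** Appends the change set as a new version; existing versions are never modified. */
        void commit(ConflictMode mode) {
            if (mode == ConflictMode.REJECT) {
                for (String key : changes.keySet()) {
                    TreeMap<Long, String> row = rows.get(key);
                    // conflict: another transaction changed the key after our snapshot
                    if (row != null && row.lastKey() > timestamp) {
                        throw new IllegalStateException("conflict on key " + key);
                    }
                }
            }
            long commitTimestamp = now + 1;
            changes.forEach((key, value) ->
                    rows.computeIfAbsent(key, k -> new TreeMap<>()).put(commitTimestamp, value));
            now = commitTimestamp;
            changes.clear();
        }
    }

    Transaction begin() {
        return new Transaction(now);
    }
}
```

In the scenario of Fig. 11, an OLAP transaction opened before an OLTP commit keeps reading the old value for its entire lifetime, while a newly opened transaction sees the update.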

5 Solution part II: ChronoGraph

ChronoGraph is our versioned graph database, which is built on top of ChronoDB. ChronoGraph implements the Apache TinkerPop standard, the de-facto standard interface for graph databases. We first provide a high-level overview of TinkerPop and then focus on the concepts of ChronoGraph itself.

ChronoGraph architecture [27]

5.1 Apache TinkerPop

The TinkerPop framework is the de-facto standard interface between applications and graph databases. Its main purpose is to allow application developers to replace one graph database implementation with another without altering the application source code that accesses the database. The TinkerPop standard is designed in a modular fashion. Its core module is the property graph API [58], which specifies the Java interfaces for vertices, edges, properties and other structural elements.

In a property graph, each vertex and edge can have properties, which are expressed as key–value pairs. According to the standard, each vertex must have an identifier that is unique among all vertices, and the same holds for edges. In practice, database vendors often recommend using identifiers that are globally unique in the database. Furthermore, in addition to the unique ID and the user-defined properties, each vertex and edge has a label, which is defined to be a single-valued string intended for categorization purposes. All user-defined properties are untyped by definition, i.e., no restriction is imposed on the values a user-defined property may have. However, some graph database vendors, such as Titan DB and OrientDB, offer the possibility to define a schema that is evaluated at runtime. The only unsupported value for a user-defined property is null. Instead of assigning null to a property, it is recommended to remove the property from the target graph element entirely.

Another module in the TinkerPop standard is the graph query language Gremlin. In contrast to the property graph API, which is only a specification, Gremlin comes with a default implementation that is built upon the property graph API interfaces. This implementation also includes a number of built-in query optimization strategies. Other modules include a standard test suite for TinkerPop vendors, and a generic server framework for graph databases called Gremlin Server.

5.2 ChronoGraph architecture

Our open-source project ChronoGraph provides a fully TinkerPop-compliant graph database implementation with additional versioning capabilities. In order to achieve this goal, we employ a layered architecture as outlined in Fig. 12a. In the remainder of this section, we provide an overview of this architecture in a bottom-up fashion.

The bottom layer of the architecture is a versioned key-value store, i.e., a system capable of working with time–key–value tuples as opposed to plain key–value pairs in regular key-value stores. For the implementation of ChronoGraph, we use ChronoDB, as introduced in Sect. 4.

ChronoGraph itself consists of three major components. The first component is the graph structure management. It is responsible for managing the individual vertices and edges that form the graph, as well as their referential integrity [R9]. As the underlying storage mechanism is a key-value store, the graph structure management layer also partitions the graph into key-value pairs and converts between the two formats. We present the technical details of this format in Sect. 5.3.

The second component is the transaction management. The key concept here is that each graph transaction is associated with a timestamp on which it operates. Inside a transaction, any read request for graph content is executed on the underlying storage with the transaction timestamp. ChronoGraph supports full ACID transactions [R6] with snapshot isolation, a very strong isolation level that is closely related to, but not identical with, the “serializable” level defined in the SQL Standard [38]. The underlying versioning system acts as an enabling technology for this level of isolation, because any given version of the graph is effectively immutable once it has been written to disk. All mutating operations are buffered in the transaction until it is committed, which in turn produces a new version of the graph with a new timestamp associated with it. Due to this mode of operation, we not only achieve repeatable reads, but also provide effective protection from phantom reads, which are a common problem in concurrent graph computing.

The third and final component is the query processor, which accepts and executes Gremlin queries on the graph system. As each graph transaction is bound to a branch and a timestamp, the query language (Gremlin) remains agnostic of both, which allows any query to be executed on any desired timestamp and branch [R8].
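The transaction semantics described above can be sketched as follows, assuming a toy in-memory store with integer commit timestamps. The names (`VersionedStore`, `Transaction`, `open`, `commit`) are hypothetical, and the sketch deliberately omits conflict detection and branches; it only shows reads bound to a fixed timestamp, buffered writes, and commits that append a new version on top of the head.

```python
import bisect
from typing import Any, Dict, List, Optional, Tuple


class VersionedStore:
    """Toy versioned store: key -> ascending list of (commit timestamp, value)."""

    def __init__(self) -> None:
        self.history: Dict[str, List[Tuple[int, Any]]] = {}
        self.head = 0  # timestamp of the latest commit (0 = empty store)

    def read(self, key: str, timestamp: int) -> Optional[Any]:
        """Return the value of `key` as of `timestamp`, or None if it did not exist yet."""
        entries = self.history.get(key, [])
        i = bisect.bisect_right([t for t, _ in entries], timestamp)
        return entries[i - 1][1] if i > 0 else None

    def open(self, timestamp: Optional[int] = None) -> "Transaction":
        """Open a transaction on `timestamp`, defaulting to the head revision."""
        return Transaction(self, self.head if timestamp is None else timestamp)


class Transaction:
    def __init__(self, store: VersionedStore, timestamp: int) -> None:
        self.store = store
        self.timestamp = timestamp          # fixed for the lifetime of the transaction
        self.changes: Dict[str, Any] = {}   # buffered writes, invisible to others

    def get(self, key: str) -> Optional[Any]:
        # Own uncommitted writes first, then the immutable snapshot at self.timestamp.
        if key in self.changes:
            return self.changes[key]
        return self.store.read(key, self.timestamp)

    def put(self, key: str, value: Any) -> None:
        self.changes[key] = value

    def commit(self) -> int:
        """Append all buffered writes as a new version on top of head (no conflict checks)."""
        new_ts = self.store.head + 1
        for key, value in self.changes.items():
            self.store.history.setdefault(key, []).append((new_ts, value))
        self.store.head = new_ts
        self.changes.clear()
        return new_ts
```

Because reads go to an immutable snapshot, a transaction opened before another transaction commits keeps seeing the old value (a repeatable read), while a transaction opened afterwards sees the new one.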

The application communicates with ChronoGraph via the regular TinkerPop API, extended with versioning-specific methods. The versioning itself is transparent to the application, to the extent that ChronoGraph can be used as a drop-in replacement for any other TinkerPop 3.x compliant implementation. The application can make use of the versioning capabilities via the additional methods, but their usage is entirely optional and not required during regular operation that does not involve history analysis.

5.3 Data layout

In order to store graph data in our Temporal Key–Value Store, we first need to disassemble the graph into partitions that can be serialized as values and be addressed by keys. Then, we need to persist these pairs in the store. We will first discuss how we disassemble the graph, followed by an overview of the concrete key–value format and how versioning affects this process.

5.3.1 Partitioning: the star graph format

Like many other popular graph databases (e.g., Titan DB), ChronoGraph relies on star graph partitioning in order to disassemble the graph into manageable pieces.

Figure 13 shows an example of a star graph. A star graph is a subset of the elements of a full graph that is calculated given an origin vertex, in this case v0. The star graph contains all properties of the vertex, including the id and the label, as well as all incoming and outgoing edges (including their label, id and properties). All adjacent vertices of the origin vertex are represented in the star graph by their ids. Their attributes and remaining edges (indicated by dashed lines in Fig. 13) are not contained in the star graph of v0. This partitioning was chosen due to its ability to reconstruct the entire graph from disk without duplicating entire vertices or attribute values. Furthermore, it is suitable for lazy loading of individual vertices, as only the immediate neighborhood of a vertex needs to be loaded to reconstruct it from disk.
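As an illustration, the following hypothetical helper extracts the star graph of an origin vertex from an in-memory graph: the origin's own id, label and properties, all incident edges with their ids, labels and properties, and the adjacent vertices by id only. The dictionary layout is an assumption made for this sketch, not ChronoGraph's format.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Edge:
    id: str
    label: str
    out_id: str  # id of the vertex the edge starts at
    in_id: str   # id of the vertex the edge points to
    properties: Dict[str, Any] = field(default_factory=dict)


def star_graph(origin: str, vertices: Dict[str, Dict[str, Any]], edges: Dict[str, Edge]) -> Dict[str, Any]:
    """Return the star graph of `origin`.

    Contains the origin's id, label and properties plus every incident edge with
    its id, label and properties. Neighbors appear only as ids ("other_end");
    their properties and their remaining edges are left out.
    """
    v = vertices[origin]

    def describe(e: Edge, other_end: str) -> Dict[str, Any]:
        return {"id": e.id, "label": e.label, "properties": dict(e.properties), "other_end": other_end}

    return {
        "id": origin,
        "label": v["label"],
        "properties": dict(v["properties"]),
        "out_edges": [describe(e, e.in_id) for e in edges.values() if e.out_id == origin],
        "in_edges": [describe(e, e.out_id) for e in edges.values() if e.in_id == origin],
    }
```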

5.3.2 Key–value layout

Starting from a star graph partitioning, we design our key–value layout. Since all graph elements in TinkerPop are mutable by definition and our persistent graph versions have to be immutable, we perform a bijective mapping step before persisting an element. We refer to the persistent, immutable version as a Record, and there is one type of record for each structural element in the TinkerPop API. For example, the mutable Vertex element is mapped to an immutable VertexRecord. A beneficial side-effect of this approach is that we hereby gain control over the persistent format, and can evolve and adapt each side of the mapping individually if needed. Table 6 shows the contents of the most important record types.
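A minimal sketch of the mutable-element-to-immutable-record mapping, using a frozen Python dataclass as the record. The field layout of `VertexRecord` here is an assumption and omits the edge information listed in Table 6; the point is that the record is a snapshot that later mutations of the live element cannot change, and that the mapping can be inverted.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple


@dataclass
class Vertex:
    """Mutable vertex as seen by the application."""
    id: str
    label: str
    properties: Dict[str, Any] = field(default_factory=dict)


@dataclass(frozen=True)
class VertexRecord:
    """Immutable persistent form (simplified, hypothetical field layout)."""
    id: str
    label: str
    properties: Tuple[Tuple[str, Any], ...]


def to_record(vertex: Vertex) -> VertexRecord:
    # Copy into immutable tuples, sorted by key for a canonical form.
    return VertexRecord(vertex.id, vertex.label, tuple(sorted(vertex.properties.items())))


def from_record(record: VertexRecord) -> Vertex:
    # Inverse direction: rebuild a fresh, mutable vertex.
    return Vertex(record.id, record.label, dict(record.properties))
```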

In Table 6, all id and label elements, as well as all PropertyKeys, are of type String. The PropertyValue in the PropertyRecord is assumed to be in byte array form. An arrow in the table indicates that the record contains a mapping, usually implemented with a regular hash map. An element that deserves special attention is the EdgeTargetRecord that does not exist in the TinkerPop API. Traversing from one vertex to another via an edge label is a very common task in a graph query. In a naive mapping, we would traverse from a vertex to an adjacent edge and load it, find the id of the vertex at the other end, and then resolve the target vertex. This involves two steps where we need to resolve an element by ID from disk. However, we cannot store all edge information directly in a VertexRecord, because this would involve duplication of all edge properties on the other-end vertex. We overcome this issue by introducing an additional record type. The EdgeTargetRecord stores the id of the edge and the id of the vertex that resides at the “other end” of the edge. In this way, we can achieve basic vertex-to-vertex traversal in one step. At the same time, we minimize data duplication and can support edge queries (e.g., g.traversal().E() in TinkerPop), since we have the full EdgeRecords as standalone elements. A disadvantage of this solution is the fact that we still need to do two resolution steps for any query that steps from vertex to vertex and has a condition on a property of the edge in between. This trade-off is common for graph databases, and we share it with many others, e.g., Neo4j. We will discuss this with a concrete example in Sect. 5.4.
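The following hypothetical sketch contrasts the two traversal cases, using a store that counts look-ups. Record names follow the text, but their fields are simplified assumptions. A plain vertex-to-vertex hop needs one look-up per vertex thanks to the EdgeTargetRecord, whereas a hop with a condition on an edge property must also resolve the EdgeRecord.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass(frozen=True)
class EdgeTargetRecord:
    edge_id: str       # id of the edge
    other_end_id: str  # id of the vertex at the other end of the edge


@dataclass(frozen=True)
class VertexRecord:
    id: str
    label: str
    # outgoing edges grouped by edge label
    out_edges: Dict[str, Tuple[EdgeTargetRecord, ...]] = field(default_factory=dict)


@dataclass(frozen=True)
class EdgeRecord:
    id: str
    label: str
    properties: Dict[str, Any] = field(default_factory=dict)


class CountingStore:
    """Resolves records by id and counts how many look-ups were needed."""

    def __init__(self, records: Dict[str, Any]) -> None:
        self.records = records
        self.lookups = 0

    def load(self, element_id: str) -> Any:
        self.lookups += 1
        return self.records[element_id]


def out_vertices(store: CountingStore, vertex_id: str, label: str) -> List[VertexRecord]:
    """Vertex-to-vertex hop: the EdgeTargetRecord suffices, no EdgeRecord is loaded."""
    vertex = store.load(vertex_id)
    return [store.load(t.other_end_id) for t in vertex.out_edges.get(label, ())]


def out_vertices_where(store: CountingStore, vertex_id: str, label: str,
                       key: str, value: Any) -> List[VertexRecord]:
    """Hop with a condition on an edge property: each EdgeRecord must be resolved too."""
    vertex = store.load(vertex_id)
    result = []
    for t in vertex.out_edges.get(label, ()):
        edge = store.load(t.edge_id)  # the additional resolution step
        if edge.properties.get(key) == value:
            result.append(store.load(t.other_end_id))
    return result
```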

For each record type, we create a keyspace in the underlying key–value store. We serialize the record elements into a binary sequence. This binary sequence serves as the value for the key–value pairs and the id of the element is used as the corresponding key. The type of record indicates which keyspace to use, completing the mapping to the key–value format. The inverse mapping involves the same steps: given an element ID and type, we resolve the key–value pair from the appropriate keyspace by performing a key look-up using the ID. Then, we deserialize the binary sequence, and apply our bijective element-to-record mapping in the inverse direction. When loading a vertex, the properties of the incoming and outgoing edges will be loaded lazily, because the EdgeTargetRecord does not contain this information and loading edge properties immediately would therefore require an additional look-up. The same logic applies to resolving the other-end vertices of EdgeTargetRecords, allowing for a lazy (and therefore efficient and RAM-conserving) solution.
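The keyspace mapping and lazy loading can be sketched as below. JSON is used as a stand-in for the binary serialization format, and the keyspace names (`"vertex"`, `"edge"`) and the `LazyEdge` class are hypothetical: the record type selects the keyspace, the element id is the key, and edge properties are only deserialized on first access.

```python
import json
from typing import Any, Dict, Optional


class KeyspacedStore:
    """One keyspace per record type; the element id is the key, bytes are the value."""

    def __init__(self) -> None:
        self.keyspaces: Dict[str, Dict[str, bytes]] = {}

    def put(self, record_type: str, element_id: str, record: Dict[str, Any]) -> None:
        # JSON stands in for the real binary serialization format.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        self.keyspaces.setdefault(record_type, {})[element_id] = payload

    def get(self, record_type: str, element_id: str) -> Dict[str, Any]:
        # Inverse mapping: record type -> keyspace, id -> key, then deserialize.
        payload = self.keyspaces[record_type][element_id]
        return json.loads(payload.decode("utf-8"))


class LazyEdge:
    """Edge handle built from an EdgeTargetRecord; its properties load on first access."""

    def __init__(self, store: KeyspacedStore, edge_id: str) -> None:
        self._store = store
        self.id = edge_id
        self._record: Optional[Dict[str, Any]] = None

    @property
    def properties(self) -> Dict[str, Any]:
        if self._record is None:  # first access triggers the extra look-up
            self._record = self._store.get("edge", self.id)
        return self._record["properties"]
```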

Figure 14 shows an example of the translation between the graph format and the key–value store format. In this example, we express the fact “John Doe knows Jane Doe since 1999” as a property graph. Each graph element is transformed into an entry in the key–value store. The example uses a JSON-like syntax for readability; our actual implementation employs a binary serialization format. Note that the presented value structures correspond to the record schema presented in Table 6.

5.4 Versioning concept

When discussing the mapping from the TinkerPop structure to the underlying key–value store in Sect. 5.3, we did not address versioning. This is because our key–value store ChronoDB performs the versioning on its own; the graph structure does not need to be aware of this process. We nevertheless achieve a fully versioned graph, an immutable history and a very high degree of sharing of common (unchanged) data between revisions. This is accomplished by attaching a fixed timestamp to every graph transaction, which is always the same as the timestamp of the underlying ChronoDB transaction. When reading graph data, the resolution process eventually performs a get(...) call on the underlying key–value store, resolving an element (e.g., a vertex) by ID. At this point, ChronoDB uses the timestamp attached to the transaction to perform the temporal resolution, i.e., it returns the value that the given key had at the specified timestamp.

In order to illustrate this process, we consider the example in Fig. 15. We open a transaction at timestamp 1234 and execute the following Gremlin query:

Translated into natural language, this query:

1. starts at a given vertex (v0),
2. navigates along the outgoing edge labeled e0 to the vertex at the other end of the edge,
3. from there navigates to the outgoing edge labeled e1,
4. checks that the edge has a property p which is set to value x,
5. and finally navigates to the target vertex of that edge.

We start the execution of this query by resolving the vertex v0 from the database. Since our transaction uses timestamp 1234, ChronoDB looks up the temporal key v0@1234 and returns the value labeled B in Fig. 15 (Footnote 12). Value A is not visible because it was overwritten by B at timestamp 1203, and value C is not visible either because it was written after our transaction timestamp. Next, we navigate along the outgoing edge labeled e0. Our store does contain information on that edge, but since the query does not depend on any of its properties, we use the EdgeTargetRecord stored in B and navigate directly to v1. We therefore ask ChronoDB for the value associated with the temporal key v1@1234 and receive value G. For the next query step, we have a condition on the outgoing edge e1. The EdgeTargetRecord in value G does not contain enough information to evaluate the condition; hence, we need to resolve the edge from the store. Querying the temporal key e1@1234 returns the value H, which is shared with the previous version because it has not changed since then. After verifying that the property “p” on edge version H is indeed set to the value “x” (as specified in the query), we continue our navigation by resolving the target of e1, which is v2. The temporal key v2@1234 results in the value K being returned.
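The walkthrough above can be replayed with a small, hypothetical simulation. The value letters and the commit timestamps 1065 (for H) and 1203 (for B and K) follow the text; the timestamps of A, C and G and the record contents are assumptions, and the original Gremlin query is not reproduced. Each step resolves one temporal key against the fixed transaction timestamp.

```python
import bisect
from typing import Any, Dict, List, Optional, Tuple

# key -> ascending (commit timestamp, value) pairs.
# Timestamps of A, C and G are assumptions; B, K (1203) and H (1065) follow the text.
HISTORY: Dict[str, List[Tuple[int, Dict[str, Any]]]] = {
    "v0": [
        (1065, {"name": "A"}),
        (1203, {"name": "B", "out": {"e0": [("e0", "v1")]}}),  # (edge id, other-end vertex)
        (1300, {"name": "C"}),
    ],
    "v1": [(1065, {"name": "G", "out": {"e1": [("e1", "v2")]}})],
    "e1": [(1065, {"name": "H", "properties": {"p": "x"}, "target": "v2"})],
    "v2": [(1203, {"name": "K"})],
}


def get(key: str, timestamp: int, log: List[str]) -> Optional[Dict[str, Any]]:
    """Resolve the temporal key key@timestamp: latest value written at or before timestamp."""
    entries = HISTORY.get(key, [])
    i = bisect.bisect_right([t for t, _ in entries], timestamp)
    value = entries[i - 1][1] if i > 0 else None
    log.append(f"{key}@{timestamp} -> {value['name'] if value else None}")
    return value


def run_query(timestamp: int) -> Tuple[List[Dict[str, Any]], List[str]]:
    log: List[str] = []
    results = []
    v0 = get("v0", timestamp, log)                                # 1. start at v0
    for _, v1_id in (v0 or {}).get("out", {}).get("e0", []):      # 2. EdgeTargetRecord, no edge load
        v1 = get(v1_id, timestamp, log)
        for e1_id, _ in (v1 or {}).get("out", {}).get("e1", []):  # 3. outgoing e1 edges
            edge = get(e1_id, timestamp, log)                     #    the condition needs the edge
            if edge and edge["properties"].get("p") == "x":      # 4. check p == x
                target = get(edge["target"], timestamp, log)      # 5. resolve the target vertex
                if target:
                    results.append(target)
    return results, log
```

Running the query at timestamp 1100 instead would resolve v0 to A, which (in this assumed setup) has no outgoing e0 edge, so the same query yields no result on that older version.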

Note that this final navigation step starts at an element that was reused from the commit at timestamp 1065 and ends at the state of v2 that was produced by the commit at timestamp 1203. This is possible because graph elements refer to each other by ID, but these references do not include the branch or timestamp. This information is injected from the transaction at hand, allowing for this kind of navigation and data reuse. This is a major step toward fulfilling requirement [R8]. As ChronoDB offers logarithmic access time to any key-value pair on any version, this is also in line with requirement [R5].

5.5 TinkerPop compatibility and extensions

The Apache TinkerPop API is the de facto standard interface between graph databases and the applications built on top of them. We therefore want ChronoGraph to implement, and be fully compliant with, this interface. However, in order to provide our additional functionality, we need to extend the default API at several points. This challenge has two parts: compliance with the existing TinkerPop API, and extension of this API to provide access to the new functionality. We discuss both parts in more detail in the following subsections.

5.5.1 TinkerPop API compliance

As described in Sects. 5.3 and 5.4, our versioning approach is entirely transparent to the user, which makes compliance with the default TinkerPop API easier to achieve. The key aspect we need to ensure is that every transaction receives a proper timestamp when the regular transaction opening method is invoked. In a non-versioned database, there is no decision to make at this point, because there is only one graph in a single state. The logical choice for a versioned graph database is to open the transaction on the current head revision, i.e., to set the transaction timestamp to the timestamp of the latest commit. This aligns well with the default TinkerPop transaction semantics: a new transaction t1 should see all changes performed by other transactions that were committed before t1 was opened. When a commit occurs, the changes are always applied to the head revision, regardless of the transaction's timestamp, because history states are immutable in our implementation in order to preserve the traceability of changes. As the remainder of our graph database, in particular the implementation of the query language Gremlin, is unaware of the versioning process, no further specialized effort is needed to align versioning with the TinkerPop API.

We employ the TinkerPop Structure Standard Suite, consisting of more than 700 automated JUnit tests, to assert compliance with the TinkerPop API itself. This test suite scans the declared Graph Features (i.e., optional parts of the API) and enables or disables individual tests based on these features. With the exception of Multi-Properties (Footnote 13) and the Graph Computer (Footnote 14), we currently support all optional TinkerPop API features, which results in 533 tests to be executed. We had to manually disable 8 of these test cases due to problems within the test suite itself, primarily I/O errors related to illegal file names on our Windows-based development system. The remaining 525 tests all pass on our API implementation.

5.5.2 TinkerPop extensions