Abstract

Large-scale model-driven system engineering projects are carried out collaboratively. Engineering artefacts stored in model repositories are developed in either offline (checkout–modify–commit) or online (GoogleDoc-style) scenarios. Complex systems frequently integrate models and components developed by different teams, vendors and suppliers. Thus, confidentiality and integrity of design artefacts need to be protected in accordance with access control policies. We propose a secure collaborative modelling approach where fine-grained access control for models is strictly enforced by bidirectional model transformations. Collaborators obtain filtered local copies of the model containing only those model elements which they are allowed to read; write access control policies are checked on the server upon submitting model changes. We present a formal collaboration schema which provably guarantees certain correctness constraints, its adaptation to online scenarios with on-the-fly change propagation, and its integration into existing version control systems to support offline scenarios. The approach is illustrated, and its scalability is evaluated, using a case study of the MONDO EU project.

1 Introduction

1.1 Collaborative modelling in MDE

The adoption of model-driven engineering (MDE) by system integrators (like airframers or car manufacturers) has been steadily increasing in recent years [55], since it enables the early detection of design flaws and the automatic generation of various artefacts (source code, documentation, configuration tables, etc.) from high-quality system models.

The use of models also intensifies collaboration between distributed teams of different stakeholders (system integrators, software engineers of component providers/suppliers, hardware engineers, certification authorities, etc.) via model repositories, which significantly enhances productivity and reduces time to market. An emerging industrial practice of system integrators is to outsource the development of various design artefacts to subcontractors in an architecture-driven supply chain.

Collaboration scenarios include traditional offline collaborations with asynchronous long transactions (i.e. to check out an artefact from a version control system and commit local changes afterwards) as well as online collaborations with short and synchronous transactions (e.g. when a group of collaborators simultaneously edit a model, similarly to well-known online document/spreadsheet editors). Several collaborative modelling frameworks (like CDO [49], EMFStore [50]) exist to support such scenarios.

However, such collaborative scenarios introduce significant security management challenges in protecting the intellectual property rights (IPR) of the different parties. For instance, the detailed internal design of a specific component needs to be hidden from competitors who might supply a different component in the overall system, but it needs to be revealed to certification authorities in order to obtain airworthiness certification. Large research projects in the avionics domain (like CESAR [12] or SAVI [1]) address certain collaborative aspects of the design process (e.g. by assuming multiple subcontractors), but their security aspects are restricted to those of the system under design.

An increased level of collaboration in a model-driven development process introduces additional confidentiality challenges to sufficiently protect the IPR of the collaborating parties, which are either overlooked or significantly underestimated by existing initiatives. Even within a single company, there are often teams with differentiated responsibilities, areas of competence and clearances. Such processes likewise demand confidentiality and integrity of certain modelling artefacts.

1.2 Problems of coarse-grained access control

Existing practices for managing access control of models rely primarily upon the access control features of the back-end repository. Coarse-grained access control policies aim to restrict access to the files that store models. For instance, EMF models can be persisted as standard XMI documents, which can be stored in repositories providing file-based access and change management (as in SVN [2], CVS [24]). Fine-grained access control policies, on the other hand, may restrict access to the model on the row level (as in relational databases) or triple level (as in RDF repositories). Unfortunately, coarse-grained security policies captured directly at the storage (file) level often result in an inflexible fragmentation of models in collaborative scenarios.

To illustrate the problem of coarse-grained permissions, let us consider two collaborators, \({\textit{SW Provider}}_1\) and \({\textit{HW Supplier}}_1\), each having full control over their own model (fragment). Now if \({\textit{HW Supplier}}_1\) intends to share part of their model with \({\textit{SW Provider}}_1\), then they need either to grant access to the entire model (which would mean losing the confidentiality of certain intellectual property) or to split their model into two files and give access to only one fragment. The same argument applies to each additional actor, such as \({\textit{SW Provider}}_2\); in the end, a collaboratively developed system model would end up being split into several fragments.

Even in the simple case depicted in Fig. 1, where \({\textit{SW Provider}}_1\) and \({\textit{HW Supplier}}_1\) collaborate, the model needs to be split into two files (\({\textit{Model Fragment}}_1\) and \({\textit{Model Fragment}}_2\)) and access needs to be granted separately for each file. When a new collaborator, \({\textit{SW Provider}}_2\), who is allowed to partially read both existing fragments, joins later, each model fragment needs to be divided at least in two. In this example, 5 fragments are required: one that can be read by both \({\textit{SW Provider}}_1\) and \({\textit{SW Provider}}_2\), one accessible to both \({\textit{HW Supplier}}_1\) and \({\textit{SW Provider}}_2\), and three more private model fragments for the three collaborators. If additional collaborators join, the number of fragments has to be increased further.
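The fragment count above can be reproduced mechanically: under file-level access control, two model elements can share a file only if exactly the same collaborators may read them, so the number of files equals the number of distinct reader sets. The following Python sketch illustrates this grouping; the element names and reader sets are hypothetical and merely mirror the five fragments listed above.

```python
from collections import defaultdict


def fragment(readers_of: dict[str, frozenset[str]]) -> dict[frozenset[str], list[str]]:
    """Group model elements into files so that every file has a single reader set."""
    files: dict[frozenset[str], list[str]] = defaultdict(list)
    for element, readers in readers_of.items():
        files[readers].append(element)
    return dict(files)


SW1, SW2, HW1 = "SW Provider 1", "SW Provider 2", "HW Supplier 1"

# Hypothetical elements: each collaborator owns a private part, and
# SW Provider 2 may read part of each of the two original fragments.
readers_of = {
    "sw1_private": frozenset({SW1}),
    "sw1_shared": frozenset({SW1, SW2}),
    "hw1_private": frozenset({HW1}),
    "hw1_shared": frozenset({HW1, SW2}),
    "sw2_private": frozenset({SW2}),
}

for readers, elements in fragment(readers_of).items():
    print(sorted(readers), elements)
print(len(fragment(readers_of)))  # prints 5
```

Every new combination of readers creates a new file, which is why the number of fragments tends to grow with each additional collaborator.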

As a result, coarse-grained access control can lead to significant model fragmentation, which greatly increases the complexity of storage and access control management. In industrial practice, automotive models may be split into more than 1000 fragments, which poses a significant challenge for tool developers. Some model persistence technologies (such as EMF’s default XMI serialization) do not allow model fragments to cyclically refer to each other, putting a stricter limit on fragmentation. Hence, MDE use cases often demand the ability to define access for each object (or even each property of each object) independently.

Furthermore, coarse-grained access control lacks flexibility, especially when accessing models from heterogeneous information sources in different collaboration scenarios. For instance, it cannot express type-specific access control, i.e. granting or restricting access to model elements of a specific type (e.g. all classes in a UML model) that are stored in multiple files.

On the other hand, fine-grained access control requires assigning access rights to each model element. As the size of the model grows, these permissions or restrictions cannot be set and maintained manually for each individual model element; instead, a systematic assignment technique is needed.

1.3 Goals

The main objective of the paper is to achieve secure collaborative modelling with fine-grained access control, by using advanced model transformation techniques, while relying upon existing storage back ends to follow current industrial best practices. In particular, we aim to address the following high-level goals (refined later into technical goals in Sect. 2):

- G1 Fine-grained Access Control Management: to enforce read and write permissions of users separately for each model object, attribute or reference.

- G2 Secure and Versatile Offline Collaboration: each collaborator can work with a model fragment filtered in accordance with their read permissions and processed using off-the-shelf MDE tools (e.g. editor, verifier). A user may be disconnected from any server or access control mechanism and then submit (commit) their updated version at the end.

- G3 Secure and Efficient Online Collaboration: multiple users can view and edit a model hosted on a server repository in real time, each subject to different read and write permissions. Small changes performed by one collaborator are quickly and efficiently propagated to the views visible to other users, without reinterpreting the entire model.

- G4 General Collaboration Schema: a schema adaptable to both online and offline scenarios, defining the workflows of a server and multiple clients that handle fine-grained access control management.

1.4 Contributions

In this paper, we define an approach for secure collaborative modelling using bidirectional model transformations to derive filtered secure views for each collaborator and to propagate changes introduced into these views back to a server. Our approach is uniformly applicable to support both online and offline collaboration scenarios, and it enforces fine-grained access control policies for each collaborator during the derivation of views and the back-propagation of changes.

We formalize the collaboration schema using communicating state machines and provide formal proofs for certain correctness criteria using the FDR4 tool [30]. The schema is integrated into existing version control systems using hook programs triggered by repository events to support offline collaborative scenarios, whereas a prototype tool for online collaboration is realized on top of Eclipse RAP [51].

Finally, a detailed scalability evaluation is carried out using models from the Wind Turbine Case Study of the MONDO European FP7 project, which serves as a motivating example for the paper.

This paper extends [8, 18] by providing (1) an in-depth, precise specification of the bidirectional transformation as well as the collaboration schema, (2) further technical details on its realization for both offline and online collaborative scenarios and (3) an extended scalability evaluation of our approach, now also covering the offline scenario.

1.5 Structure

The rest of the paper is organized as follows. Our motivating example is detailed and the challenges are introduced in Sect. 2. Section 3 defines how models can be decomposed into individual assets and introduces the rules that assign read and write permissions to assets. In Sect. 4, we overview our bidirectional model transformation for access control, while Sect. 5 describes our secure collaboration schema and proves its correctness. In Sect. 6, we give a brief overview of how to adapt this collaborative modelling schema to online and offline scenarios. Section 7 describes the evaluation of our approach, and related work is overviewed in Sect. 8. Finally, Sect. 9 concludes our paper.

2 Case study

2.1 Modelling language

Our approach will be illustrated using a simplified version of a modelling language for system integrators of offshore wind turbine controllers, which served as one of the case studies of the MONDO EU FP7 project [4]. The metamodel, defined in Ecore [52] and depicted in Fig. 2, describes how the system is built up from modules (Module) providing and consuming signals (Signal) that send messages after a specific amount of time defined by the frequency attribute. Modules are organized in a containment hierarchy of composite modules (Composite) shipped by external vendors (vendor attribute), ultimately containing control unit modules (Control) responsible for a given type of physical device (such as fans, heaters or pumps: FanControl, HeaterControl and PumpControl, respectively) with specific cycle priorities (cycle attribute). Documentation is attached to each signal (documentation attribute) to clarify its responsibilities. Some of the signals are treated as confidential intellectual property (ConfidentialSignal).

The design of wind turbine control units requires specialized knowledge. There are three kinds of control units, and each kind can only be modified by specialist users with the appropriate qualification: fan, heater and pump control engineers.

A sample instance model containing a hierarchy of 3 Composite modules with 4 Control units as submodules, which provide 6 Signals altogether (two of them ConfidentialSignals), is shown in Fig. 3. Boxes represent objects (with attribute values as entries within the box and their types shown as labels on top). Arrows with diamonds represent containment edges, while arrows without diamonds represent cross-references.

2.2 Security requirements

Specialists are not allowed to modify (and in some cases, read) parts of the model. For this purpose, the following security requirements are stated for control unit specialists:

- R1 Each group of specialists shall be responsible for a specific kind of control unit (owned control units).

- R2 Specialists shall see only those signals that are within the scope of their owned control units, i.e. signals provided by a module that is either (a) a composite that directly contains an owned control unit, or (b) any submodule (including the owned control unit) contained transitively in such a composite.

- R3 Specialists shall be able to modify signals provided by their owned control units.

- R4 Specialists shall observe which modules consume signals provided by their owned control units.

- R5 Specialists shall see the vendor attributes in an obfuscated form.

- R6 Specialists must not see confidential signals.
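Requirements R1, R2 and R6 together determine which signals a specialist group sees. The Python sketch below is one possible reading of these requirements, not the policy used in the case study; the module names, containment tree and signal names are hypothetical (they do not reproduce Fig. 3), and confidential signals are simply excluded as a stand-in for R6.

```python
def ancestors(module, parent):
    """Yield the transitive containers of a module, innermost first."""
    while module in parent:
        module = parent[module]
        yield module


def visible_signals(owned_kind, kind, parent, provides, confidential):
    """Signals that specialists for `owned_kind` may see according to R1, R2 and R6."""
    owned = {m for m, k in kind.items() if k == owned_kind}                        # R1
    scope = {parent[m] for m in owned if kind.get(parent.get(m)) == "Composite"}  # R2 (a)
    in_scope = {m for m in kind
                if m in scope or any(a in scope for a in ancestors(m, parent))}    # R2 (b)
    return {s for m in in_scope for s in provides.get(m, []) if s not in confidential}  # R6


# Hypothetical model: root contains c1 and c2; c1 contains pump1 and fan1,
# c2 contains heater1 and fan2.
kind = {"root": "Composite", "c1": "Composite", "c2": "Composite",
        "pump1": "PumpControl", "fan1": "FanControl",
        "heater1": "HeaterControl", "fan2": "FanControl"}
parent = {"c1": "root", "c2": "root", "pump1": "c1", "fan1": "c1",
          "heater1": "c2", "fan2": "c2"}
provides = {"root": ["sRoot"], "c1": ["sC1"], "pump1": ["sP1"],
            "fan1": ["sF1", "sConf"], "heater1": ["sH1"]}
confidential = {"sConf"}

print(sorted(visible_signals("PumpControl", kind, parent, provides, confidential)))
# prints ['sC1', 'sF1', 'sP1']
```

Note that the signal of the root composite stays invisible to pump engineers, since root does not directly contain an owned control unit.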

2.3 Usage scenarios

The system integrator company is hosting the wind turbine control model on their collaboration server, where it is stored, versioned, etc. There are two ways for users to interact with it.

Online collaboration. A group of users may participate in online collaboration, when they are continuously connected to the central repository via an appropriate client (e.g. web browser). Each user sees a live view of those parts of the model that he is allowed to access. Changes need to be propagated on-the-fly between the views of users in short transactions. These transactions contain each modification such as create, update, delete or move. Finally, the collaboration tool has to reject a modification immediately when it violates a security requirement.

The users can modify the model through their client, which will directly forward the change to the collaboration server. The server will decide whether the change is permitted under write access restrictions. If it is allowed, then the views of all connected users will be updated transparently and immediately, though the change may be filtered for them according to their read privileges.

Offline collaboration. In case of offline collaboration, when connecting to the server, each user can download a model file containing those model elements that he is allowed to see. The user can then view, process, and modify his downloaded model file locally. The model can be developed with unmodified off-the-shelf tools that need not be aware of collaboration and access control. After the modification, the changes will be uploaded to the server in a long transaction.

2.4 Challenges

Deriving from the goals stated in Sect. 1.3, we identify the following challenges.

- C1 Fine-grained Access Control of Model Artefacts. To meet G1, the approach must be able to allow or deny model access separately for individual model elements.

- C2.1 Model Compatibility. To meet G2 in the offline collaboration scenario, the approach must be able to present the information available to a given user as a self-contained model, in a format that can be stored, processed, displayed and edited by off-the-shelf modelling tools.

- C2.2 Offline Models. To meet G2 in the offline collaboration scenario, the approach must be able to present only the available information to a given user without maintaining connectivity with any central server or authority responsible for access control.

- C3.1 Incrementality. To meet G3 in the online collaboration scenario, the approach must be able to process model modifications initiated by a user and apply the consequences to the views available to other users without re-processing the unchanged parts of the model.

- C4.1 Correctness Criteria. To meet G4, the approach must define the correctness criteria of the collaboration schema and prove their fulfilment.

- C4.2 Adaptability. To meet G4, the approach must realize the collaboration schema in both offline and online scenarios.

3 Access control of models

3.1 Modelling preliminaries

In order to tackle challenges C1 and C2.1, we first analyse how models can be decomposed into individual assets for which access can be permitted and denied, and under what conditions a filtered set of such assets can be represented as a model that can be processed by standard tools.

For the purposes of access control, a model is conceived as a set of elementary model assets. An \(\textit{Asset}\) is an entity that the access control policy will protect. Generally, models can be decomposed into object, reference and attribute assets.

Definition 1

An object asset is a pair formed of a model element object and its exact class; there is one object asset for each object of the model.

Definition 2

A reference asset is a triple formed of a source object, a reference and the referenced target object; there is one reference asset for each containment link and cross-link between objects.

Definition 3

An attribute asset is a triple formed of a source object, an attribute and a data value; there is one attribute asset for each (non-default) attribute value assignment.

Definition 4

A model is a triple formed of a set of object assets, a set of reference assets and a set of attribute assets.

Note that certain modelling platforms (e.g. EMF) support multi-valued attributes and references, where an object may hold multiple attribute values (or reference targets) for the same property. For such properties, each entry at a source object is represented by a separate attribute (or reference) asset.

Example 1

\(\textit{ObjectAsset}(o1,\textit{Composite})\) is an object asset, \(\textit{AttributeAsset}(o10,\textit{cycle},\textit{low})\) is an attribute asset and \(\textit{ReferenceAsset}(o2,\textit{consumes},o12)\) is a reference asset in our running example (depicted in Fig. 3).
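Definitions 1 to 4 map naturally onto immutable tuples. The following Python sketch (the class and field names are our own, not taken from any tool) encodes the three assets of Example 1 and shows that each entry of a multi-valued reference becomes a separate asset; the second signal o13 is hypothetical.

```python
from collections.abc import Hashable
from typing import NamedTuple


class ObjectAsset(NamedTuple):
    obj: str   # object identifier
    cls: str   # exact class of the object


class ReferenceAsset(NamedTuple):
    src: str   # source object
    ref: str   # reference (containment or cross-reference)
    trg: str   # target object


class AttributeAsset(NamedTuple):
    src: str          # owner object
    attr: str         # attribute name
    value: Hashable   # data value; must be hashable to be stored in a frozenset


class Model(NamedTuple):
    objects: frozenset[ObjectAsset]
    references: frozenset[ReferenceAsset]
    attributes: frozenset[AttributeAsset]


# The three assets of Example 1, collected into a (partial) model.
example = Model(
    objects=frozenset({ObjectAsset("o1", "Composite")}),
    references=frozenset({ReferenceAsset("o2", "consumes", "o12")}),
    attributes=frozenset({AttributeAsset("o10", "cycle", "low")}),
)

# A multi-valued reference yields one asset per entry ("o13" is hypothetical).
multi = {ReferenceAsset("o2", "consumes", "o12"), ReferenceAsset("o2", "consumes", "o13")}
print(len(multi))  # prints 2
```

Representing a model as sets of assets makes filtering a matter of set comprehension, which the later sketches in this section rely on.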

3.2 Consistency of models

An arbitrary set of model assets does not necessarily constitute a valid model; there may be consistency rules imposed on the assets by the modelling platform to ensure the integrity of the model representation and the ability to persist, read and traverse models. Challenge C2.1 requires that filtered models must be synthesized as a set of model assets compatible with all consistency rules of the underlying modelling platform.

- Object Existence. Attributes and references imply that the objects involved exist, having a type compatible with the type of the attribute or reference.

- Containment Hierarchy. In modelling languages that have a notion of containment, certain references are denoted as containment types realizing a containment hierarchy of objects. This hierarchy implies a containment forest of all objects. Therefore, objects must either be root objects of the model, or be transitively contained by a root object via a chain of objects that are all existing. (Modelling languages that do not have containment are of course also supported, with all objects considered root objects.)

- Opposite Features. There are opposite references defined as a pair of references where the existence of a relation depends on its pair. For reference types having an opposite, reference assets of the two types exist in symmetric pairs.

- Multiplicity Constraints. The number of reference assets for a given reference of an object needs to satisfy the multiplicity constraints.
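The consistency rules above can be checked directly on a set of assets. The Python sketch below is an illustration under simplifying assumptions: objects, references and attributes are plain tuples, type compatibility and lower multiplicity bounds are not checked, and the reference names (submodules, provides, provider) are hypothetical rather than taken from the metamodel of Fig. 2.

```python
from collections import Counter

Obj = tuple[str, str]           # (object id, class)
Ref = tuple[str, str, str]      # (source, reference, target)
Attr = tuple[str, str, object]  # (owner, attribute, value)


def consistency_violations(objects: set[Obj], refs: set[Ref], attrs: set[Attr],
                           containment: set[str], opposite: dict[str, str],
                           upper: dict[str, int]) -> list[str]:
    """Return human-readable violations of the internal consistency rules."""
    ids = {o for o, _ in objects}
    errors = []
    # Object existence (type compatibility is not checked in this sketch).
    for s, r, t in sorted(refs):
        if s not in ids or t not in ids:
            errors.append(f"dangling reference {s}.{r} -> {t}")
    for s, a, _ in attrs:
        if s not in ids:
            errors.append(f"attribute {a} on missing object {s}")
    # Containment hierarchy: one container at most, acyclic, rooted in existing objects.
    container = {}
    for s, r, t in sorted(refs):
        if r in containment:
            if t in container:
                errors.append(f"{t} has more than one container")
            container[t] = s
    for o in sorted(ids):
        seen, cur = set(), o
        while cur in container and cur not in seen:
            seen.add(cur)
            cur = container[cur]
        if cur in seen:
            errors.append(f"containment cycle through {o}")
        elif cur not in ids:
            errors.append(f"{o} is not rooted in an existing object")
    # Opposite features: reference assets come in symmetric pairs.
    for s, r, t in sorted(refs):
        if r in opposite and (t, opposite[r], s) not in refs:
            errors.append(f"missing opposite {t}.{opposite[r]} -> {s}")
    # Multiplicity constraints (upper bounds only).
    for (s, r), n in Counter((s, r) for s, r, _ in refs).items():
        if n > upper.get(r, n):
            errors.append(f"{s}.{r} has {n} targets, at most {upper[r]} allowed")
    return errors


objects = {("root", "Composite"), ("c1", "PumpControl"), ("s1", "Signal")}
refs = {("root", "submodules", "c1"), ("c1", "provides", "s1"), ("s1", "provider", "c1")}
attrs = {("s1", "frequency", 29)}
containment = {"submodules", "provides"}
opposite = {"provides": "provider", "provider": "provides"}
upper = {"provider": 1}

print(consistency_violations(objects, refs, attrs, containment, opposite, upper))  # prints []
```

Removing, for instance, the root object from this example makes both the object existence and the containment hierarchy checks fail, which is exactly the situation a naive filtering of assets would produce.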

We distinguish these low-level internal consistency rules from high-level, language-specific well-formedness constraints. Well-formedness constraints (also known as design rules or consistency rules) define additional restrictions on the metamodel that the instance models need to satisfy. These types of constraints are often described using OCL [40]. The difference between the two concepts is that violating the latter kind does not prevent a model from being processed and stored in a given modelling technology. Thus, only internal consistency is required for access control.

3.3 Model obfuscation

Obfuscation is defined as the process of “making something less clear and harder to understand, especially intentionally” [48]. The first purpose of obfuscation in programming was to distribute C sources in an encrypted way to prevent access to confidential intellectual property in the code [33].

A model obfuscation takes a model as input and yields another model as output where the structure of the model remains the same but data values (such as names, identifiers or other strings) are altered. Two data values that were identical before the obfuscation will also be identical after it, but the obfuscated value computed based on a different input string will be completely different. Moreover, all the altered values can be reverted by the original owner of the model using a private key.

In the context of access control, obfuscation can be applied to the data values of attribute assets. An obfuscated value reveals its presence in the model (e.g. that the value of an object’s attribute is not empty), but the actual content of that asset remains hidden.

Definition 5

The \(\textit{obf}\) function takes a data \(\textit{Value}\) and a \(\textit{Seed}\) as inputs and maps the value to an obfuscated value \(\widehat{\textit{Value}}\). The \(\textit{obf}^{-1}\) function is the inverse of \(\textit{obf}\): it returns the original data if the same \(\textit{Seed}\) is used.

Example 2

In our example, security requirement R5 prescribes obfuscating the value A of the vendor attribute of object root, which may become “oA3DD43CF5” in the views.
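The properties required of \(\textit{obf}\) (determinism, reversibility with the seed, unrelated outputs for different inputs) can be obtained, for example, with a synthetic-IV style construction in which a keyed tag derived from the value also seeds the keystream. The Python sketch below uses HMAC-SHA256 from the standard library with the seed as key; it is an illustrative assumption, not the obfuscation scheme of our tool, and it reveals the length of the value. A production system would rather rely on a vetted deterministic authenticated encryption mode such as AES-SIV.

```python
import hashlib
import hmac

TAG_LEN = 8  # bytes of the deterministic tag that also seeds the keystream


def _keystream(key: bytes, tag: bytes, length: int) -> bytes:
    """Pseudo-random bytes from HMAC-SHA256 in counter mode."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, tag + counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def obf(value: str, seed: str) -> str:
    """Deterministically obfuscate `value` under `seed`."""
    key, data = seed.encode("utf-8"), value.encode("utf-8")
    # Equal values give equal tags (determinism); different values give unrelated tags.
    tag = hmac.new(key, data, hashlib.sha256).digest()[:TAG_LEN]
    body = bytes(d ^ k for d, k in zip(data, _keystream(key, tag, len(data))))
    return "o" + (tag + body).hex().upper()


def obf_inv(obfuscated: str, seed: str) -> str:
    """Recover the original value; fails if the seed is wrong."""
    key = seed.encode("utf-8")
    raw = bytes.fromhex(obfuscated[1:])
    tag, body = raw[:TAG_LEN], raw[TAG_LEN:]
    data = bytes(b ^ k for b, k in zip(body, _keystream(key, tag, len(body))))
    expected = hmac.new(key, data, hashlib.sha256).digest()[:TAG_LEN]
    if not hmac.compare_digest(expected, tag):
        raise ValueError("wrong seed or corrupted value")
    return data.decode("utf-8")


hidden = obf("A", "owner-secret")   # "owner-secret" is a hypothetical seed
print(hidden, obf_inv(hidden, "owner-secret"))
```

Because the same value always yields the same obfuscated string, collaborators can still recognize that two objects share a vendor without learning which vendor it is.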

3.4 Access control rules and permissions

Our fine-grained access control policy has to assign permissions separately for each model asset. In case of a large model, there can be thousands of assets where it is tedious to manually assign permissions one by one. Therefore, the policies are constructed from a list of access control rules, each of which controls the access to a selected set of model assets by certain users or groups, and may either allow or deny the read and/or write operation.

Definition 6

An access control rule (\(\textit{ac-rule}\)) defines a partial function that applies judgements (allow, obfuscate, deny) to specify the privileges of a certain \(\textit{user}\in \textit{Users}\) for an operation type (read or write) on a given subset of assets.

Definition 7

An access control policy defines an effective permission function (\(\textit{permission}_\textit{Eff}\)), derived from a list of access control rules, that applies judgements (allow, obfuscate, deny) for both operation types (read and write) of each asset in the context of a certain \(\textit{user}\in \textit{Users}\).

To address challenge C2.1, it is necessary to eliminate inconsistencies introduced by access control rules. In addition, access control rules can be contradictory, as one rule might grant a permission for a given part of the model while another rule denies it at the same time. Hence, the effective permission function (\(\textit{permission}_\textit{Eff}\)) needs to derive a consistent and conflict-free set of judgements. Our previous work [9, 18] describes the effective permission calculation in more detail; here we give a brief overview of conflict resolution and permission dependencies, and outline some reconciliation strategies as well.

Conflicts. Conflicting policy rules can be resolved by assigning priorities to each rule, so that rules with higher priority override those with lower priority.

Sanity. The sanity of the policy implies that a user should not be allowed to write values and model assets that are not readable to them. Therefore, without effective read permission, write permission is automatically denied as well, even if there are no rules denying it.
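Conflict resolution by priority and the sanity rule can be combined into a small evaluator of \(\textit{permission}_\textit{Eff}\). In this Python sketch, rules select assets by a predicate; the default judgement when no rule matches (deny), the tie-breaking by list order and the choice to deny writes on obfuscated (not fully readable) assets are our assumptions. The user name pump and the signal cs1 are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

Asset = tuple  # e.g. ("s2", "Signal") for an object asset


@dataclass(frozen=True)
class AcRule:
    priority: int
    user: str
    operation: str                    # "read" or "write"
    judgement: str                    # "allow", "obfuscate" or "deny"
    selects: Callable[[Asset], bool]  # the subset of assets the rule applies to


def permission_eff(rules: list[AcRule], user: str, asset: Asset,
                   default: str = "deny") -> dict[str, str]:
    """Effective judgement per operation type for one asset and one user."""
    effective = {}
    for op in ("read", "write"):
        matching = [r for r in rules
                    if r.user == user and r.operation == op and r.selects(asset)]
        # Conflict resolution: the highest-priority matching rule wins; max() keeps
        # the first rule among equal priorities. No match falls back to `default`.
        effective[op] = max(matching, key=lambda r: r.priority).judgement if matching else default
    # Sanity: no write permission without full read permission.
    if effective["read"] != "allow":
        effective["write"] = "deny"
    return effective


rules = [
    AcRule(0, "pump", "read", "allow", lambda a: True),
    AcRule(10, "pump", "read", "deny", lambda a: a[1] == "ConfidentialSignal"),
    # Deliberately over-permissive: also grants write on the confidential signal cs1.
    AcRule(5, "pump", "write", "allow", lambda a: a[0] in {"s2", "s5", "cs1"}),
]

print(permission_eff(rules, "pump", ("s2", "Signal")))  # {'read': 'allow', 'write': 'allow'}
print(permission_eff(rules, "pump", ("cs1", "ConfidentialSignal")))  # {'read': 'deny', 'write': 'deny'}
```

The second call shows the sanity rule at work: although a write rule matches cs1, the higher-priority read denial also removes the write permission.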

Read dependencies. Read permissions may depend on permissions on other model assets.

If a model element is unreadable, its incoming and outgoing references and its attributes shall not be readable either, otherwise the set of readable assets would not form a self-consistent model.

In modelling platforms (such as EMF) with a notion of containment between objects, readable objects cannot be contained in unreadable objects (as the latter do not exist in the front model). This can be resolved either by introducing a new container for the orphaned object (e.g. promoting it to a top-level object of the model), or by letting an object hidden from the front model hide the entire containment subtree rooted at it (this latter choice is used in the case study).
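The subtree-hiding strategy used in the case study, together with the rule that references and attributes of unreadable objects are hidden as well, can be sketched as follows. This Python function is an illustration that assumes an acyclic containment map and only propagates object-level decisions; reference-level or attribute-level read rules would be applied on top. All object names are hypothetical.

```python
def filter_read_dependencies(objects, container, refs, attrs, readable_objects):
    """Propagate object-level read decisions to the rest of the model.

    objects:          set of object ids
    container:        maps each contained object to its container (assumed acyclic)
    refs, attrs:      sets of (src, ref, trg) and (src, attr, value) triples
    readable_objects: objects whose own read judgement is not 'deny'
    """
    def visible(o):
        # Visible only if the object and all of its transitive containers are readable.
        while o is not None:
            if o not in readable_objects:
                return False
            o = container.get(o)
        return True

    objs = {o for o in objects if visible(o)}
    # References and attributes touching hidden objects are hidden as well.
    vis_refs = {(s, r, t) for s, r, t in refs if s in objs and t in objs}
    vis_attrs = {(s, a, v) for s, a, v in attrs if s in objs}
    return objs, vis_refs, vis_attrs


objects = {"root", "comp", "ctrl", "sig"}
container = {"comp": "root", "ctrl": "comp", "sig": "ctrl"}
refs = {("root", "submodules", "comp"), ("sig", "consumedBy", "root")}
attrs = {("root", "vendor", "A"), ("sig", "frequency", 29)}

# comp is denied, so ctrl and sig disappear with it, even though they are readable.
print(filter_read_dependencies(objects, container, refs, attrs, {"root", "ctrl", "sig"}))
```

The result is always a self-consistent model in the sense of Sect. 3.2: no reference or attribute refers to an object that is missing from the front model.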

Write dependencies. Write permissions likewise have dependencies on other model assets.

In general, creating/modifying/removing references between objects requires a writable source object and a readable target object, but some modelling platforms including EMF have bidirectional references (or opposites), for which internal consistency dictates that the target object must be writable as well.

A metamodel may constrain a reference (or attribute) to be single-valued; assigning a new target to the reference would automatically remove the old one, so a user can only be allowed the former write operation if they are allowed the latter.

Similarly, removing an object from the model implies removing all references pointing to it, and all objects contained within it.
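The write dependencies above can be expressed as two checks. The following Python sketch is an illustration under our own assumptions: objects are identified by name, permissions are given as plain sets of readable and writable objects, and the reference names (provides, consumes) are hypothetical.

```python
def may_set_reference(src, ref, new_trg, current, readable, writable, opposites, single_valued):
    """Can the user link src.ref to new_trg?

    current: maps (src, ref) to the set of current targets
    """
    # Basic rule: writable source, readable target.
    if src not in writable or new_trg not in readable:
        return False
    # An opposite reference also modifies the target object.
    if ref in opposites and new_trg not in writable:
        return False
    # Setting a single-valued reference implicitly removes the old link,
    # so removing that link (and its opposite) must be permitted as well.
    if ref in single_valued and ref in opposites:
        old_targets = current.get((src, ref), set()) - {new_trg}
        if not old_targets <= writable:
            return False
    return True


def may_delete(obj, container, refs, writable):
    """Deleting obj removes its containment subtree and all references pointing into it."""
    subtree, frontier = {obj}, [obj]
    while frontier:
        parent = frontier.pop()
        for child, cont in container.items():
            if cont == parent and child not in subtree:
                subtree.add(child)
                frontier.append(child)
    if not subtree <= writable:
        return False
    # References from outside the subtree must be removable, i.e. have a writable source.
    return all(s in writable for s, _, t in refs if t in subtree and s not in subtree)


readable = {"root", "ctrl2", "s2", "s3"}
writable = {"ctrl2", "s2"}
print(may_set_reference("ctrl2", "provides", "s3", {}, readable, writable, {"provides"}, set()))  # False
print(may_delete("s2", {"s2": "ctrl2"}, {("m1", "consumes", "s2")}, writable))  # False
```

Both calls fail for a dependency reason rather than a direct denial: s3 is readable but not writable, and the consumer m1 of s2 cannot be modified by this user.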

Example 3

An access control policy is set up to meet the security needs of the running example introduced in Sect. 2.2. A possible permission function (\(\textit{permission}_\textit{Eff}\)) is visualized in Fig. 4. For instance, Pump Control Engineers have full access to PumpControl objects and their provided Signals (squares marked with bold borders and blue headers); however, they cannot access ConfidentialSignal objects (squares with dashed borders). The rest of the objects are readable, but not writable, by this group of users (squares with thick borders and orange headers). If an object is only required to preserve read dependencies, its identifier is obfuscated (marked with an “O” in a square next to the attribute) and all other attributes remain hidden (an “H” in the square). Finally, bold edges are writable by the engineers, i.e. the writable signals (s2, s5) can be removed from their container, or new signals can be created under the writable controls (ctrl2, ctrl4); thick edges represent readable references (in this example, these are required mostly to preserve the containment hierarchy); and all remaining (dashed) edges are hidden from the engineers.

4 Bidirectional model transformation for access control management

4.1 The access control lens

Due to read access control, some users are not allowed to see certain model assets. This means that the complete model (which we will refer to as the gold model) differs from the view of it that is exposed to a particular user (the front model).

In theory, access control could be implemented without manifesting the front model, by hiding the entire gold model behind a model access layer that is aware of the security policy and enforces access control rules upon each read and write operation performed by the user. However, challenge C2.1 requires users to access their front models using standard modelling tools; moreover, challenge C2.2 requires that, in the offline collaboration scenario, they can “take home” their front model files without being directly connected to the gold model. In order to meet these goals, we propose to manifest the front models of users as regular stand-alone models, derived from the corresponding gold model by applying a bidirectional model transformation.

In the literature of bidirectional transformations [21], a lens (or view update) is defined as an asymmetric bidirectional transformation relation where a source knowledge base (KB) completely determines a derived (view) KB, while the latter may not contain all information contained in the former, but it can still be updated directly. The two operations of crucial importance in realizing a lens relationship are the following:

- Get obtains the derived KB from the source KB that completely determines it, and

- PutBack updates the source KB, based on the derived view and the previous version of the source (the latter is required as the derived view may not contain all information).

The bidirectional transformation relation between a gold model (containing all assets) and a front model (containing a filtered view) satisfies the definition of a lens. The Get process applies the access control policy to filter the gold model into the front model. The PutBack process takes a front model updated by the user and transfers the changes back into the gold model.

Definition 8

The Get process derives the front model from the gold model in accordance with the read permissions.

Definition 9

The PutBack process enforces the write permissions and derives the updated gold model from the modified front model and the original version of the gold model.

The lens concept is illustrated in Fig. 5. Initially, the Get operation is carried out to obtain the front model for a given user from the gold model. Due to the read access control rules, some objects in the model may be hidden (along with their connections to other objects); additionally, some connections between otherwise readable objects may be hidden as well; finally, some attribute values of readable objects may be omitted, obfuscated or hidden altogether. If the user subsequently updates the front model, the PutBack operation checks whether these modifications were allowed by the write access control rules. If yes, the changes are propagated back to the gold model, keeping those model elements that were hidden from the user intact (preserved from the previous version of the gold model).

Write access control checks are performed by the PutBack operation because (a) they may prevent a user from writing to the model, and (b) access control rules need to be evaluated on the gold model.

Access control rules cannot be evaluated directly on the front model since only the gold model contains all information. Thus, write access control can only be enforced by taking into account the gold model as well. Therefore, write access control must be combined with the lens transformation. In particular, PutBack must check write permissions, and fail (by rolling back any effects of the commit or operation) if a certain modification cannot be applied to the gold model.

Example 4

In our running example, the original model (Fig. 3) acts as the gold model containing all the information. The Get transformation applies the permissions and produces a front model for each specialist. In Fig. 4, each front model consists of

- the objects with bold or solid borders;

- the references with solid lines;

while the objects and references with dashed borders and lines are removed, and the attributes marked with

- an “O” in a square are obfuscated;

- an “H” in a square are removed.

When a PumpControlEngineer tries to modify the frequency of the signal s3 from 6 to 10, the PutBack operation is responsible for declining this change as the access control rules deny the modification (the signal s3 is readable but not writable).

On the other hand, if a PumpControlEngineer tries to modify the frequency of the signal s2 from 29 to 17, the PutBack operation propagates the change back to the gold model (the signal s2 is writable) by identifying the signal s2 in the gold model and setting its frequency attribute.
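Example 4 can be replayed with a minimal lens over sets of assets. In this Python sketch (an illustration, not our transformation engine), a modification is represented as removing the old attribute asset and adding the new one, obfuscation is ignored, and read and write permissions are given as predicates; the confidential signal cs1 and its frequency are hypothetical. Because the gold model is immutable, raising an error before the new gold model is built plays the role of rolling back the operation.

```python
from typing import Callable

Asset = tuple  # e.g. ("attr", "s2", "frequency", 29)
Pred = Callable[[Asset], bool]


def get(gold: frozenset, readable: Pred) -> frozenset:
    """Get: derive the front model by keeping only readable assets."""
    return frozenset(a for a in gold if readable(a))


def put_back(front: frozenset, gold: frozenset, readable: Pred, writable: Pred) -> frozenset:
    """PutBack: apply the user's changes to the gold model, or fail as a whole."""
    old_front = get(gold, readable)
    added, removed = front - old_front, old_front - front
    for a in added | removed:
        # Every change needs write permission; added assets must also be readable.
        if not writable(a) or (a in added and not readable(a)):
            raise PermissionError(f"write denied: {a}")
    # Hidden assets (gold minus old_front) are never touched, so they are preserved.
    return (gold - removed) | added


def readable(a: Asset) -> bool:
    return a[1] != "cs1"           # cs1: hypothetical confidential signal


def writable(a: Asset) -> bool:
    return a[1] in {"s2", "s5"}    # signals provided by the owned control units


gold = frozenset({("attr", "s2", "frequency", 29),
                  ("attr", "s3", "frequency", 6),
                  ("attr", "cs1", "frequency", 3)})
front = get(gold, readable)

# s2: 29 -> 17 is propagated to the gold model.
ok = (front - {("attr", "s2", "frequency", 29)}) | {("attr", "s2", "frequency", 17)}
new_gold = put_back(ok, gold, readable, writable)

# s3: 6 -> 10 is rejected.
bad = (front - {("attr", "s3", "frequency", 6)}) | {("attr", "s3", "frequency", 10)}
try:
    put_back(bad, gold, readable, writable)
except PermissionError as err:
    print(err)

assert put_back(front, gold, readable, writable) == gold  # GetPut
assert get(new_gold, readable) == ok                      # PutGet
```

The two final assertions correspond to the well-known GetPut and PutGet lens laws: putting back an unchanged front model leaves the gold model intact, and a successfully put-back front model is exactly what Get derives afterwards.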

4.2 Transformation design

Both Get and PutBack are designed as rule-based model transformations [16]. In the terminology of model transformation, the gold and front models act as the source and target models of Get, respectively, while PutBack reverses these roles.

To address challenge C3.1, the transformations need to be reactive and incremental in the online collaboration scenario.

Reactive transformations [7] follow an event-driven behaviour where the events are triggered by model manipulations such as creation/modification/deletion of model assets. The transformation observes these events and reacts to them.

Incrementality [16] means that there is no need to re-execute the whole transformation upon a small change introduced into the model. Source incrementality is the property of a transformation that only re-evaluates the modified parts of the source model. Target incrementality means that only the necessary parts of the target model are modified by the transformation, and there is no need to recreate the new target model from scratch.
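A minimal Python sketch of a reactive, incremental view: the front model is updated from individual change events instead of being recomputed from the whole gold model. The class ReactiveFrontView and its callbacks are hypothetical. The sketch assumes that readability depends only on the asset itself; query-based policies can depend on the wider model context, in which case a real engine must also re-evaluate assets whose context changed.

```python
from dataclasses import dataclass, field
from typing import Callable, Hashable, Set, Tuple

Asset = Tuple[Hashable, ...]  # e.g. ("obj", "s2", "Signal")


@dataclass
class ReactiveFrontView:
    """Keeps a filtered front model in sync with gold-model change events."""
    readable: Callable[[Asset], bool]
    front: Set[Asset] = field(default_factory=set)
    evaluations: int = 0  # counts permission checks to make incrementality visible

    def on_added(self, asset: Asset) -> None:
        # Source incrementality: only the asset named in the event is evaluated.
        self.evaluations += 1
        if self.readable(asset):
            # Target incrementality: a single local change to the front model.
            self.front.add(asset)

    def on_removed(self, asset: Asset) -> None:
        # Removing an asset that was never visible is a harmless no-op.
        self.front.discard(asset)
```

Each event costs one permission check and at most one front-model update, independently of the size of the gold model.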

Definition 10

A transformation rule is associated with a precondition \(\in \textit{Preconditions}\), an action \(\in \textit{Actions}\) (parametrized by a match of the precondition) and a numerical priority value \(\in \mathbb {P}\).

Definition 11

A transformation \(\mathcal {T}\) consists of a set of transformation rules that a transformation engine \(\mathcal {TE}\) executes to incrementally derive an updated target model \(M'_{T}\) from a source model \(M_{S}\) and a target model \(M_{T}\).

Transformation execution repeatedly fires the rules as follows:

1. it finds all the matches of the preconditions of all rules (this set of matches is efficiently and incrementally maintained during the transformation);
2. it selects a match of the rule with the highest priority;
3. it executes the action of the rule along that match.

The loop terminates when there are no more precondition matches.
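The execution loop above can be sketched in Python as follows. This is an illustrative, naive engine (the Rule class and the run function are hypothetical): it recomputes all precondition matches in every iteration instead of maintaining them incrementally as VIATRA does, and its max_steps bound is only a safety net, not a substitute for the termination argument of Property 1.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set, Tuple

Model = Set[Tuple]


@dataclass
class Rule:
    name: str
    priority: int
    precondition: Callable[[Model, Model], Iterable[Tuple]]  # yields matches
    action: Callable[[Model, Model, Tuple], None]            # updates the target along a match


def run(rules: List[Rule], source: Model, target: Model, max_steps: int = 10_000) -> Model:
    """Fire rules until no precondition has a match (naive, non-incremental matching)."""
    for _ in range(max_steps):
        # 1. Find all matches of all rule preconditions. They are recomputed from
        #    scratch here; an incremental engine would maintain this set instead.
        candidates = [(rule, m) for rule in rules for m in rule.precondition(source, target)]
        if not candidates:
            return target  # no more precondition matches: terminate
        # 2. Select a match of the rule with the highest priority.
        rule, match = max(candidates, key=lambda rm: rm[0].priority)
        # 3. Execute the action of that rule along the match.
        rule.action(source, target, match)
    raise RuntimeError("rule execution did not terminate within max_steps")
```

For instance, a subtractive rule that removes stale target assets can be given a higher priority than an additive rule that copies readable source assets, so that stale assets are cleaned up first.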

Corresponding to the Get and PutBack operations of the lens, we define the transformations \(\mathcal {T}_{\textsc {Get}{}}\) and \(\mathcal {T}_{\textsc {PutBack}{}}\), respectively.

The rules of these transformations fall into four groups, based on their direction (Get, PutBack) and on whether they add assets to or remove assets from the model (additive, subtractive):

In case of the Get process:

- Additive adds assets to \(M_F\) for those assets of \(M_G\) that have no corresponding assets in \(M_F\)
- Subtractive removes assets from \(M_F\) if no corresponding assets are present in \(M_G\)

In case of the PutBack process:

- Additive adds assets to \(M_G\) for those assets of \(M_F\) that have no corresponding assets in \(M_G\)
- Subtractive removes assets from \(M_G\) if no corresponding assets are present in \(M_F\)

All four groups consist of one rule for each kind of model asset. In the context of this paper, we distinguish three kinds of model assets, namely objects, references and attributes (see Sect. 3.1), which makes twelve transformation rules altogether, described in the tables of “Appendices A and B”.

The preconditions require a correspondence between the front and gold models. For that purpose, we introduce a trace function.

Definition 12

The trace function is responsible for associating two object assets with each other:

We select three example transformation rules listed in Table 1 to describe the key concept of how the access control is managed.

Additive Get Object rule


The additive rule of \(\mathcal {T}_{\textsc {Get}{}}\) related to object assets is responsible for propagating object additions from the gold model \(\textit{M}_G\) to the front model \(\textit{M}_F\). Such a change is recognized by the precondition as follows: it selects an \(\textit{ObjectAsset}(o_G,t)\) in the gold model that has no corresponding \(\textit{ObjectAsset}(o_F,t)\) in the front model but should be readable according to \(\textit{permission}_{\text {Eff}}{}\). The action part creates a new \(\textit{ObjectAsset}(o_F,t)\) and establishes a correspondence relation between these two objects.
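The precondition and action of the Additive Get Object rule can be sketched in Python as two small functions. Object assets are modelled as (id, type) pairs, the trace function as a dictionary from front object ids to gold object ids, and front copies are named by appending _F; these representations and the function names are assumptions made for illustration only.

```python
from typing import Callable, Dict, List, Set, Tuple

ObjectAsset = Tuple[str, str]  # (object id, type)


def additive_get_object_matches(gold: Set[ObjectAsset], trace: Dict[str, str],
                                can_read: Callable[[ObjectAsset], bool]) -> List[ObjectAsset]:
    """Precondition: readable gold objects that no front object is traced to."""
    traced_gold_ids = set(trace.values())
    return sorted(o for o in gold if o[0] not in traced_gold_ids and can_read(o))


def additive_get_object_action(match: ObjectAsset, front: Set[ObjectAsset],
                               trace: Dict[str, str]) -> None:
    """Action: create the front copy and record the correspondence in the trace."""
    gold_id, obj_type = match
    front_id = f"{gold_id}_F"      # hypothetical naming scheme for front objects
    front.add((front_id, obj_type))
    trace[front_id] = gold_id      # trace maps front object ids to gold object ids
```

Once the action has run, the new trace entry makes the same gold object disappear from the match set, so the rule does not fire twice for it.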

Example 5

A system administrator who has access to the original gold model (depicted in Fig. 3) adds a new signal object \(sN_G\) under the heater control unit ctrl3. This change needs to be propagated to the front models as the new signal should be at least readable (also writable for Heater Control Engineers).

The \(\mathcal {T}_{\textsc {Get}{}}\) transformation will be executed between \(\textit{M}_G\) and the front model of the Pump Control Engineer \(\textit{M}^\text {pump}_F\) (depicted in Fig. 4c). The precondition of the Additive Get Object rule selects \(\textit{ObjectAsset}(sN_G,\textsf {Signal})\), as it has no corresponding \(\textit{ObjectAsset}(sN_F,\textsf {Signal})\) in the front model. The action part creates \(\textit{ObjectAsset}(sN_F,\textsf {Signal})\) and traces it back to \(\textit{ObjectAsset}(sN_G,\textsf {Signal})\). Exactly the same sequence happens in the case of the front model of the Fan Control Engineer \(\textit{M}^\text {fan}_F\) (depicted in Fig. 4a).Footnote 1

Additive Get Attribute rule


The additive rule of \(\mathcal {T}_{\textsc {Get}{}}\) related to attribute assets is responsible for propagating data value insertions in the gold model \(\textit{M}_G\) to the front model \(\textit{M}_F\). The precondition of the Additive Get Attribute rule selects an \(\textit{AttributeAsset}(o_G,attr,v)\) in the gold model that has no corresponding \(\textit{AttributeAsset}(o_F,attr,v')\) in the front model but should be readable according to \(\textit{permission}_{\text {Eff}}{}\). The value of \(v'\) is calculated in accordance with its read permission (potentially in an obfuscated form).
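The following Python sketch illustrates how \(v'\) could be computed and how the Additive Get Attribute rule adds it to the front model. The three read levels (allow, obfuscate, deny) and the salted SHA-256 obfuscation are hypothetical stand-ins, not the obfuscation scheme of the approach; object ids are shared between the gold and front models here, so the trace is omitted.

```python
import hashlib
from typing import Callable, Optional, Set, Tuple

AttributeAsset = Tuple[str, str, object]  # (object id, attribute name, value)


def front_value(value: object, read_level: str, salt: str = "demo-salt") -> Optional[str]:
    """Compute v' from v under a hypothetical read level: allow, obfuscate or deny."""
    if read_level == "allow":
        return str(value)
    if read_level == "obfuscate":
        # Stand-in obfuscation: a salted, truncated SHA-256 digest (stable across runs).
        digest = hashlib.sha256(f"{salt}:{value}".encode("utf-8")).hexdigest()
        return "obf-" + digest[:8]
    if read_level == "deny":
        return None  # hidden altogether
    raise ValueError(f"unknown read level: {read_level}")


def additive_get_attribute(gold_attrs: Set[AttributeAsset], front_attrs: Set[AttributeAsset],
                           level_of: Callable[[str, str], str]) -> None:
    """Add AttributeAsset(o, attr, v') to the front model when it is readable but missing."""
    for obj, attr, value in sorted(gold_attrs, key=repr):
        level = level_of(obj, attr)
        if level == "deny":
            continue  # not readable: the precondition has no match
        v_prime = front_value(value, level)
        if (obj, attr, v_prime) not in front_attrs:
            front_attrs.add((obj, attr, v_prime))
```

Because the precondition compares the full (object, attribute, value) tuple, a modified gold value as in Example 6 adds a new front asset; removing the stale old value is left to the corresponding subtractive rule.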

Example 6

The system administrator modifies the frequency attribute of \(s1_G\) from 30 to 15. This change needs to be propagated to the front models.

1) \(\mathcal {T}_{\textsc {Get}{}}\) will be executed between \(\textit{M}_G\) and the front model of the Pump Control Engineer \(\textit{M}^\text {pump}_F\) (depicted in Fig. 4c). The precondition of the Additive Get Attribute rule selects the \(s1_F\) object of the front model together with the frequency attribute and the value 15, as \(\textit{AttributeAsset}(s1_F,frequency,15)\) does not exist but should be readable in \(F_\text {pump}\). The action part adds \(\textit{AttributeAsset}(s1_F,frequency,15)\) to \(\textit{M}^\text {pump}_F\).Footnote 2

2) \(\mathcal {T}_{\textsc {Get}{}}\) will be executed between \(\textit{M}_G\) and the front model of the Fan Control Engineer \(\textit{M}^\text {fan}_F\) (depicted in Fig. 4a). This time, the precondition has no match, as s1 is not readable in \(\textit{M}^\text {fan}_F\).

Subtractive PutBack Object


The subtractive rule of \(\mathcal {T}_{\textsc {PutBack}{}}\) related to object assets is responsible for propagating object asset removals from the front model \(\textit{M}_F\) to the gold model \(\textit{M}_G\). A deletion is recognized in the precondition as follows: there is an \(\textit{ObjectAsset}(o_G,t)\) in the gold model that has no corresponding \(\textit{ObjectAsset}(o_F,t)\) in the front model. The action part checks the write permissions of \(\textit{ObjectAsset}(o_G,t)\). If the removal of the asset is denied, \(\mathcal {T}_{\textsc {PutBack}{}}\) terminates after a rollback. Otherwise, it removes the selected object asset.
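A Python sketch of the Subtractive PutBack Object rule with all-or-nothing semantics: deletions are applied to a working copy, so raising an exception leaves the original gold model untouched, which plays the role of the rollback. The function and type names are hypothetical. Restricting matches to readable gold objects is our own assumption in this sketch, made so that objects hidden from the user (which also lack front counterparts) are not mistaken for deletions.

```python
from typing import Callable, Dict, Set, Tuple

ObjectAsset = Tuple[str, str]  # (object id, type)


class PolicyViolation(Exception):
    """Raised when PutBack would delete an object the user may not delete."""


def subtractive_put_back_objects(gold: Set[ObjectAsset], front_ids: Set[str],
                                 trace: Dict[str, str],
                                 can_read: Callable[[ObjectAsset], bool],
                                 can_delete: Callable[[ObjectAsset], bool]) -> Set[ObjectAsset]:
    """Return the new gold object set, or raise without touching the input gold model."""
    working = set(gold)  # all changes go to a copy, so a failure needs no explicit undo
    still_traced = {trace[f] for f in front_ids if f in trace}
    for obj in sorted(gold):
        # Precondition: a gold object the user could see whose front copy has disappeared.
        if can_read(obj) and obj[0] not in still_traced:
            # Action: check the write permission before removing the asset.
            if not can_delete(obj):
                raise PolicyViolation(f"deletion of {obj[0]} is denied")
            working.discard(obj)
    return working
```

In the situation of Example 7, removing the front copy of ctrl1 makes the precondition match ctrl1 in the gold model, and a policy that forbids deleting it causes the whole PutBack to fail.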

Example 7

A Pump Control Engineer removes the \(ctrl1_F\) object from his front model \(\textit{M}^\text {pump}_F\) (depicted in Fig. 4c). This change needs to be propagated to the gold model; thus, the PutBack transformation will be executed between \(\textit{M}^\text {pump}_F\) and the gold model \(\textit{M}_G\) (depicted in Fig. 3). The precondition of the rule selects \(\textit{ObjectAsset}(ctrl1_G, FanControl)\). In the action part, the rule finds that the permissions do not allow the deletion of the ctrl1 object; thus, the transformation terminates and rejects the change.

To sum up, Get is responsible for enforcing read permissions in front models, while PutBack takes care of write permissions. If any write permission is violated, the transformation terminates, any effects on the gold model are rolled back, and the front model is reverted to its original state.

4.3 Discussion and analysis

In the following paragraphs, we analyse and discuss properties of the lens transformations.

First, in Sect. 4.3.1, we state the properties that the lens transformations are expected to exhibit. In Sect. 4.3.2, we state and discuss an important assumption that will be vital to proving the aforementioned properties in “Appendix C”. Finally, in Sect. 4.3.3 we will turn our attention to deviations of the technical realization from the ideal formulation.

4.3.1 Desirable properties of the transformation

In the following, we present a number of properties that would be desirable for the lens transformations to exhibit. We first state these desirable properties and then discuss them individually in subsequent sections to determine which ones are met by the presented transformations, and under which conditions.

Transformation Property 1

(Termination) Given a pair of starting models, Get and PutBack shall both terminate after a finite number of rule executions.

Transformation Property 2

(Confluence) Given a pair of starting models and running the transformation to completion, the terminal state of both Get and PutBack shall be independent of the chosen execution order of rule application, i.e. both Get and PutBack define a deterministic function.

Transformation Property 3

(Confidentiality) Get shall yield a front model that contains exactly those assets that are visible according to effective read permissions.

Transformation Property 4

(Integrity) PutBack shall successfully accept a modified front model if and only if its differences from the original front model do not violate effective write permissions.

Transformation Property 5

(GetPut) PutBack shall be a no-op when applied on the front model directly returned by Get, i.e. if the user makes no changes, the gold model shall not be updated.

Transformation Property 6

(PutGet) Get shall be a no-op when applied on the gold model previously updated by a successful PutBack (from the same front), i.e. if the gold model has not changed, the front model shall not be updated.

Transformation Property 7

(PutPut) A user applying a sequence of successful PutBack operations (and changing the front model in between) should have the same ultimate effect on the gold model as applying only the last one.
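The GetPut, PutGet and PutPut laws can be checked by randomized testing on a toy lens, as sketched below in Python. The lens (get, put_back and check_laws are hypothetical names) filters a dictionary by a readable key set that stays fixed during each trial, in the spirit of the regularity assumption discussed in Sect. 4.3.2, and it omits write permissions. Passing such tests is evidence, not a proof.

```python
import random
from typing import Dict, Set


def get(gold: Dict[str, int], readable: Set[str]) -> Dict[str, int]:
    return {k: v for k, v in gold.items() if k in readable}


def put_back(gold: Dict[str, int], front: Dict[str, int], readable: Set[str]) -> Dict[str, int]:
    # Hidden entries come from the old gold model, readable ones from the front model.
    # Write permissions are deliberately left out to focus on the round-trip laws.
    hidden = {k: v for k, v in gold.items() if k not in readable}
    return {**hidden, **front}


def check_laws(trials: int = 1000, seed: int = 0) -> None:
    rng = random.Random(seed)
    keys = [f"s{i}" for i in range(6)]
    for _ in range(trials):
        gold = {k: rng.randint(0, 50) for k in keys if rng.random() < 0.7}
        readable = {k for k in keys if rng.random() < 0.5}  # fixed during the trial
        # Users can only edit what they see, so front models use readable keys only.
        f1 = {k: rng.randint(0, 50) for k in sorted(readable) if rng.random() < 0.7}
        f2 = {k: rng.randint(0, 50) for k in sorted(readable) if rng.random() < 0.7}
        assert put_back(gold, get(gold, readable), readable) == gold          # GetPut
        assert get(put_back(gold, f1, readable), readable) == f1              # PutGet
        assert (put_back(put_back(gold, f1, readable), f2, readable)
                == put_back(gold, f2, readable))                              # PutPut
```

If the readable set were allowed to change between Get and PutBack, the PutGet assertion could fail, which illustrates why the regularity assumption matters.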

The first two properties (Properties 1 and 2) are generally expected of most rule-based model transformations in order to define an actual deterministic transformation function. Properties 3 and 4 then state the security-specific requirements.

Next, Properties 5 and 6 pertain specifically to bidirectional transformations and are widely promoted (see e.g. [21, 46], also [25] specifically for security views) as very important “well-behavedness” properties that users of bidirectional transformations would most certainly expect. They enable the lens transformations to truly realize an updateable view.

Finally, Property 7 provides even stronger predictability guarantees, but is often considered very restrictive and therefore optional in the literature. A benefit of this law is that user modifications are undoable, i.e. the original state of the system (incl. the gold model) can be restored when a change to the front model is reverted. On the other hand, it might unfortunately disallow certain sensible extensions, an example of which we give below.

As introduced in [9], a possible sample refinement of the write permission levels could be \(\{deny< dangle < allow\}\). Cross-references with write permission level dangle cannot be normally modified by the user, but they can be removed as the side effect of deleting the source or target object of the reference (if that deletion is permitted). Unlike the usual allowed write permission, dangle does not imply the readability of the asset, so this kind of deletion is possible even if the cross-link is not visible to the user. Imagine a traceability link that points from a hidden part of the model to a visible object; the difference between assigning deny or dangle is that the target object cannot be deleted by the user in the former case, while its deletion would be allowed (with an invisible side effect of removing the traceability link) in the latter case. These dangle semantics can be similarly extended to attributes or contained objects that might be attached as (invisible or read-only) tags to objects; they must not be modified by the user, but will be removed along with the object they are attached to if the object is deleted. It is easy to see that (a) such a feature would be quite useful in many practical applications of the approach presented in the paper, yet (b) Property 7 will not hold, as undoability is lost when dangling links/attributes/objects are removed.
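The dangle semantics can be illustrated with a small Python sketch. The Write enum encodes the ordering deny < dangle < allow; delete_object removes an object together with every reference touching it, and rejects the deletion if one of those references is only at deny level. The names, the data layout and the notion of a "touching" reference are assumptions made for illustration.

```python
from enum import IntEnum
from typing import Dict, Set, Tuple

Ref = Tuple[str, str, str]  # (source object, reference name, target object)


class Write(IntEnum):
    DENY = 0
    DANGLE = 1
    ALLOW = 2


class AccessDenied(Exception):
    pass


def delete_object(obj: str, objects: Set[str], refs: Set[Ref],
                  obj_perm: Dict[str, Write], ref_perm: Dict[Ref, Write]) -> Tuple[Set[str], Set[Ref]]:
    """Delete obj and every reference touching it, or raise AccessDenied."""
    if obj_perm.get(obj, Write.DENY) < Write.ALLOW:
        raise AccessDenied(f"deleting {obj} is denied")
    touching = {r for r in refs if obj in (r[0], r[2])}
    # A touching reference may disappear as a side effect only if it is at least DANGLE;
    # it does not have to be readable by the user.
    blocked = sorted(r for r in touching if ref_perm.get(r, Write.DENY) < Write.DANGLE)
    if blocked:
        raise AccessDenied(f"deleting {obj} would remove protected references {blocked}")
    return objects - {obj}, refs - touching
```

Re-creating a deleted object afterwards would not bring back a reference removed at dangle level, which is exactly why Property 7 no longer holds once this extension is allowed.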

4.3.2 Regularity of policy

Constant complement [5], a common strategy for proving desirable properties of secure views, involves partitioning the data into a readable part and the so-called complement. This partitioning can be used, for example, to verify whether PutBack (translator in the terminology of [5]) may possibly change the complement (which would violate Property 7). Similarly, correctness proofs can benefit from applying a second kind of partitioning [25] into a writable and endorsed part of the model.

Unfortunately, these partitioning schemes do not apply perfectly to our approach for the simple reason that a single asset might move from one partition to another as the model evolves—in fact even during the execution of PutBack. This is due to the fact that our approach is more powerful: we do not consider explicit access control attributes of assets, but rather derive effective permissions from policy rules based on arbitrary model queries (over the gold model) that can take into account the wider context of assets; thus, it is possible to change the effective permissions for a given asset (even without directly changing the asset itself). Therefore, we first make the following assumption and then discuss violating cases separately:

Assumption 1

(Regularity) For any transformation run, there exists a constant permission set for all assets (i.e. a constant partitioning of assets based on permission levels) so that effective permissions will always evaluate to results consistent with this fixed permission set when they are evaluated during the run (i) as a condition excluding activations of higher-priority rules, or (ii) as a precondition to an individual rule to be executed, when higher-priority rules have already been found to have no matches, (iii) as a condition for rejecting disallowed write attempts, or (iv) to determine termination.

Note that Assumption 1 does not require that the actual effective permissions (as evaluated following the policy by the appropriate algorithms [9, 18]) remain entirely constant; that would not be feasible. For example, if a user creates a new model element, the corresponding asset propagated to the gold model by PutBack would only evaluate as writeable once it exists in the first place. What is actually required is that permissions do not flip-flop, i.e. a transformation should never observe a particular asset changing its effective permissions if the transformation has already acted upon the old value of the effective permissions. This condition is met in the previous example, as we can include the newly created asset in the constant permission set as writeable: the asset did not exist in the gold model before its creation, so no rule could have observed it as an existing but non-writeable asset; as far as the rules are concerned, the asset could have always been listed as writeable in the permission set.

Get leaves the gold model, and thus, the effective permissions unchanged, so Assumption 1 holds trivially. For PutBack, however, it is possible to come up with scenarios where Assumption 1 is violated. One of these cases is privilege escalation, where a user can make a change somewhere in the model that would grant them additional read or write privileges somewhere else that they did not previously have (even though those assets existed before). The other case is lockout, where a user can make a change that will have the side effect of losing their read or write access on some assets (even though those assets continue to exist). We believe both of these cases are likely symptoms of defective policy definition, and a system can only be considered secure and reliable if it does not exhibit these behaviours (or only in a very controlled manner).

Therefore, in the proofs for the properties of Sect. 4.3.1, we consider Assumption 1 to hold and apply partitioning-based arguments partly similar to those in [5, 25]. This limitation to the case with regularity is, on one hand, necessary to prove the properties stated earlier (the exception is Property 1; the transformations will be shown to terminate regardless of whether regularity holds). On the other hand, the limitation is prudent for the above-listed reasons of security. Finally, the limitation is also feasible, as it is fairly easy to screen changes during PutBack and reject them if they lead to either privilege escalation or lockout. Static analysis of policy definitions regarding their susceptibility to these problems is left as future work.
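One conceivable way to screen a run for violations of Assumption 1 is to record every effective-permission value that a rule acts upon and abort as soon as the same asset is observed with a different value. The Python sketch below is hypothetical (RegularityMonitor is not part of the described implementation) and only detects flip-flops that the run actually observes.

```python
from typing import Dict, Hashable


class RegularityViolation(Exception):
    pass


class RegularityMonitor:
    """Flags an asset whose effective permission changes after a rule acted on it."""

    def __init__(self) -> None:
        self.observed: Dict[Hashable, str] = {}

    def observe(self, asset: Hashable, permission: str) -> None:
        # The first observation fixes the asset's entry in the constant permission set.
        previous = self.observed.setdefault(asset, permission)
        if previous != permission:
            raise RegularityViolation(
                f"{asset!r} changed from {previous} to {permission} during the run")
```

A newly created asset is first observed only after its creation, so it never triggers the monitor, matching the discussion of new model elements above; an asset that is first seen as read-only and later as writeable (privilege escalation) does.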

Now we are ready to sketch conditional proofs for each of the listed properties to hold for the transformations induced by rule sets in “Appendices A and B”; the proof sketches are found in “Appendix C”.

4.3.3 Realization in EMF

We realized the presented lens transformation in the Eclipse Modeling Framework (EMF) [52]. Instead of approaches specifically designed for easy specification of bidirectional transformations, the unidirectional and reactive VIATRA framework [54] has been chosen for its (a) target-incremental transformations and (b) source-incremental model queries to define rule preconditions.

The presented transformation rules, as well as the proof sketches, are formulated on technology-independent models defined as a set of model assets. Actual model representations—EMF in our case—expose an object-oriented API instead. Therefore, we have implemented a relational model wrapper layer that exposes the contents of the model through a writeable API as a set of model assets (essentially tuples). This abstraction, however, is incomplete: the underlying object-oriented model structure (i) may not be compatible with all set operations and (ii) may allow a given set of operations only in certain sequences. The transformation must enforce these constraints.
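The following Python sketch illustrates the idea of a relational wrapper: an object graph is flattened into object, attribute and reference asset tuples. The Node class is a hypothetical stand-in for EMF objects, not the EMF API, and attribute values are assumed to be hashable. The ordering of multi-valued references is dropped, which is the information loss discussed below.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Node:
    """Hypothetical stand-in for an EMF object (not the EMF API)."""
    oid: str
    etype: str
    attrs: Dict[str, object] = field(default_factory=dict)
    refs: Dict[str, List["Node"]] = field(default_factory=dict)


def to_assets(roots: List[Node]) -> Set[Tuple]:
    """Flatten an object graph into object, attribute and reference asset tuples."""
    assets: Set[Tuple] = set()
    seen: Set[str] = set()
    stack = list(roots)
    while stack:
        node = stack.pop()
        if node.oid in seen:
            continue
        seen.add(node.oid)
        assets.add(("obj", node.oid, node.etype))
        for name, value in node.attrs.items():
            assets.add(("attr", node.oid, name, value))
        for name, targets in node.refs.items():
            for target in targets:  # the list order is dropped by the set representation
                assets.add(("ref", node.oid, name, target.oid))
                stack.append(target)
    return assets
```

Two objects whose reference lists contain the same targets in a different order map to the same set of assets.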

The first problem occurs if adding or removing a model asset would violate the internal consistency (see Sect. 3.1) of the model; this is avoided by the consistency property of the effective permission function, which ensures that Get and PutBack only attempt valid changes to the front and gold models, respectively.

The second problem occurs if valid changes are attempted in the wrong order, e.g. if a reference asset is only deleted after deleting the object asset for one of its endpoints. By choosing the transformation rule priorities accordingly, the object, attribute and reference rules are ordered in a way that avoids violating these kinds of constraints in all cases but one. The remaining case is object containment, e.g. a child object cannot be added to the model before its container object is created. This depends on the ordering of two instances of the object rule and thus cannot simply be expressed using fixed rule-level priorities. To solve this problem, the relational model wrapper temporarily allows “unrooted” model objects detached from the model. Note that the EMF API itself allows such detached model objects to exist; they are merely not treated as part of the model (resource set) by default, which our model wrapper needs to circumvent.

As a further effect of this abstraction layer, there is a genuine loss of information: the ordering of multi-valued collections is not preserved in the relational representation. See Sect. 6.3.2 for discussion.

As a slight technical hurdle, the EMF-based query engine of VIATRA, in charge of interpreting the query-based security policy, is not actually capable of evaluating permission queries on assets that are non-existent in the gold model. A workaround is applied in practice to the additive PutBack attribute rule (the only rule where this is relevant, due to obfuscation), which we omit here.

Finally, we note that in order to simplify the language of the discussion, we have informally described assets as potentially being contained in both the gold and front models. Since a single EMF object is contained in at most one model, it would be more precise to say that the two models contain disjoint assets that are related by the equivalence induced by the trace function (see Sect. 4.2).

5 Collaboration scheme

To satisfy our goal G4, a general collaboration scheme is required that incorporates the bidirectional lens transformation between a server and several clients in order to enforce access control policies correctly.

The server stores the gold models, and clients can download their specific front models. Modifications executed by a client can be submitted to the server and downloaded by the other clients. These are the basic actions that a version control system (VCS) is nowadays expected to provide to its users, although different implementations may name them differently (e.g. checkout, update, commit in SVN or clone, pull, push in Git).

According to the basic actions supported by any VCS, we define the basic operations of the collaboration scheme as follows:

- \({{\varvec{Checkout}}}\) downloads the model from the server side to the workspace of the specific client who initiated the operation.
- \({{\varvec{Update}}}\) retrieves the model changes from the server side to the workspace of the specific client who initiated the operation.
- \({{\varvec{Commit}}}\) propagates the changes of a specific client to the server side.

5.1 Formalization of the collaboration

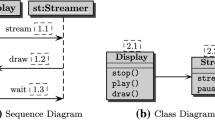

Figures 6 and 7 describe the behaviour of the collaboration scheme as state machines for the server and the client, respectively.

A state machine consists of states (represented by boxes) and transitions (denoted by directed edges) between states. Each state machine has an initial state (denoted by arrow from a black circle) and a current state that specifies the system at a certain time.

The system can accept input events and send output events during its execution (denoted by labels on the edges, where “?” and “!” mean receiving and sending a certain event, respectively, following process-algebraic notation). In the context of collaboration, each event is assigned to a collaborator using the “.” symbol after the name of the event, e.g. input.x/output.y means that collaborator x initiates an input and the transition produces an output to collaborator y. A transition is executed immediately when its input event arrives, and during its execution it produces its output event.

A compound state (visualized as a box containing other states) refines the behaviour of a given state by defining its own state machine, in which only one state can be active at a time.

Orthogonal regions (divided by dashed borders) separate the behaviour of independent states, and they are processed concurrently. In each region, only one state can be active at a time.

Two state machines can synchronize on events sent by one and received by the other.

Server (Fig. 6). Its state machine has three orthogonal regions to handle the commit, update and checkout requests concurrently.

- Checkout and Update. Upon receiving checkout and update requests, our approach rejects them when a user has no access to the model itselfFootnote 3 by sending an accessDenied event followed by a failure event. Otherwise, a success event is sent. Upon an update request, the server also checks whether the client's model is already up to date; if it is, an upToDate event is produced followed by a success event.
- Commit. The process of receiving commit requests consists of the Idle, Locked, Synchronization and Unlock hierarchical states:
  - Idle state accepts commit requests from any collaborator. It produces an accessDenied event when the user has no access to the model, or a needToUpdate event when the user needs to update his/her model locally to be able to commit the modifications. Both events are followed by a failure event. Otherwise, the system locks the model to prevent the concurrent processing of any other commit requests and activates the Locked state.
  - Locked state executes \(\mathcal {T}_{\textsc {PutBack}{}}\) in the name of the commit owner (x) and rejects the commit requests of any collaborator (y) by sending an otherCommitUnderExecution event with a failure event. After the execution of the transformation, if the changes violated the access control policy, a policyViolated event is sent to x and the system steps to the Unlock state. Otherwise, a putback event leads the system to the Synchronization state.
  - Synchronization state is responsible for sending the success event to the owner of the commit and executing \(\mathcal {T}_{\textsc {Get}{}}\) to propagate the changes to the other collaborators (denoted by the output event get for all collaborators except the owner of the commit in the state Sync). Then, the system moves on to the Unlock state.
  - Unlock state is responsible for unlocking the model in all cases (unlock event). If the system reaches this state after a policy violation, the state produces a failure event before the unlock. It also rejects any other commit request by sending an otherCommitUnderExecution event with a failure event.

Client (Fig. 7). Its state machine cooperates with the server using sent and received events that (i) trigger an operation (commit, update, checkout); (ii) indicate failures (needToUpdate); and (iii) indicate server responses (success, failure). It consists of the Checkout, Idle and Update hierarchical states:

- Checkout State. First, clients need to check out their models by sending a checkout event in the Checkout state. Depending on the server response, the client can move to the Idle state.
- Idle State. In the Idle state, clients can commit their changes or update their models by sending commit or update events. All the events produced by the server can be received, but only the needToUpdate event restricts the behaviour of the client by moving it to the Update state.
- Update State. Clients need to initiate an update request by sending an update event to be able to commit their changes again.
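The server's commit and update handling can be sketched in Python as follows. CollaborationServer, its revision counters (a stand-in for deciding when needToUpdate is sent) and the callbacks for the two transformations are hypothetical; the returned event lists mimic the output events of Fig. 6. Unlike the state machine, the sketch checks freshness while holding the lock, so that no other commit can be applied between that check and PutBack.

```python
import threading
from typing import Callable, Dict, List


class CollaborationServer:
    """Sketch of the server's commit and update handling (names are hypothetical)."""

    def __init__(self, users: List[str], put_back: Callable[[str, dict], bool],
                 get: Callable[[str], None]) -> None:
        self.users = list(users)
        self.put_back = put_back  # runs T_PutBack for a user; returns False on policy violation
        self.get = get            # runs T_Get to refresh one user's front model
        self.revision = 0
        self.client_revision: Dict[str, int] = {u: 0 for u in self.users}
        self._lock = threading.Lock()

    def update(self, user: str) -> List[str]:
        if user not in self.users:
            return ["accessDenied", "failure"]
        if self.client_revision[user] == self.revision:
            return ["upToDate", "success"]
        self.client_revision[user] = self.revision
        return ["success"]

    def commit(self, user: str, change: dict) -> List[str]:
        if user not in self.users:
            return ["accessDenied", "failure"]
        # A commit arriving while another one holds the lock is rejected, not queued.
        if not self._lock.acquire(blocking=False):
            return ["otherCommitUnderExecution", "failure"]
        try:
            if self.client_revision[user] != self.revision:
                return ["needToUpdate", "failure"]  # the model was never really locked for this
            if not self.put_back(user, change):
                return ["policyViolated", "failure", "unlock"]
            # Synchronization: acknowledge the owner, then run Get for everyone else.
            self.revision += 1
            self.client_revision[user] = self.revision
            events = ["success"]
            for other in self.users:
                if other != user:
                    self.get(other)
                    events.append(f"get.{other}")
            events.append("unlock")
            return events
        finally:
            self._lock.release()  # the model is unlocked on every path, even on exceptions
```

The non-blocking acquire models the otherCommitUnderExecution rejection of Criterion 3, and the finally clause corresponds to the Unlock state being reached in all cases.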

5.2 Correctness criteria

To address challenge C4.1, we describe the correctness criteria that the collaboration scheme needs to satisfy:

- Criterion 1. The scheme needs to be deadlock free (i.e. all the locks need to be unlocked during a commit operation).
- Criterion 2. The scheme needs to be livelock free (i.e. all the operations need to finish at some point and lead the scheme to an idle state).
- Criterion 3. A commit operation shall be rejected while another commit is under execution.
- Criterion 4. A commit operation shall propagate the changes to all collaborators.
- Criterion 5. Clients need to initiate an update operation when it is required by the server.

Note that Criteria 1 and 2 are required to ensure that the collaboration can run without any manual intervention. Criterion 3 prevents a commit from overwriting the changes of a previous commit without notification. Criterion 5 forces clients to avoid conflicting commits.

5.3 Proof of correctness

In accordance with challenge C4.1, we formalized our collaboration scheme as communicating sequential processes (CSP) [44, 45], described in “Appendix D”, to prove its correctness. CSP is a formal specification language for concurrent programs or systems in which communications and interactions are described in an algebraic style.

The Server and Clients processes define the behaviour of exactly 1 server and n clients, respectively. The collaboration is specified as a concurrent execution (denoted by ||) of the server and clients where the processes synchronize on a given set of events SyncEvents: {commit, update, checkout, accessDenied, policyViolated, needToUpdate, failure, success}.

For the analysis, we used the FDR4 tool [30] to evaluate assertions over certain properties of the processes.

Criteria 1 and 2 require the entire collaboration process (Collaboration) to be deadlock and livelock free. To check these properties, the \(:[deadlock\text { }free]\) and \(:[divergence\text { }free]\) built-in assertions are used, respectively.

The remaining criteria require evaluating whether the process formally refines a certain event sequence according to the CSP models, namely the traces and failures models. For that purpose, we use the \(\mathbb {T:}\) and \(\mathbb {F:}\) structures, respectively, where

- \(\mathbf P \, \mathbb {T:} <a,b,c>\) means that process \(\mathbf P \) must be able to perform the ordered sequence of the events a, b, c and only these events.
- \(\mathbf P \, \mathbb {F:} <a,b,c>\) means that process \(\mathbf P \) must not be able to refuse to perform the ordered sequence of events a, b, c without performing any other event.

To check the remaining criteria, we introduce the \(\lnot \) symbol to negate assertions, the \(\backslash \) symbol to hide events from a process, and \(\mathcal {E}\) to denote the events provided by all processes. The combination of these symbols allows us to evaluate the processes in the context of certain events, e.g. \(\mathbf P \backslash (\mathcal {E}\setminus \{a,b,c\})\) means that all events except a, b and c are hidden from the process \(\mathbf P \).

Criterion 3 requires that after a \(\mathcal {T}_{\textsc {PutBack}{}}\) has been executed, another \(\mathcal {T'}_{\textsc {PutBack}{}}\) cannot be executed before the model is unlocked.
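FDR4 checks such properties exhaustively over all traces of the CSP model. As a much weaker, runtime-monitoring analogue, the Python sketch below checks Criterion 3 on a single recorded trace, mimicking hiding by projecting the trace onto the putback and unlock channels. The event naming (channel.collaborator) follows the notation of Sect. 5.1; the functions themselves are hypothetical and are not part of the CSP model.

```python
from typing import List, Sequence, Set


def hide_all_but(trace: Sequence[str], visible_channels: Set[str]) -> List[str]:
    """Mimic CSP hiding: keep only events whose channel name is in visible_channels."""
    return [e for e in trace if e.split(".")[0] in visible_channels]


def satisfies_criterion3(trace: Sequence[str]) -> bool:
    """After a putback, no further putback may occur before the next unlock."""
    locked = False
    for event in hide_all_but(trace, {"putback", "unlock"}):
        if event.startswith("putback"):
            if locked:
                return False
            locked = True
        else:  # an unlock event
            locked = False
    return True
```

A single passing trace says nothing about other interleavings, which is why the exhaustive refinement check is needed.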

Criterion 4 requires \(\mathcal {T}_{\textsc {Get}{}}\) to be executed for all collaborators other than the owner of the commit after a successfully executed \(\mathcal {T}_{\textsc {PutBack}{}}\) but before the model is unlocked. As the synchronization starts with collaborator 1 and continues with collaborator 2 and so on, the collaboration scheme needs to execute it up to the last collaborator, namely n.

To satisfy Criterion 5, after a commit operation has been rejected by the server with a needToUpdate message, the client (i) cannot commit again and (ii) must be able to initiate an update operation:

Since several events are hidden in these assertions, the FDR4 tool was able to reduce the number of states and transitions that need to be traversed.

We evaluated the assertions for \(n=5\) users. The results are presented in Table 2. Checking the deadlock and livelock properties requires exploring all events and states; hence, the tool traversed almost 90,000 states and 230,000 transitions to prove these properties. The remaining assertions required traversing at most 500 states and 1,100 transitions. All assertions were evaluated in less than 0.51 seconds, and none of them failed.

Based on these results, we conclude that our collaboration scheme for access control management satisfies the correctness criteria.

6 Realization of Collaboration Scheme

Source code and further details are available at https://tinyurl.com/sosym-access-control-source.

In accordance with challenge 4.2, our goal is to provide tool support for enforcing fine-grained model access control rules in offline and online scenarios by realizing the collaboration scheme introduced in Sect. 5.

6.1 Offline collaboration

In the offline scenario, models are serialized (e.g. in XMI format) and stored in a version control system (VCS). Users work on local working copies of the models in long transactions that are concluded by commits. The goal of our approach is to manage fine-grained access control on top of the existing security layers available in the VCS.

6.1.1 Realization

The concept of gold and front models is extended to the repository level, yielding two types of repositories, called gold and front repositories, as depicted in Fig. 8. The gold repository contains complete information about the gold models, but it is not accessible to collaborators. Each user has a front repository containing a full version history of their front models. New model versions are first added to the front repository; then the changes introduced in these revisions are interleaved into the gold models using the PutBack transformation. Finally, the new gold revision is propagated to the front repositories of the other users using the Get transformation. As a result, each collaborator continues to work with a dedicated VCS as before and may remain unaware that this front repository contains only filtered and obfuscated information.
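To make the data flow between gold and front repositories concrete, the Python sketch below models each repository head as a dictionary from object identifiers to values. It is a deliberately simplified stand-in under stated assumptions: the `Policy` class, the per-object read and write sets, and the `get`/`put_back`/`commit` functions are hypothetical, and the real Get and PutBack are bidirectional model transformations that also obfuscate data, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """Hypothetical per-user policy: object ids the user may read and write."""
    readable: set
    writable: set


def get(gold, policy):
    """Simplified Get: the front model keeps only readable objects."""
    return {oid: val for oid, val in gold.items() if oid in policy.readable}


def put_back(gold, old_front, new_front, policy):
    """Simplified PutBack: merge front changes into gold, checking write access."""
    changed = {oid for oid in old_front.keys() | new_front.keys()
               if old_front.get(oid) != new_front.get(oid)}
    if not changed <= policy.writable:
        raise PermissionError(f"write violation on {sorted(changed - policy.writable)}")
    result = dict(gold)
    for oid in changed:
        if oid in new_front:
            result[oid] = new_front[oid]
        else:
            result.pop(oid, None)  # object deleted in the front model
    return result


def commit(gold, fronts, policies, user, new_front):
    """Front commit, then PutBack into gold, then Get for every other user."""
    new_gold = put_back(gold, fronts[user], new_front, policies[user])
    new_fronts = dict(fronts)
    new_fronts[user] = new_front
    for other in fronts:
        if other != user:
            new_fronts[other] = get(new_gold, policies[other])
    return new_gold, new_fronts
```

For example, with a gold model `{"t1": "turbine", "s1": "secret"}` and a user allowed to read and write only `t1`, committing `{"t1": "turbine-v2"}` updates the gold model and every other front model, whereas adding `s1` to the front model raises `PermissionError`.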

Existing access control mechanisms (such as firewalls) are used to ensure that the gold model is accessible to superusers only, and each regular user can only access their own front repository. These regular users can use any compatible VCS client to communicate with their front repository, being unaware of collaboration mechanisms in the background.

This scheme enforces the access control rules even if users access their personal front repositories using standard VCS clients and off-the-shelf modelling tools. Nevertheless, optional client-side collaboration tools may still be used to improve the user experience, e.g. for smart model merging [19], user-friendly lock management [13] or preemptive warnings about potential write access violations, which can greatly enhance the usability and applicability of the offline scenario.

6.1.2 Realization of the collaboration scheme

In the current prototype, our collaboration scheme is realized by extending an off-the-shelf VCS server, namely Subversion [2]. Subversion provides the following features of the collaboration scheme out of the box:

-

File-level access control

sends an accessDenied event to a collaborator whenever their access to a certain file (which contains models) is denied.

-

Version control

allows users to download files by sending a checkout event, submit their changes by sending a commit event, and update their files by sending an update event.

-

Version check

checks the versions of the files and, upon an update, sends an upToDate event to collaborators whose files are already up to date or, upon a rejected commit, sends a needToUpdate event to collaborators who need to update first.

-

File-level locking

allows users to lock files by sending a lock event, which causes commits initiated by other users to be rejected. Users can also remove their locks by sending an unlock event.

-

Handling Multiple Requests

allows users to initiate multiple requests simultaneously, which the server can accept.

-

Final Notification

notifies the users about the result of their requested operations by sending a success or a failure event.

As the checkout and update operations of the collaboration scheme are fully handled by Subversion, we only need to integrate \(\mathcal {T}_{\textsc {PutBack}{}}\) and \(\mathcal {T}_{\textsc {Get}{}}\) into the commit operation to enforce fine-grained access control and propagate the changes.

Hooks are programs triggered by repository events such as lock, unlock or commit. A hook may be set up to be triggered before such an event (with the possibility of influencing its outcome, e.g. cancelling it upon failure) or directly afterwards (when the event is guaranteed to have happened). The following hook programs are executed upon a commit operation.
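As an illustration of the hook mechanism, the Python sketch below outlines a Subversion pre-commit hook script. It relies on documented Subversion behaviour: the pre-commit hook receives the repository path and the transaction name, a non-zero exit status aborts the commit, and its standard error is reported to the committing client; `svnlook changed -t TXN REPOS` lists the changed paths of the pending transaction. The function `check_model_change` is a hypothetical placeholder where \(\mathcal {T}_{\textsc {PutBack}{}}\) would be invoked; it is not part of our prototype's published interface.

```python
import subprocess
import sys


def changed_entries(svnlook_output):
    """Parse `svnlook changed` output lines: two status columns, two spaces, path."""
    entries = []
    for line in svnlook_output.splitlines():
        if len(line) > 4:
            entries.append((line[0], line[4:]))  # (content status, path)
    return entries


def check_model_change(repos, txn, status, path):
    """Hypothetical placeholder for running PutBack on one changed file.

    Returns an error message on a write-policy violation, or None if allowed.
    """
    return None


def main(argv):
    repos, txn = argv[1], argv[2]  # pre-commit receives REPOS-PATH and TXN-NAME
    out = subprocess.run(["svnlook", "changed", "-t", txn, repos],
                         capture_output=True, text=True, check=True).stdout
    for status, path in changed_entries(out):
        error = check_model_change(repos, txn, status, path)
        if error:
            print(error, file=sys.stderr)  # shown to the committing client
            return 1  # non-zero exit status aborts the commit
    return 0


# Run only when invoked by Subversion with the two pre-commit arguments.
if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv))
```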

Pre-Commit Hook. \(\mathcal {T}_{\textsc {PutBack}{}}\) is invoked by the pre-commit hook, which executes when a user attempts to commit a new revision \(M^{r'}_F\) (revision \(r'\) of a model \(M_F\)). To enforce the access control policies, this hook performs the following steps, corresponding to Fig. 9:

-

1.

Parent revision \(M^{r}_F\) of \(M^{r'}_F\) is identified.

-

2.

Revision \(M^{r}_F\) is traced to the corresponding revision \(M^{R}_G\) in the gold repository.

-

3.

The hook attempts to put a file-level lock on \(M_G\) in the gold repository.

-

(a)

If the locking attempt fails, the hook terminates and sends an otherCommitUnderExecution event.

-

(b)

Otherwise, the lock on \(M_G\) is activated by sending a lock event, and the hook continues.

-

4.

\(\mathcal {T}_{\textsc {PutBack}{}}\) is executed between \(M^{r'}_F\) and \(M^{R}_G\) in the gold repository in order to reflect the changes performed in the new commit.

-

(a)

If \(\mathcal {T}_{\textsc {PutBack}{}}\) detects any attempt to perform model modifications that violate write permissions, the commit to the front repository is terminated by sending a policyViolated event.

-

(b)

Otherwise, the commit is deemed successful, and \(M^{R'}_G\) is committed to the gold repository (with metadata such as committer name and commit message copied over from the original front repository commit).

-

5.

Finally, the hook finishes successfully and lets the VCS server handle the request.
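The control flow of these pre-commit steps can be summarized by the Python sketch below. It uses a hypothetical in-memory `GoldRepo` instead of Subversion, takes the front-to-gold revision trace as a plain dictionary, and receives the PutBack transformation as a function argument; releasing the lock after a policy violation is our assumption, as the steps above do not state it. On success, the lock is intentionally kept, since the post-commit hook removes it.

```python
class GoldRepo:
    """Hypothetical in-memory stand-in for the gold repository."""

    def __init__(self, revisions):
        self.revisions = revisions  # gold revision number -> model
        self.locks = set()          # names of locked gold models

    def try_lock(self, name):
        if name in self.locks:
            return False
        self.locks.add(name)
        return True


def pre_commit(gold, name, trace_to_gold, front_parent, new_front, put_back):
    """Sketch of pre-commit steps 1-5; returns (event, new gold revision)."""
    gold_rev = trace_to_gold[front_parent]   # steps 1-2: trace r to R
    if not gold.try_lock(name):              # step 3(a)
        return "otherCommitUnderExecution", None
    # step 3(b): the lock is held from here on
    try:
        merged = put_back(gold.revisions[gold_rev], new_front)  # step 4
    except PermissionError:                  # step 4(a)
        gold.locks.discard(name)             # assumption: release the lock
        return "policyViolated", None
    new_rev = max(gold.revisions) + 1        # step 4(b): commit R'
    gold.revisions[new_rev] = merged
    return "success", new_rev                # step 5; unlock happens post-commit
```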

Post-Commit Hook. \(\mathcal {T}_{\textsc {Get}{}}\) is invoked by the post-commit hook, which synchronizes all front models \(M^{r}_F\) with the new revision \(M^{R'}_G\) of the gold model. This hook is triggered after a commit of \(M^{R'}_G\) has finished successfully in the gold repository and performs the following steps, corresponding to Fig. 10, to propagate the new changes.

-

1.

Parent revision \(M^{R}_G\) of \(M^{R'}_G\) is identified.

-

2.

The hook iterates over the front repositories and executes the following steps for each of them. If the commit to the gold repository was initiated by a front repository, the originating front repository is skipped.

-

(a)

Revision \(M^{R}_G\) is traced to the corresponding front revision \(M^{r}_F\) in the front repository.

-

(b)

\(\mathcal {T}_{\textsc {Get}{}}\) is executed between \(M^{R'}_G\) and \(M^{r}_F\) in order to reflect the changes performed in the commit. If \(M^r_F\) does not exist, it is handled as an empty model.

-

(c)

The new revision \(M^{r'}_F\) of the model is committed to the front repository (with metadata such as committer name and commit message copied over from the original front repository commit).

-

3.

The hook removes the lock from \(M^{R'}_G\) by sending an unlock event.

-

4.

Finally, it finishes successfully and lets the VCS server handle the request.
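The post-commit steps can be sketched in Python in the same hypothetical in-memory setting. Here `FrontRepo` records, for every gold revision, the corresponding front revision (the trace used in step 2(a)); a missing front model is treated as empty, as in step 2(b); and the Get transformation is passed in as a function. All class and parameter names are illustrative assumptions.

```python
class FrontRepo:
    """Hypothetical in-memory front repository of one user."""

    def __init__(self, user):
        self.user = user
        self.revisions = {}  # front revision -> model
        self.log = {}        # front revision -> copied commit metadata
        self.from_gold = {}  # gold revision -> front revision

    def commit(self, model, gold_rev, metadata):
        rev = max(self.revisions, default=0) + 1
        self.revisions[rev] = model
        self.log[rev] = dict(metadata)  # committer name, message, ...
        self.from_gold[gold_rev] = rev
        return rev


def post_commit(gold_model, new_gold_rev, parent_gold_rev, fronts, origin,
                get, locks, name, metadata):
    """Sketch of post-commit steps 1-4 for one gold model."""
    for repo in fronts:                                  # step 2
        if repo.user == origin:
            continue                                     # skip the originating repo
        front_rev = repo.from_gold.get(parent_gold_rev)  # step 2(a)
        old_front = repo.revisions.get(front_rev, {})    # missing -> empty model
        new_front = get(gold_model, repo.user, old_front)  # step 2(b)
        repo.commit(new_front, new_gold_rev, metadata)   # step 2(c)
    locks.discard(name)                                  # step 3: unlock
    return "success"                                     # step 4
```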

6.1.3 Discussion

It is worth discussing the following properties of the offline collaboration framework.

Generality. Our solution is general and adaptable to any VCS that supports checkout, update and commit operations (possibly under different names).

Server Response. Users receive a response to their commit as soon as the collaboration server has attempted to propagate the changes back to the gold repository. If any access control rule is violated, the pre-commit hook fails. In the last phase of the pre-commit hook, the VCS declines the commit to the gold repository if any of the modified files are locked in the gold repository; in this case, the hook fails as well, relying on the VCS-specific file-level locks. In contrast, if everything goes well, users do not need to wait for the synchronization with the remaining front repositories.

Multiple Models in a Commit. A single commit may update several models at once. In this case, the hooks are invoked for each model in the commit.

Non-blocking Commit. A commit operation does not block update and checkout operations, as previous versions remain readable in the front repositories.

Models stored among other project files. Our solution supports storing models along with non-model files in the repositories. The hooks can be parameterized with file extensions to determine whether a file needs to be handled as a model. When a file is not a model, it is simply copied from the gold repository to the front repositories.
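A minimal Python sketch of the extension-based dispatch described above; the extension set `{".xmi"}` and the `to_front` callback standing in for the Get transformation are hypothetical hook parameters, not the prototype's actual configuration.

```python
from pathlib import PurePosixPath

MODEL_EXTENSIONS = {".xmi"}  # hypothetical hook parameter


def is_model(path, extensions=MODEL_EXTENSIONS):
    """Decide by file extension (case-insensitively) whether a file is a model."""
    return PurePosixPath(path).suffix.lower() in extensions


def propagate(path, content, to_front):
    """Models go through the lens (`to_front`); other files are copied as-is."""
    return to_front(content) if is_model(path) else content
```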

Correspondence Relation. Identifying the correspondences between model assets of the front and gold models, which are stored independently, is a challenging task, as addressed in C2.2.

Our approach currently uses specific attributes to provide permanent identifiers. Such a permanent identifier is preserved across model revisions and lens mappings and can therefore be used to pre-populate the object correspondence relation. In our running example, each object has a unique id attribute. Note that unlike EMF, some modelling platforms (e.g. IFC [32]) automatically provide such permanent identifiers.
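The following Python sketch illustrates how permanent identifiers can pre-populate the object correspondence relation. Objects are represented as dictionaries, and the identifying attribute defaults to `id` as in the running example; the function name and the treatment of unmatched front objects as newly created ones are illustrative assumptions.

```python
def correspondence(front_objects, gold_objects, key="id"):
    """Pair front objects with gold objects that share a permanent identifier.

    Returns (matched pairs, front objects without a gold counterpart).
    """
    gold_by_id = {}
    for obj in gold_objects:
        if obj[key] in gold_by_id:
            raise ValueError(f"duplicate identifier {obj[key]!r}")
        gold_by_id[obj[key]] = obj
    matched, created = [], []
    for obj in front_objects:
        target = gold_by_id.get(obj[key])
        if target is None:
            created.append(obj)  # no gold counterpart: newly created object
        else:
            matched.append((obj, target))
    return matched, created
```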

While requiring permanent identifiers is a limitation of the approach, it is only relevant for modelling platforms that do not themselves provide this kind of traceability, and only in the offline collaboration scenario. Being able to identify model objects is a relatively low barrier for modelling languages; e.g. the original wind turbine language includes a unique identifier for all model objects.

Authorization Files. We have taken the design decision that the authorization files are stored and versioned in the same VCS as the models. Thus, policy files may evolve naturally along with the evolution of the contents of the repository.

Policy files are writable by superusers only, but readable by every user; this means that offline clients may evaluate security rules on their offline copies themselves. Note that we do not believe that this openness of the security policy raises major security concerns, as security by obscurity is not a good security principle. In any case, the names and parameters of security rules should not themselves contain sensitive design information.

6.2 Online collaboration

In the online scenario, several users can simultaneously display and edit the same model in short transactions using a web-based modelling tool, where changes are propagated immediately to other users during collaborative modelling sessions. In contrast to the offline scenario, where users manipulate local copies of the models, models are kept in server memory and users access them directly on the server. In accordance with C3.1, the goal of our approach is to incrementally enforce fine-grained model access control rules and to propagate changes on the fly between the view models of different users.

6.2.1 Technical realization

During a collaborative modelling session, a model kept in server memory for remote access is also called a whiteboard, as depicted in Fig. 11. The collaboration server hosts a number of whiteboard sessions, each equipped with a gold model. Each user connected to a whiteboard is presented with their own front model, connected to the gold model via a lens relationship. The front models are initially created using Get. If a user modifies their front model, the changes are propagated to the gold model using PutBack and further to the other front models using Get again. In online collaboration, these lens operations are executed continuously and efficiently as a live transformation [16]; thus, users always see an up-to-date view of the model during the editing session.
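The propagation cycle of a whiteboard (initial Get on joining, PutBack on edit, Get for the other users) is outlined in the Python sketch below. The `Whiteboard` class and its callback signatures are hypothetical; in particular, it recomputes the other front models from scratch after every edit, whereas our approach executes the lenses incrementally as a live transformation.

```python
class Whiteboard:
    """Hypothetical in-memory whiteboard: one gold model, one front per user."""

    def __init__(self, gold, get, put_back):
        self.gold = gold
        self.get = get            # get(gold, user) -> front model
        self.put_back = put_back  # put_back(gold, user, new_front) -> new gold
        self.fronts = {}

    def join(self, user):
        self.fronts[user] = self.get(self.gold, user)  # initial Get
        return self.fronts[user]

    def edit(self, user, new_front):
        self.gold = self.put_back(self.gold, user, new_front)  # PutBack
        self.fronts[user] = new_front
        for other in self.fronts:
            if other != user:
                self.fronts[other] = self.get(self.gold, other)  # Get again
```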

Similarly to modern collaborative editing tools (such as Google Sheets [15]), whiteboards can be operated transparently: whenever the first user attempts to open a given model, a new whiteboard is started; subsequent users opening the model join the existing whiteboard. When all users have left, the whiteboard can be disposed of. The model may be persisted periodically or on demand (“save button”). The session manager component enables collaborators to start, join or leave whiteboard sessions and to persist models to disk.
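The whiteboard life cycle handled by the session manager can be sketched in Python as follows. The `SessionManager` class is hypothetical, and persisting the model when the last user leaves is an assumption made for illustration; the text above only states that models may be persisted periodically or on demand.

```python
class SessionManager:
    """Hypothetical session manager: a whiteboard starts when the first user
    opens a model and is disposed of when the last user leaves."""

    def __init__(self, persist):
        self.persist = persist  # callback that saves a model to disk
        self.sessions = {}      # model name -> set of connected users

    def open(self, model, user):
        """Join the whiteboard of `model`; returns True if a new one started."""
        users = self.sessions.setdefault(model, set())
        started = not users
        users.add(user)
        return started

    def leave(self, model, user):
        """Leave the whiteboard; returns True if it was disposed of."""
        users = self.sessions[model]
        users.discard(user)
        if users:
            return False
        self.persist(model)  # assumption: save before disposing
        del self.sessions[model]
        return True
```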

6.2.2 Realization of the collaboration scheme

To address challenge 4.2, we now discuss how online collaboration realizes the collaboration scheme.

-

1.