Abstract

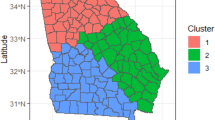

This work focuses on Generalized Linear Mixed Models that incorporate spatiotemporal random effects structured via a Factor Model (FM) with a nonlinear interaction between latent factors. A central aspect is the modeling of continuous responses from the Normal, Gamma, and Beta distributions; the discrete cases (Bernoulli and Poisson) have been explored previously in the literature. Spatial dependence is established through Conditional Autoregressive (CAR) modeling for the columns of the loadings matrix. Temporal dependence is defined through an autoregressive AR(1) process for the rows of the factor scores matrix. Incorporating the nonlinear interaction allows us to capture more detailed associations between regions and factors, and regions are grouped according to the impact of the main factors or their interaction. Identification issues arising in the FM must be addressed, and this study discusses strategies to handle them. To evaluate the performance of the models, a comprehensive simulation study, including a Monte Carlo scheme, is conducted. Lastly, a real application is presented using the Beta model to analyze ENEM, a nationwide high school exam administered to students in Brazil between 2015 and 2021. ENEM scores are accepted by many Brazilian universities for admission purposes. The real analysis aims to estimate and interpret the behavior of the factors and to identify groups of municipalities that share similar associations with them.

Data Availability

Data explored in the study are available upon reasonable request to the authors.

References

Amorim EC, Mayrink VD (2020) Clustering non-linear interactions in factor analysis. METRON 78:329–352

Assuncao RM, Krainski E (2009) Neighborhood dependence in Bayesian spatial models. Biom J 51(5):851–869

Banerjee S, Carlin BP, Gelfand AE (2004) Hierarchical modeling and analysis for spatial data, 2nd edn. CRC Press, New York

Barnett AG, Dobson AJ (2018) An introduction to generalized linear models, 3rd edn. Chapman and Hall/CRC, Boca Raton

Barreto-Souza W, Mayrink VD, Simas AB (2021) Bessel regression and bbreg package to analyze bounded data. Aust N Z J Stat 63(4):685–706

Besag J (1974) Spatial interaction and the statistical analysis of lattice systems. J Roy Stat Soc B 36(2):192–236

Besag J, York J, Mollié A (1991) Bayesian image restoration, with two applications in spatial statistics (with discussion). Ann Inst Stat Math 43:1–59

Brown TA (2015) Confirmatory factor analysis for applied research, 2nd edn. The Guilford Press, New York

Corrales ML, Cepeda-Cuervo E (2019) A Bayesian approach to mixed gamma regression models. Revista Colombiana de Estadística 42(1):81–99

Datta A, Banerjee S, Hodges JS, Gao L (2019) Spatial disease mapping using directed acyclic graph auto-regressive (DAGAR) models. Bayesian Anal 14(4):1221–1244

Ferrari SLP, Cribari-Neto F (2004) Beta regression for modeling rates and proportions. J Appl Stat 31(7):799–815

Ferreira MPS, Mayrink VD, Ribeiro ALP (2021) Generalized mixed spatio-temporal modeling: Random effect via factor analysis with nonlinear interaction for cluster detection. Spat Stat 43:100515. https://doi.org/10.1016/j.spasta.2021.100515

Figueroa-Zúñiga JI, Arellano-Valle RB, Ferrari SLP (2013) Mixed beta regression: a Bayesian perspective. Comput Stat Data Anal 61(7):137–147

Gamerman D, Lopes HF (2006) Markov chain Monte Carlo: Stochastic simulation for Bayesian inference, 1st edn. Chapman and Hall/CRC, Boca Raton

Gamerman D, Salazar E (2013) Hierarchical modeling in time series: the factor analytic approach. Oxford University Press, Oxford, pp 167–182

Gelfand AE, Smith AFM (1990) Sampling-based approaches to calculating marginal densities. J Am Stat Assoc 85(410):398–409

Geman S, Geman D (1984) Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans Pattern Anal Mach Intell 6:721–741

George EI, McCulloch RE (1993) Variable selection via Gibbs sampling. J Am Stat Assoc 88(423):881–889

George EI, McCulloch RE (1997) Approaches for Bayesian variable selection. Stat Sinica 7:339–373

Geweke J (1992) Evaluating the accuracy of sampling-based approaches to the calculation of posterior moments, vol 4. Clarendon Press, Oxford, pp 169–193

Green PJ (1995) Reversible jump Markov chain Monte Carlo computation and Bayesian model determination. Biometrika 82(4):711–732

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications. Biometrika 57:97–109

Johnson RA, Wichern DW (2007) Applied multivariate statistical analysis, 6th edn. Pearson, Upper Saddle River

Lopes HF (2003) Expected posterior priors in factor analysis. Braz J Probab Stat 17(1):91–105

Lopes HF (2003) Factor models: an annotated bibliography. In: Lopes HF (ed) ISBA Bull, vol 10. ISBA, Durham, pp 7–10

Lopes HF (2014) Modern Bayesian factor analysis, chap 5. In: Jeliazkov I, Yang XS (eds) Bayesian inference in the social sciences. Wiley, New York, pp 117–158

Lopes HF, Carvalho CM (2007) Factor stochastic volatility with time varying loadings and Markov switching regimes. J Stat Plan Inference 137(10):3082–3091

Lopes HF, Salazar E, Gamerman D (2008) Spatial dynamic factor analysis. Bayesian Anal 3(4):759–792

Lopes HF, Gamerman D, Salazar E (2011) Generalized spatial dynamic factor models. Comput Stat Data Anal 55(3):1319–1330

Lopes HF, Schmidt AM, Salazar E, Gomez M, Achkar M (2012) Measuring the vulnerability of the Uruguayan population to vector-borne diseases via spatially hierarchical factor models. Ann Appl Stat 6(1):284–303

Mayrink VD, Gamerman D (2009) On computational aspects of Bayesian spatial models: influence of the neighboring structure in the efficiency of MCMC algorithms. Comput Stat 24(4):641–669

Mayrink VD, Lucas JE (2013) Sparse latent factor models with interactions: analysis of gene expression data. Ann Appl Stat 7(2):799–822

Mayrink VD, Panaro RV, Costa MA (2021) Structural equation modeling with time dependence: an application comparing Brazilian energy distributors. AStA Adv Stat Anal 105(2):353–383

McCulloch CE, Neuhaus JM (2015) Generalized Linear Mixed Models, 2nd edn. Elsevier, Amsterdam, pp 845–852

Metropolis N, Rosenbluth AW, Rosenbluth MN, Teller AH, Teller E (1953) Equation of state calculations by fast computing machines. J Chem Phys 21(6):1087–1092

Mitchell TJ, Beauchamp JJ (1988) Bayesian variable selection in linear regression. J Am Stat Assoc 83(404):1023–1032

Nelder JA, Wedderburn RWM (1972) Generalized linear models. J R Stat Soc Ser A 135(3):370–384

Park HS, Dailey R, Lemus D (2002) The use of exploratory factor analysis and principal component analysis in communication research. Hum Commun Res 28(4):562–577

R Core Team (2023) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Roberts GO, Sahu SK (1997) Updating schemes, correlation structure, blocking and parameterization for the Gibbs sampler. J Roy Stat Soc B 59(2):291–317

Rue H, Held L (2005) Gaussian Markov random fields: theory and applications, 1st edn. Chapman and Hall/CRC, Boca Raton

Wall MM (2004) A close look at the spatial structure implied by the CAR and SAR models. J Stat Plan Inference 121(2):311–324

Acknowledgements

The authors would like to thank CAPES (Coordenação de Aperfeiçoamento de Pessoal de Nível Superior, Brasil), CNPq (Conselho Nacional de Desenvolvimento Científico e Tecnológico, Brasil), and FAPEMIG (Fundação de Amparo à Pesquisa do Estado de Minas Gerais, Brasil) for their support to develop this research. The authors also thank two anonymous referees for constructive comments to improve this work.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Details regarding prior specifications

As described in Ferreira et al. (2021), constraints are necessary in the priors of \(\alpha \) and \(\eta \) to ensure model identifiability. We use an auxiliary variable related to the application to define \(K+1\) disjoint groups \(G_1, G_2, \ldots , G_K\), and \(G_{Extra} = G_E\), such that \(G_1 \cup G_2 \cup \cdots \cup G_K \cup G_E = \{1, 2, \ldots , L\}\). Each group \(G_k\), with \(k \ne E\), consists of regions that are affected exclusively by the kth factor and are not influenced by the interaction or by the other factors. The group \(G_E\) contains the regions whose associations with the main factors and the interaction are unknown. As a result, we set \(\alpha _{lk} = 0\) whenever \(l \notin G_k\) and \(l \notin G_E\). Furthermore, for all \(l \in G_{k}\) with \(k \ne E\), we assume \(\eta _{l \bullet } = \textbf{0}_{1 \times T}\), which is induced by assigning \(p_l\) a Beta prior with probability mass concentrated near 0.
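The constraint pattern can be pictured as a fixed zero/free mask on \(\alpha \). The Python sketch below is only an illustration. It assumes regions are indexed from 0, that the groups are supplied as plain lists, and it uses hypothetical helper names (`loading_mask`, `interaction_allowed`). The second helper only flags the regions that lie outside every \(G_k\). In the model itself, the zero interaction for \(l \in G_k\) is induced softly through the Beta prior on \(p_l\), not imposed as a hard constraint.

```python
import numpy as np


def loading_mask(L, K, groups, extra):
    """L x K boolean mask: True where alpha_{lk} is free, False where it is fixed at 0.

    Hypothetical helper. groups[k] lists the (0-based) regions in G_k; extra lists G_E.
    """
    mask = np.zeros((L, K), dtype=bool)
    for k, members in enumerate(groups):
        mask[np.asarray(members, dtype=int), k] = True   # G_k loads only on factor k
    mask[np.asarray(extra, dtype=int), :] = True          # G_E may load on every factor
    return mask


def interaction_allowed(L, groups):
    """True for regions outside every G_k, i.e. whose eta_{l.} is not pushed towards 0."""
    allowed = np.ones(L, dtype=bool)
    for members in groups:
        allowed[np.asarray(members, dtype=int)] = False
    return allowed
```

For example, `loading_mask(5, 2, [[0, 1], [2, 3]], [4])` frees column 0 for regions 0 and 1, column 1 for regions 2 and 3, and both columns for region 4.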

Given the restrictions on \(\alpha \), the prior in (2) needs to be adjusted accordingly. Let \(\alpha _{0k}\) be the \(L_{0_k} \times 1\) vector of zero loadings in the kth column of \(\alpha \), that is, the elements \(\alpha _{lk} = 0\) for l belonging to neither \(G_k\) nor \(G_E\). Further, let \(\alpha _{\emptyset k}\) be the \(L_{\emptyset _k} \times 1\) vector of non-null loadings in the kth column, that is, the elements \(\alpha _{lk}\) for l belonging to \(G_k\) or \(G_E\). Under this notation, one can rewrite (2) as follows

Let \(\partial _{\emptyset _k}\) denote the set of indices in \(\{1, 2, \ldots , L\}\) that correspond to \(\alpha _{\emptyset k}\), and let \(\partial _{0_k}\) denote the set of indices that correspond to \(\alpha _{0 k}\). Writing \(B = [D_{\alpha } - \rho _{\alpha } W_{\alpha }]^{-1}\), we define \(B_{k,11} = B[\partial _{\emptyset _k}, \partial _{\emptyset _k}]\) as the sub-matrix formed by the rows \(\partial _{\emptyset _k}\) and the columns \(\partial _{\emptyset _k}\) of \(B\). Similarly, \(B_{k,22} = B[ \partial _{0_k }, \partial _{0_k }]\), \(B_{k,12} = B[ \partial _{\emptyset _k }, \partial _{0_k }]\), and \(B_{k,21} = B[ \partial _{0_k }, \partial _{\emptyset _k }]\). In terms of dimension, \(B_{k,11}\) is \(L_{\emptyset _k} \times L_{\emptyset _k}\), \(B_{k,22}\) is \(L_{0_k} \times L_{0_k}\), \(B_{k,21}\) is \(L_{0_k} \times L_{\emptyset _k}\), and \(B_{k,12}\) is \(L_{\emptyset _k} \times L_{0_k}\). The following conditional distribution is then obtained

where \(\mu _{\emptyset _k |0_k} = \textbf{0}_{L_{\emptyset _k} \times 1} + B_{k,12}(B_{k,22})^{-1}(\alpha _{0k} -\textbf{0}_{L_{0_k} \times 1}) =\textbf{0}_{L_{\emptyset _k} \times 1}\), because \(\alpha _{0k} = \textbf{0}\), and \(B_{{\emptyset _k}|{0_k}} = B_{k,11} - B_{k,12}B_{k,22}^{-1}B_{k,21}\).
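Numerically, \(B_{\emptyset _k|0_k}\) is a Schur complement of \(B = [D_{\alpha } - \rho _{\alpha } W_{\alpha }]^{-1}\). The small sketch below computes it directly from the index partition. It assumes a proper CAR structure: a symmetric adjacency matrix \(W_{\alpha }\), \(D_{\alpha }\) equal to the diagonal matrix of neighbour counts, and \(|\rho _{\alpha }| < 1\). The function names are hypothetical. The sketch also illustrates a standard Gaussian identity: the Schur complement equals the inverse of the precision sub-block indexed by \(\partial _{\emptyset _k}\). This means the full \(L \times L\) inverse is not actually needed.

```python
import numpy as np


def conditional_cov(D, W, rho, free_idx, zero_idx):
    """B_11 - B_12 B_22^{-1} B_21 for B = (D - rho W)^{-1} (hypothetical helper).

    free_idx plays the role of the free-loading indices, zero_idx of the fixed zeros.
    """
    B = np.linalg.inv(D - rho * W)
    B11 = B[np.ix_(free_idx, free_idx)]
    B12 = B[np.ix_(free_idx, zero_idx)]
    B21 = B[np.ix_(zero_idx, free_idx)]
    B22 = B[np.ix_(zero_idx, zero_idx)]
    return B11 - B12 @ np.linalg.solve(B22, B21)


def conditional_cov_from_precision(D, W, rho, free_idx):
    """Same matrix via the precision: inverse of (D - rho W) restricted to free_idx."""
    Q = D - rho * W
    return np.linalg.inv(Q[np.ix_(free_idx, free_idx)])
```

For large L, the precision route typically inverts only an \(L_{\emptyset _k} \times L_{\emptyset _k}\) block and keeps the sparsity of the CAR precision.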

Appendix B: The MCMC algorithm

This section describes the MCMC algorithm used to sample indirectly from the joint posterior distribution of the proposed model. The algorithm starts from initial values for all unknown parameters and then iterates the following steps.

1. Sample \(\eta ^{*}|\bullet \sim \text{ N}_{T}(M_{\eta ^{*}}, V_{\eta ^{*}})\), \(V_{\eta ^{*}} = [ \sum ^{L}_{l=1}(Z_l / \sigma ^2) \varvec{I}_{T \times T} + \kappa (\lambda )^{-1}]^{-1}\),

\(M_{\eta ^{*}} = V_{\eta ^{*}}\sum _{l=1}^{L}(Z_l/\sigma ^2)(\delta _{l\bullet }^{\top } - \lambda ^{\top }\alpha ^{\top }_{l\bullet })\).

2. Calculate \(p^{*} (Z_{l} = 1|\bullet ) = p(Z_{l} = 1|\bullet ) /[p(Z_{l} = 1|\bullet ) + p(Z_{l} = 0|\bullet )]\), where

\(p(Z_l = 1|\bullet ) \propto \exp \{ -[1/(2\sigma ^2)] [ (\eta ^{*})^{\top }\eta ^{*} - 2(\eta ^{*})^{\top } (\delta _{l\bullet } - \alpha _{l\bullet }\lambda )^\top ]\} \ p(\eta _{l\bullet } = \eta ^{*} | \lambda , Z_l = 1) \ p_l\),

\(p(Z_l = 0|\bullet ) \propto \exp \{ -[1/(2\sigma ^2)][ \textbf{0}^{\top }\textbf{0} - 2\,\textbf{0}^{\top } (\delta _{l\bullet } - \alpha _{l\bullet }\lambda )^\top ]\} \ p(\eta _{l\bullet } = \textbf{0} | \lambda , Z_l = 0) \ (1 - p_l) = 1 - p_l\).

Generate \(u \sim \text{ U }(0,1)\) and set (\(Z_l = 1\), \(\eta _{l\bullet } = \eta ^*\)) if \(u < p^*(Z_l = 1|\bullet )\); otherwise, set (\(Z_l = 0\), \(\eta _{l\bullet } = \textbf{0}\)).

3. Sample \(p_l|\bullet \sim \text{ Beta }(a_p + Z_l, b_p - Z_l + 1)\).

4. Sample \(\beta \). This step depends on the distribution of \(Y_i\): a Metropolis-Hastings (MH) step is needed for the Gamma and Beta models but not for the Normal model. To simplify MH tuning, each \(\beta _j\) is generated separately.

5. Generate \(\psi \). An MH step is necessary for the Gamma and Beta models; in the Normal case, \(\psi \) is drawn directly from its full conditional.

6. Sample \(\delta _{lt}\). An MH step is required for all three models.

7. Generate \(\sigma ^2|\bullet \sim \text{ IG }(a^*_{\sigma ^2}, b^*_{\sigma ^2})\), with \(a^*_{\sigma ^2} = LT/2 + a_{\sigma ^2}\) and \(b^*_{\sigma ^2} = b_{\sigma ^2} + (1/2) \sum ^L_{l=1}\sum ^T_{t=1} (\delta _{lt} - \alpha _{l\bullet }\lambda _{\bullet t} - \eta _{lt})^2\).

8. Sample \((\alpha _{\emptyset k }|\alpha _{0 k} = \textbf{0}, \bullet ) \sim \text{ N}_{L_{\emptyset _k}}(M^*_{\emptyset _k|0_k}, V^*_{\emptyset _k|0_k})\), where

$$\begin{aligned} & V^*_{\emptyset _k|0_k} = [ (1/\tau _{\alpha }) B_{\emptyset _k|0_k}^{-1} + (1/\sigma ^2) \sum _{t=1}^T \lambda ^{2}_{kt} \varvec{I}_{L_{\emptyset _k} \times L_{\emptyset _k}}]^{-1},\\ & M^*_{\emptyset _k|0_k} = (1/\sigma ^2) V^*_{\emptyset _k|0_k} \sum _{t=1}^T ( \delta _{\emptyset _k t} - \eta _{\emptyset _k t} -\sum _{k'\ne k} \alpha _{\emptyset k'}\lambda _{k't})\lambda _{kt}. \end{aligned}$$

9. Generate \(\tau _{\alpha }|\bullet \sim \text{ IG }(a^{*}_{\tau _{\alpha }}, b^{*}_{\tau _{\alpha }})\), where

$$\begin{aligned} a^*_{\tau _{\alpha }} = a_{\tau _{\alpha }} +\sum _{k=1}^{K}L_{\emptyset _k}/2, \ b^*_{\tau _{\alpha }} =b_{\tau _{\alpha }} + (1/2) \sum _{k=1}^{K} \alpha _{\emptyset _k}^{\top } B^{-1}_{\emptyset _k|0_k}\alpha _{\emptyset _k}. \end{aligned}$$

10. Sample \(\lambda _{k \bullet }\). An MH step is necessary for all three models. Let \(\textrm{N}_{T}[x|M,V]\) be the density of \(\textrm{N}_{T} (M, V)\) evaluated at x, and consider the kernel

$$\begin{aligned} & p(\lambda _{k\bullet }|\bullet ) \propto \textrm{N}_{T}[\lambda _{k\bullet }| M_{\lambda }, V_{\lambda }] \ |\kappa (\lambda )|^{-1/2} \ \exp \{ -(1/2) {\eta ^*}^\top \kappa (\lambda )^{-1} \eta ^* \}, \\ & V_{\lambda } = [(1/\tau _{\lambda })(D_{\lambda } -\rho _{\lambda }W_{\lambda }) +\sum _{l=1}^{L} (\alpha _{lk}^{2}/\sigma ^2)\varvec{I}_{T \times T} ]^{-1},\\ & M_{\lambda } = V_{\lambda }(1/\sigma ^2)\sum _{l=1}^{L}\alpha _{lk} (\delta ^\top _{l\bullet } - \eta ^\top _{l\bullet } -\sum _{k'\ne k}\alpha _{lk'}\lambda _{k'\bullet }^\top ). \end{aligned}$$
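Steps 1 to 3 form the interaction block, and they can be sketched compactly in NumPy. This is a hedged illustration, not the authors' code. It assumes the following:

- \(\kappa (\lambda )\) has already been evaluated and is passed in as a \(T \times T\) covariance matrix `kappa`.
- `delta`, `alpha`, and `lam` are arrays of shapes \(L \times T\), \(L \times K\), and \(K \times T\).
- The function names are hypothetical.

Step 2 is followed literally but evaluated on the log scale, so that \(p^{*}\) does not underflow.

```python
import numpy as np


def mvn_logpdf(x, cov):
    """Log density of N_T(0, cov) evaluated at x."""
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (x.size * np.log(2 * np.pi) + logdet + x @ np.linalg.solve(cov, x))


def update_interaction(delta, alpha, lam, Z, p, sigma2, kappa, a_p, b_p, rng):
    """One pass of Steps 1-3 (hypothetical helper): eta*, then (Z_l, eta_{l.}), then p_l."""
    L, T = delta.shape
    resid = delta - alpha @ lam                  # row l: delta_{l.} - alpha_{l.} lambda
    # Step 1: eta* | . ~ N_T(M, V), using the current indicators Z
    V = np.linalg.inv((Z.sum() / sigma2) * np.eye(T) + np.linalg.inv(kappa))
    V = 0.5 * (V + V.T)                          # symmetrize against round-off
    M = V @ (Z @ resid) / sigma2
    eta_star = rng.multivariate_normal(M, V)
    # Step 2: unnormalized log-weights for Z_l = 1 and Z_l = 0
    log_prior = mvn_logpdf(eta_star, kappa)      # log p(eta_{l.} = eta* | lambda, Z_l = 1)
    Z_new = np.zeros(L, dtype=int)
    for l in range(L):
        log_w1 = (-(eta_star @ eta_star - 2 * eta_star @ resid[l]) / (2 * sigma2)
                  + log_prior + np.log(p[l]))
        log_w0 = np.log1p(-p[l])
        prob1 = np.exp(log_w1 - np.logaddexp(log_w1, log_w0))   # p*(Z_l = 1 | .)
        Z_new[l] = int(rng.uniform() < prob1)
    eta = np.outer(Z_new, eta_star)              # eta_{l.} = eta* if Z_l = 1, else 0
    # Step 3: conjugate Beta update for each p_l
    p_new = rng.beta(a_p + Z_new, b_p - Z_new + 1)
    return eta_star, Z_new, eta, p_new
```

Working with `np.logaddexp` keeps the normalization in Step 2 stable even when \(p_l\) is tiny, which is exactly the situation created for regions in \(G_k\) by the Beta prior concentrated near 0.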

Assuming \(Y_i \sim \text{ Beta }\), the configurations of Steps 4, 5, and 6 are:

4. Consider \(E_i = \exp \{X_{i \bullet } \beta + \delta _{l^{*}_{i} t^{*}_{i}}\} / ( 1 + \exp \{X_{i \bullet } \beta +\delta _{l^{*}_{i} t^{*}_{i}}\} )\) and compute the log-kernel

$$\begin{aligned} \log p(\beta _j|\bullet )= & - \sum _{i=1}^{n} \log \Gamma (\psi E_i) - \sum _{i=1}^{n} \log \Gamma [\psi (1 - E_i)] + \psi \sum _{i=1}^{n} [ \log (y_i) E_i ] \\ & - \psi \sum _{i=1}^{n} [ \log (1-y_i) E_i ] - [1/(2s_{\beta _j})] (\beta _j^2 - 2\beta _j m_{\beta _j}) + C_{\beta _{Beta}}. \end{aligned}$$

5. Using \(E_i\) from Step 4, obtain the log-kernel

$$\begin{aligned} \log p(\psi |\bullet )= & n \log \Gamma (\psi ) - \sum _{i=1}^{n} \log \Gamma (\psi E_i) - \sum _{i=1}^{n} \log \Gamma [\psi (1 - E_i)] \\ & + \psi \sum _{i=1}^{n}[\log (y_i) E_i] + \psi \sum _{i=1}^{n} \log (1-y_i) - \psi \sum _{i=1}^{n}[\log (1-y_i) E_i] \\ & + (a_{\psi } - 1) \log (\psi )-b_{\psi } \psi + C_{\psi _{Beta}}. \end{aligned}$$

6. Using \(E_i\) from Step 4, compute the log-kernel

$$\begin{aligned} \log p(\delta _{lt}|\bullet )= & - \sum _{i=1}^{n} \varvec{1}_{\{l^*_i = l\} \{t^*_i = t\}} \log \Gamma (\psi E_i) - \sum _{i=1}^{n} \varvec{1}_{\{l^*_i = l\} \{t^*_i = t\}} \log \Gamma [ \psi (1 - E_i)] \\ & + \psi \sum _{i=1}^{n} \varvec{1}_{\{l^*_i = l\} \{t^*_i = t\}} \log (y_i) E_i -\psi \sum _{i=1}^{n} \varvec{1}_{\{l^*_i = l\} \{t^*_i = t\}} \log (1-y_i) E_i \\ & - [1/(2\sigma ^2)][\delta _{lt}^2 - 2\delta _{lt} (\alpha _{l \bullet } \lambda _{\bullet t} + \eta _{lt})] + C_{\delta _{Beta}}. \end{aligned}$$

The elements \(C_{\beta _{Beta}}\), \(C_{\psi _{Beta}}\), and \(C_{\delta _{Beta}}\) represent normalizing constants in the log scale.

Now assuming \(Y_i \sim \text{ Normal }\), consider Steps 4, 5, and 6 as follows:

4. Sample \(\beta |\bullet \sim \text{ N}_q(M_{\beta }, V_{\beta })\), where

$$\begin{aligned} V_{\beta } = [X^{\top }X + (S_{\beta })^{-1}]^{-1}, \ M_{\beta } = V_{\beta }[X^\top (Y - \delta _{l^* t^*}) + S_{\beta }^{-1}m_{\beta }]. \end{aligned}$$

5. Generate \(\psi |\bullet \sim \text{ IG }(a_{\psi }^*, b_{\psi }^*)\), with \(a_{\psi }^* = n/2 + a_{\psi }\) and

$$\begin{aligned} b_{\psi }^* = \frac{1}{2}\left[ Y^\top Y - Y^\top \delta _{l^* t^*} -\delta _{l^* t^*}^\top Y + \delta _{l^* t^*}^\top \delta _{l^* t^*} + m_{\beta }^{\top }S_{\beta }^{-1}m_{\beta } + 2b_{\psi } - M_{\beta }^{\top }V_{\beta }^{-1}M_{\beta }\right]. \end{aligned}$$

6. Compute the log-kernel \(\log p(\delta _{lt}|\bullet ) = -[1/(2\psi )] [\sum _{i=1}^{n} (X_{i \bullet } \beta + \delta _{l^*_i t^*_i})^2 \varvec{1}_{\{l^*_i = l\} \{t^*_i = t \}} - 2\sum _{i=1}^{n}y_i \delta _{l^*_i t^*_i} \varvec{1}_{\{l^*_i = l\} \{t^*_i =t \}}] -[1/(2\sigma ^2)] [\delta _{lt}^2 -2\delta _{lt}(\alpha _{l\bullet }\lambda _{\bullet t} + \eta _{lt})] + C_{\delta _{N}}\),

where \(C_{\delta _{N}}\) is a normalizing constant on the log scale.
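The Normal-case quantities can be computed in a few lines. This sketch assumes a conjugate normal-inverse-gamma reading of Steps 4 and 5, with prior mean \(m_{\beta }\) and prior matrix \(S_{\beta }\). It takes \(b^*_{\psi } = \tfrac{1}{2}[(Y-\delta )^{\top }(Y-\delta ) + m_{\beta }^{\top }S_{\beta }^{-1}m_{\beta } + 2b_{\psi } - M_{\beta }^{\top }V_{\beta }^{-1}M_{\beta }]\), and the function name is hypothetical. A useful algebraic check is the identity \((Y-\delta )^{\top }(Y-\delta ) + m_{\beta }^{\top }S_{\beta }^{-1}m_{\beta } - M_{\beta }^{\top }V_{\beta }^{-1}M_{\beta } = (Y-\delta -XM_{\beta })^{\top }(Y-\delta -XM_{\beta }) + (M_{\beta }-m_{\beta })^{\top }S_{\beta }^{-1}(M_{\beta }-m_{\beta })\). This identity guarantees \(b^*_{\psi } \ge b_{\psi }\).

```python
import numpy as np


def normal_conjugate_terms(X, y, d_obs, m, S, b_psi):
    """Return V_beta, M_beta and b*_psi for the Normal model (hypothetical helper).

    d_obs[i] holds delta_{l*_i t*_i}; m and S are the prior mean and matrix of beta.
    """
    r = y - d_obs                          # response with the random effect removed
    S_inv = np.linalg.inv(S)
    V_inv = X.T @ X + S_inv                # V_beta^{-1}
    V = np.linalg.inv(V_inv)
    M = V @ (X.T @ r + S_inv @ m)          # M_beta
    b_star = 0.5 * (r @ r + m @ S_inv @ m + 2 * b_psi - M @ V_inv @ M)
    return V, M, b_star
```

Evaluating the right-hand side of the identity is a cheap way to catch sign or transposition mistakes in an implementation of \(b^*_{\psi }\).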

Finally, if \(Y_i \sim \text{ Gamma }\), consider Steps 4, 5, and 6 with the following specifications.

4. Build the MH step with the following log-kernel

$$\begin{aligned} \log p(\beta _j|\bullet )= & - \psi \beta _j\sum _{i=1}^n X_{ij} -\sum _{i=1}^{n} \psi y_i / \exp \{X_{i \bullet } \beta +\delta _{l^*_i t^*_{i}}\} -[1/(2 s_{\beta _j})][\beta _j^2 - 2\beta _j m_{\beta _j}] \\ & + C_{\beta _{Ga}}. \end{aligned}$$

5. Consider the following log-kernel

$$\begin{aligned} \log p(\psi |\bullet )= & n \psi \log (\psi ) - \psi \sum _{i=1}^{n} (X_{i \bullet } \beta + \delta _{l^{*}_i t^{*}_i}) - n\log \Gamma (\psi ) + \psi \sum _{i=1}^{n}\log (y_i) \\ & -\sum _{i=1}^{n} \psi y_i / \exp \{X_{i \bullet } \beta + \delta _{l^{*}_{i} t^{*}_{i}} \} + (a_{\psi } - 1) \log (\psi ) -b_{\psi }\psi + C_{\psi _{Ga}}. \end{aligned}$$

6. Determine the log-kernel

$$\begin{aligned} \log p(\delta _{lt}|\bullet )= & -\psi \sum _{i=1}^n \delta _{l^*_i t^*_i} \varvec{1}_{\{l^{*}_i = l\} \{t^*_i = t \}} - \sum _{i=1}^{n} \varvec{1}_{\{l^*_i = l\} \{t^*_i = t\}} \psi y_i / \exp \{X_{i \bullet } \beta + \delta _{l^*_i t^*_i}\} \\ & -[1/(2\sigma ^2)] [\delta _{lt}^{2} - 2\delta _{lt}(\alpha _{l\bullet } \lambda _{\bullet t} + \eta _{lt})] + C_{\delta _{Ga}}. \end{aligned}$$

The terms \(C_{\beta _{Ga}}\), \(C_{\psi _{Ga}}\), and \(C_{\delta _{Ga}}\) are normalizing constants in the log scale.

Appendix C: Convergence analysis related to Sect. 3

The three boxplots shown in Fig. 11 summarize, for each model, the z-scores of the Geweke convergence test (Geweke 1992) applied to the chains of all parameters obtained in the simulation study of Sect. 3. All z-scores fall within the 95% interval (\(-1.96\), 1.96), so the test gives no evidence against convergence of the MCMC chains.
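The Geweke statistic compares the mean of an early window of a chain with the mean of a late window. The difference is scaled by an estimate of the standard error that accounts for autocorrelation. The sketch below uses default windows matching the first-30% and last-30% split described in the caption of Fig. 11. Geweke's original proposal estimates the variances from the spectral density at frequency zero. This sketch swaps in non-overlapping batch means, a simpler estimator, so its values will differ somewhat from spectral-based implementations such as `geweke.diag` in the R package coda (whose `frac1` and `frac2` arguments set the two window fractions). The function names are hypothetical.

```python
import numpy as np


def _batch_means_var(x, n_batches=20):
    """Estimate Var(mean(x)) for an autocorrelated chain via non-overlapping batch means."""
    b = len(x) // n_batches
    means = x[: b * n_batches].reshape(n_batches, b).mean(axis=1)
    return means.var(ddof=1) / n_batches


def geweke_z(chain, first=0.3, last=0.3):
    """Geweke-type z-score comparing the first and last fractions of a chain."""
    chain = np.asarray(chain, dtype=float)
    n = chain.size
    a = chain[: int(first * n)]
    b = chain[n - int(last * n):]
    return (a.mean() - b.mean()) / np.sqrt(_batch_means_var(a) + _batch_means_var(b))
```

A chain whose level drifts between the two windows produces a large |z|, while a stationary chain typically yields values inside (\(-1.96\), 1.96).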

The parameter \(\psi \), although present in all three models, relates differently to the dispersion of the response variable: \(\psi \) is the variance in the Normal model, but not in the Gamma and Beta models. In Sect. 3, the true value \(\psi = 2\) was set for all three models. The Beta model deals with a bounded response, and the histogram of responses generated under \(\psi = 2\) exhibits a stronger U-shape than one obtained under a larger \(\psi \). In the Beta case with \(\psi = 2\), the high concentration of \(y_i\)’s near 0 or 1 poses greater difficulty for the MCMC. With simulated data generated under \(\psi = 30\) and a vague prior (centered at 50, with variance 150), the MCMC for the Beta model converges faster. Its required burn-in period is then shorter, similar to that adopted for the Normal and Gamma cases with \(\psi = 2\).

Boxplots summarizing the z-scores from the Geweke convergence test applied to the chains of all parameters for the three models evaluated in Sect. 3. The test is based on the first 30% and the last 30% of each chain. The horizontal red lines delineate the 95% acceptance region.
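The effect of \(\psi \) on the shape of Beta responses can be checked with a quick simulation. The sketch uses the mean-precision parameterization \(\text{Beta}(\psi \mu , \psi (1-\mu ))\), which matches the \(\Gamma (\psi E_i)\) and \(\Gamma [\psi (1-E_i)]\) terms in the Beta log-kernels. It counts the share of draws falling within a small band of 0 or 1. The grid of means, the band width, the sample size, and the function name are arbitrary illustrative choices, not settings from Sect. 3.

```python
import numpy as np


def boundary_fraction(psi, mu, eps=0.02, n_per_mean=2000, seed=0):
    """Share of Beta(psi*mu, psi*(1-mu)) draws within eps of 0 or 1, pooled over the means mu."""
    rng = np.random.default_rng(seed)
    mu = np.asarray(mu, dtype=float)
    y = rng.beta(psi * mu[:, None], psi * (1 - mu[:, None]), size=(mu.size, n_per_mean))
    return float(np.mean((y < eps) | (y > 1 - eps)))
```

With means spread over (0.1, 0.9), \(\psi = 2\) places a sizeable share of draws in the boundary bands, because one shape parameter falls below 1 whenever \(\mu \ne 0.5\). Under \(\psi = 30\), the draws cluster around their means instead.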

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

de Oliveira, N.C.C., Mayrink, V.D. Generalized mixed spatiotemporal modeling with a continuous response and random effect via factor analysis. Stat Methods Appl (2024). https://doi.org/10.1007/s10260-024-00755-z

DOI: https://doi.org/10.1007/s10260-024-00755-z