Abstract

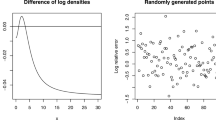

A simple saddlepoint (SP) approximation for the distribution of generalized empirical likelihood (GEL) estimators is derived. Simulations compare the performance of the SP and other methods such as the Edgeworth and the bootstrap for special cases of GEL: continuous updating, empirical likelihood and exponential tilting estimators.

Notes

See also Rilstone and Ullah (2005) for a correction to the second-order variance term.

We use the abbreviation SP, or capitalize “Saddlepoint”, when we refer to the SP approximation, as distinguished from the stationary saddle point of \(\mathcal{L}(\theta ,\lambda )\).

Henceforth all conditions are for \(i=1,\ldots ,N\) and summations are over the same range.

Also see Huzurbazar and Williams (2010) in this regard.

Under certain conditions renormalizing the SP approximation further reduces the size of the relative error as argued by Daniels (1980).
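As a minimal sketch of what renormalization does, consider a textbook case that is not taken from this paper: the sum of \(n\) i.i.d. Exp(1) variables, whose exact density is Gamma\((n,1)\). The function names, the integration grid and the choice \(n=5\) below are illustrative assumptions. In this case the unnormalized SP density differs from the exact density only by a constant factor, so dividing by its numerically integrated mass removes the relative error.

```python
import math

def true_density(x, n):
    """Gamma(n, 1) density: exact law of a sum of n i.i.d. Exp(1) draws."""
    return math.exp((n - 1) * math.log(x) - x - math.lgamma(n))

def sp_density(x, n):
    """Unnormalized saddlepoint density for the same sum (illustrative case)."""
    # Cumulant generating function of the sum: K(s) = -n * log(1 - s), s < 1.
    s_hat = 1.0 - n / x               # solves K'(s) = n / (1 - s) = x
    k = -n * math.log(1.0 - s_hat)    # K(s_hat)
    k2 = n / (1.0 - s_hat) ** 2       # K''(s_hat)
    return math.exp(k - s_hat * x) / math.sqrt(2.0 * math.pi * k2)

def sp_mass(n, lower=1e-8, upper=80.0, steps=80_000):
    """Total mass of the unnormalized SP density, by the trapezoid rule."""
    h = (upper - lower) / steps
    total = 0.5 * (sp_density(lower, n) + sp_density(upper, n))
    for i in range(1, steps):
        total += sp_density(lower + i * h, n)
    return total * h

def renormalized_sp_density(x, n, mass):
    """SP density rescaled so that it integrates to one."""
    return sp_density(x, n) / mass

if __name__ == "__main__":
    n = 5
    mass = sp_mass(n)
    for x in (1.0, 3.0, 5.0, 9.0):
        raw_err = sp_density(x, n) / true_density(x, n) - 1.0
        new_err = renormalized_sp_density(x, n, mass) / true_density(x, n) - 1.0
        print(f"x={x}: raw rel. error {raw_err:.5f}, renormalized {new_err:.2e}")
```

The constant relative error of the raw approximation here is the error of Stirling's formula for \(\Gamma (n)\); for general estimators the error is not constant, so renormalization should be expected to help rather than to be exact.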

See also Mittelhammer et al. (2000).

The number of bootstrap re-samples is chosen such that \(\alpha (B + 1)\) is an integer as in Davidson and MacKinnon (2000).
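The integer condition on \(\alpha (B + 1)\) is easy to check mechanically. The sketch below is only an illustration of that condition, with hypothetical helper names; it is not the procedure of Davidson and MacKinnon (2000) for choosing \(B\). Exact rational arithmetic is used so that values such as 0.05 are not distorted by floating-point rounding.

```python
from fractions import Fraction

def is_valid_b(alpha, b):
    """True when alpha * (b + 1) is an integer (alpha given as a Fraction)."""
    return (alpha * (b + 1)).denominator == 1

def next_valid_b(alpha, b_min):
    """Smallest number of re-samples B >= b_min satisfying the condition."""
    b = b_min
    while not is_valid_b(alpha, b):
        b += 1
    return b

if __name__ == "__main__":
    alpha = Fraction(1, 20)  # a 5% nominal level, chosen for illustration
    for b in (99, 100, 199, 999):
        print(b, is_valid_b(alpha, b))
    print(next_valid_b(alpha, 100))
```

With \(\alpha = 0.05\), the familiar choices \(B = 99, 199, 999\) satisfy the condition, whereas \(B = 100\) does not.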

References

Barndorff-Nielsen OE, Cox DR (1989) Asymptotic techniques for use in statistics. Chapman and Hall, New York

Bhattacharya RN, Ghosh JK (1978) On the validity of the formal Edgeworth expansion. Ann Stat 6(2):434–451

Butler RW (2007) Saddlepoint approximations with applications. Cambridge University Press, Cambridge

Czellar V, Ronchetti E (2010) Accurate and robust tests for indirect inference. Biometrika 97(3):621–630

Daniels HE (1954) Saddlepoint approximations in statistics. Ann Math Stat 25:631–650

Daniels HE (1980) Exact saddlepoint approximations. Biometrika 67:59–63

Daniels HE (1983) Saddlepoint approximations for estimating equations. Biometrika 70:89–96

Davison AC, Hinkley DV (1988) Saddlepoint approximations in resampling methods. Biometrika 75(3):417–431

Davidson R, MacKinnon JG (2000) Bootstrap tests: how many bootstraps? Econ Rev 19:55–68

Easton GS, Ronchetti E (1986) General saddlepoint approximations with applications to L statistics. J Am Stat Assoc 81:420–430

Field CA (1982) Small sample asymptotic expansions for multivariate M-estimates. Ann Stat 10:672–689

Field CA, Hampel FR (1982) Small-sample asymptotic distributions of M-estimators of location. Biometrika 69:29–46

Field CA, Ronchetti E (1990) Small sample asymptotics. Lecture notes monograph series. Institute of Mathematical Statistics, Hayward

Feuerverger A (1989) On the empirical saddlepoint approximation. Biometrika 76(3):457–464

Ghosh JK, Sinha BK, Wieand HS (1980) Second-order efficiency of the MLE with respect to any bowl-shaped loss function. Ann Stat 8:506–521

Hansen LP, Heaton J, Yaron A (1996) Finite sample properties of some alternative GMM estimators. J Bus Econ Stat 14:262–280

Huzurbazar AV, Williams BJ (2010) Incorporating covariates in flowgraph models: applications to recurrent event data. Technometrics 52:198–208

Jensen JL (1995) Saddlepoint approximations. Oxford University Press, USA

Jing BY, Feuerverger A, Robinson J (1994) On the bootstrap saddlepoint approximations. Biometrika 81(1):211–215

Kundhi G, Rilstone P (2008) The third-order bias of nonlinear estimators. Commun Stat Theor Methods 37(16):2617–2633

Kundhi G, Rilstone P (2012) Edgeworth expansions for GEL estimators. J Multivar Anal 106:118–146

Kundhi G, Rilstone P (2013) Edgeworth and saddlepoint expansions for nonlinear estimators. Econ Theory 29(5):1057–1078

Lô SN, Ronchetti E (2012) Robust small sample accurate inference in moment condition models. Comput Stat Data Anal 56:3182–3197

Lugannani R, Rice SO (1980) Saddlepoint approximations for the distributions of the sum of random variables. Adv Appl Probab 12:475–490

Ma Y, Ronchetti E (2011) Saddlepoint test in measurement error models. J Am Stat Assoc 106(493):147–156

McCullagh P (1987) Tensor methods in statistics. Chapman and Hall, New York

Mittelhammer RC, Judge GG, Miller DJ (2000) Econometric foundations. Cambridge University Press, Cambridge

Monti AC, Ronchetti E (1993) On the relationship between empirical likelihood and empirical approximations for multivariate M-estimators. Biometrika 80:329–338

Newey WK, Smith R (2004) Higher order properties of GMM and generalized empirical likelihood estimators. Econometrica 72(1):219–255

Phillips PCB (1977) A general theorem in the theory of asymptotic expansions as approximations to the finite sample distributions of econometric estimators. Econometrica 45(6):1517–1534

Pfanzagl J, Wefelmeyer W (1978) A third-order optimum property of the maximum likelihood estimator. J Multivar Anal 10:1–29

Qin J, Lawless J (1994) Empirical likelihood and general estimating equations. Ann Stat 22(1):300–325

Rilstone P, Srivastava VK, Ullah A (1996) The second-order bias and mean squared error of nonlinear estimators. J Econom 75(2):369–395

Rilstone P, Ullah A (2005) Corrigendum to: the second-order bias and mean squared error of non-linear estimators. J Econom 124(1):203–204

Reid N (1988) Saddlepoint methods and statistical inference. Stat Sci 3(2):213–238

Robinson J, Ronchetti E, Young GA (2003) Saddlepoint approximations and tests based on multivariate M-estimates. Ann Stat 31:1154–1169

Ronchetti E, Welsh AH (1994) Empirical saddlepoint approximations for multivariate M-estimators. J R Stat Soc Ser B 56:313–326

Rothenberg TJ (1984) Approximating the distributions of econometric estimators and test statistics. In: Griliches Z, Intriligator MD (eds) Handbook of econometrics, vol 2, chap 15. Elsevier Science Inc., New York

Schennach SM (2007) Point estimation with exponentially tilted empirical likelihood. Ann Stat 35(2):634–672

Appendix

This appendix lists the notation used in the paper and provides the regularity conditions underlying its results. It also provides proofs of those results that are not proven in the paper or elsewhere.

Notational conventions:

For an \(m\times n\) matrix \(A\), \(A^\top \) indicates its \(n\times m\) transpose and \(\Vert A\Vert = \text {Trace}[AA^\top ]^{1/2}\). When the argument \(\beta \) of a function is suppressed, it is understood that the function is evaluated at \(\beta _0\) so that, for example, \(A\equiv A(\beta _0)\). The Kronecker product is defined in the usual way so that for \(A=[a_{ij}]\) and a \(p\times q\) matrix \(B\), we have \(A\otimes B=[a_{ij}B]\), an \(mp\times nq\) matrix. The vectorization operator is defined in the usual way so that \(\text {Vec}[A]\) denotes an \(mn\times 1\) vector with the columns of \(A\) stacked one upon another.
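The three operations just defined can be sketched in a few lines of plain Python. The helper names and the small matrices are illustrative assumptions, with matrices represented as lists of rows; the code simply mirrors the definitions of \(\Vert A\Vert \), \(A\otimes B\) and \(\text {Vec}[A]\) given above.

```python
import math

def transpose(a):
    """n x m transpose of an m x n matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    """Ordinary matrix product of conformable matrices."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def norm(a):
    """||A|| = Trace[A A^T]^(1/2)."""
    aat = matmul(a, transpose(a))
    return math.sqrt(sum(aat[i][i] for i in range(len(aat))))

def kron(a, b):
    """Kronecker product [a_ij * B]: (m x n) and (p x q) give (mp x nq)."""
    return [[a_ij * b_kl for a_ij in a_row for b_kl in b_row]
            for a_row in a for b_row in b]

def vec(a):
    """Stack the columns of A into a single list of length m * n."""
    return [a[i][j] for j in range(len(a[0])) for i in range(len(a))]

if __name__ == "__main__":
    A = [[1, 2], [3, 4]]   # 2 x 2
    B = [[0, 1]]           # 1 x 2
    print(kron(A, B))      # a 2 x 4 matrix
    print(vec(A))          # columns of A stacked
    print(norm(A))         # square root of the sum of squared entries
```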

The matrix of \(\nu \)’th order partial derivatives of a matrix \(A(\beta )\) is indicated by \( A^{(\nu )}(\beta )\). Specifically, if \(A(\beta )\) is an \(m\times 1\) vector function, \( A^{(1)}(\beta )\) is the usual Jacobian whose \(l\)’th row contains the partials of the \(l\)’th element of \(A(\beta )\). The matrices of higher derivatives are defined recursively so that the \(j\)’th element of the \(l\)’th row of \( A^{(\nu )}(\beta )\) (an \(m\times m^\nu \) matrix) is the \(1\times m\) vector \(a^{(\nu )}_{lj}(\beta )=\partial a^{(\nu -1)}_{lj}(\beta )/\partial \beta ^\top \). Two useful properties of these definitions are that, if \(a(\beta )=[a_j(\beta )]\) is an \(m\times 1\) vector, then the \(j\)’th row of \( a^{(1)}(\beta )\) contains the gradient of \(a_j(\beta )\) and the \(j\)’th row of \( a^{(2)}(\beta )\) contains the transposed vectorization of the Hessian matrix of \(a_j(\beta )\).
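The recursive layout can be checked numerically on a small example. The function `a` below, the evaluation point and the finite-difference step are all hypothetical choices made for illustration, with \(m=2\); central differences stand in for the analytic derivatives.

```python
def a(beta):
    """Hypothetical 2 x 1 vector function used only for illustration."""
    b1, b2 = beta
    return [b1 ** 2 * b2, b1 + b2 ** 3]

def jacobian(f, beta, h=1e-4):
    """Central-difference Jacobian: row l holds the partials of f_l."""
    cols = []
    for k in range(len(beta)):
        up, dn = list(beta), list(beta)
        up[k] += h
        dn[k] -= h
        cols.append([(u - d) / (2 * h) for u, d in zip(f(up), f(dn))])
    return [[cols[k][l] for k in range(len(beta))] for l in range(len(cols[0]))]

def second_derivative_matrix(f, beta, h=1e-4):
    """a^(2): row l is [d a^(1)_{l1}/d beta^T, ..., d a^(1)_{lm}/d beta^T]."""
    m, m_out = len(beta), len(f(beta))

    def flat_jacobian(b):
        # Element (l, j) of the Jacobian sits at position l * m + j.
        return [x for row in jacobian(f, b, h) for x in row]

    d = jacobian(flat_jacobian, beta, h)  # row l*m+j: gradient of a^(1)_{lj}
    return [[x for j in range(m) for x in d[l * m + j]] for l in range(m_out)]

if __name__ == "__main__":
    beta0 = [1.0, 2.0]
    print(jacobian(a, beta0))                   # rows are the gradients
    print(second_derivative_matrix(a, beta0))   # rows are Vec[Hessian]^T
```

At \(\beta = (1,2)^\top \) the rows of the first matrix approximate the gradients \((4,1)\) and \((1,12)\), and the rows of the second approximate the vectorized Hessians \((4,2,2,0)\) and \((0,0,0,12)\), which is the \(m\times m^2\) layout described above.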

A bar over a function indicates its expectation so that \(\overline{A(\beta )}= E[A(\beta )]\). A tilde over a function indicates its deviation from its expectation so that \(\widetilde{A(\beta )}= A(\beta )- \overline{A(\beta )}\). Also \( Q = (E \left[ {q_i}^{(1)} \right] )^{-1}\) is evaluated at \(\beta _0\) and is therefore treated as a constant.

Assumptions and proofs:

The result in Lemma 1 is proven in Rilstone et al. (1996) assuming that \(\widehat{\beta }\) is consistent, the data are i.i.d., and the following conditions are satisfied for \(s\ge 2\).

Assumption A

The \(s\)’th order derivatives of \(q_i(\beta )\) exist in a neighborhood of \(\beta _0\), \(i=1,2,\ldots \) and \(E\Vert q_i^{(s)}(\beta _0)\Vert ^{2}<\infty \).

Assumption B

For some neighborhood of \(\beta _0\), \(\left( \frac{1}{N}\sum q_i ^{(1)}(\beta )\right) ^{-1} = O_P(1)\).

Assumption C

\( \left\| q_i^{(s)}(\beta ) - q_i^{(s)}(\beta _0)\right\| \le \Vert \beta -\beta _0\Vert M_i \) for some neighborhood of \(\beta _0\) where \(E| M_i| \le C<\infty \), \(i=1,2,\ldots \).

Throughout this appendix we let \(\bar{X}_m =\prod _{l=1}^m \frac{1}{N}\sum _{i=1}^N X_{li}\) denote the product of \(m\) sample averages of mean-zero random variables.
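To give a feel for how fast the expectation of such a product shrinks with \(N\), the sketch below computes \(E[\bar{X}_m]\) exactly in one special case: all \(m\) factors are the same i.i.d. mean-zero variable, so that \(\bar{X}_m\) is the \(m\)’th power of a single sample mean. The two-point distribution and the function name are assumptions made for illustration, and the example is not a statement of the bound in Lemma A.

```python
from fractions import Fraction
from itertools import product

# Hypothetical mean-zero two-point law: P(X = -1) = 2/3, P(X = 2) = 1/3.
VALUES = (Fraction(-1), Fraction(2))
PROBS = (Fraction(2, 3), Fraction(1, 3))

def expected_power_of_mean(m, n):
    """Exact E[(sample mean of n i.i.d. draws)^m] by full enumeration."""
    total = Fraction(0)
    for outcome in product(range(len(VALUES)), repeat=n):
        prob = Fraction(1)
        s = Fraction(0)
        for k in outcome:
            prob *= PROBS[k]
            s += VALUES[k]
        total += prob * (s / n) ** m
    return total

if __name__ == "__main__":
    for n in (2, 4, 8):
        e2 = expected_power_of_mean(2, n)
        e3 = expected_power_of_mean(3, n)
        # n * e2 and n**2 * e3 stay constant as n grows.
        print(n, e2, e3, n * e2, n ** 2 * e3)
```

For this distribution the variance and the third central moment both equal 2, so the second moment of the mean is \(2/N\) and the third is \(2/N^2\): moving from an even to an odd number of factors costs more than half a power of \(N\).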

Lemma A

Suppose \(E \Vert X_{ji_j}\Vert ^m \le C<\infty \), \(j=1,2,\ldots ,m\). Then

Proof

Suppose \(m>1\); otherwise \(E\left[ \bar{X}_m \right] =0.\)

The complete proof can be found in Kundhi and Rilstone (2012), Appendix B.

Proof of Lemma 3

The first moment of \(\xi \) follows directly from the bias result in Rilstone et al. (1996). Since \(E[\xi ]= O(N^{-1/2})\), we have \( E\left[ ( \xi - E[\xi ])^2 \right] = E\left[ \xi ^2 \right] + O(N^{-1}) \) and the second moment of \(\xi \) is

where

Applying Lemma A we see that \(T_N\) is of the form and magnitude as follows

so that

Finally, the third moment of \(\xi \) is

where

Applying Lemma A we see that \(T_N\) is of the form and magnitude as follows

so that

Evaluating the first two terms we see first that

From the random sampling assumption and Lemma A

We can therefore write the third cumulant as

Proof of Proposition 1

We note that

so that

Similarly,

where \(\bar{t}\) is a mean value.

Cite this article

Kundhi, G., Rilstone, P. Saddlepoint expansions for GEL estimators. Stat Methods Appl 24, 1–24 (2015). https://doi.org/10.1007/s10260-014-0277-4

DOI: https://doi.org/10.1007/s10260-014-0277-4