Abstract

A series of numerical experiments for data assimilation with the Ensemble Kalman Filter (EnKF) in a shallow water model are reported. Temperature profiles measured at a North Sea location, 55°30ˊ North and 0°55ˊ East (referred to as the CS station of the NERC North Sea project), are assimilated in 1-D simulations. Comparison of simulations without assimilation to model results obtained when assimilating data with the EnKF allows us to assess the filter performance in reproducing features of the observations not accounted for by the model. The quality of the model error sampling is tested as well as the validity of the Gaussian hypothesis underlying the analysis scheme of the EnKF. The influence of the model error parameters and the frequency of the data assimilation are investigated and discussed. From these experiments, a set of optimal parameters for the model error sampling are deduced and used to test the behavior of the EnKF when propagating surface information into the water column.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Data assimilation could be defined as the combination of the available observations and the dynamical model to improve the model results. The Ensemble Kalman Filter is a sequential method developed by Evensen (1994, 2003, 2004), which provides an error statistics for the forecasts of non-linear systems, provided that good initial error statistics are established.

Up to now, this filter has been the subject of numerous studies for the assimilation of surface data in Ocean Global Circulation Models. It has been applied for the assimilation of satellite altimetry data (e.g., Evensen and van Leeuwen 1996; Leeuwenburgh 2005), for the assimilation of sea surface temperature (e.g., Haugen and Evensen 2002), and for the simultaneous assimilation of these data (e.g., Keppene et al. 2005; Leeuwenburgh 2007).

The focus of this article is the North Sea. One motivation for the use of data assimilation methods in shallow water models is the refinement of the operational forecasts. Kalman filter based methods are used for operational storm surge forecasting in the Netherlands (Verlaan et al. 2005). The EnKF has also been applied for the assimilation of sea level measurements in coastal models (Echevin et al. 2000; Mourre et al. 2004). Another important application of data assimilation methods in coastal seas is to provide measures of uncertainties associated to operational forecasts.

In this study, it has been chosen to assimilate temperature data because a highly reliable modelled temperature field is of critical importance for the modelling of biological phenomena, and in particular for the forecasting of algal blooms. The assimilation of temperature profiles is still under development in operational models of the North-East Atlantic Shelf. The aim of this study is to provide preliminary results for the assimilation of temperature profiles with the EnKF in the North Sea.

The most important forcing mechanisms in the North Sea are the tides and the wind, whose time scales range broadly from 1 h to 1 day. The dominant forcing on the temperature field in the North Sea is the surface seasonal heating and cooling, which, in the northern North Sea, leads to a thermal stratification of the water column in summer. This region therefore provides a good case study for testing an assimilation scheme with temperature data.

The Ensemble Kalman Filter (EnKF) has been implemented in the hydrodynamic component of the public domain three-dimensional baroclinic COHERENS model (Luyten et al. 2005). The simulations have been conducted with the simplified 1-D (z,t) version of the program applied at the North Sea CS station located in the northern part of the North Sea (Luyten et al. 1999). Several series of numerical experiments of temperature profiles assimilation have been conducted. One of their aims was to test the ability of the EnKF to correct a model with “bad/very simplified” physics and another one was to assess how it propagates information from the sea surface into the water column. Therefore, the use of the 1-D version of the model was relevant and has the advantage of limiting the computing time of the simulations, allowing the performance of many tests to define a set of optimal parameters for the model error sampling. Temperature profiles from the “North Sea Project” data set were assimilated for August 1989.

The validity of the implementation and the performance of the EnKF in dealing with the assimilation of temperature profiles have been examined by comparison of the results to a simulation without data assimilation and from the improvement of the modelled temperature profile with respect to the observations. We also determine for this application a set of optimal values for the parameters of the model error sampling: vertical decorrelation length, standard deviation of the model error, influence of the number of ensemble members, and frequency of assimilation. These parameters are then further used to assess the behavior of the EnKF when propagating “surface” data information into the water column by comparing the assimilation of the “surface” value of the temperature profiles to the assimilation of full temperature profiles. Finally, the study of Luyten et al. (2003) has shown that, even with well parameterized physics, the 3-D model could not fully reproduce internal oscillations present in the observations at the level of the thermocline. The representation of these internal oscillations is therefore a good test of the performance of the EnKF in coastal models.

Another motivation to this preliminary study is the design of optimal monitoring networks in coastal zones. Based on the tools for the assimilation of temperature profiles developed here, 3-D simulations with a spatial resolution of four nautical miles are under way. Their aim is to perform observing system simulation experiments (OSSE) prior to the implementation of optimally designed monitoring networks in the North Sea.

In the next section, we briefly discuss the ensemble Kalman filter, the implemented square root algorithm, and the generation of the ensemble. Section 3 is dedicated to the description of the model, the simulations, and the data set. The results are discussed in detail in Section 4. A critical issue with the EnKF is the sampling of the model error. The ensemble spread represents the range in which the improvement expected from the observations can take place. This issue is studied in Section 4.8 of this paper. Finally, as the ensemble generation of the state variables relies on the hypothesis of a Gaussian distribution of the model error variance, the relevance of this assumption is discussed in Section 4.9.

2 Description of the ensemble Kalman filter

The ensemble Kalman filter method has been formulated by Evensen to solve data assimilation problems represented by non-linear operators. It combines the traditional Kalman filter with Monte Carlo methods to generate an ensemble of states representing the model and observations error covariance matrices.

The model state variables are updated at each time where observations are available (analysis step):

where K the Kalman gain is given by:

ψ f is the forecast state vector, ψ a is the analyzed state vector, d is a vector of measurements of size m (the number of measurements), H is the observation operator, and P and R are, respectively, the error covariance matrices of the model and of the observations. The integration of the model is restarted from the updated state (forecast step):

M being the non-linear model integration operator.

Although the Kalman filter provides an optimal estimate of the state of the dynamical system given the measurements, the analysis equations are not a good choice from a numerical point of view. Square root algorithms provide an algebraically equivalent but numerically more robust alternative. Moreover, it has been pointed out that the perturbation of measurements in the EnKF standard analysis equation may be an additional source of errors (Anderson 2001). This study is based on one of the square root algorithms for the analysis step proposed by Evensen (2004) where the perturbation of measurements is avoided (R = 0). A description of square root filters may be found in Tippett et al. (2003). The model error covariance matrix is estimated from an ensemble of states; let A be the matrix representing that ensemble of N state vectors of the model:

the ensemble mean is stored in each column of the matrix \(\bar{A}\), which can be defined as:

where 1 N is the matrix of which each element is equal to \(\frac{1}{N}\). A matrix of ensemble perturbations with respect to the mean value of the ensemble of state vectors is defined as:

The model error covariance matrix is estimated from the ensemble of perturbations:

where the superscript T indicates the matrix transpose.

The square root algorithm updates the ensemble mean and the ensemble perturbations separately. The updated mean is computed similarly to the Kalman filter analysis equation:

the analyzed perturbations are computed as:

where the eigenvectors matrix Z and the eigenvalues matrix Λ result from the eigenvalue decomposition of the mapping of the model error covariance matrix on the observations space (H P f H T) − 1:

The whole analyzed ensemble is obtained as:

The ensemble must be chosen to properly represent the error statistics of the model. An ensemble of model states is generated by adding pseudo random noise with prescribed statistics to a best-guess estimate. Then, each of the ensemble members is integrated forward in time until measurements are available. At these time instants, the analysis is performed to update the model state in a statistically consistent way, this leads to a multivariate analysis computed from the ensemble statistics, i.e., cross-correlations between the different variables of the model are included. Thus, a change in one of the model variables will influence the other variables. This analysis scheme minimizes the error variance of the analyzed state in a least square sense (Eknes and Evensen 2002).

There are several ways to generate model perturbations: perturbations in model parameters, perturbations in the forcing fields, or adding some error terms to the right-hand side of the model equations (Zheng and Zhu 2008). Most of the studies involving the EnKF are based on the first two methods. The results reported in this study are based on the “adding error terms to the model” approach, this choice has been guided by the lack of advection and horizontal diffusion terms in the 1-D version of the model. The model parameters are kept fixed with predefined values so that the model itself is considered as a non perfect estimate of the true evolution of the dynamical system. The model uncertainties are therefore represented through a stochastic term ρ representing the model error:

The stochastic model error term is expressed as follows:

the multiplicative factor γ, the standard deviation of the model error, represents the amplitude of the model errors and modulates the amplitude of the model error term q.

The EnKF allows for a wide range of noise models (Evensen 2003). In our implementation, the model error terms, q, are not correlated in time but they have a vertical spatial correlation expressed in terms of the vertical level of the model grid k:

where w is a sequence of white noise drawn from a statistical distribution of pseudorandom numbers ranging between 0 and 1 and generated following the algorithm given by L’Ecuyer and Côté (1991).

The factor α is related to the vertical spacing and to a vertical decorrelation length λ:

Two limiting cases clarify the role of λ: if the sequence of model error terms is vertically uncorrelated (α = 0), the vertical decorrelation scale is of the same order of magnitude as the vertical grid spacing, λ = Δz, so that the model error becomes equal to w. If the vertical decorrelation scale λ tends to infinity (α = 1), there is no random component in the sequence of model error terms which become deterministic and, in this case, q is damped by a factor of e − 1 on a length scale z = λ.

3 Description of the simulations

The hydrodynamic component of the model COHERENS is based on the equations of momentum, continuity, temperature and salinity, written in spherical polar coordinates and discretized on an Arakawa C-grid. The simulations reported in this article are performed with the 1-D (z,t) simplified version of the program so that advective effects and horizontal diffusion are ignored and the momentum equations are then given by:

where (u,v) are the horizontal components of the current, ζ is the surface elevation, f is the Coriolis frequency, ρ is the density, and ν T is the coefficient of eddy diffusivity. The surface slope term is provided by a depth-averaged tidal model of the North-West European Shelf model.

The consequence of these hypotheses is that the model is turned into a model with very simplified physics not fully able to account for the stratification of the temperature T(z,t) obtained as a solution of the following equation:

where λ T is the eddy diffusion coefficient. The source term \(\frac{\partial I}{\partial z}\) is added to take into account the absorption of the solar radiation in the upper part of the water column with

where Q sol is the solar heat flux incident on the sea surface, C p is the specific heat of seawater at constant pressure, − H ≤ z ≤ 0 with H = 83m, \(\lambda_1=10m^{-1}\) is a constant inverse attenuation depth of the infrared radiation, λ 2 is a diffuse attenuation coefficient for the short-wave radiation.

The solar heat flux is evaluated as a function of geographical location, time of the year, and cloud coverage. The non-solar heat flux at the surface is decomposed into a latent, sensible, and long-wave radiation flux, calculated as a function of meteorological variables. A zero flux of temperature is imposed at the bottom of the water column. At the surface, a conventional bulk formulation is used for the expression of the surface wind stress.

Salinity is kept constant and uniform at a value of 34.8 psu. The eddy diffusion coefficients ν T and λ T are obtained from a turbulence closure scheme by solving a transport equation for the turbulent kinetic energy and using the Blackadar asymptotic mixing length formulation (Luyten 1996).

Wind velocity, air temperature, and relative humidity are generated from the forecasting model of the British Meteorological Office with a 6-h resolution. The model is run with a time step of 20 min and 50 σ levels in the vertical. The temperature field is initialized using CTD data available from January 3, 1989.

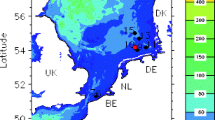

The simulations were conducted at a station located in the central part of the North Sea and referred to as CS station (see Fig. 3 of Charnock et al. 1994). It is located at 55°30′ North and 0°55′ East in the deeper parts of the North Sea where the water depth is equal to 83 m and where a thermocline develops in spring and summer.

High-resolution temperature profiles from the “North Sea Project” data set (thermistor chain data) are assimilated for August 1989. The measurement of temperature profile ranges from 10- to 60-m depth with a resolution of 5 m. Time sampling rate was approximately equal to 2 min. The rms error of the observations is about 0.1°C (Charnock et al. 1994).

All the simulations presented in this article have the following common characteristics:

-

The model is run from January to April without ensemble generation.

-

An initial ensemble of states is generated in May and is integrated without data assimilation till the end of July.

-

Data are assimilated from August 5 to August 24.

Moreover, the temperature is the only sampled variable (to which a random noise component is added) of the model, and the sampling is performed once a day. The standard simulation is based on an ensemble of 50 members with a vertical decorrelation length of 10 m. Data are assimilated once a day and a multivariate analysis of the temperature and horizontal currents is performed. Table 1 summarizes the different simulations that were performed to determine the set of optimal values of the model error parameters.

4 Analysis of the results of the assimilation experiments

4.1 Comparison of the temperature profiles modelled with and without data assimilation to the observations

The results presented in this paragraph correspond to experiments 0 and 1 (standard simulation). In experiment 1, data are assimilated once a day, using 50 ensemble members with a vertical decorrelation scale of 10 m and a standard deviation for the model error of 1.0°C.

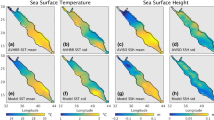

Figure 1 represents the time-depth series of the observed temperature (thermistor chain data) used for the assimilation experiments. Data are plotted with a temporal resolution of 1 day. The observed temperature profiles are stratified and show a sharp thermocline positioned at about 30-m depth.

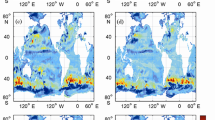

Figure 2 represents the time-depth series of the temperature for August 1989 modelled without performing data assimilation (left) and with data assimilation (right). A comparison of the temperature profile modelled without data assimilation to the observations indicates that the position of the thermocline is not at the right place (at about 20 m depth rather than 30 m). The largest difference between the observations and the model without assimilation is located at the level of the thermocline. Because of the too-high modelled position of the thermocline, the temperature above the thermocline is overestimated (too warm) and the temperature of the cold water mass located below the thermocline is underestimated (too cold).

The effect of the assimilation of temperature profiles is shown on the right side of Fig. 2: the position of the thermocline is improved, it is shifted from 20-m depth to about 30-m depth. The temperature field located above 10-m depth and below 60-m depth where no observations are available is also corrected and is closer to the observations, i.e., colder above the thermocline and warmer below it.

Then, simulations without data assimilation have been performed for September. The results are shown at the left panel of Fig. 3 and the available observations for September are presented at the right panel. The forecast for September indicates a thermocline located at 30-m depth, which is the adjusted position at the end of the assimilation process in August; moreover, the temperature of the water masses located above and below the thermocline is in good agreement with the available observations. This experiment shows that the model maintains the adjusted thermocline in its correct position during a forecast following the assimilation.

Figure 3: Time-depth series of the temperature modelled without data assimilation (left) and the observations (right) for September

These results indicate that a daily assimilation of temperature profiles with the ensemble Kalman filter already compensates for a very simplified physical model. This is the case because sufficient information is provided by the observations to take in charge the model lacks.

4.2 Modelled standard deviation

The variance of the ensemble is the averaged squared spread of each ensemble member with respect to the ensemble mean:

where N represents the number of ensemble members. The modelled standard deviation σ ens is defined as the square root of the ensemble variance. As the Kalman filter is variance-minimizing, the modelled standard deviation decreases when data are assimilated.

Figure 4 presents a comparison of the modelled standard deviation without (left) and with (right) data assimilation. A sharp decrease in the modelled standard deviation appears from the first day of assimilation (day 215) and concerns the whole water column as the assimilation process performed through the EnKF is applied to the whole temperature profile.

This sharp decrease in the modelled standard deviation underlines the importance of the generation of the initial ensemble because a too-low modelled standard deviation may generate a collapse of the filter. In the framework of the study reported in this article, the generation of the modelled standard deviation starts in May and reaches the level of 1°C at the beginning of August when the assimilation process starts. As the decrease in modelled standard error is very sharp, it has proven to be necessary to generate an ensemble of model error every 24 h to prevent a collapse of the filter.

4.3 Influence of the vertical decorrelation length of the model error

The vertical decorrelation length of the model error has been chosen by examining the observations. The observed temperature profile at Fig. 1 indicates a relatively good mixing of the water masses within the upper and lower layers and suggests a vertical decorrelation length ranging between 10 and 20 m.

The results described in this section refer to experiment 2, which has been performed with a vertical decorrelation length of 20 m, the double of that used for the standard simulation. Observations are available between about 10 and 60 m depth. With a longer vertical decorrelation length, the effect of an assimilation at 20-m depth could be propagated to the surface and a data assimilated at 60-m depth could influence the temperature at the bottom of the water column.

The temperature profile modelled with a vertical decorrelation length of 20 m is shown at Fig. 5 (left), it can be compared to the standard simulation presented at Fig. 2 (right). With a longer decorrelation length than in the standard simulation, the temperature field above the thermocline is colder, and below the thermocline, it is warmer. The temperature profile modelled in this way is therefore smoother than in the framework of the standard simulation.

This experiment and the standard simulation indicate that the effect of the assimilation of temperature profiles is to cool the water above the thermocline and to warm it below the thermocline. A longer vertical decorrelation scale reinforces this effect.

4.4 Influence of the standard deviation of the model error

The effect of varying the value of the standard deviation of the model error γ on the model results has been examined through experiment 3. Figure 5 (right) represents the temperature profile modelled with a standard deviation of 0.5°C, which is half the value used in the standard simulation.

Figure 6 shows that the modelled standard deviation of experiment 3 (left panel) has about half the value of that of the standard simulation (right panel), as could be expected.

Figure 11 compares the root mean square of the innovation vectors (black circles) to the modelled standard deviation (red squares) of the standard simulation (left panel) to that of experiment 3 (right panel), see Section 4.8 for more details. In the standard simulation, these two quantities are of the same order of magnitude, which indicates a good model error sampling. In experiment 3, the innovation is higher than the modelled standard deviation, indicating a too-low model error sampling. Therefore, a standard deviation of the model error of 1°C seems to be an optimal value for that parameter.

4.5 Effect of increasing the number of ensemble members on the model error sampling

When performing data assimilation with the EnKF, the sampling of the model error is a critical issue. The convergence of the EnKF is proportional to \(\sqrt{N}\) in the framework of the standard Monte-Carlo sampling. In this section, we compare the results of the standard simulation to experiments 4 and 5 that are performed, respectively, with 10 and 200 ensemble members. As the number of ensemble members is increased, the sampling of the model error should be improved.

Figure 7 represents the temperature profile modelled with 10 ensemble members (left) and with 200 members (right). The simulation with 10 ensemble members presents “temperature anomalies” in the form of warmer or colder patches in the modelled temperature profile. Such anomalies are not present in the observations. Moreover, the simulation with 10 ensemble members overestimates the surface temperature and underestimates the temperature at the bottom of the surface column, which is the feature of a bad model representation in the framework of the study presented in this article.

Compared to the simulation with 10 ensemble members, the standard simulation (Fig. 2—right) with 50 members does not present these “temperature anomalies” and is closer to the observations (colder surface temperature and warmer bottom temperature). With respect to the standard simulation, the use of 200 ensemble members for a daily assimilation does not seem to take into account major features of the observations that were not represented by the standard simulation, but it requires a much higher computing time. These results suggest, therefore, the existence of an optimal number of ensemble members when considering the improvement in the modelled temperature field with respect to the computing time. For this study, this optimal number seems to be of the order of 50 ensemble members.

4.6 Influence of the frequency of the assimilation

Figure 8 (left panel) presents a time-depth series of the observed temperature plotted with a temporal resolution of 1 h. Internal oscillations are present at the level of the thermocline in the observed temperature profiles.

As mentioned in the introduction, among the observations features that should be represented by the model when assimilating data, there are the internal oscillations. The study by Luyten et al. (2003) has shown that, even with a realistic setup of the model, these oscillations were underestimated. The simulations reported by Luyten et al. (2003) were performed with the 3-D version of the model, using a horizontal spatial resolution of 7.3 km. The advection of momentum and scalars was represented using a total variation diminishing (TVD) scheme and the advective flux was evaluated as a weighted average between the upwind flux and the Lax–Wendroff in the horizontal and the central flux in the vertical.

Figure 8 (right panel) presents the difference between the observations and the temperature modelled without data assimilation. The largest difference between the observations and the model is located at the level of the thermocline. Because of the too-high modelled position of the thermocline, the cold water mass located below the thermocline is overestimated so that the difference between the observations and the model is mostly positive (temperature globally underestimated by the model).

The left panel of Fig. 9 corresponds to experiment 7, which has been performed with the same filter parameters as the standard simulation but assimilating data every 3 h. “Temperature anomalies” in the form of warmer or colder patches, which do not exist in the observations, are present in the modelled temperature profile. These “anomalies” are attributed to a possible difficulty of the filter to provide a good sampling of the model error when assimilating data with a high frequency. An assimilation interval of 3 h contains only nine time integration steps of the model, which could be insufficient for the model to incorporate the information and relax between two analyses.

Therefore, an experiment with an assimilation frequency of 6 h has been performed, the results are presented in the right panel of Fig. 9 and correspond to experiment 8. The “temperature anomalies” are reduced and the internal oscillations are correctly represented at the level of the thermocline.

When using a model with very simplified physics, a high frequency of data assimilation may seem to be necessary to represent critical features of the observations. However, an assimilation frequency that is too high to allow a sufficient relaxation of the model between two time steps may worsen the situation. For the assimilation of temperature profiles reported in this study, an assimilation frequency of 6 h allows us to reproduce the internal oscillations present in the observations.

4.7 Assimilation of surface temperature

Among the available data assimilation schemes, the Ensemble Kalman Filter is sophisticated and costly in CPU time; one may therefore wish that it is able to correct temperature profiles from surface data. The observed temperature profiles available in the framework of this study do not allow us to properly extract “surface data” because the observation point closer to the surface is located at 10-m depth. However, in the framework of this study, a performance test for the assimilation scheme is to provide a corrected position of the thermocline, which is located below this depth.

The results presented in this section correspond to experiments 9 and 10. The observed temperature at the 10-m depth point has been assimilated daily (experiment 9) and hourly (experiment 10).

The results are presented in Fig. 10. Daily assimilation (left panel) does not sufficiently correct the position of the thermocline. In the right panel, one can see that hourly data assimilation allows us to correct the temperature of the water masses located above the thermocline.

These results indicate that, with a good set of parameters for the model error—standard deviation, vertical decorrelation length, and assimilation frequency—the EnKF is able to correct temperature profiles from surface data. In the experiments presented here, a high frequency of assimilation was necessary to compensate for a too simplified physical model.

4.8 Estimation of the quality of the model error sampling

The innovation is defined as the residual between the observations and the forecast:

the root mean square of the innovations provides a measure of the deviation between the model and the observations:

where m is the number of measurements.

The standard deviation of the ensemble of modelled temperature is a measure of the model error estimate:

To make the most out of the data assimilation, the sampled model error should be of the same order of magnitude as the distance between the model and the observations. The sampling of the model error is represented by the ensemble spread. To check that the distance between the model and the observations is taken into account in the ensemble spread, the standard deviation of the model error should be of the same order of magnitude as the root mean square of the innovations.

In this section, the root mean square of the innovations is compared to the standard deviation of the ensemble for the standard simulation (experiment 1) and for experiment 3. The results are presented in Fig. 11, the root mean square of the innovations is represented by black squares and the standard deviation of the ensemble by red circles. The left side of Fig. 11 presents the results for the standard simulation (experiment 1) and its right side corresponds to the results of experiment 3 with a standard deviation of the model error having a half value with respect to the standard simulation.

For both experiments, at the first day of assimilation, the standard deviation of the ensemble overestimates the innovations, and then, the difference is reduced due to the variance-minimizing character of the EnKF. For the results corresponding to the standard experiment at the left panel, the standard deviation of the ensemble is of the same order of magnitude as the root mean square of the innovations. When the standard deviation of the model error is reduced, the results of the right panel show an equivalent reduction of the modelled standard deviation, which becomes too small to take the innovations into account. A comparison of the two panels of Fig. 11 indicates that, for the simulations reported in this study, a standard deviation of the model error γ of 1°C is an optimal value to allow the assimilation scheme run properly.

4.9 Testing Gaussian hypothesis

The sampling of the model error is based on an ensemble of states generated randomly, assuming that their initial distribution is Gaussian. If the model were linear, this distribution would remain Gaussian at any time (Jazwinski 1970). However, with a non-linear ocean model, statistical moments of odd order may develop from Gaussian initial conditions so that the probability density function may become non Gaussian during the model integration. With the EnKF method, the analyzed state is computed using only the Gaussian part of the probability density function. The relevance of this assumption underlying the EnKF analysis scheme can be checked by using measures of skewness (third-order moment) and kurtosis (fourth-order moment) of the probability distribution of the ensemble.

The skewness provides a measure of the lack of symmetry of the distribution:

and the kurtosis a measure of whether the data are peaked (positive kurtosis) or flat (negative kurtosis) relative to a normal distribution (whose fourth order moment is three):

where σ is the standard deviation of the distribution.

The relevance of the Gaussian hypothesis has already been investigated by Natvik and Evensen (2003) in a statistical analysis of the assimilation of ocean color surface data in a North Atlantic model. In the study reported here, we compare the skewness and kurtosis at the forecast time and at the analysis step for the assimilation of temperature profiles.

The results presented at Fig. 12 correspond to the standard simulation and provide a comparison of the skewness at the forecast step (black squares) and at the analysis step (red circles), at 10-m depth (left panel) within the surface mixed layer and at 45-m depth below the thermocline (right panel).

Close to the surface, the dispersion of the skewness around 0 is slightly larger at the analysis step than at the forecast step. At 45-m depth, this effect is increased, and the skewness dispersion around 0 is still larger after the analysis. It seems, therefore, that the Gaussian hypothesis is broadly valid at the surface, but it is not clear that the behavior of the temperature field follows this assumption in the middle of the water column.

The left and right panels of Fig. 13 show, respectively, the kurtosis at 10-m depth and at 45-m depth for the standard simulation at the forecast time step (black squares) and at the analysis step (red circles). At 10-m depth, the kurtosis at the analysis step is closer to 0 than at the forecast step. Below the thermocline, the behavior is the opposite, the kurtosis at the forecast step is very close to zero but its dispersion increases a lot at the analysis step. Concerning the kurtosis, the Gaussian hypothesis again seems to work better near the surface than in the middle of the water column.

The behavior of the skewness and kurtosis of the distribution suggests that the Gaussian hypothesis underlying the analysis scheme of the EnKF is not fully appropriate for our ocean model, as could be expected from its non-linear character. However, this assumption could be bypassed by taking account of the non-Gaussian component of the distribution with other methods, such as the multi-Gaussian distribution proposed by Bertino et al. (2003) and applied in the study by Simon and Bertino (2009).

5 Concluding remarks

The Ensemble Kalman Filter (EnKF) has been the subject of numerous studies for surface data assimilation in global ocean models. The results reported here concern the assimilation of temperature profiles in a shallow-water model of the North Sea.

One of the square-root algorithms developed by Evensen (2003) for the EnKF has been implemented in the hydrodynamic component of the three-dimensional public domain model COHERENS. To determine the ability of the EnKF to correct a model with “bad” physics, the simulations have been performed with a 1-D simplified test case of the program. Temperature profiles have been assimilated.

In a first step, the validity of our implementation of the filter has been checked by comparison of the model results with and without data assimilation to the assimilated observations. Some features of the results have been studied in detail: the profiles of temperature computed when observations are assimilated are closer to the observations, and the standard deviation of the ensemble is reduced when an assimilation is performed.

A critical issue when working with ensemble methods is to have an adequate sampling of the model error; a partial check of this is provided by a comparison of the innovation to the model error variance given by the ensemble spread. Then, we have looked for a set of optimal values for the model error parameters such as the standard deviation, the vertical decorrelation length, the number of ensemble members, and the assimilation frequency.

One conclusion of this study is that the EnKF is able to correct a model with “bad/very simplified physics” (lack of advection and horizontal diffusion), as is indicated by the better position of the thermocline and more realistic temperature values for the water masses after data assimilation. It has also been shown that the adjusted position of the thermocline is maintained during a forecast following the assimilation.

Moreover, a correct representation of internal oscillations, even with a 3-D well parameterized model, is a critical issue (Luyten et al. 2003). Such oscillations are present in the observed temperature profiles that were assimilated. The results reported here show that they can be correctly modelled when assimilating data with an adequate frequency and a sufficient number of ensemble members.

The ability of the EnKF to correct the temperature profile from surface data has also been examined in detail. Using the optimal set of model error parameters that was deduced from a series of numerical experiments, the “surface” value of the temperature profile has been assimilated. The results indicate that the ensemble Kalman filter is able to correct the temperature of the water masses above the thermocline from surface data.

Finally, the analysis scheme of the EnKF is based on the hypothesis of a Gaussian distribution of the probability density function. The validity of this hypothesis has been examined through the amplitude of the skewness and kurtosis of the distribution around the surface and below the thermocline. The results seem to indicate that it is probably not completely valid for our model. However, this assumption could be bypassed by taking into account the non-Gaussian component of the distribution with other methods such as the multi-Gaussian distribution.

References

Anderson JL (2001) An ensemble adjustment kalman filter for data assimilation. Mon Weather Rev 29:2884–2903

Bertino L, Evensen G, Wackernagel H (2003) Sequential data assimilation techniques in oceanography. Int Stat Rev 71(2):223–241

Charnock H, Dyer KR, Huthnance JM, Liss PS, Simpson JH, Tett PB (1994) Understanding the North Sea system. The Royal Society, London

Echevin V, De Mey P, Evensen G (2000) Horizontal and vertical structure of the representer functions for sea surface measurements in a coastal circulation model. J Phys Oceanogr 30:2627–2635

Eknes M, Evensen G (2002) An ensemble Kalman filter with a 1-D marine ecosystem model. J Mar Syst 36:75–100

Evensen G (1994) Sequential data assimilation with a nonlinear quasi-geostrophic model using Monte Carlo methods to forecast error statistics. J Geophys Res 99:10143–10162

Evensen G (2003) The ensemble Kalman filter: theoretical formulation and practical implementation. Ocean Dyn 53:343–367

Evensen G (2004) Sampling strategies and square root analysis schemes for the EnKF. Ocean Dyn 54:539–367

Evensen G, van Leeuwen PJ (1996) Assimilation of geosat altimeter data for the Agulhas current using the ensemble Kalman filter with a quasi-geostrophic model. Mon Weather Rev 124:85–96

Haugen VEJ, Evensen G (2002) Assimilation of SLA and SST data into an OGCM for the Indian Ocean. Ocean Dyn 52:133–151

Jazwinski AH (1970) Stochastic processes and filtering theory. Academic, New York

Keppenne CL, Rienecker MM, Kurkowski NP, Adamec DA (2005) Ensemble Kalman filter assimilation of temperature and altimeter data with bias correction and application to seasonal prediction. Nonlinear Process Geophys 12:491–503

L’Ecuyer P, Côté S (1991) Implementing a random number package with splitting facilities. ACM Trans Math Softw 17:98–111

Leeuwenburgh O (2005) Assimilation of along-track altimeter data in the tropical pacific region of a global OGCM ensemble. Q J Royal Meteorol Soc 131:2455–2472

Leeuwenburgh O (2007) Validation of an EnKF system for OGCM initialization assimilating temperature. Salinity, and surface height measurements. Mon Weather Rev 135:125–139

Luyten PJ (1996) An analytical and numerical study of surface and bottom boundary layers with variable forcing and application to the North Sea. J Mar Syst 8:171–189

Luyten PJ, Jones JE, Proctor R, Tabor A, Wild-Allen K (1999) COHERENS-A coupled hydrodynamical-ecological model for regional and shelf seas, user documentation, 911 p. MUMM report

Luyten PJ, Jones JE, Proctor R (2003) A numerical study of the long- and short-term temperature variability and thermal circulation in the North Sea. J Phys Oceanogr 33:37–56

Luyten P, Andreu-Burillo I, Norro A, Ponsar S, Proctor R (2005) A new version of the European public domain code COHERENS. In: Proceedings of the fourth international conference on EuroGOOS, pp 474–481

Mourre B, De Mey P, Lyard F, Le Provost C (2004) Assimilation of sea level data over continental shelves: an ensemble method for the exploration of model errors due to uncertainties in bathymetry. Dyn Atmos Ocean 38:93–121

Natvik L-J, Evensen G (2003) Assimilation of ocean colour data into a biochemical model of the North Atlantic. Part 2. Statistical analysis. J Mar Syst 40–41:155–169

Simon E, Bertino L (2009) Application of the Gaussian anamorphosis to assimilation in a 3-D coupled physical-ecosystem model of the North Atlantic with the EnKF: a twin experiment. Ocean Sci Discuss 6:617–652

Tippett MK, Anderson JL, Bishop CH (2003) Ensemble square root filters. Mon Weather Rev 131:1485–1490

Verlaan M, Zijderveld A, de Vries H, Kroos J (2005) Operational storm surge forecasting in the Netherlands: developments in the last decade. Philos Trans R Soc A. doi:10.1098/rsta.2005.1578

Zheng F, Zhu J (2008) Balanced multivariate model errors of an intermediate coupled model for ensemble Kalman filter data assimilation. J Geophys Res 113:C07002

Acknowledgements

The authors of this article wish to thank Sébastien Legrand and José Ozer from the Belgian Management Unit of the North Sea Mathematical Models for their valuable inputs and stimulating discussions during the writing of this paper. They also express their thanks to anonymous reviewers for their comments.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible Editor: Phil Dyke

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Ponsar, S., Luyten, P. Data assimilation with the EnKF in a 1-D numerical model of a North Sea station. Ocean Dynamics 59, 983–996 (2009). https://doi.org/10.1007/s10236-009-0224-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10236-009-0224-3