Abstract

We study the validity of the comparison and maximum principles and their relation with principal eigenvalues, for a class of degenerate nonlinear operators that are extremal among operators with one-dimensional fractional diffusion.


1 Introduction

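The fractional Laplacian is a singular integral operator defined, e.g., by

$$\begin{aligned} (-\Delta )^su(x)=-\frac{C_{N,s}}{2}\int _{{\mathbb {R}}^N}\frac{u(x+y)+u(x-y)-2u(x)}{\left| y\right| ^{N+2s}}\,dy, \end{aligned}$$

with \(s \in (0,1)\) and

$$\begin{aligned} C_{N,s}=\frac{s\,4^s\,\Gamma \left( \frac{N}{2}+s\right) }{\pi ^{N/2}\,\Gamma (1-s)}, \end{aligned}$$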

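so that the value of \((-\Delta )^su\) at x depends on the values of u in the whole of \({\mathbb {R}}^N\). Of course, one can also define singular integral operators that depend only on lower-dimensional subsets of \({\mathbb {R}}^N\). For example, one can consider one-dimensional sets, fixing a unit direction \(\xi \in {\mathcal {S}}^{N-1}\) and letting

$$\begin{aligned} {\mathcal {I}}_\xi u(x)=C_s \int _{0}^{+\infty } \frac{\delta (u, x, \tau \xi )}{\tau ^{1+2s}} \, d\tau =C_s \int _{0}^{+\infty } \frac{u(x+\tau \xi )+u(x-\tau \xi )-2u(x)}{\tau ^{1+2s}} \, d\tau . \end{aligned}$$

Here \(C_s=C_{1,s}\), so that \({\mathcal {I}}_\xi u(x)\) acts as the 2s-fractional derivative of u in the direction \(\xi\): precisely, it is \(-(-\Delta )^s\) applied to the one-variable function \(t\mapsto u(x+t\xi )\) and evaluated at \(t=0\). Denoting by \({\mathcal {V}}_k\) the family of orthonormal sets of k vectors in \({\mathbb {R}}^N\) (k-frames), we define the following nonlocal nonlinear operators

$$\begin{aligned} {\mathcal {I}}_k^+u(x)=\sup _{\{\xi _i\}_{i=1}^k\in {\mathcal {V}}_k}\sum _{i=1}^k{\mathcal {I}}_{\xi _i}u(x),\qquad {\mathcal {I}}_k^-u(x)=\inf _{\{\xi _i\}_{i=1}^k\in {\mathcal {V}}_k}\sum _{i=1}^k{\mathcal {I}}_{\xi _i}u(x). \end{aligned}$$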

These operators have recently been considered in [7], where representation formulas were given, and in [12], where the operators \({\mathcal {I}}_1^\pm\) are shown to be related to a notion of fractional convexity. These extremal operators, even for \(k=N\), are intrinsically different from the fractional Laplacian, and we will show that some new phenomena arise. In particular, we concentrate on exterior Dirichlet problems in bounded domains.

More precisely, for a bounded domain \(\Omega\) of \({\mathbb {R}}^N\), we will study:

The first difference we wish to emphasize is that these operators do not preserve continuity in general: even if u is bounded and \(u\in C^\infty (\Omega )\), the function \({\mathcal {I}}_k^\pm u(\cdot )\) may fail to be continuous. What is required in order to have continuity, or lower or upper semicontinuity, is a global condition on the regularity of u; this will be shown in Proposition 3.1. This is a striking difference with respect to nonlinear integro-differential operators such as those considered in [10], which are continuous as soon as u is \(C^{1, 1}\) in the domain \(\Omega\). These continuity properties play a key role in [10] in the proofs of the comparison principle, the Alexandrov–Bakelman–Pucci estimate, and the Harnack inequality; hence the setting we are interested in deviates substantially from that of [10].

Nevertheless, we will show that the comparison principle still holds for \({\mathcal {I}}_k^\pm\) in any bounded domain; we recall that a comparison principle for \({\mathcal {I}}_1^\pm\) was also proved in [12], under the assumption that the domain is strictly convex. We remark that the proof of the comparison principle here is in fact much simpler than in the local case. As is well known, in the theory of viscosity solutions the comparison principle for second order operators requires the Jensen–Ishii lemma, see [11], whose proof is remarkably involved and relies on tools from convex analysis. Here, instead, the proof is completely self-contained and uses only a straightforward calculation, somewhat closer to the case of first order local equations, where the doubling of variables technique alone suffices.

By adapting Perron’s method as in [11], the comparison principle allows us to prove existence of solutions to (1.1). Let us mention that existence in a very general setting that includes elliptic integro-differential operators was proved in [2, 3]. However, our approach is quite direct, and it seemed simpler and friendlier to the reader to give the proof rather than to check whether our operators fit into the general Barles–Chasseigne–Imbert setting.

We conclude with the proof of Hölder estimates for \({\mathcal {I}}_1^\pm\) in uniformly convex domains and of the validity of the maximum principle for the operators

$$\begin{aligned} u\mapsto {\mathcal {I}}_k^\pm u+\mu u \end{aligned}$$

with \(\mu\) below the generalized principal eigenvalues, which, adapting the classical definition in [4], we define as

Let us mention that, with our choice of the constant \(C_s\), the operators \({\mathcal {I}}_k^\pm\) converge, as \(s\rightarrow 1^-\), to the operators \({\mathcal {P}}_k^\pm\), the so-called truncated Laplacians, defined by

$$\begin{aligned} {\mathcal {P}}_k^-(D^2u)(x)=\sum _{i=1}^{k}\lambda _i(D^2u(x)) \end{aligned}$$

and

$$\begin{aligned} {\mathcal {P}}_k^+(D^2u)(x)=\sum _{i=N-k+1}^{N}\lambda _i(D^2u(x)), \end{aligned}$$

where \(\lambda _i(D^2 u)\) are the eigenvalues of \(D^2u\) arranged in nondecreasing order, see [5, 6, 9, 15]. Of course, there are other classes of nonlocal operators that approximate \({\mathcal {P}}^\pm _k (D^2 u)(x)\), see [7]; here we concentrate on those that are, in some sense, more novel.
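For comparison with the nonlocal case, the local operators \({\mathcal {P}}_k^\pm\) are easy to evaluate from the spectrum of the Hessian. The NumPy sketch below (function names are ours, purely illustrative) computes them and checks on random orthonormal k-frames that \(\sum _i\langle D^2u\,\xi _i,\xi _i\rangle\) always lies between \({\mathcal {P}}_k^-\) and \({\mathcal {P}}_k^+\). This is Ky Fan's variational characterization, which is why, in the local case, the supremum and infimum over frames are attained.

```python
import numpy as np

def truncated_laplacians(hessian, k):
    """Return (P_k^-, P_k^+): the sums of the k smallest and of the k largest eigenvalues."""
    eig = np.linalg.eigvalsh(hessian)          # eigenvalues in ascending (nondecreasing) order
    return float(eig[:k].sum()), float(eig[-k:].sum())

def frame_sum(hessian, frame):
    """Sum of <hessian xi_i, xi_i> over the orthonormal columns xi_i of an N x k array."""
    return float(np.trace(frame.T @ hessian @ frame))

rng = np.random.default_rng(0)
M = np.diag([1.0, 2.0, 3.0])                   # plays the role of D^2 u(x), N = 3
p_minus, p_plus = truncated_laplacians(M, 2)
samples = []
for _ in range(200):
    frame = np.linalg.qr(rng.standard_normal((3, 2)))[0]   # random orthonormal 2-frame
    samples.append(frame_sum(M, frame))
print(p_minus, min(samples), max(samples), p_plus)
```

For k = N both operators reduce to the trace, that is, to the Laplacian.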

In general, we wish to emphasize that in this setting there are differences both with the corresponding local operators and with more standard nonlocal operators. As already pointed out, \({\mathcal {I}}_k^\pm u\) is in general not continuous. Moreover, even when \(k=N\), which in the local case gives \({\mathcal {P}}^+_N (D^2u)(x)={\mathcal {P}}^-_N (D^2u)(x)=\Delta u\), it is not true that \({\mathcal {I}}_N^-\) equals \({\mathcal {I}}_N^+\), nor that it coincides with the fractional Laplacian (up to sign). There are further differences, for example regarding the validity of the strong maximum principle, see Theorem 4.3 and Proposition 4.7, or regarding the fact that for \({\mathcal {P}}^\pm _k\) the supremum (infimum) over all k-dimensional frames is in fact a maximum (minimum), while here the extremum may not be attained, as shown by the examples preceding Proposition 3.1. We encourage the reader to read on to discover all these fascinating differences.
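The claim that \({\mathcal {I}}_N^+\) and \({\mathcal {I}}_N^-\) differ even for \(k=N\) can be illustrated numerically. The sketch below is our own and rests on two assumptions: \(C_s\) is the standard constant \(C_{1,s}=s4^s\Gamma (\frac{1}{2}+s)/(\sqrt{\pi }\,\Gamma (1-s))\), and the test function is the hypothetical bounded anisotropic profile \(u(x)=(1-x_1^2-4x_2^2)_+^s\) in \(N=2\). Each \({\mathcal {I}}_\xi u(0)\) is approximated by a midpoint rule (with a second-order Taylor approximation on a short initial interval), and the supremum and infimum over frames are replaced by a maximum and minimum over a finite grid of rotation angles. Rescaling the classical one-dimensional identity for \((1-t^2)_+^s\) gives \({\mathcal {I}}_\xi u(0)=-\Gamma (1+2s)(\xi _1^2+4\xi _2^2)^s\), so for \(s=1/2\) one expects \({\mathcal {I}}_2^+u(0)\approx -3\) (frame aligned with the axes) and \({\mathcal {I}}_2^-u(0)\approx -2\sqrt{5/2}\approx -3.16\) (frame rotated by \(\pi /4\)).

```python
import math

def c_s(s):
    """Assumed normalization C_s = C_{1,s} = s 4^s Gamma(1/2+s) / (sqrt(pi) Gamma(1-s))."""
    return s * 4**s * math.gamma(0.5 + s) / (math.sqrt(math.pi) * math.gamma(1 - s))

def delta(u, x, y):
    """delta(u, x, y) = u(x+y) + u(x-y) - 2u(x), for points given as lists."""
    return u([a + b for a, b in zip(x, y)]) + u([a - b for a, b in zip(x, y)]) - 2 * u(x)

def i_xi(u, x, xi, s, T, n=4000, tau0=1e-3):
    """Approximate I_xi u(x); assumes u is C^2 near x and u(x +- tau*xi) = 0 for tau > T."""
    p = 1.0 / (1.0 - s)                                  # substitution tau = t**p
    d2 = delta(u, x, [tau0 * c for c in xi]) / tau0**2   # (0, tau0): delta ~ d2 * tau^2
    near = d2 * tau0 ** (2 - 2 * s) / (2 - 2 * s)
    t0, t1 = tau0 ** (1 / p), T ** (1 / p)
    h = (t1 - t0) / n
    mid = 0.0
    for j in range(n):                                   # midpoint rule on (tau0, T) in t
        t = t0 + (j + 0.5) * h
        tau = t**p
        mid += delta(u, x, [tau * c for c in xi]) * p * t ** (p - 1) / tau ** (1 + 2 * s)
    tail = -u(x) * T ** (-2 * s) / s                     # (T, inf): delta = -2u(x)
    return c_s(s) * (near + h * mid + tail)

s = 0.5
u = lambda y: max(0.0, 1.0 - y[0] ** 2 - 4.0 * y[1] ** 2) ** s   # hypothetical example

def frame_value(theta):
    """Sum of I_xi u(0) over the rotated orthonormal frame {(cos, sin), (-sin, cos)}."""
    frame = ([math.cos(theta), math.sin(theta)], [-math.sin(theta), math.cos(theta)])
    return sum(i_xi(u, [0.0, 0.0], xi, s, T=1.0) for xi in frame)

values = [frame_value(j * math.pi / 16) for j in range(9)]       # theta in [0, pi/2]
print(max(values), min(values))   # approximations of I_2^+ u(0) and I_2^- u(0)
```

In two dimensions every orthonormal frame is, up to order and signs, a rotation of the canonical one, so the angle grid in \([0,\pi /2]\) is enough; it only approximates the extrema.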

This paper is organized as follows.

After a preliminary section, in Sect. 3 we study continuity properties of \({\mathcal {I}}_k^\pm\). We first give counterexamples showing that in general these operators are not continuous, and then we prove that they preserve upper (or lower) semicontinuity under some global assumptions. As a related result, we also show that the supremum and the infimum in the definitions of \({\mathcal {I}}_k^\pm\) are in general not attained.

Section 4 is devoted to the proof of the comparison principle. There we also investigate the validity and the failure of strong maximum/minimum principles for these operators, and we prove a Hopf-type lemma for \({\mathcal {I}}_N^-\) and \({\mathcal {I}}_k^+\).

In Sect. 5, we exploit the uniform convexity of the domain \(\Omega\) to construct first barrier functions and then solutions of the Dirichlet problem by means of Perron’s method [11].

Section 6 is devoted to the validity of the maximum principle for \({\mathcal {I}}_k^\pm \cdot +\mu \cdot\) and to its relation with principal eigenvalues.

Finally, Hölder estimates for solutions of \({\mathcal {I}}_1^\pm u= f\) in \(\Omega\), \(u=0\) in \({\mathbb {R}}^N {\setminus } \Omega\), where \(\Omega\) is a uniformly convex domain, are proved in Sect. 7.

We use them in Sect. 8 to prove the existence of a positive principal eigenfunction.

Notations

| Symbol | Meaning |
|---|---|
| \(B_r(x)\) | ball centered at x of radius r |
| \({\mathcal {S}}^{N-1}\) | unit sphere in \({\mathbb {R}}^{N}\) |
| \(\{ e_i\}_{i=1}^N\) | canonical basis of \({\mathbb {R}}^N\) |
| d(x) | \(= \inf _{y \in \partial \Omega } \left\vert x-y\right\vert\), the distance from \(x \in \Omega\) to \(\partial \Omega\) |
| \(LSC(\Omega )\) | space of lower semicontinuous functions on \(\Omega\) |
| \(USC(\Omega )\) | space of upper semicontinuous functions on \(\Omega\) |
| \(\delta (u, x, y)\) | \(= u(x+y)+ u(x-y)-2u(x)\) |
| \({\mathcal {I}}_\xi u(x)\) | \(=C_s \int _{0}^{+\infty } \frac{\delta (u, x, \tau \xi )}{\tau ^{1+2s}} \, d\tau\), where \(\xi \in {\mathcal {S}}^{N-1}\) and \(C_s\) is a normalizing constant |
| \({\hat{x}}\) | \(=\frac{x}{\left\vert x\right\vert }\), for \(x\ne 0\) |
| \(\beta (a, b)\) | \(=\int _0^1 t^{a-1} (1-t)^{b-1} \, dt\) |
| \({\mathcal {V}}_k\) | the family of k-dimensional orthonormal sets in \({\mathbb {R}}^N\) |
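For readers who want to experiment, the directional operator \({\mathcal {I}}_\xi\) in the table can be approximated by one-dimensional quadrature. The Python sketch below is only an illustration under stated assumptions: the helper names are ours, \(C_s\) is taken to be the standard constant \(C_{1,s}=s4^s\Gamma (\frac{1}{2}+s)/(\sqrt{\pi }\,\Gamma (1-s))\), u is assumed to be \(C^2\) near x and to vanish along the line outside a known window \(\tau \le T\). Near \(\tau =0\) the integrand is replaced by its second-order Taylor approximation (computing \(\delta\) for tiny \(\tau\) would be dominated by rounding), and on the rest of the range a midpoint rule is applied after the substitution \(\tau =t^{1/(1-s)}\). As a sanity check we use the classical one-dimensional identity: for \(u(t)=(1-t^2)_+^s\), the value equals \(-\Gamma (1+2s)\) at every \(|t|<1\).

```python
import math

def c_s(s):
    """Assumed normalization C_s = C_{1,s} = s 4^s Gamma(1/2+s) / (sqrt(pi) Gamma(1-s))."""
    return s * 4**s * math.gamma(0.5 + s) / (math.sqrt(math.pi) * math.gamma(1 - s))

def delta(u, x, y):
    """delta(u, x, y) = u(x+y) + u(x-y) - 2u(x), for points given as lists."""
    return u([a + b for a, b in zip(x, y)]) + u([a - b for a, b in zip(x, y)]) - 2 * u(x)

def i_xi(u, x, xi, s, T, n=20000, tau0=1e-3):
    """Approximate I_xi u(x) = C_s * int_0^inf delta(u, x, tau*xi) / tau^(1+2s) dtau.

    Assumptions (for this sketch only): u is C^2 near x, and u(x +- tau*xi) = 0 for tau > T.
    """
    p = 1.0 / (1.0 - s)
    # (0, tau0): delta(u, x, tau*xi) ~ d2 * tau^2, with d2 estimated by a second difference.
    d2 = delta(u, x, [tau0 * c for c in xi]) / tau0**2
    near = d2 * tau0 ** (2 - 2 * s) / (2 - 2 * s)
    # (tau0, T): midpoint rule after tau = t**p, which spreads nodes where the kernel is steep.
    t0, t1 = tau0 ** (1 / p), T ** (1 / p)
    h = (t1 - t0) / n
    mid = 0.0
    for j in range(n):
        t = t0 + (j + 0.5) * h
        tau = t**p
        mid += delta(u, x, [tau * c for c in xi]) * p * t ** (p - 1) / tau ** (1 + 2 * s)
    # (T, inf): delta = -2u(x), and -2u(x) * int_T^inf tau^(-1-2s) dtau = -u(x) T^(-2s) / s.
    tail = -u(x) * T ** (-2 * s) / s
    return c_s(s) * (near + h * mid + tail)

for s in (0.3, 0.5, 0.7):
    u = lambda y, s=s: max(0.0, 1.0 - y[0] ** 2) ** s      # (1 - t^2)_+^s in one dimension
    print(s, i_xi(u, [0.2], [1.0], s, T=1.2), -math.gamma(1 + 2 * s))
```

Here x = 0.2 and T = 1.2, because \(0.2\pm \tau\) leaves \([-1,1]\) for \(\tau >1.2\).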

2 Preliminaries

We recall the definition of viscosity solution in this nonlocal context [2, 3]. For definitions and main properties of viscosity solutions in the classical local framework, we refer to the survey [11].

Henceforth, we consider bounded functions \(u:\mathbb {R}^N\rightarrow \mathbb {R}\) which are measurable along one-dimensional affine subspaces of \(\mathbb {R}^N\). That is, for every \(x\in \mathbb {R}^N\) and \(\xi \in {\mathcal {S}}^{N-1}\) we require the map

$$\begin{aligned} \tau \in {\mathbb {R}}\mapsto u(x+\tau \xi ) \end{aligned}$$

to be measurable. In the rest of the paper we tacitly assume this condition without further mention and, with a slight abuse of notation, we simply write \(u\in L^\infty (\mathbb {R}^N)\).

Definition 2.1

Given a function \(f \in C(\Omega \times {\mathbb {R}})\), we say that \(u \in L^\infty ({\mathbb {R}}^N) \cap LSC(\Omega )\) (respectively, \(USC(\Omega )\)) is a (viscosity) supersolution (respectively, subsolution) to

if for every point \(x_0 \in \Omega\) and every function \(\varphi \in C^2(B_\rho (x_0))\), \(\rho >0\), such that \(x_0\) is a minimum (resp. maximum) point of \(u - \varphi\), then

where

We say that a continuous function u is a solution of (2.1) if it is both a supersolution and a subsolution of (2.1). We analogously define viscosity sub/supersolutions for the operator \({\mathcal {I}}_k^-\), taking the infimum over \({\mathcal {V}}_k\) in place of the supremum.

Remark 2.2

We stress that the definition above is modeled on \(-(-\Delta )^s\) rather than on \((-\Delta )^s\); in other words, a minus sign in front of the operator is built into the definition.

Remark 2.3

In the definition of supersolution above, we can assume without loss of generality that \(u > \varphi\) in \(B_\rho (x_0) {\setminus } \{ x_0 \}\), and \(\varphi (x_0) = u(x_0)\). Indeed, let us assume that for any such \(\varphi\)

is satisfied, and consider a general \({\tilde{\varphi }} \in C^2(B_\rho (x_0))\) such that \(u-{\tilde{\varphi }}\) has a minimum at \(x_0\). For any \(n \in {\mathbb {N}}\) we take

and notice that \(u(x_0)=\varphi _n(x_0)\), and since \(u(x_0) - {\tilde{\varphi }}(x_0) \le u(x)-{\tilde{\varphi }}(x)\),

for any \(x \in B_\rho (x_0) {\setminus } \{ x_0 \}\). Also, for any \(n \in {\mathbb {N}}\),

and the conclusion follows taking the limit \(n \rightarrow \infty\).

Remark 2.4

We point out that if (2.2) holds for \(\rho _1\), then it also holds for any \(\rho _2 > \rho _1\) (provided \(\varphi \in C^2(B_{\rho _2}(x_0))\)), since

Remark 2.5

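The operators \({\mathcal {I}}_k^\pm\) satisfy the following ellipticity condition: if \(\psi _1, \psi _2 \in C^2(B_\rho (x_0)) \cap L^{\infty }({\mathbb {R}}^N)\) for some \(\rho >0\) are such that \(\psi _1-\psi _2\) attains its maximum over \({\mathbb {R}}^N\) at \(x_0\), then

$$\begin{aligned} {\mathcal {I}}_k^\pm \psi _1(x_0)\le {\mathcal {I}}_k^\pm \psi _2(x_0). \end{aligned}$$

Indeed, if \(\psi _1(x_0)-\psi _2(x_0) \ge \psi _1(x)-\psi _2(x)\) for all \(x \in \mathbb R^N,\) then, for every \(y\in {\mathbb {R}}^N\),

$$\begin{aligned} \delta (\psi _1, x_0, y)-\delta (\psi _2, x_0, y)=\left[ (\psi _1-\psi _2)(x_0+y)-(\psi _1-\psi _2)(x_0)\right] +\left[ (\psi _1-\psi _2)(x_0-y)-(\psi _1-\psi _2)(x_0)\right] \le 0, \end{aligned}$$

so that \({\mathcal {I}}_\xi \psi _1(x_0)\le {\mathcal {I}}_\xi \psi _2(x_0)\) for every \(\xi \in {\mathcal {S}}^{N-1}\), which yields the conclusion.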

Remark 2.6

Notice that in the definition above we assumed \(u \in L^\infty ({\mathbb {R}}^N)\), which is enough for our purposes; however, one can also consider unbounded functions u with a suitable growth condition at infinity, see [7].

3 Continuity

In this section, we study continuity properties of the maps \(x \mapsto {\mathcal {I}}_k^\pm u(x)\). We start by showing that the assumption \(u \in C^2(\Omega ) \cap L^\infty ({\mathbb {R}}^N)\), which ensures that \({\mathcal {I}}_k^\pm u(x)\) is well defined for \(x\in \Omega\), is not enough to guarantee the continuity of \({\mathcal {I}}_k^\pm u(x)\) with respect to x. What is needed is a more global assumption, as shown in Proposition 3.1.

Let u be the function defined as follows:

Set \(\Omega =B_1(0)\). The map

is well defined, since u is bounded in \({\mathbb {R}}^N\) and smooth (in fact constant) in \(\Omega\). We shall prove that it is not continuous at \(x=0\) when \(k<N\).

Let us first compute the value \({\mathcal {I}}_k^+u(0)\). Since \(u\le 0\) in \({\mathbb {R}}^N\), it turns out that for any \(|\xi |=1\)

Hence,

On the other hand, choosing the first k unit vectors \(e_1,\ldots ,e_k\) of the standard basis, we obtain that

Now we are going to prove that

where \(e_N=(0,\ldots ,0,1)\). Fix any \(|\xi |=1\). Since \({\mathcal {I}}_\xi u={\mathcal {I}}_{-\xi }u\), we can further assume that \(\left\langle \xi ,e_N\right\rangle \ge 0\). Then, for any \(n>1\),

where

and

Notice that if \(\tau \le \tau _1(n)\), then \(\frac{1}{n} e_N \pm \tau \xi \in \overline{B_1(0)}\); if \(\tau \in (\tau _1(n), \tau _2(n)]\), then \(\frac{1}{n} e_N - \tau \xi \in \overline{B_1(0)}\), while \(\frac{1}{n} e_N + \tau \xi \not \in \overline{B_1(0)}\); finally, if \(\tau > \tau _2(n)\), then \(\frac{1}{n} e_N \pm \tau \xi \not \in \overline{B_1(0)}\), see also Fig. 1.
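The case distinction above can be checked numerically. The explicit formulas for \(\tau _1(n)\) and \(\tau _2(n)\) are not reproduced in this text; the sketch below assumes that they are the exit times of \(\frac{1}{n}e_N+\tau \xi\) and \(\frac{1}{n}e_N-\tau \xi\) from \(\overline{B_1(0)}\), i.e., the positive roots of \(|\frac{1}{n}e_N\pm \tau \xi |^2=1\). With \(a=\langle \xi ,e_N\rangle \ge 0\) this gives \(\tau _{1,2}(n)=\sqrt{a^2/n^2+1-1/n^2}\mp a/n\). The helpers `exit_times`, `point` and `classify` are hypothetical names used only for illustration. The check also tests, on a few directions, the bound \(\tau _1(n)\le \sqrt{1-1/n^2}\) used next.

```python
import math

def exit_times(n, xi):
    """Hypothetical helper: exit times from the closed unit ball of e_N/n + tau*xi (tau1)
    and of e_N/n - tau*xi (tau2), for a unit vector xi with <xi, e_N> >= 0 (e_N = last axis)."""
    a = xi[-1]                                   # a = <xi, e_N>
    root = math.sqrt(a * a / n**2 + 1 - 1 / n**2)
    return root - a / n, root + a / n

def point(n, xi, tau, sign):
    """The point e_N/n + sign * tau * xi."""
    p = [sign * tau * c for c in xi]
    p[-1] += 1.0 / n
    return p

def classify(n, xi, tau):
    """(plus point in closed ball, minus point in closed ball) for e_N/n +- tau*xi."""
    inside = lambda p: sum(c * c for c in p) <= 1.0
    return inside(point(n, xi, tau, 1)), inside(point(n, xi, tau, -1))

n, angle = 5, 0.7
xi = [math.cos(angle), math.sin(angle)]          # a unit vector in N = 2 with <xi, e_N> > 0
t1, t2 = exit_times(n, xi)
print(t1, t2, math.sqrt(1 - 1 / n**2))
print(classify(n, xi, 0.9 * t1), classify(n, xi, 0.5 * (t1 + t2)), classify(n, xi, 1.1 * t2))
```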

Using \(u\le 0\) we obtain from (3.4) that

Moreover, since \(\tau _1(n)\le \sqrt{1-\frac{1}{n^2}}\), we infer that

for any \(|\xi |=1\). Then,

and

as we wanted to show.

A slight modification of the function u in (3.1) allows us to show that the map

is also, in general, not continuous.

Consider the function

As before, using the fact that \(u\le 0\) in \({\mathbb {R}}^N\) and that

we have

Moreover, (3.5) still holds for any \(|\xi |=1\) such that \(\left\langle \xi ,e_N\right\rangle \in [0,1)\). Since any orthonormal basis \(\left\{ \xi _1,\ldots ,\xi _N\right\}\) contains at most one \(\xi _i\) such that \(\left\langle \xi _i,e_N\right\rangle =1\), we obtain

and

A further consequence of the lack of continuity is that the \(\sup\) or \(\inf\) in the definition of \({\mathcal {I}}_k^\pm\) is in general not attained under the sole assumption \(u \in C^2(\Omega ) \cap L^\infty ({\mathbb {R}}^N)\). As an example, take

Then

Since \({\mathcal {I}}_\xi u(0)={\mathcal {I}}_{-\xi } u(0)\), we can assume without loss of generality that \(\langle \xi , e_N \rangle \in [0, 1]\). Thus,

Notice that

where

which is continuous and monotone decreasing and

Therefore, we deduce

However, there does not exist any \(\xi\) such that \({\mathcal {I}}_1^+ u(0)={\mathcal {I}}_\xi u(0)\).

Let us now consider the case \({\mathcal {I}}_k^+\) with \(2 \le k \le N\). We consider the function

In this case,

where

Thus, one has

Now, let us compute the supremum of the function

Observe that

and that

as \(s > 1/2\). By (3.6) and (3.7), \(F(\theta ) \in C^1(0, \pi /2)\) and

Moreover,

for all \(\tau >1\) and \(\theta \in (0, \pi /2)\). Also,

Combining (3.8) and (3.9), we conclude

Finally,

which implies

Therefore,

however, there does not exist \(\theta \in [0, \pi /2]\) such that

Proposition 3.1

Let \(u\in C^2(\Omega )\cap L^\infty ({\mathbb {R}}^N)\) and consider the maps

If \(u\in LSC({\mathbb {R}}^N)\) (respectively, \(USC({\mathbb {R}}^N)\), \(C({\mathbb {R}}^N)\)) then

-

(i)

\(\Psi \in LSC(\Omega \times {{\mathcal {S}}}^{N-1})\) (respectively, \(USC(\Omega \times {{\mathcal {S}}}^{N-1})\), \(C(\Omega \times {{\mathcal {S}}}^{N-1})\));

-

(ii)

\({\mathcal {I}}^\pm _ku\in LSC(\Omega )\) (respectively, \(USC(\Omega )\), \(C(\Omega )\)).

Proof

-

(i)

Let \((x_n,\xi _n)\rightarrow (x_0,\xi _0)\in \Omega \times {{\mathcal {S}}}^{N-1}\) as \(n\rightarrow +\infty\). Fix \(R>0\) such that \({\overline{B}}_R(x_0)\subset \Omega\) and set \(M=\displaystyle \max _{x\in {\overline{B}}_R(x_0)}\left\| D^2u(x)\right\|\). For \(\rho \in (0,\frac{R}{2})\) it holds that \(B_{2\rho }(x_0)\subset B_R(x_0)\) and, for n sufficiently large and any \(\tau \in [0,\rho )\), that \(x_n\pm \tau \xi _n\in B_{2\rho }(x_0)\). By a second-order Taylor expansion, \(\left| \delta (u, x, \tau \xi )\right| \le M\tau ^2\) whenever \(x\pm \tau \xi \in B_R(x_0)\); since \(C_s\int _0^\rho M\tau ^{1-2s}\,d\tau =C_s\frac{M\rho ^{2-2s}}{2-2s}\), applying this bound both at \((x_n,\xi _n)\) and at \((x_0,\xi _0)\) we have

$$\begin{aligned} {\mathcal {I}}_{\xi _n}u(x_n)-{\mathcal {I}}_{\xi _0}u(x_0)\ge -C_s\frac{M\rho ^{2-2s}}{1-s}+C_s \int _\rho ^{+\infty }\frac{\delta (u, x_n, \tau \xi _n)}{\tau ^{1+2s}}\,d\tau -C_s \int _\rho ^{+\infty }\frac{\delta (u, x_0, \tau \xi _0)}{\tau ^{1+2s}}\,d\tau \,. \end{aligned}$$Since u is continuous in \(\Omega\), we have \(u(x_n)\rightarrow u(x_0)\) as \(n\rightarrow +\infty\); combining this with the lower semicontinuity of u in \({\mathbb {R}}^N\), we get

$$\begin{aligned} \liminf _{n\rightarrow +\infty }\delta (u, x_n, \tau \xi _n) \ge \delta (u, x_0, \tau \xi _0) \end{aligned}$$for any \(\tau \in (0,+\infty )\). Moreover, since \(\rho >0\) and \(u\in L^\infty ({\mathbb {R}}^N)\), the integrands on \((\rho ,+\infty )\) are bounded from below by the integrable function \(-4\left\| u\right\| _\infty \tau ^{-1-2s}\); hence, by Fatou’s lemma, we also infer that

$$\begin{aligned} \liminf _{n\rightarrow +\infty }[ {\mathcal {I}}_{\xi _n}u(x_n)-{\mathcal {I}}_{\xi _0}u(x_0)] \ge -C_s\frac{M\rho ^{2-2s}}{1-s}. \end{aligned}$$Since \(\rho\) can be chosen arbitrarily small, we conclude that

$$\begin{aligned} \liminf _{n\rightarrow +\infty }\Psi (x_n, \xi _n)\ge \Psi (x_0, \xi _0). \end{aligned}$$In a similar way one can prove that \(\Psi \in USC(\Omega \times {{\mathcal {S}}}^{N-1})\) if \(u\in USC({\mathbb {R}}^N)\). In particular \(\Psi \in C(\Omega \times {{\mathcal {S}}}^{N-1})\) when u is continuous in \({\mathbb {R}}^N\).

-

(ii)

By the assumption \(u\in C^2(\Omega )\cap L^\infty ({\mathbb {R}}^N)\), we first note that, for any \(x\in \Omega\), \({\mathcal {I}}_\xi u(x)\) is uniformly bounded with respect to \(\xi \in {\mathcal {S}}^{N-1}\). Hence,

$$\begin{aligned} -\infty<{\mathcal {I}}^-_ku(x)\le {\mathcal {I}}^+_ku(x)<+\infty . \end{aligned}$$Moreover, for any compact \(K \subset \Omega\) there exists a constant \(M_K\) such that

$$\begin{aligned} -M_K \le {\mathcal {I}}_k^- u \le {\mathcal {I}}_k^+ u \le M_K. \end{aligned}$$Henceforth, we shall consider \({\mathcal {I}}^-_k\), the other case being similar.

Let \(x_n\rightarrow x_0\in \Omega\) as \(n\rightarrow +\infty\) and let \(\varepsilon >0\). By the definitions of lower limit and \({\mathcal {I}}_k^-u\), there exist a subsequence \((x_{n_m})_{m}\) and k sequences \((\xi _i(m))_m\subset {\mathcal {S}}^{N-1}\), \(i=1,\ldots ,k\), with \(\{\xi _1(m),\ldots ,\xi _k(m)\}\in {\mathcal {V}}_k\), such that for any \(m \in {\mathbb {N}}\)

Up to extracting a further subsequence, we can assume that \(\xi _i(m)\rightarrow {\bar{\xi }}_i\), as \(m\rightarrow +\infty\), for any \(i=1,\ldots ,k\); note that \(\{{\bar{\xi }}_1,\ldots ,{\bar{\xi }}_k\}\in {\mathcal {V}}_k\), since orthonormality is preserved in the limit. Since \(\Psi \in LSC(\Omega \times {{\mathcal {S}}}^{N-1})\) by (i), we can pass to the limit as \(m\rightarrow +\infty\) in (3.10) to get

Letting \(\varepsilon \rightarrow 0\), this implies that \({\mathcal {I}}_k^-u\in LSC(\Omega )\).

The proof that \({\mathcal {I}}_k^-u\in USC(\Omega )\) under the assumption \(u\in USC({\mathbb {R}}^N)\) is more standard, since \({\mathcal {I}}_k^-u(x)=\inf _{\left\{ \xi _i\right\} _{i=1}^k\in {{\mathcal {V}}}_k}\sum _{i=1}^k\Psi (x,\xi _i)\) is an infimum of functions \(x\mapsto \sum _{i=1}^k\Psi (x,\xi _i)\) which are upper semicontinuous in \(\Omega\) by (i), and an infimum of upper semicontinuous functions is upper semicontinuous.

Lastly, if \(u\in C({\mathbb {R}}^N)\), by the previous cases \({\mathcal {I}}_k^-u\) is a continuous function in \(\Omega\). \(\square\)

4 Comparison and maximum principles

We consider the problems

Theorem 4.1

Let \(\Omega \subset {\mathbb {R}}^N\) be a bounded domain and let \(c(x),f(x)\in C(\Omega )\) be such that \(\left\| c^+\right\| _{\infty }<C_s \frac{k}{s}({\text {diam}}(\Omega ))^{-2s}\). If \(u\in USC({\overline{\Omega }})\cap L^\infty ({\mathbb {R}}^N)\) and \(v\in LSC({\overline{\Omega }})\cap L^\infty ({\mathbb {R}}^N)\) are, respectively, a subsolution and a supersolution of (4.1), then \(u\le v\) in \(\Omega\).

Proof

We give the details in the case \({\mathcal {I}}^+_k\), the same arguments applying to \({\mathcal {I}}^-_k\) as well. We argue by contradiction, supposing that there exists \(x_0\in \Omega\) such that

Doubling the variables, for \(n\in \mathbb {N}\) we consider \((x_n,y_n)\in {\overline{\Omega }}\times {\overline{\Omega }}\) such that

Using [11, Lemma 3.1], up to subsequences, we have

and

Moreover, by the semicontinuity of u and v, we can find \(\varepsilon >0\) such that

and also

where \(\Omega _\varepsilon =\left\{ x\in {\overline{\Omega }}:\;{\text {dist}}(x,\partial \Omega )<\varepsilon \right\}\). We first claim that for \(n\ge \frac{\left\| u\right\| _\infty +\left\| v\right\| _\infty }{\varepsilon ^2}\)

To prove (4.7), take any \((x,y)\notin {\overline{\Omega }}\times {\overline{\Omega }}\):

Case 1. If \(|x-y|\ge \varepsilon\), then \(u(x)-v(y)-n|x-y|^2\le \left\| u\right\| _\infty +\left\| v\right\| _\infty -n\varepsilon ^2\le 0\);

Case 2. If \(|x-y|<\varepsilon\) and both \(x\notin {\overline{\Omega }}\) and \(y\notin {\overline{\Omega }}\), then \(u(x)-v(y)-n|x-y|^2\le 0\), since \(u\le 0\le v\) in \({\mathbb {R}}^N{\setminus } {\overline{\Omega }}\);

Case 3. If \(|x-y|<\varepsilon\) and \(x\notin {\overline{\Omega }},\ y\in {\overline{\Omega }}\) or \(x\in {\overline{\Omega }},\ y\notin {\overline{\Omega }}\), then using (4.5) and (4.6) we infer that \(u(x)-v(y)-n|x-y|^2<u(x_0)-v(x_0)\).

Thus, (4.7) is proved.

Taking the functions \(\varphi _n(x):=u(x_n)+ n|x-y_n|^2 - n|x_n-y_n|^2\) and \(\phi _n(y)=v(y_n)- n|x_n-y|^2 +n|x_n-y_n|^2\), we see that \(\varphi _n\) touches u at \(x_n\) from above, while \(\phi _n\) touches v at \(y_n\) from below. Hence, for any \(\rho >0\)

In a dual fashion

Subtracting (4.8) and (4.9), we then obtain

Choosing in particular \(x=x_n\pm \tau \xi _i\) and \(y=y_n\pm \tau \xi _i\) we deduce that

for any \(\tau >0\) and for any \(|\xi _i|=1\). Thus, (4.10) implies, assuming without loss of generality that \(\rho < {\text {diam}}(\Omega )\),

Since \(\Omega \subset B_{{\text {diam}}(\Omega )}(x_n)\) and \(x_n\pm \tau \xi _i \notin B_{{\text {diam}}(\Omega )}(x_n)\) for any \(\tau \ge {\text {diam}}(\Omega )\), the points \(x_n\pm \tau \xi _i\) lie outside \(\Omega\), so that \(u(x_n\pm \tau \xi _i)\le 0\). For the same reason, \(v(y_n\pm \tau \xi _i)\ge 0\) when \(\tau \ge {\text {diam}}(\Omega )\). Hence,

and

Letting first \(\rho \rightarrow 0\), then \(n\rightarrow +\infty\) and using (4.3)-(4.4) we obtain

which is a contradiction since \(u(x_0)-v(x_0)>0\) and \(\left\| c^+\right\| _{\infty }<C_s \frac{k}{s}({\text {diam}}(\Omega ))^{-2s}\). \(\square\)
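The threshold \(C_s\frac{k}{s}({\text {diam}}(\Omega ))^{-2s}\) in Theorem 4.1 comes from the far-field part of the integrals. As a sketch (our reconstruction of this step for the reader's convenience, not a transcription of the displays above), write \(d={\text {diam}}(\Omega )\). For \(\tau \ge d\) the points \(x_n\pm \tau \xi _i\) and \(y_n\pm \tau \xi _i\) lie outside \(\Omega\), so \(u(x_n\pm \tau \xi _i)\le 0\le v(y_n\pm \tau \xi _i)\), and therefore

```latex
\begin{aligned}
\delta(u,x_n,\tau\xi_i)-\delta(v,y_n,\tau\xi_i)&\le -2\bigl(u(x_n)-v(y_n)\bigr)\qquad (\tau\ge d),\\
C_s\sum_{i=1}^k\int_d^{+\infty}\frac{\delta(u,x_n,\tau\xi_i)-\delta(v,y_n,\tau\xi_i)}{\tau^{1+2s}}\,d\tau
&\le -2kC_s\bigl(u(x_n)-v(y_n)\bigr)\int_d^{+\infty}\frac{d\tau}{\tau^{1+2s}}
=-C_s\frac{k}{s}\,d^{-2s}\bigl(u(x_n)-v(y_n)\bigr).
\end{aligned}
```

Roughly speaking, since \(u(x_n)-v(y_n)\rightarrow u(x_0)-v(x_0)>0\), this negative contribution could only be balanced by the zero-order term, which in the limit is at most \(\left\| c^+\right\| _\infty (u(x_0)-v(x_0))\); the assumption on \(\left\| c^+\right\| _\infty\) rules this out.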

In what follows, we clarify what we mean by (weak) maximum/minimum principle.

Definition 4.2

We say that the operator \({\mathcal {I}}\) satisfies the weak maximum principle in \(\Omega\) if

and that it satisfies the strong maximum principle in \(\Omega\) if

Correspondingly, \({\mathcal {I}}\) satisfies the weak minimum principle in \(\Omega\) if

and it satisfies the strong minimum principle in \(\Omega\) if

The weak maximum (respectively, minimum) principle follows by applying the comparison principle, Theorem 4.1, with \(v=0\) (respectively, \(u=0\)). However, the operators \({\mathcal {I}}_k^\pm\) do not always satisfy the strong maximum or minimum principle, see also [7].

Theorem 4.3

The following conclusions hold.

-

(i)

The operators \({\mathcal {I}}_k^-\), with \(k < N\), do not satisfy the strong minimum principle in \(\Omega\).

-

(ii)

The operator \({\mathcal {I}}_N^-\) satisfies the strong minimum principle in \(\Omega\).

-

(iii)

The operators \({\mathcal {I}}_k^+\), with \(k \le N\), satisfy the strong minimum principle in \(\Omega\).

Remark 4.4

We notice that since \({\mathcal {I}}_k^+ (-u)= -{\mathcal {I}}_k^- u\), corresponding results hold for the maximum principle.

Proof

-

(i)

We refer to Proposition 2.2 in [7] for a counterexample.

-

(ii)

Let us assume that u satisfies

$$\begin{aligned} {\left\{ \begin{array}{ll} {\mathcal {I}}_N^- u \le 0 &{}{\text { in }} \Omega \\ u \ge 0 &{}{\text { in }} {\mathbb {R}}^N \end{array}\right. } \end{aligned}$$and let \(u(x_0)=0\) for some \(x_0 \in \Omega\). We want to prove that \(u \equiv 0\) in \(\Omega\). Let us proceed by contradiction, and assume there exists \(y \in \Omega\) such that \(u(y) >0\). Let us choose a ball \(B_R(y)\) such that

-

\(B_R(y) \subset \Omega\)

-

\(\exists\, x_1\in\partial B_R(y)\) such that \(u(x_1)=0\)

-

\(u(x)>0\) for all \(x\in\overline B_R(y)\backslash\left\{x_1\right\}\).

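Then, by the definition of viscosity supersolution, for fixed \(\rho >0\) and \(\varphi \in C^2(B_\rho (x_1))\) such that \(x_1\) is a minimum point of \(u-\varphi\), and for every \(\varepsilon >0\), there exists an orthonormal basis \(\{ \xi _1, \dots , \xi _N \}=\{ \xi _1(\varepsilon ), \dots , \xi _N(\varepsilon ) \}\) such that

$$\begin{aligned} \varepsilon \ge C_s \sum _{i=1}^N \left( \int _{0}^\rho \frac{\delta (\varphi , x_1, \tau \xi _i)}{\tau ^{1+2s}} \, d\tau +\int _{\rho }^{+\infty } \frac{\delta (u, x_1, \tau \xi _i)}{\tau ^{1+2s}} \, d\tau \right) . \end{aligned}\tag{4.13}$$Fix \(\rho < \frac{2R}{\sqrt{N}}\), and choose \(\varphi \equiv 0\) on \(B_\rho (x_1)\), which is an admissible test function since \(u \ge 0 = u(x_1)\). Moreover, since \(\sum _{i=1}^N \langle \xi _i, \frac{x_1-y}{\left| x_1-y\right| } \rangle ^2=1\), there exists \(j=j(\varepsilon )\) such that \(\left| \langle \xi _j, \frac{x_1-y}{\left| x_1-y\right| } \rangle \right| \ge \frac{1}{\sqrt{N}}\); replacing \(\xi _j\) with \(-\xi _j\) if necessary, which does not affect (4.13) since \(\delta (\cdot , x_1, \tau \xi )=\delta (\cdot , x_1, -\tau \xi )\), we may assume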

$$\begin{aligned} \langle \xi _j, \widehat{x_1- y} \rangle \ge \frac{1}{\sqrt{N}}, \quad {\text { with }} \widehat{x_1- y} =\frac{x_1-y}{\left| x_1-y\right| }. \end{aligned}$$In particular, one has \(\rho < 2R\langle \xi _j, \widehat{x_1- y} \rangle\). Then, taking into account that \(u(x_1)=0\) and \(u \ge 0\), from (4.13) one has

$$\begin{aligned} \varepsilon&\ge C_s \sum _{i=1}^N \int _{\rho }^{+\infty } \frac{u(x_1 + \tau \xi _i)+u(x_1-\tau \xi _i)}{ \tau ^{1+2s}} \, d\tau \\&= C_s \sum _{i \ne j} \int _{\rho }^{+\infty } \frac{u(x_1 + \tau \xi _i)+ u(x_1-\tau \xi _i) }{\tau ^{1+2s}} \, d\tau + C_s \int _{\rho }^{+\infty } \frac{u(x_1 + \tau \xi _j)+ u(x_1-\tau \xi _j)}{ \tau ^{1+2s}} \, d\tau \\&\ge C_s \int _{\rho }^{+\infty } \frac{u(x_1 - \tau \xi _j)}{\tau ^{1+2s}} \, d\tau \ge C_s \int _{\rho }^{2R\langle \xi _j, \widehat{x_1- y} \rangle } \frac{u(x_1 - \tau \xi _j)}{\tau ^{1+2s}} \, d\tau \\&\ge C_s \frac{1}{2s} \left( \rho ^{-2s} - \left( \frac{2R}{\sqrt{N}} \right) ^{-2s} \right) \min _{{\overline{B}}_R(y) {\setminus } B_\rho (x_1)} u, \end{aligned}$$as \(x_1-\tau \xi _j \in {\overline{B}}_R(y) {\setminus } B_\rho (x_1)\) if \(\rho<\tau < 2R\langle \xi _j, \widehat{x_1- y} \rangle\). Since u is lower semicontinuous and positive on the compact set \({\overline{B}}_R(y) {\setminus } B_\rho (x_1)\), the minimum on the right-hand side is positive and does not depend on \(\varepsilon\); hence we reach a contradiction if \(\varepsilon\) is small enough.

-

(iii)

The conclusion for the operators \({\mathcal {I}}_k^+\) follows from (ii), recalling that

$$\begin{aligned} {\mathcal {I}}_k ^+ u(x) \le 0\; \Rightarrow \; {\mathcal {I}}_N^- u(x)\le 0. \end{aligned}$$Indeed, since \({\mathcal {I}}_k ^+ u(x) \le 0\), one has \(\sum _{i=1}^k {\mathcal {I}}_{\xi _i} u(x) \le 0\) for any \(\{ \xi _1, \dots , \xi _k\} \in {\mathcal {V}}_k\). Fix any \(\{ {\bar{\xi }}_1, \dots , {\bar{\xi }}_N \} \in {\mathcal {V}}_N\), and denote by \({\mathcal {A}}_k\) the set of all subsets of cardinality k of \(\{ {\bar{\xi }}_1, \dots , {\bar{\xi }}_N \}\). Clearly, \({\mathcal {A}}_k \subset {\mathcal {V}}_k\). Since each \({\bar{\xi }}_i\) belongs to exactly \(\binom{N-1}{k-1}\) elements of \({\mathcal {A}}_k\), we get

$$\begin{aligned} 0 \ge \sum _{\{ \xi _i \} \in {\mathcal {A}}_k } \sum _{i=1}^k {\mathcal {I}}_{\xi _i} u(x) =\binom{N-1}{k-1} \sum _{i=1}^N {\mathcal {I}}_{{\bar{\xi }}_i} u(x), \end{aligned}$$from which \({\mathcal {I}}_N^- u(x)\le \sum _{i=1}^N {\mathcal {I}}_{{\bar{\xi }}_i} u(x)\le 0\) follows.

\(\square\)
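The counting step in (iii) is purely combinatorial: each \({\bar{\xi }}_i\) belongs to exactly \(\binom{N-1}{k-1}\) of the k-element subsets of \(\{{\bar{\xi }}_1,\dots ,{\bar{\xi }}_N\}\). The short Python check below uses arbitrary real numbers as stand-ins for the values \({\mathcal {I}}_{{\bar{\xi }}_i}u(x)\) (an illustrative assumption; only the combinatorics matters here).

```python
import itertools
import math
import random

def subset_sums(values, k):
    """Return (sum over k-subsets S of sum_{i in S} values[i], binom(N-1, k-1) * sum(values))."""
    lhs = sum(sum(subset) for subset in itertools.combinations(values, k))
    rhs = math.comb(len(values) - 1, k - 1) * sum(values)
    return lhs, rhs

rng = random.Random(0)
values = [rng.uniform(-1.0, 1.0) for _ in range(6)]   # stand-ins for I_{xi_i} u(x), N = 6
for k in range(1, 7):
    print(k, *subset_sums(values, k))
```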

Remark 4.5

Notice that the proofs above only require \(\Omega\) to be connected, and not necessarily bounded.

Remark 4.6

The same proof as in item (iii) shows that

and

Actually, the operators \({\mathcal {I}}_k^+\) satisfy a stronger condition than the strong minimum principle, which is also satisfied by the fractional Laplacian, and which turns out to be false for \({\mathcal {I}}_N^-\).

Proposition 4.7

One has

-

(i)

The operators \({\mathcal {I}}_k^+\), with \(k \le N\), satisfy the following

$$\begin{aligned} {\mathcal {I}}_k^+ u(x) \le 0 {\text { in }} \Omega , \quad u \ge 0 {\text { in }}{\mathbb {R}}^N \; \Rightarrow \; u > 0 {\text { in }} \Omega {\text { or }} u \equiv 0 {\text { in }} {\mathbb {R}}^N. \end{aligned}$$

-

(ii)

There exist functions u such that \({\mathcal {I}}_N^- u \le 0\) in \(\Omega\), \(u \equiv 0\) in \({\overline{\Omega }}\), and \(u \not \equiv 0\) in \({\mathbb {R}}^N {\setminus } {\overline{\Omega }}\) (here \(N\ge 2\)).

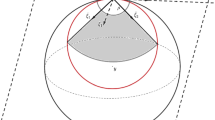

Fig. 2 Graphic representation of the function u in the proof of Proposition 4.7 (ii), with \(N=2\)

Proof

-

(i)

Take u satisfying the assumptions in (i), and assume there exists \(x_0 \in \Omega\) such that \(u(x_0)=0\). By the strong minimum principle in \(\Omega\), see Theorem 4.3 (iii), we know that \(u \equiv 0\) in \(\Omega\); moreover, \(u \ge 0\) in \({\mathbb {R}}^N\) by assumption. Choose any orthonormal basis \(\{ \xi _1, \dots , \xi _{N} \}\) of \({\mathbb {R}}^N\). Then, since \(u(x_0)=0\),

$$\begin{aligned} 0 \ge {\mathcal {I}}_k^+ u(x_0)&\ge \sum _{i=1}^k {\mathcal {I}}_{\xi _i} u(x_0) =C_s \sum _{i=1}^k \int _0^{+\infty } \frac{u(x_0 + \tau \xi _i) + u(x_0-\tau \xi _i)}{\tau ^{1+2s}} \, d\tau . \end{aligned}$$Since the integrands are nonnegative, we conclude that \(u \equiv 0\) on every line through \(x_0\) with direction \(\xi _i\), \(i=1, \dots , k\). Since the basis is arbitrary, the conclusion follows.

-

(ii)

Take

$$\begin{aligned} u(x)={\left\{ \begin{array}{ll} 0 &{}{\text { if there exists }} i =1, \dots , N {\text { such that }} \left| \langle x, e_i \rangle \right| \le 1\\ 1 &{}{\text { otherwise,}} \end{array}\right. } \end{aligned}$$see also Fig. 2, and notice that

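$$\begin{aligned} {\mathcal {I}}_N^- u (x)\le \sum _{i=1}^N {\mathcal {I}}_{e_i} u (x) =0 {\text { in }} B_1(0), \end{aligned}$$where \(\{e_i\}_{i=1}^N\) is the canonical basis; indeed, for \(x\in B_1(0)\) and any \(j\ne i\) (here \(N\ge 2\) is used), the coordinate \(\langle x+\tau e_i, e_j\rangle =\langle x, e_j\rangle\) has modulus less than 1 for every \(\tau \in {\mathbb {R}}\), so that u vanishes on the whole line \(x+{\mathbb {R}}e_i\). Moreover, \(u \equiv 0\) in \({\overline{B}}_1(0)\), while \(u \not \equiv 0\) in \({\mathbb {R}}^N {\setminus } {\overline{B}}_1(0)\). \(\square\)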
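The key point of the counterexample is geometric: for \(N\ge 2\), every line through a point of \(B_1(0)\) parallel to a coordinate axis stays in the region where \(u=0\), so all the integrals defining \({\mathcal {I}}_{e_i}u(x)\) vanish. The Python sketch below only samples points and line parameters (it is a sanity check, not a proof), and it also shows that the construction fails for \(N=1\). The helper `vanishes_on_line` is a hypothetical name.

```python
import random

def u(x):
    """Function from the proof of Proposition 4.7 (ii): 0 if |x_i| <= 1 for some i, 1 otherwise."""
    return 0.0 if any(abs(c) <= 1 for c in x) else 1.0

def vanishes_on_line(x, i, taus):
    """True if u(x + tau * e_i) = 0 for every sampled tau."""
    return all(u([c + (tau if j == i else 0.0) for j, c in enumerate(x)]) == 0.0 for tau in taus)

taus = [0.37 * m for m in range(-200, 201)]          # samples of the line parameter
rng = random.Random(0)
for N in (2, 3):
    x = [rng.uniform(-0.5, 0.5) for _ in range(N)]   # a point of B_1(0)
    print(N, all(vanishes_on_line(x, i, taus) for i in range(N)), u([2.0] * N))
print(1, vanishes_on_line([0.0], 0, taus))           # N = 1: the line leaves {u = 0}
```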

We now prove a Hopf-type lemma. We borrow some ideas from [14], where the fractional Laplacian is considered. The following known computation (see [8, end of Section 2.6]) provides a useful barrier function.

Lemma 4.8

For any \(\xi \in {\mathcal {S}}^{N-1}\) one has

where

is the Beta function. In particular,

For the sake of completeness, we sketch the proof.

Sketch of the proof. Let \(v(x)={(R^2-|x|^2)}^s_+\), and define \(u : {\mathbb {R}}\rightarrow {\mathbb {R}}\) by \(u(t)={(1-t^2)}^s_+\). Notice that for \(x\in B_R(0)\)

Now, one performs the change of variable

to get

The last equality follows from equation (2.43) in [8], see also [13], and the fact that \(\frac{\left| \langle x, \xi \rangle \right| }{\sqrt{R^2- |x|^2+\langle x, \xi \rangle ^2}}<1\). \(\square\)
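The statement of Lemma 4.8 can be probed numerically. The Python sketch below reuses the quadrature idea for \({\mathcal {I}}_\xi\) (helper names are ours) and rests on one assumption: \(C_s\) is the standard constant \(C_{1,s}=s4^s\Gamma (\frac{1}{2}+s)/(\sqrt{\pi }\,\Gamma (1-s))\). With this normalization, the change of variable in the sketch above reduces \({\mathcal {I}}_\xi v(x)\) to the one-dimensional value \(-\Gamma (1+2s)\) for \((1-t^2)_+^s\). This value can also be written as \(-C_s\Gamma (s)\Gamma (1-s)=-C_s\beta (s,1-s)\). For \(N=2\), \(R=3/2\) and a few points \(x\in B_R(0)\) and directions \(\xi\), the code checks that the computed values agree with this constant, i.e. that they depend neither on x nor on \(\xi\).

```python
import math

def c_s(s):
    """Assumed normalization C_s = C_{1,s} = s 4^s Gamma(1/2+s) / (sqrt(pi) Gamma(1-s))."""
    return s * 4**s * math.gamma(0.5 + s) / (math.sqrt(math.pi) * math.gamma(1 - s))

def delta(u, x, y):
    """delta(u, x, y) = u(x+y) + u(x-y) - 2u(x), for points given as lists."""
    return u([a + b for a, b in zip(x, y)]) + u([a - b for a, b in zip(x, y)]) - 2 * u(x)

def i_xi(u, x, xi, s, T, n=20000, tau0=1e-3):
    """Approximate I_xi u(x); assumes u is C^2 near x and u(x +- tau*xi) = 0 for tau > T."""
    p = 1.0 / (1.0 - s)                                  # substitution tau = t**p
    d2 = delta(u, x, [tau0 * c for c in xi]) / tau0**2   # (0, tau0): delta ~ d2 * tau^2
    near = d2 * tau0 ** (2 - 2 * s) / (2 - 2 * s)
    t0, t1 = tau0 ** (1 / p), T ** (1 / p)
    h = (t1 - t0) / n
    mid = 0.0
    for j in range(n):                                   # midpoint rule on (tau0, T) in t
        t = t0 + (j + 0.5) * h
        tau = t**p
        mid += delta(u, x, [tau * c for c in xi]) * p * t ** (p - 1) / tau ** (1 + 2 * s)
    tail = -u(x) * T ** (-2 * s) / s                     # (T, inf): delta = -2u(x)
    return c_s(s) * (near + h * mid + tail)

R = 1.5                                                  # radius of the ball (illustrative)
cases = [([0.0, 0.0], 0.0), ([0.5, -0.7], 0.4), ([1.2, 0.3], 2.0)]   # points x in B_R(0), angles of xi
for s in (0.3, 0.6):
    v = lambda y, s=s: max(0.0, R**2 - y[0] ** 2 - y[1] ** 2) ** s
    vals = [i_xi(v, x, [math.cos(th), math.sin(th)], s, T=2 * R) for x, th in cases]
    print(s, vals, -math.gamma(1 + 2 * s), -c_s(s) * math.gamma(s) * math.gamma(1 - s))
```

The window \(T=2R\) is safe because \(|x|<R\), so \(x\pm \tau \xi\) leaves \(B_R(0)\) for \(\tau >2R\).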

Proposition 4.9

Let \(\Omega\) be a bounded \(C^2\) domain, and let u satisfy

Assume \(u \not \equiv 0\) in \(\Omega\). Then, there exists a positive constant \(c=c(\Omega , u)\) such that

Notice that the conclusion fails for the operators \({\mathcal {I}}_k^-\), \(k < N\). Indeed, consider the function

and take \(\{ \xi _i \} \in {\mathcal {V}}_k\) such that \(\langle x, \xi _i \rangle =0\) for any \(i=1, \dots , k\). Hence,

and using the radial monotonicity of u

However, u clearly does not satisfy

for any positive constants \(c, \gamma\).

As a consequence of Proposition 4.9, we immediately obtain the following

Corollary 4.10

Let \(\Omega\) be a bounded \(C^2\) domain, and let u satisfy

Assume \(u \not \equiv 0\) in \(\Omega\). Then,

for some positive constant \(c=c(\Omega , u)\).

Remark 4.11

We also point out that the strong maximum/minimum principle for the operators \({\mathcal {I}}_k^+\) and \({\mathcal {I}}_N^-\) could be deduced from Proposition 4.9; however, it also follows from the more direct argument given in Theorem 4.3.

Proof of Proposition 4.9

By the weak and strong minimum principles, see Theorem 4.1 and Theorem 4.3-(ii), \(u>0\) in \(\Omega\). Therefore, for any compact set \(K\subset \Omega\) we have

Without loss of generality, we can further assume that u vanishes somewhere on \(\partial \Omega\); otherwise the conclusion is immediate.

Since \(\Omega\) is a \(C^2\) domain, there exists a positive constant \(\varepsilon\), depending on \(\Omega\), such that for any \(x \in \Omega _\varepsilon =\{ x \in \Omega : d(x) < \varepsilon \}\) there exist a unique \(z \in \partial \Omega\) for which \(d(x)=\left| x-z\right|\) and a ball \(B_{2\varepsilon }({\bar{y}}) \subset \Omega\) such that \(\overline{B_{2\varepsilon }({\bar{y}})} \cap ({\mathbb {R}}^N {\setminus } \Omega )=\{ z \}\).

Now we consider the radial function \(w(x)={((2\varepsilon )^2-\left| x- {\bar{y}}\right| ^2)}^s_+\) which satisfies, see Lemma 4.8, the equation

We claim that there exists \({\bar{n}}= {\bar{n}}(u, \varepsilon )\) such that

where

This implies (4.14). Indeed, for any \(x\in \Omega _\varepsilon\)

and

From (4.16)-(4.17) we obtain (4.14) with \(c=\min \left\{ \frac{2\varepsilon }{{\bar{n}}} ,\min _{y\in \Omega \backslash \Omega _\varepsilon }\frac{u(y)}{d(y)^s}\right\}\).

We proceed by contradiction in order to prove the claim; hence, we suppose that for any \(n \in {\mathbb {N}}\)

is USC and positive somewhere. From now on, for simplicity of notation, we assume that \(B_{2\varepsilon }({\bar{y}}) =B_1(0)\). Since

we know that it attains its positive maximum at some point \(x_n \in B_1(0) \subset \Omega\). One has

Also, \(w_n \rightarrow 0\) uniformly in \({\mathbb {R}}^N\), thus

Therefore, recalling (4.15), \(\left| x_n\right| \rightarrow 1\) as \(n \rightarrow \infty\), hence in particular \(x_n \in B_1(0) {\setminus } B_{r_0}(0)\), where \(r_0=\sqrt{1 - \frac{1}{2N}}\), and \(d(x_n) < (1-r_0)/2\) for n large enough.

Since \({\mathcal {I}}_N^- u \le 0\) in \(\Omega\), we know that for every test function \(\varphi \in C^2(B_\rho (x_n))\) such that \(x_n\) is a minimum point of \(u-\varphi\), one has

and in particular for any \(n \in {\mathbb {N}}\) there exists an orthonormal basis \(\{ \xi _1(n), \dots , \xi _N(n) \}\) of \({\mathbb {R}}^N\) such that

Since \(\{ \xi _1(n), \dots , \xi _N(n) \}\) is an orthonormal basis of \({\mathbb {R}}^N\), we have \(\sum _{i=1}^N \langle {\hat{x}}_n, \xi _i(n) \rangle ^2=1\), so there exists at least one \(\xi _i(n)\) such that \(\left| \langle {\hat{x}}_n, \xi _i(n) \rangle \right| \ge \frac{1}{\sqrt{N}}\); replacing \(\xi _i(n)\) by \(-\xi _i(n)\) if necessary (the integrals in (4.19) are invariant under \(\xi \mapsto -\xi\)), we may assume \(\langle {\hat{x}}_n, \xi _i(n) \rangle \ge \frac{1}{\sqrt{N}}\). Without loss of generality, we can suppose that \(\xi _i(n)=\xi _1(n)\). Let us choose \(\rho = d(x_n) < (1- r_0)/2\), and \(\varphi (x)=w_n (x) \in C^2(B_\rho (x_n))\) as test function.
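The choice of \(\xi _1(n)\) only uses the fact that, for an orthonormal basis, the squared components of the unit vector \({\hat{x}}_n\) sum to 1, so the largest one is at least \(1/N\). The following Python sketch checks this numerically on random orthonormal bases; the helper names are illustrative only and are not taken from the paper.

```python
import math
import random


def random_orthonormal_basis(n, rng):
    # Modified Gram-Schmidt applied to Gaussian vectors (independent with probability 1).
    basis = []
    while len(basis) < n:
        v = [rng.gauss(0.0, 1.0) for _ in range(n)]
        for b in basis:
            proj = sum(vi * bi for vi, bi in zip(v, b))
            v = [vi - proj * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-8:
            basis.append([c / norm for c in v])
    return basis


def aligned_direction(x_hat, basis):
    # Return the basis vector with the largest |<x_hat, xi>|, with its sign flipped
    # if needed so that the inner product is nonnegative, and that inner product.
    dots = [sum(p * q for p, q in zip(x_hat, b)) for b in basis]
    i = max(range(len(basis)), key=lambda j: abs(dots[j]))
    sign = 1.0 if dots[i] >= 0 else -1.0
    return [sign * c for c in basis[i]], abs(dots[i])


# Usage: over random trials, (best inner product) * sqrt(N) never drops below 1.
rng = random.Random(0)
worst = float("inf")
for n in range(1, 8):
    for _ in range(200):
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        norm_g = math.sqrt(sum(c * c for c in g))
        x_hat = [c / norm_g for c in g]
        _, dot = aligned_direction(x_hat, random_orthonormal_basis(n, rng))
        worst = min(worst, dot * math.sqrt(n))
```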

We consider the left-hand side of (4.19), and we aim at providing a positive lower bound independent of n, which will give the desired contradiction. Let us start with the second integral in (4.19) for each fixed \(i=2, \dots , N\), and notice that, since \(x_n\) is a maximum point for \(v_n\),

On the other hand, in order to estimate the integral for \(i=1\), we split it as follows:

where

and

with

and

Notice that if \(\tau \in [\tau _1(n), \tau _2(n)]\) then \(x_n - \tau \xi _1(n) \in B_{r_0}(0)\), as

see also Fig. 3. Also, for n large we can assume \(\rho =d(x_n)< \tau _1(n) < \tau _2(n)\), since as \(n\rightarrow +\infty\), \(d(x_n)\rightarrow 0\), \(\tau _1(n)\rightarrow \frac{1}{\sqrt{N}}\left( 1-\frac{1}{\sqrt{2}}\right)\) and \(\tau _2(n)\rightarrow \frac{1}{\sqrt{N}}\left( 1+\frac{1}{\sqrt{2}}\right)\).
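The geometric fact behind this splitting can be tested numerically. Since \(\tau _1(n)\), \(\tau _2(n)\) are defined in a display not reproduced here, the Python sketch below uses a hypothetical choice: the roots of \(\tau ^2-2\tau \left| x_n\right| /\sqrt{N}+\left| x_n\right| ^2-r_0^2=0\), which reproduce the limits stated above as \(\left| x_n\right| \rightarrow 1\). Because \(\langle x_n,\xi _1(n)\rangle \ge \left| x_n\right| /\sqrt{N}\) and \(\tau \ge 0\), one has \(\left| x_n-\tau \xi _1(n)\right| ^2\le \left| x_n\right| ^2-2\tau \left| x_n\right| /\sqrt{N}+\tau ^2\le r_0^2\) between these roots.

```python
import math
import random


def tau_interval(x_norm, n_dim):
    # Hypothetical endpoints tau_1 <= tau_2: roots of
    #   tau^2 - 2 tau |x| / sqrt(N) + |x|^2 - r0^2 = 0,  with r0^2 = 1 - 1/(2N).
    r0_sq = 1.0 - 1.0 / (2.0 * n_dim)
    a = x_norm / math.sqrt(n_dim)
    root = math.sqrt(a * a - x_norm * x_norm + r0_sq)  # >= 1/sqrt(2N) when |x| <= 1
    return a - root, a + root


def unit(v):
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def max_norm_on_segment(n_dim, rng, samples=50):
    # Draw x with r0 < |x| < 1 and a unit xi with <x_hat, xi> >= 1/sqrt(N)
    # (rejection sampling), then return max |x - tau xi| / r0 over [tau_1, tau_2].
    r0 = math.sqrt(1.0 - 1.0 / (2.0 * n_dim))
    x_hat = unit([rng.gauss(0.0, 1.0) for _ in range(n_dim)])
    radius = rng.uniform(r0, 1.0)
    x = [radius * c for c in x_hat]
    while True:
        xi = unit([rng.gauss(0.0, 1.0) for _ in range(n_dim)])
        if sum(p * q for p, q in zip(x_hat, xi)) >= 1.0 / math.sqrt(n_dim):
            break
    lo, hi = tau_interval(radius, n_dim)
    worst = 0.0
    for j in range(samples + 1):
        tau = lo + (hi - lo) * j / samples
        p = [xc - tau * c for xc, c in zip(x, xi)]
        worst = max(worst, math.sqrt(sum(c * c for c in p)) / r0)
    return worst


rng = random.Random(1)
ratio = max(max_norm_on_segment(n, rng) for n in range(2, 7) for _ in range(100))
limit_lo, limit_hi = tau_interval(1.0, 3)  # the case |x| = 1, N = 3
```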

Integrals \(J_1\) and \(J_3\) can be estimated once again as above, exploiting the inequality

In order to estimate \(J_2\), we now use the fact that \(u(x_n - \tau \xi _1 (n))\ge \min _{{\overline{B}}_{r_0}} u >0\) for \(\tau \in [\tau _1(n), \tau _2(n)]\). We obtain

Now, putting estimates above together and recalling (4.19), one has

Notice that, as \(n\rightarrow +\infty\)

and that by Lemma 4.8

Thus, by taking the limit \(n \rightarrow +\infty\) in (4.21) and using (4.18) we get the contradiction

\(\square\)

5 Stability and the Perron method

We now give some stability results which will be crucial for our purposes. They have been treated in a very general context in [2, 3], see also [1]; here we give a simplified proof with full details for the operators \({\mathcal {I}}_k^\pm\).

For the local counterparts, we refer to [11]. Let us set

and

Lemma 5.1

Let \(u_n \in USC(\Omega )\) (respectively, \(LSC(\Omega )\)) be a sequence of subsolutions (supersolutions) of

where \(f_n\) are locally uniformly bounded functions, and \(u_n \le 0\) (\(u_n \ge 0\)) in \({\mathbb {R}}^N {\setminus } \Omega\). We assume that there exists \(M>0\) such that for any \(n \in {\mathbb {N}}\)

Then \({\overline{u}}:= {\limsup }^* u_n\) (resp. \({\underline{u}} :={\liminf }_* u_n\)) is a subsolution (resp. supersolution) of

such that \(\overline u \le 0\) (resp. \(\underline u \ge 0\)) in \({\mathbb {R}}^N {\setminus } {\overline{\Omega }}\), where \({\underline{f}}=\liminf _* f_n\) (resp. \({\overline{f}}=\limsup ^* f_n\)).
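For the reader's convenience, we recall the standard half-relaxed limits and semicontinuous envelopes of viscosity theory, which we assume are the notions used in Lemmas 5.1 and 5.3 (\(B_r(x)\) denotes the open ball):

```latex
\limsup{}^{*} u_n(x) = \lim_{j\to\infty} \sup\Big\{ u_n(y) : n \ge j,\ |y-x| \le \tfrac{1}{j} \Big\},
\qquad
\liminf{}_{*} u_n(x) = \lim_{j\to\infty} \inf\Big\{ u_n(y) : n \ge j,\ |y-x| \le \tfrac{1}{j} \Big\},
\\
u^{*}(x) = \lim_{r\to 0^+} \sup_{B_r(x)} u,
\qquad
u_{*}(x) = \lim_{r\to 0^+} \inf_{B_r(x)} u .
```

The function \(\limsup ^* u_n\) is upper semicontinuous and \(\liminf _* u_n\) is lower semicontinuous; for a single function, \(u^*\) is the smallest upper semicontinuous function above u and \(u_*\) the largest lower semicontinuous function below u.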

Remark 5.2

Notice that in general we cannot guarantee that the limit solution \({\overline{u}}\) is \(\le 0\) also on the boundary of the domain \(\Omega\). However, in our next results, we will always be able to avoid this difficulty, by comparing the limit solution with the distance function to the boundary, see also Lemma 6.5.

Proof

Let us only consider \({\mathcal {I}}_k^+\), since the case of \({\mathcal {I}}_k^-\) is analogous. Let us fix \(x_0 \in \Omega\), and let us choose \(\Phi \in C^2(B_\rho (x_0))\) such that \(\Phi (x_0)={\overline{u}} (x_0)\), and \(\Phi > {\overline{u}}\) in \(B_\rho (x_0) {\setminus } \{ x_0 \}\). We can choose \(x_n \rightarrow x_0\) such that, up to a subsequence, \(u_n-\Phi\) attains its maximum over \({\overline{B}}_{\rho /2}(x_0)\) at \(x_n\), and \({\overline{u}}(x_0)=\lim _n u_n(x_n)\). Since \(u_n\) are subsolutions, there exist \(\{ \xi _i(n) \} \in {\mathcal {V}}_k\) such that

Up to extracting a further subsequence, we can assume \(\xi _i(n) \rightarrow {\bar{\xi }}_i\) as \(n \rightarrow \infty\). Then, recalling \(\Phi \in C^2(B_\rho (x_0))\),

On the other hand, by applying Fatou's lemma and using hypothesis (5.2),

Thus, recalling (5.3), passing to the limit, and also using that \(\Phi \ge {\overline{u}}\) in \(B_\rho (x_0)\),

which implies the conclusion. \(\square\)

Analogously one proves

Lemma 5.3

Let \((u_\alpha )_\alpha \subseteq USC(\Omega )\) (respectively, \(LSC(\Omega )\)) be a family of subsolutions (supersolutions) of

such that \(u_\alpha \le 0\) (\(u_\alpha \ge 0\)) in \({\mathbb {R}}^N {\setminus } \Omega\), and there exists \(M>0\) such that for any \(\alpha\)

where \(f_\alpha\) are uniformly bounded. Set \(u=\sup _\alpha u_\alpha\) (resp. \(v=\inf _{\alpha } u_\alpha\)). Then \(u^*\) (resp. \(v_*\)) is a subsolution (resp. supersolution) of

such that \(u \le 0\) (resp. \(v \ge 0\)) in \({\mathbb {R}}^N {\setminus } \Omega\), where \(f=(\inf _\alpha f_\alpha )_*\) (resp. \(f=(\sup _\alpha f_\alpha )^*\)).

As a consequence, we get the following analog of the Perron method.

Lemma 5.4

Let \({\underline{u}}\) and \({\overline{u}}\) in \(C({\mathbb {R}}^N)\) be, respectively, sub- and supersolutions of

such that \({\underline{u}}= {\overline{u}}=0\) in \({\mathbb {R}}^N {\setminus } \Omega\). Then there exists a solution \(v \in C({\mathbb {R}}^N)\) to (5.4) such that \({\underline{u}} \le v \le {\overline{u}}\), and \(v=0\) in \({\mathbb {R}}^N {\setminus } \Omega\).

Proof

In what follows we only consider the case \({\mathcal {I}}_k^+\); similar considerations hold for \({\mathcal {I}}_k^-\). Let

Notice that \(v \in L^\infty ({\mathbb {R}}^N)\) as

which also implies \(v=0\) in \({\mathbb {R}}^N {\setminus } \Omega\). We know by Lemma 5.3 that \(v^*\) is a subsolution to (5.4), thus \(v^* \le v\) by maximality of v and \(v=v^*\). We claim that \(v_*\) is a supersolution to (5.4). If the claim is true, then by the comparison principle Theorem 4.1 we conclude \(v^* \le v_*\), and since the other inequality trivially holds, then \(v=v_*=v^* \in C({\mathbb {R}}^N)\) is a solution to (5.4) such that \(v=0\) in \({\mathbb {R}}^N {\setminus } \Omega\).

We now prove the claim. Let us assume by contradiction that \(v_*\) is not a supersolution. Then, there exists \(x_0 \in \Omega\), \(\rho >0\) and \(\Phi \in C^2(\overline{B_\rho (x_0)})\) such that \(\Phi (x_0)=v_*(x_0)\), \(\Phi < v_*\) in \(\overline{B_\rho (x_0)} {\setminus } \{ x_0 \}\), and

where \(\Psi \in LSC({\mathbb {R}}^N) \cap L^\infty ({\mathbb {R}}^N) \cap C^2(B_\rho (x_0))\) is defined as

By Proposition 3.1, there exist \(r < \rho /2\) and \(\varepsilon _0 >0\) such that

for any \(x \in B_r(x_0)\). Moreover, for any \(\eta >0\) let

Then,

for any \(\eta < \eta _1=\varepsilon _0 C_s^{-1} \frac{s}{k} \left( \frac{\rho }{2} \right) ^{2s}\) and for any \(x \in B_r(x_0)\). Indeed, notice that \(\Psi _{\eta } = \Psi + \eta \chi _{{\overline{B}}_\rho (x_0)}\), where \(\chi _A\) is the characteristic function of the set A, and that for any \(\left| \xi \right| =1\) and \(x \in B_r (x_0)\), \(x \pm \tau \xi \in B_\rho (x_0)\) if \(\tau < \rho -r\). Thus, by direct computations

Thus,

by using (5.6).
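The direct computation behind the threshold \(\eta _1\) can be spelled out as follows. This is a sketch assuming, as the computation in the proof of Proposition 6.3(i) indicates, that \({\mathcal {I}}_\xi u(x)=C_s\int _0^{+\infty }\delta (u,x,\tau \xi )\,\tau ^{-(1+2s)}\,d\tau\) with \(\delta (u,x,\tau \xi )=u(x+\tau \xi )+u(x-\tau \xi )-2u(x)\); here \(\chi =\chi _{{\overline{B}}_\rho (x_0)}\), \(x\in B_r(x_0)\), \(\left| \xi \right| =1\) and \(r<\rho /2\).

```latex
\delta(\chi, x, \tau\xi) = \chi(x+\tau\xi) + \chi(x-\tau\xi) - 2\chi(x)
  \;\begin{cases} = 0 & \text{if } 0 < \tau < \rho - r, \\ \ge -2 & \text{if } \tau \ge \rho - r, \end{cases}
\\
\mathcal{I}_\xi \Psi_\eta(x)
  = \mathcal{I}_\xi \Psi(x) + \eta\, C_s \int_0^{+\infty} \delta(\chi, x, \tau\xi)\, \tau^{-(1+2s)}\, d\tau
  \ge \mathcal{I}_\xi \Psi(x) - 2\eta\, C_s \int_{\rho - r}^{+\infty} \tau^{-(1+2s)}\, d\tau
  = \mathcal{I}_\xi \Psi(x) - \frac{\eta\, C_s}{s}\, (\rho - r)^{-2s}
  > \mathcal{I}_\xi \Psi(x) - \frac{\eta\, C_s}{s} \Big(\frac{\rho}{2}\Big)^{-2s},
\\
\mathcal{I}_k^{\pm} \Psi_\eta(x)
  \ge \mathcal{I}_k^{\pm} \Psi(x) - \frac{k\, \eta\, C_s}{s} \Big(\frac{\rho}{2}\Big)^{-2s}
  > \mathcal{I}_k^{\pm} \Psi(x) - \varepsilon_0
  \quad \text{whenever } \eta < \eta_1 = \varepsilon_0\, C_s^{-1}\, \frac{s}{k} \Big(\frac{\rho}{2}\Big)^{2s}.
```

The strict inequality in the second line uses \(\rho -r>\rho /2\); the last line follows by summing over any \(\{ \xi _i \} \in {\mathcal {V}}_k\) and taking the supremum (or infimum).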

Let us take

so that \(v_* > \Phi +\eta\) in \({\overline{B}}_{\rho }(x_0) {\setminus } B_{r/2}(x_0)\) for any \(\eta < \eta _2\).

Consider

Define

In particular, \(w(x) \ge \Psi _{\eta _0}(x)\) for all x.

Let us prove that w is a subsolution. Let us fix \({\bar{x}} \in \Omega\), and let us choose \(\varphi \in C^2(B_\varepsilon ({\bar{x}}))\) such that \(w({\bar{x}})=\varphi ({\bar{x}})\), and \(w(x) \le \varphi (x)\) in \(B_\varepsilon ({\bar{x}})\).

If \(w({\bar{x}} )=v({\bar{x}})\), then \(\varphi\) is a test function for v, and we exploit the fact that v is a subsolution. If \(w({\bar{x}})=\Phi ({\bar{x}})+ \eta _0> v({\bar{x}})\), then in particular \({\bar{x}} \in B_{r/2}(x_0)\). Set

One has

Also, \(\theta (x)\ge \Psi _{\eta _0}(x)\) for any x. Indeed, if \(x \in B_\varepsilon ({\bar{x}})\), then \(\theta (x) =\varphi (x) \ge w(x) \ge \Psi _{\eta _0}(x)\), whereas if \(x \not \in B_\varepsilon ({\bar{x}})\), then \(\theta (x)=w(x) \ge \Psi _{\eta _0}(x)\). Therefore,

by (5.7).

Hence, w is a subsolution, and this yields a contradiction. Indeed, there exists a sequence \(x_n \rightarrow x_0\) such that \(\lim _{n\rightarrow \infty } v(x_n)=v_*(x_0)\), and one has

Thus, \(w(x) > v(x)\) for some x. Finally, we notice that \(w \le {\overline{u}}\) by comparison, and as a consequence \(w \le v\) by maximality of v, a contradiction. \(\square\)

We now prove existence and uniqueness of the solution to the Dirichlet problem in uniformly convex domains

The proof is based on the stability properties above.

Theorem 5.5

Let f be a bounded continuous function, and let \(\Omega\) be a uniformly convex domain. Then there exists a unique function \(u \in C({\mathbb {R}}^N)\) such that

Proof

Exploiting the barrier functions in Lemma 4.8, we build suitable sub- and supersolutions. Indeed, for any \(y \in Y\) one considers the function

which for \(M=M(k, s)\) big enough satisfies

We now take

which is a supersolution to (5.8). To prove this, we first note that \(0 \le v(x) \le M R^{2s}\), hence v is bounded. Moreover, notice that \(v \in C^{0, s}({\mathbb {R}}^N)\). Indeed, for any \(x, y \in {\overline{\Omega }}\), one has

Moreover, \(v = 0\) in \({\mathbb {R}}^N {\setminus } \Omega\). Indeed, if \(x \not \in \Omega\), there exists \(y=y(x)\) such that \(x \not \in B_R(y)\) which implies

The infimum in definition (5.9) is attained: given \(x_0 \in \Omega\), we can choose \(y_0 \in Y\) and \(z_0 \in \partial B_R(y_0)\) such that

Therefore, as \(B_\eta (x_0) \subseteq \Omega \subseteq B_R(y)\) for any \(y \in Y\),

and as a consequence \(v(x_0)=v_{y_0}(x_0)\). In particular,

which yields

Analogously, we take the supremum of the subsolutions

Notice that

for a sufficiently big constant M.

We now exploit the Perron method, applying Lemma 5.4, to get a solution to (5.8). Uniqueness follows from Theorem 4.1. \(\square\)
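The bound \(v \in C^{0,s}\) in the proof above rests on two elementary facts: \(\left| a^s-b^s\right| \le \left| a-b\right| ^s\) for \(a,b\ge 0\), and \(\big| \left| x-y\right| ^2-\left| x'-y\right| ^2\big| \le 2R\left| x-x'\right|\) for \(x,x'\in {\overline{\Omega }}\subseteq \overline{B_R(y)}\). Since an infimum of functions sharing a Hölder constant keeps that constant, v is s-Hölder in \({\overline{\Omega }}\) with constant \(M(2R)^s\). The Python sketch below checks this numerically, assuming (hypothetically) that \(v_y(x)=M(R^2-\left| x-y\right| ^2)^s_+\) and replacing Y by a finite set of four centres in the plane, so that the toy domain is an intersection of four unit disks.

```python
import math
import random


def barrier(x, y, R, M, s):
    # Hypothetical barrier v_y(x) = M * (R^2 - |x - y|^2)_+^s centred at y.
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return M * max(R * R - d2, 0.0) ** s


def inf_barrier(x, centers, R, M, s):
    # v(x) = min over a finite, hypothetical family Y of centres.
    return min(barrier(x, y, R, M, s) for y in centers)


def inside_all(x, centers, R):
    # Toy uniformly convex domain: intersection of the closed balls B_R(y), y in Y.
    return all(sum((a - b) ** 2 for a, b in zip(x, y)) <= R * R for y in centers)


def worst_holder_ratio(centers, R, M, s, pairs, seed=0):
    # Largest observed |v(x) - v(x')| / (M (2R)^s |x - x'|^s) over random pairs.
    rng = random.Random(seed)
    pts = []
    while len(pts) < 2 * pairs:
        p = (rng.uniform(-R, R), rng.uniform(-R, R))
        if inside_all(p, centers, R):
            pts.append(p)
    worst = 0.0
    for i in range(pairs):
        x, xp = pts[2 * i], pts[2 * i + 1]
        dist = math.dist(x, xp)
        if dist == 0.0:
            continue
        diff = abs(inf_barrier(x, centers, R, M, s) - inf_barrier(xp, centers, R, M, s))
        worst = max(worst, diff / (M * (2 * R) ** s * dist ** s))
    return worst


centers = [(0.3, 0.0), (-0.3, 0.0), (0.0, 0.3), (0.0, -0.3)]
ratio = worst_holder_ratio(centers, R=1.0, M=2.0, s=0.4, pairs=2000)
```

The bound is not sharp, so the observed ratio is typically well below 1.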

6 Maximum principles and principal eigenvalues

We now define the following generalized principal eigenvalues, adapting the classical definition of [4]:

Also let us set

Remark 6.1

In this section, we only consider the operators \({\mathcal {I}}^\pm _k(\cdot )+\mu \cdot\); however, one can also treat operators with a zero-order term such as \({\mathcal {I}}^\pm _k(\cdot )+c(x) \cdot + \mu \cdot\), up to some technicalities.

Theorem 6.2

The operators \({\mathcal {I}}^\pm _k(\cdot )+\mu \cdot\) satisfy the maximum principle for \(\mu <{\bar{\mu }}^\pm _k\).

Proof

We consider \({\mathcal {I}}^+_k\), the other case being analogous. Let \(\mu <{\bar{\mu }}^+_k\) and let \(u\in USC({\overline{\Omega }})\cap L^\infty ({\mathbb {R}}^N)\) be a solution of

By contradiction, we suppose that \(u(x_0)>0\) for some \(x_0\in \Omega\). In view of Theorem 4.1 we have \(\mu >0\). By the definition of \({\bar{\mu }}^+_k\) there exists \(\eta \in (\mu ,{\bar{\mu }}^+_k)\) and a nonnegative bounded function \(v\in LSC(\Omega )\) such that

Set \(\gamma =\sup _\Omega \frac{u}{v}\). Then,

and for any \(\varepsilon \in (0,\gamma )\) there exists \(z_\varepsilon \in \Omega\) such that

From this, we infer that there exists \(x_\varepsilon \in \Omega\) such that

For \(n\in \mathbb {N}\) let \(x_n=x_n(\varepsilon ), y_n=y_n(\varepsilon )\in {\overline{\Omega }}\) be such that

Arguing as in the proof of Theorem 4.1 we find that, for n sufficiently large,

Moreover, up to extracting a subsequence, we may further assume that \((x_n,y_n)\rightarrow ({\bar{x}},{\bar{x}})\), with \({\bar{x}}\in \Omega\). Using \(\varphi _n(x)=u(x_n) + n|x-y_n|^2- n\left| x_n-y_n\right| ^2\) as a test function for u at \(x_n\), testing v at \(y_n\) with \(\phi _n(y)=(\gamma -\varepsilon )v(y_n) -n|x_n-y|^2 + n\left| x_n-y_n\right| ^2\), and subtracting the corresponding inequalities (see also the proof of Theorem 4.1), we obtain

By (6.1)-(6.2) it follows that \(\delta (u, x_n, \tau \xi _i)- \delta ((\gamma -\varepsilon ) v, y_n, \tau \xi _i)\le 0\). Hence,

Letting \(\rho \rightarrow 0\)

Then, as \(n\rightarrow +\infty\)

Since v and \(\gamma\) are positive and \(\varepsilon\) can be chosen arbitrarily small, we reach the contradiction

\(\square\)

Proposition 6.3

One has

(i) \({\bar{\mu }}_k^-=\mu _k^-=+\infty\) for any \(k < N\).

(ii) If \(B_{R_1} \subseteq \Omega \subseteq B_{R_2}\), then

$$\begin{aligned}\frac{c_2}{R_2^{2s}} \le {\bar{\mu }}_1^+ \le \cdots \le {\bar{\mu }}_N^+\le {\bar{\mu }}_N^- \le \frac{c_1}{R_1^{2s}} < +\infty , \end{aligned}$$where \(c_1, c_2\) are positive constants depending on s.

Proof

(i) Let \(w(x)=e^{-\alpha \left| x\right| ^2} > 0\) for \(\alpha >0\) and fix any \(\mu >0\). Since

$$\begin{aligned} \int _0^{+\infty } (1-e^{-\alpha \tau ^2}) \tau ^{-(1+2s)} \, d\tau = \alpha ^s \int _0^{+\infty } (1-e^{- \tau ^2}) \tau ^{-(1+2s)} \, d\tau , \end{aligned}$$using Theorem 3.4 in [7] (see also Remark 3.5) we obtain

$$\begin{aligned} {\mathcal {I}}_k^- w + \mu w&= k {\mathcal {I}}_{x^\perp } w + \mu w \\&= - 2k C_s e^{-\alpha \left| x\right| ^2} \int _0^{+\infty } (1-e^{-\alpha \tau ^2}) \tau ^{-(1+2s)} \, d\tau + \mu e^{-\alpha \left| x\right| ^2} = 0 \end{aligned}$$if

$$\begin{aligned} \alpha ^s=\frac{\mu }{2k C_s \int _0^{+\infty } (1-e^{-\tau ^2}) \tau ^{-(1+2s)} \, d\tau }, \end{aligned}$$where \(x^\perp\) is a unit vector such that \(\langle x, x^\perp \rangle =0\).

(ii) We first note that in the definitions of \({\bar{\mu }}^\pm _k\) it is not restrictive to suppose \(\mu \ge 0\) (since the constant function \(v=1\) is a positive solution of \({\mathcal {I}}^\pm _kv=0\)). Moreover if \(\mu \ge 0\) and v is a nonnegative supersolution of the equation

$$\begin{aligned} {\mathcal {I}}^+_kv+\mu v=0 \quad {\text {in }} \Omega , \end{aligned}$$then \({\mathcal {I}}^+_kv\le 0\) in \(\Omega\) and using Remark 4.6 we have

$$\begin{aligned} {\mathcal {I}}^+_{k+1}v+\mu v\le 0 \quad {\text { in }}\Omega . \end{aligned}$$This leads to \({\bar{\mu }}^+_k\le {\bar{\mu }}^+_{k+1}\) for any \(k=1,\dots ,N-1\). If \(k=N\), using the inequality \({\mathcal {I}}^-_N\le {\mathcal {I}}^+_N\) we immediately obtain that \({\bar{\mu }}^+_N\le {\bar{\mu }}^-_N\).

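Also, since \(v_R(x)=v(x/R)\) satisfies \({\mathcal {I}}_\xi v_R(x)=R^{-2s}({\mathcal {I}}_\xi v)(x/R)\) for every unit vector \(\xi\) (change variables \(\tau =R\sigma\) in the integral defining \({\mathcal {I}}_\xi\)), by scaling we obtain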

Hence, it is sufficient to prove that \({\bar{\mu }}_N^- (B_1)\) is bounded from above.

Arguing as in [16], choose a continuous function \(h \ge 0\), \(h \not \equiv 0\) with compact support in \(B_1\). By Theorem 5.5, there exists a unique solution to the following

By Theorems 4.1 and 4.3, \(v >0\) in \(B_1\). Since h is bounded and has compact support in \(B_1\), on which the continuous function v has a positive minimum, we may select a constant \(\rho _0 >0\) such that \(\rho _0 v \ge h\) in \(B_1\). Therefore, v satisfies

By Theorem 6.2 we infer that \({\bar{\mu }}_N^- \le \rho _0\).

As for the bound from below, we observe that \(u(x)={(R_2^2 - \left| x\right| ^2)}^s_+ + \varepsilon\) satisfies

if we take \(\mu \le \frac{C_s \beta (1-s,s)}{R_2^{2s}+\varepsilon }\). Since \(\varepsilon >0\) is arbitrary, \({\bar{\mu }}_1^+ \ge \frac{ C_s \beta (1-s,s)}{R_2^{2s}}>0\). \(\square\)
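The constants appearing in this proof can be checked numerically, assuming that \(C_s\) is the standard one-dimensional constant \(C_{1,s}=s\,4^s\,\Gamma (\frac{1}{2}+s)/(\sqrt{\pi }\,\Gamma (1-s))\) and that \({\mathcal {I}}_\xi u(x)=C_s\int _0^{+\infty }\delta (u,x,\tau \xi )\,\tau ^{-(1+2s)}\,d\tau\), as the computation in (i) indicates. Under these assumptions the integral in (i) equals \(\alpha ^s\,\Gamma (1-s)/(2s)\) (for \(\alpha =1\), substitute \(t=\tau ^2\) and integrate by parts), and, by the duplication formula for \(\Gamma\), \(C_s\beta (1-s,s)=\Gamma (1+2s)\), so the lower bound in (ii) would read \({\bar{\mu }}_1^+\ge \Gamma (1+2s)/R_2^{2s}\). The Python sketch below compares the closed form with a quadrature and recovers \(\alpha =\pi\) for \(\mu =2\), \(k=1\), \(s=\frac{1}{2}\); all function names are illustrative.

```python
import math


def c1s(s):
    # Assumed normalisation C_s = C_{1,s} = s 4^s Gamma(1/2 + s) / (sqrt(pi) Gamma(1 - s)).
    return s * 4.0 ** s * math.gamma(0.5 + s) / (math.sqrt(math.pi) * math.gamma(1.0 - s))


def beta(a, b):
    # Euler beta function via the Gamma function.
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)


def gauss_integral_closed(s, alpha=1.0):
    # int_0^inf (1 - exp(-alpha tau^2)) tau^(-1-2s) dtau = alpha^s Gamma(1 - s) / (2 s)
    return alpha ** s * math.gamma(1.0 - s) / (2.0 * s)


def gauss_integral_numeric(s, alpha=1.0, h=0.01, T=60.0):
    # Trapezoidal rule after tau = e^t: the integrand becomes
    # (1 - exp(-alpha e^{2t})) e^{-2 s t}, which decays exponentially as t -> +-inf.
    n = int(round(2.0 * T / h))
    total = 0.0
    for i in range(n + 1):
        t = -T + i * h
        f = -math.expm1(-alpha * math.exp(2.0 * t)) * math.exp(-2.0 * s * t)
        total += 0.5 * f if i in (0, n) else f
    return total * h


def alpha_for_mu(mu, k, s):
    # Solve alpha^s = mu / (2 k C_s J(1)), as in the proof of (i).
    return (mu / (2.0 * k * c1s(s) * gauss_integral_closed(s))) ** (1.0 / s)


# Closed form versus quadrature, scaling in alpha, and C_s * beta(1 - s, s) = Gamma(1 + 2s).
quad_err = max(
    abs(gauss_integral_numeric(s) / gauss_integral_closed(s) - 1.0) for s in (0.25, 0.5, 0.75)
)
scaling_err = abs(gauss_integral_numeric(0.5, alpha=3.0) / gauss_integral_numeric(0.5) - 3.0 ** 0.5)
const_err = max(
    abs(c1s(s) * beta(1.0 - s, s) - math.gamma(1.0 + 2.0 * s)) for s in (0.1, 0.5, 0.9)
)
alpha = alpha_for_mu(2.0, 1, 0.5)
residual = -2.0 * 1 * c1s(0.5) * gauss_integral_closed(0.5, alpha) + 2.0
```

Working through the substitution \(\tau =e^t\) avoids the singularity of \(\tau ^{-(1+2s)}\) at the origin, so a plain trapezoidal rule is already very accurate.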

Remark 6.4

Notice that the proof of (i) above suggests the existence of a continuum of eigenvalues in \((0, +\infty )\) for \({\mathcal {I}}_k^- + \mu\) in \(\mathbb {R}^N\).

We now consider uniformly convex domains and prove that \({\bar{\mu }}_k^+=\mu _k^+\). Moreover, this common value turns out to be the optimal threshold for the validity of the maximum principle. We start with the following lemma, which will be crucial in the rest of the paper.

Lemma 6.5

Let m be a positive constant and let u be a solution of

where the domain \(\Omega\) is uniformly convex. Then there exists a positive constant \(C=C(\Omega , m, s)\) such that

for any \(x \in {\overline{\Omega }}\).

Proof

Fix any \(y \in Y\) and consider the function

where M is such that \(k M C_s \beta (1-s, s)=m\). Then,

Also, we point out that \(v_y(x) \ge 0\) in \({\mathbb {R}}^N\). By the comparison principle, see Theorem 4.1, \(u(x) \le v_y(x)\) in \({\mathbb {R}}^N\). Let \(x \in \Omega\) and select \(z \in \partial \Omega\) so that \(d(x)=\left| x-z\right|\). Choose \(y \in Y\) such that \(z \not \in B_R(y)\). Notice that since \(\left| x-y\right| \le R\),

Thus, for any \(x \in \overline{\Omega }\)

leading to (6.3) with \(C= M 2^s R^s\). \(\square\)
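The inequality behind the constant \(C=M2^sR^s\) can be made explicit as follows, assuming (hypothetically, but consistently with the bound \(0\le v\le MR^{2s}\) in the proof of Theorem 5.5) that the barrier is \(v_y(x)=M(R^2-\left| x-y\right| ^2)^s_+\). Here \(z\in \partial \Omega\) realizes \(d(x)\), \(y\in Y\) is chosen with \(z\not \in B_R(y)\), and \(\left| x-y\right| \le R\):

```latex
\begin{aligned}
R^2 - |x-y|^2 &= \big(R - |x-y|\big)\big(R + |x-y|\big) \\
  &\le 2R\,\big(|z-y| - |x-y|\big) \qquad (|z-y| \ge R \ge |x-y|) \\
  &\le 2R\,|x-z| = 2R\, d(x),
\end{aligned}
\qquad\text{hence}\qquad
u(x) \le v_y(x) \le M\,\big(2R\, d(x)\big)^s = M\, 2^s R^s\, d(x)^s .
```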

Theorem 6.6

Let \(\Omega\) be a uniformly convex domain. There exists a nonnegative subsolution \(v \not \equiv 0\) of

Proof

Let us consider the problem

and define

One has \(\emptyset \ne A_n \subseteq A_{n+1}\). We claim that there exist \(w_n \in A_n\), \(n \in {\mathbb {N}}\), such that \(\lim _n \left\| w_n\right\| _\infty =+ \infty\). If the claim is true, we define \(z_n=\frac{w_n}{\left\| w_n\right\| _\infty }\), which turn out to be solutions of

By semicontinuity, there exists a sequence \(x_n \in \Omega\) such that \(\sup _\Omega z_n=z_n(x_n)=1\). Up to a subsequence, \(x_n \rightarrow x_0\), and by Lemma 6.5, \(x_0 \in \Omega\). Thus, by Lemma 5.1, \(v(x)={\limsup _n}^* z_n(x)\) satisfies

and, again by Lemma 6.5, \(v = 0\) on \({\mathbb {R}}^N {\setminus } \Omega\). Also, \(v(x_0)=1\), and the proof is complete.

Let us now prove the claim. We proceed by contradiction, assuming that \(\limsup _n \left\| u_n\right\| _\infty < + \infty\) for every sequence \(u_n \in A_n\), and we split the proof into steps.

Step 1. We show that \(U_n(x)=\sup _{w \in A_n} w(x) < +\infty\) for any x and any n.

If it is not the case, then there exists \({\bar{n}}\) and \({\bar{x}}\) such that \(U_{{\bar{n}}}({\bar{x}})=+\infty\), and by definition of supremum, there exists a sequence \((u_n)_n \subseteq A_{{\bar{n}}}\) such that \(\lim _n u_n ({\bar{x}}) =+ \infty\). Since for any \(n \ge {\bar{n}}\) one has \(A_{{\bar{n}}} \subseteq A_n\), then \(u_n \in A_n\) for any \(n \ge {\bar{n}}\) and \(\lim _n \left\| u_n\right\| _\infty =+\infty\), a contradiction.

Step 2. One has \(\left\| U_n\right\| _\infty < +\infty\) for any fixed n.

Indeed, if there exists \({\bar{n}}\) such that \(\left\| U_{{\bar{n}}}\right\| _\infty =+\infty\), then there exist \(x_n \in \Omega\) and \(u_n \in A_{{\bar{n}}}\) such that \(u_n(x_n) \rightarrow +\infty\). Then, \(u_n \in A_n\) for any \(n \ge {\bar{n}}\), and \(\left\| u_n\right\| _\infty \ge u_n(x_n) \rightarrow +\infty\), a contradiction.

Step 3. We show that there exists a constant \(C>0\) such that \(\left\| U_n\right\| _\infty \le C\) uniformly in n.

Notice that \(\left\| U_n\right\| _\infty \le \left\| U_{n+1}\right\| _\infty\) and hence if it is not bounded, then \(\left\| U_n\right\| _\infty \rightarrow \infty\), thus \(\left\| u_n\right\| _\infty \rightarrow \infty\) for a sequence \(u_n \in A_n\), a contradiction.

Step 4. One has \(U_n=(U_n)^*\), and \(U_n\) is a subsolution to (6.4) such that \(U_n=0\) in \({\mathbb {R}}^N {\setminus } \Omega\).

Indeed, \((U_n)^*\) is a subsolution by Lemma 5.3. Moreover, since for any \(u \in A_n\)

where C is the constant found in Step 3, by applying Lemma 6.5 we have \(u(x) \le {\tilde{C}} d(x)^s\), for a positive constant \({\tilde{C}}= {\tilde{C}}({\bar{\mu }}_k^+ C, s, \Omega )\), and as a consequence \((U_n)^*=0\) in \({\mathbb {R}}^N {\setminus } \Omega\). Finally, by maximality of \(U_n\), we conclude \(U_n=(U_n)^*\).

Step 5. Conclusion of the proof of the claim.

By using the same argument as in the proof of Lemma 5.4 (in particular the bump construction), we prove that \((U_n)_*\) is a supersolution to (6.4), which implies that \((U_n)_*+\varepsilon\) is a supersolution of

if n is sufficiently big, and \(\varepsilon\) is sufficiently small. Also, \((U_n)_*+\varepsilon >0\) in \({\overline{\Omega }}\), which contradicts the definition of \({\bar{\mu }}_k^+\). \(\square\)

Lemma 6.7

Let \(\Omega\) be a convex domain. Then \(\mu _k^+={\bar{\mu }}_k^+\).

Proof

Fix any \(\varepsilon >0\). Let \(v \in LSC(\Omega )\cap L^\infty ({\mathbb {R}}^N)\) be such that \(v>0\) in \(\Omega\), \(v \ge 0\) in \({\mathbb {R}}^N\), and \({\mathcal {I}}_k^+ v + (\mu _k^+-\varepsilon ) v \le 0\) in \(\Omega\). Fix \(x_0 \in \Omega\), and observe that

satisfies

Also, \({\tilde{v}} >0\) in \({\overline{\Omega }}\), as \(\Omega\) is convex. Thus,

from which letting \(\varepsilon \rightarrow 0\) we have \(\mu _k^+\le {\bar{\mu }}_k^+\), and by definition equality holds. \(\square\)

Theorem 6.8

Let \(\Omega\) be a uniformly convex domain. The operator

satisfies the maximum principle if and only if \(\mu< \mu _k^+ < +\infty\), and correspondingly

satisfies the maximum principle for any \(\mu \in \mathbb {R}\).

Proof

This follows immediately from Theorems 6.2 and 6.6, Proposition 6.3 and Lemma 6.7. \(\square\)

7 Hölder estimates

Proposition 7.1

Let u satisfy

where \(\Omega\) is a uniformly convex domain. If \(s>\frac{1}{2}\), then u is Hölder continuous of order \(2s-1\) in \({\mathbb {R}}^N\).

Proof

It is sufficient to show that, for any \(x, y \in \overline{\Omega }\) such that \(\left| x-y\right| < \rho\), where \(\rho =\rho (s,\left\| f\right\| _\infty )\) is a positive constant to be determined,

with \(L=L(\Omega , \left\| u\right\| _\infty , \left\| f\right\| _\infty ,s)\). Fix \(\theta \in (s,2s)\) and consider

which has a minimum in

Set

We claim that there exists \({\bar{\rho }}={\bar{\rho }}(s,\left\| f\right\| _\infty )\) sufficiently small such that

In order to show (7.4), we fix \(x \in B_{{\bar{\rho }}}(0)\), where \({\bar{\rho }} < r_0\) will be chosen later, and notice that it is sufficient to compute \(I_{{\hat{x}}}v\), that is, to take the direction parallel to x; thus

We now add and subtract the integral

and as a result

where

and

Recall that

where \(c_\theta >0\) because \(\theta > s > 2s-1\) (recall that \(s<1\)); see Lemma 3.6 in [7]. Moreover, using \(w(r_0)<0\),

Similarly, for \({\bar{\rho }}<\frac{r_0}{2}\)

Summing up,

Since the expression in parentheses tends to \(c_\theta >0\) as \({\bar{\rho }}\rightarrow 0\), we can pick \({\bar{\rho }}={\bar{\rho }}(s,\left\| f\right\| _\infty )\) sufficiently small such that

This shows (7.4).

Let \(x_0, y_0 \in {\overline{\Omega }}\) with \(\left| x_0-y_0\right| < {\bar{\rho }}\) and take

where \(L>0\). We want to prove that there is \(L=L(\Omega ,\left\| u\right\| _\infty ,\left\| f\right\| _\infty ,s)\) sufficiently large such that

This readily implies (7.2) since \(v_{y_0}(x_0)\ge u(y_0)-L|x_0-y_0|^{2s-1}\) and \(x_0,y_0\) are arbitrary points of \({\overline{\Omega }}\) with \(\left| x_0-y_0\right| < {\bar{\rho }}\).

To obtain (7.6) we make use of the comparison principle, see Theorem 4.1, in \(\Omega \cap B_{{\bar{\rho }}}(y_0)\backslash \left\{ y_0\right\}\). By (7.5), if \(L\ge 1\) then

hence \(v_{y_0}\) is a subsolution of \({\mathcal {I}}_1^+v=f(x)\) in \(B_{{\bar{\rho }}}(y_0) {\setminus } \{y_0\}\). As far as the exterior boundary condition is concerned, first notice that by definition \(v_{y_0}(y_0)=u(y_0)\). Now let \(x \in {\mathbb {R}}^N\backslash B_{{\bar{\rho }}}(y_0)\). Since the function v(x) is radially decreasing it turns out that

and, for

that

It remains to prove the inequality \(v_{y_0}(x)\le u(x)\) for \(x \in \overline{B_{{\bar{\rho }}}(y_0)} \cap \Omega ^c\). For this we recall that by Lemma 6.5 there exists a positive constant \(C=C(\Omega ,\left\| f\right\| _\infty ,s)\) such that

Notice that the function \(r\in (0,+\infty )\mapsto r^{s-1} - r^{\theta - s}\) is decreasing, since \(s-1<0\) and \(\theta -s>0\); thus

Using (7.9) with \(r=|x-y_0|\) and (7.8) we obtain, for \(x \in \overline{B_{{\bar{\rho }}}(y_0)} \cap \Omega ^c\), that

provided

Summing up, by (7.7) and (7.10), if

then by comparison we conclude that (7.6) holds, as we wanted to show. \(\square\)

Let us point out that, as in the local setting (see [6, Section 3]), the uniform convexity of \(\Omega\) was used in the proof of Proposition 7.1 only to obtain (7.8), and hence to apply the comparison principle up to the boundary. Moreover, to obtain interior Hölder estimates it is in fact sufficient to assume that u is only a supersolution.

Proposition 7.2

Let \(\Omega\) be a bounded domain of \({\mathbb {R}}^N\), and let \(s > \frac{1}{2}\). Then:

(i) for any compact \(K\subset \Omega\) and any supersolution u of (7.1), there exists a positive constant \(C=C(K,\Omega ,\left\| u\right\| _\infty ,\left\| f\right\| _\infty , s)\) such that \(\left\| u\right\| _{C^{0,2s-1}(K)}\le C\);

(ii) any supersolution u which satisfies (6.3) is \((2s-1)\)-Hölder continuous in \({\overline{\Omega }}\).

In the next theorem, we obtain global Hölder equicontinuity of sequences of solutions with uniformly bounded right hand sides. We shall use it in the next section for the existence of a principal eigenfunction.

Theorem 7.3

Let \(s > \frac{1}{2}\), and let \(u_n\in C({\overline{\Omega }})\cap L^\infty ({\mathbb {R}}^N)\) be solutions of

where the domain \(\Omega\) is uniformly convex and \(f_n\in C(\Omega )\) for any \(n\in \mathbb {N}\). Assume that there exists a positive constant D such that

Then there exists \({\tilde{D}}={\tilde{D}}(D,\Omega ,s)>0\) such that

Proof

We start by showing that \(\sup _n\left\| u_n\right\| _{L^\infty ({\mathbb {R}}^N)}<+\infty\). Let R, depending only on \(\Omega\), be such that \(B_R(0)\supseteq \Omega\) and consider the function

By Lemma 4.8, \(\varphi\) solves

For any \(n\in \mathbb {N}\), using (7.11), \(u_n\) is a solution of

Hence, by the comparison principle (Theorem 4.1), we get

In a similar fashion we also obtain

From (7.13)-(7.14) we infer that \(\sup _n\left\| u_n\right\| _{L^\infty ({\mathbb {R}}^N)}<+\infty\). Arguing as in the proof of Proposition 7.1, with the same notation and with v as defined in (7.3), we can pick \({\bar{\rho }}={\bar{\rho }}(s,D)\) such that

Moreover, by Lemma 6.5 there exists a positive constant \(C=C(\Omega ,D,s)\) such that

Hence, by taking

we conclude that for any \(n\in \mathbb {N}\) and any \(x,y\in {\overline{\Omega }}\) such that \(|x-y|\le {\bar{\rho }}\) then

This readily implies (7.12). \(\square\)

8 Existence of a principal eigenfunction

The main result of this section is the following

Theorem 8.1

Let \(\Omega\) be a uniformly convex domain, and let \(s > \frac{1}{2}\). Then, there exists a positive function \(\psi _1 \in C^{0,2s-1}({\overline{\Omega }})\) such that

For this, we first prove the solvability of the Dirichlet problem “below the principal eigenvalue”.

Theorem 8.2

Let \(\Omega\) be a uniformly convex domain, \(s > \frac{1}{2}\), and let \(f\in C(\Omega )\cap L^\infty (\Omega )\). Then there exists a solution \(u\in C^{0,2s-1}({\overline{\Omega }})\) of

in the following cases:

(i) for any \(\mu\) if \(f\ge 0\);

(ii) for any \(\mu <\mu ^+_1\).

In the case \(\mu <\mu ^+_1\) the solution is unique.

Proof

We can assume \(\mu >0\), since the arguments of the proof of Theorem 5.5 continue to apply to \({\mathcal {I}}^\pm _k u+\mu u\) when \(\mu \le 0\).

(i) Let \(w_1=0\) and define iteratively \(w_{n+1} \in C({\mathbb {R}}^N)\) as the solution, obtained by Theorem 5.5, of

$$\begin{aligned} {\left\{ \begin{array}{ll} {\mathcal {I}}_1^+ w_{n+1} = f(x) - \mu w_n(x) &{}{\text { in }} \Omega \\ w_{n+1}=0 &{}{\text { in }} {\mathbb {R}}^N {\setminus } \Omega . \end{array}\right. } \end{aligned}\tag{8.3}$$ Note that the sequence \((w_n)_n\) is nonincreasing; in particular, \(w_n \le 0\) for any n. Indeed, since \(f\ge 0\), we have \(w_2\le 0=w_1\) by Theorem 4.1. Moreover, assuming by induction that \(w_{n+1}\le w_n\), one has

$$\begin{aligned} {\mathcal {I}}_1^+ w_{n+2} = f- \mu w_{n+1} \ge f- \mu w_{n} = {\mathcal {I}}_1^+ w_{n+1}, \end{aligned}$$hence again by comparison \(w_{n+2} \le w_{n+1}\).

We now show that \(\sup _n \left\| w_n\right\| _\infty < +\infty\). If this is true, then in view of Theorem 7.3, the sequence \((w_n)_n\) converges uniformly in \({\mathbb {R}}^N\) to \(u\in C^{0,2s-1}({\mathbb {R}}^N)\), and passing to the limit in (8.3) we conclude, exploiting Lemma 5.1. Let us assume by contradiction that \(\lim _{n\rightarrow +\infty } \left\| w_n\right\| _\infty = +\infty\), and let \(v_n=\frac{w_n}{\left\| w_n\right\| }\). Then

$$\begin{aligned} {\left\{ \begin{array}{ll} {\mathcal {I}}_1^+ v_{n+1}=\frac{f(x)}{\left\| w_{n+1}\right\| } - \mu \frac{\left\| w_n\right\| }{\left\| w_{n+1}\right\| }v_n(x) &{}{\text { in }} \Omega \\ v_{n+1}=0 &{}{\text { in }} {\mathbb {R}}^N {\setminus } \Omega . \end{array}\right. } \end{aligned}$$Then, again by the Hölder estimate (7.12), the sequence \((v_n)_n\) converges uniformly, up to a subsequence, to a function \(v \le 0\). Since, up to extracting a further subsequence, \(\frac{\left\| w_n\right\| }{\left\| w_{n+1}\right\| } \rightarrow \tau \le 1\), we may pass to the limit to get

$$\begin{aligned} {\left\{ \begin{array}{ll} {\mathcal {I}}_1^+ v +\mu \tau v =0 &{}{\text { in }} \Omega \\ v =0 &{}{\text { in }} {\mathbb {R}}^N {\setminus } \Omega . \end{array}\right. } \end{aligned}$$Since \({\mathcal {I}}^+_1 v=-{\mathcal {I}}^-_1(-v)\), the function \(-v\ge 0\) satisfies \({\mathcal {I}}^-_1(-v)+\mu \tau (-v)=0\) in \(\Omega\) and vanishes outside \(\Omega\); by Theorem 6.8 we infer that v vanishes everywhere, which contradicts \(\left\| v\right\| _\infty =1\).

(ii) We first claim that there exists a nonnegative solution \({\overline{w}}\in C^{0,2s-1}({\mathbb {R}}^N)\) of

$$\begin{aligned} {\left\{ \begin{array}{ll} {\mathcal {I}}_1^+{\overline{w}} + \mu {\overline{w}}=-\left\| f\right\| _\infty &{}{\text { in }} \Omega \\ {\overline{w}}=0 &{}{\text { in }} {\mathbb {R}}^N {\setminus } \Omega . \end{array}\right. } \end{aligned}\tag{8.4}$$ As above, we define \(w_1=0\) and let \(w_{n+1}\) be the solution of

$$\begin{aligned} {\left\{ \begin{array}{ll} {\mathcal {I}}_1^+ w_{n+1} = -\left\| f\right\| _\infty - \mu w_n(x) &{}{\text { in }} \Omega \\ w_{n+1}=0 &{}{\text { in }} {\mathbb {R}}^N {\setminus } \Omega . \end{array}\right. } \end{aligned}$$The sequence \((w_n)_n\) is nondecreasing. Using now that \(\mu <\mu ^+_1\) we also infer that \(\sup _n \left\| w_n\right\| _\infty < +\infty\). Then, by Theorem 7.3, \(w_n\) converges uniformly in \({\mathbb {R}}^N\) to a function \({\overline{w}}\in C^{0,2s-1}({\mathbb {R}}^N)\) which is solution of (8.4).

For the general case, let us denote by \({\underline{w}}\) the solution of

obtained in (i). Notice that \({\underline{w}}\le 0\le {\overline{w}}\).

Now let us define \(u_1= \underline{w}\) and let \(u_{n+1}\) be the solution of

We want to show that \(\underline{w} \le u_n \le {\overline{w}}\). This is true for \(n=1\). Let us assume by induction that this holds true at level n, and notice that

and similarly

Hence, by comparison, we have \(\underline{w} \le u_{n+1} \le {\overline{w}}\). As a consequence, the sequence \((u_n)_n\) is bounded in \(C^{0,2s-1}({\mathbb {R}}^N)\) and, up to a subsequence, it converges uniformly to a function \(u\in C^{0,2s-1}({\mathbb {R}}^N)\), which is the desired solution.

It remains to show that (8.2) has at most one solution. For this, notice that if u and v are, respectively, a subsolution and a supersolution of \({\mathcal {I}}^+_1u+\mu u=f\) in \(\Omega\), then the difference \(w=u-v\) is a viscosity subsolution of

This follows easily if at least one of u and v is in \(C^2(\Omega )\). If u and v are merely semicontinuous, the result is obtained by the doubling of variables technique, as in the proof of Theorem 4.1 with minor changes. Hence, if \(u_1\) and \(u_2\) are solutions of (8.2), then \(w=u_1-u_2\) solves

By Theorem 6.8, we infer that \(u_1\le u_2\). Reversing the roles of \(u_1\) and \(u_2\), we conclude that \(u_1=u_2\). \(\square\)
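The structural fact behind the smooth case of the uniqueness argument is that \({\mathcal {I}}_1^+\) is a supremum of linear operators, so \({\mathcal {I}}_1^+u-{\mathcal {I}}_1^+v\le {\mathcal {I}}_1^+(u-v)\); subtracting the inequalities satisfied by u and v then gives \({\mathcal {I}}_1^+(u-v)+\mu (u-v)\ge 0\). The toy check below is a hypothetical discrete stand-in, not the operator of the paper: on a square grid it takes the maximum, over four lattice directions (a hypothetical finite set replacing the unit vectors \(\xi\)), of a linear directional second difference, and measures the largest value of \(M(u)-M(v)-M(u-v)\) on random grid functions.

```python
import random

# Hypothetical finite set of lattice directions standing in for the unit vectors xi.
DIRECTIONS = [(1, 0), (0, 1), (1, 1), (1, -1)]


def directional_second_difference(u, i, j, e):
    """(u(x + e) + u(x - e) - 2 u(x)) / |e|^2 at grid point (i, j); linear in u."""
    di, dj = e
    return (u[i + di][j + dj] + u[i - di][j - dj] - 2.0 * u[i][j]) / (di * di + dj * dj)


def extremal_operator(u, i, j):
    """Toy analogue of I_1^+: maximum over directions of a linear one-dimensional operator."""
    return max(directional_second_difference(u, i, j, e) for e in DIRECTIONS)


def worst_subadditivity_gap(n=12, trials=50, seed=0):
    """Largest value of M(u) - M(v) - M(u - v) over interior points and random u, v."""
    rng = random.Random(seed)
    worst = float("-inf")
    for _ in range(trials):
        u = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
        v = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
        w = [[u[i][j] - v[i][j] for j in range(n)] for i in range(n)]
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                gap = (extremal_operator(u, i, j) - extremal_operator(v, i, j)
                       - extremal_operator(w, i, j))
                worst = max(worst, gap)
    return worst
```

The gap is nonpositive in exact arithmetic for any such maximum of linear operators; in floating point it can only exceed zero by rounding error.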

We are now in position to give the proof of Theorem 8.1.

Proof of Theorem 8.1

In view of Theorem 8.2, for any \(n\in \mathbb {N}\) there exists a solution \(w_n\in C^{0,2s-1}({\overline{\Omega }})\) of

We claim that \(\sup _n \left\| w_n\right\| =+\infty\). If not, we can pick \(j\in \mathbb {N}\) such that \(j\ge 2\sup _n \left\| w_n\right\|\). Hence, \(w_j\) solves

This contradicts the maximality of \(\mu _1^+\), and proves that \(\sup _n \left\| w_n\right\| =+\infty\). Up to a subsequence, we may assume \(\lim _n \left\| w_n\right\| = +\infty\), and we can introduce the functions \(z_n=\frac{w_n}{\left\| w_n\right\| }\), which turn out to be solutions of

By the estimate (7.12) and the Arzelà–Ascoli theorem, up to a further subsequence \((z_n)_n\) converges uniformly to a function \(\psi _1\in C^{0,2s-1}({\overline{\Omega }})\), which is a solution of (8.1). Moreover, \(\psi _1 \ge 0\) in \(\Omega\) by construction and \(\left\| \psi _1 \right\| _\infty =1\). By the strong minimum principle, see Theorem 4.3-iii), we conclude that \(\psi _1>0\) in \(\Omega\). \(\square\)
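The blow-up and normalization step can be observed numerically in a linear toy model. In the sketch below, which is an analogue under stated assumptions and not the paper's nonlocal setting, the local second difference \(D_2\) on \((0,1)\) replaces \({\mathcal {I}}_1^+\), the discrete principal eigenvalue \(\lambda _1=4h^{-2}\sin ^2(\pi h/2)\) of \(-D_2\) and its eigenvector \(\sin (\pi x)\) replace \(\mu _1^+\) and \(\psi _1\), and the shifts \(\mu _n=\lambda _1-1/n\) and the right-hand side \(-1\) are hypothetical choices. As \(\mu _n\) approaches \(\lambda _1\), the solutions of \(D_2w_n+\mu _nw_n=-1\) blow up, while \(z_n=w_n/\left\| w_n\right\| _\infty\) stays positive, has sup norm 1, and approaches the normalized eigenvector.

```python
import math


def solve_shifted_laplacian(g, shift, h):
    """Solve (u[i-1] - 2u[i] + u[i+1]) / h^2 + shift * u[i] = g[i], with u = 0 outside."""
    m = len(g)
    off = 1.0 / h**2
    diag = -2.0 / h**2 + shift
    cp = [0.0] * m  # modified super-diagonal (Thomas algorithm)
    dp = [0.0] * m  # modified right-hand side
    cp[0] = off / diag
    dp[0] = g[0] / diag
    for i in range(1, m):
        denom = diag - off * cp[i - 1]
        cp[i] = off / denom
        dp[i] = (g[i] - off * dp[i - 1]) / denom
    u = [0.0] * m
    u[-1] = dp[-1]
    for i in range(m - 2, -1, -1):
        u[i] = dp[i] - cp[i] * u[i + 1]
    return u


def normalized_blowup(n=10_000, m=99):
    """Solve D2 w + (lambda_1 - 1/n) w = -1 and normalize (hypothetical choices)."""
    h = 1.0 / (m + 1)
    lam1 = 4.0 / h**2 * math.sin(math.pi * h / 2.0) ** 2  # principal eigenvalue of -D2
    w = solve_shifted_laplacian([-1.0] * m, lam1 - 1.0 / n, h)
    norm = max(abs(x) for x in w)
    z = [x / norm for x in w]  # z_n = w_n / ||w_n||_inf
    phi = [math.sin(math.pi * (i + 1) * h) for i in range(m)]  # discrete eigenvector
    phi_max = max(phi)
    psi = [p / phi_max for p in phi]  # eigenvector scaled to sup norm 1
    return norm, z, psi


for n in (10, 1_000, 100_000):
    norm, z, psi = normalized_blowup(n)
    err = max(abs(a - b) for a, b in zip(z, psi))
    print(f"n={n:>7}  ||w_n||={norm:.3e}  max|z_n - psi|={err:.2e}")
```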

Change history

12 July 2022

Missing Open Access funding information has been added in the Funding Note.

References

[1] Alvarez, O., Tourin, A.: Viscosity solutions of nonlinear integro-differential equations. Ann. Inst. H. Poincaré Anal. Non Linéaire 13(3), 293–317 (1996)

[2] Barles, G., Chasseigne, E., Imbert, C.: On the Dirichlet problem for second-order elliptic integro-differential equations. Indiana Univ. Math. J. 57(1), 213–246 (2008)

[3] Barles, G., Imbert, C.: Second-order elliptic integro-differential equations: viscosity solutions’ theory revisited. Ann. Inst. H. Poincaré Anal. Non Linéaire 25(3), 567–585 (2008)

[4] Berestycki, H., Nirenberg, L., Varadhan, S.R.S.: The principal eigenvalue and maximum principle for second-order elliptic operators in general domains. Commun. Pure Appl. Math. 47(1), 47–92 (1994)

[5] Birindelli, I., Galise, G., Ishii, H.: Existence through convexity for the truncated Laplacians. Math. Ann. 379(3–4), 909–950 (2021)

[6] Birindelli, I., Galise, G., Ishii, H.: A family of degenerate elliptic operators: maximum principle and its consequences. Ann. Inst. H. Poincaré Anal. Non Linéaire 35(2), 417–441 (2018)

[7] Birindelli, I., Galise, G., Topp, E.: Fractional truncated Laplacians: representation formula, fundamental solutions and applications. Preprint arXiv:2010.02707

[8] Bucur, C., Valdinoci, E.: Nonlocal diffusion and applications. Lecture Notes of the Unione Matematica Italiana, 20. Springer; Unione Matematica Italiana, Bologna, xii+155 pp (2016)

[9] Caffarelli, L., Li, Y.Y., Nirenberg, L.: Some remarks on singular solutions of nonlinear elliptic equations. I. J. Fixed Point Theory Appl. 5(2), 353–395 (2009)

[10] Caffarelli, L., Silvestre, L.: Regularity theory for fully nonlinear integro-differential equations. Commun. Pure Appl. Math. 62, 597–638 (2009)

[11] Crandall, M., Ishii, H., Lions, P.-L.: User’s guide to viscosity solutions of second order partial differential equations. Bull. Am. Math. Soc. 27(1), 1–67 (1992)

[12] Del Pezzo, L.M., Quaas, A., Rossi, J.D.: Fractional convexity. Math. Ann. (2021). https://doi.org/10.1007/s00208-021-02254-y

[13] Dyda, B.: Fractional calculus for power functions and eigenvalues of the fractional Laplacian. Fract. Calc. Appl. Anal. 15(4), 536–555 (2012)

[14] Greco, A., Servadei, R.: Hopf’s lemma and constrained radial symmetry for the fractional Laplacian. Math. Res. Lett. 23(3), 863–885 (2016)

[15] Harvey, F.R., Lawson, H.B.: Dirichlet duality and the nonlinear Dirichlet problem. Commun. Pure Appl. Math. 62(3), 396–443 (2009)

[16] Quaas, A., Salort, A., Xia, A.: Principal eigenvalues of fully nonlinear integro-differential elliptic equations with a drift term. ESAIM Control Optim. Calc. Var. 26, 36 (2020)

Funding

Open access funding provided by Università degli Studi di Roma La Sapienza within the CRUI-CARE Agreement.

Additional information

The authors were partially supported by INdAM-GNAMPA.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Birindelli, I., Galise, G. & Schiera, D. Maximum principles and related problems for a class of nonlocal extremal operators. Annali di Matematica 201, 2371–2412 (2022). https://doi.org/10.1007/s10231-022-01203-z

Keywords

- Maximum and comparison principles

- Fully nonlinear degenerate elliptic PDE

- Nonlocal operators

- Eigenvalue problem