Abstract

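We study existence and multiplicity of radial ground states for the scalar curvature equation

$$\begin{aligned} \Delta u+K(|x|)\, u^{\frac{n+2}{n-2}}=0, \quad x\in {{\mathbb {R}}}^n, \quad n>2, \end{aligned}$$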

when the function \(K:{{\mathbb {R}}}^+\rightarrow {{\mathbb {R}}}^+\) is bounded above and below by two positive constants, i.e. \(0<{\underline{K}} \le K(r) \le {\overline{K}}\) for every \(r > 0\), it is decreasing in (0, 1) and increasing in \((1,+\infty )\). Chen and Lin (Commun Partial Differ Equ 24:785–799, 1999) had shown the existence of a large number of bubble tower solutions if K is a sufficiently small perturbation of a positive constant. Our main purpose is to improve such a result by considering a non-perturbative situation: we are able to prove multiplicity assuming that the ratio \({\overline{K}}/{\underline{K}}\) is smaller than some computable values.

1 Introduction

This paper is devoted to the study of existence and multiplicity of positive solutions for the scalar curvature equation

$$\begin{aligned} \Delta u+K(|x|)\, u^{\frac{n+2}{n-2}}=0 \end{aligned} \tag{1.1}$$

where \(x \in {\mathbb {R}}^n\), \(n>2\), and K is a reciprocally symmetric, bounded, positive, continuous function, \(C^1\) for \(r=|x|>0\). The main purpose is to ensure the multiplicity of positive entire solutions which decay at infinity like \(|x|^{2-n}\) (i.e. fast decay solutions), when

According to [3, Theorem 1], [5, Theorem 2], we know that assumption (1.2) guarantees that each solution of (1.1) is radially symmetric about the origin.

Therefore, it is not restrictive to concentrate on radial solutions of (1.1), by considering the equivalent singular O.D.E.

$$\begin{aligned} (u'\, r^{n-1})'+K(r)\, r^{n-1}\, u^{\frac{n+2}{n-2}}=0 \end{aligned} \tag{1.3}$$

where “ \(\,'\,\) ” denotes the differentiation with respect to \(r{=}|x|\), and, with a slight abuse of notation, \(u(r)=u(x)\).

The solutions of (1.3) can be classified according to their asymptotic behaviour at zero and at infinity. More precisely, a solution u(r) of (1.3) is called regular if \(u(0)=d\) and \(u'(0)=0\) and it will be denoted by u(r; d), and singular if \({\lim _{r \rightarrow 0}}u(r)=\pm \infty \); similarly, u(r) is a fast decay solution if \({\lim _{r \rightarrow \infty }}u(r) r^{n-2}=c\), and a slow decay solution if \({\lim _{r \rightarrow \infty }}u(r)r^{n-2}=\pm \infty \).

Moreover, we say that u(r) is a ground state (G.S.) if it is a positive regular solution of (1.3) such that \(\lim _{r\rightarrow \infty }u(r)=0\); we say that u(r) is a singular ground state (S.G.S.) if it is a positive singular solution of (1.3), which is defined for any \(r > 0\) and satisfies \(\lim _{r\rightarrow \infty }u(r)=0\). It is easy to show that G.S. and S.G.S. are decreasing, see Remark 2.5.

Equation (1.1) and its generalizations have attracted the attention of many authors, giving rise to a huge literature on the topic, both for their intrinsic mathematical interest and for their relevance in applications. In fact, Eq. (1.1) is known as the scalar curvature equation, since the existence of a G.S. with fast decay is equivalent to the existence of a metric in \({{\mathbb {R}}}^n\) which is conformally equivalent to the Euclidean metric and has scalar curvature K; see, for example, [6, 9, 14] for more details.

Furthermore, Eq. (1.1) finds application in astrophysics for particular types of K: a significant example is given by the Matukuma equation, where u represents the gravitational potential in a globular cluster (cf., among others, [1, 11, 40]). Finally, it can be used to study the stationary states of a nonlinear Schrödinger equation in quantum mechanics and of a reaction-diffusion equation in chemistry. In most of the applications, positivity is crucial and fast decay is needed to deal with physically relevant solutions.

In the 1980s, it was realized that the Pohozaev function \(P(r)=\int _{0}^{r}K'(s) s^{n-1}\hbox {d}s\) plays a key role in determining the structure of positive solutions of (1.3). No G.S. with fast decay can exist if P(r) has constant sign, so, in particular, when K is monotone (but non-constant), see [14, 26, 28, 32]; while there are many different existence results when P(r) changes sign. The situation is simpler if P(r) is positive for r small and negative for r large, in particular when K(r) has a maximum, see, for example, [6, 7, 11, 28, 29, 38, 39]. More in detail, the existence of a radial G.S. with fast decay was proved requiring that

where \(A_0,B_0, \delta _0,\eta _0\) are positive constants, \(\delta _1>\delta _0\), \(\eta _1>\eta _0\), see [11, 28, 38, 39]. Results are also available when \(\delta _0=\eta _0=0\) and \(A_i,B_i,\delta _1,\eta _1\) are positive, see, for example, [6, 7, 29, 39]. We emphasize that under assumption (1.4) a complete classification of both regular [27, 37, 40] and singular solutions [13] is available, and the uniqueness of radial G.S. with fast decay is guaranteed whenever the unique critical point of K is a maximum. More precisely, if K(r) satisfies (1.4) with \(A_i,B_i,\delta _i,\eta _i \ge 0\), and \(rK'/K\) is decreasing, then there is a unique \(d^*>0\) such that u(r; d) is a G.S. with slow decay if \(0<d<d^*\), it is a G.S. with fast decay if \(d=d^*\) and it has a positive zero if \(d>d^*\), see in particular [27, 37, 40] concerning the uniqueness of \(d^*\). Furthermore, there are uncountably many radial S.G.S. with slow decay and uncountably many radial S.G.S. with fast decay. Nowadays, also nodal solutions are classified [13, 31], and many results have been extended to a p-Laplace context [17, 18, 24]. We remark that the presence of a local maximum allows existence and multiplicity of non-radial positive solutions of (1.1), cf. [33] and [35], respectively.

However, there is a striking difference in the structure of radial positive solutions between the case in which K admits a unique maximum and the case in which it admits a unique minimum. As observed above, in the former (and easier) case we could have a unique G.S. with fast decay of (1.3), and a complete classification of the solutions. In the latter (and more complicated) situation, we range from a large number of G.S. with fast decay to non-existence results, see, for example, [6] for non-existence, [6, 7] for existence, and [1, 20, 21] for both multiplicity and non-existence results in the case where K is an unbounded function satisfying \(K(r)\sim r^{\delta }\) as \(r \rightarrow 0\) and \(K(r)\sim r^{\eta }\) as \(r \rightarrow +\infty \), with \(\delta< 0 < \eta \). Partial structure results have been achieved, cf. [20].

Similarly, for K(r) varying between two positive constants the situation is delicate and quite complicated: non-existence results as well as existence of non-radial solutions for (1.1) can be achieved, cf. [7, Theorem 0.4] and [4], respectively.

In the 1990s, it was noticed that multiplicity results could be produced requiring several sign changes in the Pohozaev function, namely asking the function K to have many critical points, under the additional assumption that K is either a regular or a singular perturbation of a constant, i.e.

see [2, 25]. Some further results in this direction are contained in [8, 36].

Chen and Lin in [9] noticed that if K(|x|) has a critical point, but it is a minimum, uniqueness of the G.S. might be lost. They considered K(|x|) as in (1.5) and assumed the following

- \(\varvec{(\mathrm{K}_0)}\):

\(K(r)=K\left( \frac{1}{r}\right) \) for any \(0<r \le 1\);

- \(\varvec{(\mathrm{K}_1)}\):

\(K'(r) \le 0 \) for any \(r\in (0,1)\), but \(K'(r)\not \equiv 0\) ;

- \(\varvec{(\mathrm{K}_2)}\):

$$\begin{aligned}{}\begin{array}[t]{c} \displaystyle {K(r)= K(0)-A r^l+h(r) \quad \text { where }} \\ \displaystyle {A>0, \quad \quad 0<l < \frac{n-2}{2}, \quad \quad \lim _{r\rightarrow 0} |h(r)|r^{-l}+|h'(r)| r^{-l+1}=0.} \end{array} \end{aligned}$$

Theorem A

[9, Theorem 1.1] Assume that K satisfies (1.5) and \(\varvec{(\mathrm{K}_0)}\)–\(\varvec{(\mathrm{K}_1)}\)–\(\varvec{(\mathrm{K}_2)}\). Then, for any \(\ell \in {\mathbb {N}}\) there exists \(\varepsilon _\ell >0\) such that for every \(\varepsilon \in (0,\varepsilon _\ell )\) Eq. (1.3) admits at least \(\ell \) G.S. with fast decay \(u_1,\ldots ,u_\ell \), where the function \(u_j(r)r^{\frac{n-2}{2}}\) has j local maxima and \((j-1)\) local minima.

Lin and Liu [30] obtained a similar result, again in a perturbative setting for K of the form (1.5), removing the technical symmetry assumption \(\varvec{(\mathrm{K}_0)}\), but requiring condition \(\varvec{(\mathrm{K}_2)}\) with a more restrictive smallness assumption on the parameter l. The same conclusion as in Theorem A was also obtained in [15] for K of the form (1.6), just requiring that K has a (possibly degenerate) positive minimum. As far as we are aware, [9, 15, 30] are the only papers obtaining multiplicity of G.S. with fast decay for (1.3) with a unique positive minimum of K.

The main purpose of this article is to extend the perturbative result of [9] to a non-perturbative situation. To this aim, we give a new argument to reprove Theorem A which furnishes a precise estimate on how small \(\varepsilon _{\ell }\) should be, and we show that \(\varepsilon _{\ell }\) need not be too small.

Theorem 1.1

All the constants \(\varepsilon _\ell \) in Theorem A can be explicitly computed. In particular, we can find the following approximations from below:

Moreover, the explicit expression of the first two constants is

and \(\varepsilon _3\) solves the equation

where

Finally, if the dimension is \(n=4\), we have \(\displaystyle \varepsilon _\ell = \frac{1}{\ell }\) for every positive integer \(\ell \).

We wish to remark that conditions \(\varvec{(\mathrm{K}_1)}\) and \(\varvec{(\mathrm{K}_2)}\) are crucial to obtain multiplicity results, but they can be weakened or overlooked when dealing with the existence of at least a G.S. with fast decay. Condition \(\varvec{(\mathrm{K}_0)}\) is a technical requirement which greatly simplifies the proof. The possibility of removing such a condition will be the object of forthcoming investigations.

As a first step in our analysis we also obtain the following existence result which does not require any integral or asymptotic condition.

Theorem 1.2

Assume that K satisfies \(\varvec{(\mathrm{K}_0)}\) and (1.5) with \(0<\varepsilon \le \varepsilon _1:= \frac{2}{n-2}\), then Eq. (1.3) admits at least a G.S. with fast decay.

The symmetry condition \(\varvec{(\mathrm{K}_0)}\) allows one to overcome the Pohozaev obstruction; furthermore, the smallness condition is again quantitative and not of a perturbative nature.

We emphasize that with a standard rescaling argument we can address Eq. (1.1) with more general bounded functions K. Indeed, let v(r) be a solution of (1.3) where K satisfies \(0<{\underline{K}} \le K(r) \le {\overline{K}} <\infty \), then \(u(r)= {\underline{K}}^{(n-2)/4} v(r)\) solves \((u'\, r^{n-1})'+ {{\mathscr {K}}}(r)\, r^{n-1}\, u^{\frac{n+2}{n-2}}=0\), where \({{\mathscr {K}}}(r):={\underline{K}}^{-1} K(r)\) can be written in the form (1.5) with \(\varepsilon ={{\overline{K}}}/{{\underline{K}}}-1\). So, we have the following.

Remark 1.3

Let \(0<{\underline{K}} \le K(r)\le {\overline{K}} <\infty \) for any \(r \ge 0\), then Theorems A and 1.2 continue to hold, simply by replacing the condition \(0<\varepsilon < \varepsilon _{\ell }\) with \({{\overline{K}}}/{{\underline{K}}} < 1+\varepsilon _{\ell }\).
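The rescaling behind Remark 1.3 can be checked in a few lines. The sketch below uses the shorthand \(p=\frac{n+2}{n-2}\) and \(\lambda ={\underline{K}}^{(n-2)/4}\); both symbols are introduced here only for the computation and are not notation from the paper.

```latex
% Shorthand (introduced here, not in the paper): p = (n+2)/(n-2), \lambda = \underline{K}^{(n-2)/4}.
% Assume v solves (v' r^{n-1})' + K(r) r^{n-1} v^{p} = 0 and set u = \lambda v.
\begin{aligned}
(u'\, r^{n-1})' &= \lambda\,(v'\, r^{n-1})'
                 = -\lambda\, K(r)\, r^{n-1}\, v^{p}
                 = -\lambda^{1-p}\, K(r)\, r^{n-1}\, u^{p},\\
\lambda^{1-p} &= \underline{K}^{\frac{n-2}{4}\cdot\left(-\frac{4}{n-2}\right)} = \underline{K}^{-1}
\qquad\Longrightarrow\qquad
(u'\, r^{n-1})' + \frac{K(r)}{\underline{K}}\, r^{n-1}\, u^{p} = 0,\\
1 &\le \frac{K(r)}{\underline{K}} \le \frac{\overline{K}}{\underline{K}} = 1+\varepsilon .
\end{aligned}
```

In particular, the rescaled coefficient ranges in \([1,1+\varepsilon ]\) with \(\varepsilon ={{\overline{K}}}/{{\underline{K}}}-1\), which is why the bound on \(\varepsilon \) turns into a bound on the ratio \({{\overline{K}}}/{{\underline{K}}}\).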

Theorems 1.1, 1.2 and Remark 1.3 can be trivially generalized to embrace the slightly more general case of

and \(\sigma >-2\). Notice that in this case we cannot apply directly [5, Theorem 2], so G.S. need not be radial.

Anyway, restricting attention to radial G.S., we can reduce Eq. (1.9) to

Then, we can prove a slightly more general version of Theorem A which has not previously appeared in the literature, as far as we are aware.

Corollary 1.4

Theorem A continues to hold for Eq. (1.10) when \(0<k(|x|)<1\). Furthermore, we can reprove Theorems 1.2 and 1.1 as well. We stress that in the equations defining \(\varepsilon _j\) we must replace \(q=q(0)\) by \(q(\sigma )\). Consequently, all the values in the table 1.7 have to be slightly modified. In particular, we find

To conclude this incomplete review of the vast literature on the problem, we wish to draw to the reader’s attention the interesting paper [35], where Wei and Yan prove that if K(|x|) has a positive maximum there are infinitely many non-radial G.S. This result, together with [9, 15] and the present article, seems to suggest that the bubble tower phenomenon occurs in the presence of a critical point of K(|x|), and it is made up by radial solutions if the critical point is a minimum and by non-radial ones if it is a maximum.

Our proofs are developed through basic tools of phase plane analysis after passing from (1.3) to the two-dimensional dynamical system (2.2), via the Fowler transformation. The problem of existence of G.S. with fast decay is then translated into a problem of existence of homoclinic trajectories. Following the outline of [9], we perform a shooting argument, within system (2.2), from the origin towards the isocline \({\dot{x}}=0\). The results are then obtained by combining some barrier sets constructed in Sect. 3 and an asymptotic result borrowed from [9, 10].

The paper is organized as follows. In Sect. 2, we introduce the Fowler transformation and we give some preliminary results. In Sect. 3, we sketch the geometrical construction on which the proof of our main results developed in Sect. 4 is based. In Sect. 5, we compute explicitly the values of the constants \(\varepsilon _{\ell }\). In the Appendix, we reprove [10, Theorem 1.6] for completeness.

2 Fowler transformation and invariant manifolds

Let us introduce a classical change of variable, known as Fowler transformation, to convert Eq. (1.3) into a two-dimensional dynamical system. More precisely, by setting

we can rewrite (1.3) as the following system:

where “\(\,{\cdot }\,\)” denotes the differentiation with respect to t, and \(q=\frac{2n}{n-2}\).

Remark 2.1

If we start from (1.10), we obtain system (2.2) again, but the power q is different, i.e. \(q(\sigma )=2\,\, \frac{n+\sigma }{n-2}\).
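As an illustration of how the planar system arises, here is a sketch of the computation for \(\sigma =0\). It assumes the standard form of the Fowler transformation, namely \(r={e}^{t}\), \(\alpha =\frac{n-2}{2}\), \(x(t)=u(r)r^{\alpha }\), \(y(t)={\dot{x}}(t)\) and \({\mathcal {K}}(t)=K({e}^{t})\); this choice is an assumption made here, picked because it is consistent with the eigenvalues \(\pm \alpha \) and with the identity \(y={\dot{x}}\) used in this section.

```latex
% Assumed change of variables (standard Fowler transformation):
%   r = e^{t},  \alpha = (n-2)/2,  x(t) = u(r) r^{\alpha},  y(t) = \dot{x}(t),  \mathcal{K}(t) = K(e^{t}).
% The radial equation (u' r^{n-1})' + K(r) r^{n-1} u^{\frac{n+2}{n-2}} = 0 is equivalent to
%   u'' + \frac{n-1}{r} u' = -K(r) u^{\frac{n+2}{n-2}}.
\begin{aligned}
\dot{x} &= r\,\frac{d}{dr}\bigl(u\, r^{\alpha}\bigr) = u'\, r^{\alpha+1} + \alpha\, u\, r^{\alpha},\\
\ddot{x} &= \Bigl(u'' + \frac{n-1}{r}\, u'\Bigr) r^{\alpha+2} + \alpha^{2}\, u\, r^{\alpha}
          \qquad (\text{using } 2\alpha+1 = n-1)\\
         &= -K(r)\, u^{\frac{n+2}{n-2}}\, r^{\alpha+2} + \alpha^{2} x
          = \alpha^{2} x - \mathcal{K}(t)\, x^{q-1},
\end{aligned}
% where the last step uses (q-1)\alpha = \frac{n+2}{n-2}\cdot\frac{n-2}{2} = \alpha + 2.
% Hence \dot{x} = y, \ \dot{y} = \alpha^{2} x - \mathcal{K}(t)\, x^{q-1},
% and the linear part at the origin has eigenvalues \pm\alpha.
```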

We collect here some notations that will be in force throughout the whole paper. Let \({\varvec{Q}}\in {{\mathbb {R}}}^2\), we denote by \(\varvec{\phi }(t;\tau ,{\varvec{Q}})\) the trajectory of (2.2) which is in \({\varvec{Q}}\) at \(t=\tau \). We denote by u(r; d) the regular solution of (1.3) such that \(u(0;d)=d>0\) and \(u'(0;d)=0\), and by \(\varvec{\varvec{\phi }}(t,d)=(x(t,d),y(t,d))\) the corresponding trajectory of (2.2). The origin is a critical point for (2.2) and the linearization of (2.2) at the origin has constant positive and negative eigenvalues, i.e. \( \pm \alpha \), so the origin is a saddle. Moreover, from [12, §13.4], we see that the non-autonomous system (2.2) admits unstable and stable leaves, i.e.

Namely, \(W^u(\tau )\) and \(W^s(\tau )\) are \(C^1\) immersed one-dimensional manifolds.

Another way to construct \( W^u(\tau )\) is to add the extra variable \(z= {e}^{\varpi t}\), where \(\varpi >0\), so that the system (2.2) can be rewritten as the equivalent autonomous three-dimensional system

It can be shown that if (2.4) is smooth, it admits a two-dimensional unstable manifold \(\varvec{W^u}\) and that

This allows us to define \(W^u(-\infty )=\{\varvec{Q}\in {{\mathbb {R}}}^2 \mid (\varvec{Q}, 0) \in \varvec{W^u} \}\), which is the unstable manifold of the frozen autonomous system where \({\mathcal {K}}\equiv {\mathcal {K}}(0)\).

Since the flow of (2.2) is ruled by its linear part close to the origin, according to [22, 25] and [12, §13.4], we easily deduce the following properties of the unstable and stable leaves, respectively.

Remark 2.2

Assume that \({\mathcal {K}}\in C^1\) and it is bounded. Then,

Moreover, \(W^u(\tau )\) is tangent in the origin to the line \(y=\alpha x\), for any \(\tau \in {{\mathbb {R}}}\), and it depends smoothly on \(\tau \), i.e., let L be a segment which intersects \(W^u(\tau _0)\) transversally in a point \({\varvec{Q}}(\tau _0)\), then there is a neighbourhood I of \(\tau _0\) such that \(W^u(\tau )\) intersects L in a point \({\varvec{Q}}(\tau )\) for any \(\tau \in I\), and \({\varvec{Q}}(\tau )\in C^1\).

Furthermore, if (2.4) is smooth for \(z=0\) too (e.g. if \(\varvec{(\mathrm{K}_2)}\) holds and \(0<\varpi < l)\), the smoothness property of \(W^u(\tau )\) is extended to \(\tau _0=-\infty \), i.e. to the system (2.4) restricted to \(z=0\), see [23, §2.2] for more details.

Similarly, fast decay solutions correspond to trajectories of \(W^s(\tau )\).

Remark 2.3

Assume that \({\mathcal {K}}\in C^1\) and it is bounded. Let \(\varvec{\phi }(t;\tau _0,{\varvec{Q}})\) be the trajectory of (2.2) corresponding to the solution u of (1.3). Then,

Moreover, \(W^s(\tau )\) is tangent in the origin to the line \(y=-\alpha x\), for any \(\tau \in {{\mathbb {R}}}\), and it depends smoothly on \(\tau \).

We stress that the manifold \(W^u(\tau )\) (as well as \(W^s(\tau )\)) is divided by the origin in two connected components: one which leaves the origin and enters \(x>0\), and the other that enters \(x<0\). Since we are just interested in positive solutions, abusing the notation, we let \(W^u(\tau )\) and \(W^s(\tau )\) stand for the branches of the leaves which depart from the origin and enter in \(x>0\).

Remark 2.4

Assume that \({\mathcal {K}}\in C^1\) and it is bounded. Fix \(\tau \in {{\mathbb {R}}}\), and let \({\varvec{Q}}(d) \in W^u(\tau )\) be such that \(\varvec{\phi }(\tau ,d)={\varvec{Q}}(d)\), for every \(d\ge 0\). Then, the function \({\varvec{Q}}:[0,+\infty )\rightarrow W^u(\tau )\) is a smooth (bijective) parametrization of \(W^u(\tau )\) and \({\varvec{Q}}(0)=(0,0)\).

We refer to [13, Lemma 2.10] for the proof of Remark 2.4. Let us also notice that, in a similar way, the stable leaf \(W^s(\tau )\) can be parametrized directly by \(c:={\lim _{r \rightarrow \infty }}u(r)r^{n-2}\).

Remark 2.5

Any regular solution u(r; d) is decreasing until its first zero; so Ground States are monotone decreasing.

For an easy proof of the remark, we refer to [32, Lemma 3.7].

Assumption \(\varvec{(\mathrm{K}_0)}\) guarantees that \({{{\mathcal {K}}}}\) is even, i.e. \({{{\mathcal {K}}}}(-t)={{{\mathcal {K}}}}(t)\) for any \(t\in {\mathbb {R}}\). Hence, if \(\varvec{\phi }(t,d)=(x(t),y(t))\) solves (2.2) and \(y(0)=0\), then x(t) is even and y(t) is odd. Thus, we get the following.

Remark 2.6

Assume \(\varvec{(\mathrm{K}_0)}\). If \(\varvec{\phi }(t,d)\) is such that \(x(t,d)>0\) for \(t \le 0\), and \(y(0,d)=0\), then u(r; d) is a monotone decreasing G.S. with fast decay.

To illustrate the main ideas of the proofs of Theorem 1.2 and Theorem A, we enumerate some results which will be proved in Sect. 4.

Taking into account that the origin is a critical point and that \(W^u(\tau )\) is tangent in the origin to the line \(y=\alpha x\), we easily deduce that x(t, d) is strictly increasing and, consequently, y(t, d) is strictly positive for t in a neighbourhood of \(-\infty \), for any \(d>0\).

For any \(\ell \in {\mathbb {N}}\), \(\ell \ge 1\) we define the sets

We denote by \(T_1(d)\) and \(T_\ell (d)\), respectively, the first and the \(\ell \)th zero of \(y(t,d)= {\dot{x}}(t,d)\), i.e.

Remark 2.7

Assume (1.5) and \(0<\varepsilon \le \varepsilon _1\). Then, there is \(D_1>0\) such that \(R_1(d)>1\) for every \(d\in (0,D_1)\).

This Remark is proved in Section 4 as an easy consequence of Remark 4.3.

In Sect. 4 (Lemma 4.4) and in the Appendix, we reprove for completeness the following result, which is a consequence of [9, 10].

Proposition 2.8

[10, Theorem 1.6], [9, Lemma 2.2] Assume \(\varvec{(\mathrm{K}_1)}\) and \(\varvec{(\mathrm{K}_2)}\). Then, for any fixed \(\ell \in {\mathbb {N}}\), \(\ell \ge 1\), and for any \(\rho >0\) there is \({d}_\ell \in I_\ell \) such that \(R_\ell (d_{\ell }) <\rho \).

Under very mild conditions, we can show the continuity of \(R_1(d)\).

Proposition 2.9

Assume (1.5) with \(0<\varepsilon \le \varepsilon _1\), then \(I_1=(0, +\infty )\) and \(R_1(d)\) is continuous in \(I_1\). Furthermore, \(\lim _{d \rightarrow +\infty } R_1(d)=0\) and \(\lim _{d \rightarrow 0} R_1(d)=+\infty \).

Proposition 2.9 is restated in a dynamical context and proved in Sect. 4 as Lemma 4.9. From Proposition 2.9, we find \(d_1^*>0\) such that \(R_1(d_1^*)=1\); hence, if \(\varvec{(\mathrm{K}_0)}\) holds, \(\varvec{\phi }(t,d_1^*)\) is a homoclinic trajectory for (2.2) such that x(t, d) is positive and increasing for \(t \le 0\) and positive and decreasing for \(t \ge 0\). Then, Theorem 1.2 immediately follows from Remark 2.6.

To prove the continuity of \(R_j(d)\) for \(j \ge 2\), we need to require \(\varvec{(\mathrm{K}_1)}\) and to develop an articulated barrier argument which guarantees that the flow \(\varvec{\phi }(t,d)\) intersects the x-axis transversally.

Proposition 2.10

Assume \(\varvec{(\mathrm{K}_1)}\) and (1.5) with \(0<\varepsilon \le \varepsilon _\ell \), where \(\varepsilon _\ell \) is the computable constant given by Lemma 3.7 below. If \(R_{j}(d) \le 1\), then \(R_j\) is continuous for any \(j=1, \ldots , \ell \).

Let us sketch the proof of Theorem A; see Sect. 4 for a full-fledged argument. Fix \(\ell \in {\mathbb {N}}\), assume \(\varvec{(\mathrm{K}_0)}\)–\(\varvec{(\mathrm{K}_1)}\)–\(\varvec{(\mathrm{K}_2)}\) and (1.5) with \(0<\varepsilon <\varepsilon _\ell \). According to Proposition 2.8, Remark 2.7 and Proposition 2.10, for any \(j=1, \ldots , \ell \) there is at least one value \(d_j^* \in I_j\) such that \(R_j(d_j^*)=1\). Hence, from Remark 2.6 we immediately infer that \(\varvec{\phi }(t,d_j^*)\) is a homoclinic trajectory for (2.2) such that \(x(t,d_j^*)\) is positive for any \(t \in {{\mathbb {R}}}\) and \(y(t,d_j^*)\) has exactly \((2j-1)\) zeros. This completes the proof of Theorem A.

We emphasize that, in this paper as well as in [9], the simplicity of the zeros of y(t, d) is crucial to obtain the continuity of the function \(R_{j}\), i.e. Proposition 2.10. The contribution here with respect to [9] lies in the fact that the perturbative result in [9] gives no information on the actual size of the values \(\varepsilon _j\) and no clue on how to compute them.

3 Some geometrical constructions: meaning of \(\varvec{\varepsilon _1}\) and definition of \(\varvec{\varepsilon _\ell }\)

Define

If we evaluate \({\mathcal {H}}\) along a solution (x(t), y(t)) of (2.2), we obtain the associated Pohozaev type energy \(\mathcal H(x(t),y(t),t)\), whose derivative with respect to t satisfies

We immediately observe that if \(\varvec{(\mathrm{K}_1)}\) holds, then the function \({\mathcal {H}}\) is decreasing along the trajectories defined on negative values of t.

Moreover, if u(r; d) is a regular solution, then the corresponding trajectory \(\varvec{\phi }(t,d)\) satisfies \({\lim _{t \rightarrow -\infty }}{\mathcal {H}}(\varvec{\phi }(t,d),t)=0\), since \({\mathcal {H}}(0,0,t) \equiv 0\). So, we easily get the following lemma.

Lemma 3.1

Assume \(\varvec{(\mathrm{K}_1)}\). Then, \({\mathcal {H}}(x(t,d),y(t,d),t)<0\) for any \(t \le 0\) and \(d>0\).
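The sign information in Lemma 3.1 comes from a direct differentiation of the energy. The following sketch assumes that the Pohozaev type energy has the form written in the first comment line; this form is an assumption made here, chosen to agree with the relation \(H_0=H_\varepsilon -\frac{\varepsilon }{q}x^q\) used later in this section.

```latex
% Assumed form of the energy and of the system:
%   \mathcal{H}(x,y,t) = \frac{y^{2}}{2} - \frac{\alpha^{2} x^{2}}{2} + \mathcal{K}(t)\,\frac{x^{q}}{q},
%   \dot{x} = y, \qquad \dot{y} = \alpha^{2} x - \mathcal{K}(t)\, x^{q-1}.
\begin{aligned}
\frac{d}{dt}\,\mathcal{H}(x(t),y(t),t)
 &= y\,\dot{y} - \alpha^{2} x\,\dot{x} + \mathcal{K}(t)\, x^{q-1}\,\dot{x}
    + \dot{\mathcal{K}}(t)\,\frac{x^{q}}{q}\\
 &= y\bigl(\alpha^{2} x - \mathcal{K}(t)\, x^{q-1}\bigr) - \alpha^{2} x\, y
    + \mathcal{K}(t)\, x^{q-1}\, y + \dot{\mathcal{K}}(t)\,\frac{x^{q}}{q}
  = \dot{\mathcal{K}}(t)\,\frac{x^{q}}{q}.
\end{aligned}
% Under (K_1), \dot{\mathcal{K}}(t) = K'(e^{t})\, e^{t} \le 0 for t < 0, so \mathcal{H} is
% non-increasing along trajectories with x > 0 on t \le 0, starting from the limit value 0 at t \to -\infty.
```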

Let us now consider the frozen autonomous system (2.2) defined by setting \({\mathcal {K}}(t) \equiv 1+c\) for any \(t \in {{\mathbb {R}}}\), for a certain constant \(c \ge 0\), i.e.

We introduce the corresponding energy function

Notice that if (x(t), y(t)) is a solution of (2.2), then

System (3.3) admits a homoclinic orbit

Lemma 3.2

Assume (1.5) and \(\varvec{(\mathrm{K}_1)}\). Then, the trajectory \(\varvec{\phi }(t,d)\) of system (2.2) belongs to the region enclosed by \(\Gamma _0\) for every \(t\le 0\) and \(d>0\). In particular, \(x(t,d)>0\) for any \(t \le 0\).

Proof

Assumption (1.5) ensures that \({\mathcal {K}}(t)\ge 1\) for every \(t\le 0\). Thus, combining definitions (3.1) and (3.4) with Lemma 3.1, we immediately deduce that

\(\square \)
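The confinement described in Lemma 3.2 can be observed numerically. The sketch below is only an illustration under several assumptions that are not taken from the paper: the system is taken in the form \({\dot{x}}=y\), \({\dot{y}}=\alpha ^2x-{\mathcal {K}}(t)x^{q-1}\), the profile \({\mathcal {K}}(t)=1+\varepsilon (1-1/\cosh t)\) is a hypothetical even function with its minimum at \(t=0\), and the trajectory is started at a finite time on the zero level of the energy, which only approximates the unstable leaf. The function name `simulate` and all parameter values are made up for the example.

```python
import math


def simulate(n=4, eps=0.5, delta=0.05, t_start=-12.0, dt=1e-3):
    """Integrate x' = y, y' = alpha^2 x - K(t) x^(q-1) from t_start to 0 with RK4.

    Returns (min of x, max of H_0, value of H at t = 0) along the computed orbit.
    """
    alpha = (n - 2) / 2
    q = 2 * n / (n - 2)

    def K(t):
        # Hypothetical profile: even in t, decreasing for t < 0, values in [1, 1 + eps).
        return 1 + eps * (1 - 1 / math.cosh(t))

    def rhs(t, x, y):
        return y, alpha**2 * x - K(t) * x ** (q - 1)

    def H(t, x, y):
        # Assumed non-autonomous energy.
        return y**2 / 2 - alpha**2 * x**2 / 2 + K(t) * x**q / q

    def H0(x, y):
        # Energy of the frozen system with K = 1; its zero level is Gamma_0.
        return y**2 / 2 - alpha**2 * x**2 / 2 + x**q / q

    # Start on the zero level of H at time t_start (approximation of the unstable leaf).
    x = delta
    y = delta * math.sqrt(alpha**2 - 2 * K(t_start) * delta ** (q - 2) / q)

    steps = round(-t_start / dt)
    min_x, max_h0 = x, H0(x, y)
    for i in range(steps):
        t = t_start + i * dt
        k1 = rhs(t, x, y)
        k2 = rhs(t + dt / 2, x + dt / 2 * k1[0], y + dt / 2 * k1[1])
        k3 = rhs(t + dt / 2, x + dt / 2 * k2[0], y + dt / 2 * k2[1])
        k4 = rhs(t + dt, x + dt * k3[0], y + dt * k3[1])
        x += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        min_x = min(min_x, x)
        max_h0 = max(max_h0, H0(x, y))
    return min_x, max_h0, H(0.0, x, y)


if __name__ == "__main__":
    min_x, max_h0, h_end = simulate()
    print("min x      :", min_x)
    print("max H_0    :", max_h0)
    print("H at t = 0 :", h_end)
```

With the default parameters one expects the computed orbit to keep \(x>0\) and \(H_0<0\) up to \(t=0\), which is the numerical counterpart of staying inside the region enclosed by \(\Gamma _0\).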

Remark 3.3

The homoclinic orbit \(\Gamma _{c_{1}}\) belongs to the region enclosed by \(\Gamma _{c_{2}}\), whenever \(c_1>c_2\).

System (3.3) admits a unique critical point \(\varvec{P^*}(c)=(P^*_x(c),0)\) such that \(P^*_x(c)>0\), i.e.

Remark 3.4

Set \(G_c^{\min }:=G_c(P_x^*(c))\), the minimum of \(G_c\) in the interval \([0,+\infty )\). Fix \(g\in (G_c^{\min },0)\); then the equation \(G_c(x)=g\) admits exactly two positive solutions \(x_{1,c}(g)\) and \(x_{2,c}(g)\) such that \(0<x_{1,c}(g)<P^*_x(c)<x_{2,c}(g)\). We denote by \(A_1(c)\) the unique positive solution of the equation \(G_c(x)=0\), i.e.

Remark 3.5

The functions \(x_{1,c}(g)\) and \(x_{2,c}(g)\) are, respectively, decreasing and increasing functions with respect to the energy \(g\in (G_c^{\min },0]\). In particular, \(\varvec{A_1}(c)=(A_1(c),0)\) is the right extremal of \(\Gamma _c\).

We set \(\varepsilon _1={\frac{q-2}{2}}=\frac{2}{n-2}=\frac{1}{\alpha }\), so that, from (3.7) and (3.8), we find

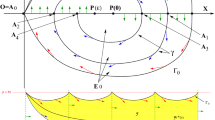

We are now going to define a spiral-like path \(\varvec{\gamma }\) inside the region bounded by \(\Gamma _0\), cf. Fig. 1. The path \(\varvec{\gamma }\) will rotate several times around the points \(\varvec{P^*}(\varepsilon )\) and \(\varvec{P^*}(0)\) provided that \(\varepsilon \) is sufficiently small, as described in Lemma 3.7 below. Such a path will allow us to locate the solutions of the non-autonomous system (2.2) satisfying \((x(t),y(t))\rightarrow (0,0)\) as \(t\rightarrow -\infty \), cf. Fig. 2 and Lemma 4.6 below.

We emphasize that the spiral-like path \(\varvec{\gamma }\) depends on \(\varepsilon \), but we leave this dependence unsaid for simplicity. The path \(\varvec{\gamma }\) is made up by the level curves of \(H_\varepsilon \) in the half-plane \(\{y\ge 0\}\) and by the level curves of \(H_0\) in the half-plane \(\{y\le 0\}\). The continuity of the path is guaranteed by the proper choice of the levels.

Let us introduce the curve

joining the origin to the point \(\varvec{A_1}(\varepsilon )=(A_1(\varepsilon ),0)\). We set

Then, for any \(0<\varepsilon \le \varepsilon _1\) we define

and \(\varvec{A_2}=(A_2,0)\) as the left extremal of \({\mathcal {A}}_2\), i.e. \(A_2=x_{1,0}({\mathcal {H}}_1)\).

Remark 3.6

For any \(0\le \varepsilon \le \varepsilon _1\), the functions \(A_1=A_1(\varepsilon )\) and \({\mathcal {H}}_1={\mathcal {H}}_1(\varepsilon )\) are both continuous and decreasing, while \(A_2=A_2(\varepsilon )\) is continuous and increasing. Furthermore, \({\mathcal {A}}_2\) belongs to the region enclosed by \(\Gamma _{\varepsilon }\).

Proof

The properties of \(A_1(\varepsilon )\) and \({\mathcal {H}}_1(\varepsilon )\) follow from (3.8) and (3.11). Since \({\mathcal {H}}_1(\varepsilon )\) is decreasing, from Remark 3.5 we find that \(A_2(\varepsilon )=x_{1,0}({\mathcal {H}}_1(\varepsilon ))\) is increasing. \(\square \)

Since \(P_x^*(\varepsilon )\) is decreasing, we deduce that \(P_x^*- A_2\) is a continuous decreasing function satisfying

which guarantees the existence of \(\varepsilon _2\in (0,\varepsilon _1)\) such that

(We will show in Sect. 5 how to compute \(\varepsilon _2\)). Now, assume \(0<\varepsilon <\varepsilon _2\), so that \(A_2 < P^*_x(\varepsilon )\): taking into account (3.11), we set

We define

and \(\varvec{A_3}=(A_3,0)\) as the right extremal of \({\mathcal {A}}_3\). In particular, \(A_3=x_{2,\varepsilon }({\mathcal {H}}_2)\). Combining Remarks 3.5 and 3.6, we deduce that \({\mathcal {H}}_2(\varepsilon )\) and, consequently, \(A_3(\varepsilon )\) are both continuous and decreasing for \(\varepsilon \in (0,\varepsilon _2]\). Moreover, from (3.13) and (3.9), we deduce that \(A_3(\varepsilon _2)=P^*_x(\varepsilon _2)<P^*_x(0)\) and \(\lim _{\varepsilon \rightarrow 0} A_3(\varepsilon )=x_{2,0}(0)=A_1(0)>P^*_x(0)\), which ensures the existence of \(\varepsilon _3\in (0,\varepsilon _2)\) such that

For \(\varepsilon <\varepsilon _3\) we have \(A_3>P^*_x(0)\), and we can construct the set

where \({\mathcal {H}}_3:=H_0(\varvec{A_3})=H_\varepsilon (\varvec{A_3}) -\frac{\varepsilon }{q} A_3^q= {\mathcal {H}}_2 -\frac{\varepsilon }{q} A_3^q= -\frac{\varepsilon }{q}(A_1^q-A_2^q+A_3^q)<0\). Finally, we locate the point \(\varvec{A_4}=(A_4,0)\) as the left extremal of \({\mathcal {A}}_4\), so that \(A_4= x_{1,0}({\mathcal {H}}_3)\). Iterating such a procedure, we have the following lemma which summarizes the situation, see Fig. 1.

Lemma 3.7

There exists a decreasing sequence of positive values \((\varepsilon _\ell )_{\ell \in {{\mathbb {N}}}}\) with the following property: for every \(\varepsilon <\varepsilon _\ell \), we can construct the sets \(\mathcal A_1,\ldots ,{\mathcal {A}}_\ell \) as follows:

where \({\mathcal {H}}_0=0\),

with \(A_{2i+1}=x_{2,\varepsilon }({\mathcal {H}}_{2i})\), \(A_{2i+2}=x_{1,0}({\mathcal {H}}_{2i+1})\). Moreover,

We can glue together the sets \({\mathcal {A}}_1,\ldots ,{\mathcal {A}}_\ell \) and draw a spiral-like path \(\varvec{\gamma }\) which rotates around the points \(\varvec{P^*}(\varepsilon )\) and \(\varvec{P^*}(0)\).

Finally, if we choose a critical value \(\varepsilon =\varepsilon _\ell \), we can construct the sets \({\mathcal {A}}_1,\ldots ,{\mathcal {A}}_\ell \) using the definitions above, with the only difference that \(A_\ell =P^*_x(0)\) if \(\ell \) is odd and \(A_\ell =P^*_x(\varepsilon _\ell )\) if \(\ell \) is even.

The function \(A_j=A_j(\varepsilon )\) is continuous and decreasing if j is odd, while it is increasing when j is even; \({\mathcal {H}}_j={\mathcal {H}}_j(\varepsilon )\) is continuous and decreasing, and \({\mathcal {H}}_{j+2}<{\mathcal {H}}_{j}\).

Remark 3.8

Note that \({\mathcal {H}}_{2j+1}=H_0(\varvec{A_{2j+1}})\) and \({\mathcal {H}}_{2j}=H_\varepsilon (\varvec{A_{2j+1}})\), which implies that \(A_{2i+1}=x_{2,0}({\mathcal {H}}_{2i+1})\) and \(A_{2i+2}=x_{1,\varepsilon }({\mathcal {H}}_{2i+2})\). Finally, observe that \(\varvec{\gamma }\subseteq \Gamma _\varepsilon \subset \Gamma _0\).

The computation of the values \(\varepsilon _\ell \) is postponed to Sect. 5.
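The recursive construction of Lemma 3.7 also lends itself to a direct numerical evaluation. The sketch below is not the closed-form computation of Sect. 5: it assumes \(G_c(x)=-\frac{\alpha ^2x^2}{2}+(1+c)\frac{x^q}{q}\), which gives \(P^*_x(c)=\bigl (\frac{\alpha ^2}{1+c}\bigr )^{1/(q-2)}\) and \(A_1(c)=\bigl (\frac{q\alpha ^2}{2(1+c)}\bigr )^{1/(q-2)}\), and it locates each \(\varepsilon _\ell \) by bisection on the critical condition \(A_\ell =P^*_x(0)\) (odd \(\ell \)) or \(A_\ell =P^*_x(\varepsilon )\) (even \(\ell \)). All function names are hypothetical.

```python
import math


def G(x, c, alpha, q):
    """Assumed potential G_c(x) = -alpha^2 x^2 / 2 + (1 + c) x^q / q."""
    return -alpha**2 * x**2 / 2 + (1 + c) * x**q / q


def p_star(c, alpha, q):
    """Positive critical point of G_c."""
    return (alpha**2 / (1 + c)) ** (1 / (q - 2))


def a_one(c, alpha, q):
    """Positive zero of G_c, i.e. the right extremal of Gamma_c."""
    return (q * alpha**2 / (2 * (1 + c))) ** (1 / (q - 2))


def level_root(g, c, alpha, q, branch):
    """Solve G_c(x) = g by bisection.

    branch=1 searches [0, P*(c)], where G_c decreases (the root x_{1,c}(g));
    branch=2 searches [P*(c), A_1(c)], where G_c increases (the root x_{2,c}(g)).
    """
    p = p_star(c, alpha, q)
    lo, hi = (0.0, p) if branch == 1 else (p, a_one(c, alpha, q))
    for _ in range(80):
        mid = (lo + hi) / 2
        above = G(mid, c, alpha, q) > g
        # Decreasing branch: G(mid) > g means the root lies to the right; increasing branch: to the left.
        if above == (branch == 1):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def a_point(j, eps, alpha, q):
    """Abscissa A_j(eps) of the j-th turning point of the spiral-like path."""
    a = a_one(eps, alpha, q)  # A_1
    for i in range(2, j + 1):
        if i % 2 == 0:
            # Even index: follow the level curve of H_0 through A_{i-1} to its left extremal.
            a = level_root(G(a, 0.0, alpha, q), 0.0, alpha, q, branch=1)
        else:
            # Odd index: follow the level curve of H_eps through A_{i-1} to its right extremal.
            a = level_root(G(a, eps, alpha, q), eps, alpha, q, branch=2)
    return a


def epsilon(ell, n):
    """Numerical value of eps_ell in dimension n, by bisection on (0, eps_{ell-1})."""
    alpha, q = (n - 2) / 2, 2 * n / (n - 2)
    eps_prev = (q - 2) / 2  # eps_1 = 2 / (n - 2)
    for j in range(2, ell + 1):
        lo, hi = 0.0, eps_prev
        for _ in range(60):
            mid = (lo + hi) / 2
            a = a_point(j, mid, alpha, q)
            if j % 2 == 0:
                gap = p_star(mid, alpha, q) - a   # positive while A_j < P*(eps)
            else:
                gap = a - p_star(0.0, alpha, q)   # positive while A_j > P*(0)
            if gap > 0:
                lo = mid
            else:
                hi = mid
        eps_prev = (lo + hi) / 2
    return eps_prev


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(n, [round(epsilon(ell, n), 6) for ell in (1, 2, 3)])
```

A convenient sanity check is the case \(n=4\), where Theorem 1.1 states \(\varepsilon _\ell =1/\ell \): the routine should return values close to this closed form. The bisection relies on the monotonicity of \(A_j(\varepsilon )\) stated in Lemma 3.7.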

4 Proofs

In the previous section, we have constructed the path \(\varvec{\gamma }\) gluing together trajectories of the autonomous systems (3.3). Now, we turn to consider the non-autonomous problem (2.2). The first step is to locate the initial branch of the unstable manifold \(W^u(\tau )\): this will be enough to prove Theorem 1.2. Then, to obtain the multiplicity result, Theorem A, we will use the set \(\varvec{\gamma }\) to control the behaviour of the solutions of (2.2) for \(t\le 0\).

Having in mind (3.4) and (3.8), we define

Notice that \(E_1\) is the set enclosed by \(\Gamma _0\) and \(\Gamma _\varepsilon \) in the half-plane \(\{y\ge 0\}\).

We aim to prove the following.

Lemma 4.1

Assume (1.5) with \(0<\varepsilon \le \varepsilon _1\). Then, for any \(\tau \in {{\mathbb {R}}}\) there is \(\varvec{\xi _1}(\tau )=(\xi _1(\tau ),0)\) such that \(\varvec{\xi _1}(\tau )\in [W^u(\tau ) \cap L_1]\) and the connected branch \({\tilde{W}}^u(\tau )\) of the manifold \(W^u(\tau )\) between the origin and \(\varvec{\xi _1}(\tau )\) lies in \(E_1\).

In order to prove Lemma 4.1, we need the following result.

Lemma 4.2

Assume (1.5), then the flow of (2.2) on \({\mathcal {A}}_1\) and \({\mathcal {B}}_1\) points towards the interior of \(E_1\). Assume also \(0<\varepsilon \le \varepsilon _1\), then the flow of (2.2) on \(L_1\) points towards the exterior of \(E_1\) for any \(t \in {{\mathbb {R}}}\). In particular, if \({\varvec{Q}}\in L_1\), then \({\dot{y}}(t;t,{\varvec{Q}})<0\).

Proof

for every solution (x(t), y(t)) of (2.2) with \(x(t)>0\), \(y(t)> 0\). This proves the first assertion of the lemma.

Assume now \(0<\varepsilon \le \varepsilon _1\) and consider a solution (x(t), y(t)) of (2.2) with \((x(t_0),y(t_0))\in L_1\). Thus, from (1.5) and (3.8) we get

which implies

This completes the proof of the lemma. \(\square \)

Proof of Lemma 4.1

We just sketch the proof inspired by Ważewski’s principle; we refer to [16, Theorem 3.3], see also [19, Lemma 3.5], [23, Lemma 6.3] for more details.

Fix \(\tau \in {\mathbb {R}}\). We claim that \(L_1\cap W^u(\tau )\ne \emptyset \). Consider \({\varvec{Q}}\in L_1\setminus W^u(\tau )\). Taking into account Lemma 4.2 and the absence of invariant sets in the interior of \(E_1\), we define \({\mathcal {T}}({\varvec{Q}})\) as the value in \((-\infty ,\tau ]\) such that \(\varvec{\phi }(t;\tau ,{\varvec{Q}}) \in E_1\) for every \(t\in ({\mathcal {T}}({\varvec{Q}}),\tau )\) and \(\varvec{\phi }({\mathcal {T}}({\varvec{Q}});\tau ,{\varvec{Q}}) \in ({\mathcal {A}}_1 \cup {\mathcal {B}}_1)\). Combining (4.2) with the continuity of the flow, it is easy to check that the sets

are open. Furthermore, observe that \((A_1(\varepsilon ),0) \in L_{{\mathcal {A}}}\) and \((A_1(0),0) \in L_{{\mathcal {B}}}\); by a connectedness argument, it follows that there is \(\varvec{\xi _1}(\tau ) \in L_1\), \(\varvec{\xi _1}(\tau ) \not \in L_{{\mathcal {A}}}\cup L_{{\mathcal {B}}}\). Then, according to Lemma 4.2, we easily deduce that \(\varvec{\phi }(t;\tau , \varvec{\xi _1}(\tau )) \in E_1\) for any \(t<\tau \), and \({\lim _{t \rightarrow -\infty }}\varvec{\phi }(t;\tau , \varvec{\xi _1}(\tau ))=(0,0)\). Therefore, \(\varvec{\xi _1}(\tau ) \in W^u(\tau )\), which proves the claim.

Consider a continuous path \(\sigma :[0,1]\rightarrow {\mathbb {R}}^2\) joining \({\mathcal {A}}_1\) and \({\mathcal {B}}_1\) with \(\sigma (0)\in {\mathcal {A}}_1\) and \(\sigma (1)\in {\mathcal {B}}_1\). We could adopt the argument above, with \(L_1\) replaced by \(\sigma \), to prove the existence of \(s\in (0,1)\) such that \(\sigma (s)\in W^u(\tau )\). Then, applying Lemma 4 in [34] we conclude that the set \(W^u(\tau )\) defined as in (2.3) contains a compact connected set \({\tilde{W}}^u(\tau )\) which contains the origin, \(\varvec{\xi _1}(\tau ) \in L_1\) and such that \({\tilde{W}}^u(\tau )\subset E_1\). Furthermore, using the classical results in [12, § 13.4], we see that \({\tilde{W}}^u(\tau )\) and \(W^u(\tau )\) are indeed one-dimensional immersed manifolds, see also [23, Lemma 6.5]. \(\square \)

Combining the previous results with Remark 2.4, we can state the following.

Remark 4.3

Assume (1.5) with \(0< \varepsilon \le \varepsilon _1\), fix \(\tau \in {{\mathbb {R}}}\) and let \(d_*(\tau )>0\) be such that \(\varvec{\phi }(\tau , d_*(\tau ))= \varvec{\xi _1}(\tau )\), then \(\varvec{\phi }(\tau ,d) \in {\tilde{W}}^u(\tau )\) for any \(d \le d_*(\tau )\). In fact, the map \(\varvec{\phi }(\tau ,\cdot ): [0,d_*(\tau )] \rightarrow E_1\) is a smooth parametrization of \({\tilde{W}}^u(\tau )\). Furthermore, by construction, \(\varvec{\phi }(t,d) \in {\tilde{W}}^u(t)\subset E_1\) for any \(t \le \tau \) and any \(0<d \le d_*(\tau )\).

According to definition (2.6), we notice that \(T_1(d_*(\tau ))=\tau \), whence \(T_1(d)>0\) for any \(d<D_1=d_*(0)\). Thus, Remark 2.7 immediately follows.

We are now ready to prove Theorem 1.2.

Proof of Theorem 1.2

Setting \(\tau =0\) and applying Lemma 4.1 and Remark 4.3, we see that \(\varvec{\phi }(t, d_*(0)) \in E_1\) for any \(t<0\). In addition, \(x(t, d_*(0))>0\) and \(y(t, d_*(0))>0\) for \(t<0\), and \(y(0, d_*(0))=0\). Thanks to assumption \(\varvec{(\mathrm{K}_0)}\), we can apply Remark 2.6 to deduce that \(u(r;d_*(0))\) is a G.S. with fast decay and \(x(t, d_*(0))\) has a unique critical point which is a positive maximum. \(\square \)

Lemma 4.4

[10, Theorem 1.6], [9, Lemma 2.2] Assume conditions \(\varvec{(\mathrm{K}_1)}\)–\(\varvec{(\mathrm{K}_2)}\) and fix \(\rho \in (0,1)\). For any positive integer \(\ell \), there is \(d_\ell \) such that \(x(t,d_\ell )>0\) and \(y(t,d_\ell )\) has at least \(\ell \) non-degenerate zeroes for \(t< \ln (\rho )\).

As a consequence, \(T_\ell (d_{\ell })< \ln (\rho )<0\).

Notice that the last assertion gives Proposition 2.8. The proof of Lemma 4.4 is far from trivial, and it is reproved in the Appendix for completeness, following the original idea of [10, Theorem 1.6]. In fact, from Proposition 2.8 we easily get the existence of a trajectory of (2.2) having the whole set \(\Gamma _{\varepsilon }\) as \(\alpha \)-limit set. We emphasize that if \(l \ge \frac{n-2}{2}\) in \(\varvec{(\mathrm{K}_2)}\) such a trajectory does not exist, see [10, Theorem 1.1].

We are now interested in showing the continuity of the maps \(T_\ell (d)\) and \(R_\ell (d)\), defined in (2.6), since this property is crucial to prove Theorem A, as observed in the final part of Sect. 2.

Lemma 4.5

Let \(D>0\) be such that y(t, D) has at least \(\ell \) zeroes for \(t \in {{\mathbb {R}}}\), so that \(T_\ell (D)\) is well defined. If \({\dot{y}}(T_\ell (D)) \ne 0\), then the functions \(T_\ell (d)\) and \(R_\ell (d)\), introduced in (2.6), are continuous at \(d=D\).

Proof

Fix \(\tau <T_\ell (D)\). According to Remark 2.4, we set \({\varvec{Q}}(D)=\varvec{\phi }(\tau ,D)\in W^u(\tau )\). Notice that \(y(T_\ell (D);\tau , {\varvec{Q}}(D))=0\) and \({\dot{y}}(T_\ell (D); \tau , {\varvec{Q}}(D)) \ne 0\). We assume that \({\dot{y}}(T_\ell (D); \tau , {\varvec{Q}}(D))>0\) (i.e. \(\ell \) even); the case \({\dot{y}}(T_\ell (D); \tau , {\varvec{Q}}(D))<0\) (\(\ell \) odd) is analogous. Then, for every small \(\Delta t\in (0,T_\ell (D)-\tau )\) we can find \(c>0\) such that

and \({\dot{y}}(t;\tau ,{\varvec{Q}}(D))>0\) for any \(t \in [T_\ell (D)-\Delta t, T_\ell (D)+\Delta t]\). Using continuous dependence on initial data, we can choose \(\sigma >0\) such that \(\Vert \varvec{\phi }(t;\tau ,{\varvec{Q}}(D))-\varvec{\phi }(t;\tau ,{\varvec{Q}})\Vert <c\), whenever \(\Vert {\varvec{Q}}-{\varvec{Q}}(D)\Vert <\sigma \) for every \(t\in [\tau ,T_\ell (D)+\Delta t]\). From Remark 2.4, for any \(\sigma >0\) we can find \(\delta >0\) with the following property: if \(|d-D|<\delta \), then \(\Vert {\varvec{Q}}(d)-{\varvec{Q}}(D)\Vert <\sigma \), where \({\varvec{Q}}(d):=\varvec{\phi }(\tau ,d)\in W^u(\tau )\). Summing up, if \(|d-D|<\delta \) we get

and we can assume \({\dot{y}}(t;\tau ,{\varvec{Q}}(d))>0\) for any \(t \in [T_\ell (D)-\Delta t, T_\ell (D)+\Delta t]\), too. Hence, \(T_\ell (d)\) is uniquely defined and \(|T_\ell (D)-T_\ell (d)|<\Delta t\) holds. This concludes the proof. \(\square \)

We emphasize that the transversality assumption in Lemma 4.5 is not just a technical condition: the continuity of \(T_\ell (d)\) and \(R_\ell (d)\) might indeed be lost if this condition is removed.

In order to prove the continuity of \(T_j(d)\), we introduce some useful notation.

Let \(\varvec{B_0}=(B_0,0)= \Gamma _0 \cap \{(x,0) \mid x>0 \}\), i.e. \(\varvec{B_0}=\varvec{A_1}(0)\). Assume \(0<\varepsilon <\varepsilon _\ell \); from Lemma 3.7 we can construct the sets \({\mathcal {A}}_j\) for \(j\in \{1,\ldots ,\ell \}\). Let \(F_j\) be the bounded set enclosed by \({\mathcal {A}}_j\) and the line \(y=0\) (see Fig. 2), i.e.

Define

Following the procedure developed in the proof of Lemma 4.2, from (3.5), (3.7) and (3.17), we easily get the following result which is crucial to prove the continuity of \(T_\ell (d)\) in its whole domain.

Lemma 4.6

Assume (1.5) with \(0<\varepsilon \le \varepsilon _\ell \), where \(\varepsilon _\ell \) is the computable constant given by Lemma 3.7; then the flow of (2.2) on \({\mathcal {A}}_j\) points towards the exterior of \(F_j\) for every \(j\in \{1,\ldots ,\ell \}\). Moreover, the flow of (2.2) on \(L_j\) points towards \(y>0\) if j is even, respectively, towards \(y<0\) if j is odd.

Now we are in a position to obtain the continuity of \(T_j(d)\).

Lemma 4.7

Assume \(\varvec{(\mathrm{K}_1)}\) and (1.5) with \(0<\varepsilon \le \varepsilon _\ell \). Then, the functions \(T_j(d)\), \(j=1,\ldots ,\ell \), are continuous in their domains, provided that \(T_j(d) \le 0\).

Proof

Let us fix \(j\in \{1,\ldots ,\ell \}\). Let \(d >0\) be such that \(T_j(d)\) is well defined and \(T_j(d)\le 0\) holds. Then, the solution \(\varvec{\phi }(\cdot ,d)\) intersects the x-axis at \(T_j(d)\) in the point \(\varvec{\xi _j}(T_j(d))=\varvec{\phi }(T_j(d),d)\). By Lemma 4.6, the solution \(\varvec{\phi }(\cdot ,d)\) is driven by the spiral \(\varvec{\gamma }\) around the points \(\varvec{P^*}(\varepsilon )\) and \(\varvec{P^*}(0)\), remaining inside the region enclosed by \(\Gamma _0\) for \(t\in (-\infty ,0)\) (cf. Lemma 3.2) and, finally, \(\varvec{\xi _j}(T_j(d))\in L_j\) with \(\dot{y} (T_j(d),d)\ne 0\). Then, by Lemma 4.5 the continuity of \(T_j\) at d follows. \(\square \)

Remark 4.8

Notice that Proposition 2.10 follows from Lemma 4.7.

We state the following result, which is a translation of Proposition 2.9.

Lemma 4.9

Assume (1.5) with \(0<\varepsilon \le \varepsilon _1\). Then, \(T_1(d)\) is continuous for any \(d>0\) and

Proof

Combining Lemmas 4.2 and 4.5 with the absence of invariant sets in the interior of \(E_1\), we see that the function \(T_1(d)\) is well defined for any \(d>0\) and it is continuous. From Remark 4.3, we know that for any \(\tau \in {{\mathbb {R}}}\) there is \(d_*(\tau )>0\) such that \(T_1(d_*(\tau ))=\tau \), hence \(T_1(\cdot ): (0,+\infty ) \rightarrow {{\mathbb {R}}}\) is surjective. According to Remark 2.4, we can parametrize \({\tilde{W}}^u(\tau )\) by \(\varvec{{\tilde{Q}}^u}(d)\) so that \(\varvec{{\tilde{Q}}^u}(0)=(0,0)\) and \(\varvec{{\tilde{Q}}^u}(d_*(\tau ))= \varvec{\xi _1}(\tau )\). Hence, from Remark 4.3 it easily follows that \(T_1(d) \rightarrow +\infty \) as \(d \rightarrow 0\).

Observe now that \(d_*(\tau ) \rightarrow +\infty \) as \(\tau \rightarrow -\infty \). In fact, by Remark 2.5, \(u'(r,d_*(\tau ))<0\) for any \(0<r<{e}^{\tau }\), which, combined with (2.1) and (3.9), leads to

Recalling that \(T_1(d_*(\tau ))=\tau \), we see that there is \(d_k \nearrow +\infty \) such that \(T_1(d_k) \rightarrow -\infty \). This is what is actually needed for the argument of this paper. However, we now show that, in fact, \(T_1(d) \rightarrow -\infty \) as \(d \rightarrow +\infty \).

Assume by contradiction that there are \(M>0\) and \({\tilde{d}}_m \nearrow +\infty \) such that \(T_1({\tilde{d}}_m)>-M\) for any m. Then, for any m we can choose k such that \(d_k \le {\tilde{d}}_m <d_{k+1}\); we can assume without loss of generality that \(T_1(d_k)<-M-1\), \(T_1(d_{k+1})<-M-1\), while \(T_1({\tilde{d}}_m)>-M\). Let us fix \(\tau _k = 1+ \max \{ T_1(d_k) ; T_1(d_{k+1}) \}\) and denote by \(\breve{W}^u(\tau _k)\) the branch of \(W^u(\tau _k)\) between the origin and \(\varvec{{\tilde{Q}}^u}(d_{k+1})\). Following \(\breve{W}^u(\tau _k)\) from the origin towards \(\varvec{{\tilde{Q}}^u}(d_{k+1})\), we meet \(\varvec{{\tilde{Q}}^u}(d_{k})\) and then \(\varvec{{\tilde{Q}}^u}({\tilde{d}}_m)\). Hence, \(\breve{W}^u(\tau _k)\) enters the set \(E_1\) defined in (4.1), it crosses the x positive semi-axis until it gets to \(\varvec{{\tilde{Q}}^u}(d_{k})\) which lies in \(y<0<x\), then it bends and gets back to \(\varvec{{\tilde{Q}}^u}({\tilde{d}}_m) \in E_1\), then it bends again and gets to \(\varvec{{\tilde{Q}}^u}(d_{k+1})\) which lies again in \(y<0<x\). But this is in contradiction with Remark 2.2, since \(\breve{W}^u(\tau _k)\) is \(C^1\) close to the corresponding branch of \(W^u(-\infty )\). In fact, we can find a segment transversal to \(W^u(-\infty )\) which has a tangency point with \(\breve{W}^u(\tau _k)\). \(\square \)
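As a sanity check on these limits, consider the unperturbed model \(K\equiv 1\) with \(\sigma =0\). This is only an illustrative assumption and not the setting of Lemma 4.9; we also assume that the Fowler change of variables reads \(x(t)=u({e}^{t})\,{e}^{\alpha t}\) with \(\alpha =\frac{n-2}{2}\), and that \(T_1(d)\) is the first zero of \(y={\dot{x}}\). In this case the regular solutions are explicit, and one finds

\[
u(r;d)=d\,\bigl(1+a\,r^{2}\bigr)^{-\alpha },\qquad a=\frac{d^{2/\alpha }}{n(n-2)},\qquad \frac{{\dot{x}}(t,d)}{x(t,d)}=\alpha \,\frac{1-a\,{e}^{2t}}{1+a\,{e}^{2t}},
\]

so that \({\dot{x}}\) vanishes only at

\[
T_1(d)=\frac{1}{2}\ln \bigl(n(n-2)\bigr)-\frac{1}{\alpha }\ln d .
\]

In this model case \(T_1\) is continuous and strictly decreasing, with \(T_1(d)\rightarrow +\infty \) as \(d\rightarrow 0\) and \(T_1(d)\rightarrow -\infty \) as \(d\rightarrow +\infty \), which is the same qualitative behaviour obtained in the proof above.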

Now Theorem A easily follows from Lemmas 4.4 and 4.7.

Proof of Theorem A

Let us fix \(\ell \ge 2\), \(0<\varepsilon \le \varepsilon _\ell \) and \( j \in \{1,2, \ldots , \ell \}\). Let us define

Obviously, \({\hat{I}}_j\) is a subset of \(I_j\) defined in (2.5). Since \(T_j(d)\) is continuous when it is negative (cf. Lemma 4.7), it is easy to see that \({\hat{I}}_j\) is open. Furthermore, there is \(a_1>0\) such that \({\hat{I}}_j \subset {\hat{I}}_1\subset (a_1, +\infty )\), cf. (4.3). From Lemma 4.4, we know that \({\hat{I}}_j\ne \emptyset \). Hence, we can find an interval \((a_j, b_j) \subset {\hat{I}}_j\) such that \(a_j \not \in {\hat{I}}_j\), and \(a_j \ge a_1>0\). Notice that \(b_j\) can be \(+\infty \), and, in fact, \(b_1=+\infty \).

We claim that \(T_j(a_j)=0\) for any \(j \in \{1,2, \ldots , \ell \}\). In fact, let us consider a sequence \(d^k \searrow a_j\). Since \(T_j\) is continuous, \(\lim _{k \rightarrow \infty }T_j(d^k) =T_j(a_j) \le 0\). But, if \(T_j(a_j) <0\) then \(a_j \in {\hat{I}}_j\), which is a contradiction. Therefore, \(T_j(a_j)=0\) and the claim is proved.

Then, it follows that \(x(t,a_j)\) is positive, and \(y(t,a_j)\) has exactly j zeroes for \(t \le 0\), so Theorem A follows from Remark 2.6. \(\square \)

5 Computation of \(\varvec{\varepsilon _\ell }\)’s. Proof of Theorem 1.1

In this section, we provide a procedure in order to obtain the values \(\varepsilon _\ell \) presented in Theorem 1.1. Let us recall that the explicit formula for \(\varepsilon _1\), cf. (1.8) and (1.11), has been already deduced from Eq. (3.9).

We begin by giving an algorithm which allows us to compute explicitly the values of \(\varepsilon _{\ell }\) for Eq. (1.9) for any \(\ell \) and any dimension n. In particular, when \(\sigma =0\), i.e. (1.3) is considered, we obtain the table (1.7). However, these values are not expressed by closed formulas.

Then, we obtain an explicit formula for \(\varepsilon _2\) in Proposition 5.3, while \(\varepsilon _3\) is given as a solution of an algebraic equation in Proposition 5.4. Finally, in Proposition 5.5 we prove the surprisingly simple formula for the \(n=4\) and \(\sigma =0\) case: \(\varepsilon _\ell =1/\ell \).

As illustrated in Sect. 3, in order to control the behaviour of the solutions of system (2.2) converging to the origin as \(t\rightarrow -\infty \), we need to construct a spiral-like path \(\varvec{\gamma }\). This curve is built by gluing together branches of different level curves of the energy functions \(H_\varepsilon \) and \(H_0\) introduced in (3.4).

The first branch of \(\varvec{\gamma }\) is defined when \(0<\varepsilon \le \varepsilon _1\), and is made up of the 0-level curve \({\mathcal {A}}_1\) of \(H_\varepsilon \), see (3.10), which connects the origin \(\varvec{O}\) and the point \(\varvec{A_1}(\varepsilon )=(A_1(\varepsilon ),0)\) defined in (3.8); we set \({\mathcal {H}}_1=H_0(\varvec{A_1}(\varepsilon ))\) as in (3.11).

The second branch exists for \(0<\varepsilon \le \varepsilon _2\) (defined just below) and it is made up of \({\mathcal {A}}_2\), which is part of the \({\mathcal {H}}_1\)-level curve of \(H_0\), see (3.12): it connects \(\varvec{A_1}(\varepsilon )\) and \(\varvec{A_2}(\varepsilon )=(A_2(\varepsilon ),0)\). We denote by \(\varepsilon _2>0\) the unique value such that \(A_2(\varepsilon _2)=P^*_x(\varepsilon _2)\), so that \(A_2(\varepsilon )<P^*_x(\varepsilon )\) iff \(0<\varepsilon <\varepsilon _2\), and we set \({\mathcal {H}}_2=H_{\varepsilon }(\varvec{A_2})\). Then, the third branch exists for \(0<\varepsilon \le \varepsilon _3\) and it is made up of \({\mathcal {A}}_3\), which is part of the \({\mathcal {H}}_2\)-level curve of \(H_\varepsilon \), see (3.14): it connects \(\varvec{A_2}(\varepsilon )\) and \(\varvec{A_3}(\varepsilon )=(A_3(\varepsilon ),0)\). Then, \(\varepsilon _3>0\) is the unique value such that \(A_3(\varepsilon _3)=P^*_x(0)\), so that \(A_3(\varepsilon )>P^*_x(0)\) iff \(0<\varepsilon <\varepsilon _3\), and we set \({\mathcal {H}}_3=H_0(\varvec{A_3})\).

So the iterative scheme to calculate the extremal x-coordinates \(A_i\) is obtained via Lemma 3.7 and Remark 3.8, by setting \(A_0=0\), and

which, combined with formula (5.5), allows us to determine \(A_{i+1}\) from the previous term \(A_i\), with a recursive procedure.

According to Lemma 3.7 and Remark 3.8, the procedure to draw \(\varvec{\gamma }\) for a certain value \(\varepsilon \) can be summarized by the following algorithm:

The critical value \(\varepsilon _\ell \) is the only value which satisfies the identities

Formula (5.2), combined with the explicit expression (3.7) of \(P_x^*\), allows us to calculate all the values \(\varepsilon _\ell \) with a simple shooting argument. In particular, through a rigorous computer-assisted computation we obtain the table (1.7), which provides approximations from below of the values \(\varepsilon _\ell \).
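The shooting procedure can be sketched numerically as follows. This is a minimal illustration under assumptions that are not restated in this section: we take \(\sigma =0\), \(\alpha =\frac{n-2}{2}\), \(q=\frac{2n}{n-2}\), and we assume that the energy in (3.4) has the form \(H_c(x,y)=\frac{y^2}{2}+G_c(x)\) with \(G_c(x)=-\frac{\alpha ^2}{2}x^2+\frac{1+c}{q}x^q\), so that \(P^*_x(c)=(\alpha ^2/(1+c))^{1/(q-2)}\). The function names are hypothetical, and the code uses plain floating-point bisection: it is not the rigorous computer-assisted computation mentioned above.

```python
def bisect_increasing(f, lo, hi, iters=100):
    """Root of f on [lo, hi], assuming f < 0 left of the root and f >= 0 right of it."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def make_model(n):
    """Assumed normalisation for sigma = 0: alpha = (n-2)/2, q = 2n/(n-2)."""
    alpha = (n - 2) / 2
    q = 2 * n / (n - 2)

    def G(x, c):       # assumed potential part of H_c(x, y) = y^2/2 + G_c(x)
        return -0.5 * alpha**2 * x**2 + (1 + c) * x**q / q

    def p_star(c):     # positive critical point of G_c
        return (alpha**2 / (1 + c)) ** (1 / (q - 2))

    def a_one(c):      # positive zero of G_c
        return (q * alpha**2 / (2 * (1 + c))) ** (1 / (q - 2))

    return G, p_star, a_one, q


def spiral_points(n, eps, ell):
    """Return [A_1, ..., A_ell], alternating level curves of H_eps and H_0."""
    G, p_star, a_one, _ = make_model(n)
    points, level = [], 0.0            # level = H_eps at A_0 = 0
    for i in range(1, ell + 1):
        if i % 2 == 1:
            # odd step: larger root of G_eps(x) = level, on [P*(eps), A_1(eps)]
            a = bisect_increasing(lambda x: G(x, eps) - level,
                                  p_star(eps), a_one(eps))
            level = G(a, 0.0)          # next level is measured with H_0
        else:
            # even step: smaller root of G_0(x) = level, on [0, P*(0)]
            a = bisect_increasing(lambda x: level - G(x, 0.0),
                                  0.0, p_star(0.0))
            level = G(a, eps)          # next level is measured with H_eps
        points.append(a)
    return points


def critical_eps(n, ell_max):
    """Shoot on eps: A_ell = P*(0) for odd ell, A_ell = P*(eps) for even ell."""
    _, p_star, _, q = make_model(n)
    result, upper = [], q              # eps_1 < q under the assumed normalisation
    for ell in range(1, ell_max + 1):
        def margin(eps, ell=ell):      # negative exactly when eps < eps_ell
            a = spiral_points(n, eps, ell)[-1]
            return (p_star(0.0) - a) if ell % 2 == 1 else (a - p_star(eps))
        eps_ell = bisect_increasing(margin, 1e-12, upper)
        result.append(eps_ell)
        upper = eps_ell                # the eps_ell decrease with ell
    return result


if __name__ == "__main__":
    for n in (3, 4, 5, 6):
        print(n, critical_eps(n, 4))
```

Under these assumptions, `critical_eps(4, 4)` returns values close to 1, 1/2, 1/3 and 1/4, in agreement with Proposition 5.5.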

Recalling that we are treating Eq. (1.1) with K as in (1.5), we can observe that the values \(\varepsilon _\ell \) are not so small!

Recall that \(G_c\) is defined in (3.4); furthermore, \(x_{1,c}(g)\) and \(x_{2,c}(g)\) are the non-negative solutions of the equation \(G_c(x)=g\) in x; they are, respectively, decreasing and increasing with respect to g, see Remark 3.5.

Let \(\mathtt {R}_c: [G_c^{\min },0] \rightarrow [0,1]\) be the continuous function defined by

Remark 5.1

The function \(\mathtt {R}_c\) is strictly decreasing.

Let us evaluate \(\varepsilon _2\): the starting point is (3.13), i.e. \(A_2(\varepsilon _2)=P_x^*(\varepsilon _2)\). Then, from (3.7) and (3.8), we get

According to (5.3) and recalling that \(A_1=x_{2,0}({\mathcal {H}}_1)\) and \(A_2=x_{1,0}({\mathcal {H}}_1)\), the previous condition is equivalent to requiring

Definition (5.3) enables us to express \(x_{1,c}\) and \(x_{2,c}\) as functions of \(\mathtt {R}_c\).

Lemma 5.2

Consider \(\mathtt {R}\in (0,1)\) and \(g\in (G_c^{\min },0)\) such that \(\mathtt {R}_c(g)=\mathtt {R}\). Then, we get

Proof

From \(G_c(x_{1,c}(g))=G_c(x_{2,c}(g))=g\), we deduce that

Then, substituting \(x_{1,c}(g)=\mathtt {R}\,x_{2,c}(g)\), we easily complete the proof. \(\square \)
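The computation can be spelled out under the assumption (not restated in this section) that \(G_c(x)=-\frac{\alpha ^2}{2}x^2+\frac{1+c}{q}x^q\), which is the form consistent with the identity \(G_0(A_2)=-\frac{\varepsilon }{q}A_1^q\) used in the proof of Proposition 5.4. Writing \(x_1=x_{1,c}(g)\), \(x_2=x_{2,c}(g)\) and \(x_1=\mathtt {R}\,x_2\), the equality \(G_c(x_1)=G_c(x_2)\) becomes

\[
\frac{\alpha ^2}{2}\,x_2^{2}\,\bigl(1-\mathtt {R}^{2}\bigr)=\frac{1+c}{q}\,x_2^{q}\,\bigl(1-\mathtt {R}^{q}\bigr),
\]

whence, under this assumption,

\[
x_{2,c}(g)=\left[ \frac{q\,\alpha ^2}{2(1+c)}\,\frac{1-\mathtt {R}^{2}}{1-\mathtt {R}^{q}}\right] ^{\frac{1}{q-2}},\qquad x_{1,c}(g)=\mathtt {R}\,x_{2,c}(g).
\]

As a consistency check, letting \(\mathtt {R}\rightarrow 0\) gives the positive zero of \(G_c\), while letting \(\mathtt {R}\rightarrow 1\) gives \(\bigl(\alpha ^2/(1+c)\bigr)^{1/(q-2)}\), i.e. the critical point of \(G_c\).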

We are now in a position to calculate explicitly the critical value \(\varepsilon _2\), proving (1.12) and its restriction (1.8) to the \(\sigma =0\) case.

Proposition 5.3

The critical value \(\varepsilon _2\) for (1.9) is given by the following formula

which equals \(\varepsilon _2= \frac{2}{n} \left[ \left( \frac{n}{n-2}\right) ^{\frac{n-2}{2}}-1\right] ^{-1}\) for (1.3), i.e. when \(\sigma =0\) and \(q=\frac{2n}{n-2}\).

Proof

From (3.8) and Lemma 5.2 with \(\mathtt {R}=\Lambda \) and \(c=0\), we obtain

whence

From (5.4) and (5.7), since \(\Lambda ^{q-2}= \frac{2}{q}\), we get

\(\square \)
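For a quick numerical feel of the closed formula in the \(\sigma =0\) case, the following short sketch evaluates \(\varepsilon _2= \frac{2}{n} \left[ \left( \frac{n}{n-2}\right) ^{\frac{n-2}{2}}-1\right] ^{-1}\) for a few dimensions. The function name is hypothetical, and the output is a plain floating-point evaluation, not a rigorous enclosure.

```python
def eps2_sigma_zero(n):
    """Evaluate eps_2 = (2/n) * [ (n/(n-2))**((n-2)/2) - 1 ]**(-1) for sigma = 0."""
    if n <= 2:
        raise ValueError("the formula requires n > 2")
    return (2.0 / n) / ((n / (n - 2)) ** ((n - 2) / 2) - 1.0)


if __name__ == "__main__":
    # Print the value of eps_2 for dimensions n = 3, ..., 8.
    for n in range(3, 9):
        print(n, eps2_sigma_zero(n))
```

For \(n=4\) the formula gives \(\varepsilon _2=1/2\), which matches the value \(1/\ell \) with \(\ell =2\) of Proposition 5.5.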

Let us now proceed with the estimate of \(\varepsilon _3\), starting from \(A_3(\varepsilon _3) = P_x^*(0)\).

Proposition 5.4

The critical value \(\varepsilon _3\) is the unique solution of the following equation:

provided that \({\mathcal {X}}^q(\varepsilon _3) + {\mathcal {W}}(\varepsilon _3)>0\).

Proof

From (3.16) we have \(G_\varepsilon (A_3)= {\mathcal {H}}_2 = - \frac{\varepsilon }{q} (A_1^q -A_2^q)\). Thus, according to (3.4), we immediately infer that

Similarly, from (3.16) we find \(G_0(A_2)={\mathcal {H}}_1=-\frac{\varepsilon }{q} A_1^q\), hence

Substituting the expression of \(A_2\) given by (5.10) into (5.11), we obtain

Since \(A_3=A_3(\varepsilon _3)=P_x^*(0)\), see (3.7), and \(A_1\) is given by (3.8), we get

which coincides with Eq. (5.8). The uniqueness of the solution of this equation is guaranteed provided that \(A_2(\varepsilon _3)>0\), which is equivalent to \({\mathcal {X}}^q(\varepsilon _3) + {\mathcal {W}}(\varepsilon _3)>0\). \(\square \)

Proposition 5.5

Consider (1.3), i.e. (1.9) with \(\sigma =0\), and set \(n=4\). Then, \(\displaystyle \varepsilon _\ell = \frac{1}{\ell }\) for any \(\ell \ge 1\).

Proof

According to (2.1) and (3.1), we know that \(\alpha =1\), \(q=4\). Consequently, (5.5) becomes

Hence, from (5.1) we obtain the following identities

Starting from \(A_0=0\), we can prove by induction that

Combining (5.2) with (3.7), we note that if \(\ell =2{\hat{i}}+1\) is odd,

whence it follows \(\displaystyle \varepsilon _\ell =\frac{1}{\ell }\). Conversely, if \(\ell =2{{\hat{i}}}\) is even, one has

which implies \(\displaystyle \varepsilon _\ell =\frac{1}{\ell }\). This completes the proof. \(\square \)
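The induction can be made explicit under the assumption (not restated in this section) that \(G_c(x)=-\frac{\alpha ^2}{2}x^2+\frac{1+c}{q}x^q\). With \(\alpha =1\), \(q=4\) and \(X=x^2\), the equation \(G_c(x)=g\) is quadratic in X, and a direct computation suggests the following closed forms, valid as long as the corresponding branch of \(\varvec{\gamma }\) exists:

\[
A_{2i+1}^{2}=\frac{2(1-i\,\varepsilon )}{1+\varepsilon },\qquad A_{2i}^{2}=\frac{2\,i\,\varepsilon }{1+\varepsilon },\qquad \bigl(P^*_x(c)\bigr)^{2}=\frac{1}{1+c}.
\]

For instance, for \(\ell =2\) the condition \(A_2(\varepsilon _2)=P^*_x(\varepsilon _2)\) becomes \(2\varepsilon _2=1\), while for \(\ell =3\) the condition \(A_3(\varepsilon _3)=P^*_x(0)\) becomes \(2(1-\varepsilon _3)=1+\varepsilon _3\), i.e. \(\varepsilon _3=1/3\).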

References

1. Alarcón, S., Quaas, A.: Large number of fast decay ground states to Matukuma-type equations. J. Differ. Equ. 248, 866–892 (2010)

2. Battelli, F., Johnson, R.: On positive solutions of the scalar curvature equation when the curvature has variable sign. Nonlinear Anal. 47, 1029–1037 (2001)

3. Bianchi, G.: Non-existence and symmetry of solutions to the scalar curvature equation. Commun. Partial Differ. Equ. 21, 229–234 (1996)

4. Bianchi, G.: The scalar curvature equation on \({\mathbb{R}}^n\) and \({\mathbb{S}}^n\). Adv. Differ. Equ. 1, 857–880 (1996)

5. Bianchi, G.: Non-existence of positive solutions to semilinear elliptic equations on \({\mathbb{R}}^n\) or \({\mathbb{R}}^n_+\) through the method of moving planes. Commun. Partial Differ. Equ. 22, 1671–1690 (1997)

6. Bianchi, G., Egnell, H.: An ODE approach to the equation \(\Delta u+K u^{\frac{n+2}{n-2}} =0\), in \({\mathbb{R}}^n\). Math. Z. 210, 137–166 (1992)

7. Bianchi, G., Egnell, H.: A variational approach to the equation \(\Delta u+K u^{\frac{n+2}{n-2}} =0\) in \({\mathbb{R}}^n\). Arch. Ration. Mech. Anal. 122, 159–182 (1993)

8. Cao, D., Noussair, E., Yan, S.: On the scalar curvature equation \(-\Delta u = (1 + \varepsilon K)u^{(N+2)/(N-2)}\) in \({\mathbb{R}}^N\). Calc. Var. Partial Differ. Equ. 15, 403–419 (2002)

9. Chen, C.C., Lin, C.S.: Blowing up with infinite energy of conformal metrics on \(S^n\). Commun. Partial Differ. Equ. 24, 785–799 (1999)

10. Chen, C.C., Lin, C.S.: On the asymptotic symmetry of singular solutions of the scalar curvature equations. Math. Ann. 313, 229–245 (1999)

11. Cheng, K.S., Chern, J.L.: Existence of positive entire solutions of some semilinear elliptic equations. J. Differ. Equ. 98, 169–180 (1992)

12. Coddington, E., Levinson, N.: Theory of Ordinary Differential Equations. McGraw-Hill, New York (1955)

13. Dalbono, F., Franca, M.: Nodal solutions for supercritical Laplace equations. Commun. Math. Phys. 347, 875–901 (2016)

14. Ding, W.Y., Ni, W.M.: On the elliptic equation \(\Delta u+K u^{\frac{n+2}{n-2}} =0\) and related topics. Duke Math. J. 52, 485–506 (1985)

15. Flores, I., Franca, M.: Multiplicity results for the scalar curvature equation. J. Differ. Equ. 259, 4327–4355 (2015)

16. Franca, M.: Non-autonomous quasilinear elliptic equations and Ważewski’s principle. Topol. Methods Nonlinear Anal. 23, 213–238 (2004)

17. Franca, M.: Structure theorems for positive radial solutions of the generalized scalar curvature equation. Funkcial. Ekvac. 52, 343–369 (2009)

18. Franca, M.: Fowler transformation and radial solutions for quasilinear elliptic equations. Part 2: nonlinearities of mixed type. Ann. Mat. Pura Appl. 189, 67–94 (2010)

19. Franca, M.: Radial ground states and singular ground states for a spatial-dependent \(p\)-Laplace equation. J. Differ. Equ. 248, 2629–2656 (2010)

20. Franca, M.: Positive solutions for semilinear elliptic equations: two simple models with several bifurcations. J. Dyn. Differ. Equ. 23, 573–611 (2011)

21. Franca, M.: Positive solutions of semilinear elliptic equations: a dynamical approach. Differ. Integr. Equ. 26, 505–554 (2013)

22. Franca, M., Johnson, R.: Ground states and singular ground states for quasilinear partial differential equations with critical exponent in the perturbative case. Adv. Nonlinear Stud. 4, 93–120 (2004)

23. Franca, M., Sfecci, A.: Entire solutions of superlinear problems with indefinite weights and Hardy potentials. J. Dyn. Differ. Equ. 30, 1081–1118 (2018)

24. García-Huidobro, M., Manasevich, R., Yarur, C.: On the structure of positive radial solutions to an equation containing \(p\)-Laplacian with weights. J. Differ. Equ. 223, 51–95 (2006)

25. Johnson, R., Pan, X.B., Yi, Y.F.: The Melnikov method and elliptic equation with critical exponent. Indiana Univ. Math. J. 43, 1045–1077 (1994)

26. Kawano, N., Ni, W.M., Yotsutani, S.: A generalized Pohozaev identity and its applications. J. Math. Soc. Jpn. 42, 541–564 (1990)

27. Kawano, N., Yanagida, E., Yotsutani, S.: Structure theorems for positive radial solutions to \(\Delta u+K(|x|) u^{p} =0\) in \({\mathbb{R}}^n\). Funkcial. Ekvac. 36, 557–579 (1993)

28. Li, Y., Ni, W.M.: On conformal scalar curvature equations in \({\mathbb{R}}^n\). Duke Math. J. 57, 895–924 (1988)

29. Lin, C.S., Lin, S.S.: Positive radial solutions for \(\Delta u+K u^{\frac{n+2}{n-2}} =0\) in \({\mathbb{R}}^n\) and related topics. Appl. Anal. 38, 121–159 (1990)

30. Lin, L.S., Liu, Z.L.: Multi-bump solutions and multi-tower solutions for equations on \({\mathbb{R}}^n\). J. Funct. Anal. 257, 485–505 (2009)

31. Naito, Y.: Bounded solutions with prescribed numbers of zeros for the Emden-Fowler differential equation. Hiroshima Math. J. 24, 177–220 (1994)

32. Ni, W.M.: On the elliptic equation \(\Delta u+K u^{\frac{n+2}{n-2}} =0\), its generalizations, and applications in geometry. Indiana Univ. Math. J. 31, 493–529 (1982)

33. Noussair, E.S., Yan, S.: The scalar curvature equation on \({\mathbb{R}}^N\). Nonlinear Anal. 45, 483–514 (2001)

34. Papini, D., Zanolin, F.: On the periodic boundary value problem and chaotic-like dynamics for nonlinear Hill’s equations. Adv. Nonlinear Stud. 4, 71–91 (2004)

35. Wei, J., Yan, S.: Infinitely many solutions for the prescribed scalar curvature problem on \({\mathbb{S}}^N\). J. Funct. Anal. 258, 3048–3081 (2010)

36. Yan, S.: Concentration of solutions for the scalar curvature equation on \({\mathbb{R}}^N\). J. Differ. Equ. 163, 239–264 (2000)

37. Yanagida, E., Yotsutani, S.: Classification of the structure of positive radial solutions to \(\Delta u +K(|x|) u^p=0\) in \({\mathbb{R}}^n\). Arch. Ration. Mech. Anal. 124, 239–259 (1993)

38. Yanagida, E., Yotsutani, S.: Existence of nodal fast-decay solutions to \(\Delta u +K(|x|) |u|^{p-1}u=0\) in \({\mathbb{R}}^n\). Nonlinear Anal. 22, 1005–1015 (1994)

39. Yanagida, E., Yotsutani, S.: Existence of positive radial solutions to \(\Delta u +K(|x|) u^p=0\) in \({\mathbb{R}}^n\). J. Differ. Equ. 115, 477–502 (1995)

40. Yanagida, E., Yotsutani, S.: Global structure of positive solutions to equations of Matukuma type. Arch. Ration. Mech. Anal. 134, 199–226 (1996)

Acknowledgements

F. Dalbono would like to express her gratitude to the “Centro de Matemática, Aplicações Fundamentais e Investigação Operacional” of the University of Lisbon for its hospitality. M. Franca wishes to honour Prof. R. Johnson, who recently passed away, for his generosity and careful guidance. He was the one who introduced the author to the study of this subject and to research in mathematics.

F. Dalbono: Partially supported by the GNAMPA project “Dinamiche non autonome, analisi reale e applicazioni”. M. Franca: Partially supported by the GNAMPA project “Sistemi dinamici, metodi topologici e applicazioni all’analisi nonlineare”. A. Sfecci: Partially supported by the GNAMPA project “Problemi differenziali con peso indefinito: tra metodi topologici e aspetti dinamici”

Proof of Lemma 4.4

In this Appendix, we reprove Lemma 4.4, and so Proposition 2.8, in order to be more self-contained. We recall that this result has been already proved in [10, Theorem 1.6] by Chen and Lin and restated in [9, Lemma 2.2]. However, their clever proof is far from trivial, and the argument is even more difficult to read due to the presence of some misprints. For this reason and for completeness, we reprove it here in a slightly more general version, following the outline of the original idea, but making some changes at certain points to make it more coherent with the dynamical ideas of the present article.

In this Appendix, we consider the equation

and K is a positive \(C^1\) function. Our aim consists in proving the following result.

Proposition A.1

Consider Eq. (A.1), and assume both \(\varvec{(\mathrm{K}_1)}\) and \(\varvec{(\mathrm{K}_2)}\). Then, for any fixed \(\ell \in {\mathbb {N}}\), and for any \(\rho >0\) there is \({d}_\ell \in I_\ell \) such that \(R_\ell (d_{\ell }) <\rho \).

Taking into account the standard rescaling argument exhibited to prove Remark 1.3, without loss of generality, from now on we will restrict ourselves to the case

where \(\chi >0\) is a fixed constant, which need not be small. In particular, Eq. (A.1) reduces to Eq. (1.9).

Let \(u(r)=u(r;d)\) be a solution of (1.9), and let \(\varvec{\phi }(t,d)=\varvec{\phi }(t)=(x(t),y(t))\) be the corresponding trajectory of (2.2). According to (3.2), \({\mathcal {H}}(\varvec{\phi }(t),t)\) is decreasing for \(t \le 0\), and, consequently, as in Lemma 3.1, \({\mathcal {H}}(\varvec{\phi }(t),t)\) is non-positive for \(t \le 0\), and u(r) and x(t) are positive for \(r \le 1\) and \(t \le 0\), respectively. From the monotonicity assumption on K, we immediately observe that \(1\le {{{\mathcal {K}}}}(t)\le 1+\chi \), for \(t\le 0\). Thus, according to Lemma 3.2, we deduce that \(\varvec{\phi }(t)\) belongs to the region enclosed by \(\Gamma _{0}\) for \(t\le 0\), and, consequently, \(\varvec{\phi }(t,d)\) is bounded for \(t \le 0\), uniformly in d; in fact \(0<x(t) \le A_1(0)\), see (3.8).

Furthermore, from (2.2), it is easy to check that \({\dot{y}}(t)>0\) as long as \(0<x(t)<P^*_x(\chi )\le P^*_x(\chi \,k({e}^t))\) for \(t\le 0\), see (3.7). Let us choose \(\zeta _0 = P^*_x(\chi )/2>0\).

Lemma A.2

Fix \(\zeta <\zeta _0\); there exist sequences \(d^i\), \({\bar{T}}^i_1\) and \({\bar{T}}^i_2\) with \({\bar{T}}^i_1<{\bar{T}}^i_2\), \(d^i \nearrow +\infty \), \({\bar{T}}^i_2\searrow -\infty \) such that the trajectory \(\varvec{\phi ^i}(t)=(x^i(t),y^i(t))\) of (2.2) corresponding to \(u^i(r)=u(r;d^i)\) satisfies the following property: \(x^i(t)<\zeta \) for \(t<{\bar{T}}^i_1\), \(x^i({\bar{T}}^i_1)=\zeta \), \(x^i(t)>\zeta \) for \({\bar{T}}^i_1<t<{\bar{T}}^i_2\), and \(x^i({\bar{T}}^i_2)=\zeta \).

Proof

To prove this lemma, we use an argument different from [10, Theorem 1.6]. Let us observe that the level curve \(\Gamma _{\chi }\) defined in (3.6) intersects the line \(x=\zeta \) in two points \(\varvec{Q^{\infty }_{\pm }}=(\zeta , \pm Y^{\infty })\), where \(Y^{\infty }>0\). Let

and notice that the flow of the autonomous system (2.2), where \({\mathcal {K}}(t) \equiv K(0) =1+\chi \) is transversal on L, since \(\zeta <\zeta _0\). Furthermore, \(\Gamma _{\chi }\) is the graph of a homoclinic trajectory \(\varvec{\psi }_{\tau }(t)\) of such a system, and for any \(\tau \in {{\mathbb {R}}}\) we may assume that \(\varvec{\psi }_{\tau }(\tau )=\varvec{Q^{\infty }_{-}}\), due to the t-translation invariance of the autonomous system. From Remark 2.2, we see that there is \(\tau ^*\) such that the unstable leaf \(W^u(\tau )\) of the original non-autonomous system (2.2) intersects the line L in a point \({\varvec{Q}}(\tau )\) for any \(\tau \le \tau ^*\). Furthermore, \({\varvec{Q}}(\tau ) \rightarrow \varvec{Q^{\infty }_{-}}\) as \(\tau \rightarrow -\infty \).

Let us consider the trajectory \(\varvec{\psi }_{\tau }(t)={\bar{\varvec{\phi }}}(t;\tau , \varvec{Q^{\infty }_-})\) of the frozen autonomous system (2.2) where \({\mathcal {K}}(t) \equiv 1+\chi \) for \(\tau \le \tau ^*\), and the corresponding regular solution \({\bar{u}}(r;{\bar{d}}(\tau ))\) of (A.1), with \(K(r) \equiv 1+\chi \). Similarly, let \(\varvec{\phi }(t;\tau , \varvec{Q}(\tau ))\) be a trajectory of the original non-autonomous system (2.2), and let \(u(r;d(\tau ))\) be the corresponding regular solution of (A.1) with the original K(r). Using continuous dependence on parameters, we see that \(\varvec{\phi }(t;\tau , \varvec{Q}(\tau ))\) is close to \(\varvec{\psi }_{\tau }(t)\) if \(t \le \tau \) and \( \tau \le \tau ^*\), with a possibly larger \(|\tau ^*|\). According to [20, Remark 2.5], we notice that \({\bar{d}}(\tau ) \rightarrow +\infty \) as \(\tau \rightarrow -\infty \), which implies that \(d(\tau ) \rightarrow +\infty \) as \(\tau \rightarrow -\infty \), see also the proof of Lemma 4.9. The claim immediately follows by extracting the sequence \(d^i=d({\bar{T}}^i_2)\) which satisfies the required monotonicity properties. \(\square \)

Fix \(\zeta <\zeta _0\) so that the existence of the sequences \(d^i,\,{\bar{T}}^i_1,\,{\bar{T}}^i_2\) is guaranteed by Lemma A.2. Let \(u^i(r)=u(r;d^i)\), and let \(\varvec{\phi ^i}(t)=(x^i(t),y^i(t))\) be the corresponding trajectory of (2.2).

Lemma A.3

For any \(M>0\), there are \(r_0\) and \(i_0\) such that \(u^{i}(r_0) \ge M\) for any \(i \ge i_0\).

Proof

From now on, we follow quite closely the ideas of [10]. Assume, by contradiction, that the lemma is false; then there exists \(M>0\) with the following property: for any \(r_0>0\), there is a subsequence, still denoted by \(u^i\), such that \(u^i(r_0)< M\) for any i.

Fix \(\delta >0\) small enough to satisfy

where the constant \(l\in (0,\alpha )\) is given by assumption \(\varvec{(\mathrm{K}_2)}\). We set \({\tilde{\zeta }}={\tilde{\zeta }}(\delta )= \min \left[ \left( \frac{2\delta \alpha - \delta ^2}{K(0)}\right) ^{\frac{1}{q-2}}; \zeta \right] \), so that from (2.2) we find

Taking into account \(\varvec{(\mathrm{K}_2)}\), we choose \(r_0\) so small that \(M r_0^{(n-2)/2}< {\tilde{\zeta }}/2\), and

Set \(T_0=\ln (r_0)<0\). Recalling that \({\bar{T}}^i_2\searrow -\infty \), we can find \(i_0 \in {\mathbb {N}}\) such that \({\bar{T}}^i_1<{\bar{T}}^i_2<T_0\) for any \(i \ge i_0\). Without loss of generality, we now restrict ourselves to consider the sequence \(u^i\) with \(i\ge i_0\). Thus,

According to Lemma A.2, we define

so that \(x^i(t) < {\tilde{\zeta }}\) when \({\bar{T}}^i<t< T_0\), hence (A.4) holds and \(\ddot{x}^i(t)>0\) in this interval. So, we have two cases: either \({\dot{x}}^i(t) < 0\) for any \({\bar{T}}^i<t<T_0\), or \(x^i(t)\) has a local minimum at \(t={\mathcal {T}} \in ({\bar{T}}^i,T_0)\).
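The convexity claim can be checked by hand. What follows is only a sketch under assumptions not restated in this appendix: that system (2.2) has the form \(\dot{x}=y\), \(\dot{y}=\alpha ^2 x-{\mathcal {K}}(t)x^{q-1}\), that \(q>2\), that \(0<\delta <2\alpha \), and that \({\mathcal {K}}(t)\le K(0)\) for \(t\le 0\). Under these assumptions, whenever \(0<x^i(t)<{\tilde{\zeta }}\) and \(t \le 0\):

```latex
\[
\begin{aligned}
{\mathcal K}(t)\,[x^i(t)]^{q-2}
  &< K(0)\,{\tilde\zeta}^{\,q-2} \le 2\delta\alpha-\delta^2, \\
\ddot{x}^i(t)
  &= x^i(t)\left(\alpha^2-{\mathcal K}(t)\,[x^i(t)]^{q-2}\right) \\
  &> x^i(t)\left(\alpha^2-2\delta\alpha+\delta^2\right)
   = (\alpha-\delta)^2\,x^i(t) > 0 .
\end{aligned}
\]
```

The second bound in the first line uses only the definition of \({\tilde{\zeta }}\) as a minimum. Convexity therefore holds on the whole interval, and only the sign of \({\dot{x}}^i\) distinguishes the two cases.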

Case 1) Assume \({\dot{x}}^i(t) < 0\) for any \({\bar{T}}^i<t<T_0\).

Let \(E_{\delta }(x,y)= y^2-(\alpha -\delta )^2x^2\). According to (2.2), we deduce that

From (A.4), we see that \(E_{\delta }(x^i(t),y^i(t))\) is strictly decreasing if \({\bar{T}}^i<t< T_0\). Hence, \(E_{\delta }(\varvec{\phi ^i}(t))>E_{\delta }( \varvec{\phi ^i}(T_0))\) for any \({\bar{T}}^i<t<T_0\), so that from (2.2) we find

Since \(x^i(t)\) is decreasing, the right hand side of (A.9) is positive in \(({\bar{T}}^i,T_0)\), hence

for any \({\bar{T}}^i<t<T_0\). Integrating, we get

At this point, we need assumption \(\varvec{(\mathrm{K}_2)}\) to estimate \({\bar{T}}^i\) from above. Since \(x^i({\bar{T}}^i)= {\tilde{\zeta }}\), cf. (A.7), and \(x^i(T_0)<{\tilde{\zeta }}/2\), cf. (A.6), there is \({\tilde{T}} \in ({\bar{T}}^i, T_0)\) such that \(x^i({\tilde{T}})={\tilde{\zeta }}/2\). Furthermore, \(y^i(t)\) is bounded, so there is \(c>0\) such that \({\tilde{T}}-{\bar{T}}^i>c\, {\tilde{\zeta }}\). In fact, it can be shown that \({\tilde{T}}-{\bar{T}}^i> \ln (2)/\alpha \), as a consequence of the negativity of \({\mathcal {H}}(\varvec{\phi ^i}(t),t)\) for \(t\le 0\) and of some Gronwall estimates. Thus, from (3.1) and (3.2) we find

Then, recalling that \({\mathcal {H}}(\varvec{\phi ^i}(t),t)\) and \(\dot{{\mathcal {K}}}(t)\) are non-positive and \(x^i(t)\) decreases when \(t \in [{\bar{T}}^i, T_0]\), from \(\varvec{(\mathrm{K}_2)}\) and (A.5), we obtain

where \(c_1=\frac{1}{\alpha }\sqrt{\frac{l A c }{q 2^{q}}}\). Hence, by (A.6)

which implies

Plugging the second inequality of (A.14) in (A.11), we get

where \(c_2=\frac{\ln (2)- \ln (c_1)}{\alpha -\delta }\). Then, from (A.16) and (A.15) we find

By the choice of \(\delta \) in (A.3), we have \( \frac{2\alpha }{l}\left( 1- \frac{l }{2(\alpha -\delta )} \right) -1= 2\left( \frac{\alpha }{l} -1 \right) -\frac{\delta }{\alpha -\delta }=\beta >0\); hence there are \(c_3>0\), \(c_4>0\) such that (A.17) can be written as follows

Since \({\tilde{\zeta }}>0\) and \(M>0\) are fixed, we can let \(T_0\) go to \(-\infty \), obtaining a contradiction with (A.18), and the lemma in Case 1 is proved.
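The identity defining \(\beta \) in the last step of Case 1 is elementary algebra. A short verification, using only \(\frac{\alpha }{\alpha -\delta }=1+\frac{\delta }{\alpha -\delta }\):

```latex
\[
\begin{aligned}
\frac{2\alpha}{l}\left(1-\frac{l}{2(\alpha-\delta)}\right)-1
  &= \frac{2\alpha}{l}-\frac{\alpha}{\alpha-\delta}-1 \\
  &= \frac{2\alpha}{l}-\left(1+\frac{\delta}{\alpha-\delta}\right)-1 \\
  &= 2\left(\frac{\alpha}{l}-1\right)-\frac{\delta}{\alpha-\delta}=\beta .
\end{aligned}
\]
```

Since \(l<\alpha \), the first term of the last line is positive, so \(\beta >0\) as soon as \(\frac{\delta }{\alpha -\delta }<2\left( \frac{\alpha }{l}-1\right) \), that is, for \(\delta \) small enough.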

Case 2) Assume that there is \({\mathcal {T}} \in ({\bar{T}}^i, T_0)\) such that \({\dot{x}}^i(t) < 0\) for \({\bar{T}}^i \le t<{\mathcal {T}}\) and \({\dot{x}}^i(t) > 0\) for \({\mathcal {T}}<t \le T_0\).

Repeating the argument of Case 1 for \({\bar{T}}^i \le t<{\mathcal {T}}\), we go through (A.9) and (A.10) and we find

Now we estimate \(T_0- {\mathcal {T}}\) with analogous techniques. In particular, from (A.4) and (A.8) we see that \(E_{\delta }(x^i(t),y^i(t))\) is strictly increasing if \({\mathcal {T}}<t< T_0\). Hence, \(E_{\delta }(\varvec{\phi ^i}(t))>E_{\delta }(\varvec{\phi ^i}({\mathcal {T}}))\) for any \({\mathcal {T}}<t<T_0\), and, consequently,

Since \({\dot{x}}^i(t)>0\), the right hand side of (A.20) is positive in \(({\mathcal {T}},T_0)\), hence

Integrating, we get

Combining (A.19) with (A.21), we conclude that

Moreover, notice that (A.12) and (A.13) keep on holding by replacing \(T_0\) with \({\mathcal {T}}\). In particular, according to (A.6), the following inequalities hold:

which, plugged in (A.22), lead to

Hence, there are \({\tilde{c}}_3>0\) and \({\tilde{c}}_4>0\) such that

Taking into account that \(T_0<0\), we finally infer that

where \(\left( -1+\frac{l}{\alpha -\delta }\right) <0\) by (A.3). Since \({\tilde{\zeta }}>0\) and \(M>0\) are fixed, while \({\bar{T}}^i<T_0\) is arbitrarily small, we can let \(T_0\) and, consequently, \({\bar{T}}^i\) go to \(-\infty \), obtaining a contradiction with (A.23). This proves Case 2. \(\square \)
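The opposite monotonicity of \(E_{\delta }\) in the two cases can be read off from a single computation. As a sketch, assume that (2.2) reads \(\dot{x}=y\), \(\dot{y}=\alpha ^2 x-{\mathcal {K}}(t)x^{q-1}\) (an assumption, since the system is not restated in this appendix), so that \(y^i={\dot{x}}^i\) and \({\dot{y}}^i=\ddot{x}^i\):

```latex
\[
\frac{d}{dt}E_{\delta}\bigl(x^i(t),y^i(t)\bigr)
  = 2\,y^i\dot{y}^i-2(\alpha-\delta)^2\,x^i\dot{x}^i
  = 2\,\dot{x}^i(t)\left[\ddot{x}^i(t)-(\alpha-\delta)^2\,x^i(t)\right].
\]
```

Whenever the bracket is positive, the derivative has the sign of \({\dot{x}}^i\): negative where \(x^i\) decreases (Case 1, and Case 2 before \({\mathcal {T}}\)), positive on \(({\mathcal {T}},T_0)\) in Case 2.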

Now we are ready to state the result proved in [10, Theorem 1.6], from which Proposition A.1 and Lemma 4.4 follow.

Proposition A.4

Consider Eq. (A.1) under the condition (A.2), and assume both \(\varvec{(\mathrm{K}_1)}\) and \(\varvec{(\mathrm{K}_2)}\). Then, there is a singular solution \(u^{\infty }(r)\) such that \(\liminf _{r \rightarrow 0} u^{\infty }(r) r^{\alpha }=0\) and \(\limsup _{r \rightarrow 0} u^{\infty }(r) r^{\alpha }=A_1(\chi )\).

Proof

Since \(u^i(r)< A_1(0) r^{-\alpha }\) and there is \(c>0\) such that \(|u'^i(r)|< c r^{-(\alpha +1)}\) for any \(0\le r<1\), it follows that, up to subsequences, \(u^i(r)\) converges uniformly in any compact interval of (0, 1] to a function, say \(u^{\infty }(r)\), together with its derivative. Notice that \(u^{\infty }(r)\) is a solution of (A.1); furthermore, from Lemma A.3 we know that \({\lim _{r \rightarrow 0}}u^{\infty }(r)=+\infty \), so \(u^\infty \) is singular. Let \(\varvec{\phi ^{\infty }}(t)=(x^{\infty }(t),y^{\infty }(t))\) be the trajectory of (2.2) corresponding to \(u^{\infty }(r)\).

Using (3.2) and the Lebesgue convergence theorem, for any \(t \le 0\) we find

Since \(\dot{{\mathcal {K}}}(t) \frac{[x^{\infty }(t)]^q}{q} \in L^1((-\infty ,0])\), we see that \({\lim _{t \rightarrow -\infty }}{\mathcal {H}}(\varvec{\phi ^{\infty }}(t),t)=0\). Since \(u^{\infty }(r)\) is a singular solution, we conclude that \(\varvec{\phi ^{\infty }}(t) \not \rightarrow (0,0)\) as \(t \rightarrow -\infty \), so \(\varvec{\phi ^{\infty }}\) has the whole \(\Gamma _{\chi }\) as \(\alpha \)-limit set, and the proposition follows. \(\square \)

Proof of Proposition A.1

Proposition A.1 now follows immediately from Proposition A.4 recalling that \(u^i(r)=u(r;d^i) \rightarrow u^{\infty }(r)\) uniformly in any compact subset of (0, 1]. For any fixed \(\rho >0\) and \(\ell \in {\mathbb {N}}\) we can find \(\rho _\ell <\rho \) such that \(\varvec{\phi ^\infty }(t)\) intersects the x-axis at least \(\ell \) times in the interval \((\ln \rho _{\ell }, \ln \rho )\). So, the same property holds for \(\varvec{\phi ^i}(t)\), when the index i is sufficiently large; hence, for any \(\ell \) we find \(\varvec{\phi ^{i_{\ell }}}(t)=\varvec{\phi }(t,d^{i_{\ell }})\) such that \(R_\ell (d^{i_{\ell }})<\rho \).

We refer to [9], in particular [9, Lemma 2.2], for more details. \(\square \)

About this article

Cite this article

Dalbono, F., Franca, M. & Sfecci, A. Multiplicity of ground states for the scalar curvature equation. Annali di Matematica 199, 273–298 (2020). https://doi.org/10.1007/s10231-019-00877-2

DOI: https://doi.org/10.1007/s10231-019-00877-2

Keywords

- Scalar curvature equation

- Ground states

- Fowler transformation

- Invariant manifold

- Shooting method

- Bubble tower solutions

- Phase plane analysis

- Multiplicity results